Abstract

Accurate mapping of localization error distribution is essential for assessing passive sensor systems and guiding sensor placement. However, conventional analytical methods like the Geometric Dilution of Precision (GDOP) rely on idealized error models, failing to capture the complex, heterogeneous error distributions typical of real-world environments. To overcome this challenge, we propose a novel data-driven framework that reconstructs high-fidelity localization error maps from sparse observations in TDOA-based systems. Specifically, we model the error distribution as a tensor and formulate the reconstruction as a tensor completion problem. A key innovation is our physics-informed regularization strategy, which incorporates prior knowledge from the analytical error covariance matrix into the tensor factorization process. This allows for robust recovery of the complete error map even from highly incomplete data. Experiments on a real-world dataset validate the superiority of our approach, showing an accuracy improvement of at least 27.96% over state-of-the-art methods.

1. Introduction

Passive localization is ubiquitous in fields ranging from wireless sensor networks (WSNs) to autonomous driving [1,2,3]. The reliability of these systems hinges on a thorough understanding of their performance across the operational domain. Consequently, a high-fidelity spatial localization error map is an indispensable tool, providing crucial insights for system evaluation, operational planning, and strategic sensor deployment. In practice, however, acquiring a complete and dense error map is often infeasible due to cost and logistical constraints, typically yielding only sparse measurements at discrete locations. Therefore, robustly reconstructing the full error distribution from limited data remains a significant challenge in the field.

Currently, the Geometric Dilution of Precision (GDOP) is the most widely adopted metric for performance evaluation [4,5]. The underlying principle is that measurement errors (e.g., in Time-Difference-of-Arrival, TDOA) are amplified by the geometric arrangement of the sensors, where GDOP provides a scalar metric to quantify this amplification factor [6,7]. Typically, unknown model parameters, such as measurement error variances, are estimated statistically from limited emitters and then extrapolated to unobserved regions. To enhance model fidelity in complex environments, numerous extensions to the basic GDOP model have been proposed. These include Weighted GDOP (WGDOP) variants accounting for heterogeneous noise in satellite navigation [8,9,10], as well as adaptations for indoor spaces [11,12], urban canyons [13], UAV-assisted networks [1,14,15], and WSNs [5,16].

Despite these refinements, model-driven methods share a fundamental limitation: they are predicated on idealized assumptions and oversimplified error models. They often fail to capture the complex coupling of error sources in real-world environments, such as non-line-of-sight (NLOS) propagation, multipath fading, and clock drift, leading to an unavoidable mismatch between predicted and actual system performance.

To mitigate this model mismatch, data-driven paradigms have emerged as a promising alternative. By learning directly from measurement data, these methods capture fine-grained environmental effects and complex error structures without relying on rigid physical models. Existing data-driven strategies for spatial map reconstruction generally fall into three categories. The first includes classical spatial interpolation techniques, such as Kriging [17,18] and kernel-based methods [19,20,21,22], which estimate values at unobserved locations based on spatial correlation. The second category comprises low-rank matrix and tensor completion methods, including compressed sensing [23], singular value thresholding [24,25,26], and tensor decomposition algorithms [27,28,29]. For instance, Zhang et al. [28] utilized block term decomposition (BTD) to reconstruct electromagnetic maps, while Sun et al. [30] improved accuracy by combining local interpolation with nuclear norm minimization (NNM-T). The third category encompasses machine learning approaches, such as RadioUNet [31], autoencoders [32], and Vision Transformer (ViT)-based methods [33]. While powerful, the efficacy of these purely data-driven methods is critically contingent on the availability of dense and uniformly distributed data [34,35]. In practical scenarios with sparse measurements, their performance degrades sharply, often failing to converge to physically meaningful solutions.

To overcome the limitations of inaccurate physical models and insufficient observational data, this paper proposes a framework that integrates model-based insights with the flexibility of data-driven approaches. First, we model the three-dimensional (3-D) spatial distribution of localization error as a third-order tensor, termed Tensorized GDOP (TGDOP). This representation offers a powerful mathematical tool to describe complex spatial error distributions, capturing intrinsic multi-dimensional structures and inherent anisotropy [36] that scalar metrics like GDOP fail to express. Subsequently, we formulate the reconstruction of the complete error map as a tensor completion problem. To solve this ill-posed problem under sparse measurement conditions, we develop a physics-informed regularization strategy. Specifically, we incorporate prior knowledge from the analytical error covariance matrix directly into the tensor factorization process by imposing polynomial constraints on the factor matrices. This physics-based constraint guides the reconstruction, ensuring the solution adheres to the underlying geometric principles of localization. It effectively compensates for missing observations, dramatically enhancing reconstruction accuracy and robustness without relying on simplified environmental models. Furthermore, this approach is not scenario-dependent and holds potential for extension to complex multi-system fusion scenarios.

Notably, while the concept of physics-informed learning is prominent in Physics-Informed Neural Networks (PINNs) [37], our usage differs fundamentally. PINNs typically integrate partial differential equations (PDEs) into a loss function and require training on large datasets to learn latent physical laws. In contrast, the proposed approach embeds these laws directly into the tensor structure as constraints for decomposition. Consequently, our method operates in a single-shot manner, which is training-free and requires no historical data. Experimental results on a real-world dataset demonstrate that, even with only 1% of observation data available, the proposed framework improves reconstruction accuracy by at least 27.96% compared to state-of-the-art baselines.

The main contributions of this paper are summarized as follows:

- Tensor-Based Spatial Error Modeling (TGDOP): We propose a novel framework that models the spatial distribution of positioning errors as a third-order tensor. Unlike conventional scalar GDOP metrics, this tensor representation explicitly captures the anisotropic characteristics and complex coupling of error sources in real-world 3-D environments.

- Physics-Informed Sparse Reconstruction Algorithm: We develop a robust tensor completion algorithm tailored for extremely sparse observational data. By deriving spatial properties from the theoretical error covariance matrix, we introduce polynomial constraints to the factor matrices during tensor decomposition.

- Training-Free and Model-Robust Performance: The proposed method operates as a single-shot, data-driven approach that does not require historical training data or idealized channel assumptions, validated on both simulated and real-world datasets.

The remainder of this paper is organized as follows: Section 2 describes the problem formulation, and Section 3 provides the preliminaries. The definition and properties of TGDOP are detailed in Section 4, followed by the sparse reconstruction algorithm in Section 5. Section 6 presents simulation and real-data experiments, and conclusions are drawn in Section 7.

2. Problem Statement

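Consider a 3-D space discretized into a grid of $I \times J \times K$ cells. The coordinate of the grid cell with index $(i,j,k)$ is denoted by $\mathbf{p}_{ijk} \in \mathbb{R}^3$, where $(i,j,k) \in \Omega$ and $\Omega$ represents the set of all such indices, with cardinality $|\Omega| = IJK$. Let $\Omega_e \subseteq \Omega$ be the set of indices for grid cells containing emitters.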

The characterization of positioning error begins with its statistical nature at a specific location $\mathbf{p}$. Assume there are N independent positioning results $\hat{\mathbf{p}}_n$ (for $n = 1, \ldots, N$) for an emitter at a true location $\mathbf{p}$; the positioning error of each result is then $\mathbf{e}_n = \hat{\mathbf{p}}_n - \mathbf{p}$. The covariance matrix $\boldsymbol{\Sigma}(\mathbf{p})$ of the positioning error at $\mathbf{p}$ is then calculated by

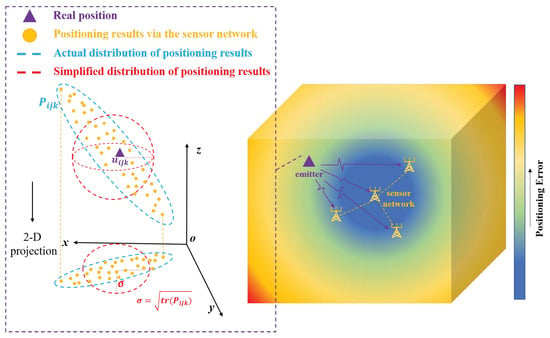

Suppose positioning error measurements were available for every grid cell. A heatmap of the spatial distribution of localization error could then be generated by calculating the Root Mean Square Error (RMSE), i.e., the square root of the trace of the covariance matrix in (1), at each grid point, as depicted in Figure 1.

Figure 1.

Schematic heatmap illustrating the magnitude of positioning error in a representative 3-D TDOA positioning system.
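As a minimal sketch of the per-emitter statistics behind (1) and Figure 1, the NumPy snippet below estimates the error covariance, its per-axis diagonal components, and the RMSE from N positioning results. It assumes that (1) is the non-centred second moment $\frac{1}{N}\sum_n \mathbf{e}_n\mathbf{e}_n^T$, so that the trace equals the mean squared error; the function name `error_statistics` and the synthetic data are illustrative only.

```python
import numpy as np


def error_statistics(estimates: np.ndarray, true_pos: np.ndarray):
    """Error covariance, per-axis components, and RMSE for one emitter.

    estimates: (N, 3) array of positioning results p_hat_n.
    true_pos:  (3,) true emitter position p.
    Assumption: the covariance is the non-centred second moment
    (1/N) * sum_n e_n e_n^T, so its trace is the mean squared error.
    """
    errors = estimates - true_pos                 # e_n = p_hat_n - p, shape (N, 3)
    cov = errors.T @ errors / errors.shape[0]     # (3, 3) second-moment matrix
    components = np.diag(cov).copy()              # x, y, z contributions
    rmse = float(np.sqrt(np.trace(cov)))          # sqrt of total mean squared error
    return cov, components, rmse


# Synthetic example: Gaussian errors with different spreads along each axis.
rng = np.random.default_rng(0)
p_true = np.array([10.0, -5.0, 2.0])
p_hat = p_true + rng.normal(scale=[1.0, 2.0, 0.5], size=(2000, 3))
cov, comps, rmse = error_statistics(p_hat, p_true)
print(comps, rmse)  # per-axis mean squared errors and the total RMSE
```

Repeating this at every emitter cell yields the sparse samples from which the full map must be reconstructed.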

In real-world applications, however, valid measurements can only be acquired at the very limited number of locations equipped with emitters. Consequently, statistical approaches can only characterize the positioning error locally and cannot capture the error map across the entire space. To overcome this, conventional methods replace the statistical estimate in (1) with a parameterized empirical model of the covariance matrix, allowing extrapolation to emitter-free locations. These models typically define a few unknown parameters based on key error sources, which are then estimated from the limited available measurements. A typical example is the error map derived from the GDOP model for TDOA systems, which primarily accounts for TDOA measurement noise and sensor position uncertainties:

Here, the specific forms of the geometry matrix and the intermediate parameter error covariance are detailed in Section 4.2. The variance of the l-th TDOA measurement, $\sigma_{\tau,l}^2$, can be further expressed in terms of signal parameters [38] as

where $\beta$ is the root mean square (RMS) signal bandwidth, B is the signal bandwidth, T is the RMS integration time, and $\gamma_l$ is the effective signal-to-noise ratio (SNR) for the l-th TDOA calculation.

The parametric models can agree with the statistical estimates from (1) if the underlying model assumptions are accurate. However, real-world scenarios involve complex propagation channels and diverse, often unmodeled, error sources. For instance, in typical NLOS scenarios, the factors in (3), such as signal bandwidth and SNR, still influence the standard deviation of TDOA measurements, but the error component arising from NLOS propagation often plays a more dominant role. Because the GDOP model does not account for NLOS errors, its predictions become highly inaccurate in such cases. Moreover, the need to design distinct covariance models tailored to different localization systems and application scenarios significantly curtails the practical utility and generalizability of conventional models.

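To address these limitations, the tensor-based framework learns complex spatial distributions directly from data, independently of empirical models. We define the tensor representation of the 3-D positioning error map as $\underline{\mathbf{T}} \in \mathbb{R}^{I \times J \times K}$. Its components along the Cartesian axes (x, y, and z) are denoted as $\underline{\mathbf{T}}_x$, $\underline{\mathbf{T}}_y$, and $\underline{\mathbf{T}}_z$, respectively, and the relationship between $\underline{\mathbf{T}}$ and its components is given by

$$\underline{\mathbf{T}} = \underline{\mathbf{T}}_x + \underline{\mathbf{T}}_y + \underline{\mathbf{T}}_z. \tag{4}$$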

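In practical scenarios with spatially sparse emitters, the error statistics can only be measured at the emitter indices $(i,j,k) \in \Omega_e$. The measurement model for an element at index $(i,j,k) \in \Omega_e$ is

$$y_{ijk} = \operatorname{tr}\big(\boldsymbol{\Sigma}(\mathbf{p}_{ijk})\big) + n_{ijk}, \qquad y^{x}_{ijk} = \big[\boldsymbol{\Sigma}(\mathbf{p}_{ijk})\big]_{11} + n^{x}_{ijk}, \tag{5}$$

where $\boldsymbol{\Sigma}(\mathbf{p}_{ijk})$ is the true error covariance matrix at $\mathbf{p}_{ijk}$, of which (1) gives a finite-sample estimate, so that $\operatorname{tr}(\boldsymbol{\Sigma}(\mathbf{p}_{ijk}))$ and $[\boldsymbol{\Sigma}(\mathbf{p}_{ijk})]_{11}$ are the $(i,j,k)$-th elements of $\underline{\mathbf{T}}$ and $\underline{\mathbf{T}}_x$, respectively. Here, $n_{ijk}$ and $n^{x}_{ijk}$ represent the observation errors associated with $y_{ijk}$ and $y^{x}_{ijk}$, respectively; the y and z components are defined analogously using $[\boldsymbol{\Sigma}(\mathbf{p}_{ijk})]_{22}$ and $[\boldsymbol{\Sigma}(\mathbf{p}_{ijk})]_{33}$.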

The learnable parameters in traditional models are limited to a predefined set of error sources from a prior model. In contrast, the tensor model’s parameters consist of the factor matrices and the core tensor (detailed in Section 4.1), where the factor matrices characterize the axial trends of the error distribution, and the core tensor represents the coupling relationships among these axial components. Thus, the tensor model can better adapt to complex real-world environments for inference in regions with no observations.

In this context, our primary problem is to reconstruct the complete underlying error component tensors $\underline{\mathbf{T}}_x$, $\underline{\mathbf{T}}_y$, and $\underline{\mathbf{T}}_z$ (and, consequently, the total error tensor $\underline{\mathbf{T}}$) across the entire spatial domain from the sparse and noisy measurements $y_{ijk}$ (and $y^{d}_{ijk}$, $d \in \{x, y, z\}$) available only at indices $(i,j,k) \in \Omega_e$. Typically, the number of observed points is much smaller than the total number of grid points, i.e., $|\Omega_e| \ll |\Omega|$, and the observed locations are often randomly distributed.

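Notation: In this paper, we follow the established conventions in signal processing. We denote scalars, vectors, matrices, and tensors with lowercase letters $a$, boldface lowercase $\mathbf{a}$, boldface capitals $\mathbf{A}$, and underlined boldface capitals $\underline{\mathbf{A}}$, respectively. $a_{ijk}$ denotes the $(i,j,k)$-th element of a third-order tensor $\underline{\mathbf{A}}$. The colon notation indicates sub-tensors of a given tensor; e.g., $\underline{\mathbf{A}}_{:,:,k}$ is its k-th frontal slice. Italic capitals (e.g., $I$, $J$, $K$) also denote index upper bounds. The superscripts $(\cdot)^{-1}$ and $(\cdot)^{\dagger}$ represent the inverse and the pseudo-inverse of a matrix, respectively. In addition, $\|\cdot\|_F$ denotes the Frobenius norm of a tensor. The operator $\operatorname{diag}(\cdot)$ stacks its scalar arguments in a square diagonal matrix, $\operatorname{cov}(\mathbf{a}, \mathbf{b})$ is the covariance of $\mathbf{a}$ and $\mathbf{b}$, and $\operatorname{tr}(\cdot)$ computes the trace of a matrix. The outer product follows $(\mathbf{a} \circ \mathbf{b})_{ij} = a_i b_j$. The Hadamard product follows $(\mathbf{A} \circledast \mathbf{B})_{ij} = a_{ij} b_{ij}$. The Kronecker product is $\mathbf{A} \otimes \mathbf{B} = [a_{ij}\mathbf{B}]$, and its capital symbol $\bigotimes$ denotes the multiplicative form. The Khatri–Rao product is defined as the block-wise Kronecker product: $\mathbf{A} \odot \mathbf{B} = [\mathbf{A}_1 \otimes \mathbf{B}_1, \ldots, \mathbf{A}_R \otimes \mathbf{B}_R]$ for partitioned matrices $\mathbf{A} = [\mathbf{A}_1, \ldots, \mathbf{A}_R]$ and $\mathbf{B} = [\mathbf{B}_1, \ldots, \mathbf{B}_R]$. $\delta_{ij}$ denotes the Kronecker delta. The identity matrix is represented by $\mathbf{I}_N$, the zero matrix by $\mathbf{0}$, and $\mathbf{1}_N$ is a column vector of all ones of length N.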

3. Preliminaries

We begin by reviewing the fundamental tensor concepts needed to follow the approach in this paper. Because the problem addressed here aligns with BTD, the emphasis is on the key concepts of BTD.

3.1. Mode-N Unfolding and Mode Product

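For an N-th order tensor $\underline{\mathbf{X}} \in \mathbb{R}^{I_1 \times I_2 \times \cdots \times I_N}$, the mode-n unfolding of $\underline{\mathbf{X}}$, denoted as $\mathbf{X}_{(n)} \in \mathbb{R}^{I_n \times \prod_{k \neq n} I_k}$, arranges the mode-n fibers as the columns of the resulting matrix. It maps the tensor element $(i_1, i_2, \ldots, i_N)$ to the matrix element $(i_n, j)$, where

$$j = 1 + \sum_{\substack{k=1 \\ k \neq n}}^{N} (i_k - 1) J_k, \qquad J_k = \prod_{\substack{m=1 \\ m \neq n}}^{k-1} I_m. \tag{6}$$

The mode-n product of the tensor $\underline{\mathbf{X}}$ with the matrix $\mathbf{U} \in \mathbb{R}^{J \times I_n}$ is denoted as $\underline{\mathbf{X}} \times_n \mathbf{U} \in \mathbb{R}^{I_1 \times \cdots \times I_{n-1} \times J \times I_{n+1} \times \cdots \times I_N}$, and it follows that

$$(\underline{\mathbf{X}} \times_n \mathbf{U})_{i_1 \cdots i_{n-1}\, j\, i_{n+1} \cdots i_N} = \sum_{i_n=1}^{I_n} x_{i_1 i_2 \cdots i_N}\, u_{j i_n}. \tag{7}$$

Its matrix representation is expressed as

$$\underline{\mathbf{Z}} = \underline{\mathbf{X}} \times_n \mathbf{U} \iff \mathbf{Z}_{(n)} = \mathbf{U} \mathbf{X}_{(n)}. \tag{8}$$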
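The mode-n unfolding, the mode-n product, and their matrix identity can be checked numerically with a short NumPy sketch. It uses zero-based indices, so the column index of the unfolding rule shifts by one; the helper names `unfold`, `mode_n_product`, and `column_index` are illustrative, not part of any library.

```python
import numpy as np


def unfold(X: np.ndarray, n: int) -> np.ndarray:
    """Mode-n unfolding: mode-n fibres become columns; remaining modes are
    ordered with the lowest mode index varying fastest (Kolda-Bader convention)."""
    return np.reshape(np.moveaxis(X, n, 0), (X.shape[n], -1), order="F")


def mode_n_product(X: np.ndarray, U: np.ndarray, n: int) -> np.ndarray:
    """Z = X x_n U with Z[..., j, ...] = sum_{i_n} X[..., i_n, ...] * U[j, i_n]."""
    Z = np.tensordot(U, X, axes=([1], [n]))  # contracted mode ends up first
    return np.moveaxis(Z, 0, n)             # move it back to position n


def column_index(idx, n, shape):
    """Zero-based column j of element idx in the mode-n unfolding."""
    j, stride = 0, 1
    for k, (i_k, size_k) in enumerate(zip(idx, shape)):
        if k == n:
            continue
        j += i_k * stride
        stride *= size_k
    return j


rng = np.random.default_rng(1)
X = rng.standard_normal((3, 4, 5))
U = rng.standard_normal((6, 4))
Z = mode_n_product(X, U, n=1)                       # shape (3, 6, 5)
print(np.allclose(unfold(Z, 1), U @ unfold(X, 1)))  # True
```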

3.2. Block Term Decomposition in Multilinear Rank-(L,M,N) Terms

Unlike matrix rank, tensor rank is NP-hard to determine, which has led to varied rank definitions among algorithms. BTD is built on the concept of multilinear rank, which in turn relies on the mode-n rank of a tensor, defined as follows.

Definition 1

(Mode-n rank). The mode-n rank of a tensor is the dimension of the subspace spanned by its mode-n vectors.

Then, a third-order tensor has multilinear rank $(L, M, N)$ if its mode-1, mode-2, and mode-3 ranks are equal to L, M, and N, respectively.
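Because the mode-n vectors of a tensor are exactly the columns of its mode-n unfolding, the mode-n rank equals the matrix rank of that unfolding. A minimal sketch that computes the multilinear rank of an illustrative Tucker tensor with a 2 × 3 × 2 core (the sizes and random factors are hypothetical):

```python
import numpy as np


def unfold(X: np.ndarray, n: int) -> np.ndarray:
    """Mode-n unfolding (columns are the mode-n fibres)."""
    return np.reshape(np.moveaxis(X, n, 0), (X.shape[n], -1), order="F")


def multilinear_rank(X: np.ndarray) -> tuple:
    """(mode-1 rank, mode-2 rank, ...): matrix rank of each mode-n unfolding."""
    return tuple(int(np.linalg.matrix_rank(unfold(X, n))) for n in range(X.ndim))


# A Tucker tensor with a 2 x 3 x 2 core and generic random factor matrices.
rng = np.random.default_rng(2)
core = rng.standard_normal((2, 3, 2))
A, B, C = (rng.standard_normal(s) for s in [(6, 2), (7, 3), (5, 2)])
X = np.einsum("lmn,il,jm,kn->ijk", core, A, B, C)
print(multilinear_rank(X))  # (2, 3, 2)
```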

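A decomposition of a tensor $\underline{\mathbf{X}} \in \mathbb{R}^{I \times J \times K}$ into a sum of rank-(L,M,N) terms can be written as

$$\underline{\mathbf{X}} = \sum_{r=1}^{R} \underline{\mathbf{S}}_r \times_1 \mathbf{A}_r \times_2 \mathbf{B}_r \times_3 \mathbf{C}_r, \tag{9}$$

where $\underline{\mathbf{S}}_r \in \mathbb{R}^{L \times M \times N}$ is rank-(L,M,N), and $\mathbf{A}_r \in \mathbb{R}^{I \times L}$, $\mathbf{B}_r \in \mathbb{R}^{J \times M}$, and $\mathbf{C}_r \in \mathbb{R}^{K \times N}$ are matrices with full column rank. Typically, $\underline{\mathbf{S}}_r$ is referred to as the core tensor, while $\mathbf{A}_r$, $\mathbf{B}_r$, and $\mathbf{C}_r$ are called the factor matrices. Calculating the mode-n unfoldings of (9), we have

$$\begin{aligned}
\mathbf{X}_{(1)} &= \mathbf{A} \operatorname{blockdiag}\big((\underline{\mathbf{S}}_1)_{(1)}, \ldots, (\underline{\mathbf{S}}_R)_{(1)}\big) (\mathbf{C} \odot \mathbf{B})^T, \\
\mathbf{X}_{(2)} &= \mathbf{B} \operatorname{blockdiag}\big((\underline{\mathbf{S}}_1)_{(2)}, \ldots, (\underline{\mathbf{S}}_R)_{(2)}\big) (\mathbf{C} \odot \mathbf{A})^T, \\
\mathbf{X}_{(3)} &= \mathbf{C} \operatorname{blockdiag}\big((\underline{\mathbf{S}}_1)_{(3)}, \ldots, (\underline{\mathbf{S}}_R)_{(3)}\big) (\mathbf{B} \odot \mathbf{A})^T,
\end{aligned} \tag{10}$$

where $\mathbf{A} = [\mathbf{A}_1, \ldots, \mathbf{A}_R]$, $\mathbf{B} = [\mathbf{B}_1, \ldots, \mathbf{B}_R]$, and $\mathbf{C} = [\mathbf{C}_1, \ldots, \mathbf{C}_R]$.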
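The block-diagonal, block-wise Khatri–Rao form of the mode-n unfolding of a BTD can be verified numerically. The sketch below builds a random decomposition with R = 3 blocks (mirroring the x, y, and z components used later) and checks the mode-1 form; the helper names and sizes are illustrative, and the column ordering is the one in which the lowest remaining mode varies fastest.

```python
import numpy as np


def unfold(X, n):
    """Mode-n unfolding with the lowest remaining mode varying fastest."""
    return np.reshape(np.moveaxis(X, n, 0), (X.shape[n], -1), order="F")


def tucker_term(S, A, B, C):
    """S x_1 A x_2 B x_3 C for a single rank-(L, M, N) block."""
    return np.einsum("lmn,il,jm,kn->ijk", S, A, B, C)


def block_diag(mats):
    """Place the matrices along the diagonal of a zero matrix."""
    out = np.zeros((sum(m.shape[0] for m in mats), sum(m.shape[1] for m in mats)))
    r = c = 0
    for m in mats:
        out[r:r + m.shape[0], c:c + m.shape[1]] = m
        r, c = r + m.shape[0], c + m.shape[1]
    return out


def blockwise_khatri_rao(Cs, Bs):
    """[C_1 kron B_1, ..., C_R kron B_R] for partitioned matrices."""
    return np.hstack([np.kron(C, B) for C, B in zip(Cs, Bs)])


rng = np.random.default_rng(3)
I, J, K, L, M, N, R = 8, 7, 6, 2, 2, 2, 3
cores = [rng.standard_normal((L, M, N)) for _ in range(R)]
As = [rng.standard_normal((I, L)) for _ in range(R)]
Bs = [rng.standard_normal((J, M)) for _ in range(R)]
Cs = [rng.standard_normal((K, N)) for _ in range(R)]

X = sum(tucker_term(S, A, B, C) for S, A, B, C in zip(cores, As, Bs, Cs))
X1 = np.hstack(As) @ block_diag([unfold(S, 0) for S in cores]) @ blockwise_khatri_rao(Cs, Bs).T
print(np.allclose(unfold(X, 0), X1))  # True
```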

4. TGDOP and Its Properties

In this section, we introduce the TGDOP to characterize the distribution of localization errors and investigate the potential properties of TGDOP by analyzing the covariance matrix of positioning errors. Initially, we present a decomposed formulation of TGDOP, which explicitly accounts for the anisotropic characteristics inherent in localization errors. Subsequently, we analyze the general expressions for the error covariance matrix under various localization schemes. This analysis aims to establish the universality of the TGDOP model across different localization methodologies. Finally, by mapping the properties of the covariance matrix to the fundamental characteristics of the factor matrices within the TGDOP tensor space, we discuss the theoretical underpinnings that enable data-driven reconstruction using this model.

4.1. Tensor Model of Positioning Error Distribution

In real-world applications, conventional positioning error models suffer from model mismatch because their constrained parametric form limits their ability to capture the multiplicity of error sources found in complex environments. In contrast, tensor models offer far greater expressive power, so they can be fitted directly to data to obtain models that are sufficiently accurate and adaptable for practical applications.

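The expressive power of tensor models stems from their core tensors and factor matrices. Analogous to the singular value decomposition (SVD) in the 2-D case, a 3-D tensor can be characterized by three subspaces, one per mode, each spanned by a set of basis vectors. For each directional component $d \in \{x, y, z\}$, we represent these subspaces by the factor matrices $\mathbf{A}_d$, $\mathbf{B}_d$, and $\mathbf{C}_d$ along the x, y, and z dimensions, respectively. Consequently, we have

$$\underline{\mathbf{T}}_d = \underline{\mathbf{S}}_d \times_1 \mathbf{A}_d \times_2 \mathbf{B}_d \times_3 \mathbf{C}_d, \quad d \in \{x, y, z\}, \tag{11}$$

where $\underline{\mathbf{S}}_d$ is the core tensor of $\underline{\mathbf{T}}_d$. Substituting (11) into (4), $\underline{\mathbf{T}}$ can be expressed as

$$\underline{\mathbf{T}} = \sum_{d \in \{x, y, z\}} \underline{\mathbf{S}}_d \times_1 \mathbf{A}_d \times_2 \mathbf{B}_d \times_3 \mathbf{C}_d. \tag{12}$$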

The theoretical TGDOP model is presented in (12). Employing a BTD framework, this model maps the spatial distribution of the diagonal components of the covariance matrix onto a set of latent core tensors $\{\underline{\mathbf{S}}_x, \underline{\mathbf{S}}_y, \underline{\mathbf{S}}_z\}$. Combining this formulation with the TGDOP measurement model in (5) yields $y_{ijk} = t_{ijk} + n_{ijk}$ for $(i,j,k) \in \Omega_e$, where $t_{ijk}$ is the $(i,j,k)$-th element of the tensor in (12). The probability distribution of the measurement noise satisfies Theorem 1.

Theorem 1

(Measurement Noise Distribution). Consider a 3-D scenario where an emitter is located at $\mathbf{p}$, and there are N positioning results for this emitter. Let $\boldsymbol{\Sigma}$ represent the covariance matrix of the positioning error. When N is sufficiently large relative to the diagonal elements of $\boldsymbol{\Sigma}$, the observation noises of the total and directional TGDOP measurements at $\mathbf{p}$ approximately follow Gaussian distributions.

The proof is relegated to Appendix A. Theorem 1 establishes the condition under which measurements conform to a Gaussian distribution.

Notably, the TGDOP model in (12) involves not only the tensor $\underline{\mathbf{T}}$ but also all of its constituent core tensors and factor matrices. These elements enable the reconstruction of both $\underline{\mathbf{T}}$ and its directional components $\underline{\mathbf{T}}_x$, $\underline{\mathbf{T}}_y$, and $\underline{\mathbf{T}}_z$. In essence, TGDOP can be represented by a higher-order tensor that stacks these three components along an additional directional mode. We show that $\underline{\mathbf{T}}$, which lacks directional information, is a collapse of this directionally informed tensor along its directional dimension. The relationship between the two is further elucidated in Appendix C through the definition of a tensor operation.

4.2. The Covariance Matrix of Positioning Error

The covariance matrix of the positioning error is directly related to the TGDOP measurements, so its properties form the theoretical basis for designing the TGDOP factor matrices. Many studies have derived the covariance matrices of positioning errors for different positioning systems in 2-D cases [36,39,40,41,42]. This subsection derives the covariance matrix of the positioning error for the TDOA positioning system in a 3-D Cartesian coordinate system.

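Let $\boldsymbol{\Sigma} \in \mathbb{R}^{3 \times 3}$ denote the covariance matrix of the positioning error in a 3-D scenario. Assume there are N sensors for TDOA positioning, where the main sensor and the auxiliary sensors are located at $\mathbf{s}_0$ and $\mathbf{s}_i$ ($i = 1, \ldots, N-1$), respectively, and the radiation source is located at $\mathbf{p}$. Let $\tau_i$ denote the TDOA measurement for the i-th group of sensors (auxiliary sensor i relative to the main sensor), $\sigma_{\tau,i}^2$ denote the variance of $\tau_i$, and $\sigma_s^2$ denote the variance of the sensor position error. The i-th row of the direction cosine matrix $\mathbf{G} \in \mathbb{R}^{(N-1) \times 3}$ is given by

$$\mathbf{g}_i^T = \frac{(\mathbf{p} - \mathbf{s}_i)^T}{\|\mathbf{p} - \mathbf{s}_i\|} - \frac{(\mathbf{p} - \mathbf{s}_0)^T}{\|\mathbf{p} - \mathbf{s}_0\|}. \tag{13}$$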

Let c denote the light speed, then the covariance matrix of the positioning error follows that , where

Next, by defining $\mathbf{Q}$ to represent the sum of the covariance matrices of all possible estimation errors, we have

Under different localization regimes, the error covariance matrix can consistently be derived from the geometry matrix $\mathbf{G}$ and the error covariance $\mathbf{Q}$ in a form analogous to (15), because (15) captures the principal sources of localization error. Specifically, $\mathbf{Q}$ represents the inherent estimation errors within the localization system, while $\mathbf{G}$ quantifies the anisotropic amplification of these errors attributable to the station geometry. Therefore, (15) can be considered a general expression for the localization error covariance matrix. Moreover, according to (5), the TGDOP measurements inherit this general expressive capability, which explains their applicability across various localization systems.
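To make the roles of the geometry matrix and the error covariance concrete, the sketch below evaluates an assumed covariance over a small 3-D grid, stores its diagonal entries as x, y, and z component tensors, and sums them to obtain the total error map. It assumes the direction-cosine rows $\mathbf{g}_i^T = (\mathbf{p}-\mathbf{s}_i)^T/\|\mathbf{p}-\mathbf{s}_i\| - (\mathbf{p}-\mathbf{s}_0)^T/\|\mathbf{p}-\mathbf{s}_0\|$ and the linearized least-squares form $\boldsymbol{\Sigma} = \mathbf{G}^{\dagger}\mathbf{Q}(\mathbf{G}^{\dagger})^T$ with a hypothetical diagonal $\mathbf{Q}$ of range-difference variances. Neither is claimed to be the exact form of (14) or (15), and the sensor layout, grid, and noise level are made up for illustration.

```python
import numpy as np

C_LIGHT = 299_792_458.0  # speed of light (m/s)


def direction_cosine_matrix(p, s0, aux):
    """Rows g_i = (p - s_i)/||p - s_i|| - (p - s0)/||p - s0||  (assumed convention)."""
    u0 = (p - s0) / np.linalg.norm(p - s0)
    ui = (p - aux) / np.linalg.norm(p - aux, axis=1, keepdims=True)
    return ui - u0                                   # shape (N - 1, 3)


def error_covariance(p, s0, aux, Q):
    """Illustrative linearised least-squares covariance G^+ Q (G^+)^T."""
    G_pinv = np.linalg.pinv(direction_cosine_matrix(p, s0, aux))
    return G_pinv @ Q @ G_pinv.T


# Hypothetical layout: one main sensor and four auxiliary sensors (metres).
s0 = np.array([0.0, 0.0, 0.0])
aux = np.array([[500.0, 0.0, 10.0], [0.0, 500.0, 20.0],
                [-500.0, 0.0, 30.0], [0.0, -500.0, 40.0]])
sigma_tau = 5e-9                                     # hypothetical TDOA std (s)
Q = (C_LIGHT * sigma_tau) ** 2 * np.eye(len(aux))    # independent range-difference errors

xs = np.linspace(-1000, 1000, 12)
ys = np.linspace(-1000, 1000, 12)
zs = np.linspace(50, 300, 6)
T_comp = np.zeros((3, xs.size, ys.size, zs.size))    # diagonal entries per grid cell
for i, x in enumerate(xs):
    for j, y in enumerate(ys):
        for k, z in enumerate(zs):
            Sigma = error_covariance(np.array([x, y, z]), s0, aux, Q)
            T_comp[:, i, j, k] = np.diag(Sigma)
T_x, T_y, T_z = T_comp
T = T_x + T_y + T_z                                  # total error map (trace of Sigma)
```

Swapping in a different localization scheme would only change how the geometry matrix and the error covariance are formed, which is the generality argued above.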

4.3. Properties of TGDOP Derived from Error Covariance Matrix

Effective reconstruction of the TGDOP model introduced in (12) requires a thorough understanding of the properties of its factor matrices. This subsection investigates these properties by first examining the positioning error covariance matrix. We then translate the characteristics of this covariance matrix to the factor matrices in the tensor space, thereby establishing the smoothness, non-negativity, and low-rank properties of the proposed TGDOP model.

4.3.1. Spatial Smoothness

The notation $C^k$ is commonly used to describe the smoothness of a function. Specifically, $C^0$ indicates that a function is continuous over its domain, while $C^1$ signifies that its first derivative exists and is also continuous over its domain (i.e., the function is continuously differentiable). Theorem 2 outlines the conditions for spatial continuity when the covariance matrix $\boldsymbol{\Sigma}$ is treated as a matrix function of spatial position. A detailed derivation is available in Appendix B.

Theorem 2 (Continuity of Derivatives).

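$\boldsymbol{\Sigma}(x, y, z) \in C^1$ when the geometry matrix $\mathbf{G}(x, y, z)$ satisfies the following conditions:

- $\mathbf{G}(x, y, z) \in C^1$;

- $\mathbf{G}(x, y, z)$ has full column rank.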

(x, y, z) represent the position variables of the radiation source in the Cartesian coordinate system.

Theorem 2 shows that the spatial continuity of the geometry matrix $\mathbf{G}$ plays a crucial role in the TGDOP model. We therefore analyze the continuity conditions for $\mathbf{G}$ in the TDOA localization system, starting with $\partial \mathbf{G} / \partial x$:

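where the rows of $\mathbf{G}$ are defined in (13). It can be readily observed that on any interval $\mathcal{I}$ over which the source does not coincide with a sensor position (so that all sensor-to-source distances are nonzero), the partial derivative $\partial \mathbf{G} / \partial x$ is continuous (i.e., $\partial \mathbf{G} / \partial x \in C^0$). Since $\partial \mathbf{G} / \partial y$ and $\partial \mathbf{G} / \partial z$ have forms analogous to $\partial \mathbf{G} / \partial x$, they share the same continuity properties. Furthermore, $\mathbf{G}$ itself is continuous on $\mathcal{I}$ (i.e., $\mathbf{G} \in C^0$). Additionally, under a typical sensor configuration, $\mathbf{G}$ has full column rank. By Theorem 2, it follows that $\boldsymbol{\Sigma} \in C^1$.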

4.3.2. Non-Negativity

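Theorem 3 states that the covariance matrix is symmetric and positive semi-definite. Let $\mathbf{u}_1 = [1, 0, 0]^T$. Then, for all $(i,j,k) \in \Omega$, we have

$$\big[\boldsymbol{\Sigma}(\mathbf{p}_{ijk})\big]_{11} = \mathbf{u}_1^T \boldsymbol{\Sigma}(\mathbf{p}_{ijk})\, \mathbf{u}_1 \geq 0. \tag{17}$$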

Considering the definition of the elements of $\underline{\mathbf{T}}_x$ in (5), it follows that $\underline{\mathbf{T}}_x$ is element-wise non-negative. By defining $\mathbf{u}_2 = [0, 1, 0]^T$ and $\mathbf{u}_3 = [0, 0, 1]^T$, respectively, similar conclusions can be drawn for $\underline{\mathbf{T}}_y$ and $\underline{\mathbf{T}}_z$. Leveraging this property, non-negativity constraints are applied to the factor matrices of (12) in the subsequent algorithm. This confines the solution space to non-negative real numbers, shrinking the feasible set for parameter optimization.

Theorem 3 (Symmetric Positive Semi-definite).

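Let $\boldsymbol{\Sigma} = \operatorname{cov}(\mathbf{e}, \mathbf{e})$, where $\mathbf{e} \in \mathbb{R}^3$ denotes the positioning error vector. Then, $\boldsymbol{\Sigma}$ satisfies the following:

- $\boldsymbol{\Sigma} = \boldsymbol{\Sigma}^T$;

- $\mathbf{v}^T \boldsymbol{\Sigma} \mathbf{v} \geq 0$ for all $\mathbf{v} \in \mathbb{R}^3$.

Proof.

Write $\boldsymbol{\Sigma} = \mathbb{E}[(\mathbf{e} - \bar{\mathbf{e}})(\mathbf{e} - \bar{\mathbf{e}})^T]$ with $\bar{\mathbf{e}} = \mathbb{E}[\mathbf{e}]$. Symmetry follows because each outer product $(\mathbf{e} - \bar{\mathbf{e}})(\mathbf{e} - \bar{\mathbf{e}})^T$ is symmetric. For any $\mathbf{v} \in \mathbb{R}^3$, $\mathbf{v}^T \boldsymbol{\Sigma} \mathbf{v} = \mathbb{E}\big[(\mathbf{v}^T(\mathbf{e} - \bar{\mathbf{e}}))^2\big] \geq 0$.

□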

4.3.3. Low Rank

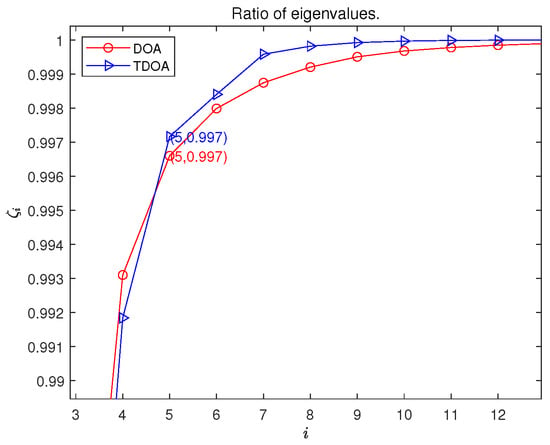

To investigate the hypothesized low-rank property of the proposed tensor model $\underline{\mathbf{T}}$, which is constructed from the individual covariance matrices as per (5), we analyze the rank characteristics of its slices using SVD. We simulate a scenario with high variability in the underlying error parameters to assess whether low-rank structures persist even under such complex conditions.

The positioning error covariance matrix at a location $\mathbf{p}$ can generally be expressed, following (15), as $\boldsymbol{\Sigma} = \mathbf{H}\mathbf{Q}\mathbf{H}^T$. Here, $\mathbf{H}$ is a geometry-dependent transformation matrix, and $\mathbf{Q}$ is a symmetric positive semi-definite matrix encapsulating the statistics of the underlying sensor measurements or intermediate error parameters, which are influenced by environmental factors. Performing a Cholesky decomposition on $\mathbf{Q}$ yields $\mathbf{Q} = \mathbf{L}\mathbf{L}^T$, where $\mathbf{L}$ is a lower triangular matrix. Consequently, the covariance matrix can be written as

$$\boldsymbol{\Sigma} = (\mathbf{H}\mathbf{L})(\mathbf{H}\mathbf{L})^T. \tag{18}$$

The matrix $\mathbf{H}$ is determined by the fixed station configuration. Thus, the variability and specific structure of the positioning error map across different environments are primarily determined by $\mathbf{L}$.

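Assume there are P independent intermediate variables contributing to $\mathbf{Q}$ (e.g., if N is the number of variables per sensor and M is the number of sensors or total measurements, then P could be NM). Then $\mathbf{L}$ is a $P \times P$ matrix, and thus $\mathbf{H} \in \mathbb{R}^{3 \times P}$. We populate the non-zero elements of $\mathbf{L}$ with random values to simulate a complex error environment lacking strong prior structural information. The tensor $\underline{\mathbf{T}}$ is then constructed using these generated matrices, and SVD is performed on its matrix slices. For instance, if each frontal slice $\underline{\mathbf{T}}_{:,:,k}$ is an $I \times J$ matrix (for $k = 1, \ldots, K$), the normalized cumulative energy is defined as $E_k(i) = \sum_{j=1}^{i} \sigma_{k,j}^2 \big/ \sum_{j=1}^{r} \sigma_{k,j}^2$, where $\sigma_{k,j}$ is the j-th singular value of $\underline{\mathbf{T}}_{:,:,k}$ in descending order, and $r = \min(I, J)$. Then, the average cumulative energy over the K slices is defined as $\bar{E}(i) = \frac{1}{K} \sum_{k=1}^{K} E_k(i)$.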
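The slice-wise energy analysis can be sketched as follows. The snippet takes "energy" to be the squared singular values, which is an assumption, and checks the average cumulative energy curve on a synthetic tensor whose frontal slices have rank at most two; it does not reproduce the randomized covariance simulation behind Figure 2.

```python
import numpy as np


def average_cumulative_energy(T: np.ndarray) -> np.ndarray:
    """Average over frontal slices T[:, :, k] of the normalised cumulative
    singular-value energy; energy is taken as squared singular values."""
    curves = []
    for k in range(T.shape[2]):
        s = np.linalg.svd(T[:, :, k], compute_uv=False)  # descending order
        energy = s ** 2
        curves.append(np.cumsum(energy) / energy.sum())
    return np.mean(curves, axis=0)


# Synthetic check: a sum of two rank-1 terms, so every frontal slice has rank <= 2.
rng = np.random.default_rng(4)
A, B, C = rng.random((20, 2)), rng.random((15, 2)), rng.random((10, 2))
T = np.einsum("ir,jr,kr->ijk", A, B, C)
E_bar = average_cumulative_energy(T)
print(np.round(E_bar[:3], 6))  # the curve reaches 1 at the second singular value
```

A curve that saturates after a few indices, as in this synthetic case, is the signature of the low-rank behaviour discussed next.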

Figure 2 plots the average normalized cumulative energy versus the singular value index i for such an analysis. It is found from Figure 2 that the first five singular values account for the majority of the energy in the analyzed tensor. This rapid decay of singular values suggests that the matrix representation of the positioning error distribution, even under these simulated complex conditions, exhibits a strong low-rank characteristic. This finding provides empirical support for the premise that the spatial distribution of positioning error can be effectively modeled by low-rank structures, offering a foundation for the low-rank reconstruction algorithm developed in this paper. While this analysis is based on a simulated scenario, the results suggest a general tendency that our proposed method exploits.

Figure 2.

The plot of normalized cumulative singular value energy versus singular value index i for the TGDOP, with the lower triangular Cholesky factor of the error covariance generated randomly. This indicates the potential low-rank property of the TGDOP.

5. Reconstruction Algorithm for TGDOP

In this section, we propose the sparse reconstruction algorithm for TGDOP. In cases where the positioning error sources are unidentified and only a limited number of emitters are available, we propose a BTD-based algorithm that enhances reconstruction performance under sparse measurements by constraining the factor matrices. In addition, we extend the algorithm to the case where directional measurements of positioning error are available. Specifically, we constrain the corresponding factor matrices with the directional measurements of positioning error in the objective function and reconstruct the optimized factor matrices into TGDOP.

5.1. BTD-Based Sparse Reconstruction Algorithm for TGDOP

We begin by outlining the context for the proposed algorithm. In this paper, a “sparse observational scenario” refers to a situation where calibration emitters are present in only a limited number of grid cells within the target area. Sensors receive electromagnetic signals from these emitters. Statistical analysis of multiple positioning results at each such grid cell yields the positioning errors, whose squared magnitudes constitute the observational values, as detailed in (5).

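As outlined in (12), the primary objective is to reconstruct the complete positioning error tensor. This is achieved by solving the following optimization problem, which models the error tensor as a sum of R BTD components:

$$\min_{\{\underline{\mathbf{S}}_r, \mathbf{A}_r, \mathbf{B}_r, \mathbf{C}_r\}_{r=1}^{R}} \Big\| \underline{\mathbf{Y}} - \sum_{r=1}^{R} \underline{\mathbf{S}}_r \times_1 \mathbf{A}_r \times_2 \mathbf{B}_r \times_3 \mathbf{C}_r \Big\|_F^2. \tag{19}$$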

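In this formulation, $\underline{\mathbf{Y}} \in \mathbb{R}^{I \times J \times K}$ denotes the measurement tensor. Each term in the summation, $\underline{\mathbf{S}}_r \times_1 \mathbf{A}_r \times_2 \mathbf{B}_r \times_3 \mathbf{C}_r$, represents a BTD component comprising a core tensor $\underline{\mathbf{S}}_r \in \mathbb{R}^{L_r \times M_r \times N_r}$ and associated factor matrices $\mathbf{A}_r \in \mathbb{R}^{I \times L_r}$, $\mathbf{B}_r \in \mathbb{R}^{J \times M_r}$, and $\mathbf{C}_r \in \mathbb{R}^{K \times N_r}$. In accordance with (12), the number of BTD blocks is set to R = 3, representing the three directional components x, y, and z. The dimensions satisfy $L_r \leq I$, $M_r \leq J$, and $N_r \leq K$ (for $r = 1, 2, 3$), and the factor matrices are constrained to have full column rank, a common requirement for model identifiability.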

5.1.1. Designing the Structure of Factor Matrices

Section 4.3 analyzes the intrinsic properties of the positioning error tensor $\underline{\mathbf{T}}$. The factor matrices in the tensor decomposition represent projections of the features of $\underline{\mathbf{T}}$ along its different modes, so these properties should inform the structure of the factor matrices. Theorem 2 reveals the spatial smoothness inherent in positioning errors. This implies that the column vectors of the factor matrices, which capture variations along the spatial dimensions, can represent most of the information using low-order polynomials. This prior knowledge is particularly valuable when observational data are sparse. Standard random initialization of the factor matrices does not incorporate this prior, which can leave the model poorly constrained by sparse measurements and increase the risk of convergence to suboptimal local minima.

Theorem 2 establishes the continuity of elements within the tensor of positioning error distribution. Here, we relate this property to the factor matrices of the tensor via Theorem 4, the detailed proof of which is provided in Appendix E.

Theorem 4

(Vandermonde-like structure). Given that the error distribution tensor exhibits continuity with respect to spatial position and possesses a low-rank structure, it follows that the column vectors of its factor matrices reside in a subspace generated by low-order polynomials.

Based on Theorem 4, we propose structuring the column vectors of each factor matrix using polynomial generators. The optimization then focuses on two main steps. First, a polynomial coefficient matrix is randomly initialized. Second, these coefficients are used to construct the columns of the actual factor matrix. Subsequent optimization iterations refine these polynomial coefficients rather than directly adjusting the elements of the factor matrices. This methodology explicitly incorporates the prior knowledge of spatial smoothness, promoting spatial continuity in the reconstructed tensor. Furthermore, by embedding this structural prior, the approach reduces the search space and guides the optimization, thereby decreasing the likelihood of converging to undesirable local optima.

Consider optimizing $\mathbf{A}_r$ in (19) as an illustrative example. We first randomly initialize a polynomial coefficient matrix $\boldsymbol{\Theta}_r \in \mathbb{R}^{m \times L_r}$, where m is the number of coefficients defining a polynomial of degree $m - 1$. Each of the $L_r$ columns of $\mathbf{A}_r$ is generated from a distinct set of m polynomial coefficients. The initial factor matrix is then constructed as

$$\mathbf{A}_r^{(0)} = \mathbf{V}\boldsymbol{\Theta}_r, \tag{20}$$

where $\mathbf{V} \in \mathbb{R}^{I \times m}$, with entries $[\mathbf{V}]_{i,q} = x_i^{\,q-1}$ for the grid coordinates $x_i$ along the first mode, is the Vandermonde matrix whose columns form a basis for polynomials up to degree $m - 1$. Since $\mathbf{V}$ has full column rank (given $I \geq m$ and distinct $x_i$), optimizing the objective function with respect to $\mathbf{A}_r$ within the column space of $\mathbf{V}$ is equivalent to optimizing it with respect to $\boldsymbol{\Theta}_r$. The optimization task thus shifts from determining $\mathbf{A}_r$ (with $I L_r$ parameters) to determining $\boldsymbol{\Theta}_r$ (with $m L_r$ parameters), significantly reducing the dimension of the solution space when $m \ll I$. This reduction smooths the optimization landscape and helps prevent the algorithm from getting trapped in poor local minima associated with high-frequency noise, thereby improving robustness to random initialization.

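To ensure the non-negativity of the factor matrices indicated by Theorem 3, we define the final factor matrix through an element-wise squaring operation:

$$\mathbf{A}_r = (\mathbf{V}\boldsymbol{\Theta}_r) \circledast (\mathbf{V}\boldsymbol{\Theta}_r). \tag{21}$$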

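Upon substituting (20) and (21) into the objective function $f$ defined in (19), the optimization problem concerning the factor matrix $\mathbf{A}_r$ can be re-expressed as

$$\min_{\boldsymbol{\Theta}_r} f\big((\mathbf{V}\boldsymbol{\Theta}_r) \circledast (\mathbf{V}\boldsymbol{\Theta}_r)\big). \tag{22}$$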

Consequently, optimizing the objective function in (19) with respect to the factor matrix $\mathbf{A}_r$ (now denoted $\mathbf{A}_r(\boldsymbol{\Theta}_r)$ to reflect its construction) is equivalent to optimizing the polynomial coefficient matrix $\boldsymbol{\Theta}_r$. For brevity, any subsequent reference to optimizing a factor matrix implies optimizing its underlying polynomial coefficient matrix.
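A minimal sketch of the polynomial parameterization in (20) and (21): a Vandermonde basis over the grid coordinates, a random coefficient matrix, and element-wise squaring. Rescaling the coordinates to [-1, 1] before building the basis is an added choice for numerical conditioning, not part of the method as described, and the grid sizes and helper names are illustrative.

```python
import numpy as np


def vandermonde_basis(coords: np.ndarray, m: int) -> np.ndarray:
    """V[i, q] = x_i ** q for q = 0, ..., m - 1 (polynomials up to degree m - 1).
    Coordinates are rescaled to [-1, 1] first (added choice, for conditioning)."""
    x = np.asarray(coords, dtype=float)
    x = 2.0 * (x - x.min()) / (x.max() - x.min()) - 1.0
    return np.vander(x, N=m, increasing=True)


def polynomial_factor(V: np.ndarray, Theta: np.ndarray) -> np.ndarray:
    """A = (V Theta) * (V Theta): smooth columns, element-wise non-negative."""
    P = V @ Theta
    return P * P


I, m, L = 100, 4, 3
coords = np.linspace(0.0, 2000.0, I)        # hypothetical grid coordinates (m)
V = vandermonde_basis(coords, m)            # (I, m), full column rank since I >= m
Theta = np.random.default_rng(5).standard_normal((m, L))
A = polynomial_factor(V, Theta)             # (I, L) factor matrix
print(A.shape, Theta.size, A.size)          # (100, 3) 12 300
```

Only the 12 entries of `Theta` would be updated during optimization, instead of the 300 entries of `A`; after squaring, each column is a non-negative polynomial of degree at most 2(m - 1).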

5.1.2. Sparse Formulation of the Objective Function

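In practical applications, tensor reconstruction often addresses scenarios with sparse measurements, where the measured data cover only a minor portion of the target area. To model this, we introduce a binary sampling tensor $\underline{\mathbf{W}} \in \{0, 1\}^{I \times J \times K}$, where $w_{ijk} = 1$ if the element is observed (i.e., $(i,j,k) \in \Omega_e$) and $w_{ijk} = 0$ otherwise. Assuming the unobserved entries in $\underline{\mathbf{Y}}$ are represented as zeros, the optimization problem for sparse data is formulated as

$$\min_{\{\underline{\mathbf{S}}_r, \mathbf{A}_r, \mathbf{B}_r, \mathbf{C}_r\}_{r=1}^{R}} \Big\| \underline{\mathbf{W}} \circledast \Big( \underline{\mathbf{Y}} - \sum_{r=1}^{R} \underline{\mathbf{S}}_r \times_1 \mathbf{A}_r \times_2 \mathbf{B}_r \times_3 \mathbf{C}_r \Big) \Big\|_F^2. \tag{23}$$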
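The sampling tensor and the masked objective can be sketched as below. The uniform random mask generator, the 1% sampling ratio, and the block sizes are illustrative assumptions; the factor matrices are passed in directly, so the polynomial parameterization is omitted here.

```python
import numpy as np


def random_mask(shape, ratio, rng):
    """Binary sampling tensor W with round(ratio * size) observed cells, chosen uniformly."""
    size = int(np.prod(shape))
    W = np.zeros(size)
    W[rng.choice(size, size=max(1, round(ratio * size)), replace=False)] = 1.0
    return W.reshape(shape)


def btd_model(cores, As, Bs, Cs):
    """sum_r S_r x_1 A_r x_2 B_r x_3 C_r."""
    return sum(np.einsum("lmn,il,jm,kn->ijk", S, A, B, C)
               for S, A, B, C in zip(cores, As, Bs, Cs))


def masked_objective(Y, W, cores, As, Bs, Cs):
    """||W * (Y - model)||_F^2, with unobserved entries of Y stored as zeros."""
    residual = W * (Y - btd_model(cores, As, Bs, Cs))
    return float(np.sum(residual ** 2))


rng = np.random.default_rng(6)
shape, R, rank = (20, 20, 10), 3, 2
cores = [rng.standard_normal((rank,) * 3) for _ in range(R)]
As, Bs, Cs = ([rng.standard_normal((n, rank)) for _ in range(R)] for n in shape)
W = random_mask(shape, ratio=0.01, rng=rng)            # 1% of the 4000 cells observed
Y = W * btd_model(cores, As, Bs, Cs)                   # noiseless sparse measurements
print(int(W.sum()), masked_objective(Y, W, cores, As, Bs, Cs))  # 40 0.0
```

Only observed cells contribute to the residual, so the objective is blind to whatever the model predicts at unobserved cells; the structural priors are what constrain those values.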

5.1.3. Solving Equation (23) Using Block Coordinate Descent

We employ a Block Coordinate Descent (BCD) strategy to solve (23), iteratively minimizing the objective function with respect to one block of variables while keeping the others fixed. This leads to the following four sub-problems, derived from the mode-n matricized forms of the objective function:

where

The variables to be optimized in (23) are the sets of factor matrices , , (collectively denoted ), and the set of core tensors . Denoting the objective function in (23) by , the BCD method iteratively solves the following:

Each sub-problem in (26) is a linear least squares problem in the variable block being updated and is therefore convex. Consequently, an Alternating Least Squares (ALS) approach, a specific type of BCD, can be employed, as detailed in Algorithm 1.
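To make the block structure concrete, the sketch below implements one BCD block: the joint update of the R core tensors with all factor matrices held fixed. In this block the model is linear in the core entries, so the update reduces to an ordinary least-squares fit on the observed entries. This is our own illustration with hypothetical function names, not the paper's Algorithm 1, and it does not cover the factor-matrix blocks.

```python
import numpy as np

def core_design_matrix(A, B, C):
    # Column (p, q, s) is the flattened outer product A[:, p] o B[:, q] o C[:, s].
    n_rows = A.shape[0] * B.shape[0] * C.shape[0]
    n_cols = A.shape[1] * B.shape[1] * C.shape[1]
    return np.einsum("ip,jq,ks->ijkpqs", A, B, C).reshape(n_rows, n_cols)

def update_cores(X_obs, W, factors):
    # With every factor matrix fixed, the model is linear in the R cores, so all
    # cores are estimated jointly by least squares on the observed entries only.
    obs = W.ravel().astype(bool)
    design = np.hstack([core_design_matrix(*f)[obs] for f in factors])
    coef, *_ = np.linalg.lstsq(design, X_obs.ravel()[obs], rcond=None)
    cores, start = [], 0
    for A, B, C in factors:
        dims = (A.shape[1], B.shape[1], C.shape[1])
        size = int(np.prod(dims))
        cores.append(coef[start:start + size].reshape(dims))
        start += size
    return cores

rng = np.random.default_rng(2)
shape, ranks, R = (15, 15, 8), (2, 2, 2), 2
factors = [tuple(rng.standard_normal((n, r)) for n, r in zip(shape, ranks)) for _ in range(R)]
true_cores = [rng.standard_normal(ranks) for _ in range(R)]
X = sum(np.einsum("pqs,ip,jq,ks->ijk", G, *f) for G, f in zip(true_cores, factors))
W = (rng.random(shape) < 0.2).astype(float)     # about 20% of entries observed
est = update_cores(W * X, W, factors)
print(max(np.abs(e - g).max() for e, g in zip(est, true_cores)))  # near machine precision
```

Building the full design matrix is only practical for small tensors; it is used here for clarity rather than efficiency.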

Algorithm 1. ALS algorithm for solving (23).

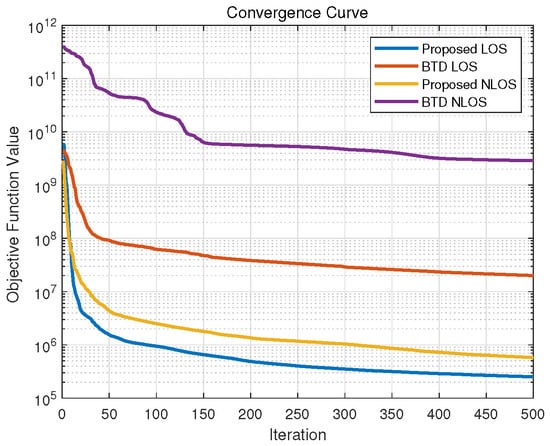

Because this study focuses on the tensor modeling methodology, we employed a straightforward ALS-BCD optimization scheme without an exhaustive comparison of alternative reconstruction strategies. Figure 3 shows the convergence curves of the proposed algorithm and the classical BTD algorithm in LOS and NLOS scenarios, averaged over 100 random initializations. The x-axis represents the number of ALS optimization steps, and the y-axis represents the total squared Frobenius norm error defined in (23). This value is computed on the normalized measurement tensor, so it indicates the relative convergence trend rather than an absolute error level. The proposed algorithm converges faster and more stably than the classical BTD method and is robust to the choice of initial values. To extend the algorithm to larger tensors, advanced techniques such as momentum-based updates, stochastic optimization, or distributed computation could be incorporated to further improve convergence speed and robustness.

Figure 3.

The average convergence curves of the proposed algorithm and the classical BTD algorithm in LOS and NLOS scenarios, with 100 random initializations.

The per-iteration computational complexity of this solution process, derived in Appendix D, is

where denotes the number of observed samples. This complexity is acceptable under the low-rank assumption. When the multilinear rank is large, however, reconstruction accuracy must be traded off to keep computation time manageable; more efficient optimization strategies may remove this bottleneck in future work.

5.2. Solution Approach with Available Directional Measurements

The rotational ambiguity of sub-tensors is a known challenge in tensor decomposition and is often handled with prior constraints, initialization, or post-processing [28]. This subsection explores an alternative solution strategy for the case in which directional components of the positioning error are available as measurements. We emphasize that the proposed method does not decompose the tensor into three arbitrary blocks. Instead, it reconstructs three physically meaningful components grounded in the definition of the error covariance matrix. The physical meaning of each component is therefore fixed by the model’s design from the outset, rather than inferred from an ambiguous decomposition result.

Existing research on positioning errors has largely concentrated on scalar distributions (e.g., GDOP-based models) that overlook directional characteristics, but this directional information can be recovered. When a set of N positioning measurements is collected for an emitter at a known location , the directional error components can be extracted through statistical analysis.
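One straightforward way to extract the directional components, in line with the setup of Appendix A where errors are measured relative to the true location, is to average the squared deviations of repeated fixes from the known emitter position along each axis. The sketch below assumes an N×3 array of fixes; the emitter position and per-axis standard deviations are made-up values for illustration.

```python
import numpy as np

def directional_error_components(estimates, true_position):
    # estimates: (N, 3) array of repeated position fixes for one emitter.
    # Returns per-axis mean squared deviations from the known true location
    # (estimates of the covariance diagonal) and their sum (scalar error).
    dev = np.asarray(estimates, dtype=float) - np.asarray(true_position, dtype=float)
    per_axis = np.mean(dev ** 2, axis=0)
    return per_axis, float(per_axis.sum())

rng = np.random.default_rng(3)
p0 = np.array([1000.0, 2000.0, 500.0])   # hypothetical emitter location (m)
sigma = np.array([12.0, 8.0, 4.0])       # hypothetical per-axis error std (m)
fixes = p0 + rng.standard_normal((20_000, 3)) * sigma
per_axis, total = directional_error_components(fixes, p0)
print(per_axis, total)                   # per_axis close to sigma**2 = [144, 64, 16]
```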

The availability of these directional measurements enables a refined modeling approach. We can reformulate the objective function to model each of the R observed directional tensors with a dedicated BTD component, leading to the expression:

This objective then decomposes into the following sub-problems:

Directional measurements provide richer constraints on the factor matrices and core tensors, potentially improving the accuracy of directional positioning error reconstruction. As with Algorithm 1, a BCD approach can be applied to solve these convex sub-problems; the detailed algorithmic steps are omitted here for brevity.

5.3. Lower Bound on Sample Complexity

In this subsection, we discuss a lower bound on the amount of data required by the proposed algorithm under uniformly random sampling. We consider the recovery of a K-th order tensor , which is represented as a sum of R low-rank Tucker tensors : . Each component tensor has a Tucker decomposition:

where is the core tensor and is the factor matrix for component r along mode k, with being the mode-k Tucker rank. Analyses of Tucker decomposition [43,44] indicate that the sample-complexity lower bound is determined by the most difficult-to-recover matrix unfolding, . The recovery difficulty of a matrix is lower-bounded by a quantity proportional to , that is,

In Section 5.1.1, we assume that each column of every factor matrix is a polynomial sampled at distinct points. This structural prior can be formulated as a factorization , where and . Here, represents the mode-k rank of the r-th component of the tensor, and represents the order used for polynomial approximation. Each row of contains the coefficients for the corresponding column of in the basis defined by .

The mode-k unfolding of the r-th component tensor, , is given by

Substituting the polynomial constraint for all modes , we obtain

The expression for shows that its column space is a subspace of the column space of the known matrix :

The search for the left singular vectors is thus restricted from the ambient space to the -dimensional subspace spanned by the columns of . The effective row dimension is reduced from to . Using the mixed-product property of the Kronecker product on the right-hand side of the expression:

This implies that the row space of is contained within the column space of the matrix , whose dimension is . The effective column dimension is therefore reduced from to .
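The subspace argument above can be checked numerically. The sketch below builds a single Tucker component whose factor matrices are Vandermonde bases multiplied by random coefficients (the element-wise squaring of Section 5.1.1 is omitted, matching the factorization used in this subsection) and measures how much of the mode-1 unfolding lies outside the predicted column and row spaces. All sizes are arbitrary. NumPy's row-major reshape orders the unfolding's columns by (j, k), so the Kronecker factors of the row-space basis appear in the order matching that column ordering.

```python
import numpy as np

def residual_outside(M, basis):
    # Relative norm of the part of M's columns lying outside span(basis).
    Q, _ = np.linalg.qr(basis)
    return np.linalg.norm(M - Q @ (Q.T @ M)) / np.linalg.norm(M)

rng = np.random.default_rng(4)
I, m, L = (30, 25, 20), (4, 4, 3), (2, 2, 2)   # grid sizes, coefficients, Tucker ranks
V = [np.vander(np.linspace(-1, 1, n), k, increasing=True) for n, k in zip(I, m)]
F = [Vk @ rng.standard_normal((k, r)) for Vk, k, r in zip(V, m, L)]  # polynomial factors
G = rng.standard_normal(L)
T = np.einsum("pqs,ip,jq,ks->ijk", G, *F)

T1 = T.reshape(I[0], -1)          # mode-1 unfolding, columns ordered by (j, k)
print(residual_outside(T1, V[0]))                   # column space inside span(V1)
print(residual_outside(T1.T, np.kron(V[1], V[2])))  # row space inside span(V2 kron V3)
print("effective size:", (m[0], m[1] * m[2]), "instead of", T1.shape)
```

Both residuals are at the level of floating-point round-off, consistent with the effective dimensions shrinking from the grid sizes to the numbers of polynomial coefficients.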

For the matrix recovery problem of , we conclude that it is rank- with effective row dimension and column dimension. Applying the standard lower bound for matrix recovery yields the bound for this specific unfolding:

Theorem 5 gives the overall sample complexity lower bound for the BTD recovery problem, showing that the sample complexity is determined by the bottleneck among all unfoldings.

Theorem 5.

For the recovery of a BTD tensor with polynomial factor matrix constraints, the required number of measurements m has the following lower bound:

where is the Tucker rank and is the degree of freedom for the factor matrix of component r along mode k.

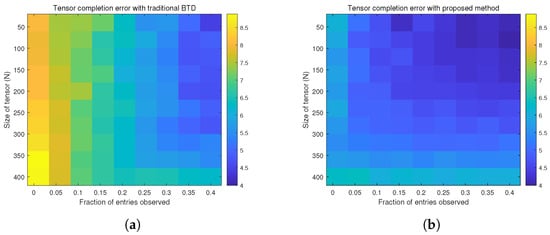

Figure 4 shows the log-reconstruction error under different observation ratios. The x-axis is the observation ratio, i.e., the proportion (between 0 and 1) of tensor elements that are observed. The y-axis denotes the size N of the three-dimensional tensor, and the heatmap colorbar gives the reconstruction error on a logarithmic scale. The performance of the conventional BTD algorithm and the proposed method is presented in Figure 4a and Figure 4b, respectively. Under identical sampling conditions, the proposed method achieves a lower reconstruction error and therefore needs fewer samples to achieve high-fidelity tensor reconstruction.

Figure 4.

Analysis of the impact of sample size on the performance of tensor reconstruction. (a) Reconstruction error of the conventional BTD model. (b) Reconstruction error of the BTD model with factor matrix prior constraints.

6. Results with Measurements and Simulations

To evaluate the performance and robustness of the proposed algorithm under diverse conditions, we conduct simulations on two typical TDOA-based positioning scenarios, denoted as and , respectively. The findings and conclusions also extend to Direction-of-Arrival (DOA) positioning scenarios, but DOA results are omitted from this paper for brevity.

The scenario uses a 3-D TDOA positioning system with four sensors, with three TDOA measurements serving as intermediate parameters. The standard deviations of these TDOA measurement errors are set to 18 ns, 20 ns, and 25 ns, and the standard deviation of sensor position errors is set to 0.5 m. The sensor coordinates (in meters) are , , , and , forming a “Y”-shaped constellation. This configuration was chosen both because it is the most common and a high-performing geometry in distributed positioning and because it matches the experimental setup described later. Because all TDOA measurements share a common reference sensor, their errors are correlated. Let the TDOA error vector be . The correlation matrix for these TDOA errors has . The maximum simulated detection range is 8 km, with the target area’s elevation spanning 0.5 km to 5 km. This target volume is discretized into a grid of points. Scenario builds upon by incorporating multipath errors within a quarter of the region, at elevations from 0.5 km to 1.4 km. The multipath error affecting the TDOA estimates is modeled using an exponential distribution with a mean of 100 ns.
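A minimal sketch of this error model follows. It draws correlated Gaussian TDOA errors with the stated standard deviations and optionally adds exponentially distributed multipath delays with a mean of 100 ns. The common off-diagonal correlation `rho = 0.5` is an assumption (it is the value that arises when the underlying TOA errors are independent with equal variances); applying the multipath delay independently to each TDOA is also an assumption, and sensor-position errors are not modeled here.

```python
import numpy as np

def tdoa_error_covariance(stds_ns, rho):
    # Equal off-diagonal correlation rho between all TDOA pairs (assumption).
    s = np.asarray(stds_ns, dtype=float)
    corr = np.full((s.size, s.size), rho)
    np.fill_diagonal(corr, 1.0)
    return corr * np.outer(s, s)

def sample_tdoa_errors(n, stds_ns, rho, rng, nlos=None, mean_multipath_ns=100.0):
    # Correlated zero-mean Gaussian TDOA errors in nanoseconds.
    cov = tdoa_error_covariance(stds_ns, rho)
    errors = rng.multivariate_normal(np.zeros(len(stds_ns)), cov, size=n)
    if nlos is not None:
        # Non-negative exponential multipath delay added where nlos is True,
        # drawn independently for each TDOA (assumption).
        extra = rng.exponential(mean_multipath_ns, size=errors.shape)
        errors = errors + extra * np.asarray(nlos, dtype=bool)[:, None]
    return errors

rng = np.random.default_rng(5)
stds = [18.0, 20.0, 25.0]
los = sample_tdoa_errors(100_000, stds, rho=0.5, rng=rng)
print(np.round(np.corrcoef(los.T), 2))          # off-diagonal entries near 0.5

nlos_mask = np.arange(100_000) < 25_000         # hypothetical: a quarter of samples in the NLOS zone
mixed = sample_tdoa_errors(100_000, stds, rho=0.5, rng=rng, nlos=nlos_mask)
print(mixed[nlos_mask].mean(axis=0))            # roughly a 100 ns bias per TDOA
```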

To quantitatively assess the algorithm’s performance, several error metrics were employed. The Mean Frobenius Norm Error (MFNE) evaluates the average reconstruction error of a tensor over multiple trials: $\mathrm{MFNE} = \frac{1}{M}\sum_{m=1}^{M}\|\mathcal{X} - \hat{\mathcal{X}}_m\|_F$, where $\mathcal{X}$ is the true tensor, $\hat{\mathcal{X}}_m$ is the tensor reconstructed in the m-th Monte Carlo trial, and M is the total number of trials. The Relative Frobenius Norm Error (RFNE) measures the reconstruction error relative to the magnitude of the true tensor: $\mathrm{RFNE} = \|\mathcal{X} - \hat{\mathcal{X}}\|_F / \|\mathcal{X}\|_F$. This metric is typically calculated for each trial or averaged over M trials. The Signal-to-Noise Ratio (SNR) is the ratio of the true signal power to the noise power in the measurements, defined in decibels (dB) as $\mathrm{SNR} = 10\log_{10}\left(\|\mathcal{X}\|_F^2 / \|\mathcal{Y} - \mathcal{X}\|_F^2\right)$, where $\mathcal{X}$ is the true underlying (noise-free) tensor and $\mathcal{Y}$ is the observed noisy tensor.
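The three metrics can be computed as below. This is a sketch that assumes MFNE averages the unsquared Frobenius norm of the error; `add_noise_at_snr` is a hypothetical helper that rescales white Gaussian noise so the noisy tensor hits a target SNR exactly.

```python
import numpy as np

def mfne(X_true, X_hats):
    # Mean (unsquared) Frobenius-norm error over M Monte Carlo reconstructions.
    return float(np.mean([np.linalg.norm(X_true - Xh) for Xh in X_hats]))

def rfne(X_true, X_hat):
    # Frobenius-norm error relative to the norm of the true tensor.
    return float(np.linalg.norm(X_true - X_hat) / np.linalg.norm(X_true))

def snr_db(X_true, Y):
    # Signal power over noise power (Y - X_true), in decibels.
    return float(10.0 * np.log10(np.sum(X_true ** 2) / np.sum((Y - X_true) ** 2)))

def add_noise_at_snr(X_true, target_db, rng):
    # Hypothetical helper: rescale white Gaussian noise to reach target_db exactly.
    noise = rng.standard_normal(X_true.shape)
    scale = np.linalg.norm(X_true) / (np.linalg.norm(noise) * 10.0 ** (target_db / 20.0))
    return X_true + scale * noise

rng = np.random.default_rng(8)
X = rng.random((10, 10, 5)) + 1.0
Y = add_noise_at_snr(X, 22.0, rng)
print(snr_db(X, Y), rfne(X, Y))
```

For a 3-D array, `np.linalg.norm` with no `ord` or `axis` argument returns the 2-norm of the flattened array, which is the Frobenius norm used here.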

6.1. Multilinear Rank Analysis

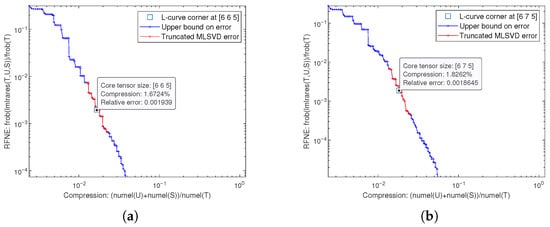

In this subsection, we employ the L-curve method to determine a suitable multilinear rank for the tensor model optimized by our proposed algorithm. The L-curve corner identifies the inflection point beyond which further increases in model parameters yield diminishing reductions in error; the rank at this corner is selected as the optimal multilinear rank, balancing accuracy and model simplicity. Because low rank is defined relative to a tensor’s dimensions, we estimate the multilinear rank for the target tensor.

To manage the computational load of rank analysis, especially for large tensors, we introduce a down-sampling factor . The analysis is performed on a down-sampled version of the target data tensor, denoted as . The L-curve plots a measure of reconstruction error against model complexity. We locate its corner by evaluating various combinations of multilinear ranks and selecting the ranks near the L-curve’s inflection point. This selection is further guided by the criterion that the RFNE between the and its low-rank approximation falls below a predefined threshold .
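The rank search can be sketched with a truncated higher-order SVD (HOSVD) standing in for the paper's BTD fit, which keeps the example short. For every rank combination it records the compression ratio (core plus factor parameters over tensor size) and the RFNE, then returns the cheapest combination whose RFNE is below the threshold, i.e., the first point of the Pareto front below that threshold. Function names, the candidate range and the test tensor are illustrative assumptions.

```python
import numpy as np
from itertools import product

def unfold(T, mode):
    # Mode-k unfolding (column ordering does not affect the singular vectors).
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

def truncated_hosvd(T, ranks):
    # Leading left singular vectors per mode, projected core, then reconstruction.
    U = [np.linalg.svd(unfold(T, k), full_matrices=False)[0][:, :r]
         for k, r in enumerate(ranks)]
    core = np.einsum("ijk,ip,jq,ks->pqs", T, *U)
    return np.einsum("pqs,ip,jq,ks->ijk", core, *U)

def select_rank(T, candidate_ranks, eps):
    # Returns (compression ratio, RFNE, ranks) of the cheapest feasible model.
    results = []
    for ranks in product(*candidate_ranks):
        n_params = int(np.prod(ranks)) + sum(n * r for n, r in zip(T.shape, ranks))
        err = np.linalg.norm(T - truncated_hosvd(T, ranks)) / np.linalg.norm(T)
        results.append((n_params / T.size, float(err), ranks))
    feasible = [res for res in results if res[1] < eps]
    return min(feasible) if feasible else None

rng = np.random.default_rng(9)
shape, true_ranks = (20, 18, 16), (2, 3, 2)
G = rng.standard_normal(true_ranks)
U = [rng.standard_normal((n, r)) for n, r in zip(shape, true_ranks)]
T = np.einsum("pqs,ip,jq,ks->ijk", G, *U)
best = select_rank(T, [range(1, 5)] * 3, eps=1e-2)
print(best)   # typically recovers the generating ranks (2, 3, 2)
```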

Figure 5 illustrates the estimation of the tensor’s multilinear rank using the L-curve method at . The x-axis represents the compression ratio, defined as the number of parameters required for reconstruction as a percentage of the total tensor size, while the y-axis denotes the RFNE. The choice of offers an effective trade-off: it reduces the number of elements by a factor of , enabling rapid iterative rank searching, while preserving the macro-scale spatial correlation of the error distribution, which is supported by the continuity of the TGDOP model. Additional experiments further show that, for down-sampling factors from 1 to 20, the estimated multilinear rank of each mode consistently remains below 10% of that mode’s original dimension, underscoring the inherent low-rank nature of the data.

Figure 5.

Multilinear rank estimation via the L-curve method at . Multilinear ranks are iterated over, and the first point on the Pareto front whose error falls below the predefined threshold is selected as the L-curve corner. (a) The L-curve for scenario . (b) The L-curve for scenario .

6.2. Performance Under Sparse Measurements

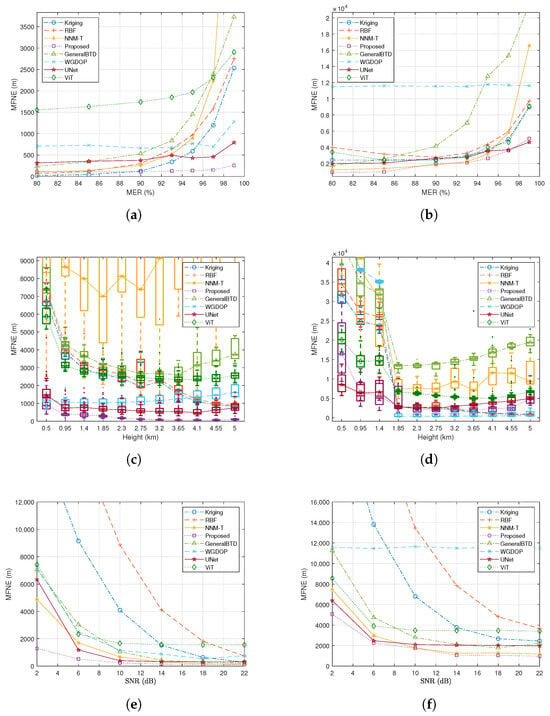

This subsection evaluates the algorithm’s reconstruction performance when the emitters are sparsely and randomly distributed within the target area. We define the missing emitter ratio (MER) as the proportion of grid points in the target area lacking emitter data [45]. To assess performance under increasing sparsity, we vary the MER from 80% to 99%, corresponding to observation ratios from 20% to 1%, where the observation ratio is defined as 100% − MER. For these experiments, the down-sampling factor is set to 10, the SNR is maintained at 22 dB, and the number of Monte Carlo trials (M) is 50.

Figure 6 compares the reconstruction performance of the proposed algorithm against several baseline methods across different MER levels. The baselines include both classic reconstruction algorithms and deep learning (DL)-based generative algorithms. Note that the scenario addressed in this paper is a “single-shot reconstruction” problem, characterized by extremely sparse valid observations and the absence of a historical database. Conventional DL methods typically fail under such zero-shot, extremely sparse conditions because sufficient training data cannot be acquired. To enable the neural networks to function in this context, we generated 50 sets of historical data to serve as prior information for each training session. Although this comparison favors the DL baselines, we include these results to verify the superiority of the proposed algorithm from another perspective.

Figure 6.

Comparison of reconstruction performance between the proposed algorithm and baseline methods (). (a,b) Reconstruction versus at for and . (c,d) Boxplots of reconstruction versus elevation with (observation ratio = 1%) and for and . (e,f) Reconstruction versus with (observation ratio = 20%) for and .

Figure 6a,b show that the proposed algorithm achieves the lowest , indicating superior reconstruction performance. Specifically, at a high sparsity level of (an observation ratio of only 1%), the proposed algorithm improves performance by 43.58% to 97.23% relative to the baseline methods, as detailed in Table 1. Here, is defined as . The GeneralBTD method is the standard BTD without the proposed physics-based polynomial constraints, so the large performance gap between it and the proposed algorithm quantifies the contribution of these constraints. Furthermore, the comparison indicates that the proposed algorithm attains comparable reconstruction accuracy with significantly fewer emitters, which can translate into considerable operational cost savings.

Table 1.

Reconstruction Performance Comparison: , , (Observation Ratio = 1%), .

Figure 6c,d present boxplots illustrating the distribution of at different elevations for . As shown in Figure 6d, the NLOS error introduced at altitudes between 0.5 km and 1.4 km causes large reconstruction errors for the WGDOP algorithm in this range, which is why its MFNE in Figure 6b is much higher than that of the other algorithms. The boxplots summarize key statistics such as quartiles and medians; a smaller interquartile range and a more compact box generally indicate more consistent performance (i.e., higher robustness) across elevations. By this measure, the proposed algorithm is more consistent than the baseline methods.

6.3. Performance Under Noise

In this subsection, we investigate the impact of observational noise on the algorithm’s reconstruction performance. We evaluate the proposed and baseline schemes for SNR values ranging from 2 dB to 22 dB. For these experiments, the down-sampling factor was fixed at 10, the MER at 80%, and the number of Monte Carlo trials (M) at 50.

Figure 6e,f illustrate the influence of the SNR level on reconstruction performance. Across all tested noise levels, the proposed algorithm consistently achieves the best reconstruction performance and is more resilient to noise than the other methods.

To quantitatively assess this noise resilience, we define a metric termed “Slope” as the average absolute rate of change of the MFNE with respect to the SNR:

where and represent the n-th smallest SNR value and its corresponding reconstruction error, respectively, and denotes the total number of distinct SNR levels evaluated in the experiment. A smaller Slope value indicates better noise robustness. Table 2 presents the Slope values for all algorithms in both scenarios. Relative to the baseline methods, the proposed algorithm improves the Slope metric by 7.11% to 95.92%, indicating superior noise adaptability. In scenario , the WGDOP method’s low Slope value is notable; it arises primarily because NLOS errors, rather than observational noise, dictate its reconstruction accuracy. Figure 6d shows high reconstruction errors for this method at elevations from 0.5 km to 1.4 km due to multipath effects. Thus, despite its good noise robustness, its overall reconstruction performance is substantially inferior to that of the proposed algorithm, corroborating the conclusions in Table 2.
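A minimal sketch of the Slope metric follows, assuming that the “average absolute rate of change” is the mean of the absolute finite differences between consecutive SNR levels sorted in ascending order; the normalization used in the paper may differ, and the MFNE values below are made up for illustration.

```python
import numpy as np

def slope_metric(snr_db, errors):
    # Mean absolute finite-difference slope of error versus SNR, taken over
    # consecutive SNR levels after sorting them in ascending order (assumption).
    order = np.argsort(snr_db)
    s = np.asarray(snr_db, dtype=float)[order]
    e = np.asarray(errors, dtype=float)[order]
    return float(np.mean(np.abs(np.diff(e) / np.diff(s))))

snr_levels = [2, 7, 12, 17, 22]               # dB
mfne_values = [9.0, 6.0, 4.5, 3.5, 3.0]       # made-up values, not results from the paper
print(slope_metric(snr_levels, mfne_values))
```

For these made-up values the four consecutive slopes are 0.6, 0.3, 0.2 and 0.1, so the metric equals 0.3 (up to floating-point rounding).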

Table 2.

Noise Resistance Performance (, , ).

6.4. Performance with Available Directional Measurements

In this subsection, we evaluate the proposed algorithm’s performance in a scenario where directional measurements of positioning errors are available. This validates the extended algorithm introduced in Section 5.2, assessing its reconstruction accuracy for both scalar () and vector () representations of the spatial distribution of positioning error.

Table 3 presents performance metrics under varying degrees of MER. Here, quantifies the reconstruction error for the . is computed as the mean of the individual MFNEs obtained for each directional component tensor: . A comparison of Table 3 with the scalar error model results in Figure 6a shows that incorporating directional information enhances the proposed algorithm’s performance, improving the reconstruction of both scalar and vector distributions of positioning errors across various conditions.

Table 3.

Performance with directional measurements for various (, , ).

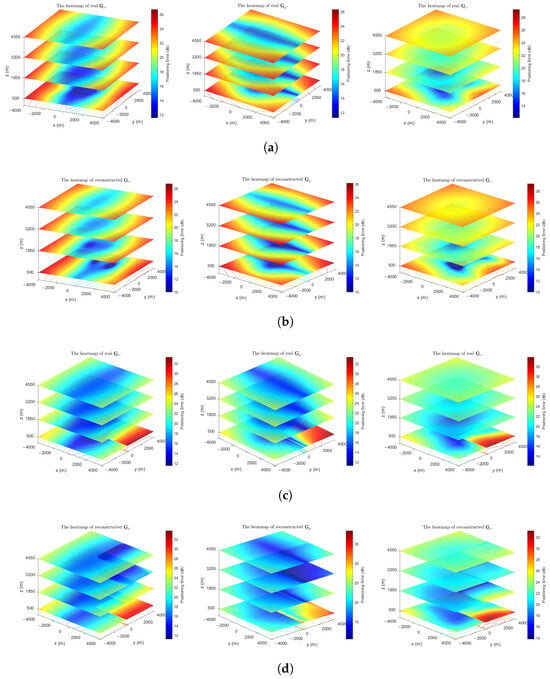

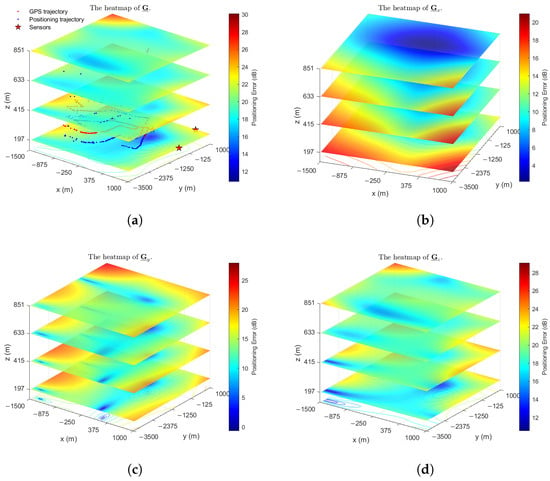

To illustrate the reconstruction performance for more intuitively, Figure 7 depicts heatmaps of the true and reconstructed directional component tensors in and . For enhanced visualization, particularly when positioning errors are small, the heatmap values are presented on a dB scale, specifically showing for .

Figure 7.

Heatmaps of real and reconstructed directional distributions of positioning error (, , ). Values are shown on a dB scale for visualization. (a) The real heatmaps of positioning error distribution in . (b) The reconstructed heatmaps of positioning error distribution in . (c) The real heatmaps of positioning error distribution in . (d) The reconstructed heatmaps of positioning error distribution in .

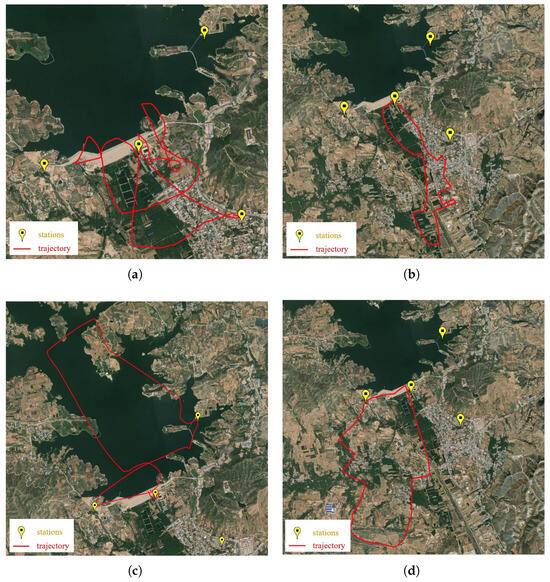

6.5. Real-Data Experiment

In this subsection, we further validate the proposed algorithm using a real-world dataset collected from a TDOA positioning system. The dataset covers an area of m² and includes measurements across 120 frequency bands, ranging from to . The experimental setup comprises four sensors and an unmanned aerial vehicle (UAV) acting as the emitter. The sensors are deployed in a Y-shaped configuration, with baseline distances of 1 , , and from the peripheral sensors to the central reference sensor, respectively. Figure 8 illustrates the experimental site, including the sensor layout and four sets of flight trajectories for the UAV.

Figure 8.

Schematic diagram of the experimental site, sensor stations layout, and UAV flight trajectories. (a) Trajectory : flight trajectory in large-scale open terrain. (b) Trajectory : flight trajectory in small-scale open terrain. (c) Trajectory : low-altitude flight trajectory over a lake. (d) Trajectory : low-altitude flight trajectory in an urban area.

A key challenge presented by this dataset is its extreme sparsity. For example, the trajectory is sampled at 386 distinct locations, with ground-truth positions provided by the UAV’s onboard GPS. To analyze the spatial distribution of positioning errors, the target area was discretized into a fine-grained grid. Consequently, the 386 measurement points represent only a tiny fraction of the 400,000 grid points, resulting in an MER of approximately 99.9%. This high-sparsity scenario serves as a stringent test of the algorithm’s tensor completion capabilities. To evaluate the algorithm’s performance under these conditions, we adopt a cross-validation approach: in each Monte Carlo trial, a randomly selected subset of the observed data is used to train the algorithms, and the remainder is reserved for testing. The proportion of data used for training is denoted by . A total of Monte Carlo trials are conducted for each value of .
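The sparsity figure and the cross-validation split can be reproduced in a few lines. The split below is a sketch that assumes simple random partitioning of the 386 observed samples; `train_test_split` here is our own hypothetical helper (not scikit-learn's function of the same name), and the training ratio of 0.8 and the three trials are placeholders rather than the values used in the experiments.

```python
import numpy as np

n_grid, n_measured = 400_000, 386
print(f"MER = {1 - n_measured / n_grid:.4%}")   # MER = 99.9035%

def train_test_split(n_samples, train_ratio, rng):
    # Random partition of the observed samples; train_ratio is the training share.
    perm = rng.permutation(n_samples)
    n_train = int(round(train_ratio * n_samples))
    return perm[:n_train], perm[n_train:]

rng = np.random.default_rng(6)
splits = [train_test_split(n_measured, 0.8, rng) for _ in range(3)]  # placeholder ratio and trial count
train_idx, test_idx = splits[0]
print(len(train_idx), len(test_idx))
```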

Table 4 presents the percentage performance improvement of the proposed algorithm over the comparison methods on each trajectory. The final column indicates whether an algorithm was capable of reconstructing the complete tensor (“Y” for yes, “N” for no), as some methods could only reconstruct partial slices or failed under extreme sparsity. The proposed algorithm performs comparably to the classical GDOP method under simple channel conditions (such as ), while it has clear advantages in complex channel environments (such as ). Table 4 also shows that under relatively ideal environmental conditions (), the proposed algorithm yields only marginal improvements over simpler baselines (e.g., WGDOP), despite its significantly higher computational complexity. This raises the question of when the proposed algorithm should be applied. In practice, error map reconstruction is typically performed offline to evaluate the station deployment of positioning systems, so time complexity is not the primary concern. In such cases, we recommend employing the proposed algorithm in all scenarios, trading higher computational complexity for a performance gain of approximately 30%. Conversely, for time-sensitive scenarios where computational efficiency is critical, we recommend restricting the use of the proposed algorithm to complex terrains, such as urban areas or canyons.

Table 4.

Performance improvement (%) of the Proposed Algorithm compared with the Comparison Algorithms in four trajectories.

We next analyze trajectory in more detail. Table 5 presents the average of the reconstructed positioning error tensor on the test sets of trajectory , along with computation times.

Table 5.

Performance on Trajectory , where MFNE is reported for the Test Set.

Compared with WGDOP, the proposed tensor-based modeling approach further reduces the by more than 27.96%, highlighting the benefit of our model in capturing complex error distributions. In contrast, traditional data-driven interpolation methods such as RBF and Kriging struggle under such highly sparse conditions, particularly when entire rows or columns (or, more generally, large contiguous regions) of the tensor lack measurements, and consequently fail to reconstruct the complete tensor. The NNM-T algorithm was also tested but is omitted from the table because it failed to converge reliably at this level of data scarcity. Table 5 also shows that the proposed algorithm requires longer computation time than some baselines. However, real-time inference is often not a primary requirement, because the positioning error map is typically analyzed offline as a post-processing step.

Figure 9 presents heatmaps of the positioning error map reconstructed from the real-world data by the proposed algorithm. These maps suggest that the proposed method effectively captures the underlying error characteristics and can provide valuable guidance for system analysis. For instance, examining the magnitudes of the reconstructed directional error components in Figure 9b–d, we observe that the error magnitudes typically follow the relationship . This implies that, for this specific setup, positioning reliability is highest along the z-axis and lowest along the x-axis, an observation consistent with statistical analysis of the raw positioning data.

Figure 9.

Reconstructed positioning error map for trajectory (, ). Values are presented on a dB scale for visualization. (a) Reconstructed heatmap of the spatial distribution of positioning error. (b–d) Reconstructed heatmaps of the spatial distribution of positioning error in x, y, and z directions, respectively.

7. Conclusions

This paper tackles the critical challenge of reconstructing high-fidelity spatial localization error maps from limited measurements. We propose a novel tensor-based framework that overcomes the limitations of both conventional model-driven approaches, which are constrained by idealized assumptions, and purely data-driven methods, which depend on dense observational data. Central to our approach is the TGDOP model, a novel representation that captures the complex and anisotropic nature of localization errors. To address the practical issue of data scarcity, we developed a physics-informed tensor completion algorithm. By incorporating prior knowledge derived from the analytical properties of the error covariance matrix directly into the factorization process, our algorithm achieves robust reconstruction even from severely incomplete data. Comprehensive experiments on both simulated and real-world TDOA datasets demonstrate the superiority of the proposed method. Specifically, under extremely sparse conditions, our method improves reconstruction accuracy by at least 27.96% compared to state-of-the-art baselines. Future work will explore more efficient optimization strategies for large-scale scenarios and investigate advanced constraints on factor matrices to capture richer physical information.

Author Contributions

Conceptualization, Z.Z. and Z.H.; methodology, Z.Z. and Z.H.; software, Z.Z.; validation, Z.Z., C.W. and Q.J.; formal analysis, Z.Z. and Z.H.; investigation, Z.Z.; resources, Z.Z. and Z.H.; data curation, Z.Z. and Z.H.; writing—original draft preparation, Z.Z.; writing—review and editing, Z.Z., Z.H., C.W. and Q.J.; visualization, Z.Z., C.W. and Q.J.; supervision, Z.Z., C.W. and Q.J.; project administration, Z.Z., C.W. and Q.J.; funding acquisition, Z.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by the project “Electromagnetic Detection Payloads and Big Data Processing”, grant number 20232002290.

Data Availability Statement

The codes and datasets in this paper are available at https://github.com/gitZHZhang/Code_and_Data_for_TGDOP.git (accessed on 7 January 2026).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Proof of Theorem 1

Consider a 3-D scenario with a single emitter at a true location . We have N positioning results for this emitter, denoted as for . We assume the positioning errors are drawn from a distribution with mean and a covariance matrix , where the -th element of is estimated as . The positioning results are assumed to follow a multivariate normal distribution, so their equal-probability contours form ellipsoids. The probability density function (PDF) of is given by

According to the properties of the multivariate normal distribution, the marginal and conditional distributions of a jointly Gaussian 3-D random variable are also Gaussian. Thus, we have , , and . To simplify the analysis and intuitively assess the error magnitudes along each coordinate axis, this paper uses the diagonal elements of the covariance matrix to approximately characterize the extent of the positioning error. Neglecting the off-diagonal (cross-covariance) terms in this way, we treat , , and as independent variables in the following analysis.

Applying this to the positioning results relative to the true emitter location , each component of the i-th positioning result follows for and . Suppose that and are calculated from the N positioning results relative to the true location . Since , it follows that (Chi-squared distribution with 1 degree of freedom). Thus, can be written as

where denotes a Gamma distribution with shape and rate (or as scale). Here, and rate .

The total tensor is decomposed into the sum of three terms, that is, . Since are independent sums of squared normals, their sum is a sum of scaled independent variables, whose distribution generally has a complicated form (a generalized chi-squared distribution).

However, by the Central Limit Theorem (CLT), for large N, each can be approximated by a Gaussian distribution: and . Thus, for large N,

For the sum , , and . Then, for large N,

Note that N, the number of samples, represents repeated localizations of an emitter at a specific position. Consequently, for a stationary emitter, acquiring a substantial sample size N is typically feasible in practical applications.
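The large-N Gaussian approximation can be checked by simulation. The sketch assumes each per-axis variance is estimated as the mean of N squared zero-mean Gaussian errors (so it follows the Gamma law above, with mean σ² and variance 2σ⁴/N), and compares the empirical mean and variance of the summed estimate with the predicted values. The variances, N and the number of trials are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(7)
N, trials = 200, 5000
sigma2 = np.array([4.0, 2.0, 1.0])       # hypothetical per-axis error variances

# Each trial: N zero-mean Gaussian errors per axis, variance estimated as mean of squares.
errors = rng.standard_normal((trials, N, 3)) * np.sqrt(sigma2)
est_axis = np.mean(errors ** 2, axis=1)   # shape (trials, 3)
est_total = est_axis.sum(axis=1)          # estimate of the summed variance

# Large-N Gaussian approximation of the summed estimate.
pred_mean = sigma2.sum()
pred_var = 2.0 * np.sum(sigma2 ** 2) / N
print(est_total.mean(), pred_mean)
print(est_total.var(), pred_var)
```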

Correspondingly, the estimation errors and follow

Appendix B. Proof of Theorem 2

Considering the emitter’s position as the independent variable in a 3-D scenario, differentiating both sides of (15) with respect to yields

To calculate the , we obtain

Then, we analyze the continuity of the two terms in .

The continuity of Term 1 is contingent upon the following:

- Continuity of : If (i.e., is continuously differentiable on the interval I), then its transpose is also . The product is consequently . The matrix inversion operation, , is a smooth mapping on the set of invertible matrices. Therefore, provided is invertible for all , is also .

- Continuity of product terms and : Since , both and its derivative (and similarly ) are continuous on I (i.e., ). Consequently, their products and are also continuous on I.

Given these points, Term 1, being composed of sums and products of continuous matrix functions (specifically, , , , and ), is itself continuous on I. We now turn to the continuity of Term 2. As established, (and thus also ). Since , its transpose derivative is continuous on I (i.e., ). The product of these two continuous matrix functions, , is therefore continuous on I.

In summary, (that is, ) holds under the following conditions:

- .

- , (where n is the number of columns of , ensuring is invertible).

Appendix C. Definition and Properties of a Tensor Operation

Analogous to how multiple systems of equations can be represented using matrix multiplication, we introduce a tensor operation, the mode-n transpose product, denoted as “”. This operation is designed to represent linear relationships between higher-order and lower-order tensors. A formal definition is provided below.

Definition 2

(Mode-n Transpose Product). Define a Kth-order tensor and a cell array . Here, is the weight matrix and the others are the mask matrices. The dimensions of these matrices are given by

where and . Then, the mode-n transpose product of and is defined as

where

The mode-n product defined in (8) applies a linear transformation to each mode-n fiber while preserving its vector structure. In contrast, the mode-n transpose product compresses the tensor along its n-th dimension: each mode-n fiber, , is mapped to a scalar value in the resulting tensor .
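The NumPy sketch below makes this contrast concrete. `mode_n_product` and `unfold` follow the widely used Kolda and Bader conventions, which we assume match (8) and (6). `fibers_to_scalars` is a hypothetical simplification: it maps each mode-n fiber to a single scalar through one weight vector. It omits the weight/mask-matrix structure of Definition 2, so it shows only the "fiber to scalar" effect, not the full operation.

```python
import numpy as np

def unfold(X, n):
    """Mode-n unfolding X_(n): mode-n fibers become columns (Kolda-Bader ordering)."""
    return np.reshape(np.moveaxis(X, n, 0), (X.shape[n], -1), order="F")

def mode_n_product(X, U, n):
    """Standard mode-n product Y = X x_n U, with U of shape (J, I_n).

    Every mode-n fiber x (length I_n) is replaced by U @ x (length J):
    fibers stay vectors, only their length changes.
    """
    Y = np.tensordot(X, U, axes=([n], [1]))  # contracted mode ends up as last axis
    return np.moveaxis(Y, -1, n)

def fibers_to_scalars(X, w, n):
    """Hypothetical simplification (not Definition 2): each mode-n fiber x
    is mapped to the scalar w @ x, so mode n disappears from the result."""
    return np.tensordot(X, w, axes=([n], [0]))

X = np.arange(24.0).reshape(2, 3, 4)
U = np.random.default_rng(0).normal(size=(5, 3))
w = np.array([0.2, 0.5, 0.3])

print(mode_n_product(X, U, 1).shape)     # (2, 5, 4)
print(fibers_to_scalars(X, w, 1).shape)  # (2, 4)
```

The unfolding satisfies Y_(n) = U X_(n) for Y = X ×ₙ U. The fiber-to-scalar map is the special case of a 1 × Iₙ matrix U followed by dropping the singleton mode.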

Using the operator “”, (12) can be expressed as

Let us elaborate on this example to illustrate the fiber reorganization. We begin by noting the dimensions: , , , , and . According to (A8), we obtain

Suppose the fiber corresponds to the mth column in , and corresponds to the n-th column. Based on (6), we have

In the matrix , each pair of non-zero elements in a column vector is separated by zeros. Correspondingly, when , , and in (A13), the difference between the fiber column indices in is . According to the rules of matrix operations, (A10) is equivalent to

Analogous results can be derived for (A11) and (A12), that is,

Next, we describe the general physical meaning of the mode-n transpose product. Substituting in (A8) and reorganizing into block-matrix form such that , where , we obtain

From (A16), we conclude that the elements in are the weights of (i.e., the mode-1 unfolding matrices of the sub-tensors), and is the linear transformation matrix of . Therefore, the mode-n transpose product is essentially a vectorized representation of linear operations on the sub-tensor slices of the tensor along the nth dimension. Since the weight matrix and the mask matrices play the same roles in the matrices and , (A16) can be generalized as

Appendix D. Computational Complexity Analysis

We analyze the computational complexity of the proposed algorithm per iteration. Let denote the number of sparse observation samples, R be the number of block terms, and represent the multilinear ranks for the three modes, respectively. Additionally, let m denote the degree of the polynomial basis functions used in the physics-informed constraints. The algorithm employs a BCD strategy, which iteratively updates the core tensors and the factor matrices. The complexity is determined by the least squares (LS) solvers applied in each step. In the step of updating the core tensors , the algorithm solves for all elements within the core tensors simultaneously. The total number of optimization variables, denoted as , is given by the sum of elements in R tensors of size :

The update involves solving a linear least squares problem with equations. The computational cost is dominated by the complexity of the LS solver, which is generally quadratic in the number of variables for a fixed number of observations:

When updating a factor matrix under the polynomial constraint (e.g., for mode-1), the optimization targets the polynomial coefficient matrix rather than the full factor matrix. The number of variables for mode-1 across all R components is

Consequently, the complexity for updating the factor matrices along all three modes is

Combining the costs from the core tensor and factor matrix updates, the total computational complexity per iteration is

The complexity term associated with the core tensors, , grows with the product of the ranks across all modes, whereas the term associated with the factor matrices, , grows only linearly with the mode dimensions. In practice, the polynomial degree m is a small constant (typically ). Therefore, the core tensor update dominates the computational load:

As a result, the term involving m is often negligible in the asymptotic analysis, and the overall complexity can be simplified to
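A rough operation count makes the dominance argument tangible. The sketch below is hypothetical and is not the exact set of expressions derived above. It assumes:

- R core tensors of size L1 × L2 × L3 (the multilinear ranks).
- One (m + 1) × Ln polynomial coefficient matrix per block and mode.
- M observed entries.
- A dense least-squares cost on the order of M·V² for V unknowns with M ≥ V.

The function name `bcd_cost_per_iteration` is illustrative.

```python
def bcd_cost_per_iteration(M, R, ranks, m):
    """Rough per-iteration LS cost of the BCD updates under assumed sizes.

    M: number of observed entries; R: number of block terms;
    ranks: (L1, L2, L3) multilinear ranks; m: polynomial degree.
    """
    L1, L2, L3 = ranks
    # Core update: all entries of R cores of size L1 x L2 x L3 solved jointly
    v_core = R * L1 * L2 * L3
    # Factor updates (assumed): an (m + 1) x Ln coefficient matrix per block and mode
    v_factor = [R * (m + 1) * Ln for Ln in ranks]
    # Dense least squares with M equations and V unknowns (M >= V) costs O(M * V^2)
    cost_core = M * v_core**2
    cost_factor = sum(M * v**2 for v in v_factor)
    return v_core, v_factor, cost_core, cost_factor

v_core, v_factor, cost_core, cost_factor = bcd_cost_per_iteration(
    M=1000, R=3, ranks=(4, 4, 4), m=3)
print(v_core, v_factor)  # 192 [48, 48, 48]
print(cost_core / cost_factor)
```

In this simplified model, doubling every rank to (8, 8, 8) multiplies the core term by 64 but the factor term only by 4. This mirrors the argument that the core tensor update dominates as the ranks grow.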

Appendix E. Proof of Theorem 4

Let the positioning error distribution in 3-D space be described by a function . We construct the error tensor , where the elements are obtained by sampling on a spatial grid :

By the high-dimensional generalization of the Schmidt decomposition, the low-rank property implies strong separability, so f can be approximated as a sum of products of univariate functions:

where are the weighting coefficients, and are the principal component basis functions along the axes, respectively. Since the original function f is continuous with respect to position and the error field is typically governed by physical laws, integral operator theory implies that the decomposed basis functions (e.g., ) inherit the smoothness of f. Thus, . Consider an arbitrary basis function in the x-direction, . Since is continuous on a closed interval, the Weierstrass Approximation Theorem guarantees that it can be uniformly approximated by polynomials. Because this basis function is also smooth and determined by the low-rank principal components, it can be approximated with high precision by a low-order polynomial of degree d:

This implies that the function approximately belongs to the polynomial function space spanned by . Returning to the discrete decomposition of tensor :

Physically, each column of the mode-1 factor matrix represents the discretized vector of the basis function evaluated at the sampling points :

Combining this with the polynomial form in (A27), we expand as

Defining the matrix , the column vector of the factor matrix can be expressed as . The derivation above shows that the column vectors of the factor matrix (and similarly those of ) can be approximated as linear combinations of the columns of a Vandermonde matrix. Therefore, the column vectors of the factor matrices lie approximately within the linear subspace spanned by the low-order polynomial basis , that is,
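The argument can be checked numerically on a small example. The sketch below uses a 2-D simplification with a hypothetical rank-2 smooth field F(x, y) = e^(−x²)·cos y + sin x·y². The SVD acts as the discrete counterpart of the Schmidt decomposition. The sketch then projects the leading x-direction singular vectors (the factor-matrix columns) onto the span of a degree-d Vandermonde matrix. The field, grid sizes, and d = 8 are illustrative assumptions. The non-smooth sign function serves as a contrast case where the polynomial subspace fits poorly.

```python
import numpy as np

def vandermonde(x, d):
    # Columns 1, x, x^2, ..., x^d evaluated at the grid points
    return np.vander(x, d + 1, increasing=True)

def polynomial_fit_residual(A, x, d):
    """Relative residual of projecting the columns of A onto span(V_d)."""
    V = vandermonde(x, d)
    C, *_ = np.linalg.lstsq(V, A, rcond=None)  # coefficient matrix, shape (d + 1, cols)
    return np.linalg.norm(A - V @ C) / np.linalg.norm(A), C

# Hypothetical smooth, exactly rank-2 field sampled on a 2-D grid
x = np.linspace(-1.0, 1.0, 50)
y = np.linspace(-1.0, 1.0, 40)
F = np.outer(np.exp(-x**2), np.cos(y)) + np.outer(np.sin(x), y**2)

# The SVD plays the role of the discrete Schmidt decomposition
U, s, Vt = np.linalg.svd(F, full_matrices=False)
A = U[:, :2]  # discretized x-direction basis functions

smooth_res, C = polynomial_fit_residual(A, x, d=8)
rough_res, _ = polynomial_fit_residual(np.sign(x)[:, None], x, d=8)
print(s[2] / s[0], smooth_res, rough_res)
```

The smooth singular vectors are reproduced almost exactly by the (d + 1)-column Vandermonde basis. The discontinuous column leaves a large residual, which illustrates why the smoothness of the basis functions is essential to the argument.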

References

- Lyu, J.; Song, T.; He, S. Range-Only UWB 6-D Pose Estimator for Micro UAVs: Performance Analysis. IEEE Trans. Aerosp. Electron. Syst. 2025, 61, 5284–5301.

- Jiang, S.; Zhao, C.; Zhu, Y.; Wang, C.; Du, Y. A Practical and Economical Ultra-wideband Base Station Placement Approach for Indoor Autonomous Driving Systems. J. Adv. Transp. 2022, 2022, 3815306.

- Causa, F.; Fasano, G. Improving Navigation in GNSS-Challenging Environments: Multi-UAS Cooperation and Generalized Dilution of Precision. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 1462–1479.

- Feng, D.; Wang, C.; He, C.; Zhuang, Y.; Xia, X.G. Kalman-Filter-Based Integration of IMU and UWB for High-Accuracy Indoor Positioning and Navigation. IEEE Internet Things J. 2020, 7, 3133–3146.

- Mazuelas, S.; Lorenzo, R.M.; Bahillo, A.; Fernandez, P.; Prieto, J.; Abril, E.J. Topology Assessment Provided by Weighted Barycentric Parameters in Harsh Environment Wireless Location Systems. IEEE Trans. Signal Process. 2010, 58, 3842–3857.

- Wang, D.; Qin, H.; Zhang, Y.; Yang, Y.; Lv, H. Fast Clustering Satellite Selection Based on Doppler Positioning GDOP Lower Bound for LEO Constellation. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 9401–9410.

- Wang, Y.; Ho, K.C. TDOA Source Localization in the Presence of Synchronization Clock Bias and Sensor Position Errors. IEEE Trans. Signal Process. 2013, 61, 4532–4544.

- Won, D.H.; Ahn, J.; Lee, S.W.; Lee, J.; Sung, S.; Park, H.W.; Park, J.P.; Lee, Y.J. Weighted DOP With Consideration on Elevation-Dependent Range Errors of GNSS Satellites. IEEE Trans. Instrum. Meas. 2012, 61, 3241–3250.

- Chen, C.S. Weighted Geometric Dilution of Precision Calculations with Matrix Multiplication. Sensors 2015, 15, 803–817.

- Won, D.H.; Lee, E.; Heo, M.; Lee, S.W.; Lee, J.; Kim, J.; Sung, S.; Lee, Y.J. Selective Integration of GNSS, Vision Sensor, and INS Using Weighted DOP Under GNSS-Challenged Environments. IEEE Trans. Instrum. Meas. 2014, 63, 2288–2298.

- Wang, M.; Chen, Z.; Zhou, Z.; Fu, J.; Qiu, H. Analysis of the Applicability of Dilution of Precision in the Base Station Configuration Optimization of Ultrawideband Indoor TDOA Positioning System. IEEE Access 2020, 8, 225076–225087.

- Liang, X.; Pan, S.; Du, S.; Yu, B.; Li, S. An Optimization Method for Indoor Pseudolites Anchor Layout Based on MG-MOPSO. Remote Sens. 2025, 17, 1909.

- Hong, W.; Choi, K.; Lee, E.; Im, S.; Heo, M. Analysis of GNSS Performance Index Using Feature Points of Sky-View Image. IEEE Trans. Intell. Transp. Syst. 2014, 15, 889–895.

- Rao, R.M.; Emenonye, D.R. Iterative RNDOP-Optimal Anchor Placement for Beyond Convex Hull ToA-Based Localization: Performance Bounds and Heuristic Algorithms. IEEE Trans. Veh. Technol. 2024, 73, 7287–7303.

- Ding, Y.; Shen, D.; Pham, K.; Chen, G. Measurement Along the Path of Unmanned Aerial Vehicles for Best Horizontal Dilution of Precision and Geometric Dilution of Precision. Sensors 2025, 25, 3901.

- Deng, Z.; Wang, H.; Zheng, X.; Yin, L. Base Station Selection for Hybrid TDOA/RTT/DOA Positioning in Mixed LOS/NLOS Environment. Sensors 2020, 20, 4132.

- Peng, Y.; Niu, X.; Tang, J.; Mao, D.; Qian, C. Fast Signals of Opportunity Fingerprint Database Maintenance with Autonomous Unmanned Ground Vehicle for Indoor Positioning. Sensors 2018, 18, 3419.

- Roger, S.; Botella, C.; Pérez-Solano, J.J.; Perez, J. Application of Radio Environment Map Reconstruction Techniques to Platoon-based Cellular V2X Communications. Sensors 2020, 20, 2440.

- Hong, S.; Chae, J. Active Learning With Multiple Kernels. IEEE Trans. Neural Networks Learn. Syst. 2022, 33, 2980–2994.

- Lyu, S.; Xiang, Y.; Soja, B.; Wang, N.; Yu, W.; Truong, T.K. Uncertainties of Interpolating Satellite-Specific Slant Ionospheric Delays and Impacts on PPP-RTK. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 490–505.

- Bazerque, J.A.; Giannakis, G.B. Nonparametric Basis Pursuit via Sparse Kernel-Based Learning: A Unifying View with Advances in Blind Methods. IEEE Signal Process. Mag. 2013, 30, 112–125.

- Wang, Q.; Shi, W.; Atkinson, P.M. Sub-pixel mapping of remote sensing images based on radial basis function interpolation. ISPRS J. Photogramm. Remote Sens. 2014, 92, 1–15.

- Golbabaee, M.; Arberet, S.; Vandergheynst, P. Compressive Source Separation: Theory and Methods for Hyperspectral Imaging. IEEE Trans. Image Process. 2013, 22, 5096–5110.

- Li, C.; Xie, H.B.; Fan, X.; Xu, R.Y.D.; Van Huffel, S.; Sisson, S.A.; Mengersen, K. Image Denoising Based on Nonlocal Bayesian Singular Value Thresholding and Stein’s Unbiased Risk Estimator. IEEE Trans. Image Process. 2019, 28, 4899–4911.

- Ye, J.; Xiong, F.; Zhou, J.; Qian, Y. Iterative Low-Rank Network for Hyperspectral Image Denoising. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5528015.

- Candès, E.J.; Sing-Long, C.A.; Trzasko, J.D. Unbiased Risk Estimates for Singular Value Thresholding and Spectral Estimators. IEEE Trans. Signal Process. 2013, 61, 4643–4657.

- De Lathauwer, L. Decompositions of a higher-order tensor in block terms—Part II: Definitions and uniqueness. SIAM J. Matrix Anal. Appl. 2008, 30, 1033–1066.

- Zhang, G.; Fu, X.; Wang, J.; Zhao, X.L.; Hong, M. Spectrum cartography via coupled block-term tensor decomposition. IEEE Trans. Signal Process. 2020, 68, 3660–3675.

- Chen, J.; Zhang, Y.; Yao, C.; Tu, G.; Li, J. Hermitian Toeplitz Covariance Tensor Completion With Missing Slices for Angle Estimation in Bistatic MIMO Radars. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 8401–8418.

- Sun, H.; Chen, J. Propagation map reconstruction via interpolation assisted matrix completion. IEEE Trans. Signal Process. 2022, 70, 6154–6169.

- Levie, R.; Yapar, Ç.; Kutyniok, G.; Caire, G. RadioUNet: Fast radio map estimation with convolutional neural networks. IEEE Trans. Wirel. Commun. 2021, 20, 4001–4015.

- Teganya, Y.; Romero, D. Deep Completion Autoencoders for Radio Map Estimation. IEEE Trans. Wirel. Commun. 2022, 21, 1710–1724.

- Yu, H.; She, C.; Yue, C.; Hou, Z.; Rogers, P.; Vucetic, B.; Li, Y. Distributed Split Learning for Map-Based Signal Strength Prediction Empowered by Deep Vision Transformer. IEEE Trans. Veh. Technol. 2024, 73, 2358–2373.

- Specht, M. Statistical Distribution Analysis of Navigation Positioning System Errors—Issue of the Empirical Sample Size. Sensors 2020, 20, 7144.

- Specht, M. Consistency analysis of global positioning system position errors with typical statistical distributions. J. Navig. 2021, 74, 1201–1218.

- Paradowski, L. Uncertainty ellipses and their application to interval estimation of emitter position. IEEE Trans. Aerosp. Electron. Syst. 1997, 33, 126–133.

- Lombardi, G.; Crivello, A.; Barsocchi, P.; Chessa, S.; Furfari, F. Reducing Training Data for Indoor Positioning through Physics-Informed Neural Networks. In Proceedings of the 2025 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Tampere, Finland, 15–18 September 2025; pp. 1–6.

- Stein, S. Algorithms for ambiguity function processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 588–599.

- Shin, D.H.; Sung, T.K. Comparisons of error characteristics between TOA and TDOA positioning. IEEE Trans. Aerosp. Electron. Syst. 2002, 38, 307–311.