Abstract

Efficient intra-mode decision for depth maps assumes a pivotal role in augmenting the overall performance of 3D-HEVC. Existing research endeavors predominantly rely on fast mode screening strategies grounded in texture characteristics or machine learning techniques. These strategies, to a certain extent, mitigate the complexity of mode search. Nevertheless, these approaches often fall short of fully leveraging the intrinsic spatio-temporal correlations within depth maps. Moreover, strategies relying on deterministic classifiers exhibit insufficient discrimination reliability in regions featuring edge mutations or intricate structures. To tackle these challenges, this paper presents a two-stage fast intra-mode decision algorithm for depth maps, integrating naive Bayes probability estimation and fuzzy support vector machine (FSVM). Initially, it confines the candidate mode space through spatio-temporal prior modeling. Subsequently, FSVM is employed to enhance the decision accuracy in regions with low confidence. This methodology constructs a joint mode decision framework spanning from probability screening to refined classification. By doing so, it significantly reduces the computational burden while preserving rate-distortion performance, thereby attaining an effective equilibrium between encoding complexity and performance. Experimental findings demonstrate that the proposed algorithm reduces the average encoding time by 52.30% with merely a 0.68% increment in BDBR. Additionally, it showcases stable universality across test sequences of diverse resolutions and scenes.

1. Introduction

In recent years, 3D video technology has found extensive applications across diverse fields, including film and television production, game development, and medical imaging. It has emerged as one of the pivotal directions in the development of multimedia technology. The multi-view plus depth (MVD) structure, through the simultaneous capture of texture images and their corresponding depth maps, empowers the system to generate high-quality virtual viewpoints by leveraging the depth-image-based rendering (DIBR) technology. This accomplishment enhances the three-dimensional visual immersion at an exceedingly low additional bit rate cost [1]. Notably, the quality of virtual viewpoints generated by the DIBR technology is highly contingent upon the coding efficiency of depth maps [2,3]. Consequently, attaining efficient depth map coding has become a crucial underpinning for the practical implementation of 3D video technology. It plays an irreplaceable and substantial role in optimizing the overall performance of the 3D-HEVC standard.

To facilitate the coexistence of sharp edges and extensive flat regions in depth maps, the 3D-HEVC standard has incorporated innovative prediction modes and coding tools, such as depth modeling modes (DMMs) and segmented DC coding (SDC). These additions are designed to preserve edge information in depth maps more efficiently [4,5,6]. Nevertheless, this optimization has presented a formidable challenge: during the intra-mode decision-making process for depth maps, it is necessary to recursively traverse all possible combinations of the 35 prediction modes of traditional HEVC and the newly introduced coding tools [7]. This procedure utilizes an exhaustive search approach to determine the scheme with the lowest rate-distortion cost. However, this operation results in an exponential growth in the computational complexity of the encoding process, thus imposing a significant limitation on the application of 3D-HEVC in real-time scenarios. Consequently, the effective reduction of the computational complexity of depth map encoding without sacrificing rate-distortion performance has emerged as a central issue that demands urgent attention in the field of 3D video coding.

Among the existing research findings, fast algorithms for depth maps can be categorized into the following two types. One type pertains to intra-frame mode prediction, which expedites the search process by pre-emptively reducing infrequent modes or skipping invalid ones. As reported in ref. [8], a high-probability initial candidate set is constructed based on the statistical probability of the depth map intra-mode. Subsequently, a simplified candidate set is chosen via a low-complexity intra-prediction cost in conjunction with an adaptive threshold. Ultimately, only the simplified set is employed to compute the complete rate-distortion cost for determining the optimal mode. The essence of this approach lies in leveraging the characteristics of the depth map and a lightweight screening mechanism to substantially reduce the computational burden of the 3D-HEVC depth map intra-mode decision, while incurring minimal degradation in coding performance. As reported in ref. [9], a rapid intra-frame mode prediction method grounded in machine learning was put forward. Leveraging the output results of decision trees (DT) corresponding to each mode as the foundation, the number of candidate modes was substantially decreased through the adaptive skipping of HEVC prediction modes and DMM during the mode decision process. Lin et al. [10] partitioned sensitive and insensitive regions by computing visual saliency indicators such as the PU gradient edge strength and the correlation with texture map details. They then integrated perceptual weighted cost and a dynamic threshold to streamline intra-frame modes. This approach significantly mitigated the intra-frame coding complexity of 3D-HEVC depth frames without compromising visual quality. As reported in ref. [11], a Deep Mode Prediction Convolutional Neural Network (DMP-CNN) was put forward to decide whether to incorporate the DMM mode into the candidate list and skip unnecessary mode decision computations. This approach trains a deep learning model to directly forecast the optimal depth modeling mode. It does so by extracting the spatial neighborhood features of the depth map prediction unit, the corresponding texture map features, and the encoding parameters as inputs. The second type aims at reducing computational complexity by accelerating the coding unit (CU) partitioning process. As reported in ref. [12], a fast CU partitioning algorithm based on decision trees was proposed. This study employed the J48 algorithm in WEKA 3.8 to construct three decision trees, each corresponding to a specific CU size. Subsequently, some attributes highly relevant to the CU partitioning decision were selected from all evaluated attributes through information gain. As reported in ref. [13], a CU partitioning algorithm grounded in boundary continuity was put forward. In this algorithm, the total sum of squares (TSS) of each boundary of the CU and the TSStotal are computed. When they are less than or equal to the pre-set threshold or the RDcost of the current optimal mode meets the criteria, the CU partitioning is prematurely terminated. As reported in ref. [14], the depth map blocks are categorized into uniform, simple edge, and complex edge types via a convolutional neural network. Based on the classification outcomes, the encoding process is adaptively streamlined: for smooth blocks, the search is terminated ahead of time; for simple edge blocks, only a limited number of modes are examined; and only for complex blocks is a comprehensive search carried out. This significantly reduces the encoding complexity of 3D-HEVC depth maps.

Based on the foregoing analysis, despite the fact that these methods have enhanced the encoding speed, several notable limitations still persist. Firstly, the majority of these methods independently exploit either spatial or temporal context information. As a result, they fail to comprehensively and profoundly explore the intrinsic spatio-temporal correlations within depth maps, thereby leading to inadequate prediction reliability. Secondly, machine learning-based approaches predominantly rely on deterministic classifiers. These classifiers exhibit poor robustness when differentiating ambiguous regions, such as depth edges and complex structures, and are highly susceptible to misjudgment. Thirdly, certain methods involve redundant processing or insufficient feature extraction for low-probability patterns. This makes it arduous to strike an optimal balance between encoding efficiency and performance.

The spatial distribution of depth maps exhibits strong continuity, and the optimal modes of adjacent prediction units (PUs) share a high degree of similarity. Moreover, in a stable scene, a notable correlation exists between the modes of PUs at the same position in the previous frame and those in the current frame. This characteristic offers valuable insights for related research. Building upon this, this paper presents a two-stage fast intra-mode decision algorithm for depth maps, which integrates naive bayes probability estimation and Fuzzy Support Vector Machine (FSVM). By effectively modeling spatio-temporal correlations and adaptively determining confidence levels, this algorithm addresses the conflict between the accuracy of mode selection and complexity control in existing methods. As a result, it attains a coordinated optimization of coding efficiency and rate-distortion performance.

This algorithm initially constructs a spatio-temporal prior-guided Bayesian framework. By leveraging the pattern information of adjacent and co-located PUs, it estimates the posterior probability of candidate patterns. The adaptive confidence is computed through probability entropy, enabling the rapid screening of high-probability candidate patterns. When the confidence level is low, the FSVM model is introduced to integrate multi-dimensional features, including edge strength, variance, and quantization parameter (QP), for fine-grained classification. This approach enhances the discrimination accuracy in complex regions. The two-stage joint decision-making mechanism of “coarse screening–fine classification” not only significantly reduces the computational cost of pattern search but also effectively guarantees the stability of coding performance.

The contributions of this paper can be summarized as follows:

1. This study presents a spatio-temporal prior-guided Bayesian framework. By exploring the pattern correlations of adjacent and co-located PUs, this framework estimates the posterior probability of candidate modes. This approach effectively shrinks the search space and bolsters the prediction reliability.

2. A normalized probability entropy confidence index is introduced to quantify the uncertainty inherent in Bayesian prediction. Additionally, an adaptive early termination mechanism is devised. When the confidence level is high, the mode can be directly selected, thereby reducing redundant computations.

3. In scenarios with low confidence, a pattern refinement strategy grounded in the FSVM is put forward. Through the integration of fuzzy membership and multi-dimensional discriminative features, this strategy enables robust classification in regions with deep discontinuities and complex structures, thus enhancing the discrimination accuracy.

The remainder of this paper is structured as follows. Section 2 presents the characteristics of 3D-HEVC intra-frame mode prediction. Section 3 meticulously elaborates on the fast depth map mode decision method proposed herein. Section 4 and Section 5 respectively provide the analysis of experimental results and the conclusion.

2. Observation and Analysis

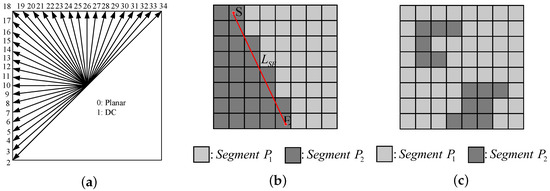

In the 3D-HEVC standard, depth map coding inherits 35 traditional intra prediction modes from HEVC [15,16], as depicted in Figure 1a. Among these, 33 intra angular modes are capable of representing diverse prediction directions. In addition to these angular prediction modes, the DC mode and Planar mode can efficiently handle smooth or approximately homogeneous regions. To retain the sharp edge characteristics of the depth map, 3D-HEVC introduces two additional depth modeling modes, namely DMM1 and DMM4 [17]. These two modes partition each prediction unit into two non-rectangular regions, with each region being characterized by a constant pixel value (CPV) [18,19,20]. For each wedge-shaped division in DMM1, as illustrated in Figure 1b, the two regions and are separated by a straight-line boundary . S and E denote the start and end points of , respectively. DMM4 can employ any contour division method, as shown in Figure 1c, and its division result is determined by the co-located reconstructed texture PU using the threshold method. Nevertheless, in the latest test model, DMM4 is no longer applicable to depth intra slices.

Figure 1.

Depth intra prediction modes in 3D-HEVC: (a) Angular mode; (b) DMM1; (c) DMM4.

As depicted in Figure 2, the flowchart of the depth intra-prediction mode decision is presented. The key process of the depth intra-mode decision can be concisely summarized into the following steps:

Figure 2.

Depth intra mode decision.

Step 1: The initial step in intra prediction involves conducting a rough mode decision (RMD) for the HEVC intra modes. Initially, the sum of absolute transformer differences (SATD) of the coefficients following the Hadamard transform of the residuals for 35 intra modes is computed. Subsequently, the cost is calculated using Formula (1). Finally, several candidate modes with the lowest costs are included in the list of optimal mode candidates. Specifically, for 8 × 8 or 4 × 4 size PUs, 8 modes with the minimum costs are chosen, whereas for PUs of other sizes, 3 modes with the lowest costs are selected [21].

where denotes the Lagrangian multiplier, and denotes the number of encoding bits required for encoding the intra-mode of the current frame.

Step 2: The optimal prediction mode of the current PU exhibits a remarkably high degree of similarity to the optimal prediction modes of the adjacent encoded PUs on its left and top. Consequently, the three optimal prediction modes of the adjacent encoded PUs on the left and top are designated as the Most Probable Modes (MPMs), denoted as MPM[0], MPM[1], and MPM[2]. Following Rate–Distortion Optimization (RDO), it is ascertained whether the current MPM is encompassed within the list of optimal mode candidates. If not, the MPM mode is incorporated into the candidate mode list and encoded as the corresponding MPM mode number [22].

Step 3: After incorporating the MPM mode into the candidate list, conduct a search for the optimally matching wedge partition among all the candidate schemes of the wedge mode and include it in the candidate mode list.

Step 4: The optimal prediction mode is derived via the RDO procedure. For each mode within the list of optimal mode candidates, the residual acquired by applying this mode undergoes transformation, quantization, and entropy encoding to yield the final coding bit rate Bmode associated with using the mode. Subsequently, the rate-distortion cost RDCost resulting from using the mode is computed through Formula (2).

In this context, represents the distortion, where the sum of squared errors is employed. Specifically, it is computed as the sum of the squared differences between each pixel of the original image block and the corresponding pixel of the block reconstructed after encoding and decoding. The symbol denotes the Lagrange multiplier, while refers to the total number of bits required to encode this particular mode.

Step 5: The complete residual quadtree (RQT) search is carried out by utilizing the intra mode chosen in the fourth step to ascertain the optimal transform kernel size [23].

Owing to the fact that the intra-mode decision for depth map frames necessitates the examination of a substantial number of candidate modes, its encoding time constitutes approximately 86% of the total encoding time of 3D-HEVC, as reported in ref. [24]. Consequently, if the optimal prediction mode selected by the current PU can be predicted beforehand or the quantity of its candidate modes can be diminished, the encoding time can be significantly curtailed. Hence, the development of effective methodologies capable of accurately predicting the optimal intra-mode or prematurely terminating the redundant mode search is of utmost importance.

3. Proposed Fast Algorithm for Depth Map Mode Decision

3.1. Mode Probability Estimation Based on Naïve Bayes

The pixel values of a depth map inherently represent the distance between objects and the camera. Their spatial distribution typically exhibits strong continuity. Simultaneously, under the premise of a stable scene structure, the CU mode at the identical position in the preceding frame demonstrates a high degree of correlation with the current PU. Specifically, this characteristic implies a high probability that adjacent PUs and the current PU are part of the same spatial area, and their optimal intra-frame modes are remarkably similar. Regarding the PU at the position corresponding to the current PU in the previous frame, when the scene does not experience substantial movement, their optimal modes generally remain consistent [25]. Based on the foregoing analysis, this paper incorporates spatio-temporal context information into the traditional Naïve Bayes model. By leveraging the prediction modes of adjacent and co-located PUs, prior constraints are imposed on the mode distribution of the current PU, thereby enhancing the accuracy of mode probability estimation. In this study, the current PU is designated as , while the PUs to its left, upper left, above, upper right, and the co-located PU in the previous frame are denoted as , , , , and , respectively, as depicted in Figure 3. The mode information of these PUs forms the spatio-temporal context set of the current PU:

Figure 3.

Current PU and its spatio-temporal contextual PUs. The current PU , its spatially adjacent PUs (, , , and ), and the co-located PU in the previous frame () are shown in red. The reference block and target block illustrate the temporal correspondence between consecutive frames.

In light of the aforementioned spatial and temporal correlations, this paper formulates a candidate mode set leveraging the neighborhood PU information. In the HEVC standard, the intra-prediction modes consist of Planar, DC, and 33 angular modes. Additionally, 3D-HEVC incorporates two depth modeling modes for depth maps. Nevertheless, in practical encoding scenarios, the optimal mode for the majority of PUs is predominantly concentrated within a limited number of common modes [26]. When probability estimation is carried out for all modes, not only does the computational burden increase substantially, but also the contribution of low-probability modes to the final decision is negligibly small, leading to only marginal improvement in overall performance. Consequently, in this paper, a candidate mode set is first generated leveraging spatial and temporal neighborhood information, and the probability is estimated only for modes with a higher likelihood. The encoded mode information of these neighboring PUs is then extracted to form the initial mode set.

The frequency of the occurrence of patterns within this set is meticulously counted. Patterns with higher occurrence counts are then retained as the primary candidates. Simultaneously, to bolster the stability of prediction, the Planar and DC modes are additionally incorporated. Consequently, the candidate mode set is as follows:

Here, selects the top-K modes according to empirical frequency or prior probability, where denotes the total number of candidate modes. In this work, , including the planar mode, the DC mode, eight angular modes, and the DMM1 mode. This candidate set covers most high-probability optimal modes, thereby preserving prediction accuracy while substantially reducing the subsequent model complexity.

The posterior probability that the current PU belongs to mode is given by the Naïve Bayes rule:

Among these, denotes the prior probability of pattern . represents the encoded optimal mode of the k-th context PU in . represents the conditional probability that the adjacent or same-position CU mode is given that the current CU mode is . stands for the occurrence frequency where the adjacent CU mode is and the current CU mode is . By means of this probability model, the posterior probability distribution corresponding to each mode in the candidate mode set can be derived, thus reflecting the likelihood of it becoming the optimal mode.

To acquire the key parameters and , we carried out comprehensive experiments. Specifically, under the All-Intra (AI) configuration [27], four quantization parameters, namely 34, 39, 42, and 45, were set. To satisfy the demands of different resolutions, for videos in the 1024 × 768 category, Kendo and Newspaper were selected; for videos in the 1920 × 1088 category, Shark and Poznan_Street were chosen. These videos encompass a wide range of motion features and texture types. When the current PU selects the Planar mode, the probability that all five related PUs also select Planar is presented in Table 1. Adopting a similar methodology, we can derive the probability that adjacent PUs choose any mode when the current PU selects a specific intra mode. The correlation between the current PU and the PU at the same position in the previous frame is influenced by scene motion. In static or low-motion videos, this correlation is relatively strong. However, it generally remains lower than the correlation with spatially neighboring PUs.

Table 1.

Probabilities of contextual PUs.

Due to the fact that the Naive Bayes assumes feature conditional independence, the prediction results may exhibit uncertainty in certain areas (such as deep edges or high-texture regions). Consequently, this paper incorporates probability entropy to quantify the prediction confidence. Probability entropy is defined as:

The symbol represents the set of neighboring PUs of the current PU. Specifically, includes the PUs located to the left, upper-left, above, upper-right, as well as the co-located PU in the previous frame.

It is normalized to as:

Here, C denotes the confidence measure and lies in the range . A smaller entropy (i.e., a larger C) indicates higher model confidence. Based on this measure, an adaptive decision strategy is adopted: when C exceeds a threshold (i.e., the entropy is low), the prediction is regarded as stable, and the intra-prediction mode with the highest posterior probability is directly selected as the optimal mode.

When the confidence level drops below the threshold , it serves as an indication that the model harbors uncertainty. At this juncture, the top three moedes exhibiting the highest probabilities are retained to constitute the candidate moede set . Subsequently, the FSVM model is invoked for a refined judgment. In this study, the confidence level threshold is empirically set at 0.65. This value has been determined to strike a balance between accuracy and complexity across multiple sets of experiments.

3.2. Mode Decision Method Based on Fuzzy Support Vector Machine

In intra-prediction of depth map frames, the Naïve Bayes (NB) model estimates the posterior probability distribution of candidate prediction modes for the current PU by leveraging mode information from adjacent PUs and co-located PUs in the preceding frame. However, owing to the conditional independence assumption inherent in NB, the model tends to yield a more diffuse posterior probability distribution in regions characterized by complex textures, sharp depth discontinuities, or prominent edge structures. This often results in increased uncertainty or “blurring” in the predicted modes. To improve mode decision accuracy in such ambiguous regions, we integrate a Fuzzy Support Vector Machine (FSVM) with the NB probability estimation, establishing a coarse-to-fine joint decision framework. The FSVM, an extension of the conventional support vector machine, introduces a fuzzy membership degree for each training sample to represent its reliability [28]. By assigning lower membership values to noisy or boundary-ambiguous samples, their impact on the classification hyperplane is diminished during training, leading to a more stable and smoother decision boundary and thereby enhancing model robustness.

Given a training set , where denotes the feature vector of the i-th sample and satisfies ( is a d-dimension real number space), is the class label, and is the fuzzy membership function of the sample. The membership value satisfies , and it reflects the credibility of sample belonging to its labeled class .

In the framework of support vector machines, the input samples are mapped into a high-dimensional feature space through a nonlinear mapping function , yielding the transformed training set . The separating hyperplane is expressed as

Here, is the weight vector and b is the bias term. The kernel function is defined as

Here, denotes the transpose of .

Generally, the formulation of the fuzzy support vector machine can be expressed as follows:

Here, is the slack variable measuring the extent of margin violation. In Equation (13), denotes the fuzzy membership degree of sample , which reduces the influence of noisy or unreliable samples during margin optimization. For notational convenience, we define when , and when . Therefore, the two membership-weighted slack penalties in Equation (13) correspond to positive-class and negative-class samples, respectively. and are the penalty coefficients controlling the contributions of the two classes.

In this paper, the FSVM refinement stage is activated only when the confidence value C produced by the NB model is less than or equal to the threshold . Specifically, three modes with the highest posterior probabilities are selected from the Bayesian distribution to form the candidate mode set. Then, the posterior probabilities of the candidate modes, the normalized entropy feature, and the designed structural/statistical features are concatenated to construct the FSVM input vector:

Feature extraction plays a pivotal role in learning-based mode discrimination. On one hand, the feature quality directly affects the achievable upper bound of classifier performance; on the other hand, in FSVM, the effectiveness of membership-weighting also depends on whether the features can adequately characterize the structural disparities and texture distribution of the PU. If the extracted features fail to represent the PU structure comprehensively, the classifier may still produce misjudgments even with fuzzy memberships, especially in the ambiguous regions near decision boundaries. Accordingly, this study considers both the spatial structural characteristics and statistical properties of PUs, and designs and extracts a set of highly discriminative features for FSVM training and classification, as summarized in Table 2. The definitions and roles of these features are detailed below.

Table 2.

Summary of feature definitions and their roles.

. The quantization parameter governs the compression intensity, with higher QP values promoting edge blurring and loss of structural detail, thereby diminishing the benefits of complex patterns and favoring simpler ones. Conversely, lower QP values preserve edge sharpness and facilitate the maintenance of optimal representation in complex patterns [29].

. The hierarchical prior derived from the optimal mode of the parent PU guides the candidate mode selection in the child PU, promoting consistency with the parent’s directional or modal characteristics and thus improving decision-making stability.

. Variance reflects local structural complexity. Low variance typically corresponds to smooth, homogeneous regions and thus favors simpler prediction modes, whereas high variance indicates richer textures or sharper structures and often calls for more diverse directional modes to better suppress distortion.

. Edge strength and orientation are closely correlated with prediction-mode direction: strong, well-defined edges typically constrain the optimal mode to a small subset of candidates aligned with the dominant edge direction, whereas weak edges provide limited directional cues and thus require a broader candidate set to mitigate misclassification.

. The mean value can be used as a coarse proxy for the block-to-camera distance: regions closer to the camera typically exhibit finer structural details, which may increase the likelihood of invoking DMM evaluation.

. The RD cost of the first mode in the RD list is considered. A small value of this parameter indicates that the coding block can be efficiently encoded via traditional HEVC intra prediction modes. In such a scenario, the evaluation process of DMM can be omitted.

Based on the foregoing analysis, we fuse these features, the posterior probabilities of the candidate patterns, and the normalized entropy into the input feature vector of the FSVM. To reduce the scale differences among heterogeneous features and improve the numerical stability of the classification process, a logarithmic compression is applied to the feature values. This operation aims to alleviate excessive variations in feature magnitudes rather than enforce a strict range constraint. Specifically, the logarithmic transformation is defined as follows:

Here, x denotes the raw feature and denotes the logarithmically transformed feature.

The NB model generates different candidate mode sets for different PUs by exploiting spatial and temporal neighborhood information. Owing to the considerable heterogeneity in structural characteristics across regions, the candidate sets for each PU typically vary. Training an independent classifier for each candidate combination would greatly increase computational burden and may suffer from sparse samples, making it difficult to guarantee model stability and generalization. To address this issue, we design an FSVM scheme with global training and local decision-making: during training, a unified FSVM is learned using samples from the full mode space; during inference, the FSVM performs local discrimination only within the high-probability candidate modes provided by NB.

Let the multi-class training set be , where denotes the input feature vector of the i-th PU and is the ground-truth intra-prediction mode label. Using an RBF kernel with the implicit mapping , the FSVM learns a discriminative function for each mode:

where denotes the decision score of the jth candidate mode; represents the weight vector of the j-th mode, and denotes the bias term corresponding to the j-th mode.

Training over the full mode space enables the FSVM to capture global decision boundaries and cross-mode dependencies, thereby improving generalization and discriminative robustness. Nevertheless, during inference, the classifier does not exhaustively evaluate all M modes. Instead, it performs local judgment within the candidate set produced by NB. The discriminative strength of each candidate mode is computed based on the input feature vector x, and the final predicted mode is selected as

Here, denotes the index of the mode with the highest score.

3.3. Overview of the Algorithm

Based on the foregoing analysis, the procedure of the algorithm proposed in this paper is as follows. Initially, the pattern information of adjacent and same-position PUs is extracted from the current PU to construct the neighborhood set , which captures the spatio-temporal correlations. Simultaneously, a set of initial candidate patterns with high probabilities is established. Subsequently, the posterior probability of the current PU within the initial candidate pattern set is computed using the Naïve Bayes model. Moreover, the normalized probability entropy and confidence C are derived from the probability distribution. If the confidence exceeds the threshold , it implies that the prediction result is highly accurate, and the pattern with the highest probability is directly chosen as the optimal intra-frame prediction mode. Conversely, if the confidence is lower than the threshold, it indicates that the pattern distribution is relatively scattered and the prediction result is highly uncertain. In this case, the algorithm retains the top three high-probability patterns as the candidate set and feeds their probability features along with the designed features into the FSVM model for refined discrimination. The FSVM calculates the discrimination strength of the candidate patterns based on the input feature vector and ultimately selects the pattern with the highest discrimination strength as the final intra-frame mode of the current PU. The algorithm flowchart is presented in Figure 4.

Figure 4.

Overall algorithm flow chart.

4. Experimental Results and Analysis

To assess the proposed 3D-HEVC depth map mode decision algorithm, we incorporated it into the 3D-HEVC reference software (HTM-16.2) [30]. The experiments were conducted on eight multiview test sequences recommended by the JCT-3V working group [31], covering two spatial resolutions, namely and . The detailed information of these test sequences is summarized in Table 3. Each sequence set consists of three texture video views and three corresponding depth map views.

Table 3.

Test sequences and associated parameter information.

All experiments were carried out strictly in accordance with the 3D-HEVC Common Test Conditions (CTC) specified by JCT-3V, under the All-Intra (AI) configuration. In this configuration, all frames are encoded independently using intra prediction only, and no inter-coded frames (i.e., P-frames or B-frames) are involved. Consequently, the effective GOP size is set to 1. The encoder was configured in a 3-view scenario, including one independent center view and two dependent side views (left and right). The encoding order followed the sequence , , , , , , where and denote the texture and depth frames of the i-th view, respectively. CTUs were fixed at pixels with a maximum partition depth of 4, and the fast QTL option was enabled. QPs were set to for depth-map coding and for texture-video coding. We compare the proposed method against the 3D-HEVC encoder with full-search mode evaluation and against representative fast algorithms, as summarized in Tables 4 and 6. The coding performance is appraised in terms of encoding time and total bit rate. Specifically, BDBR represents the optimization magnitude of the total bit rate in 3D video coding, while indicates the percentage variation in the overall encoding time. The calculation of is presented as follows:

Among them, and denote the running times of the proposed algorithm and the original 3D-HEVC encoder, respectively.

4.1. A Performance Comparison and Analysis Between the Algorithm Proposed in This Paper and the Original 3D-HEVC Encoder Are Conducted

In this section, a comprehensive analysis of the simulation results regarding the algorithm’s performance will be carried out. Table 4 showcases the performance comparison data between the proposed algorithm and the original 3D-HEVC encoder. The experimental findings indicate that, while preserving comparable rate-distortion performance, the overall proposed algorithm can effectively decrease the encoding time of 3D-HEVC across all test sequences. As presented in Table 4, it is evident that for sequences with a resolution of 1024 × 768, the average encoding time is decreased by 55.37%. For sequences with a resolution of 1920 × 1088, the average encoding time is diminished by 50.45%. Among these, the Kendo sequence attains the maximum reduction of 56.47%, whereas the GT_Fly sequence reaches the minimum reduction of 47.95%. This phenomenon can be attributed to the fact that for sequences such as Kendo, the smooth regions of the depth map occupy a relatively high proportion. The Naive Bayes model can rapidly identify the high-confidence candidate set. Most prediction blocks can be directly encoded in the highest probability mode, and the number of FSVM decision-making instances is limited, thereby resulting in a more substantial reduction in encoding time. Conversely, sequences like GT_Fly feature dense edges and significant depth gradient variations. To ensure prediction accuracy, more frequent invocations of FSVM are required, which leads to a relatively smaller reduction in encoding time. Overall, the proposed algorithm is capable of attaining a dynamic equilibrium between complexity and performance in diverse scenarios, leveraging the Bayesian confidence and the FSVM decision mechanism. It reduces the encoding time by an average of 52.30% and boosts the average BDBR by 0.68%. Despite ensuring a certain level of rate-distortion performance loss, it remarkably enhances the efficiency of intra-mode decision for 3D-HEVC depth maps.

Table 4.

Comparison of the proposed algorithm with the 3D-HEVC encoder.

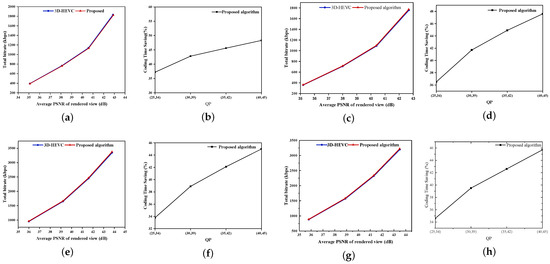

Figure 5 depicts the RD performance and the encoding time savings achieved by the proposed algorithm and the 3D-HEVC reference encoder under four representative test sequences. As shown in the figure, under various combinations of texture-video and depth-map quantization parameters, namely (25, 34), (30, 39), (35, 42), and (40, 45), the RD curves of the proposed algorithm and the baseline 3D-HEVC encoder are largely coincident. This indicates that the proposed method can maintain rate-distortion performance comparable to that of the original encoder while substantially reducing computational complexity. In terms of encoding time, the time-saving curves increase as the QP becomes larger. This phenomenon can be attributed to the following reasons. When the QP is low, due to finer PU partitioning and a more scattered mode distribution, the decision ratio of the FSVM is relatively high, resulting in limited time savings. Conversely, as the QP increases, the number of PUs decreases and the Bayesian confidence level improves. This enables the algorithm to terminate mode decisions earlier and more frequently, thereby achieving a more pronounced acceleration effect. Overall, the results demonstrate that the proposed method can strike a favorable balance between complexity and encoding performance under diverse quantization parameter conditions.

Figure 5.

The RD performance and the reduction in encoding time of the proposed algorithm are compared with those of the 3D-HEVC encoder for four representative test sequences under different combinations of texture and depth QPs. (a) RD performance on the “Balloons” sequence. (b) Encoding-time saving curves for “Balloons”. (c) RD performance on the “Kendo” sequence. (d) Encoding-time saving curves for “Kendo”. (e) RD performance on the “Undo_Dancer” sequence. (f) Encoding-time saving curves for “Undo_Dancer”. (g) RD performance on the “Poznan_Hall2” sequence. (h) Encoding-time saving curves for “Poznan_Hall2”.

4.2. Ablation Experiments and Analysis

To validate the efficacy of each core module within the intra-mode decision approach for depth maps proposed in this paper, a series of ablation experiments were conducted under the HTM-16.2 reference software and the Common Test Conditions (CTC) configuration. The corresponding results are summarized in Table 5. The proposed methodology encompasses three core modules: spatio-temporal prior-guided Bayesian probability estimation, an entropy-based confidence measure for early termination, and a refined decision module based on FSVM. By selectively eliminating each module, we assessed its individual contribution to the overall coding efficiency and rate-distortion performance.

Table 5.

Ablation study results of the proposed method.

The Impact of FSVM. By comparing the “Full model” with the “model without FSVM (w/o FSVM),” it can be clearly observed that, in the absence of FSVM-based refined classification, the average BDBR increases from 0.68% to 0.89%. This indicates that FSVM provides essential and effective compensation when handling depth-discontinuous regions and complex structural patterns. Furthermore, since the fine classification step is omitted, the encoding complexity is slightly reduced, resulting in a 4.43% increase in the time-saving ratio. These findings demonstrate that the FSVM module plays a crucial role in achieving accurate mode prediction for depth blocks with complex structural characteristics.

Impact of the Confidence Mechanism. When the confidence mechanism is removed, all PUs are required to engage in the FSVM decision-making process. Consequently, the average time is significantly reduced to 41.12%. Although the BDBR of this scenario is comparable to that of the complete model, its complexity has increased substantially. This finding suggests that the confidence, serving as an effective adaptive control mechanism, can remarkably decrease unnecessary invocations of FSVM while maintaining the rate-distortion performance at a nearly unchanged level. Thus, it attains the objective of reducing the overall complexity.

Effect of Spatio-Temporal Prior. When both the spatio-temporal prior and Bayesian modeling (w/o prior) are eliminated, the algorithm performance experiences the most substantial degradation. This outcome indicates that leveraging the spatio-temporal correlation among prediction units is of utmost importance for shrinking the mode search space.

Performance of a Single-stage Classifier. The model consisting solely of FSVM is required to conduct prediction operations on 35 intra modes and DMM. Through a comprehensive comparison, it can be observed that the strategy of single-stage classification within the full mode space, relying merely on FSVM, results in an increase in BDBR to 1.04%. Meanwhile, the time savings are significantly diminished to 21.63%. These comparison results indirectly validate the significance and indispensability of the two-stage mode decision framework proposed in this paper.

Overall, the ablation experiments demonstrate that the removal of any single module results in varying degrees of rate-distortion performance degradation or an increase in computational complexity. This indicates that the full model attains the optimal balance between coding efficiency and prediction accuracy.

4.3. Comparative Results Between the Proposed Method and State-of-the-Art Fast Methods

To further elucidate the performance of the proposed algorithm, it is compared with representative fast methods reported in refs. [8,32], as summarized in Table 6. A fast intra-mode decision algorithm for depth map intra coding based on efficient feature selection is proposed in ref. [32]. By introducing a Self-Organizing Map (SOM) model and extracting key features such as texture information and quantization parameters, the method performs mode clustering to effectively reduce the search space of candidate modes. Consequently, the encoding complexity is significantly decreased while the quality of the synthesized view is maintained.

Table 6.

Comparison with related works.

As shown in Table 6, the proposed method results in an average coding efficiency loss of 0.68%. In comparison, the coding efficiency losses reported in refs. [8,32] are 0.78% and 0.26%, respectively. Compared with the method in ref. [8], the proposed method reduces the coding efficiency loss by 0.10%, whereas it incurs an additional loss of 0.42% when compared with the method in refs. [32]. In terms of coding time, the proposed algorithm achieves an average time saving of 52.30%, while the methods in refs. [8,32] report average time savings of 51.5% and 39.8%, respectively. Therefore, the proposed method exhibits clear advantages in encoding speed, achieving relative improvements of 0.8% and 12.5% over the methods in refs. [8,32], respectively. Overall, the experimental results demonstrate that the proposed algorithm attains a favorable trade-off between coding efficiency and coding time.

Table 7 summarizes the comparative results between the proposed method and several recent related approaches. A hierarchical CU partition prediction model, termed Swin-HierNet, was proposed in ref. [33], which leverages the Swin Transformer architecture and multi-branch networks. By incorporating multi-branch feature fusion and a recursive decision strategy, the model enables efficient CU partitioning in the intra-frame coding of 3D-HEVC depth maps. Compared with the method in ref. [33], the proposed approach incurs a slightly higher coding quality loss of 0.33%, while achieving a 3.87% improvement in coding time saving, indicating a further reduction in computational complexity with only marginal impact on rate–distortion performance.

Table 7.

Comparison of the proposed method with recent state-of-the-art methods.

A convolutional neural network incorporating an attention mechanism, feature distillation, and edge perception has been proposed to predict CU partition structures in depth maps by jointly exploiting global, local, and edge-related features [34]. Compared with this method, the proposed approach achieves a higher coding time saving of 6.39%, while also reducing the coding quality loss by 0.41%.

It is worth noting that some existing approaches may achieve superior performance in certain individual metrics, such as coding efficiency or rate–distortion optimization, typically at the cost of increased model complexity or additional computational overhead. In contrast, the proposed method is designed to achieve a balanced trade-off between coding efficiency and coding quality, providing competitive performance while maintaining relatively low computational complexity.

5. Conclusions

This study focuses on the substantial computational complexity associated with intra-mode decision in depth maps. It presents a two-stage fast intra-mode decision algorithm for depth maps, which integrates naive Bayes probability estimation with FSVM decision-making. This approach effectively harnesses the structural correlations within both spatial and temporal neighborhoods. Initially, it accomplishes preliminary screening through candidate mode constraints and confidence determination. Subsequently, FSVM is employed to enhance the decision accuracy in complex regions. Experimental findings indicate that this algorithm significantly alleviates the overall encoding complexity while essentially maintaining the rate-distortion performance. Specifically, it achieves an average reduction in encoding time of 52.30% and only a 0.68% increase in BDBR. Moreover, it exhibits excellent stability and universality across various types of sequences.

Although the proposed two-stage fast mode-decision framework achieves a favorable trade-off between encoding complexity and Rate–Distortion performance, several limitations remain. First, the method leverages spatio-temporal priors from neighboring PUs and the co-located PU in the previous frame to narrow the candidate-mode set. In sequences with rapid motion or severe depth-map noise, the correlation of the co-located PU may weaken, reducing prior reliability and potentially biasing candidate screening. Second, the framework relies on parameters such as the confidence threshold and the number of retained candidate modes. With fixed settings, it may be difficult to maintain an optimal balance across different sequences, resolutions, and QP conditions. Future work will address these issues in two directions: (i) incorporating prior-reliability assessment with adaptive weighting to relax the candidate set or switch to a more robust candidate-generation strategy when the prior is unreliable; and (ii) developing content-adaptive schemes for thresholding and candidate selection based on QP, resolution, and scene complexity to improve cross-sequence generalization.

Author Contributions

Conceptualization, E.T. and J.Z.; methodology, E.T.; software, J.Z.; validation, E.T., Q.Z. and J.Z.; formalanalysis, J.Z.; investigation, J.Z.; resources, Q.Z.; datacuration, J.Z.; writing—originaldraft, J.Z.; writing—reviewandediting, J.Z.; visualization, E.T.; supervision, Q.Z.; project administration, Q.Z.; funding acquisition, Q.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China No. 61771432 and No. 61302118, and the Key projects Natural Science Foundation of Henan 232300421150, and Zhongyuan Science and Technology Innovation Leadership Program 244200510026, the Scientific and Technological Project of Henan Province 232102211014 and 23210221101.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| 3D-HEVC | 3Dextensionstandard of High-Efficiency Video Coding |

| HEVC | High-Efficiency Video Coding |

| PU | Prediction Unit |

| RDO | Rate Distortion Optimization |

| QP | Quantization Parameter |

| BDBR | Bjøntegaard Delta Bit-Rate |

| CTC | Common Test Conditions |

| DIBR | Depth-Image-Based Rendering |

| HTM | 3D-HEVC Test Model |

| FSVM | Fuzzy Support Vector Machine |

| NB | Naïve Bayes |

| RDcost | RD-Distortion cost |

| RDO | RD-Distortion Optimization |

| RMD | Rough Mode Decision |

| DMM | Depth Modeling Mode |

References

- Bakkouri, S.; Elyousfi, A.; Hamout, H. Fast CU size and mode decision algorithm for 3D-HEVC intercoding. Multimed. Tools Appl. 2020, 79, 6987–7004. [Google Scholar]

- Wang, F.; Du, Y.; Zhang, Q. Fast algorithm for depth map intra-frame coding in 3D-HEVC based on Swin Transformer and multi-branch network. Electronics 2025, 14, 1703. [Google Scholar] [CrossRef]

- Li, T.; Wang, H.; Chen, Y.; Yu, L. Fast depth intra coding based on spatial correlation and rate distortion cost in 3D-HEVC. Signal Process. Image Commun. 2020, 80, 115668. [Google Scholar] [CrossRef]

- Liu, C.; Jia, K. Multi-layer features fusion model-guided low-complexity 3D-HEVC intra coding. IEEE Access 2024, 12, 41074–41083. [Google Scholar]

- Liu, S.; Cui, S.; Li, T.; Liu, H.; Yang, Q.; Yang, H. Fast CU partition algorithm based on Swin-Transformer for depth intra coding in 3D-HEVC. Multimed. Tools Appl. 2024, 83, 90315–90329. [Google Scholar] [CrossRef]

- Moura, C.; Saldanha, M.; Sanchez, G.; Marcon, C.; Porto, M.; Agostini, L. Fast intra mode decision for 3D-HEVC depth map coding using decision trees. In Proceedings of the 27th IEEE International Conference on Electronics, Circuits and Systems (ICECS), Glasgow, UK, 23–25 November 2020; pp. 1–4. [Google Scholar]

- Zhang, H.; Yao, W.; Huang, H.; Wu, Y.; Dai, G. Adaptive coding unit size convolutional neural network for fast 3D-HEVC depth map intra coding. J. Electron. Imaging 2021, 30, 041405. [Google Scholar]

- Lee, J.Y.; Park, S. Fast depth intra mode decision using intra prediction cost and probability in 3D-HEVC. Multimed. Tools Appl. 2024, 83, 80411–80424. [Google Scholar] [CrossRef]

- Lee, J.Y.; Kang, M.; Park, S. Fast depth intra mode decision using machine learning in 3D-HEVC. SSRN Electron. J. 2022. [Google Scholar] [CrossRef]

- Lin, J.R.; Chen, M.J.; Yeh, C.H.; Hsu, Y.C. Visual perception based algorithm for fast depth intra coding of 3D-HEVC. IEEE Trans. Multimed. 2021, 24, 1707–1720. [Google Scholar]

- Zhou, T.; Chen, J.; Lin, Q. Optimizing intra coding in 3D-HEVC with DMP. In Proceedings of the International Conference on Advanced Image Processing Technology (AIPT 2024), Shanghai, China, 10–12 January 2024; pp. 247–252. [Google Scholar]

- Saldanha, M.; Sanchez, G.; Marcon, C.; Agostini, L. Fast 3D-HEVC depth map encoding using machine learning. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 850–861. [Google Scholar] [CrossRef]

- Chen, M.J.; Lin, J.R.; Hsu, Y.C.; Yeh, C.H. Fast 3D-HEVC depth intra coding based on boundary continuity. IEEE Access 2021, 9, 79588–79599. [Google Scholar] [CrossRef]

- Liu, C.; Jia, K.; Liu, P. Fast depth intra coding based on depth edge classification network in 3D-HEVC. IEEE Trans. Broadcast. 2021, 68, 97–109. [Google Scholar] [CrossRef]

- Fu, C.H.; Chan, Y.L.; Zhang, H.B.; Tsang, S.H.; Xu, S.T. Efficient depth intra frame coding in 3D-HEVC by corner points. IEEE Trans. Image Process. 2020, 30, 1608–1622. [Google Scholar] [CrossRef] [PubMed]

- Zhang, R.; Jia, K.; Liu, P.; Sun, Z. Fast intra-mode decision for depth map coding in 3D-HEVC. J. Real-Time Image Process. 2020, 17, 1637–1646. [Google Scholar] [CrossRef]

- Hamout, H.; Elyousfi, A. Low 3D-HEVC depth map intra mode selection complexity based on clustering algorithm and edge detection. In Proceedings of the International Conference on Artificial Intelligence and Green Computing, Istanbul, Turkey, 5–7 June 2023; pp. 3–15. [Google Scholar]

- Li, T.; Yu, L.; Wang, H.; Chen, Y. Fast depth intra coding based on texture feature and spatio-temporal correlation in 3D-HEVC. IET Image Process. 2021, 15, 206–217. [Google Scholar] [CrossRef]

- Zhang, Q.; Wang, Y.; Huang, L.; Jiang, B.; Su, R. Adaptive CU split prediction and fast mode decision based on JND model for 3D-HEVC texture coding. Digit. Signal Process. 2020, 106, 102851. [Google Scholar] [CrossRef]

- Zhang, Q.; Wang, Y.; Huang, L.; Wei, T.; Su, R. Fast coding scheme for low complexity 3D-HEVC based on video content property. Multimed. Tools Appl. 2021, 80, 25909–25925. [Google Scholar] [CrossRef]

- Chen, Z.; Shi, J.; Li, W. Learned fast HEVC intra coding. IEEE Trans. Image Process. 2020, 29, 5431–5446. [Google Scholar] [CrossRef]

- Fu, C.H.; Chen, H.; Chan, Y.L.; Tsang, S.H.; Zhu, X. Early termination for fast intra mode decision in depth map coding using DIS-inheritance. Signal Process. Image Commun. 2020, 80, 115644. [Google Scholar] [CrossRef]

- Li, Y.; Yang, G.; Zhu, Y.; Ding, X.; Song, Y.; Zhang, D. Hybrid stopping model-based fast PU and CU decision for 3D-HEVC texture coding. J. Real-Time Image Process. 2020, 17, 1227–1238. [Google Scholar]

- Wu, Y.; Jia, K. Fast coding based on dual-head attention network in 3D-HEVC. J. Real-Time Image Process. 2025, 22, 170. [Google Scholar] [CrossRef]

- Bakkouri, S.; Elyousfi, A. Adaptive CU size decision algorithm based on gradient boosting machines for 3D-HEVC inter-coding. Multimed. Tools Appl. 2023, 82, 32539–32557. [Google Scholar] [CrossRef]

- Li, Y.; Zhu, N.; Yang, G.; Zhu, Y.; Ding, X. Self-learning residual model for fast intra CU size decision in 3D-HEVC. Signal Process. Image Commun. 2020, 80, 115660. [Google Scholar] [CrossRef]

- Hamout, H.; Elyousfi, A. Fast depth map intra-mode selection for 3D-HEVC intra-coding. Signal Image Video Process. 2020, 14, 1301–1308. [Google Scholar] [CrossRef]

- Zhang, Z.; Yu, L.; Qian, J.; Wang, H. Learning-based fast depth inter coding for 3D-HEVC via XGBoost. In Proceedings of the 2022 Data Compression Conference (DCC), Snowbird, UT, USA, 22–25 March 2022; pp. 43–52. [Google Scholar]

- Yao, W.X.; Wang, X.X.; Yang, D.; Wang, X.; Li, W. A fast wedgelet partitioning for depth map prediction in 3D-HEVC. In Proceedings of the Twelfth International Conference on Graphics and Image Processing (ICGIP 2020), Online, 5–7 December 2020; pp. 257–266. [Google Scholar]

- Omran, N.; Kachouri, R.; Maraoui, A.; Werda, I.; Hamdi, B. 3D-HEVC fast CCL intra partitioning algorithm for low bitrate applications. In Proceedings of the 2025 IEEE 22nd International Multi-Conference on Systems, Signals & Devices (SSD), Madrid, Spain, 18–21 February 2025; pp. 749–755. [Google Scholar]

- Li, Y.; Yang, G.; Qu, A.; Zhu, Y. Tunable early CU size decision for depth map intra coding using unsupervised learning. Digit. Signal Process. 2022, 123, 103448. [Google Scholar] [CrossRef]

- Hamout, H.; Hammani, A.; Elyousfi, A. Fast 3D-HEVC intra-prediction for depth map using self-organizing map and efficient features. Signal Image Video Process. 2024, 18, 2289–2296. [Google Scholar] [CrossRef]

- Liu, F.; Zhang, H.; Zhang, Q. Fast Intra-Coding Unit Partitioning for 3D-HEVC intra coding depth maps via hierarchical feature fusion. Electronics 2025, 14, 3646. [Google Scholar] [CrossRef]

- Zeng, J.; Chen, J. Fast CU partitioning for 3D-HEVC depth map based on ADET-CNN. J. Imaging Sci. Technol. 2025, 69, 020508. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.