Abstract

Infrared images are often degraded by complex noise arising from hardware and environmental factors, which complicates subsequent processing and target detection. Existing denoising methods struggle to balance noise removal against detail preservation; to address this, this paper proposes a Wavelet Transform Enhanced Infrared Denoising Model (WTEIDM). First, a Wavelet Transform Self-Attention (WTSA) mechanism is designed, which combines the frequency-domain decomposition ability of the discrete wavelet transform (DWT) with the dynamic weighting of self-attention to separate noise from detail. Second, a Multi-Scale Gated Linear Unit (MSGLU) is devised, which uses dual-branch multi-scale depth-wise convolution and a gating strategy to capture detail information and dynamically modulate features. Finally, a Parallel Hybrid Attention Module (PHAM) is proposed to strengthen cross-dimensional feature fusion through the parallel cross-interaction of spatial and channel attention. Extensive experiments are conducted on five infrared datasets under different noise levels (σ = 15, 25, and 50). The results demonstrate that the proposed WTEIDM outperforms several state-of-the-art denoising algorithms in both PSNR and SSIM, indicating strong generalization capability and robustness.

1. Introduction

As a detection technique for sensing invisible thermal radiation, infrared imaging has been widely applied in critical fields such as military reconnaissance, medical diagnosis, industrial monitoring, and security systems, owing to its strong penetration capability in low-light conditions and excellent resistance to environmental interference. However, during the acquisition and transmission processes, infrared images are inevitably corrupted by noise due to environmental factors or the imaging device itself [1]. Common noise types include impulse noise and Gaussian noise, with the latter being particularly destructive and challenging to remove [2]. Such noise not only degrades the visual quality of infrared images but also adversely affects subsequent analysis and processing tasks. Therefore, infrared image denoising holds significant theoretical research value and practical importance.

Given the prevalence and detrimental effects of noise in infrared images, researchers have long worked on effective denoising algorithms. Existing approaches fall broadly into two groups: signal processing methods based on image statistics and data-driven deep learning techniques. Traditional signal processing methods construct mathematical models from the statistical characteristics or spatial structural features of images to separate noise from the true signal. Common approaches include spatial domain methods (e.g., mean filtering [3], median filtering [4], bilateral filtering [5]), frequency domain methods (e.g., Wiener filtering [6], wavelet transform [7], Fourier transform [8]), and hybrid spatial-frequency methods such as non-local means (NLM) [9] and block-matching and 3D filtering (BM3D) [10]. These methods have limitations when handling Gaussian noise. Mean filtering tends to blur image edges and detail textures. The effectiveness of Wiener filtering relies on prior knowledge of the signal and noise that is difficult to obtain accurately. Even the relatively high-performing BM3D algorithm depends heavily on manual tuning of predefined parameters such as the search window size, patch size, and similarity threshold. Consequently, traditional denoising methods depend strongly on specific model assumptions and manual parameter optimization. This makes it difficult for them to adaptively balance denoising strength against the preservation of critical details (e.g., weak edges and textures) when processing complex and variable infrared noise.
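The toy NumPy sketch below makes the edge-blurring remark concrete. It filters a one-dimensional step edge with a 3-tap mean filter and a 3-tap median filter. The signal is an illustrative example of our own, not data from the paper, and the function names are hypothetical. The mean filter spreads the step over neighboring samples, while the median filter leaves this noise-free step intact.

```python
import numpy as np

def mean_filter_1d(signal, k=3):
    """Sliding-window mean with edge-replicating padding (odd window size k)."""
    pad = k // 2
    padded = np.pad(signal, pad, mode="edge")
    return np.array([padded[i:i + k].mean() for i in range(len(signal))])

def median_filter_1d(signal, k=3):
    """Sliding-window median with edge-replicating padding (odd window size k)."""
    pad = k // 2
    padded = np.pad(signal, pad, mode="edge")
    return np.array([np.median(padded[i:i + k]) for i in range(len(signal))])

# An ideal step edge between a dark and a bright region.
edge = np.array([0, 0, 0, 0, 100, 100, 100, 100], dtype=float)
blurred = mean_filter_1d(edge)     # intermediate values appear at the step
preserved = median_filter_1d(edge)  # the step is kept sharp
```

The mean filter produces the intermediate values 33.3 and 66.7 on either side of the step. In a two-dimensional image, the same averaging smears edges and fine textures.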

In recent years, the rapid advancement of artificial intelligence, particularly breakthroughs in data-driven deep learning for computer vision, has opened new technical pathways for infrared image denoising. Traditional methods rely on handcrafted models and features. Deep learning-based denoising approaches instead exploit the end-to-end learning capability of deep neural networks: they learn the statistical distribution of noise and the underlying structure of images directly from large-scale datasets of noisy images paired with their clean versions. Owing to this advantage, the research direction has attracted extensive attention from researchers worldwide. Among deep learning architectures, the Transformer has emerged as a promising candidate for low-level vision tasks because its self-attention mechanism effectively models long-range dependencies. This has prompted preliminary explorations of its use in image denoising. For instance, Pureformer [11] and TBSN [12] have demonstrated promising results in natural image denoising.

However, directly applying Transformer architectures to infrared images with complex noise still faces several challenges. First, infrared images generally suffer from a low signal-to-noise ratio (SNR). Because conventional self-attention operates globally in pixel space, it often struggles to distinguish noise from genuine details, resulting in over-smoothing or residual noise. Second, most vision Transformers lack explicit modeling of frequency-domain information. This limits their ability to capture the distinct distributions of noise and structural details across frequency bands. Third, the standard feedforward network (FFN) in Transformers is typically composed of fully connected layers, which adapt poorly to local spatial structures and multi-scale features. This hinders the preservation of critical details such as edges and textures.

Multi-scale feature processing has been widely validated as an effective strategy in low-level vision: it enhances scale-aware representation learning and strengthens feature interaction across receptive fields. Jin et al. [13] proposed a multi-branch Taylor expansion-based convolution framework with strong scale-aware feature interaction for image restoration. DDMSNet [14] designed a dual-branch dynamic multi-scale module that adjusts receptive fields according to the input content, providing a useful reference for multi-scale feature extraction in denoising. However, directly adopting such multi-scale designs may still be insufficient for infrared denoising. Because of the low SNR, weak edges and textures are easily overwhelmed by noise, which calls for more selective and adaptive feature modulation. These limitations have motivated ongoing efforts to optimize Transformer-based denoising models. They have also prompted researchers to explore alternative deep learning frameworks for infrared image denoising.

Dedicated Transformer-based models for infrared denoising are still at an early stage. Nevertheless, existing deep learning methods built on attention, reinforcement learning, or adversarial learning frameworks have provided valuable insights into feature extraction and noise suppression. Li et al. [15] introduced a deep learning model incorporating a second-order attention mechanism and non-local modules. It extracts features and fits noise effectively, but its ability to preserve fine details in infrared images remains limited. Zhang et al. [16] designed a deep reinforcement learning model that optimizes the extraction of star targets under few-frame conditions, but it tends to blur details surrounding the targets. Hu et al. [17] proposed a symmetric multi-scale encoder–decoder model that improves the reconstruction quality of infrared images through multi-scale information extraction guidance, although some edge details are still lost. Yang et al. [18] combined adversarial learning with a multi-level feature attention network (MLFAN) to achieve effective denoising. However, the approach still struggles to preserve fine textures when integrating features from different levels. The SwinDenoising method proposed by Wu et al. [19] improves robustness by capturing both local and global features, yet its ability to restore details within complex backgrounds leaves room for improvement. Although these approaches achieve promising denoising results, a key challenge remains: balancing denoising strength against the preservation of detail integrity.

To address the aforementioned challenges, this paper proposes a Wavelet Transform Enhanced Infrared Denoising Model (WTEIDM). The main contributions are summarized as follows:

- (1) A Wavelet Transform Self-Attention (WTSA) mechanism is designed, which uses the wavelet transform to decompose features and capture critical information accurately. Combined with self-attention, it balances infrared denoising against detail preservation.

- (2) A Multi-scale Gated Linear Unit (MSGLU) is developed, which employs 3 × 3 and 5 × 5 depth-wise convolutions to construct multi-scale branches. Coupled with a gating mechanism, it enables dynamic feature modulation and enhances the capture of detailed information.

- (3) A Parallel Hybrid Attention Module (PHAM) is introduced, which performs feature fusion through the parallel, interactive operation of spatial and channel attention. This strengthens cross-dimensional feature integration and improves detail retention.

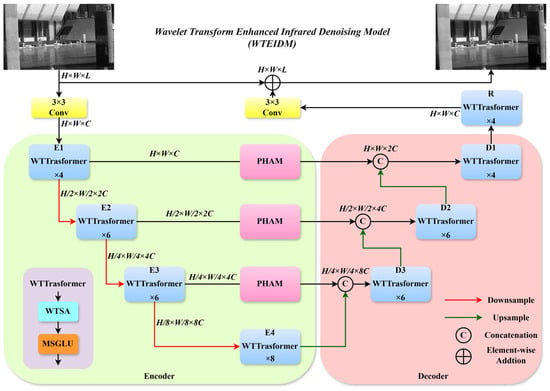

2. The Proposed WTEIDM

To address the challenge of balancing noise suppression and detail preservation in infrared image denoising, this paper proposes a wavelet transform enhanced infrared denoising model (WTEIDM). The model is built upon a U-Net architecture integrated with a Transformer backbone, with its overall structure illustrated in Figure 1. In the encoder part, a WTTransformer module is designed, which integrates a wavelet transform self-attention (WTSA) mechanism and a multi-scale gated linear unit (MSGLU). The WTSA performs feature decomposition via wavelet transformation to accurately capture essential information, while the MSGLU applies multi-scale depth-wise convolution and dynamic gating to enhance the representation of edges, textures, and other detailed structures during noise removal. Between the encoder and decoder, a parallel hybrid attention module (PHAM) is incorporated to perform feature fusion via the synergistic operation of parallel spatial and channel attention pathways. This design enhances the discrimination between noise and meaningful details and reinforces the cross-stage propagation of critical features, thereby mitigating detail loss during denoising. The decoder adopts a layered WTTransformer structure combined with up-sampling and feature concatenation to progressively restore image details. In summary, the proposed model effectively suppresses noise while preserving structural integrity through collaborative encoding, fusion, and decoding, which significantly improves the denoising quality of infrared images.

Figure 1.

The network structure of WTEIDM. Data flow (solid arrows): Noisy image → Conv/Normalization (initial feature extraction) → Encoder (stacked WTTransformer: WTSA→MSGLU) → PHAM (cross-dimensional fusion) → Decoder (stacked WTTransformer + up-sampling) → Conv (output denoised image). Skip connections (dashed arrows) bridge encoder–decoder features of the same scale to compensate for detail loss. Module collaboration: WTSA (frequency-domain noise-detail separation) and MSGLU (multi-scale spatial modulation) form the core of the WTTransformer; PHAM (between the encoder and decoder) fuses spatial and channel features, forming a “separation-modulation-fusion-reconstruction” closed loop.

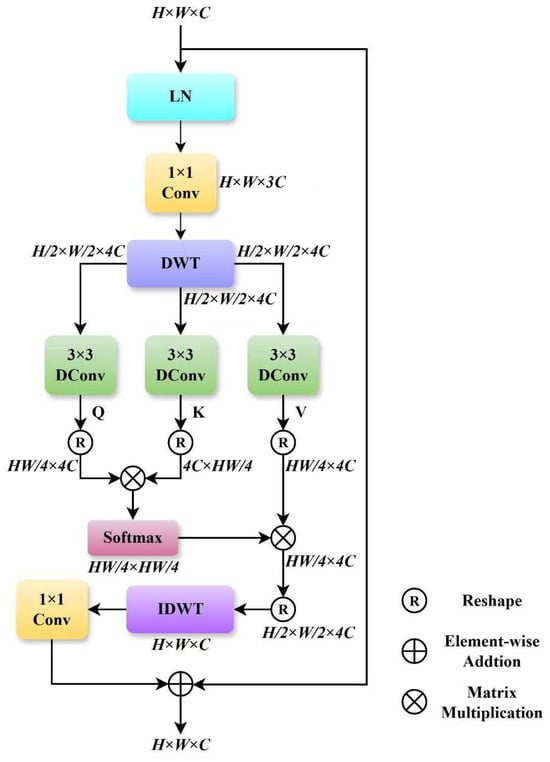

2.1. Wavelet Transform Self-Attention (WTSA)

In infrared image denoising, conventional self-attention performs feature interaction in pixel space. This makes it susceptible to the low signal-to-noise ratio of infrared images, so it struggles to distinguish noise from detail features. Furthermore, its limited ability to discriminate features in the frequency domain means it fails to adequately capture detail information embedded in different frequency components. This often leads to either loss of detail or residual noise in the denoised output.

To overcome these shortcomings, we design a wavelet transform self-attention (WTSA) mechanism. It integrates the frequency-domain decomposition capability of the discrete wavelet transform (DWT) with the dynamic feature weighting of self-attention, allowing noise to be separated accurately from meaningful detail features. The structure of WTSA is illustrated in Figure 2.

Figure 2.

The network structure of WTSA module.

The core workflow of the WTSA module comprises four stages: feature preprocessing, wavelet frequency-domain decomposition, attentive feature interaction, and feature reconstruction. First, the input feature map undergoes layer normalization to standardize its distribution, which stabilizes training and suppresses gradient fluctuation. The normalized features are then passed through a 1 × 1 convolution that adjusts the channel dimension to 3C, yielding an intermediate feature map. This channel transformation step reduces parameter redundancy for the subsequent wavelet decomposition and attention computations.

Next, the intermediate feature map is decomposed by the discrete wavelet transform (DWT) into four sub-band features. The Haar wavelet basis is adopted. Its step-shaped filters effectively capture local edge information, suppressing feature redundancy while enhancing inter-channel discriminability. The Haar wavelet is also computationally efficient, giving a favorable trade-off between representation accuracy and computational cost. The four resulting sub-bands fall into two kinds. One low-frequency approximation component carries the global structural information of the image. Three high-frequency detail components contain edges and textures together with noise. This decomposition achieves a preliminary separation of noise and details in the frequency domain and provides more discriminative feature representations for the subsequent attention mechanism.

In the attentive feature interaction stage, the sub-band features, denoted $X_w$, are fed into three parallel 3 × 3 depth-wise convolutions (DConv) to extract the Query (Q), Key (K), and Value (V) components, respectively:

$$Q = \mathrm{DConv}_{3\times3}^{Q}(X_w), \quad K = \mathrm{DConv}_{3\times3}^{K}(X_w), \quad V = \mathrm{DConv}_{3\times3}^{V}(X_w)$$
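The Haar decomposition step can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code. It assumes a single-level orthonormal Haar transform applied independently to every channel of a (C, H, W) array with even H and W. The 1/2 normalization, the LH/HL/HH naming, and the function names are our conventions; libraries differ on both normalization and naming. The inverse reproduces the input exactly, so an inverse transform can return features to the original resolution without loss.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level orthonormal 2D Haar DWT over the last two axes.

    x: array of shape (..., H, W) with even H and W.
    Returns (LL, LH, HL, HH), each of shape (..., H/2, W/2).
    """
    a = x[..., 0::2, 0::2]  # top-left pixel of each 2x2 block
    b = x[..., 0::2, 1::2]  # top-right
    c = x[..., 1::2, 0::2]  # bottom-left
    d = x[..., 1::2, 1::2]  # bottom-right
    ll = (a + b + c + d) / 2  # low-frequency approximation
    lh = (a - b + c - d) / 2  # left-right differences (responds to vertical edges)
    hl = (a + b - c - d) / 2  # top-bottom differences (responds to horizontal edges)
    hh = (a - b - c + d) / 2  # diagonal detail
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    shape = ll.shape[:-2] + (2 * ll.shape[-2], 2 * ll.shape[-1])
    out = np.empty(shape, dtype=ll.dtype)
    out[..., 0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[..., 0::2, 1::2] = (ll - lh + hl - hh) / 2
    out[..., 1::2, 0::2] = (ll + lh - hl - hh) / 2
    out[..., 1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out

rng = np.random.default_rng(0)
feat = rng.standard_normal((3, 8, 8))  # a toy (C, H, W) feature map
bands = haar_dwt2(feat)                # four (3, 4, 4) sub-bands
recon = haar_idwt2(*bands)             # equals feat up to rounding
```

The transform is orthonormal, so white Gaussian noise keeps the same variance in every sub-band. Smooth image content, by contrast, concentrates in LL. This is the kind of frequency-domain separation that the decomposition step is meant to expose.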

The local receptive field of the 3 × 3 depth-wise convolution strengthens local feature extraction within the four sub-bands. As a result, the generated Q, K, and V features better match the data distribution in the wavelet domain. Q, K, and V are then reshaped into sequence form. The attention weight matrix is computed from the product of the query and key sequences scaled by d, normalized by a Softmax, and applied to the value sequence, where d denotes a learnable scaling factor. This process dynamically amplifies informative feature weights while suppressing interference from noise-dominated sub-bands.
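A minimal NumPy sketch of the attention step follows. The text does not specify whether the attention map is formed over spatial positions or over channels. This sketch assumes a channel-wise (C × C) map, which keeps the cost linear in the number of pixels. The scaling factor d is learnable in the model but fixed here, and the function names are hypothetical. To attend over pixels instead, transpose the reshaped sequences so that the weight matrix becomes (H·W) × (H·W).

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_channel_attention(q, k, v, d):
    """Attention with a C x C weight matrix (channel-wise variant, assumed).

    q, k, v: arrays of shape (C, H, W) produced by the depth-wise convolutions.
    d: scaling factor (a learnable parameter in the model; a constant here).
    """
    c, h, w = q.shape
    q_seq = q.reshape(c, h * w)  # sequence form: one row per channel
    k_seq = k.reshape(c, h * w)
    v_seq = v.reshape(c, h * w)
    weights = softmax(q_seq @ k_seq.T / d, axis=-1)  # (C, C); each row sums to 1
    out = weights @ v_seq                            # re-weight the value channels
    return out.reshape(c, h, w), weights             # restore spatial dimensions

rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((4, 6, 6)) for _ in range(3))
out, weights = scaled_channel_attention(q, k, v, d=2.0)
```

Each output channel is a convex combination of the value channels. A larger d flattens the Softmax and mixes channels more evenly; a smaller d sharpens it toward the best-matching channels.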

Finally, the output of the attention mechanism is reshaped to restore its spatial dimensions. The four sub-band features are then reconstructed by the inverse discrete wavelet transform (IDWT). A 1 × 1 convolution refines the consistency of the channel features to produce the reconstructed features. A residual connection adds the module input back to preserve the original feature information, giving the final output:

$$Y = X + \mathrm{Conv}_{1\times1}\big(\mathrm{IDWT}(A)\big)$$

where $X$ is the input feature map of WTSA, $A$ is the reshaped attention output, and $Y$ is the final output.

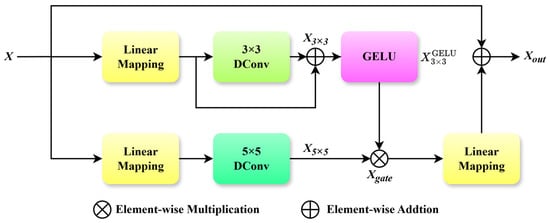

2.2. Multi-Scale Gated Linear Unit (MSGLU)

In the Transformer [20] architecture, the feedforward network (FFN) follows the self-attention layer. It enhances feature representation through two linear transformations with a nonlinear activation between them. In infrared image denoising, however, the FFN has notable limitations. First, its position-wise linear mapping cannot exploit spatial information or capture inter-pixel correlations, which leads to the loss of edge and texture details. Second, it treats all channels uniformly, so noise and meaningful details tend to become entangled. This restricts the model’s adaptability to complex noise patterns and multi-scale structures. To overcome these limitations, this paper introduces a Multi-scale Gated Linear Unit (MSGLU), shown in Figure 3. By integrating dual-branch multi-scale depth-wise convolution with a gating mechanism, MSGLU achieves precise detail feature extraction and dynamic feature modulation.

Figure 3.

The network structure of MSGLU module.

The MSGLU module adopts a dual-branch parallel architecture. The input feature $X$ is first processed by linear mapping layers and then fed into two separate branches. In the first branch, the linearly mapped features pass through a 3 × 3 depth-wise convolution (DConv) to extract local fine-grained features such as edges and textures. A residual connection then adds back the linearly mapped features to preserve information completeness, yielding features $X_1$. These are activated by a GELU function to enhance nonlinear expressiveness. In the second branch, the linearly mapped features pass through a 5 × 5 depth-wise convolution (DConv) to produce features $X_2$. With its larger receptive field, this branch captures broader contextual information, including large-scale structural characteristics and the noise distribution.

To achieve dynamic modulation of multi-scale features, MSGLU incorporates a gating mechanism. It multiplies, element by element, the GELU-activated features of the 3 × 3 depth-wise convolution branch with the features of the 5 × 5 branch. This adaptively weights the contributions of features at different scales:

$$X_g = \mathrm{GELU}(X_1) \odot X_2$$

where $\odot$ denotes element-wise multiplication, $\mathrm{GELU}(X_1)$ is the activated output of the 3 × 3 depth-wise convolution branch, $X_2$ is the output of the 5 × 5 branch, and $X_g$ is the gated feature.

Finally, the gated features $X_g$ undergo a linear projection that restores the channel dimension. The result is fused with the original input $X$ through a residual connection to produce the final output $Y$:

$$Y = X + \mathrm{Linear}(X_g)$$
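The MSGLU computation can be sketched in NumPy as below. This is an illustration under stated assumptions, not the authors' code. Each branch is assumed to have its own 1 × 1 linear mapping into a hidden width, and GELU uses the common tanh approximation. The weights are random, and the parameter names (`w_in1`, `w_in2`, `k3`, `k5`, `w_out`) are hypothetical.

```python
import numpy as np

def conv1x1(x, w):
    """Pointwise (linear) projection: x (C_in, H, W), w (C_out, C_in) -> (C_out, H, W)."""
    return np.einsum("oc,chw->ohw", w, x)

def depthwise_conv(x, kernels):
    """Depth-wise cross-correlation with zero 'same' padding.

    x: (C, H, W); kernels: (C, k, k) with odd k, one kernel per channel.
    """
    _, h, w = x.shape
    k = kernels.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros_like(x, dtype=float)
    for i in range(k):
        for j in range(k):
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out

def gelu(x):
    """Tanh approximation of GELU."""
    return 0.5 * x * (1 + np.tanh(np.sqrt(2 / np.pi) * (x + 0.044715 * x ** 3)))

def msglu(x, params):
    """x: (C, H, W). params holds the hypothetical weights created by init_params."""
    u1 = conv1x1(x, params["w_in1"])            # linear mapping for the 3x3 branch
    u2 = conv1x1(x, params["w_in2"])            # linear mapping for the 5x5 branch
    x1 = u1 + depthwise_conv(u1, params["k3"])  # 3x3 DConv plus residual
    x2 = depthwise_conv(u2, params["k5"])       # 5x5 DConv, larger receptive field
    gated = gelu(x1) * x2                       # element-wise gating
    return x + conv1x1(gated, params["w_out"])  # output projection plus residual

def init_params(c, hidden, rng):
    return {
        "w_in1": rng.standard_normal((hidden, c)) * 0.1,
        "w_in2": rng.standard_normal((hidden, c)) * 0.1,
        "k3": rng.standard_normal((hidden, 3, 3)) * 0.1,
        "k5": rng.standard_normal((hidden, 5, 5)) * 0.1,
        "w_out": rng.standard_normal((c, hidden)) * 0.1,
    }

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 16, 16))
y = msglu(x, init_params(c=4, hidden=8, rng=rng))
```

If the output projection is set to zero, the block reduces to an identity mapping through the residual path. This makes a convenient sanity check for any implementation.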

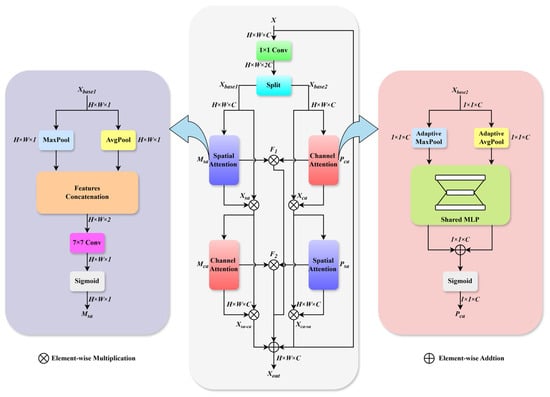

2.3. Parallel Hybrid Attention Module (PHAM)

Current standalone attention mechanisms, like spatial or channel attention, present clear limitations. Spatial attention effectively captures pixel-position relationships; however, this comes at the cost of ignoring feature variations in the channel dimension. Conversely, while channel attention excels at facilitating information exchange between channels, it often fails to accurately capture localized spatial details.

To address this issue, we design a parallel hybrid attention module (PHAM), as illustrated in Figure 4. The PHAM consists of two parallel branches, each comprising both spatial and channel attention units. These components are interconnected to enhance the correlations between spatial and channel dimensions, thereby improving noise suppression and detail preservation. Simultaneously, a residual connection is incorporated to preserve the fundamental features and mitigate detail loss during infrared image denoising.

Figure 4.

The network structure of PHAM.

As illustrated in Figure 4, the input feature map $X$ is first processed by a 1 × 1 convolution that adjusts the channel dimension to 2C, producing base features for the attention mechanisms. These features are split into two parallel branches, $X_a$ and $X_b$, for dual-attention interaction. The module employs two distinct pathways: a spatially guided channel attention path and a channel-guided spatial attention path. The former prioritizes preserving spatial structural information, whereas the latter highlights inter-channel feature differences, so the two paths generate distinct intermediate representations. Finally, a cross-fusion operation lets the two branches complement each other, enhancing both spatial and channel features.

In the spatially guided channel attention path, the branch features $X_a$ undergo max pooling and average pooling along the channel dimension to aggregate spatial information. The pooled maps are concatenated, processed by a 7 × 7 convolution, and passed through a sigmoid activation to generate the spatial attention weights $M_s$:

$$M_s = \mathrm{Sigmoid}\big(\mathrm{Conv}_{7\times7}([\mathrm{MaxPool}(X_a);\ \mathrm{AvgPool}(X_a)])\big)$$

where $[\cdot;\cdot]$ denotes concatenation. These weights are applied to the input features by element-wise multiplication to produce the spatially enhanced features $X_a^s$:

$$X_a^s = M_s \odot X_a$$

The spatially enhanced features then enter a channel attention module. This module applies adaptive max pooling (AMP) and adaptive average pooling (AAP), followed by a shared multilayer perceptron (MLP). The MLP outputs are summed and activated by a sigmoid to generate the channel attention weights $M_c$:

$$M_c = \mathrm{Sigmoid}\big(\mathrm{MLP}(\mathrm{AMP}(X_a^s)) + \mathrm{MLP}(\mathrm{AAP}(X_a^s))\big)$$

The output of this path, $Y_a$, is obtained by element-wise multiplication of $M_c$ and $X_a^s$:

$$Y_a = M_c \odot X_a^s$$

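In the complementary channel-guided spatial attention path, the branch features $X_b$ are first processed by channel attention to generate the weights $M_c'$. These weights are applied to obtain the channel-enhanced features $X_b^c$:

$$M_c' = \mathrm{Sigmoid}\big(\mathrm{MLP}(\mathrm{AMP}(X_b)) + \mathrm{MLP}(\mathrm{AAP}(X_b))\big), \qquad X_b^c = M_c' \odot X_b$$

These features then undergo spatial attention (max and average pooling along the channel dimension, a 7 × 7 convolution, and a sigmoid) to generate the weights $M_s'$. The output of this path, $Y_b$, is obtained by element-wise multiplication of $M_s'$ and $X_b^c$:

$$M_s' = \mathrm{Sigmoid}\big(\mathrm{Conv}_{7\times7}([\mathrm{MaxPool}(X_b^c);\ \mathrm{AvgPool}(X_b^c)])\big), \qquad Y_b = M_s' \odot X_b^c$$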
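The two attention paths can be sketched in NumPy as follows. The layer details are assumptions modeled on the common CBAM-style design rather than taken from the paper:

- adaptive pooling is taken to a 1 × 1 output, i.e., global pooling;
- the shared MLP has a ReLU hidden layer with reduction ratio r = 4;
- each path has its own weights;
- the parameter names are hypothetical.

The hybrid features F1 and F2 are omitted because the text does not specify how they are formed.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def conv2d_same(x, w):
    """Cross-correlate x (C_in, H, W) with w (C_in, k, k), zero 'same' padding -> (H, W)."""
    _, h, wd = x.shape
    p = w.shape[-1] // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((h, wd))
    for i in range(w.shape[-1]):
        for j in range(w.shape[-1]):
            out += np.einsum("c,chw->hw", w[:, i, j], xp[:, i:i + h, j:j + wd])
    return out

def spatial_weights(x, w7):
    """Max- and average-pool along channels, concatenate, 7x7 conv, sigmoid -> (1, H, W)."""
    pooled = np.stack([x.max(axis=0), x.mean(axis=0)])  # (2, H, W)
    return sigmoid(conv2d_same(pooled, w7))[None]

def channel_weights(x, w1, w2):
    """Global max/average pooling, shared MLP, sum, sigmoid -> (C, 1, 1)."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU hidden layer (assumed)
    return sigmoid(mlp(x.max(axis=(1, 2))) + mlp(x.mean(axis=(1, 2))))[:, None, None]

def pham_paths(xa, xb, p):
    # Spatially guided channel attention: spatial weights first, then channel weights.
    xa_s = spatial_weights(xa, p["w7_a"]) * xa
    ya = channel_weights(xa_s, p["w1_a"], p["w2_a"]) * xa_s
    # Channel-guided spatial attention: channel weights first, then spatial weights.
    xb_c = channel_weights(xb, p["w1_b"], p["w2_b"]) * xb
    yb = spatial_weights(xb_c, p["w7_b"]) * xb_c
    return ya, yb

c, r = 8, 4
rng = np.random.default_rng(0)
params = {
    "w7_a": rng.standard_normal((2, 7, 7)) * 0.1,
    "w7_b": rng.standard_normal((2, 7, 7)) * 0.1,
    "w1_a": rng.standard_normal((c // r, c)) * 0.1,
    "w2_a": rng.standard_normal((c, c // r)) * 0.1,
    "w1_b": rng.standard_normal((c // r, c)) * 0.1,
    "w2_b": rng.standard_normal((c, c // r)) * 0.1,
}
xa, xb = rng.standard_normal((2, c, 16, 16))  # the two branches after the channel split
ya, yb = pham_paths(xa, xb, params)
summed = ya + yb  # element-wise sum of the two path outputs
```

Both weight maps lie in (0, 1), so each path can only attenuate its input. The residual connections in the module are what keep the fundamental features from being suppressed.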

The outputs of both pathways are summed element-wise and further enriched through cross-feature interactions. Specifically, two hybrid features, F1 and F2, are generated to capture complementary attention patterns.

The final output features are obtained by fusing five components through residual connections: the original input X, the output of the spatially guided channel attention path, the output of the channel-guided spatial attention path, and the two hybrid features F1 and F2.

3. Experiments and Results

3.1. Dataset

The experiments employ the public IR700 dataset [21], acquired with a long-wave infrared telescope. It contains images of diverse subjects such as people, vehicles, roads, and buildings. The images have high resolution and relatively clear content, which makes them suitable as clean references for the experiments. The dataset is randomly divided into a training set (600 images) and a test set (100 images).

To verify the generalization performance of the model, four infrared image datasets covering different scenes are used as extended test sets: IR100 [22], Flir [23], ESPOL FIR [24], and DLS-NUC-100 [25]. They contain 100, 50, 101, and 100 images, respectively.

3.2. Experimental Settings

To facilitate comparison with existing image denoising methods, simulated infrared noisy images are used for training and testing. Zero-mean Gaussian noise with standard deviation σ = 15, 25, or 50 is added to the original images to simulate infrared noise of varying intensity. Training and testing all compared models at these noise levels allows their robustness under different noise conditions to be evaluated comprehensively while keeping the results comparable. All methods were trained with the same strategy and run independently three times with three different random seeds to assess the stability of the results. Performance is reported as the mean and standard deviation over the three runs. Differences between methods were assessed with a two-tailed t-test at significance level α = 0.05 with df = 2 degrees of freedom.
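The noise simulation can be sketched as follows. We assume two details the paper does not state: σ is expressed on the 0–255 gray-level scale, and the noisy image is not clipped, as is common in synthetic denoising benchmarks. The function name is hypothetical.

```python
import numpy as np

def add_gaussian_noise(clean, sigma, rng):
    """Return clean + N(0, sigma^2) noise, computed in float64 on the 0-255 scale.

    No clipping is applied (an assumption; some pipelines clip to [0, 255]).
    """
    clean = clean.astype(np.float64)
    return clean + rng.normal(loc=0.0, scale=sigma, size=clean.shape)

rng = np.random.default_rng(42)
clean = np.full((256, 256), 128, dtype=np.uint8)  # flat toy image
noisy = {sigma: add_gaussian_noise(clean, sigma, rng) for sigma in (15, 25, 50)}
```

If images are normalized to [0, 1] before training, the equivalent standard deviation is σ / 255.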

The WTEIDM adopts a four-level encoder–decoder architecture. The four WTTransformer modules in the encoder (E1, E2, E3, E4) contain 4, 6, 6, and 8 blocks, respectively. The three WTTransformer modules in the decoder (D1, D2, D3) contain 4, 6, and 6 blocks, respectively. The WTTransformer module R in the final feature refinement stage contains 4 blocks. Across the four levels, the number of heads in the multi-head self-attention of WTSA is set to 1, 2, 4, and 8, respectively, and the head count in module R is set to 1.

During training, image patches of 64 × 64 pixels are randomly cropped from the training set and processed in mini-batches of 8 samples. Data augmentation is performed by horizontal flipping and random rotation by 90°, 180°, or 270°. The Charbonnier loss is minimized with the Adam optimizer. The batch size is set to 32, and training runs for 3000 epochs.
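A NumPy sketch of the loss and the paired augmentation follows. The Charbonnier loss is the mean of sqrt((x − y)² + ε²). Three details are our assumptions: the value ε = 1e-3, the inclusion of the unrotated case among the rotations, and the function names. The same crop, flip, and rotation must be applied to the noisy input and its clean target so that the pair stays aligned.

```python
import numpy as np

def charbonnier_loss(pred, target, eps=1e-3):
    """Mean of sqrt((pred - target)^2 + eps^2), a smooth approximation of the L1 loss."""
    diff = pred - target
    return float(np.mean(np.sqrt(diff * diff + eps * eps)))

def random_crop_pair(noisy, clean, size, rng):
    """Crop the same size x size window from both images."""
    h, w = clean.shape[-2:]
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    window = (..., slice(top, top + size), slice(left, left + size))
    return noisy[window], clean[window]

def augment_pair(noisy, clean, rng):
    """Apply the same random horizontal flip and multiple-of-90-degree rotation to both."""
    if rng.random() < 0.5:
        noisy, clean = np.flip(noisy, axis=-1), np.flip(clean, axis=-1)
    k = int(rng.integers(0, 4))  # 0, 90, 180 or 270 degrees
    return np.rot90(noisy, k, axes=(-2, -1)), np.rot90(clean, k, axes=(-2, -1))

rng = np.random.default_rng(0)
clean = rng.uniform(0, 255, size=(128, 128))
noisy = clean + rng.normal(0, 25, size=clean.shape)
n_patch, c_patch = augment_pair(*random_crop_pair(noisy, clean, 64, rng), rng)
loss = charbonnier_loss(n_patch, c_patch)
```

Near zero error the Charbonnier loss behaves quadratically. For large errors it grows linearly like L1, which makes it less sensitive to outlier pixels than an L2 loss.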

The experiments are conducted on a Windows 11 system equipped with an NVIDIA GeForce RTX 4090 GPU (24 GB VRAM) and an Intel Core i9-13900K CPU @ 3.00 GHz. The software environment is built on Python 3.9, PyTorch 2.0.0, and CUDA 11.8.

3.3. Evaluation Indicators

To validate and evaluate the performance of the model, this study adopts two evaluation indicators widely used in the field of image denoising: peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM).

PSNR measures the fidelity of a processed image to its reference. It computes, in decibels, the ratio of the squared maximum grayscale value to the mean squared error (MSE) between the original and processed images. A higher PSNR indicates that the denoised image is closer to the original. The formula is as follows:

$$\mathrm{MSE} = \frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n}\big(I(i,j) - I'(i,j)\big)^2, \qquad \mathrm{PSNR} = 10\log_{10}\frac{\mathrm{MAX}^2}{\mathrm{MSE}}$$

where m and n represent the number of rows and columns of the image, I denotes the original image, I′ denotes the denoised image, and MAX is the maximum grayscale value of the image.
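The PSNR formula translates directly into code. The sketch below assumes 8-bit images (MAX = 255) and averages the squared error over all pixels; the function name is ours.

```python
import numpy as np

def psnr(original, denoised, max_val=255.0):
    """PSNR in dB: 10 * log10(MAX^2 / MSE), with MSE averaged over all m x n pixels."""
    diff = original.astype(np.float64) - denoised.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))

ref = np.full((8, 8), 100.0)
print(psnr(ref, ref + 1.0))  # uniform error of one gray level
```

A uniform error of one gray level gives MSE = 1 and therefore PSNR = 20·log10(255) ≈ 48.13 dB. For unclipped Gaussian noise the MSE is about σ². The noisy inputs at σ = 15, 25, and 50 therefore start at roughly 24.6, 20.2, and 14.2 dB.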

SSIM measures the structural similarity of two images by comparing their luminance, contrast, and structure in local regions. Its value lies in [−1, 1] and, for natural images, usually between 0 and 1; values closer to 1 indicate greater similarity between the two images. The calculation formula is as follows:

$$\mathrm{SSIM}(I, I') = \frac{(2\mu_I\mu_{I'} + C_1)(2\sigma_{II'} + C_2)}{(\mu_I^2 + \mu_{I'}^2 + C_1)(\sigma_I^2 + \sigma_{I'}^2 + C_2)}$$

where $\mu_I$ and $\mu_{I'}$ are the means of the original and denoised images, $\sigma_I^2$ and $\sigma_{I'}^2$ are their variances, $\sigma_{II'}$ is the covariance between them, and $C_1$, $C_2$ are small constants that stabilize the division.
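For illustration, the SSIM expression can be evaluated on whole-image statistics, as in the NumPy sketch below. This is a simplification. The standard metric evaluates the same expression in local windows (typically an 11 × 11 Gaussian window with standard deviation 1.5) and averages the resulting map, so values from this sketch will differ from library implementations. C1 = (0.01·MAX)² and C2 = (0.03·MAX)² are the constants commonly used with SSIM. The function name is ours.

```python
import numpy as np

def ssim_global(x, y, max_val=255.0):
    """SSIM computed from whole-image statistics (a single window).

    The standard metric applies this expression in local windows and
    averages the resulting SSIM map.
    """
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * max_val) ** 2
    c2 = (0.03 * max_val) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)

rng = np.random.default_rng(0)
img = rng.uniform(0, 255, (32, 32))
noisy = img + rng.normal(0, 25, img.shape)
score = ssim_global(img, noisy)  # below 1 because the noise lowers the structural similarity
```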

3.4. Ablation Experiments

To validate the contribution of each module in the WTEIDM to infrared image denoising performance, we adopt a traditional Transformer architecture as the baseline (BASIC) and train it on the IR700 dataset with Gaussian noise at σ = 15. Experiments are conducted on five test sets: IR700_test, IR100, Flir, ESPOL FIR, and DLS-NUC-100. The statistical significance of the results was assessed by calculating p-values in comparison with the BASIC baseline, and the detailed results are summarized in Table 1.
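The df = 2 quoted in Section 3.2 is what a paired t-test over three seed-matched runs yields (n − 1 = 2). We assume that pairing, which the text does not state explicitly. Under that assumption the test can be sketched in plain Python. For two degrees of freedom the Student t CDF has the closed form F(t) = 1/2 + t / (2·sqrt(2 + t²)), so no statistics library is needed. The function name is hypothetical, and the run values in the example are made up for illustration, not results from Table 1.

```python
import math

def paired_t_test_df2(a, b):
    """Two-tailed paired t-test for exactly three seed-matched runs (df = 2).

    a, b: metric values (e.g. PSNR) of two methods, paired by random seed.
    Uses the closed-form Student-t CDF for 2 degrees of freedom,
    F(t) = 1/2 + t / (2 * sqrt(2 + t^2)), so p = 1 - |t| / sqrt(2 + t^2).
    """
    if len(a) != 3 or len(b) != 3:
        raise ValueError("this sketch assumes exactly three paired runs (df = 2)")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    sd = math.sqrt(sum((d - mean) ** 2 for d in diffs) / (n - 1))  # sample SD
    if sd == 0:
        raise ValueError("paired differences have zero variance")
    t = mean / (sd / math.sqrt(n))
    p = 1 - abs(t) / math.sqrt(2 + t * t)
    return t, p

# Hypothetical PSNR values (dB) for three seeds; not results from the paper.
t, p = paired_t_test_df2([36.10, 36.14, 36.12], [36.02, 36.05, 36.04])
```

With only three runs, |t| must exceed about 4.30 for p < 0.05. The test therefore rewards gains that are consistent across seeds, not merely large on average.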

Table 1.

Ablation experiment with Gaussian noise σ of 15 (mean ± SD; p-values).

The experimental results show that introducing the WTSA module improves PSNR by approximately 0.09 dB and SSIM by 0.0025. These gains are statistically significant (p < 0.05) on all test sets, indicating that the module is effective for noise reduction and feature preservation. Adding the MSGLU module yields larger gains, with PSNR increasing by 0.21 dB and SSIM by 0.0058 on average, all highly significant (p < 0.01). Notably, the SSIM improvements reach 0.0074 and 0.0087 on the IR700_test and DLS-NUC-100 test sets, respectively, underscoring the module’s ability to strengthen feature processing and refine denoising. Finally, the complete model combining WTSA, MSGLU, and PHAM achieves the best performance. It surpasses the BASIC baseline by average margins of 0.31 dB in PSNR and 0.0088 in SSIM, with all improvements highly significant (p < 0.01). This result highlights the strength of PHAM in spatial-channel feature fusion and detail enhancement, which effectively alleviates detail loss.

Furthermore, an ablation study on the placement of PHAM is conducted with two configurations. Encoder-PHAM inserts the module after the three WTTransformer modules (E1, E2, E3) in the encoder. Decoder-PHAM places it after the three WTTransformer modules (D1, D2, D3) in the decoder. The results are presented in Table 2.

Table 2.

The ablation experiment results of PHAM at different positions (mean ± SD; p-values vs. BASIC baseline in parentheses).

The results show that deploying PHAM at each of the tested locations improves performance, although the extent of the improvement depends on the position. Averaged over the five test sets, the Encoder-PHAM configuration increases PSNR by approximately 0.02 dB and SSIM by 0.0006. The Decoder-PHAM configuration yields larger gains of about 0.05 dB in PSNR and 0.0015 in SSIM. Neither improvement, however, is statistically significant relative to the baseline (p > 0.05). In contrast, the proposed WTEIDM, which places PHAM between the encoder and decoder, achieves the largest enhancement: an average improvement of roughly 0.06 dB in PSNR and 0.0018 in SSIM, both statistically significant (p < 0.05).

These findings suggest that PHAM can enhance feature refinement at various locations, but its efficacy is closely tied to its structural position. When placed between the encoder and decoder, the module can fully exploit its capability for cross-level information fusion and feature enhancement, thereby improving the overall denoising performance of the model on infrared images.

To verify the choice of 3 × 3 and 5 × 5 depth-wise convolution kernels in the MSGLU module, we conducted ablation experiments comparing different kernel combinations, using WTSA + PHAM as the baseline model. Four combinations were investigated: (1 × 1, 3 × 3), (3 × 3, 5 × 5), (5 × 5, 7 × 7), and (3 × 3, 7 × 7). The results are detailed in Table 3.

Table 3.

The ablation experiment results of Kernel Size Configuration in the MSGLU Module (mean ± SD; p-values).

The experimental results indicate that the performance of the MSGLU module is significantly affected by the kernel size combination, and the proposed (3 × 3, 5 × 5) configuration performs best. Averaged over the five test sets, the (1 × 1, 3 × 3) combination decreased PSNR by approximately 0.09 dB and SSIM by 0.0033 compared with the baseline, and these differences are statistically significant (p < 0.05). This decline can be attributed to the limited receptive field of the 1 × 1 kernel, which cannot extract spatial dependencies between pixels and thus limits feature representation. In contrast, the (5 × 5, 7 × 7) and (3 × 3, 7 × 7) combinations showed no statistically significant difference from the baseline (p > 0.1). Although the 7 × 7 kernel expands the receptive field, it introduces excessive background noise and redundant information, increasing computational cost without providing discriminative feature enhancement. The proposed (3 × 3, 5 × 5) combination improved the average PSNR and SSIM by approximately 0.06 dB and 0.0024, respectively, and these improvements are highly significant (p < 0.01). In this configuration, the 3 × 3 kernel captures local fine-grained structures such as edges and textures, while the 5 × 5 kernel models broader contextual information. Together they achieve an effective balance between detail preservation and contextual modeling.
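The cost remark can be quantified with simple arithmetic. A k × k depth-wise convolution has k² weights per channel (bias ignored) and performs k² multiply-accumulates per pixel and channel. The two-branch combinations therefore compare as follows per channel:

$$
\begin{aligned}
(1\times1,\ 3\times3)&:\ 1 + 9 = 10\\
(3\times3,\ 5\times5)&:\ 9 + 25 = 34\\
(3\times3,\ 7\times7)&:\ 9 + 49 = 58\\
(5\times5,\ 7\times7)&:\ 25 + 49 = 74
\end{aligned}
$$

Relative to the proposed (3 × 3, 5 × 5) pair, the two 7 × 7 variants cost about 1.7× and 2.2× as much in the depth-wise layers. This is consistent with the computational-cost argument made for the 7 × 7 kernel.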

These results support selecting the combination of 3 × 3 and 5 × 5 convolution kernels in the MSGLU module. This configuration balances feature extraction capability, detail retention, and computational efficiency, which is important for further improving the denoising performance of WTEIDM.
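The cost side of this kernel-size trade-off can be estimated directly. For a depth-wise convolution with C channels and a k × k kernel, the weight count is C·k² (plus C biases), and the multiply-accumulate (MAC) count on an H × W feature map at stride 1 is H·W·C·k². The sketch below tabulates the four configurations in Table 3 for an assumed C = 48 and a 128 × 128 feature map; these sizes are illustrative and are not taken from WTEIDM.

```python
def depthwise_cost(channels, k, height, width, bias=True):
    """Weights and multiply-accumulates of one k x k depth-wise conv layer."""
    params = channels * k * k + (channels if bias else 0)
    macs = height * width * channels * k * k  # stride 1, 'same' padding
    return params, macs


def pair_cost(channels, kernels, height, width):
    """Total cost of parallel depth-wise branches, one per kernel size."""
    costs = [depthwise_cost(channels, k, height, width) for k in kernels]
    return sum(p for p, _ in costs), sum(m for _, m in costs)


if __name__ == "__main__":
    C, H, W = 48, 128, 128  # hypothetical sizes, not WTEIDM's actual configuration
    for pair in [(1, 3), (3, 5), (5, 7), (3, 7)]:
        params, macs = pair_cost(C, pair, H, W)
        print(f"{pair}: {params} params, {macs / 1e6:.1f} M MACs")
```

Under these assumed sizes the script reports 576, 1728, 3648, and 2880 parameters (7.9, 26.7, 58.2, and 45.6 M MACs), so the (5 × 5, 7 × 7) pair costs about 2.2 times the (3 × 3, 5 × 5) pair (74 vs. 34 weights per channel). FLOPs are often reported as 2 × MACs, although some profiling tools report MACs under the name FLOPs.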

3.5. Comparative Experiments

To comprehensively evaluate the proposed WTEIDM, we compare it against several state-of-the-art image denoising algorithms: DnCNN [26], DRUNet [27], MemNet [28], MWCNN [29], and IDTransformer [30]. To ensure a fair comparison, all methods are trained under an identical protocol on the IR700 dataset with additive Gaussian noise (σ = 15, 25, and 50) and then tested on five infrared test sets (IR700_test, IR100, Flir, ESPOL FIR, and DLS-NUC-100). Statistical significance is assessed for each method against IDTransformer. The p-values and detailed results are summarized in Table 4.
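The noisy inputs described above are obtained by adding zero-mean Gaussian noise of standard deviation σ to clean images, and PSNR is then computed against the clean reference. A minimal standard-library sketch follows. It assumes 8-bit intensities (peak value 255), that σ is expressed on that same scale, and that noisy values may optionally be clipped to [0, 255]; whether the original pipeline clips is not stated. The function names are hypothetical.

```python
import math
import random


def add_gaussian_noise(img, sigma, seed=0, clip=True):
    """Add zero-mean Gaussian noise with standard deviation sigma (0-255 scale)."""
    rng = random.Random(seed)  # seeded for reproducible noisy/clean pairs
    noisy = []
    for row in img:
        new_row = []
        for v in row:
            n = v + rng.gauss(0.0, sigma)
            if clip:
                n = min(255.0, max(0.0, n))  # keep values in the 8-bit range
            new_row.append(n)
        noisy.append(new_row)
    return noisy


def psnr(ref, test, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak**2 / MSE)."""
    sq_err = [(r - t) ** 2 for r_row, t_row in zip(ref, test) for r, t in zip(r_row, t_row)]
    mse = sum(sq_err) / len(sq_err)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    clean = [[128.0] * 64 for _ in range(64)]
    for sigma in (15, 25, 50):
        noisy = add_gaussian_noise(clean, sigma, clip=False)
        # roughly 20 * log10(255 / sigma) for unclipped noise
        print(sigma, round(psnr(clean, noisy), 2))
```

Without clipping, the PSNR of the noisy input is close to 20·log10(255/σ), that is, about 24.6, 20.2, and 14.2 dB for σ = 15, 25, and 50; clipping raises it slightly at σ = 50.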

Table 4.

Comparative results of different methods (mean ± SD; p-values).

As the results show, the proposed WTEIDM achieves the best performance at all noise levels and on all test sets. Compared with the second-best model, IDTransformer, WTEIDM shows consistent improvements. At σ = 15, it yields average gains of 0.05 dB in PSNR and 0.0023 in SSIM, which are statistically significant (p < 0.05) on most test sets. At σ = 25, PSNR improves by approximately 0.06 dB and SSIM by approximately 0.0014; although these gains lie near the conventional significance threshold (p ≈ 0.05–0.06), their consistently positive direction is encouraging and warrants further validation in larger or more complex scenarios. At σ = 50, the advantage is largest, with an average PSNR increase of 0.16 dB and an SSIM improvement of 0.0035, all statistically significant (p < 0.02). Notably, WTEIDM maintains a stable lead across all test sets, demonstrating strong generalization capability and robustness.
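The section does not state which test produced the p-values. As one illustration, assuming per-image PSNR (or SSIM) values are paired between WTEIDM and IDTransformer on the same test images, a two-sided paired sign-flip permutation test can be computed exactly for small samples with the standard library. This is a swapped-in technique for illustration (a paired t-test or Wilcoxon signed-rank test are common alternatives), and the values in the usage example are synthetic, not results from Table 4.

```python
import itertools
import random


def paired_sign_flip_test(a, b, exact_limit=16, n_resamples=10_000, seed=0):
    """Two-sided paired sign-flip permutation test on the mean difference.

    Under the null hypothesis, each paired difference a[i] - b[i] is equally
    likely to have either sign. Returns the p-value.
    """
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty paired samples of equal length")
    diffs = [x - y for x, y in zip(a, b)]
    observed = abs(sum(diffs))  # |sum| is equivalent to |mean| for fixed n
    tol = 1e-12  # guard against floating-point ties
    if len(diffs) <= exact_limit:
        # exact: enumerate all 2**n sign assignments
        hits = total = 0
        for signs in itertools.product((1, -1), repeat=len(diffs)):
            stat = abs(sum(s * d for s, d in zip(signs, diffs)))
            hits += stat >= observed - tol
            total += 1
        return hits / total
    # Monte Carlo approximation for larger n
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_resamples):
        stat = abs(sum(d if rng.random() < 0.5 else -d for d in diffs))
        hits += stat >= observed - tol
    return (hits + 1) / (n_resamples + 1)


if __name__ == "__main__":
    # synthetic per-image PSNR values, for illustration only
    method_a = [30.12, 29.87, 31.05, 28.90, 30.44, 29.61]
    method_b = [30.05, 29.80, 30.99, 28.88, 30.37, 29.60]
    print(paired_sign_flip_test(method_a, method_b))
```

All six synthetic differences are positive, so only the all-positive and all-negative sign assignments reach the observed statistic and the exact p-value is 2/2⁶ = 0.03125. In general, with n pairs the smallest attainable exact p-value is 2/2ⁿ, so per-image pairs give far more resolution than a handful of dataset averages.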

To evaluate the computational complexity and storage requirements of each model, we measured the number of parameters, floating-point operations (FLOPs), inference speed (frames per second, FPS), and model weight size for all models trained on the IR700 dataset at noise level σ = 15. The results are summarized in Table 5.
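The measurement protocol for FPS is not described here. A common approach, sketched below under that assumption, counts parameters from the weight-tensor shapes and times inference by running a few untimed warm-up passes, then timing a fixed number of passes with a monotonic high-resolution clock. The model is a hypothetical stand-in callable, and the layer shapes are illustrative. On a GPU, one would also synchronize the device before reading the clock.

```python
import time


def count_parameters(shapes):
    """Total number of learnable values given a list of weight-tensor shapes."""
    total = 0
    for shape in shapes:
        n = 1
        for dim in shape:
            n *= dim
        total += n
    return total


def measure_fps(infer, sample, warmup=5, runs=50):
    """Average forward passes per second of `infer` on one input sample."""
    for _ in range(warmup):  # untimed passes (caches, lazy initialisation)
        infer(sample)
    start = time.perf_counter()  # monotonic, high-resolution clock
    for _ in range(runs):
        infer(sample)
    elapsed = time.perf_counter() - start
    return runs / elapsed if elapsed > 0 else float("inf")


def dummy_model(img):
    """Hypothetical stand-in for a denoising network."""
    return [v * 0.5 for row in img for v in row]


if __name__ == "__main__":
    # hypothetical shapes: 3x3 and 5x5 depth-wise convs with 48 channels, plus biases
    shapes = [(48, 1, 3, 3), (48,), (48, 1, 5, 5), (48,)]
    print(count_parameters(shapes))  # prints 1728
    image = [[1.0] * 256 for _ in range(256)]
    print(f"{measure_fps(dummy_model, image):.1f} FPS")
```

The FPS value depends on hardware, input resolution, and batch size, so such figures are only comparable when all models are timed under the same conditions.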

Table 5.

A comprehensive comparison of different methods in terms of computational efficiency and resource consumption.

From an efficiency perspective, the proposed WTEIDM maintains a compact architecture with moderate computational and run-time cost. Compared with the BASIC baseline, it has a lighter configuration and faster inference, indicating that the design improves restoration performance without increasing the deployment burden. Relative to IDTransformer, WTEIDM adds only modest complexity while maintaining comparable inference speed, suggesting that the performance gains come at limited extra cost.

In summary, the proposed WTEIDM consistently outperforms existing advanced methods on quantitative metrics, with statistically significant improvements in most settings that support its effectiveness for infrared image denoising. It also achieves a favorable balance among denoising quality, computational efficiency, and resource consumption. We attribute this balance to each core component contributing performance gains at controlled overhead, which makes the overall model well suited for practical deployment.

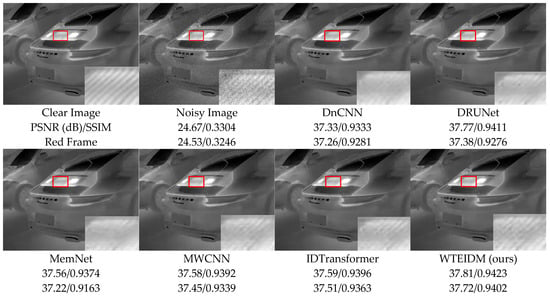

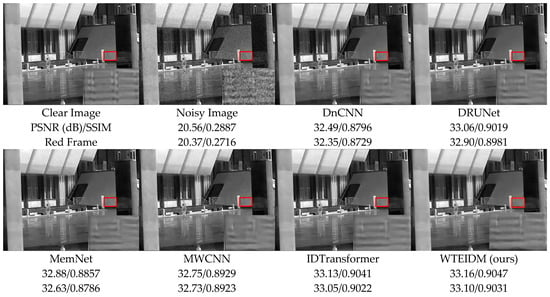

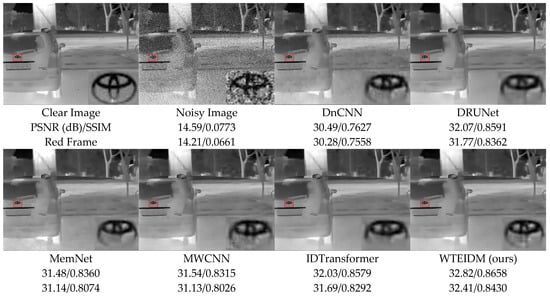

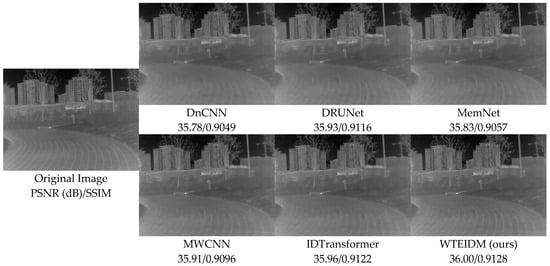

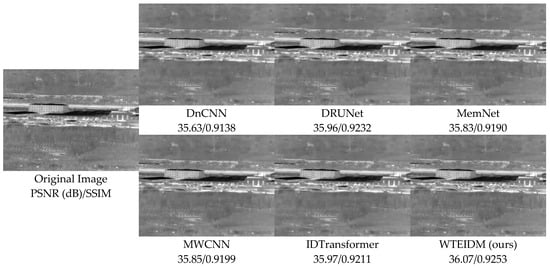

3.6. Visualization of Experimental Results and Analysis

To further assess the infrared denoising performance of WTEIDM, we present visual comparisons on test images at different noise levels in Figures 5–7. From left to right, each figure shows the original image, the noisy image, and the results of DnCNN, DRUNet, MemNet, MWCNN, IDTransformer, and the proposed WTEIDM. A red box marks a local region in each image, and a magnified view of that region appears in the lower-right corner for detailed comparison. The PSNR and SSIM values of both the full image and the red-boxed region are given below each result to quantify local detail preservation.
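The local scores for the red-boxed regions can be obtained by cropping the same box from the reference and the denoised result before computing the metric. A minimal sketch follows, assuming the box is given as (top, left, height, width) in pixel coordinates and that the magnified inset uses nearest-neighbour upsampling (the interpolation used for the figures is not stated). Only PSNR is shown; SSIM would be computed on the same crops. All function names are hypothetical.

```python
import math


def crop(img, top, left, height, width):
    """Extract a rectangular region given in pixel coordinates."""
    return [row[left:left + width] for row in img[top:top + height]]


def psnr(ref, test, peak=255.0):
    """PSNR in dB over all pixels of two equally sized images."""
    sq_err = [(r - t) ** 2 for rr, tr in zip(ref, test) for r, t in zip(rr, tr)]
    mse = sum(sq_err) / len(sq_err)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


def region_psnr(ref, test, box):
    """PSNR restricted to box = (top, left, height, width)."""
    top, left, height, width = box
    return psnr(crop(ref, top, left, height, width),
                crop(test, top, left, height, width))


def magnify_nearest(img, factor):
    """Nearest-neighbour upsampling, e.g. for a magnified inset."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


if __name__ == "__main__":
    ref = [[0.0] * 8 for _ in range(8)]
    test = [row[:] for row in ref]
    test[0][0] = 255.0  # one error pixel outside the box
    print(region_psnr(ref, test, (4, 4, 2, 2)))  # prints inf: box is error-free
    print(round(psnr(ref, test), 2))  # prints 18.06 for the full image
```

The toy example shows why full-image and regional scores can diverge: an error outside the box lowers the full-image PSNR but leaves the regional score untouched.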

Figure 5.

Comparison of denoising results on image 37.png from the DLS-NUC-100 test dataset at noise level σ = 15. Red boxes: magnified regions for detail comparison.

Figure 6.

Comparison of denoising results on image 2.png from the IR700 test dataset at noise level σ = 25. Red boxes: magnified regions for detail comparison.

Figure 7.

Comparison of denoising results on image 000982.png from the IR100 test dataset at noise level σ = 50. Red boxes: magnified regions for detail comparison.

Figures 5–7 present visual comparisons of denoising results on different test images at noise levels σ = 15, 25, and 50, respectively. In Figure 5, the red box highlights the ventilation grille at the rear of a vehicle. Whereas most competing algorithms leave shadow artifacts or blurred details after denoising, WTEIDM suppresses noise while better preserving the original structure, demonstrating superior detail retention. In Figure 6, the red box marks a railing. Although IDTransformer achieves a reasonable balance between noise removal and feature preservation, WTEIDM surpasses it in both noise suppression and structural integrity, recovering the railing details more completely. In Figure 7, the logo region shows that WTEIDM preserves contours and fine details more effectively than the other methods.

Overall, these results indicate that WTEIDM consistently restores key details under varying noise conditions, substantially improving the quality of infrared image denoising.

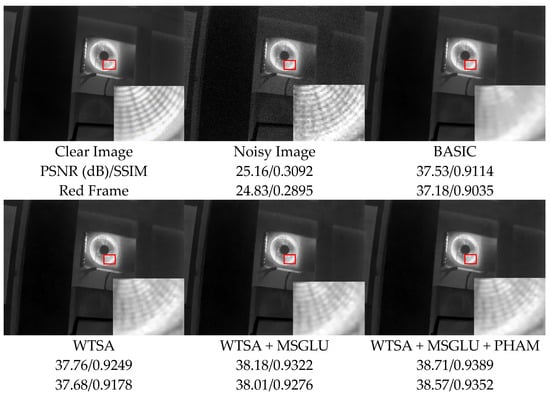

We also visualize the ablation results on sample 34.png from the DLS-NUC-100 test set to verify the contribution of each core module, as shown in Figure 8. To highlight structural details, the red-boxed region in each image is magnified for comparison.

Figure 8.

Ablation comparison of denoising results on image 34.png from the DLS-NUC-100 test dataset at noise level σ = 15. Red boxes: magnified regions for detail comparison.

The visualization shows that the noisy image is severely degraded, with its structural and textural information heavily blurred. Although the BASIC model achieves preliminary denoising, noise remains in some local areas and edge details are not fully restored. Adding the WTSA module markedly improves denoising quality, enhancing detail restoration and structural clarity, which supports the effectiveness of WTSA in separating noise from detail features. Introducing the MSGLU module further improves noise reduction, producing clearer edge contours and textures and alleviating the detail blurring caused by insufficient feature modulation. Finally, the complete model integrating WTSA, MSGLU, and PHAM yields the best visual restoration, suppressing residual noise while reconstructing fine structural details in a more natural and clearer manner.
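The separation of noise and detail attributed to WTSA builds on the discrete wavelet transform. As an illustration only (the wavelet family and decomposition depth used in WTSA are not given in this section), a single-level orthonormal 2D Haar DWT splits an image into one low-frequency sub-band (LL) and three high-frequency sub-bands (LH, HL, HH). Smooth content concentrates in LL, edges appear in the directional sub-bands, and white noise spreads its energy evenly across all four, which is what makes sub-band-wise weighting useful. The sketch assumes even image dimensions; sub-band naming conventions differ between libraries.

```python
def haar_dwt2(img):
    """Single-level orthonormal 2D Haar DWT of an even-sized image.

    Returns (LL, LH, HL, HH), each half the height and width of the input.
    """
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("image height and width must be even")
    ll, lh, hl, hh = [], [], [], []
    for i in range(0, h, 2):
        rows = ([], [], [], [])
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]          # top-left, top-right
            c, d = img[i + 1][j], img[i + 1][j + 1]  # bottom-left, bottom-right
            rows[0].append((a + b + c + d) / 2.0)  # LL: local average
            rows[1].append((a + b - c - d) / 2.0)  # LH: top-vs-bottom difference
            rows[2].append((a - b + c - d) / 2.0)  # HL: left-vs-right difference
            rows[3].append((a - b - c + d) / 2.0)  # HH: diagonal difference
        for band, row in zip((ll, lh, hl, hh), rows):
            band.append(row)
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    out = []
    for r_ll, r_lh, r_hl, r_hh in zip(ll, lh, hl, hh):
        top, bottom = [], []
        for s, v, u, x in zip(r_ll, r_lh, r_hl, r_hh):
            top += [(s + v + u + x) / 2.0, (s + v - u - x) / 2.0]
            bottom += [(s - v + u - x) / 2.0, (s - v - u + x) / 2.0]
        out += [top, bottom]
    return out
```

Because the transform is orthonormal, the total energy (sum of squares) is preserved and `haar_idwt2` recovers the input exactly; a constant patch produces zeros in LH, HL, and HH.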

These visualizations are consistent with the quantitative analysis in Section 3.4 and together support the complementary roles of the core modules: WTSA performs an initial separation of noise and detail features, MSGLU strengthens the extraction of multi-scale detail information, and PHAM promotes feature fusion across the spatial and channel dimensions. Together, they achieve a better balance between noise suppression and structural detail preservation.
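The exact PHAM architecture is not given in this section, so the following is only a hypothetical illustration of the general idea of parallel channel and spatial attention. It computes one weight per channel (sigmoid of global average pooling) and one weight per pixel (sigmoid of the channel mean) independently from the same input, then fuses them multiplicatively so that every element is scaled by its channel weight times its spatial weight. Real attention modules use learned projections (for example, MLPs or convolutions), which are omitted here, and this sketch does not reproduce PHAM's cross-interaction design. The feature map is a list of channels, each an H × W list of rows.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(x):
    """One weight per channel: sigmoid of the channel's global average."""
    return [sigmoid(sum(map(sum, ch)) / (len(ch) * len(ch[0]))) for ch in x]


def spatial_attention(x):
    """One weight per pixel: sigmoid of the mean over channels at that pixel."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    return [[sigmoid(sum(x[k][i][j] for k in range(c)) / c) for j in range(w)]
            for i in range(h)]


def parallel_hybrid_attention(x):
    """Compute both maps from the same input, then apply their product jointly."""
    ca = channel_attention(x)  # branch 1: channel weights
    sa = spatial_attention(x)  # branch 2: spatial weights, independent of branch 1
    return [[[v * ca[k] * sa[i][j] for j, v in enumerate(row)]
             for i, row in enumerate(ch)]
            for k, ch in enumerate(x)]
```

Computing both maps from the same input (rather than feeding one branch's output into the other) is what makes the branches parallel; the multiplicative fusion is one simple choice among several, such as summing the two reweighted features.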

To validate the generalization ability and robustness of WTEIDM on real-world noisy infrared images, we conducted supplementary experiments on the public IRSTD-1k dataset [31]. Figure 9 and Figure 10 show the denoising results of different methods on samples XDU228.png and XDU1000.png, respectively, under real noise conditions. Even with real noise, WTEIDM achieves the best PSNR and SSIM and clearly outperforms the other methods in preserving structural integrity and texture details. Specifically, in Figure 9, WTEIDM restores runway stripes and ground textures particularly well, and in Figure 10 it better preserves the overall contours and local details of the wooded area. These results show that WTEIDM remains effective at suppressing noise and preserving detail under the mixed and structured noise found in real scenes, further supporting its robustness and generalization ability in practical applications.

Figure 9.

Comparison of denoising results of various methods on image XDU228.png from the IRSTD-1k dataset.

Figure 10.

Comparison of denoising results of various methods on image XDU1000.png from the IRSTD-1k dataset.

4. Limitations

Although WTEIDM achieves a favorable efficiency–performance trade-off, there is still room for improvement in model compactness and deployment cost. As summarized in Table 5, WTEIDM (18.79 M parameters and 14.20 G FLOPs) is notably lighter than the BASIC baseline, but it is still less compact than some lightweight CNN-based alternatives. Moreover, compared with IDTransformer, WTEIDM introduces additional computational overhead in terms of parameters and FLOPs, which may be a consideration under strict edge-device budgets. Furthermore, in a small number of challenging cases, the denoised results may exhibit slight over-smoothing or minor residual noise, indicating that detail preservation in complex scenes or regions could be further improved.

In future work, we will pursue more deployment-friendly designs by developing efficient attention and parameter-sharing strategies, along with implementation-level optimization, to further reduce parameters, FLOPs, and memory footprint while maintaining denoising quality. We will also explore model compression and acceleration techniques, such as pruning, quantization, and knowledge distillation, to enable real-time inference on resource-constrained infrared devices. To better handle challenging samples, we plan to incorporate structure-aware supervision (e.g., edge/gradient constraints) and refine multi-scale feature modulation, enabling more faithful preservation of weak textures and subtle thermal boundaries. Furthermore, we will extend training and evaluation to more realistic infrared degradations and sensor-dependent noise patterns, and investigate noise-aware adaptation to improve generalization in practical imaging scenarios.

5. Conclusions

This paper proposes a Wavelet Transform Enhanced Infrared Denoising Model (WTEIDM). The model incorporates a Wavelet Transform Self-Attention (WTSA) mechanism, which separates noise and detail components in the frequency domain and enhances the perception of key features. A Multi-Scale Gated Linear Unit (MSGLU) enriches feature representation and enables adaptive feature modulation through dual-branch multi-scale depth-wise convolution and a gating mechanism. In addition, a Parallel Hybrid Attention Module (PHAM) strengthens cross-dimensional feature fusion and detail retention through the complementary interaction of parallel spatial and channel attention paths. Extensive experiments on five infrared datasets under different noise levels demonstrate that the proposed method achieves superior PSNR and SSIM compared with existing denoising methods.

Author Contributions

Conceptualization, H.L. and Y.Z.; methodology, L.Y.; software, H.Z. and L.Y.; validation, Y.Z., L.Y. and H.Z.; formal analysis, L.Y.; investigation, H.Z.; resources, H.L.; data curation, Y.Z.; writing—original draft preparation, H.L.; writing—review and editing, Y.Z. and L.Y.; visualization, H.Z.; supervision, H.L. and Y.Z.; project administration, H.L. and Y.Z.; funding acquisition, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yin, Z.; Liu, S.; Tong, X. Infrared image denoising algorithm based on domain adaptation. Laser Infrared 2023, 53, 449–456.

- Ou, W.; Wan, L. Infrared image denoising based on double density complex wavelet and coefficient correlation. Opt. Tech. 2023, 49, 238–244.

- Han, H. Improved non-local means filtering algorithm for infrared images in NSCT domain. Infrared Technol. 2015, 37, 34–38.

- Gu, D. Bilateral weighted median filter for removing impulse noise in infrared image. Chin. J. Sens. Actuators 2024, 37, 492–498.

- Hao, J.; Du, Y.; Wang, S.; Ren, J. Infrared image enhancement algorithm based on wavelet transform and improved bilateral filtering. Infrared Technol. 2024, 46, 1051–1059.

- Zhai, P.; Wang, P. Application of adaptive Wiener filter in molten steel infrared image denoising. Infrared Technol. 2021, 43, 665–669.

- Liu, G.; Gong, Y.; Zhang, H.; Liang, H. Infrared spectrum denoising algorithm based on wavelet transform optimization EEMD combined with SG. Infrared Technol. 2024, 46, 1453–1458.

- Wu, J.; Niu, H.; Zhang, H.; Xu, J. A hybrid Fourier-wavelet method for infrared image denoising of cable porcelain terminal based on wavelet coefficient GSM model. Electr. Meas. Instrum. 2018, 55, 113–117.

- Buades, A.; Coll, B.; Morel, J. Non-local means denoising. Image Process. Line 2011, 1, 208–212.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

- Arnim, G.; Aditi, P.; Aishwarya, J.; Satya, N.T.; Sachin, C.; Praful, H.; Akshay, D.; Santosh, V.; Subrahamanyam, M. Pureformer: Transformer-Based Image Denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2025), Nashville, TN, USA, 10–17 June 2025; pp. 1441–1449.

- Li, J.; Zhang, Z.; Zuo, W. Rethinking Transformer-Based Blind-Spot Network for Self-Supervised Image Denoising. Proc. AAAI Conf. Artif. Intell. 2025, 39, 4788–4796.

- Jin, Z.; Qiu, Y.; Zhang, K.; Li, H.; Luo, W. MB-TaylorFormer V2: Improved Multi-Branch Linear Transformer Expanded by Taylor Formula for Image Restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 5990–6005.

- Zhang, K.; Li, R.; Yu, Y.; Luo, W.; Li, C. Deep Dense Multi-Scale Network for Snow Removal Using Semantic and Depth Priors. IEEE Trans. Image Process. 2021, 30, 7419–7431.

- Li, Z.; Luo, S.; Chen, M.; Wu, H.; Wang, T.; Cheng, L. Infrared thermal imaging denoising method based on second-order channel attention mechanism. Infrared Phys. Technol. 2021, 116, 103789.

- Zhang, Z.; Zheng, W.; Ma, Z.; Yin, L.; Xie, M.; Wu, Y. Infrared star image denoising using regions with deep reinforcement learning. Infrared Phys. Technol. 2021, 117, 103819.

- Hu, X.; Luo, S.; He, C.; Wu, W.; Wu, H. Infrared thermal image denoising with symmetric multi-scale sampling network. Infrared Phys. Technol. 2023, 134, 104909.

- Yang, P.; Wu, H.; Cheng, L.; Luo, S. Infrared image denoising via adversarial learning with multi-level feature attention network. Infrared Phys. Technol. 2023, 128, 104527.

- Wu, W.; Dong, X.; Li, R.; Chen, H.; Cheng, L. SwinDenoising: A Local and Global Feature Fusion Algorithm for Infrared Image Denoising. Mathematics 2024, 12, 2968.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Zou, Y.; Zhang, L.; Liu, C.; Wang, B.; Hu, Y.; Chen, Q. Super-resolution reconstruction of infrared images based on a convolutional neural network with skip connections. Opt. Lasers Eng. 2021, 146, 106717.

- Huang, Y.; Jiang, Z.; Lan, R.; Zhang, S.; Pi, K. Infrared image super-resolution via transfer learning and PSRGAN. IEEE Signal Process. Lett. 2021, 28, 982–986.

- Rivadeneira, R.E.; Sappa, A.D.; Vintimilla, B.X. Thermal image super-resolution: A novel architecture and dataset. In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Application (VISIGRAPP 2020), Valletta, Malta, 27–29 February 2020; pp. 111–119.

- Rivadeneira, R.E.; Suárez, P.L.; Sappa, A.D.; Vintimilla, B.X. Thermal Image SuperResolution Through Deep Convolutional Neural Network. In Proceedings of the International Conference on Image Analysis and Recognition (ICIAR 2019), Waterloo, ON, Canada, 27–29 August 2019; pp. 417–426.

- He, Z.; Cao, Y.; Dong, Y.; Yang, J.; Cao, Y.; Tisse, C. Single-image-based nonuniformity correction of uncooled long-wave infrared detectors: A deep-learning approach. Appl. Opt. 2018, 57, D155–D164.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Zhang, K.; Li, Y.; Zuo, W.; Zhang, L.; Van Gool, L.; Timofte, R. Plug-and-play image restoration with deep denoiser prior. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6360–6376.

- Tai, Y.; Yang, J.; Liu, X.; Xu, C. MemNet: A persistent memory network for image restoration. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2017), Venice, Italy, 22–29 October 2017; pp. 4539–4547.

- Liu, P.; Zhang, H.; Zhang, K.; Lin, L.; Zuo, W. Multi-level wavelet-CNN for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR 2018), Salt Lake City, UT, USA, 18–22 June 2018; pp. 773–782.

- Shen, Z.; Qin, F.; Ge, R.; Wang, C.; Zhang, K.; Huang, J. IDTransformer: Infrared image denoising method based on convolutional transposed self-attention. Alex. Eng. J. 2025, 110, 310–321.

- Zhang, M.; Zhang, R.; Yang, Y.; Bai, H.; Zhang, J.; Guo, J. ISNet: Shape Matters for Infrared Small Target Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2022), New Orleans, LA, USA, 18–24 June 2022; pp. 877–886.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.