Abstract

As a crucial component of aero-engines, the accurate measurement of the motion parameters of the vector nozzle is of great significance for thrust vector control and flight performance improvement. This paper designs a dual-bearing rotating nozzle to simulate the motion of a real vector nozzle and a multi-view vision platform specifically for measuring the motion parameters of the vector nozzle. To address the parameter measurement problem, this paper proposes a technical solution of “3D reconstruction-point cloud segmentation-fitting measurement”. First, a deep learning-based multi-view stereo vision method is used to reconstruct the nozzle model in 3D. Then, the point transformer method is used to specifically extract the point cloud of the nozzle end face region. Finally, the RANSAC method is used to fit a spatial circle to the end face point cloud for measurement purposes. This paper verifies the effectiveness of the proposed method through multiple sets of comparative experiments and evaluates the reliability and stability of the measurement system using analysis of variance in measurement system analysis. The final analysis results show that the and NDC of the measurement system are 2.81% and 7.69%, respectively, and 1.84%, 50, 18, and 76, respectively, indicating that the measurement system has high accuracy and stability.

1. Introduction

Vectoring nozzles are an important component of aero-engines [1,2,3], and their main function is to guide and accelerate the airflow ejected from the engine to generate thrust. The magnitude and direction of thrust are controlled by changing the nozzle opening and angle. This not only improves the aircraft’s maneuverability at high angles of attack and low speeds, but also helps pilots perform many difficult and tactically valuable maneuvers, significantly increasing the aircraft’s tactical flexibility and flight stability. To ensure the control accuracy and reliability of vectoring nozzles under complex operating conditions, non-contact measurement of their geometric parameters (such as deflection angle and opening) has become a core requirement in design verification, health monitoring, and closed-loop control [4,5].

In the field of vector nozzle geometric parameter measurement, existing measurement methods mainly include: contact sensor/measurement platform-based [6], geometric modeling based on laser ranging or structured light scanning [7], and indirect inversion methods combining geometric modeling and CFD/pressure measurement [8,9]. Zhang et al. [10] used a laser tracker to perform static geometric measurement of the thrust line of a rocket engine. Although it has high accuracy, the equipment cost is high and the deployment is complicated. For vision methods, Guo et al. [11] designed a vision measurement system based on nozzle rotation axis modeling. By extracting image marker points and the spatial transformation relationship, the nozzle pitch and yaw angles are estimated. Cui et al. [12] used binocular stereo vision and the geometric constraint model to improve measurement stability, providing a new path for non-contact measurement.

Despite the progress made in visual measurement accuracy, some problems remain. Contact measurement devices can affect the movement of vector nozzles and reduce measurement accuracy under vibration conditions. Laser ranging devices are complex to deploy and susceptible to occlusion. While camera calibration methods have the advantage of being non-contact, they are not suitable for vector nozzle scenarios with sparse surface textures and complex structures. They also lack a complete 3D reconstruction process, resulting in incomplete structural perception. Furthermore, their robustness to high-frequency deformation and multi-part coordinated motion scenarios needs improvement [13,14,15]. Therefore, we must carefully consider the following issues.

- How do we use non-contact measurement methods and adapt to the dynamic changes of the nozzle at the same time? This includes the joint calibration of multiple cameras and the perception of the nozzle structure.

- How do we specifically extract the geometric parameters of the nozzle? These include the nozzle opening size, nozzle deflection angle, and azimuth angle.

In response to the above problems and research gaps, this paper proposes a “3D reconstruction-point cloud segmentation-fitting measurement” technical solution. A dual-bearing rotating nozzle is designed to simulate the motion of a real vector nozzle, and a multi-view imaging platform is built to capture multi-view images as data sources. First, the intrinsic and extrinsic parameters of the multi-view camera are estimated using the Structure-from-Motion method, and a deep learning-based multi-view stereo vision method is employed to achieve high-precision dense reconstruction of the nozzle structure, obtaining complete point cloud data. Subsequently, a Transformer-based point cloud segmentation network is used to accurately segment the end face points. Finally, the RANSAC circle fitting algorithm and spatial geometry theory are used to accurately measure the nozzle opening and deflection angle. This paper proposes a specific dynamic parameter extraction method based on multi-view vision. This method can fully perceive the nozzle structure during 3D reconstruction and ultimately specifically extract motion parameters such as nozzle opening and deflection angle. The proposed method is not limited to the measurement of motion parameters of vector nozzles, but can also be extended to other complex and movable components, such as adjustable air intakes and deflection surfaces, providing a high-precision and scalable solution for the measurement of motion parameters of other complex structures.

In summary, the main contributions of this paper are summarized as follows.

- A technical solution of “3D reconstruction–point cloud segmentation–fitting measurement” was proposed in this paper, which innovatively realizes “non-contact, no additional, and calibration-free” visual measurement of the motion geometry parameters of vector nozzles.

- An axisymmetric vector nozzle model was designed and a multi-view vision platform was built in this paper, through which the dynamic geometric parameters of the vector nozzle can be obtained by three methods: drive motor parameter calculation, laser sensor, and vision measurement, thereby achieving the measurement of the accuracy and consistency of the vision measurement method.

- AprilTag-encoded landmarks were used in this paper to address the lack of absolute scale information in the vector nozzle point cloud obtained using multi-camera stereo vision. The complex multi-camera joint calibration process was replaced, reducing the complexity of the visual measurement method and the requirements for the test environment.

2. Related Works

2.1. Thrust Vector Nozzle

Vector nozzle technology is an essential technology for aero-engines, which has greatly improved the performance of aircraft, specifically by improving the aircraft’s maneuverability and agility, reducing the aerodynamic control surfaces of the aircraft, reducing the tail fin, etc. [16,17,18]. Since the 1970s, various countries around the world have conducted extensive research on thrust vector nozzle technology. In 1973, Pratt Whitney of the United States proposed an axisymmetric convergent nozzle [19]. The nozzle can swing up and down in the pitch plane relative to the axis of rotation, thereby obtaining additional aircraft control torque. The advantages of this device are simple structure, good airtightness, and simple and reliable vector control. In 1986, Pratt Whitney of the United States designed a thrust vector nozzle with pitch, yaw and reverse thrust, namely the spherical convergent adjustment vane nozzle [20]. The pitch vector angle of the nozzle reaches ±30°, the yaw vector angle reaches ±20°, and the steering is flexible. The American GE company initially researched axisymmetric thrust vectoring nozzles that can rotate 360°—the axisymmetric vectoring nozzle (AVEN) [21]. By deflecting the expansion section of the nozzle in all directions, the thrust of the nozzle is vectored in all directions. It retains the good aerodynamic performance of the previous axisymmetric expansion nozzles, and expands the function of the expansion section in the connection, so that it can generate ultrasonic airflow and deflect the airflow direction according to the needs of the aircraft [22].

Following research, this paper designed a dual-bearing rotating nozzle model capable of simulating the angle changes and nozzle opening and closing of a vector nozzle. The nozzle model can achieve omnidirectional deflection with a vector deflection angle . and a vector azimuth angle , with a maximum nozzle end face diameter of 270 mm. A laser rangefinder and angle calculation are used to accurately obtain the actual nozzle deflection angle and opening size.

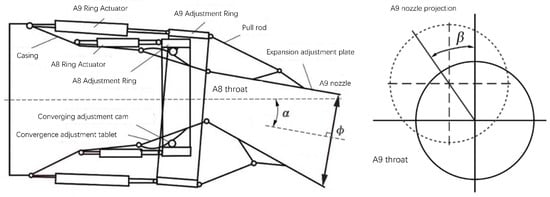

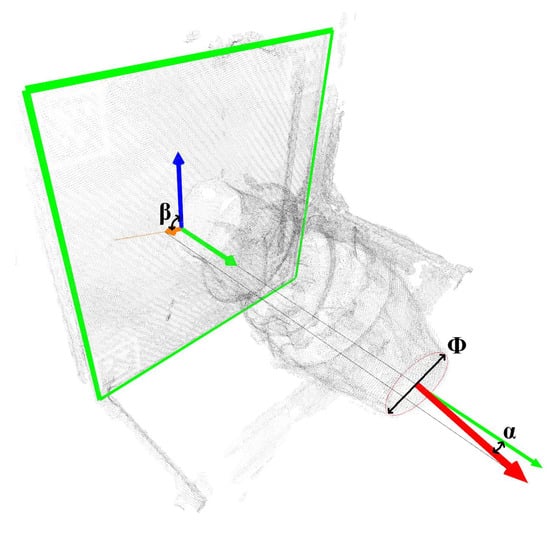

The definition of the motion parameters of the vector nozzle is shown in Figure 1. The attitude of the A9 nozzle end face in the vectoring nozzle directly controls the magnitude and direction of the aero-engine thrust vector. This attitude can be fully described by three motion parameters: nozzle diameter , vector deflection angle , and vector azimuth angle . The nozzle diameter is the diameter of the approximately circular A9 nozzle end face formed by the ends of multiple expanding vanes. The nozzle diameter directly determines the nozzle exit area, thus affecting the matching relationship between airflow velocity and mass flow rate, and controlling the engine thrust. The vector deflection angle is the angle between the normal to the A9 nozzle end face and the normal to the A8 throat surface. The vector deflection angle reflects the magnitude of the thrust vector deviating from the engine axis, i.e., the magnitude of the lateral force. As increases, the engine can generate greater lateral force and torque, assisting the aircraft in maneuvering. The thrust vector azimuth angle is the angle between the projection of the normal to the A9 nozzle end face onto the A8 throat surface and the positive pitch direction of the aircraft. It varies within the range of −180° to 180°, and its sign is determined using the right-hand rule based on the normal to the A8 throat surface. The thrust vector azimuth angle reflects the direction in which the thrust vector deviates from the engine axis, i.e., the direction of the lateral force. Assuming , when , the lateral force is upward along the pitch direction; when , the lateral force is to the left along the horizontal direction.

Figure 1.

Definition diagram of vector nozzle motion parameters.

2.2. Multi-View Stereo Vision Measurement

Multi-view stereo vision measurement technology acquires images of the object to be measured from different angles using a visual sensor [23], obtains target information from different angles, and then performs stereo matching based on a 3D model to complete the measurement task. Among them, multi-view stereo vision measurement methods are based on monocular and binocular vision and can be composed of multiple monocular or binocular systems. The basic principle of binocular stereo vision is similar to the imaging system of the human eye [24], which consists of two cameras located on the same plane, with parallel optical axes and the same height. With the development of deep learning, deep learning-based multi-view stereo vision methods have become mainstream. In 2018, Yao et al. proposed an end-to-end deep learning architecture called MVSNet [25], whose core lies in learning feature extraction, cost volume construction and depth regularization strategy. Subsequently, researchers have made many improvements to these core processes [26,27,28]. In recent years, the Transformer structure has been introduced into the field of multi-view stereo vision, such as MVSFormer [29], which achieves more effective cross-view information fusion by constructing a feature pyramid and a view attention mechanism. MVSFormer++ [30] combines a pre-trained visual Transformer (ViT) and a feature pyramid network (FPN) to demonstrate superior performance in multi-scale feature extraction and cost volume aggregation, significantly improving the structural perception and robustness of reconstruction, and is particularly suitable for reconstruction scenarios with complex backgrounds and sparse textures. However, current mainstream multi-view stereo vision methods focus on improving the accuracy of disparity estimation, rather than on the scale consistency and measurability of reconstruction results, which has limitations when facing application scenarios such as engineering measurement. To this end, this paper uses AprilTag encoding marker points [31] on the basis of high-precision dense reconstruction of multi-view stereo vision, which solves the problem that the vector point cloud obtained by multi-view stereo vision methods does not have absolute scale information. This provides a reliable foundation for subsequent point cloud segmentation and fitting measurement.

2.3. Point Cloud Segmentation

Three-dimensional point clouds are an important means of understanding spatial structure and are widely used in fields such as autonomous driving, embodied intelligence and industrial geometric measurement. According to different processing methods, existing segmentation methods are mainly divided into the following three categories: point-level methods, voxelization methods, and projection and attention methods. PointNet [32] first proposed to directly process point cloud coordinates with the multilayer perceptron, which has permutation invariance. Then, PointNet++ [33] introduced hierarchical sampling and neighborhood aggregation, which improved the local structure modeling ability. Voxelization methods convert point clouds into regular grids, which facilitates the extraction of features using standard convolution operations. For example, VoxelNet [34] converts point clouds into regular voxel grids and extracts spatial features through three-dimensional convolution. SparseConvNet [35] and MinkowskiNet [36] and other methods use sparse convolution operations to effectively reduce redundant computation. Zhao et al. [37] proposed a multi-task network with PointNet as a shared encoder and three parallel branches, and proved that the shared encoder and joint training can improve the segmentation robustness and overall preprocessing efficiency in noisy scenes. In recent years, the Transformer structure has been introduced into the field of point cloud semantic understanding. The Point Transformer series [38] has introduced an attention mechanism to significantly improve the ability to model semantic dependencies between points. GPSFormer [39] proposed the Global Perception Module (GPM) and Local Structure Fitting Convolution (LSFConv), which take into account both local geometric details and global semantic information. Based on Point Transformer, this paper uses the nozzle point cloud face as a label to create a dataset. Based on the S3DIS pre-trained weights, it fine-tunes the weights and combines them with the Focal Loss training strategy to segment the vector nozzle end face point cloud.

2.4. Fitting Measurement

Geometric fitting of three-dimensional point clouds is a key step in extracting structural parameters and achieving precise measurement. Taubin circle fitting [40] and Pratt fitting method [41] are widely used in circle/ellipse parameter estimation, but they are all circle fitting methods applied to two-dimensional planes and are not directly applicable to the fitting of circular structures in three-dimensional point clouds. RANSAC [42] is the most widely used robust fitting method, which is suitable for vector nozzle end face measurement tasks. After completing point cloud segmentation, this paper uses the RANSAC fitting algorithm to perform circle fitting on the nozzle end face and background plate, and then extracts geometric parameters such as nozzle opening and deflection angle to achieve a high-precision and robust automated measurement process.

3. Proposed Method

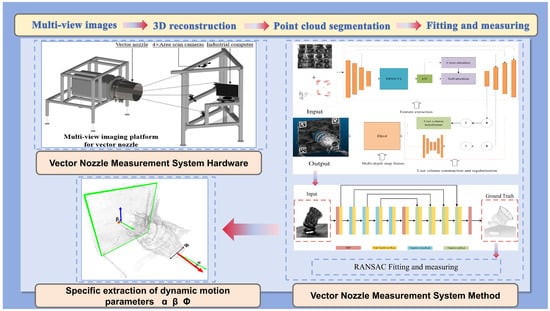

This section primarily introduces our method for measuring the motion parameters of a vector nozzle. As shown in Figure 2, firstly, this paper adopts a three-dimensional nozzle reconstruction method based on multi-view stereo vision, while introducing AprilTag visual markers as a point cloud scale reference. Subsequently, this paper uses a point Transformer point cloud segmentation network to automatically extract the nozzle end face region, and finally, based on the RANSAC fitting algorithm, achieves high-precision measurement of the vector nozzle nozzle opening, deflection angle, and azimuth angle.

Figure 2.

Overall framework diagram.

3.1. 3D Reconstruction

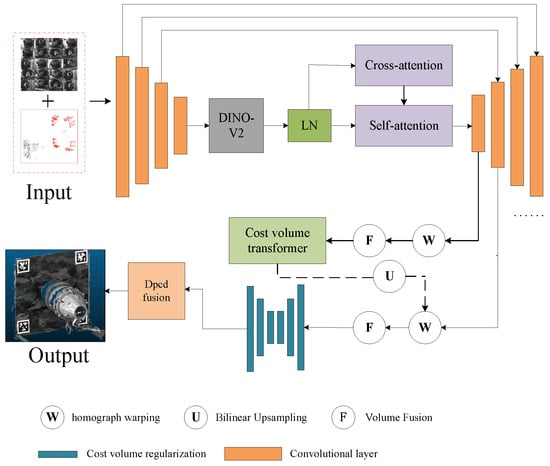

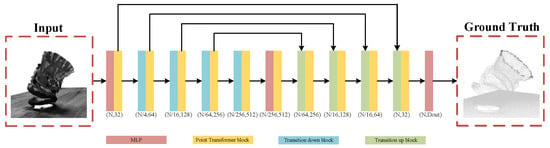

During the motion of the vector nozzle, it is essential to fully perceive the nozzle structure and the camera pose of the scene. Therefore, this paper adopts a multi-view stereo vision-based method for 3D reconstruction of the nozzle model. First, sparse reconstruction of the scene is performed using structure from motion to obtain the poses of 20 area array cameras. Based on the camera pose information, a deep learning-based multi-view stereo vision method is then used. This paper employs a transformer-based multi-view stereo vision method, as shown in Figure 3, to obtain a 3D representation of the dense point cloud of the scene.

Figure 3.

Multi-view stereo vision reconstruction network diagram.

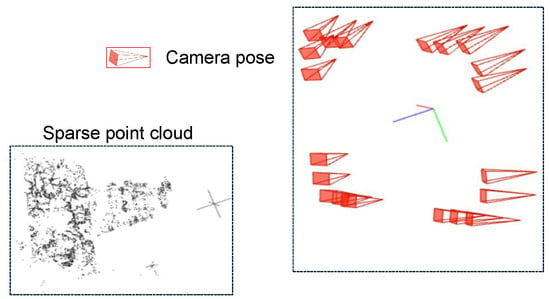

3.1.1. Structure-from-Motion

Structure-from-Motion starts with two initial views, estimates the relative camera pose through feature matching and 2D-2D geometric constraints, and then reconstructs spatial points using triangulation. New images are introduced using a 3D-2D PnP method, recursively performing point cloud expansion and camera pose solving. This paper uses an incremental Structure-from-Motion method implemented with COLMAP to calibrate 20 industrial cameras. To improve accuracy, COLMAP introduces Bundle Adjustment optimization to globally and jointly adjust the positions of all cameras and points. The final output includes the camera intrinsic and extrinsic parameters Ki, Ri, Ti, and a sparse 3D point cloud for each image. The results are shown in Figure 4. In the input, the left side represents the sparse point cloud of the nozzle scene, and the right side represents the poses of the 20 industrial cameras.

Figure 4.

Schematic diagram of SFM results.

3.1.2. Multi-View Stereo Vision

The core of the multi-view stereo vision algorithm is to first select a reference viewpoint as the baseline viewpoint based on the multi-view camera matching score obtained from Structure-from-Motion. Then, a deep learning network is used to extract features from the input image. The homography matrix Hi is used to reproject the features of the source feature image under different depth assumptions to the reference viewpoint, thus constructing the feature volume Vi from the source image to the reference image.

The cost volume is generated by averaging and averaging the feature volumes of the source views. 3DCNN is then used to regularize the cost volume, resulting in a three-dimensional probability volume representing the probability of a pixel being at depth d. To obtain the depth estimate of the reference view from the probability volume , the expectation along the depth dimension is calculated. The depth estimation formula is as follows:

The Transformer-based multi-view stereo vision framework MVSFormer++ was adopted, as shown in Figure 3. This paper provides 20 input images of size 1280 × 960, along with camera parameters provided by SFM. First, multi-scale features of the input images are extracted using FPN and DINOv2. At each scale level, based on the camera parameters, homography transformation is used to align the images to the reference view according to the assumed depth, forming an aligned feature body on a multi-scale, discrete depth assumption. Based on this, a four-dimensional vector cost volume is constructed at this scale level, with dimensions of , where and represent the height and width of the feature map at the s-th scale level; represents the number of layers in the discrete depth hypothesis at the s-th scale level; and represents the number of feature channels at the s-th scale level. Then, at the coarsest scale, the cost volume is regularized using Cost volume transformer. The four-dimensional vector cost volume is regularized to obtain a three-dimensional probability volume . Its dimension is . Finally, the expectation of the three-dimensional probability volume is calculated along the depth dimension to obtain a depth map D (1280 × 960), and the scene dense point cloud is obtained by fusing the depth maps.

3.1.3. AprilTag Scale Return

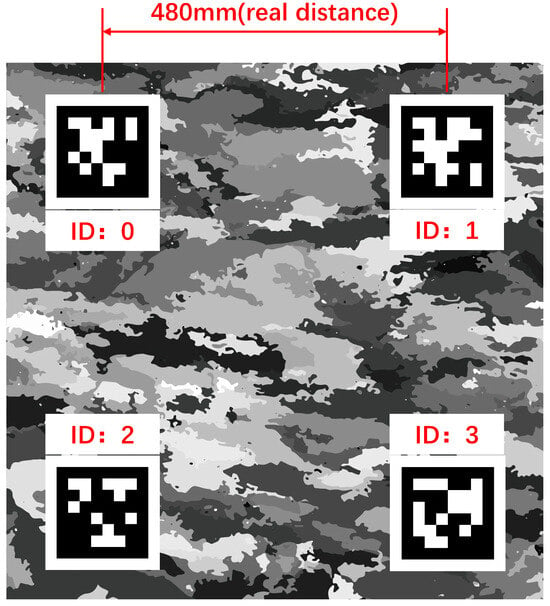

Since the reconstruction results of Structure-from-Motion and multi-view vision matching only retain relative structural information and cannot provide the true physical scale, an external scale reference needs to be introduced for recovery. In this paper, four AprilTag markers with known spacing are placed on the nozzle reference plate as shown in Figure 5, which serve as the scale reference source. At the same time, this paper takes the direction of the line connecting the centers of the markers as the zero value direction of the azimuth angle .

Figure 5.

AprilTag-coded marker diagram.

Considering the problems of occlusion and insufficient resolution in directly detecting marker positions in dense point clouds, this paper adopts an indirect strategy of image-space detection to 3D mapping to obtain the 3D position of markers in the point cloud, and then obtains the distance in the point cloud coordinate system. First, 2D detection is performed to detect AprilTag markers in multi-view 2D images. In the reconstruction pipeline of MVSFormer++, the 3D coordinates of the neighborhood points near the center points of the four AprilTags are determined by depth map fusion. Finally, the scale factor is obtained by calculating the physical distance between any two AprilTag centers and the Euclidean distance in the reconstructed AprilTag point cloud.

where Dreal is the physical distance to the center of the AprilTag; Drec is the Euclidean distance in the reconstructed point cloud of the AprilTag.

3.2. End Face Point Cloud Segmentation

To achieve a high degree of automation in the measurement process, manually screening end face points frame by frame in the scenario of vector nozzle motion is almost impractical. This paper adopts a transformer-based point cloud segmentation network, which can quickly and accurately identify the end face region in the point cloud, thereby realizing automated measurement.

3.2.1. End Face Segmentation Dataset

To improve the generalization ability of point cloud segmentation for nozzle scenes, this paper preprocesses the dense point cloud obtained by multi-view visual matching and reconstruction and creates a point cloud segmentation dataset.

The overall preprocessing workflow is based on Open3D, and the specific steps are shown in Algorithm 1: First, it is determined whether the point cloud contains color information; if the original point cloud is in RGB format, it is converted to grayscale format to unify the data channel structure and simplify the subsequent processing. Based on the scale factor calculated in the previous section, the point cloud coordinates are uniformly scaled to make the point cloud coordinate space consistent with the actual physical space, ensuring the true scale of subsequent geometric parameter estimation. Considering that multi-view stereo matching reconstruction methods are often accompanied by redundant structures and sparse point clusters, this paper uses a density statistical method to extract the main structural regions. By statistically analyzing the neighborhood density of each point, the core region with the highest point density is cropped and retained, and the background and invalid structures are initially removed. To reduce the computational burden and maintain structural uniformity, a voxel downsampling method is then used to divide the point cloud into a fixed-size voxel grid. Points within each voxel are represented by their centroids, thereby reducing the number of points while retaining spatial distribution characteristics. Finally, statistical filtering is performed to remove outliers.

The nozzle end-face segmentation dataset is designed as follows: The data type includes three different vector nozzle models, covering various opening angles and deflection angles. This paper defines the segmentation task as a binary segmentation problem, involving nozzle end-face point clouds and other background point clouds. Both the nozzle end-face and background point clouds are semantically labeled using CloudCompare. Each point cloud contains xyz coordinates and a binary label, and after the proposed 3D reconstruction process and Open3D-based point cloud post-processing, it contains an average of approximately 100,000 valid points. The dataset contains 80 point cloud scenes, with 70% used for training, 10% for testing, and 20% for validation. This dataset is specifically designed for nozzle end-face segmentation tasks and exhibits good label quality and structural generalization ability.

| Algorithm 1 Point cloud post-processing algorithm |

|

3.2.2. Point Cloud Segmentation Algorithm

This paper adopts the Point Transformer point cloud segmentation network framework, as shown in Figure 6. Specifically, the network input is an N*C tensor, where N represents the number of points in the point cloud, and C represents the feature channels. Initially, it is a six-dimensional vector containing positional (x, y, z) information and color (r, g, b) information. In the encoder stage, as the number of layers increases, the number of points N is gradually downsampled, but the number of feature channels Ci increases accordingly. In the decoder stage, through upsampling and skip connections, the number of points N is gradually restored, and the number of feature channels gradually decreases. The final output is N*K, where K is the number of segmentation categories. In this paper, the number of categories is 2, i.e., K is 2. The network as a whole adopts an Encoder–Decoder architecture, which is particularly suitable for processing nozzle structures with obvious local geometric shapes and class imbalance in point clouds. Its core attention calculation can be formally represented as

where represents the center point, and represents the neighboring points around the center point. By simultaneously modeling local neighborhood point cloud features and global dependencies through a self-attention mechanism, the spatial structure information of the point cloud is effectively captured, thereby improving the robustness and accuracy of segmentation.

Figure 6.

End Face Segmentation Network Diagram.

Since the nozzle end face points account for a relatively small proportion of the overall point cloud, significant class imbalance occurs during training. Therefore, this work employs a Focal Loss strategy:

By adjusting the class balance factor and the focusing silver , the weights of the positive and negative class point clouds can be effectively balanced, and the training can be focused on the difficult-to-classify samples. The final training backend segmentation accuracy was 0.8738, and the inversion of performance (IoU) was 0.6461.

3.3. Fitting Measurement

3.3.1. Geometric Fitting

Since the point cloud of the nozzle end face we obtained is not completely coplanar, this paper uses a two-stage RANSAC algorithm to perform spatial circle fitting on the nozzle end face.

In the first stage, RANSAC is applied to the nozzle end-face point cloud to obtain the best-fit plane . Then, the nozzle end-face point cloud P is orthogonally projected onto the fitting plane to form a planar point set , which is used for two-dimensional circle fitting in the second stage.

In the second stage, RANSAC is performed on the planar point set on the fitting plane to fit a two-dimensional circle. This yields the coordinates c of the center of the planar circle, the radius r, and the normal vector .

Meanwhile, this paper uses a single-stage RANSAC plane fitting method to obtain the plane and its normal vector from the point cloud of the reference plate region, which serves as a reference for measuring the nozzle deflection angle and azimuth angle.

3.3.2. Motion Parameter Measurement

Based on the fitting results from the previous section, the key geometric parameters of the nozzle structure can now be calculated. The nozzle opening is the diameter of the spatial circle, calculated from the radius r of the fitted circle:

The deflection angle is the angle between the nozzle normal vector and the reference plate normal vector . It is calculated using the vector angle formula:

By calculation, key parameters such as nozzle opening and deflection angle can be reliably extracted. This module serves as the key output of the entire vision measurement system, directly measuring the geometric parameters of the vector nozzle mechanism.

4. Experiment and Analysis

This section mainly introduces our measurement system, experimental methods, evaluation indicators, and measurement result analysis. To verify the effectiveness of the proposed method, this paper conducted three sets of comparative experiments to measure the motion parameters of the vector nozzle under different attitudes. Finally, we used MSA to perform quantitative analysis of the measurement results based on the established evaluation indicators, and ensured the repeatability and stability of the measurement system.

4.1. Measurement System

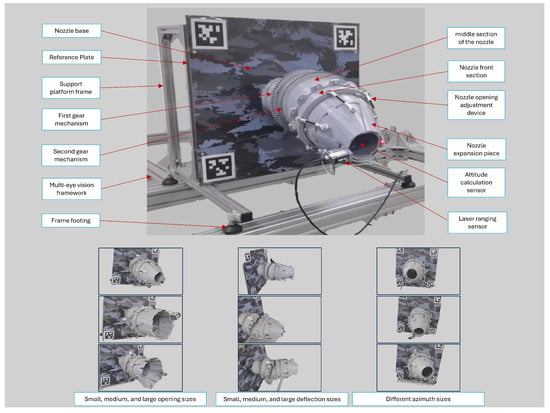

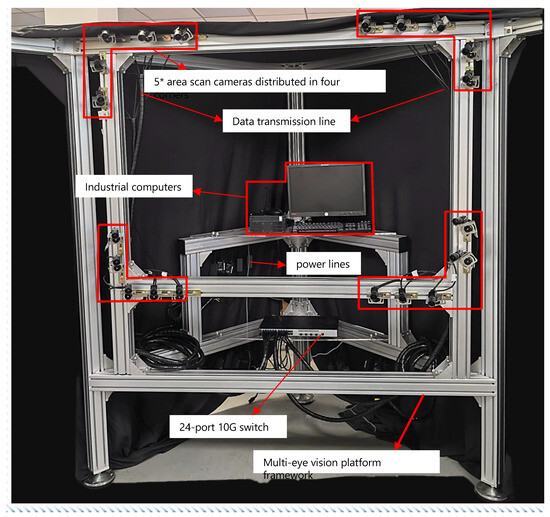

This paper constructs a dual-bearing rotating vector nozzle for simulating the accuracy test of vector nozzle motion, and designs a multi-view vision platform specifically for capturing multi-view images. The overall structure is shown in Figure 7.

Figure 7.

Measurement system structure diagram.

The structure of the vector nozzle is shown in Figure 8. The nozzle opening is adjusted by adjustment device, and the nozzle deflection is achieved by two sets of gear mechanisms. Both sets of gears are driven by DC motors. The motor, combined with a speed controller and forward/reverse and travel limit switches, can realize the forward/reverse rotation of the nozzle, speed regulation, and limit control. The nozzle deflection direction reset and zeroing are accomplished through two sets of photoelectric switch sensors and attitude calculation sensors.

Figure 8.

Schematic diagram of vector nozzle mechanism.

The nozzle opening was measured using a laser rangefinder to determine the actual nozzle diameter. To obtain the true nozzle deflection angle, this paper studied the motion of the nozzle model. Based on Figure 8, we simplified the model as shown in Figure 6, where segment BD rotates around the x-axis of the absolute coordinate system, and segment EF rotates around the axis. Through coordinate transformation and geometric characteristics, we know that the angle between and represents the nozzle deflection attitude. This paper calculates the true nozzle deflection angle using the rotation angles of two gear mechanisms. The calculation method is as follows:

where is the rotation angle of the rear gear, and is the rotation angle of the front gear.

Meanwhile, this paper placed a reference plate directly behind the nozzle model, with its plane parallel to the plane of the rear gear bearing. The entire support platform is constructed of 2020 aluminum profiles and can be used to fix the overall nozzle model and support the motor controller and power supply. Ultimately, our designed vector nozzle model can achieve omnidirectional deflection with and .

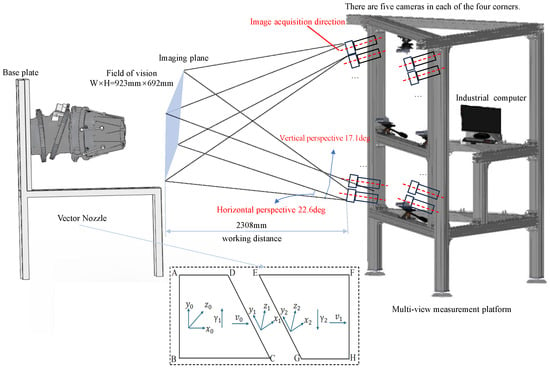

As shown in Figure 9, to achieve real-time synchronous acquisition of multi-view images of the vector nozzle, this paper built a multi-view vision platform. Considering both safety and stability, 2 mm thick 8080 European standard aluminum profiles were used as the raw material for the frame construction. The platform is equipped with 20 MV-CE013-50GM (Hangzhou Hikrobot Co., Ltd., Hangzhou, China) industrial area array cameras with MVL-MF1224M-5MPE lenses (Hangzhou Hikrobot Co., Ltd., Hangzhou, China). The cameras are stably connected to the platform via adapters, ball heads, corner brackets, and T-bolts. Simultaneously, the cameras are connected to an HIK industrial control computer via a 24-port 10 Gigabit switch (TP-Link Technologies Co., Ltd., Shenzhen, China). This paper built the imaging workflow based on Vision Master, ultimately achieving synchronous multi-view imaging.

Figure 9.

Multi-view vision platform structure diagram.

The experimental details are as follows: This experiment was conducted on a multi-view vision platform based on Vision Master, continuously acquiring multi-view images of the nozzle from 20 cameras simultaneously. First, the acquired multi-view images were input into a reconstruction algorithm framework to obtain a dense 3D point cloud model. Then, the reconstructed point cloud model was tested on a pre-trained point transformer network to obtain the nozzle end-face point cloud. Finally, the RANSAC method was used to calculate and obtain the nozzle’s motion parameters. All experimental algorithms in this paper were implemented on a PyTorch 2.0 framework within an Ubuntu 20.04 Python 3.8 environment. The measurement results were visualized as shown in Figure 10. Ultimately, the measurement requirements for vector nozzles were achieved using the proposed measurement method framework. In this diagram, the red circle represents the measured end face diameter, the orange arrow indicates the initial reference direction of the azimuth angle, and the blue arrow indicates the calculated azimuth angle direction; the angle between the two is the azimuth angle magnitude. The green arrow indicates the normal direction of the reference plate, and the red arrow indicates the normal direction of the vector nozzle end face circle; the angle between the two corresponds to the nozzle deflection angle.

Figure 10.

Visualization of measurement results diagram.

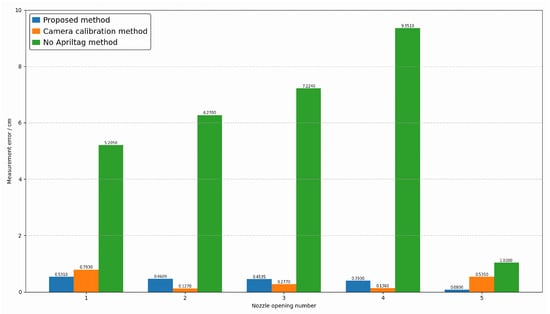

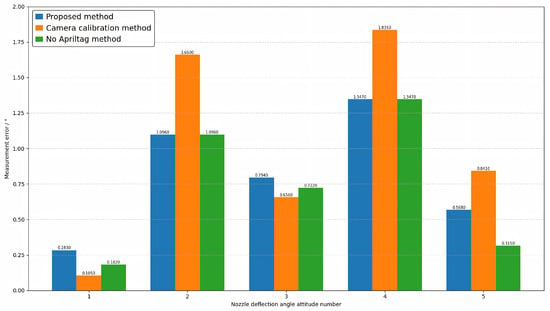

4.2. Comparative Experiment

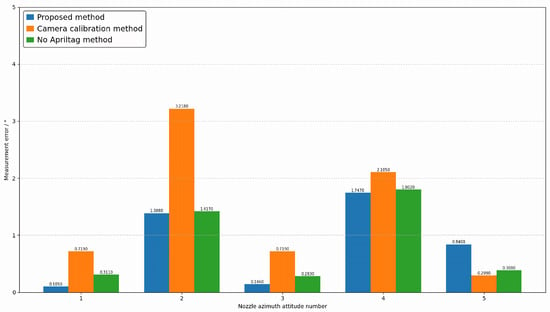

To verify the effectiveness of the proposed method, three sets of comparative experiments were designed. The first set of experiments involved direct measurement based on our proposed method framework using multi-view vision to detect marker points. The second set involved joint calibration of 20 industrial cameras, testing the proposed method under conditions based on real camera poses. The third set measures without adding markers within the framework of the method proposed in this paper. This paper conducts experiments on the attitudes of five different nozzles, including opening angle, deflection angle, and azimuth angle. The results are shown in Figure 11, Figure 12 and Figure 13, where the horizontal axis represents the number of poses, and the vertical axis represents the measurement error. Blue represents the proposed method, orange represents the camera calibration method, and green represents the method without AprilTag marker points. Experimental results show that the proposed detection method can replace the camera calibration process while maintaining measurement accuracy, and the proposed measurement method achieves measurement accuracy with relatively small errors. This enables the measurement of vector nozzle motion parameters.

Figure 11.

Vector Nozzle Opening Error Analysis Diagram.

Figure 12.

Vector Nozzle Deflection Angle Error Diagram.

Figure 13.

Vector Nozzle Azimuth Error Diagram.

4.3. Evaluation Indicators and Measurement Systems Analysis

This paper uses measurement system analysis (MSA) to evaluate the experimental results. MSA can systematically analyze the performance of measurement systems, which mainly include five key characteristics: accuracy, precision, stability, linearity, and resolution. Different analysis methods need to be selected for different measurement systems. The measurement system proposed in this paper is classified as a metrological, repeatable, and automated measurement system, and is validated using analysis of variance (ANOVA). ANOVA is a standard statistical analysis technique, with the main evaluation indicators being and NDC. According to the AIAGMSA fourth edition standard, a measurement system is acceptable when . indicates that the measurement system can distinguish differences between different measured objects.

quantitatively analyzes the contribution of different sources of variation in a measurement system by decomposing the variance of measurement data. The relationship between them can be expressed as:

The first term in the formula represents the actual variation of the measured object; the second term represents the reproducibility variation; the third term represents the repeatability variation; and the last term represents the variation caused by the interaction. The total GRR variance, total variation, and percentage variation of the measurement system can then be calculated.

NDC represents the number of valid categories that a measurement system can distinguish; it is a statistical indicator of the system’s resolution.

Its calculation formula is:

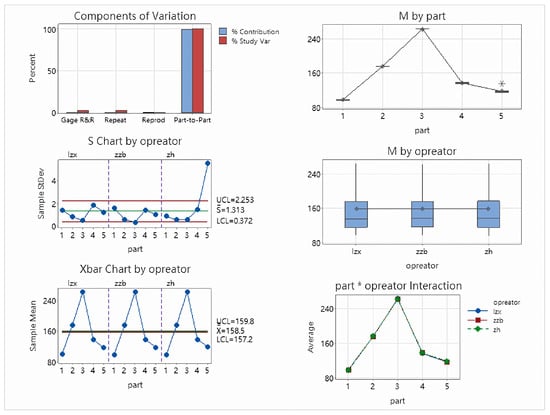

This paper uses Minitab 20 software to analyze three motion parameters of the vector nozzle: opening, deflection, and azimuth attitude. The results show that the and NDC of the measurement system are 2.81% and 7.69%, and 1.84%, 50, 18, and 76, respectively, indicating that the measurement system has high accuracy and stability. Finally, the variance analysis report for the three motion parameters was obtained, as shown in the figure below. Analysis of the X-plot and S-plot shows that all measurement points are within the control limits, indicating that the measurement system has stability and good repeatability. Furthermore, the results remain highly consistent among different operators, with no significant operator variation. The variation between measurement variables mainly originates from the variation between workpieces, and the error caused by the measuring instrument itself is very small. This demonstrates that the measurement system proposed in this paper has superior accuracy and reliability.

This paper uses Minitab software to analyze three motion parameters: nozzle opening, deflection, and azimuth attitude. This paper sets up three operators for each motion parameter and repeats the measurement of the five parameters ten times. The opening analysis table is shown in Table 1. The analysis of variance report is shown in Figure 14.

Table 1.

Analysis of variance table of opening.

Figure 14.

Opening ANOVA plot.

The opening analysis results show a Total Gage R&R of , far below the evaluation standard, indicating that the measurement system has high reliability for aperture measurement. Repeatability is and Reproducibility is , indicating minimal fluctuations in the measuring equipment and no significant differences between operators. The Part-to-Part ratio is , and the NDC is 50, demonstrating that the measurement system can effectively distinguish the true differences between different samples. Various control charts (Xbar, S, Operator interaction chart, etc.) all exhibit good stability, no out-of-limit points, and no interaction effects, further validating the effectiveness and consistency of the measurement system for aperture measurement.

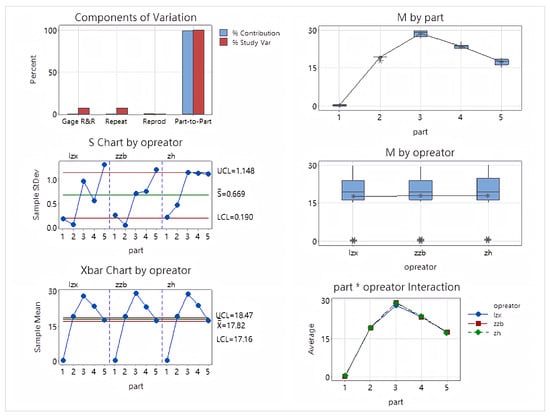

Secondly, the deflection analysis table is shown in Table 2. The analysis of variance report is shown in Figure 15.

Table 2.

Analysis of variance table of deflection.

Figure 15.

Deflection angle ANOVA plot.

The deflection analysis results showed a Total Gage R&R of , lower than the evaluation standard, indicating that the measurement system has high reliability for deflection angle measurement. Repeatability was and Reproducibility was , indicating minimal fluctuations in the measurement equipment and no significant differences between operators. The Part-to-Part ratio was , and the NDC was 18, demonstrating that the measurement system can effectively distinguish the true differences between different samples. Various control charts (Xbar, S, Operator interaction chart, etc.) all showed good stability, no out-of-limit points, and no interaction effects, further validating the effectiveness and consistency of the measurement system for deflection measurement.

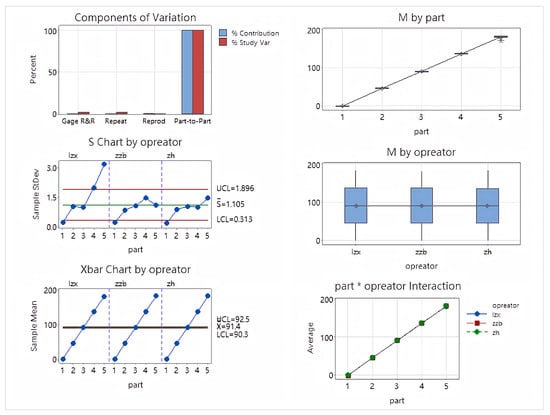

Finally, the azimuth analysis table is shown in Table 3. The analysis of variance report is shown in Figure 16.

Table 3.

Analysis of variance table of azimuth.

Figure 16.

Azimuth ANOVA plot.

The azimuth analysis results show a Total Gage R&R of , lower than the evaluation standard, indicating that the measurement system has high reliability for orientation angle measurement. Repeatability is and Reproducibility is , indicating minimal fluctuations in the measurement equipment and no significant differences between operators. The Part-to-Part ratio is , and the NDC is 76, demonstrating that the measurement system can effectively distinguish the true differences between different samples. Various control charts (Xbar, S, Operator interaction chart, etc.) all exhibit good stability, no out-of-limit points, and no interaction effects, further validating the effectiveness and consistency of the measurement system for azimuth measurement.

5. Conclusions

In this paper, a multi-view stereo vision-based system for measuring the motion parameters of a vector nozzle is proposed, which features high accuracy, a large measurement range, and good stability. This paper designed a dual-bearing rotating nozzle and built a multi-view vision platform for experimental verification. A technical scheme of ‘ 3D reconstruction—point cloud segmentation—fitting measurement’ was proposed. Experimental results show that the system can achieve high-precision measurement. Furthermore, the stability and reliability of the proposed measurement system in measuring the motion parameters of the vector nozzle were demonstrated through MSA quantitative analysis. Although our method demonstrates good accuracy and stability, it still has certain limitations. Firstly, the proposed method has a high operating cost; currently, the system can only achieve offline real-time measurement and does not yet support online real-time measurement. Secondly, the system relies on a multi-view vision platform, resulting in high deployment complexity, and further optimization is needed for practical engineering applications. Future work could focus on algorithm lightweighting or researching more compact hardware configurations to achieve online real-time measurement capabilities.

Author Contributions

Conceptualization, Z.L. and Z.Z.; methodology, Z.L.; validation, Z.L., Z.Z. and H.Z.; formal analysis, Z.L.; investigation, Z.Z.; resources, K.S., C.L., Y.Z. and Y.Y.; data curation, Z.L.; writing—original draft preparation, Z.L.; writing—review and editing, K.S.; visualization, H.Z.; supervision, K.S.; project administration, K.S., C.L., Y.Z. and Y.Y.; funding acquisition, K.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Fundamental Research Funds for the Central Universities (N2403010).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author (The data are not publicly available due to institutional policy and ongoing research confidentiality).

Acknowledgments

The authors would like to express their gratitude to colleagues who provided valuable insights and technical assistance during the course of this research.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Afridi, S.; Khan, T.A.; Shah, S.I.A.; Shams, T.A.; Mohiuddin, K.; Kukulka, D.J. Techniques of Fluidic Thrust Vectoring in Jet Engine Nozzles: A Review. Energies 2023, 16, 5721. [Google Scholar] [CrossRef]

- Catana, R.M.; Badea, G.P. Experimental Analysis on the Operating Line of Two Gas Turbine Engines by Testing with Different Exhaust Nozzle Geometries. Energies 2023, 16, 5627. [Google Scholar] [CrossRef]

- Ramraj, H.; Sundararaj, T.; Sekar, C.; Arora, R.; Kushari, A. Effect of nozzle exit area on the performance of a turbojet engine. Aerosp. Sci. Technol. 2021, 116, 106844. [Google Scholar] [CrossRef]

- Li, B.; Rui, X.; Zhou, Q. Study on simulation and experiment of control for multiple launch rocket system by computed torque method. Nonlinear Dyn. 2017, 91, 1639–1652. [Google Scholar] [CrossRef]

- Kwon, K.; Choia, H. Control of laminar vortex shedding behind a circular cylinder using splitter plates. Phys. Fluids 1996, 8, 479–485. [Google Scholar] [CrossRef]

- Ferlauto, M.; Marsilio, R. Numerical Investigation of the Dynamic Characteristics of a Dual-Throat-Nozzle for Fluidic Thrust-Vectoring. AIAA J. 2017, 55, 86–89. [Google Scholar] [CrossRef]

- Zhang, A.; Huang, J.; Sun, Z.; Duan, J.; Zhang, Y.; Shen, Y. Leakage Detection in Subway Tunnels Using 3D Point Cloud Data: Integrating Intensity and Geometric Features with XGBoost Classifier. Sensors 2025, 25, 4475. [Google Scholar] [CrossRef] [PubMed]

- Hakim, K.; Hamitouche, T.; Said, B. Numerical Simulation of Fluidic Thrust Vectoring in The Conical Nozzle. J. Adv. Res. Fluid Mech. Therm. Sci. 2024, 73, 88–105. [Google Scholar] [CrossRef]

- Acquah, R.; Misiulis, E.; Sandak, A.; Skarbalius, G.; Navakas, R.; Džiugys, A.; Sandak, J. Integrating LiDAR, Photogrammetry, and Computational Fluid Dynamics for Wind Flow Simulations Around Existing Buildings. Remote Sens. 2025, 17, 556. [Google Scholar] [CrossRef]

- Zhang, C.; Tang, W.; Li, H.P.; Wang, J.; Chen, J. Application of laser tracker to thrust line measurement of solid rocket motor. J. Solid Rocket. Technol. 2007, 30, 548–551. [Google Scholar]

- Guo, Y.; Chen, G.; Ye, D.; Yu, X.; Yuan, F. 2-DOF Angle Measurement of Rocket Nozzle with Multivision. Adv. Mech. Eng. 2013, 5, 942580. [Google Scholar] [CrossRef]

- Cui, J.; Feng, D.; Min, C.; Tian, Q. Novel method of rocket nozzle motion parameters non-contact consistency measurement based on stereo vision. Optik 2019, 195, 163049. [Google Scholar] [CrossRef]

- Soyluoğlu, M.; Orabi, R.; Hermon, S.; Bakirtzis, N. Digitizing Challenging Heritage Sites with the Use of iPhone LiDAR and Photogrammetry: The Case-Study of Sourp Magar Monastery in Cyprus. Geomatics 2025, 5, 44. [Google Scholar] [CrossRef]

- Hayashi, A.; Kochi, N.; Kodama, K.; Isobe, S.; Tanabata, T. CLCFM3: A 3D Reconstruction Algorithm Based On Photogrammetry for High-Precision Whole Plant Sensing Using All-Around Images. Sensors 2025, 25, 5829. [Google Scholar] [CrossRef]

- Xu, H.; Zeng, Y.; Qiu, M.; Wang, X. Construction of a High-Precision Underwater 3D Reconstruction System Based on Line Laser. Sensors 2025, 25, 5615. [Google Scholar] [CrossRef] [PubMed]

- Xia, X.; Sun, Z.; Hu, Y.; Qiang, H.; Zhu, Y.; Zhang, Y. Numerical Investigation of the Two-Phase Flow Characteristics of an Axisymmetric Bypass Dual-Throat Nozzle. Aerospace 2025, 12, 226. [Google Scholar] [CrossRef]

- Tao, Z.; Li, J.; Cheng, B. Key Technology of Aircraft Propulsion System—Thrust Vectoring Technology. J. Air Force Eng. Univ. 2000, 1, 86–87. [Google Scholar]

- Sieder-Katzmann, J.; Propst, M.; Stark, R.H.; Schneider, D.; General, S.; Tajmar, M.; Bach, C. Surface Pressure Measurement of Truncated, Linear Aerospike Nozzles Utilising Secondary Injection for Aerodynamic Thrust Vectoring. Aerospace 2024, 11, 507. [Google Scholar] [CrossRef]

- Zhang, R.; Xu, L.; Gong, Y.; Xia, Z. Numerical Analysis of Full-envelope Thrust Characteristics of Axisymmetric Convergent-divergent Nozzle with Single Actuating Ring. Aeroengine 2024, 50, 82–84. [Google Scholar]

- Liang, C.; Jin, B.; Li, Y. Development of Spherical Convergent Flap Nozzle. Aeroengine 2002, 3, 55–57. [Google Scholar]

- Zhang, H.; Zhang, Q.; Meng, L.; Luo, Z.; Song, H. Kinematic Modeling and Trajectory Analysis of Axisymmetric Vectoring Nozzle. Aeroengine 2024, 50, 87–89. [Google Scholar]

- Yao, S.; Luo, Z.; Zhang, H.; Xu, C.; Ji, H.; Pang, C. Research on Axisymmetric Vectoring Exhaust Nozzle Dynamic Characteristics Considering Aerodynamic and Thermal Loads Effect. J. Appl. Fluid Mech. 2025, 18, 1381–1395. [Google Scholar] [CrossRef]

- Wen, X.; Wang, J.; Zhang, G.; Niu, L. Three-Dimensional Morphology and Size Measurement of High-Temperature Metal Components Based on Machine Vision Technology: A Review. Sensors 2021, 21, 4680. [Google Scholar] [CrossRef]

- Li, T.; Liu, C.; Liu, Y.; Wang, T.; Yang, D. Binocular stereo vision calibration based on alternate adjustment algorithm. Optik 2018, 173, 13–20. [Google Scholar] [CrossRef]

- Yao, Y.; Luo, Z.; Li, S.; Fang, T.; Quan, L. MVSNet: Depth Inference for Unstructured Multi-view Stereo. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 767–783. [Google Scholar]

- Yang, J.; Mao, W.; Alvarez, J.M.; Liu, M. Cost Volume Pyramid Based Depth Inference for Multi-View Stereo. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4121–4130. [Google Scholar]

- Gu, X.; Fan, Z.; Zhu, S.; Dai, Z.; Tan, F.; Tan, P. Cascade Cost Volume for High-Resolution Multi-View Stereo and Stereo Matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2495–2504. [Google Scholar]

- Zhang, Z.; Peng, R.; Hu, Y.; Wang, R. GeoMVSNet: Learning Multi-View Stereo with Geometry Perception. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 21508–21518. [Google Scholar]

- Cao, C.; Ren, X.; Fu, Y. MVSFormer: Multi-View Stereo by Learning Robust Image Features and Temperature-based Depth. Trans. Mach. Learn. Res. TMLR 2023, 4, 1–10. Available online: https://openreview.net/forum?id=2VWR6JfwNo (accessed on 10 October 2025).

- Cao, C.; Ren, X.; Fu, Y. MVSFormer++: Revealing the Devil in Transformer’s Details for Multi-View Stereo. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 7–11 May 2024. [Google Scholar]

- Olson, E. AprilTag: A robust and flexible visual fiducial system. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 3400–3407. [Google Scholar]

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660. [Google Scholar]

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108. [Google Scholar]

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499. [Google Scholar]

- Graham, B.; Engelcke, M.; van der Maaten, L. 3D Semantic Segmentation with Submanifold Sparse Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 9224–9232. [Google Scholar]

- Choy, C.B.; Gwak, J.; Savarese, S. 4D Spatio-Temporal ConvNets: Minkowski Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3075–3084. [Google Scholar]

- Zhao, L.; Hu, Y.; Yang, X.; Dou, Z.; Kang, L. Robust multi-task learning network for complex LiDAR point cloud data preprocessing. Expert Syst. Appl. 2024, 237, 121552. [Google Scholar] [CrossRef]

- Zhao, H.; Jiang, L.; Jia, J.; Torr, P.H.S.; Koltun, V. Point Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 16259–16268. [Google Scholar]

- Wang, C.; Wu, M.; Lam, S.K.; Ning, X.; Yu, S.; Wang, R.; Srikanthan, T. GPSformer: A global perception and local structure fitting-based transformer for point cloud understanding. In Proceedings of the European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 75–92. [Google Scholar]

- Taubin, G. Estimation of Planar Curves, Surfaces, and Nonplanar Space Curves Defined by Implicit Equations with Applications to Edge and Range Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 1115–1133. [Google Scholar] [CrossRef]

- Pratt, V. Direct Least-Squares Fitting of Algebraic Surfaces. In Proceedings of the ACM SIGGRAPH Conference, Anaheim, CA, USA, 27–31 July 1987; pp. 145–152. [Google Scholar]

- Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.