Abstract

The global shift towards renewable energy is increasingly driven by the need to reduce carbon emissions and address urban energy demands sustainably. Solar power, as an accessible and efficient energy source, offers substantial potential for integration within urban environments. However, a comprehensive evaluation framework for accurately predicting the energy generation of urban solar panel installations is still lacking. Therefore, in this study, we develop a YOLO-based semantic segmentation framework to estimate the energy generation potential of existing solar panels at city scale and use the Elephant and Castle area of London as a case study. The results demonstrate that the proposed model can detect and segment solar panels in complex urban environments with an accuracy of 98.32%, and that the total area of solar panels in the designated area is 127.75 m².

1. Introduction

The increasing shortage of fossil fuel resources, coupled with rising energy demands and pollution, has accelerated the global transition toward renewable energy sources. Unlike traditional energy options, renewable sources—such as solar, wind, biomass, and geothermal—offer a flexible, environmentally friendly approach to energy generation and consumption, attracting substantial interest from both industry and academia [1,2]. Among these alternatives, solar energy stands out as one of the most practical options, thanks to its high efficiency, ease of deployment, and safety [3].

Solar power has been experiencing significant growth worldwide, with China emerging as a global leader in both the adoption and production of solar photovoltaic (PV) technology [4]. In recent years, China has made remarkable strides in expanding its solar power capacity. According to the International Renewable Energy Agency (IRENA), China’s installed solar PV capacity increased from 130.25 GW in 2017 to 392.61 GW in 2022 [5]. Meanwhile, cities consume 78% of global energy [6], and urban environments, with their extensive built spaces, present an ideal setting for PV installations. Building “solar cities” thus holds considerable potential to reduce carbon emissions and promote carbon-neutral urban infrastructures [7]. Despite these advantages, effectively harnessing solar energy at a city scale faces significant challenges. The most critical and complex challenge is to accurately assess the energy generation potential of large-scale solar deployment in urban areas [8]; such an assessment could benefit the energy planning and sustainable development of urban areas, as well as the optimization of smart grids. To address this challenge, this paper proposes a YOLO-based evaluation model that uses satellite imagery to assess solar energy generation potential at the city scale.

The remainder of the paper is structured as follows: We first review the related work in Section 2 and then introduce the satellite imagery-based solar panel area estimation framework in Section 3. Next, we present the YOLO-based semantic segmentation model in detail in Section 4. After that, we use the Elephant and Castle area of London as a case study for experiments and discuss the results in Section 5. Finally, we draw conclusions in Section 6.

2. Related Work

2.1. Solar Panel-Based Energy Harvesting

Owing to its cleanliness and usability, introducing solar power into urban areas has become an emerging research trend. For instance, the work in [9] presents an economic and environmental analysis of installing a photovoltaic noise barrier along a metro line in China; metrics such as net present value [10], pay-back period, and energy pay-back time are employed to assess the economic viability, profitability, and environmental benefits of the photovoltaic system, and the data collected on site show that the barrier can generate approximately 5000 kWh/year, with the notable social benefit of reducing pollution. The work in [11] investigates the potential of roof-mounted solar photovoltaic devices; a geographic information system (GIS)-based approach is designed to compute the usable roof area, the potential installed capacity, and the potential electricity generation, and the approximate annual electricity production on pitched roofs in Västerås, Sweden, is estimated at 801 GWh. Similarly, the study in [12] designs an analysis framework to assess the potential of employing photovoltaic systems on buildings to offset residential electricity consumption in Shenzhen, China, and the simulation results show that the total photovoltaic energy production exceeds 88% of the total local electricity demand. The research in [13] designs a solar city framework with the aid of 3D urban surface modeling and solar photovoltaic planning; the analysis shows that solar photovoltaics play a key role in urban decarbonization.

2.2. Solar Panel Segmentation Algorithms

Since accurately estimating the power capacity of solar panels is essential for effective energy planning, grid integration, and maximizing renewable energy utilization, much research has been dedicated to assessing the installed capacity of solar panels with satellite or aerial images. The Feature Pyramid Network (FPN) [14] has been widely adopted for its ability to capture multi-scale features, making it effective for semantic and instance segmentation tasks. Leveraging this capability, FPN has also become a key component in modern YOLO (You Only Look Once) models, enabling hierarchical feature fusion that enhances object localization and segmentation performance. For example, the research in [15] analyzes the characteristics and challenges of computer vision-based rooftop photovoltaic panel image segmentation with deep learning-based segmentation and clustering approaches; a comprehensive methodology is designed involving statistical, pixel-resolution, and visual feature analysis across multi-resolution datasets; the results identify that class imbalance, resolution thresholds, and homogeneous texture and heterogeneous color are the key factors that affect the performance of semantic segmentation. The work in [16] focuses on detecting defects in solar panels to ensure their safety and efficiency during long-term operation; a YOLOv5-based deep learning model is constructed and trained using an enhanced dataset with diversified solar panel defect types, combined with data augmentation and transfer learning techniques; experimental results show that the model achieves 96.5% accuracy in identifying six categories of defects, demonstrating high reliability and applicability for real-world photovoltaic inspection tasks. 
Similarly, the study in [17] detects defects in solar panels with various computer vision-based models; YOLO v5, v9, v10, and v11 are trained and tested in both thermal and optical datasets; the results show that YOLOv11-X achieves the best performance among all YOLO and classical machine learning models. Instead of focusing on rooftop photovoltaic panels, the work in [18] proposes a semantic segmentation model, namely PVNet, to extract industrial-scale photovoltaic panels composed of several arrays from high-resolution remote sensing imagery; the proposed PVNet method consists of a coarse prediction module to identify PV panel areas and a fine optimization module to improve boundary precision through residual refinement; the experimental results show that the proposed algorithm outperforms classical segmentation algorithms in key metrics such as precision and Intersection over Union (IoU). The study in [19] designs a hyperspectral solar segmentation network to enhance the low-quality solar panel satellite imagery for higher segmentation efficiency; by using histogram equalization to enhance image quality, hyperspectral synthetic decomposition to divide images into 31 spectral bands for feature selection, and replacing the conventional U-net layers with Chebyshev transformation layers, the results show that the proposed model surpasses state-of-the-art CNN (Convolutional Neural Network) and transformer models in accuracy, efficiency, and scalability. The study in [20] develops a dual-branch UNet-based framework for extracting photovoltaic panels from heterogeneous GF-2 and Sentinel-2 imagery; by incorporating spatial attention, channel attention, and a feature fusion module to address cross-sensor spatial–spectral inconsistencies, the proposed PV-UNet demonstrates clear advantages over mainstream CNN- and transformer-based segmentation models. 
The work in [21] similarly develops a detail-oriented photovoltaic segmentation network inspired by the DeepLabv3+ architecture; by enhancing multi-scale context aggregation through atrous convolution, refining boundary details with an edge-preserving module, and employing a decoder optimized for fine-grained spatial reconstruction, the proposed PV-Refiner achieves more precise delineation of solar panel regions and consistently outperforms baseline DeepLabV3+, UNet, and other commonly used segmentation models. Likewise, the work in [22] introduces a refined PSPNet for semantic segmentation of polarimetric SAR imagery in agricultural regions; through multilevel feature fusion, a polarimetric channel attention mechanism, and an edge-aware loss function, the method achieves more consistent spatial representations and surpasses widely used segmentation architectures such as PSPNet, UNet, DeepLabV3+, MP-ResNet, and DAFN. Similarly, the study in [23] proposes a transformer-based photovoltaic extraction framework tailored for heterogeneous remote sensing imagery; by leveraging global self-attention to model long-range spectral–spatial dependencies, together with multi-source normalization and contrast-enhanced feature refinement, the method effectively mitigates inter-sensor variations and exhibits stronger robustness than conventional CNN-based segmentation networks. Despite the substantial progress in solar panel segmentation, several research gaps remain to be addressed:

- Lack of an end-to-end framework for converting segmented solar panel regions into accurate area estimations. Most existing approaches focus solely on pixel-level segmentation, without providing a complete pipeline that links segmentation outputs to physical rooftop area measurements required for installed capacity estimation.

- Sensitivity to real-world imaging conditions. Shadows, occlusions, roof material diversity, panel aging, dust accumulation, and low-contrast imagery still degrade segmentation robustness across both CNN-based and transformer-based models.

- Weak boundary preservation. Thin and irregular rooftop panel edges are easily blurred or fragmented, as many current architectures do not explicitly enforce edge consistency, resulting in incomplete or imprecise boundary delineation.

To address these gaps, the present study develops a complete end-to-end pipeline for solar panel area estimation and enhances segmentation robustness by integrating attention mechanisms that improve feature discrimination under challenging imaging conditions and strengthen boundary preservation.

3. Solar Panel Area Estimation Framework

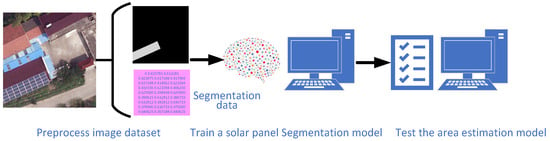

Based on the above literature review, there remains a lack of models capable of directly estimating the area of solar panels from satellite images in a pipeline manner. To address this gap, this section proposes an area estimation framework that trains a predictive model to learn the relationship between satellite imagery and the corresponding solar panel areas. The workflows can be divided into a training process and a predicting process. The diagram of the training workflow is shown in Figure 1 and is composed of three steps: (1) preprocessing the solar panel image dataset, (2) training a solar panel segmentation model, and (3) testing the model. The workflow of the predicting process is illustrated in Figure 2 and consists of four steps: (1) downloading satellite images, (2) converting them into image tiles, (3) performing semantic segmentation operations, and (4) calculating the area of solar panels.

Figure 1.

The diagram of the training workflow of the solar panel area estimation framework.

Figure 2.

The diagram of the computational workflow of the solar panel area estimation.

In the training process, we use a mixed dataset to train and validate our model; it combines a dataset collected from Jiangsu Province, China [24], with a dataset collected in France [25]. The mixed dataset contains 22,112 aerial images, each paired with labeled solar panel positions (see Figure 3). We use 70% of the data for training, 20% for validation during training to monitor overfitting, and the remaining 10% for testing.
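The 70/20/10 partition can be illustrated with a minimal sketch. The function name `split_counts` and the choice to give the test set the remainder after integer division are assumptions made for illustration; the paper does not state how rounding was handled.

```python
def split_counts(n_images, train_pct=70, val_pct=20):
    """Return (train, val, test) image counts.

    Integer division is used for the training and validation subsets,
    and the test subset takes whatever remains (an assumed convention).
    """
    n_train = n_images * train_pct // 100
    n_val = n_images * val_pct // 100
    n_test = n_images - n_train - n_val
    return n_train, n_val, n_test


counts = split_counts(22112)
print(counts)
```

Under this convention, the three subsets always sum to the full dataset size, so no image is dropped or counted twice.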

Figure 3.

Demonstration of the multi-resolution satellite imagery dataset used for the photovoltaic panel segmentation task.

On the other hand, in the predicting process, we use self-collected satellite imagery of the Elephant and Castle area in London city as a case study to test the proposed model. Firstly, we download the digital map images via the Environmental Systems Research Institute (ESRI) World Imagery service interface [26]; then, we cut each digital map image into image tiles; after that, we utilize our model to conduct solar panel segmentation and calculate the total area of all detected panels.
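The tiling step can be sketched as follows. This is only an illustration under stated assumptions: the tile size of 640 pixels, the zero-padding of edge tiles, and the helper name `cut_into_tiles` are hypothetical and are not taken from the paper.

```python
import numpy as np


def cut_into_tiles(image, tile_size):
    """Split an H x W x C array into non-overlapping square tiles.

    The image is zero-padded on the bottom and right so that both sides
    become multiples of tile_size (an assumed convention). Each entry of
    the returned list is ((top, left), tile_array).
    """
    h, w = image.shape[:2]
    pad_h = (-h) % tile_size
    pad_w = (-w) % tile_size
    padded = np.pad(image, ((0, pad_h), (0, pad_w), (0, 0)), mode="constant")
    tiles = []
    for top in range(0, padded.shape[0], tile_size):
        for left in range(0, padded.shape[1], tile_size):
            tile = padded[top:top + tile_size, left:left + tile_size]
            tiles.append(((top, left), tile))
    return tiles


# Hypothetical 1000 x 1500 RGB map image cut into 640 x 640 tiles.
image = np.zeros((1000, 1500, 3), dtype=np.uint8)
tiles = cut_into_tiles(image, tile_size=640)
print(len(tiles))
```

Because the tiles do not overlap, every source pixel belongs to exactly one tile, which is what allows per-tile pixel counts to be summed later without double-counting.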

In addition to estimating the physical surface area of photovoltaic installations, the segmentation output of the proposed framework serves as the foundation for downstream solar power forecasting. Specifically, the extracted pixel-level masks are converted into real-world PV surface area using the Web Mercator ground-resolution model, enabling the calculation of the installed PV capacity based on standard power density assumptions. This estimated capacity is subsequently integrated into an AI-aided solar power generation forecasting model, which predicts the capacity utilization factor (CUF)—defined as the ratio of the actual energy output to the theoretical maximum output under full-capacity operation. The forecasting model leverages multi-dimensional weather variables (including DHI, DNI, GHI, temperature, humidity, wind speed, and cloud cover), real-time solar radiation data, and historical CUF measurements to accurately predict CUF using a Long Short-Term Memory (LSTM) network. Finally, the expected solar electricity generation is obtained as the product of the predicted CUF and the estimated PV capacity. This two-stage area-to-energy workflow ensures that the segmented spatial information can be seamlessly transformed into actionable energy estimates, enhancing the practical applicability of the proposed framework for urban solar energy assessment and planning.
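The area-to-energy conversion described above can be sketched numerically. The power density of 0.2 kW/m², the CUF value of 0.1, and the one-year horizon are placeholder assumptions chosen only for illustration, and the function names are hypothetical. Multiplying by the number of hours is added here so that the result is an energy quantity; the product of CUF and capacity alone is an average power.

```python
def installed_capacity_kw(area_m2, power_density_kw_per_m2):
    """Installed PV capacity (kW) from panel area and an assumed power density."""
    return area_m2 * power_density_kw_per_m2


def expected_energy_kwh(capacity_kw, cuf, hours):
    """Expected energy (kWh) = capacity x capacity utilization factor x hours."""
    return capacity_kw * cuf * hours


# 127.75 m^2 is the panel area reported in the abstract.
capacity = installed_capacity_kw(127.75, power_density_kw_per_m2=0.2)  # assumed density
energy = expected_energy_kwh(capacity, cuf=0.1, hours=24 * 365)        # assumed CUF
print(capacity, energy)
```

In the full framework, the constant CUF used here would be replaced by the time-varying CUF predicted by the LSTM forecasting model.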

4. YOLO-Based Semantic Segmentation Model

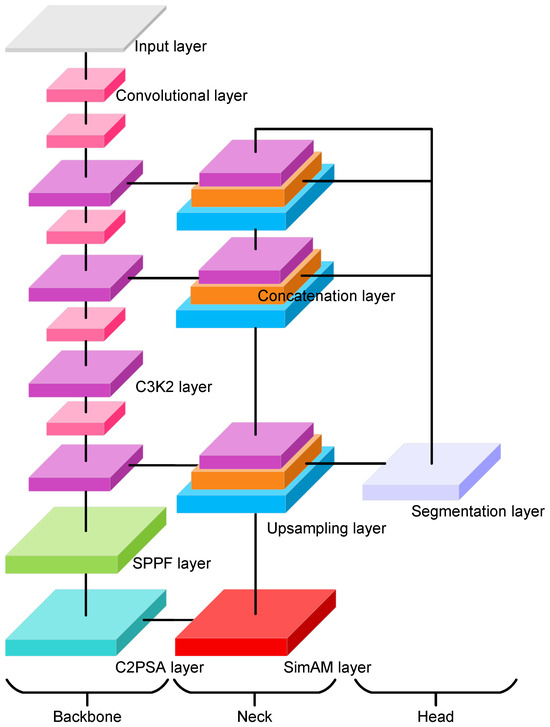

To compute the area of existing solar panels, the most critical step is to recognize and segment them. To this end, we develop a YOLO11x-seg-based [27,28] object segmentation model with an extra attention module (see Figure 4) to compute the area of solar panels within the designated area. The YOLO11x-seg model is an extension of the YOLO object detection framework, designed specifically for semantic segmentation tasks. It combines the efficiency and speed of the YOLO architecture with segmentation capabilities, enabling it to detect and segment objects simultaneously in real time. The model consists of a backbone module, a neck module, and a segmentation head module. The backbone module is a pretrained convolutional neural network that extracts feature maps from the input image; it is composed of several customized convolutional layers and C3k2 layers, an SPPF layer, and a C2PSA layer. In our modified architecture, the backbone further incorporates SimAM, a parameter-free neuron-level attention mechanism that adjusts the importance of spatial activations without introducing additional learnable parameters. The SimAM block is integrated at the end of the backbone to strengthen spatial feature discrimination, enabling the model to more effectively refine and highlight solar panel regions. The neck module refines the features from the backbone and passes them to the segmentation head. It consists of two repeated sequences of upsampling, concatenation, and C3k2 layers and two repeated sequences of convolutional, concatenation, and C3k2 layers. The segmentation head performs pixel-wise classification to output a mask in which each foreground pixel corresponds to the solar panel class; it takes multi-scale feature maps from different pyramid layers and outputs detection predictions (bounding box, objectness, and class scores) along with a 32-dimensional mask coefficient vector for each instance. In parallel, a prototype network generates 256 prototype masks, which are projected to 32 channels and then linearly combined with the instance-specific coefficients to produce the final instance masks. Table 1 summarizes the major training hyper-parameters and architectural settings adopted for the proposed model.

Figure 4.

The proposed semantic segmentation framework based on YOLO11x-seg and SimAM.

Table 1.

Hyper-parameter settings for the proposed model.

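For an input image $I$, the designed framework first employs convolutional layers to extract features of solar panels at different scales. Each convolutional layer applies a $k \times k$ convolution with stride $s$ and padding $p$ to produce $C_{out}$ output channels, followed by batch normalization to stabilize training and SiLU activation to introduce nonlinearity. In our implementation, the YOLO-based downsampling convolutions adopt the standard configuration of kernel size $k = 3$, stride $s = 2$, and padding $p = 1$, consistent with the default YOLO design for spatial reduction. The detailed equations of the convolutional layer are shown below:

$$Y_{n,c_o,i,j} = b_{c_o} + \sum_{c_i=1}^{C_{in}} \sum_{u=0}^{k_h-1} \sum_{v=0}^{k_w-1} W_{c_o,c_i,u,v}\, I_{n,\,c_i,\,i s_h + u d_h - p_h,\,j s_w + v d_w - p_w}$$

where $Y_{n,c_o,i,j}$ denotes the output feature value corresponding to the $n$-th input image at spatial position $(i, j)$ of output channel $c_o$, with $0 \le i < H_{out}$ and $0 \le j < W_{out}$. $W$ represents the convolution kernel weights, and $b$ is the bias term. Indices $c_o$ and $c_i$ refer to output and input channels, while $(u, v)$ with $0 \le u < k_h$ and $0 \le v < k_w$ denote kernel offsets. Terms $(s_h, s_w)$, $(p_h, p_w)$, and $(d_h, d_w)$ correspond to stride, padding, and dilation in the vertical and horizontal directions, respectively. The input tensor $I$ is indexed at positions $(i s_h + u d_h - p_h,\; j s_w + v d_w - p_w)$, and values outside the valid range are treated as zeros (zero padding).

The output spatial dimensions $H_{out}$ and $W_{out}$ are given by the following:

$$H_{out} = \left\lfloor \frac{H + 2 p_h - d_h (k_h - 1) - 1}{s_h} \right\rfloor + 1, \qquad W_{out} = \left\lfloor \frac{W + 2 p_w - d_w (k_w - 1) - 1}{s_w} \right\rfloor + 1$$

where $H$ and $W$ are the height and width of the input.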

To stabilize training and accelerate convergence, the convolution output is normalized and re-scaled using batch normalization:

$$\hat{Y}_{n,c,i,j} = \gamma_c\, \frac{Y_{n,c,i,j} - \mu_c}{\sqrt{\sigma_c^2 + \epsilon}} + \beta_c$$

where $Y_{n,c,i,j}$ denotes the convolution output of the $n$-th input feature map at spatial position $(i, j)$ of channel $c$, $\mu_c$ and $\sigma_c^2$ are the mean and variance of channel $c$ computed over the mini-batch, $\gamma_c$ and $\beta_c$ are learnable scale and shift parameters, and $\epsilon$ is a small constant for numerical stability.

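To introduce nonlinearity and enhance feature representation, a gating mechanism based on the sigmoid function (SiLU) is applied:

$$Z_{n,c,i,j} = \hat{Y}_{n,c,i,j} \cdot \sigma\!\left(\hat{Y}_{n,c,i,j}\right), \qquad \sigma(x) = \frac{1}{1 + e^{-x}}$$

where $\hat{Y}_{n,c,i,j}$ is the batch-normalized feature value, $\sigma(\cdot)$ denotes the sigmoid activation, and $Z$ represents the final output feature map after activation.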
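The three building blocks of the convolutional layer (output-size rule, batch normalization, and SiLU) can be checked with a short NumPy sketch. The function names, the 640-pixel input side, and the default `eps` value are assumptions for illustration; training-mode batch statistics are used, and the convolution itself is omitted for brevity.

```python
import numpy as np


def conv_output_size(size, kernel, stride, padding, dilation=1):
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def batch_norm(y, gamma, beta, eps=1e-5):
    """Per-channel batch normalization of an (N, C, H, W) tensor."""
    mu = y.mean(axis=(0, 2, 3), keepdims=True)
    var = y.var(axis=(0, 2, 3), keepdims=True)
    y_hat = (y - mu) / np.sqrt(var + eps)
    return gamma.reshape(1, -1, 1, 1) * y_hat + beta.reshape(1, -1, 1, 1)


def silu(x):
    """SiLU activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


# A 3x3, stride-2, padding-1 convolution halves a 640-pixel side.
out_side = conv_output_size(640, kernel=3, stride=2, padding=1)

rng = np.random.default_rng(0)
y = rng.normal(loc=3.0, scale=2.0, size=(4, 8, 16, 16))
z = silu(batch_norm(y, gamma=np.ones(8), beta=np.zeros(8)))
print(out_side, z.shape)
```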

The convolutional layer is stacked in tandem with a C3k2 block. The C3k2 block is a variant of the cross-stage partial (CSP) structure designed to enhance feature representation while maintaining computational efficiency. In this block, the input feature map is first split along the channel dimension into two branches. The first branch, known as the shortcut branch, applies only a $1 \times 1$ convolution to preserve part of the input information with minimal transformation. The second branch, referred to as the residual branch, processes the features through two consecutive bottleneck blocks, where each bottleneck consists of a $1 \times 1$ convolution for channel reduction, a $3 \times 3$ convolution for spatial feature extraction, and a residual connection to retain the original information. The outputs from these two branches are then concatenated along the channel axis and passed through a final $1 \times 1$ convolution to fuse the features, producing the output of the C3k2 block. By combining lightweight computation with deeper feature extraction, the C3k2 structure provides an effective balance between efficiency and representational power. The detailed equations of the C3k2 block are shown below.

$$\mathrm{C3k2}(X) = \mathrm{Conv}_{1\times1}\Big(\mathrm{Concat}\big(\mathrm{Conv}_{1\times1}(X),\; B(B(X))\big)\Big)$$

where $X$ is the input feature map, $\mathrm{Conv}_{1\times1}(X)$ represents the shortcut branch, $B(B(X))$ denotes two consecutive bottleneck transformations on the residual branch, Concat concatenates the two branches along the channel axis, and the outer $\mathrm{Conv}_{1\times1}$ fuses the concatenated features into the final block output. To further enhance the representation capacity, we adopt the bottleneck structure defined as follows:

$$B(X) = X + \mathrm{Conv}_{3\times3}\big(\mathrm{Conv}_{1\times1}(X)\big)$$

where $X$ is the input feature map, $\mathrm{Conv}_{1\times1}$ reduces the channel dimension, $\mathrm{Conv}_{3\times3}$ extracts spatial features, and the residual addition preserves the original information while enhancing feature representation.

After feature extraction by the convolutional layers, the network employs an SPPF module followed by a C2PSA module. The SPPF (Spatial Pyramid Pooling—Fast) module applies successive pooling operations with different receptive fields and concatenates the results, enabling the network to capture multi-scale spatial context efficiently. The C2PSA (cross-stage partial with parallel self-attention) module then integrates cross-stage partial connections with both channel and spatial attention mechanisms, which enhances long-range dependencies and improves the discriminative ability of the fused features.

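$$X' = \mathrm{Conv}_{1\times1}(X), \qquad \mathrm{SPPF}(X) = \mathrm{Conv}_{1\times1}\big(\mathrm{Concat}(X', P_1, P_2, P_3)\big)$$

where $X$ is the output feature map of Equation (4), $P_1 = \mathrm{MaxPool}(X')$, $P_2 = \mathrm{MaxPool}(P_1)$, and $P_3 = \mathrm{MaxPool}(P_2)$. Here, Concat denotes channel-wise concatenation of multi-scale pooled features, and $\mathrm{Conv}_{1\times1}$ is used for dimensionality reduction and fusion.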
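The reason successive pooling yields different receptive fields can be verified with a small NumPy sketch. It assumes a $5 \times 5$ max-pooling kernel with stride 1 and "same" padding, which is the common default in YOLO implementations but is not stated in the text above; the helper name `max_pool_same` is hypothetical, and the $1 \times 1$ convolutions are omitted.

```python
import numpy as np


def max_pool_same(x, k):
    """k x k max pooling with stride 1 and 'same' padding on an (H, W) array."""
    p = k // 2
    padded = np.pad(x, p, mode="constant", constant_values=-np.inf)
    h, w = x.shape
    out = np.full((h, w), -np.inf)
    # Take the element-wise maximum over all k*k shifted views of the input.
    for du in range(k):
        for dv in range(k):
            out = np.maximum(out, padded[du:du + h, dv:dv + w])
    return out


rng = np.random.default_rng(0)
x = rng.normal(size=(16, 16))

p1 = max_pool_same(x, 5)
p2 = max_pool_same(p1, 5)
p3 = max_pool_same(p2, 5)

# Channel-wise stack of the multi-scale maps (single input channel here).
stacked = np.stack([x, p1, p2, p3])
print(stacked.shape)
```

Chaining three $5 \times 5$ pools reproduces the outputs of single $5 \times 5$, $9 \times 9$, and $13 \times 13$ pools, which is why the sequential form is cheaper than pooling the input three times with growing kernels.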

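$$\mathrm{C2PSA}(X) = \mathrm{Conv}_{1\times1}\big(\mathrm{Concat}(X_1, A)\big)$$

where $X$ is the output feature map of Equation (6), $X_1$ and $X_2$ are two splits of $X$ along the channel dimension, and $A$ is the attention-enhanced feature computed from $X_2$:

$$A = \alpha\, \mathrm{CA}(X_2) + \beta\, \mathrm{SA}(X_2)$$

where CA denotes channel attention, SA denotes spatial attention, and $\alpha$ and $\beta$ are balancing coefficients. The concatenated features are finally fused by a $1 \times 1$ convolution to form the block output.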

After passing through the SPPF and C2PSA modules, the feature map is further refined by a SimAM (Simple Attention Module) block [29]. The SimAM module introduces a parameter-free attention mechanism that adaptively emphasizes informative regions while suppressing less relevant responses, thereby improving the representational power of the extracted features without additional learnable parameters. Together, the SPPF, C2PSA, and SimAM modules enhance the features in a complementary manner: SPPF captures multi-scale spatial context, C2PSA strengthens long-range dependencies via channel and spatial attention, and SimAM highlights fine-grained salient information, resulting in richer and more discriminative feature representations.

where denotes the feature value at spatial position of channel c in the n-th input feature map. Terms and are the mean and variance of the local region centered at , is a balancing coefficient, is a temperature scaling factor, is the sigmoid function, and is the refined feature after applying SimAM attention.
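For orientation, the widely used parameter-free SimAM formulation can be sketched in NumPy as below. This is an assumption-laden illustration rather than the exact variant described above: statistics are computed over each whole channel instead of a local region, no temperature factor is applied, and the default `lam=1e-4` is a commonly used value, not one taken from this paper.

```python
import numpy as np


def simam(x, lam=1e-4):
    """Parameter-free SimAM attention on an (N, C, H, W) tensor.

    Each activation is re-weighted by a sigmoid of its inverse energy,
    which grows with the squared deviation from the channel mean.
    """
    n = x.shape[2] * x.shape[3] - 1
    d = (x - x.mean(axis=(2, 3), keepdims=True)) ** 2
    v = d.sum(axis=(2, 3), keepdims=True) / n
    inv_energy = d / (4.0 * (v + lam)) + 0.5
    return x * (1.0 / (1.0 + np.exp(-inv_energy)))


rng = np.random.default_rng(0)
features = rng.normal(size=(2, 4, 8, 8))
refined = simam(features)
print(refined.shape)
```

Activations that stand out from their channel mean receive a weight closer to 1, whereas activations near the mean are scaled by roughly sigmoid(0.5).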

Following the SimAM block, the network adopts a neck module based on a Feature Pyramid Network (FPN) structure. The neck is designed to fuse multi-scale features from different backbone stages and to enhance both high-level semantics and low-level spatial details. It consists of two repeated operations, each composed of upsampling, feature concatenation with the corresponding backbone output, and a C3k2 block (already defined in Equation (4)). This hierarchical design ensures that shallow and deep features are integrated, enabling robust detection of objects at different scales.

where , and denote the feature maps extracted from backbone stages of third, fifth, and ninth layers with downsampling strides of 32, 16, and 8, respectively, is a upsampling operation (nearest-neighbor interpolation as defined in Equation (13), denotes channel-wise concatenation of two feature maps as defined in Equation (14), and is the transformation block defined in Equation (4). Here, and are the fused feature maps generated at the first and second stages of the neck, respectively.

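$$Y_{n,c,i,j} = X_{n,c,\lfloor i/r \rfloor,\lfloor j/r \rfloor}$$

where $X$ is the input feature map, $r$ is the upsampling factor (typically $r = 2$), $Y$ is the upsampled feature map, and $(i, j)$ indexes the spatial coordinates of the output, while $(\lfloor i/r \rfloor, \lfloor j/r \rfloor)$ denotes the corresponding location in the input feature map.

$$\mathrm{Concat}(X_1, X_2) = [X_1;\, X_2] \in \mathbb{R}^{N \times (C_1 + C_2) \times H \times W}$$

where $X_1 \in \mathbb{R}^{N \times C_1 \times H \times W}$ and $X_2 \in \mathbb{R}^{N \times C_2 \times H \times W}$ are two input feature maps with the same spatial size, and the output is obtained by concatenating $X_1$ and $X_2$ along the channel dimension.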
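One fusion step of the neck (nearest-neighbor upsampling followed by channel-wise concatenation) can be sketched in NumPy. The tensor shapes and function names are hypothetical, and the C3k2 block that follows the concatenation is omitted.

```python
import numpy as np


def upsample_nearest(x, r=2):
    """Nearest-neighbor upsampling of an (N, C, H, W) tensor by factor r."""
    return x.repeat(r, axis=2).repeat(r, axis=3)


def concat_channels(a, b):
    """Concatenate two (N, C, H, W) tensors along the channel axis."""
    if a.shape[0] != b.shape[0] or a.shape[2:] != b.shape[2:]:
        raise ValueError("batch and spatial sizes must match")
    return np.concatenate([a, b], axis=1)


deep = np.arange(4, dtype=float).reshape(1, 1, 2, 2)   # coarse, low-resolution map
shallow = np.zeros((1, 3, 4, 4))                       # finer backbone map

up = upsample_nearest(deep, r=2)
fused = concat_channels(up, shallow)
print(up[0, 0])
print(fused.shape)
```

Each output pixel `(i, j)` of `up` copies the input value at `(i // 2, j // 2)`, so every coarse activation is replicated into a 2 x 2 block before fusion.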

The segmentation head is designed to generate instance-specific masks in parallel with bounding box and class predictions. It consists of two branches: a prototype branch that produces a fixed set of global prototype masks shared across all instances, and a coefficient branch that predicts a coefficient vector for each detected instance. The final instance masks are obtained by linearly combining the prototype masks with the predicted coefficients, followed by sigmoid activation and optional cropping within the bounding boxes. All parameters are optimized end-to-end using a segmentation loss, which ensures that prototypes, projection weights, and coefficients are learned jointly during training. The overall formulation of the segmentation head is expressed as follows:

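$$P = f_{\mathrm{proto}}(F; \theta_p), \qquad a_n = f_{\mathrm{coef}}(F; \theta_a), \qquad M_n(i,j) = \sigma\!\left(\sum_{r=1}^{R} a_{n,r} \left(\sum_{c=1}^{C} W_{r,c}\, P_c(i,j) + b_r\right)\right)$$

where $P$ denotes the prototype masks generated from the input feature maps $F$ by function $f_{\mathrm{proto}}$ with parameters $\theta_p$; $W$ is the projection matrix, and $W_{r,c}$ denotes its $(r, c)$-th element mapping prototype channel $c$ to the reduced channel $r$; $b$ is the bias vector with $b_r$ its $r$-th component; $a_n$ is the coefficient vector for the $n$-th instance predicted by function $f_{\mathrm{coef}}$ with parameters $\theta_a$; $\sigma$ is the sigmoid activation; and $M_n(i,j)$ is the predicted mask probability at spatial position $(i, j)$ for instance $n$. Here, $r \in \{1, \dots, R\}$ indexes the reduced (projected) channels, $c \in \{1, \dots, C\}$ indexes the prototype channels, and we set $R = 32$ and $C = 256$ in our experiments.

The reduced prototype representation is obtained through a $1 \times 1$ convolution as follows:

$$\tilde{P}_r(i,j) = \sum_{c=1}^{C} W_{r,c}\, P_c(i,j) + b_r$$

where $W$ is the projection matrix, $b$ is the bias vector, with $b_r$ denoting its $r$-th component, and $\tilde{P}_r(i,j)$ denotes the reduced prototype at spatial position $(i, j)$ for channel $r$.

The final instance mask is generated by linearly combining the reduced prototypes with the predicted coefficients as follows:

$$M_n(i,j) = \sigma\!\left(\sum_{r=1}^{R} a_{n,r}\, \tilde{P}_r(i,j)\right)$$

where $\sigma$ denotes the sigmoid activation, and $M_n(i,j)$ is the soft mask probability at spatial position $(i, j)$ for instance $n$.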
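The mask assembly (projection of the prototypes followed by a coefficient-weighted sum and a sigmoid) can be written compactly with `numpy.einsum`. The sketch below uses random tensors as stand-ins for network outputs; the sizes of 256 prototype channels and 32 reduced channels follow the description of the segmentation head, while the spatial size, instance count, and function names are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def assemble_masks(prototypes, weight, bias, coeffs):
    """Combine prototypes into per-instance soft masks.

    prototypes: (C, H, W) prototype masks
    weight:     (R, C)    1x1 projection matrix
    bias:       (R,)      projection bias
    coeffs:     (N, R)    one coefficient vector per instance
    returns:    (N, H, W) mask probabilities in (0, 1)
    """
    reduced = np.einsum("rc,chw->rhw", weight, prototypes) + bias[:, None, None]
    logits = np.einsum("nr,rhw->nhw", coeffs, reduced)
    return sigmoid(logits)


rng = np.random.default_rng(0)
C, R, N, H, W = 256, 32, 3, 8, 8
masks = assemble_masks(
    prototypes=rng.normal(size=(C, H, W)),
    weight=rng.normal(size=(R, C)) * 0.01,
    bias=np.zeros(R),
    coeffs=rng.normal(size=(N, R)),
)
print(masks.shape)
```

Thresholding the returned probabilities (for example at 0.5) would give the binary masks whose foreground pixels are counted in the area estimate.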

The segmentation loss function used to supervise mask learning is defined as follows:

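$$\mathcal{L}_{seg} = \frac{1}{N} \sum_{n=1}^{N} \Big[ \lambda_{BCE}\, \mathcal{L}_{BCE}(M_n, G_n) + \lambda_{Dice}\, \mathcal{L}_{Dice}(M_n, G_n) \Big]$$

where $N$ is the number of instances, $M_n$ is the predicted mask and $G_n$ is the ground-truth mask for instance $n$, $\mathcal{L}_{BCE}$ and $\mathcal{L}_{Dice}$ are the binary cross-entropy and Dice loss functions, respectively, and $\lambda_{BCE}$ and $\lambda_{Dice}$ are their balancing weights.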
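A combined binary cross-entropy and Dice loss of this kind can be sketched in NumPy. The averaging over instances, the unit default weights, and the smoothing constant `eps` are assumptions for illustration; the values actually used for training are not given in this section.

```python
import numpy as np


def bce_loss(pred, target, eps=1e-7):
    """Mean binary cross-entropy between soft mask and binary target."""
    pred = np.clip(pred, eps, 1.0 - eps)
    return float(-(target * np.log(pred) + (1 - target) * np.log(1 - pred)).mean())


def dice_loss(pred, target, eps=1e-7):
    """Dice loss: 1 - 2|P∩G| / (|P| + |G|), smoothed by eps."""
    inter = (pred * target).sum()
    return float(1.0 - (2.0 * inter + eps) / (pred.sum() + target.sum() + eps))


def segmentation_loss(preds, targets, w_bce=1.0, w_dice=1.0):
    """Average of weighted BCE + Dice over all instances."""
    per_instance = [
        w_bce * bce_loss(p, t) + w_dice * dice_loss(p, t)
        for p, t in zip(preds, targets)
    ]
    return sum(per_instance) / len(per_instance)


target = np.zeros((8, 8))
target[2:6, 2:6] = 1.0                                      # a square "panel"
loss_perfect = segmentation_loss([target.copy()], [target])
loss_inverted = segmentation_loss([1.0 - target], [target])
print(loss_perfect, loss_inverted)
```

The Dice term compensates for class imbalance: rooftop panels usually cover a small fraction of a tile, so a pixel-averaged cross-entropy alone would be dominated by background pixels.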

During training, the segmentation loss is minimized with respect to all parameters of the segmentation head, and the gradients are propagated through the entire architecture in a unified manner. Specifically, the error signals flow from the final mask predictions back to the instance coefficients and their prediction branch, while simultaneously being transmitted to the projection weights and the prototype representations, and are further propagated to update the parameters of the prototype generator. In this way, the prototype masks, the projection matrix, and the instance coefficients are jointly optimized, enabling the model to learn coherent and discriminative mask representations in an end-to-end fashion.

To explicitly describe how the segmentation loss propagates gradients through the segmentation head, we decompose the overall gradient flow as follows:

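$$\mathcal{L}_{seg} \;\rightarrow\; M \;\rightarrow\; \begin{cases} a_n \;\rightarrow\; \theta_a \\ \tilde{P} \;\rightarrow\; W,\; P \;\rightarrow\; \theta_p \end{cases}$$

where $M = \{M_n\}$ is the set of predicted masks for all instances, $P$ are the prototype masks generated by the prototype branch with parameters $\theta_p$, $W$ are the projection weights, and $a_n$ are the instance-specific coefficient vectors predicted by the coefficient branch with parameters $\theta_a$.

The gradient with respect to the instance coefficients is given by the following:

$$\frac{\partial \mathcal{L}_{seg}}{\partial a_{n,r}} = \sum_{i,j} \frac{\partial \mathcal{L}_{seg}}{\partial M_n(i,j)}\, \sigma'\!\big(z_n(i,j)\big)\, \tilde{P}_r(i,j), \qquad z_n(i,j) = \sum_{r} a_{n,r}\, \tilde{P}_r(i,j)$$

where $a_{n,r}$ is the $r$-th coefficient of instance $n$, $\tilde{P}_r$ are the reduced prototypes, and $\sigma'$ is the derivative of the sigmoid function.

Similarly, the gradient with respect to the projection weights is as follows:

$$\frac{\partial \mathcal{L}_{seg}}{\partial W_{r,c}} = \sum_{i,j} \frac{\partial \mathcal{L}_{seg}}{\partial \tilde{P}_r(i,j)}\, P_c(i,j)$$

where $W_{r,c}$ is the element of the projection matrix mapping prototype channel $c$ to the reduced channel $r$, $P_c(i,j)$ is the $c$-th prototype at spatial position $(i, j)$, and $\partial \mathcal{L}_{seg} / \partial \tilde{P}_r(i,j)$ is obtained by backpropagation from Equation (20).

The gradient with respect to the prototypes is as follows:

$$\frac{\partial \mathcal{L}_{seg}}{\partial P_c(i,j)} = \sum_{r} W_{r,c}\, \frac{\partial \mathcal{L}_{seg}}{\partial \tilde{P}_r(i,j)}$$

where $P_c(i,j)$ is the prototype value at channel $c$ and location $(i, j)$, and the gradient accumulates from all reduced channels via $W_{r,c}$.

The gradient with respect to the prototype branch parameters is expressed as follows:

$$\frac{\partial \mathcal{L}_{seg}}{\partial \theta_p} = \sum_{c} \sum_{i,j} \frac{\partial \mathcal{L}_{seg}}{\partial P_c(i,j)}\, \frac{\partial P_c(i,j)}{\partial \theta_p}$$

where $\theta_p$ are the learnable parameters of the prototype generator $f_{\mathrm{proto}}$, and $P_c(i,j)$ denotes the prototype feature value at channel $c$ and spatial position $(i, j)$.

Similarly, the gradient with respect to the coefficient branch parameters is as follows:

$$\frac{\partial \mathcal{L}_{seg}}{\partial \theta_a} = \sum_{n} \sum_{r} \frac{\partial \mathcal{L}_{seg}}{\partial a_{n,r}}\, \frac{\partial a_{n,r}}{\partial \theta_a}$$

where $\theta_a$ are the learnable parameters of the coefficient prediction head $f_{\mathrm{coef}}$, and $a_{n,r}$ is the $r$-th coefficient for instance $n$.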
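The chain-rule expression for the coefficient gradient can be sanity-checked numerically. The sketch below is a simplified illustration: it considers a single instance, uses binary cross-entropy alone as the loss (the Dice term is left out for brevity), and compares the analytic gradient with central finite differences. All names and tensor sizes are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def mask_and_loss(a, reduced, target):
    """Soft mask sigma(sum_r a_r * P~_r) and its mean BCE against target."""
    z = np.tensordot(a, reduced, axes=1)            # (H, W) logits
    m = sigmoid(z)
    loss = -(target * np.log(m) + (1 - target) * np.log(1 - m)).mean()
    return m, loss


def grad_coeffs(a, reduced, target):
    """Analytic dL/da_r = sum_ij dL/dM(i,j) * sigma'(z(i,j)) * P~_r(i,j)."""
    m, _ = mask_and_loss(a, reduced, target)
    dl_dm = (m - target) / (m * (1 - m)) / target.size   # derivative of mean BCE
    dsig = m * (1 - m)                                   # sigmoid derivative
    return np.tensordot(reduced, dl_dm * dsig, axes=([1, 2], [0, 1]))


def numeric_grad(a, reduced, target, h=1e-6):
    """Central finite-difference estimate of dL/da."""
    grad = np.zeros_like(a)
    for r in range(a.size):
        step = np.zeros_like(a)
        step[r] = h
        grad[r] = (mask_and_loss(a + step, reduced, target)[1]
                   - mask_and_loss(a - step, reduced, target)[1]) / (2 * h)
    return grad


rng = np.random.default_rng(0)
reduced = rng.normal(size=(4, 6, 6))                 # 4 reduced prototypes
a = 0.5 * rng.normal(size=4)                         # one instance's coefficients
target = (rng.random((6, 6)) > 0.5).astype(float)

analytic = grad_coeffs(a, reduced, target)
numeric = numeric_grad(a, reduced, target)
print(np.max(np.abs(analytic - numeric)))
```

Agreement between the two estimates confirms that the sum over spatial positions, the sigmoid derivative, and the reduced prototypes enter the gradient as the expression above states.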

The total physical area of detected solar panels is estimated by converting the number of foreground pixels in the segmentation mask into square meters. This conversion relies on the ground resolution of the Web Mercator projection, expressed as meters per pixel (mpp), which depends on the zoom level z and the latitude of the image center. When solar panels span across multiple tiled images, each visible portion is segmented independently, and the final area is obtained by summing pixel-level mask counts over all tiles. This aggregation strategy prevents both double-counting and underestimation in boundary regions, ensuring accurate estimation even when panels intersect tile borders. The final area is computed as the product of the pixel count and the squared ground resolution:

$$A = N_{px} \cdot \mathrm{mpp}(\varphi, z)^2$$

where $A$ denotes the estimated physical area in square meters (m²), $N_{px}$ represents the number of foreground pixels (solar panel pixels) in the segmentation mask, and $\mathrm{mpp}(\varphi, z)$ is the ground resolution in meters per pixel at latitude $\varphi$ and zoom level $z$.

The ground resolution is defined as follows:

where is the latitude of the image center (in radians when used in ), z is the integer zoom level of the tile, is the pixel dimension of the retrieved tile image (in our treatment, ), T is the tile size in pixels (typically ), and = 156,543.03392 m/pixel is the resolution factor at the equator for zoom level in the Web Mercator projection.
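The pixel-to-area conversion can be sketched with the standard Web Mercator ground-resolution rule. The scaling by `tile_size / image_size`, the default of 256 pixels for both, the function names, and the example pixel counts are assumptions for illustration; only the equatorial constant 156,543.03392 m/pixel is taken from the text.

```python
import math

EQUATOR_MPP_ZOOM0 = 156543.03392  # meters per pixel at the equator, zoom level 0


def ground_resolution(lat_deg, zoom, tile_size=256, image_size=256):
    """Ground resolution (m/pixel) at a latitude and zoom level.

    The tile_size / image_size factor (an assumption) rescales the
    resolution when a tile is retrieved at a different pixel dimension.
    """
    base = EQUATOR_MPP_ZOOM0 * math.cos(math.radians(lat_deg)) / (2 ** zoom)
    return base * tile_size / image_size


def panel_area_m2(pixel_counts, lat_deg, zoom, tile_size=256, image_size=256):
    """Total panel area: summed foreground pixels times squared resolution."""
    mpp = ground_resolution(lat_deg, zoom, tile_size, image_size)
    return sum(pixel_counts) * mpp ** 2


# Hypothetical foreground pixel counts from three tiles at zoom level 20.
area = panel_area_m2([1200, 850, 0], lat_deg=0.0, zoom=20)
print(ground_resolution(0.0, 0), area)
```

Because area scales with the square of the resolution and the resolution scales with the cosine of latitude, the same pixel count corresponds to a smaller physical area at higher latitudes.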

5. Experiments and Discussion

To evaluate the effectiveness of the proposed model, the original YOLO11-seg model was also employed for comparison purposes. Both models were trained and fine-tuned on a mixed dataset, which consists of 22,112 satellite images in total. The dataset was split into three subsets to ensure robust training and evaluation: 70% (15,478 images) for training, 20% (4422 images) for validation, and the remaining 10% (2212 images) for testing. All experiments were conducted on a workstation equipped with an NVIDIA GeForce RTX 4070 Ti GPU (12 GB VRAM), running Python 3.11 with PyTorch 2.9.1 and CUDA 13.0 acceleration. The proposed model contains 114 layers with 4.38 million parameters and 19.0 GFLOPs after fusion. During inference, the system achieves an average runtime of 1.7 ms per image, indicating that the network is highly efficient and suitable for large-scale satellite image segmentation tasks.

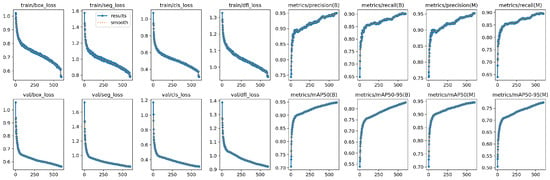

Figure 5 illustrates the training dynamics of the proposed model, including loss curves and evaluation metrics across 50 epochs. From the first row, it can be observed that the training losses for bounding box regression, segmentation, classification, and distribution focal loss steadily decrease, indicating stable convergence of the model. Similarly, the validation losses shown in the second row exhibit a consistent downward trend, confirming that the model generalizes well without obvious overfitting. The precision and recall curves for both bounding box detection (B) and mask segmentation (M) demonstrate continuous improvement, with precision reaching over 0.85 and recall stabilizing around 0.70 by the end of training. Furthermore, the mean Average Precision (mAP) at IoU threshold 0.5 (mAP50) reaches approximately 0.78 for bounding boxes and 0.72 for masks, while the stricter mAP50–95 metric reaches 0.60 and 0.52, respectively. These results suggest that the proposed model achieves robust detection and segmentation performance, with a favorable balance between precision and recall. The smoothness and stability of all curves further confirm the effectiveness of the adopted network design and training strategy.

Figure 5. The training dynamics of the proposed model.

Figure 6 presents sample qualitative results of the proposed segmentation model on the validation dataset. The model successfully detects and segments rooftop solar panels in high-resolution satellite imagery, with the predicted masks shown in blue overlaid on the original images. The results demonstrate that the model is able to accurately capture both the shape and spatial distribution of solar panels across varying roof orientations, scales, and background contexts. Although some overlaps between bounding boxes and masks can be observed due to densely arranged panels, the overall predictions align well with the ground-truth annotations, confirming the robustness of the model in handling complex urban environments.

Figure 6. The sample results of the proposed segmentation model in complex urban environments.

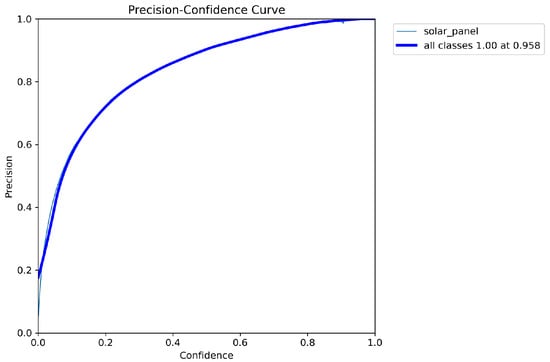

Figure 7 presents the precision–confidence curve for the solar panel segmentation task based on mask predictions. Precision rises steadily as the confidence threshold increases, reaching 1.0 at a confidence of approximately 0.952. This trend indicates that the model reliably separates true positives from false detections when stricter confidence criteria are applied. Precision fluctuates at low confidence thresholds but remains consistently high once the threshold exceeds 0.5. Such behavior is important for practical deployment, where accurate mask-level detection is needed to avoid segmenting non-solar regions as panels.

Figure 7. The precision–confidence curve for the solar panel segmentation task.
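To make the idea behind a precision–confidence curve concrete, the following simplified sketch computes precision over the predictions that survive each confidence threshold. It is not the routine that produced Figure 7: the function name, the toy scores, and the convention of reporting a precision of 1.0 when no prediction survives are assumptions made for illustration, and plotting toolkits may additionally smooth or interpolate the curve.

```python
def precision_at_thresholds(scores, is_true_positive, thresholds):
    """Return (threshold, precision) pairs for predictions kept at each threshold."""
    curve = []
    for t in thresholds:
        kept = [tp for s, tp in zip(scores, is_true_positive) if s >= t]
        # With no predictions left, precision is undefined; 1.0 is used by convention.
        precision = sum(kept) / len(kept) if kept else 1.0
        curve.append((t, precision))
    return curve


# Toy predictions: confidence scores and whether each mask matched the ground truth.
scores = [0.97, 0.91, 0.84, 0.62, 0.55, 0.40, 0.31]
matched = [True, True, True, False, True, False, False]

curve = precision_at_thresholds(scores, matched, [0.3, 0.5, 0.8, 0.95])
for t, p in curve:
    print(f"confidence >= {t:.2f}: precision = {p:.3f}")
```

In this toy example, raising the threshold discards the low-confidence false positives first, which is why precision increases with the threshold; the corresponding loss in recall is not captured by this curve.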

To comprehensively evaluate the segmentation quality of the proposed model, we employ three widely used metrics: Intersection over Union (IoU), F1-Score, and Pixel Accuracy. These metrics capture complementary aspects of segmentation quality: IoU measures the spatial overlap between predicted and ground-truth masks, F1-Score reflects the balance between precision and recall, and Pixel Accuracy quantifies global correctness across the entire image. Together, they provide a more complete and reliable assessment of model performance than any single metric alone. IoU is defined as

$$\mathrm{IoU} = \frac{|P \cap G|}{|P \cup G|} = \frac{TP}{TP + FP + FN},$$

where $P$ and $G$ denote the predicted and ground-truth masks, and $TP$, $FP$, and $FN$ are the numbers of true-positive, false-positive, and false-negative pixels. IoU therefore measures the overlap between predicted masks and the ground truth. Although IoU is known to be sensitive to small objects and class imbalance, rooftop PV panels in our task form large, continuous regions where IoU provides a stable and meaningful measure of mask alignment. To mitigate the known limitations of IoU, we additionally report F1-Score and Pixel Accuracy.

The F1-Score is expressed as

$$F1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} = \frac{2\,TP}{2\,TP + FP + FN},$$

providing a balance between precision and recall. Pixel Accuracy is defined as

$$\mathrm{PA} = \frac{TP + TN}{TP + TN + FP + FN},$$

where $TN$ is the number of true-negative pixels. It evaluates the overall proportion of correctly classified pixels.
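As a minimal sketch of how the three metrics above can be computed for one image, the following function counts true-positive, false-positive, false-negative, and true-negative pixels in a pair of binary masks. It uses the standard pixel-count forms of the metrics and is not taken from the authors' code; the function name, the flattened-list mask representation, and the handling of two empty masks are assumptions made here.

```python
def segmentation_metrics(pred, truth):
    """Compute IoU, F1-Score and Pixel Accuracy for two flat, non-empty binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)

    union = tp + fp + fn
    # If both masks are empty there is nothing to overlap; treat it as a perfect match.
    iou = tp / union if union else 1.0
    f1 = 2 * tp / (2 * tp + fp + fn) if union else 1.0
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return iou, f1, accuracy


# Toy 4x4 masks flattened row by row (1 = solar panel pixel, 0 = background).
truth = [1, 1, 0, 0,
         1, 1, 0, 0,
         0, 0, 0, 0,
         0, 0, 0, 0]
pred = [1, 1, 1, 0,
        1, 0, 0, 0,
        0, 0, 0, 0,
        0, 0, 0, 0]

iou, f1, accuracy = segmentation_metrics(pred, truth)
print(f"IoU={iou:.3f}  F1={f1:.3f}  PixelAccuracy={accuracy:.3f}")
# IoU=0.600  F1=0.750  PixelAccuracy=0.875
```

The toy example also shows why Pixel Accuracy tends to be the highest of the three values: correctly classified background pixels count toward it, whereas IoU and F1-Score ignore true negatives.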

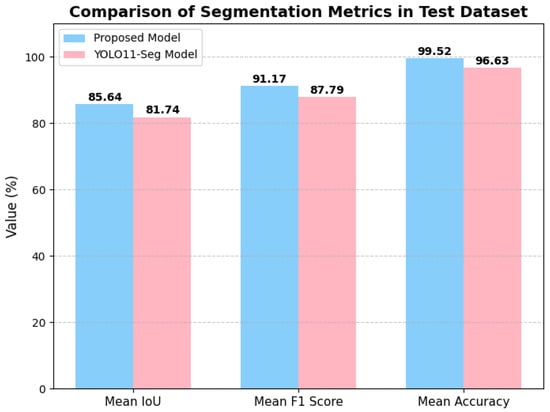

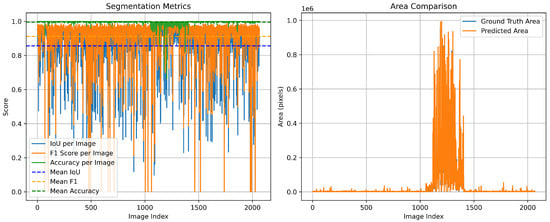

As illustrated in Figure 8, the proposed model achieves consistently high performance, with mean IoU, F1-Score, and Pixel Accuracy reaching 0.8564, 0.9117, and 0.9952, respectively. In comparison, the baseline YOLO11-Seg model attains lower values of 0.8174, 0.8779, and 0.9663. The proposed model therefore improves all three evaluation metrics, with absolute gains of roughly 0.039 in IoU, 0.034 in F1-Score, and 0.029 in Pixel Accuracy. Furthermore, the area comparison in Figure 9 shows that the predicted segmentation areas closely follow the variations of the ground-truth areas, confirming that our method not only ensures pixel-level accuracy but also preserves global structural consistency.

Figure 8. Comparison of segmentation accuracy among the various models.

Figure 9. Segmentation metrics and area comparison of the proposed model in the test dataset.
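The agreement between predicted and ground-truth areas can be summarized by an average relative difference. The sketch below shows one plausible way to compute such a figure; the exact definition used in this study is not spelled out here, so the formula, the function name, and the toy per-image areas are assumptions for illustration only.

```python
def mean_relative_area_difference(pred_areas, true_areas):
    """Average of |predicted - true| / true over images with a non-zero true area."""
    diffs = [abs(p - t) / t for p, t in zip(pred_areas, true_areas) if t > 0]
    return sum(diffs) / len(diffs)


# Toy per-image panel areas (arbitrary units), not values from the paper.
true_areas = [100.0, 200.0, 50.0]
pred_areas = [98.0, 205.0, 51.0]

mrd = mean_relative_area_difference(pred_areas, true_areas)
print(f"mean relative area difference: {mrd:.2%}")
```

Images without any ground-truth panel area are skipped because the relative difference is undefined for them.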

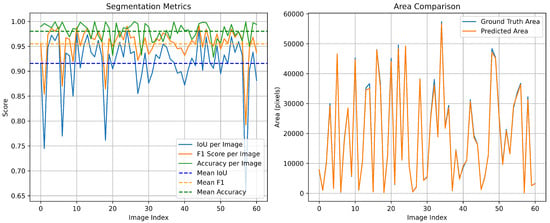

To further examine the robustness of the proposed segmentation model in shaded urban environments, we conduct an additional experiment on the PV01 rooftop subset of the Jiangsu multi-resolution dataset for photovoltaic panel segmentation [24]. PV01 consists of 0.1 m UAV images of distributed rooftop PV installations in dense urban areas, where many rooftops are partially covered by cast shadows from neighboring buildings or trees. Such shadows often make PV modules visually similar to surrounding roof materials and dark background regions, and have been reported to reduce segmentation accuracy for small-scale rooftop systems. On the PV01 test split, our proposed model achieves segmentation performance with mean IoU of 0.9156, F1-Score of 0.9549, and Mean Accuracy of 0.9802 (see Figure 10). These results suggest that the proposed method maintains good robustness in partially shaded urban scenes, although extreme shadow occlusions remain challenging and will be addressed in our future work.

Figure 10. Segmentation metrics and area comparison of the proposed model in the Jiangsu multi-resolution PV01 test dataset (63 images).

As a case study, we conducted experiments in a designated study area located in the Elephant and Castle district of London, UK, as illustrated in Figure 11. High-resolution satellite imagery of this area was obtained using the Esri World Imagery service. The selected region is bounded by the geographical coordinates (51.5020, −0.1097) and (51.4914, −0.0898) and was divided into image tiles of size . The tiled satellite images were processed through the proposed segmentation framework, and the real-world area was computed by converting pixel counts using the meter-per-pixel factor (see Equations (25) and (26)) derived from the zoom level and the (x, y) tile indices stored in each image filename. These indices uniquely determine the tile's geographic position and allow accurate estimation of ground resolution. After segmentation, all detected rooftop PV regions were manually inspected and corrected to ensure the reliability of the final measurement. Following this refined procedure, the total rooftop solar panel area in the study region was determined to be 127.75 m².

Figure 11. The designated area for the evaluation of the proposed model.
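The pixel-to-area conversion used in the case study can be sketched as follows. The sketch assumes the common Web Mercator XYZ tiling scheme with 256-pixel tiles and uses the widely known ground-resolution formula for that scheme; it is not a reproduction of Equations (25) and (26), which may differ in detail. The zoom level of 19, the tile size, and all function names are assumptions introduced here.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 equatorial radius used by Web Mercator
TILE_SIZE_PX = 256          # assumed tile edge length; not stated in the text
ZOOM = 19                   # assumed zoom level; not stated in the text


def latlon_to_tile(lat_deg, lon_deg, zoom):
    """Return the (x, y) indices of the XYZ tile containing a coordinate."""
    n = 2 ** zoom
    x = int((lon_deg + 180.0) / 360.0 * n)
    lat = math.radians(lat_deg)
    y = int((1.0 - math.asinh(math.tan(lat)) / math.pi) / 2.0 * n)
    return x, y


def tile_center_lat(y, zoom):
    """Latitude (degrees) at the vertical center of tile row y."""
    n = 2 ** zoom
    return math.degrees(math.atan(math.sinh(math.pi * (1.0 - 2.0 * (y + 0.5) / n))))


def meters_per_pixel(lat_deg, zoom):
    """Ground resolution of one pixel at the given latitude and zoom level."""
    circumference = 2.0 * math.pi * EARTH_RADIUS_M
    return math.cos(math.radians(lat_deg)) * circumference / (TILE_SIZE_PX * 2 ** zoom)


def mask_area_m2(pixel_count, y, zoom):
    """Convert a count of segmented pixels in tile row y to square meters."""
    mpp = meters_per_pixel(tile_center_lat(y, zoom), zoom)
    return pixel_count * mpp ** 2


# Corner coordinates of the study region as given in the text.
x_nw, y_nw = latlon_to_tile(51.5020, -0.1097, ZOOM)
x_se, y_se = latlon_to_tile(51.4914, -0.0898, ZOOM)
print("tile columns:", x_nw, "to", x_se, "| tile rows:", y_nw, "to", y_se)
print("meters per pixel near the top row:", meters_per_pixel(tile_center_lat(y_nw, ZOOM), ZOOM))
print("area of a 1000-pixel mask (m^2):", mask_area_m2(1000, y_nw, ZOOM))
```

Because Web Mercator is conformal, a pixel covers approximately the same ground distance in both directions at a given latitude, so the area of one pixel is taken as the square of the meter-per-pixel factor.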

6. Conclusions

This study presented a YOLO-based semantic segmentation framework for solar photovoltaic (PV) panel detection and area estimation in urban environments. By introducing the SimAM attention module, the proposed model improved segmentation performance compared with the baseline YOLO11-Seg. Experimental evaluation on the test dataset demonstrated that the proposed model achieved a mean IoU of 0.8564, a mean F1-Score of 0.9117, and a mean Pixel Accuracy of 0.9952, surpassing the YOLO11-Seg baseline (0.8174, 0.8779, and 0.9663, respectively). Moreover, the predicted solar panel areas closely matched the ground truth, with an average relative difference of less than 2.5%, thereby confirming the reliability of our approach not only at the pixel level but also in area estimation. In the case study of the Elephant and Castle area of London, the total rooftop solar panel area was determined to be 127.75 m². In addition to these technical improvements, the accurate quantification of rooftop PV areas provides an important foundation for urban energy-flow optimization, supporting data-driven scheduling of distributed renewable resources and contributing to long-term reductions in carbon emissions. Our future research will explore enhancing model robustness under real-world degradation factors such as shadows, soiling, panel aging, and adverse weather conditions, which are limitations inherent in current satellite PV datasets. Beyond this, we aim to leverage generative adversarial networks (GANs) [30] to generate synthetic satellite images and simulate various urban scenarios, and to explore hybrid models that combine LSTM [31] with other machine learning techniques such as convolutional neural networks or attention mechanisms [32]. Moreover, we will explore the possibility of introducing spiking neural networks for efficient solar panel detection and segmentation [33,34,35,36], and the optimization of energy flows across solar power resources with operational models [2,37].

Author Contributions

Conceptualization, J.Z.; Methodology, J.Z.; Software, J.Z., B.L. and H.B.; Validation, J.Z. and B.L.; Formal analysis, J.Z., D.C., B.L. and Z.Z.; Investigation, J.Z. and H.B.; Writing—original draft, J.Z.; Writing—review and editing, D.C., Z.Z. and P.X.; Visualization, J.Z.; Supervision, P.X.; Project administration, P.X.; Funding acquisition, P.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Kahvecioğlu, G.; Morton, D.P.; Wagner, M.J. Dispatch optimization of a concentrating solar power system under uncertain solar irradiance and energy prices. Appl. Energy 2022, 326, 119978.

2. Bi, H.; Shang, W.L.; Chen, Y.; Wang, K. Joint optimization for pedestrian, information and energy flows in emergency response systems with energy harvesting and energy sharing. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22421–22435.

3. Chen, F.; Yang, Q.; Zheng, N.; Wang, Y.; Huang, J.; Xing, L.; Li, J.; Feng, S.; Chen, G.; Kleissl, J. Assessment of concentrated solar power generation potential in China based on Geographic Information System (GIS). Appl. Energy 2022, 315, 119045.

4. Bai, B.; Wang, Z.; Chen, J. Shaping the solar future: An analysis of policy evolution, prospects and implications in China’s photovoltaic industry. Energy Strategy Rev. 2024, 54, 101474.

5. Yin, G.; He, X.; Qin, Y.; Chen, L.; Hu, Y.; Liu, Y.; Zhang, C. Assessing China’s solar power potential: Uncertainty quantification and economic analysis. Resour. Conserv. Recycl. 2025, 212, 107908.

6. Ulpiani, G.; Vetters, N.; Shtjefni, D.; Kakoulaki, G.; Taylor, N. Let’s hear it from the cities: On the role of renewable energy in reaching climate neutrality in urban Europe. Renew. Sustain. Energy Rev. 2023, 183, 113444.

7. Chen, T.; Zhang, N.; Ye, Z.; Jiang, K.; Lin, Z.; Zhang, H.; Xu, Y.; Liu, Q.; Huang, H. Carbon reduction benefits of photovoltaic-green roofs and their climate change mitigation potential: A case study of Xiamen city. Sustain. Cities Soc. 2024, 114, 105760.

8. Yang, Q.; Huang, T.; Wang, S.; Li, J.; Dai, S.; Wright, S.; Wang, Y.; Peng, H. A GIS-based high spatial resolution assessment of large-scale PV generation potential in China. Appl. Energy 2019, 247, 254–269.

9. Gu, M.; Liu, Y.; Yang, J.; Peng, L.; Zhao, C.; Yang, Z.; Yang, J.; Fang, W.; Fang, J.; Zhao, Z. Estimation of environmental effect of PVNB installed along a metro line in China. Renew. Energy 2012, 45, 237–244.

10. Seng, L.Y.; Lalchand, G.; Lin, G.M.S. Economical, environmental and technical analysis of building integrated photovoltaic systems in Malaysia. Energy Policy 2008, 36, 2130–2142.

11. Yang, Y.; Campana, P.E.; Stridh, B.; Yan, J. Potential analysis of roof-mounted solar photovoltaics in Sweden. Appl. Energy 2020, 279, 115786.

12. An, Y.; Chen, T.; Shi, L.; Heng, C.K.; Fan, J. Solar energy potential using GIS-based urban residential environmental data: A case study of Shenzhen, China. Sustain. Cities Soc. 2023, 93, 104547.

13. Zhu, R.; Kwan, M.P.; Perera, A.T.D.; Fan, H.; Yang, B.; Chen, B.; Chen, M.; Qian, Z.; Zhang, H.; Zhang, X.; et al. GIScience can facilitate the development of solar cities for energy transition. Adv. Appl. Energy 2023, 10, 100129.

14. Yuan, Y.; Fang, J.; Lu, X.; Feng, Y. Spatial structure preserving feature pyramid network for semantic image segmentation. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2019, 15, 1–19.

15. Li, P.; Zhang, H.; Guo, Z.; Lyu, S.; Chen, J.; Li, W.; Song, X.; Shibasaki, R.; Yan, J. Understanding rooftop PV panel semantic segmentation of satellite and aerial images for better using machine learning. Adv. Appl. Energy 2021, 4, 100057.

16. Huang, J.; Zeng, K.; Zhang, Z.; Zhong, W. Solar panel defect detection design based on YOLO v5 algorithm. Heliyon 2023, 9, e18826.

17. Ghahremani, A.; Adams, S.D.; Norton, M.; Khoo, S.Y.; Kouzani, A.Z. Detecting Defects in Solar Panels Using the YOLO v10 and v11 Algorithms. Electronics 2025, 14, 344.

18. Wang, J.; Chen, X.; Jiang, W.; Hua, L.; Liu, J.; Sui, H. PVNet: A novel semantic segmentation model for extracting high-quality photovoltaic panels in large-scale systems from high-resolution remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2023, 119, 103309.

19. Gasparyan, H.A.; Davtyan, T.A.; Agaian, S.S. A novel framework for solar panel segmentation from remote sensing images: Utilizing Chebyshev transformer and hyperspectral decomposition. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–11.

20. Zhao, Z.; Chen, Y.; Li, K.; Ji, W.; Sun, H. Extracting photovoltaic panels from heterogeneous remote sensing images with spatial and spectral differences. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 5553–5564.

21. Zhu, R.; Guo, D.; Wong, M.S.; Qian, Z.; Chen, M.; Yang, B.; Chen, B.; Zhang, H.; You, L.; Heo, J.; et al. Deep solar PV refiner: A detail-oriented deep learning network for refined segmentation of photovoltaic areas from satellite imagery. Int. J. Appl. Earth Obs. Geoinf. 2023, 116, 103134.

22. Zhang, R.; Chen, J.; Feng, L.; Li, S.; Yang, W.; Guo, D. A refined pyramid scene parsing network for polarimetric SAR image semantic segmentation in agricultural areas. IEEE Geosci. Remote Sens. Lett. 2021, 19, 4014805.

23. Mahboob, Z.; Khan, M.A.; Lodhi, E.; Nawaz, T.; Khan, U.S. Using SegFormer for effective semantic cell segmentation for fault detection in photovoltaic arrays. IEEE J. Photovoltaics 2025, 15, 320–331.

24. Jiang, H.; Yao, L.; Lu, N.; Qin, J.; Liu, T.; Liu, Y.; Zhou, C. Multi-resolution dataset for photovoltaic panel segmentation from satellite and aerial imagery. Earth Syst. Sci. Data Discuss. 2021, 2021, 1–17.

25. Kasmi, G.; Saint-Drenan, Y.M.; Trebosc, D.; Jolivet, R.; Leloux, J.; Sarr, B.; Dubus, L. A crowdsourced dataset of aerial images with annotated solar photovoltaic arrays and installation metadata. Sci. Data 2023, 10, 59.

26. Environmental Systems Research Institute (Esri). World Imagery. 2025. Available online: https://www.arcgis.com/home/item.html?id=10df2279f9684e4a9f6a7f08febac2a9 (accessed on 4 September 2025).

27. Jocher, G.; Qiu, J. Ultralytics YOLO11, 2024. Licensed Under AGPL-3.0. Available online: https://docs.ultralytics.com/models/yolo11/#can-yolo11-be-deployed-on-edge-devices (accessed on 4 September 2025).

28. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

29. Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual Conference, 18–24 July 2021; pp. 11863–11874.

30. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

31. Yadav, H.; Thakkar, A. NOA-LSTM: An efficient LSTM cell architecture for time series forecasting. Expert Syst. Appl. 2024, 238, 122333.

32. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

33. Fang, W.; Yu, Z.; Chen, Y.; Huang, T.; Masquelier, T.; Tian, Y. Deep residual learning in spiking neural networks. Adv. Neural Inf. Process. Syst. 2021, 34, 21056–21069.

34. Hu, Y.; Tang, H.; Pan, G. Spiking deep residual networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 5200–5205.

35. Lei, Z.; Yao, M.; Hu, J.; Luo, X.; Lu, Y.; Xu, B.; Li, G. Spike2former: Efficient spiking transformer for high-performance image segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; pp. 1364–1372.

36. Bi, H.; Shang, W.L.; Chen, Y.; Wang, K.; Yu, Q.; Sui, Y. GIS aided sustainable urban road management with a unifying queueing and neural network model. Appl. Energy 2021, 291, 116818.

37. Bi, H.; Shang, W.L.; Chen, Y.; Yu, K.; Ochieng, W.Y. An incentive based road traffic control mechanism for COVID-19 pandemic alike emergency preparedness and response. IEEE Trans. Intell. Transp. Syst. 2022, 23, 25092–25105.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.