Highlights

- IRIS-QResNet, a custom ResNet model enhanced with a quanvolutional layer for more accurate iris recognition that uses few samples per subject without applying augmentation.

- IRIS-QResNet proves its ability for efficient biometric authentication by consistently achieving superior accuracy and generalization across four benchmark datasets.

What are the main findings?

- Compared with IResNet, the traditional baseline, the IRIS-QResNet model significantly improves recognition accuracy and robustness, even in small-sample, augmentation-free settings.

- Across multiple iris datasets, IRIS-QResNet strengthens multilayer feature extraction, resulting in measurable performance gains of up to 16.67% in identification accuracy.

What are the implications of the main findings?

- By effectively integrating quantum-inspired layers into classical deep networks, higher discriminative power and data efficiency can be achieved, reducing dependence on large training datasets and data augmentation.

- These results open the path toward scalable and sustainable AI solutions for biometric systems, establishing a viable bridge between conventional and emerging quantum machine learning architectures.

Abstract

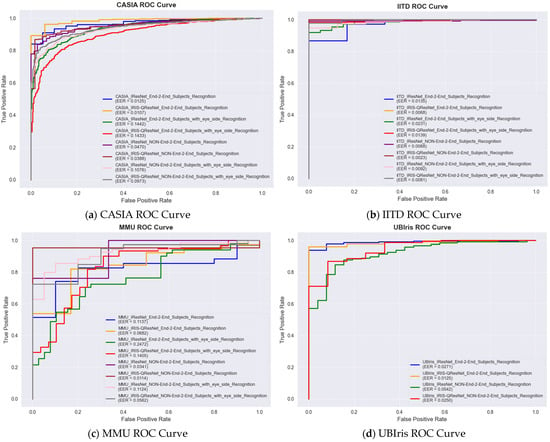

Iris recognition continues to pose challenges for deep learning models, despite its status as one of the most reliable biometric authentication techniques. These challenges become more pronounced when training data is limited, as subtle, high-dimensional patterns are easily missed. To address this issue and strengthen both feature extraction and recognition accuracy, this study introduces IRIS-QResNet, a customized ResNet-18 architecture augmented with a quanvolutional layer. The quanvolutional layer simulates quantum effects such as entanglement and superposition and incorporates sinusoidal feature encoding, enabling more discriminative multilayer representations. To evaluate the model, we conducted 14 experiments on the CASIA-Thousands, IITD, MMU, and UBIris datasets, comparing the performance of the proposed IRIS-QResNet with that of the IResNet baseline. While IResNet occasionally yielded subpar accuracy, ranging from 50.00% to 98.66%, and higher loss values ranging from 0.1060 to 2.0640, comparative analyses showed that IRIS-QResNet consistently outperformed it. IRIS-QResNet achieved lower loss (ranging from 0.0570 to 1.8130), higher accuracy (ranging from 66.67% to 99.55%), and demonstrated improvement margins spanning from 0.1870% in the CASIA End-to-End subject recognition with eye-side to 16.67% in the MMU End-to-End subject recognition with eye-side. Loss reductions ranged from 0.0360 in the CASIA End-to-End subject recognition without eye-side to 1.0280 in the UBIris Non-End-to-End subject recognition. Overall, the model exhibited robust generalization across recognition tasks despite the absence of data augmentation. These findings indicate that quantum-inspired modifications provide a practical and scalable approach for enhancing the discriminative capacity of residual networks, offering a promising bridge between classical deep learning and emerging quantum machine learning paradigms.

1. Introduction

Safe and trustworthy identity authentication has become a major worldwide concern. Today, people are required to present identification in a variety of fields, including banking, digital platforms, healthcare, and border control. Traditional password-based systems are becoming increasingly ineffective because they are vulnerable to theft, duplication, and human error. A safer alternative is provided by biometric technologies, which are based on distinct physiological or behavioral characteristics [1]. The International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) [2] define biometric systems as automated procedures that uniquely identify people based on behavioral or physiological traits. Behavioral modalities include voice, gait, and typing dynamics, whereas physiological modalities include iris patterns, facial geometry, and fingerprints [3]. Iris recognition is highly unique, stable, informative, safe, and contactless [4]. The human iris, as shown in Figure 1, has special textural structures [5] that are incredibly intricate, persistent throughout life, and different even between the two eyes of an individual [6]. In contrast to fingerprints, which may deteriorate due to physical labor, or facial features, which can change with aging, cosmetic makeup, or surgery, the iris remains largely unchanged after early childhood and even after death [7]. Additionally, non-contact, hygienic, and forgery-resistant iris-based systems have remarkably low rates of false acceptance and rejection [8]. Therefore, the iris is regarded as one of the most reliable physiological characteristics for extensive identity authentication [9,10].

Figure 1.

The right and left eyes of subject no. 53 of the IITD dataset, showing that irises are distinct even between the two eyes of the same person. (a) The right eye; (b) the left eye.

The three primary phases of a handcrafted iris recognition pipeline are segmentation, feature extraction, and matching [11]. The iris region is often segmented to eliminate occlusions from the eyelids and lashes after obtaining a high-quality eye image. After the iris texture has been segmented, discriminative features are extracted and compared for identification or verification [11]. Early methods used handcrafted features like Local Binary Patterns (LBP) [12], Gabor filters [13], and wavelet transforms [14], which were frequently coupled with conventional classifiers like Support Vector Machines (SVMs) or K-Nearest Neighbors (k-NN) [15]. These techniques performed well in controlled settings, but struggled with noise and nonlinear texture variations, which limited their robustness and cross-sensor generalization.

Iris recognition has advanced significantly since the emergence of deep learning. Manual feature engineering is rapidly becoming unnecessary because Deep Neural Networks (DNNs) and Residual Networks (ResNets) can automatically learn hierarchical and highly discriminative features straight from raw iris images [4,11]. ResNets can achieve better accuracy and robustness by capturing both local and global iris textural patterns using residual skip connections and very deep convolutional blocks [16]. Compared with basic Convolutional Neural Networks (CNNs), their end-to-end architecture simplifies the procedure and produces better benchmark results [4,17]. Deep models, however, have several drawbacks: (1) they need large training datasets in order to generalize well [16,18]; (2) training on small iris datasets, which typically contain only five to ten samples per class, may result in overfitting and decreased accuracy [19]; (3) training and inference are computationally costly, which limits their use on devices with limited resources [20]; and (4) end-to-end systems may unintentionally capture or rely on irrelevant information such as scleral or eyelid features, while robust iris localization is still a challenge [21,22]. These limitations are particularly problematic for iris recognition because acquiring large-scale iris datasets is constrained by privacy regulations, collection costs, and the requirement for specialized near-infrared imaging equipment. Moreover, conventional data augmentation techniques, such as rotation, scaling, and brightness adjustment, merely increase sample quantity without fundamentally enriching the feature representation space. This approach fails to address the core challenge: how to extract maximally discriminative features from the limited available data while avoiding the capture of spurious, non-iris patterns.

The intricate, multiscale texture patterns of the iris present unique challenges for conventional CNNs. Although CNN-based approaches demonstrate strong performance in iris recognition when trained on large datasets, they face clear limitations in small-sample regimes due to: (1) localized receptive fields that may fail to capture long-range spatial dependencies between distant iris features [23], (2) fixed kernel sizes that constrain multiscale feature extraction [24], and (3) high sensitivity to limited training data [25]. These constraints are particularly critical in iris recognition, where large-scale data collection is restricted by privacy concerns and specialized acquisition requirements. Quantum-inspired transformations offer a mechanism for addressing these challenges [26]. Specifically, quanvolutional layers employ parameterized operations derived from principles of quantum superposition and entanglement to project classical image data into exponentially larger Hilbert spaces [27]. Superposition enables the simultaneous evaluation of multiple feature combinations, allowing the model to examine numerous texture patterns in parallel, a capability that becomes especially valuable in data-scarce scenarios, where exhaustive feature exploration is infeasible [28]. Additionally, entanglement-based transformations can encode long-range spatial dependencies and non-local correlations within iris textures that standard convolutions, constrained by limited receptive fields, cannot sufficiently represent [29]. These operations enrich the representational space by introducing additional degrees of freedom and cross-channel correlations without requiring quantum hardware [30,31]. Unlike data augmentation, which solely increases sample quantity, quanvolutional layers fundamentally expand the model’s representational capacity [30].
By projecting features into higher-dimensional spaces through parameterized quantum transformations, these layers facilitate the discovery of discriminative iris patterns that are beyond the expressive reach of classical convolutional filters [31]. This enhancement allows the network to focus more effectively on genuine iris texture information, reducing reliance on irrelevant scleral or eyelid features. Recent studies have reported that quanvolutional layers improve generalization and pattern separability [32,33], with increased robustness to noise and cross-dataset variability [28,29,34]. Despite these promising developments, the use of quantum-inspired techniques in iris recognition remains largely unexplored. Given the natural correspondence between the high-dimensional structure of iris textures and the expanded representational potential of quantum-inspired transformations, this research direction warrants systematic investigation.

By incorporating quanvolutional layers into residual blocks, the hybrid ResNet-18 framework proposed in this study (IRIS-QResNet) seeks to close that gap. Moreover, instead of relying on synthetic sample expansion, the proposed IRIS-QResNet directly improves feature richness by enriching the representational space of the input, rather than merely increasing its quantity. This strategy leverages quantum-inspired feature mixing to extract fine-grained iris textures without introducing augmentation-induced distortions, offering particular advantages in extremely small-sample regimes. From small and varied datasets, this integration enables efficient deep feature extraction with enhanced generalization, while preserving computational efficiency. The suggested approach aims for high accuracy and robustness even with small sample sizes per class, in contrast to standard deep models that primarily rely on data augmentation or require massive datasets. The contributions of this paper are threefold:

1. A hybrid iris recognition framework that supports both End-to-End and non-End-to-End modes and generalizes to a variety of datasets of different sizes, i.e., tiny, small, and mid-size.

2. A customized ResNet-18 architecture that can handle datasets with different dimensions and forms, including very small-sample regimes (e.g., five to ten images per class), without the need for data augmentation.

3. A methodical experimental examination of the quantum-inspired improvements of the proposed IRIS-QResNet model, which integrates quanvolutional layers and is assessed on four benchmark datasets, along with a comparative analysis to measure gains in accuracy, robustness, and loss reduction.

The remainder of the paper is organized as follows: Section 2 describes the background and related works. Section 3 introduces the proposed custom ResNet-18 model with the quanvolutional layer. Section 4 describes the experimental setup. Section 5 discusses the experiments conducted together with their results and comparative evaluations. Finally, conclusions are drawn in Section 6.

2. Background and Related Work

This section reviews the generations of iris recognition systems from basic handcrafted techniques to modern deep CNN and quantum-inspired models.

2.1. Handcrafted Iris Recognition Approaches

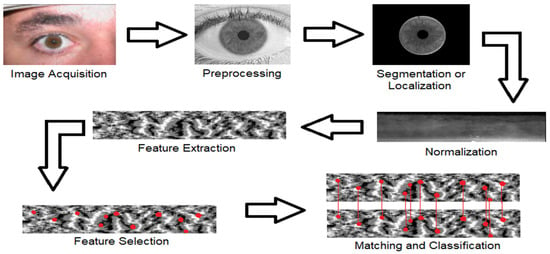

The history of iris recognition closely resembles the evolution of biometric systems generally. Due to the iris’s uniqueness, stability, and rich texture patterns, researchers have turned their attention to it as a significant modality for secure identity authentication in digital and governmental applications [35]. The early iris recognition pipelines [36,37,38], as shown in Figure 2, include iris image acquisition, preprocessing, segmentation, normalization, feature extraction, feature selection (represented as red dots in the figure), matching of the selected features, and classification of the recognized subjects. Since the accuracy of the system was directly influenced by the discriminative power of the extracted features, the feature extraction stage was the most important. Earlier systems employed handcrafted features based on mathematical transformations such as wavelet transforms [14], which provided multi-resolution analysis, and Gabor filters [13], which modeled texture frequency and orientation. Additionally, Local Binary Patterns (LBP) [12] offered straightforward yet useful micro-texture descriptors. Shallow classifiers, such as SVM or k-NN, were commonly used in conjunction with these features [15]. Because of their interpretability and computational efficiency, these methods performed well under controlled imaging conditions. Nevertheless, several restrictions surfaced [4,11]. Handcrafted features had a fixed descriptive power once they were designed, making them less adaptable to changing datasets or environmental conditions [39]. Their performance might drastically decline in unrestricted settings with noise, occlusions, or shifting lighting. Furthermore, these approaches typically operated in low-dimensional feature spaces, preventing them from capturing hierarchical and highly nonlinear information in iris textures.
Precise segmentation was also crucial for accuracy, where mistakes in boundary localization greatly reduced performance [4,11]. It was evident by the late 2000s that handcrafted pipelines lacked generalization capabilities despite being effective and interpretable. Deep learning approaches were made possible by the growing efforts to devise techniques that could automatically learn robust nonlinear representations.

Figure 2.

Overview of the handcrafted iris recognition pipeline. Key processing stages follow the approaches outlined in [36,37].

2.2. Deep CNN-Based Iris Recognition Approaches

Iris recognition was revolutionized by deep learning [40], which removed the need for manual techniques by enabling models to learn discriminative representations straight from data [41,42]. According to Yin et al. [4], CNNs became the most popular deep learning architecture because of their capacity to take advantage of spatial hierarchies in images. End-to-end CNN-based systems eliminate manual feature engineering: raw iris images are fed into the network, and an embedding or classification label is the output. Compared with handcrafted techniques, CNNs significantly decreased false acceptance and rejection rates [4,8]. Robustness in difficult situations was further enhanced by multimodal frameworks that combine iris data with other biometric modalities such as face or fingerprint [10], by transfer learning from networks pre-trained on sizable natural image datasets [18], and by hybrid CNN-transformer models that capture long-range dependencies [40]. ResNets are a well-known subclass of CNN architecture designed to address feature degradation and vanishing gradients in very deep networks. They are especially beneficial for improving feature learning and robustness because they maintain the hierarchical convolutional structure while adding skip connections. However, challenges persist despite these advantages: several iris datasets include only a few samples per subject, while CNNs require large datasets to generalize effectively. This often results in overfitting, where models memorize training data instead of acquiring transferable patterns. Data augmentation (e.g., rotation, scaling, and illumination changes) [16,18] can introduce artifacts and provides only limited improvement. Deep networks are also computationally demanding [4,11], and training and deployment require powerful GPUs [43].
End-to-end CNNs may also unintentionally pick up unrelated features (e.g., eyelid textures or scleral veins), damaging cross-dataset generalization [4]. In addition, iris localization is still challenging across a variety of sensors and settings [39,44]. Lack of data, high computational cost, poor localization, and degraded cross-sensor robustness are among the problems that highlight the need for alternative paradigms that preserve CNNs’ learning capabilities while enhancing efficacy and versatility. Quantum-inspired computing is a promising avenue to pursue.

2.3. Quantum-Inspired Image Classification Approaches and Remaining Gaps

Recent applications of quantum computing ideas to classical (non-quantum) machine learning problems have led to quantum-inspired methodologies. While true quantum computers are still in the Noisy Intermediate-Scale Quantum (NISQ) stage [45], quantum-inspired models use standard hardware to simulate quantum behavior [26,46]. Such models improve pattern separability without the use of quantum processors by leveraging high-dimensional feature mappings that are comparable to those of quantum systems. A quanvolutional (quantum convolutional) layer is a layer inspired by quantum computing concepts that is added to a DNN model to enhance it [33,47]. It splits an image into patches, encodes each patch as a quantum state, performs unitary transformations, and then projects the output back into classical space. By enriching feature representations, this process enables easier linear separation of intricate or overlapping patterns. Research on general image classification demonstrates that quanvolutional layers can improve robustness to noise and cross-domain variations, while improving generalization with fewer parameters [26,33,47]. Their compatibility with CNN architectures allows integration into current pipelines [33,48,49]. However, there is still much to learn about the applications of quantum-inspired techniques to biometric recognition, particularly iris recognition. Previous research has mostly focused on simple tasks such as classifying flowers or numbers [48,50]. Research on how quanvolutional layers interact with biometric problems [51,52,53], and with iris-specific problems such as small datasets, cross-sensor variability, or imprecise localization, is scarce; to the best of our knowledge, a few articles address biometrics, specifically face recognition, but none address the iris. Furthermore, little is known about how quantum-inspired encoding and data augmentation interact.
Basically, quantum-encoded data, which is transformed using a quantum transform such as the Quantum Fourier Transform (QFT), may be distorted by augmentation transformations, thereby reducing performance rather than increasing it [54]. The absence of cross-dataset evaluation in earlier research is another significant drawback. Because many experiments rely on a single dataset, it is challenging to evaluate generalization in real-world scenarios with numerous sensors and diverse populations.
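The patch-encode-transform-measure recipe described above can be illustrated with a small classical simulation. The following is a minimal sketch under stated assumptions, not the layer used in this study: the 2 × 2 patch size, the RY angle encoding, the chain of CNOT gates, and the Pauli-Z readout are illustrative choices, and all function names are hypothetical.

```python
import math

N_QUBITS = 4  # assumption: one qubit per pixel of a 2 x 2 patch


def apply_ry(state, qubit, theta):
    """Apply the rotation RY(theta) to one qubit of a real state vector."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    bit = 1 << qubit
    out = state[:]
    for i in range(len(state)):
        if not i & bit:              # index i has the qubit in |0>, j in |1>
            j = i | bit
            out[i] = c * state[i] - s * state[j]
            out[j] = s * state[i] + c * state[j]
    return out


def apply_cnot(state, control, target):
    """Flip the target qubit wherever the control qubit is |1>."""
    out = state[:]
    for i in range(len(state)):
        if i & (1 << control):
            out[i ^ (1 << target)] = state[i]
    return out


def expect_z(state, qubit):
    """Expectation value of Pauli-Z on one qubit (+1 for |0>, -1 for |1>)."""
    return sum(
        (a * a) * (-1.0 if i & (1 << qubit) else 1.0)
        for i, a in enumerate(state)
    )


def quanv_patch(patch):
    """Map four pixels in [0, 1] to four output features in [-1, 1]."""
    state = [0.0] * (2 ** N_QUBITS)
    state[0] = 1.0                                   # start in |0000>
    for q, pixel in enumerate(patch):
        state = apply_ry(state, q, math.pi * pixel)  # angle encoding of the pixel
    for q in range(N_QUBITS - 1):
        state = apply_cnot(state, q, q + 1)          # illustrative entangling chain
    return [expect_z(state, q) for q in range(N_QUBITS)]


def quanvolve(image):
    """Slide a non-overlapping 2 x 2 window over a 2-D list of pixels in [0, 1]."""
    rows, cols = len(image), len(image[0])
    return [
        [
            quanv_patch([image[r][c], image[r][c + 1],
                         image[r + 1][c], image[r + 1][c + 1]])
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


if __name__ == "__main__":
    # Made-up 4 x 4 image; the output is a 2 x 2 grid with 4 channels per cell.
    toy = [[0.0, 0.2, 0.4, 0.6],
           [0.1, 0.3, 0.5, 0.7],
           [0.9, 0.8, 0.7, 0.6],
           [0.5, 0.4, 0.3, 0.2]]
    for row in quanvolve(toy):
        print([[round(v, 3) for v in cell] for cell in row])
```

Because the gates here are fixed, this sketch behaves like a non-trainable feature map; a trainable variant would add parameterized rotations after the encoding step.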

To fill these research gaps, this study presents IRIS-QResNet as a customized ResNet-18 framework that incorporates quanvolutional layers into its residual blocks. The suggested hybrid architecture preserves compatibility with ResNet architecture while increasing feature extraction efficiency and generalization on augmentation-free small-sample data. Four different iris datasets are evaluated in fourteen experiments that directly contrast baseline and hybrid configurations. This study bridges the gap between CNN-based and quantum-inspired paradigms in biometric systems by being one of the first thorough examinations of quantum-inspired deep learning for iris recognition.

3. IRIS-QResNet: The Proposed Model

To address the aforementioned issues, we propose IRIS-QResNet, a system customized for iris recognition purposes that is enhanced with a quantum-inspired layer (also known as quanvolutional layer), as its name suggests. The core design of our proposed model (IRIS-QResNet) is the baseline model (IResNet), as we denote throughout the rest of this paper. Though it shares structural similarities with the standard ResNet-18, we customized it for iris biometrics to function well even on high-variation low-sample datasets, such as those with as few as five images per class. In contrast to standard ResNet-18 configurations tailored for extensive natural image datasets (e.g., ImageNet), IResNet integrates training and architectural adaptation to improve data efficiency and better suit the subtle intra-class variability and fine-grained textures found in iris patterns.

3.1. IResNet: The Baseline Model

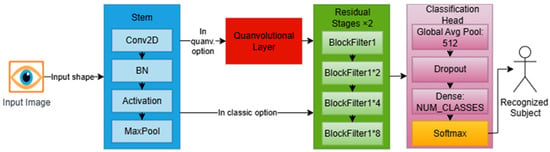

The conventional ResNet-18 architecture serves as the core of our baseline model (IResNet), which comprises three components customized for the iris recognition context and datasets. IResNet neither needs nor depends on data augmentation, making it a suitable core for the proposed IRIS-QResNet. Figure 3 illustrates the three components of IResNet: the stem block for handling input data, the residual stages, and the classification head. It also shows the quanvolutional layer that is added to IResNet to extend it into IRIS-QResNet.

Figure 3.

IRIS-QResNet conceptual model, showing where the quanvolutional layer is installed to extend our baseline IResNet model to the proposed quantum-inspired counterpart.

3.1.1. Stem Block for Input Handling

The stem block of IResNet converts the raw iris image into a compact and discriminative feature representation. It has been redesigned to work with a grayscale iris image I, with feature map x, as shown in Equation (1).

$$I \in \mathbb{R}^{H \times W \times 1} \tag{1}$$

where $\mathbb{R}$ is the set of real numbers, $H$ and $W$ are the spatial dimensions, and $1$ denotes the single grayscale channel. This stem block aims to suppress noise and illumination irregularities that are typical in iris datasets while performing early spatial abstraction, normalization, and nonlinear activation, thereby preserving low-level texture cues, such as furrows, crypts, and pigment variations. For each input iris image $I$, we use a compact 3 × 3 convolution kernel with a stride of two, Batch Normalization ($\mathrm{BN}$), and Swish activation ($\mathrm{Swish}$), followed by 3 × 3 Max-Pooling, instead of the original 7 × 7 convolution kernel found in standard ResNet-18. In addition to preserving computational efficiency, this modification lessens over-smoothing and enhances the preservation of micro-level iris structures, as illustrated in Equation (2):

$$x = \mathrm{MaxPool}_{3 \times 3}\Big(\mathrm{Swish}\big(\mathrm{BN}\big(\mathrm{Conv}_{3 \times 3,\, s=2}(I)\big)\big)\Big) \tag{2}$$

The Swish activation function is defined as follows in Equation (3):

$$\mathrm{Swish}(x) = x \cdot \sigma(x) = \frac{x}{1 + e^{-x}} \tag{3}$$

where $\sigma$ is the logistic sigmoid, and is preferred over ReLU for small and medium-sized datasets due to its smoother gradient flow and more reliable empirical convergence [55]. Swish (also known as SiLU, or Sigmoid-weighted Linear Unit) provides distinct advantages through improved gradient propagation. Unlike ReLU, which outputs zero for all negative inputs, Swish retains a non-zero derivative even for negative values, which Equation (4) expresses as

$$\frac{d}{dx}\,\mathrm{Swish}(x) = \sigma(x) + x\,\sigma(x)\big(1 - \sigma(x)\big) \tag{4}$$

allowing gradients to propagate backward through the network and mitigating vanishing gradient issues in deeper architectures [56]. Swish’s smooth, non-monotonic activation characteristics allow it to suppress large negative responses while selectively retaining small negative activations that may encode subtle discriminative cues [57]. This property is particularly advantageous in iris recognition, where fine-grained structures, such as crypts, furrows, and collarette variations, are often represented by low-magnitude activations that must be preserved to maintain discriminative power under limited-sample conditions. This lighter stem design preserves fine-grained iris texture details and lowers parameter count and overfitting risk in small datasets. Such capabilities are essential for precise recognition, particularly in datasets with few samples per class. By reducing the number of learnable parameters, the smaller kernel size also enhances model generalization in training scenarios with limited resources. The batch normalization momentum (0.9) and a lightweight configurable L2 regularization term were empirically chosen to stabilize convergence without suppressing subtle discriminative features. The stem block produces a 64-channel normalized feature map with improved local contrast and decreased spatial resolution. As inputs to later residual stages, these representations offer a favorable trade-off between computational efficiency and spatial detail. Overall, the customized stem block offers a minimalistic yet expressive front-end, designed to preserve iris texture in cross-sensor and small-sample cases. Given its smaller kernel size, Swish activation, and precisely calibrated yet configurable regularization, it is a crucial component of the model’s robustness and generalization ability.
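The difference between Swish and ReLU gradients for negative inputs can be checked numerically. The sketch below uses only the standard Swish/SiLU definition, x · sigmoid(x), and its analytical derivative; the function names are illustrative and not taken from the study's implementation.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def swish(x: float) -> float:
    """Swish / SiLU activation: x * sigmoid(x)."""
    return x * sigmoid(x)


def swish_grad(x: float) -> float:
    """Derivative of Swish: sigmoid(x) + x * sigmoid(x) * (1 - sigmoid(x))."""
    s = sigmoid(x)
    return s + x * s * (1.0 - s)


def relu_grad(x: float) -> float:
    """Derivative of ReLU (taken as 0 at x = 0)."""
    return 1.0 if x > 0 else 0.0


if __name__ == "__main__":
    # Compare both derivatives on a few negative and non-negative inputs.
    for x in (-3.0, -1.0, -0.1, 0.0, 1.0):
        print(f"x={x:+.1f}  swish={swish(x):+.4f}  "
              f"swish'={swish_grad(x):+.4f}  relu'={relu_grad(x):.1f}")
```

For the negative inputs listed, the ReLU derivative is exactly zero while the Swish derivative is small but non-zero, which is the property the paragraph above relies on.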

Kernel Standardization for Fair Comparison

While standard ResNet employs a 7 × 7 stem, the original IResNet configuration used heterogeneous kernel sizes distributed as 7, 3, 3, 3, 3, 1 across the stem and residual layers, with the quanvolutional layer using a 1 × 1 quantum measurement kernel. To ensure fair evaluation across multiple, diverse, and challenging iris datasets (e.g., MMU with eye-side information and UBIris) and to prevent dataset-specific biases, all convolutional kernels were standardized to 3 × 3 in both the customized baseline IResNet and the proposed IRIS-QResNet. Maintaining a larger 7 × 7 stem in the classical model would have granted a broader initial receptive field and introduced bias into performance comparisons, potentially inflating classical performance relative to the quantum-enhanced model. While some performance variations were observed with the original heterogeneous kernel distribution, the unified 3 × 3 configuration provides a consistent framework for evaluating the contribution of the quanvolutional layer. This choice was not introduced as an optimization strategy but to ensure receptive-field parity across architectures, thereby isolating the benefits of quantum-inspired enhancements rather than stem redesign.
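The effect of the stem kernel size on weight count can be verified with simple arithmetic. The sketch below is illustrative only: it assumes 'same' padding, a pooling stride of 2 (as in the standard ResNet-18 stem), no convolution bias, and a hypothetical 224 × 224 input, none of which are stated in the text.

```python
def conv_params(kernel: int, c_in: int, c_out: int, bias: bool = False) -> int:
    """Number of learnable weights in a square 2-D convolution."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def same_pad_out(size: int, stride: int) -> int:
    """Output length of a 'same'-padded conv or pooling layer: ceil(size / stride)."""
    return -(-size // stride)


def stem_summary(height: int, width: int, kernel: int) -> dict:
    """Shape and weight count of a conv(stride 2) -> BN -> Swish -> max-pool stem.

    Assumptions (not stated in the text): 'same' padding, a pooling stride
    of 2, and no convolution bias.
    """
    h = same_pad_out(same_pad_out(height, 2), 2)
    w = same_pad_out(same_pad_out(width, 2), 2)
    return {
        "output_shape": (h, w, 64),                  # 64-channel feature map
        "conv_weights": conv_params(kernel, 1, 64),  # grayscale input: 1 channel
    }


if __name__ == "__main__":
    # 224 x 224 is a hypothetical input size used only for illustration.
    print("3x3 stem:", stem_summary(224, 224, kernel=3))
    print("7x7 stem:", stem_summary(224, 224, kernel=7))
```

Under these assumptions the spatial output is identical for both kernels; only the weight count and the initial receptive field differ, which is why the kernel size is the variable being equalized.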

3.1.2. Residual Stages

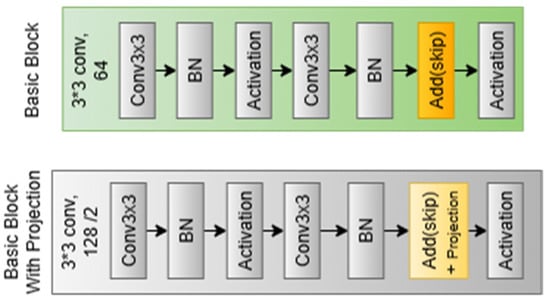

The core of the baseline model is made up of residual stages, which allow for deep representation learning of iris textures and hierarchical feature extraction. The receptive field gradually widens during these phases, enabling the model to represent local and global iris features, such as cornea patterns, furrows, and crypts. Even in situations with limited training data, the residual design ensures stable convergence by maintaining gradient flow and reducing degradation in deeper networks. In line with an 18-layer depth, the baseline model consists of four residual stages with filter sizes [64,128,256,512] and repeat configurations of [2,2,2,2]. Each step consists of stacked basic residual blocks, as shown in Figure 4, with two 3 × 3 convolutional layers in each block. Batch normalization and Swish activation are then applied. One block can be mathematically expressed as follows in Equation (5).

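$$y = \mathcal{F}(x) + \mathcal{S}(x) \tag{5}$$

where $\mathcal{F}(x)$ is the residual mapping formed by the two 3 × 3 convolutional layers with batch normalization and Swish activation, $\mathcal{S}(x)$ is the residual connection that, when required, aligns dimensions using a 3 × 3 projection, $x$ stands for the input tensor, i.e., the features produced by the previous block, and $y$ is the block output.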

Figure 4.

Building blocks of a single residual block: (top) the basic block and (bottom) the basic block with the projection process.

The residual stages of IResNet differ from those of the general ResNet implementation in several ways. First, Swish activation is used rather than ReLU because it produces smoother gradients, improving training stability and discrimination across minute changes in iris texture. Second, a lighter configurable regularization and a high batch normalization momentum (0.9) are used across the different datasets to reduce overfitting without compromising representational capabilities. Third, stride-2 convolutions are used for each downsampling operation between stages instead of pooling, which improves feature compactness for iris recognition and permits a more learnable spatial reduction. This combination provides a more favorable trade-off between robustness and model complexity.

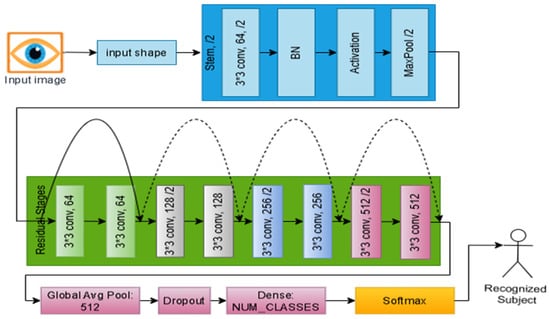

While deeper stages (256 and 512 filters) capture more abstract global discriminative structures, the early residual stages (64 and 128 filters) concentrate on fine-grained localized patterns within the iris texture. Following the last phase, a high-level feature tensor is created, which contains the macrostructural and microtextural data required for accurate identity representation. These customized residual stages make up a balanced hierarchical encoder that maintains computational efficiency while preserving discriminative iris information. Swish activations, optimized normalization parameters, and learnable downsampling procedures are integrated to guarantee the network’s resilience in noisy cross-sensor and limited-sample acquisition situations. Figure 5 shows a detailed workflow for IResNet, where each residual block maintains a direct skip connection between its input and output. A projection shortcut employing a 3 × 3 convolution and batch normalization aligns the dimensions when the input and output dimensions differ, ensuring correct residual addition. Without necessitating architectural changes, this mechanism maintains gradient flow across depth and stabilizes training.

Figure 5.

Detailed diagram of the IResNet baseline. Solid arrows represent the normal connections; dashed arrows illustrate the skip connections.
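The stage layout described in this subsection can be enumerated programmatically to see where projection shortcuts are needed and how the 18-layer depth arises. This is a sketch under one assumption that the text does not state explicitly: the first stage keeps the stem resolution (stride 1), and each later stage halves it with a stride-2 convolution in its first block. The dictionary keys and function names are illustrative.

```python
def build_schedule(filters=(64, 128, 256, 512), repeats=(2, 2, 2, 2), stem_channels=64):
    """List the basic residual blocks of an 18-layer ResNet-style encoder."""
    blocks, in_ch = [], stem_channels
    for stage, (out_ch, n) in enumerate(zip(filters, repeats), start=1):
        for i in range(n):
            # Assumed: only the first block of stages 2-4 downsamples.
            stride = 2 if (stage > 1 and i == 0) else 1
            blocks.append({"stage": stage, "in": in_ch, "out": out_ch, "stride": stride})
            in_ch = out_ch
    return blocks


def needs_projection(block) -> bool:
    """An identity shortcut only works when input and output shapes match."""
    return block["stride"] != 1 or block["in"] != block["out"]


def weighted_depth(blocks) -> int:
    """Stem conv + two convs per basic block + final dense layer.

    Projection convolutions are conventionally not counted in the depth.
    """
    return 1 + 2 * len(blocks) + 1


if __name__ == "__main__":
    schedule = build_schedule()
    for b in schedule:
        kind = "projection" if needs_projection(b) else "identity"
        print(f"stage {b['stage']}: {b['in']:>3} -> {b['out']:>3}, "
              f"stride {b['stride']}, {kind} shortcut")
    print("weighted layers:", weighted_depth(schedule))
```

Under this assumption, only the first block of the second, third, and fourth stages changes shape, so only those blocks require the projection shortcut.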

3.1.3. Regularization and Classification

This final part of the baseline IResNet architecture combines deep representations into discriminative identity decisions. It accomplishes two main goals: (1) enforcing structural regularity on the learned features to improve generalization when biometric data are limited and (2) converting spatial feature maps into a condensed class-probability representation appropriate for iris identity prediction. The network introduces a controlled regularization step prior to classification, selectively disrupting intermediate activations during training. This encourages the model to use distributed representations instead of memorized local features. Such regularization restricts co-adaptation between neurons, which helps prevent overfitting and improves the resilience of the extracted iris embeddings against changes in illumination, noise, and occlusions. Following spatial encoding through the convolutional and residual hierarchies, a global average pooling operation aggregates the multi-dimensional feature maps. This process efficiently converts the learned feature hierarchy into a condensed global descriptor of iris texture by compressing spatial dimensions while maintaining the most prominent activation responses. To guarantee that every sample is mapped to one of the predetermined identity classes, the pooled feature vector is subsequently passed through a fully connected (dense) layer with a SoftMax activation function that produces normalized class probabilities, as given by Equation (6):

$$P(y = i \mid x) = \frac{e^{z_i}}{\sum_{j=1}^{C} e^{z_j}} \tag{6}$$

where $P(y = i \mid x)$ represents the class probability distribution (i.e., SoftMax), $z_i$ is the final logit associated with the $i$th class, and $C$ is the number of iris classes (identities). The classification and regularization procedures ensure that the model preserves a balance between generalization and discriminative precision. The SoftMax-based classification layer converts the high-level learned features into identity probabilities that can be understood semantically, while regularization enforces smoother parameter landscapes and reduces overconfidence. By connecting high-level decision formation with low-level feature extraction, this combination completes the architectural pipeline. As the last interpretive link in the baseline model, this stage transforms learned spatial representations into categorical predictions while preserving the model’s stability and generalization. It also supports the robustness and dependability of the decision-making process in iris recognition by combining feature regularization with global pooling and probabilistic mapping.
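
As a small worked illustration of the SoftMax mapping in Equation (6), the following sketch converts a list of logits into class probabilities using only the Python standard library. The three logits are hypothetical values chosen for the example and are not taken from the experiments.

```python
import math

def softmax(logits):
    """Return normalized class probabilities for a list of logits."""
    # Subtracting the maximum logit avoids overflow and leaves the result unchanged.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for a toy problem with three identities.
logits = [2.0, 1.0, 0.1]
probs = softmax(logits)

# The predicted identity is the class with the highest probability.
predicted_class = max(range(len(probs)), key=probs.__getitem__)
```

The probabilities always sum to one, so the largest logit determines the predicted identity while the remaining mass indicates how confident that decision is.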

3.2. IRIS-QResNet: The Enhanced IResNet by a Quanvolutional Layer

Iris textures naturally display intricate nonlinear patterns in both radial and angular directions, which motivates a representation that can capture such patterns for iris recognition. CNNs frequently impose locality biases that may limit their capacity to capture subtle variations, whereas quanvolutional representations employ mixing dynamics and trigonometric embeddings to enhance feature diversity without the need for artificial data augmentation.

3.2.1. Quantum State from Classic Input

Prior to the quantum encoding process, the preparation phase for the classical-to-quantum state, i.e., the normalized state, can be formulated mathematically by Equation (7) as:

$$|\psi\rangle = \sum_{i=0}^{2^{n}-1} \alpha_i \, |i\rangle \tag{7}$$

In this case, $|\psi\rangle$ is the general pure state, $|i\rangle$ indicates the computational basis states of the qubits (i.e., $|0\rangle, |1\rangle, |2\rangle, \ldots, |2^{n}-1\rangle$), and $\alpha_i$ denotes the complex amplitude coefficients obtained from the output features of the previous step, usually represented in Equation (8) as:

which measures the ratio of the contribution of every computational basis state $|i\rangle$ to the total quantum state $|\psi\rangle$. Here $|\psi\rangle$, a “ket” in Dirac notation, is the quantum state vector of a system that consists of $n$ qubits, and the summation means that the state $|\psi\rangle$ is a superposition of basis states that runs over all possible basis states for the given value of $n$. This makes it possible for quantum computers to execute numerous computations at once. The amplitude coefficients must also satisfy the normalization condition expressed by Equation (9) as:

$$\sum_{i=0}^{2^{n}-1} |\alpha_i|^{2} = 1 \tag{9}$$

This equation ensures that the total probability of all measurement outcomes equals 1, where the squared magnitude $|\alpha_i|^{2}$ is the probability of measuring the basis state $|i\rangle$. With a probability of $|\alpha_i|^{2}$, the system collapses to the basis state $|i\rangle$. The essence of quantum states in superposition is encapsulated in Equation (9), which shows how quantum mechanics is probabilistic and how the coefficients affect measurement results.
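
The normalization condition in Equation (9) can be checked numerically with a short sketch. It assumes, purely for illustration, that amplitudes are obtained by dividing a real feature vector by its Euclidean norm; the function name `normalize_amplitudes` and the feature values are hypothetical.

```python
import math

def normalize_amplitudes(features):
    """Scale a feature vector so that its squared magnitudes sum to 1.

    Assumption for this sketch: amplitudes are the features divided by
    their Euclidean norm (one common amplitude-style normalization).
    """
    norm = math.sqrt(sum(abs(x) ** 2 for x in features))
    if norm == 0.0:
        raise ValueError("cannot normalize an all-zero feature vector")
    return [x / norm for x in features]

# Four values correspond to the basis states |0>, |1>, |2>, |3> of two qubits.
features = [3.0, 4.0, 0.0, 0.0]
amplitudes = normalize_amplitudes(features)

# Squared magnitudes are the measurement probabilities of each basis state.
probabilities = [abs(a) ** 2 for a in amplitudes]
```

In this toy case the probability mass is split between the first two basis states only, which makes the link between coefficients and measurement outcomes easy to see.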

3.2.2. The Proposed Quanvolutional Layer

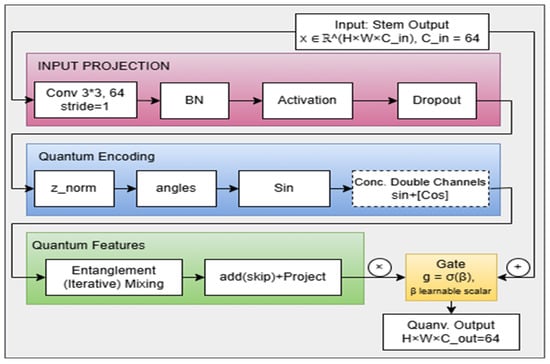

Figure 6 shows the detailed architecture of the quanvolutional layer that was abstracted and shown earlier as a red box in Figure 3. While alternative placements (e.g., before the stem or at multiple locations) are theoretically possible, this study specifically reports the results for the post-stem placement. The chosen position is motivated by conceptual and computational considerations, as described above, and provides a consistent framework for evaluating the impact of the quanvolutional layer. Systematic exploration of alternative placements will be considered in future work. The layer performs quantum-inspired feature encoding through sine–cosine transformations followed by convolutional mixing and gated residual integration. Its design ensures that enhanced features complement the baseline convolutional output without creating shape mismatches across residual paths. Formally, the layer starts by receiving an input feature map $x \in \mathbb{R}^{H \times W \times C}$, where $H \times W$ and $C$ represent the spatial dimensions and the number of channels, respectively (i.e., it is the exact output of the baseline stem block). The layer has a two-option pathway, depending on whether a projection is required, to avoid an output shape mismatch between the inner layers of the quanvolutional layer and the residual layers that receive its overall output.

Figure 6.

Detailed architecture of the proposed quanvolutional layer, shown as an abstracted red box in Figure 3.

Input Projection (Stem Transformation)

For the long path, where no projection is needed, the stem block of the quanvolutional layer consists of a 2D convolutional layer with a stride of $s = 1$, rather than 2 as in the stem of the baseline. It is followed by a batch normalization block and then by an activation block (Swish). The other difference from the baseline stem block is that a dropout block is used here instead of a max pooling block. As such, the stem block can be expressed in Equation (10) as:

$$z = \mathrm{Dropout}\Big(\mathrm{Swish}\big(\mathrm{BN}\big(\mathrm{Conv2D}_{s=1}(x)\big)\big)\Big) \tag{10}$$

The dropout is applied to encourage robust features, and it does not change the shape of the activation output. Thus, this block maintains spatial and channel dimensionality, ensuring that the output $z$ matches the required quantum channel count.

Quantum Encoding (Feature Normalization and Sinusoidal Mapping): Classic-to-Quantum Conversion

Each feature in $z$ is normalized using a bounded nonlinearity (tanh) to map the projected activations into a trigonometric embedding space inspired by quantum mechanics. This mechanism does not implement amplitude encoding, complex-valued rotations, or entangling gates. Instead, it provides a classical approximation of the rotational degrees of freedom common to single-qubit parameterizations, i.e., angles that are linearly scaled with structured trainable parameters. This normalization can be calculated using Equation (11):

where $z$ is the feature map from the previous layer (Equation (10)), the scaling constant is fixed at 0.75 in the implementation, and the result of the equation is a set of angles $\theta$ for trigonometric encoding. The trainable parameters are inspired by the angular update rules of qubit rotations but are implemented entirely with real-valued classical operations. The tanh function ensures that feature values are constrained to the range [−1, 1], preventing unbounded phase values and improving training stability. The encoded angles are then transformed into trigonometric feature representations. The sine channel is given in Equation (12) as:

$$Q_{\sin} = \sin(\theta) \tag{12}$$

where the representation can be further enhanced by concatenating an optional cosine channel, expressed by Equation (13) as:

$$Q_{\cos} = \cos(\theta) \tag{13}$$

This dual-channel encoding follows the Euler identity for $e^{i\theta}$, expressed in Equation (14) as:

$$e^{i\theta} = \cos(\theta) + i\,\sin(\theta) \tag{14}$$

enabling simulation of complex quantum amplitudes using real-valued tensors. The quantum feature tensor that results from the concatenation, in Equation (15), is:

$$Q(x) = Q_{\sin} \,\Vert\, Q_{\cos} \tag{15}$$

where $\Vert$ denotes the concatenation process. Using both channels doubles the dimensionality and produces a richer embedding that encodes phase-like geometry in feature space. Although no actual quantum states or complex amplitudes are formed, the paired trigonometric channels generate correlated variations that later layers can exploit in an “entanglement-like” manner.
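
The sine–cosine encoding can be sketched in a few lines of plain Python. The angle map used here, `theta = pi * SCALE * tanh(z)`, is an assumption made only for illustration: the text fixes the scaling constant at 0.75 and bounds activations with tanh, but the trainable parameters are omitted and the factor of pi is hypothetical. The function name `encode` is likewise illustrative.

```python
import math

SCALE = 0.75  # fixed scaling constant reported in the text

def encode(z_channels, use_cosine=True):
    """Map bounded activations to sine (and optionally cosine) channels.

    z_channels is a list of channels, each a flat list of activations.
    Assumed angle map (illustrative only): theta = pi * SCALE * tanh(z).
    """
    thetas = [[math.pi * SCALE * math.tanh(v) for v in ch] for ch in z_channels]
    sin_ch = [[math.sin(t) for t in ch] for ch in thetas]
    if not use_cosine:
        return sin_ch
    cos_ch = [[math.cos(t) for t in ch] for ch in thetas]
    # Channel-wise concatenation doubles the number of channels.
    return sin_ch + cos_ch

# Two toy channels with three activations each.
z = [[-2.0, 0.0, 0.5], [1.0, 3.0, -0.3]]
q = encode(z)
```

Each sine value and its paired cosine value lie on the unit circle, which is the real-valued counterpart of the unit-modulus complex number in the Euler identity.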

The sine–cosine encoding is employed as a trigonometric transformation that maps classical feature activations into a form compatible with quantum-inspired operations. Prior work has shown that encoding input vectors with sine-based angle transformations introduces nonlinearity and periodicity, allowing the model to capture circular interactions among feature components that mimic convolutional behavior in quantum circuits [58]. This encoding also provides a structured representation suitable for quantum-inspired mixing layers, where the entanglement-like operations can exploit correlations across channels. Such transformations have been used successfully even with limited data, demonstrating robustness under finite-sample conditions and enabling feature extraction that is compatible with real quantum computations [59]. Positioning the sine–cosine encoding before the entanglement-like mixing step ensures that the resulting features retain these properties and can encode multi-channel dependencies in a manner analogous to quantum feature embeddings.

Quantum Entanglement Feature Mixing

Following the trigonometric encoding, the tensor $Q(x)$ is processed according to Equation (16) by a sequence of convolutional mixing layers designed to capture cross-channel and spatial dependencies:

$$F^{(k)} = \mathrm{Swish}\Big(\mathrm{BN}\big(\mathrm{Conv}_{3 \times 3}\big(F^{(k-1)}\big)\big)\Big), \quad k = 1, \ldots, D, \qquad F^{(0)} = Q(x) \tag{16}$$

where $D$ is the depth parameter and $F^{(0)}$ is the initial quantum feature tensor. Each iteration applies a 3 × 3 convolution, batch normalization, and a Swish activation. In all experiments, we set $D = 2$, a value that balances computational efficiency and representational power. The complexity of the mixing stage scales as $O(D \cdot H \cdot W \cdot C_{\text{quantum}}^{2})$, where $C_{\text{quantum}}$ is the quantum channel count (128 when combining sine and cosine encodings from 64 base channels). With $D = 2$ in all experiments, the process remains computationally efficient while providing two nonlinear mixing layers to recombine trigonometric encodings both spatially and across channels.
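
To give a sense of scale for the mixing stage, the following sketch counts multiply-accumulate operations (MACs) for two 3 × 3 convolutions over 128 channels. It assumes a 40 × 30 post-stem feature map, stride 1 with "same" padding, and that each mixing layer maps 128 channels to 128 channels; batch normalization and activation costs are ignored. The helper name `conv_macs` is hypothetical.

```python
def conv_macs(height, width, c_in, c_out, kernel=3):
    """Multiply-accumulate count of one stride-1, same-padded 2D convolution."""
    return height * width * kernel * kernel * c_in * c_out

H, W = 30, 40        # assumed post-stem feature-map size for 160 x 120 inputs
C_QUANTUM = 128      # sine + cosine channels from 64 base channels
DEPTH = 2            # number of mixing layers

mixing_macs = DEPTH * conv_macs(H, W, C_QUANTUM, C_QUANTUM)
print(mixing_macs)   # 353894400
```

Under these assumptions the mixing stage costs roughly 354 million MACs per image, and doubling the channel count would quadruple that figure, which is the quadratic dependence on the channel count.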

Entanglement-like Feature Mixing and Iris Texture Representation

Convolving sine–cosine channels produces emergent relational properties distinct from standard convolutional processing. Because these channels encode coupled angular embeddings, their joint transformation yields composite responses that depend simultaneously on local spatial context and the encoded angular phase. This creates cross-dependent relationships analogous to entanglement, without invoking quantum hardware or quantum operations. The mixed sine–cosine channel interactions create non-separable feature responses, enabling the network to encode joint variations in iris texture rather than isolated sinusoidal components. Through multi-scale relational mixing, small convolutional kernels unify micro-structures (crypts, furrows, and radial fibers) into spatially organized descriptors that better capture identity-bearing configuration patterns. Leveraging the unit-circle geometry of sine–cosine pairs, the model represents deformation phenomena such as curvature changes or rotational symmetries as stable angular-phase shifts, yielding implicit rotation-aware behavior. Together, these properties reorganize iris patterns into relational and geometric manifolds, enhancing discriminability beyond intensity-driven representations.

Significance for Iris Recognition

Iris individuality is defined by the relative arrangement of fine micro-structures. The quanvolutional layer captures these arrangements by encoding them into a geometric feature space in which spatial distortions, pupil dilation, and mild rotations are naturally accommodated. Rather than merely refining local features, the mixing stage reinterprets texture elements as components of a coherent geometric pattern, supporting more robust recognition under real-world variations. The mixing operation functions analogously to entanglement, as it couples the activation channels and enables distant or disjoint iris micro-patterns, such as crypts, furrows, and radial streaks, to be represented jointly rather than independently. This coupling generates feature vectors whose components encode non-local correlations, allowing the network to capture relationships between iris structures that classical convolutions, constrained by localized receptive fields, typically fail to model. As a result, the model learns integrated descriptors of iris texture that remain stable under dilation, rotation, and partial occlusion.

Output Projection and Gated Residual Integration

Following the mixing stage, the enhanced tensor is projected back to the original feature dimensionality using a 3 × 3 convolution followed by batch normalization in Equation (17):

$$P = \mathrm{BN}\big(\mathrm{Conv}_{3 \times 3}\big(F^{(D)}\big)\big) \tag{17}$$

where $F^{(D)}$ denotes the output of the final mixing layer. This projected output is then fused with the residual pathway (the original input features) through a learnable gating mechanism that regulates the contribution of the enhanced features. Given $\alpha \in (0, 1)$, the final output of the quanvolutional layer is formulated by Equation (18) as:

where $R(x)$ is the residual branch. When the input and output channel dimensions differ, $R(x)$ is implemented as a 3 × 3 convolutional projection followed by batch normalization to match dimensions, as Equation (19) illustrates:

$$R(x) = \mathrm{BN}\big(\mathrm{Conv}_{3 \times 3}(x)\big) \tag{19}$$

In cases of dimensional agreement, the residual reduces to the identity mapping, expressed by Equation (20):

$$R(x) = x \tag{20}$$

Learnable Gate Parameter

The weighting coefficient, $\alpha \in (0, 1)$, is parameterized from a trainable scalar logit $a$ through a sigmoid activation in Equation (21) as:

$$\alpha = \sigma(a) = \frac{1}{1 + e^{-a}} \tag{21}$$

The logit is initialized to $a = 0$, which provides a stable balance at initialization ($\alpha = 0.5$) between the residual and enhanced pathways. It also offers flexibility and adaptability during training, as $a$ is updated via backpropagation, allowing the model to learn how strongly the enhanced features should influence the final representation. A learnable gate adaptively fuses the baseline and quantum-inspired pathways, preserving balanced gradient flow and preventing premature dominance of a single source. Empirical gate values remaining within the 0.3–0.7 range indicate a consistent, moderate contribution from the quantum pathway, enhancing interpretability while ensuring that quantum-inspired features complement rather than override standard convolutional processing.
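
The behavior of the sigmoid gate can be illustrated with a small sketch. The sigmoid parameterization and the zero initialization follow the text, but the fusion rule in `gated_fusion` is an assumption: it uses a convex blend of the enhanced and residual features as one plausible reading, and should not be taken as the exact formulation used in the model. All function names are hypothetical.

```python
import math

def sigmoid(a):
    """Logistic function mapping a real-valued logit to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-a))

def logit(p):
    """Inverse of the sigmoid, defined for 0 < p < 1."""
    return math.log(p / (1.0 - p))

def gated_fusion(enhanced, residual, gate_logit):
    """Blend two feature vectors with a scalar gate.

    Assumed fusion rule (illustrative only):
    output = alpha * enhanced + (1 - alpha) * residual.
    """
    alpha = sigmoid(gate_logit)
    return [alpha * e + (1.0 - alpha) * r for e, r in zip(enhanced, residual)]

# A zero logit gives alpha = 0.5, i.e. an equal mix at initialization.
initial_alpha = sigmoid(0.0)
mixed = gated_fusion([2.0, 4.0], [0.0, 0.0], gate_logit=0.0)

# Gate values of 0.3 and 0.7 correspond to logits of about -0.85 and +0.85.
low_logit, high_logit = logit(0.3), logit(0.7)
```

Because the reported gate values of 0.3 to 0.7 map to logits within about ±0.85 of the initial value, the gate stays in the near-linear region of the sigmoid, where its gradient is largest.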

3.2.3. From Quantum Formalism to Classical Implementation

The quantum state formalism presented in Equations (7)–(9) serves as a conceptual foundation for the design of our quanvolutional layer. While these equations describe the structure of true quantum states, characterized by complex amplitudes, phase rotations, and normalization constraints, the implementation used in our model is entirely classical. Accordingly, the formalism is not executed on quantum hardware and does not involve complex-valued computation, unitary transformations, or amplitude encoding in the strict quantum-mechanical sense. Instead, the formal framework motivates a set of classical transformations designed to approximate certain representational properties of quantum systems while remaining fully compatible with convolutional neural networks. The trigonometric encoding uses Euler’s identity to map each angle parameter $\theta$ into paired $\cos(\theta)$ and $\sin(\theta)$ channels, forming a real-valued analog of complex amplitudes that fits naturally into standard CNNs. This two-channel structure provides a superposition-like embedding, where information is expressed through angular variation rather than intensity, yielding richer multicomponent features. A tanh-based normalization keeps angles bounded, stabilizing training by preventing divergence and maintaining consistent phase representations. The method remains strictly quantum-inspired, as all operations (real-valued activations, deterministic inference, and classical convolutions) are executed on conventional hardware. Although classical, the approach parallels prior quantum-inspired models by leveraging geometric properties of parameterized quantum states, and its sine–cosine channels, phase transformations, and cross-channel mixing serve as practical analogs of complex amplitudes, phase rotations, and entanglement-like correlations.

4. Experimental Setup

As we intend to compare the performance of both our models, the baseline IResNet and the enhanced IRIS-QResNet, we need to specify the experimental setups for evaluating their performance in identification and authentication.

4.1. Datasets and Preprocessing

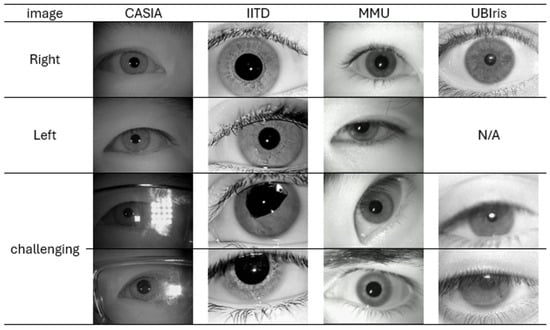

We use well-known, pre-acquired benchmark datasets to evaluate our proposed model, including CASIA-Iris-Thousand, IITD Database, MMU-Iris-Database v1, and UBIRIS_v1. The Institute of Automation, Chinese Academy of Sciences (CASIA), has released a group of datasets. CASIA-Iris-Thousand [60] is a dataset that includes 20,000 images of 1000 subjects. In this research, we use only 5 images per eye side (right/left), i.e., 10 per subject, from the first 800 subjects, for a total of 8000 images. Kumar and Passi from the Indian Institute of Technology, Delhi (IITD) [61] collected iris images of 224 subjects, 10 per subject and 5 per eye side. Although this dataset has 2240 images, the first 13 people have images of only the left eye, resulting in only 2175 images for side-specific iris recognition. The Malaysian Multimedia University (MMU) [62] collected iris images from 45 subjects, 10 per subject and 5 per eye side (right/left). The University of Beira Interior (UBI), Covilhã, Portugal, created the first version of the UBIris dataset [63] to obtain a noisy dataset with blurred, unfocused, and rotated images, in which the eyes may also be closed or show light reflections in addition to eyelid and eyelash obstructions. A total of 1214 images of a single eye per subject were acquired from 241 subjects. We use only 1205 images, from subjects who have 5 images.

Figure 7 presents examples of eye images from each of the four datasets. The figure includes samples of both the right and left eye and illustrates the distinct challenges associated with each dataset. In the CASIA dataset, reflections from glasses and variations in lighting conditions represent major issues. Additionally, the iris often appears smaller due to the greater camera distance compared to other datasets. The IITD dataset includes images of eyes affected by surgeries or medical conditions, which can change the overall shape and texture of the iris. The MMU dataset contains images where the eyes are not looking straight ahead and where different illumination wavelengths make the same iris appear as two concentric circles instead of one. UBIris has blurry and fatigued eyes, which can make the pupil appear distorted or illusory. These challenges typically necessitate preprocessing steps before further analysis to ensure fair model evaluation. Therefore, as the four datasets differ in size or color mode, we standardized all images to grayscale with OpenCV and resized them to 160 × 120 to maintain consistent input dimensionality across datasets while preserving texture details. After that, we evaluated two experimental configurations to assess model robustness: the End-to-End and Non-End-to-End approaches. In the End-to-End approach, raw 160 × 120 grayscale images (after resizing only, with no segmentation applied) are fed directly into the network. The model is therefore required to implicitly address occlusions, reflections, and iris localization through its convolutional layers. This setup serves to evaluate the model’s robustness under real-world, unconstrained imaging conditions.
On the other hand, to apply the Non-End-to-End approach, pupil and iris boundaries were localized using coordinates stored in Excel files, obtained through a semi-automated process in which automated detection was applied first and manual correction was performed only when required. Automated localization used median filtering (5 × 5) followed by the Circular Hough Transform for pupil detection, and iris radii were estimated geometrically by adding dataset-calibrated constants in {15, 20, 25, 50, 75} to the pupil radius. Results were validated by enforcing anatomical constraints (e.g., the pupil radius is less than the iris radius, and the center difference is less than 10 pixels). Automated detection errors (8.4% CASIA, 2.5% IITD, 3.6% MMU, 6.5% UBIris) were corrected through a standardized interface in which overlays were displayed, operators verified accuracy, and incorrect circles were replaced by manual detection of the pupil center and one iris-boundary point; corrected coordinates were saved to Excel and used directly in all subsequent processing. Operators were blinded to subject identity, and verification was completed before train/test splitting to prevent bias. Using the verified coordinates, pupil and iris circles were drawn with the OpenCV circle function, and a binary mask was generated by filling the iris boundary in white and the pupil in black, retaining the iris annulus via the OpenCV bitwise AND operation. Segmented regions were normalized using Daugman’s rubber sheet model with 80 radial and 320 angular samples, mapping each angle ($\theta$) to Cartesian coordinates as expressed by Equation (22):

$$x(r, \theta) = x_p + r\cos(\theta), \qquad y(r, \theta) = y_p + r\sin(\theta) \tag{22}$$

where $x_p$ and $y_p$ are the coordinates of the pupil center and $r$ is the sampling radius between the pupil and iris boundaries, yielding 80 × 320 normalized iris images. Eyelid and eyelash occlusions were removed by processing each half of the normalized image using intensity inversion, thresholding (T = 0.9), morphological erosion, removal of small components (<10 pixels), and parabolic fitting to the upper occlusion boundary, with pixels above the fitted curve replaced by the mean iris intensity before recombining both halves. Optional enhancement variants included median filtering (5 × 5) and CLAHE (clipLimit = 5.0, tileGrid = 8 × 8) [64]. A summary of the resulting images per class and dataset is shown in Table 1, where there are seven groups of images that were used to train, validate, and then test the models. Both models were tested in the Non-End-to-End and End-to-End approaches using the seven dataset groups for each, which led to the 14 main experiments outlined in Table 2. Every main experiment has two sub-experiments: one with the baseline and the other with the enhanced model. This results in 28 experiments.
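
The polar sampling behind the rubber sheet normalization can be sketched as follows. The sketch generates the 80 × 320 grid of Cartesian sampling coordinates and assumes, for simplicity, that the pupil and iris circles share one center and that the radius moves linearly from the pupil boundary to the iris boundary. The function name and the example center and radii are hypothetical.

```python
import math

def rubber_sheet_coords(xp, yp, r_pupil, r_iris, n_radial=80, n_angular=320):
    """Return an n_radial x n_angular grid of (x, y) sampling coordinates.

    Simplifying assumption: pupil and iris circles are concentric at (xp, yp).
    """
    grid = []
    for i in range(n_radial):
        # Radius moves linearly from the pupil boundary to the iris boundary.
        r = r_pupil + (r_iris - r_pupil) * i / (n_radial - 1)
        row = []
        for j in range(n_angular):
            theta = 2.0 * math.pi * j / n_angular
            row.append((xp + r * math.cos(theta), yp + r * math.sin(theta)))
        grid.append(row)
    return grid

# Hypothetical pupil center and radii, in pixels.
coords = rubber_sheet_coords(xp=80.0, yp=60.0, r_pupil=30.0, r_iris=75.0)
```

Sampling the image intensity at each coordinate (for example with nearest-neighbor or bilinear interpolation) unwraps the iris annulus into a rectangle with 80 rows and 320 columns.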

Figure 7.

Example samples from the four datasets, showing right and left eyes and some of the associated challenges.

Table 1.

Summary of the images and classes used per dataset, subject, and eye side (right/left).

Table 2.

14 iris recognition experiments across datasets using cropped iris and full eye approaches.

4.2. Setting Parameters

As with any recognition model, we need to set up parameters that help the model perform its tasks as efficiently as possible. However, because we compare the performance of the baseline with that of the enhanced model, these parameters must be set carefully to avoid bias: they take the same values for both the baseline and the enhanced model within the same experiment. In this subsection, we discuss two types of parameters:

4.2.1. Dataset-Customizable Parameters

As listed in Table 3, the hyperparameters were adjusted according to the characteristics of each dataset to ensure stable and effective optimization while maintaining fair comparability between the baseline IResNet and the enhanced IRIS-QResNet. Although the overall optimization strategy remained unchanged, dataset size and complexity required modifying specific training parameters. Smaller datasets, such as CASIA, UBIris, and MMU, necessitated stronger regularization (higher L2 weight decay and dropout rates) to mitigate overfitting, whereas the moderate-sized IITD dataset enabled longer training with reduced regularization. The batch size was fixed at 16 for all datasets except IITD, where a larger batch size yielded smoother gradient updates. Learning rates were selected empirically per dataset: CASIA/UBIris (2 × 10⁻⁴), IITD (3 × 10⁻⁴), and MMU (4 × 10⁻⁴), reflecting the inverse relationship between dataset size and the need for gradient exploration in small-sample regimes. A ReduceLROnPlateau scheduler (patience = 6, factor = 0.5) further stabilized convergence. To ensure a fair comparison, the baseline and quantum-enhanced models always used identical hyperparameters within each experiment. During development, certain challenging conditions, particularly the MMU End-to-End (eye-side) setting, required repeated stabilization. To avoid over-specializing hyperparameters to a single subset, a consistent selection criterion was adopted: if at least two of the four groups of a dataset achieved ≥90% accuracy under a particular configuration, that same configuration was applied to the remaining groups, even when their accuracies differed. This strategy preserved reproducibility and stability across heterogeneous datasets while preventing parameter tuning from artificially inflating the observed benefit of the quanvolutional layer.
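
The effect of the plateau-based scheduler can be illustrated with a simplified, standard-library emulation. It mirrors the reported settings (patience = 6, factor = 0.5) but omits options of the Keras callback such as `min_delta`, `cooldown`, and `min_lr`; the function name and the validation-loss sequence are hypothetical.

```python
def simulate_plateau_schedule(val_losses, lr, patience=6, factor=0.5):
    """Return the learning rate in effect after each epoch.

    Simplified emulation: the rate is multiplied by `factor` once the
    validation loss has failed to improve for `patience` consecutive epochs.
    """
    best = float("inf")
    wait = 0
    history = []
    for loss in val_losses:
        if loss < best:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                lr *= factor
                wait = 0
        history.append(lr)
    return history

# Hypothetical run: two improving epochs followed by six stagnant ones.
losses = [1.0, 0.9] + [0.9] * 6
lr_history = simulate_plateau_schedule(losses, lr=2e-4)
```

In this toy run the rate stays at 2 × 10⁻⁴ until the sixth stagnant epoch, at which point it is halved.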

Table 3.

Dataset-customizable parameter settings for best iris recognition performance per dataset.

L2 regularization was applied specifically to convolutional and dense kernels using the kernel_regularizer parameter in TensorFlow/Keras, while batch normalization parameters were excluded to avoid constraining scale and shift statistics. The regularization strength was dataset-dependent, differing between small datasets (CASIA, UBIris) and medium-scale datasets (IITD), and was complemented by weight decay through AdamW ranging from 1 × 10⁻⁶ for small datasets to 5 × 10⁻⁵ for larger datasets. This decoupled implementation prevents redundant penalization during parameter updates and preserves discriminative iris textures essential for recognition performance. To ensure reproducibility, gallery and probe sets were constructed so that no image appeared in both. For identification experiments, a single reference image per eye per subject formed the gallery, while the remaining images comprised the probe set. Authentication experiments used genuine scores computed between corresponding probe and gallery images, while impostor scores were obtained by comparing probe images with non-matching gallery entries. Authentication thresholds were determined per dataset by optimizing the Equal Error Rate (EER) on a validation subset prior to testing. For IRIS-QResNet, classical feature maps extracted by the stem block were converted to angle-valued features via the trigonometric encoding of Section 3.2.2. The tanh-normalized activations were scaled into rotation-like angles, and the resulting sine–cosine channels were recombined by the convolutional mixing layers, which act as a classical stand-in for parameterized quantum circuits. The output was then projected back to the baseline feature dimensionality, enabling seamless integration with subsequent convolutional layers while preserving gradient flow.
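
Threshold selection at the Equal Error Rate can be sketched as a sweep over candidate thresholds. The sketch assumes similarity scores (higher means more likely the same identity) and picks the threshold at which the false acceptance and false rejection rates are closest; the function name and the toy scores are hypothetical and do not come from the experiments.

```python
def equal_error_rate(genuine, impostor):
    """Return (eer, threshold) for similarity scores.

    A comparison is accepted when its score is >= threshold.
    """
    best_gap, eer, best_thr = float("inf"), None, None
    for thr in sorted(set(genuine) | set(impostor)):
        frr = sum(s < thr for s in genuine) / len(genuine)      # genuine pairs rejected
        far = sum(s >= thr for s in impostor) / len(impostor)   # impostor pairs accepted
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer, best_thr = gap, (far + frr) / 2.0, thr
    return eer, best_thr

# Toy validation scores with one overlapping genuine/impostor pair.
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.1, 0.2, 0.3, 0.6]
eer, threshold = equal_error_rate(genuine_scores, impostor_scores)
```

For these toy scores the two error rates meet at 25%, with the threshold at 0.6; on real data the same sweep would be run on the validation subset only, and the resulting threshold then applied unchanged to the test set.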

4.2.2. Common Parameters

Common parameters are set with the same values for both models in all experiments:

- Optimization: Both models were trained using identical optimization settings to ensure strict comparability. The AdamW optimizer, which combines Adam’s adaptive moment estimation with decoupled weight decay, was used throughout all experiments. Parameter updates follow Equation (23):

  $$\theta_{t+1} = \theta_t - \eta\left(\frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon} + \lambda\,\theta_t\right) \tag{23}$$

  where $\theta_t$ represents the vector of all trainable parameters (i.e., weights and biases) at time $t$, $\eta$ represents the learning rate that rescales the updates, $\hat{m}_t$ and $\hat{v}_t$ are the bias-corrected first and second moment estimates, $\epsilon$ is a numerical stability constant, and $\lambda$ is the weight decay coefficient. Using the same optimizer configuration isolates the effect of the quantum-inspired modules and ensures that both architectures are optimized under identical dynamics. During training, we monitored gradient norms, validation-loss evolution, and weight-update magnitudes for both IResNet and IRIS-QResNet. In all experiments, the optimizer produced smooth, monotonic decreases in validation loss without oscillations, divergence, or abrupt spikes. Importantly, the quantum-inspired blocks did not introduce additional gradient instability beyond what is typical for residual CNNs, confirming that the shared AdamW configuration yields stable and well-behaved convergence for both networks.

- Regularization, weight decay, and loss function: Regularization strategies were applied consistently across both architectures. Dropout was used as described in Equation (10), and the total training loss was defined by Equation (24):

  $$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{CE}} + \lambda\,\lVert\theta\rVert_2^2 \tag{24}$$

  where $\mathcal{L}_{\text{total}}$ is the total loss that the model aims to decrease and $\mathcal{L}_{\text{CE}}$ is the sparse categorical cross-entropy loss, which can be computed in Equation (25) as follows:

  $$\mathcal{L}_{\text{CE}} = -\sum_{i=1}^{N} y_i \log(\hat{y}_i) \tag{25}$$

  where $y_i$ is the true label, $\hat{y}_i$ is the predicted probability, and $N$ is the number of classes. The term $\lambda\,\lVert\theta\rVert_2^2$ is a regularization element, specifically an L2 penalty, also known as weight decay. Here, to prevent overfitting, the regularization strength, represented by $\lambda$, controls the trade-off between fitting the data and maintaining small model weights, promoting simpler models that perform better when applied to unseen data. $\theta$ denotes the parameters or weights of the model, and $\lVert\theta\rVert_2^2$ represents the squared L2 norm of these parameters, which can be written out as in Equation (26):

  $$\lVert\theta\rVert_2^2 = \sum_{j} \theta_j^2 \tag{26}$$

  This formulation helps prevent overfitting by constraining parameter magnitudes, promoting better generalization to unseen iris samples.

- Gradient flow optimization: Residual connections play a central role in stabilizing gradient propagation, enabling both models to learn discriminative features without the need for heavy data augmentation. The gradient flow through each residual block is given by Equation (27):

  $$\frac{\partial \mathcal{L}}{\partial x_l} = \frac{\partial \mathcal{L}}{\partial x_{l+1}}\left(1 + \frac{\partial F(x_l)}{\partial x_l}\right) \tag{27}$$

  where $\partial \mathcal{L} / \partial x_l$ stands for the loss gradient with respect to the input of layer $l$, $\partial \mathcal{L} / \partial x_{l+1}$ is the gradient propagated from the next layer with respect to the output $x_{l+1}$, and $\partial F(x_l) / \partial x_l$ is the derivative of the residual function $F$ (typically the convolutional output excluding the skip connection) with respect to $x_l$. It illustrates how variations in the input to the residual blocks affect their outputs. The factor $1 + \partial F(x_l) / \partial x_l$ expresses the combined effect of the identity mapping and the residual function. The value 1 denotes that the gradient flow via the skip connection remains intact, while $\partial F(x_l) / \partial x_l$ reflects the extra gradient contribution brought about by the transformations of the residual function. The skip connection provides a direct path along which the gradient from the next layer is passed on, even when the residual branch contributes only a small derivative. This mechanism is especially important for IRIS-QResNet, where the quantum-inspired gated pathway introduces additional nonlinear transformations. The preserved identity component helps prevent vanishing gradients even when gating attenuates the residual branch, allowing AdamW (Equation (23)) to maintain stable updates throughout both architectures.

- Feature space regularization: Implicit regularization is further supported by batch normalization and dropout, which reduce sensitivity to variations in iris appearance. The architecture itself provides a form of multi-resolution analysis, extracting features at various spatial resolutions through progressive downsampling and channel expansion, which enables effective feature extraction without dependence on complex or artificial augmentation schemes. ResNet-18 remains one of the most reliable baselines for iris recognition due to its theoretical underpinnings and its proven balance of representational strength and computational efficiency.
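
The optimizer update and the regularized loss described in the list above can be sketched with plain Python lists. The learning rate (2 × 10⁻⁴) and weight decay (1 × 10⁻⁶) are taken from the reported small-dataset settings, while `beta1`, `beta2`, and `eps` are common defaults assumed here rather than values stated in the text; both function names are hypothetical.

```python
import math

def adamw_step(theta, grad, m, v, t, lr=2e-4, beta1=0.9, beta2=0.999,
               eps=1e-7, weight_decay=1e-6):
    """One AdamW update with decoupled weight decay on lists of floats.

    beta1, beta2 and eps are assumed defaults, not values from the text.
    """
    new_theta, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(theta, grad, m, v):
        m_i = beta1 * m_i + (1.0 - beta1) * g          # first moment
        v_i = beta2 * v_i + (1.0 - beta2) * g * g      # second moment
        m_hat = m_i / (1.0 - beta1 ** t)               # bias correction
        v_hat = v_i / (1.0 - beta2 ** t)
        p = p - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * p)
        new_theta.append(p)
        new_m.append(m_i)
        new_v.append(v_i)
    return new_theta, new_m, new_v

def total_loss(probs, true_index, theta, lam):
    """Sparse categorical cross-entropy plus an L2 penalty on the weights."""
    cross_entropy = -math.log(probs[true_index])
    l2_penalty = sum(p * p for p in theta)
    return cross_entropy + lam * l2_penalty

# First update (t = 1) of a single weight with a positive gradient.
theta1, m1, v1 = adamw_step([1.0], [0.5], [0.0], [0.0], t=1)
loss = total_loss([0.5, 0.5], 0, [1.0, 2.0], lam=0.1)
```

On the first step the bias-corrected ratio is close to 1 regardless of the gradient magnitude, so the weight moves by roughly one learning rate, plus the small decoupled decay term.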

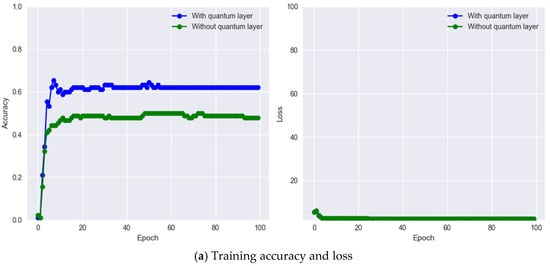

To confirm that the presence of gated quantum-inspired residual paths did not alter optimization behavior, we explicitly measured convergence characteristics across all training runs. While AdamW can theoretically behave differently when encountering nonlinear gates and weight-sampling noise, both IResNet and IRIS-QResNet demonstrated stable and monotonic loss reduction. Layer-wise gradient norms consistently fell within the range 0.3–1.7, and weight-update magnitudes remained stable across epochs. No oscillatory behavior, sudden spikes, or divergence, phenomena sometimes associated with gated residual networks, were observed. Representative convergence curves and gradient-norm trajectories are provided in the next section to document optimizer stability and ensure full reproducibility.

All convolutional kernels, across the stem, residual blocks, and post-quanvolutional layers, were fixed to 3 × 3 to ensure fair architectural comparison. The quanvolutional layer was inserted immediately after the stem, where the input is reduced to ~40 × 30 or 80 × 20 while retaining key iris structures such as edges, gradients, crypts, furrows, and collarette patterns. Introducing the quantum-inspired transformation at this stage enriches these foundational descriptors before they propagate through deeper residual blocks. This early placement also ensures computational efficiency: each spatial dimension is already reduced by ~75%, and the channel depth remains manageable (64 channels), avoiding the steep cost growth that would arise in later layers, since the cost of each 3 × 3 convolution scales as $O(H \cdot W \cdot C^{2})$ for an $H \times W$ feature map with $C$ channels. Positioning the layer early provides stable gradient flow to all quantum parameters, supported by AdamW, residual connections, L2 regularization, and dropout, yielding reliable convergence on CASIA, UBIris, IITD, and MMU without divergence. Since iris identity is encoded in fine-grained multiscale textures, quantum enhancement at the low-to-intermediate feature level offers the greatest discriminative gain, while deeper layers contribute diminishing returns. For small datasets (5–10 images per class), a single post-stem quantum layer increases representational richness without significant parameter growth, avoiding the overfitting risk associated with stacking multiple quantum layers.
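
The post-stem feature-map sizes quoted above follow from simple shape arithmetic, sketched below. The sketch assumes that the baseline stem reduces each spatial dimension by a total factor of 4 (a stride-2 convolution followed by pooling); the function name is hypothetical.

```python
def stem_output_shape(width, height, total_stride=4):
    """Spatial size after the stem, assuming an overall downsampling factor of 4."""
    return width // total_stride, height // total_stride

end_to_end = stem_output_shape(160, 120)      # full-eye images
non_end_to_end = stem_output_shape(320, 80)   # normalized iris strips

# Fraction by which each spatial dimension shrinks.
per_dimension_reduction = 1.0 - end_to_end[0] / 160

# Sine and cosine channels double the 64 base channels.
base_channels = 64
quantum_channels = 2 * base_channels
```

Under this assumption the End-to-End and Non-End-to-End inputs give 40 × 30 and 80 × 20 maps, respectively, each dimension shrinks by 75%, and the encoded tensor carries 128 channels.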

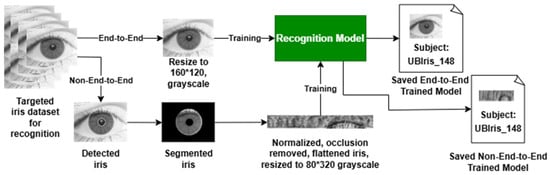

4.3. End-to-End and Non-End-to-End Setups

The main purpose of the proposed IRIS-QResNet is to provide enhanced quantum-inspired capabilities for iris recognition in either an End-to-End or a Non-End-to-End mode, as shown in Figure 8. The model therefore handles images of two different sizes. In the End-to-End approach, we read the full eye image, and the only preprocessing is conversion to grayscale. Because image dimensions vary across datasets, we resize all images to a width (W) of 160 and a height (H) of 120. No further preprocessing is required, as the model is expected to select the most informative pixels on its own. In the Non-End-to-End approach, by contrast, we apply comprehensive preprocessing so that only the cropped iris with the fewest occlusions and anomalies is used for training. This preprocessing localizes the iris region of interest, segments it, normalizes it into a rectangular pattern, removes occlusions and anomalies as far as possible, and finally resizes it to W: 320 and H: 80. In both approaches, the resulting data are used to train the model, and once training completes successfully, the model is saved for later use in biometric recognition tasks.

Furthermore, since our datasets contain entirely distinct subjects, cross-dataset evaluation on the same identities is not feasible. To ensure rigorous testing, we divided each dataset into training, validation, and testing subsets, guaranteeing that at least one image per subject was excluded from training. The model was trained using model.fit on the training set, validated during training on the validation set, and evaluated on the held-out testing set. The reported accuracies therefore reflect performance on images never seen during training, demonstrating the model’s ability to generalize within the same dataset. We also did not perform cross-evaluation between End-to-End and Non-End-to-End configurations within the same dataset (e.g., testing Non-End-to-End models on End-to-End images and vice versa), because the two configurations use different input dimensions and a model trained on one cannot accept images from the other. Therefore, evaluating the models on unseen data within the same experimental setup was the most appropriate and feasible approach for this study.
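The per-subject split described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name, the choice of one validation and one test image per subject, and the toy image identifiers are all assumptions, since the text only states that at least one image per subject was excluded from training.

```python
import random
from collections import defaultdict


def split_per_subject(samples, val_count=1, test_count=1, seed=0):
    """Split (image_id, subject_id) pairs so every subject keeps held-out images.

    val_count and test_count are hypothetical defaults; the paper only
    guarantees that at least one image per subject is excluded from training.
    """
    rng = random.Random(seed)
    by_subject = defaultdict(list)
    for image_id, subject_id in samples:
        by_subject[subject_id].append(image_id)

    train, val, test = [], [], []
    for subject_id, images in sorted(by_subject.items()):
        if len(images) < val_count + test_count + 1:
            raise ValueError(f"subject {subject_id} has too few images to split")
        images = images[:]          # copy so the caller's data is not reordered
        rng.shuffle(images)
        held_out = test_count + val_count
        test += [(i, subject_id) for i in images[:test_count]]
        val += [(i, subject_id) for i in images[test_count:held_out]]
        train += [(i, subject_id) for i in images[held_out:]]
    return train, val, test


# Toy data: 3 subjects with 5 images each (identifiers are made up).
samples = [(f"s{s}_img{k}", s) for s in range(3) for k in range(5)]
train, val, test = split_per_subject(samples)
print(len(train), len(val), len(test))  # 9 3 3
```

Because every subject appears in all three subsets, this protocol measures generalization to new images of enrolled subjects, which matches a closed-set recognition setting.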

Figure 8.

IRIS-QResNet using End-to-End and Non-End-to-End approaches for iris recognition.

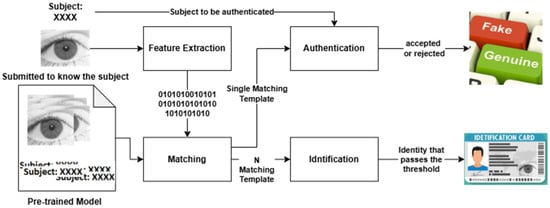

Identification and authentication are the two well-known scenarios for iris recognition. According to Jaha [65], identification is a one-to-many recognition problem in which a subject must be identified from an unknown iris sample presented as an unseen query biometric template. In response to the query, the system determines whether the template matches a known subject and, if so, retrieves the top-match identity from the individuals enrolled in the gallery. Authentication, on the other hand, is a one-to-one recognition problem in which an unseen iris sample is presented as a query biometric template to verify a claimed identity. The system compares the query with the templates previously enrolled for the claimed subject and, in accordance with the confidence-level control, accepts or rejects the claim [65]. Figure 9 describes and differentiates how IResNet and IRIS-QResNet are used and evaluated in authentication and identification scenarios.

Figure 9.

Authentication scenario vs. identification scenario using IResNet and IRIS-QResNet.

The main difference between the two scenarios is that identification matches the iris to determine whose it is, whereas authentication matches the iris against the data of a claimed subject to determine whether the claim is genuine. In identification, as shown in Figure 9, the eye image (for example, the full eye image in the End-to-End approach) is sent for feature extraction, and the extracted features are matched against the enrolled data held by the pre-trained model. This one-to-N template matching returns the subject data that passes the matching phase. In authentication, on the other hand, the model receives the claimed subject data together with the eye image, so that the single query template is matched only against the data available for that subject. The result simply specifies whether to accept the claim as a genuine user or reject it as an impostor.
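The contrast between the two scenarios can be illustrated with a short sketch. It is not the paper's matching procedure: the cosine-similarity score, the fixed threshold of 0.8, the three-dimensional templates, and the subject names are all assumptions chosen only to show the one-to-N versus one-to-one logic.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two feature vectors (assumed match score)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(query, gallery):
    """One-to-N: score the query against every enrolled subject, return the best."""
    scores = {subject: cosine_similarity(query, template)
              for subject, template in gallery.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


def authenticate(query, claimed_subject, gallery, threshold=0.8):
    """One-to-one: score the query only against the claimed subject's template."""
    score = cosine_similarity(query, gallery[claimed_subject])
    return score >= threshold  # True = accept as genuine, False = reject as impostor


# Hypothetical enrolled templates and query features.
gallery = {
    "alice": [1.0, 0.0, 0.0],
    "bob": [0.0, 1.0, 0.0],
    "carol": [0.0, 0.0, 1.0],
}
query = [0.9, 0.1, 0.0]

print(identify(query, gallery)[0])            # alice
print(authenticate(query, "alice", gallery))  # True
print(authenticate(query, "bob", gallery))    # False
```

Identification needs no claimed identity and always searches the whole gallery, whereas authentication needs the claim and a threshold that controls the trade-off between false acceptances and false rejections.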

4.4. Evaluation Metrics

To highlight the novelty, advantages, and contributions of this work relative to the related literature, we first present a comparative analysis and discussion of the research gaps that this work addresses and that existing studies considered only partially or not at all. Given the evident dissimilarities and the absence of fairly comparable aspects between this work and existing work, direct quantitative comparisons would be unrepresentative and impractical. To overcome this challenge, we use the baseline model (IResNet) as a representative of classical models and compare its accuracy and loss with those of the proposed quantum-inspired model (IRIS-QResNet), with both models using the same input parameter values to ensure fair and credible comparisons.

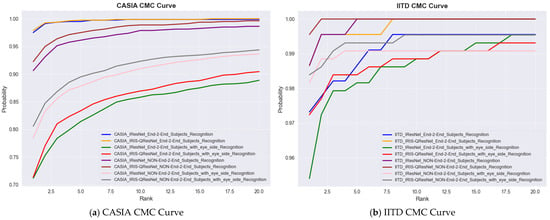

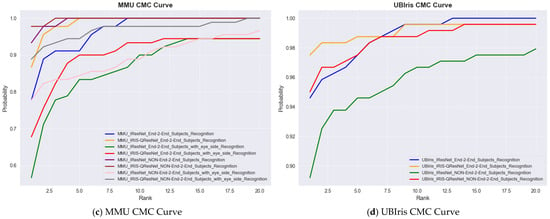

We evaluate our models using a range of standard biometric performance metrics. The Cumulative Match Characteristic (CMC) curve, typically used in identification scenarios, gives the cumulative probability that a probe image is correctly matched to its identity within the top-ranked gallery candidates. The Rank-1 and Rank-5 recognition rates are the main CMC performance indicators: they give the likelihood of correctly identifying a person at the top match and among the top five ranked candidates, respectively. Moreover, we use the Area Under the CMC Curve (CMC-AUC) to summarize the overall identification accuracy across all ranks.
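The CMC-based indicators above can be computed from a probe-by-gallery score matrix, as in the sketch below. The function names and toy scores are illustrative assumptions, and the CMC-AUC is normalized here as the mean of the curve over all ranks, which is one common convention and not necessarily the one used in the paper.

```python
def cmc_curve(score_matrix, true_indices):
    """Return cmc, where cmc[k-1] is the fraction of probes whose true
    identity appears among the top-k gallery candidates.

    score_matrix[p][g] is the similarity of probe p to gallery identity g;
    true_indices[p] is the index of the correct identity for probe p.
    """
    n_gallery = len(score_matrix[0])
    hits_at_rank = [0] * n_gallery
    for scores, true_idx in zip(score_matrix, true_indices):
        # Rank = 1 + number of identities scoring strictly higher than the
        # true one (ties are resolved optimistically).
        rank = 1 + sum(1 for s in scores if s > scores[true_idx])
        hits_at_rank[rank - 1] += 1

    cmc, running = [], 0
    for hits in hits_at_rank:
        running += hits
        cmc.append(running / len(score_matrix))
    return cmc


def cmc_auc(cmc):
    """Normalized area under the CMC curve (assumed: mean over all ranks)."""
    return sum(cmc) / len(cmc)


# Toy example: 4 probes, 4 gallery identities, probe i belongs to identity i.
scores = [
    [0.90, 0.05, 0.03, 0.02],
    [0.20, 0.50, 0.20, 0.10],
    [0.40, 0.30, 0.20, 0.10],  # true identity (index 2) is only ranked third
    [0.10, 0.20, 0.30, 0.40],
]
cmc = cmc_curve(scores, [0, 1, 2, 3])
print("Rank-1:", cmc[0])        # Rank-1: 0.75
print("Rank-3:", cmc[2])        # Rank-3: 1.0
print("CMC-AUC:", cmc_auc(cmc)) # CMC-AUC: 0.875
```

With five or more gallery identities, the Rank-5 rate is simply `cmc[4]`.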

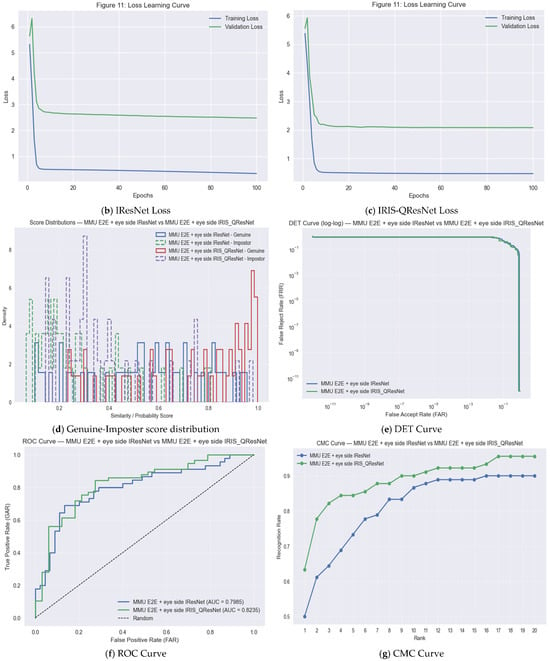

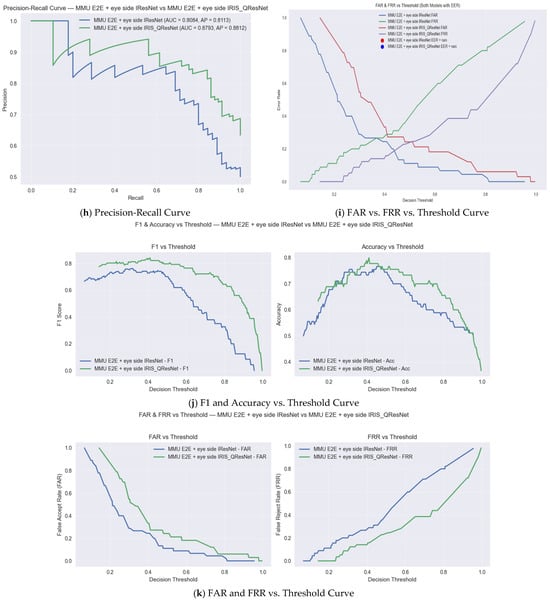

For authentication experiments, match score distributions were explicitly computed to evaluate genuine–impostor separability. For each subject, one template was treated as a query and matched against all enrolled templates. Scores from the same identity formed the genuine distribution, whereas scores from all other identities formed the impostor distribution. These distributions were used to compute the ROC curve, AUC, and Equal Error Rate (EER). The decision threshold was obtained at the intersection of the False Acceptance Rate (FAR) and False Rejection Rate (FRR). All authentication plots (genuine vs. impostor histograms, ROC curves) are included in the next section for one of the 14 experiments as an example, namely the smallest and hardest dataset, MMU End-to-End with eye-side.

In addition, we examine the trade-off between the True Acceptance Rate (TAR) and the FAR over different decision thresholds using the Receiver Operating Characteristic (ROC) curve. To evaluate authentication performance, we report the Area Under the ROC Curve (ROC-AUC), the EER, and the decidability index (d′). While ROC-AUC quantifies the global discriminability of the system across all thresholds, the EER provides a threshold-specific operating point at which the FAR equals the FRR. The decidability index quantifies the statistical separation between the genuine and impostor score distributions, offering a direct assessment of biometric reliability and the robustness of score-space separability. Because d′ is highly informative for evaluating the stability of the recognition system and is widely recommended in biometric performance reporting, numerical values for every dataset and each experimental configuration are reported in the Results section together with the score distribution plots.

Performance evaluation of MMU End-to-End iris recognition with eye-side using IResNet and IRIS-QResNet. (a–c) show training accuracy and loss dynamics, (d) depicts genuine–impostor score distributions, (e–g) show verification and identification curves (DET, ROC, CMC), and (h–k) illustrate threshold-based performance metrics. IRIS-QResNet demonstrates improved separability and earlier convergence, confirming enhanced discriminative capacity under challenging small-sample conditions.

These complementary metrics collectively provide a complete assessment of both identification and authentication performance in accordance with contemporary biometric evaluation guidelines.
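The authentication metrics described above (FAR, FRR, EER, and the decidability index) can be sketched as follows. This is an illustrative stdlib-only version with made-up scores; the function names are hypothetical, higher scores are assumed to mean a better match, and the decidability index uses the standard form d′ = |μ_g − μ_i| / sqrt((σ_g² + σ_i²) / 2).

```python
import math
from statistics import mean, pvariance


def far_frr(genuine, impostor, threshold):
    """FAR = share of impostor scores accepted; FRR = share of genuine scores rejected."""
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine, impostor):
    """Scan the observed scores as thresholds and return (EER, threshold)
    at the point where FAR and FRR are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


def decidability(genuine, impostor):
    """d' = |mu_g - mu_i| / sqrt((var_g + var_i) / 2)."""
    pooled = (pvariance(genuine) + pvariance(impostor)) / 2
    return abs(mean(genuine) - mean(impostor)) / math.sqrt(pooled)


# Made-up similarity scores for illustration only.
genuine_scores = [0.90, 0.80, 0.85, 0.70, 0.40]
impostor_scores = [0.10, 0.20, 0.30, 0.50, 0.15]

eer, threshold = equal_error_rate(genuine_scores, impostor_scores)
print(f"EER = {eer:.2f} at threshold {threshold}")  # EER = 0.20 at threshold 0.5
print(f"d' = {decidability(genuine_scores, impostor_scores):.2f}")
```

A larger d′ indicates better-separated genuine and impostor distributions, while a lower EER indicates a better operating point where the two error rates balance.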

5. Experiment Results and Discussion

This section discusses the two models, IRIS-QResNet and its baseline IResNet, which are evaluated and thoroughly compared in iris identification and authentication. Our experimental design emphasizes controlled comparison to isolate the specific contributions of quantum-inspired architectural components while ensuring scientific rigor and reproducibility.

5.1. Comparison with the State of the Art

The quantum-inspired functionalities used in this research act as transformation functions, since they depend on the Quantum Fast Fourier Transformer (QFFT), which inherently modifies feature representations. Thus, the proposed model (IRIS-QResNet) and its baseline (IResNet) intentionally avoid augmentation to ensure a fair comparison, allowing us to isolate the contribution of the quantum-inspired transformations. However, this makes it harder to compare them with available solutions, as augmentation is commonly used to increase the accuracy of deep models. Table 4 summarizes where our research differs, situates our work relative to recent handcrafted, deep, and quantum-inspired approaches, and shows why a quantitative comparison with available solutions would be neither fair nor representative.

In handcrafted approaches, augmentation is not compulsory if the model can learn from small datasets; examples are LBP with Haar wavelet models [14], multiple-methods fusion [66], and hybrid BSIF + Gabor [67]. However, none of these models uses a quantum-inspired approach or deep learning, even though they are recent. Deep CNN-based approaches generally require augmentation; when augmentation is not used, another type of transformation takes its place, such as a wavelet transform, Python’s built-in image transforms, or QFFT, which is the basis of many quanvolutional layers. Some researchers, however, do not state this clearly, as with the CNN model of Sallam et al. [68] and the deep SSLDMNet-Iris model [25]; the former in particular specifies neither whether augmentation was used nor how many layers the model has. Neither of these two models is quantum-inspired, and the same holds for Gabor with DBN [69], EnhanceDeepIris [70], and the Multibiometric System [71], although the latter three clearly depend on data augmentation.

Quantum-inspired works do not depend on augmentation. Because QFFT and other quantum filters are themselves transformations, combining them with data augmentation or other transforms works against them and tends to lose features rather than enrich them or enlarge the data. This explains why we enforced no augmentation in this research. Examples from the state of the art include QiNN [72], which uses CIFAR-0 to enhance augmentation itself, QNN [50], which uses MNIST, and QCNN [48], which uses the iris flower dataset. QNN and QCNN do not use augmentation, as the quanvolutional layer performs the transformation task to enhance the results. Some researchers have used iris recognition as the domain for their quantum-inspired works, such as NAS-QDCNN [73], which reported an accuracy of 96.83% without specifying the dataset. There are also quantum algorithms [74] and Enhanced Iris Biometrics [75], neither of which reported any accuracy. Post-quantum authentication [76] used quantum-inspired fundamentals to reduce the probability that an attack attempt succeeds.

Although the accuracies reported by our models may appear moderate compared to some dataset-specific works claiming near-perfect results, those studies typically depend on handcrafted features, augmentation pipelines, or dataset-tuned designs. IRIS-QResNet, by contrast, operates on large feature spaces (Table 5) without augmentation or hybrid handcrafted preprocessing.

Table 4.

Summary of the comparative gap analysis, showing where our proposed work surpasses earlier work.

| Approach | Technique Used | Biometric | Iris | Shared Dataset | Used Dataset | Segmented | Augmented * | Other Preprocessing | Quantum-Inspired | Deep Learning | Eye-Side Recognition | Achieved Accuracy | Reference |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Handcrafted | LBP with Haar wavelet | ✓ | ✓ | ✓: MMUv1 | 1. MMU1 2. MMU2 | ✘ | ✘ | ✓ | ✘ | ✘ | ✘ | DCT_LBP_KNN: 85.71%; DCT_LBP_SVM: 91.73%; HWT_LBP_RF: 83.46% | [14] |

| Handcrafted | Multiple methods fusion | ✓ | ✓ | ✓: CASIA | CASIA v4.0 | ✓ | ✘ | ✓ | ✘ | ✘ | ✓: Separate | Left eyes: 98.67%; Right eyes: 96.66% | [66] |

| Handcrafted | Hybrid BSIF + Gabor | ✓ | ✓ | ✓: IITD | IITD | ✓: partially | ✘ | ✓ | ✘ | ✘ | ✘ | 95.4% | [67] |

| Deep CNN-Based | CNN | ✓ | ✓ | ✓: CASIA | 1. CASIA-Iris-V1 2. ATVS-FIr DB | ✓ ✘ | Unknown | Unknown | ✘ | ✓ | ✘ | Cropped IRIS: 97.82%; IRIS Region: 98% | [68] |