IRIS-QResNet: A Quantum-Inspired Deep Model for Efficient Iris Biometric Identification and Authentication

Highlights

- IRIS-QResNet is a custom ResNet model enhanced with a quanvolutional layer that achieves more accurate iris recognition from only a few samples per subject, without data augmentation.

- IRIS-QResNet demonstrates efficient biometric authentication by consistently achieving superior accuracy and generalization across four benchmark datasets.

- Compared with IResNet, the traditional baseline, the IRIS-QResNet model significantly improves recognition accuracy and robustness, even in small-sample, augmentation-free settings.

- Across multiple iris datasets, IRIS-QResNet strengthens multilayer feature extraction, resulting in measurable gains of up to 16.67 percentage points in identification accuracy.

- By effectively integrating quantum-inspired layers into classical deep networks, higher discriminative power and data efficiency can be achieved, reducing dependence on large training datasets and data augmentation.

- These results open the path toward scalable and sustainable AI solutions for biometric systems, establishing a viable bridge between conventional and emerging quantum machine learning architectures.

Abstract

1. Introduction

1. A hybrid iris recognition framework that supports both End-to-End and Non-End-to-End modes and generalizes to datasets of varying sizes (tiny, small, and mid-size).

2. A customized ResNet-18 architecture that handles datasets of different dimensions and forms, including very small-sample regimes (e.g., five to ten images per class), without the need for data augmentation.

3. A methodical experimental examination of the quantum-inspired improvements in the proposed IRIS-QResNet model, which integrates quanvolutional layers and is assessed on four benchmark datasets, with a comparative analysis that measures gains in accuracy, robustness, and loss reduction.

2. Background and Related Work

2.1. Handcrafted Iris Recognition Approaches

2.2. Deep CNN-Based Iris Recognition Approaches

2.3. Quantum-Inspired Image Classification Approaches and Remaining Gaps

3. IRIS-QResNet: The Proposed Model

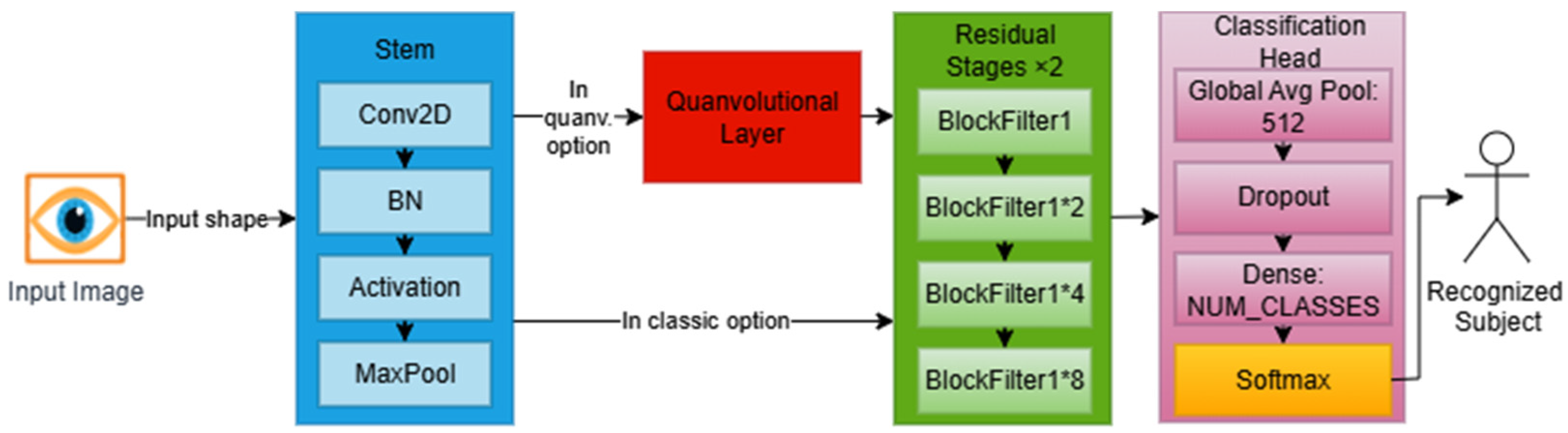

3.1. IResNet: The Baseline Model

3.1.1. Stem Block for Input Handling

Kernel Standardization for Fair Comparison

3.1.2. Residual Stages

3.1.3. Regularization and Classification

3.2. IRIS-QResNet: The Enhanced IResNet by a Quanvolutional Layer

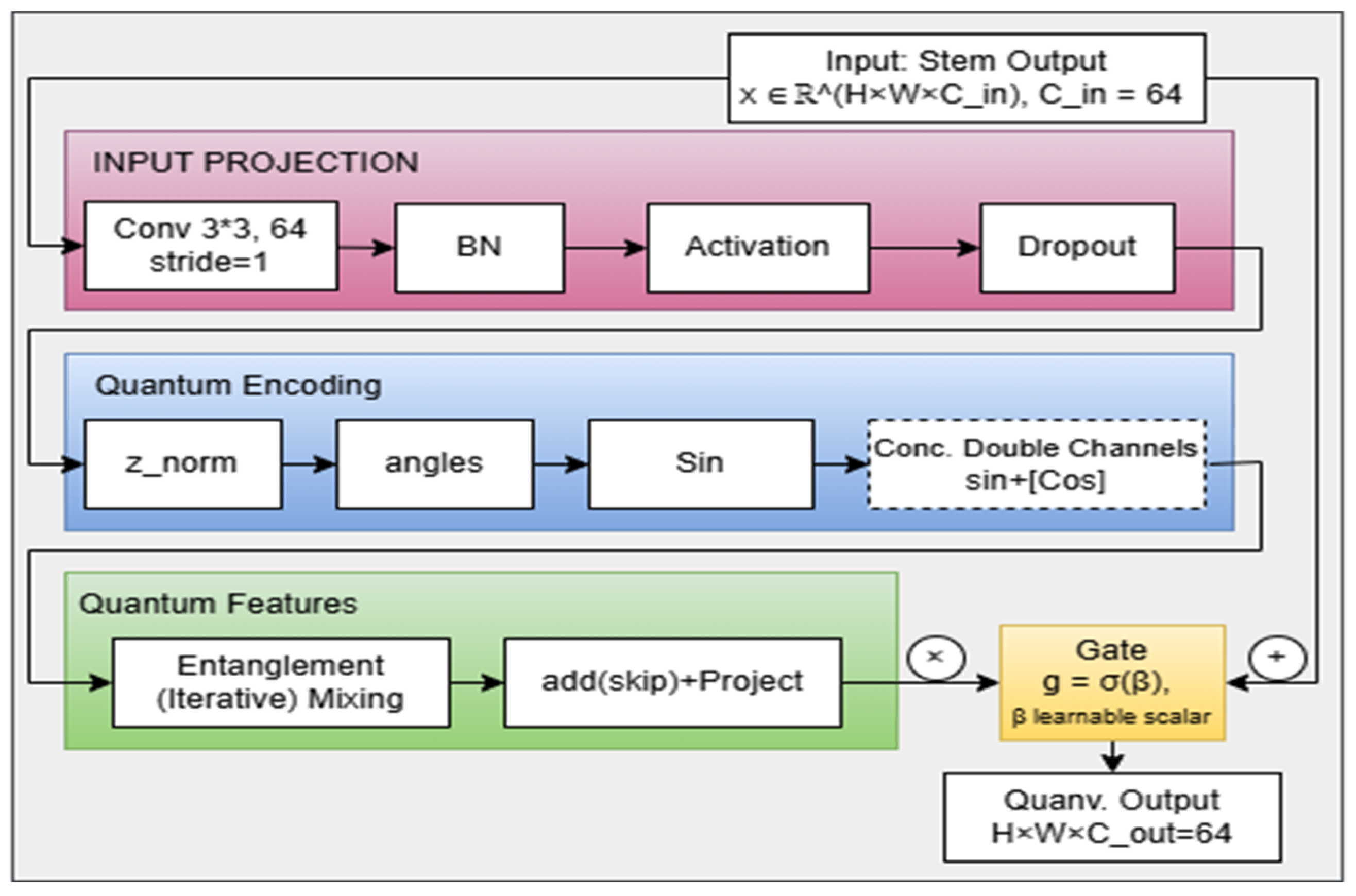

3.2.1. Quantum State from Classic Input

3.2.2. The Proposed Quanvolutional Layer

Input Projection (Stem Transformation)

Quantum Encoding (Feature Normalization and Sinusoidal Mapping): Classic-to-Quantum Conversion

Quantum Entanglement Feature Mixing

Entanglement-like Feature Mixing and Iris Texture Representation

Significance for Iris Recognition

Output Projection and Gated Residual Integration

Learnable Gate Parameter

3.2.3. From Quantum Formalism to Classical Implementation

4. Experimental Setup

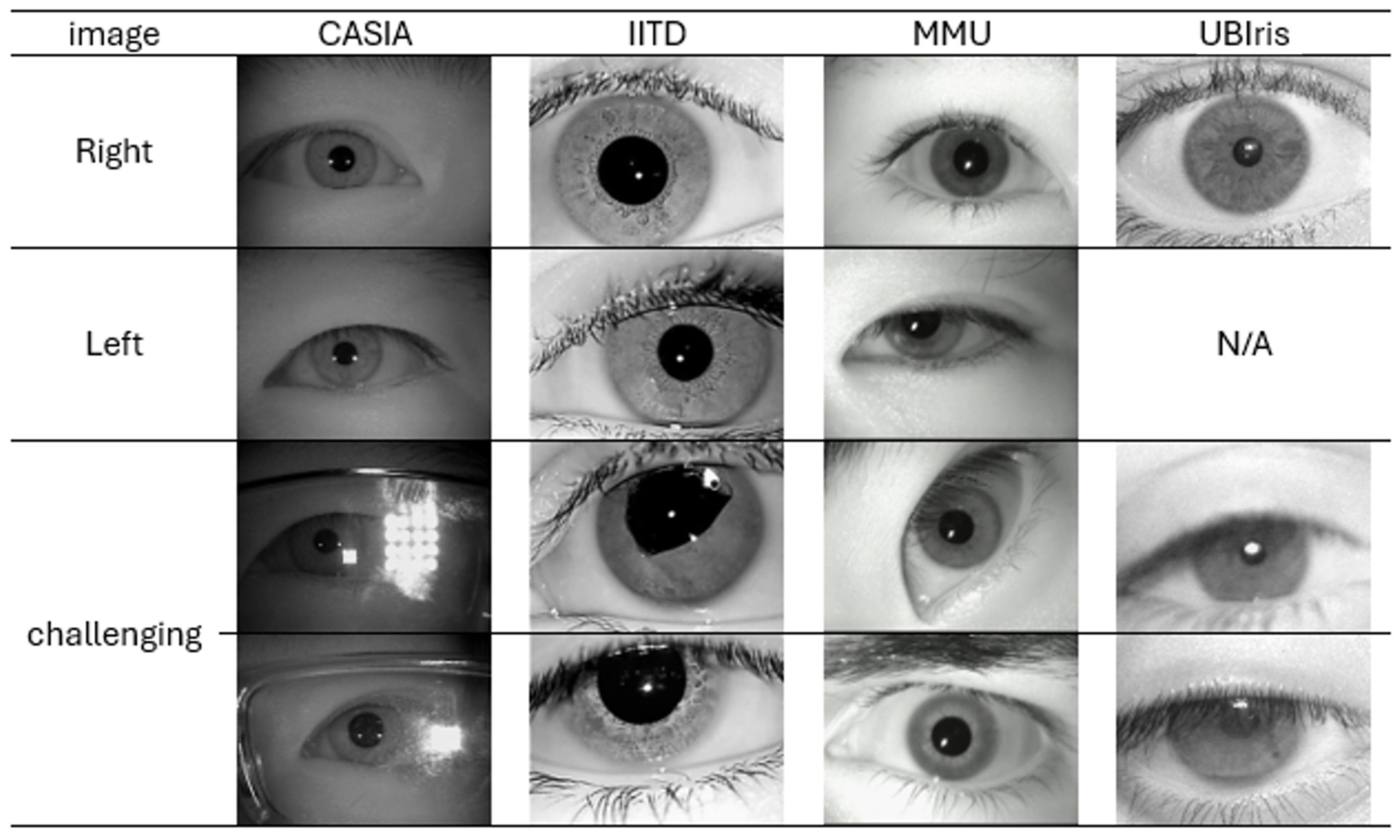

4.1. Datasets and Preprocessing

4.2. Setting Parameters

4.2.1. Dataset-Customizable Parameters

4.2.2. Common Parameters

- Optimization: Both models were trained using identical optimization settings to ensure strict comparability. The AdamW optimizer, which combines Adam’s adaptive moment estimation with decoupled weight decay, was used throughout all experiments. Parameter updates follow Equation (23):

  $$\theta_{t+1} = \theta_t - \eta \left( \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon} + \lambda \theta_t \right) \tag{23}$$

  where $\theta_t$ is the vector of all trainable parameters (weights and biases) at time $t$, $\eta$ is the learning rate that rescales the updates, $\hat{m}_t$ and $\hat{v}_t$ are the bias-corrected first and second moment estimates, $\epsilon$ is a numerical stability constant, and $\lambda$ is the weight decay coefficient. Using the same optimizer configuration isolates the effect of the quantum-inspired modules and ensures that both architectures are optimized under identical dynamics. During training, we monitored gradient norms, validation-loss evolution, and weight-update magnitudes for both IResNet and IRIS-QResNet. In all experiments, the optimizer produced smooth, monotonic decreases in validation loss without oscillations, divergence, or abrupt spikes. Importantly, the quantum-inspired blocks did not introduce additional gradient instability beyond what is typical for residual CNNs, confirming that the shared AdamW configuration yields stable and well-behaved convergence for both networks.

- Regularization, weight decay, and loss function: Regularization strategies were applied consistently across both architectures. Dropout was used as described in Equation (10), and the total training loss was defined by Equation (24):

  $$\mathcal{L}_{total} = \mathcal{L}_{CE} + \lambda \lVert \theta \rVert_2^2 \tag{24}$$

  where $\mathcal{L}_{total}$ is the total loss that the model aims to decrease and $\mathcal{L}_{CE}$ is the sparse categorical cross-entropy loss, computed by Equation (25):

  $$\mathcal{L}_{CE} = -\sum_{i=1}^{N} y_i \log(\hat{y}_i) \tag{25}$$

  where $y_i$ is the true label, $\hat{y}_i$ is the predicted probability, and $N$ is the number of classes. The term $\lambda \lVert \theta \rVert_2^2$ is an L2 regularization element, also known as weight decay. The regularization strength $\lambda$ controls the trade-off between fitting the data and keeping the model weights small, which promotes simpler models that perform better on unseen data. $\theta$ denotes the parameters (weights) of the model, and $\lVert \theta \rVert_2^2$ is the squared L2 norm of these parameters, given by Equation (26):

  $$\lVert \theta \rVert_2^2 = \sum_{j} \theta_j^2 \tag{26}$$

  This formulation helps prevent overfitting by constraining parameter magnitudes, promoting better generalization to unseen iris samples.

- Gradient flow optimization: Residual connections play a central role in stabilizing gradient propagation, enabling both models to learn discriminative features without the need for heavy data augmentation. The gradient flow through each residual block is given by Equation (27):

  $$\frac{\partial \mathcal{L}}{\partial x_l} = \frac{\partial \mathcal{L}}{\partial x_{l+1}} \left( 1 + \frac{\partial F(x_l)}{\partial x_l} \right) \tag{27}$$

  where $\partial \mathcal{L} / \partial x_l$ is the loss gradient with respect to the input $x_l$ of layer $l$, $\partial \mathcal{L} / \partial x_{l+1}$ is the gradient propagated from the next layer with respect to the block output $x_{l+1}$, and $\partial F(x_l) / \partial x_l$ is the derivative of the residual function $F$ (typically the convolutional output excluding the skip connection) with respect to $x_l$. This derivative describes how variations in the input to a residual block affect its output. The factor $1 + \partial F(x_l) / \partial x_l$ combines the effects of the identity mapping and the residual function: the value 1 denotes that the gradient flow via the skip connection stays intact, while $\partial F(x_l) / \partial x_l$ reflects the extra gradient contribution brought about by the transformations of the residual function. The skip connection therefore passes the gradient from the next layer on directly, even when the residual branch contributes only a small derivative. This mechanism is especially important for IRIS-QResNet, where the quantum-inspired gated pathway introduces additional nonlinear transformations. The preserved identity component counteracts vanishing gradients even when gating attenuates the residual branch, allowing AdamW (Equation (23)) to maintain stable updates throughout both architectures.

- Feature space regularization: Implicit regularization is further supported by batch normalization and dropout, which reduce sensitivity to variations in iris appearance. The architectural progression naturally implements a form of multi-resolution analysis, in which features are extracted at various spatial resolutions through progressive downsampling and channel expansion, enabling effective feature extraction without dependence on complex or artificial augmentation schemes. ResNet-18 remains one of the most reliable baselines for iris recognition due to its theoretical underpinnings and its proven balance of representational strength and computational efficiency.
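The optimizer and loss described in the bullets above can be illustrated with a minimal pure-Python sketch. It assumes the standard AdamW update with decoupled weight decay and a cross-entropy loss plus a squared-L2 penalty; the function names and the default hyperparameter values (`lr`, `beta1`, `beta2`, `eps`, `weight_decay`) are illustrative assumptions, not the settings used in the experiments.

```python
import math

def adamw_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=1e-2):
    """One AdamW update on lists of floats; returns (theta, m, v).

    Hyperparameter defaults are illustrative assumptions only.
    """
    new_theta, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(theta, grad, m, v):
        m_i = beta1 * m_i + (1.0 - beta1) * g        # first-moment estimate
        v_i = beta2 * v_i + (1.0 - beta2) * g * g    # second-moment estimate
        m_hat = m_i / (1.0 - beta1 ** t)             # bias correction
        v_hat = v_i / (1.0 - beta2 ** t)
        # Decoupled weight decay: the decay term sits outside the adaptive ratio.
        p = p - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * p)
        new_theta.append(p)
        new_m.append(m_i)
        new_v.append(v_i)
    return new_theta, new_m, new_v

def sparse_cross_entropy(probs, label):
    """Cross-entropy for an integer class label: -log of the true-class probability."""
    return -math.log(probs[label])

def total_loss(probs, label, theta, lam):
    """Cross-entropy plus lam times the squared L2 norm of the parameters."""
    return sparse_cross_entropy(probs, label) + lam * sum(p * p for p in theta)

# Toy usage with made-up numbers.
theta, m, v = adamw_step([1.0], [0.5], [0.0], [0.0], t=1)
loss = total_loss([0.1, 0.7, 0.2], 1, [1.0, -2.0], lam=0.01)
```

With an integer label, the sum over classes in the cross-entropy reduces to the single true-class term, which is why `sparse_cross_entropy` reads one probability only.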

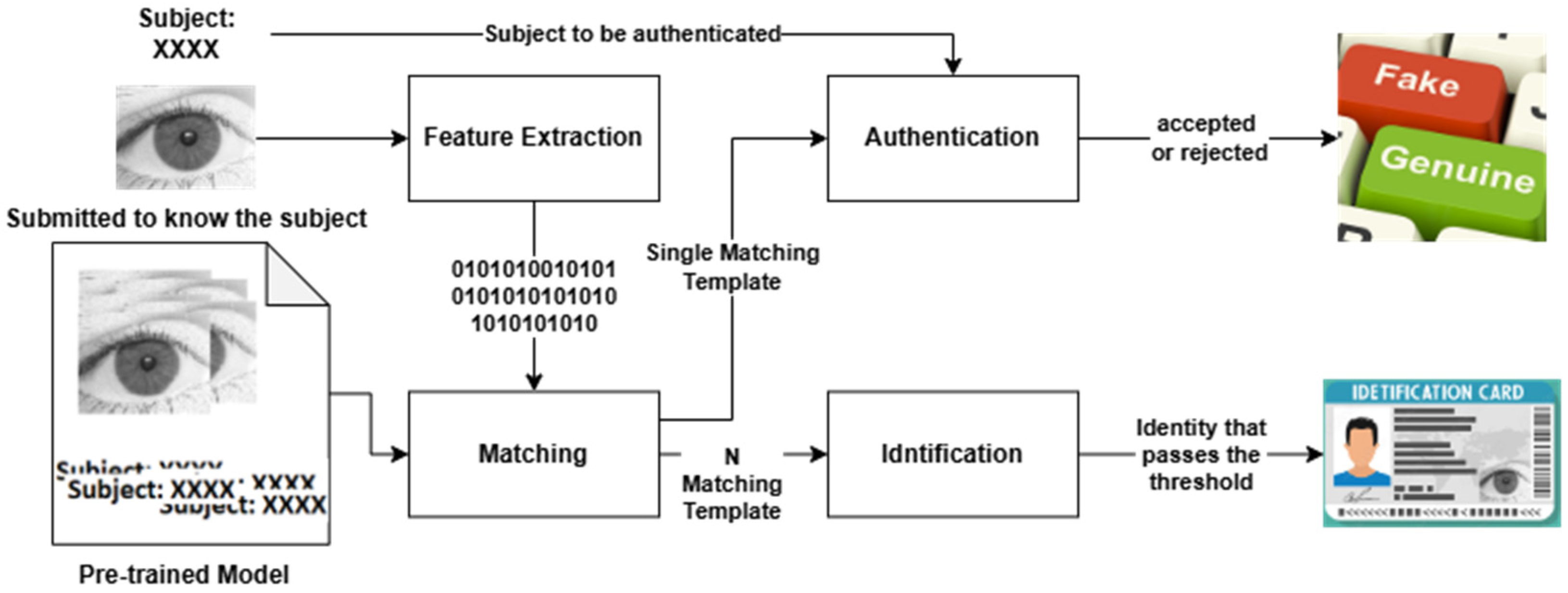
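The residual gradient relation discussed under "Gradient flow optimization" can be checked numerically on a toy scalar block. The sketch below assumes a hypothetical residual function F(x) = w * tanh(x), chosen only for illustration; it compares the analytic factor (1 + dF/dx) against a central finite difference.

```python
import math

def residual_block(x, w):
    """Scalar residual block y = x + F(x), with the toy choice F(x) = w * tanh(x)."""
    return x + w * math.tanh(x)

def residual_grad(x, w, upstream):
    """Analytic gradient w.r.t. the block input: upstream * (1 + dF/dx)."""
    dF_dx = w * (1.0 - math.tanh(x) ** 2)
    return upstream * (1.0 + dF_dx)

def numeric_grad(x, w, upstream, h=1e-6):
    """Central finite-difference estimate of the same gradient."""
    dy_dx = (residual_block(x + h, w) - residual_block(x - h, w)) / (2.0 * h)
    return upstream * dy_dx

analytic = residual_grad(0.3, 0.5, upstream=2.0)
numeric = numeric_grad(0.3, 0.5, upstream=2.0)
# With w = 0 the residual branch contributes nothing and the
# upstream gradient passes through the identity path unchanged.
passthrough = residual_grad(0.3, 0.0, upstream=2.0)
```

Setting `w = 0` mimics a fully attenuated residual branch: the identity term alone carries the upstream gradient to the block input.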

4.3. End-to-End and Non-End-to-End Setups

4.4. Evaluation Metrics

5. Experiment Results and Discussion

5.1. Comparison with the State of the Art

| Approach | Technique Used | Biometric | Iris | Shared Dataset | Used Dataset | Segmented | Augmented * | Other Preprocessing | Quantum-Inspired | Deep Learning | Eye-Side Recognition | Achieved Accuracy | Reference |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Handcrafted | LBP with Haar wavelet | ✓ | ✓ | ✓: MMUv1 | MMU1, MMU2 | ✘ | ✘ | ✓ | ✘ | ✘ | ✘ | DCT_LBP_KNN: 85.71%; DCT_LBP_SVM: 91.73%; HWT_LBP_RF: 83.46% | [14] |
| Handcrafted | Multiple methods fusion | ✓ | ✓ | ✓: CASIA | CASIA v4.0 | ✓ | ✘ | ✓ | ✘ | ✘ | ✓: Separate | Left eyes: 98.67%; Right eyes: 96.66% | [66] |
| Handcrafted | Hybrid BSIF + Gabor | ✓ | ✓ | ✓: IITD | IITD | ✓: partially | ✘ | ✓ | ✘ | ✘ | ✘ | 95.4% | [67] |
| Deep CNN-Based | CNN | ✓ | ✓ | ✓: CASIA | CASIA-Iris-V1, ATVS-FIr DB | ✓ / ✘ | Unknown | Unknown | ✘ | ✓ | ✘ | Cropped IRIS: 97.82%; IRIS Region: 98% | [68] |
| Deep CNN-Based | Deep SSLDMNet-Iris with Fisher’s Linear Discriminant (FLD) | ✓ | ✓ | ✓: all | CASIA 1.0, 2.0, 3.0, 4.0, IITD, UBIris, MMU | Unknown | Unknown | ✓ | ✘ | ✓ | ✘ | CASIA: 99.5%; IITD: 99.90%; MMU: 100%; UBIris: 99.97% | [25] |
| Deep CNN-Based | Gabor with DBN | ✓ | ✓ | ✓: CASIA | CASIA-4-interval, CASIA-4-lamp, JLUBR-IRIS | ✓ | ✓ | ✓ | ✘ | ✓ | ✘ | CASIA-4-interval: 99.998; CASIA-4-lamp: 99.904 | [69] |
| Deep CNN-Based | EnhanceDeepIris | ✓ | ✓ | ✓: CASIA | ND-IRIS-0405, CASIA-Lamp | ✓ | ✓ | ✓ | ✘ | ✓ | ✘ | CASIA-Lamp: 98.88% | [70] |
| Deep CNN-Based | Multibiometric System | ✓ | ✓ | ✓: CASIA, IITD | CASIA-V3, IITD | ✓ | ✓ | ✓ | ✘ | ✓ | ✓: Separate | IITD left: 99%; IITD right: 99%; CASIA left: 94%; CASIA right: 93% | [71] |
| Quantum-inspired | QiNN | ✘ | ✘ | ✘ | - | - | ✘ | - | ✓ | ✓ | ✘ | - | [72] |
| Quantum-inspired | QNN | ✘ | ✘ | ✘ | - | - | ✘ | - | ✓ | ✓ | ✘ | - | [50] |
| Quantum-inspired | QCNN: iris (the flower) | ✘ | ✓ | ✘ | - | - | ✘ | - | ✓ | ✓ | ✘ | - | [48] |
| Quantum-inspired | Quantum Algorithms | ✓ | ✓ | ✓: CASIA | CASIA V1.0 | Not specified | ✘ | ✓ | ✓ | ✘ | Not specified | No recorded accuracy | [74] |
| Quantum-inspired | Enhanced Iris Biometrics | ✓ | ✓ | ✓: UBIris | UBIris | Not specified | ✘ | ✓ | ✓ | ✘ | Single side | No recorded accuracy | [75] |
| Quantum-inspired | Post-quantum authentication | ✓ | ✓ | ✓: UBIris | UBIris, another dataset | ✓ | ✘ | ✓ | ✓ | ✘ | Single side | No recorded accuracy | [76] |

| Our approach | IResNet (Baseline) | ✓ | ✓ | CASIA thousand, IITD, UBIris, MMU | ✘ | ✘ | ✘ | ✘ | ✓ | ✘ | CASIA: 97.50% IITD: 97.32% MMU: 77.78% UBIris: 94.61% | [This research] | |

| ✓: + | CASIA: 71.19% IITD: 95.40% MMU: 50.00% | ||||||||||||

| ✓ | ✓ | ✘ | CASIA: 90.63% IITD: 98.66% MMU: 93.33% UBIris: 89.21% | ||||||||||

| ✓: + | CASIA: 78.50% IITD: 98.16% MMU: 77.78% | ||||||||||||

| IRIS_QResNet (quantum-inspired) | ✓ | ✓ | ✘ | ✘ | ✘ | ✘ | ✓ | ✘ | CASIA: 97.88% IITD: 98.66% MMU: 86.67% UBIris: 97.51% | ||||

| ✓: + | CASIA: 71.38% IITD: 97.24% MMU: 66.67% | ||||||||||||

| ✓ | ✓ | ✘ | CASIA: 92.25% IITD: 99.55% MMU: 97.78% UBIris: 95.02% | ||||||||||

| ✓: + | CASIA: 80.56% IITD: 98.39% MMU: 88.89% | ||||||||||||

| Dataset | With Eye Side | Metric | E2E IResNet | E2E IRIS-QResNet | E2E Imp. % | ~E2E IResNet | ~E2E IRIS-QResNet | ~E2E Imp. % |
|---|---|---|---|---|---|---|---|---|
| CASIA | ✘ | Acc. | 97.50 | 97.87 | 0.3750 | 90.63 | 92.25 | 1.6250 |
| CASIA | ✘ | Loss | 0.11 | 0.07 | −0.036 | 0.45 | 0.34 | −0.1070 |
| CASIA | ✘ | Parameters | 12,954,336 | 13,139,297 | 185,473 | 12,954,336 | 13,139,297 | 185,473 |
| CASIA | ✘ | CI | [0.9627, 0.9678] | [0.9718, 0.9763] | - | [0.8603, 0.87] | [0.8921, 0.9009] | - |
| CASIA | ✘ | g | - | 0.4495 | - | - | 0.4252 | - |
| CASIA | ✓ | Acc. | 71.19 | 71.38 | 0.1870 | 78.50 | 80.56 | 2.0630 |
| CASIA | ✓ | Loss | 1.94 | 1.81 | −0.1220 | 1.17 | 1.01 | −0.1550 |
| CASIA | ✓ | Parameters | 69,072,896 | 69,257,857 | 185,473 | 13,364,736 | 13,549,697 | 185,473 |
| CASIA | ✓ | CI | [0.8258, 0.8365] | [0.7968, 0.8075] | - | [0.6665, 0.681] | [0.7372, 0.7507] | - |
| CASIA | ✓ | g | - | 0.4621 | - | - | 0.4325 | - |
| IITD | ✘ | Acc. | 97.32 | 98.66 | 1.3400 | 98.66 | 99.55 | 0.8930 |
| IITD | ✘ | Loss | 0.25 | 0.12 | −0.1380 | 0.14 | 0.06 | −0.0790 |
| IITD | ✘ | Parameters | 12,658,848 | 12,843,809 | 185,473 | 12,658,848 | 12,843,809 | 185,473 |
| IITD | ✘ | CI | [0.8798, 0.8978] | [0.9623, 0.9722] | - | [0.9253, 0.9382] | [0.9782, 0.9863] | - |
| IITD | ✘ | g | - | 0.4842 | - | - | 0.4833 | - |
| IITD | ✓ | Acc. | 95.40 | 97.24 | 1.8390 | 98.16 | 98.39 | 0.2300 |
| IITD | ✓ | Loss | 0.41 | 0.22 | −0.1930 | 0.55 | 0.17 | −0.3790 |
| IITD | ✓ | Parameters | 12,767,091 | 12,952,052 | 185,473 | 12,767,091 | 12,952,052 | 185,473 |
| IITD | ✓ | CI | [0.8196, 0.8426] | [0.9193, 0.9354] | - | [0.7016, 0.7244] | [0.9299, 0.9428] | - |
| IITD | ✓ | g | - | 0.4842 | - | - | 0.4848 | - |
| MMU | ✘ | Acc. | 77.77 | 86.67 | 8.8890 | 93.33 | 97.78 | 4.4450 |
| MMU | ✘ | Loss | 1.29 | 0.86 | −0.4240 | 0.60 | 0.47 | −0.1310 |
| MMU | ✘ | Parameters | 12,567,021 | 12,751,982 | 185,473 | 12,567,021 | 12,751,982 | 185,473 |
| MMU | ✘ | CI | [0.7046, 0.754] | [0.8344, 0.8734] | - | [0.7734, 0.8133] | [0.932, 0.9556] | - |
| MMU | ✘ | g | - | 0.4904 | - | - | 0.4892 | - |
| MMU | ✓ | Acc. | 50.00 | 66.67 | 16.67 | 77.78 | 88.89 | 11.111 |
| MMU | ✓ | Loss | 2.064 | 1.543 | −0.6490 | 1.76 | 0.95 | −0.8060 |
| MMU | ✓ | Parameters | 12,590,106 | 12,775,067 | 185,473 | 12,590,106 | 12,775,067 | 185,473 |
| MMU | ✓ | CI | [0.3976, 0.4455] | [0.5595, 0.6115] | - | [0.4052, 0.4591] | [0.7473, 0.799] | - |
| MMU | ✓ | g | - | 0.4912 | - | - | 0.4910 | - |
| UBIris | ✘ | Acc. | 94.60 | 97.51 | 2.9040 | 89.21 | 95.02 | 5.8090 |
| UBIris | ✘ | Loss | 0.41 | 0.16 | −0.2530 | 1.44 | 0.42 | −1.0280 |
| UBIris | ✘ | Parameters | 12,667,569 | 12,862,642 | 185,473 | 12,667,569 | 12,852,530 | 185,473 |
| UBIris | ✘ | CI | [0.7849, 0.8153] | [0.9328, 0.952] | - | [0.3939, 0.4294] | [0.7994, 0.8291] | - |
| UBIris | ✘ | g | - | 0.4870 | - | - | 0.4875 | - |
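As a quick arithmetic check of the "Imp. %" column, the sketch below assumes that the improvement is the absolute difference in accuracy (percentage points) between IRIS-QResNet and IResNet. It uses only the rounded End-to-End accuracies listed in the table, so small deviations from the tabulated improvements are expected from rounding; the dictionary layout and names are illustrative.

```python
# End-to-End accuracies (IResNet, IRIS-QResNet) copied from the table above.
# Keys are (dataset, with_eye_side).
E2E_ACCURACY = {
    ("CASIA", False): (97.50, 97.87),
    ("CASIA", True): (71.19, 71.38),
    ("IITD", False): (97.32, 98.66),
    ("IITD", True): (95.40, 97.24),
    ("MMU", False): (77.77, 86.67),
    ("MMU", True): (50.00, 66.67),
    ("UBIris", False): (94.60, 97.51),
}

def improvement_points(baseline_acc, proposed_acc):
    """Absolute accuracy gain in percentage points (assumed meaning of 'Imp. %')."""
    return proposed_acc - baseline_acc

gains = {key: improvement_points(*accs) for key, accs in E2E_ACCURACY.items()}
best_setting = max(gains, key=gains.get)
best_gain = round(gains[best_setting], 2)
```

Under this assumption, the largest End-to-End gain is found for MMU with eye side, which matches the largest value in the "Imp. %" column.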

5.2. Test Accuracy and Loss

5.2.1. Comparison of Decision Factors

5.2.2. Comparative Observation and Novelty Analysis

5.3. Authentication Performance

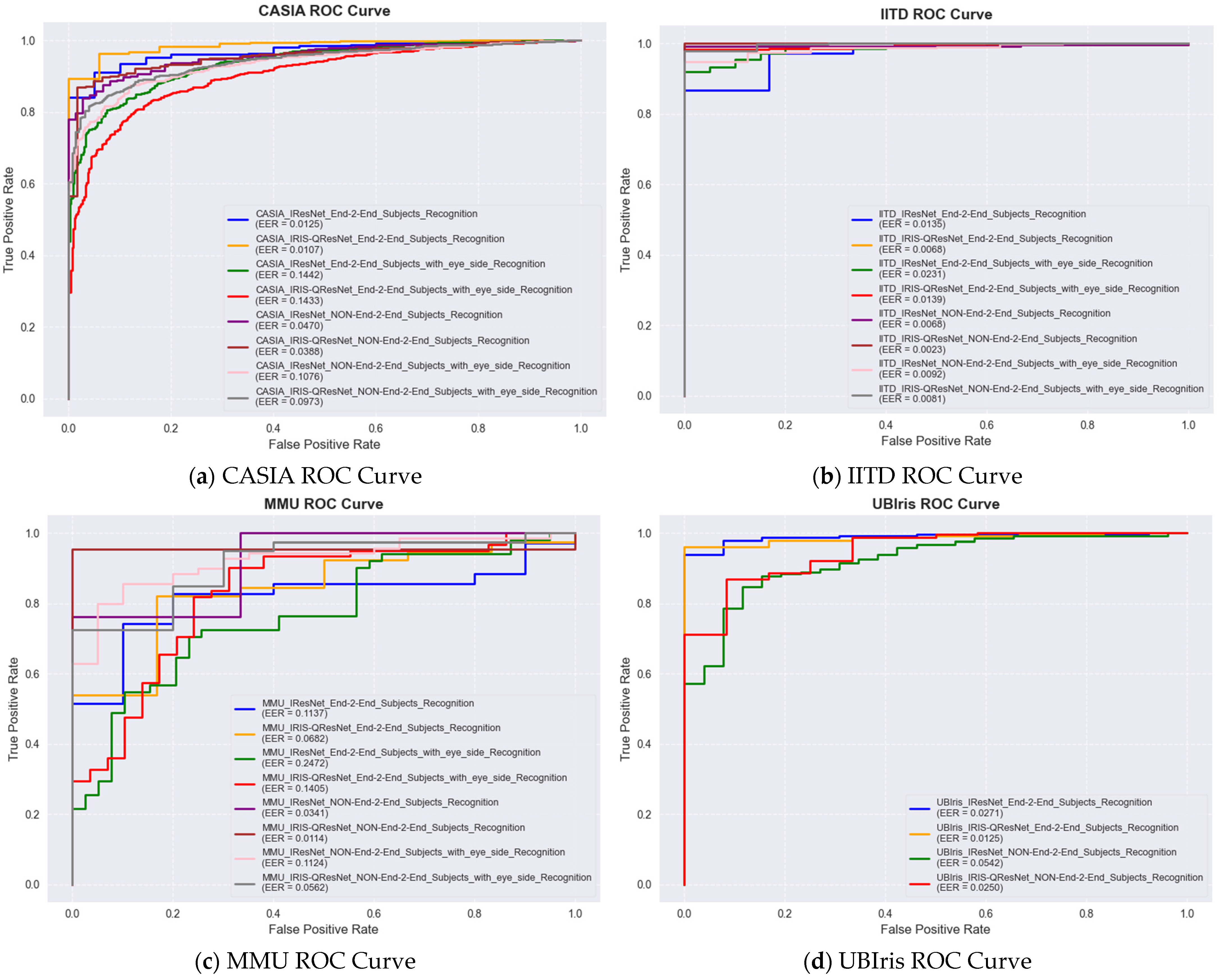

5.3.1. ROC Curve Analysis

- IRIS-QResNet demonstrates lower EER and steeper ROC curves, evidencing greater discriminative capability.

- Non-End-to-End modes generally outperform End-to-End, as non-end-to-end embeddings emphasize iris-texture features more strongly than joint segmentation/recognition.

- Eye-side metadata introduces additional variability, reducing accuracy on both models; the effect is modest when comparing Non-End-to-End with eye-side vs. End-to-End without eye-side.

5.3.2. Cases Where the Baseline Slightly Exceeds IRIS-QResNet

5.3.3. EER, Accuracy, and Threshold Behavior

- Lower error rates across all thresholds for IRIS-QResNet.

- Greater advantage in low-FAR regimes, which is critical for high-security deployments.

- Higher accuracy and F1 across all decision boundaries, indicating better probability calibration.
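The threshold behavior summarized above can be made concrete with a minimal sketch of how FAR, FRR, and EER are commonly computed from genuine and impostor similarity scores. The accept rule (score at or above the threshold) and the toy scores are assumptions for illustration; they are not the scores produced by either model.

```python
def far_frr(genuine, impostor, threshold):
    """FAR: fraction of impostor scores accepted; FRR: fraction of genuine scores rejected.

    A comparison is accepted when its score is >= threshold (assumed convention).
    """
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr

def equal_error_rate(genuine, impostor):
    """Sweep thresholds over the observed scores; return (eer, threshold)
    at the point where |FAR - FRR| is smallest."""
    best = None
    for thr in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, thr)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2.0, thr)
    return best[1], best[2]

# Made-up similarity scores for illustration only.
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.1, 0.2, 0.3, 0.6]
eer, eer_threshold = equal_error_rate(genuine_scores, impostor_scores)
```

Evaluating `far_frr` at a stricter threshold than `eer_threshold` gives the low-FAR operating points that matter for high-security deployments.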

5.3.4. Distributional Separation and d′ Improvements

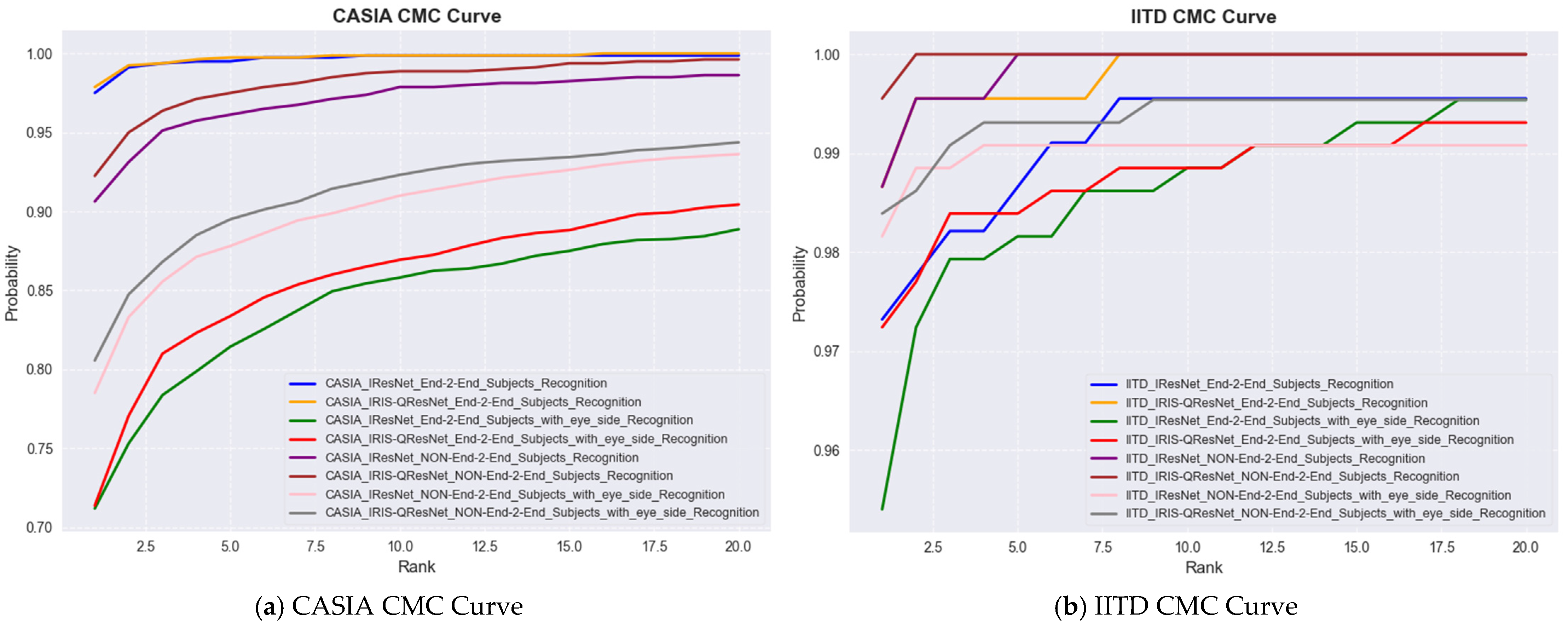
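For reference, a minimal sketch of the decidability index d′ is given below. It assumes the commonly used definition, the absolute difference between the genuine and impostor score means divided by the square root of the average of their variances, with population variances; the toy scores are made up.

```python
import math
import statistics

def d_prime(genuine, impostor):
    """Decidability index between genuine and impostor score distributions.

    Assumes d' = |mu_g - mu_i| / sqrt((var_g + var_i) / 2), population variances.
    """
    mu_g = statistics.mean(genuine)
    mu_i = statistics.mean(impostor)
    var_g = statistics.pvariance(genuine)
    var_i = statistics.pvariance(impostor)
    return abs(mu_g - mu_i) / math.sqrt((var_g + var_i) / 2.0)

# Made-up similarity scores for illustration only.
separation = d_prime([0.8, 0.9, 1.0], [0.1, 0.2, 0.3])
```

A larger d′ indicates that the genuine and impostor score distributions overlap less.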

5.4. Identification Performance

- IRIS-QResNet consistently demonstrates superior discriminative capability and improved convergence behavior, achieving higher CMC-AUC, precision, and recall values than its IResNet counterpart, particularly under challenging imaging conditions.

- When the iris texture is stable and uniform, the advantage of independently optimized feature extraction and classification stages becomes evident. This is reflected in the Non-End-to-End recognition mode, which generally achieves higher accuracy than the End-to-End mode on the IITD and MMU datasets. Conversely, End-to-End mode exhibits superior performance on the CASIA and UBIris datasets, where joint optimization effectively manages greater variability and noise.

- The inclusion of eye-side information introduces additional variability that negatively affects recognition performance. This degradation is most apparent in the End-to-End configurations, where the IResNet models trained for subject recognition with eye side consistently yield the lowest results. However, the impact remains relatively minor when comparing Non-End-to-End recognition with eye-side cues to End-to-End recognition without the eye side.

- Impact of Eye-Side Metadata: Including eye-side information introduces additional intra-class variability, slightly reducing performance in both verification and identification tasks. Despite this, IRIS-QResNet maintains superior performance over IResNet in all configurations, demonstrating its resilience to increased feature variability and confirming that quantum-inspired feature extraction enhances robustness.

- End-to-End vs. Non-End-to-End Trends: Across all datasets, Non-End-to-End embeddings generally exhibit stronger separability than End-to-End configurations, particularly in controlled datasets such as IITD and MMU. This trend is consistent with the improved identification performance observed in CMC curves (Figure 11), where Non-End-to-End modes achieve higher early-rank recognition probabilities. Conversely, End-to-End modes tend to perform better in heterogeneous or noisy datasets, such as CASIA and UBIris, where joint optimization of segmentation and recognition layers improves generalization.
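The early-rank recognition probabilities mentioned above come from CMC curves. The sketch below shows one common way to compute a CMC curve from a probe-by-gallery similarity matrix; the function name, the tie-handling rule, and the toy scores are assumptions for illustration.

```python
def cmc_curve(scores, true_ids, max_rank):
    """Cumulative Match Characteristic.

    scores[p][g] is the similarity of probe p to gallery identity g (higher is better);
    true_ids[p] is the index of the correct gallery identity for probe p.
    Returns a list whose entry k-1 is the fraction of probes identified within rank k.
    """
    hits = [0] * max_rank
    for row, true_id in zip(scores, true_ids):
        # Rank = 1 + number of gallery identities scoring strictly higher than the true one.
        rank = 1 + sum(s > row[true_id] for s in row)
        for k in range(rank, max_rank + 1):
            hits[k - 1] += 1
    return [h / len(scores) for h in hits]

# Made-up similarity matrix: 3 probes against 3 gallery identities.
toy_scores = [
    [0.9, 0.1, 0.0],
    [0.2, 0.3, 0.5],
    [0.6, 0.3, 0.1],
]
cmc = cmc_curve(toy_scores, true_ids=[0, 1, 1], max_rank=3)
```

The first entry of `cmc` is the rank-1 identification rate, and averaging the curve over ranks gives one simple approximation of a CMC-AUC value.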

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IRIS-QResNet | The proposed model |
| IResNet | The baseline model |
| DNN | Deep Neural Network |
| CNN | Convolutional Neural Network |
| ISO | International Organization for Standardization |
| IEC | International Electrotechnical Commission |
| LBP | Local Binary Patterns |
| SVM | Support Vector Machine |
| k-NN | k-Nearest Neighbors |
| ResNet | Residual Network |
| GPU | Graphics Processing Unit |
| NISQ | Noisy Intermediate-Scale Quantum |
| QFT | Quantum Fourier Transform |
| QFFT | Quantum Fast Fourier Transform |
| QNN | Quantum Neural Network |
| QCNN | Quantum Convolutional Neural Network |
| QiNN | Quantum-inspired Neural Network |
| CASIA | Institute of Automation, Chinese Academy of Sciences |
| IITD | Indian Institute of Technology, Delhi |
| MMU | Multimedia University, Malaysia |
| UBIris | The iris dataset of the University of Beira Interior |

References

1. Syed, W.K.; Mohammed, A.; Reddy, J.K.; Dhanasekaran, S. Biometric authentication systems in banking: A technical evaluation of security measures. In Proceedings of the 2024 IEEE 3rd World Conference on Applied Intelligence and Computing (AIC), Gwalior, India, 27–28 July 2024; pp. 1331–1336.

2. ISO/IEC 2382-37:2022; Information Technology, Vocabulary, Part 37: Biometrics. International Organization for Standardization: Geneva, Switzerland, 2022.

3. Cabanillas, F.L.; Leiva, F.M.; Castillo, J.F.P.; Higueras-Castillo, E. Biometrics: Challenges, Trends and Opportunities; CRC Press: Boca Raton, FL, USA, 2025.

4. Yin, Y.; He, S.; Zhang, R.; Chang, H.; Zhang, J. Deep learning for iris recognition: A review. Neural Comput. Appl. 2025, 37, 11125–11173.

5. Ezhilarasan, M.; Poovayar Priya, M. A Novel Approach to the Extraction of Iris Features Using a Deep Learning Network. Res. Sq. 2024, preprint.

6. Moazed, K.T. Iris Anatomy. In The Iris: Understanding the Essentials; Springer International Publishing: Cham, Switzerland, 2020; pp. 15–29.

7. El-Sofany, H.; Bouallegue, B.; El-Latif, Y.M.A. A proposed biometric authentication hybrid approach using iris recognition for improving cloud security. Heliyon 2024, 10, e36390.

8. Valantic and Fujitsu. White Paper Valantic Biolock for Use with SAP®ERP—Powered by Fujitsu PalmSecure. 2018. Available online: https://docs.ts.fujitsu.com/dl.aspx?id=b4cbaa07-5eb2-4f75-a1b5-9f6383299be3 (accessed on 25 October 2025).

9. Sharma, S.B.; Dhall, I.; Nayak, S.R.; Chatterjee, P. Reliable Biometric Authentication with Privacy Protection. In Advances in Communication, Devices and Networking, Proceedings of the ICCDN 2021, Sikkim, India, 15–16 December 2021; Springer: Berlin/Heidelberg, Germany, 2022; pp. 233–249.

10. Al-Waisy, A.S.; Qahwaji, R.; Ipson, S.; Al-Fahdawi, S.; Nagem, T.A.M. A multi-biometric iris recognition system based on a deep learning approach. Pattern Anal. Appl. 2018, 21, 783–802.

11. Nguyen, K.; Proença, H.; Alonso-Fernandez, F. Deep learning for iris recognition: A survey. ACM Comput. Surv. 2024, 56, 1–35.

12. Khayyat, M.M.; Zamzami, N.; Zhang, L.; Nappi, M.; Umer, M. Fuzzy-CNN: Improving personal human identification based on IRIS recognition using LBP features. J. Inf. Secur. Appl. 2024, 83, 103761.

13. Zhu, L.; Chen, T.; Yin, J.; See, S.; Liu, J. Learning gabor texture features for fine-grained recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 1621–1631.

14. Sen, E.; Ince, I.F.; Ozkurt, A.; Akin, F.I. Local Binary Pattern in the Frequency Domain: Performance Comparison with Discrete Cosine Transform and Haar Wavelet Transform. Eng. Technol. Appl. Sci. Res. 2025, 15, 21456–21461.

15. Bhargav, T.; Balachander, B. Implementation of iris based monitoring system using KNN in comparison with SVM for better accuracy. AIP Conf. Proc. 2024, 2853, 020073.

16. Damayanti, F.A.; Asmara, R.A.; Prasetyo, G.B. Residual Network Deep Learning Model with Data Augmentation Effects in the Implementation of Iris Recognition. Int. J. Front. Technol. Eng. 2024, 2, 79–86.

17. Chen, Y.; Gan, H.; Chen, H.; Zeng, Y.; Xu, L.; Heidari, A.A.; Zhu, X.; Liu, Y. Accurate iris segmentation and recognition using an end-to-end unified framework based on MADNet and DSANet. Neurocomputing 2023, 517, 264–278.

18. Hattab, A.; Behloul, A.; Hattab, W. An Accurate Iris Recognition System Based on Deep Transfer Learning and Data Augmentation. In Proceedings of the 2024 1st International Conference on Innovative and Intelligent Information Technologies (IC3IT), Batna, Algeria, 3–5 December 2024; pp. 1–6.

19. Kailas, A.; Girimallaih, M.; Madigahalli, M.; Mahadevachar, V.K.; Somashekarappa, P.K. Ensemble of convolutional neural network and DeepResNet for multimodal biometric authentication system. Int. J. Electr. Comput. Eng. 2025, 15, 4279–4295.

20. Lawrence, T. Deep Neural Network Generation for Image Classification Within Resource-Constrained Environments Using Evolutionary and Hand-Crafted Processes. Ph.D. Thesis, University of Northumbria, Newcastle, UK, 2021.

21. AlRifaee, M.; Almanasra, S.; Hnaif, A.; Althunibat, A.; Abdallah, M.; Alrawashdeh, T. Adaptive Segmentation for Unconstrained Iris Recognition. Comput. Mater. Contin. 2024, 78, 1591–1609.

22. Jan, F.; Alrashed, S.; Min-Allah, N. Iris segmentation for non-ideal Iris biometric systems. Multimed. Tools Appl. 2024, 83, 15223–15251.

23. Benita, J.; Vijay, M. Implementation of Vision Transformers (ViTs) based Advanced Iris Image Analysis for Non-Invasive Detection of Diabetic Conditions. In Proceedings of the 2025 International Conference on Intelligent Computing and Control Systems (ICICCS), Erode, India, 19–21 March 2025; pp. 1451–1457.

24. Wei, J.; Wang, Y.; Huang, H.; He, R.; Sun, Z.; Gao, X. Contextual Measures for Iris Recognition. IEEE Trans. Inf. Forensics Secur. 2022, 18, 57–70.

25. Kadhim, S.; Paw, J.K.S.; Tak, Y.C.; Ameen, S.; Alkhayyat, A. Deep Learning for Robust Iris Recognition: Introducing Synchronized Spatiotemporal Linear Discriminant Model-Iris. Adv. Artif. Intell. Mach. Learn. 2025, 5, 3446–3464.

26. Gill, S.S.; Cetinkaya, O.; Marrone, S.; Claudino, D.; Haunschild, D.; Schlote, L.; Wu, H.; Ottaviani, C.; Liu, X.; Pragna Machupalli, S.; et al. Quantum computing: Vision and challenges. arXiv 2024, arXiv:2403.02240.

27. Rizvi, S.M.A.; Paracha, U.I.; Khalid, U.; Lee, K.; Shin, H. Quantum Machine Learning: Towards Hybrid Quantum-Classical Vision Models. Mathematics 2025, 13, 2645.

28. Khanna, S.; Dhingra, S.; Sindhwani, N.; Vashisth, R. Improved Pattern Recognition—Quantum Machine Learning. In Quantum Machine Learning in Industrial Automation; Springer: Cham, Switzerland, 2025; pp. 405–427.

29. Li, Y.; Cheng, Z.; Zhang, Y.; Xu, R. QPCNet: A Hybrid Quantum Positional Encoding and Channel Attention Network for Image Classification. Phys. Scr. 2025, 100, 115101.

30. Orka, N.A.; Awal, M.A.; Liò, P.; Pogrebna, G.; Ross, A.G.; Moni, M.A. Quantum Deep Learning in Neuroinformatics: A Systematic Review. Artif. Intell. Rev. 2025, 58, 134.

31. Kumaran, K.; Sajjan, M.; Oh, S.; Kais, S. Random Projection Using Random Quantum Circuits. Phys. Rev. Res. 2024, 6, 13010.

32. Danish, S.M.; Kumar, S. Quantum Convolutional Neural Networks Based Human Activity Recognition Using CSI. In Proceedings of the 2025 17th International Conference on COMmunication Systems and NETworks (COMSNETS), Bengaluru, India, 6–10 January 2025; pp. 945–949.

33. Henderson, M.; Shakya, S.; Pradhan, S.; Cook, T. Quanvolutional neural networks: Powering image recognition with quantum circuits. Quantum Mach. Intell. 2020, 2, 2.

34. Ng, K.-H.; Song, T.; Liu, Z. Hybrid Quantum-Classical Convolutional Neural Networks for Image Classification in Multiple Color Spaces. arXiv 2024, arXiv:2406.02229.

35. El-Sayed, M.A.; Abdel-Latif, M.A. Iris recognition approach for identity verification with DWT and multiclass SVM. PeerJ Comput. Sci. 2022, 8, e919.

36. Mehrotra, H.; Sa, P.K.; Majhi, B. Fast segmentation and adaptive SURF descriptor for iris recognition. Math. Comput. Model. 2013, 58, 132–146.

37. Malgheet, J.R.; Manshor, N.B.; Affendey, L.S.; Halin, A.B.A. Iris recognition development techniques: A comprehensive review. Complexity 2021, 2021, 6641247.

38. Spataro, L.; Pannozzo, A.; Raponi, F. Iris Recognition: Comparison of Traditional Biometric Pipeline vs. Deep Learning-Based Methods. 2025. Available online: https://federicoraponi.it/data/files/iris-recognition/IrisRecognitionProject.pdf (accessed on 1 May 2025).

39. Toizumi, T.; Takahashi, K.; Tsukada, M. Segmentation-free direct iris localization networks. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 991–1000.

40. Latif, S.A.; Sidek, K.A.; Hashim, A.H.A. An Efficient Iris Recognition Technique using CNN and Vision Transformer. J. Adv. Res. Appl. Sci. Eng. Technol. 2024, 34, 235–245.

41. Minaee, S.; Abdolrashidiy, A.; Wang, Y. An experimental study of deep convolutional features for iris recognition. In Proceedings of the 2016 IEEE Signal Processing in Medicine and Biology Symposium (SPMB), Philadelphia, PA, USA, 3 December 2016; IEEE: New York, NY, USA, 2016; pp. 1–6.

42. Al-Dabbas, H.M.; Azeez, R.A.; Ali, A.E. High-accuracy models for iris recognition with merging features. Int. J. Adv. Appl. Sci. 2024, 11, 89–96.

43. Ruiz-Beltrán, C.A.; Romero-Garcés, A.; González-Garcia, M.; Marfil, R.; Bandera, A. FPGA-Based CNN for eye detection in an Iris recognition at a distance system. Electronics 2023, 12, 4713.

44. Sohail, M.A.; Ghosh, C.; Mandal, S.; Mallick, M.M. Specular reflection removal techniques for noisy and non-ideal iris images: Two new approaches. In Proceedings of the International Conference on Frontiers in Computing and Systems: COMSYS 2021, Shillong, India, 29 September–1 October 2022; pp. 277–289.

45. AbuGhanem, M.; Eleuch, H. NISQ computers: A path to quantum supremacy. IEEE Access 2024, 12, 102941–102961.

46. Benedetti, M.; Lloyd, E.; Sack, S.; Fiorentini, M. Parameterized Quantum Circuits as Machine Learning Models. Quantum Sci. Technol. 2019, 4, 43001.

47. Wold, K. Parameterized Quantum Circuits for Machine Learning. Master’s Thesis, University of Oslo, Faculty of Mathematics and Natural Sciences, Oslo, Norway, 2021.

48. Tomal, S.M.; Al Shafin, A.; Afaf, A.; Bhattacharjee, D. Quantum Convolutional Neural Network: A Hybrid Quantum-Classical Approach for Iris Dataset Classification. arXiv 2024, arXiv:2410.16344.

49. Chen, A.; Yin, H.-L.; Chen, Z.-B.; Wu, S. Hybrid Quantum-inspired Resnet and Densenet for Pattern Recognition with Completeness Analysis. arXiv 2024, arXiv:2403.05754.

50. Senokosov, A.; Sedykh, A.; Sagingalieva, A.; Kyriacou, B.; Melnikov, A. Quantum machine learning for image classification. Mach. Learn. Sci. Technol. 2024, 5, 015040.

51. Alsubai, S.; Alqahtani, A.; Alanazi, A.; Sha, M.; Gumaei, A. Facial emotion recognition using deep quantum and advanced transfer learning mechanism. Front. Comput. Neurosci. 2024, 18, 1435956.

52. Ciylan, F.; Ciylan, B.I. Fake human face recognition with classical-quantum hybrid transfer learning. Comput. Inform. 2021, 1, 46–55.

53. Hossain, S.; Umer, S.; Rout, R.K.; Al Marzouqi, H. A deep quantum convolutional neural network based facial expression recognition for mental health analysis. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 1556–1565.

54. Tomar, S.; Tripathi, R.; Kumar, S. Comprehensive survey of qml: From data analysis to algorithmic advancements. arXiv 2025, arXiv:2501.09528.

55. Misra, D. Mish: A Self Regularized Non-Monotonic Activation Function. arXiv 2019, arXiv:1908.08681.

56. Pandey, G.K.; Srivastava, S. ResNet-18 Comparative Analysis of Various Activation Functions for Image Classification. In Proceedings of the 2023 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 26–28 April 2023; pp. 595–601.

57. Hosny, M.; Elgendy, I.A.; Chelloug, S.A.; Albashrawi, M.A. Attention-Based Convolutional Neural Network Model for Skin Cancer Classification. IEEE Access 2025, 13, 172027–172050.

58. Anand, R. Quantum-Enhanced Soil Nutrient Estimation Exploiting Hyperspectral Data with Quantum Fourier Transform. IEEE Geosci. Remote Sens. Lett. 2025, 22, 5507705.

59. Kashani, S.; Alqasemi, M.; Hammond, J. A Quantum Fourier Transform (QFT) Based Note Detection Algorithm. arXiv 2022, arXiv:2204.11775.

60. Institute of Automation, Chinese Academy of Sciences. CASIA-Iris-Thousand. Available online: http://Biometrics.idealtest.org/ (accessed on 1 January 2024).

61. Kumar, A.; Passi, A. Comparison and combination of iris matchers for reliable personal authentication. Pattern Recognit. 2010, 43, 1016–1026.

62. Multimedia University. MMU Iris Image Database; Multimedia University: Cyberjaya, Malaysia, 2005.

63. Proença, H.; Alexandre, L.A. UBIRIS: A noisy iris image database. In Proceedings of the 13th International Conference on Image Analysis and Processing—ICIAP 2005, Cagliari, Italy, 6–8 September 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 970–977.

64. Anden, E. Impact of Occlusion Removal on PCA and LDA for Iris Recognition, Stanford University. 2016, pp. 1–6. Available online: https://web.stanford.edu/class/ee368/Project_Autumn_1516/Reports/Anden.pdf (accessed on 12 March 2024).

65. Jaha, E.S. Augmented Deep-Feature-Based Ear Recognition Using Increased Discriminatory Soft Biometrics. Comput. Model. Eng. Sci. 2025, 144, 3645–3678.

66. Hashim, A.N.; Al-Khalidy, R.R. Iris identification based on the fusion of multiple methods. Iraqi J. Sci. 2021, 62, 1364–1375.

67. Mulyana, S.; Wibowo, M.E.; Kurniawan, A. Two-Step Iris Recognition Verification Using 2D Gabor Wavelet and Domain-Specific Binarized Statistical Image Features. IJCCS Indones. J. Comput. Cybern. Syst. 2025, 19, 1–5.

68. Sallam, A.; Al Amery, H.; Al-Qudasi, S.; Al-Ghorbani, S.; Rassem, T.H.; Makbol, N.M. Iris recognition system using convolutional neural network. In Proceedings of the 2021 International Conference on Software Engineering & Computer Systems and 4th International Conference on Computational Science and Information Management (ICSECS-ICOCSIM), Pekan, Malaysia, 24–26 August 2021; pp. 109–114.

69. He, F.; Han, Y.; Wang, H.; Ji, J.; Liu, Y.; Ma, Z. Deep learning architecture for iris recognition based on optimal Gabor filters and deep belief network. J. Electron. Imaging 2017, 26, 23005.

70. He, S.; Li, X. EnhanceDeepIris Model for Iris Recognition Applications. IEEE Access 2024, 12, 66809–66821.

71. Therar, H.M.; Mohammed, L.D.E.A.; Ali, A.J. Multibiometric system for iris recognition based convolutional neural network and transfer learning. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1105, 12032.

72. Michael, R.T.F.; Rmesh, G.; Agoramoorthy, M.; Sujatha, D. Quantum-Inspired Neural Network For Enhanced Image Recognition. In Proceedings of the 2023 Intelligent Computing and Control for Engineering and Business Systems (ICCEBS), Chennai, India, 14–15 December 2023; pp. 1–5.

73. Midhunchakkaravarthy, D.; Shirke-Deshmukh, S.; Deshpande, V. NAS-QDCNN: Neuron Attention Stage-by-Stage Quantum Dilated Convolutional Neural Network for Iris Recognition at a Distance. Biomed. Eng. 2025, 2550021.

- Li, H.; Zhang, S.; Zhang, Z. Research on Iris Recognition Method Based on Quantum Algorithms. TELKOMNIKA Indones. J. Electr. Eng. 2014, 12, 6846–6851. [Google Scholar] [CrossRef]

- Kale, D.R.; Shinde, H.B.; Shreshthi, R.R.; Jadhav, A.N.; Salunkhe, M.J.; Patil, A.R. Quantum-Enhanced Iris Biometrics: Ad-vancing Privacy and Security in Healthcare Systems. In Proceedings of the 2025 International Conference on Next Gener-ation Information System Engineering (NGISE), Ghaziabad, Delhi (NCR), India, 28–29 March 2025; Volume 1, pp. 1–6. [Google Scholar]

- López-Garc’ia, M.; Cantó-Navarro, E. Post-Quantum Authentication Framework Based on Iris Recognition and Homo-morphic Encryption. IEEE Access 2025, 13, 155015–155030. [Google Scholar] [CrossRef]

| Dataset: Recognized Target | CASIA: Subject | CASIA: Subject with Eye Side | IITD: Subject | IITD: Subject with Eye Side | MMU: Subject | MMU: Subject with Eye Side | UBIris: Subject |
|---|---|---|---|---|---|---|---|
| Images | 8000 | 8000 | 2240 | 2170 | 450 | 450 | 1205 |
| Images per class | 10 | 5 | 10 | 5 | 10 | 5 | 5 |
| Classes | 800 | 1600 | 224 | 453 | 45 | 90 | 241 |

| Dataset | Non-End-to-End (Cropped Iris): Subject | Non-End-to-End (Cropped Iris): Subject with Eye Side | End-to-End (Full Eye): Subject | End-to-End (Full Eye): Subject with Eye Side |
|---|---|---|---|---|
| Image size | 80 × 320 | 80 × 320 | 160 × 120 | 160 × 120 |
| Images per class (Subject) | 10 | 5 | 10 | 5 |
| CASIA | ✓ | ✓ | ✓ | ✓ |
| IITD | ✓ | ✓ | ✓ | ✓ |
| MMU | ✓ | ✓ | ✓ | ✓ |
| UBIris | ✓ | N.A. | ✓ | N.A. |

| Dataset | Epochs | Batch Size | LR | WD | Reg. | Dropout |
|---|---|---|---|---|---|---|
| CASIA and UBIris | 100 | 16 | 2 × 10⁻⁴ | 1 × 10⁻⁶ | 1 × 10⁻⁶ | 0.15 |
| IITD | 120 | 32 | 3 × 10⁻⁴ | 3 × 10⁻⁶ | 5 × 10⁻⁶ | 0.18 |
| MMU | 100 | 16 | 4 × 10⁻⁴ | 5 × 10⁻⁵ | 5 × 10⁻⁵ | 0.4 |

| Dataset | Case | C/Q | ROC-AUC | EER | Test Acc. (%) | 1-EER | Improve | Genuine Avg | Genuine Std | Imposter Avg | Imposter Std | d' |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CASIA | End-to-End (E2E) | C | 0.9699 | 0.0125 | 97.50 | 0.9875 | Baseline | 0.9753 | 0.0920 | 0.5732 | 0.2429 | 2.1893 |
| CASIA | End-to-End (E2E) | Q | 0.9860 | 0.0107 | 97.88 | 0.9894 | 0.18% | 0.9844 | 0.0682 | 0.4996 | 0.2298 | 2.8602 |
| CASIA | E2E + eye-side | C | 0.9372 | 0.1442 | 71.19 | 0.8559 | Baseline | 0.9434 | 0.1282 | 0.5538 | 0.2391 | 2.0309 |
| CASIA | E2E + eye-side | Q | 0.9049 | 0.1433 | 71.38 | 0.8568 | 0.09% | 0.9051 | 0.1641 | 0.5456 | 0.2246 | 1.8277 |
| CASIA | Non-End-to-End (~E2E) | C | 0.9570 | 0.0470 | 90.63 | 0.9531 | Baseline | 0.9116 | 0.1651 | 0.4161 | 0.1884 | 2.7973 |
| CASIA | Non-End-to-End (~E2E) | Q | 0.9548 | 0.0388 | 92.25 | 0.9612 | 0.82% | 0.9333 | 0.1515 | 0.4576 | 0.2005 | 2.6770 |
| CASIA | ~E2E + eye-side | C | 0.9359 | 0.1076 | 78.50 | 0.8925 | Baseline | 0.7914 | 0.2609 | 0.2443 | 0.1552 | 2.5487 |
| CASIA | ~E2E + eye-side | Q | 0.9434 | 0.0973 | 80.56 | 0.9028 | 1.03% | 0.8509 | 0.2282 | 0.3005 | 0.1745 | 2.7096 |
| IITD | End-to-End (E2E) | C | 0.9694 | 0.0135 | 97.32 | 0.9866 | Baseline | 0.9054 | 0.1929 | 0.2850 | 0.2148 | 3.0390 |
| IITD | End-to-End (E2E) | Q | 0.9925 | 0.0068 | 98.66 | 0.9933 | 0.67% | 0.9756 | 0.0957 | 0.3524 | 0.1218 | 5.6898 |
| IITD | E2E + eye-side | C | 0.9842 | 0.0231 | 95.40 | 0.9770 | Baseline | 0.8652 | 0.2303 | 0.1243 | 0.0951 | 4.2052 |
| IITD | E2E + eye-side | Q | 0.9931 | 0.0139 | 97.24 | 0.9862 | 0.92% | 0.9488 | 0.1437 | 0.1706 | 0.0973 | 6.3416 |
| IITD | Non-End-to-End (~E2E) | C | 0.9925 | 0.0068 | 98.66 | 0.9933 | Baseline | 0.9418 | 0.1305 | 0.1944 | 0.0437 | 7.6803 |
| IITD | Non-End-to-End (~E2E) | Q | 1.0000 | 0.0023 | 99.55 | 0.9978 | 0.45% | 0.9856 | 0.0828 | 0.2243 | 0.0000 | 13.0029 |
| IITD | ~E2E + eye-side | C | 0.9859 | 0.0092 | 98.16 | 0.9908 | Baseline | 0.7252 | 0.2592 | 0.0633 | 0.0543 | 3.5346 |
| IITD | ~E2E + eye-side | Q | 0.9967 | 0.0081 | 98.39 | 0.9920 | 0.11% | 0.9495 | 0.1133 | 0.1337 | 0.1562 | 5.9789 |
| MMU | End-to-End (E2E) | C | 0.8200 | 0.1137 | 77.78 | 0.8864 | Baseline | 0.7875 | 0.2616 | 0.5255 | 0.1730 | 1.1814 |
| MMU | End-to-End (E2E) | Q | 0.8419 | 0.0682 | 86.67 | 0.9319 | 4.55% | 0.8874 | 0.1883 | 0.6360 | 0.2210 | 1.2245 |
| MMU | E2E + eye-side | C | 0.8316 | 0.2528 | 50.00 | 0.7472 | Baseline | 0.5698 | 0.2394 | 0.2733 | 0.1821 | 1.3941 |
| MMU | E2E + eye-side | Q | 0.7789 | 0.1685 | 66.67 | 0.8315 | 8.43% | 0.6775 | 0.2642 | 0.4016 | 0.2172 | 1.1408 |
| MMU | Non-End-to-End (~E2E) | C | 0.9206 | 0.0341 | 93.33 | 0.9659 | Baseline | 0.8224 | 0.1847 | 0.3863 | 0.2139 | 2.1823 |
| MMU | Non-End-to-End (~E2E) | Q | 0.9545 | 0.0114 | 97.78 | 0.9887 | 2.28% | 0.9535 | 0.1111 | 0.5156 | 0.0000 | 5.5741 |
| MMU | ~E2E + eye-side | C | 0.9221 | 0.1124 | 77.78 | 0.8877 | Baseline | 0.5120 | 0.2817 | 0.1528 | 0.0721 | 1.7470 |
| MMU | ~E2E + eye-side | Q | 0.9125 | 0.0562 | 88.89 | 0.9439 | 5.62% | 0.8283 | 0.2320 | 0.3321 | 0.2357 | 2.1218 |
| UBIris | End-to-End | C | 0.9882 | 0.0271 | 94.60 | 0.9730 | Baseline | 0.8392 | 0.2185 | 0.1144 | 0.0826 | 4.3881 |
| UBIris | End-to-End | Q | 0.9858 | 0.0125 | 97.51 | 0.9875 | 1.46% | 0.9592 | 0.1331 | 0.2824 | 0.1535 | 4.7110 |
| UBIris | Non-End-to-End | C | 0.9202 | 0.0542 | 89.21 | 0.9459 | Baseline | 0.4525 | 0.3078 | 0.0743 | 0.0716 | 1.6925 |
| UBIris | Non-End-to-End | Q | 0.9465 | 0.0250 | 95.02 | 0.9750 | 2.92% | 0.8442 | 0.2272 | 0.2418 | 0.2579 | 2.4787 |
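
The d' column in the table above appears to follow the standard decidability index, d' = |μ_genuine − μ_imposter| / sqrt((σ_genuine² + σ_imposter²) / 2). That formula is an assumption here, since this section does not state it, but it matches the tabulated values to within rounding. The following Python sketch recomputes d' and 1-EER from the reported statistics; the function names are illustrative and not taken from the authors' code.

```python
import math


def d_prime(mean_genuine, std_genuine, mean_imposter, std_imposter):
    """Decidability index between genuine and imposter score distributions.

    Assumed formula (standard in iris biometrics, not quoted from the paper):
    |mu_g - mu_i| / sqrt((sigma_g^2 + sigma_i^2) / 2).
    """
    pooled_std = math.sqrt((std_genuine ** 2 + std_imposter ** 2) / 2.0)
    if pooled_std == 0.0:
        raise ValueError("d' is undefined when both standard deviations are zero")
    return abs(mean_genuine - mean_imposter) / pooled_std


def one_minus_eer(eer):
    """Complement of the equal error rate, as listed in the 1-EER column."""
    return 1.0 - eer


# CASIA, End-to-End, classic baseline (C): table reports d' = 2.1893
casia_e2e_classic = d_prime(0.9753, 0.0920, 0.5732, 0.2429)

# IITD, Non-End-to-End, quantum-inspired model (Q): table reports d' = 13.0029
iitd_cropped_quantum = d_prime(0.9856, 0.0828, 0.2243, 0.0000)

print(round(casia_e2e_classic, 4), round(iitd_cropped_quantum, 4))
print(round(one_minus_eer(0.0125), 4))  # CASIA E2E classic, table lists 0.9875
```

Because the pooled standard deviation sits in the denominator, an imposter standard deviation reported as 0.0000 inflates d' sharply, which is worth keeping in mind when reading the largest values in the table.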

| Dataset | Case | Classic/Quantum | R1 | R5 | Avg. (R1–R5, %) | Improve | CMC-AUC | Precision | Recall |
|---|---|---|---|---|---|---|---|---|---|
| CASIA | End-to-End | Classic | 0.9750 | 0.9950 | 99.0000 | Baseline | 94.7030 | 0.9631 | 0.9750 |
| CASIA | End-to-End | Quantum | 0.9788 | 0.9975 | 99.1750 | 0.38% | 94.7720 | 0.9681 | 0.9788 |
| CASIA | End-to-End with eye-side | Classic | 0.7119 | 0.8144 | 77.2380 | Baseline | 80.2170 | 0.6207 | 0.7119 |
| CASIA | End-to-End with eye-side | Quantum | 0.7137 | 0.8338 | 79.0250 | 0.18% | 81.7080 | 0.6231 | 0.7137 |
| CASIA | Non-End-to-End | Classic | 0.9062 | 0.9613 | 94.1500 | Baseline | 92.2380 | 0.8679 | 0.9062 |
| CASIA | Non-End-to-End | Quantum | 0.9225 | 0.9750 | 95.6500 | 1.63% | 93.3660 | 0.8882 | 0.9225 |
| CASIA | Non-End-to-End with eye-side | Classic | 0.7850 | 0.8781 | 84.4620 | Baseline | 85.6250 | 0.7090 | 0.7850 |
| CASIA | Non-End-to-End with eye-side | Quantum | 0.8056 | 0.8950 | 86.0250 | 2.06% | 86.7360 | 0.7347 | 0.8056 |
| IITD | End-to-End | Classic | 0.9732 | 0.9866 | 98.0360 | Baseline | 94.2080 | 0.9598 | 0.9732 |
| IITD | End-to-End | Quantum | 0.9866 | 0.9955 | 99.3750 | 1.34% | 94.8330 | 0.9799 | 0.9866 |
| IITD | End-to-End with eye-side | Classic | 0.9540 | 0.9816 | 97.3330 | Baseline | 93.7360 | 0.9322 | 0.9540 |
| IITD | End-to-End with eye-side | Quantum | 0.9724 | 0.9839 | 98.0230 | 1.84% | 93.8560 | 0.9586 | 0.9724 |
| IITD | Non-End-to-End | Classic | 0.9866 | 1.0000 | 99.4640 | Baseline | 94.9000 | 0.9799 | 0.9866 |
| IITD | Non-End-to-End | Quantum | 0.9955 | 1.0000 | 99.9110 | 0.89% | 94.9890 | 0.9933 | 0.9955 |
| IITD | Non-End-to-End with eye-side | Classic | 0.9816 | 0.9908 | 98.8050 | Baseline | 94.0800 | 0.9736 | 0.9816 |
| IITD | Non-End-to-End with eye-side | Quantum | 0.9839 | 0.9931 | 98.9430 | 0.23% | 94.4080 | 0.9759 | 0.9839 |
| MMU | End-to-End | Classic | 0.7778 | 0.9111 | 88.0000 | Baseline | 92.1110 | 0.6926 | 0.7778 |
| MMU | End-to-End | Quantum | 0.8667 | 1.0000 | 95.5560 | 8.89% | 94.2220 | 0.8111 | 0.8667 |
| MMU | End-to-End with eye-side | Classic | 0.5000 | 0.7444 | 73.5560 | Baseline | 87.6000 | 0.3920 | 0.5000 |
| MMU | End-to-End with eye-side | Quantum | 0.6667 | 0.8556 | 80.6670 | 16.67% | 89.5600 | 0.5796 | 0.6667 |
| MMU | Non-End-to-End | Classic | 0.9333 | 1.0000 | 97.7780 | Baseline | 94.6110 | 0.9000 | 0.9333 |
| MMU | Non-End-to-End | Quantum | 0.9778 | 1.0000 | 99.1110 | 4.45% | 94.8330 | 0.9667 | 0.9778 |
| MMU | Non-End-to-End with eye-side | Classic | 0.7778 | 0.8444 | 82.2220 | Baseline | 85.0280 | 0.6833 | 0.7778 |
| MMU | Non-End-to-End with eye-side | Quantum | 0.8889 | 0.9444 | 92.6670 | 11.11% | 92.1110 | 0.8407 | 0.8889 |
| UBIris | End-to-End | Classic | 0.9461 | 0.9751 | 96.1830 | Baseline | 93.9110 | 0.9205 | 0.9461 |
| UBIris | End-to-End | Quantum | 0.9751 | 0.9876 | 98.2570 | 2.90% | 94.2010 | 0.9627 | 0.9751 |
| UBIris | Non-End-to-End | Classic | 0.8921 | 0.9461 | 92.7800 | Baseline | 91.0890 | 0.8489 | 0.8921 |
| UBIris | Non-End-to-End | Quantum | 0.9502 | 0.9751 | 96.5980 | 5.81% | 93.6830 | 0.9302 | 0.9502 |
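
The "Improve" column in the identification table appears to be the rank-1 (R1) accuracy of the quantum-inspired model minus that of the classic baseline, expressed in percentage points. This reading is inferred from the numbers rather than stated in this section. A minimal Python sketch, with a hypothetical helper name, that reproduces a few of the tabulated gains:

```python
def rank1_gain_pp(classic_r1, quantum_r1):
    """Rank-1 accuracy gain of the quantum model over the classic baseline,
    in percentage points (assumed definition of the "Improve" column)."""
    return round((quantum_r1 - classic_r1) * 100.0, 2)


# (classic R1, quantum R1) pairs copied from the identification table
examples = {
    "CASIA End-to-End": (0.9750, 0.9788),       # table lists 0.38%
    "IITD End-to-End": (0.9732, 0.9866),        # table lists 1.34%
    "MMU End-to-End": (0.7778, 0.8667),         # table lists 8.89%
    "UBIris Non-End-to-End": (0.8921, 0.9502),  # table lists 5.81%
}

for case, (classic_r1, quantum_r1) in examples.items():
    print(f"{case}: {rank1_gain_pp(classic_r1, quantum_r1):.2f} pp")
```

Under this reading the gains are absolute differences, not relative improvements, so a gain of 8.89 on MMU End-to-End means 77.78% rising to 86.67% rank-1 accuracy.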

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Share and Cite

Dahan, N.A.; Jaha, E.S. IRIS-QResNet: A Quantum-Inspired Deep Model for Efficient Iris Biometric Identification and Authentication. Sensors 2026, 26, 121. https://doi.org/10.3390/s26010121
