Highlights

What are the main findings?

- Facial surface EMG can assess facial palsy severity.

- Biofeedback in facial palsy can be facilitated by appropriate EMG parameters.

What are the implications of the main findings?

- Motion classification (movement vs. rest) by sEMG is feasible even in severe cases of facial palsy.

- The results constitute the foundation for further studies on biofeedback algorithms needed for EMG biofeedback in facial palsy.

Abstract

Facial palsy (FP) significantly impacts patients’ quality of life. The accurate classification of FP severity is crucial for personalized treatment planning. Additionally, electromyographic (EMG)-based biofeedback shows promising results in improving recovery outcomes. This prospective study aimed to identify EMG time series features that can both classify FP and facilitate biofeedback. To this end, it investigated surface EMG in FP patients and healthy controls during three different facial movements. Repeated-measures ANOVAs (rmANOVA) were conducted to examine the effects of MOTION (move/rest), SIDE (healthy/lesioned) and the House–Brackmann score (HB) across 20 distinct EMG parameters. Correlation analysis was performed between HB and the asymmetry index of EMG parameters, complemented by Fisher score calculations to assess feature relevance in distinguishing between HB levels. Overall, 55 subjects (51.2 ± 14.73 years, 35 female) were included in the study. RmANOVAs revealed a highly significant effect of MOTION across almost all movement types (p < 0.001). Integrating the findings from rmANOVA, the correlation analysis and Fisher score analysis, at least 5/20 EMG parameters were determined to be robust indicators for assessing the degree of paresis and guiding biofeedback. This study demonstrates that EMG can reliably determine severity and guide effective biofeedback in FP, even in severe cases. Our findings support the integration of EMG into personalized rehabilitation strategies. However, further studies are needed to improve recovery outcomes.

Keywords:

facial palsy; recovery; rehabilitation; grading; electromyography; biofeedback; time series features

1. Introduction

The manifestation of facial palsy (FP) can cause a significant reduction in quality of life (QoL) [1,2,3,4,5]. In addition to aesthetic and psychological problems, physical complaints such as oral incompetence, incomplete eye closure or temporomandibular joint dysfunction affect patients’ daily lives. Whereas idiopathic FP patients often recover very well, the recovery phase after iatrogenic FP can sometimes be significantly delayed [6,7]. In order to ensure a proper recovery process and therapy monitoring, it is essential to objectively quantify the degree of FP. The most commonly used conventional grading scales, such as the House–Brackmann (HB) score and the Sunnybrook scale, have the disadvantage that they are highly dependent on the examiner [8,9,10,11]. Invasive electromyography (iEMG), on the other hand, can objectively measure muscle activity, but is painful for the patient and involves considerable time and effort in everyday practice.

In this regard, surface electromyography (sEMG) may be a suitable method for quantifying the degree of FP. Surface EMG does not examine individual muscle fibers, but a summation of the electrical activity of multiple motor units, including those from neighboring muscles. This can lead to interference phenomena and potential artifacts. Despite this imprecision compared to the iEMG, a few studies have demonstrated adequate application of sEMG for FP grading [12,13,14,15,16]. Franz et al. [13] investigated the correlation between sEMG and the Sunnybrook scale using 33 patients with FP after vestibular schwannoma (VS) surgery. They found a significantly higher variability of sEMG on the lesioned side as well as a significant correlation with the Sunnybrook score. Another study developed a semi-automated assessment system for facial nerve function based on sEMG and machine learning to replace the subjective HB score with a more objective method [12]. The sEMG showed promising classification performance indicating an alternative to the HB score, especially in the assessment of facial nerve function after VS surgery. However, the underlying data are based on a small patient cohort, and the grading of EMG characteristics remains unclear due to the use of machine learning methods.

At the same time, there is evidence that sEMG applications can be used for biofeedback training in FP patients or after facial nerve reconstruction [17,18,19,20,21,22,23,24]. For instance, it has been shown that synkinetic activity can be significantly reduced or prevented by sEMG biofeedback in cases of a facial aberrant reinnervation syndrome (FARS) [17,24]. Cronin et al. [20] revealed that neuromuscular facial retraining in combination with EMG can significantly improve facial function, symmetry and movement even in long-term FP. However, the biofeedback training programs performed in the studies differ considerably in terms of the selected patient population, the frequency and timing of the training. In addition, for the assessment of a sEMG, multiple (time series) features (e.g., root mean square [RMS], mean absolute value [MAV], and variance [VAR]) can be surveyed [25,26,27]. Previous studies have mostly used commercial biofeedback systems designed for use on extremity muscles applying mainly the MAV, RMS and integrated EMG (iEMG) [28].

Nevertheless, it is still quite unclear which parameters are best suited for facial applications and can be used for monitoring the degree of FP on the one hand and for biofeedback training on the other. The aim of this study was to identify sEMG time series parameters that are suitable for the classification and biofeedback of FP.

2. Materials and Methods

2.1. Study Cohort

This prospective, single center study examined a total of 55 German subjects with facial sEMG to find suitable sEMG parameters both for detecting the degree of FP and for facial biofeedback. Patients were recruited during in- and outpatient stays at the Department of Neurosurgery at the University Hospital Tübingen, Germany, between July and December 2024. They had either undergone previous tumor resection in the cerebellopontine angle or were diagnosed with idiopathic facial palsy, and presented with or without unilateral facial palsy of varying severity according to the HB score. In addition, a healthy cohort (without cranial surgery) was also recruited at the Tübingen University Hospital. Exclusion criteria were bilateral facial palsy, cognitive deficits, pregnancy and factors that make EMG measurement in the face impossible (e.g., skin rash, heavy beard growth). The study was approved by the local ethics committee and performed in accordance with the Declaration of Helsinki. All participants gave written informed consent.

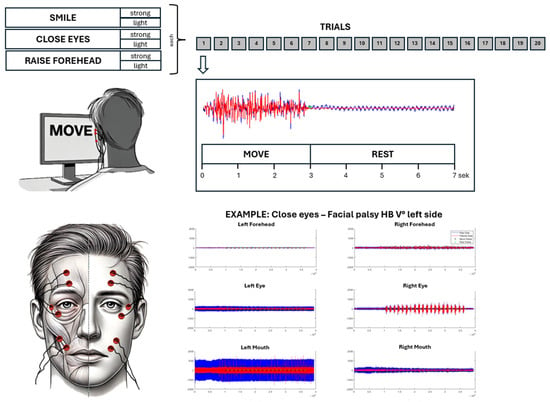

2.2. Data Acquisition and Experimental Setup

After grading the facial palsy according to the HB score (I: no facial palsy to VI: complete paralysis) and positioning the electrodes (reusable Ag-AgCl snap electrodes with 4 mm diameter, Biopac Systems, Inc., Goleta, CA, USA) in a bipolar setting according to the scheme in Figure 1, each participant took part in a 30 min sEMG session. A bipolar electrode configuration was selected, as previous studies indicated a potential advantage in surface EMG applications in comparison to monopolar and common average reference configurations [29]. Furthermore, special consideration was given to the impedances being as small as feasible in order to achieve the best possible EMG signal quality [30]. The session consisted of 6 runs with different movements, which were performed in a randomized order: smile strongly, smile lightly, close eyes strongly, close eyes lightly, raise forehead strongly, raise forehead lightly. Each run consisted of 20 trials, i.e., the same movement sequence was performed 20 times with a 3 s MOVE interval and a 4 s REST interval. The patients received the commands via a computer screen in front of them. In addition, they received an auditory signal at the beginning of the REST cycle. Prior to starting the experiment, subjects were instructed in detail about the meaning of the commands. During “smile strongly”, they were supposed to smile with maximum force, while during “smile lightly”, they were asked to smile with a slight mouth angle excursion. Closing the eyes meant always closing the eyes completely, but squinting hard during “close eyes strongly” and only closing them gently during “close eyes lightly”. Finally, “raise forehead strongly” meant raising the forehead again with maximum force, while the forehead should only be raised a little during “raise forehead lightly”. 
Throughout the whole session, a trained investigator was present in the room and monitored the performance of each trial to ensure that all commands were understood and executed correctly. The entire EMG dataset was streamed with the commercial Neuro Omega (software version 1.6.5.0) recording device (Alpha Omega Engineering, Nof HaGalil, Israel) at a sampling rate of 2000 Hz and transferred and stored on an external computer.

Figure 1.

Experimental setup. Six EMG channels are recorded according to the shown template (bottom left) during the performance of six mimic movement exercises/runs (top left). Each run consisted of 20 trials, which were composed of a MOVE (3 s) and a REST interval (4 s) (top right).
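The timing structure of a run (20 trials, 3 s MOVE, 4 s REST, 2000 Hz sampling rate) implies fixed sample ranges for each interval. The following minimal sketch derives them; it assumes that a run starts with a MOVE interval at sample 0 and that the intervals follow each other without gaps, which is not stated explicitly in the text. The function name `segment_run` is hypothetical.

```python
# Sample ranges of MOVE/REST intervals for one run.
# Assumptions (not confirmed by the study): the run starts with MOVE at
# sample `start`, and intervals are contiguous.

FS_HZ = 2000      # sampling rate stated in the text
MOVE_S = 3        # MOVE interval duration in seconds
REST_S = 4        # REST interval duration in seconds
N_TRIALS = 20     # trials per run


def segment_run(n_trials=N_TRIALS, fs=FS_HZ, move_s=MOVE_S, rest_s=REST_S, start=0):
    """Return a list of (label, first_sample, end_sample_exclusive) tuples."""
    intervals = []
    pos = start
    for _ in range(n_trials):
        for label, duration in (("MOVE", move_s), ("REST", rest_s)):
            end = pos + duration * fs
            intervals.append((label, pos, end))
            pos = end
    return intervals


intervals = segment_run()
move_intervals = [iv for iv in intervals if iv[0] == "MOVE"]
rest_intervals = [iv for iv in intervals if iv[0] == "REST"]
```

Under these assumptions, one run spans 20 × 7 s = 140 s per channel, and each MOVE and REST interval can be sliced from the recorded signal with its start and end index.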

2.3. EMG Analysis and Feature Extraction

Offline data analysis was performed using custom-written scripts in MATLAB (MathWorks Inc., Natick, MA, USA, R2022b). Raw electromyographic data of all movement types/runs were imported into MATLAB. Subsequently, data were filtered with a 4th order Butterworth bandpass filter (10–250 Hz) and a 4th order Butterworth bandstop filter (suppression of frequencies from 48.5–51.5 Hz). Based on the predefined timing structure of the experimental protocol (3-s MOVE, 4-s REST), the data were algorithmically segmented into REST and MOVE intervals. This ensured consistent alignment with the stimuli presented during acquisition. Following segmentation, 20 different time series features were determined for each individual interval for both the healthy and lesioned facial side. Detailed information on the sEMG features analyzed can be found in Appendix A. For further analysis, the absolute values of the features for MOVE and REST and healthy and impaired facial side, as well as an asymmetry index (AI), were determined. The AI was calculated as follows (shown using the MAV as sEMG feature), where 0% describes complete symmetry and a higher AI value describes increasing asymmetry between the facial sides:
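As an illustration, an asymmetry index for the MAV can be sketched as below. The sketch assumes that the AI is the absolute difference between the two sides, normalized to the healthy side and expressed in percent; this form is an assumption derived from the normalization to the healthy side described in Section 2.4, and the exact formula used in the study may differ. Function names and sample values are hypothetical.

```python
# Hedged sketch: MAV per interval and an ASSUMED asymmetry index (AI).
# AI = |healthy - lesioned| / healthy * 100 is an assumption, not the
# confirmed formula of the study.


def mean_absolute_value(samples):
    """Mean of the absolute sample values of one interval."""
    return sum(abs(x) for x in samples) / len(samples)


def asymmetry_index(healthy, lesioned):
    """AI in percent; 0 means complete symmetry under the assumed definition."""
    if healthy == 0:
        raise ValueError("healthy-side feature value must be non-zero")
    return abs(healthy - lesioned) / healthy * 100.0


# Hypothetical MOVE-interval samples (arbitrary units) for both sides.
healthy_move = [0.8, -1.2, 1.0, -1.0]
lesioned_move = [0.4, -0.6, 0.5, -0.5]

mav_healthy = mean_absolute_value(healthy_move)
mav_lesioned = mean_absolute_value(lesioned_move)
ai_mav = asymmetry_index(mav_healthy, mav_lesioned)
```

With these toy values the lesioned side reaches half the MAV of the healthy side, so the assumed AI is about 50%. Identical values on both sides give 0%.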

2.4. Statistics

All statistical tests were performed using MATLAB (MathWorks Inc., Natick, MA, USA, R2022b) and SPSS (IBM SPSS Statistics for Windows, Version 30.0. Armonk, NY, USA, IBM Corp.). Univariate repeated-measures ANOVAs (rmANOVA) were performed separately for every movement type (i.e., smile strongly, smile lightly, close eyes strongly, close eyes lightly, raise forehead strongly, and raise forehead lightly) based on the absolute values of the time series features to determine whether there is an effect of the state of motion (MOTION: MOVE vs. REST), side of the face (SIDE: healthy vs. lesioned), and/or House–Brackmann grade (HB) for each of the 20 features. MOTION and SIDE were within-subject factors, while HB was included as a between-subject factor. To account for the anatomical specificity of each facial movement, only electrodes relevant to the respective movement were included in the analysis: e.g., for mouth movements, electrodes positioned around the mouth were analyzed. The Mauchly test was performed to check the sphericity assumption and, if necessary, the degrees of freedom were adjusted using Greenhouse–Geisser correction.

As HB is the only between-subject factor, its statistical analysis might be affected by the large inter-subject variability of the time series values. Absolute time series values are hardly comparable between subjects and depend on several other factors such as the positioning of the electrodes, impedances and patient motivation. One widely used method to enable an inter-subject comparison is to normalize time series features to the healthy side, resulting in the presented AI. Therefore, in addition to the rmANOVA, a two-tailed Spearman correlation analysis was performed between the AI of the 20 sEMG features and the HB score (i.e., HB I healthy controls, HB I patients, HB II + III, HB IV + V, HB VI). Furthermore, the Fisher Score (FS) for the AI of the features was calculated to assess the discriminative power of the features with respect to the different HB grades. The Fisher Score is defined as:

$$FS = \frac{\sum_{k=1}^{C} n_k \left(\mu_k - \mu\right)^2}{\sum_{k=1}^{C} n_k \sigma_k^2}$$

where $C$ is the number of classes (i.e., HB grades), $n_k$ is the number of samples in class $k$, $\mu_k$ is the mean of class $k$, $\mu$ is the overall mean of the feature across all samples, and $\sigma_k^2$ is the variance within class $k$. This score quantifies the ratio of between-class to within-class variance, providing an additional measure of feature relevance for classification. Values of p < 0.05 were considered significant.
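A Fisher score of this kind can be computed as sketched below: the sample-weighted squared deviation of the class means from the overall mean, divided by the sample-weighted within-class variance. The sketch uses the population variance within each class, which is an assumption, and the function name and toy data are hypothetical.

```python
# Hedged sketch of a Fisher score for one feature across several classes.
# Assumption: within-class variance is the population variance (divide by n).


def fisher_score(groups):
    """groups: list of lists, one list of feature values (e.g. AI) per class."""
    all_values = [v for group in groups for v in group]
    overall_mean = sum(all_values) / len(all_values)

    between = 0.0  # weighted spread of class means around the overall mean
    within = 0.0   # weighted within-class variance
    for group in groups:
        n = len(group)
        class_mean = sum(group) / n
        class_var = sum((v - class_mean) ** 2 for v in group) / n
        between += n * (class_mean - overall_mean) ** 2
        within += n * class_var

    if within == 0:
        raise ValueError("within-class variance is zero; score is undefined")
    return between / within


# Hypothetical AI values for three classes that are well separated ...
separated = fisher_score([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
# ... and for two classes with identical means.
overlapping = fisher_score([[1, 5, 9], [2, 5, 8]])
```

Well-separated classes yield a high score, while classes with identical means yield a score of zero, which matches the interpretation of the score as discriminative power.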

3. Results

3.1. Experimental and Clinical Characteristics

This study enrolled 55 subjects (51.2 ± 14.73 years; 35 female), including 40 patients with facial palsy HB grade II-VI, while 15 subjects had no facial palsy (HB I). The majority of FP were iatrogenic (37/40, 92.5%). On average, FP had been present for 1.84 years, and 8/55 (14.5%) subjects presented with facial aberrant reinnervation syndrome. All patients completed the study, and there were no side effects or undesired events during measurements. Specific cohorts’ characteristics are shown in Table 1.

Table 1.

Cohort characteristics.

3.2. Parameters for Motion Classification and Biofeedback Applications

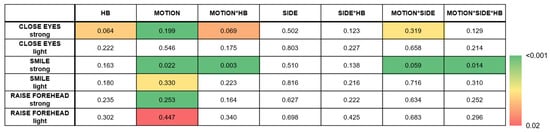

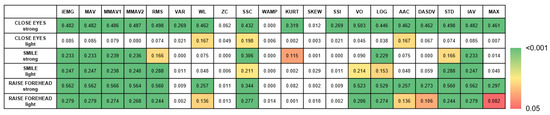

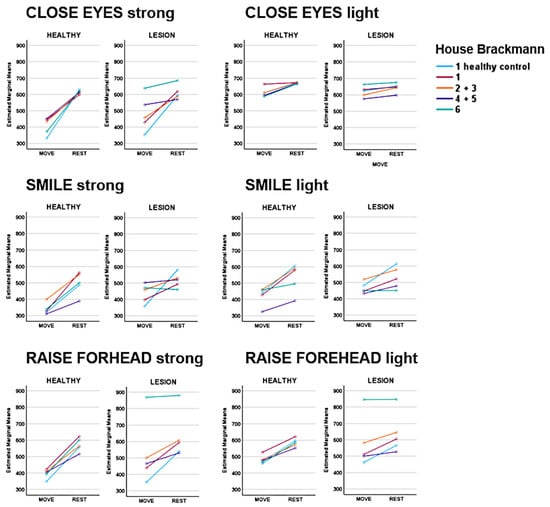

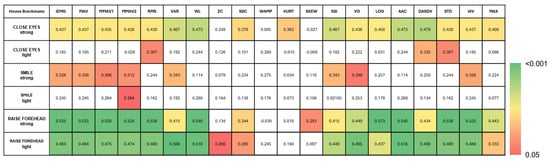

The rmANOVA demonstrated a significant main effect of MOTION (MOVE/REST) for nearly all movement types, with the strongest effect apparent for strong movements (p < 0.001; Figure 2). Post hoc analysis revealed the slope sign change (SSC) as the best predictor of MOTION, although several other time series features showed comparable performance: integrated EMG (iEMG), mean absolute value (MAV), modified mean absolute value 1 (MMAV1), modified mean absolute value 2 (MMAV2), root mean square (RMS), log detector (LOG), standard deviation (STD), and the integral of absolute value (IAV) (Figure 3). A detailed evaluation of the SSC showed good MOTION discrimination for the healthy SIDE. The difference between MOVE and REST on the affected side decreased with increasing HB grade. However, distinctions can still be made up to HB grades IV and V (Figure 4).

Figure 2.

Multivariate test of repeated-measurement analysis showing the strongest effect for MOTION. While the box colors represent the significance level (p-value), the number values represent the Wilks’ λ. White boxes represent non-significant values.

Figure 3.

Univariate follow-up tests of MOTION in the rmANOVA. While the box colors represent the significance level (p-value), the number values represent the effect size (partial eta-squared, η2). White boxes represent non-significant values.

Figure 4.

Slope sign change (SSC) values across different House–Brackmann (HB) scores. While MOVE and REST can be well differentiated on the healthy side by the SSC, the difference between MOVE and REST on the affected side decreases with increasing HB grade. However, distinctions can still be made up to HB grades IV + V.

For the side of the face (SIDE), no significant effect was found for any of the movements, which can be attributed to the fact that (i) healthy subjects were also included in the analysis and (ii) there should be no difference between the two sides during REST. A significant effect for the HB was observed only in “CLOSE EYES strong” (Wilks’ Λ = 0.064, F(60, 114) = 1.77, p = 0.005). Post hoc tests revealed a significant result for all EMG features except zero crossing (ZC), slope sign change (SSC), Willison amplitude (WAMP), kurtosis (KURT) and skewness (SKEW).

In summary, sEMG features can discriminate MOTION on both the lesioned and the healthy side (MOTION). However, only in the “CLOSE EYES strong” and “SMILE strong” conditions does sEMG detect differences between the SIDES, namely during the MOVE period (MOTIONxSIDE). The HB affects the sEMG of the lesioned SIDE during MOVE but not during REST (MOTIONxSIDExHB). However, this was only significant for the “SMILE strong” condition. Notably, unilateral FP also seemed to affect the sEMG of the healthy side (see HB, MOTIONxHB in “CLOSE EYES strong” and “SMILE strong” conditions).

3.3. Feature Extraction for Facial Nerve Grading

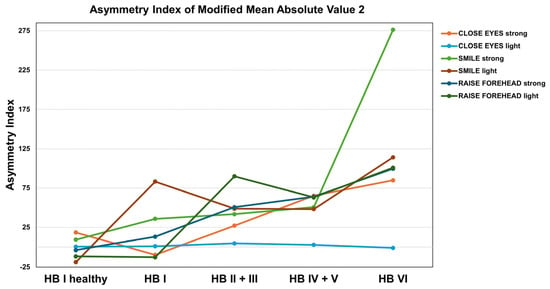

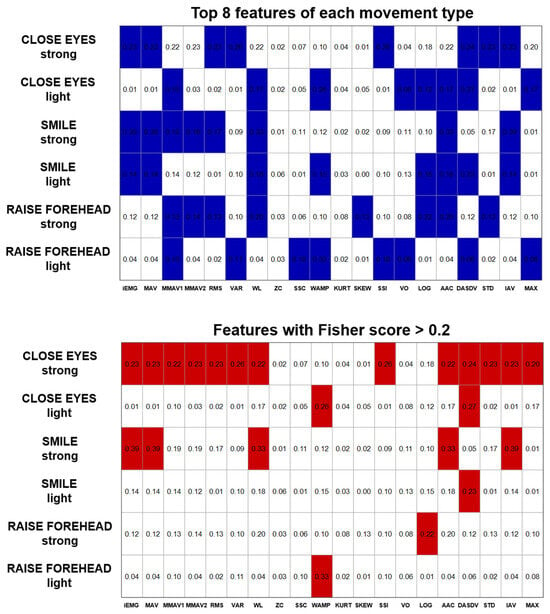

Correlation analysis of the AI with the HB score showed that only a few of the time series features correlated significantly for the movements “CLOSE EYES light” and “SMILE light” (Figure 5). However, the MMAV2-AI correlated with the HB grade in 5/6 movement types (Figure 6). In addition, iEMG, MAV, MMAV1, RMS, VAR, SSI, VO, DASDV, STD, and IAV correlated in four out of six movements. The strongest correlations were found for the forehead movements. In contrast, the forehead movements showed rather low Fisher scores (Figure 7). However, consistent with the correlation analysis, ZC, KURT, SSC and SKEW were found to discriminate poorly between the HB grades, while iEMG, MAV, DASDV and IAV appeared to be suitable for this purpose.

Figure 5.

Correlation Heatmap demonstrates the correlation between the asymmetry index (AI) of the 20 time series features during different movements with the House–Brackmann score. While the box colors represent the significance level (p-value), the number values represent the strength of correlation (correlation coefficient). White boxes represent non-significant values.
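Each cell of such a heatmap corresponds to one Spearman rank correlation between the AI of a feature and the HB group. A minimal sketch of the correlation coefficient is given below; it ranks both variables (ties receive the average rank) and then computes the Pearson correlation of the ranks. The p-value is not computed here, and the group codes and AI values are hypothetical.

```python
# Hedged sketch: Spearman rank correlation (coefficient only, no p-value).


def average_ranks(values):
    """1-based ranks; tied values share the mean of their rank positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman_rho(x, y):
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: ordinal HB group codes and AI values (%) of one feature.
hb_group = [1, 1, 2, 2, 3, 3, 4, 4]
ai_values = [5, 8, 20, 25, 40, 38, 70, 90]
rho = spearman_rho(hb_group, ai_values)
```

Because the HB groups are ordinal and contain many ties, a rank-based coefficient with tie handling is a natural choice for this kind of comparison.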

Figure 6.

Asymmetry index of the modified mean absolute value 2 (MMAV2) demonstrating strongest correlations in forehead movements.

Figure 7.

Fisher scores demonstrating the discriminative power of the AI of the EMG features with respect to the different HB grades. The top graph shows the 8 best features for each movement type, while the bottom graph highlights the Fisher scores >0.2.

4. Discussion

Previous EMG biofeedback studies in FP are limited by utilizing feedback devices designed for extremities, although the fine mimic muscles of the face are structured differently than the muscles of the limbs [31]. In the present study, we were able to show that there are considerable differences between the various EMG time series features in the correlation to HB grade as well as in the classification between a movement and rest phase of the facial muscles. Future studies should therefore take into account that the facial EMG must be analyzed in a specialized manner.

Rutkowska et al. [28] found that, in studies of emotional expressions, the most frequently used features were the MAV, RMS and integrated EMG (iEMG). In this context, our study shows the slope sign change (SSC) to be suitable for distinguishing between different motion states (MOVE and REST). However, the SSC asymmetry index was not well correlated with the HB score in all movement types and intensities. In addition, the Fisher score analysis did not show the SSC-AI to be suitable for HB classification. Combining the statistical analyses (i.e., repeated measures, correlation analysis and Fisher score), iEMG, MAV, MMAV1, RMS and IAV seem to be the most suitable parameters for the presented requirements, which is in line with the results of Rutkowska et al. [28]. These techniques all quantify the EMG’s energy information using mathematical calculations such as integration, averaging and squaring [26,27]. While the iEMG represents the summed activity of the EMG signal, MAV provides the mean absolute value. MMAV1 is a modified version of MAV to improve signal assessment depending on the specific measurement conditions. Whereas the RMS describes the signal energy and is more sensitive to high amplitudes, the IAV calculates the total absolute amplitude of the surface EMG signal over a given time period, providing a measure of the overall level of muscle activity regardless of signal polarity.

These parameters should be investigated further in future studies on sEMG and biofeedback in facial palsy. The main concern in this respect is to determine whether the combination of parameters or even the use of artificial intelligence with automated signal analysis might lead to better results than using only one parameter. This question is particularly important regarding (i) the classification of the severity of a facial palsy and (ii) the prediction of facial nerve recovery. While there are numerous studies investigating automatic FP classification using facial images and videos [32,33,34,35], there are only a few studies using electrophysiological recordings (such as EMG). In this context, Holze et al. developed a semi-automated assessment system for facial nerve function based on sEMG and machine learning to replace the subjective HB score with a more objective method [12]. The sEMG showed promising performance (area under the curve [AUC]: 0.72–0.91) as a reliable alternative to the HB score. Although the present study and that of Holze et al. [12] primarily included patients with mild to moderate FP (HB I–III), several noteworthy methodological and clinical differences distinguish the two. We included a larger cohort and focused not only on FP severity classification but also on identifying interpretable and robust EMG parameters that could serve as the foundation for future biofeedback training. Therefore, EMG signals were analyzed not only during active movement, but also during rest phases to investigate whether the parameters can differentiate between the two states. This distinction is particularly relevant for biofeedback applications, where dynamic changes and asymmetries between rest and activation play a central role. Furthermore, while the machine learning approach by Holze et al. [12] was optimized for classification accuracy, such models may be less suited for biofeedback purposes, where transparent and user-understandable feedback parameters are needed to guide patient engagement and training effectiveness.

Most studies on prediction models for FP focus on predicting the occurrence of FP after certain procedures (e.g., resection of vestibular schwannomas) and/or on the use of clinical parameters (e.g., size of tumors, age of a patient, severity of paresis) [36,37,38,39]. There was only one study by Khisimoto et al. that investigated the predictive value of different artificial intelligence models for predicting the occurrence of synkinesia after FP using electroneurography (ENoG) [40]. The results demonstrated a good predictive performance (AUC: 0.90) of a machine-learning-based logistic regression method, which stands in contrast to, or rather represents progress over, a study by Azuma et al. [41], who were unable to predict the occurrence of synkinesia using the ENoG without machine learning. However, we are not aware of any studies that combine sEMG and artificial intelligence for prediction of FP outcome in this context.

Further factors to consider when evaluating sEMG for facial biofeedback are the stages of nerve damage and recovery. As in the case of a peripheral nerve injury of the limbs, various degrees of nerve injury (i.e., neuropraxia, axonotmesis, neurotmesis) can also occur in peripheral FP [42,43,44]. The extent of clinical impairment depends on this, as does the speed of recovery. EMG biofeedback must therefore differ between the paralytic phase and the synkinetic phase of a FARS. However, a common point to emphasize is that the aim of facial training, such as neuromuscular retraining, is not to develop maximum muscle contraction, as this tends to promote syn- and dyskinesia (even in the paralytic phase) [45]. Instead, the aim is to learn to control the force intensity and coordinate the facial muscles. Our study addresses this by examining the three movement types, and thus the usefulness of the time series features, for different contraction strengths (strong and light). The results indicate that some time series features, such as kurtosis or waveform length, could be used for the stronger movements, but may not be able to adequately detect differences between movement and rest for weaker movements. Hence, in early phases or more severe paresis, robust features such as RMS and MAV may be preferable, whereas in later phases, when the aim is to improve coordination of the mimic muscles and reduce synkinesia, other parameters may be more suitable. A stage-specific selection of features could improve the effectiveness of facial EMG biofeedback by aligning signal analysis with the functional needs of each recovery phase. However, our study only controlled the different intensities using instructions. In the future, it would be conceivable to control the intensity objectively, e.g., by using the sEMG of the healthy side, by grading the intensity as a percentage of the maximum force or by combining the sEMG with kinematic measurements or visual controls [46,47].

Limitations

The main limitation of the study is that the EMG features were only examined using 12 electrodes (6 per facial side, bipolar setting). However, the mimic musculature consists of >15 muscles, so interference phenomena and inaccuracies may occur due to our electrode setup. More precise statements about muscle function might be possible using a high-density EMG [48,49,50,51]. At the same time, the many electrodes may contribute to reduced mobility in the facial area. This would be contrary to a biofeedback application. Another limitation lies in the unbalanced cohort, with only 7 healthy controls compared to 48 patients. However, the focus of the study was not a direct statistical comparison between healthy and patient groups, but rather the evaluation of suitable time series features of an EMG for biofeedback training and the classification of FP. The uneven distribution across HB grades also reflects typical clinical reality but limits the generalizability of subgroup comparisons. Future studies with larger and more balanced samples are warranted to validate the findings. Finally, other important factors influencing the outcome of biofeedback interventions—such as training frequency, session duration, or the specific type of feedback (e.g., visual, auditory, multimodal)—were not within the scope of our investigation. These parameters, however, likely have a considerable impact on training efficacy and user compliance and should be systematically explored in future studies. Integrating these aspects into experimental designs may help to better understand how to tailor biofeedback interventions for different stages of FP and individual patient needs.

5. Conclusions

The research findings indicate that sEMG can reliably determine facial palsy severity and guide biofeedback interventions after facial palsy. Appropriate EMG parameters, such as the iEMG, RMS or MAV, should be used to enable the best possible differentiation between rest and movement, even with minor movements and severe paresis. In this context, the present study constitutes the foundation for further investigations of biofeedback algorithms and training modalities required for EMG biofeedback in facial palsy.

Author Contributions

K.M., I.M. and G.N. contributed to the conception and design of the study. K.M., I.M. and A.P. contributed to the acquisition and analysis of data as well as the literature search and drafting of the manuscript. G.N., A.S. (Alexia Stark), M.G., A.S. (Aldo Spolaore) and M.T. contributed to the acquisition of data and manuscript review. All authors have read and agreed to the published version of the manuscript.

Funding

The data was collected as part of a project on biofeedback for facial palsy funded by the Federal Ministry of Education and Research Germany (BMBF). The BMBF had no influence on data or reporting.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Ethics Committee of the University of Tübingen (protocol code: 326/2024BO1).

Informed Consent Statement

All participants gave written informed consent.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to ethical reasons.

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. Therefore, none of the authors has any conflict of interest to disclose.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AAC | average amplitude change |
| AI | asymmetry index |
| AUC | area under the curve |
| DASDV | difference absolute standard value |
| FARS | facial aberrant reinnervation syndrome |
| FP | facial palsy |
| HB | House–Brackmann score |
| IAV | integral of absolute value |
| iEMG | integrated electromyography |
| KURT | kurtosis |
| LOG | log detector |
| MAV | mean absolute value |
| MAX | maximal amplitude |
| MMAV1 | modified mean absolute value 1 |
| MMAV2 | modified mean absolute value 2 |
| QoL | quality of life |
| RMS | root mean square |
| sEMG | surface electromyography |
| SKEW | skewness |
| SSC | slope sign change |
| SSI | simple square integral |
| STD | standard deviation |
| VAR | variance |
| VO | V-order |
| VS | vestibular schwannoma |
| WAMP | Willison amplitude |
| WL | waveform length |

Appendix A

Table A1.

Description of time series features.


| Feature | Abbrev. | Mathematical Definition | Description |
|---|---|---|---|
| Integrated EMG | iEMG | | N = length of signal; $x_i$ = EMG signal in a segment |
| Mean Absolute Value | MAV | | N = length of signal; $x_i$ = EMG signal in a segment |
| Modified Mean Absolute Value 1 | MMAV1 | | N = length of signal; $x_i$ = EMG signal in a segment; $w_i$ = weighting function that determines the position within the time window |
| Modified Mean Absolute Value 2 | MMAV2 | | N = length of signal; $x_i$ = EMG signal in a segment; $w_i$ = weighting function that determines the position within the time window |
| Root Mean Square | RMS | | N = length of signal; $x_i$ = EMG signal in a segment |
| Variance | VAR | | N = length of signal; $x_i$ = EMG signal in a segment |
| Waveform Length | WL | | N = length of signal; $x_i$ = EMG signal in a segment |
| Zero Crossing | ZC | | N = length of signal; $x_i$ = EMG signal in a segment |
| Slope Sign Change | SSC | | N = length of signal; $x_i$ = EMG signal in a segment |
| Willison Amplitude | WAMP | | N = length of signal; $x_i$ = EMG signal in a segment; threshold in present study: 10% of the maximum absolute amplitude of the signal |
| Kurtosis | KURT | | N = length of signal; $x_i$ = EMG signal in a segment; $\mu$ = mean of the data; $\sigma$ = standard deviation of dataset |
| Skewness | SKEW | | N = length of signal/number of signal values; $x_i$ = EMG signal at i; $\mu$ = mean of the data; $\sigma$ = standard deviation of signal |
| Simple Square Integral | SSI | | N = length of signal; $x_i$ = EMG signal in a segment |
| V-Order | VO | | N = length of signal/number of signal values; $x_i$ = EMG signal at i; v = order of calculation (in present study 3) |
| Log detector | LOG | | N = length of signal; $x_i$ = EMG signal in a segment |
| Average Amplitude Change | AAC | | N = length of signal; $x_i$ = EMG signal in a segment |
| Difference Absolute Standard Value | DASDV | | N = length of signal; $x_i$ = EMG signal in a segment |
| Standard Deviation | STD | | N = length of signal; $x_i$ = EMG signal in a segment; $\mu$ = mean value of the EMG signal |
| Integral of Absolute Value | IAV | | N = length of signal/number of samples; $x_i$ = EMG signal at instance i |
| Maximal Amplitude | MAX | | $x(t)$ = EMG signal as function of time; max = maximum function that determines the largest value from the signal amounts |

(Chowdhury et al., 2013 [27]; Nazmi et al., 2016 [26]).
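As a rough illustration of how several of the time-domain features listed in Table A1 can be computed for one EMG segment, the following Python sketch uses definitions commonly found in the EMG feature literature. It is not the implementation used in this study. The function name `emg_features`, the zero-mean form of VAR, the threshold-free ZC and SSC counts, the `1/N` normalisation of AAC and the `>=` comparison in WAMP are assumptions made for illustration; only the WAMP threshold (10% of the maximum absolute amplitude) is taken from the table.

```python
import numpy as np


def emg_features(x, wamp_fraction=0.10):
    """Compute a subset of the Table A1 features for one EMG segment.

    x             : 1-D sequence of EMG samples x_1 ... x_N
    wamp_fraction : WAMP threshold as a fraction of max |x| (10% per Table A1)
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    abs_x = np.abs(x)
    dx = np.diff(x)  # successive differences x[i+1] - x[i], length N-1
    threshold = wamp_fraction * abs_x.max()

    return {
        "iEMG": abs_x.sum(),                     # sum of |x_i|
        "MAV": abs_x.mean(),                     # (1/N) * sum of |x_i|
        "RMS": np.sqrt(np.mean(x ** 2)),         # sqrt((1/N) * sum of x_i^2)
        "VAR": np.sum(x ** 2) / (n - 1),         # assumes a zero-mean signal
        "SSI": np.sum(x ** 2),                   # sum of x_i^2
        "WL": np.abs(dx).sum(),                  # cumulative waveform length
        "AAC": np.abs(dx).sum() / n,             # assumed 1/N normalisation
        "DASDV": np.sqrt(np.sum(dx ** 2) / (n - 1)),
        "ZC": int(np.sum(x[:-1] * x[1:] < 0)),   # sign changes, no dead band
        "SSC": int(np.sum(dx[:-1] * dx[1:] < 0)),  # slope sign changes
        "WAMP": int(np.sum(np.abs(dx) >= threshold)),
        "MAX": abs_x.max(),                      # largest signal magnitude
    }


if __name__ == "__main__":
    # Synthetic stand-in for a recorded segment (hypothetical data).
    rng = np.random.default_rng(0)
    segment = rng.normal(scale=50.0, size=1000)
    for name, value in emg_features(segment).items():
        print(f"{name}: {value:.3f}")
```

In practice, ZC and SSC are often computed with a small amplitude dead band to suppress noise-induced crossings; that refinement is omitted here to keep the sketch short.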

References

1. Hotton, M.; Huggons, E.; Hamlet, C.; Shore, D.; Johnson, D.; Norris, J.H.; Kilcoyne, S.; Dalton, L. The Psychosocial Impact of Facial Palsy: A Systematic Review. Br. J. Health Psychol. 2020, 25, 695–727.

2. Nellis, J.C.; Ishii, M.; Byrne, P.J.; Boahene, K.D.O.; Dey, J.K.; Ishii, L.E. Association among Facial Paralysis, Depression, and Quality of Life in Facial Plastic Surgery Patients. JAMA Facial Plast. Surg. 2017, 19, 190–196.

3. Verhoeff, R.; Bruins, T.E.; Ingels, K.J.A.O.; Werker, P.M.N.; van Veen, M.M. A Cross-Sectional Analysis of Facial Palsy-Related Quality of Life in 125 Patients: Comparing Linear, Quadratic and Cubic Regression Analyses. Clin. Otolaryngol. 2022, 47, 541–545.

4. Machetanz, K.; Lee, L.; Wang, S.S.; Tatagiba, M.; Naros, G. Trading Mental and Physical Health in Vestibular Schwannoma Treatment Decision. Front. Oncol. 2023, 13, 1152833.

5. Cross, T.; Sheard, C.E.; Garrud, P.; Nikolopoulos, T.P.; O’Donoghue, G.M. Impact of Facial Paralysis on Patients with Acoustic Neuroma. Laryngoscope 2000, 110, 1539–1542.

6. Urban, E.; Volk, G.F.; Geißler, K.; Thielker, J.; Dittberner, A.; Klingner, C.; Witte, O.W.; Guntinas-Lichius, O. Prognostic Factors for the Outcome of Bells’ Palsy: A Cohort Register-Based Study. Clin. Otolaryngol. 2020, 45, 754–761.

7. Hayler, R.; Clark, J.; Croxson, G.; Coulson, S.; Hussain, G.; Ngo, Q.; Ch’ng, S.; Low, T.H. Sydney Facial Nerve Clinic: Experience of a Multidisciplinary Team. ANZ J. Surg. 2020, 90, 856–860.

8. Kanerva, M.; Poussa, T.; Pitkäranta, A. Sunnybrook and House-Brackmann Facial Grading Systems: Intrarater Repeatability and Interrater Agreement. Otolaryngol. Head Neck Surg. 2006, 135, 865–871.

9. House, J.W.; Brackmann, D.E. Facial Nerve Grading System. Otolaryngol. Head Neck Surg. 1985, 93, 146–147.

10. Ross, B.G.; Fradet, G.; Nedzelski, J.M. Development of a Sensitive Clinical Facial Grading System. Otolaryngol. Head Neck Surg. 1996, 114, 380–386.

11. Scheller, C.; Wienke, A.; Tatagiba, M.; Gharabaghi, A.; Ramina, K.F.; Scheller, K.; Prell, J.; Zenk, J.; Ganslandt, O.; Bischoff, B.; et al. Interobserver Variability of the House-Brackmann Facial Nerve Grading System for the Analysis of a Randomized Multi-Center Phase III Trial. Acta Neurochir. 2017, 159, 733–738.

12. Holze, M.; Rensch, L.; Prell, J.; Scheller, C.; Simmermacher, S.; Scheer, M.; Strauss, C.; Rampp, S. Learning from EMG: Semi-Automated Grading of Facial Nerve Function. J. Clin. Monit. Comput. 2022, 36, 1509–1517.

13. Franz, L.; Marioni, G.; Daloiso, A.; Biancoli, E.; Tealdo, G.; Cazzador, D.; Nicolai, P.; de Filippis, C.; Zanoletti, E. Facial Surface Electromyography: A Novel Approach to Facial Nerve Functional Evaluation after Vestibular Schwannoma Surgery. J. Clin. Med. 2024, 13, 590.

14. Kim, G.H.; Park, J.H.; Kim, T.K.; Lee, E.J.; Jung, S.E.; Seo, J.C.; Kim, C.H.; Choi, Y.M.; Yoon, H.M. Correlation Between Accompanying Symptoms of Facial Nerve Palsy, Clinical Assessment Scales and Surface Electromyography. J. Acupunct. Res. 2022, 39, 297–303.

15. Ryu, H.M.; Lee, S.J.; Park, E.J.; Kim, S.G.; Kim, K.H.; Choi, Y.M.; Kim, J.U.; Song, B.Y.; Kim, C.H.; Yoon, H.M.; et al. Study on the Validity of Surface Electromyography as Assessment Tools for Facial Nerve Palsy. J. Pharmacopunct. 2018, 21, 258.

16. Machetanz, K.; Grimm, F.; Schäfer, R.; Trakolis, L.; Hurth, H.; Haas, P.; Gharabaghi, A.; Tatagiba, M.; Naros, G. Design and Evaluation of a Custom-Made Electromyographic Biofeedback System for Facial Rehabilitation. Front. Neurosci. 2022, 16, 666173.

17. Volk, G.F.; Roediger, B.; Geißler, K.; Kuttenreich, A.-M.; Klingner, C.M.; Dobel, C.; Guntinas-Lichius, O. Effect of an Intensified Combined Electromyography and Visual Feedback Training on Facial Grading in Patients With Post-Paralytic Facial Synkinesis. Front. Rehabil. Sci. 2021, 2, 746188.

18. Hammerschlag, P.E.; Brudny, J.; Cusumano, R.; Cohen, N.L. Hypoglossal-Facial Nerve Anastomosis and Electromyographic Feedback Rehabilitation. Laryngoscope 1987, 97, 705–709.

19. Ross, B.; Nedzelski, J.M.; McLean, J.A. Efficacy of Feedback Training in Long-Standing Facial Nerve Paresis. Laryngoscope 1991, 101, 744–750.

20. Cronin, G.W.; Steenerson, R.L. The Effectiveness of Neuromuscular Facial Retraining Combined with Electromyography in Facial Paralysis Rehabilitation. Otolaryngol. Head Neck Surg. 2003, 128, 534–538.

21. Duarte-Moreira, R.J.; Castro, K.V.-F.; Luz-Santos, C.; Martins, J.V.P.; Sá, K.N.; Baptista, A.F. Electromyographic Biofeedback in Motor Function Recovery After Peripheral Nerve Injury: An Integrative Review of the Literature. Appl. Psychophysiol. Biofeedback 2018, 43, 247–257.

22. Molina, A.H.; Harvey, J.; Pitt, K.M.; Lee, J.; Barlow, S.M. Effects of Real-Time IEMG Biofeedback on Facial Muscle Activation Patterns in a Child with Congenital Unilateral Facial Palsy. Biomed. J. Sci. Tech. Res. 2022, 47, 38074–38088.

23. Pourmomeny, A.A.; Zadmehre, H.; Mirshamsi, M.; Mahmodi, Z. Prevention of Synkinesis by Biofeedback Therapy: A Randomized Clinical Trial. Otol. Neurotol. 2014, 35, 739–742.

24. Dalla-Toffola, E.; Bossi, D.; Buonocore, M.; Montomoli, C.; Petrucci, L.; Alfonsi, E. Usefulness of BFB/EMG in Facial Palsy Rehabilitation. Disabil. Rehabil. 2005, 27, 809–815.

25. Phinyomark, A.; Quaine, F.; Charbonnier, S.; Serviere, C.; Tarpin-Bernard, F.; Laurillau, Y. EMG Feature Evaluation for Improving Myoelectric Pattern Recognition Robustness. Expert Syst. Appl. 2013, 40, 4832–4840.

26. Nazmi, N.; Rahman, M.A.A.; Yamamoto, S.I.; Ahmad, S.A.; Zamzuri, H.; Mazlan, S.A. A Review of Classification Techniques of EMG Signals during Isotonic and Isometric Contractions. Sensors 2016, 16, 1304.

27. Chowdhury, R.H.; Reaz, M.B.I.; Bin Mohd Ali, M.A.; Bakar, A.A.A.; Chellappan, K.; Chang, T.G. Surface Electromyography Signal Processing and Classification Techniques. Sensors 2013, 13, 12431–12466.

28. Rutkowska, J.M.; Ghilardi, T.; Vacaru, S.V.; van Schaik, J.E.; Meyer, M.; Hunnius, S.; Oostenveld, R. Optimal Processing of Surface Facial EMG to Identify Emotional Expressions: A Data-Driven Approach. Behav. Res. Methods 2024, 56, 7331–7344.

29. Xu, Y.; Wang, K.; Jin, Y.; Qiu, F.; Yao, Y.; Xu, L. Influence of Electrode Configuration on Muscle-Fiber-Conduction-Velocity Estimation Using Surface Electromyography. IEEE Trans. Biomed. Eng. 2022, 69, 2414–2422.

30. Sae-lim, W.; Phukpattaranont, P.; Thongpull, K. Effect of Electrode Skin Impedance on Electromyography Signal Quality. In Proceedings of the 2018 15th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), Chiang Rai, Thailand, 18–21 July 2018; pp. 748–751.

31. Cattaneo, L.; Pavesi, G. The Facial Motor System. Neurosci. Biobehav. Rev. 2014, 38, 135.

32. Sajid, M.; Shafique, T.; Baig, M.; Riaz, I.; Amin, S.; Manzoor, S. Automatic Grading of Palsy Using Asymmetrical Facial Features: A Study Complemented by New Solutions. Symmetry 2018, 10, 242.

33. Jiang, C.; Wu, J.; Zhong, W.; Wei, M.; Tong, J.; Yu, H.; Wang, L. Automatic Facial Paralysis Assessment via Computational Image Analysis. J. Healthc. Eng. 2020, 2020, 2398542.

34. Zhuang, Y.; McDonald, M.; Uribe, O.; Yin, X.; Parikh, D.; Southerland, A.M.; Rohde, G.K. Facial Weakness Analysis and Quantification of Static Images. IEEE J. Biomed. Health Inform. 2020, 24, 2260–2267.

35. Eisenhardt, S.; Volk, G.F.; Rozen, S.; Parra-Dominguez, G.S.; Garcia-Capulin, C.H.; Sanchez-Yanez, R.E. Automatic Facial Palsy Diagnosis as a Classification Problem Using Regional Information Extracted from a Photograph. Diagnostics 2022, 12, 1528.

36. Wang, J. Prediction of Postoperative Recovery in Patients with Acoustic Neuroma Using Machine Learning and SMOTE-ENN Techniques. Math. Biosci. Eng. 2022, 19, 10407–10423.

37. Chiesa-Estomba, C.M.; Echaniz, O.; Sistiaga Suarez, J.A.; González-García, J.A.; Larruscain, E.; Altuna, X.; Medela, A.; Graña, M. Machine Learning Models for Predicting Facial Nerve Palsy in Parotid Gland Surgery for Benign Tumors. J. Surg. Res. 2021, 262, 57–64.

38. Heman-Ackah, S.M.; Blue, R.; Quimby, A.E.; Abdallah, H.; Sweeney, E.M.; Chauhan, D.; Hwa, T.; Brant, J.; Ruckenstein, M.J.; Bigelow, D.C.; et al. A Multi-Institutional Machine Learning Algorithm for Prognosticating Facial Nerve Injury Following Microsurgical Resection of Vestibular Schwannoma. Sci. Rep. 2024, 14, 12963.

39. Zohdy, Y.M.; Alawieh, A.M.; Bray, D.; Pradilla, G.; Garzon-Muvdi, T.; Ashram, Y.A. An Artificial Neural Network Model for Predicting Postoperative Facial Nerve Outcomes After Vestibular Schwannoma Surgery. Neurosurgery 2024, 94, 805–812.

40. Kishimoto-Urata, M.; Urata, S.; Nishijima, H.; Baba, S.; Fujimaki, Y.; Kondo, K.; Yamasoba, T. Predicting Synkinesis Caused by Bell’s Palsy or Ramsay Hunt Syndrome Using Machine Learning-Based Logistic Regression. Laryngoscope Investig. Otolaryngol. 2023, 8, 1189–1195.

41. Azuma, T.; Nakamura, K.; Takahashi, M.; Miyoshi, H.; Toda, N.; Iwasaki, H.; Fuchigami, T.; Sato, G.; Kitamura, Y.; Abe, K.; et al. Electroneurography Cannot Predict When Facial Synkinesis Develops in Patients with Facial Palsy. J. Med. Invest. 2020, 67, 87–89.

42. Hussain, G.; Wang, J.; Rasul, A.; Anwar, H.; Qasim, M.; Zafar, S.; Aziz, N.; Razzaq, A.; Hussain, R.; de Aguilar, J.L.G.; et al. Current Status of Therapeutic Approaches against Peripheral Nerve Injuries: A Detailed Story from Injury to Recovery. Int. J. Biol. Sci. 2020, 16, 116.

43. Guntinas-Lichius, O.; Volk, G.F.; Olsen, K.D.; Mäkitie, A.A.; Silver, C.E.; Zafereo, M.E.; Rinaldo, A.; Randolph, G.W.; Simo, R.; Shaha, A.R.; et al. Facial Nerve Electrodiagnostics for Patients with Facial Palsy: A Clinical Practice Guideline. Eur. Arch. Oto-Rhino-Laryngol. 2020, 277, 1855–1874.

44. Lee, D.H. Clinical Efficacy of Electroneurography in Acute Facial Paralysis. J. Audiol. Otol. 2016, 20, 8–12.

45. Kim, D.R.; Kim, J.H.; Jung, S.H.; Won, Y.J.; Seo, S.M.; Park, J.S.; Kim, W.S.; Kim, G.C.; Kim, J. Neuromuscular Retraining Therapy for Early Stage Severe Bell’s Palsy Patients Minimizes Facial Synkinesis. Clin. Rehabil. 2023, 37, 1510–1520.

46. Demeco, A.; Marotta, N.; Moggio, L.; Pino, I.; Marinaro, C.; Barletta, M.; Petraroli, A.; Palumbo, A.; Ammendolia, A. Quantitative Analysis of Movements in Facial Nerve Palsy with Surface Electromyography and Kinematic Analysis. J. Electromyogr. Kinesiol. 2021, 56, 102485.

47. Rodríguez Martínez, E.A.; Polezhaeva, O.; Marcellin, F.; Colin, É.; Boyaval, L.; Sarhan, F.R.; Dakpé, S. DeepSmile: Anomaly Detection Software for Facial Movement Assessment. Diagnostics 2023, 13, 254.

48. Cui, H.; Zhong, W.; Zhu, M.; Jiang, N.; Huang, X.; Lan, K.; Hu, L.; Chen, S.; Yang, Z.; Yu, H.; et al. Facial Electromyography Mapping in Healthy and Bell’s Palsy Subjects: A High-Density Surface EMG Study. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 3662–3665.

49. Funk, P.F.; Levit, B.; Bar-Haim, C.; Ben-Dov, D.; Volk, G.F.; Grassme, R.; Anders, C.; Guntinas-Lichius, O.; Hanein, Y. Wireless High-Resolution Surface Facial Electromyography Mask for Discrimination of Standardized Facial Expressions in Healthy Adults. Sci. Rep. 2024, 14, 19317.

50. Mueller, N.; Trentzsch, V.; Grassme, R.; Guntinas-Lichius, O.; Volk, G.F.; Anders, C. High-Resolution Surface Electromyographic Activities of Facial Muscles during Mimic Movements in Healthy Adults: A Prospective Observational Study. Front. Hum. Neurosci. 2022, 16, 1029415.

51. Gat, L.; Gerston, A.; Shikun, L.; Inzelberg, L.; Hanein, Y. Similarities and Disparities between Visual Analysis and High-Resolution Electromyography of Facial Expressions. PLoS ONE 2022, 17, e0262286.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).