Surface Electromyographic Features for Severity Classification in Facial Palsy: Insights from a German Cohort and Implications for Future Biofeedback Use

Abstract

Highlights

- Facial surface EMG can assess facial palsy severity.

- Biofeedback in facial palsy can be facilitated by appropriate EMG parameters.

- Motion classification (movement vs. rest) by sEMG is feasible even in severe cases of facial palsy.

- The results lay the foundation for further studies on the algorithms needed for EMG biofeedback in facial palsy.

1. Introduction

2. Materials and Methods

2.1. Study Cohort

2.2. Data Acquisition and Experimental Setup

2.3. EMG Analysis and Feature Extraction

2.4. Statistics

3. Results

3.1. Experimental and Clinical Characteristics

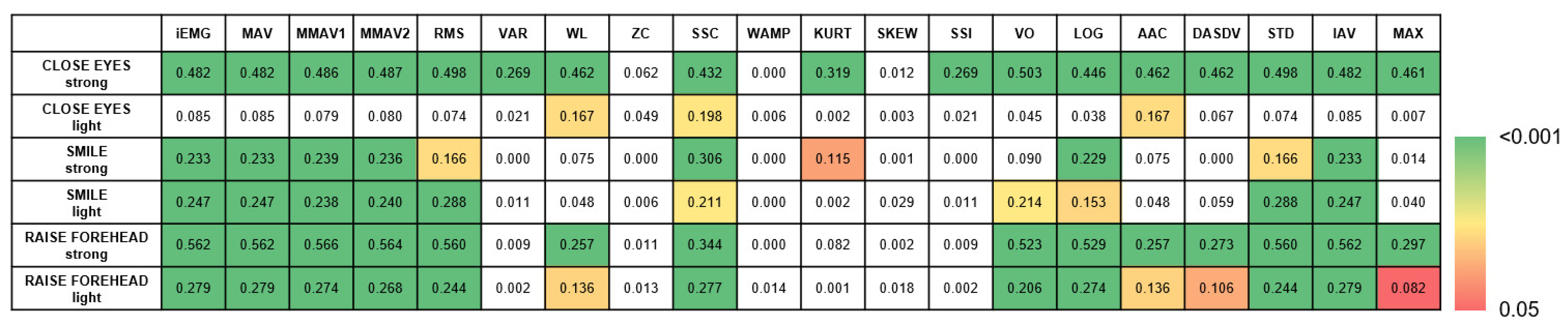

3.2. Parameters for Motion Classification and Biofeedback Applications

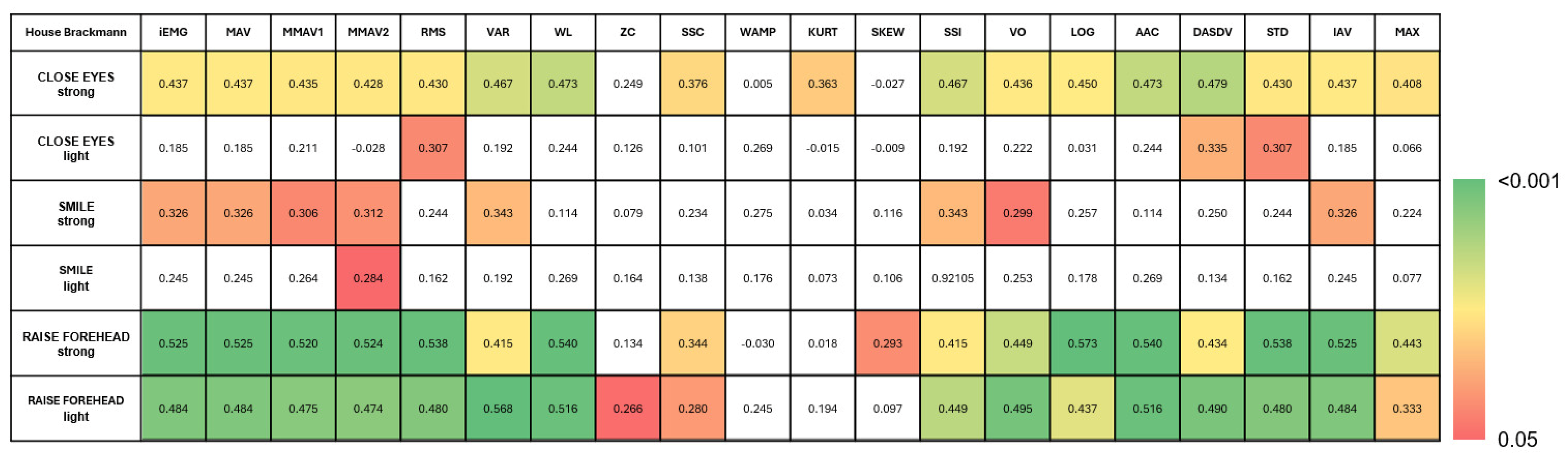

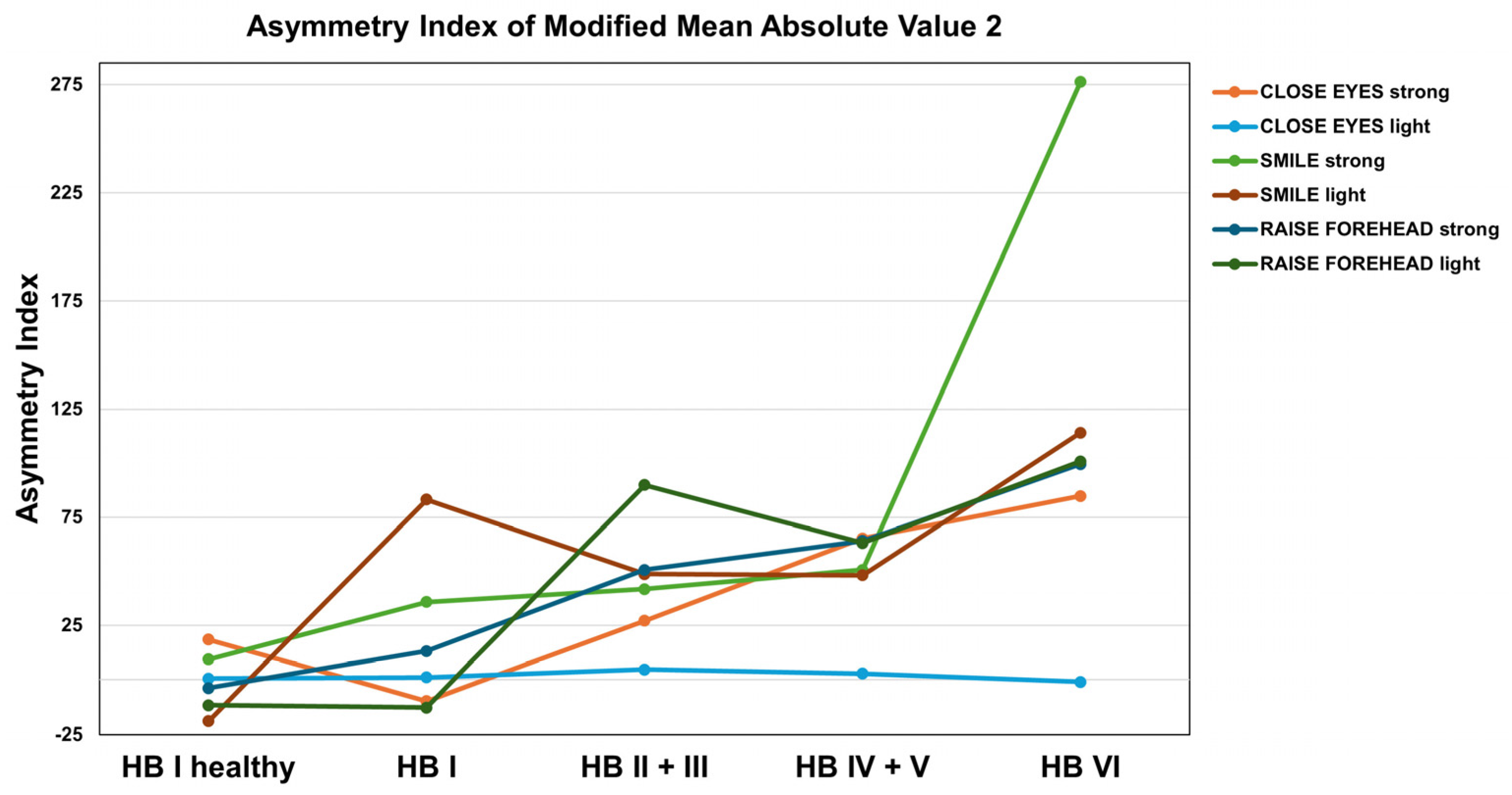

3.3. Feature Extraction for Facial Nerve Grading

4. Discussion

Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AAC | average amplitude change |
| AI | asymmetry index |
| AUC | area under the curve |
| DASDV | difference absolute standard deviation value |
| FARS | facial aberrant reinnervation syndrome |
| FP | facial palsy |
| HB | House–Brackmann score |
| IAV | integral of absolute value |
| iEMG | integrated electromyography |
| KURT | kurtosis |
| LOG | log detector |
| MAV | mean absolute value |
| MAX | maximal amplitude |
| MMAV1 | modified mean absolute value 1 |
| MMAV2 | modified mean absolute value 2 |
| QoL | quality of life |
| RMS | root mean square |
| sEMG | surface electromyography |
| SKEW | skewness |
| SSC | slope sign change |
| SSI | simple square integral |
| STD | standard deviation |
| VAR | variance |
| VO | V-order |
| VS | vestibular schwannoma |
| WAMP | Willison amplitude |
| WL | waveform length |

Appendix A

| Feature | Abbrev. | Mathematical Definition | Description |
|---|---|---|---|
| Integrated EMG | iEMG | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| Mean Absolute Value | MAV | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| Modified Mean Absolute Value 1 | MMAV1 | | $N$ = length of signal; $x_i$ = EMG signal in a segment; $w_i$ = weighting function that depends on the position within the time window |
| Modified Mean Absolute Value 2 | MMAV2 | | $N$ = length of signal; $x_i$ = EMG signal in a segment; $w_i$ = weighting function that depends on the position within the time window |
| Root Mean Square | RMS | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| Variance | VAR | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| Waveform Length | WL | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| Zero Crossing | ZC | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| Slope Sign Change | SSC | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| Willison Amplitude | WAMP | | $N$ = length of signal; $x_i$ = EMG signal in a segment; threshold in present study: 10% of the maximum absolute amplitude of the signal |
| Kurtosis | KURT | | $N$ = length of signal; $x_i$ = EMG signal in a segment; $\mu$ = mean of the data; $\sigma$ = standard deviation of the data |
| Skewness | SKEW | | $N$ = length of signal/number of signal values; $x_i$ = EMG signal at $i$; $\mu$ = mean of the data; $\sigma$ = standard deviation of the signal |
| Simple Square Integral | SSI | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| V-Order | VO | | $N$ = length of signal/number of signal values; $x_i$ = EMG signal at $i$; $v$ = order of calculation (3 in present study) |
| Log Detector | LOG | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| Average Amplitude Change | AAC | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| Difference Absolute Standard Deviation Value | DASDV | | $N$ = length of signal; $x_i$ = EMG signal in a segment |
| Standard Deviation | STD | | $N$ = length of signal; $x_i$ = EMG signal in a segment; $\mu$ = mean value of the EMG signal |
| Integral of Absolute Value | IAV | | $N$ = length of signal/number of samples; $x_i$ = EMG signal at instance $i$ |
| Maximal Amplitude | MAX | | $x(t)$ = EMG signal as a function of time; max = maximum function that returns the largest absolute signal value |
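The features listed in Appendix A can be illustrated with a short Python sketch. It assumes the conventional time-domain definitions from the sEMG feature-extraction literature, which may differ in detail (normalization, thresholds, weighting) from the formulas used in the study. The function name `semg_features`, its parameters, the zero-threshold ZC and SSC counts, the use of absolute values in the V-order term, and the `4(N - i)/N` tail weight in MMAV2 are assumptions made for illustration; only the 10% WAMP threshold and the order v = 3 are taken from the table.

```python
import numpy as np


def semg_features(x, wamp_frac=0.10, v_order=3, eps=1e-12):
    """Conventional time-domain features of one sEMG segment (1-D array).

    Hypothetical helper: definitions follow common usage, not necessarily
    the exact formulas of the study.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    ax = np.abs(x)                 # |x_i|
    dx = np.diff(x)                # x_{i+1} - x_i
    mu = x.mean()
    z = (x - mu) / x.std()         # standardized samples for SKEW and KURT

    # Position-dependent weights for the modified MAVs (i = 1..N).
    i = np.arange(1, n + 1)
    mid = (i >= 0.25 * n) & (i <= 0.75 * n)
    w1 = np.where(mid, 1.0, 0.5)
    # Assumption: linear taper towards both ends of the window.
    w2 = np.where(mid, 1.0, np.where(i < 0.25 * n, 4 * i / n, 4 * (n - i) / n))

    # WAMP threshold: 10% of the maximum absolute amplitude (from the table).
    thr = wamp_frac * ax.max()

    return {
        "iEMG": ax.sum(),
        "MAV": ax.mean(),
        "MMAV1": (w1 * ax).mean(),
        "MMAV2": (w2 * ax).mean(),
        "RMS": np.sqrt(np.mean(x ** 2)),
        "VAR": x.var(ddof=1),
        "WL": np.abs(dx).sum(),
        "ZC": int(np.sum(x[:-1] * x[1:] < 0)),      # sign changes, no threshold
        "SSC": int(np.sum(dx[:-1] * dx[1:] < 0)),   # slope sign changes
        "WAMP": int(np.sum(np.abs(dx) >= thr)),
        "KURT": np.mean(z ** 4),
        "SKEW": np.mean(z ** 3),
        "SSI": np.sum(x ** 2),
        # Assumption: |x| is used so that odd orders stay real-valued.
        "VO": np.mean(ax ** v_order) ** (1.0 / v_order),
        "LOG": np.exp(np.mean(np.log(ax + eps))),   # eps avoids log(0)
        "AAC": np.abs(dx).mean(),
        "DASDV": np.sqrt(np.mean(dx ** 2)),
        "STD": x.std(ddof=1),
        "IAV": ax.sum(),
        "MAX": ax.max(),
    }


if __name__ == "__main__":
    segment = np.array([1.0, -2.0, 3.0, -4.0])
    for name, value in semg_features(segment).items():
        print(f"{name}: {value:.4f}")
```

In a biofeedback or grading setting, such a function would typically be applied to short windows of the filtered signal, separately for each electrode. A constant segment has zero standard deviation, so KURT and SKEW are undefined for it and would need separate handling.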

| | Total n = 55 | Patients n = 48 | Healthy Subjects n = 7 |
|---|---|---|---|
| Age | 51.2 ± 14.73 | 53.85 ± 12.62 | 32.57 ± 15.7 |
| Gender | | | |
| male | 20 (36.4%) | 17 (35.4%) | 3 (42.9%) |
| female | 35 (63.6%) | 31 (64.6%) | 4 (57.1%) |
| HB grade | | | |
| I | 15 (27.2%) | 8 (16.7%) | 7 (100%) |
| II | 8 (14.5%) | 8 (16.7%) | 0 (0%) |
| III | 21 (38.2%) | 21 (43.8%) | 0 (0%) |
| IV | 5 (9.1%) | 5 (10.4%) | 0 (0%) |
| V | 4 (7.3%) | 4 (8.3%) | 0 (0%) |
| VI | 2 (3.6%) | 2 (4.2%) | 0 (0%) |
| Side of FP/or surgery | | | |
| right | 19 (34.5%) | 19 (34.5%) | 0 (0%) |
| left | 29 (52.7%) | 29 (52.7%) | 0 (0%) |
| no facial palsy/surgery | 7 (12.7%) | 0 (0%) | 7 (100%) |
| Etiology of FP | | | |
| idiopathic | 2 (3.6%) | 2 (4.2%) | 0 (0%) |
| tumor | 1 (1.8%) | 1 (2.1%) | 0 (0%) |
| iatrogenic | 37 (67.3%) | 37 (77.1%) | 0 (0%) |
| no FP | 15 (27.3%) | 8 (16.6%) | 7 (100%) |
| Period since onset of FP/surgery | - | | |
| overall | 672.27 ± 2158.78 days (=1.84 years) | 672.27 ± 2158.78 days (=1.84 years) | |
| HB I | 3.25 ± 1.04 days | 3.25 ± 1.04 days | |
| HB II-III | 850.97 ± 2431.85 days | 850.97 ± 2431.85 days | |
| HB IV-V | 839.44 ± 2444.37 days | 839.44 ± 2444.37 days | |
| HB VI | 5.00 ± 1.41 days | 5.00 ± 1.41 days | |
| FARS | | | |
| yes | 8 (14.5%) | 8 (16.7%) | 0 (0%) |
| no | 47 (85.5%) | 40 (83.3%) | 7 (100%) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Manzoor, I.; Popescu, A.; Stark, A.; Gorbachuk, M.; Spolaore, A.; Tatagiba, M.; Naros, G.; Machetanz, K. Surface Electromyographic Features for Severity Classification in Facial Palsy: Insights from a German Cohort and Implications for Future Biofeedback Use. Sensors 2025, 25, 2949. https://doi.org/10.3390/s25092949
