Abstract

This paper presents a novel image fusion method designed to enhance the integration of infrared and visible images through the use of a residual attention mechanism. The primary objective is to generate a fused image that effectively combines the thermal radiation information from infrared images with the detailed texture and background information from visible images. To achieve this, we propose a multi-level feature extraction and fusion framework that encodes both shallow and deep image features. In this framework, deep features are utilized as queries, while shallow features function as keys and values within a residual cross-attention module. This architecture enables a more refined fusion process by selectively attending to and integrating relevant information from different feature levels. Additionally, we introduce a dynamic feature preservation loss function to optimize the fusion process, ensuring the retention of critical details from both source images. Experimental results demonstrate that the proposed method outperforms existing fusion techniques across various quantitative metrics and delivers superior visual quality.

1. Introduction

Due to the limitations of imaging technology and the finite information content of a single image, capturing both the salient features and fine details of a target in one image remains a significant challenge. However, fields such as military reconnaissance, autonomous driving, and object detection demand high-quality and comprehensive image information, sparking widespread research interest in image fusion technology [1,2,3]. Among the various fusion techniques, combining infrared (IR) and visible (VI) light images has emerged as a key research focus. Infrared images excel at highlighting the thermal characteristics of target objects, improving target detection, while visible light images provide richer textures and detailed color information. Advances in image fusion technology are crucial for further enhancing the performance of these application areas.

Early developments in image fusion date back to the 1990s, when researchers began exploring traditional fusion techniques. Johnson [4] and Broussard [5] introduced a neural model called the Pulse-Coupled Neural Network (PCNN), which was first applied to image fusion two years later. The application of PCNN in image fusion is characterized by two main approaches: applying the model exclusively to the high-frequency subbands of an image or to both high- and low-frequency subbands simultaneously. The PCNN model effectively mitigated the issue of detail loss commonly associated with traditional fusion techniques. However, its intricate network architecture and complex parameter configurations presented challenges, particularly in terms of computational efficiency and generalization ability, which required further improvement. Approximately two decades later, Liu et al. [6] pioneered the application of Convolutional Neural Networks (CNNs) to image fusion tasks. CNN-based methods overcame the limitations of traditional algorithms that required manually designed fusion rules. Nonetheless, the complexity of the loss function design in CNNs still constrains their overall performance. For instance, Zhang et al. [7] introduced the PMGI framework, which utilizes an end-to-end network to model the fusion problem as one of preserving both texture and pixel intensity. Similarly, Ma et al. [8] proposed STDFusionNet, which integrates residual modules into the network to enhance infrared target visibility against visible light backgrounds. Furthermore, Xu et al. [9] developed U2Fusion, a unified end-to-end image fusion network that employs elastic weight consolidation to significantly reduce temporal and spatial complexities during training. Generative Adversarial Networks (GANs) have also been applied to image fusion. Ma et al. [3] leveraged the GAN framework introduced by Goodfellow et al. [10] for fusing infrared and visible light images. They later improved this approach by proposing DDcGAN [11], which replaced the single generator and single discriminator architecture with a single generator and dual discriminators to address the issue of modality bias in fusion results. More recently, Zhao et al. [12] proposed DDFM, a fusion algorithm based on Denoising Diffusion Probabilistic Models (DDPMs). This redefined the image fusion process as a conditional generation problem under the DDPM sampling framework. However, GAN-based methods still face challenges, such as training instability [13,14], and determining the optimal number of training iterations for the generator and discriminator remains difficult. In addition to CNNs and GANs, Autoencoders (AEs) have also been extensively studied for image fusion. For example, DeepFuse [15], proposed by Prabhakar et al., is a notable AE-based model initially designed for multi-focus image fusion. Despite its original purpose, the model has demonstrated effectiveness in the fusion of infrared and visible light images as well.

This paper focuses on image fusion methods based on Transformers, which were originally introduced by Vaswani et al. [16] in 2017 for natural language processing (NLP) tasks. In 2020, Dosovitskiy et al. [17] adapted Transformers for computer vision applications. Their self-attention mechanisms allow Transformers to effectively capture long-range dependencies in images, which is critical for understanding complex image content. Compared to traditional Convolutional Neural Networks (CNNs), Transformers process information more efficiently in parallel, significantly accelerating both training and inference. These advantages have been demonstrated in models such as SwinFusion [18], CDDFuse [19], and DATFuse [20], which have achieved impressive results in the fusion of infrared (IR) and visible (VI) light images. Despite the remarkable performance of existing Transformer-based image fusion methods, several limitations persist. First, the attention mechanism, a core component of Transformers, primarily focuses on global information while often neglecting fine-grained local details from the input images. Second, the Transformer architecture was originally designed for NLP tasks, where sentences are tokenized into semantically meaningful units based on words. However, when adapted to image processing, images are typically divided into simple patches that serve as tokens, which do not inherently convey semantic meanings analogous to those in NLP. Third, in the field of image fusion, there is a strong demand to preserve more texture details from the original images while maintaining a high level of consistency between the fused image’s style and its input sources. However, current loss functions lack the adaptability to dynamically adjust fusion objectives based on the varying properties of input images. Finally, beyond image fusion, deep learning has also been applied to analyze sensor data for structural health monitoring of buildings and bridges. By identifying abnormal patterns, potential safety issues or structural damage can be detected early, enabling timely maintenance and repairs [21,22].

To address the aforementioned issues, this paper proposes an end-to-end image fusion model called the Residual Attention-Based Image Fusion Method with Multi-Level Feature Encoding (MFRA-Fuse). To achieve the semantically meaningful tokenization of images and better preservation of local details, we introduce a Deep Semantic Encoding (DSE) module based on RepVGG [23]. However, as Transformers inherently focus more on global information, there is a risk that the semantic space generated by DSE may deviate significantly from the original image, resulting in a loss of fine details after attention computation. To mitigate this, we propose a multi-scale semantic encoding structure, where the query utilizes deep semantic encoding, while the key and value adopt traditional shallow semantic encoding, ensuring a balance between the global context and local detail preservation. Additionally, a residual cross-attention module is introduced to retain the original image information throughout the fusion process. Finally, to better handle image fusion tasks under varying brightness and environmental conditions, we design a novel loss function. This loss function dynamically assigns pixel weights based on image gradients and ensures consistency in color style across fused images, improving the adaptability and robustness of the model.

The main contributions of this paper are summarized as follows:

- A deep–shallow semantic encoding structure is proposed to provide more appropriate semantic representations for attention computation while ensuring the preservation of the original image information during the fusion process.

- A multi-level cascaded semantic encoding structure based on RepVGG is introduced, which is specifically tailored for image fusion applications, providing semantically meaningful query encoding.

- A residual cross-attention module is designed to retain the original image details during the fusion process, preventing the attention mechanism from overlooking fine-grained information during long-range computations.

- A novel pixel-level loss function is proposed, which dynamically adjusts based on image texture and color information, enabling the network to generalize effectively across diverse fusion scenarios.

2. Related Work

2.1. Traditional Infrared and Visible Image Fusion Methods

Over the past few decades, researchers have proposed numerous traditional methods for fusing infrared and visible light images. These methods include approaches based on sparse representation (SR) [24,25], multi-scale transform (MST) [26,27], saliency detection [28,29], and subspace-based algorithms [30]. Each of these methods processes images from distinct perspectives to achieve fusion, enriching the resulting fused images with detailed textures and abundant information.

Sparse representation (SR)-based fusion methods include techniques such as joint sparse representation (JSR) [31] and convolutional sparse representation (CSR) [32]. These methods employ learned sparse dictionaries to represent the source images as sparse linear combinations of dictionary atoms, followed by weighted combinations [33] to generate the fused image. Among the traditional methods, multi-scale transform (MST)-based approaches have gained particular popularity. These include wavelet-based methods [34], pyramid-based methods [35], multi-scale geometric representation methods [36], and curvelet-based methods [37]. These approaches typically decompose the source images using a common multi-scale decomposition technique, fuse the resulting low-frequency and high-frequency components, and then apply the corresponding inverse transform to reconstruct the fused image [38]. Subspace-based methods, such as those based on principal component analysis (PCA) [39] and non-negative matrix factorization (NMF) [40], are also widely employed. These methods decompose the source images into bases and coefficients, merge the bases and coefficients within an appropriate subspace, and then reconstruct the fused image. Such techniques effectively integrate structural information and texture details from different source images, producing high-quality fusion results [40]. Moreover, researchers have explored hybrid methods [41] and optimization-based methods [42] that combine the strengths of various approaches to develop fusion strategies tailored to specific application scenarios. While traditional methods have demonstrated success in fusing image details and features from multiple perspectives, they also exhibit significant limitations. These methods require the manual design of complex feature extraction and fusion rules, relying heavily on the researchers’ expertise and experiential judgment. Additionally, manually designed features often fail to capture the high-level semantic information within images, which limits the generalization capability of the resulting models [43].

2.2. Infrared and Visible Image Fusion Based on Deep Learning

Owing to the formidable capabilities of deep learning in automatic feature extraction, end-to-end solutions, generalization, and computational efficiency, it has been widely applied to various computer vision tasks, including person re-identification [44,45], image super-resolution [46], and image fusion. In the realm of infrared and visible light image fusion, deep learning-based methods can be broadly categorized into four main types: CNN-based methods [47,48], GAN-based methods [49,50,51], AE-based methods [52,53,54], and Transformer-based methods [18,20]. CNN-based fusion methods represent one of the most widely used approaches. These methods employ Convolutional Neural Networks to learn image features, followed by a fusion strategy to combine multiple input images into a single fused output. By leveraging the powerful feature learning capabilities of CNNs, these methods automatically extract important features from input images, thereby achieving high-quality image fusion. Most CNN-based methods adopt an end-to-end training paradigm, in which pairs of infrared and visible light images are input into the network. The network subsequently extracts features and fuses them through a specific fusion strategy to produce the final fused image. The performance of these methods is heavily influenced by the design of loss functions, which determine the type and amount of information retained in the final fused image. Despite their successes, current CNN-based end-to-end infrared and visible light image fusion networks primarily focus on pixel-level details, often neglecting a more comprehensive consideration of deep features and fine-grained information within the images. To address this limitation, MAPFusion [55] introduces a multi-level adaptive perceptual loss, a feature-level strategy that constrains the features of both the original and fused images. This approach simultaneously preserves low-level positional data and high-level semantic information, thereby optimizing CNN-based image fusion methods and enhancing their overall performance.

Furthermore, Generative Adversarial Networks (GANs) have demonstrated the ability to generate fused images that exhibit the characteristics of real images. GAN-based methods primarily rely on the adversarial interaction between the generator and the discriminator to optimize the model. The generator is responsible for producing fused images that preserve the intensity information of infrared images and the gradient details of visible light images, while the discriminator enforces the generator to create images with enhanced texture details [3]. This adversarial process aims to generate fused images that are indistinguishable from real ones, thereby meeting the requirements of image fusion. However, the design of the discriminator’s loss function often biases the fused image towards the visible light modality, with the infrared modality’s information primarily retained through content loss. This imbalance can lead to the loss of critical infrared image information. To address this issue, DDcGAN [11] improved the GAN framework by replacing the single-generator, single-discriminator architecture with a single-generator, dual-discriminator design. This adjustment mitigates the tendency of GAN-fused images to favor one specific modality over the other. Similarly, GANMcC [49], inspired by the PMGI method, divides the input into gradient and contrast channels. Each channel’s input is a weighted combination of infrared and visible light images based on specific rules. In this framework, the discriminator functions as a classifier, outputting a probability to determine whether the image belongs to the infrared or visible light category. Through adversarial training, the final fused image achieves a better balance between the two modalities. Despite these advancements, GAN-based methods still face challenges, particularly instability during the training process [13,14]. The adversarial interaction between the generator and discriminator can lead to issues such as non-convergence, slower training speeds, and difficulty in determining appropriate training steps, making stability an ongoing challenge in GAN-based image fusion.

Autoencoder (AE)-based methods inherit the traditional image fusion process, which involves three main steps: feature extraction, feature fusion, and feature reconstruction. AE-based methods are unsupervised approaches that do not require labeled training data. Instead, they utilize large image datasets to train an encoder–decoder architecture. The encoder is responsible for extracting features from the input images, while the decoder reconstructs the fused image. During this process, the extracted features are combined using specifically designed fusion rules, and the decoder reconstructs the final fused image. Successful reconstruction of the original input image indicates that the encoder has effectively captured the essential features from the source images. Classical AE-based methods include DeepFuse, DenseFuse [56], and VAEFuse [57]. Among these, DenseFuse incorporates an encoding network composed of convolutional layers, fusion layers, and dense block layers, which significantly enhance its feature extraction capabilities. This design helps mitigate the issue of information loss in the intermediate layers of deep networks. However, maintaining a balance between network performance and the number of parameters remains an open challenge. On the other hand, VAEFuse introduced the use of Variational Autoencoders (VAEs) into the field of image fusion for the first time. Its architecture consists of an image fusion network and an infrared feature compensation network. The encoder processes the infrared and visible light images to generate latent vectors, which are then fused into a unified vector using the product of Gaussian probability densities. The decoder subsequently reconstructs the fused vector into the final fused image. While AE-based methods provide an effective framework for feature extraction and image reconstruction, their complex training processes lead to high computational costs, which remain a significant consideration when deploying these methods.

2.3. Vision Transformer

The Transformer architecture, originally introduced by Vaswani et al. for applications in natural language processing (NLP) [16], has since been successfully adapted for computer vision tasks. Its application to infrared and visible image fusion (IVIF) tasks was first pioneered by IFT in 2020 [58]. Building on this foundation, PPTFusion [59] introduced a Transformer-based framework that employs patch Transformers to extract local features and pyramid Transformers to capture global information. Similarly, YDTR [60] proposed a dynamic Transformer capable of simultaneously extracting both local and global features, further advancing the capabilities of Transformers in IVIF tasks. In addition, CDDFuse [19] integrated attention mechanisms into the image fusion framework, utilizing both the long-term and short-term attention capabilities of Transformers to extract shared features across modalities. This approach significantly improved the quality of fused images by effectively aligning information from different sources. However, the high computational demands of most Transformer-based methods have hindered their deployment in mobile and resource-constrained environments compared to their use in larger networks. To address these limitations, Wu et al. proposed LT [61], a lightweight Transformer designed specifically for mobile devices. LT achieves efficiency by combining short- and long-range attention mechanisms with a flattened feedforward network, reducing computational overhead without compromising performance. Compared to traditional Convolutional Neural Networks (CNNs), Transformers offer significant advantages in accelerating model training and inference processes. Nevertheless, their substantial computational resource requirements remain a formidable challenge, particularly for applications requiring real-time processing or deployment on devices with limited hardware capabilities.

3. Method

In this section, we first introduce the overall structural framework of the proposed MFRA-Fuse model in Section 3.1. Next, Section 3.2 provides a detailed explanation of the two encoding schemes introduced in this study: Deep Semantic Embedding and Shallow Semantic Embedding. Then, the structure of the fusion module, based on the residual cross-attention mechanism, is thoroughly described in Section 3.3. Finally, the loss function used in our work is presented in Section 3.4.

3.1. Framework Overview

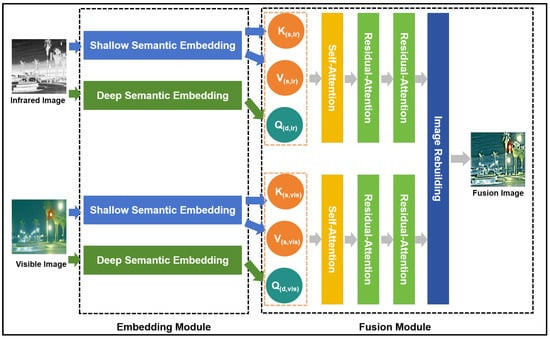

As illustrated in Figure 1, the proposed MFRA-Fuse architecture consists of two main components: the embedding module and the fusion module.

Figure 1.

The architecture of our MFRA-Fuse.

Embedding Module: Unlike traditional Vision Transformer (ViT) methods, which typically employ a uniform shallow encoding approach for the query, key, and value, this study introduces a deep semantic encoding architecture specifically for encoding the query, while the keys and values are encoded using a shallow encoding architecture. In natural language, each word inherently carries a complete semantic meaning developed over the evolution of human language. By encoding these words and directly computing the similarity scores between the query and key, followed by a linear transformation of the value’s semantic space, tokens can effectively learn accurate contextual semantic relationships. However, in the case of image tokens derived from shallow encodings of image patches, the contextual semantic relationships between tokens are not as strong as in natural language. Therefore, a deeper feature extraction encoding method is necessary to enhance the contextual semantic associations of query tokens in high-dimensional linear spaces.

Nonetheless, deeper semantic encodings tend to deviate from the original image content. If attention computations are performed directly in the deep semantic space, it becomes challenging to preserve the original detailed information of the image. As a solution, we retain the traditional shallow encodings from the original ViT architecture for the key and value components. Ablation experiments in Section 4 validate the effectiveness of the proposed method.

Fusion Module: The query (Q), key (K), and value (V) are derived from two distinct encoding strategies: Shallow- and Deep Semantic Embeddings. To better capture the relationships between the encoded tokens generated by these methods, self-attention computations are first performed separately on the Q, K, and V matrices of the infrared and visible images. Following this, two rounds of residual cross-attention computations are applied to integrate information from both the visible and infrared modalities.

Traditional attention modules primarily focus on long-range information, but after multiple iterations of cross-attention in image fusion tasks, the retention of fine-grained details tends to degrade. To address this issue, we propose the residual cross-attention (RCA) mechanism, which introduces a residual connection between the original input value and the output of the attention computation. This residual connection helps preserve the finer details of the images during fusion. The effectiveness of the RCA method is demonstrated through comparative experiments as discussed in Section 4.2.

3.2. Embedding Module

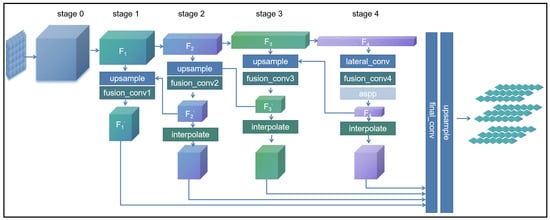

Deep Semantic Embedding: The deep semantic encoding architecture is divided into four stages, as shown in Figure 2. The initial encoding scheme for these stages is inspired by the RepVGG design, utilizing the first four layers of the RepVGG-A0 structure. The output features from each stage are denoted as . To enhance the integration of information across different feature layers, pyramid feature transformation and fusion are applied to as described by Equation (1) and illustrated in Figure 3a:

Figure 2.

The architecture of Deep Semantic Encoding (DSE).

Figure 3.

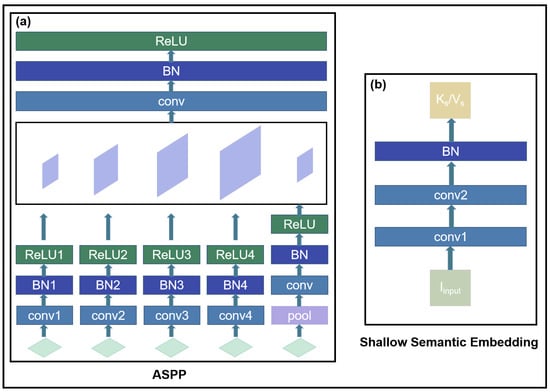

(a) The architecture of Atrous Spatial Pyramid Pooling (ASPP); (b) the architecture of Shallow Semantic Embedding.

Subsequently, a recursive computation approach is applied to iteratively process the first three stages in reverse order, as formulated in Equations (2) to (4):

Finally, the cascaded features from the four stages are concatenated and passed through the final encoding process as defined by Equation (5) and denoted as :

Shallow Semantic Embedding: As mentioned earlier, Shallow Semantic Embedding (SSE) is designed to extract key and value pairs. During the subsequent residual cross-attention fusion process, the value is connected to the attention computation results via residual connections, helping to better preserve image detail information. To retain more of the original image content, we use only two convolutional layers and a batch normalization layer during the shallow feature extraction stage. The corresponding computation method is defined in Equation (6) and illustrated in Figure 3b:

3.3. Fusion Module

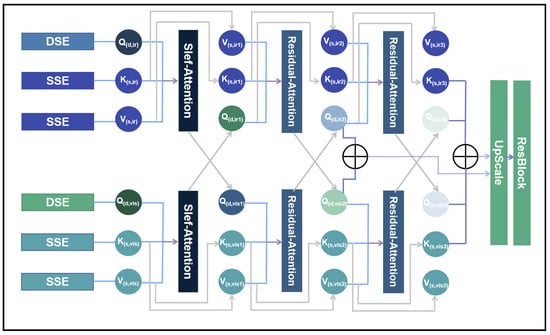

In the fusion module, we propose an enhanced approach based on the cross-attention mechanism, introducing the residual cross-attention (RCA) method. As shown in Figure 4, after passing through the deep and shallow feature extraction networks, the infrared images are encoded and represented at each layer as , where (n = 1, 2, 3). Similarly, the visible images are encoded and represented as , where (n = 1, 2, 3). During the residual cross-attention computation, the encoded query, key, and value of the infrared and visible images are first processed separately through the self-attention module. Then, two iterations of the residual cross-attention module are applied.

Figure 4.

The structure of the residual cross-attention.

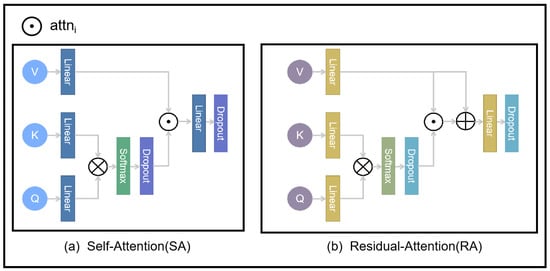

First, as shown in Figure 5, the computation methods for both the self-attention and Residual-Attention modules begin with an initial linear transformation of the query, key, and value. This can be expressed by Equations (7) to (9):

Figure 5.

The structure of the SA and RA modules.

The terms represent the query, key, and value, respectively, following their transformation through linear layers. These projections enable the attention mechanism’s dimensional alignment and feature transformation. The next step is to compute the attention mechanism (where (n = 1, 2, 3)) using the linearly transformed representations , , and . This process is formally expressed in Equation (10).

The term is a scaling factor that mitigates the risk of the softmax function converging to regions with minimal gradients as the dot product values increase. By scaling the dot product, this factor helps maintain more stable gradient magnitudes during the attention mechanism.

First, in the computation of self-attention (SA), no further processing is required for , and its calculation is defined by Equation (11). To better preserve the detailed information of the input images during the fusion process, both and are retained simultaneously in the residual attention fusion process. The structure of the Residual-Attention (RA) module is illustrated in Figure 4, and its corresponding calculation is expressed in Equation (12):

In both the self-attention and residual cross-attention mechanisms, the output is further passed through a linear layer and a dropout layer to enhance the stability of the training process. As shown in Figure 4, after two iterations of residual cross-attention fusion, the outputs from both layers are concatenated and fused to jointly reconstruct the fused image. The outputs of the fusion layer are denoted as (where (i = 1, 2)):

The final image reconstruction module consists of a simple MLP. The fused image, denoted as fuse, is computed as shown in Equation (15):

3.4. Loss Function

Developing an appropriate loss function is pivotal for improving fusion performance. In this study, the proposed loss function is formulated as Equation (16):

Given the distinct imaging mechanisms of infrared (IR) and visible (VI) images, the loss function proposed in this method ensures that the fusion results effectively preserve sufficient details while emphasizing salient information. Specifically, , , and represent the pixel loss, texture loss, and color loss, respectively. The hyperparameters , , and are introduced to balance the contributions of these three loss terms. Based on extensive experiments, Ref. [62] demonstrates that the optimal texture of the fused image can be expressed as the maximum aggregation of the textures from the infrared and visible images. Inspired by Refs. [62,63], the texture details of an image can be effectively represented by the maximum aggregation of its gradients. Accordingly, the texture loss is designed to regulate the gradients of the fused image as formulated in Equation (17):

Here, ∇ represents the Sobel operator, which is used to compute the gradient. The notation denotes the -norm, and refers to the fused image. The variables (H) and (W) represent the height and width of the image, respectively, while the operation (max) indicates the element-wise maximum selection.

For pixel-wise loss, this paper proposes an infrared and visible image fusion loss function based on gradient-adaptive weighting. Specifically, the gradient information of the two input images is first computed using the Sobel operator. The larger gradient value at each pixel location is selected by comparison between the two images. To facilitate unified computation and obtain smoother weighting factors, the selected gradient values are transformed using a softmax function, which serves as the adaptive loss weight:

where ∇ represents the Sobel operator employed to compute the gradient. This approach preserves pixels with rapid gradient changes in the texture-detailed regions of the images, while in regions with smooth color transitions, the fused image is encouraged to approximate the visible image. This is because visible images typically capture richer color information, which is desirable for the final fused result. The notation represents the Smooth l_1 norm:

The color loss is divided into two components.

Since thermal infrared imaging systems output grayscale images without color information, the first component focuses on ensuring that the fused image in the RGB color space closely resembles the visible image in terms of visual perception to the human eye.

To ensure that the fused image achieves a more harmonious appearance in the RGB color space, the images are first separated into their RGB components. The mean of the red channel from both the fused and visible light images is calculated to serve as a weighting factor for the Euclidean distance calculation in the color space for the red and blue channels:

Meanwhile, the weight for the green channel is fixed at four. This weighting strategy takes into account the varying sensitivity of the human visual system to different color channels, with green typically being perceived as the most sensitive and therefore given greater weight:

Finally, the Euclidean distance is transformed through an exponential function to obtain a color space similarity score:

4. Experiments and Results

In this section, we first introduce the datasets and provide a detailed description of the implementation settings. Next, we outline the comparative experimental methodologies and the objective evaluation metrics employed in this study. Following that, we present and analyze the experimental results, accompanied by a comprehensive discussion. Furthermore, ablation studies are conducted to investigate the contributions of individual components in greater detail.

4.1. Datasets and Implementation Settings

In this study, we use the publicly available RoadScene and M3FD datasets, selecting 219 pairs of infrared and visible (IV) images from each. The RoadScene dataset covers a diverse array of scenarios, including roadways, pedestrians, and other common elements, while the M3FD dataset offers source images captured under challenging conditions, such as intense glare, low-light environments, and complex weather phenomena including rain and fog. These two datasets are key resources for image fusion in real-world scenarios. Additionally, we employ the Harvard dataset, a medical imaging repository provided by Harvard Medical School, which contains both normal and pathological brain images across multiple modalities, including MRI, PET, and SPECT. These images are randomly cropped into sample patches of size for training. To train the proposed MFRA-Fuse model, we utilize the AdamW optimizer with an initial learning rate of and a weight decay of . All input images are randomly cropped to a size of . The experiments are conducted using an NVIDIA GeForce RTX 3090 GPU, which is designed and manufactured by NVIDIA Corporation and the framework is implemented in PyTorch.

4.2. Comparative Methods and Objective Evaluation Metrics

Comparative Methods: To evaluate the effectiveness and superiority of the proposed method, comparisons are conducted with six state-of-the-art approaches, including two variants of DenseFusion [64] (one using the addition loss function and the other employing the L1-norm loss function), LRRNet [65], RFN-Nest [66], SwinFusion [18], and U2Fusion [9]. The source codes for all the compared methods are publicly available, and their parameters are configured following the recommendations provided in their respective original papers to ensure a fair and accurate comparison.

Objective Evaluation Metrics: In this study, seven objective evaluation metrics are selected to comprehensively assess the performance of image fusion methods, each capturing different aspects of the fused image’s quality:

Information-based metrics include EN (entropy). Following Equation (29), , which denotes the grayscale intensity distribution of an image, where N is the total number of discrete grayscale levels and each represents the corresponding probability (or frequency) of the i-th level, which quantifies the amount of information contained in the fused image,

and MI (Mutual Information). In this context, and represent the edge distribution histograms of the fused image F and the source image X, respectively. (f,x) denotes the joint distribution histogram of the fused image F and the source image X which measures the amount of information transferred from the source images to the fused image:

Feature-based metrics include AG (Average Gradient), which reflects the richness of texture and detail information by measuring the gradient information of the fused image,

SF (Spatial Frequency), RF, which represents the row frequency, and CF, which represents the column frequency and which evaluates the preservation of texture and detail information from the source images in the fused image:

where SD (Standard Deviation) characterizes the contrast distribution of the fused image. In Equation (35), represents the mean gray level of the fused image. Given that the human visual system is inherently more sensitive to regions with higher contrast, areas with greater contrast in the fusion process are perceived more favorably. Therefore, higher contrast generally leads to better fusion results:

Additionally, the correlation-based metric CC (Correlation Coefficient) is utilized to evaluate the linear relationship between the fused image and the source images. In Equation (36), and are typically set to 0.5. In Equation (37), and represent the mean values of the source image x and the fused image f, respectively:

And, the perception-based metric VIF (Visual Information Fidelity) objectively evaluates the fused image’s quality by reflecting the subjective visual perception of human observers. In Equation (39), and represent the amount of information obtained by the human brain from the source images and the fused results, respectively. is defined as the vector of all blocks in a specific subband. and denote the S-th elements derived from (the output signal of the HSV model with the i-th subband of the source image as input) and (the output signal of the HSV model with the i-th subband of the fused image as input), respectively. Moreover, represents the model parameters of the i-th subband. A higher VIF value indicates that the generated image is more consistent with the human visual perception system.

Finally, the Structural Similarity Index Measure (SSIM) is employed to assess structural differences. This metric evaluates three key aspects of information: luminance, structure, and contrast, as defined in Equation (40). In this context, represents the source image ( or ), while and denote the mean and standard deviation, respectively. The constants , , and are introduced to avoid division by zero. A higher structural similarity score indicates reduced information loss during the fusion process, thereby reflecting better performance of the fused image.

4.3. Results and Discussion

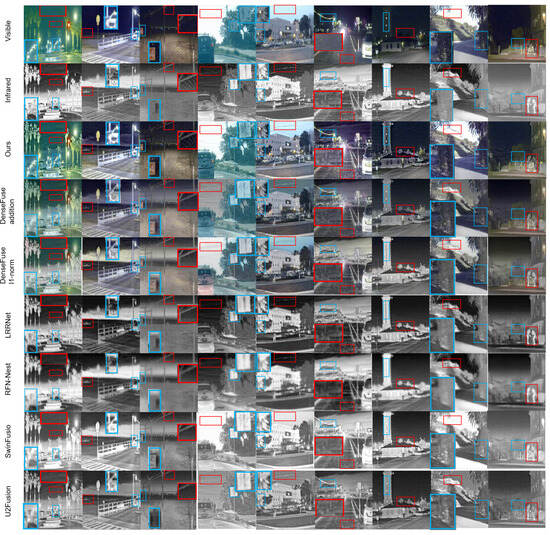

As illustrated in Figure 6, the fusion results for nine pairs of visible and infrared images from the RoadScene dataset are presented, with each image emphasizing two specific details. Although all seven methods demonstrate varying degrees of effectiveness in image fusion, six of them reveal certain limitations or deficiencies in preserving or enhancing these finer details during the fusion process.

Figure 6.

The results of fusing nine pairs of visible and infrared images from the RoadScene dataset.The red box indicates clear textures in the infrared image, and the blue box represents clear textures in the visible light image.

It can be observed that, in terms of texture details, both LRRNet and RFN-Nest exhibit relatively inferior performance, with blurred image edges. This is particularly evident in the comparisons within the red box in the third column and the blue box in the fourth column (from left to right). In the third column, only the proposed method (Ours) and SwinFusion manage to preserve the intertwined grid-like texture. In the fourth column, the road sign information is most comprehensively retained by the proposed method (Ours), while DenseFusion (with the addition of a loss function) and SwinFusion also manage to preserve some contours.

Regarding color style, although DenseFusion generates color images, inconsistencies in color representation are noticeable compared to the original visible-light images. This is especially evident in the first column on the left, where the color of the leaves deviates significantly from the original. This further highlights the effectiveness of the proposed color loss function, which enables the output images to better preserve a color style closer to the true scene. However, there is still room for improvement in both the proposed method (Ours) and SwinFusion in certain specific details. For instance, in the eighth image from the left, the building is only partially represented by its edge contours. This limitation arises because the attention mechanism prioritizes global information, while the proposed method emphasizes maintaining consistency between the color style of the fused image and the real-world scene. As a result, texture detail recovery in areas of high exposure remains insufficient.

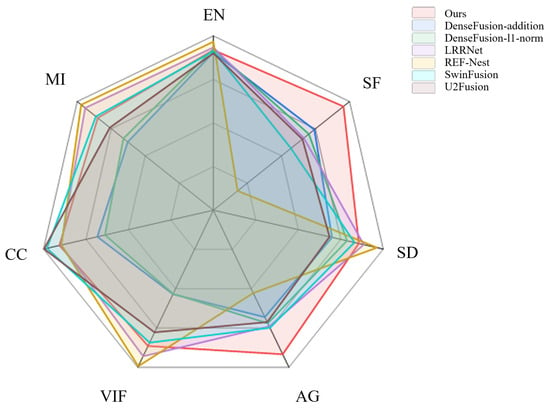

Based on the objective metrics evaluated across the seven methods as shown in Table 1 and Figure 7, our proposed method achieves the best performance in both SF and AG. For the remaining metrics, it consistently ranks within the top three. Furthermore, unlike other methods, our approach does not exhibit any significant weaknesses in specific metrics. For example, DenseFusion performs notably worse in CC, while RFN-Net shows poor performance in SF. These findings further emphasize the balanced and robust performance of our method across various evaluation criteria. Additionally, to validate the generalization capability of the proposed method, we conducted experiments on the M3FD dataset. The fusion results on the M3FD dataset are shown in Figure 8.

Table 1.

Performance comparison of our method with existing image fusion approaches.

Figure 7.

Performance comparison of our method with existing image fusion approaches.

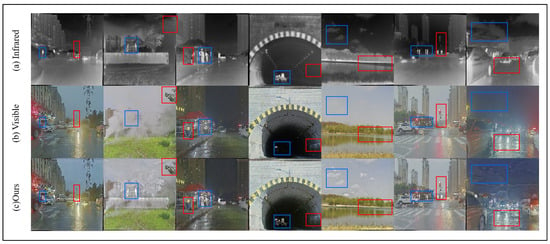

Figure 8.

The results of image fusion on the M3FD dataset.

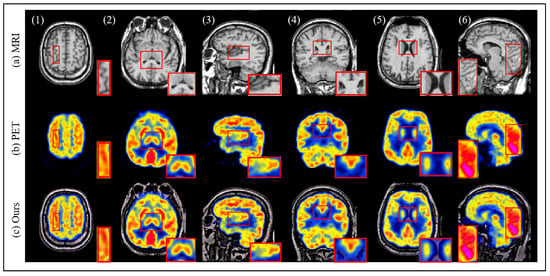

To further evaluate the robustness of the proposed method, we utilize the Harvard dataset for testing. The fusion results are depicted in Figure 9. Based on the data provided in [9], we compare the proposed method with six other medical image fusion techniques. As shown in Table 2, the proposed method ranks within the top three across all four evaluated metrics, with SSIM achieving the best performance among the six methods. Additionally, ablation studies conducted on both datasets not only confirm the effectiveness of the proposed method but also underscore its strong generalization capability.

Figure 9.

The results of image fusion on the Harvard dataset.The red box displays the texture details of the PET image, MRI image and the fused image.

Table 2.

Four Metrics on Medical Image Fusion on the Harvard Dataset.

4.4. Ablation Studies

In this section, we conduct three ablation experiments to evaluate the effectiveness of Shallow and Deep Semantic Embeddings, the contribution of the RCA structure, and the improvements introduced by the deep encoding architecture. Furthermore, we analyze specific configurations, investigate the impact of the hyperparameters , , and , discuss the rationale behind the selection of various optimization algorithms, and provide a comparative evaluation of different normalization functions.

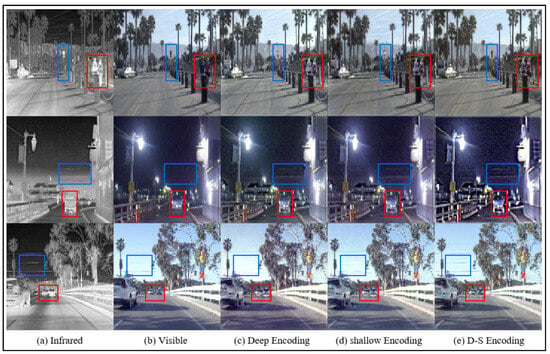

Shallow and Deep Semantic Embedding: To evaluate the effectiveness of applying Deep Semantic Embedding and Shallow Semantic Embedding to the encoding of Q, K, and V, we conduct experiments in which the Q, K, and V of the RCA module are encoded using the same shallow embedding. Additionally, experiments are performed where Q is encoded using the Deep Semantic Embedding (DSE) module, while K and V are encoded using the Shallow Semantic Embedding (SSE) module.

Validation on the RoadSense and M3FD datasets as shown in Table 3, reveals that the DSE + RCA method achieves significant improvements in the SF, SD, and VIF metrics, with a slight decrease in AG, while other metrics remain generally stable. The overall comparison of metrics demonstrates that incorporating Deep Semantic Embedding enhances the effectiveness of image information fusion. Furthermore, as illustrated in Figure 10, the fusion results of the three methods, namely deep embedding, shallow embedding, and the proposed deep–shallow embedding, are visually compared. It is evident that details such as the person, the blue car, and the fine electrical wires in the sky are most clearly preserved with the deep–shallow embedding approach.

Table 3.

Comparison of DSE + RCA and RCA under different settings.

Figure 10.

The results of deep embedding, shallow embedding, and the proposed deep–shallow embedding image fusion on the M3FD dataset. The blue box indicates clear textures in the infrared image, and the red box represents clear textures in the visible light image.

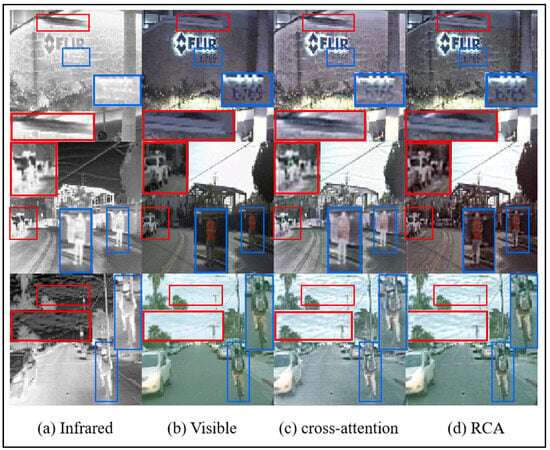

RCA Module: Attention mechanisms often prioritize global information, which can lead to the transformation of original input features and the loss of some details during long-range computations. To better preserve the original fine-grained features in fused images, we propose the residual cross-attention (RCA) mechanism. A comparison of the metrics for images fused using the RCA module versus those fused with a standard cross-attention mechanism, as shown in Table 4, demonstrates that the RCA module effectively enhances the SD, AG, VIF, and MI metrics across both datasets, while the remaining metrics remain largely unaffected. Furthermore, Figure 11 provides a visual comparison of fusion results, highlighting the RCA method’s superiority. With RCA, both fine details, such as the numbers and the car in the corner, and primary texture information, such as the person and the clouds in the sky, are more clearly preserved in comparison to the standard cross-attention approach.

Table 4.

Comparison of DSE + RCA and DSE + cross under different settings.

Figure 11.

The results of RCA and cross-attention image fusion on the M3FD dataset.The red box indicates clear textures in the infrared image, and the blue box represents clear textures in the visible light image.

Deep Semantic Embedding Architecture: In this paper, we propose an enhanced Deep Semantic Embedding method for image fusion tasks by improving the RepVGG model with pyramid semantic extraction and cascade fusion feature encoding structures. To evaluate the effectiveness of this approach, we conduct comparisons across three fusion methods on two datasets: (1) using only the RCA structure, (2) using the RepVGG + RCA structure, and (3) using the DSE + RCA structure. The results, presented in Table 5, show that while employing RepVGG as the deep semantic encoder yields only marginal improvements in image fusion metrics compared to using the RCA model alone, using DSE as the deep semantic encoder achieves significant improvements across multiple metrics. These findings demonstrate that the proposed method effectively enhances the extraction of query features for attention mechanism calculations.

Table 5.

Comparison of RCA, RepVgg + RCA, and DSE + RCA under different settings.

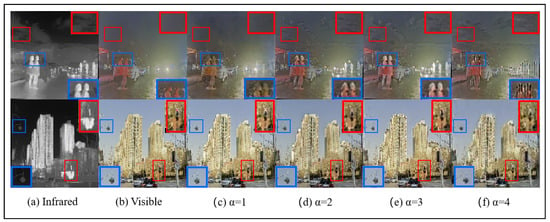

Hyperparameter α: As discussed in Section 3.3, the hyperparameter is used to regulate pixel loss. In our experiments, is set to 1, 2, 3, and 4. The results, summarized in Table 6, show that the overall performance metrics are optimal when = 3. Additionally, the visual comparisons in Figure 12 highlight that texture details are best preserved when = 3. When is set to smaller values, image details—such as the contours of human figures, the background sky, and cloud textures—appear noticeably blurred. Conversely, when = 4, an over-sharpening effect becomes apparent.

Table 6.

Comparison of under different settings.

Figure 12.

The results of different set fusion image on the M3FD dataset. The blue box indicates clear textures in the infrared image, and the red box represents clear textures in the visible light image.

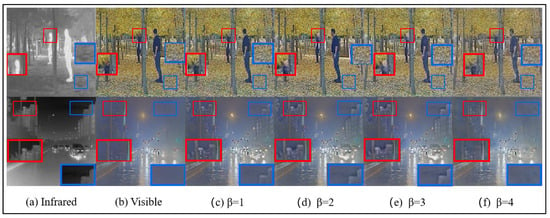

Hyperparameter β: is a crucial hyperparameter used to regulate texture loss in image fusion. To evaluate its effect, we conduct comparative experiments with set to 1, 2, 3, and 4. The results of the image fusion metrics under varying values are summarized in Table 7. The overall performance is optimal when is set to either 2 or 3. However, as illustrated in Figure 13, when , details such as the windows of buildings and the contours of human figures are more distinctly preserved.

Table 7.

Comparison of under different settings.

Figure 13.

The results of different set fusion image on the M3FD dataset.The blue box indicates clear textures in the infrared image, and the red box represents clear textures in the visible light image.

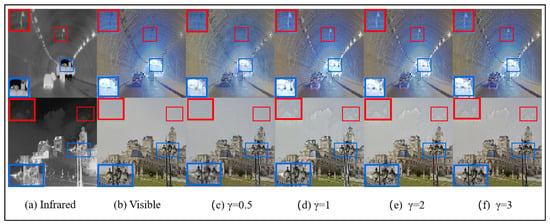

Hyperparameter γ: As discussed in Section 3.3, is a key hyperparameter that controls the color loss in image fusion. To assess its impact, we conduct a series of comparative experiments with set to 0.5, 1, 2, and 3. The results, summarized in Table 8, indicate that the overall performance metrics are optimized when . Additionally, a visual comparison provided in Figure 14 illustrates that when , finer details—such as the buildings at the end of the tunnel and the intricate shapes of the streetlights—are better preserved.

Table 8.

Comparison of under different settings.

Figure 14.

The results of different set fusion image on the M3FD dataset.The blue box indicates clear textures in the infrared image, and the red box represents clear textures in the visible light image.

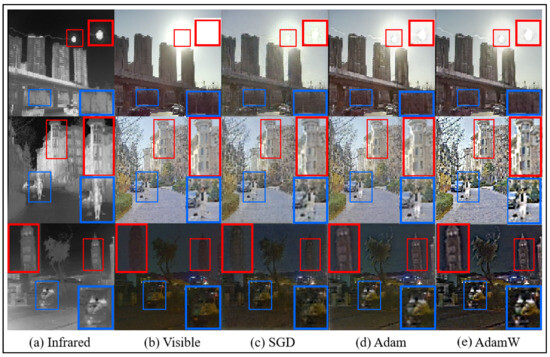

Optimizer: To enhance the training performance of the proposed model, we compare three widely used optimizers in deep learning: SGD, Adam, and AdamW. The test results, shown in Figure 15, indicate that the AdamW optimizer achieves superior performance compared to the others. Specifically, AdamW demonstrates optimal results in preserving details such as human subjects, architectural elements, high-exposure sunlight, and shadow textures. Based on these findings, this study adopts AdamW as the optimizer for model training.

Figure 15.

The results of different optimizers set fusion image on the M3FD dataset.The blue box indicates clear textures in the infrared image, and the red box represents clear textures in the visible light image.

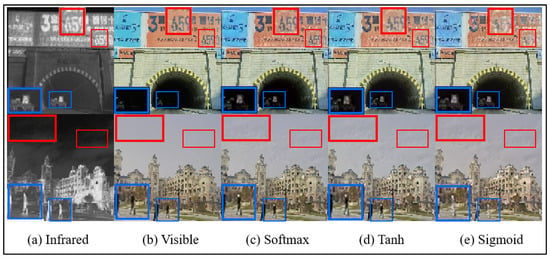

Normalization Function: In Section 3.3, the softmax function is selected as the normalization method for computing grad_weight in the texture loss function. To assess the impact of different normalization functions on image fusion performance, we conduct comparative experiments using three alternatives: sigmoid, softmax, and tanh. As shown in Table 9, the overall performance of all three normalization functions is relatively comparable in terms of quantitative image fusion metrics. However, as illustrated in Figure 16, the softmax function produces superior visual fusion results, particularly in preserving fine details such as human contours, text on billboards, and vehicles in darker areas such as tunnels. Based on these findings, the softmax function is adopted as the normalization method for computing grad_weight in this study.

Table 9.

Comparison of normalization function under different settings.

Figure 16.

The results of different normalization function set fusion images on the M3FD dataset.The blue box indicates clear textures in the infrared image, and the red box represents clear textures in the visible light image.

Computational Efficiency: Based on the data presented in [7], the average computation times of eight different fusion methods on the RoadScene dataset are as follows: DATFuse: 0.1248 s; DenseFuse: 0.1082 s; FusionGAN: 0.1401 s; RFN-Nest: 0.1515 s; SwinFusion: 0.9637 s; U2Fusion: 0.0682 s; ATFFuse: 0.1081 s; and the proposed method: 0.135 s. The computational speed of our method is moderate compared to other image fusion methods. This is primarily attributed to the multiple feature extraction and data transformation steps involved, which introduce additional computational overhead. Nonetheless, this slight increase in computation time is a reasonable trade-off, as the proposed method achieves significantly improved image fusion performance as demonstrated in the earlier sections.

5. Conclusions

This paper addresses the limitations of existing encoding methods in Transformer architectures when applied to the image domain, where image tokens often lack the precise and meaningful semantics that natural language tokens possess. Moreover, preserving the fine details of the original input images remains a significant challenge in image fusion tasks. To tackle these issues, we propose an innovative deep–shallow semantic encoding structure. Building on this foundation, we introduce a multi-stage cascade fusion strategy that leverages deep semantic encoding. In addition, we are the first to propose a residual cross-attention module, designed to retain more of the original image details during long-range computations. To further enhance adaptability to diverse image scenarios, we devise a novel fusion function based on image gradients and color style control.

Comprehensive ablation experiments were conducted on the RoadScene and M3FD datasets, comparing our method with six state-of-the-art techniques. The results demonstrate that the proposed approach achieves superior overall performance in terms of both qualitative and quantitative evaluations. Looking ahead, future work will focus on enhancing the fusion efficiency and adaptability of the proposed method. Specifically, we aim to design a structure capable of automatically selecting the depth of feature encoding layers, achieving an optimal balance between shallow and deep features. Additionally, we plan to streamline the image reconstruction process to reduce computational overhead while further improving the method’s adaptability across various image fusion scenarios.

Author Contributions

H.L. proposed the methodology of the paper, conducted code implementation, and experimental design, and contributed to part of the manuscript writing. T.Y. participated in experimental design, data processing, and code implementation. R.W. contributed to manuscript writing and experimental data processing. C.L. provided guidance. S.Z. provided guidance. X.G. is the supervising professor, and provides guidance, oversight, and funding support. All authors have read and approved the final version of the manuscript.

Funding

This work is supported by The Chinese Academy of Sciences Key Deployment Scientific Research Special Fund [grant numbers KGFZD-145-24-03-03].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Pohl, C.; Van Genderen, J. Review article multisensor image fusion in remote sensing: Concepts, methods and applications. Int. J. Remote Sens. 1998, 19, 823–854. [Google Scholar] [CrossRef]

- Li, S.; Kang, X.; Hu, J. Image fusion with guided filtering. IEEE Trans. Image Process. 2013, 22, 2864–2875. [Google Scholar]

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26. [Google Scholar] [CrossRef]

- Johnson, J. Pulse-coupled neural nets: Translation, rotation, scale, distortion, and intensity signal invariance for images. Appl. Opt. 1994, 33, 6239–6253. [Google Scholar] [CrossRef] [PubMed]

- Broussard, R.; Rogers, S. Physiologically motivated image fusion using pulse-coupled neural networks. Appl. Sci. Artif. Neural Netw. II 1996, 2760, 372–383. [Google Scholar]

- Liu, Y.; Chen, X.; Peng, H.; Wang, Z. Multi-focus image fusion with a deep convolutional neural network. Inf. Fusion 2017, 36, 191–207. [Google Scholar] [CrossRef]

- Zhang, H.; Xu, H.; Xiao, Y.; Guo, X.; Ma, J. Rethinking the image fusion: A fast unified image fusion network based on proportional maintenance of gradient and intensity. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12797–12804. [Google Scholar] [CrossRef]

- Ma, J.; Tang, L.; Xu, M.; Zhang, H.; Xiao, G. STDFusionNet: An infrared and visible image fusion network based on salient target detection. IEEE Trans. Instrum. Meas. 2021, 70, 1–13. [Google Scholar] [CrossRef]

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 502–518. [Google Scholar] [CrossRef]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27. [Google Scholar]

- Ma, J.; Xu, H.; Jiang, J.; Mei, X.; Zhang, X. DDcGAN: A dual-discriminator conditional generative adversarial network for multi-resolution image fusion. IEEE Trans. Image Process. 2020, 29, 4980–4995. [Google Scholar]

- Zhao, Z.; Bai, H.; Zhu, Y.; Zhang, J.; Xu, S.; Zhang, Y.; Zhang, K.; Meng, D.; Timofte, R.; Van Gool, L. DDFM: Denoising diffusion model for multi-modality image fusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 4–6 October 2023; pp. 8082–8093. [Google Scholar]

- Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A. Generative adversarial networks: An overview. IEEE Signal Process. Mag. 2018, 35, 53–65. [Google Scholar] [CrossRef]

- Arjovsky, M.; Bottou, L. Towards principled methods for training generative adversarial networks. arXiv 2017, arXiv:1701.04862. [Google Scholar]

- Ram Prabhakar, K.; Sai Srikar, V.; Venkatesh Babu, R. Deepfuse: A deep unsupervised approach for exposure fusion with extreme exposure image pairs. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4714–4722. [Google Scholar]

- Vaswani, A. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008. [Google Scholar]

- Dosovitskiy, A. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Ma, J.; Tang, L.; Fan, F.; Huang, J.; Mei, X.; Ma, Y. SwinFusion: Cross-domain long-range learning for general image fusion via swin transformer. IEEE/CAA J. Autom. Sin. 2022, 9, 1200–1217. [Google Scholar] [CrossRef]

- Zhao, Z.; Bai, H.; Zhang, J.; Zhang, Y.; Xu, S.; Lin, Z.; Timofte, R.; Van Gool, L. Cddfuse: Correlation-driven dual-branch feature decomposition for multi-modality image fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5906–5916. [Google Scholar]

- Tang, W.; He, F.; Liu, Y.; Duan, Y.; Si, T. DATFuse: Infrared and visible image fusion via dual attention transformer. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3159–3172. [Google Scholar] [CrossRef]

- Wang, L.; Yi, S.; Yu, Y.; Gao, C.; Samali, B. Automated ultrasonic-based diagnosis of concrete compressive damage amidst temperature variations utilizing deep learning. Mech. Syst. Signal Process. 2024, 221, 111719. [Google Scholar]

- Yu, Y.; Zhang, C.; Xie, X.; Yousefi, A.; Zhang, G.; Li, J.; Samali, B. Compressive strength evaluation of cement-based materials in sulphate environment using optimized deep learning technology. Dev. Built Environ. 2023, 16, 100298. [Google Scholar]

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. Repvgg: Making vgg-style convnets great again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13733–13742. [Google Scholar]

- Wei, Q.; Bioucas-Dias, J.; Dobigeon, N.; Tourneret, J. Hyperspectral and multispectral image fusion based on a sparse representation. IEEE Trans. Geosci. Remote. Sens. 2015, 53, 3658–3668. [Google Scholar]

- Zhu, Z.; Yin, H.; Chai, Y.; Li, Y.; Qi, G. A novel multi-modality image fusion method based on image decomposition and sparse representation. Inf. Sci. 2018, 432, 516–529. [Google Scholar] [CrossRef]

- Chen, J.; Li, X.; Luo, L.; Mei, X.; Ma, J. Infrared and visible image fusion based on target-enhanced multiscale transform decomposition. Inf. Sci. 2020, 508, 64–78. [Google Scholar] [CrossRef]

- Shen, J.; Zhao, Y.; Yan, S.; Li, X. Others Exposure fusion using boosting Laplacian pyramid. IEEE Trans. Cybern. 2014, 44, 1579–1590. [Google Scholar] [CrossRef]

- Liu, C.; Qi, Y.; Ding, W. Infrared and visible image fusion method based on saliency detection in sparse domain. Infrared Phys. Technol. 2017, 83, 94–102. [Google Scholar] [CrossRef]

- Gan, W.; Wu, X.; Wu, W.; Yang, X.; Ren, C.; He, X.; Liu, K. Infrared and visible image fusion with the use of multi-scale edge-preserving decomposition and guided image filter. Infrared Phys. Technol. 2015, 72, 37–51. [Google Scholar] [CrossRef]

- Lu, Y.; Wang, F.; Luo, X.; Liu, F. Novel infrared and visible image fusion method based on independent component analysis. Front. Comput. Sci. 2014, 8, 243–254. [Google Scholar]

- Zhang, Q.; Fu, Y.; Li, H.; Zou, J. Dictionary learning method for joint sparse representation-based image fusion. Opt. Eng. 2013, 52, 057006. [Google Scholar]

- Liu, Y.; Chen, X.; Ward, R.; Wang, Z. Image fusion with convolutional sparse representation. IEEE Signal Process. Lett. 2016, 23, 1882–1886. [Google Scholar] [CrossRef]

- Yu, N.; Qiu, T.; Bi, F.; Wang, A. Image features extraction and fusion based on joint sparse representation. IEEE J. Sel. Top. Signal Process. 2011, 5, 1074–1082. [Google Scholar] [CrossRef]

- Pajares, G.; De La Cruz, J. A wavelet-based image fusion tutorial. Pattern Recognit. 2004, 37, 1855–1872. [Google Scholar]

- Yan, L.; Hao, Q.; Cao, J.; Saad, R.; Li, K.; Yan, Z.; Wu, Z. Infrared and visible image fusion via octave Gaussian pyramid framework. Sci. Rep. 2021, 11, 1235. [Google Scholar] [CrossRef]

- Yang, L.; Guo, B.; Ni, W. Multimodality medical image fusion based on multiscale geometric analysis of contourlet transform. Neurocomputing 2008, 72, 203–211. [Google Scholar] [CrossRef]

- Fu, M.-Y.; Zhao, C. Fusion of infrared and visible images based on the second generation curvelet transform. J. Infrared Millim. Waves 2009, 28, 254–258. [Google Scholar] [CrossRef]

- Liu, Y.; Jin, J.; Wang, Q.; Shen, Y.; Dong, X. Region level based multi-focus image fusion using quaternion wavelet and normalized cut. Signal Process. 2014, 97, 9–30. [Google Scholar] [CrossRef]

- Kumar, S.; Muttan, S. PCA-based image fusion. Algorithms Technol. Multispectral Hyperspectral Ultraspectral Imag. XII 2006, 6233, 658–665. [Google Scholar]

- Mou, J.; Gao, W.; Song, Z. Image fusion based on non-negative matrix factorization and infrared feature extraction. In Proceedings of the 2013 6th International Congress on Image and Signal Processing (CISP), Hangzhou, China, 16–18 December 2013; Volume 2, pp. 1046–1050. [Google Scholar]

- Liu, Y.; Liu, S.; Wang, Z. A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 2015, 24, 147–164. [Google Scholar] [CrossRef]

- Qin, X.; Shen, J.; Mao, X.; Li, X.; Jia, Y. Robust match fusion using optimization. IEEE Trans. Cybern. 2014, 45, 1549–1560. [Google Scholar] [CrossRef]

- Yang, K.; Xiang, W.; Chen, Z.; Zhang, J.; Liu, Y. A review on infrared and visible image fusion algorithms based on neural networks. J. Vis. Commun. Image Represent. 2024, 2024, 104179. [Google Scholar] [CrossRef]

- Wu, D.; Ye, M.; Lin, G.; Gao, X.; Shen, J. Person re-identification by context-aware part attention and multi-head collaborative learning. IEEE Trans. Inf. Forensics Secur. 2021, 17, 115–126. [Google Scholar] [CrossRef]

- Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S. Deep learning for person re-identification: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 2872–2893. [Google Scholar] [CrossRef]

- Wang, Z.; Chen, J.; Hoi, S. Deep learning for image super-resolution: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3365–3387. [Google Scholar] [CrossRef]

- Cheng, C.; Xu, T.; Wu, X. A general unsupervised image fusion network based on memory unit. Inf. Fusion 2023, 92, 80–92. [Google Scholar]

- Li, H.; Cen, Y.; Liu, Y.; Chen, X.; Yu, Z. Different input resolutions and arbitrary output resolution: A meta learning-based deep framework for infrared and visible image fusion. IEEE Trans. Image Process. 2021, 30, 4070–4083. [Google Scholar] [PubMed]

- Ma, J.; Zhang, H.; Shao, Z.; Liang, P.; Xu, H. GANMcC: A generative adversarial network with multiclassification constraints for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2020, 70, 1–14. [Google Scholar] [CrossRef]

- Zhou, H.; Hou, J.; Zhang, Y.; Ma, J.; Ling, H. Unified gradient-and intensity-discriminator generative adversarial network for image fusion. Inf. Fusion 2022, 88, 184–201. [Google Scholar] [CrossRef]

- Wang, Z.; Shao, W.; Chen, Y.; Xu, J.; Zhang, L. A cross-scale iterative attentional adversarial fusion network for infrared and visible images. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3677–3688. [Google Scholar]

- Tang, L.; Xiang, X.; Zhang, H.; Gong, M.; Ma, J. DIVFusion: Darkness-free infrared and visible image fusion. Inf. Fusion 2023, 91, 477–493. [Google Scholar] [CrossRef]

- Li, Q.; Han, G.; Liu, P.; Yang, H.; Chen, D.; Sun, X.; Wu, J.; Liu, D. A multilevel hybrid transmission network for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2022, 71, 1–14. [Google Scholar] [CrossRef]

- Wang, J.; Xi, X.; Li, D.; Li, F. FusionGRAM: An infrared and visible image fusion framework based on gradient residual and attention mechanism. IEEE Trans. Instrum. Meas. 2023, 72, 1–12. [Google Scholar]

- Xing, M.; Liu, G.; Tang, H.; Qian, Y.; Zhang, J. Multi-level adaptive perception guidance based infrared and visible image fusion. Opt. Lasers Eng. 2023, 171, 107804. [Google Scholar]

- Li, H.; Wu, X. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 2018, 28, 2614–2623. [Google Scholar]

- Ren, L.; Pan, Z.; Cao, J.; Liao, J. Infrared and visible image fusion based on variational auto-encoder and infrared feature compensation. Infrared Phys. Technol. 2021, 117, 103839. [Google Scholar]

- Vs, V.; Valanarasu, J.; Oza, P.; Patel, V. Image fusion transformer. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 3566–3570. [Google Scholar]

- Fu, Y.; Xu, T.; Wu, X.; Kittler, J. Ppt fusion: Pyramid patch transformerfor a case study in image fusion. arXiv 2021, arXiv:2107.13967. [Google Scholar]

- Tang, W.; He, F.; Liu, Y. YDTR: Infrared and visible image fusion via Y-shape dynamic transformer. IEEE Trans. Multimed. 2022, 25, 5413–5428. [Google Scholar]

- Wu, Z.; Liu, Z.; Lin, J.; Lin, Y.; Han, S. Lite transformer with long-short range attention. arXiv 2020, arXiv:2004.11886. [Google Scholar]

- Tang, L.; Yuan, J.; Zhang, H.; Jiang, X.; Ma, J. PIAFusion: A progressive infrared and visible image fusion network based on illumination aware. Inf. Fusion 2022, 83, 79–92. [Google Scholar] [CrossRef]

- Liu, J.; Fan, X.; Huang, Z.; Wu, G.; Liu, R.; Zhong, W.; Luo, Z. Target-aware dual adversarial learning and a multi-scenario multi-modality benchmark to fuse infrared and visible for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5802–5811. [Google Scholar]

- Wang, C.; Xu, D.; Zhu, Y.; Martin-Martin, R.; Lu, C.; Fei-Fei, L.; Savarese, S. Densefusion: 6d object pose estimation by iterative dense fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3343–3352. [Google Scholar]

- Li, H.; Xu, T.; Wu, X.; Lu, J.; Kittler, J. Lrrnet: A novel representation learning guided fusion network for infrared and visible images. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 11040–11052. [Google Scholar]

- Li, H.; Wu, X.; Kittler, J. RFN-Nest: An end-to-end residual fusion network for infrared and visible images. Inf. Fusion 2021, 73, 72–86. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).