Abstract

Predicting multiple future pedestrian trajectories is a challenging task for real-world applications like autonomous driving and robotic motion planning. Existing methods primarily focus on immediate spatial interactions among pedestrians, often overlooking the impact of distant spatial environments on their future trajectory choices. Additionally, aligning trajectory smoothness and temporal consistency remains challenging. We propose a multimodal trajectory prediction model that utilizes spatio-temporal graphical attention networks for crowd scenarios. Our method begins by generating simulated multiview pedestrian trajectory data using CARLA. It then combines original and selected multiview trajectories using a convex function to create augmented adversarial trajectories. This is followed by encoding pedestrian historical data with a multitarget detection and tracking algorithm. Using the augmented trajectories and encoded historical information as inputs, our spatio-temporal graph Transformer models scaled spatial interactions among pedestrians. We also integrate a trajectory smoothing method with a Memory Storage Module to predict multiple future paths based on historical crowd movement patterns. Extensive experiments demonstrate that our proposed MTP-STG model achieves state-of-the-art performance in predicting multiple future trajectories in crowds.

1. Introduction

In research on intelligent transportation systems and autonomous driving technology, multitarget pedestrian tracking and multifuture trajectory prediction are key areas of focus. The aim of multitarget pedestrian tracking is to monitor the positions and movements of multiple pedestrians within a video sequence. In real-world scenarios, pedestrian movements can be influenced by various factors, including the behavior of other pedestrians, traffic rules, and environmental conditions. Researchers have developed numerous methods that enhance the performance of multitarget pedestrian tracking to a certain extent [1,2]. Predicting pedestrian trajectories is crucial in computer vision, especially for applications in autonomous driving [3,4], surveillance [5,6], and human–robot interaction [7]. Although single-future trajectory prediction has been extensively studied, the inherent uncertainty and multimodality of human behavior necessitate exploring multifuture trajectory prediction. This task aims to generate multiple plausible future paths for pedestrians, reflecting the diverse possibilities of human movement in complex environments. Recent advancements in deep learning and graph-based methods have significantly improved the accuracy and robustness of these predictions. However, challenges remain in accurately modeling the spatial-temporal dependencies and social interactions among pedestrians.

Pedestrian trajectory prediction has evolved from deterministic methods to probabilistic and multimodal frameworks. Initial approaches like Social LSTM [8] and Social GAN [9] modeled social interactions using recurrent neural networks (RNNs) and generative adversarial networks (GANs). However, these methods could only predict a single future trajectory, overlooking the multimodality of human behavior. Recent studies have introduced multifuture prediction frameworks utilizing memory modules, graph-based spatial Transformers, and probabilistic models to better capture the diversity of pedestrian movements. A key challenge in multifuture trajectory prediction is accurately modeling spatial-temporal dependencies. Pedestrian movements are influenced by their past trajectories and their interactions with other pedestrians and the environment. Graph-based methods like STGAT [10] and Trajectron++ [11] show promise in capturing these dependencies by representing pedestrians as nodes and their interactions as edges in a graph. However, these methods struggle with long-term dependencies and the dynamic nature of social interactions. Evaluating multifuture predictions presents another challenge. Traditional metrics, such as Average Displacement Error (ADE) and Final Displacement Error (FDE), fall short in assessing the diversity and plausibility of multiple predicted trajectories. Recent studies have introduced metrics like and [12] and PTU [13], to more effectively evaluate multifuture prediction models.

Recently, several innovative approaches have been developed to address the challenges of multifuture trajectory prediction. For example, the SocialCircle model [14] uses an angle-based social interaction representation, inspired by marine animals’ echolocation, to dynamically model pedestrian interactions. This approach divides the surrounding space into angular partitions and aggregates interaction data within each, offering a detailed representation of social dynamics. Another significant advancement is the Forking Paths Dataset [15], which fills the gap in diverse and realistic datasets for multifuture prediction. Created with a 3D simulator, this dataset enables human annotators to generate multiple plausible future trajectories, serving as a robust benchmark for evaluating multifuture prediction models. Memory-based methods have also become popular in this area. The SHENet framework [16] uses a memory bank to store historical group trajectories and a cross-modal Transformer to refine predictions based on individual-environment interactions. This method excels in constrained environments where human movements follow certain patterns. Additionally, integrating probabilistic models like the Gaussian Mixture Model (GMM) and Variational Autoencoder (VAE) has enabled the creation of diverse and plausible future trajectories. For instance, Multiverse [15] introduces a multidecoder framework that predicts both coarse-grained and fine-grained future locations, enhanced with semantic segmentation features to boost prediction accuracy.

However, despite these advancements, existing methods face three critical limitations. First, graph-based approaches typically rely on single-scale interaction graphs, often overlooking distant environmental context that influences long-term planning. Second, generative models excel in diversity but often lack explicit mechanisms to ensure temporal consistency, occasionally resulting in “jittery” paths. Third, most prediction models operate on the assumption of perfect ground-truth inputs, lacking robustness to detection noise. To overcome these challenges, we propose MTP-STG, a unified framework that specifically incorporates multiscale modeling for distant context and a memory mechanism to enforce temporal consistency, ensuring robustness in end-to-end tracking scenarios.

Multiobject TRACKING and multifuture trajectory prediction are inherently interconnected, relying on current and historical trajectory information provided by multitarget pedestrian tracking for pedestrians. Using a multiobjective tracker, the precise location and motion state of each pedestrian are detected in real time, enhancing the performance of multifuture trajectory prediction and vice versa. In this paper, we introduce MTP-STG, an integrated framework for multipedestrian tracking and multifuture trajectory prediction. Our model initially uses the MOTR detector for multitarget pedestrian tracking in video sequences to gather trajectory data for each pedestrian; this data is then fed into the spatio-temporal graph Transformer to predict the probability distribution of their multiple future trajectories. This integrated approach enables our model to simultaneously track multiple pedestrians and predict their future trajectories, providing a comprehensive understanding of pedestrian dynamics. The main contributions of this work are summarized as follows:

- 1.

- We integrate multitarget tracking and multifuture trajectory prediction into a unified framework for seamless end-to-end detection and prediction, effectively handling noisy inputs in real-world scenarios.

- 2.

- We develop a spatio-temporal graph Transformer incorporating a Multiscale Grid Graph structure. This design allows the model to simultaneously capture local social interactions and distant environmental semantics.

- 3.

- We introduce a Memory Storage Module within the trajectory generator. By retrieving and conditioning on historical embeddings, this module ensures the smoothness and temporal consistency of the diverse predicted trajectories.

2. Related Works

2.1. Multiobject Tracking Models

Multiobject Tracking (MOT) is designed to automatically detect and track multiple objects within a video sequence. Recently, MOT research has made significant strides, broadly categorized into traditional and deep learning approaches. Traditional MOT methods include SORT [17], DeepSORT [18], and Tracktor [19]. These methods typically involve two phases: detection and data association. The detection phase identifies the target’s position in each frame using a detector, while the association phase employs heuristic rules or optimization algorithms to link targets across frames and form their trajectories. The advantage of these methods is their simplicity and efficiency. However, they rely heavily on detector performance and struggle with complex motion patterns and occlusions.

Deep-learning-based MOTs use neural networks to learn the appearance and motion characteristics of targets, improving model performance and robustness. This approach can be divided into two types: the first treats target detection and association as independent subtasks, exemplified by Track-RCNN [20], JDE [21], and FairMOT [22]. These methods leverage existing detection and Re-ID techniques but often overlook the spatio-temporal relationships between targets and still require heuristic post-processing. The second type handles target detection and association as a unified task, as seen in TransMOT [23], TransTrack [24], and TrackFormer [25]. These approaches, based on Transformers [26] or graph neural networks [27], model the spatio-temporal relationships between targets to achieve end-to-end MOT. In the field of MOT, attention mechanisms are now a crucial research tool. The advantage of this approach is that it explicitly learns the motion patterns and interdependencies of targets, reducing the need for subsequent processing. However, it requires more computational resources and training data.

2.2. Single-Future Trajectory Prediction

Pedestrian trajectory prediction has gained significant attention for its essential role in autonomous driving, motion tracking, and robotic navigation. Early methods used deterministic models to predict a single future trajectory based on past observations. A pioneering model, Social LSTM, employed long short-term memory (LSTM) networks to model social interactions among pedestrians, improving prediction accuracy in crowded settings [8]. Subsequently, Social GAN leveraged generative adversarial networks (GANs) to generate socially acceptable trajectories, accounting for the stochastic nature of human motion [9]. However, these methods focus mainly on single-future prediction, limiting their ability to capture the inherent uncertainty and variability of pedestrian paths. Later research expanded on these foundations to enhance prediction accuracy and robustness. For example, SoPhie incorporated an attentive GAN model to account for both social interactions and physical constraints [28]. Likewise, the SR-LSTM model optimized LSTM states for improved pedestrian trajectory prediction in dynamic environments [29]. Despite these advancements, accurately predicting multiple plausible future trajectories remains challenging, necessitating further research into more advanced methods.

Graph neural networks (GNNs) have proven to be powerful tools for modeling relational data and have been effectively applied to trajectory prediction tasks. A recursive social behavior graph (RSBG) based on graph convolutional networks (GCNs) was introduced to model social interactions among pedestrians, capturing dynamic behaviors influencing pedestrian movement [30]. This model highlights the potential of GNNs in understanding complex social interactions and improving trajectory prediction accuracy. The STGAT model utilizes a spatio-temporal graph attention network to capture both spatial and temporal dependencies in pedestrian movements [10]. This method employs attention mechanisms to emphasize relevant interactions, enhancing prediction accuracy in dynamic environments. Another notable advancement is Trajectron, a probabilistic multiagent trajectory model that utilizes dynamic spatio-temporal graphs to predict future paths [31]. This model focuses on multiagent interactions, making it particularly effective in crowded and complex scenarios. Further advancements include Social-STGCNN, which incorporates spatio-temporal graph convolutional neural networks (ST-GCNNs) to predict human trajectories while accounting for social and physical constraints [32]. This model seamlessly integrates social dynamics with physical movement patterns, improving prediction accuracy across different environments. Despite these developments, integrating GNNs with other advanced techniques, such as Transformers and probabilistic models, could further enhance diversity and temporal consistency in multifuture predictions.

2.3. Transformer-Based Models

Transformer architectures have become increasingly popular for their ability to process sequential data across various domains. Initially developed for natural language processing, Transformers have since been adapted for computer vision and trajectory prediction. Spatio-temporal Transformer networks utilize spatial and temporal attention mechanisms to model pedestrian trajectories, effectively capturing long-range dependencies and complex temporal patterns [33]. The DETR model showcased the potential of Transformers in vision tasks, motivating their adoption in trajectory prediction [34]. Its success in object detection underscores the versatility of Transformer architectures. Vision Transformers (ViT) further demonstrated the effectiveness of Transformers in processing visual data. Their self-attention mechanism enhances spatial relationship modeling and improves the accuracy of future movement predictions [35]. AgentFormer [36] employs agent-aware Transformers for socio-temporal multiagent forecasting, emphasizing interactions between agents and their environment. This model provides a comprehensive approach to multifuture trajectory prediction by integrating Transformers with advanced interaction modeling. Combining Transformers with GNNs and probabilistic frameworks presents promising research directions, potentially enhancing accuracy, diversity, and computational efficiency in trajectory prediction. Different from previous methods [10,32] that rely on single-scale graphs to model local neighbors, our approach introduces a Multiscale Grid Graph structure. This allows the Transformer to attend to both fine-grained local interactions and coarse-grained distant environmental contexts simultaneously, providing a more holistic view of the scene.

2.4. Multifuture Trajectory Prediction

Acknowledging the limitations of single-future models, recent research has increasingly focused on predicting multiple plausible future trajectories. The Multiverse model proposed a two-stage probabilistic framework to generate diverse future trajectories, marking a significant advancement in the field [15]. However, ensuring temporal consistency across multiple predictions remains a challenge. Methods employing determinantal point processes (DPPs) enhance the diversity of predicted trajectories but often struggle to fully capture spatial interactions [37]. Beyond probabilistic models, researchers have explored several deep-learning-based approaches. The MultiPath model generates multiple probabilistic anchor trajectory hypotheses for behavior prediction, improving its capability to forecast diverse future scenarios [38]. Likewise, the Social-WaGDAT model utilizes a Wasserstein graph double-attention network to enhance interaction-aware trajectory prediction [39]. While effective, these methods can be computationally demanding due to their complexity. Another notable contribution is the approach in [40], which regularizes neural networks for trajectory prediction through an inverse reinforcement learning framework. This method emphasizes learning socially aware motion representations, ensuring predicted trajectories are diverse and contextually relevant. Despite these advancements, balancing prediction accuracy, diversity, and computational efficiency remains an ongoing research challenge. While methods like Multiverse [15] and SimAug [12] successfully generate diverse hypotheses, they do not explicitly model the temporal consistency of latent states over long horizons. Our MTP-STG addresses this by incorporating a Memory Storage Module. Unlike standard recurrence, our memory module allows the model to retrieve and condition on past historical embeddings, ensuring that the generated diverse trajectories remain smooth and temporally consistent. Most recently, diffusion-based approaches have set new benchmarks in trajectory prediction. For instance, SingularTrajectory [41] proposes a universal diffusion framework that iteratively denoises trajectories to achieve high precision across various domains. While these methods achieve state-of-the-art accuracy, their iterative sampling process often incurs high computational latency, limiting their applicability in real-time end-to-end tracking systems. In contrast, our MTP-STG focuses on an efficient one-shot prediction paradigm that balances accuracy and speed for crowd monitoring.

3. Proposed Method

3.1. Problem Description

The pedestrian trajectory prediction task is generally defined as there are n pedestrians in the geographic space, and given the time moments, the trajectory spatial coordinates of the group of pedestrians in the geographic space at different moments , and predicts the future trajectory coordinates of all of them at moments. The true spatial coordinates of each pedestrian in the geographic scene at the moment are further represented as . The predicted coordinates of the pedestrian group at the moment are . The predicted trajectory duration is . Our goal is to learn a model f that takes as input each pedestrian’s trajectory at , learns the weight parameter , and predicts each pedestrian’s trajectory at moments .

3.2. Overall Framework

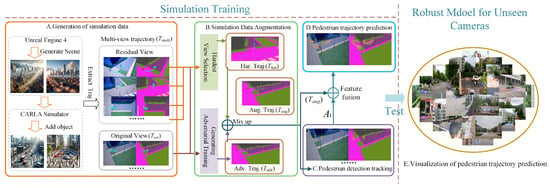

The Pedestrian Multifuture Trajectory Prediction (MTP-STG) framework, based on the Spatio-Temporal Graph Attention Mechanism, consists of four modules, as illustrated in Figure 1.

Figure 1.

Overview of our method for MTP-STG, which is trained in simulation and tested on real unknown videos. Each training trajectory is extracted from the CARLA simulator and represented with multiview semantic segmentation features. MTP-STG mixes features from the hardest camera view with adversarial features from the original view to form an augmented adversarial trajectory. MOTR performs detection tracking on the scene crowd and records historical trajectory information. The trajectory prediction network outputs multiple future trajectory probability distributions for pedestrians.

- A.

- Simulation Data Generation Module: Pedestrian training trajectories from different viewpoints are generated using the CARLA [42] simulator and represented as a multiview semantic segmentation feature map.

- B.

- Simulation Data Enhancement Module: The most challenging trajectory is selected from a given set of multiview trajectories . Then, and are combined using the Mixup [43] convex function to generate the enhanced trajectory .

- C.

- Pedestrian Detection and Tracking Module: Pedestrian tracking and historical trajectory encoding are performed using the MOTR multitarget detection tracker.

- D.

- Pedestrian Multifuture Trajectory Prediction Module: The Spatio-Temporal Graph Attention Mechanism is used as the backbone for trajectory prediction. The augmented trajectory and historical trajectory encoding information serve as network inputs to achieve multifuture trajectory prediction for crowds in spatial environments.

3.3. Multiview Simulation Data and Augmentation

The multiview simulation trajectory training data is derived from the VIRAT/ ActEV [44,45] real-world dataset and is semi-automatically labeled using the CARLA [42] simulator. This dataset captures pedestrian trajectories from four different viewpoints, along with pedestrian detection frame annotations and scene semantic features. The generated simulated video trajectory sequences closely resemble real video sequences in terms of both pedestrian appearance and motion trajectories. We further define the simulation trajectory training data. At a given time , the trajectory coordinates of a group of pedestrians in the scene are represented as , which we denote as . Here, represents the consecutive video frames from 1 to n, and represents the corresponding coordinate positions. For , the future trajectories of pedestrian crowds are denoted as . These are defined as , representing the predicted trajectory coordinates of pedestrians over frames, where is given by:

Let the complete training trajectory under the original view-point trajectory in the training data be , due to the different coordinate representations of the same trajectory under the rest of the viewpoints, , using to represent the different viewpoints of the same trajectory, which is expressed through Equation (2) as:

for the set S of multiview trajectories , one trajectory at a time is selected from S and used as an anchor point to search for the most inconsistent viewpoints with what the model has learned, which we refer to as the hardest-to-learn view trajectories in the text. Inspired by the classification loss function proposed in [46], we use it as a criterion for calculating the loss of a given viewpoint trajectory with respect to the hardest-to-learn views trajectory:

where is denoted as the index of the viewpoint with the highest classification loss; is denoted as the scene semantic segmentation feature of the trajectory frame; is denoted as the future location label of the j-th viewpoint; is the random perturbation of the input feature; and is the loss function for location classification used by the GATRNN adopted by the Trajectory Prediction Network module. For the original trajectory perspective trajectory , the adversarial trajectory is generated using the Targeted-FGSM [47] network, computed as:

where is denoted as a hyperparameter, and the use of the adversarial learning method enables the model to select the most difficult to learn view to predict the future trajectory position of the pedestrian in a given multiview trajectory, instead of predicting the trajectory position in the original view. The random perturbation is added to reduce the error caused by the uncertainty of the data itself. For better stability of the MTP-STG model for low-resolution visual features and different scene viewpoint transitions, the effect of subtle noise generated by different lighting conditions, scene textures, and camera sensors in the generated training data is reduced. We use the Mixup [43] convex function to mix the hardest-to-learn view trajectory and the generative adversarial trajectory to generate the augmented trajectory , which is calculated by the following:

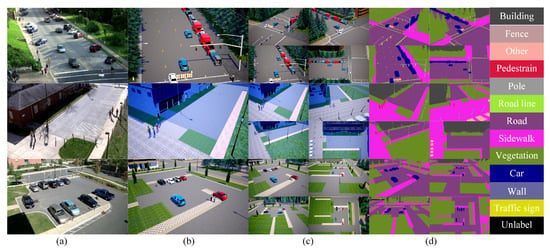

where is derived from the distribution controlled by the hyperparameter ; is the true trajectory coordinate in Equation (2) representing the original viewpoint; and the one-hot function maps the x-y 2D coordinate position projection onto a predefined grid in the trajectory prediction network module. In addition, we train the Deeplabv3 [48] semantic segmentation model on the Cityscapes dataset for extracting real scene semantic features. In order to minimize the difference between real and simulated video frames, we represent all trajectories as semantic segmentation sequence features and locations, denotes the semantic segmentation features of the scene for the trajectory under the i-th viewpoint at the moment of time t, and denotes the location coordinate values under the i-th viewpoint sequence. Figure 2 shows the generated multiview simulation video pedestrian trajectory data visualized in different scene view. The core intuition behind using Adversarial Mixup is to enforce view-invariance. By mixing features from the “hardest-to-learn” view (which yields the highest loss) with the original view, and adding random perturbations , we simulate challenging conditions such as camera noise, varying lighting, or poor sensor quality. This forces the model to learn robust features that are invariant to specific camera angles. Regarding hyperparameters, the mixing coefficient in the Beta distribution controls the intensity of interpolation. We empirically set based on a grid search, finding that this value provides sufficient diversity without destroying the semantic integrity of the pedestrian features. Similarly, the perturbation magnitude was chosen to balance robustness and training stability.

Figure 2.

Visualization of simulation data. (a) Real scene from the VIRAT/ActEV dataset. (b) Reconstructed scene from the VIRAT/ActEV dataset using CARLA and Unreal Engine 4. (c) The corresponding scene visualized from four different viewpoints: three 45° oblique views and one 90° overhead view. (d) Semantic segmentation of the real scene into categories, including sidewalks, roads, vehicles, and pedestrians.

3.4. Crowd Detection Tracking Module

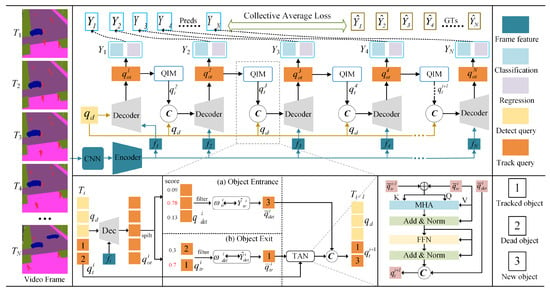

Unlike previous studies [8,9], which used the final hidden layer state of LSTM to model surrounding pedestrian information or abstract pedestrians as coordinate points in space, we employ the MOTR [49] network for crowd detection and tracking. Figure 3 presents a schematic diagram of crowd detection and tracking. In this paper, the simulated crowd trajectory video stream is modeled as a continuous sequence of images. Each frame is processed using a convolutional neural network backbone (ResNet50) and a Transformer encoder to extract image features. The detection query, denoted as , consists of fixed-length queries designed to identify newly emerging pedestrians in a sequence of simulated crowd trajectory video frames. The tracking query, denoted as , represents a continuously tracked crowd object in a sequence of simulated crowd trajectory video frames. It consists of dynamically updated queries. For consecutive video frames, the concatenation of the variable tracking query from the previous frame with the fixed detection query , along with extracted image features, is fed into the Transformer-based decoder to generate the hidden state of the predicted crowd bounding box. This output is then fed into the Query Interaction Module to generate the trajectory query for the next frame. In this paper, all pedestrian bounding box predictions in the video stream are aggregated into the collection .

Figure 3.

The overall architecture of the MOTR encoder represents the convolutional neural network backbone and the Transformer encoder that extracts the features of each image frame. A cascade of detection query and tracking query is fed into the decoder (Dec) to generate hidden states. The hidden state is utilized to generate the prediction for newborn and tracked objects. The QIM module takes the hidden state as input and generates the tracking query for the subsequent frame.

The Query Interaction Module consists of two components: a pedestrian entry and exit mechanism and the Temporal Aggregation Network (TAN). To handle pedestrian appearance and disappearance in video sequences, we propose a crowd trajectory-aware label assignment method, Tracklet-Aware Label Assignment (TALA). For the detection query , we follow the approach in MOTR and modify the assignment strategy to newborn-only, which performs bipartite graph matching exclusively among newly appeared objects. For the tracking query , we apply a goal-consistent assignment strategy, where the tracking query follows the assignment result from the previous frame and bypasses bipartite graph matching. Specifically, we define the tracking query prediction as and the detection query prediction as . Here, represents the newly detected pedestrian object. The label assignment results for the tracking and detection queries are denoted as and , respectively. For frame i, the label assignment for the detection query is determined by performing bipartite graph matching between the detection query and the newly appeared pedestrian target, as follows:

In this context, ℓ denotes the matching function as defined in DETR [34], while represents the space of all possible bipartite matches between the detection query and the newly detected pedestrian target. For the label assignment of the tracking query , we incorporate the assignments of both the newly detected pedestrian object and the tracked object from the previous frame, specifically for :

Since there are no trace objects in the first frame , the trace query assignment is represented as an empty set ∅. However, for consecutive frames , the trace query assignment is obtained by concatenating the previous trace query assignment with the assignment of the new object .

In the crowd entry mechanism, when a new pedestrian target appears in the video stream, its corresponding hidden state is updated according to the assignment rule defined in Equation (8). If a matched pedestrian target is no longer present in the scene, or if the IoU threshold score between the predicted bounding box and the target falls below 0.8, the current hidden state of the pedestrian target is discarded and terminated. This leads to the disappearance of these pedestrian targets in the current video frame, where their hidden states are filtered, and only the remaining hidden states are retained. In the crowd exit mechanism, the disappearance of both newly detected pedestrian targets and previously tracked pedestrian targets is determined based on their classification scores. For the detection query , predictions with classification scores exceeding the entry threshold are retained, while other hidden states are discarded.

For the tracking query , predictions with classification scores below the exit threshold across consecutive M frames are discarded, while other hidden states are retained. A Temporal Aggregation Network (TAN) is incorporated into the QIM module to enhance the modeling of temporal relationships within crowd trajectories and provide contextual a priori knowledge for tracking targets. The TAN, an enhanced Transformer decoder layer, receives the last frame of the tracking query and the filtered hidden state as inputs to the Multihead Attention (MHA) module. Following the MHA, a feed-forward network (FFN) links the result with the hidden state of the new object to produce the trajectory query set for the subsequent frame. At the conclusion of the detection and tracking process, for each input frame at time t, a matrix , as defined in Equation (10), contains the positions of the n detected pedestrians within the sequence frame grid .

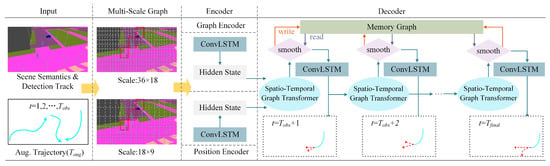

3.5. Spatio-Temporal Graph Transformer Networks

The comprehensive structure of the Pedestrian Multifuture Trajectory Prediction network is depicted in Figure 4. Inputs to the Multiscale Grid Graph include the augmented trajectory , scene semantics S, and pedestrian coding information . These inputs are processed and relayed to the spatio-temporal encoding–decoding network, which generates the pedestrian multifuture trajectory. The encoder comprises a graph encoder and a position encoder, which, respectively, encode the node-level and coordinate-level features of the processed multiscale graph. At each time step, the spatio-temporal graph decoder aggregates the information and generates a probability distribution of the pedestrians’ locations in the subsequent time step using an LSTM cell. The memory map facilitates smooth prediction by reading and writing to the decoded trajectory memory map, which contains comprehensive temporal information.

Figure 4.

Overview of the Spatio-Temporal Graph Transformer Network. The graph encoder and the position encoder encode node-level and coordinate-level features, respectively, processed by a multiscale graph. At each decoding time step, our proposed Spatio-Temporal Graph Transformer predicts the next potential neighboring positions. A Memory Storage Module smooths and corrects trajectories that violate temporal consistency.

3.5.1. Multiscale Grid Graph and Sequence Encoder

We partition the video frame into multiple 2D regular grids, forming a graph , with nodes V and edges E. Each grid cell, defined as a node , connects to its neighbors via undirected edges . Each grid cell establishes connections with its horizontal, vertical, and diagonal neighbors. Specifically, we design two distinct grid resolutions to capture different levels of spatial granularity. The coarse grid () is designed to capture global environmental context, such as distant road layouts and large static obstacles, allowing the model to plan long-term destinations. In contrast, the fine grid () focuses on local social interactions and precise pedestrian localization, enabling the model to handle immediate collision avoidance. By aggregating features from both scales, the model achieves a balance between global navigation and local safety. By leveraging multiscale graphs, the MTP-STG model adapts more effectively to varied information levels and makes comprehensive decisions based on the surrounding pedestrian environments. At each graph scale, the sequence encoder computes scene representations using the pedestrian’s augmented trajectory and coded information . The graph encoder encodes the node-level features of the grid cell index , corresponding to the current location. To enhance the model’s robustness against low-resolution visual features and varied viewpoint transitions, we employ the Deeplab v3+ semantic segmentation model [48], maintaining its weight parameters unchanged to encode each video frame into a semantic segmentation feature vector of size . Pedestrian spatial features are computed using ConvLSTM [50] as follows:

where ⊙ is the product between elements, is the sequence encoder learnable weight parameter, the function converts the pedestrian coordinates to cell indexes in a 2D grid, and the one-hot function projects the cell indexes to their corresponding positions on a map of size scale according to their spatial positions. The position encoder is responsible for encoding the offsets of the center of the area covered by the node, calculated using Equation (12):

where is calculated , and the function retrieves the center coordinates of the indexed cell. and , respectively, represent the hidden states of the graph encoder and the position encoder for the 2D grid at time t. Since both the encoder and the decoder subsequently process these two hidden states in the same manner, this paper treats the last hidden layer state of the sequence encoder as the final spatio-temporal state of pedestrians in the geographic scene. is the size of the last hidden layer state. We also encode the spatio-temporal state and the semantic segmentation average into a context vector , which is used to initialize the decoder.

3.5.2. Spatio-Temporal Attention Transformer

We input the encoded hidden state of the multiscale graph into the spatio-temporal graph attention decoder, aggregating generative information from all node pairs through the graph attention mechanism. Subsequently, a group of node states is updated concurrently, denoting the pedestrian’s confidence state in the unit grid at future time t as . For simplicity, this paper uses a single index i to denote a cell in the 2D grid . The confidence state is then updated and calculated via the hidden state of the ConvLSTM:

where represents the learnable weight parameters of the position decoder, which updates the hidden state using a 2D convolutional filter before applying softmax normalization. The confidence state is embedded into a three-dimensional tensor of size . The denotes a Graph Attention Network, where the graph structure corresponds to the 2D grid within. Due to the graph attention mechanism incorporating both graph structure and spatial proximity advantages, it differentially focuses on various regions. Pedestrians exhibit strong spatial interactions with nearby areas. Objects in distant areas, such as vehicles and buildings, provide essential spatial cues for planning the future trajectory of pedestrians. Therefore, we compute the attention information from node to within the scale graph , and the global information matrix :

where is the information matrix from node to node within the scale graph , utilized to learn the query matrix , key matrix , and value matrix from the hidden state , denotes the operation of concatenating the query matrix with the transpose of the key matrix . Subsequently, ⊙ involves an element-wise multiplication of the concatenated matrix with the value matrix, calculating the attention values for each node with respect to and , and adding a bias . We perform an element-wise addition of with , resulting in the total information matrix that is transmitted from node to . To update the state of the next node, we define as the feature vector of the i-th grid cell in the hidden layer state , and corresponds to the output of . denote the size of the decoder hidden layer state, which can be computed using Equation (15):

where denotes the set of neighboring nodes of within the 2D grid , represents the initial feature vector of the grid cell, and is defined as attention-weight edge function that normalizes the total information matrix using the softmax function. The graph structure update function we implemented enables the model to diffuse probability mass between grid cells in a controlled manner. This model captures human relational dynamics, ensuring that as crowds navigate through a scene, they do not abruptly jump to distant locations. This foundational assumption or prior knowledge is embedded within the network’s convolutional architecture. Integrating the graph attention network enables dynamic adjustment of weights based on input, enhancing trajectory prediction significantly. Combining the above formulations, Equation (16) is used to represent the pedestrian’s position in the unit grid at future time t.

where denotes the GAT and represents the hidden layer state of the GAT. denotes the position prediction at time t. For each node represents a probability when the input is sourced from the graph encoder. When the input derives from the position encoder, it represents a coordinate value offset from the center of node . Although the pedestrian state context vector incorporates semantic segmentation features and facilitates trajectory prediction via an output heatmap, it lacks precise position estimation capabilities. To enhance the precision of predicted pedestrian trajectories, we introduce a second GAT decoder that predicts continuous offset increments within the region. These offset increments, represented by , detail the adjustments required at the center of the grid cell as predicted by the decoder. The refined position is determined using Equation (17).

where MLP is used to embed each pedestrian’s positional coordinates into the vector representation of the grid; and are independent GAT modules; and is the hidden layer of . The final predicted position of a pedestrian in the spatial scene is represented as , where denotes the index of the selected grid cell, is the center of the selected grid cell, and represents the offset increment from the center of the grid cell at time step t.

3.5.3. Memory Storage Module

Although the spatio-temporal graph encoder–decoder in this study utilizes a self-attention mechanism to focus the model on the most probable areas, thereby enhancing the modeling of extended temporal sequences, it struggles with handling continuous time series data that require strong temporal consistency. Within the decoder, the hidden state at time step t heavily relies on the state from Furthermore, the current position is influenced by the hidden states from all preceding time steps. Relying solely on the most recent time step for future trajectory predictions can result in deviations from the initially predicted destination, as indicated by the earlier sequence of hidden states. To address this limitation, this paper introduces a straightforward, interpretable, and trainable external graphical memory module, denoted as . This module serves two primary functions:

- Historical Context: It embeds historical trajectory information into a spatio-temporal graph Transformer, conditioning current predictions on past behaviors and enhancing temporal consistency.

- Smoothing Mechanism: It smooths trajectory embeddings through memory update operations, mitigating abrupt changes in predictions and ensuring trajectory coherence.

Firstly, the Memory Storage Module maintains an embedding for each pedestrian i at every time step t, with dimensions equivalent to . At each time step t, the spatio-temporal graph Transformer retrieves historical embeddings using the reading function from the memory graph. Specifically, for each pedestrian in the spatial scene, the function retrieves all prior embeddings from time steps . The reading function is defined as: . This function integrates the current graph embeddings with historical data, providing the Transformer with a comprehensive view of both past and present contexts. Upon processing the input, the spatio-temporal graph Transformer updates its output graph embeddings into the graph memory using the writing function . This ensures that the Memory Storage Module is updated with the latest embedding, thus achieving temporal smoothness and consistency. To provide a clearer understanding of the temporal update mechanism, the process of reading and writing embeddings in the Memory Storage Module is detailed in Algorithm 1.

| Algorithm 1 Memory Storage Module update process. |

|

3.6. MTP-STG Model Loss Function

The total loss of the MTP-STG model comprises the collective average loss from crowd detection and the multimodal trajectory prediction loss , formulated as . Here, the set balances the collective average loss and the multimodal trajectory prediction loss. The MOTR crowd detector learns temporal variances directly from the data, rather than relying on manually crafted heuristic methods like the Kalman filter. Unlike previous methods, MOTR processes pedestrian video streams as input, facilitating the generation of training samples that capture distant object movements for temporal learning. Instead of computing the loss frame-by-frame, accumulates losses across multiple predictions . The loss for the entire video sequence is calculated based on the ground truth and the matching results . represents the total loss across the entire video sequence, normalized by the number of objects.

where represents the total number of real pedestrians in frame i, where and denote the number of tracked and newly detected objects in frame i, respectively. The loss for a single video frame , is expressed a . Here, is the focal loss, represents the loss, and is the generalized Intersection over Union (IoU) loss. The coefficients , , and are the respective weighting factors.

The multimodal trajectory prediction loss comprises the cross-entropy loss from the graph encoder and the regression loss from the location encoder. To leverage benefits from multiscale graphs , this study utilizes a multiscale discriminator and calculates losses at two scales, denoted as , and expressed as . Each graph scale at each time t considers the true output as , with the duration of loss computation defined as , resulting in the graph encoders cross-entropy loss being calculated as follows:

Additionally, this study employs an exponential smoothing loss for the location encoder, defined as follows:

Furthermore, this study introduces an exponential penalty term, denoted as , defined to guide the model to focus more on the predictions at earlier time steps. This focus is crucial as the accuracy of early trajectory predictions significantly influences subsequent trajectories. The hyperparameter is employed to control the intensity of this penalty term.

3.7. Generation of Multiple Trajectories

To generate diverse probabilistic trajectory distributions, we adopt various beam search strategies outlined in [29]. We define as the set of beams at time , each containing K decoded trajectories. Each trajectory consists of a sequence of position indexes, each representing a potential path from the start to the current time step. Let ) represent the k-th trajectory at time , where is the location-specific index in the scale graph and is the cumulative logarithmic probability of the k-th trajectory from the start to time . The probability distribution at the current time step t is computed based on the past trajectory . computed from Equation (13), which describes the probability of predicting time step t from the past historical trajectory to predict the location of time step t. In Equation (13), is determined by the hidden state , which itself is generated by the history trajectory . is further expressed as where is the logarithmic probability of predicting the next choice of the i-th position given , and is the diversity penalty, which reduces the chances of being chosen again, and increases trajectory diversity.

Specifically, the probability of needs to be calculated for all nodes and beams in the scale graph , where is the number of nodes in . In the flow of the graph encoder, each candidate output is an index of a graph node indicating the grid cell where the position of the next time step is located. In the position encoder process, each candidate output is a coordinate value indicating the offset of the position of the next time step relative to the center of the grid cell. At each time step, the algorithm selects the K highest probability out of all the candidate out-puts as the final prediction, and then uses them as inputs for the next time step to continue the search. In the position encoder process, the offsets are also added to the predicted grid cells to get the exact coordinates.

4. Experiment and Analysis

4.1. Dataset and Evaluation Metrics

4.1.1. Benchmark Dataset

The Forking Path dataset is specifically designed for multifuture forecasting simulations. This dataset includes five scenarios from VIRAT/ActEV and four from ETH/UCY. It comprises 127 scenarios, each available in three 45-degree views and one top-down view. Each scene features several controlled pedestrians, each with an average of 5.9 future trajectories. The ActEV/VIRAT dataset, a public resource released by NIST in 2018, is intended for video activity detection research. It contains 455 videos captured at 30 frames per second and a resolution of 1080p, featuring 12 different scenes from various viewpoints. The ETH/UCY dataset includes five subscenes—ETH, HOTEL, ZARA1, ZARA2, and UNIV—encompassing a total of 1536 pedestrian trajectories. Trajectory data are converted into coordinate points in the world coordinate system, sampled at intervals of 0.4 s to form coordinate sequences. Additionally, all scenes are captured from a fixed top-down perspective. The Argoverse dataset is used for 3D tracking and motion forecasting in autonomous driving applications. It includes two subdatasets: 3D tracking and motion forecasting. The validation set video within the 3D tracking dataset is captured using the onboard front-center camera view.

4.1.2. Multifuture Evaluation Metrics

To evaluate population multifuture trajectory predictions, we adopt the definition of the multifuture trajectory prediction task as described in [15], which involves generating the 20 most likely predictions () for each data sample. Predictions are assessed using the minimum Average Displacement Error () and the minimum Final Displacement Error () across the K predictions. Additionally, the Percentage of Trajectory Usage (PTU) metric, proposed in [13], is utilized to gauge the overall performance of Pedestrian Multifuture Trajectory Predictions. This metric calculates the proportion of predicted trajectories utilized, assessed by and .

: For each true trajectory j of test sample i, the one of the K predictions with the smallest distance from j is selected to compute the average displacement. : For each true trajectory j of test sample i, the coordinate that is closest to the endpoint coordinate value of trajectory j among the K predictions is selected as the minimum Final Displacement Error.

To quantitatively evaluate the safety and rationality of the predicted trajectories, we propose the Static Obstacle Collision Rate (SOCR) metric. It measures the percentage of predicted trajectory points that fall into non-walkable regions (e.g., walls, vehicles, vegetation) defined by the semantic segmentation map. A lower SOCR indicates higher safety and better adherence to static scene constraints. It is calculated as:

where N is the total number of pedestrians, K is the number of predicted modes (future trajectories), and is the prediction horizon. denotes the predicted position of the k-th trajectory for the i-th pedestrian at time t. is a function that maps the coordinate to a semantic class using the scene segmentation map, and represents the set of obstacle classes (e.g., building, fence, pole, road, car). is the indicator function, which equals 1 if the condition is met and 0 otherwise.

4.1.3. Single-Future Evaluation Metrics

We use two metrics, Average Displacement Error () and Final Displacement Error (), to evaluate our model, as follows:

where N denotes the number of pedestrians, T denotes the time step of the prediction, is the trajectory generated by the model at time t, and is the trajectory of the ground truth at time t.

4.2. Implementation Details

We utilize the data processing method described in [9], starting by encoding input coordinates into a 32-dimensional vector via a fully connected layer, followed by ReLU activation. Scene semantic segmentation features are extracted using a pretrained DeepLabv3 model [51]. The MTP-STG model features a single-layer LSTM convolution as the backbone for both encoder and decoder, augmented by a graph attention mechanism that generates and aggregates information based on scale maps. Both spatial and temporal Transformers consist of encoding layers equipped with eight heads. Hyperparameter tuning on a scaled-down network determined the optimal learning rate to be 0.0015, using an Adam optimizer for model training. Training occurred in batches of 8 over 400 epochs, with each batch comprising around 256 pedestrians from various time windows, using an attention mask to speed up both training and inference processes. In the data augmentation module, adversarial trajectories are generated using the Targeted-FGSM attack method, iterating 10 times. Parameters in Equations (4) and (6) are set to and . The trajectory prediction network utilizes a ConvLSTM architecture with an embedding size of 32, and encoder and decoder hidden layers each sized at 256. Hyperparameters in the multimodal trajectory prediction module are set at and , and had a smoothing exponent of 5 for the calculations.

4.3. Quantitative Evaluation of MTP-STG

4.3.1. Quantitative Analysis of Multifuture Trajectory Prediction

In this section, we evaluate the MTP-STG model using the Forking Paths Dataset, comparing it against baseline models including S-LSTM [8], S-GAN [9], Next [52], ST-MR [13], ST-AR [33], SimAug [12], Multiverse [15], TNT [53], MultiPath++ [54], and the AgentFormer [36]. Evaluation results for the minADE20 and minFDE20 metrics are presented across three perspectives: 45-degree, top-down, and full views. The PTU trajectory usage metric is exclusively evaluated for multifuture prediction models. The initial models are trained on the Forking Paths Dataset, with all models subsequently tested on this same dataset. From the results in Table 1, the MTP-STG model surpasses other baseline models across all evaluation metrics. Specifically, compared to the strong baseline ST-MR, MTP-STG reduces minADE20 by 2.3, 0.7, and 1.2 pixels across the metrics, and minFDE20 by 1.2, 1.7, and 2.1 pixels, while enhancing PTU trajectory usage by 0.5% and 0.4% respectively. To further demonstrate the competitiveness of our approach against newer methods, we compared it with prominent SOTA models: AgentFormer, TNT, and MultiPath++. While these methods achieve impressive results on clean datasets by modeling social–temporal interactions or utilizing target-driven anchors, their performance drops slightly in our end-to-end setting (e.g., minADE20 All: 162.9 for MultiPath++ and 163.5 for AgentFormer vs. 161.6 for MTP-STG). This is because these models typically assume perfect historical trajectories and are sensitive to perception noise. In contrast, our MTP-STG utilizes the proposed Memory Storage Module to robustly handle the noise and fragmentation introduced by the upstream MOTR tracker. This superior performance is attributed to the MTP-STG model’s enhanced attention mechanism and multiscale graph structure, which effectively simulate interactions between pedestrians and their environment. Additionally, the memory graph dynamically records trajectory temporal information during decoding, correcting positions that violate temporal consistency, thereby enhancing trajectory smoothness and rationality.

Table 1.

Quantitative evaluation of multifuture trajectory prediction.

4.3.2. Quantitative Analysis of Single Future Trajectory Prediction

Our experimental evaluation is based on the single future trajectory metrics outlined in [13,15], utilizing VIRAT/ActEV in conjunction with Argoverse as the evaluation datasets for pedestrian single future trajectory prediction. Consistent with previous studies, our evaluation observes a time step of 3.2 s (8 frames) and a prediction length of 4.8 s. The experimental results are presented in Table 2. The MTP-STG model, as proposed in this study, enhances performance on the ADE and FDE metrics compared to baseline models such as S-LSTM [8], S-GAN [9], Next [52], ST-MR [13], ST-AR [33], SimAug [12], and Multiverse [15]. This suggests that the MTP-STG model offers greater stability for both multifuture simulation and single future trajectory prediction in real-world scenarios.

Table 2.

Quantitative evaluation of single future trajectory prediction.

4.3.3. Evaluation of Trajectory Rationality and Safety

Beyond standard displacement metrics, evaluating the rationality and safety of predicted trajectories is crucial for real-world applications. We focus on two aspects: collision avoidance and adherence to social norms. We introduce the Static Obstacle Collision Rate (SOCR) to quantify safety. SOCR measures the percentage of predicted trajectory points that fall into non-walkable areas (e.g., walls, parked vehicles, vegetation) defined by the semantic segmentation maps. As shown in Table 3, our MTP-STG model significantly outperforms the baseline ST-MR. Thanks to the integration of the multiscale semantic grid graph, our model effectively “perceives” the environment, reducing the collision rate from 5.4% to 3.9%. In terms of social compliance, the generated trajectories should not only be collision-free but also follow walkable paths (e.g., sidewalks). Our qualitative results (discussed in Section 4.4.2 and Figure 6) demonstrate that MTP-STG predictions align strictly with sidewalk layouts, avoiding jaywalking in vehicle lanes. Furthermore, the high PTU scores (Table 1) indicate that our model generates diverse modes covering various plausible intentions, rather than collapsing to a single average path, thereby ensuring the diversity of the prediction.

Table 3.

Comparison of Static Obstacle Collision Rate (SOCR) on the VIRAT/ActEV dataset.

4.4. Qualitative Evaluation of MTP-STG

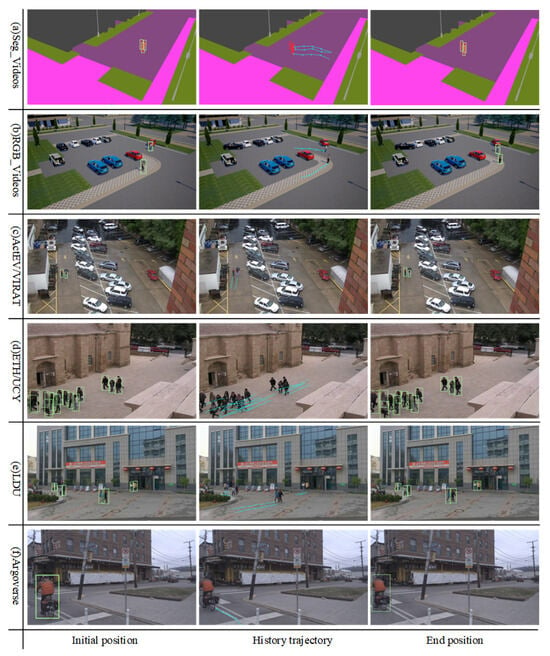

4.4.1. Pedestrian Detection Tracking Visualization

In this paper, we visualize the performance of the MOTR detector across various benchmark datasets; Figure 5 illustrates the paths taken by pedestrians within 3.2 s of observation. The figure demonstrates that the Transformer-based MOTR detector accurately identifies pedestrians across various test scenarios in different benchmark datasets. It effectively extracts pedestrian features at multiple scales, handles complex occlusions and interactions, and maintains high detection accuracy and frame rates in densely crowded scenarios, thereby preventing trajectory loss.

Figure 5.

Effect of pedestrian detection performance of MOTR detector under different benchmark datasets.

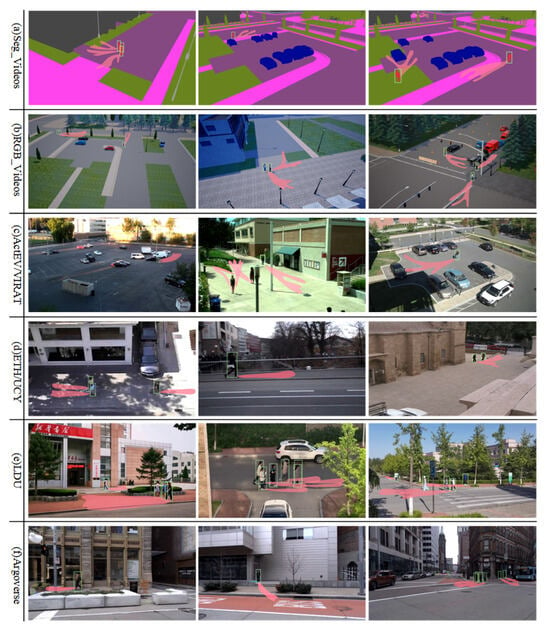

4.4.2. Multifuture Trajectory Heat Map Visualization

We also conduct trajectory prediction heatmap visualizations for each dataset to further analyze the semantic interpretability of the proposed MTP-STG model. Figure 6 displays the heatmap of Pedestrian Multifuture Trajectory Predictions for three different scenes in each dataset, generated by the GAT decoder on a 2D lattice grid. The figure illustrates that the MTP-STG model accurately predicts the intensity of pedestrian multifuture trajectories across all datasets. This accuracy stems from using simulation data for adversarial enhancement during training and employing a spatio-temporal graphical attention network to capture environmental details and self-assign attention weights to pedestrians.

Figure 6.

Heatmap visualization of Pedestrian Multifuture Trajectory Prediction under different benchmark datasets.

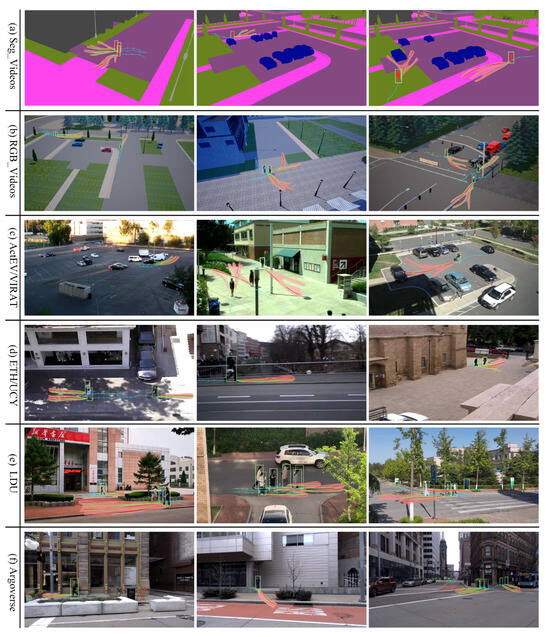

4.4.3. Multifuture Trajectory Prediction Visualization

Qualitative analysis of benchmark datasets. Figure 7 illustrates the predictive tracking performance of the MTP-STG model on pedestrian multifuture trajectories across various benchmark datasets. In Figure 7, blue line segments represent observed walking trajectories of the crowd; light red indicates the crowd’s multimodal trajectory locations output by the scale-map decoder, shown as a heatmap; green lines depict predicted future walking trajectories; red lines show actual future walking trajectories; and light green highlights pedestrian detection frames calibrated by the MOTR detector. The visualization results demonstrate the efficacy of our multifuture trajectory prediction model in forecasting crowd movements. In the third scenario of SegVideos semantic segmentation, the model effectively gathers environmental semantic information to navigate around stationary vehicles. In the Rgbvideos simulation, the MTP-STG model effectively predicts multimodal trajectories for pedestrians approaching each other, forecasting three potential paths. In the third scenario of the VIRAT/ActEV dataset, the model accurately tracks pedestrians carrying suitcases and predicts three potential future directions. In the LDU dataset with high crowd density, the DETR architecture and tracklet trajectory-aware label assignment enhance the MOTR detector’s ability to discern pedestrian appearances and locations for precise cross-frame matching. The location decoder outputs corrected multifuture trajectories, enhancing accuracy in crowded environments.

Figure 7.

Qualitative visualization results of the MTP-STG model on the benchmark dataset. The top 3 highest rated possible trajectories were selected for visualization (K = 3).

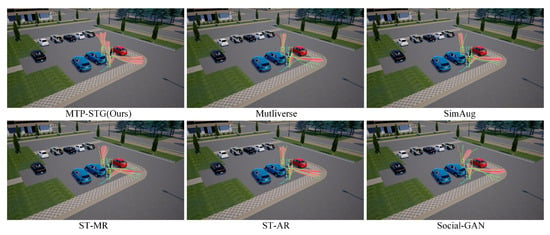

Qualitative analysis of the baseline models. Figure 8 displays the visualization results of the MTP-STG model compared to each baseline model on the Forking Path dataset. The MTP-STG model accurately predicts trajectories that align with the actual value distribution, avoiding collisions with other objects. Examples demonstrate that our model effectively makes informed decisions using pedestrian detections and historical trajectory data processed by a spatio-temporal graph attention network. Additionally, while the baseline models often exhibit temporal inconsistencies, the MTP-STG, leveraging a Memory Storage Module, maintains consistent trajectory predictions over time.

Figure 8.

Comparison of qualitative results between MTP-STG and each baseline model on the benchmark dataset.

4.5. Ablation Study and Efficiency Analysis

4.5.1. Ablations of Key Components

To systematically evaluate the contribution of each module in our MTP-STG framework, we conducted a comprehensive ablation study on the validation set. As shown in Table 4, we analyze six variants by removing specific components: Multiview Simulation Data (Sim), View Selection (View), Adversarial Attack (Adv), Multiscale Graph (MSG), Memory Module (Mem), Location Decoder (Dec), and Exponential Smooth L1 Loss (Loss). We did not include the exponential smoothing design in our tests for multiple future trajectory prediction, as the exponential loss is specifically tailored to enhance single future prediction during model training.

Table 4.

Ablation study of the key design in multifuture prediction models.

Table 4 presents the systematic ablation study results for multiple future trajectory prediction, while Table 5 details those for single future trajectory prediction. The tables demonstrate that the full MTP-STG model attains optimal accuracy across both tasks. This performance is achieved by effectively utilizing simulation data, learning from inconsistent viewpoints, and generating adversarial trajectories. Specifically, setting randomized perturbations with and integrating random search viewpoint trajectories through the Mixup convex function [43] allows the model to adeptly handle fine-grained noises introduced by varying lighting conditions, scene textures, and camera sensors. In our studies, setting to 0 increased errors between sequence frames, thereby raising the computational error rate in the classification loss function (Equation (3)). Conversely, the random perturbation setting of is specifically designed to minimize errors arising from data uncertainty. Furthermore, removing the adversarial network (without Adversarial Attack) causes accuracy to diminish. This decline is attributed to the GAN network’s ability to expand data from original viewpoint labels, reducing interference from varying backgrounds and mitigating overfitting.

Table 5.

Ablation Study of the Key Design in Single Future Prediction Models.

For multifuture trajectory prediction, Table 4 indicates that the position decoder has the most significant impact on overall accuracy, followed by the multiscale graph and the Memory Storage Module. The position decoder computes offset coordinates for each node based on the graph encoder’s output, enabling precise position predictions and preventing restrictions to grid cell boundaries. The multiscale graph allows the model to adapt to varying levels of detail, facilitating decision making based on the pedestrian’s surroundings. Crucially, the Memory Storage Module functions as a trajectory smoothing algorithm. As indicated by the minFDE increase in Table 4 (w/o Memory Module), removing this component leads to temporal inconsistencies. Qualitatively, without the memory module, early-time predictions often exhibit high-frequency jitter (“zigzag” patterns). By conditioning predictions on historical embeddings, the memory module mitigates conflicts between spatial and temporal information, ensuring the generated paths are smooth and kinematically plausible.

Exponential smooth loss. Unlike multiple future trajectory prediction where diversity is prioritized, the exponential smoothing design is specifically employed to enhance the accuracy of single future trajectory prediction (Table 5). It emphasizes earlier data in the sequence, which influences overall performance. In Equation (20), different values of were tested. The hyperparameter regulates the strength of the penalty term, with selected values of , 15, 10, and 5 ( corresponds to using only smooth loss). The results in Table 6 indicate that the MTP-STG model achieves optimal performance when . On the ActEV/VIRAT dataset, ADE is reduced by 0.63 and FDE by 1.91% compared to models without exponential smoothing. These results suggest that selecting an appropriate value effectively balances the penalty term and loss function, thereby enhancing the accuracy of single future trajectory prediction.

Table 6.

Effect of different values on the accuracy of single future prediction.

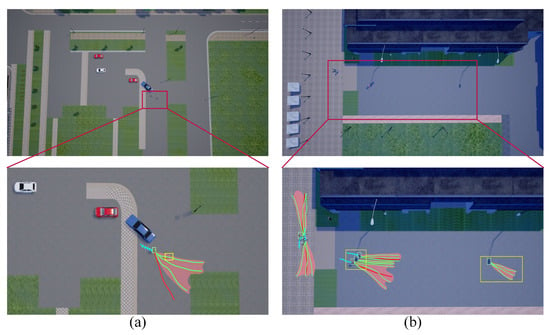

Algorithmic limitations. We present examples of prediction failures in Figure 9, where yellow boxes highlight scenarios of missed and incorrect detections. In scenario (a), a missed detection leads to a failure in predicting crowd trajectories. In scenario (b), the model incorrectly identifies streetlights as pedestrians, resulting in erroneous predictions. Although top-view trajectories are incorporated in training, dynamic detection remains insensitive due to the small size of targets in this view. Additionally, the failure rate increases in scenes with significant lighting changes, indicating a need to enhance detection of small targets under varying lighting conditions. Furthermore, our model occasionally fails to accurately perceive pedestrian walking speeds, predicting trajectories longer than actual distances. Future work could improve performance by optimizing the prediction network loss function or diversifying the final position prediction approaches.

Figure 9.

Example of limitations of our model. In scenario (a), missed detection leads to the failure of crowd trajectory prediction. In scenario (b), the model misidentifies the street lamp as a pedestrian, resulting in incorrect predictions.

4.5.2. Efficiency and Computational Cost

To evaluate the feasibility of MTP-STG for real-world deployment, particularly in intelligent transportation systems (ITS) and autonomous driving, we analyzed the model’s computational complexity and inference speed. All efficiency experiments were conducted on a workstation equipped with an NVIDIA GeForce RTX A6000 GPU and an Intel Xeon CPU. Table 7 presents the comparison of model parameters, inference time, and Frames Per Second (FPS) between our proposed MTP-STG and representative baseline methods. Although the introduction of the multiscale graph structure and the Memory Storage Module increases the number of parameters compared to lightweight LSTM-based models (e.g., S-LSTM), our method maintains a competitive inference speed. Specifically, MTP-STG achieves an inference speed of approximately 38.4 ms per frame, corresponding to 26 FPS. This efficiency is primarily attributed to the parallel computation capabilities of the Transformer architecture and the matrix-based retrieval of the memory module, which avoids the sequential bottlenecks typical of RNNs. To further assess practicality, we estimate the computational cost to be approximately 15.6 GFLOPs per frame. Crucially, for real-world ITS and autonomous driving applications, our framework supports an online/offline separation strategy. The semantic feature extraction for static scene elements (e.g., roads, buildings) can be computed offline and cached as a background feature map. Only the pedestrian detection (MOTR) and trajectory generation components require online inference. This decoupling significantly reduces the real-time computational burden, making MTP-STG highly practical for deployment on edge devices in smart city infrastructures.

Table 7.

Comparison of computational efficiency and model performance on the benchmark dataset.

5. Discussion and Conclusions

This paper explores the integrated framework of multitarget tracking and multifuture trajectory prediction for crowds. We have refined the MOTR detection tracker to enable end-to-end monitoring, leveraging the DETR architecture and automatic tracklet trajectory-aware label assignment to handle variations in pedestrian appearance. For trajectory prediction, we encode historical states into a pedestrian matrix and process them through a Spatio-Temporal Graph Transformer, which features a multiscale graph structure to capture both local and global context. Additionally, a Memory Storage Module was introduced to ensure temporal consistency and smooth trajectory generation.

Crucially, our framework demonstrates significant robustness under harsh real-world conditions, such as severe occlusion and extreme congestion. Our analysis indicates that the query-based mechanism in MOTR effectively retains target identity during temporary occlusions, while the memory module leverages historical embeddings to bridge gaps in visual data. Furthermore, in high-density crowd scenarios (e.g., LDU dataset), the proposed Spatio-Temporal Graph Attention mechanism explicitly models complex neighbor interactions. This allows the model to generate diverse, collision-free paths without collapsing into a single average trajectory, ensuring safety and rationality in congested environments. Beyond ground-level surveillance, the proposed MTP-STG framework exhibits inherent adaptability to broader Remote Sensing applications, particularly in aerial surveillance and smart city monitoring. Our Multiview Data Augmentation specifically trains the model to be robust across varying camera pitch angles ( to ), making it highly suitable for the dynamic perspectives of Unmanned Aerial Vehicles (UAVs). Moreover, the Multiscale Grid Graph can be extended to incorporate high-resolution satellite imagery or GIS data. By aligning pedestrian tracking with these overhead semantic maps, MTP-STG holds the potential to predict crowd flows at a city scale, aiding in urban planning and emergency response.

Experiments on benchmark datasets demonstrate that our proposed MTP-STG model achieves state-of-the-art performance. The integration of end-to-end tracking with interaction-aware prediction offers a promising solution for intelligent transportation systems, autonomous surveillance, and future smart city infrastructures.

Author Contributions

Conceptualization, Y.S.; methodology, Z.Z. and Y.S.; software, Y.S.; validation, X.C.; formal analysis, X.C.; data curation, W.G., L.Z., Y.Z. and Y.L.; writing—original draft preparation, Z.Z.; writing—review and editing, X.C. and H.Z.; visualization, W.G., L.Z., Y.Z. and Y.L.; supervision, X.C.; funding acquisition, H.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Shenzhen Science and Technology Innovation Commission, Major Science and Technology Project for Innovation and Entrepreneurship (Grant No. Z25306103, 2024); National Natural Science Foundation of China (Grant No. 52472316); Guangdong Basic and Applied Basic Research Foundation (Grant No. 2025A1515010251); National Natural Science Foundation of China (Grant No. 52461160297); National Natural Science Foundation of China (Grant No. 52341203).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting the findings of this study are available from the corresponding author upon reasonable request. Please contact us by email if you wish to obtain access to the data.

Acknowledgments

The authors would like to thank the editors and anonymous reviewers for their valuable comments, which have greatly improved our manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhang, Y.; Wang, H.; Wang, Y.; Zeng, C.; Liu, Z.; Wang, L.; Steedman, N.; van de Wouw, M.; Gavvesker, M. ByteTrackV2: 2D and 3D Multi-Object Tracking by Associating Every Detection Box. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Volume 13682, pp. 1–21. [Google Scholar] [CrossRef]

- Sun, Z.; Zheng, L.; Yang, Y. Multiple Pedestrian Tracking Under Occlusion: A Survey and Outlook. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 1009–1027. [Google Scholar] [CrossRef]

- Peng, Y. Deep learning for 3D Object Detection and Tracking in Autonomous Driving: A Brief Survey. arXiv 2023, arXiv:2311.06043. [Google Scholar] [CrossRef]

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. IEEE Access 2020, 8, 58443–58469. [Google Scholar] [CrossRef]

- Li, T.; Zhao, Y.; Yang, X.; Wang, Y.; Zhang, L. LANDER: Visual Analysis of Activity and Uncertainty in Surveillance Video. IEEE Trans. Hum.-Mach. Syst. 2024, 54, 427–440. [Google Scholar] [CrossRef]

- Lin, C.H.; Hsu, W.L.; Chen, H.Y. A structural description of pedestrian movement behavior in multiple surveillance videos. Expert Syst. Appl. 2024, 252, 124031. [Google Scholar] [CrossRef]

- Luber, M.; Spinello, L.; Silva, J.; Arras, K.O. Socially-aware robot navigation: A learning approach. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 902–907. [Google Scholar] [CrossRef]

- Alahi, A.; Goel, K.; Ramanathan, V.; Robicquet, A.; Fei-Fei, L.; Savarese, S. Social LSTM: Human trajectory prediction in crowded spaces. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition(CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 961–971. [Google Scholar]

- Gupta, A.; Johnson, J.; Fei-Fei, L.; Savarese, S.; Alahi, A. Social GAN: Socially Acceptable Trajectories with Generative Adversarial Networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2255–2264. [Google Scholar]

- Huang, Y.; Bi, H.; Li, Z.; Mao, T.; Wang, Z. STGAT: Modeling Spatial-Temporal Interactions for Human Trajectory Prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6272–6281. [Google Scholar]

- Salzmann, T.; Ivanovic, B.; Chakravarty, P.; Pavone, M. Trajectron++: Dynamically-Feasible Tra-jectory Forecasting with Heterogeneous Data. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Volume 12363, pp. 683–700. [Google Scholar] [CrossRef]

- Liang, J.; Jiang, L.; Hauptmann, A. SimAug: Learning Robust Representations from Simulation for Trajectory Prediction. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 275–292. [Google Scholar]

- Li, L.; Pagnucco, M.; Song, Y. Graph-based Spatial Transformer with Memory Replay for Multi-future Pedestrian Trajectory Prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; Volume 1, pp. 2221–2231. [Google Scholar]

- Wong, C.; Xia, B.; You, X. SocialCircle: Learning the Angle-based Social Interaction Representation for Pedestrian Trajectory Prediction. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 6–22 June 2024; pp. 19005–19015. [Google Scholar] [CrossRef]

- Liang, J.; Jiang, L.; Murphy, K.; Yu, T.; Haupt-mann, A. The Garden of Forking Paths: Towards Multi-Future Trajectory Prediction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10505–10515. [Google Scholar]

- Meng, M.; Cai, W.; Wang, S.; Sebe, N.; Tuytelaars, T.; Gao, Y. Forecasting Human Trajectory from Scene History. In Proceedings of the 36th Conference on Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Number NeurIPS. pp. 1–17. [Google Scholar]

- Bewley, B.U.A.; Ge, Z.; Ott, L.; Ramos, F. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468. [Google Scholar]

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649. [Google Scholar]

- Bergmann, P.; Meinhardt, T. Tracking without bells and whistles. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 941–951. [Google Scholar]

- Shuai, B.; Berneshawi, A.G.; Modolo, D.; Tighe, J. Multi-Object Tracking with Siamese Track-RCNN. arXiv 2020, arXiv:2004.07786. [Google Scholar] [CrossRef]

- Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards Real-Time Multi-Object Tracking. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 107–122. [Google Scholar]

- Zhang, Y. FairMOT: On the Fairness of Detection and Re-Identification in Multiple. Int. J. Comput. Vis. 2021, 129, 3069–3087. [Google Scholar] [CrossRef]

- Chu, P.; Wang, J.; Ling, H.; Liu, Z. TransMOT: Spatial-Temporal Graph Transformer for Multiple Object Tracking. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 January 2023; pp. 4870–4880. [Google Scholar]

- Sun, P.; Jiang, R.; Zhang, Y.; Xie, E.; Xu, X.; Cao, D.; Lu, X.; Luo, P.; Zeng, K. TransTrack: Multiple Object Tracking with Transformer. arXiv 2020, arXiv:2012.15460. [Google Scholar]

- Meinhardt, T.; Kirillov, A.; Lealtaix, L. Track-Former: Multi-Object Tracking with Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 8844–8854. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5999–6009. [Google Scholar]

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Jiang, C.; Zhang, Z.; Philip, S.Y. A Comprehensive Survey on Graph Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4–24. [Google Scholar] [CrossRef] [PubMed]

- Sadeghian, A.; Kosaraju, V.; Sadeghian, A.; Hirose, N.; Rezatofighi, H.; Savarese, S. SoPhie: An attentive GAN for predicting paths compliant to social and physical constraints. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1349–1358. [Google Scholar]

- Zhang, P.; Ouyang, W.; Zhang, P.; Xue, J.; Zheng, N. SR-LSTM: State refinement for lstm towards pedestrian trajectory prediction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12085–12094. [Google Scholar] [CrossRef]