Abstract

In intelligent sports education, current action quality assessment (AQA) methods face significant limitations: regression-based methods depend heavily on high-quality annotated data, while unsupervised methods lack sufficient accuracy and degrade in performance on long-duration sequences. To address these challenges, this paper introduces a novel indirect scoring method that integrates action anomaly detection with a Quick Action Quality Assessment (QAQA) algorithm. The proposed anomaly detection module dynamically adjusts action quality scores by identifying and analyzing inter-frame acceleration outliers, improving the robustness and accuracy of sports AQA. Moreover, the QAQA algorithm uses a multi-resolution scheme of coarsening, projection, and refinement to reduce computational complexity to O(n), alleviating the computational burden typically associated with long-sequence analysis. Experimental results demonstrate that our method outperforms traditional methods in both execution efficiency and scoring accuracy. The proposed system improves algorithmic performance and contributes to intelligent sports training and education.

1. Introduction

The societal shift towards healthier lifestyles has spurred a significant rise in the popularity of mind-body exercises. However, improper execution of these exercises frequently diminishes training efficacy and may even lead to physical injuries. This critical issue has garnered considerable research attention [,].

Action quality assessment (AQA) is a critical challenge in traditional Chinese Qigong (e.g., Baduanjin, Tai Chi), a mind-body discipline valued for its rich cultural heritage and documented health benefits [,,]. However, its pedagogy is fundamentally limited, relying on subjective instructor feedback rather than quantitative metrics and real-time correction. This traditional approach severely hinders the standardized instruction and scientific dissemination of Qigong.

AI-driven systems offer a compelling solution to these pedagogical limitations. Underpinned by recent advances in computer vision, they can provide objective AQA and real-time corrective feedback. This capability, leveraging deep learning for fine-grained pose and trajectory analysis, enables a robust, quantitative evaluation of action quality that transcends traditional subjective methods, thereby enhancing training efficacy and facilitating standardized dissemination.

Current mainstream methods for AQA fall into two main categories: supervised and unsupervised learning. Supervised frameworks [,,,,] mainly use end-to-end deep learning architectures, employing spatio-temporal convolutions or recurrent neural networks to map video sequences to quality scores directly. Although these methods achieve high precision for specific action scoring tasks, they have three inherent limitations. First, they overlook the rich spatio-temporal information present in human skeleton data. Second, uniform sampling strategies create problems with action phase segmentation, resulting in the loss of critical features during key movement stages. Third, these models heavily depend on fine-grained annotations of the datasets, posing the dual challenges of limited domain adaptability and high annotation costs.

To overcome these annotation-related limitations, researchers have explored unsupervised methods based on Dynamic Time Warping (DTW) [,,,,]. These methods construct evaluation metrics from the similarity between movements by calculating the nonlinear alignment distance between test sequences and reference templates. Compared with supervised methods, the DTW approach reduces the need for data annotation and enhances the model’s sensitivity to temporal variations in actions. However, practical applications have exposed two significant drawbacks of DTW-based methods. First, distance metrics are sensitive to noise, which can lead to inaccurate assessments. Second, the computational complexity of DTW grows quadratically with sequence length, which becomes prohibitive for complex, long-duration actions. This quadratic cost results in low efficiency, making the method unsuitable for real-time applications.

In response to these issues, this paper proposes a fast AQA method based on anomaly detection optimization and indirect scoring. First, an anomaly detection module is developed to address the outliers and noise present in outdoor action sequences. This module identifies abnormal keypoint states in the temporal dimension and calculates score adjustments according to the type and severity of the detected anomalies. Second, we build a fast action scoring algorithm that efficiently computes the final scores of sports actions. This algorithm simplifies the dynamic programming score matrix using coarsening, projection, and refinement techniques, and then integrates the adjustment strategies calculated by the anomaly detection module to determine the final scores. Finally, we develop a new video dataset featuring traditional Chinese Qigong movements and use it to validate the effectiveness and practicality of the proposed method.

The main contributions of this paper can be summarized as follows.

- We propose Quick Action Quality Assessment (QAQA), a fast AQA algorithm based on ACDTW, which significantly reduces the algorithm time complexity to O(n). This improvement addresses computational efficiency problems in the assessment of long-duration action sequences, improving the algorithm’s performance while maintaining high assessment accuracy. Furthermore, the method shows strong generalization capability for real-world applications in Qigong teaching evaluation.

- We introduce an action anomaly detection module based on the DBSCAN clustering algorithm. This module dynamically adjusts the threshold parameters in the action matching algorithm by comparing differences in the acceleration outlier counts between standard and test actions. This strategy improves the model’s ability to detect and differentiate abnormal actions, thereby boosting the accuracy of action assessments.

- We build a novel dataset explicitly designed for AQA in traditional Chinese Qigong. This dataset includes two Qigong routines (Baduanjin and Yijinjing) comprising 22 sub-actions. Ablation and comparative experiments demonstrate the effectiveness and superiority of our proposed method on this dataset.

2. Related Work

2.1. Human Pose Estimation

Human Pose Estimation, which extracts skeletal keypoints for tasks like Action Quality Assessment (AQA), is dominated by two paradigms: top-down methods [,], whose performance is bottlenecked by the initial person detection, and more efficient bottom-up approaches [] that contend with a complex keypoint association stage. The modern research landscape is driven by the accuracy–efficiency trade-off, leading to the integration of lightweight backbones, attention, and Transformers [,,,], culminating in robust real-time models like RTMPose, developed within the comprehensive MMPose toolbox [,]. However, the performance of these purely vision-based systems degrades under challenging conditions like occlusion. Consequently, to enhance robustness, a complementary line of research augments visual data with explicit kinematic modeling, using features like motion trajectories and joint angles for more reliable AQA [,].

2.2. Action Quality Assessment

Supervised AQA, while effective, is fundamentally hampered by its reliance on extensive, task-specific annotations, which restricts model generalizability [,,]. Although more sophisticated architectures and multi-modal fusion techniques have advanced performance, they do not alleviate this core dependency and often introduce significant model complexity [,,,,]. Consequently, a significant research thrust has focused on reducing or eliminating this label dependency. Semi-supervised learning (SSL) accomplishes this by leveraging unlabeled data, either through pseudo-labeling with teacher-student frameworks [,,] or by imposing temporal consistency constraints []. Pushing this further, unsupervised learning (USL) methods operate without any annotations, typically by reframing AQA as a metric-based comparison task [] or by learning robust feature representations directly from unlabeled skeletal data [,].

2.3. Dynamic Time Warping Algorithm

DTW is a foundational algorithm for comparing time series of varying lengths, first proposed by Sakoe et al. [] and widely applied in fields like speech recognition [,,]. In AQA, its capacity for non-linear sequence alignment is crucial for template-based methods [,,], enabling diverse applications from clinical movement analysis [,] to detailed skill scoring and feedback generation [,].

Despite its utility, canonical DTW suffers from significant limitations. Its quadratic computational complexity poses a challenge for long sequences, while its tendency to produce singular alignments can lead to inaccurate results. Consequently, a line of research has focused on optimization. To improve efficiency, algorithms like FastDTW [] and SparseDTW [] were developed to reduce complexity. To enhance alignment accuracy, methods such as Derivative DTW [] were designed to align sequence shapes, while ACDTW [] employs adaptive penalties to resolve singularity issues.

3. Methods

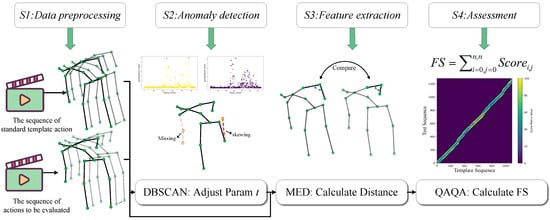

Existing AQA methods are hindered by a dependency on large, annotated datasets and a failure to handle unsegmented real-world videos, which limits their generalizability and practical utility. To overcome these barriers, we propose an unsupervised, skeleton-based AQA framework. As depicted in Figure 1, our annotation-free pipeline consists of four sequential stages: (S1) data extraction and processing, (S2) anomaly detection, (S3) action feature construction, and (S4) final score computation via our proposed QAQA algorithm. This design ensures broad applicability across diverse exercise scenarios without requiring model retraining.

Figure 1.

Overview of our annotation-free scoring framework. It first extracts 3D skeletal keypoints from both standard and evaluation videos, which serve two parallel purposes: generating kinematic feature sequences for comparison, and computing an anomaly adjustment parameter t based on acceleration (the purple and yellow points denote two instances from the acceleration clustering results of the anomaly detection module). The final quality assessment is then determined by our QAQA algorithm, which compares the feature sequences and uses t to modulate the score based on detected irregularities.

3.1. S1: Data Extraction and Processing

In human AQA, mainstream pose estimation algorithms typically represent action characteristics using 17 skeletal keypoints, a simplified biomechanical model validated through engineering practice. Among the available tools, MMPose is widely adopted for keypoint extraction owing to its flexibility and scalability [,,]. We therefore use MMPose to extract the 3D coordinates of human skeletal keypoints from exercise videos, as described below.

The initial stage of our framework is to extract 3D skeletal keypoints from the input videos. To this end, we adopt a multi-stage pipeline leveraging the MMPose toolbox [,,]. Initially, the RTMDet model [] identifies human bounding boxes. Subsequently, a top-down estimator with a ResNetV1D50 backbone [] extracts 2D keypoints within these boxes, which are then lifted to 3D coordinates using VideoPose3D []. The final output for each video is a time-series of 17 standard 3D skeletal keypoints (e.g., head, shoulders, hips, limbs), providing a complete spatial representation of the pose in each frame, as visualized in Figure 2a.

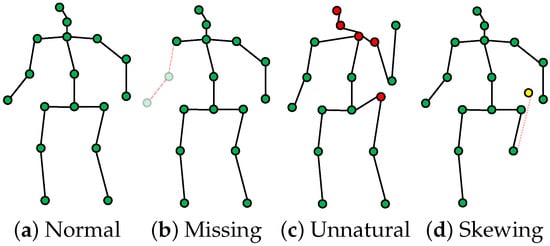

Figure 2.

Anomalous keypoints during data preprocessing. Raw keypoints are often corrupted by artifacts, including partial occlusions (missing keypoints), anatomically implausible poses that violate biomechanical constraints (unnatural), and high-frequency jitter or drift (skewing).

3.2. S2: Anomaly Detection

In outdoor sports training, the accuracy of human keypoint detection can be significantly affected by camera angles, lighting conditions, object occlusions, and algorithmic errors, which in turn affect the assessment of sports actions. Figure 2 illustrates several common anomalies, including missing keypoints (Figure 2b), unnatural pose keypoints (Figure 2c), and abnormal shifts (Figure 2d).

To address these challenges and improve the accuracy of action assessment, we propose a pose feature enhancement method based on anomaly detection. This method identifies anomalies introduced during data preprocessing and dynamically adjusts subsequent scoring based on the type and severity of detected anomalies.

The anomaly detection module consists of three primary steps. First, extract the acceleration sequences of both the template and the test keypoint data. Second, determine the DBSCAN parameters ($\varepsilon$, MinPts) and detect outliers in the acceleration sequences. Finally, calculate the threshold coefficient t from the outliers detected in the second step.

Step 1 (Extracting Acceleration Sequences): Traditional Chinese Qigong actions typically involve slow stretching, twisting, and balancing movements, leading to relatively stable changes in the skeletal keypoints. To improve computational efficiency, we remove data from the spine, chest, and hip midpoint keypoints, as their accelerations show minimal variation. Thus, we retain acceleration data from 14 keypoints.

The motion of each keypoint between consecutive frames is approximated as uniform, and its acceleration is derived from the Euclidean distances the keypoint travels between frames in 3D space. The acceleration $a_i$ of a keypoint in frame $i$ is calculated using Equation (1):

Here, $P_i$ represents the 3D coordinates of the keypoint in frame $i$, $n$ is the total frame count, $d(\cdot,\cdot)$ denotes the Euclidean distance function, and $\Delta t$ is the interval between two consecutive frames.
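Because Equation (1) itself is not reproduced in this text, the sketch below implements only one plausible reading of Step 1 and should not be taken as the authors' exact formula. It assumes that speed is the distance a keypoint moves between consecutive frames divided by $\Delta t$, and that acceleration is the change in that speed divided by $\Delta t$. The helper names `acceleration_sequence` and `keypoint_accelerations` are hypothetical, and the indices of the 14 retained keypoints are left to the caller.

```python
import numpy as np


def acceleration_sequence(points, dt):
    """Scalar acceleration series for one keypoint (hypothetical helper).

    ASSUMPTION: uniform motion within each frame interval, so
    speed_i = d(P_{i+1}, P_i) / dt and accel_i = (speed_{i+1} - speed_i) / dt.
    The paper's Equation (1) may differ in detail.

    points: array-like of shape (n, 3), the keypoint's 3D position per frame.
    dt: time between consecutive frames, e.g. 1 / 30 s for 30 fps video.
    Returns an array of length n - 2.
    """
    p = np.asarray(points, dtype=float)
    step = np.linalg.norm(np.diff(p, axis=0), axis=1)  # Euclidean distance per interval
    speed = step / dt
    return np.diff(speed) / dt                         # change of speed per interval


def keypoint_accelerations(skeleton, dt, keep):
    """Apply acceleration_sequence to the selected keypoints of an (n, 17, 3) array."""
    skeleton = np.asarray(skeleton, dtype=float)
    return {k: acceleration_sequence(skeleton[:, k, :], dt) for k in keep}
```

For the 30 fps videos described in Section 3.5, `dt` would be `1 / 30`.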

Step 2 (Outlier detection with DBSCAN): We employ DBSCAN [] for anomaly detection within each keypoint's acceleration series because it is unsupervised, identifies outliers without requiring the number of clusters to be specified in advance, and has proven effective for density-based outlier detection. Since the motion ranges of the keypoints vary significantly across actions, a fixed neighborhood radius could yield suboptimal clustering. We therefore determine the DBSCAN neighborhood radius $\varepsilon$ dynamically using the k-distance method. The k-distance is the distance from each acceleration data point to its k-th nearest neighbor, computed by Equation (2):

Here, MinPts denotes the minimum number of samples required to form a cluster, and $A$ denotes the acceleration sequence calculated by Equation (1).

We then select $\varepsilon$ as the value at the point of maximum slope change in the sorted k-distance graph, as shown in Equation (3):

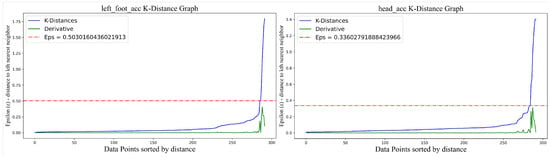

Here, $D_j$ represents the sorted k-distance values and $j$ indexes the distances. Equation (3) identifies the point with the maximum slope difference, whose value sets the parameter $\varepsilon$. The selection of $\varepsilon$ is illustrated in Figure 3.

Figure 3.

Dynamic determination of the optimal DBSCAN parameter via the maximum slope of the k-distance curve.

Figure 3 visualizes the k-distance graph. The vertical axis shows the k-distance, that is, the distance from each data point to its k-th nearest neighbor as dictated by the minimum cluster size, while the horizontal axis indicates the index of the data points sorted by this distance. The green curve represents the derivative of the k-distance values, and the red dashed line marks the optimal position for $\varepsilon$. The k-distance value at this position is used as the DBSCAN parameter $\varepsilon$. This approach yields an appropriate clustering radius for each keypoint under varying conditions, improving the robustness of anomaly detection.
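The selection of the neighborhood radius ε can be sketched as follows. This is a minimal illustration that assumes Equation (3) picks the index where the discrete second difference of the sorted k-distance curve (the change in slope) is largest; the function names are hypothetical, and the caller chooses k, which in the k-distance heuristic is tied to the minimum cluster size MinPts. The brute-force pairwise distances are adequate for a single keypoint's series; for long series, scikit-learn's `NearestNeighbors(...).kneighbors(...)` is a drop-in way to obtain the same neighbor distances.

```python
import numpy as np


def k_distances(values, k):
    """Sorted distances from each sample of a 1D series to its k-th nearest neighbour."""
    x = np.asarray(values, dtype=float).reshape(-1, 1)
    dist = np.sort(np.abs(x - x.T), axis=1)  # column 0 is each point's distance to itself
    return np.sort(dist[:, k])               # ascending k-distance curve


def select_eps(values, k):
    """Pick eps at the largest increase in slope of the sorted k-distance curve."""
    d = k_distances(values, k)
    if len(d) < 3:
        raise ValueError("need at least three samples to locate a slope change")
    slope = np.diff(d)        # slope between neighbouring points of the curve
    change = np.diff(slope)   # change[m] is the slope change centred on d[m + 1]
    return float(d[int(np.argmax(change)) + 1])
```

On a series with a tight group of values and one isolated spike, the chosen ε sits at the end of the flat part of the curve, just before the k-distance jumps to the spike.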

Therefore, given the input action sequences, we denote the total number of acceleration outliers in the standard action sequence as $O_s$ and in the test video sequence as $O_t$. By feeding the determined parameters ($\varepsilon$, MinPts) and the acceleration sequences into the DBSCAN model, we obtain $O_s$ and $O_t$. The outlier count is calculated as shown in Equation (4):

Here, $\mathrm{Count}(\cdot)$ is a function that returns the number of detected outliers, and $\mathrm{DBSCAN}(\cdot)$ refers to the DBSCAN clustering model.
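Counting outliers only requires DBSCAN's definition of noise, so the sketch below computes it directly in NumPy rather than calling a clustering library. A point is a core point if at least `min_samples` points (itself included) lie within ε, and a point is noise if it lies within ε of no core point; scikit-learn's `DBSCAN` labels such points `-1`. The function names are hypothetical, and summing noise points over all retained keypoints is our reading of the "total number of acceleration outliers" per sequence.

```python
import numpy as np


def dbscan_noise_mask(values, eps, min_samples):
    """Boolean mask marking DBSCAN noise points in a 1D acceleration series."""
    x = np.asarray(values, dtype=float).reshape(-1, 1)
    neighbours = np.abs(x - x.T) <= eps                     # eps-neighbourhoods (self included)
    core = neighbours.sum(axis=1) >= min_samples            # core points
    near_core = (neighbours & core[np.newaxis, :]).any(axis=1)
    return ~near_core                                       # core points are near themselves


def count_outliers(acc_by_keypoint, eps_by_keypoint, min_samples):
    """Total acceleration outliers over all keypoints of one sequence."""
    return int(sum(
        dbscan_noise_mask(acc, eps, min_samples).sum()
        for acc, eps in zip(acc_by_keypoint, eps_by_keypoint)
    ))
```

Calling `count_outliers` once on the standard sequence and once on the test sequence, each with its per-keypoint ε values, yields the two totals compared in Step 3.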

Step 3 (Threshold Coefficient Calculation): We calculate the threshold coefficient t from the ratio of the absolute difference $|O_s - O_t|$ between the number of outliers in the standard action sequence ($O_s$) and in the test action sequence ($O_t$). The threshold values assigned according to this ratio are detailed in Equation (5).

Some discrepancies between standard and test actions are expected. However, if both actions have identical distributions of joint acceleration outliers (i.e., $O_s = O_t$), this strongly suggests cheating. Submissions suspected of cheating are therefore directly assigned a threshold coefficient of 1, indicating that no further scoring is required.
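The control flow of Step 3 can be sketched as below. Only the structure comes from the text: identical outlier counts map directly to t = 1, and otherwise t is looked up from the ratio. Two parts are assumptions: the ratio is normalized here by the standard sequence's count, and the `bins` table of (upper bound, t) pairs is supplied by the caller because the actual bounds and values of Equation (5) are not reproduced. `threshold_coefficient` is a hypothetical name.

```python
def threshold_coefficient(o_std, o_test, bins):
    """Map the outlier counts of the standard and test sequences to t (hypothetical helper).

    bins: sequence of (upper_bound, t) pairs sorted by upper_bound. The real
    bounds and t values are those of Equation (5) and are NOT reproduced here.
    """
    if o_std == o_test:
        # Identical outlier counts are treated as suspected cheating: t = 1.
        return 1.0
    # ASSUMPTION: normalise the absolute difference by the standard count.
    ratio = abs(o_std - o_test) / max(o_std, 1)
    for upper, t in bins:
        if ratio <= upper:
            return t
    return bins[-1][1]  # ratios beyond the last bound fall into the last bin
```

For example, with placeholder bins `[(0.2, 0.5), (float("inf"), 0.3)]`, counts of 10 and 9 give a ratio of 0.1 and therefore t = 0.5; these numbers only exercise the lookup and carry no meaning for the paper's scoring.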

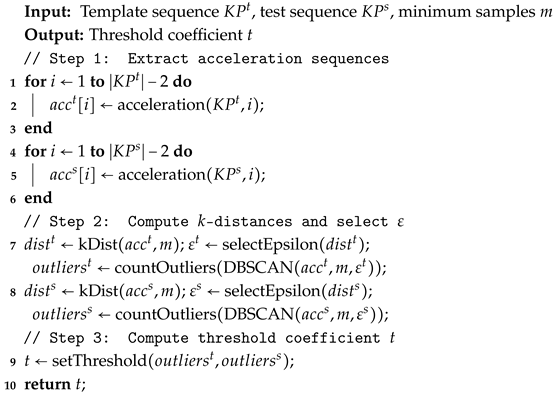

The detailed role and usage of this parameter t will be described further in Section 3.4. The complete anomaly detection process is summarized in Algorithm 1:

Algorithm 1.

Human Skeleton Keypoint Anomaly Detection.

3.3. S3: Construction of Action Feature

This study integrates motion state features, including limb angles, body orientation, and shoulder-hip angles, to construct a complete 3D human motion representation. The coordinate system is reconstructed with the neck as the origin based on the 3D spatial coordinates of 17 skeletal keypoints. Subsequently, the limb angles (covering both upper and lower limb joints) and body orientation features are calculated in both 2D and 3D space.

To reflect the morphological characteristics of Qigong actions, we select high-attention skeletal keypoints and establish an 11-dimensional angle feature set, as detailed in Table 1.

Table 1.

Numbering of the extracted human limb angle features.

Keypoints are referred to by their number and name, and each angle feature is computed with Equation (6):

Here, $\vec{u}$ and $\vec{v}$ represent vectors, each connecting two joint nodes, and $\theta$ is the angle between these vectors. For simplicity, skeletal keypoints are abbreviated as KP.
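A minimal sketch of the angle computation, assuming Equation (6) is the usual arccosine of the normalized dot product between the two joint vectors. `vector_angle` and `joint_angle` are hypothetical helpers; which keypoint triples form each of the 11 features is specified in Table 1 and is not reproduced here.

```python
import numpy as np


def vector_angle(u, v):
    """Angle in radians between two 3D vectors (arccos of the normalised dot product)."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    cos = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))  # clip guards against rounding


def joint_angle(a, b, c):
    """Angle at keypoint b between the segments b->a and b->c, e.g. an elbow angle."""
    b = np.asarray(b, dtype=float)
    return vector_angle(np.asarray(a, dtype=float) - b, np.asarray(c, dtype=float) - b)
```

Clipping the cosine to [-1, 1] matters in practice: for nearly straight limbs, floating-point rounding can push the ratio slightly outside that range and make `arccos` return NaN.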

For spatial limb segment features, we define four proximal limb segments based on anatomical landmarks: left arm (KP 8, 14, 15, and 16), right arm (KP 8, 11, 12, and 13), left leg (KP 0, 1, 2, and 3), and right leg (KP 0, 4, 5, and 6). By calculating the spatial angles between the center of each limb segment and a reference point at the hip (using the geometric center calculation in Equation (7)), we establish four-dimensional kinematic parameters for limb movement:

In Equation (7), $(x_k, y_k, z_k)$ denote the 3D spatial coordinates of the $k$-th keypoint of the selected limb block, and $(x_c, y_c, z_c)$ represents the 3D coordinates of the center point.

The angle between the center point of a limb block and the midpoint of the spine is then calculated as follows:

Here, $\vec{s}$ denotes the spine vector, $\vec{c}$ is the vector from the neck to the center of the limb block, and $\theta$ is the angle between these two vectors. Applying Equation (8) to each of the four limb blocks yields four spatial upper- and lower-limb block features, which provide important references for subsequent AQA.
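The limb block feature can be sketched as follows, assuming that the geometric center in Equation (7) is the arithmetic mean of the block's four keypoints and that Equation (8) is the arccosine of the normalized dot product. The spine vector is passed in by the caller because its exact endpoints follow the paper's definition; `limb_block_feature` is a hypothetical name.

```python
import numpy as np


def limb_block_feature(block_points, neck, spine_vector):
    """Angle between the spine vector and the neck-to-limb-block-centre vector.

    block_points: (4, 3) array of the limb block's keypoint coordinates.
    neck: (3,) neck coordinates (origin of the reconstructed coordinate system).
    spine_vector: (3,) spine vector, as defined in the text.
    """
    center = np.mean(np.asarray(block_points, dtype=float), axis=0)  # Equation (7), assumed mean
    to_center = center - np.asarray(neck, dtype=float)               # neck -> block centre
    s = np.asarray(spine_vector, dtype=float)
    cos = np.dot(s, to_center) / (np.linalg.norm(s) * np.linalg.norm(to_center))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))                 # Equation (8), assumed form
```

Calling this once per block (left arm, right arm, left leg, right leg) produces the four limb block features.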

We construct a hierarchical orientation system to represent human body orientation. Using vectors connecting the shoulders (KP-11, 12) and hips (KP-0, 1), we establish a three-dimensional anatomical reference in space. The vector projection is then used to convert discrete directional parameters into continuous radian values, setting the forward direction as and the reverse direction as . These are denoted as orientation features and .

When calculating the opening angles between both hands and both feet, these features are not originally radian values, so we normalize them to radians. Specifically, if the distance between the left and right feet is greater than 0.5 times the distance between the left and right shoulders, the opening angle of the feet is set to . Otherwise, it is set to . Similarly, if the distance between the left and right wrists is greater than 1.5 times the distance between the left and right shoulders, the opening angle of the hands is set to ; otherwise, it is set to . These two features are denoted as and .
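The two opening rules reduce to distance comparisons against the shoulder width. In the sketch below, the 0.5× (feet) and 1.5× (hands) ratios follow the text, while `open_value` and `closed_value` are caller-supplied stand-ins for the radian constants the paper assigns, which are not reproduced here. `opening_features` is a hypothetical name.

```python
import numpy as np


def _dist(p, q):
    """Euclidean distance between two 3D points."""
    return float(np.linalg.norm(np.asarray(p, dtype=float) - np.asarray(q, dtype=float)))


def opening_features(l_foot, r_foot, l_wrist, r_wrist, l_shoulder, r_shoulder,
                     open_value, closed_value):
    """Binary foot/hand opening features encoded as radian values (hypothetical helper).

    open_value / closed_value: placeholders for the paper's radian constants.
    Returns (feet_feature, hands_feature).
    """
    shoulder = _dist(l_shoulder, r_shoulder)
    feet = open_value if _dist(l_foot, r_foot) > 0.5 * shoulder else closed_value
    hands = open_value if _dist(l_wrist, r_wrist) > 1.5 * shoulder else closed_value
    return feet, hands
```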

For four groups of upper–lower limb combinations, such as the left upper limb–left lower limb (KP-15, 16, 2, 3) and the right upper limb–right lower limb (KP-12, 13, 5, 6), sagittal plane movement angles are calculated using Equation (6), yielding four further angle features.

Finally, by combining limb angles, body orientation, and shoulder-hip joint angles, we obtain a total of 27-dimensional limb motion angle features: F = [ … , … , … , … , … ]. These features provide comprehensive input data for subsequent action similarity calculations and action scoring methods. To further optimize the efficiency of AQA, we designed a fast action quality scoring algorithm QAQA to address performance bottlenecks in long-sequence calculations.

3.4. S4: Quick Action Quality Assessment

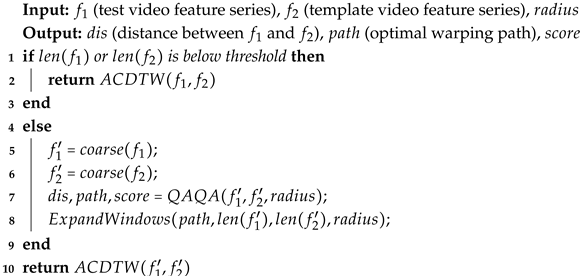

To optimize the time complexity of the ACDTW algorithm [] for the computation of action similarity, we propose the QAQA algorithm, as detailed in Algorithm 2.

QAQA uses an approximate path approach that incrementally refines the final alignment by coarsening, projecting, and refining the dynamic programming score matrix.

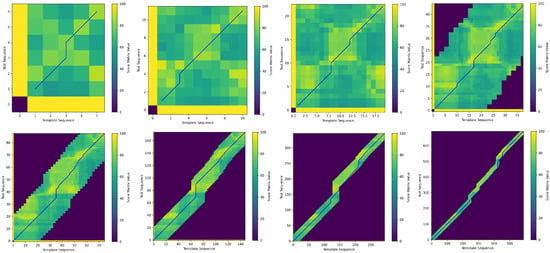

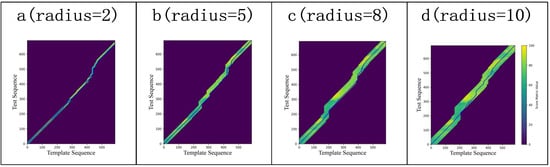

Figure 4 illustrates the iterative process of QAQA, where blue lines indicate backtracking paths from low to high resolution, and darker regions represent areas with uncomputed scores.

Algorithm 2.

Quick Action Quality Assessment (QAQA).

Figure 4.

QAQA iterative process from low resolution to high resolution.

In the coarsening step, QAQA first performs coarse-grained processing of the two time series. According to Equation (9), the series are downsampled by averaging pairs of adjacent features, reducing them to a predefined minimum length.
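The coarsening step can be sketched as follows. Equation (9) is not reproduced here, so this sketch assumes plain pairwise averaging of adjacent frames and, as a further assumption, duplicates the last frame when the length is odd so that coarse frame `i` always covers fine frames `2i` and `2i + 1`. `coarsen` and `build_pyramid` are hypothetical names.

```python
import numpy as np


def coarsen(seq):
    """Halve a feature sequence by averaging adjacent frame pairs.

    seq: array of shape (n,) or (n, d). If n is odd, the last frame is
    duplicated so that it is averaged with itself (an assumption).
    """
    x = np.asarray(seq, dtype=float)
    if len(x) % 2:
        x = np.concatenate([x, x[-1:]], axis=0)
    return (x[0::2] + x[1::2]) / 2.0


def build_pyramid(seq, min_len):
    """Coarsen repeatedly until the sequence is no longer than min_len."""
    levels = [np.asarray(seq, dtype=float)]
    while len(levels[-1]) > max(min_len, 1):
        levels.append(coarsen(levels[-1]))
    return levels  # levels[0] is full resolution, levels[-1] is the coarsest
```

Applied to a 27-dimensional feature sequence of shape `(n, 27)`, each level keeps the feature dimension and roughly halves the number of frames.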

Using the downsampled series, the dynamic programming distance matrix and action score matrix are computed using ACDTW with a penalty function, resulting in a coarse-grained backtracking path. A weighting factor, typically based on local features or time series attributes, adjusts the match cost for each pair of matching points. The calculation methods for ACDTW are shown in Equations (10) and (11).

Equation (10) defines the penalty function, where N is the number of times each time series point is matched, and a and b are the lengths of the two series. Equation (11) describes the ACDTW computation, where i and j index the frames in the standard and test action sequences, respectively.

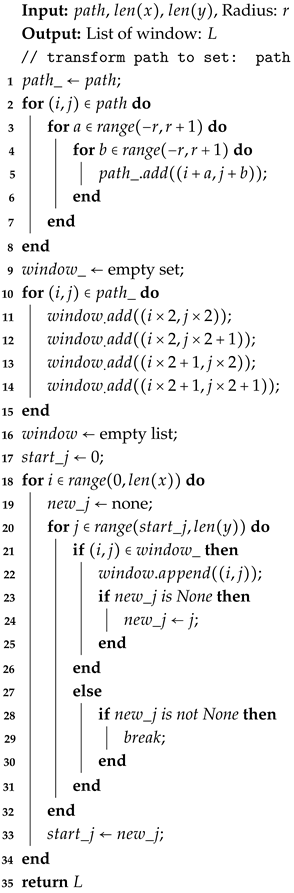

During the projection step, the algorithm calculates the extended window range based on the coarse-grained backtracking path, the radius parameter, and the two feature sequences. This window limits the computational region. Details of the extended window algorithm are provided in Algorithm 3.
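The projection step can be illustrated with a FastDTW-style window expansion. This is a sketch, not a reproduction of Algorithm 3: it assumes that each coarse cell (i, j) maps to the 2×2 block of fine cells {2i, 2i+1} × {2j, 2j+1} (matching the pairwise averaging above), and that every projected cell is then dilated by `radius` in both directions and clipped to the fine grid. `expand_window` is a hypothetical name.

```python
def expand_window(coarse_path, n, m, radius):
    """Project a coarse warping path to the next finer level and widen it by `radius`.

    coarse_path: iterable of (i, j) cells at the coarse level.
    n, m: lengths of the two sequences at the finer level.
    Returns the set of fine-level (i, j) cells the refinement step may evaluate.
    """
    window = set()
    for i, j in coarse_path:
        for fi in (2 * i, 2 * i + 1):          # fine rows covered by coarse row i
            for fj in (2 * j, 2 * j + 1):      # fine columns covered by coarse column j
                for a in range(fi - radius, fi + radius + 1):
                    for b in range(fj - radius, fj + radius + 1):
                        if 0 <= a < n and 0 <= b < m:
                            window.add((a, b))
    return window
```

With radius 0, the window is exactly the projected path; each unit of radius widens the band on both sides, which is the accuracy/time trade-off examined in Section 4.4.1.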

Algorithm 3.

ExpandWindows.

In the refinement step, the two time series are upsampled, and ACDTW updates the dynamic programming distances only within the specified window. Based on these distances, the backtracking path is recalculated. This cycle of coarsening, window computation, and refinement repeats until the full-resolution distance matrix and the final backtracking path are obtained, at which point the iteration terminates.
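The refinement step can be sketched with dynamic programming restricted to the window, followed by backtracking. This is a stand-in: it uses classic DTW with the Euclidean distance between feature vectors as the local cost and the standard three-way minimum, not ACDTW's adaptive penalty from Equations (10) and (11), which are not reproduced here. `windowed_dtw` is a hypothetical name; as in Equation (11), `i` indexes the standard sequence and `j` the test sequence.

```python
import numpy as np


def windowed_dtw(std, test, window):
    """DTW restricted to the cells in `window`, with backtracking.

    std, test: arrays of shape (a,) / (a, d) and (b,) / (b, d).
    window: set of (i, j) cells containing a connected path from (0, 0) to (a-1, b-1).
    Returns (total cost, warping path as a list of (i, j)).
    """
    x = np.asarray(std, dtype=float)
    y = np.asarray(test, dtype=float)
    x = x[:, None] if x.ndim == 1 else x
    y = y[:, None] if y.ndim == 1 else y
    a, b = len(x), len(y)
    acc = np.full((a + 1, b + 1), np.inf)  # acc[i + 1, j + 1] holds the cost of cell (i, j)
    acc[0, 0] = 0.0
    for i, j in sorted(window):            # row-major order: predecessors are filled first
        step = np.linalg.norm(x[i] - y[j])
        acc[i + 1, j + 1] = step + min(acc[i, j], acc[i, j + 1], acc[i + 1, j])
    if not np.isfinite(acc[a, b]):
        raise ValueError("window does not connect (0, 0) to the last cell")
    path, i, j = [], a, b
    while (i, j) != (0, 0):                # follow the cheapest predecessor back to the start
        path.append((i - 1, j - 1))
        i, j = min([(i - 1, j - 1), (i - 1, j), (i, j - 1)], key=lambda c: acc[c])
    return float(acc[a, b]), path[::-1]
```

At the coarsest level the window would be every cell; at finer levels it would be the set produced by the projection step, so only O(radius) cells per row are evaluated.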

Finally, the action score is calculated along the full-resolution backtracking path using Equations (12)–(14). For each frame along the backtracking path, the action score is computed according to Equation (13). Based on the threshold coefficient t (determined by Equation (5) in Section 3.2), different penalty levels are applied to the action similarity distances. The frame-level scores are then summed and averaged to yield the final score.

In Equation (12), $F^{\text{test}}_i$ and $F^{\text{std}}_i$ denote the features of the test and standard action sequences at frame $i$, respectively, and $q$ measures the discrepancy between the evaluated and standard actions. In Equation (13), $S_i$ is the score of frame $i$ along the backtracking path; when the condition in Equation (13) is met, the score of that frame is set to 0. In Equation (14), $n$ is the total number of points along the backtracking path.
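The aggregation in Equations (12)–(14) can be sketched as a per-frame discrepancy, a per-frame score, and an average along the path. The discrepancy is assumed here to be the Euclidean distance between aligned feature vectors, and the per-frame scoring rule is passed in as `frame_score` because Equation (13), including how t selects the penalty level, is not reproduced. `path_score` is a hypothetical name.

```python
import numpy as np


def path_score(std, test, path, frame_score):
    """Average frame-level score along a warping path.

    path: list of (i, j) pairs, i indexing the standard and j the test sequence.
    frame_score: callable mapping a discrepancy q to a frame score; a stand-in
    for Equation (13), whose t-dependent penalty levels are not reproduced.
    """
    x = np.asarray(std, dtype=float)
    y = np.asarray(test, dtype=float)
    x = x.reshape(len(x), -1)
    y = y.reshape(len(y), -1)
    q = [float(np.linalg.norm(y[j] - x[i])) for i, j in path]  # Equation (12), assumed form
    scores = [frame_score(qk) for qk in q]                     # Equation (13), placeholder
    return float(np.mean(scores))                              # Equation (14): mean over path
```

A hypothetical frame rule such as `lambda q: max(0.0, 1.0 - q / tau)` reproduces the qualitative behavior described in the text, with frames whose discrepancy reaches `tau` scoring 0, but `tau` and this linear form are illustrative only.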

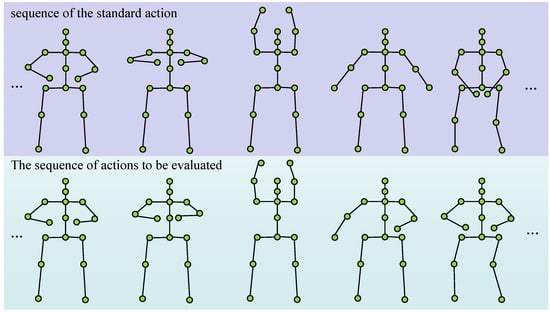

3.5. Unsupervised Labeled Qigong Datasets

The dataset comprises 5887 video clips, an average of about 268 for each of the 22 Qigong sub-actions (from Ba Duan Jin and Yi Jin Jing) shown in Figure 5. Raw videos were captured using standard mobile phone cameras (720p, 30 fps) in unconstrained, real-world environments (e.g., dormitories, outdoors, homes) under varied and uncontrolled lighting conditions. A diverse cohort of 2808 participants (1466 male, 1342 female; aged 18–23) with balanced experience levels introduces significant inter-subject heterogeneity, stemming from wide variations in height (150–185 cm), body proportions, and individual execution styles. This intentional diversity makes the dataset a challenging and realistic benchmark for AQA. Ten physical education experts rated each assessable action sample based on student performance, providing a reference for evaluating method performance.

Figure 5.

Examples of traditional Chinese Qigong actions. After the skeletal keypoints are extracted with MMPose, the motion characteristics of Qigong actions can be clearly represented.

4. Experiment

4.1. Implementation Details

All experiments are conducted on a system equipped with an 11th-generation Intel (Ontario, California, USA) Core(TM) i7-11700K @ 3.60 GHz processor and dual NVIDIA (Santa Clara, California, USA) RTX 4090 GPUs (24 GB each), running Ubuntu 22.04. The primary programming language is Python (version 3.12), and the major libraries include PyTorch 1.9.1 and cuML.

This study adopts several commonly used metrics for AQA to evaluate the effectiveness of the proposed method. Most prior studies utilize the Spearman rank correlation coefficient to measure the association between true and predicted quality scores.

In Equation (15), $\rho$ denotes the Spearman rank correlation coefficient, $d_i$ is the difference between the ranks of the $i$-th pair of corresponding values from the two samples, and $n$ is the number of samples.
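For reference, the textbook closed form of Spearman's coefficient, $\rho = 1 - 6\sum_i d_i^2 / (n(n^2 - 1))$, can be computed as below. The closed form is exact only when there are no tied values; with ties (common when experts award identical scores), `scipy.stats.spearmanr`, which ranks with average ranks, is the safer choice. `spearman_rho` is a hypothetical name.

```python
import numpy as np


def spearman_rho(y_true, y_pred):
    """Spearman's rho via 1 - 6 * sum(d_i^2) / (n (n^2 - 1)); assumes no ties."""
    r_true = np.argsort(np.argsort(np.asarray(y_true)))  # 0-based ranks
    r_pred = np.argsort(np.argsort(np.asarray(y_pred)))
    d = (r_true - r_pred).astype(float)                  # rank differences d_i
    n = len(d)
    return float(1.0 - 6.0 * np.sum(d ** 2) / (n * (n ** 2 - 1)))
```

A perfectly monotone prediction gives 1.0 and a perfectly reversed one gives -1.0, regardless of the score scale.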

Furthermore, to compare the prediction performance of different algorithms, this study employs Mean Absolute Error (MAE), Relative Mean Absolute Error (RMAE), and Mean Squared Error (MSE) as supplementary assessment metrics. The formulas for these metrics are provided in Equations (16)–(18). These metrics enable direct comparison with previous research results.

Here, $y_i$ represents the ground-truth score for the $i$-th sample, $\hat{y}_i$ denotes the predicted score, and $n$ is the total number of samples.
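MAE and MSE follow their standard definitions and can be sketched as below. RMAE is omitted because its normalization is defined by Equation (17), which is not reproduced here. The function names are hypothetical.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean Absolute Error: mean of |y_i - y_hat_i|."""
    e = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(e)))


def mse(y_true, y_pred):
    """Mean Squared Error: mean of (y_i - y_hat_i)^2."""
    e = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(e ** 2))
```

Because MSE squares each error, a few badly scored samples raise it much more than they raise MAE, which is why reporting both is informative.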

To establish a gold standard, 500 samples were evaluated by 10 human experts of comparable expertise. Their ratings served as the ground truth labels for our comparative experiments.

4.2. Algorithm Complexity Analysis

The computational efficiency of the QAQA algorithm stems from its hierarchical, coarse-to-fine processing of time series during recursion. This efficiency is governed by two key constraints. First, the anomaly-derived parameter t dynamically adjusts the vertical and horizontal path penalties within the cost matrix update. Second, the coarse-grained alignment path is projected onto the fine-grained level, constraining the search space to a window whose size is dictated by the hyperparameter radius. The radius directly governs the trade-off between computational cost and precision: a smaller radius reduces computation by narrowing the search window but risks missing the optimal path, whereas a larger radius increases precision at a higher computational cost.

QAQA further improves efficiency by decomposing the problem into levels using a multi-resolution approach. At each level, QAQA does not compute the full cost matrix. Instead, it calculates only a narrow band of cells around the projected path, of width $O(r)$, where $r$ is the radius, a relatively small constant. Given that the sequence length at level $k$ is $n_k$, the time complexity at that level is $O(r \cdot n_k)$, which is effectively $O(n_k)$ because $r$ is constant.

Equation (19) illustrates the overall time complexity. Here, $k$ denotes the level index, where $k = 0, 1, \dots, L$. The decomposition resembles binary division, resulting in $L \approx \log_2(n/\text{threshold})$ levels in total. At each level, the sequence length is halved, $n_k = n/2^k \ge \text{threshold}$. Consequently, the time complexity at level $k$ can be expressed as $O(n/2^k)$. The resulting series $\sum_k 1/2^k$ is geometric and converges to a constant (specifically, less than 2). As a result, the total computational workload remains linear, and the overall time complexity is $O(n)$.
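Written out as a worked bound, under the assumptions that each of the $L$ refined levels evaluates at most $c\,r$ cells per frame for some constant $c$, and that the coarsest level, whose length is at most the threshold $s$, is solved with a full $s \times s$ matrix, the total cost is:

$$
T(n) \;\le\; c\,s^{2} + \sum_{k=0}^{L-1} c\,r\,\frac{n}{2^{k}}
\;<\; c\,s^{2} + c\,r\,n \sum_{k=0}^{\infty} \frac{1}{2^{k}}
\;=\; c\,s^{2} + 2\,c\,r\,n \;=\; O(n).
$$

Because $s$ and $r$ are constants, only the $2\,c\,r\,n$ term grows with $n$, which is why the radius affects the constant factor (and the measured run time) but not the linear order.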

Regarding space complexity, QAQA employs ACDTW [] for scoring. This approach requires three matrices of size to record the frequency of matching for each point in the time series. Therefore, the space complexity of the algorithm is .

4.3. Comparison Study

As shown in Table 2, both ACDTW and QAQA with the anomaly detection module achieve superior scoring performance, significantly outperforming their counterparts without the module. This shows the positive impact of the anomaly detection module in improving the accuracy of the scoring system.

Table 2.

Results of the comparative experiment. The best results are indicated in bold, and the second-best ones are underlined. The 95% confidence interval (CI) for Spearman’s rank correlation coefficient is reported to quantify the uncertainty of the estimate, and the p-values are derived from the paired Wilcoxon signed-rank test. ✗: without anomaly detection; ✓: with anomaly detection; ↑: higher is better.

ACDTW [], as an unsupervised (indirect) scoring method, inherently provides robust scoring accuracy. Compared to PG-MI, the best-performing regression method, ACDTW with the anomaly detection module achieved a 19.27% improvement in Spearman rank correlation. Similarly, QAQA exhibited the greatest improvement among all methods, increasing Spearman rank correlation by approximately 19.53% compared to supervised methods.

These results suggest that traditional supervised learning methods are often tailored for specific scenarios and actions, and their built-in scoring modules struggle to adapt to the complex movements found in Qigong. In contrast, indirect scoring methods can more accurately capture the unique characteristics of Qigong actions, resulting in more realistic assessments. Moreover, indirect scoring methods demonstrate stronger generalization capability, as they do not require pre-training on labeled scoring data.

As presented in Table 3, we compared DBSCAN with other unsupervised anomaly detection approaches, including prototype-based clustering (K-Means) and ensemble-based isolation (Isolation Forest). DBSCAN-based anomaly detection demonstrated superior performance, achieving an improvement of up to 11.76% over the other methods. We attribute this effectiveness to two aspects: the consistency between the physical characteristics of acceleration data and DBSCAN’s density-based assumption, and DBSCAN’s ability to capture acceleration anomalies in Euclidean space without a priori knowledge of cluster shape.

Table 3.

The impact of different clustering algorithms in the anomaly detection module on the accuracy of AQA, among which the detection based on the DBSCAN algorithm demonstrates the best performance. The best results are indicated in bold, ↑: Higher is better, and ↓: lower is better.

4.4. Ablation Study

4.4.1. Parameter Ablation Analysis

In the parameter ablation experiments, we vary the radius parameter and compare accuracy and computation time to identify the optimal value for QAQA. The scores produced by ACDTW on the traditional Chinese Qigong dataset serve as the baseline for evaluating the differences among the unsupervised algorithms.

As shown in Table 4, the original DTW algorithm exhibits the largest error. For QAQA, a small radius (e.g., 2) yields a higher error, whereas increasing the radius to 10 achieves the smallest error. The Spearman rank correlation coefficient with a radius of 2 is 0.021 lower than with a radius of 10. Table 5 presents the average computation time for each algorithm and radius setting. On the same dataset, QAQA’s scores closely match those of ACDTW, while its computational efficiency is significantly higher than that of both DTW and ACDTW.

Table 4.

Ablation study of the radius parameter (accuracy). The best results are indicated in bold, and the second best ones are underlined. ↑: Higher is better, and ↓: lower is better.

Table 5.

Ablation study of the radius parameter (computation time). The best results are indicated in bold, and the second best ones are underlined. ↑: Higher is better, and ↓: lower is better.

In general, a larger radius in QAQA improves prediction accuracy at the cost of higher time complexity, whereas a smaller radius speeds up computation but may reduce precision. With a radius of 10, the predictions are closest to those of ACDTW, with a minimum MAE of 0.5595, which is the best prediction performance. At the same time, the average computation time is 2.96 s shorter than that of QAQA with a larger radius, a significant efficiency gain that does not compromise accuracy. With a radius of 2, the results deviate substantially from the baseline, but the computation time drops to just 0.15 s, making this setting suitable for large-scale evaluation scenarios. In practical applications, the radius can therefore be chosen to balance computational speed against precision.
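An accuracy and time sweep like the one behind Tables 4 and 5 can be organized as follows. This is a hypothetical harness, not the authors’ evaluation script. `scorer` stands in for any radius-dependent scorer such as QAQA, the `baseline` list plays the role of the ACDTW scores, and wall-clock time is measured with `time.perf_counter`. `relative_change` shows one way to compute percentage differences such as those reported in this section, assuming they are relative rather than absolute changes.

```python
import time
from statistics import mean


def mae(pred, ref):
    """Mean absolute error between two equally long, non-empty score lists."""
    if len(pred) != len(ref) or not pred:
        raise ValueError("score lists must be non-empty and of equal length")
    return mean(abs(p - r) for p, r in zip(pred, ref))


def relative_change(new, old):
    """Signed change of `new` with respect to `old`, in percent."""
    return (new - old) / old * 100.0


def sweep_radius(scorer, samples, baseline, radii):
    """For each radius, return (MAE vs. baseline, mean seconds per sample).

    `scorer(sample, radius)` is a hypothetical callable, e.g. a QAQA-style scorer.
    """
    results = {}
    for r in radii:
        scores, elapsed = [], []
        for sample in samples:
            start = time.perf_counter()
            scores.append(scorer(sample, r))
            elapsed.append(time.perf_counter() - start)
        results[r] = (mae(scores, baseline), mean(elapsed))
    return results
```

Across a list of candidate radii, the entry with the smallest MAE identifies the setting closest to the baseline, and the paired timings show what that accuracy costs.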

The radius parameter also limits the search range of the backtracking path. To further illustrate its impact, Figure 6 visualizes the backtracking paths under different radius settings. In the figure, dark areas represent cells that were neither computed nor updated. Light areas show the frame-by-frame action scores between the template and test sequences, with brighter regions indicating higher similarity.

Figure 6.

QAQA’s backtracking paths under different radius parameters. The radius constrains the region in which the path is searched, which in turn determines the final scoring outcome.

For instance, in Figure 6a, with a radius of 2, the search range is narrow. In contrast, in Figure 6d, a radius of 10 allows the backtracking path to explore regions with smaller action differences, achieving closer action alignment. Setting the radius too small may therefore reduce the final accuracy, since the optimal backtracking path may lie outside the restricted window. On the other hand, a narrower search range also shortens the computation time.
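The effect of the radius on the search window can be illustrated with a FastDTW-style multi-resolution DTW (Salvador and Chan, listed in the references). The coarsening, projection, and refinement steps of QAQA resemble this scheme. The following is a minimal sketch, not the authors’ QAQA code. It assumes scalar per-frame features, an absolute-difference cost, and a simple square widening of the projected path by `radius` cells. The function names are illustrative.

```python
import math


def dtw_window(x, y, window, dist):
    """DTW restricted to `window`, a set of 0-based (i, j) cells.

    Returns (accumulated cost, warping path as a list of (i, j))."""
    inf = math.inf
    acc = {(-1, -1): (0.0, None)}  # sentinel start cell: (cost, predecessor)
    for i, j in sorted(window):  # row-major order: predecessors come first
        best_cost, best_prev = min(
            (acc.get(p, (inf, None))[0], p)
            for p in ((i - 1, j - 1), (i - 1, j), (i, j - 1))
        )
        acc[(i, j)] = (best_cost + dist(x[i], y[j]), best_prev)
    cell, path = (len(x) - 1, len(y) - 1), []
    while cell != (-1, -1):  # backtrack from the end cell to the sentinel
        path.append(cell)
        cell = acc[cell][1]
    return acc[(len(x) - 1, len(y) - 1)][0], path[::-1]


def coarsen(x):
    """Halve the resolution by averaging adjacent frames (odd tail kept)."""
    return [(x[k] + x[k + 1]) / 2 if k + 1 < len(x) else x[k]
            for k in range(0, len(x), 2)]


def project_window(coarse_path, n, m, radius):
    """Map each coarse cell to its 2x2 fine block, widened by `radius` cells."""
    window = set()
    for i, j in coarse_path:
        for a in range(2 * i - radius, 2 * i + 2 + radius):
            for b in range(2 * j - radius, 2 * j + 2 + radius):
                if 0 <= a < n and 0 <= b < m:
                    window.add((a, b))
    return window


def fast_dtw(x, y, radius=1, dist=lambda a, b: abs(a - b)):
    """Multi-resolution DTW: coarsen, solve recursively, project, refine."""
    if len(x) <= radius + 2 or len(y) <= radius + 2:
        full = {(i, j) for i in range(len(x)) for j in range(len(y))}
        return dtw_window(x, y, full, dist)  # small enough: exact DTW
    _, coarse_path = fast_dtw(coarsen(x), coarsen(y), radius, dist)
    window = project_window(coarse_path, len(x), len(y), radius)
    return dtw_window(x, y, window, dist)
```

Each level fills only the cells in the projected window. For a fixed radius, the work therefore grows roughly linearly with sequence length, apart from the sort. A larger radius widens the window toward exact DTW and raises the cost, matching the trade-off seen in Tables 4 and 5. The constrained result can never be cheaper than exact DTW, because it searches a subset of the same paths.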

4.4.2. Parameter Sensitivity Analysis

To evaluate the robustness of the proposed anomaly-aware threshold coefficient t in Equation (5), we performed a sensitivity analysis on its binning configuration; the results are summarized in Table 6. The proposed setting achieved the best performance, with a Sp.Corr of 0.9749 and an MAE of 0.5595. In contrast, widening the bins led to a 0.87% decrease in Sp.Corr and a 9.3% increase in MAE because of the coarser penalty granularity. Conversely, narrowing the bins resulted in a 1.04% drop in Sp.Corr and an 18.8% increase in MAE by over-penalizing minor deviations. The continuous variant was the least effective, suggesting that discrete penalty levels are better suited to this task. This analysis indicates that our setting provides a robust balance between sensitivity and tolerance.

Table 6.

Results of the parameter sensitivity analysis. ↑: higher is better; ↓: lower is better.

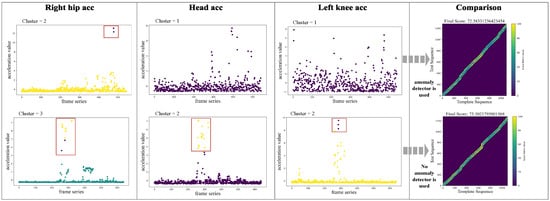

4.5. Case Study of Anomaly Detection Module

Abnormal joint data can degrade the accuracy of action assessment. Figure 7 illustrates this by visualizing clustered keypoint accelerations for a standard template and for a test sample that contains anomalies. Some joints, such as the left knee, exhibit stable acceleration patterns similar to the template. Others, such as the head, show marked distributional divergence and numerous outliers, indicating improper execution. Our anomaly detection module is designed to identify and penalize such deviations. As shown in the final column, activating this module (threshold parameter t = 0.15) adjusts the score from 75.3 to 72.3. This example shows that the mechanism yields more robust and realistic evaluations by systematically accounting for keypoint anomalies.

Figure 7.

Comparison of acceleration clustering for three keypoints between a standard template (top) and a test sample (bottom). Different colors correspond to different acceleration clusters. The results reveal execution anomalies, particularly in the head joint of the test sample, which exhibits a significant distributional shift compared to the template.

5. Discussion

In this paper, we proposed a fast and robust framework for AQA that addresses key limitations of existing methods for daily exercise analysis. By integrating a novel DBSCAN-based anomaly detection module with our efficient QAQA scoring algorithm, our approach handles noisy skeletal data effectively and requires no data annotation. The linear time complexity of QAQA is a significant improvement over traditional DTW-based techniques, whose standard form has quadratic complexity. This enables efficient analysis of long video sequences without compromising accuracy.

Our empirical evaluation supports the effectiveness of the proposed framework. On a newly established, large-scale dataset of traditional Chinese Qigong, our method consistently outperformed state-of-the-art approaches in both computational efficiency and assessment accuracy. The dataset itself is a valuable contribution to the community, providing a robust benchmark for future research in intelligent sports education.

Despite its strong performance, this work opens several avenues for future research. The anomaly detection module could be enhanced by incorporating spatio-temporal graph modeling to capture more complex error patterns. Furthermore, a hierarchical assessment framework could be developed to provide multi-level feedback tailored to actions of varying complexity. These future directions promise to further advance the practical application of AQA in real-world teaching environments and forge new pathways for the intelligent development of traditional sports.

Author Contributions

W.F. (Wen Fu): Experiments, Validation, Writing, Editing, Resources, Funding acquisition, and Conceptualization. W.F. (Wenze Fang): Conceptualization and Data curation. J.H.: Data Preprocessing, Algorithm Implementation, and Writing. K.Z.: Conceptualization, Methodology, and Review and Editing. C.F.: Supervision, Review and Editing, and corresponding author. R.C.: Methodology, Review, and Supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Education and Teaching Research Project for Undergraduate Institutions of Fujian Province (FBJG20220297) and the National Natural Science Foundation of China (62277010).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. All provided AQA data have been preprocessed and contain only 3D spatial joint coordinates describing the motion, without any identity annotations.

Data Availability Statement

The datasets used and/or analysed during the current study are available from the corresponding author upon reasonable request, for academic research purposes only. The dataset is also available via Google Drive: https://drive.google.com/drive/folders/1bPnoIrRZLBV8fPS1wo5XqhktYgJVjEy3?usp=sharing (accessed on 11 September 2025). Our code, released for academic research use only, is open-sourced at https://github.com/waHAHJIAHAO/QAQA-main (accessed on 11 September 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AQA | Action Quality Assessment |
| DTW | Dynamic Time Warping |

References

- Abbasi-Kesbi, R.; Fathi, M.; Najafi, M.; Nikfarjam, A. Assessment of human gait after total knee arthroplasty by dynamic time warping algorithm. Healthc. Technol. Lett. 2023, 10, 73–79.

- Chen, A.X.; Yang, B.W.; Lu, B.; Nie, D.G.; Jin, E.M.; Wang, F.X. Gesture scoring based on Gaussian distance-improved DTW. Elektron. Ir Elektrotechnika 2024, 30, 18–27.

- Fiatarone, M.A.; Marks, E.C.; Ryan, N.D.; Meredith, C.N.; Lipsitz, L.A.; Evans, W.J. High-intensity strength training in nonagenarians: Effects on skeletal muscle. JAMA 1990, 263, 3029–3034.

- Manini, T.; Marko, M.; VanArnam, T.; Cook, S.; Fernhall, B.; Burke, J.; Ploutz-Snyder, L. Efficacy of resistance and task-specific exercise in older adults who modify tasks of everyday life. J. Gerontol. Ser. A Biol. Sci. Med. Sci. 2007, 62, 616–623.

- King, L.K.; Birmingham, T.B.; Kean, C.O.; Jones, I.C.; Bryant, D.M.; Giffin, J.R. Resistance training for medial compartment knee osteoarthritis and malalignment. Med. Sci. Sport. Exerc. 2008, 40, 1376–1384.

- Parmar, P.; Morris, B. Action quality assessment across multiple actions. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 1468–1476.

- Zhang, B.; Chen, J.; Xu, Y.; Zhang, H.; Yang, X.; Geng, X. Auto-encoding score distribution regression for action quality assessment. Neural Comput. Appl. 2024, 36, 929–942.

- Nekoui, M.; Cruz, F.O.T.; Cheng, L. Falcons: Fast learner-grader for contorted poses in sports. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020.

- Zhou, K.; Ma, Y.; Shum, H.P.; Liang, X. Hierarchical graph convolutional networks for action quality assessment. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 7749–7763.

- Zeng, L.A.; Zheng, W.S. Multimodal action quality assessment. IEEE Trans. Image Process. 2024, 33, 1600–1613.

- Keogh, E.; Palpanas, T.; Zordan, V.B.; Gunopulos, D.; Cardle, M. Indexing large human-motion databases. In Proceedings of the Thirtieth International Conference on Very Large Data Bases, Toronto, ON, Canada, 31 August–3 September 2004; Volume 30, pp. 780–791.

- Krüger, B.; Tautges, J.; Weber, A.; Zinke, A. Fast local and global similarity searches in large motion capture databases. In Proceedings of the Symposium on Computer Animation, Madrid, Spain, 2–4 July 2010; pp. 1–10.

- Ying, X. Research on Pose Matching Based on Openpose and Its Application in Physical Education; Nanjing Normal University: Nanjing, China, 2021.

- Yan, M.; Liu, X.; Li, Z.; Guo, N. Evaluation of Human Action Based on Feature-Weighted Dynamic Time Warping. Appl. Sci. 2024, 14, 11130.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703.

- Chen, Y.; Wang, Z.; Peng, Y.; Zhang, Z.; Yu, G.; Sun, J. Cascaded pyramid network for multi-person pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7103–7112.

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2d pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

- Jiang, T.; Lu, P.; Zhang, L.; Ma, N.; Han, R.; Lyu, C.; Li, Y.; Chen, K. Rtmpose: Real-time multi-person pose estimation based on mmpose. arXiv 2023, arXiv:2303.07399.

- MMPose. MMPose Documentation. 2024. Available online: https://mmpose.readthedocs.io/en/latest/ (accessed on 14 November 2025).

- Zhang, D.; Hou, Z. Research on Key Technologies of Active Training Mode in Rehabilitation Robots. Ph.D. Thesis, Institute of Automation, Chinese Academy of Sciences, Beijing, China, 2018.

- Chen, X. A Action Assessment System Base on Human 3D Pose. Ph.D. Thesis, Zhejiang University, Hangzhou, China, 2018.

- Parmar, P.; Morris, B.T. What and how well you performed? a multitask learning approach to action quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 304–313.

- Zia, A.; Sharma, Y.; Bettadapura, V.; Sarin, E.L.; Clements, M.A.; Essa, I. Automated Assessment of Surgical Skills Using Frequency Analysis. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W., Frangi, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2015.

- Mirzadeh, S.I.; Farajtabar, M.; Li, A.; Levine, N.; Matsukawa, A.; Ghasemzadeh, H. Improved knowledge distillation via teacher assistant. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 5191–5198.

- Zhao, H.; Sun, X.; Dong, J.; Dong, Z.; Li, Q. Knowledge distillation via instance-level sequence learning. Knowl.-Based Syst. 2021, 233, 107519.

- Yim, J.; Joo, D.; Bae, J.; Kim, J. A gift from knowledge distillation: Fast optimization, network minimization and transfer learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4133–4141.

- Ding, G.; Yao, A. Leveraging Action Affinity and Continuity for Semi-supervised Temporal Action Segmentation. arXiv 2022, arXiv:2207.08653.

- Jain, H.; Harit, G.; Sharma, A. Action Quality Assessment using Siamese Network-Based Deep Metric Learning. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 2260–2273.

- Kukleva, A.; Kuehne, H.; Sener, F.; Gall, J. Unsupervised learning of action classes with continuous temporal embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12066–12074.

- Lerch, D.J.; Zhong, Z.; Martin, M.; Voit, M.; Beyerer, J. Unsupervised 3D skeleton-based action recognition using cross-attention with conditioned generation capabilities. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 1–6 January 2024.

- Sakoe, H.; Chiba, S. Dynamic programming algorithm optimization for spoken word recognition. IEEE Trans. Acoust. Speech Signal Process. 1978, 26, 43–49.

- Giorgino, T. Computing and visualizing dynamic time warping alignments in R: The dtw package. J. Stat. Softw. 2009, 31, 1–24.

- Judge, J.O.; Underwood, M.; Gennosa, T. Exercise to improve gait velocity in older persons. Arch. Phys. Med. Rehabil. 1993, 74, 400–406.

- Baptista, R.; Antunes, M.; Aouada, D.; Ottersten, B. Video-Based Feedback for Assisting Physical Activity. In Proceedings of the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Porto, Portugal, 27 February–1 March 2017.

- Salvador, S.; Chan, P. Toward accurate dynamic time warping in linear time and space. Intell. Data Anal. 2007, 11, 561–580.

- Al-Naymat, G.; Chawla, S.; Taheri, J. Sparsedtw: A novel approach to speed up dynamic time warping. arXiv 2012, arXiv:1201.2969.

- Keogh, E.J.; Pazzani, M.J. Derivative dynamic time warping. In Proceedings of the 2001 SIAM International Conference on Data Mining, Chicago, IL, USA, 5–7 April 2001; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2001.

- Li, H.; Liu, J.; Yang, Z.; Liu, R.W.; Wu, K.; Wan, Y. Adaptively constrained dynamic time warping for time series classification and clustering. Inf. Sci. 2020, 534, 97–116.

- Pagnon, D. Design and Evaluation of a Biomechanically Consistent Method for Markerless Kinematic Analysis of Sports Motion. Ph.D. Thesis, Université Grenoble Alpes, Saint-Martin-d’Hères, France, 2023.

- Chen, Z.; Huang, W.; Liu, H.; Wang, Z.; Wen, Y.; Wang, S. ST-TGR: Spatio-temporal representation learning for skeleton-based teaching gesture recognition. Sensors 2024, 24, 2589.

- Zhao, T. Research on miners’ human posture detection algorithm based on MMPose. Acad. J. Comput. Inf. Sci. 2023, 6, 57–62.

- Lyu, C.; Zhang, W.; Huang, H.; Zhou, Y.; Wang, Y.; Liu, Y.; Zhang, S.; Chen, K. Rtmdet: An empirical study of designing real-time object detectors. arXiv 2022, arXiv:2212.07784.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Pavllo, D.; Feichtenhofer, C.; Grangier, D.; Auli, M. 3d human pose estimation in video with temporal convolutions and semi-supervised training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7753–7762.

- Deng, D. DBSCAN Clustering Algorithm Based on Density. In Proceedings of the 2020 7th International Forum on Electrical Engineering and Automation (IFEEA), Hefei, China, 25–27 September 2020; pp. 949–953.

- Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Tang, Y.; Ni, Z.; Zhou, J.; Zhang, D.; Lu, J.; Wu, Y.; Zhou, J. Uncertainty-aware score distribution learning for action quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9839–9848.

- Yu, X.; Rao, Y.; Zhao, W.; Lu, J.; Zhou, J. Group-aware contrastive regression for action quality assessment. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 7919–7928.

- Bai, Y.; Zhou, D.; Zhang, S.; Wang, J.; Ding, E.; Guan, Y.; Long, Y.; Wang, J. Action quality assessment with temporal parsing transformer. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 422–438.

- Liu, J.; Wang, H.; Zhou, W.; Stawarz, K.; Corcoran, P.; Chen, Y.; Liu, H. Adaptive Spatiotemporal Graph Transformer Network for Action Quality Assessment. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 6628–6639.

- Zhang, S.; Bai, S.; Chen, G.; Chen, L.; Lu, J.; Wang, J.; Tang, Y. Narrative Action Evaluation with Prompt-Guided Multimodal Interaction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 18430–18439.

- Hartigan, J.A.; Wong, M.A. Algorithm AS 136: A k-means clustering algorithm. J. R. Stat. Soc. Ser. C (Appl. Stat.) 1979, 28, 100–108.

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation forest. In Proceedings of the 2008 Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 413–422.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).