Abstract

Currently, recognition and assessment of human posture have become significant topics of interest, particularly through the use of visual sensor systems. These approaches can effectively address the drawbacks associated with traditional manual assessments, which include fatigue, variations in experience, and inconsistent judgment criteria. However, systems based on visual sensors encounter substantial implementation challenges when a large number of such sensors are used. To address these issues, we propose a human posture recognition and assessment system architecture, which comprises four distinct subsystems. Specifically, these subsystems include a Visual Sensor Subsystem (VSS), a Posture Assessment Subsystem (PAS), a Control-Display Subsystem, and a Storage Management Subsystem. Through the cooperation of subsystems, the architecture has achieved support for parallel data processing. Furthermore, the proposed architecture has been implemented by building an experimental testbed, which effectively verifies the rationality and feasibility of this architecture. In the experiments, the proposed architecture was evaluated by using pull-up and push-up exercises. The results demonstrate that the proposed architecture achieves an overall accuracy exceeding 96%, while exhibiting excellent real-time performance and scalability in different assessment scenarios.

1. Introduction

Regular physical exercise and maintaining fitness are paramount for preventing degenerative and cardiovascular diseases, providing substantial health advantages over a sedentary lifestyle [1]. This necessity has elevated exercises and physical fitness training to topics of widespread concern across various sectors, driven by the continuous improvement of residents’ living standards and the enhancement of national health awareness. For enterprises and institutions, the physical health of employees significantly impacts work efficiency and team collaboration [2]. In education institutions, as reforms in physical education curricula advance in primary and secondary schools, assessments of exercises and physical fitness have increasingly become important criteria for evaluating students’ overall quality [3]. This trend extends to higher education, where the assessment of fitness in colleges has garnered growing attentions from the Ministry of Education. As noted in the Guiding Outline for Teaching Reform of “Physical Education and Health” (Tentative) [4], the assessment of physical fitness for college students has become a crucial aspect of physical education. However, traditional assessment methods, which rely on manual observation, suffer from significant drawbacks such as subjectivity, rater fatigue, inconsistencies, and a lack of data traceability. To overcome these limitations, modern approaches have shifted towards sensor-based systems. In particular, the advent of deep learning and computer vision has provided powerful models for objective, automated posture assessment, offering significant advantages in accuracy, traceability, and efficiency over manual methods.

Recently, vision-based embedded sensor systems have become an increasingly popular solution for exercise assessment. These systems often draw from the broad field of Human Activity Recognition (HAR), which aims to automatically identify and classify human actions from sensor data. This field has diverse applications, including clinical analyses such as pathological gait recognition [5,6]. A fundamental component within this domain is Human Posture Recognition and Assessment (HPRA), a field that focuses on identifying spatial locations of a person’s joints and limbs to understand their body configuration and movement. In the context of exercise analytics, HPRA facilitates the real-time quantitative monitoring of body movements, offering an objective basis for performance evaluation [7]. However, systems with visual sensors face critical challenges regarding the efficiency of posture recognition and assessment, particularly when a large number of visual sensors are involved. In this case, their computational complexity often exceeds the capabilities of many low-spec computing platforms, such as the Phytium 2000+ [8]. Furthermore, the absence of a generalized algorithmic modeling framework results in significant performance limitations for visual sensor systems when assessing multiple exercise modalities, including but not limited to pull-ups, push-ups and abdominal crunches. Therefore, addressing these issues is a crucial step toward improving the intelligence and enabling the large-scale application of human posture recognition and assessment systems, thereby promoting their use in a wider range of scenarios to meet diverse assessment needs. It is important to note that such systems are not intended to replace the invaluable expertise of professionals like physiotherapists and kinesiologists. Rather, our vision-based system is explicitly positioned as a decisional support tool. It is designed to augment clinical and professional fitness evaluations by integrating expertise-derived assessment criteria with objective, quantitative, and traceable sensor data, thereby providing a powerful adjunct to overcome the subjectivity and inconsistency of purely manual methods.

To address these issues, this paper proposes a comprehensive and efficient approach for human posture recognition and assessment, along with an implementable architecture for a visual sensor system. Our results effectively tackle the challenges of low efficiency, poor accuracy, and limited scalability that are commonly associated with traditional assessment methods. The main contributions of this paper are summarized as follows:

- We present the design and practical implementation of an integrated four-subsystem architecture on a resource-constrained platform. We validate its effectiveness in concurrently processing data streams from multiple visual sensors, demonstrating its potential for managing the data loads characteristic of larger-scale scenarios and delivering assessment results in real-time.

- We demonstrate the successful application of a finite state machine (FSM) model to create a generalizable posture assessment framework. This framework is proven to be effective across different exercise modalities (pull-ups, push-ups, etc.) within our system, enhancing the fairness and transparency of the assessment process.

- We provide a comprehensive end-to-end validation of the entire system through a physical experimental testbed. The results confirm that our integrated solution achieves high accuracy over 96% and robust real-time performance, verifying its feasibility and practical value for deployment in real-world assessment scenarios.

2. Related Work

Human posture detection and action recognition technology have been widely applied in sports training, rehabilitation engineering, and intelligent healthcare. In sports training scenarios, accurate real-time recognition and counting of training actions are crucial for ensuring training quality and avoiding sports injuries. However, existing exercise assessment methods still face significant challenges.

The foundational method for exercise assessment is direct observation by a trained professional, such as a coach or clinician. In order to achieve exercise evaluation, manual methods often rely on human observers, which leads to inconsistency, lack of data traceability, and low efficiency in large-scale deployment [9,10]. To overcome these limitations, researchers first turned to sensor-based systems. Wearable devices [11,12,13], such as Inertial Measurement Units (IMUs) containing accelerometers and gyroscopes, can provide highly accurate data on limb orientation, velocity, and acceleration. While effective, early iterations of such systems sometimes required placing multiple sensors on the body, which could be intrusive. Furthermore, they occasionally suffered from issues like sensor drift and complex calibration procedures. It is important to note that modern advancements, such as e-textiles, have significantly mitigated the issue of intrusiveness, and newer IMUs often feature simplified, less time-demanding calibration processes [5,6]. Nonetheless, vision-based approaches offer a compelling non-contact alternative with the advent of deep learning.

State-of-the-art models for human pose estimation, such as OpenPose, HRNet, AlphaPose [14,15], and BlazePose [16,17,18,19,20,21], leverage deep neural networks to directly predict the 2D or 3D coordinates of key skeletal joints from an image or video frame [22,23,24]. Against this background, there is an urgent need to develop an action recognition and counting system suitable for sports training scenarios, which should integrate the advantages of computer vision to address the problems of poor real-time performance, ambiguous action standards, and insufficient robustness in existing technologies. Meanwhile, the system must not only enable simple, fast, and efficient deployment, but also judge the standardization of movements in accordance with sports physiology standards. Additionally, it should provide real-time feedback and accurate counting for users, thereby improving training effectiveness. In these models, although OpenPose, HRNet, and AlphaPose perform well in terms of recognition accuracy, they have limitations such as slow recognition speed and high requirements for hardware performance. In contrast, BlazePose is a model framework that combines fast recognition speed and high accuracy, with its core advantage being low hardware requirements. Our proposed human posture recognition and assessment system requires processing concurrent video streams from multiple cameras, and it is deployed on a Phytium 2000+ processor. These two factors, concurrent video processing demands and the hardware’s performance specifications, create constraints such that OpenPose, HRNet, and AlphaPose cannot be deployed on this platform, and only BlazePose can be effectively deployed.

Therefore, we finally choose BlazePose as the posture recognition model of the proposed system. It is worth noting that our research paradigm—focusing on system integration, deployment, and experimental validation on resource-constrained embedded or edge devices—is a widespread practice in the field of human pose analysis. For instance, the works by Huang et al. [25] focuse on implementing a lightweight OpenPose on edge devices like the Jetson TX2; the researches by Qian [26] and the work by Leone et al. [27] respectively demonstrate the complete engineering implementation of posture recognition on embedded systems using inertial sensors and machine learning. The core contribution of these studies is not the proposal of novel recognition algorithms, but rather the validation of a solution’s actual performance and operability in specific application scenarios (e.g., edge computing, wearable devices) through system design and experimentation. This study follows the same research philosophy, aiming to provide a practical and valuable system paradigm for the large-scale application of visual sensors on Low-Computing- Power platforms.

3. Experimental System Architecture

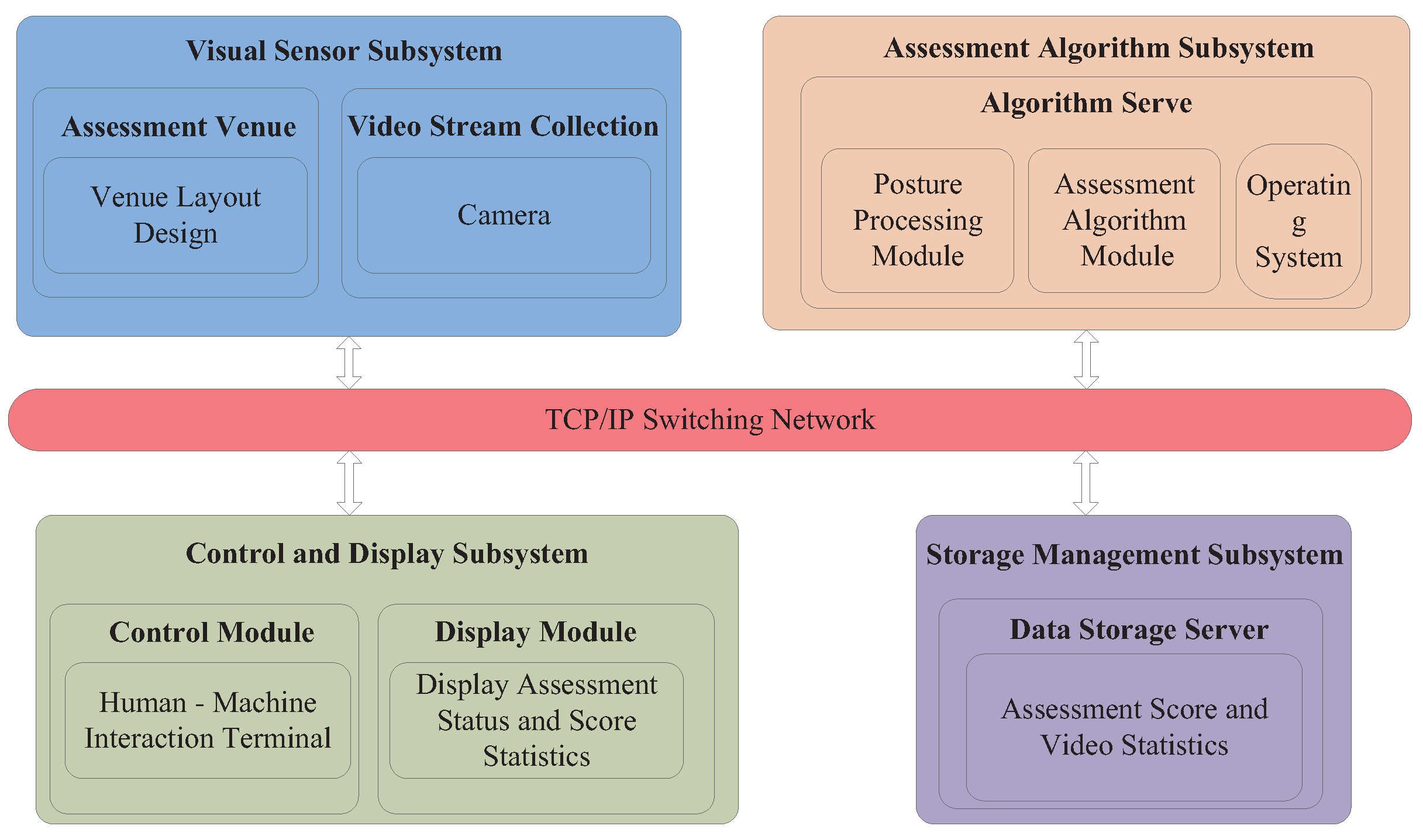

This section presents the architecture design of our testbed for the human posture recognition and assessment system. As depicted in Figure 1, the system architecture consists of four main subsystems. Each subsystem is responsible for distinct tasks and collaborates using the TCP/IP network protocol to facilitate data exchange. The four-subsystem architecture is deployed on the Phytium 2000+ processor platform. Tailored for Phytium 2000+, this design overcomes prior real-time pose estimation challenges on the hardware and enables simultaneous real-time assessment of multiple exercise modalities. Moreover, a state machine-based assessment algorithm is integrated into the assessment algorithm subsystem. Compared to traditional architectures, integrating state machines into the architecture to ensure accurate counting and scalability to other exercise modalities is a novel application.

Figure 1.

Framework diagram of human posture recognition and assessment system.

3.1. Visual Sensor Subsystem

3.1.1. Function Design

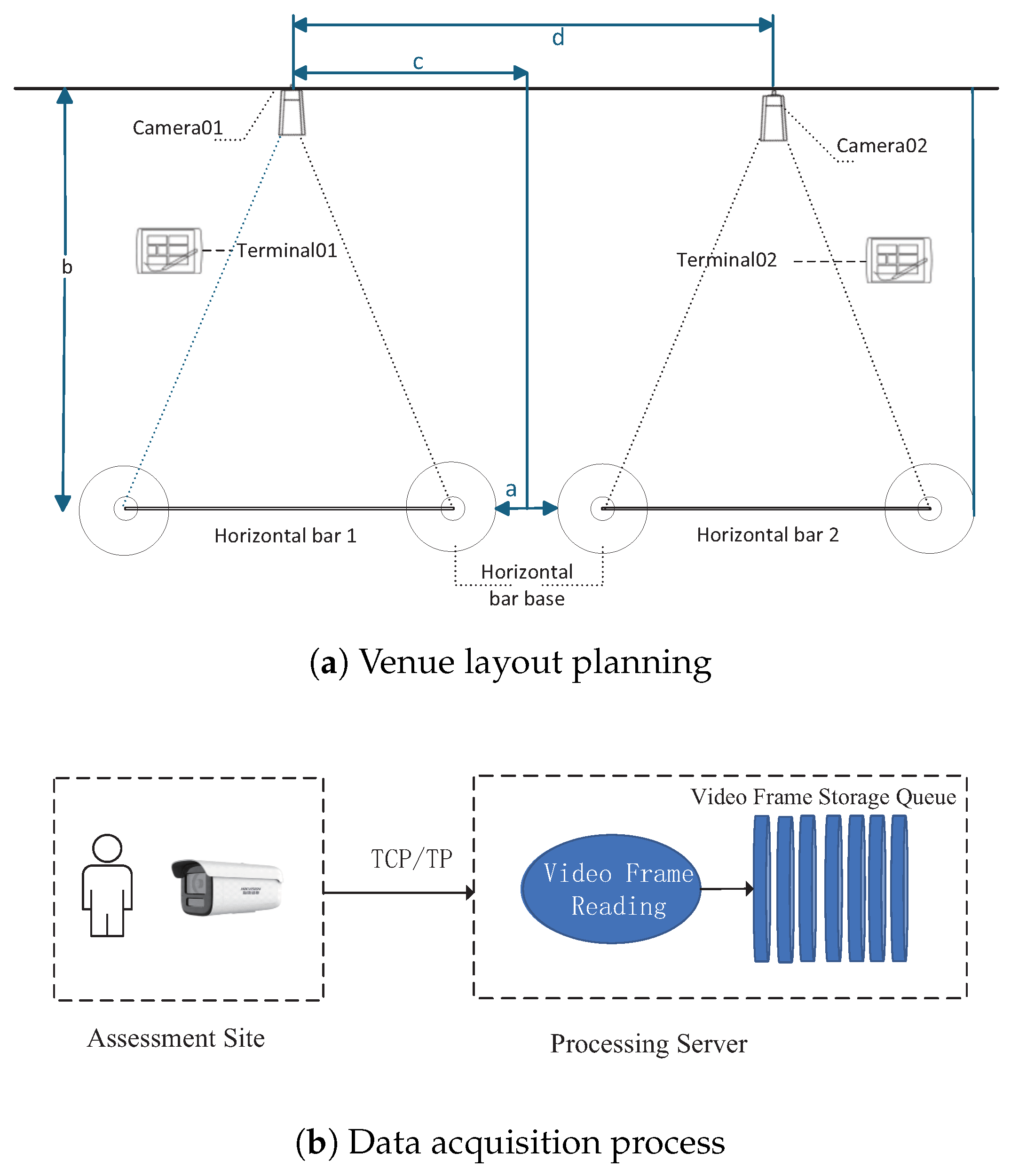

This subsystem includes venue layout planning and video stream acquisition, as illustrated in Figure 2a. Its primary objective is to ensure the real-time collection of video data through strategic venue arrangement, camera selection, and positioning. This framework is crucial for subsequent posture estimation and assessment. The data collection process entails the integration of software, hardware, and network coordination. Cameras capture video streams utilizing the Real-Time Streaming Protocol (RTSP), while OpenCV’s cv2.VideoCapture class facilitates the real-time reading of video frames from RTSP streams [28,29]. Each captured frame is stored in a video frame queue, enabling subsequent subsystems to access the raw data in a sequential manner. The effective selection of protocols, optimized queue management, and seamless hardware-software integration improve the capabilities of the subsystem regarding real-time performance, stability, and processing capacity. In video stream acquisition, since the assessment site and processing server are physically separated, data transmission between the camera and the server must traverse various networks. This scenario imposes the following core requirements on the camera:

Figure 2.

Venue layout and data acquisition process (Taking pull-ups as an example).

Real-time acquisition: The camera must support low-latency video transmission to ensure that the system can capture and transmit images in real-time for posture recognition and scoring. Network transmission latency should be restricted to the millisecond range to guarantee prompt data delivery.

Image quality: High-resolution and clear video footage serve as the foundation for accurate posture recognition. The camera should support multiple resolution options to accommodate various assessment needs. By appropriately adjusting the resolution, the system can maintain image quality while optimizing network bandwidth, reducing storage requirements, and enhancing operational robustness.

Video compression: The adoption of efficient video compression technologies, such as H.264 and H.265, can significantly reduce the size of video data, enhance algorithm efficiency, and decrease network bandwidth consumption, as well as the amount of storage space used.

3.1.2. Hardware Implementation

The selection of this specific camera over alternatives such as standard USB cameras constitutes a critical design decision, as it directly ensures the system’s scalability potential. A key rationale for this choice lies in the fact that network-based cameras are indispensable to a distributed architecture—a fundamental prerequisite for large-scale deployment across extended areas. Our system leverages a TCP/IP switching network to coordinate multiple devices even over potentially long distances, and this infrastructure aligns seamlessly with the capabilities of network cameras. Unlike USB cameras, which are limited by physical cable lengths and restricted to single-device connections, the network cameras in our design—equipped with an RJ45 port—can be effortlessly integrated into the distributed network. This integration not only enables the flexible deployment of numerous cameras to cover large or multiple assessment sites but also guarantees the stable transmission of video streams, thereby reinforcing the system’s ability to scale effectively [30]. The key technical specifications of the chosen camera are detailed in Table 1. The entire data collection process, illustrated in Figure 2b, involves collaborative coordination among software, hardware, and networking components. Each time a video frame is successfully obtained, it is stored in a video frame storage queue, enabling subsequent processing subsystems to read the raw data in sequence.

Table 1.

Camera Technical Specifications [30].

3.2. Assessment Algorithm Subsystem

3.2.1. Function Design

This subsystem extracts human keypoint information from raw video data and executes the corresponding posture assessment algorithms to generate scores. The assessment algorithm subsystem includes a posture processing module and an assessment algorithm module.

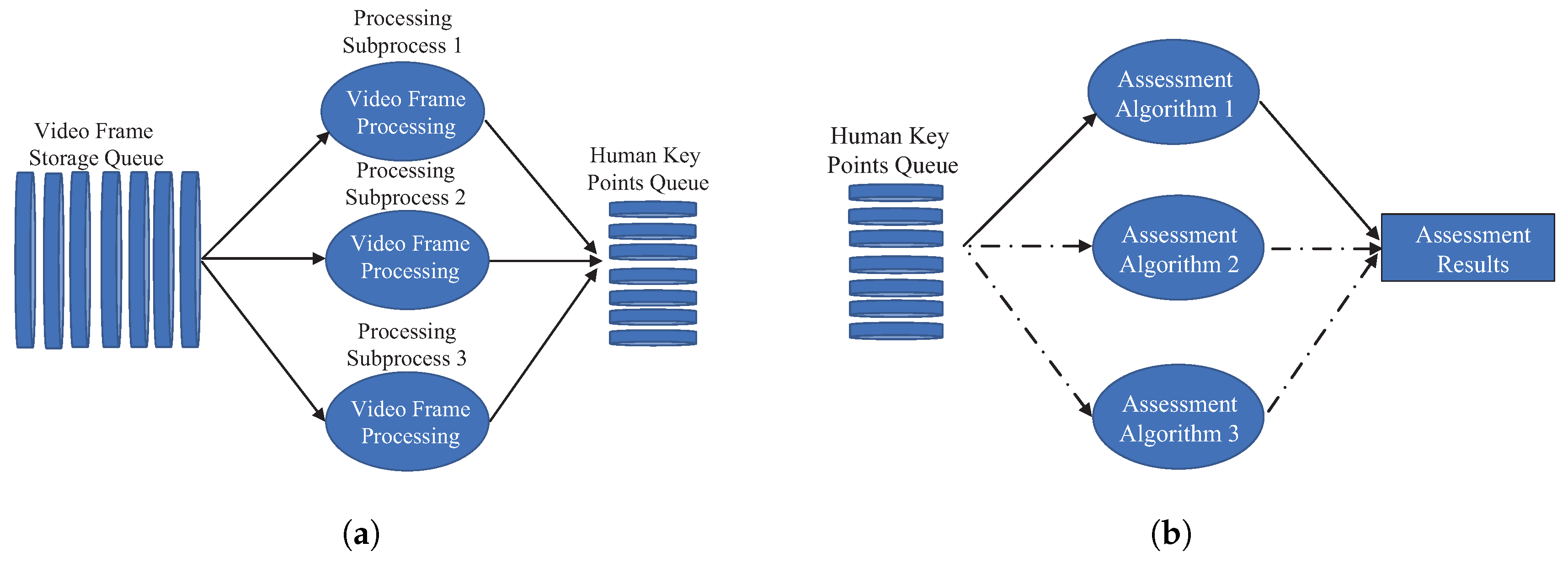

The posture processing module maintains two queues: one for storing raw video frames and another for processed keypoint coordinates. Both queues are thread-safe to prevent data contention or loss. Additionally, the system establishes appropriate queue sizes and regularly clears processed frames to optimize memory usage. As illustrated in Figure 3a, the posture processing module is responsible for acquiring video frames from the video frame storage queue, which is downstream of the visual sensor subsystem. It then utilizes relevant human posture detection algorithms to extract human keypoints from the video frames and transmits the core data of the keypoints to the subsequent human keypoint queue for the assessment algorithms to analyze, count and calculate the assessment scores. As depicted in Figure 3b, the assessment algorithm module obtains data from the human keypoint queue, which is downstream of the posture processing module. It automatically selects the corresponding assessment algorithm for processing based on the chosen assessment event, ultimately generating the assessment results.

Figure 3.

System processing subsystem. (a) Posture processing module. (b) Assessment algorithm module.

3.2.2. Hardware Implementation

In this system, posture processing and assessment are implemented on a server running the Kylin operating system [31], with the specifications detailed in Table 2. Kylin is a Linux kernel-based operating system designed for the Chinese market, offering high security and stability to support enterprise-level application operations. The system also requires a Python 3.8.0 environment and dependency libraries for algorithm execution, such as TensorFlow and OpenCV. A key design trade-off during hardware-software integration was the selection of the human posture recognition model, balancing accuracy and hardware compatibility. We evaluated four mainstream models: OpenPose, HRNet, AlphaPose, and BlazePose. OpenPose and HRNet deliver high accuracy but require GPU acceleration (e.g., NVIDIA Tesla V100) to process 1080P video at 15 FPS, which exceeds the computational capacity of the Phytium 2000+ processor. AlphaPose reduces GPU dependency but still requires 8+ CPU cores per video stream, limiting the system to 8 concurrent streams—insufficient for multi-station assessments. BlazePose, despite a slightly lower accuracy, achieves 25 FPS for 1080P video on the Phytium 2000+ processor and supports 16 concurrent streams. Experimental validation (Section 5.2 and Section 5.3) shows BlazePose meets the system’s accuracy requirements, making it the optimal choice for balancing performance, compatibility, and scalability.

Table 2.

List of Identification Processing Server Configurations [31].

3.3. Control and Display Subsystem

3.3.1. Function Design

The control and display subsystem includes a control module and a display module. As the primary interface for user interaction, the control module is responsible for delivering user commands and ensuring timely responses from all subsystems to start the assessment process. The display module presents real-time algorithm execution and results, thereby enhancing transparency of the assessment process.

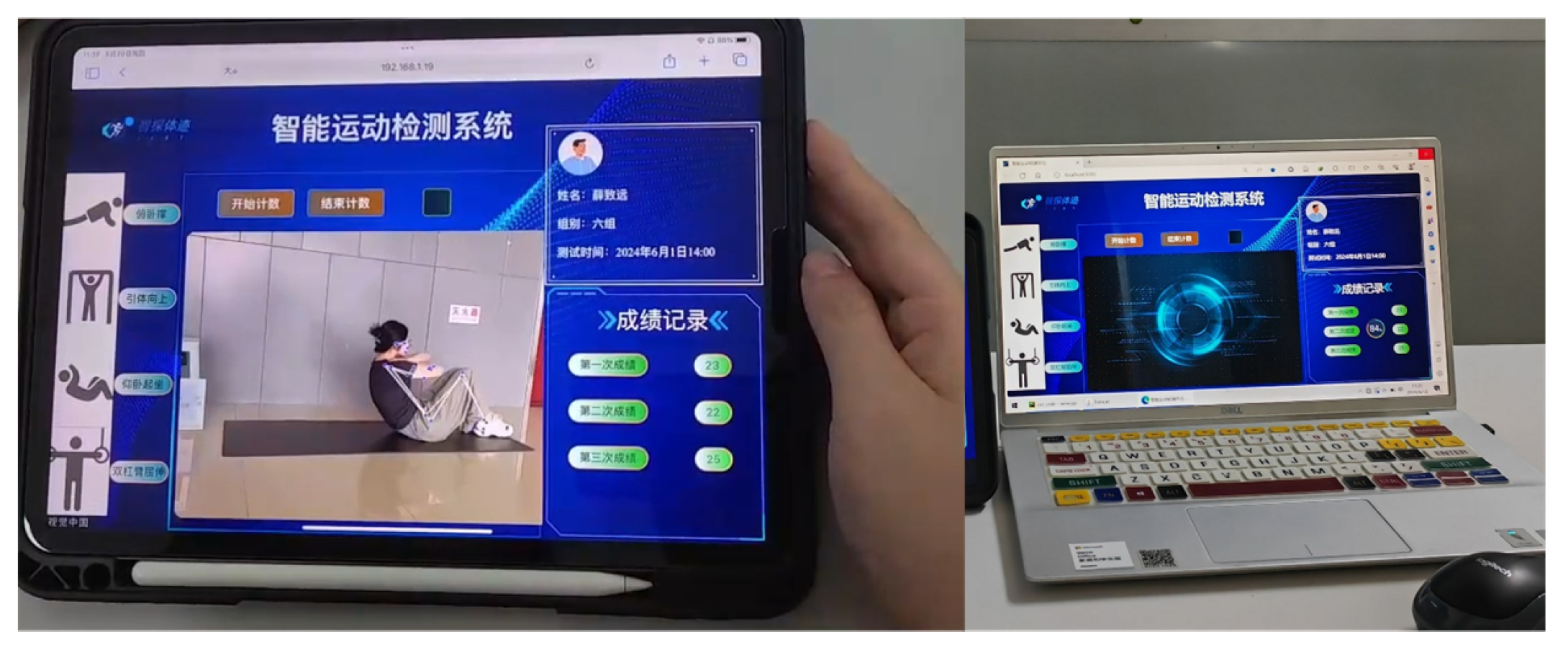

3.3.2. Software Implementation

In this subsystem, we utilize Vue.js for the development of a control module and the user interface (UI) of the display module. Vue.js is a progressive frontend framework that simplifies UI development, enhances development flexibility, enables rapid response to user interactions, and thereby improves the overall UI development experience. The interaction between frontend and backend is achieved through Axios, a promise based HTTP client. This enables cross-domain access and resource requests to obtain real-time data and update the page. The user interaction component employs directives provided by Vue.js (such as v-bind and v-for) to bind data and update the page, ensuring a dynamic response to user commands and changes occurring on the page. The UI of display module, which runs on the tablet along with the tablet terminal, is illustrated in Figure 4.

Figure 4.

The UI of display module.

3.4. Storage Management Subsystem

3.4.1. Function Design

This subsystem is responsible for storing and managing all assessment data, including posture videos, scores, and related information. The data storage servers ensure secure storage and efficient retrieval of this data. In the storage management subsystem, video archives and assessment scores are primarily organized and stored in a database, which serves as the foundation for data analysis. After acquiring the assessment video stream, it is time-stamped according to the timestamps of the start and end frames. Subsequently, the video is archived in the folder designated for each test-taker, allowing for future querying and management.

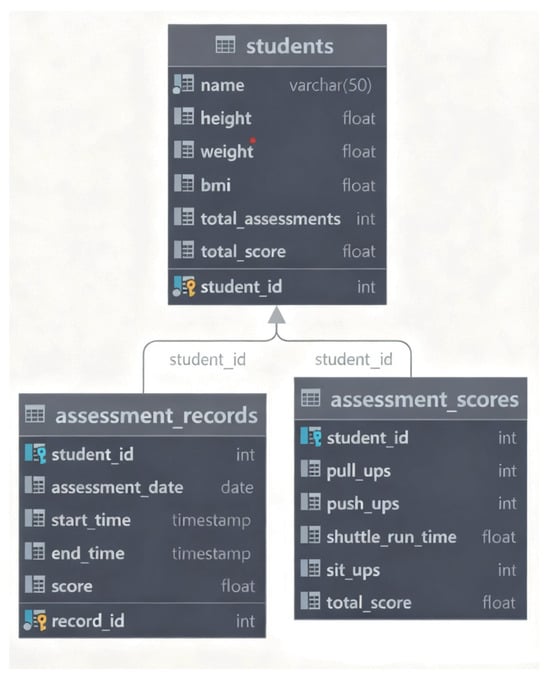

3.4.2. Software Implementation

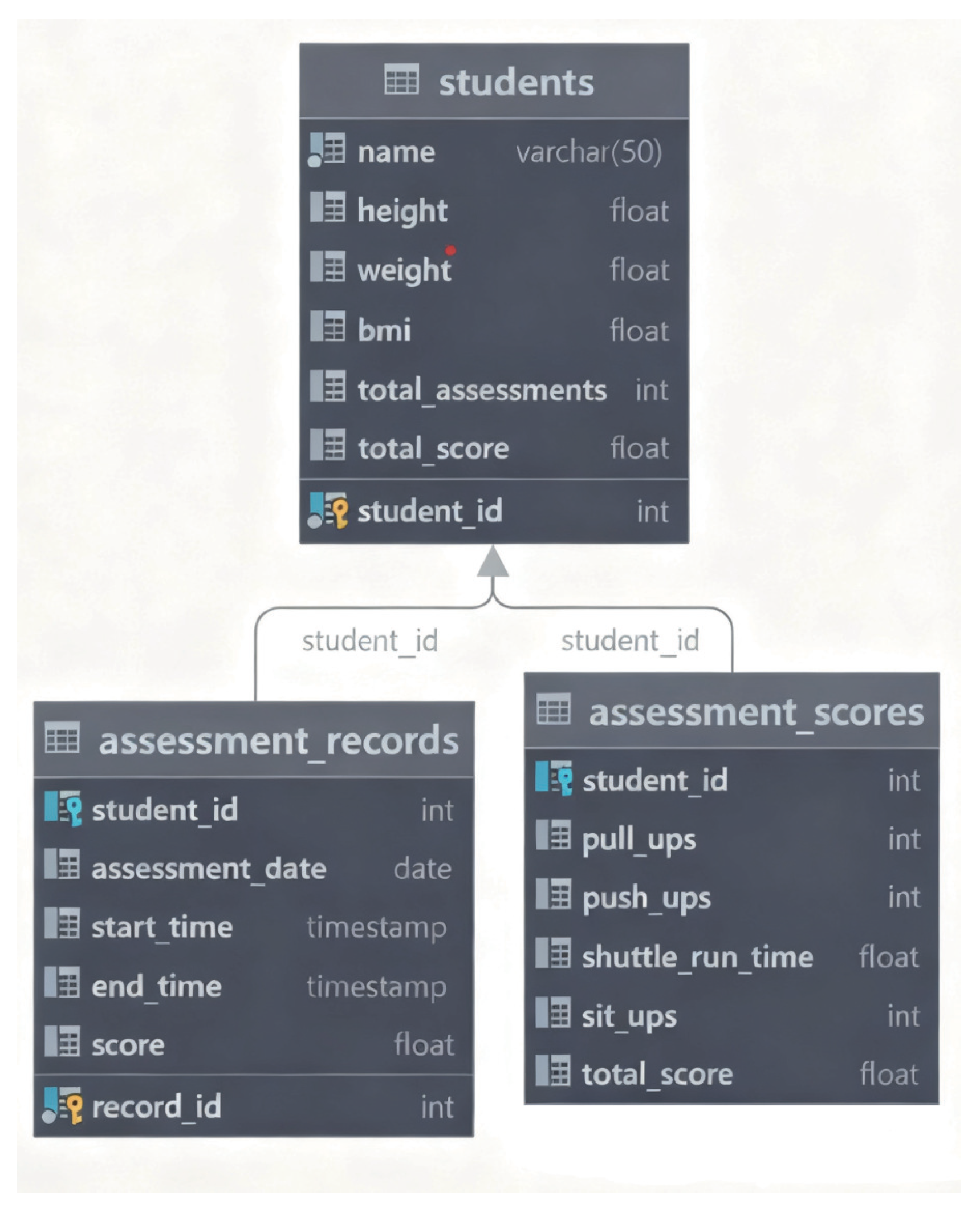

The system utilizes the widely-used MySQL database for efficient management. Within this database, a primary schema named DATABASE has been established, and several tables have been created within it. These tables include a student table (In the table, “students” refers to the test-takers undergoing posture assessment.), an assessment records table and an assessment scores table. The key relationships among these tables are illustrated in Figure 5.

Figure 5.

Table structures and their relationships in databases.

The students’ table serves as a primary data store for the essential information of each student. This information includes the student’s name, height, weight, BMI (Body Mass Index), total number of assessments, total score, and more. This table provides a comprehensive profile for each student, enabling the system to monitor their physical condition and assessment history. This, in turn, facilitates the subsequent analysis and management of their health status and changes in physical fitness.

The assessment records table captures essential information for each assessment, including the assessment date, start and end times, as well as the score for each assessment. By designing this table, the system can accurately record the timing and results of each assessment activity, enabling precise tracking of the specific details of each assessment in subsequent analyses.

The assessment scores table primarily records the specific scores for each evaluation, including scores for various exercise modalities such as pull-ups and push-ups. Each score is associated with an individual student and a corresponding assessment record, thereby ensuring high accuracy and integrity of the assessment data. This table not only captures each student’s performance across different modalities but also serves as foundational data for the comprehensive scoring and progress tracking of the system.

Through the close association of these three tables, the database can effectively and comprehensively manage students’ personal information, assessment records and score data. The scores from each assessment are linked to the students’ basic information and historical records, facilitating detailed analysis and queries by the users. It is important to note that both the assessment_records and assessment_scores tables are linked to the students table through the student_id key. This direct relationship allows for comprehensive queries on a per-student basis and makes an additional associative key between the records and scores tables unnecessary.

4. Algorithms of Human Posture Recognition and Assessment

The algorithmic core of our system extends beyond static keypoint detection to include comprehensive posture modeling and assessment. Posture reconstruction employs BlazePose model to generate anatomical landmarks [32,33,34], which are then refined using temporal smoothing. From the reconstructed posture sequences, we extract joint angles and segment distances within a standardized 2D image coordinate system, allowing for precise geometry-based assessment. Posture-specific assessment logic is implemented as a FSM, where each state corresponds to a recognized phase of posture (e.g., high-pull, low-support). Transitions between states are triggered when posture metrics meet predefined thresholds. This approach ensures that only complete and accurate motion cycles contribute to the final score.

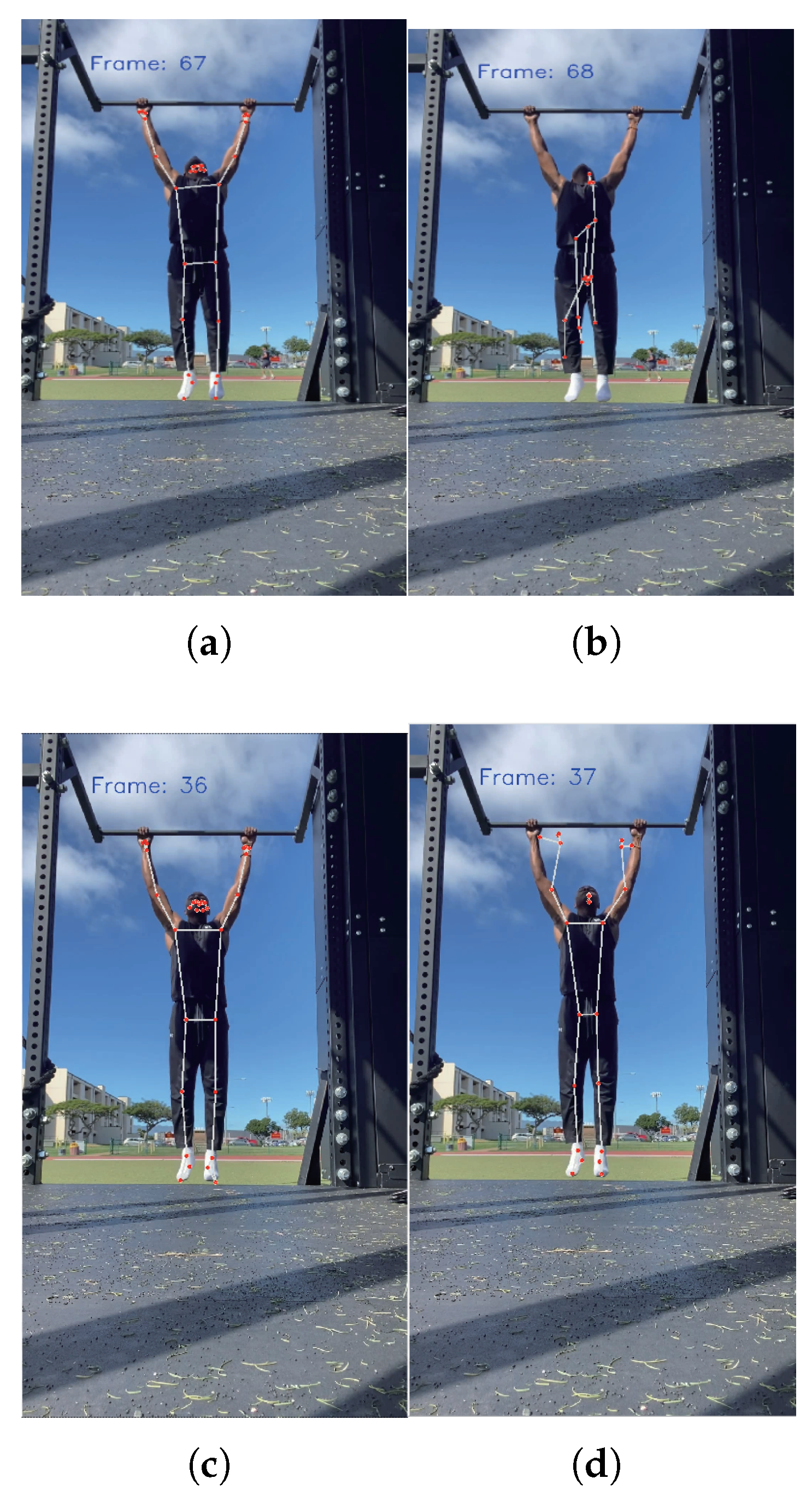

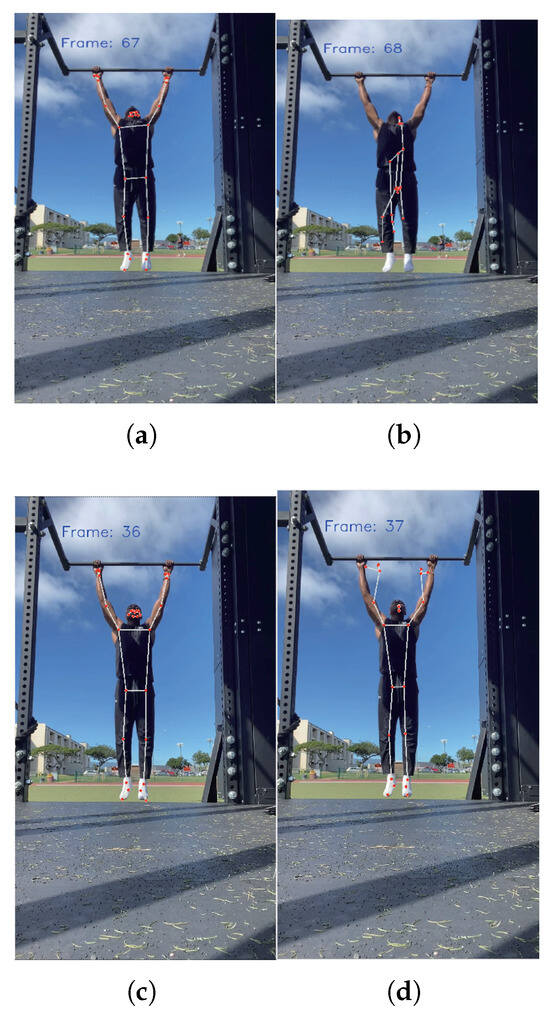

4.1. Smoothing and Filtering

Figure 6 demonstrates the continuous frame outputs of human keypoints at a video frame rate of 30 FPS. As shown in Figure 6a, the keypoint detection in the 67th frame accurately reflects the current human posture. However, in Figure 6b, although the human body does not undergo significant postural changes in the 68th frame, erroneous keypoint detection occurs. Some points show excessive deviations from their actual joint positions, and “flying points” appear in the image. Similarly, the keypoint detection in the 36th frame shown in Figure 6c is accurate; however, in the 37th frame shown in Figure 6d, the detected position of the hand exhibits minor jitter.

Figure 6.

Coordinate errors in recognition. (a) Frame-67 posture is correctly recognized. (b) Frame-68: Pose Recognition Flying Point. (c) Frame-36 posture is correctly recognized. (d) Frame-37 posture recognition jitter.

To optimize the “flying points” and coordinate jitter errors in human keypoints detection, we employ a frame-based inter-frame joint correction and smoothing algorithm. This algorithm effectively enhances the keypoints detection results by implementing inter-frame position correction and sliding window smoothing.

The pseudocode of the algorithm presented in Algorithm 1 briefly illustrates the execution process of the algorithm. Special attention should be given to the configuration of the correction threshold and the size of the smoothing window. For the correction threshold, the thresholds for x-axis and y-axis are set to 1/30th of the frame width and height, respectively. This determination is based on experimental test results. The size of the sliding window for the smoothing process is set to 10 frames.

| Algorithm 1 Frame-Sequence-Based Joint Correction and Smoothing Algorithm |

|

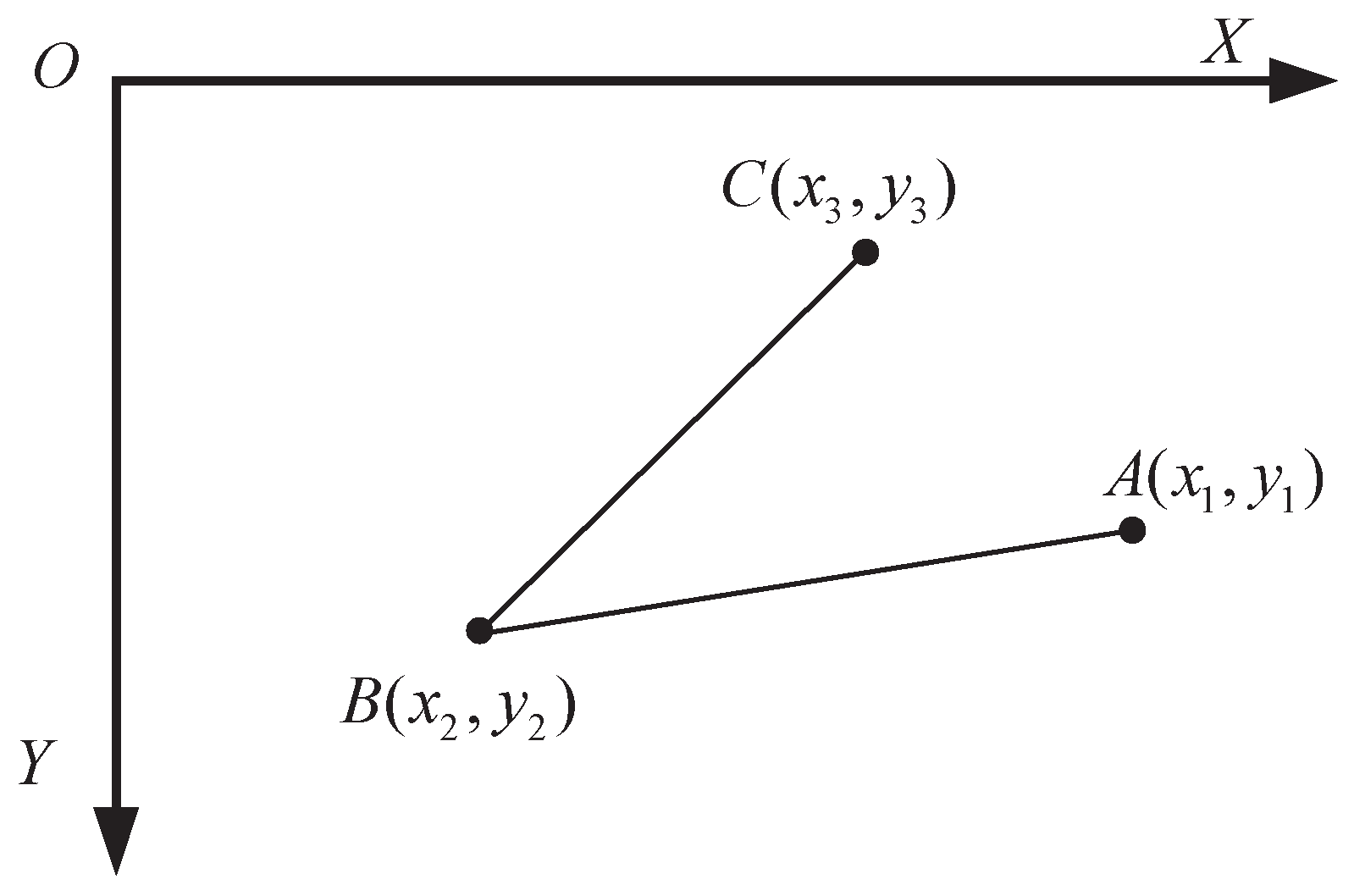

4.2. Body Angle Measurement

In the analysis of assessment algorithms, the angles between joints and the distances between keypoints are crucial for assessing human posture [35,36,37]. Therefore, prior to implementing the algorithm, it is necessary to standardize these angles and distances. It is important to note that the raw data consists of normalized scale factors that range from . To facilitate practical image processing and calculations, these factors are multiplied by the actual width and height of the image frame to obtain pixel coordinates. Based on these coordinates, a 2D image coordinate system (XOY) is established, as shown in Figure 7.

Figure 7.

2D screen image coordinate system XOY.

In the coordinate system (XOY), the origin O is located at the top-left corner of the image. The x-axis extends horizontally to the right, while the y-axis extends vertically downward. Given three human keypoints , and C, the angle between skeletal vectors and can be calculated through the following steps [38]

The cosine value of the joint bone radian is defined as follows:

Subsequently, the radian value is obtained using the arc-cosine formula, with the counterclockwise direction from the origin of the coordinate system XOY defined as positive. For improved intuitive understanding, this radian value is then converted into degrees. In the two-dimensional image plane, the distance d between keypoints, such as joint points A and B, can be expressed as:

Note that the distance d is measured in pixels on the 2D image frame.

4.3. Pull-Up Assessment Algorithm Design

4.3.1. Horizontal Bar Height Measurement

Since the system employs a purely vision-based solution without prior camera calibration, the height of the horizontal bar must be estimated during the execution of the algorithm. By analyzing the entire pull-up movement, we observe that after the test-taker completes the upward motion to grasp the bar, a distinct feature emerges: the height of the hands remains constant. Based on this observation, we attempt to design an algorithm to identify a stable hand height value. Variance measures data dispersion and reflects the extent of value fluctuations within a dataset. The formula for calculation is as follows:

where represents the dataset, is the mean of the dataset, n is the number of elements in the dataset, and denotes each data point within the dataset.

By calculating the variance, the algorithm can effectively identify regions where the heights remain highly stable and change minimally. During the pull-up process, when the hands are in a stationary or nearly static position, the height variation of the hands is minimal, resulting in a low variance value. Conversely, when the hands move up and down, the height variation increases, leading to a rise in variance. By observing these phenomena, the algorithm can accurately determine the height value when the hands are stable, which is then used as the height of the horizontal bar. This approach helps avoid errors in height estimation that could arise from unstable hand movements. The specific steps for the algorithm to estimate the height of the horizontal bar are as follows:

Calculate the variance within the sliding window: The sliding window method is employed, and the variance is calculated for each window. Given a window size , for the data in each window , calculate its variance:

where denotes the mean value of the data within the window, represents each data point, and signifies the size of the window.

Variance stability detection: Select windows that have relatively small variance values, specifically:

where is a threshold determined by the algorithm’s configuration, representing the acceptable range of variance.

Filter and select the maximum height value: Among all stable intervals, the algorithm selects the one with the highest average height value as the final horizontal bar height:

where represents the average height value of the i-th window, and n denotes the total number of window intervals that meet the variance stability condition.

Output result: Return the value of the height of the horizontal bar .

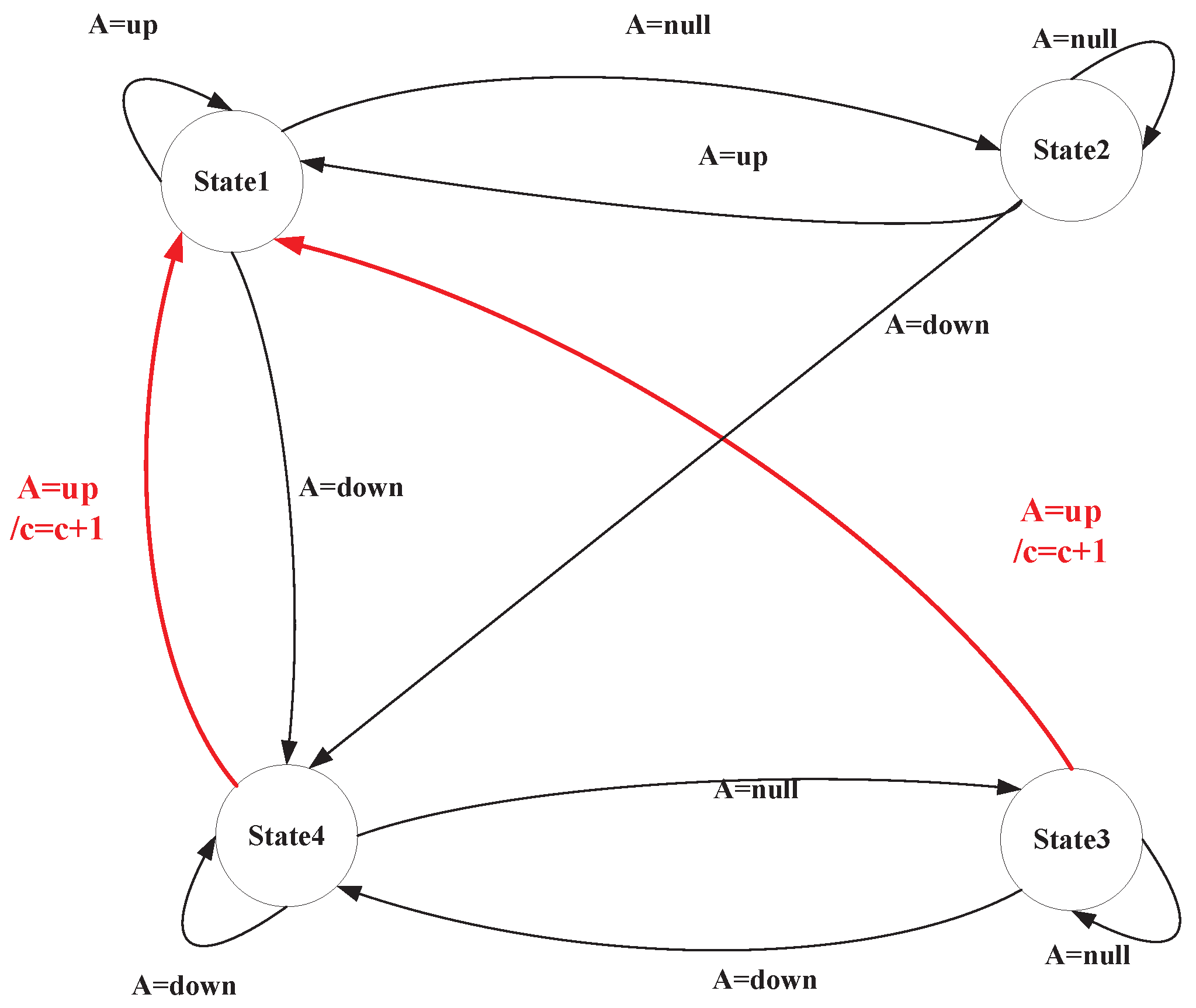

4.3.2. Exercise Posture Measurement Based on Finite State Machine

A FSM is a model that describes how a system switches between a finite number of states in accordance with inputs and based on fixed rules. In exercise posture assessment, the state machine can define the postures occurring at each stage of a movement. It evaluates whether the movement meets the standard state transition conditions by utilizing human keypoint data, thereby facilitating automated scoring. Based on the different movement states that may occur during the pull-up process of the human body, the states of the state machine model in this paper are defined as follows:

- State1:

- The state in which the human body is pulled to a high position during a pull-up.

- State2:

- The state in which the human body exits State1 during a pull-up.

- State3:

- The state in which the human body exits State4 during a pull-up.

- State4:

- The state in which the human body is in a low position during a pull-up.

The transition between each state on the state machine is closely related to the output of the state machine judgment function , which is presented in Algorithm 2.

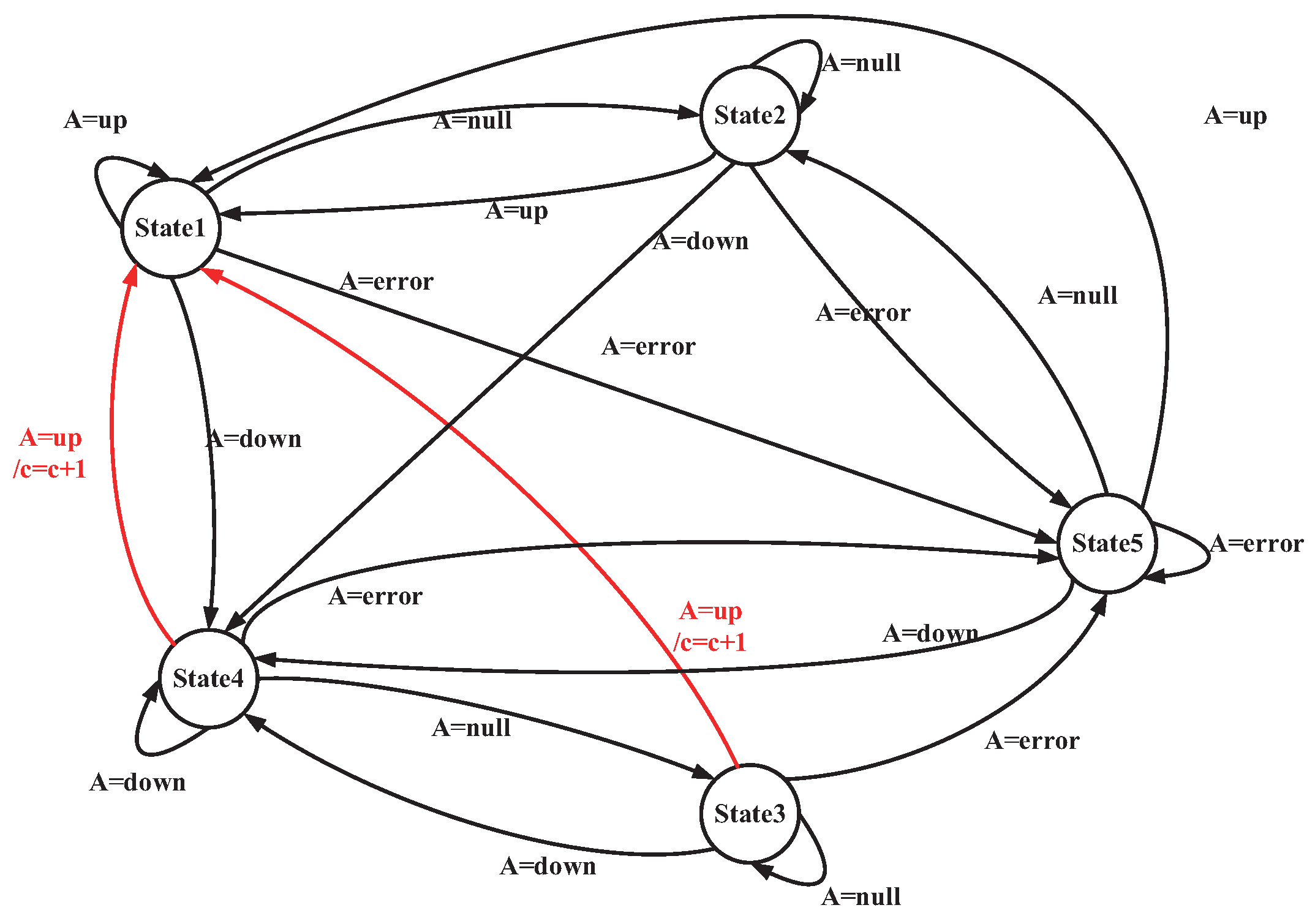

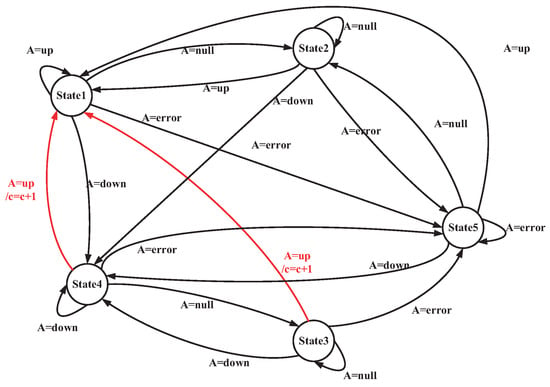

This paper presents a state transition table (Table 3) and a state transition diagram (Figure 8) for the pull-up assessment algorithm. The following sections provide a detailed examination of each state and its transition conditions. This will facilitate a deeper analysis of the behavioral patterns and assessment criteria associated with pull-up movements.

| Algorithm 2 Pull-up State Machine Judgment Function |

|

Table 3.

Pull-up Assessment Algorithm State Transition Table.

Figure 8.

Pull-up assessment algorithm state machine.

Transitions from State1: In State1, the system remains in this state only when the output of the judgment function is “up” indicating that the human body is in the high-pull position. When the output of function is “null”, it indicates that the human body exits the “up” state and transitions to State2. When the output of function is “Down ”, the FSM transitions to State4. This indicates that the human body is moving directly from the high position to the low position. This generally suggests that the test taker’s movement speed may be too fast, or that the sampling frequency of posture detection is inadequate.

Transitions from State2: State2 represents the state that slides out from State1. When the output of the judgment function is “null”, FSM system remains in State2. If the output of function is “up” the system re-enters State1 (the high position). This repetition is not counted because the human body does not transition through State4 (the low position). When the output of function is “down”, the FSM proceeds to State4, where the human body descends to the low position.

Transitions from State3: State3 represents the state that slides out from State4. When the output of the judgment function is “null”, the FSM remains in State3. If the output of function is “up”, the FSM transitions to State1 (the high-pull position) and records this as a standard pull-up posture that has been completed. When the output of function is “down”, the FSM re-enters State4 (the low position).

Transitions from State4: State4 indicates that the human body is in a low position during pull-ups. When the output of the judgment function is “down”, the FSM remains in State4. When the output of the function is “up”, the FSM transitions to State1 (the high position) and counts this as a completed standard pull-up posture. This may also indicate that the test taker’s movement speed is too fast or that the sampling frequency for posture detection is insufficient. When the output of the function is “null”, the FSM exits State4 and transitions to State3.

This state machine model clearly defines key states, such as “pull position”, “transition states” and “low position” along with their transition conditions. It can identify standard postures, where only the complete process from State4 to State1 is counted, and effectively handle abnormal situations (such as the direct transition from State1 to State4 due to fast movements). The strict conditional constraints in the state transition table ensure the rigor of the counting logic, thereby avoiding misjudgments and omissions. The intuitive presentation of the state transition diagram makes the behavioral patterns of the entire assessment process clearly distinguishable. This state machine-based design method meets the stringent requirements of exercise posture assessments, and also demonstrates good fault tolerance and real-time performance.

4.4. Push-Up Assessment Algorithm Design

To accurately describe the working principle of the state machine, we define the relevant terms involved in the push-up assessment algorithm to enhance understanding of its mechanism. As shown in Table 4, the term “angle” refers to the joint angle calculated in the 2D XOY coordinate system of images. The term “height” is defined as the relative distance between the maximum y-axis value in the image frame and the y-value of the key point positions. Lastly, “threshold” refers to an empirical threshold set according to assessment standards, or it may be a self-determined threshold based on each individual’s performance during exercise.

Table 4.

Definition of Key Terms for Push-up Assessment.

Based on the different movement states that the human body may experience during push-ups, we have defined the various states of the state machine as follows.

- State1:

- During push ups, the body supports a high position.

- State2:

- The state in which the human body exits from State1 during push ups.

- State3:

- The state in which the human body exits from State4 during push ups.

- State4:

- The body supports a low position during push ups.

- State5:

- The posture of the human body in a misaligned position during push ups.

The transition between various states in the state machine is closely related to the output of the state machine judgment function , the pseudocode of which is presented in Algorithm 3.

| Algorithm 3 Push-up State Machine Judgment Function |

|

To clearly illustrate the working principle of the push-up state machine, this paper provides a state transition diagram and a state transition table as shown in Table 5 and Figure 9. The principle of state machine transition for push-up is essentially the same as that for pull-up. To save space, it will not be repeated here.

Table 5.

Push-up Assessment Algorithm State Transition Table.

Figure 9.

Push-up assessment algorithm state machine.

4.5. Sit-Up Assessment Algorithm Design

Based on the different movement states that the human body may experience during sit-up, we have defined the various states of the state machine as follows.

- State1:

- When leaning up, the distance between the elbow and knee is less than the threshold value, i.e., --.

- State2:

- The state in which the human body exits from State1 during push ups.

- State3:

- The state in which the human body exits from State4 during push ups.

- State4:

- When lying down, the angle between the body and the horizontal line is less than the threshold value, i.e., --.

- State5:

- The posture of the human body in a misaligned position during sit-ups.

The transition between various states in the state machine is closely related to the output of the state machine judgment function , the pseudocode of which is presented in Algorithm 4.

| Algorithm 4 Sit-up State Machine Judgment Function |

|

The state transition diagram and a state transition table are same as Table 5 and Figure 9, respectively. The principle of state machine transition for sit-up is essentially the same as that for pull-up. To save space, it will not be repeated here. Moreover, it is evident that the state machine-based posture assessment method proposed in this paper exhibits strong generalizability and extensibility. The core idea lies in defining key motion states and transition rules to establish a standardized assessment framework. This framework does not rely on the specific features of movements, but rather focuses on the universal patterns of human motion. Whether it is upper limb-dominant movements such as push-ups and pull-ups, or full-body coordinated movements like squats and sit-ups, this framework can be used for exercise assessment. Consequently, this state machine-based approach not only fulfills the assessment requirements for specific exercises in the current study, but also provides a scalable technical pathway for future applications in diverse exercises (e.g., track and field, gymnastics). Its strong adaptability underscores its practical value for broader implementation.

5. Experiments and Tests

In this section, we present the experimental studies conducted by using the aforementioned testbed. It should be noted that all participants were provided with the same set of instructions prior to data acquisition. They were asked to perform each exercise to their maximum capacity while adhering to standard athletic form. For pull-ups, the standard was defined as starting from a full-hang position with arms extended and pulling up until the chin cleared the horizontal bar. For push-ups, the standard required maintaining a straight torso while lowering the body until the chest was near the floor, followed by a full extension of the arms. This protocol was intentionally designed to test the robustness of the assessment algorithm against the natural performance variations inherent in a diverse population.

5.1. Experimental Configuration

To debug and optimize the algorithm, the following outlines the workflow of human posture recognition and assessment task. The Human Pose Detection (Pose Landmarker [34]) task utilizes the create_from_options function to initialize the task, while the create_from_options function accepts the configuration option values that need to be processed. Table 6 presents the parameter configurations along with their descriptions.

Table 6.

Pose Landmarker Parameter Configuration Sheet.

The Pose Landmarker task exhibits distinct operational logic across its various execution modes. In IMAGE or VIDEO mode, the task blocks the current thread until it has fully processed the input image or frame. Conversely, in LIVE STREAM mode, the task invokes a result callback with the detection outcomes immediately after processing each frame. If the detection function is called while the task is occupied with other frames, it simply ignores the new input frame. In this system, although the live stream from the webcam inherently aligns with the LIVE STREAM mode, the VIDEO mode is deliberately chosen to ensure detection accuracy and fairness in assessment calculations. This decision benefits from the multi-process design of the assessment algorithm subsystems. Even when the posture processing speed lags behind the data collection speed, this design guarantees that all image frames are processed. Additionally, the parallel operation of multiple sub-modules within the assessment algorithm subsystem plays a crucial role in maintaining real-time performance.

Each time the Pose Landmarker task executes a detection, it returns a Pose LandmarkerResult object. This object contains the coordinates of each human body keypoint, providing essential data for subsequent analysis and assessment.

5.2. Pull-Up Case

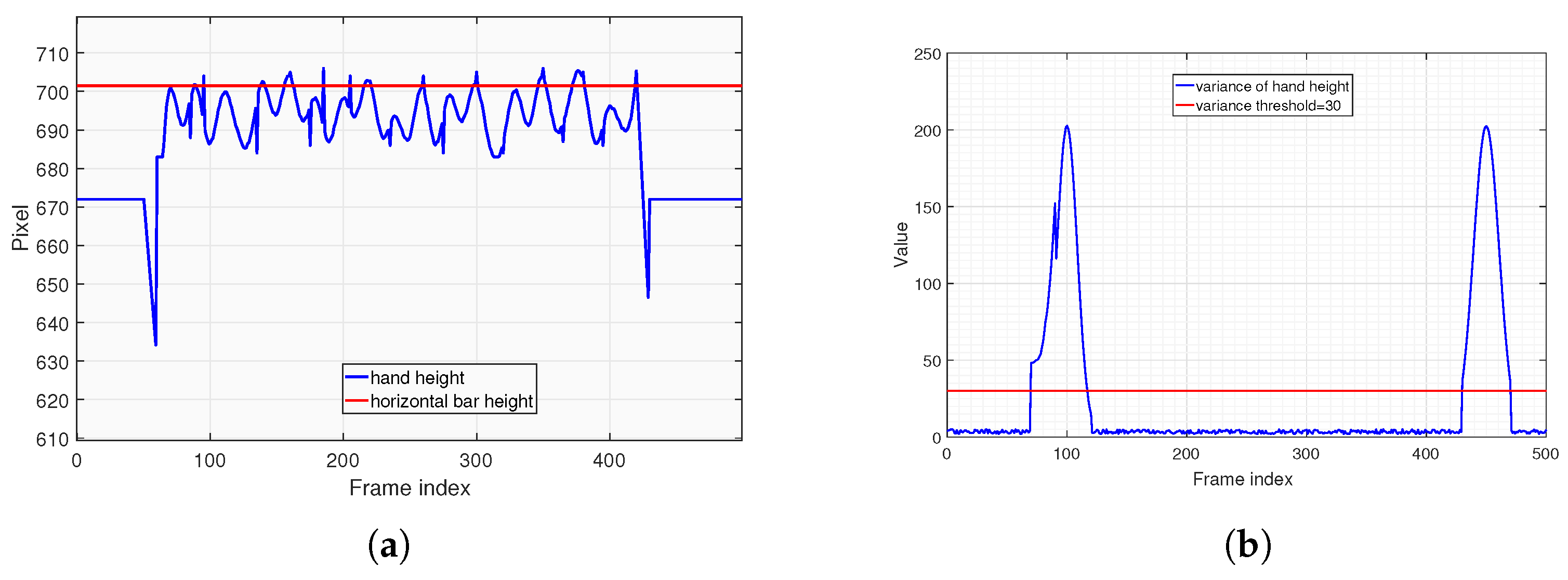

In Figure 10a, the blue line represents the changes of hand height across video frames during pull-ups. It can be observed that the hand height changes slightly and remains stable during the initial and final stages. This stability occurs because the tester’s hands do not vary significantly when standing either before starting or after finishing the pull-up assessment. However, during the pull-up process, there are slight jitters and variations about hand coordinates, primarily influenced by minor hand movements and errors of body keypoints recognition. Particularly during the initial stage of pull-ups, the hand height experiences significant changes. The blue line in Figure 10b illustrates the variance of hand height values within each sliding window. It is evident that the variance remains small in frame intervals where the hands do not move significantly. The red line denotes the acceptable variance threshold (30 pixels, an empirical value selected based on the resolution of the subject’s video stream). Within the sliding window intervals that meet this variance threshold, we select the average hand height value from Figure 10a and take its maximum value (as indicated by the red line) as the height of horizontal bar.

Figure 10.

Horizontal bar height measurement of pull-up. (a) Hand height across video frames during pull-ups. (b) Variance of hand height values within each sliding window.

Similarly, the algorithm employs the same deductive logic to determine the eye height threshold, which will be used by the state machine judgment function in Algorithm 2. Throughout the entire pull-up process, the elbow joint point displays similar data characteristics with the hand height in Figure 10. The algorithm extracts the stable height value after the tester mounts the bar during the assessment. This value acts as the threshold that the eyes should remain below when descending to the low position of pull-up, which is referred to as the eye height threshold. The algorithm test results are presented in Figure 11, and the deduction process will not be reiterated here.

Figure 11.

Eye height threshold measurement of pull-up. (a) Elbow height across video frames during pull-ups. (b) Variance of elbow height values within each sliding window.

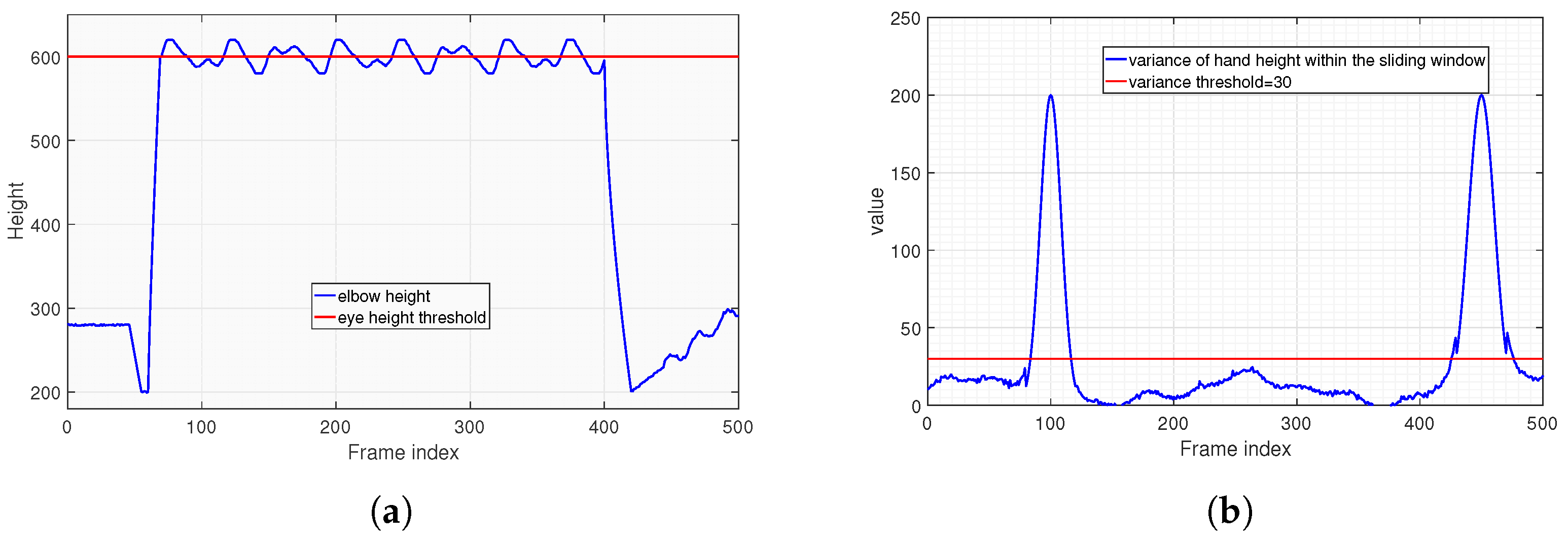

Figure 12 presents the results of applying the frame-sequence-based joint correction and smoothing algorithm. To clearly demonstrate the execution process of the algorithm, the data results after the first-step correction are shown in Figure 12a, while the final results after further smoothing following the correction are presented in Figure 12b. It can be observed from Figure 12a that the errors caused by outliers are effectively suppressed after the correction. In Figure 12b, after the step of smoothing, the jitter errors of the coordinates are also successfully eliminated. This demonstrates that the algorithm effectively optimizes the errors in the recognition process and restores the true motion trajectory, thus providing a solid foundation for the design of the subsequent assessment algorithm.

Figure 12.

Data results of applying the frame-sequence-based joint correction and smoothing algorithm. (a) Data results after the first-step correction. (b) Final data results after further smoothing.

In the preceding sections, the assessment criteria for pull-ups have been analyzed in detail, and a corresponding algorithm has been developed based on these criteria. To verify the actual effectiveness of the designed algorithm, we conduct the following experimental validations.

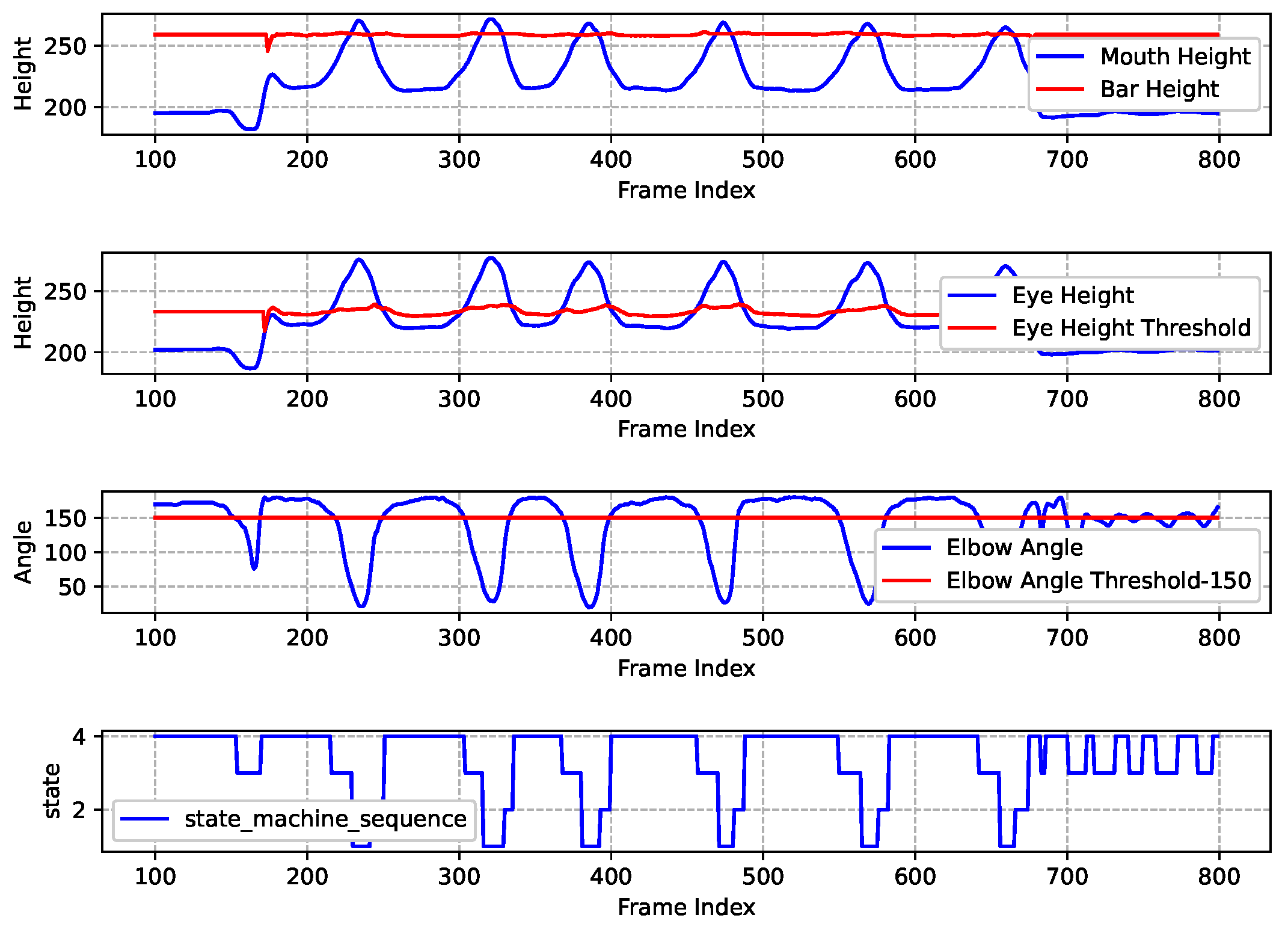

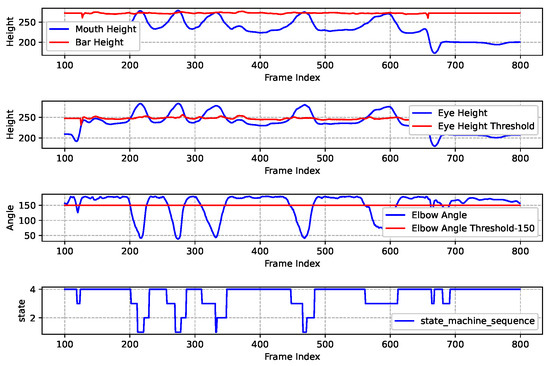

The data results graph from the standard pull-up experiment is shown in Figure 13. The figure is divided into four sub-graphs from top to bottom, illustrating the variation of each key data point. The analysis of the experimental data is as follows.

Figure 13.

Standard pull-up exercise recognition and assessment results.

The 1st Sub-graph: The blue line illustrates the height of the human mouth as the sequence of frames progresses, while the red line indicates the height of the horizontal bar as estimated by the horizontal bar height measurement algorithm. It is intuitively apparent that during a standard pull-up exercise, the curve representing the mouth’s height consistently intersects with the straight line denoting the height of the horizontal bar.

The 2nd Sub-graph: The blue line represents the variation of eye height throughout the frame sequence, while the red line indicates the estimated eye height threshold by Figure 11. Similarly, the curve depicting eye height consistently intersects with the straight line representing the eye height threshold.

The 3rd Sub-graph: The blue line illustrates the variation of the angle of human elbow as the frame sequence progresses, while the red line represents the elbow angle threshold, which has been set at 150 degrees (an empirical value). This setting encourages the test-taker to keep their elbows fully extended when lowering to the low position.

The 4th Sub-graph: The blue line illustrates how the state machine varies as the frame sequence changes, allowing for observation of the overall state of human exercise and the logical counting process. Based on the sequence of the state machine in the 4th sub-graph, this experiment concludes that the test-taker performed 6 standard pull-up movements. Consequently, there were 6 transitions from State4 to State3 to State1 in the sequence.

Figure 14 shows the results of a pull-up assessment process that encounters multiple errors. The data indicates that only a few movements meets the standard assessment criteria. The main issues identified include: the mouth height not exceeding the horizontal bar during the upward pull and the eyes not being below the designated threshold height. Consequently, the final assessment score is determined to be 4, which corresponds to 4 sequences of “State4-State3-State1” in the state machine.

Figure 14.

Nonstandard pull-up exercise recognition and assessment results.

Then, we organized various test-takers to test the pull-up assessment algorithm, and the results are shown in Table 7. To evaluate the recognition accuracy of the pull-up algorithm, a set of experimental trials was conducted involving multiple participants performing pull-ups. During these trials, the actual number of valid pull-up repetitions performed by each participant was meticulously recorded as ground truth by experienced human assessors. The recognition accuracy of the system for pull-ups was then calculated by comparing the number of repetitions correctly identified by our algorithm against this manually established ground truth. Specifically, the accuracy represents the ratio of correctly recognized repetitions to the total actual repetitions, expressed as a percentage, thus quantifying the ability of the system to precisely count valid movements. Specifically, the accuracy rate shown in the table is calculated as the ratio of correctly recognized repetitions by the algorithm to the total actual repetitions established by human assessors, expressed as a percentage. Overall, the assessment algorithm demonstrates a relatively high accuracy rate among test-takers with high physical fitness levels. However, the recognition accuracy rate decreases slightly among ordinary college students and teachers. The overall accuracy rate of the algorithm is 96.6%, which indicates that the algorithm can effectively assess pull-ups in most cases.

Table 7.

Pull-up Assessment Algorithm Accuracy Statistics.

5.3. Push-Up Case

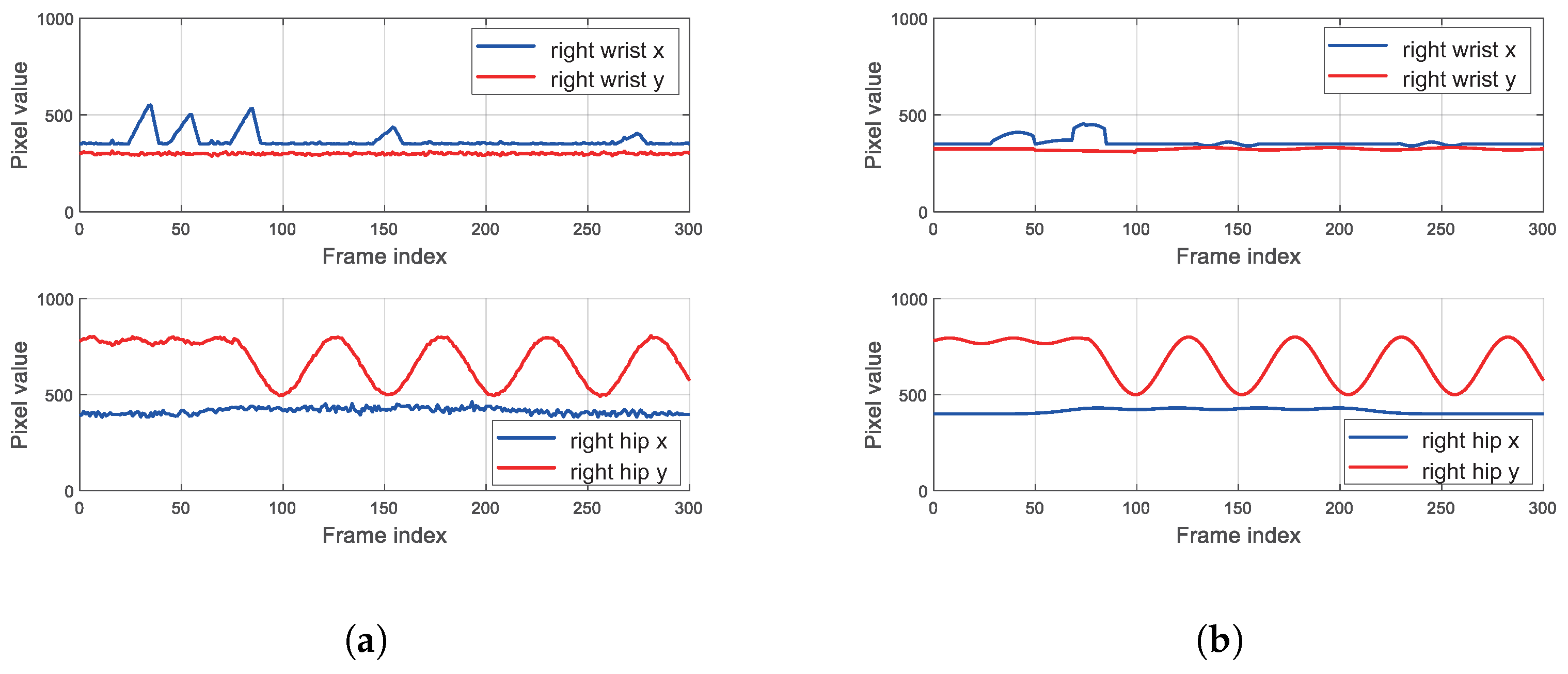

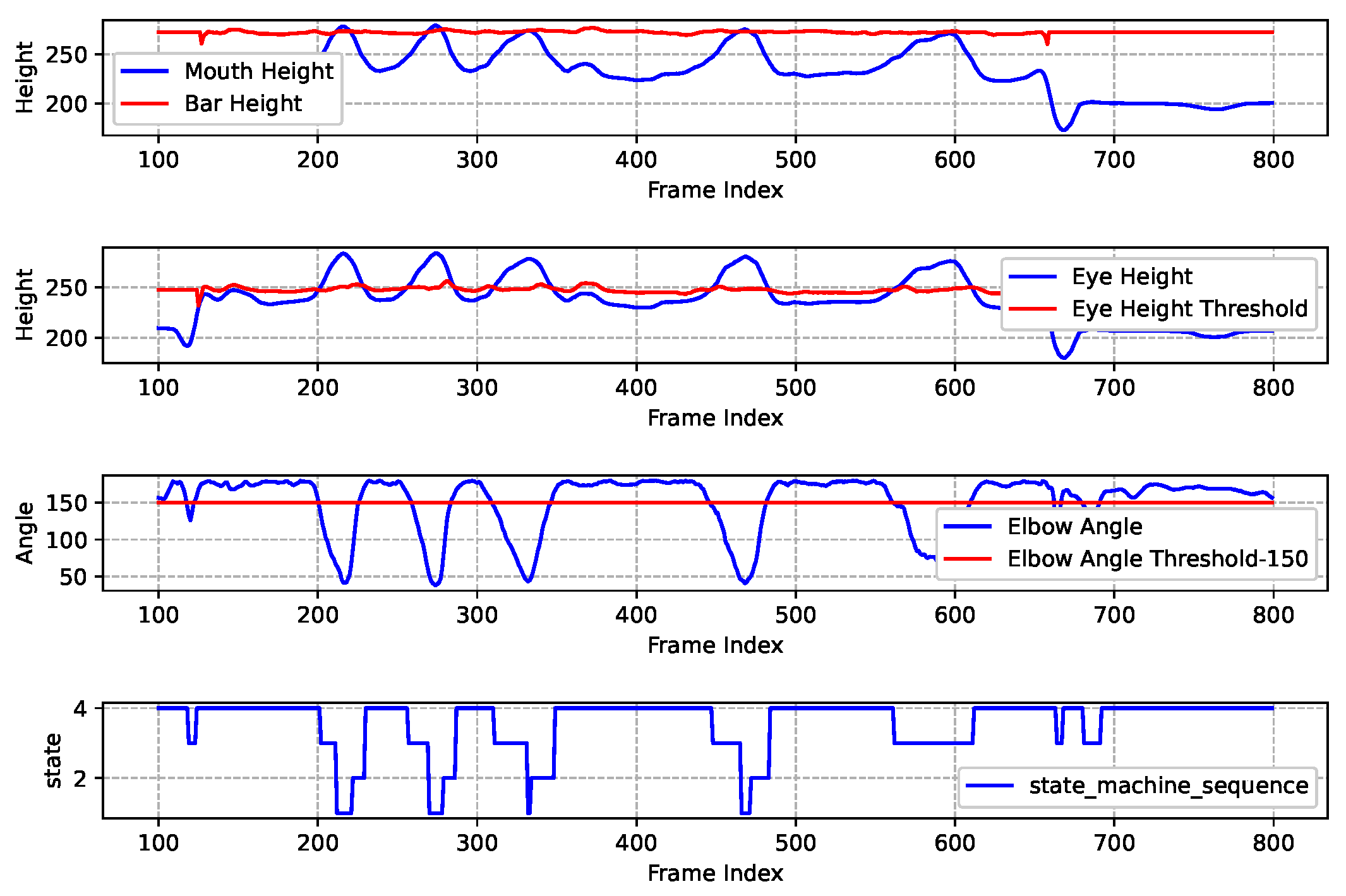

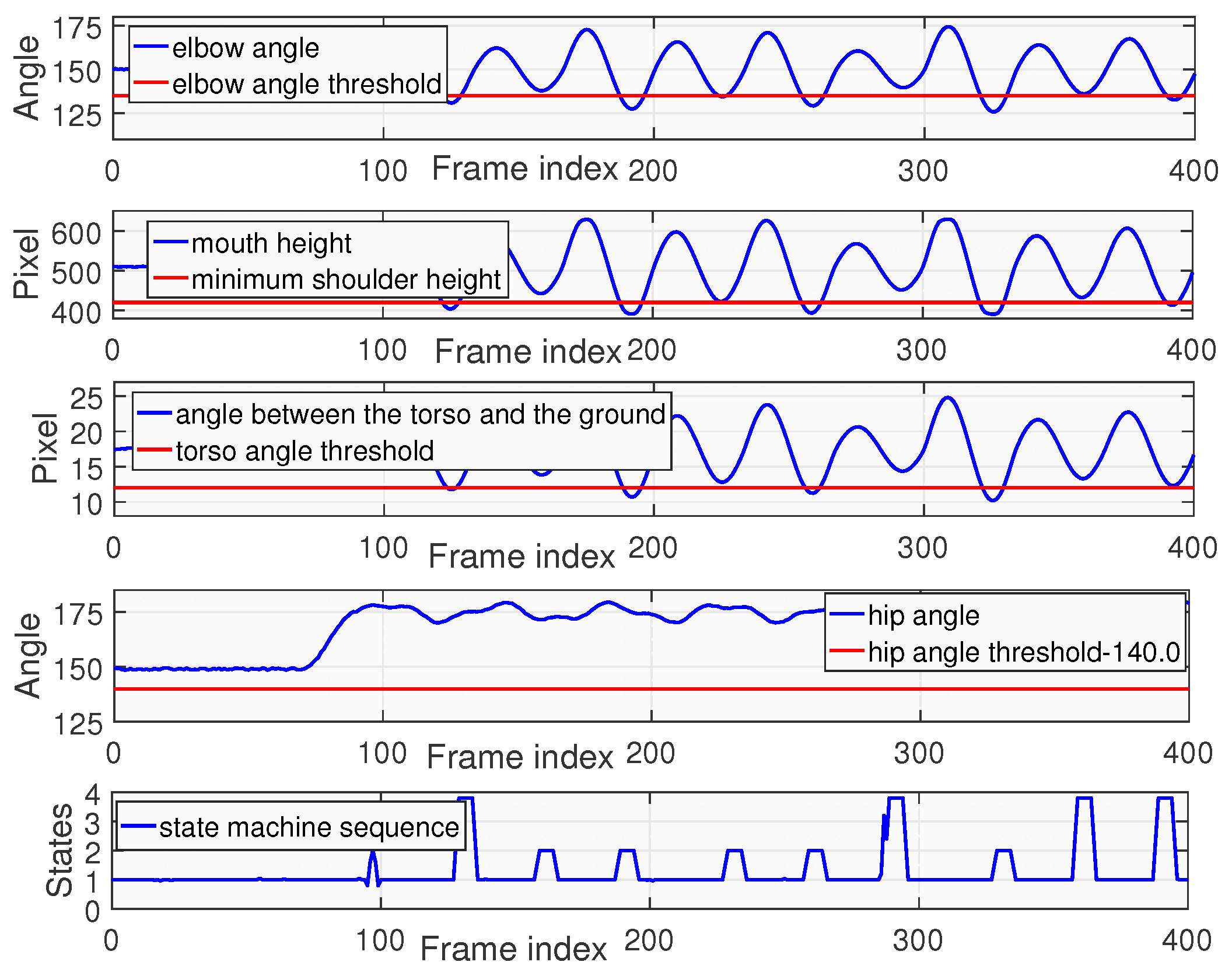

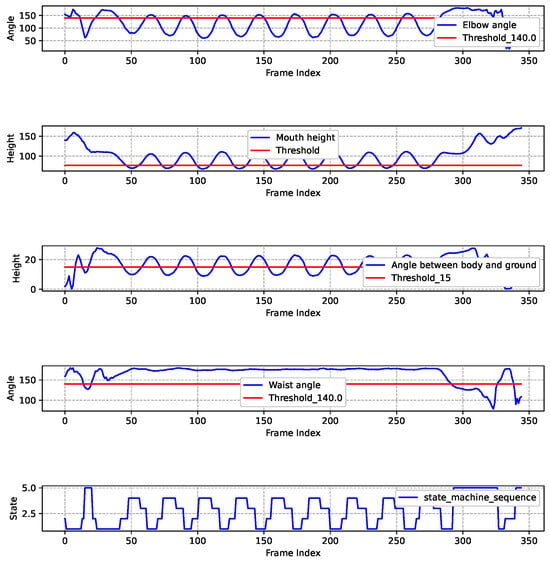

Figure 15 presents the results of a standard push-up experiment. It includes five subgraphs arranged from top to bottom, illustrating the changes of multiple key data points. The specific analysis is as follows.

Figure 15.

Standard push-up exercise recognition and assessment results.

The 1st Sub-graph: The blue line illustrates the variations of elbow angle of human body as the frame sequence progresses. The red line represents the elbow angle threshold which is set as 140 degrees. This empirically chosen value aims to prevent excessive bending of the elbow during the upward phase. This threshold can be adjusted at different actual conditions. When the blue line is above the red line, it indicates that the elbow angle meets the criteria for the high support position; otherwise, it does not fulfill the established standard.

The 2nd Sub-graph: The blue line illustrates the variation of mouth height, indicating the position of the tester’s mouth relative to the ground during push-ups. The mouth height fluctuates throughout the movement. The red line represents the minimum shoulder height throughout the entire push-up process, which can be different for different individuals. A necessary condition for entering the low support state during push-ups is that the mouth height must be lower than the minimum shoulder height.

The 3rd Sub-graph: The blue line illustrates the variation of the angle between torso and ground, while the red line indicates a threshold of 15 degrees.

The 4th Sub-graph: The blue line illustrates the variation of waist angle. The red line indicates the threshold which is set at 140 degrees. The purpose of this threshold is to ensure that the tester maintains a straight body.

The 5th Sub-graph: The blue line illustrates the changes of the state machine sequence. When the blue line of the state machine experiences a transition from State4 to State3 to State1, it signifies that the tester has successfully completed a standard push-up movement. During the movement, any non-compliant action will trigger State5. Finally, it can be observed from the figure that the result of this push-up assessment is 9.

Figure 16 shows the assessment results of non-standard push-up. In this experiment, the mouth height consistently failed to reach to the required threshold for the low-position state, and the torso angle also did not meet the standard. Therefore, in the state machine sequence, only 4 transitions from State4 to State3 to State1 happen. Thus, the final score of this tester is 4.

Figure 16.

Nonstandard push-up exercise recognition and assessment results.

Similarly, we organized various test-takers to test the push-up assessment algorithm, and the results are shown in Table 8.

Table 8.

Push-up Assessment Algorithm Accuracy Statistics.

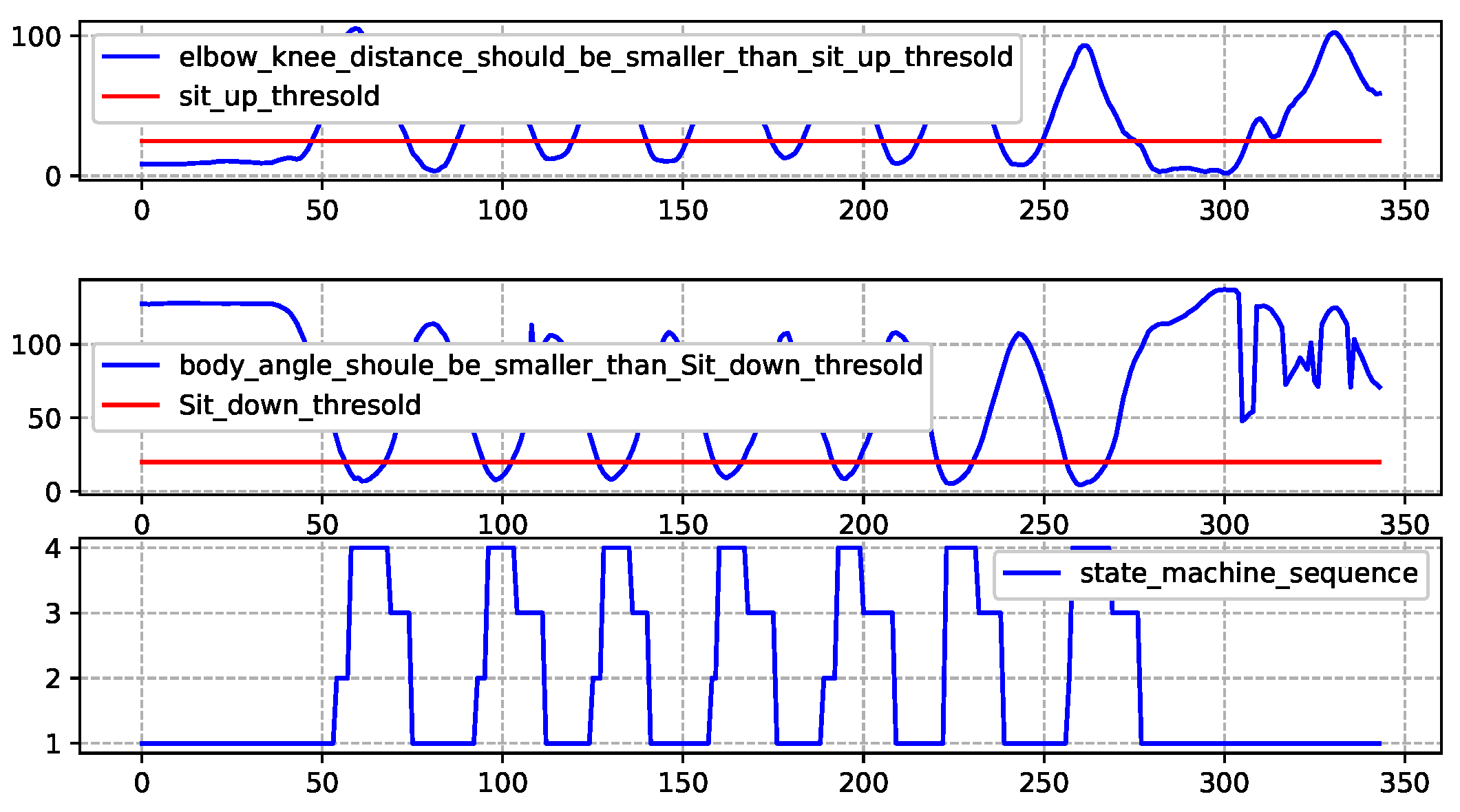

5.4. Sit-Up Case

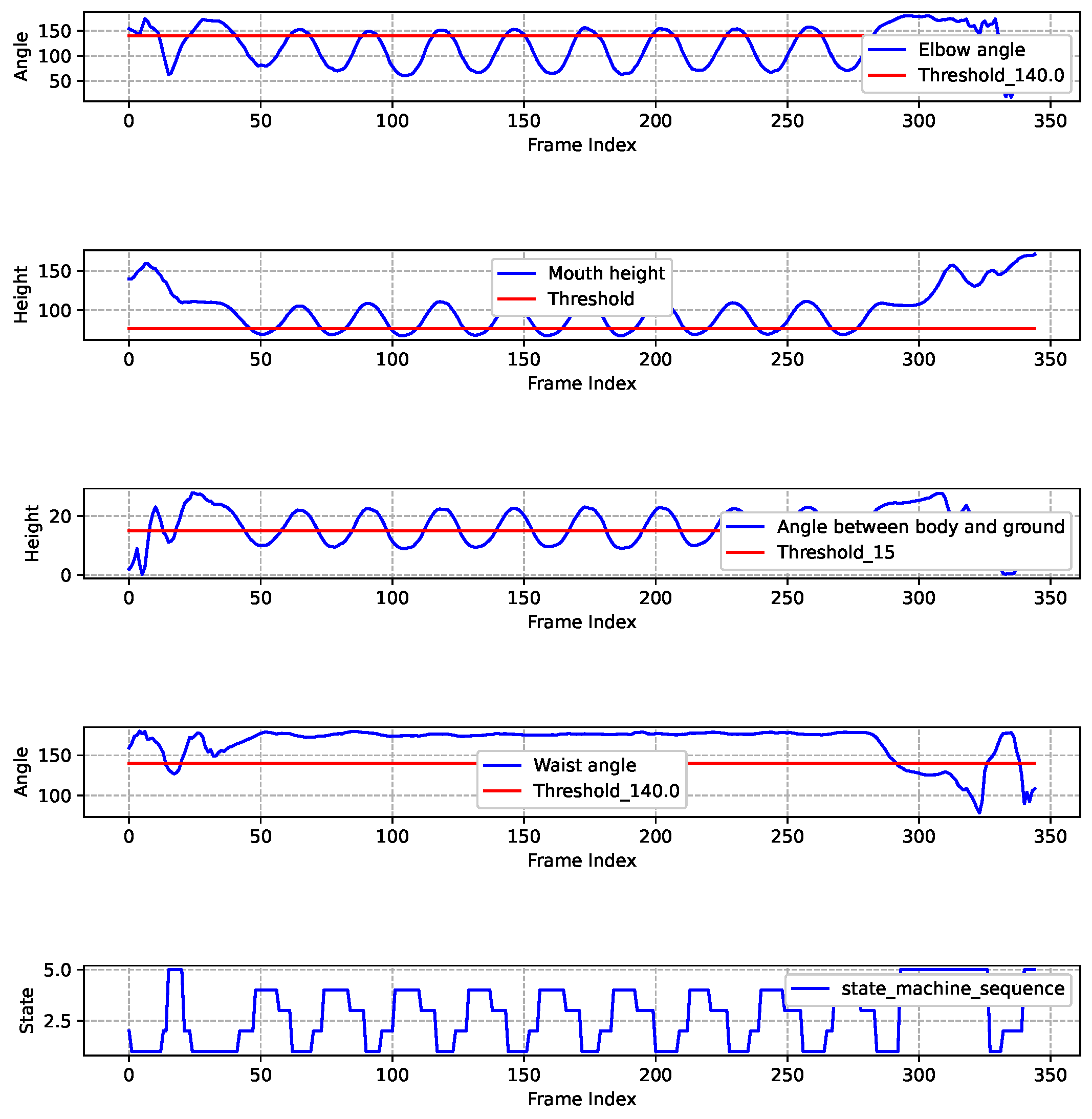

Figure 17 shows the different stages of the sit-up exercise.

Figure 17.

Standard sit-up exercise recognition and assessment results.

The 1st Sub-graph: The blue line illustrates the distance between elbow and knee. The red line represents the sit-up-threshold. This threshold can be adjusted at different actual conditions. When the blue line is above the red line, it indicates that the the elbow and knee are in contact with each other.

The 2nd Sub-graph: The blue line illustrates angle between the body and the horizontal line. The red line represents the sit-down-threshold. When the blue line is above the red line, it indicates that the human body is in a lying state.

The 3rd Sub-graph: The blue line illustrates how the state machine varies as the frame sequence changes, allowing for observation of the overall state of human exercise and the logical counting process. Based on the sequence of the state machine in the 4th sub-graph, this experiment concludes that the test-taker performed 7 standard sit-up movements.

In the aforementioned pull-up, push-up, and sit-up experiments, we conducted tests with individual subjects. In fact, our system is designed under the specification that one camera is dedicated to capturing one person to ensure accurate posture recognition. When there are multiple people for testing simultaneously, we recommend configuring multiple cameras (one camera per person) to avoid interference between individuals. When occlusions occur or lighting conditions are poor, the posture processing module may inaccurately detect key points, leading to the phenomenon of “flying points”. In this case, the Joint Correction and Smoothing Algorithm can identify and correct abnormal key point positions caused by occlusions or bad lighting to a certain extent, thereby improving the stability and accuracy of posture recognition.

5.5. Computational Efficiency Analysis

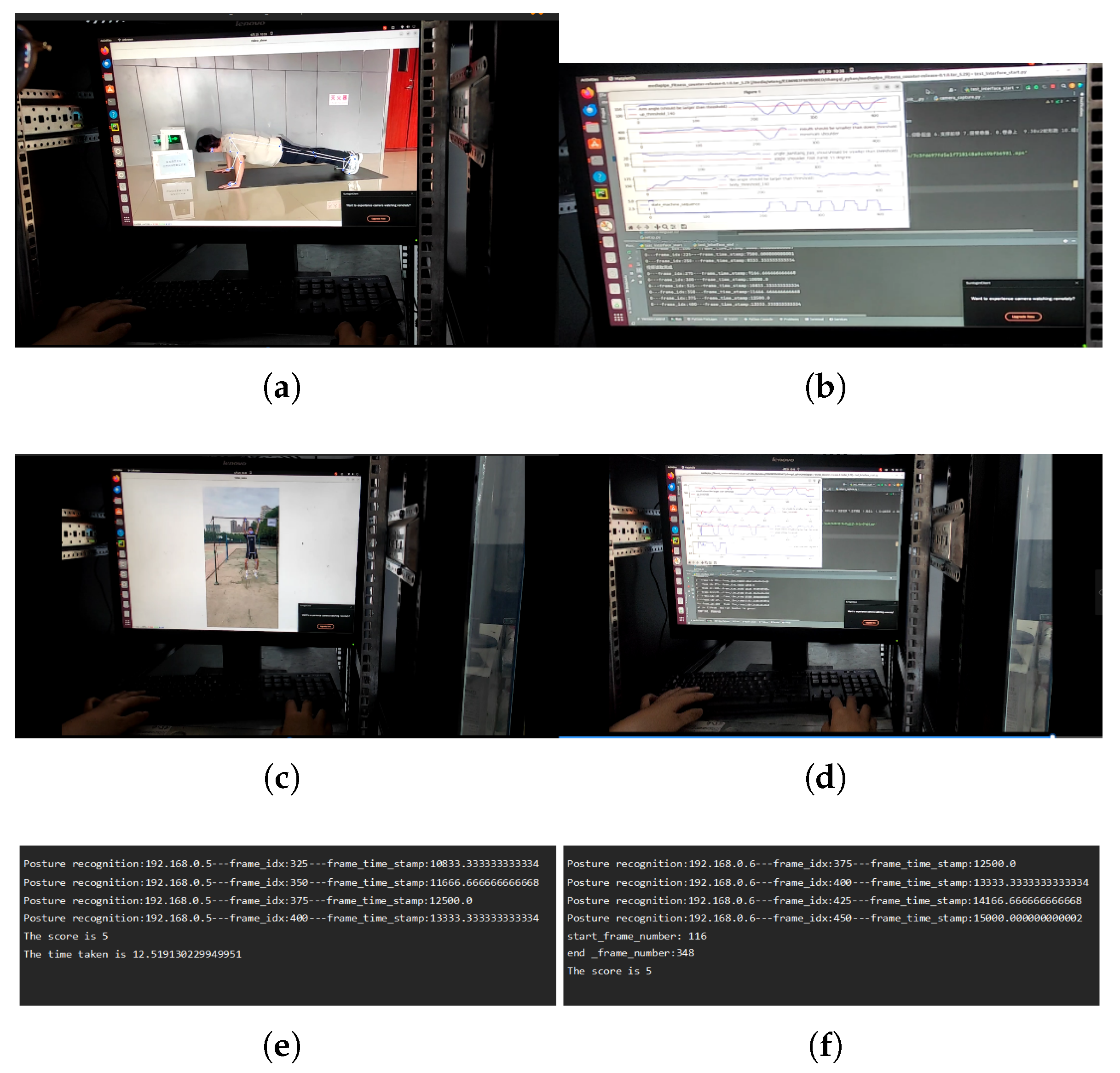

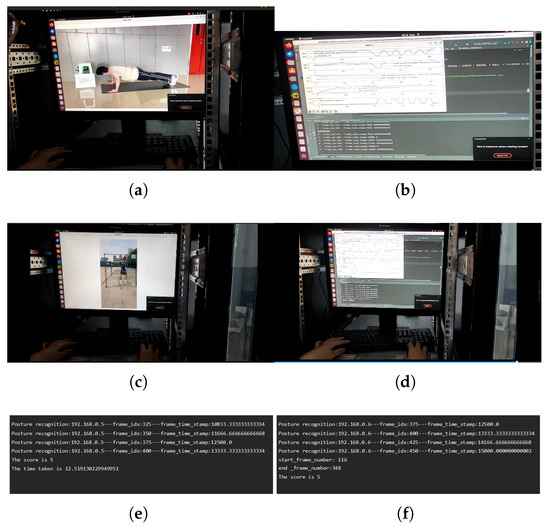

To provide a clearer picture of the operational performance of the system, we conducted the computational efficiency analysis. Figure 18 illustrates the server’s data processing flow during the test of both push-up and pull-up assessments. For debugging and demonstration purposes, the live video feed and real-time algorithm analysis are displayed, as depicted from Figure 18a–d. These display features are disabled during actual deployment to minimize resource consumption.

Figure 18.

Data Processing Process and Real-Time Computational Performance. (a) The server processes push-up videos; (b) Algorithm Analysis of Push-ups; (c) The server processes pull-up videos (d) Algorithm Analsysis of Pull-Ups (e) Push-up assessment judgment results (f) Pull-up assessment judgment results.

The assessment results are shown in the final panels of Figure 18e,f. Figure 18e illustrates the processing of a push-up assessment, where 5 valid repetitions were completed in 12.52 s from stream initiation to final calculation. Figure 18f shows a similar pull-up assessment of 5 repetitions taking 13.17 s. In both cases, the calculated scores are returned to the user interface within 3 s of the tester completing the exercise. This performance demonstrates the capability of the system for real-time analysis and rapid feedback, meeting the requirements for efficient and timely sports assessment.

5.6. Discussion

Our developed system offers a comprehensive approach to automated exercise assessment, distinguishing itself by integrating multiple functionalities into a robust, end-to-end solution suitable for practical deployment—with explicit consideration of fault handling and design trade-offs to address real-world constraints. While much of the existing state-of-the-art research often focuses on optimizing individual components, such as advanced pose estimation algorithms or novel deep learning models for specific exercise recognition, our system prioritizes the entire workflow from real-time video capture to score calculation and data management—including targeted handling of practical faults inherent in visual sensor-based assessment. The primary non-quantifiable faults in this context are “flying points” and coordinate jitter of human keypoints, caused by mathematically unmodelable environmental interference like uneven lighting and limb occlusion. Instead of relying on theoretical fault quantification, we adopted an engineering validation approach: building an experimental testbed based on the Phytium 2000+ processor, collecting real motion data from 5 test groups, and implementing a frame-based inter-frame joint correction and Smoothing Algorithm. As shown in Figure 12, this algorithm reduces “flying point” errors to ensure the reliability of subsequent posture assessment—a practical fault mitigation strategy that aligns with the system’s real-world deployment goal, where experimental validation of fault handling is more actionable than theoretical quantification.

This integrated design is crucial for real-world scenarios where not just accuracy, but also reliability, interpretability, and ease of deployment are paramount. The choice of a modular architecture and a rule-based FSM for exercise evaluation represents a deliberate design decision. Unlike purely data-driven, black-box deep learning approaches— which can achieve high accuracy but often lack transparency—our FSM model provides clear, human-interpretable rules for what constitutes a valid repetition. While our system is designed to be robust, extreme conditions could still pose challenges. Specifically, our calibration-free design, while enhancing deployment flexibility, makes the 2D-based assessment potentially vulnerable to significant alterations in viewing perspective or large-scale camera displacements. The lack of a calibration phase to compensate for such geometric variations is a limitation of the current study. Additionally, while our smoothing algorithm mitigates partial occlusions, more serious or prolonged occlusions can still lead to keypoint detection failures, which may cause the FSM to register errors. Finally, as the system relies on fixed visual sensors, it has limited portability for assessments in unconstrained environments compared to wearable devices like IMUs [6]. Furthermore, our methodological choice to allow subjects to perform exercises ’freely’ to their maximum capacity, while reflective of a real-world scenario, introduces performance variability that might affect results. This approach relies heavily on the FSM’s robustness to filter non-standard movements, and its impact versus a strictly protocol-driven execution should be noted as a study limitation. Rigorous experimental testing validated the system’s efficacy, achieving 96.6% accuracy for pull-ups and 97.4% for push-ups, confirming its alignment with expert judgment. In summary, our system offers a robust, accurate, and transparent solution for automated sports assessment, effectively bridging the gap between advanced computer vision research and practical application. Furthermore we plan to integrate additional thresholds, such as elbow angle checks, alongside the existing eye and mouth criteria, to further minimize the likelihood of false positives in our assessment logic.

6. Conclusions

This paper presented the design and implementation of an automated human posture recognition and assessment system, developed to overcome the issues of traditional manual methods. A primary contribution is our novel evaluation pipeline, which uses a temporal correction algorithm to ensure reliable movement trajectories and a FSM model to enforce strict, phase-based logic, accurately counting valid repetitions while rejecting non-standard movements. Future iterations might explore multi-camera setups or integration with other sensor modalities to further enhance robustness against extreme environmental factors. We also plan to integrate 3D human pose estimation models, which would not only enhance robustness but also unlock more sophisticated biomechanical analyses. Ultimately, this work delivers a high-fidelity, scalable solution for automated exercise assessment, with significant potential for deployment in educational, military, and enterprise contexts where fair and consistent evaluation is paramount.

Author Contributions

Conceptualization, L.L.; Methodology, L.L., H.Z. and W.H.; Software, H.Z., Q.Z. and W.W.; Validation, Q.Z. and R.L.; Writing—original draft, W.H. and R.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by “Cultivation Special Project of Joint Fund for Regional Innovation and Development (Shaanxi) under the National Natural Science Foundation of China”, “Key Research and Development Program of Shaanxi Province” and “Natural Science Basic Research Program of Shaanxi” grant number 24GXFW0081-03, No. 2025CY-YBXM-063 and No. 2025JC-YBMS-791.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Laitakari, J.; Vuori, I.; Oja, P. Is long-term maintenance of health-related physical activity possible? An analysis of concepts and evidence. Health Educ. Res. 1996, 11, 463–477. [Google Scholar] [CrossRef] [PubMed]

- Medical Physical Examination Physical Fitness Monitoring Adding “Double Insurance” for Employees’ Health General Administration of Sport of China. Available online: https://www.sport.gov.cn/n20001280/n20001265/n20066978/c25804813/content.html (accessed on 5 October 2024).

- GASA; the Ministry of Education. Notice of the General Administration of Sport and the Ministry of Education on Printing and Distributing the Opinions on Deepening the Integration of Sports and Education to Promote the Healthy Development of Adolescents. Available online: http://www.moe.gov.cn/jyb_xxgk/moe_1777/moe_1779/202009/t20200922_489794.html (accessed on 5 October 2024).

- Notice of the General Office of the Ministry of Education on Printing and Distributing the Guiding Outline for the Teaching Reform of Physical Education and Health. Available online: http://www.moe.gov.cn/srcsite/A17/moe_938/s3273/202107/t20210721_545885.html (accessed on 5 October 2024).

- Palazzo, L.; Suglia, V.; Grieco, S.; Buongiorno, D.; Pagano, G.; Bevilacqua, V.; D’Addio, G. Optimized Deep Learning-Based Pathological Gait Recognition Explored Through Network Analysis of Inertial Data. In Proceedings of the 2025 IEEE Medical Measurements & Applications (MeMeA), Chania, Greece, 28–30 May 2025; pp. 1–5. [Google Scholar] [CrossRef]

- Palazzo, L.; Suglia, V.; Grieco, S.; Buongiorno, D.; Brunetti, A.; Carnimeo, L.; Amitrano, F.; Coccia, A.; Pagano, G.; D’Addio, G.; et al. A Deep Learning-Based Framework Oriented to Pathological Gait Recognition with Inertial Sensors. Sensors 2025, 25, 260. [Google Scholar] [CrossRef] [PubMed]

- Li, G.; Zheng, Z.; Jiao, C. Extra-Curricular Physical Exercise Management System Based on AI Sports—Development of the Extra-curricular Physical Exercise System of Peking University. pp. 36–41. Available online: https://kns.cnki.net/kcms2/article/abstract?v=ZHE1803t14tE6iq_1eEXhxmQ9c-nRtCV53N2yNYJOU88yohipuoCFtBuHiGwgky9DimN-yU7t9a3rZz3bDXkSkXM8d2eY9ttqlli_TVgvuFcK3TOKwaD4dYOy4VLEX9mm9Y6h_ONfj2VuaYTC3WyAQHuSe7l_PMIBQhfM2TVGo4wCVk1PDps5w==&uniplatform=NZKPT&language=CHS (accessed on 5 October 2024).

- Fang, J.B.; Liao, X.K.; Huang, C.; Dong, D.Z. Performance Evaluation of Memory-Centric ARMv8 Many-Core Architectures: A Case Study with Phytium 2000+. J. Comput. Sci. Technol. Engl. Ed. 2021, 36, 11. [Google Scholar] [CrossRef]

- Barreira, D.; Casal, C.A.; Losada, J.L.; Maneiro, R. Editorial: Observational Methodology in Sport: Performance Key Elements. Front. Psychol. 2020, 12, 596665. [Google Scholar] [CrossRef] [PubMed]

- Castellano, J.; Perea, A.; Alday, L.E.A. The Measuring and Observation Tool in Sports. Behav. Res. Methods 2008, 40, 898–905. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Zhou, Z.; Wu, J.; Xiong, Y. Human posture detection method based on wearable devices. J. Healthc. Eng. 2021, 2021, 8879061. [Google Scholar] [CrossRef]

- Hong, Z.; Hong, M.; Wang, N.; Ma, Y.; Zhou, X.; Wang, W. A wearable-based posture recognition system with AI-assisted approach for healthcare IoT. Future Gener. Comput. Syst. 2022, 127, 286–296. [Google Scholar] [CrossRef]

- Chopra, S.; Kumar, M.; Sood, S. Wearable posture detection and alert system. In Proceedings of the 2016 International Conference System Modeling & Advancement in Research Trends (SMART), Moradabad, India, 25–27 November 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 130–134. [Google Scholar]

- Luo, W.; Xue, J. Human Pose Estimation Based on Improved HRNet Model. In Proceedings of the 2023 IEEE 3rd International Conference on Computer Communication and Artificial Intelligence (CCAI), Taiyuan, China, 26–28 May 2023; pp. 153–157. [Google Scholar] [CrossRef]

- Zhang, H.; Dun, Y.; Pei, Y.; Lai, S.; Liu, C.; Zhang, K.; Qian, X. HF-HRNet: A Simple Hardware Friendly High-Resolution Network. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 7699–7711. [Google Scholar] [CrossRef]

- Bazarevsky, V.; Grishchenko, I.; Raveendran, K.; Zhu, T.; Zhang, F.; Grundmann, M. BlazePose: On-device Real-time Body Pose tracking. arXiv 2020, arXiv:2006.10204. [Google Scholar]

- Akpa, E.A.; Fujiwara, M.; Arakawa, Y.; Suwa, H.; Yasumoto, K. Gift: Glove for indoor fitness tracking system. In Proceedings of the 2018 IEEE international Conference on Pervasive Computing and Communications Workshops (PerCom workshops), Athens, Greece, 19–23 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 52–57. [Google Scholar]

- Liu, Z.P.; Wang, Z.K. An Automatic Counting Device for Pull-Ups. Hebei Agric. Mach. 2018, 60. [Google Scholar] [CrossRef]

- Zhao, S.F. Research and Development of an Automatic Pull-Up Test System Combining Depth Images. Master’s Thesis, Wuyi University, Jiangmen, China, 2014. [Google Scholar]

- Xu, F.; Tao, Q.C.; Wu, L.; Jing, Q. A Push-Up Counting Algorithm Based on Machine Vision. Mod. Comput. 2021, 48–53. Available online: https://kns.cnki.net/kcms2/article/abstract?v=ZHE1803t14vYBo00-t9NA0Etn1AqiJIColoLMKZ0hf8aZyjf2y7Ujl7tdsQfrcqE_1nGeMhFyZXPoyRAm0W5qG7u__upxYED6XUKqsep877LQK3JVWJPo_K0IRwXAhP-G_EnJ-ffVcg-rFzSawYgSZXfPVaEP6us8RXVIlI37agGZx-5Qimj0A==&uniplatform=NZKPT&language=CHS (accessed on 5 October 2024).

- Deng, W.; Yang, W. Fitness Training Assistance System Based on Mediapipe Model and KNN Algorithm. In Proceedings of the 2024 5th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Nanjing, China, 22–24 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1878–1882. [Google Scholar]

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. Openpose: Realtime multi-person 2d pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186. [Google Scholar] [CrossRef] [PubMed]

- Yang, Z.; Chen, H.; Cheng, M.; Liu, W.; Chen, Y.; Cao, Y.; Yao, D. Design of a Lightweight Human Pose Estimation Algorithm Based on AlphaPose. In Proceedings of the 2024 International Conference on Networking, Sensing and Control (ICNSC), Hangzhou, China, 18–20 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6. [Google Scholar]

- Zhang, M.; Yu, X.; Li, W.; Shu, X.; Pan, L.; Shen, Z. LENet: A Lightweight and Efficient High-Resolution Network for Human Pose Estimation. IEEE Access 2025, 13, 31032–31044. [Google Scholar] [CrossRef]

- Huang, Y.; Zeng, X.; Feng, S. Research on Lightweight OpenPose Posture Detection Model for Edge Devices. J. Wuhan Inst. Technol. 2024, 46, 424–430. Available online: https://kns.cnki.net/kcms2/article/abstract?v=ZHE1803t14sSwF1mW01JMfCXFlOi8wTcexIsY9dzGR9yxw0zf4DJ6h_nTCBwViaNv3BUNp7kfg7XgMsyvJ8IF3PYGZBnkMvetECSKW_HKL5Y5Wo4sxKsPZXWlPUbKALninjlDmHPIrIFZAhHES852pl03FDqrJMPujs5-IQ8QA1c109b_jgSwQ==&uniplatform=NZKPT&language=CHS (accessed on 5 October 2024).

- Qian, H. Research on Human Posture Detection System Based on Motion Sensors. Master’s Thesis, Xiamen University of Technology, Xiamen, China, 2022. [Google Scholar]

- Leone, A.; Rescio, G.; Caroppo, A.; Siciliano, P.; Manni, A. Human Postures Recognition by Accelerometer Sensor and ML Architecture Integrated in Embedded Platforms: Benchmarking and Performance Evaluation. Sensors 2023, 23, 1039. [Google Scholar] [CrossRef] [PubMed]

- Tushar; Kumar, K.; Kumar, S. Object Detection using OpenCV and Deep Learning. In Proceedings of the 4th International Conference on Advances in Computing, Communication Control and Networking, Greater Noida, India, 16–17 December 2022; pp. 899–902. [Google Scholar] [CrossRef]

- Tao, Y.; An, L.; Hao, J. Application of Static Gesture Recognition Based on OpenCV. In Proceedings of the 6th International Conference on Algorithms, Computing and Artificial Intelligence, Sanya, China, 22–24 December 2023; pp. 215–220. [Google Scholar] [CrossRef]

- HIKVISION. DS-2CD3T56FWDV2-I3. Available online: https://www.hikvision.com/cn/products/pdplist/44536/ (accessed on 5 October 2024).

- Tenglong, Z. TL520F-A. 2023. Available online: https://www.pterosauria.cn/product/ftjs/efd/259262d433a95c96b82e508a151e3b74.html (accessed on 1 December 2023).

- Maitray, T.; Singh, U.; Kukreja, V.; Patel, A.; Sharma, V. Exercise posture rectifier and tracker using MediaPipe and OpenCV. In Proceedings of the International Conference on Advances in Computing, Communication Control and Networking, Greater Noida, India, 15–16 December 2023; pp. 141–147. [Google Scholar] [CrossRef]

- Alsawadi, M.S.; Rio, M. Human Action Recognition using BlazePose Skeleton on Spatial Temporal Graph Convolutional Neural Networks. In Proceedings of the 9th International Conference on Information Technology, Computer, and Electrical Engineering (ICITACEE), Semarang, Indonesia, 25–26 August 2022; pp. 206–211. [Google Scholar] [CrossRef]

- Arya, V.; Maji, S. Enhancing Human Pose Estimation: A Data-Driven Approach with MediaPipe BlazePose and Feature Engineering Analysing. In Proceedings of the 2024 First International Conference on Pioneering Developments in Computer Science & Digital Technologies (IC2SDT), Delhi, India, 2–4 August 2024; pp. 1–6. [Google Scholar] [CrossRef]

- Ijjina, E.P.; Mohan, C.K. Human action recognition based on motion capture information using fuzzy convolution neural networks. In Proceedings of the 2015 Eighth International Conference on Advances in Pattern Recognition (ICAPR), Kolkata, India, 4–7 January 2015; pp. 1–6. [Google Scholar] [CrossRef]

- Ruan, R.; Liu, X.; Wu, X. Action Recognition Method for Multi-joint Industrial Robots Based on End-arm Vibration and BP Neural Network. In Proceedings of the 6th International Conference on Control and Robotics Engineering (ICCRE), Beijing, China, 16–18 April 2021; pp. 13–17. [Google Scholar] [CrossRef]

- Wang, Z.; Zhang, C.; Luo, W.; Lin, W. Key Joints Selection and Spatiotemporal Mining for Skeleton-Based Action Recognition. In Proceedings of the 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3458–3462. [Google Scholar] [CrossRef]

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer: Berlin/Heidelberg, Germany, 2022. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).