Abstract

Student engagement analysis is closely linked with learning outcomes, and its precise identification paves the way for targeted instruction and personalized learning. Current student engagement methods, reliant on either head pose estimation with facial landmarks or eye-trackers, are hardly generalized to authentic classroom teaching environments with high occlusion and non-intrusive requirements. Based on empirical observations that student body orientation and head pose exhibit a high degree of consistency in classroom settings, we propose a novel student engagement analysis algorithm incorporating human body orientation estimation. To better suit classroom settings, we develop a one-stage and end-to-end trainable framework for multi-person body orientation estimation, named JointBDOE. The proposed JointBDOE integrates human bounding box prediction and body orientation into a unified embedding space, enabling the simultaneous and precise estimation of human positions and orientations in multi-person scenarios. Extensive experimental results using the MEBOW dataset demonstrate the superior performance of JointBDOE over the state-of-the-art methods, with an MAE reduced to 10.63° and orientation accuracy exceeding 91% at 22.5°. With the more challenging reconstructed MEBOW dataset, JointBDOE maintains strong robustness with an MAE of 16.07° and an orientation accuracy of 88.3% at 30°. Further analysis of classroom teaching videos validates the reliability and practical value of body orientation as a robust metric for engagement assessment. This research showcases the potential of artificial intelligence in intelligent classroom analysis and provides an extensible solution for body orientation estimation technology in related fields, advancing the practical application of intelligent educational tools.

1. Introduction

Student classroom engagement analysis is a critical component of improving teaching quality, and it holds significant importance for achieving precision education [1,2]. Traditional manual observation methods suffer from limitations such as inefficiency and subjectivity, driving the trend toward automated detection technologies. Although current artificial intelligence and big data-based computer vision technologies have been widely applied in the educational field, the existing engagement analysis research primarily focuses on online classrooms, analyzing facial data and platform interaction data—and benefiting from the clear individual data collection in virtual environments [3,4]. However, in offline classroom teaching scenarios, methods relying on facial data analysis face significant challenges due to large class sizes and mutual obstructions among students. The mainstream engagement evaluation methods currently depend on identifying typical actions like raising hands or standing [5,6]. Yet, such approaches struggle to effectively assess engagement levels during the most common static listening states. On the other hand, humans’ body orientation usually reflects their attention directions, indicating a new solution for student engagement analysis in authentic classrooms [7].

Human body orientation estimation (HBOE), which involves estimating the orientation angles of humans, has been widely applied in fields such as human–computer interaction and intelligent monitoring [8,9]. However, its application within the educational domains remains relatively nascent. In this paper, we propose to leverage the HBOE method to analyze the student engagement during their listening status in classroom settings. It enables the tracking of individual learning states by detecting subtle changes in students’ body orientation, even in large-size classrooms with high occlusion. Additionally, this non-intrusive detection approach can meet the needs of large-scale routine classroom analysis, providing an innovative technical solution for implementing precision teaching.

However, most existing HBOE methods rely on a two-stage pipeline involving initial human detection, followed by orientation angle estimation. Such approaches are not only computationally intensive but also heavily dependent on the accuracy of the detection results. Previous HBOE studies have primarily focused on pre-cropped human instances under idealized conditions [10,11,12], limiting their generalization for practical applications. Therefore, developing an integrated and efficient HBOE solution is imperative.

In this paper, we propose a novel student engagement analysis method with one-stage HBOE, named JointBDOE, which integrates body detection and orientation estimation into one task. In particular, JointBDOE designs a unified embedding that integrates bounding box prediction and orientation angles, and it forms a single-stage end-to-end trainable HBOE framework. Moreover, the joint learning of body location and orientation angles enables shared human features to enhance orientation estimation, particularly in crowded and occluded classroom scenarios. With JointBDOE, all the students’ orientation directions are computed and displayed with arrow indicators for engagement analysis. Experimental results on reconstructed MEBOW and authentic teaching videos with various layouts demonstrate the effectiveness and efficiency of the proposed JointBDOE for multi-person orientation estimation and student engagement analysis, respectively. The main contributions are summarized as follows:

(1) Method innovation in intelligent classroom engagement analysis: Breaking through the limitation of traditional classroom engagement analysis that relies on manual observation or eye-tracker devices, we proposed a novel visual analysis method integrated with body estimation. This method maintains stable performance in crowded classroom scenarios with severe occlusions, and it fundamentally solves the core problem that traditional methods fail to adapt to the complex environment of real classrooms—realizing method-level innovation in adapting to practical classroom scenarios.

(2) Technological innovation of the One-Stage JointBDOE Framework: We develop a technologically innovative one-stage end-to-end JointBDOE framework to achieve the simultaneous collaboration of human body detection and orientation estimation. By integrating bounding box regression and orientation prediction into a unified feature embedding space and designing a decoupled orientation prediction head, this framework not only ensures real-time scalability but also significantly improves robustness against occlusion interference and viewpoint variations. It breaks through the technical bottleneck of existing multi-stage frameworks in which “step-by-step processing hinders the balance between efficiency and accuracy”, completing technological innovation in framework architecture.

(3) Application innovation in dataset and evaluation system: To solve the practical application problem that existing datasets lack diversity and cannot support real-scenario verification, we reconstruct and extend the MEBOW dataset to greatly enhance its scenario diversity and realism; meanwhile, we conduct comprehensive validation of JointBDOE across YOLOv5 and YOLOv11 models at multiple scales. Extensive experiments on benchmark data and authentic classroom videos confirm its superior accuracy and efficiency. Furthermore, it innovatively realizes a dynamic visualization of students’ attention directions via refined indicators, filling the application gap in intelligent classroom analysis for tools that integrate “quantitative analysis + intuitive presentation”—and providing practical application support for intelligent classroom management.

2. Literature Review

2.1. Student Engagement

Student engagement refers to the degree of attention, curiosity, interest, optimism, and enthusiasm that students demonstrate during learning, which also reflects their intrinsic motivation for academic progress [13,14]. Learning engagement is a critical element in the educational process, directly impacting students’ learning outcomes and developmental progress [15]. Research indicates that student engagement levels show positive correlations with academic performance, progress, graduation rates, satisfaction, and deeper learning. This relationship manifests not only in knowledge acquisition but also in students’ holistic development [16,17,18]. Especially in classroom teaching, a teacher faces a large number of students and finds it difficult to pay attention to each individual student. Therefore, an effective assessment of student participation cannot only concretely demonstrate the students’ classroom performance but must also provide a basis for the teacher to evaluate the effectiveness of classroom teaching.

The current research on student engagement primarily focuses on online learning environments. For instance, Liu et al. systematically investigated the impact of cognitive and affective engagement on learning outcomes in MOOC contexts [19]. Monkaresi et al. innovatively integrated facial expression analysis with self-report data to achieve engagement detection in online writing activities [20]. Rehman et al. established an online learning engagement assessment model through EEG signal analysis [21]. These studies employ diversified technological approaches that have significantly enhanced the objectivity, efficiency, and intelligence of engagement assessment. It is noteworthy that the successful implementation of these studies largely relies on the distinctive advantages of online learning environments, which enable precise acquisition of students’ facial feature data and comprehensive recording of learning behavior trajectories, thereby providing solid data support for engagement research.

However, classroom teaching remains the core front of education and a key link in improving education quality. Yet, due to issues like large class sizes, student occlusion, and the inability to use intrusive monitoring devices, it is difficult to clearly capture individual data like in online environments, making the direct transfer of online analytical techniques challenging. When facing dozens of students, teachers often struggle to monitor each student’s engagement status, making classroom participation assessment a prominent challenge in teaching practice. Common offline classroom engagement assessment methods include self-reporting and classroom observation. Self-reporting is simple and cost-effective but limited due to subjectivity and time delays, making it best for post-learning evaluations. Classroom observation using set scales records student performance, but both manual and semi-automated coding face high labor and time costs, limiting large-scale implementation. The emergence of computer vision technology provides new possibilities for automated classroom analysis. It can effectively capture teacher–student classroom behaviors, significantly reducing reliance on human resources while improving assessment efficiency. However, current technological applications mainly focus on identifying typical behaviors like hand-raising, yawning, phone use, and standing. In reality, during regular teaching processes, students spend most of their time in static listening states in which teachers find it hard to detect attention wandering. Moreover, in newly promoted teaching models like project-based learning, non-linear seating arrangements make behavior recognition particularly challenging.

2.2. Human Body Orientation Estimation (HBOE)

The human body orientation estimation (HBOE) is defined as estimating the skeletal orientation of a person at the orthogonal camera frontal view, which has been applied in various tasks, such as robotics navigation, intelligent surveillance, and human–computer interaction [22,23,24,25]. Early research primarily relied on handcrafted features and traditional classifiers, often modeling HBOE as a classification problem using simple multi-layer neural networks due to limitations in dataset scale and annotation accuracy. With the advancement of technology, the detection foundation has progressively evolved from traditional handcrafted feature-based approaches to deep learning-based methods, including Faster R-CNN [10], FCOS [11], and YOLOv5 [26]. Hara et al. laid the foundation for fine-grained orientation prediction by re-annotating the TUD dataset with continuous angle labels [27]. MEBOW further established a large-scale benchmark dataset and demonstrated the superiority of deep neural networks in HBOE tasks [25]. PedRecNet explored a multi-task learning framework that combines body orientation with 3D pose estimation, achieving competitive performance [28].

The current HBOE methods predominantly adopt a two-stage processing pipeline: first localizing human instances using pre-trained detectors (e.g., Faster R-CNN), followed by orientation estimation on the cropped regions. Wang et al. proposed Graph-PCNN, a two-stage human pose estimation method based on graph convolutional networks, which improves pose estimation accuracy through a graph-based pose refinement module [29]. Yang et al. proposed DWPose, which enhances the efficiency and accuracy of full-body pose estimation via a two-stage distillation strategy [30]. Lin et al. proposed a two-stage multi-person pose estimation and tracking method based on spatiotemporal sampling [31]. While straightforward and effective, this paradigm faces notable challenges in practical applications. On the one hand, instance cropping during detection may introduce information loss, particularly in cases of occlusion or dense crowds. On the other hand, the two-stage approach leads to computational costs that scale linearly with the number of individuals, making real-time performance difficult to achieve. In recent years, some studies have addressed the information loss and computational overhead of two-stage HBOE methods by proposing single-stage approaches. Wang et al. proposed YOLOv8-SP, an enhanced YOLOv8 architecture that integrates multi-dimensional feature fusion and attention mechanisms to achieve real-time pose estimation and joint angle extraction for moving humans [32]; Zhao et al. proposed Part-HOE, which estimates orientation using only visible joints and introduces a confidence-aware mechanism to improve robustness under partial observations [33]. However, although these single-stage methods improve computational efficiency and occlusion robustness, their performance in densely populated scenarios and real-world environments such as classrooms remains limited, and the precision, real-time capability, and generalization of the models still need further validation and optimization.

Moreover, most existing HBOE studies assume input to be precisely cropped human regions, significantly limiting their applicability in real-world scenarios. Multi-task learning strategies have gained considerable attention for their efficiency and potential for task synergy [34,35]. For instance, Raza et al. designed parallel CNN classifiers to separately predict head and body orientation [24]. MEBOW utilized body orientation as auxiliary supervision to enhance 3D pose estimation [25]. PedRecNet proposed a unified architecture for joint 3D pose and orientation estimation [28]. GAFA introduced a novel gaze estimation method leveraging the coordination between the human gaze, head, and body [36]. However, all of these studies rely on cropped human bounding box images as input, hindering their deployment in practical settings. Consequently, developing end-to-end methods capable of directly processing raw images while supporting multi-person orientation estimation remains a critical challenge for advancing this technology.

Compared with these advances, our proposed JointBDOE differs in two key aspects: (i) it integrates orientation estimation directly into the detection head, avoiding the person-dependent cost of two-stage approaches; and (ii) it is designed for real-time classroom scenarios, where robustness and scalability are as critical as accuracy. By situating our work within these latest developments, we show that JointBDOE complements recent trends while offering a practical solution tailored to multi-person classroom analysis.

3. Methodology for Student Engagement Analysis

We first propose a novel student engagement analysis method with body orientation estimation under the empirical observation that body directions usually indicate students’ attention. Then, to adapt to crowded and occluded authentic classroom settings, we integrate the body detection and orientation estimation into one task and design a one-stage end-to-end framework for multi-person body estimation. More details are introduced in the following subsection.

3.1. Overall Framework

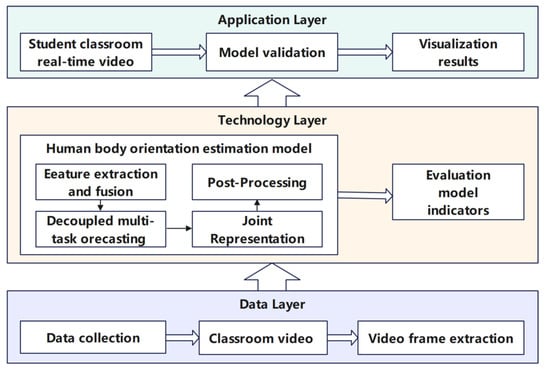

We propose a three-layer architecture for an intelligent student engagement analysis in authentic classroom settings, including data, technology, and application layers, as shown in Figure 1. First, the data layer collects learning state data with two cameras installed on the front and rear walls of the classroom. These continuous video streams are further converted into discrete frames with a sample rate of 3 s as the input for the following engagement analysis. Second, the technology layer integrates the proposed multi-person body orientation estimation method to identify all the students’ locations and body orientation angles in real time. Third, the application layer offers a real-time visual display, where bounding boxes and orientation narrows are represented for students and their orientation directions, respectively. To the end, the proposed architecture is successfully applied to analyze student engagement levels in authentic teaching with various layouts, including regular configuration, small-group discussions, and circular seminar configurations. Students’ engagement is intricately linked to their attention directions, which can be inferred from their body orientation. The frequency of their orientation changes and whether these changes are teacher-directed both indicate students’ engagement levels. The experimental section provides a detailed analysis of both patterns.

Figure 1.

The proposed three-layer framework for intelligent student engagement analysis in authentic classrooms, including a data layer, a technology layer, and an application layer.

3.2. Joint Body Detection and Orientation Estimation (JointBDOE) Model

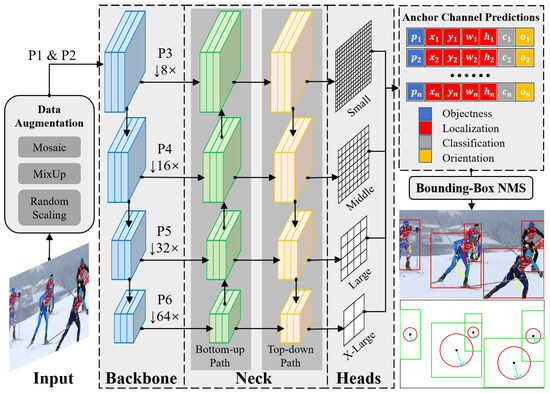

To adapt to crowded and occluded classroom settings, we propose a novel single-stage end-to-end trainable framework that joint student detection and body orientation angles into one task, named JointBDOE, as shown in Figure 2. Combining the bounding box regression and orientation angle estimation into a unified embedding, JointBDOE integrates body orientation information into traditional object detection representation and transforms multi-person orientation estimation into a single-stage detection task. The overall architecture of JointBDOE is built upon the single-stage object detector YOLOv11 [37], which can be readily replaced with other single-stage architectures. In particular, with the input images/frames, we first employ the CSPDarknet 53 backbone [29] and PANet neck [38] for efficient feature extraction and fusion. Then, we design a decoupled prediction head with three independent branches, including classification, localization, and orientation estimation, to simultaneously detect human bodies and estimate orientation angles at various scales. Finally, non-maximum suppression (NMS) is applied to the prediction results and outputs with detected human positions and orientations. To facilitate joint learning, we reconstructed the MEBOW dataset [25], a representative dataset for body orientation estimation in the wild, with supplementing complete human body bounding box annotations and pseudo-orientation labels generated via Wu et al. [25]. Figure 3 shows several selected enhanced samples.

Figure 2.

Our architecture builds upon YOLOv11 [37]. The input image is initially processed via the CSPDarknet53 [29] backbone to extract feature maps at four different scales P3, P4, P5, and P6. These multi-scale features are then fused using PANet [38] at the neck. The fused features are passed to detection heads, where each anchor channel produces a unified output that includes both bounding box and orientation predictions. The square frame represents the human body position, and the circular frame indicates the 360-degree orientation information of the human body on the 2D plane.

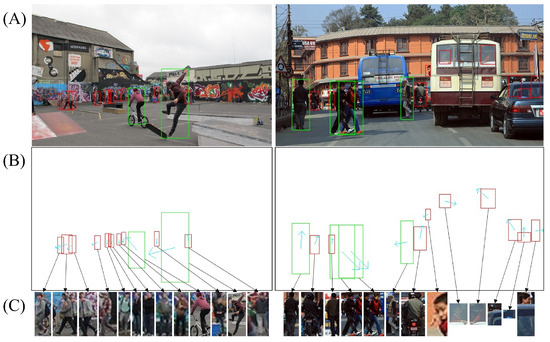

Figure 3.

Two examples from the reconstructed MEBOW dataset [25]. (A): original crowd scenes, where green boxes are provided by MEBOW and red boxes are newly added by us. (B): body boxes with orientation arrows (cyan). (C): cropped human instances, including occluded, truncated, or small bodies. These samples are difficult for single-person HBOE methods but are effectively addressed by our single-stage multi-person approach.

3.2.1. Unified Embedding

To build a single-stage human detection and orientation estimation framework, we propose a unified embedding representation method to integrate the two tasks. This approach extends traditional object detection representations by incorporating human-related attributes into a unified expression [39]. Specifically, the unified embedding is defined as , where p denotes the probability of target existence (i.e., human presence), and represents the center coordinates of the human bounding box, with w and h indicating its width and height—these four parameters collectively determine the precise spatial location of humans in images. c stands for classification scores reflecting the confidence level of detection results belonging to the human category, and o indicates the orientation of the human. Through this representation, the originally complex multi-person orientation estimation problem is successfully transformed into a conventional object detection task. The primary advantage of this design lies in enabling the model to simultaneously learn multiple related tasks with a minimal computational cost by sharing a single network head. This approach not only significantly improves the model’s operational efficiency but also remarkably enhances task processing capability, allowing the entire system to maintain high performance while achieving more efficient deployment and application.

In the implementation, we extend the anchor prediction mechanism of YOLOv11 by working with the output group for the i-th image grid cell at scale reduction factors . In YOLOv11, each detection head has a fixed number of anchor channels, 3. For a specific anchor channel prediction, , its original representation is , which includes the objectness score , bounding box offsets , and classification scores . For the human body orientation estimation task, we maintain the single-class classification setting () and augment the original output with an orientation parameter , thereby constructing the complete prediction embedding . This unified embedding design not only preserves the functionality of the original detection framework but also allows flexible extension to other tasks requiring simultaneous prediction of object attributes and locations. For instance, by replacing with Euler angle parameters, the framework can be readily applied to tasks such as eye gaze direction and head pose estimation.

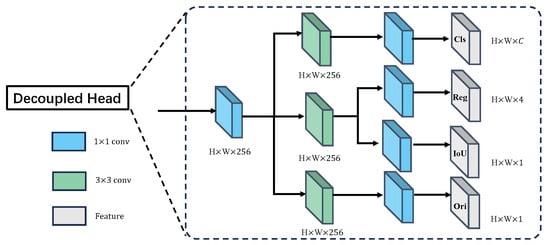

3.2.2. Decoupled Head

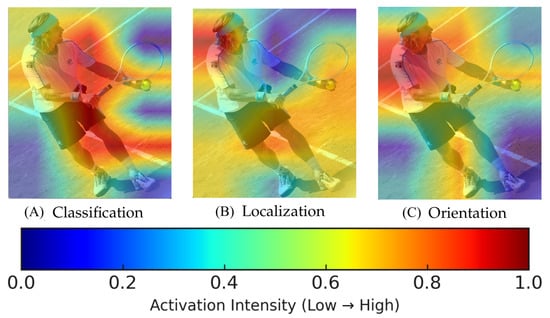

During multi-task joint training, we observed that the features extracted for the three distinct tasks of classification, localization, and orientation estimation emphasize different aspects. The three heatmaps shown in Figure 4 demonstrate that the features for classification cover the entire human body, and the features for localization focus on regions that refine bounding box coordinates, while the features for orientation estimation primarily concentrate on the upper body portion. The discrepancies arising from learning different tasks from the same features may lead to slower convergence and performance degradation. To address this issue, we decoupled the head module into three independent branches, each dedicated to a specific task, as illustrated in Figure 5.

Figure 4.

Areas focused on by different tasks. The classification task focuses on the whole body area, the localization task focuses on coordinate positions of the bounding box, and the orientation task focuses on upper half of the body. Warmer colors (red/yellow) indicate higher attention/activation of the corresponding branch, and cooler colors (blue) indicate lower attention.

Figure 5.

Feature map obtained from PANet [38] is fed into the decoupling head. First, it goes through a convolution block for feature dimension reduction, resulting in a feature map. It then passes through three parallel convolution layers, one for classification, one for localization and confidence estimation, and one for orientation estimation. Finally, each of them goes through a convolution for four tasks, respectively.

3.2.3. Body Orientation Estimation

In this paper, human body orientation is defined as a continuous angle, , representing the orientation of the human body relative to the camera’s frontal view. In the proposed unified embedding vector, , the orientation component o is normalized to the range via the sigmoid activation function. This processing not only facilitates network training optimization but also effectively enhances the model’s robustness to angular features. During the training phase, we adopt the standard mean squared error (MSE) for orientation regression. To address the boundary discontinuity issue caused by angle periodicity, a wrapped MSE loss function is introduced. When calculating errors, it accounts for the periodic nature of the angular space to optimize the supervision mechanism. Since the periodicity of azimuth angles can mislead the original difference between predicted and true values near the boundary, the second term in Equation (1) ensures the accurate measurement of the minimum angular difference by adapting to this periodicity. Its specific form is as follows:

where is the estimated result from i-th multi-scale head, is the corresponding ground-truth. Here, n denotes the number of multi-scale prediction heads; we set following prior work (e.g., MEBOW).

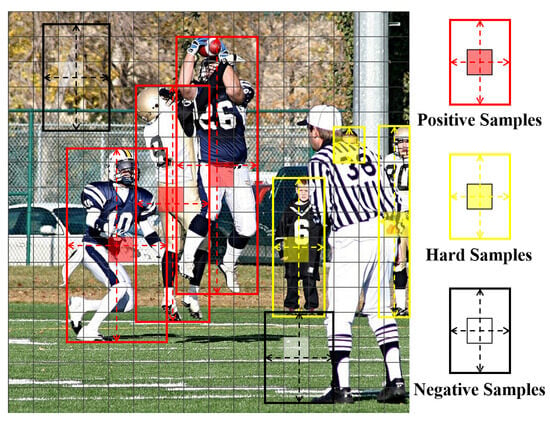

Although human-body orientation appears intuitive and straightforward in images, we have identified two potential challenges for this task. First, for severely occluded, highly truncated, or extremely small human instances, their orientation is often difficult to determine accurately. Second, dense anchor channel predictions may contain numerous areas with partial human body or none. Figure 6 presents an illustration. These special samples have a limited or no effect on the supervised learning of body orientation. Thus, we designed a probability threshold -based filtering mechanism to screen , effectively eliminating unreliable orientation estimates from the prediction results. However, false-negative hard samples should be retained, as they play a crucial role in the joint estimation task, as shown in the bottom display of Figure 3. A suitable value for is obtained via ablation studies.

where is an indicator function that equals 1 for person instances with valid orientation labels and 0 otherwise, so that the orientation loss is applied only when annotations are available.

Figure 6.

The illustration of some anchor channel predictions for positive, hard, and negative body orientation regression. This was provided as an example for illustration only.

3.2.4. Overall Loss Function

Under the unified embedding, the multi-person body estimation can be solved through the following function:

where and are the original detection losses for objectness and localization, and is the orientation estimation. The settings of weight parameters and are the same in YOLOv11. The optimal value of for orientation regression loss is explored through ablation studies.

The definitions of and are as follows:

and

where is the binary cross-entropy, and is the complete intersection over union. The body objectness is multiplied by score to promote concentrated anchor channel predictions. And means no target human body.

4. Experiments

We first demonstrate the effectiveness and efficiency of the proposed JointBDOE model on the multi-person body orientation task on both the original MEBOW dataset and its reconstructed version, where the reconstructed one contains more challenging and complex crowded samples. Then, we test the performances of JointBDOE for student engagement analysis in authentic classroom settings with various layouts, and we dynamically visualize students’ engagement levels during classes.

4.1. Datasets and Metrics

Reconstructed MEBOW Dataset: The MEBOW dataset contains a total of 54,007 annotated images, including 51,836 images (127,844 human instances) for training and 2171 images (5536 human instances) for testing. While preserving the original data, we restored the challenging human instances originally provided through the COCO [40] dataset and employed the MEBOW method to generate pseudo labels for body orientation. The final constructed dataset comprises 225,912 human instances (216,853 for training and 9059 for testing), suitable for multi-person body orientation estimation tasks.

Metrics: Following [25,28], this paper employs two core metrics to evaluate body orientation estimation performance: first, the mean absolute error (MAE) measuring the average deviation between predicted angles and ground truth; second, the Acc.- accuracy metric (), which indicates the proportion of predictions falling within an X° tolerance range centered on the ground truth orientation. For the joint body detection task evaluation, the recall metric is additionally reported as a supplementary reference. Through these metrics, we can comprehensively evaluate the model’s overall performance in both body orientation estimation and human body detection tasks, thereby validating the robustness and practical value of the proposed method in complex scenarios.

4.2. Implementation Details

This study employs the YOLOv11 architecture as the backbone network, replacing the standard detection head with our designed three-branch decoupled detection head (shown in Figure 5), while maintaining the basic training configurations from reference [41]. Utilizing the proposed unified feature embedding approach, the system simultaneously accomplishes both human body detection and orientation estimation tasks. All input images are standardized to a resolution (preserving original aspect ratios), with training parameters and manually fine-tuned through experimental validation. All experiments are run on a workstation with six NVIDIA GeForce RTX 4090 GPUs (24 GB each), driver 550.78, and CUDA 12.4. Unless otherwise noted, we train on four GPUs using torchrun with a global batch size of 32 (8 images/GPU). Our implementation uses Python 3.10.4 and PyTorch 2.6.0 within the YOLO framework. We adopt SGD with an initial learning rate of , momentum of 0.937, and weight decay of . A OneCycleLR scheduler is used with a final LR ratio 0.2, preceded by a 3-epoch warm-up starting at 0.1× the base LR. Training lasts 500 epochs with mixed precision (AMP) and EMA of weights (decay 0.9999). Input images are resized to and augmented with standard YOLO policies. Mosaic is enabled with a probability of 1.0 throughout training, mixup is disabled, and flips (horizontal and vertical) are disabled. Other augmentations, including HSV jitter (h = 0.015, s = 0.7, v = 0.4), random translation (±0.1), scaling (0.9×), rotation, shear, perspective, and copy–paste, are disabled. Random seeds are initialized for each training process to ensure reproducibility. The best-validation checkpoint is selected with mAP@0.5:0.95. When the global batch size changes, the base learning rate is scaled linearly.

4.3. Results on Multi-Person Body Orientation Task

4.3.1. Ablation Studies

In previous studies [42], we trained the yolov5s model for 500 epochs. As shown in Table 1, we initially set to 0.1 and screened the threshold within the range of [0.1, 0.4] at intervals of 0.1. The experimental results indicate that the lowest MAE value was achieved when was set to 0.2, demonstrating the importance of reasonable hard-sample filtering. Subsequently, with fixed, we selected the loss weight for from and ultimately determined that 0.05 achieved the optimal balance between human detection and orientation estimation tasks. Building on this, we upgraded the backbone network from YOLOv5 to YOLOv11 and conducted training in the same manner. The results (Table 1) show that under identical conditions ( 0.2, 0.05), the YOLOv11 backbone network exhibits superior performance. This also indicates that our method is not limited to a specific backbone network but, rather, exhibits a strong generalization capability and adaptability.

Table 1.

Influence exploration of two hyperparameters: the tolerance threshold and the loss weight for . YOLO-s refers to the small variant of the YOLO detector. Upward arrows denote that higher values are preferable and downward arrows denote that lower values are preferable.

Meanwhile, we conducted dedicated ablation experiments on the decoupled head structure to verify its effectiveness. In our previous study [42], based on the YOLOv5 architecture, we demonstrated through a comparative analysis of the performance differences between coupled and decoupled head structures that the decoupled design can effectively reduce feature interference between multiple tasks, thereby achieving superior performance (Table 2). Specifically, the Decoupled-YOLOv5 model showed improved direction estimation accuracy and higher accuracy within the 5° error range across all backbone network versions while maintaining an unchanged detection recall rate. Among them, YOLOv5l performed the best, achieving a mean absolute error of in direction estimation and an accuracy rate of 47.8% within 5° error range. Considering the continuous updates of backbone networks, we further tested the latest YOLOv11 architecture. The results showed that the Decoupled-YOLOv11x model achieved even better performance, reducing the mean absolute error of direction estimation to and increasing the accuracy within 5° error range to 48.7%. The results further confirm the superior performance of our proposed decoupled head structure in orientation estimation tasks, while demonstrating its strong generalization capability across different backbone network architectures.

Table 2.

Body orientation performance comparison of our method with SOTA methods in the MEBOW dataset. The “–” means not reported. C- denotes coupled method and Dec- is decoupled method. YOLO-s/m/l/x refer to the small, medium, large, and extra-large variants of the YOLO detector, respectively. Upward arrows denote that higher values are preferable and downward arrows denote that lower values are preferable.

4.3.2. Quantitative Comparison

For a fair comparison, we first evaluated model performance on the original MEBOW dataset, which excluded numerous challenging instances. As shown in Table 2, the YOLOv5l-based model achieved comparable results to the MEBOW and PedRecNet baseline methods in terms of mean absolute error (MAE) and accuracy metrics, while the subsequently adopted YOLOv11 model further improved these metrics. These baseline methods were specifically designed for single-person body orientation estimation tasks and required precisely cropped human instances as input. Notably, in multi-target scenarios without predefined human detection, the MEBOW and PedRecNet methods demonstrated limited effectiveness, whereas our approach maintained superior performance.

Furthermore, we conducted a validation of the proposed method’s performance on the reconstructed MEBOW dataset, with detailed experimental results presented in Table 3. Compared to the original dataset, the reconstructed version incorporates more challenging real-world samples (e.g., severe occlusion, low-resolution cases). While these challenging samples led to some degree of performance degradation in the overall metrics, these results objectively reflect the technical difficulties encountered in practical application scenarios. These carefully constructed, challenging samples also provide a more realistic test benchmark for future research, which will effectively advance the development of in-the-wild human body orientation estimation technology.

Table 3.

Our performance on the reconstructed MEBOW dataset with more crowded and complex samples. Upward arrows denote that higher values are preferable and downward arrows denote that lower values are preferable.

In addition, to comprehensively present the computational cost of the model, we also report the number of parameters (Params) and floating-point operations per second (FLOPs). For the two-stage baseline models (MEBOW and PedRecNet), we report a composite computational cost, which is equal to the FLOPs of the detector per image plus the product of the FLOPs for orientation estimation per person and the average number of persons in an image. For the single-stage YOLO series models, we directly report the number of parameters and FLOPs corresponding to each 1024 × 1024 image. The relevant results are shown in Table 4.

Table 4.

Computational efficiency comparisons under the metrics of the number of parameters (Params) and FLOPs.

4.3.3. Qualitative Comparison

Figure 7 presents the qualitative evaluation results of our method in various complex scenarios. The predicted orientation is linearly mapped to an angle, by , for visualization. The yellow arrow is drawn from the bounding box center in the direction of , and its length is fixed relative to the box size for clarity. Comparative results demonstrate that our method effectively addresses the detection failures (indicated with black circles) observed in MEBOW under crowded and occluded scenarios. It can be observed that our approach demonstrates remarkable robustness in real-world situations with dense crowds and severe occlusions. Not only can it effectively detect human bodies in low-quality image regions, but it also accurately predicts their orientation angles, fully showcasing the algorithm’s strong adaptability in practical application scenarios.

Figure 7.

The results on extended MEBOW data. The first column is estimated through MEBOW [25], and the second column is estimated using our method. The red boxes indicate human targets, and the yellow arrows represent body orientation. The yellow arrow is drawn from the bounding box center, where the direction corresponds to the orientational angle. The length of the yellow arrow is fixed relative to the box size for visualization clarity.

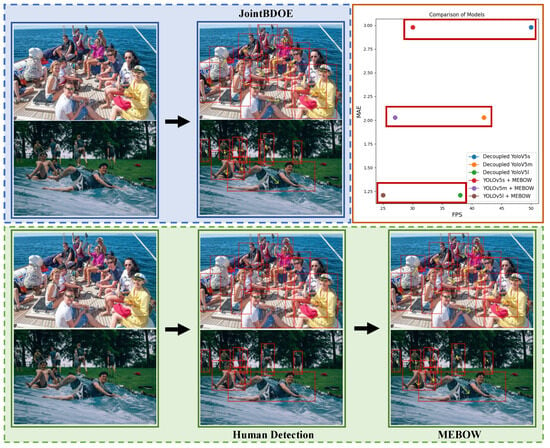

Figure 8 presents a comprehensive comparative analysis between the proposed JointBDOE framework and the conventional MEBOW approach. JointBDOE adopts an innovative end-to-end design concept, requiring only single-stage processing to directly output precise orientation estimation results from raw input images, significantly simplifying the traditional workflow. In contrast, the MEBOW method requires first obtaining human bounding boxes through external detection algorithms (such as Faster RCNN or YOLO) as input before performing subsequent orientation estimation. This two-stage architecture not only increases computational overhead but also introduces additional error accumulation risks. The scatter plot in the upper right corner further confirms that, under the same baseline detection framework, JointBDOE achieves comparable estimation accuracy to MEBOW while operating at a faster processing speed. This makes the algorithm particularly valuable for applications with high real-time requirements.

Figure 8.

The first row delineates the workflow of our method, while the second row represents MEBOW [25]. The scatter plot in the upper right corner visually compares the FPS and MAE between JointBDOE and MEBOW. The term MAE specifically denotes the difference in the estimation results of JointBDOE and MEBOW when employing an identical detection model. The square frame represents body position, and the arrow indicates its facing direction.

4.4. Results on Student Engagement Analysis

In the educational domain, public datasets with students are extremely limited due to ethical and privacy concerns. To address this issue, we construct an authentic classroom teaching dataset from cooperated schools for student engagement analysis. The dataset comprises 10 complete classroom recording videos, each approximately 40 min in duration. The distinctive characteristics of the constructed dataset include the following: (1) various layouts and spatial configurations; (2) diverse camera positions and perspectives; (3) a wide age range from kindergarten to university; and (4) differential seating arrangements, including row–column patterns and group discussions. These characteristics enable the constructed dataset to comprehensively characterize classroom teaching scenarios, thus serving as an effective validation resource for student engagement analysis in classroom settings. In compliance with privacy protection principles, we implement rigorous blurring processing on all facial information of teachers and students in the videos.

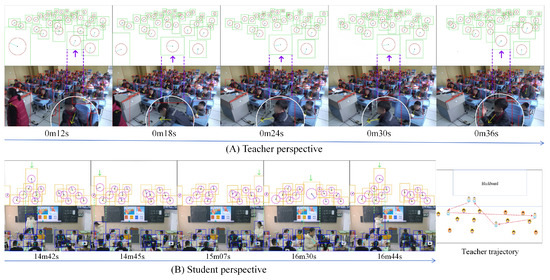

Figure 9 shows the results of student/teacher detection and orientation estimation under various seating arrangements and camera perspectives, where red bounding boxes represent detected teachers and students, and yellow arrows indicate their body orientations. The visualized results demonstrate that the proposed JointBDOE can not only detect almost all the students but also accurately estimate their orientations, even in complex scenarios with crowded students and severe occlusions. From Figure 9, we observe that students’ attention directions are almost consistent with their body orientations; thus, student engagement can be inferred from their body orientations. Meanwhile, JointBDOE can simultaneously identify teachers and their orientations, which supports for mining effective teacher–student interactive patterns.

Figure 9.

Visualized results of JointBDOE on student detection and their body orientation estimation in various classroom layouts. (a) Row–column seating arrangement, (b) group discussion seating arrangement, (c) teacher–student interaction, and (d) circular seating arrangement. The square frame represents body position, and the arrow indicates its facing direction.

Furthermore, we demonstrate how to use students’ body orientation for engagement analysis based on continuous teaching segments. Figure 10 provides dual-perspective visualizations of body orientations from both the teacher and students, along with simplified diagrams above to enhance readability in crowded scenarios. In case (A), with the teacher perspective of Figure 10, when the teacher turns to write on the blackboard, most students maintain high engagement as their body orientations focus on the teacher/blackboard. Meanwhile, the student pointed by the purple arrow constantly changes his orientation, indicating slight disengagement. Combined with timestamp information (class just begins), this phenomenon may indicate that the student has relatively weak self-discipline and has not yet fully entered a focused learning state during the initial class phase. In case (B), with the student’s perspective of Figure 10, based on the size and position of the bounding box, it can be inferred that the person pointed to with the green arrow is the teacher. Between 14 and 16 min of class, the teacher moved back and forth from the podium to the students’ area. Such a movement pattern indicates that the teacher assigns a task and then walks to the students, checking their progress. During this period, all the students hardly changed their orientation, demonstrating a high level of engagement, while the teacher’s movement path showed attention to each row of students.

Figure 10.

Example of continuous course visualization. The square frame represents body position, and the arrow indicates its facing direction.

To sum up, the proposed JointBDOE achieves excellent performances on orientation estimations of teachers and students in various scenarios, including front-view, back-view, and crowded settings. Moreover, the dynamic changes in orientation reveal students’ engagement levels and can also provide objective evidence for dividing classroom teaching segments. This offers strong support for instructional analysis and personalized guidance, fully demonstrating its practical value in educational settings.

4.5. Limitations and Future Research

Although our proposed learning engagement recognition method has achieved good results in various classroom scenarios, there is still room for further improvement. On the one hand, students’ orientation and position are important cues for evaluating classroom engagement, but typical behaviors (such as raising hands or standing up) are also of critical importance in actual teaching, especially in dynamic or collaborative learning situations where interactions and movements are more complex. On the other hand, the dataset used in this study is mainly based on visual information, whereas teacher–student verbal interaction in real classrooms also has a significant impact on teaching effectiveness. In addition, the current engagement detection results are mainly presented in a technical visualization form and have not been fully integrated with educational theory, which limits their interpretability and instructiveness.

Future research will advance in several directions. First, at the feature level, we will integrate the recognition of regular and typical behaviors, including scenarios with more dynamic and collaborative interactions, and combined with students’ position coordinate encoding, to achieve a comprehensive engagement analysis at both the individual and group levels. Second, at the data level, we will incorporate multimodal information such as audio and video to build a more comprehensive classroom evaluation system. Third, at the model level, we will further enhance the performance of JointBDOE in complex scenarios based on its single-stage, low-computational-cost advantages, and explore adaptive optimization strategies for different educational environments. Fourth, at the application level, we will deeply integrate detection results with educational theory to provide teachers with more interpretable and instructive feedback, thereby promoting the innovation and practical implementation of education quality evaluation systems. Through these improvements, we aim to further enhance the accuracy, applicability, and practical value of engagement assessment while maintaining the advantages of being non-intrusive and automated.

5. Conclusions

This study has innovatively proposed a classroom engagement detection method based on human body orientation estimation and developed a model named JointBDOE to efficiently achieve human body orientation estimation. Through an end-to-end architecture, we successfully integrated object detection with orientation estimation, effectively addressing the adaptability and computational efficiency challenges in multi-person scenarios. Given the current scarcity of publicly available classroom datasets, we conducted experimental validation on the MEBOW dataset while performing further testing through reconstructed MEBOW datasets and self-collected real classroom teaching video datasets. The results confirm the effectiveness and broad applicability of our method. This research provides new approaches and robust technical support for classroom analysis and teaching quality assessment in the education field. With technological advancements and evolving educational needs, we will expand the dataset scale and optimize the experimental design to further validate the method’s applicability across different educational scenarios.

Author Contributions

Conceptualization, Y.C. and F.J.; Methodology, Y.C., J.L. and Y.L.; Validation, F.J.; Formal analysis, Y.C., J.L. and Y.L.; Data curation, Y.C. and J.L.; Writing—original draft, Y.C., J.L. and Y.L.; Writing—review & editing, F.J.; Visualization, Y.C., J.L. and Y.L.; Supervision, F.J.; Funding acquisition, F.J. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was supported by the NSFC (National Natural Science Foundation of China) (No. 62207014).

Institutional Review Board Statement

This study was performed in line with the principles of the Declaration of Helsinki. Approval was granted by the Ethics Committee of East China Normal University (date: 9 December 2022/No. HR 786-2022).

Informed Consent Statement

The information is anonymized, and the submission does not include images that may identify the person.

Data Availability Statement

Data can be obtained from the first author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| HBOE | Human Body Orientation Estimation |

| MOOC | Massive Open Online Course |

| EEG | Electroencephalography |

| MAE | Mean Absolute Error |

| CNN | Convolutional Neural Network |

| NMS | Non-Maximum Suppression |

References

- Scott, V.G. Clicking in the Classroom: Using a Student Response System in an Elementary Classroom. Proc. New Horizons Learn. 2014, 11. Available online: https://api.semanticscholar.org/CorpusID:61369663 (accessed on 13 October 2025).

- Shulman, L.S. Making differences: A table of learning. Chang. Mag. High. Learn. 2002, 34, 36–44. [Google Scholar] [CrossRef]

- Xie, N.; Liu, Z.; Li, Z.; Pang, W.; Lu, B. Student engagement detection in online environment using computer vision and multi-dimensional feature fusion. Multimed. Syst. 2023, 29, 3559–3577. [Google Scholar] [CrossRef]

- Wang, S.; Shibghatullah, A.S.; Keoy, K.H.; Iqbal, J. YOLOv5 based student engagement and emotional states detection in E-classes. J. Robot. Netw. Artif. Life 2024, 10, 357–361. [Google Scholar] [CrossRef]

- Zhao, C.; Shu, H.; Gu, X. The Measurement and Analysis of Students’ Classroom Learning Behavior Engagement Based on Computer Vision Technology. Mod. Educ. Technol. 2021, 31, 96–103. [Google Scholar]

- Sun, B.; Wu, Y.; Zhao, K.; He, J.; Yu, L.; Yan, H.; Luo, A. Student Class Behavior Dataset: A video dataset for recognizing, detecting, and captioning students’ behaviors in classroom scenes. Neural Comput. Appl. 2021, 33, 8335–8354. [Google Scholar] [CrossRef]

- Mayer, R.E. The Cambridge Handbook of Multimedia Learning; Cambridge University Press: Cambridge, UK, 2005. [Google Scholar]

- Zhao, R.; Li, M.; Yang, Z.; Lin, B.; Zhong, X.; Ren, X.; Cai, D.; Wu, B. Towards fine-grained hboe with rendered orientation set and laplace smoothing. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 7505–7513. [Google Scholar] [CrossRef]

- Ahmed, H.; Tahir, M. Improving the accuracy of human body orientation estimation with wearable IMU sensors. IEEE Trans. Instrum. Meas. 2017, 66, 535–542. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015), Montreal, QC, Canada, 7–12 December 2015; Volume 28, p. 72015. [Google Scholar]

- Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636. [Google Scholar] [CrossRef]

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2d pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299. [Google Scholar] [CrossRef]

- Moser, A. The Engagement Concept. In Written Corrective Feedback: The Role of Learner Engagement: A Practical Approach; Springer International Publishing: Cham, Switzerland, 2020; pp. 9–43. [Google Scholar] [CrossRef]

- Booth, B.M.; Bosch, N.; D’Mello, S.K. Engagement detection and its applications in learning: A tutorial and selective review. Proc. IEEE 2023, 111, 1398–1422. [Google Scholar] [CrossRef]

- Trowler, V. Student engagement literature review. High. Educ. Acad. 2010, 11, 1–15. [Google Scholar]

- Zilvinskis, J.; Masseria, A.A.; Pike, G.R. Student engagement and student learning: Examining the convergent and discriminant validity of the revised national survey of student engagement. Res. High. Educ. 2017, 58, 880–903. [Google Scholar] [CrossRef]

- Lei, H.; Cui, Y.; Zhou, W. Relationships between student engagement and academic achievement: A meta-analysis. Soc. Behav. Personal. Int. J. 2018, 46, 517–528. [Google Scholar] [CrossRef]

- National Survey of Student Engagement. Engagement Insights: Survey Findings on the Quality of Undergraduate Education; Annual Results; Center for Postsecondary Research, Indiana University: Bloomington, IN, USA, 2015; 16p. [Google Scholar]

- Liu, S.; Liu, S.; Liu, Z.; Peng, X.; Yang, Z. Automated detection of emotional and cognitive engagement in MOOC discussions to predict learning achievement. Comput. Educ. 2022, 181, 104461. [Google Scholar] [CrossRef]

- Monkaresi, H.; Bosch, N.; Calvo, R.A.; D’Mello, S.K. Automated detection of engagement using video-based estimation of facial expressions and heart rate. IEEE Trans. Affect. Comput. 2016, 8, 15–28. [Google Scholar] [CrossRef]

- Rehman, A.U.; Shi, X.; Ullah, F.; Wang, Z.; Ma, C. Measuring student attention based on EEG brain signals using deep reinforcement learning. Expert Syst. Appl. 2025, 269, 126426. [Google Scholar] [CrossRef]

- Rehder, E.; Kloeden, H.; Stiller, C. Head detection and orientation estimation for pedestrian safety. In Proceedings of the International IEEE Conference on Intelligent Transportation Systems, Qingdao, China, 8–11 October 2014; pp. 2292–2297. [Google Scholar] [CrossRef]

- Araya, R.; Sossa-Rivera, J. Automatic Detection of Gaze and Body Orientation in Elementary School Classrooms. Front. Robot. 2021, 8, 277. [Google Scholar] [CrossRef]

- Raza, M.; Chen, Z.; Rehman, S.U.; Wang, P.; Bao, P. Appearance based pedestrians’ head pose and body orientation estimation using deep learning. Neurocomputing 2018, 272, 647–659. [Google Scholar] [CrossRef]

- Wu, C.; Chen, Y.; Luo, J.; Su, C.C.; Dawane, A.; Hanzra, B.; Deng, Z.; Liu, B.; Wang, J.Z.; Kuo, C.H. MEBOW: Monocular Estimation of Body Orientation in the Wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3451–3461. [Google Scholar] [CrossRef]

- Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO. Code Repository. 2024. Available online: https://github.com/ultralytics/yolov5 (accessed on 6 August 2025).

- Hara, K.; Chellappa, R. Growing regression tree forests by classification for continuous object pose estimation. Int. J. Comput. Vis. 2017, 122, 292–312. [Google Scholar] [CrossRef]

- Burgermeister, D.; Curio, C. PedRecNet: Multi-task deep neural network for full 3D human pose and orientation estimation. In Proceedings of the IEEE Intelligent Vehicles Symposium, Aachen, Germany, 5–9 June 2022. [Google Scholar] [CrossRef]

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshop, Seattle, WA, USA, 14–19 June 2020; pp. 390–391. [Google Scholar] [CrossRef]

- Yang, Z.; Zeng, A.; Yuan, C.; Li, Y. Effective whole-body pose estimation with two-stages distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 4210–4220. [Google Scholar] [CrossRef]

- Lin, S.; Hou, W. Efficient Sampling of Two-Stage Multi-Person Pose Estimation and Tracking from Spatiotemporal. Appl. Sci. 2024, 14, 2238. [Google Scholar] [CrossRef]

- Wang, S.; Zhang, X.; Ma, F.; Li, J.; Huang, Y. Single-stage pose estimation and joint angle extraction method for moving human body. Electronics 2023, 12, 4644. [Google Scholar] [CrossRef]

- Zhao, J.; Ye, H.; Zhan, Y.; Zhang, H. Human Orientation Estimation Under Partial Observation. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; pp. 11544–11551. [Google Scholar]

- Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503. [Google Scholar] [CrossRef]

- Wu, C.Y.; Xu, Q.; Neumann, U. Synergy between 3dmm and 3d landmarks for accurate 3d facial geometry. In Proceedings of the 2021 International Conference on 3D Vision, London, UK, 1–3 December 2021; pp. 453–463. [Google Scholar] [CrossRef]

- Nonaka, S.; Nobuhara, S.; Nishino, K. Dynamic 3D Gaze from Afar: Deep Gaze Estimation from Temporal Eye-Head-Body Coordination. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2192–2201. [Google Scholar] [CrossRef]

- Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725. [Google Scholar] [CrossRef]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar] [CrossRef]

- Zhou, H.; Jiang, F.; Si, J.; Ding, Y.; Lu, H. BPJDet: Extended Object Representation for Generic Body-Part Joint Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 4314–4330. [Google Scholar] [CrossRef] [PubMed]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755. [Google Scholar] [CrossRef]

- Jocher, G.; Qiu, J. Ultralytics YOLO11. Code Repository. 2024. Available online: https://github.com/ultralytics/ultralytics (accessed on 6 August 2025).

- Liu, Y.; Han, Y.; Zhou, H.; Li, J. Joint Multi-person Body Detection and Orientation Estimation Via One Unified Embedding. In Proceedings of the Pattern Recognition and Computer Vision, Singapore, 11–15 June 2025; pp. 467–480. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).