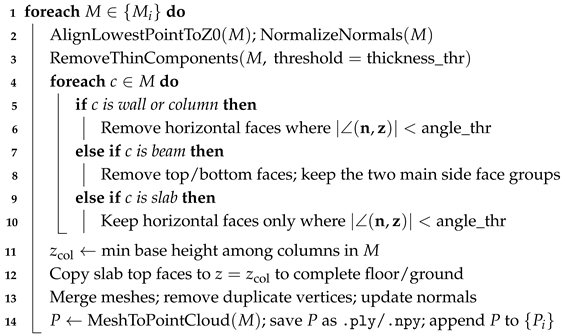

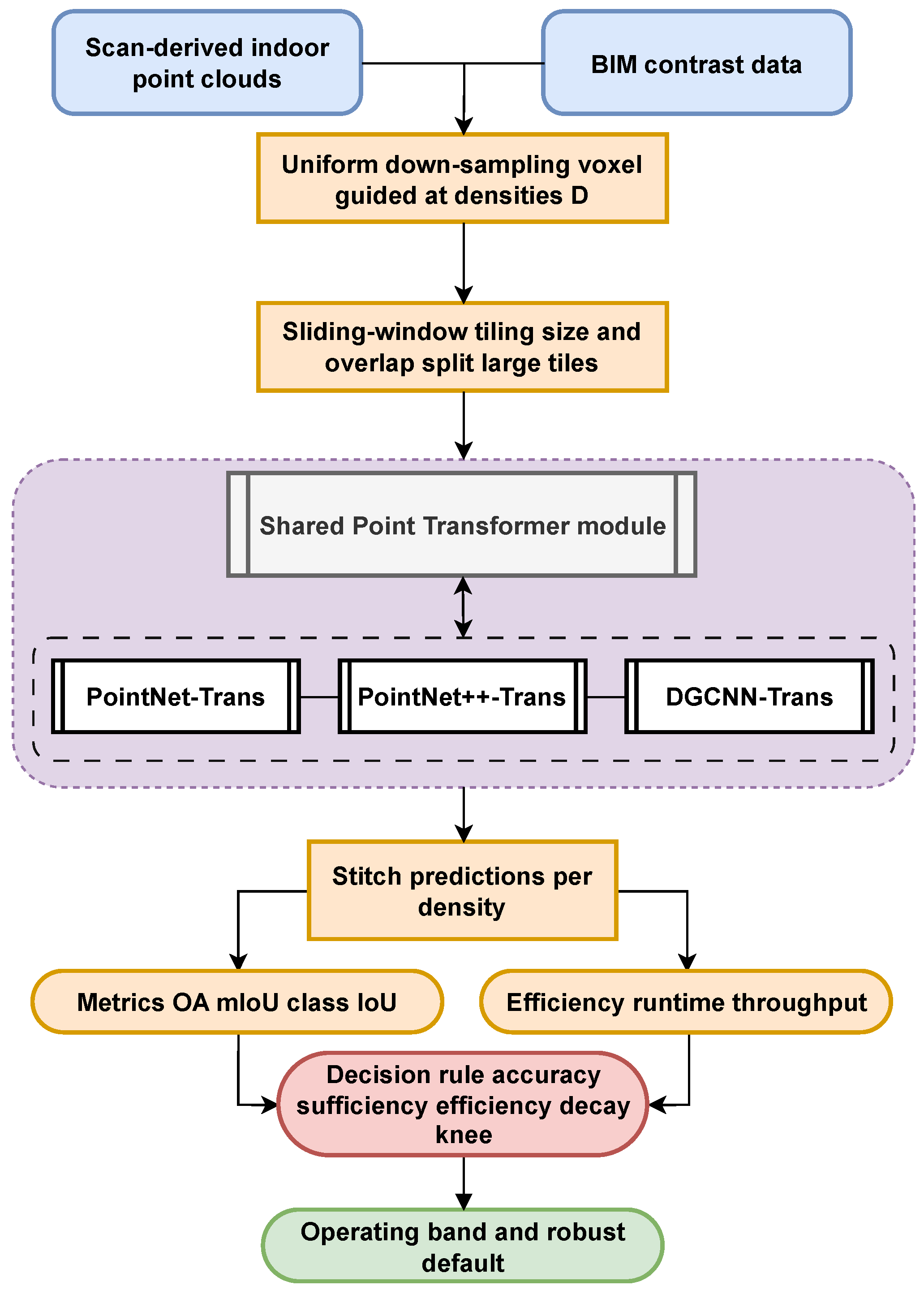

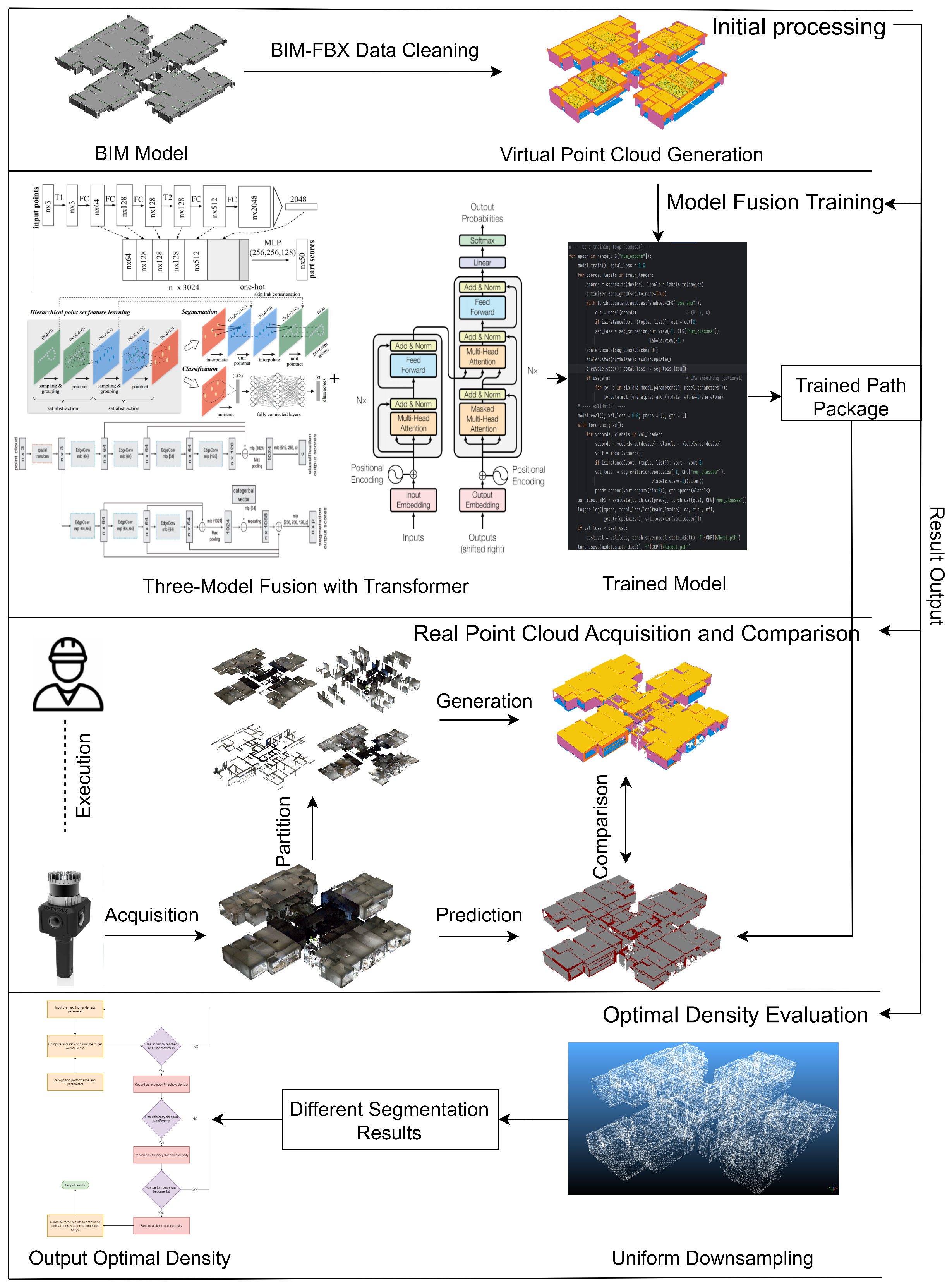

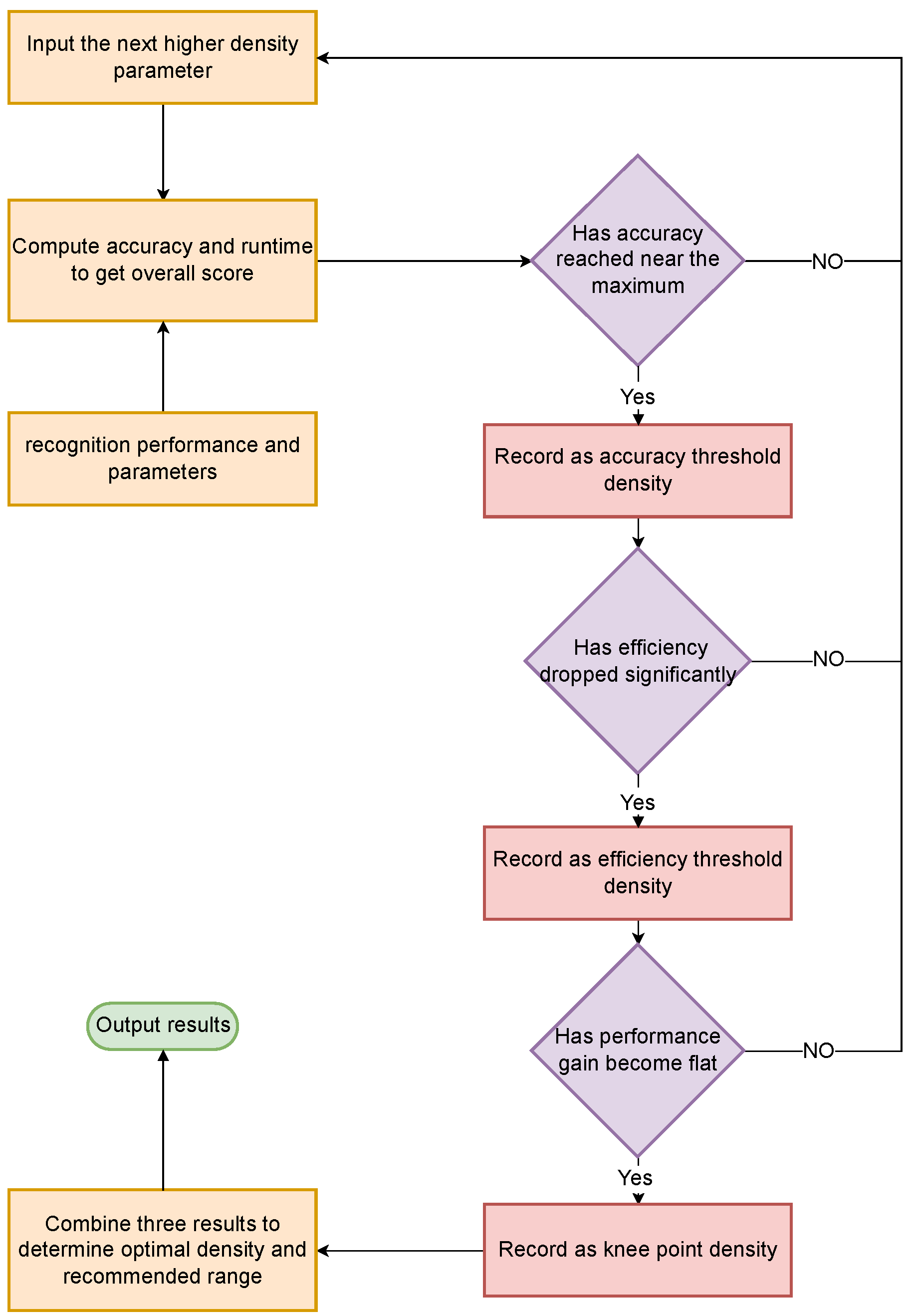

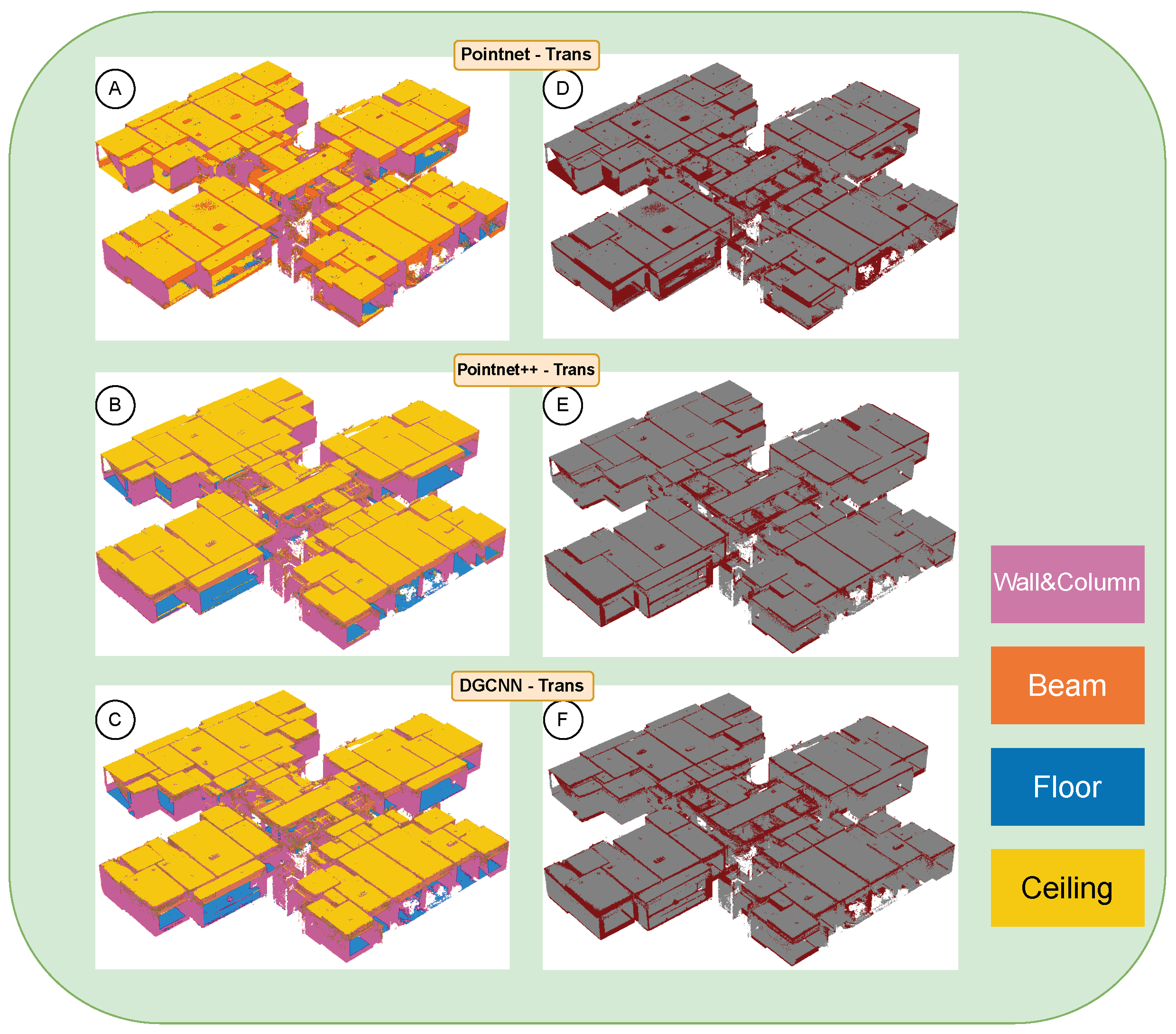

3.3.1. Model Architecture Overview

To prevent architecture-specific differences from confounding the “sampling density–performance” relationship and to examine the generality of our approach across different feature-modeling paradigms, we build a unified segmentation framework based on three classical backbones: PointNet–Trans, PointNet++–Trans, and DGCNN–Trans. Here, PointNet denotes a point-wise MLP with symmetric pooling for global feature aggregation, PointNet++ extends this with hierarchical set abstraction and local neighborhood grouping, and DGCNN encodes local topology using EdgeConv on dynamic k-nearest neighbors (k-NN) graphs. The overall model architecture is as follows: each backbone first extracts basic geometric features; a Point Transformer module (for global context aggregation) is then introduced to capture both local and global information [45]; channel-wise feature fusion and class-aware attention are applied for further refinement; and finally, a unified point-wise classification head outputs semantic probabilities for the four component categories (walls and columns, beams, floors, and ceilings) [42]. To ensure fair comparability, all three fusion pipelines use the same Transformer depth, hidden feature dimensionality, and classification head structure, and their training procedures and data preprocessing are fully aligned. This uniform configuration is designed so that, for a given backbone, performance differences can be attributed to changes in the input sampling density rather than to architectural or training differences.

To ensure broad coverage of common point-cloud feature learning strategies, we choose PointNet, PointNet++, and DGCNN as the representative backbones. PointNet uses shared MLP layers as a lightweight global feature extractor, which helps us observe the direct impact of density changes on overall representations. PointNet++ employs hierarchical sampling and local feature aggregation to capture multi-scale geometric structure, making it suitable for examining how sampling density affects local context information. DGCNN encodes neighborhood topology through EdgeConv, which enables the study of density sensitivity in local-structure learning [43,46,47]. We insert an identical Transformer module after the high-level features in all three backbones and perform feature fusion, allowing these different modeling mechanisms to accommodate the “density” variable in a consistent way. Accordingly, our analysis centers on quantifying the optimal sampling density rather than comparing the relative superiority of the models.

To keep the density–performance relation interpretable and reproducible, we deliberately select three classical yet representative backbones that span point-wise MLPs (PointNet), hierarchical grouping (PointNet++), and graph-based local topology (DGCNN). Recent state-of-the-art systems such as sparse 3D CNNs (e.g., MinkowskiNet), efficient random sampling networks (e.g., RandLA-Net), and Transformer-only encoders (e.g., Point Transformer) employ architecture-specific sparsity or sampling policies that materially change runtime/memory scaling. Introducing them here would confound “density” with model capacity and framework-level optimizations. Our objective in this study is therefore to provide a model-agnostic selector where density is the only independent variable; systematic extensions to newer backbones under the same unified protocol are planned in follow-up work.

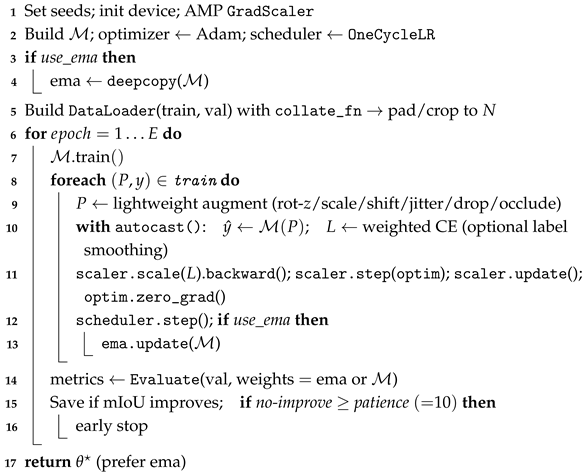

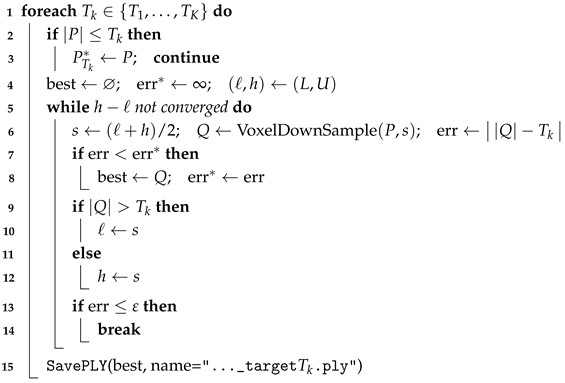

3.3.2. Training Configuration and Implementation

To ensure that “sampling density” is the only variable under comparison in our experiments, we construct a unified and reproducible training pipeline on a single GPU. For different networks, only the local feature extraction branch is changed while all other training factors remain the same. All three fused models follow the same architecture paradigm—local encoding, Point Transformer–based global context, class-aware attention, and point-wise classification head—differing only in whether the local branch is PointNet, PointNet++, or DGCNN. Real point clouds are used solely as an external test set and are not included in training in order to avoid any distribution bias.

The training data consist of the tiled virtual point clouds prepared in Section 3.2. To eliminate any influence from varying input sizes, we use a custom collate_fn that pads or crops each sample to a fixed number of points N. In addition, automatic mixed precision (AMP), Exponential Moving Average (EMA) weight updates, learning rate scheduling, and early stopping are applied uniformly in all experiments [48].

We use Adam (initial learning rate

, weight decay

) as the optimizer, combined with a

OneCycleLR learning rate schedule that first increases and then decreases the rate [

49]. This “rise-then-fall” policy reduces oscillations in early training and accelerates convergence. An Exponential Moving Average (EMA) of the model weights is maintained to smooth out parameter updates and improve generalization [

50], and the EMA weights are used for inference during validation and testing. This choice follows classical Polyak averaging, which is known to produce more stable parameter estimates in stochastic optimization [

51]. We set the maximum training epochs to 500 and enable Early Stopping with a patience of 10 epochs [

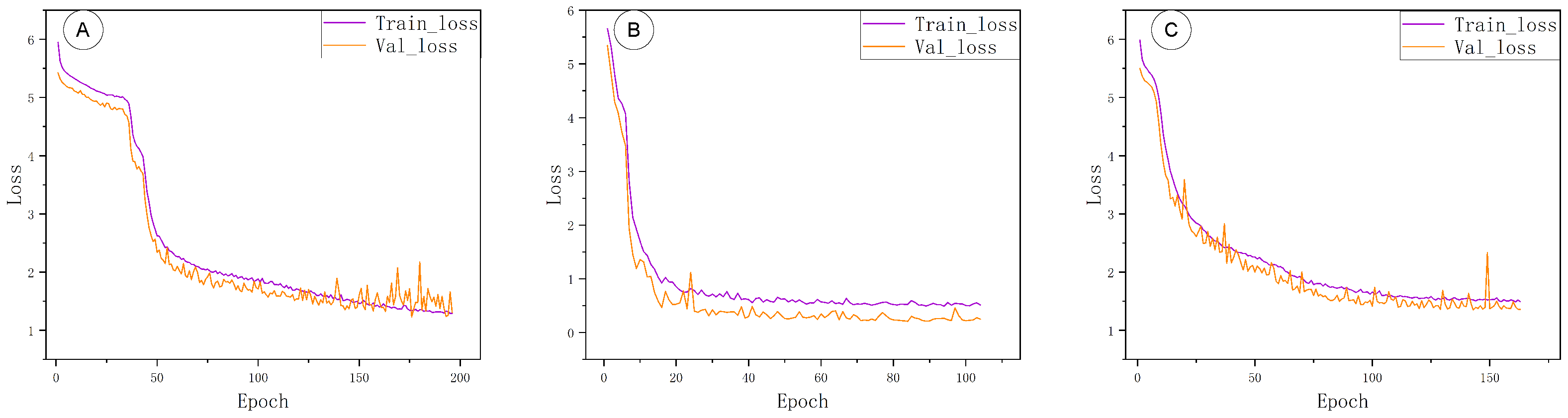

52]. If the validation metrics show no significant improvement for 10 consecutive epochs, training is automatically terminated; conversely, if the model converges earlier, we stop training to avoid overfitting and reduce unnecessary computation.
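The early-stopping rule described above can be sketched in a few lines. This is a minimal illustration rather than the training code used in the study: the class name `EarlyStopping`, the helper `epochs_run`, and the `min_delta` threshold for a "significant" improvement are assumptions introduced here; only the patience of 10 and the 500-epoch cap come from the text.

```python
class EarlyStopping:
    """Signal a stop when the monitored metric has not improved for `patience` epochs."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # hypothetical threshold for a "significant" gain
        self.best = float("-inf")
        self.bad_epochs = 0

    def step(self, metric):
        """Record one epoch's validation metric; return True if training should stop."""
        if metric > self.best + self.min_delta:
            self.best = metric
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def epochs_run(val_scores, patience=10, max_epochs=500):
    """Return how many epochs would run for a given sequence of validation scores."""
    stopper = EarlyStopping(patience=patience)
    for epoch, score in enumerate(val_scores[:max_epochs], start=1):
        if stopper.step(score):
            return epoch
    return min(len(val_scores), max_epochs)
```

In this sketch the metric is assumed to be "higher is better" (for example, validation mIoU); a loss-based criterion would flip the comparison.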

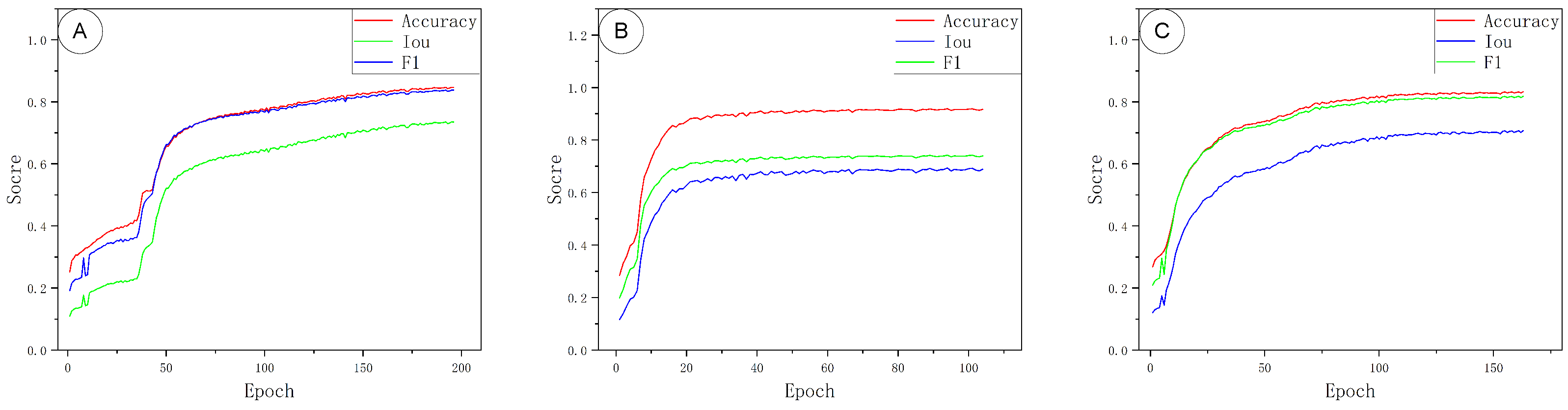

Data augmentation is decoupled from sampling density and includes random rotation about the Z-axis, isotropic scaling, random translation, Gaussian jitter, small-percentage point dropping, and local occlusion. The augmentation magnitudes and probabilities are kept identical across all experiments and do not change the sampling density itself. The evaluation protocol is also consistent between training and validation: at each epoch we compute Overall Accuracy (OA), mean Intersection-over-Union (mIoU), macro F1-score, and per-class IoU, and we generate a confusion matrix for error analysis. Here, OA denotes the fraction of correctly classified points; mIoU is the mean of class-wise intersection-over-union; macro F1-score is the unweighted average of per-class F1; and IoU is defined as

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN},$$

where TP, FP, and FN denote the numbers of true-positive, false-positive, and false-negative points for a given class. The best model weights are saved based on validation performance, and Early Stopping is triggered as described above if applicable. The parameter configurations for training and evaluation are summarized in Table 1. We adopt Adam for adaptive moment estimation with per-parameter step sizes and bias-corrected first/second moments, a widely validated choice for deep architectures in practice [53]. For clarity, AMP denotes automatic mixed precision to reduce memory use and improve throughput, and EMA denotes the exponential moving average of model weights for more stable evaluation.
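As an illustration of the augmentations listed at the start of this paragraph, the sketch below applies a random rotation about the Z-axis, isotropic scaling, translation, Gaussian jitter, and random point dropping to one tile. The function name `augment_tile` and all default magnitudes are placeholders chosen for the example, not the values used in our experiments, and local occlusion is omitted for brevity.

```python
import math
import random


def augment_tile(points, labels, rng, max_scale=0.1, max_shift=0.2,
                 sigma=0.01, drop_prob=0.05):
    """Augment one tile given as lists of (x, y, z) tuples and integer labels.

    All magnitudes are hypothetical defaults for illustration only.
    """
    theta = rng.uniform(0.0, 2.0 * math.pi)            # rotation angle about Z
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    scale = 1.0 + rng.uniform(-max_scale, max_scale)   # one factor for all axes
    shift = [rng.uniform(-max_shift, max_shift) for _ in range(3)]

    out_points, out_labels = [], []
    for (x, y, z), label in zip(points, labels):
        if rng.random() < drop_prob:                   # drop point and label together
            continue
        rotated = (cos_t * x - sin_t * y, sin_t * x + cos_t * y, z)
        out_points.append(tuple(
            scale * v + t + rng.gauss(0.0, sigma)      # scale, translate, jitter
            for v, t in zip(rotated, shift)
        ))
        out_labels.append(label)
    return out_points, out_labels
```

Because the rotation is restricted to the Z-axis, vertical structure such as the floor-to-ceiling ordering is preserved, which matters for the four component classes considered here.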

To keep sampling density as the sole comparative variable, we apply automatic mixed precision (AMP), exponential moving average (EMA) of weights, a single learning-rate schedule, and early stopping uniformly across all experiments. AMP reduces GPU memory and wall-time at the same batch size while preserving numerical stability via dynamic loss scaling; EMA yields lower-variance validation estimates under identical data and training length; a single schedule (OneCycleLR with the same maximum learning rate as in Table 1) harmonizes convergence behavior across backbones; and early stopping (patience = 10 on validation metrics) prevents over-training from confounding density effects. These controls standardize optimization and evaluation so that any observed differences arise from density rather than training heuristics.
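To make the rise-then-fall shape of the shared schedule concrete, the following sketch computes a one-cycle learning rate with cosine interpolation. It is a simplified stand-in, not PyTorch's `OneCycleLR` itself: the parameter names mirror that scheduler's `max_lr`, `pct_start`, `div_factor`, and `final_div_factor`, but the step indexing is simplified, and any learning-rate value passed in is a placeholder rather than the setting from Table 1.

```python
import math


def one_cycle_lr(step, total_steps, max_lr, pct_start=0.3,
                 div_factor=25.0, final_div_factor=1e4):
    """Learning rate at `step` (0-based) for a simplified one-cycle policy."""

    def cos_interp(start, end, frac):
        # Moves smoothly from `start` (frac = 0) to `end` (frac = 1).
        return end + (start - end) * 0.5 * (1.0 + math.cos(math.pi * frac))

    initial_lr = max_lr / div_factor
    final_lr = initial_lr / final_div_factor
    peak_step = max(1, int(pct_start * total_steps))

    if step <= peak_step:  # warm-up phase: initial_lr -> max_lr
        return cos_interp(initial_lr, max_lr, step / peak_step)
    # annealing phase: max_lr -> final_lr
    remaining = max(1, total_steps - 1 - peak_step)
    return cos_interp(max_lr, final_lr, (step - peak_step) / remaining)
```

Using the same function and arguments for every backbone and density level is what keeps the schedule from acting as a hidden variable.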

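For the loss function, a point-wise weighted cross-entropy is adopted to mitigate class imbalance among components. It is defined as

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} w_c\, y_{i,c}\,\log p_{i,c},$$

where $y_{i,c}$ denotes the one-hot label, $p_{i,c}$ the predicted probability, and $w_c$ the class weight, with $N$ the number of points and $C$ the number of classes. With label smoothing enabled ($\varepsilon > 0$), the labels are rewritten as

$$\tilde{y}_{i,c} = (1-\varepsilon)\,y_{i,c} + \frac{\varepsilon}{C}.$$

The Exponential Moving Average weights are updated by

$$\theta_{\mathrm{EMA}} \leftarrow \alpha\,\theta_{\mathrm{EMA}} + (1-\alpha)\,\theta.$$

Here, $\alpha$ controls the averaging window (larger $\alpha$ gives heavier smoothing and slower adaptation).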
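The loss and the EMA update described above can be sketched as follows. This is a minimal illustration under stated assumptions: the loss is averaged over the non-ignored points, label smoothing spreads the smoothing mass uniformly over the classes, and the function names, the `ignore_label` value, and the default decay are hypothetical choices made for the example.

```python
import math


def smooth_labels(label, num_classes, eps):
    """One-hot target for `label`, softened by uniform label smoothing."""
    return [(1.0 - eps) * (1.0 if c == label else 0.0) + eps / num_classes
            for c in range(num_classes)]


def weighted_cross_entropy(probs, labels, class_weights, eps=0.0, ignore_label=-1):
    """Mean class-weighted cross-entropy over points whose label is not ignored.

    probs: per-point lists of class probabilities; labels: per-point class ids.
    """
    total, count = 0.0, 0
    for p, y in zip(probs, labels):
        if y == ignore_label:  # padded points do not contribute
            continue
        target = smooth_labels(y, len(p), eps)
        total += -sum(w * t * math.log(max(q, 1e-12))
                      for w, t, q in zip(class_weights, target, p))
        count += 1
    return total / max(count, 1)


def ema_update(ema_params, params, alpha=0.999):
    """One EMA step: keep a fraction alpha of the old average, blend in the rest."""
    return [alpha * e + (1.0 - alpha) * p for e, p in zip(ema_params, params)]
```

A larger class weight scales up the penalty for mistakes on that class, which is how the weighting counteracts under-represented components.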

During validation and testing, EMA weights are used for inference to obtain more stable evaluations. At this point, all non-density factors in training have been unified, ensuring that density remains the only comparative variable in subsequent experiments; the concrete implementation is provided in Algorithm 2.

Algorithm 2: Training pipeline for point-cloud segmentation.

Input: Virtual point-cloud dataset (blocks), model ∈ {PointNet–Trans, PointNet++–Trans, DGCNN–Trans}, epochs E, fixed point number N

Output: Best weights (EMA preferred)

![Sensors 25 06398 i002]()

Training and evaluation settings are kept identical across all density levels: a fixed number N of per-tile normalized points; a fixed batch size; a maximum of 500 epochs with early stopping (patience of 10 epochs); Adam optimizer (fixed initial learning rate and weight decay) with OneCycleLR; AMP and EMA enabled; augmentations comprising rotation about the Z axis, isotropic scaling, translation, Gaussian jitter, and sparse point dropping; and validation metrics OA, mIoU, and macro-F1.

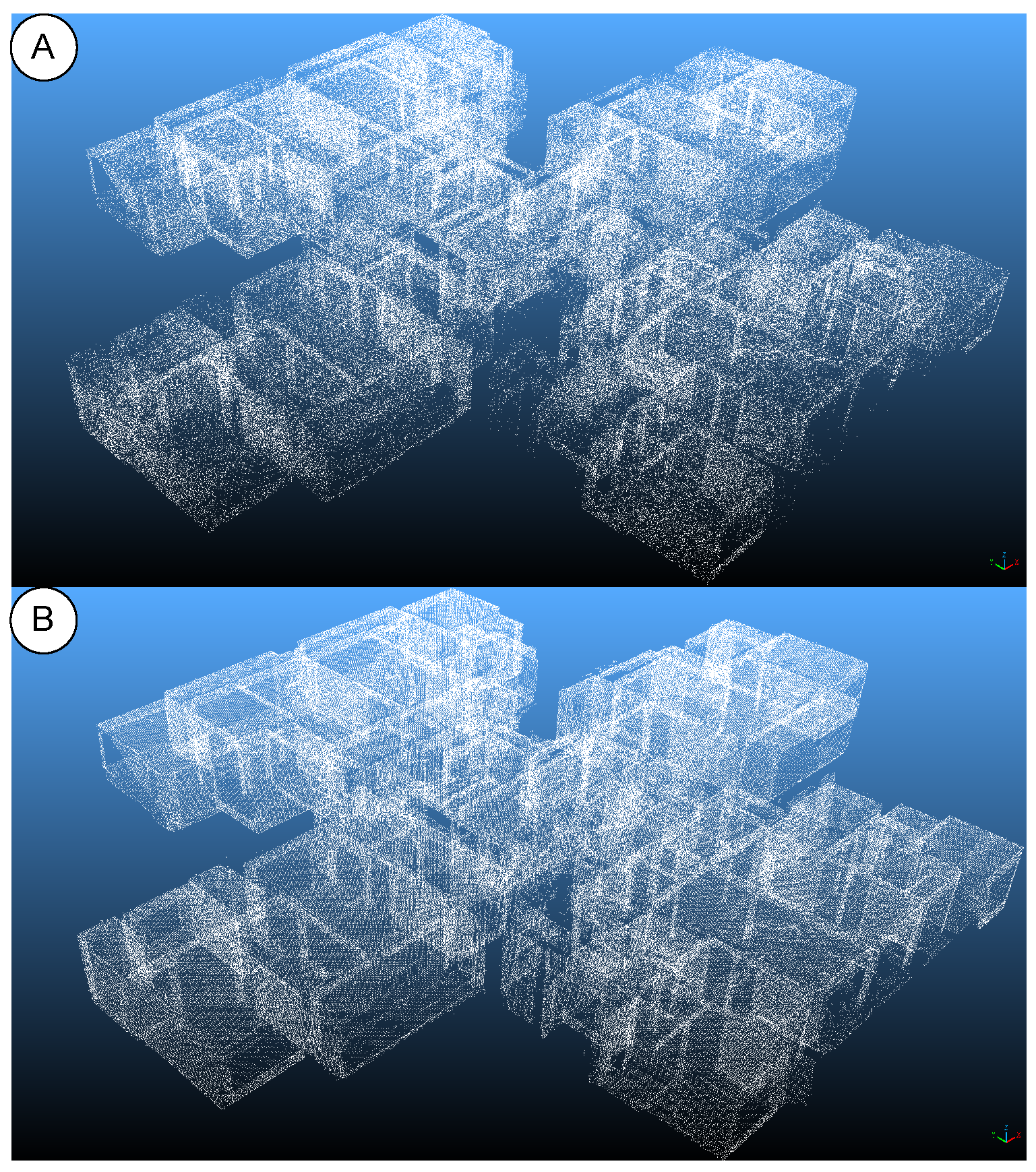

In our DataLoader, the collate function standardizes each sample to a fixed budget of N points: if a tile contains more than N points, it is randomly subsampled; if it contains fewer, we pad the coordinates/features to N and set the extra labels to an ignore_label so that padded points can be excluded from the loss and the metrics. During training we also apply lightweight augmentations (rotation about Z, isotropic scaling, translation, jitter, sparse point dropping) within the collate stage, whereas validation only pads. The resulting batch tensors have shapes B × N × D for coordinates/features and B × N for labels, where B is the batch size and D is the per-point feature dimension, ensuring that batching effects do not confound density.
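The pad-or-crop step of the collate function can be sketched as below. This is an illustrative stand-in written with plain Python lists rather than tensors; the names `fix_size` and `collate`, the `IGNORE_LABEL` value of -1, and zero-padding of the coordinates are assumptions made for the example, and augmentation is left out.

```python
import random

IGNORE_LABEL = -1  # hypothetical value; any label outside the class ids works


def fix_size(points, labels, n, rng, ignore_label=IGNORE_LABEL):
    """Randomly subsample to n points, or pad with zeros and ignore labels."""
    m = len(points)
    if m > n:
        keep = sorted(rng.sample(range(m), n))  # random subset, original order kept
        return [points[i] for i in keep], [labels[i] for i in keep]
    pad = n - m
    return (points + [(0.0, 0.0, 0.0)] * pad,   # assumed zero padding
            labels + [ignore_label] * pad)


def collate(batch, n, seed=0):
    """Turn a list of (points, labels) tiles into two B x N nested lists."""
    rng = random.Random(seed)
    coords, labels = [], []
    for pts, lab in batch:
        p, l = fix_size(list(pts), list(lab), n, rng)
        coords.append(p)
        labels.append(l)
    return coords, labels
```

In a PyTorch pipeline a function of this kind would be passed as the `collate_fn` argument of the `DataLoader`, with the nested lists converted to tensors before being returned.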

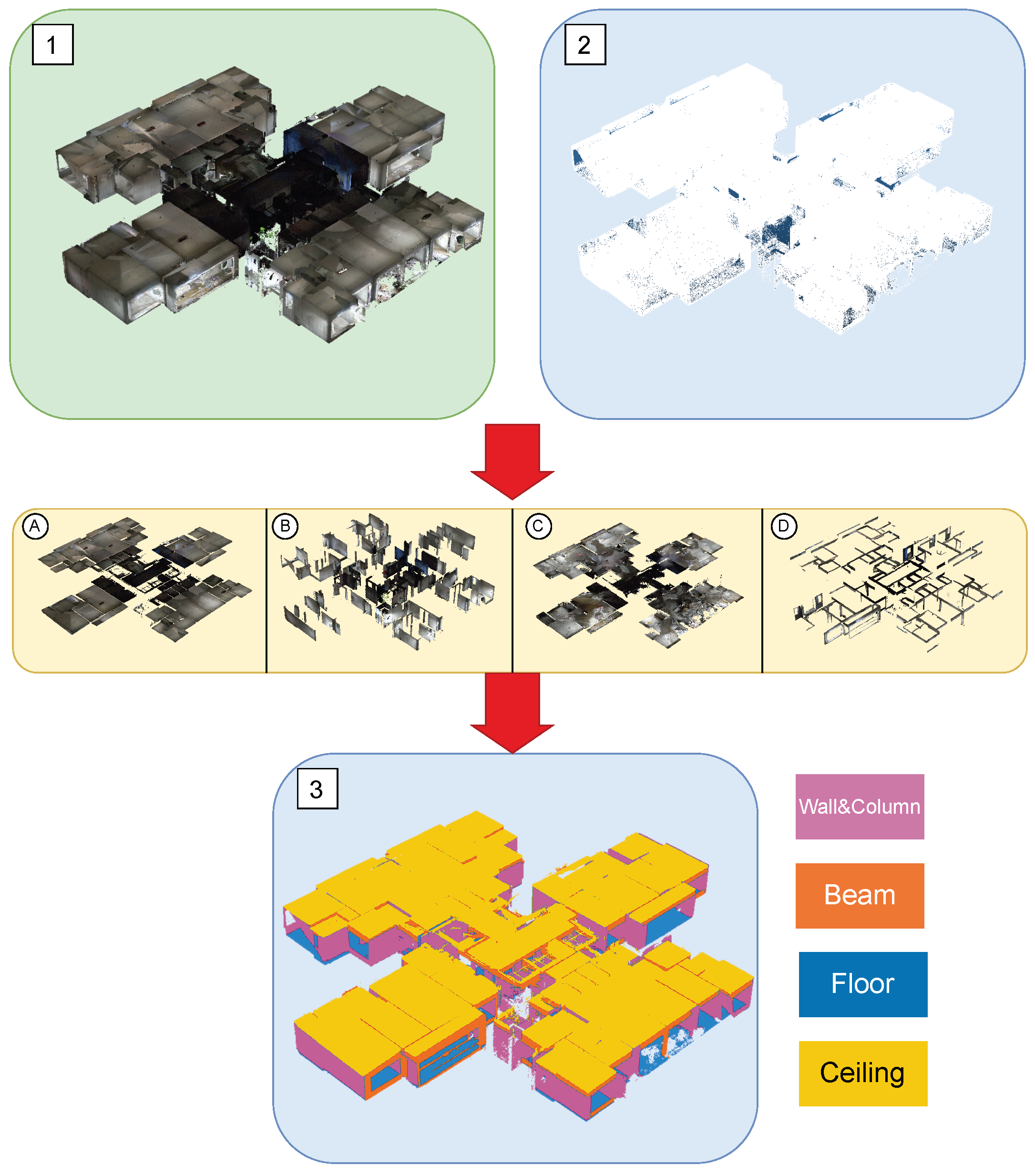

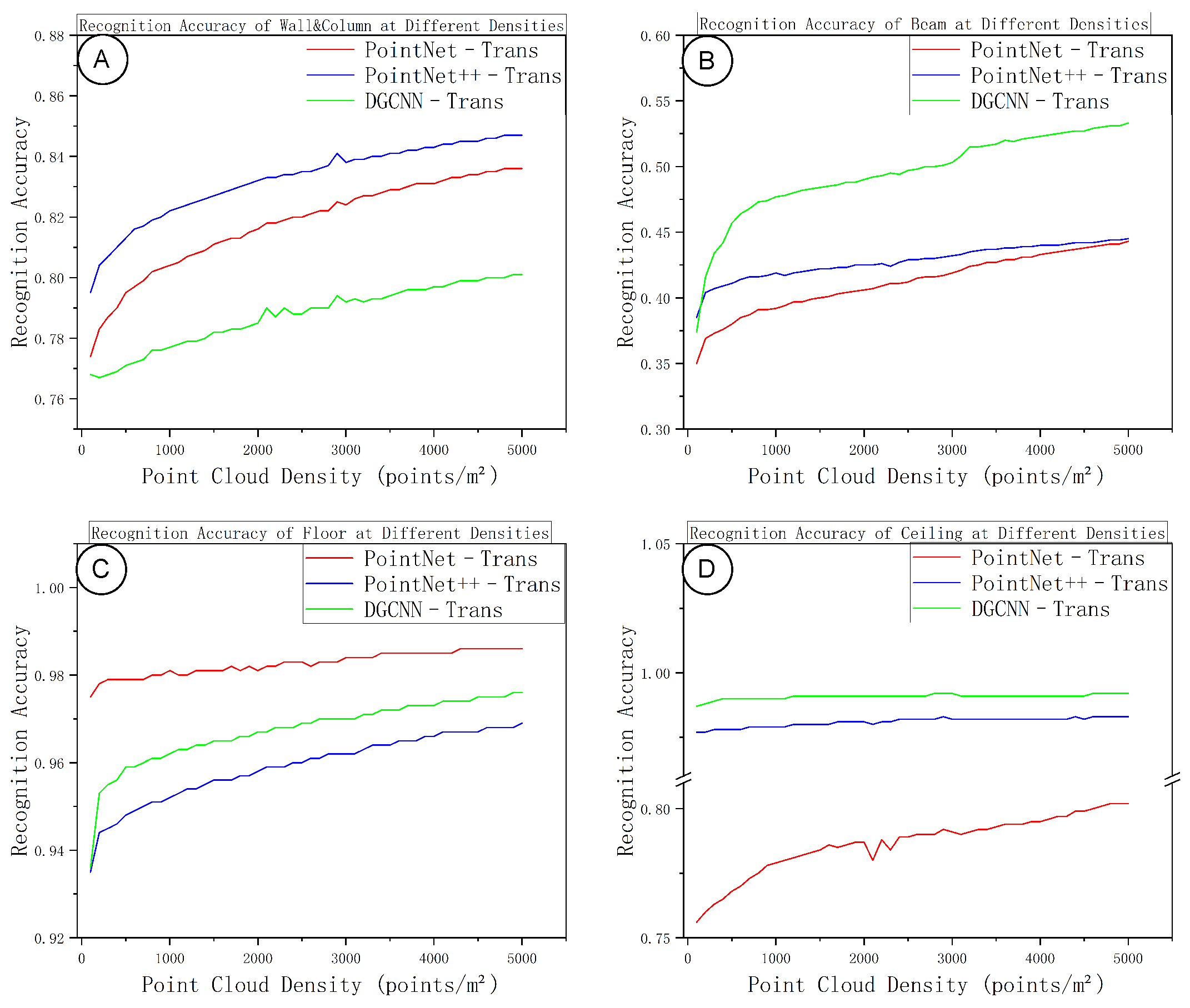

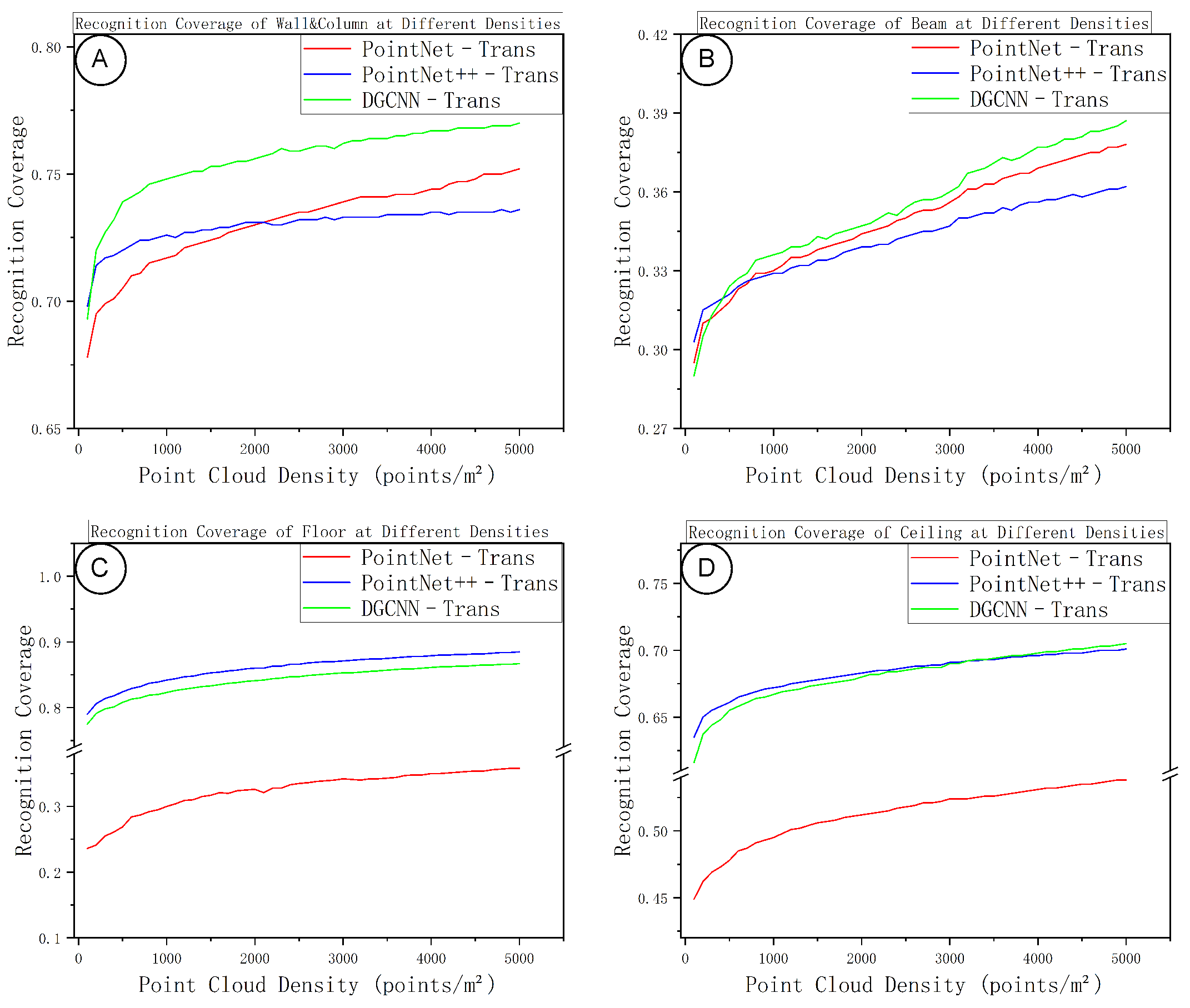

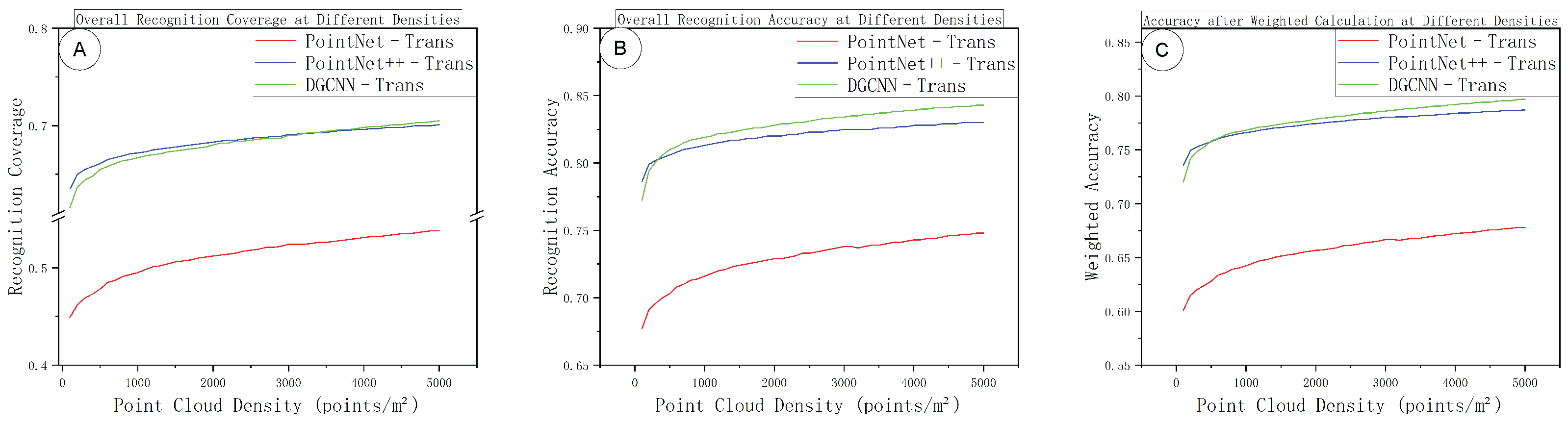

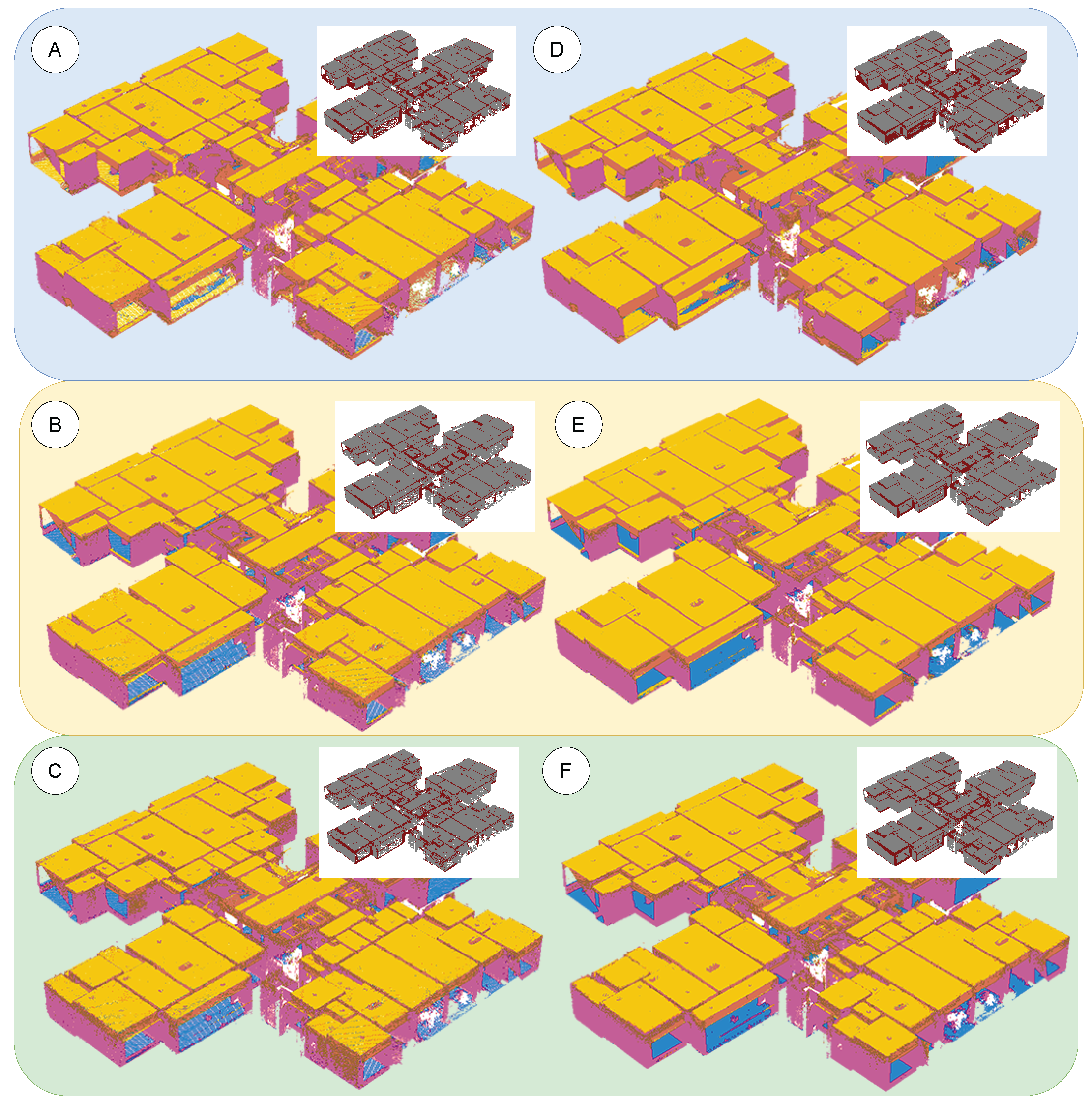

After training, the model is applied to inference on real point clouds. In the testing phase, we reuse the same tiling and overlap strategy as in training, segmenting the input point cloud block by block and performing voting fusion in overlapping regions to reconstruct complete component-level semantic labels. The results are then compared with those from synthetic data to assess recognition performance across data sources. The classification system includes four component categories—walls and columns (0), beams (1), floors (2), and ceilings (3)—and we compute category-wise accuracy and coverage to analyze class-level differences.
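The fusion of overlapping tiles can be illustrated with a simple majority vote over hard per-tile predictions. This is a sketch under assumptions: the text above does not specify whether votes are cast on hard labels or on averaged class probabilities, so the majority rule, the tie-breaking toward the smaller class id, and the function name `fuse_votes` are choices made here for illustration.

```python
from collections import Counter, defaultdict


def fuse_votes(tile_predictions, num_points, unlabeled=-1):
    """Merge per-tile predictions into one label per point of the full cloud.

    tile_predictions: iterable of (point_indices, predicted_labels) pairs, where
    point_indices refer to positions in the full point cloud.
    """
    votes = defaultdict(Counter)
    for indices, preds in tile_predictions:
        for idx, cls in zip(indices, preds):
            votes[idx][cls] += 1  # one vote per tile covering this point

    fused = [unlabeled] * num_points
    for idx, counter in votes.items():
        top = max(counter.values())
        # Ties are broken toward the smallest class id (an arbitrary choice).
        fused[idx] = min(cls for cls, v in counter.items() if v == top)
    return fused
```

Points that no tile covers keep the `unlabeled` marker, which makes gaps in the tiling easy to detect before metrics are computed.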

The evaluation metrics remain consistent with those used during training and validation: at each epoch we compute Overall Accuracy (OA), mean Intersection over Union (mIoU), macro-F1, and per-class IoU, and generate a confusion matrix for error diagnosis. IoU measures the overlap between predictions and ground truth; mIoU is the arithmetic mean of per-class IoUs; F1 combines precision and recall to characterize overall classification effectiveness.
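All of these metrics follow from the confusion matrix, as the sketch below shows for OA, per-class IoU, mIoU, and macro-F1. The function names are illustrative, and classes that never occur in either the labels or the predictions are assigned a score of zero here, which is one possible convention rather than necessarily the one used in our evaluation.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts points of true class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def segmentation_metrics(cm):
    """Compute OA, per-class IoU, mIoU, and macro-F1 from a confusion matrix."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    ious, f1s = [], []
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                         # class c points labeled otherwise
        fp = sum(cm[r][c] for r in range(k)) - tp    # other points labeled as c
        ious.append(tp / (tp + fp + fn) if tp + fp + fn else 0.0)
        f1s.append(2 * tp / (2 * tp + fp + fn) if 2 * tp + fp + fn else 0.0)
    oa = sum(cm[c][c] for c in range(k)) / total if total else 0.0
    return {"OA": oa, "IoU": ious, "mIoU": sum(ious) / k, "macro_F1": sum(f1s) / k}
```

Because mIoU and macro-F1 average over classes without weighting by point count, a rare class such as beams influences them as much as a dominant class such as walls, whereas OA is dominated by the most frequent classes.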

To ensure comparability and reproducibility, the three fused models use exactly the same settings for batch size, maximum epochs, augmentation magnitude, optimization and scheduling, Early Stopping, EMA, and the evaluation protocol [6].