Intelligent Classification of Urban Noise Sources Using TinyML: Towards Efficient Noise Management in Smart Cities

Abstract

Highlights

- An efficient TinyML system was deployed for real-time, on-device classification of urban noise, achieving high accuracy (precision/recall up to 1.00).

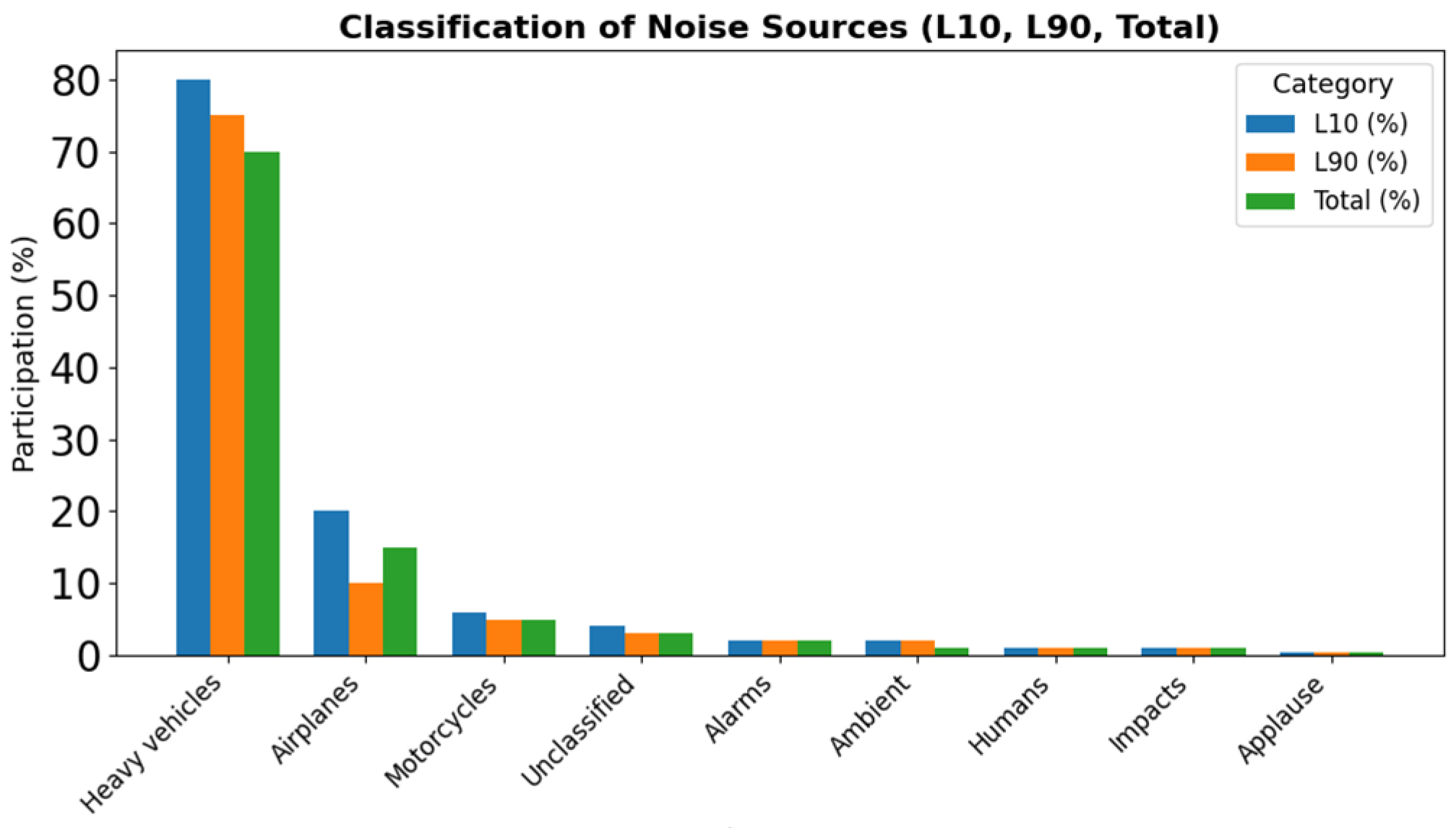

- Heavy vehicles were identified as the most frequent noise sources, whereas aircraft generated the highest A-weighted maximum sound pressure levels (LA,max = 88.4 dB(A)), exceeding the local permissible limit by up to 18 dB(A).

- The study shows that TinyML is a viable solution for dense, permanent noise monitoring networks that identify specific sources rather than only measuring sound levels.

- It enables targeted noise mitigation policies by pinpointing the most impactful contributors to urban noise pollution.

1. Introduction

2. Related Work

3. Materials and Methods

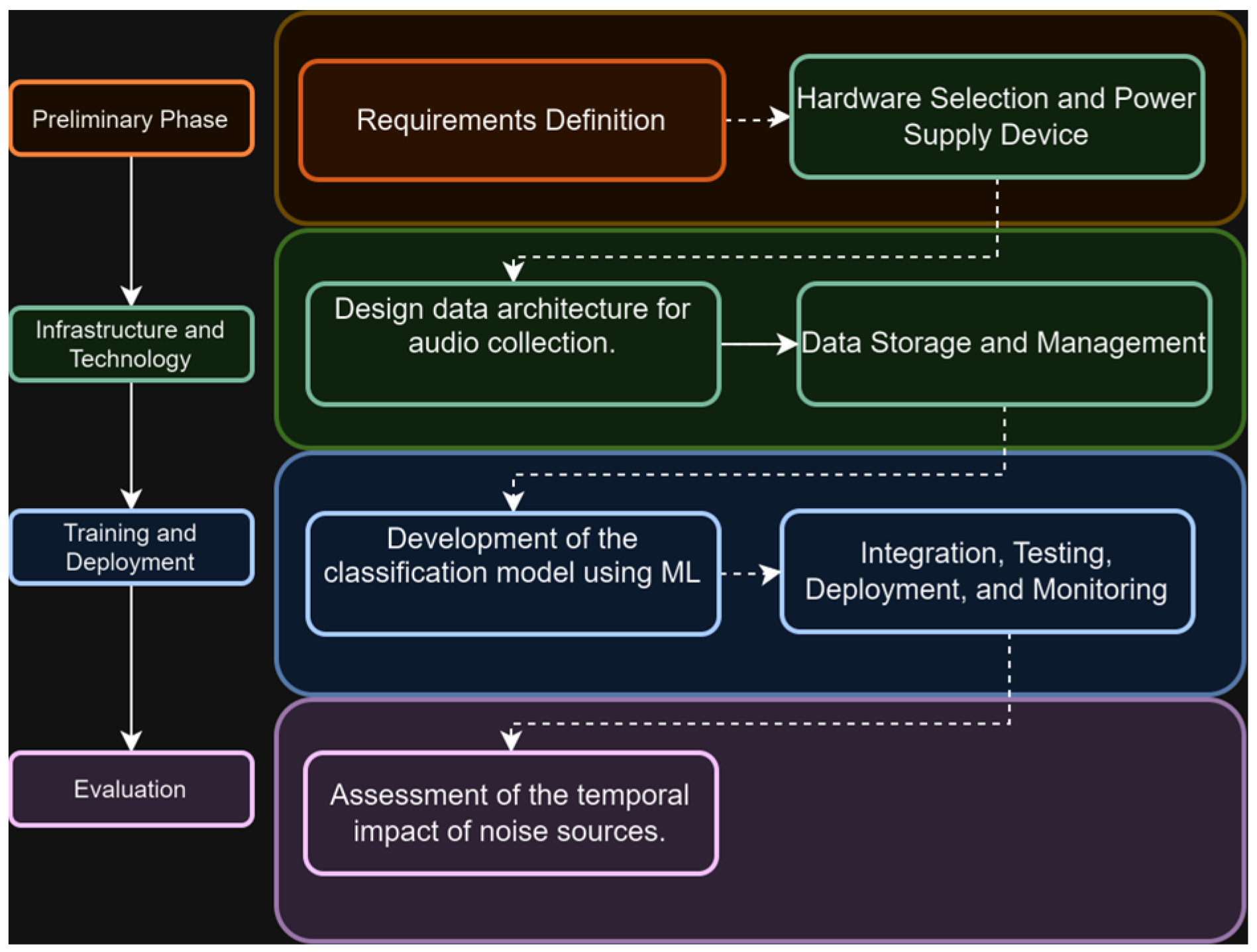

3.1. Preliminary Phase

3.1.1. Requirements Definition

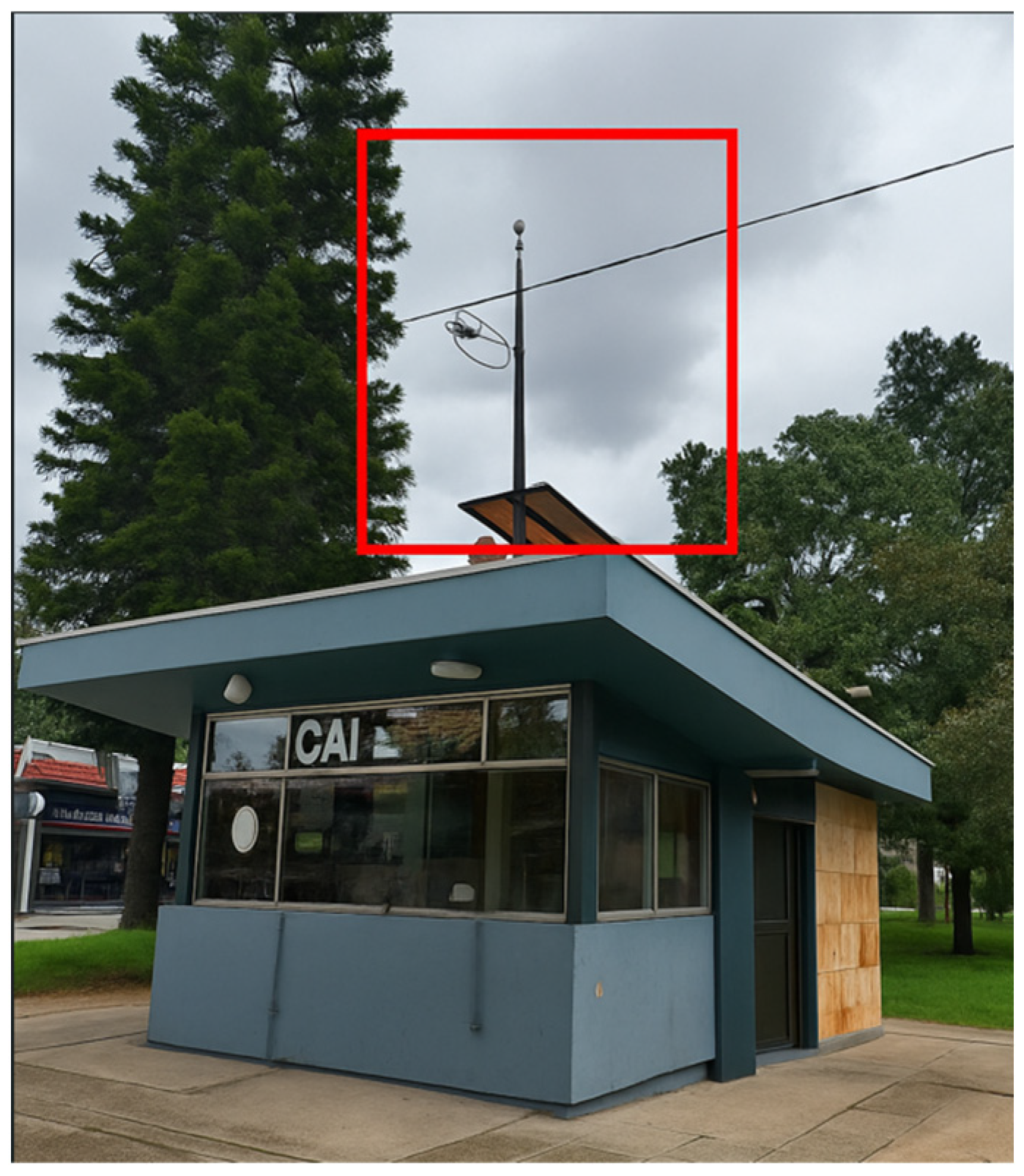

3.1.2. Hardware and Power Supply Device Selection

- Microphone: Based on the comparison results, the most cost-efficient microphone was the UMIK-1 (see Figure 2), whose audio does not suffer from high distortion during long recordings. However, its weather protection had to be improved, and its audio had to be filtered before being passed to the machine learning model.

- Development board: The selected development board, the Raspberry Pi Zero 2 W [22], stands out for its compact design and low power consumption compared with other Raspberry Pi models. It offers 512 MB of RAM, which is sufficient for lightweight applications such as basic real-time data collection, simple IoT projects, or educational use. It also integrates a quad-core ARM Cortex-A53 processor, which enables efficient processing while keeping energy requirements low. Storage relies on microSD cards, which gives flexibility in capacity and makes system updates easy. These characteristics make it an attractive low-cost option for projects that must balance performance, portability, and energy efficiency.

- System structure: For the power supply, a PS 90 solar panel with a nominal power of 90 W [23] was incorporated. The panel can deliver a maximum current of 4.33 A at an operating voltage of 18.625 V, which makes it suitable for small to medium-scale systems. Its open-circuit voltage is 21.96 V and its short-circuit current is 4.69 A, which provides a safety margin when environmental conditions fluctuate. At 7.2 kg and 905 × 673 × 35 mm, the panel is manageable and fits installations with limited space or specific mounting requirements.
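
The electrical figures quoted for the solar panel can be checked with a few lines of arithmetic. The sketch below is only an illustration: it uses the four values given in the text, and the function names are hypothetical. It computes the power at the quoted operating point (about 80.6 W, the product of the two quoted values) and the headroom between that operating point and the open-circuit and short-circuit limits.

```python
# Electrical figures quoted in the text for the PS 90 panel.
V_MP = 18.625  # operating voltage, V
I_MP = 4.33    # maximum current at the operating voltage, A
V_OC = 21.96   # open-circuit voltage, V
I_SC = 4.69    # short-circuit current, A


def operating_power(v_mp: float, i_mp: float) -> float:
    """Power delivered at the quoted operating point, in watts."""
    return v_mp * i_mp


def headroom(v_mp: float, i_mp: float, v_oc: float, i_sc: float) -> tuple:
    """Voltage and current margin between the operating point and the limits."""
    return v_oc - v_mp, i_sc - i_mp


power_w = operating_power(V_MP, I_MP)
dv, di = headroom(V_MP, I_MP, V_OC, I_SC)

print(f"Power at operating point: {power_w:.1f} W")
print(f"Voltage headroom: {dv:.3f} V, current headroom: {di:.2f} A")
```

The same two functions can be reused with the values of any other panel under consideration.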

3.1.3. Infrastructure, Technology, and Data Architecture for Audio Collection

3.1.4. Data Storage and Management

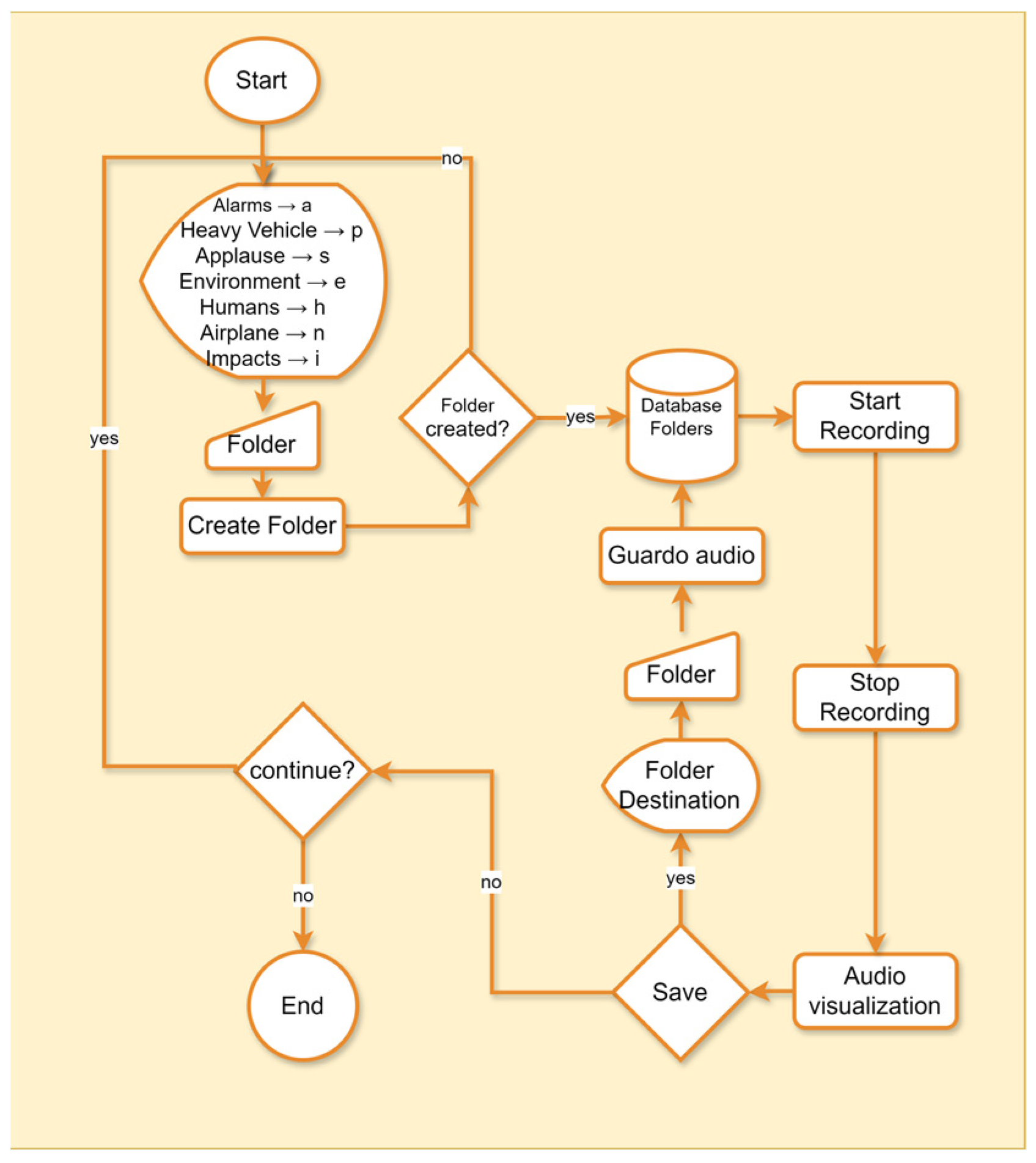

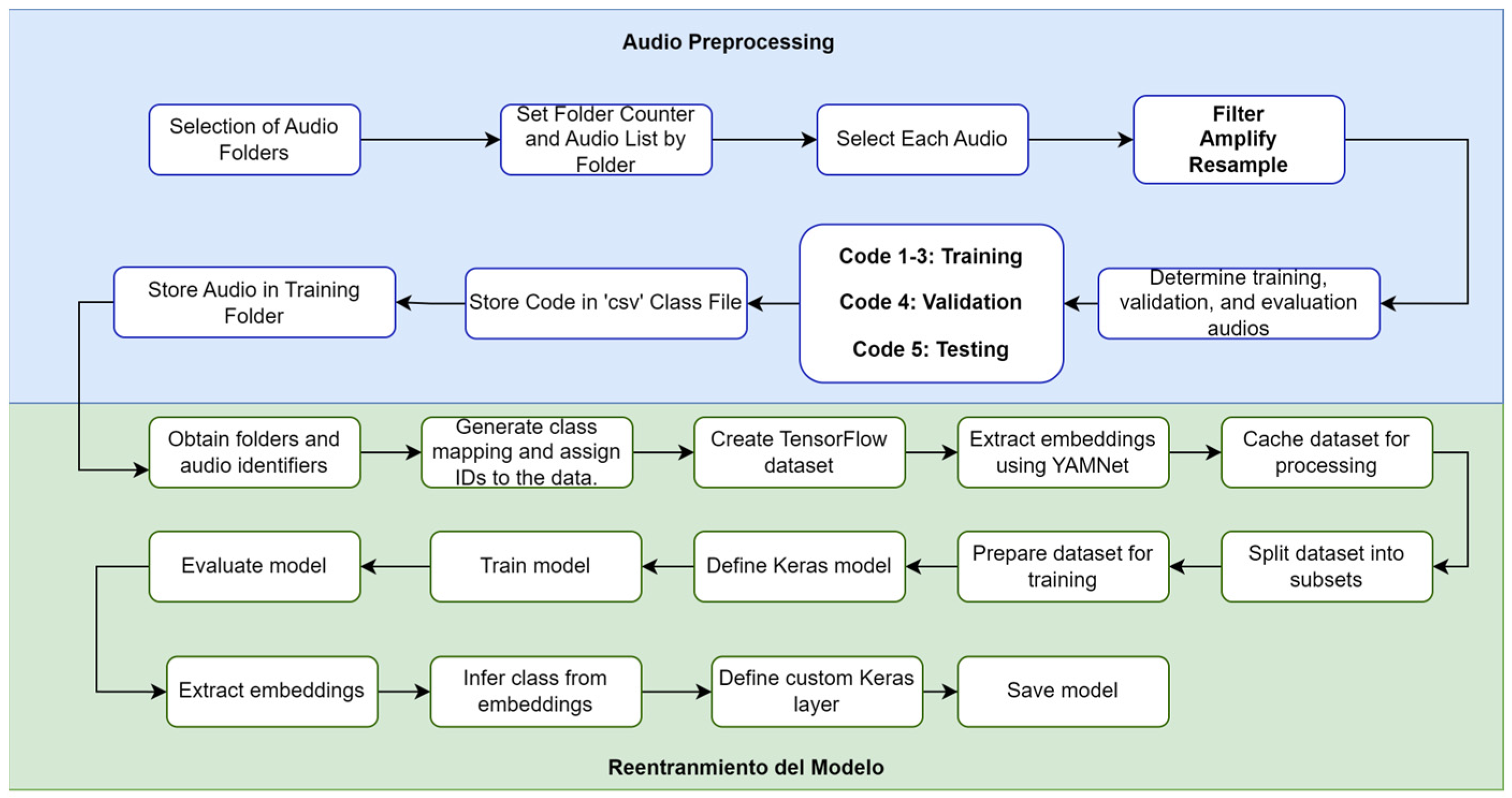

3.2. Dataset Preparation and Audio Processing

3.2.1. Model Re-Training

3.2.2. Pre-Processing and Labeling

3.2.3. Model Adapted to a Tiny Device

3.2.4. Integration, Testing, Deployment and Monitoring

3.2.5. Validation Against Reference Sound Measurement Systems

4. Results

Results of the Implementation of the Embedded System for Data Acquisition

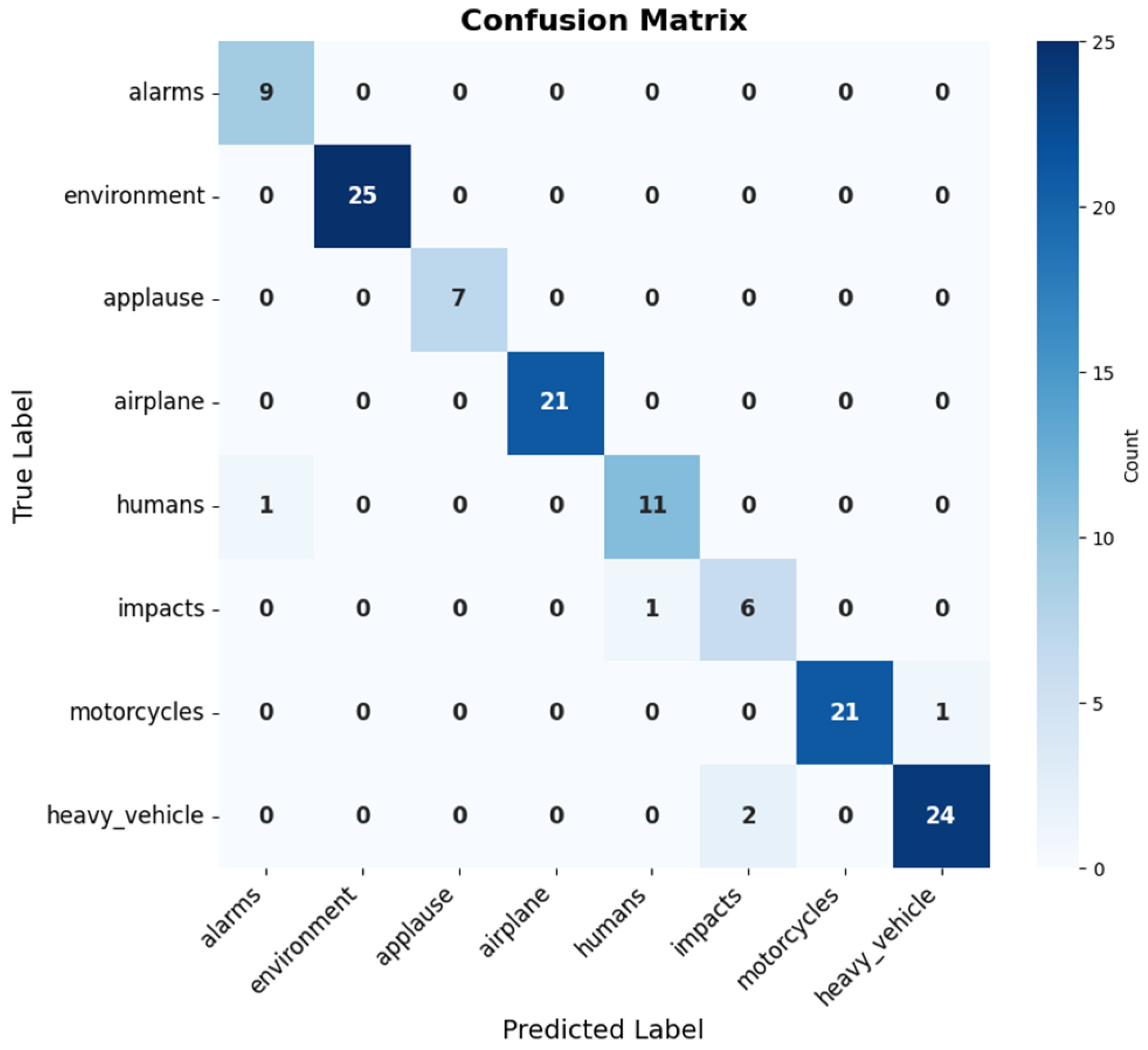

- Two audio recordings of impacts were classified as heavy vehicles.

- One recording from the alarm class was classified as human.

- One recording from the motorcycle class was classified as airplane.

- One recording from the heavy vehicle class was classified as motorcycle.

- Analysis of Daytime (LD) and Nighttime (LN) Noise:

  - Daytime (LD): The A-weighted equivalent continuous sound pressure level (LAeq,T) varied by 2.8 dB(A), with a maximum of 71.8 dB(A) (10 May) and a minimum of 69.0 dB(A) (13 May).

  - Nighttime (LN): Noise levels were lower but showed a similar variation of 2.6 dB(A), with a maximum of 69.2 dB(A) (10 May) and a minimum of 66.6 dB(A) (13 May).

- Analysis of Frequency Behaviors:

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Basner, M.; Babisch, W.; Davis, A.; Brink, M.; Clark, C.; Janssen, S.; Stansfeld, S. Auditory and Non-Auditory Effects of Noise on Health. Lancet 2014, 383, 1325–1332.

2. Burden of Disease from Environmental Noise—Quantification of Healthy Life Years Lost in Europe. Available online: https://www.who.int/publications/i/item/9789289002295 (accessed on 28 August 2024).

3. Asdrubali, F.; D’Alessandro, F. Innovative Approaches for Noise Management in Smart Cities: A Review. Curr. Pollut. Rep. 2018, 4, 143–153.

4. Pita, A.; Navarro-Ruiz, J.M. Identificación y Predicción de Patrones de Contaminación Acústica en Ciudades Inteligentes Mediante IA y Sensores Acústicos. conRderuido.com. 2025. Available online: https://conrderuido.com/rderuido/ia-que-identifica-y-predice-patrones-de-contaminacion-acustica/ (accessed on 2 June 2025).

5. Kumar, A.M.P.S.; Jagadeesh, S.V.V.D.; Siddarth, G.; Phani Sri, K.M.; Rajesh, V. Real Time Noise Pollution Prediction in a City Using Machine Learning and IoT. Grenze Int. J. Eng. Technol. 2024, 10, 1874–1882.

6. Madanian, S.; Chen, T.; Adeleye, O.; Templeton, J.M.; Poellabauer, C.; Parry, D.; Schneider, S.L. Speech emotion recognition using machine learning—A systematic review. Intell. Syst. Appl. 2023, 20, 200266.

7. Goulão, M.; Duarte, A.; Martins, R. Training Environmental Sound Classification Models for Real-World Applications on Edge Devices. Discov. Appl. Sci. 2024, 6, 166.

8. Lê, M.T.; Wolinski, P.; Arbel, J. Efficient Neural Networks for Tiny Machine Learning: A Comprehensive Review. arXiv 2023, arXiv:2311.11883.

9. Maayah, M.; Abunada, A.; Al-Janahi, K.; Ahmed, M.E.; Qadir, J. LimitAccess: On-device TinyML based robust speech recognition and age classification. Discov. Artif. Intell. 2023, 3, 8.

10. Ranmal, D.; Ranasinghe, P.; Paranayapa, T.; Meedeniya, D.; Perera, C. ESC-NAS: Environment Sound Classification Using Hardware-Aware Neural Architecture Search for the Edge. Sensors 2024, 24, 3749.

11. Jahangir, R.; Nauman, M.A.; Alroobaea, R.; Almotiri, J.; Malik, M.M.; Alzahrani, S.M. Deep Learning-based Environmental Sound Classification Using Feature Fusion and Data Enhancement. Comput. Mater. Contin. 2023, 74, 1069–1091.

12. Peng, L.; Yang, J.; Yan, L.; Chen, Z.; Xiao, J.; Zhou, L.; Zhou, J. BSN-ESC: A Big–Small Network-Based Environmental Sound Classification Method for AIoT Applications. Sensors 2023, 23, 6767.

13. Salamon, J.; Jacoby, C.; Bello, J.P. A Dataset and Taxonomy for Urban Sound Research. In Proceedings of the 22nd ACM International Conference on Multimedia, New York, NY, USA, 3–7 November 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 1041–1044.

14. Fang, Z.; Li, W.; Li, J. Fast Environmental Sound Classification Based on Resource Adaptive Convolutional Neural Networks. Sci. Rep. 2022, 12, 7369.

15. Ahmmed, B.; Rau, E.G.; Mudunuru, M.K.; Karra, S.; Tempelman, J.R.; Wachtor, A.J.; Forien, J.-B.; Guss, G.M.; Calta, N.P.; DePond, P.J.; et al. Deep learning with mixup augmentation for improved pore detection during additive manufacturing. Sci. Rep. 2024, 14, 13365.

16. Mesaros, A.; Heittola, T.; Virtanen, T. TUT Database for Acoustic Scene Classification and Sound Event Detection. In Proceedings of the 24th European Signal Processing Conference (EUSIPCO), Budapest, Hungary, 29 August–2 September 2016.

17. Medina Velandia, L.N.; Andrés Gutiérrez, D. Pautas para optar por una metodología ágil para proyectos de software. Rev. Educ. Ing. 2024, 19, 1–8.

18. Micrófonos de Medición I NTi Audio. Available online: https://www.nti-audio.com/es/productos/microfonos-de-medicion (accessed on 2 September 2024).

19. Adafruit I2S MEMS Microphone Breakout—SPH0645LM4H: ID 3421: Adafruit Industries, Unique & Fun DIY Electronics and Kits. Available online: https://www.adafruit.com/product/3421 (accessed on 2 September 2024).

20. Mini USB 2.0 Microphone MIC Audio Adapter Plug and Play for Raspberry Pi 5/4, Voice Recognition Software, SunFounder. Available online: https://www.sunfounder.com/products/mini-usb-microphone (accessed on 2 September 2024).

21. Loudspeaker Measurements. Available online: https://www.minidsp.com/applications/acoustic-measurements/loudspeaker-measurements (accessed on 2 September 2024).

22. Raspberry Pi, Ltd. Raspberry Pi 2W Model B Specifications, Raspberry Pi. Available online: https://www.raspberrypi.com/products/raspberry-pi-zero-2-w/ (accessed on 2 September 2024).

23. Panel Solar Enertik Policristalino 90W—Enertik Chile. Available online: https://enertik.com/ar/tienda/fotovoltaica/paneles-solares/panel-solar-enertik-policristalino-90w/?srsltid=AfmBOopC1He3LJ1OCgULcu7kV2f-3mJA3x2WsLSYXh1ElWEkDX4Vh1Tv (accessed on 2 September 2024).

24. Ma, Y.; Chen, S.; Ermon, S.; Lobell, D.B. Transfer learning in environmental remote sensing. Remote Sens. Environ. 2024, 301, 113924.

25. Red de Ruido Urbana—Busqueda, Secretaría Distrital de Ambiente. Available online: https://www.ambientebogota.gov.co/search (accessed on 6 September 2024).

26. IEC 61672-1; Electroacoustics—Sound Level Meters—Part 1: Specifications. IEC: Geneva, Switzerland, 2013.

27. Dayal, A.; Yeduri, S.R.; Koduru, B.H.; Jaiswal, R.K.; Soumya, J.; Srinivas, M.B.; Pandey, O.J.; Cenkeramaddi, L.R. Lightweight deep convolutional neural network for background sound classification in speech signals. J. Acoust. Soc. Am. 2022, 151, 2773–2786.

| Type | Requirements | Description |
|---|---|---|
| Functional | Data capture | The device must be capable of continuously capturing audio data per minute from its installed location. |
| Functional | Data processing | It must process the audio data to extract relevant features that enable the classification of noise sources. |
| Functional | Data storage | It must store the processed data and classification results locally. |
| Functional | Device power supply | The device must generate its own power to operate 24 h a day. |
| Non-Functional | Low energy consumption | The device must have a maximum current consumption of 2.5 A. |
| Non-Functional | Precision and reliability | The device must classify the noise sources of interest with high precision and operate reliably in various environmental conditions. |
| Non-Functional | Requirement | The device must have adequate protection to operate under variable climatic conditions in Bogotá. |
| Non-Functional | Cost-effectiveness | The design and operation of the device should be cost-effective enough to allow broad deployment in multiple locations. |

| Microphone | Price (USD) | Freq. Range (Hz) | Sensitivity | Max SPL | Comments |
|---|---|---|---|---|---|
| SPH0645 [19] | 2 | 100–7000 | -26 dBV/Pa | 94 dB SPL @ 1 kHz | High interference |
| SunFounder [20] | 10 | 100–16,000 | -38 dBV/Pa | 110 dB SPL @ 1 kHz | Low interference |
| UMIK-1 [21] | 132 | 20–20,000 | -18 dBV/Pa | 133 dB SPL @ 1 kHz | Acceptable interference |

| Items | Field Observations |
|---|---|
| Battery | The battery showed no anomalies while the device was deployed in the acoustic environment. The pre- and post-deployment measurements were above 12 V. |
| Panel | The panel delivered levels of 21 V during the deployment period. |
| Raspberry Pi | The Raspberry Pi ran 24 h a day throughout the deployment period without any problems. |
| Microphone | The microphone performed optimally, recording 59.48 s per minute during the device’s deployment period. |

| Trained Source Classes | Definition |
|---|---|
| Alarms | Devices designed to detect specific events by emitting audible signals to alert about such associated events (vehicle or horn alarms, etc.). |
| Ambient | Set of low-intensity natural sounds that define the acoustic environment without human influence. It includes birdsong, the murmur of wind and rain, creating a tranquil soundscape in the absence of anthropogenic noises such as traffic or construction. |
| Applause | Noise produced by the repeated impact of hands on each other, commonly used as a sign of approval, enthusiasm or recognition in social or public events. |
| Airplane | Fixed-wing aircraft powered by engines that allows the transportation of people or cargo over long distances and at different altitudes. |
| Human beings | Noises resulting from anthropogenic activities such as the emission of music, voices, etc. |
| Impacts | Noises with a high concentration of energy in short periods of time, such as closing a door or the passage of heavy vehicles over a speed bump or rumble strip, among others. |
| Motorcycle | Two-wheeled vehicles powered by an engine, designed for individual or dual transportation, and used for both urban and recreational transportation. |
| Heavy vehicles | Motorized vehicles with more than two axles, such as buses, trucks, or lorries, which typically generate low-frequency and high-intensity sound due to engine and exhaust systems. |

| Classes | Number of Audios |
|---|---|
| Alarms | 52 |
| Ambient | 126 |
| Applause | 39 |
| Airplane | 109 |
| Humans | 59 |
| Impacts | 40 |
| Motorcycle | 111 |
| Heavy vehicles | 120 |

| Classes | Training: Subset 1 (20%) | Training: Subset 2 (20%) | Training: Subset 3 (20%) | Validation: Subset 4 (20%) | Test: Subset 5 (20%) | Total Audios |
|---|---|---|---|---|---|---|
| Alarms | 11 | 11 | 10 | 10 | 10 | 52 |
| Ambient | 26 | 25 | 25 | 25 | 25 | 126 |
| Applause | 8 | 8 | 8 | 8 | 7 | 39 |
| Airplane | 22 | 22 | 22 | 22 | 21 | 109 |
| Humans | 12 | 12 | 12 | 12 | 11 | 59 |
| Impacts | 8 | 8 | 8 | 8 | 8 | 40 |
| Motorcycle | 23 | 22 | 22 | 22 | 23 | 112 |
| Heavy vehicles | 24 | 24 | 24 | 24 | 24 | 120 |
| Total | 134 | 132 | 131 | 131 | 129 | 657 |
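
The per-class subset sizes in the table above are close to what a simple stratified five-way split produces. The following is a minimal sketch of such a split, not the procedure used in the study: the function names, the fixed random seed, and the file names are hypothetical, and the rule of giving the first subsets one extra item is an assumption. It reproduces most rows of the table, although the motorcycle row is distributed slightly differently there.

```python
import random


def split_counts(n_items: int, n_subsets: int = 5) -> list:
    """Split n_items into n_subsets sizes that differ by at most one."""
    base, extra = divmod(n_items, n_subsets)
    # The first `extra` subsets receive one additional item (assumed rule).
    return [base + 1 if i < extra else base for i in range(n_subsets)]


def stratified_subsets(items_by_class: dict, n_subsets: int = 5, seed: int = 0) -> list:
    """Distribute each class across the subsets so every subset keeps the class mix."""
    rng = random.Random(seed)
    subsets = [[] for _ in range(n_subsets)]
    for label, items in items_by_class.items():
        shuffled = list(items)
        rng.shuffle(shuffled)
        start = 0
        for k, size in enumerate(split_counts(len(shuffled), n_subsets)):
            subsets[k].extend((label, name) for name in shuffled[start:start + size])
            start += size
    return subsets


# Hypothetical file names; only the class sizes (52 and 40) come from the table.
demo = {
    "Alarms": [f"alarm_{i:03d}.wav" for i in range(52)],
    "Impacts": [f"impact_{i:03d}.wav" for i in range(40)],
}
subsets = stratified_subsets(demo)

# Three subsets for training, one for validation, one for testing.
train = subsets[0] + subsets[1] + subsets[2]
validation, test = subsets[3], subsets[4]

print(split_counts(52))   # subset sizes for a class with 52 recordings
print(len(train), len(validation), len(test))
```

Splitting class by class keeps small classes such as applause or impacts represented in every subset, which a plain random split of all recordings would not guarantee.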

| Classes | TP | TN | FP | FN | Precision | Recall | F1 |
|---|---|---|---|---|---|---|---|
| Alarms | 9 | 119 | 0 | 1 | 1.00 | 0.90 | 0.95 |
| Ambient | 25 | 104 | 0 | 0 | 1.00 | 1.00 | 1.00 |
| Applause | 7 | 122 | 0 | 0 | 1.00 | 1.00 | 1.00 |
| Airplane | 21 | 107 | 1 | 0 | 0.95 | 1.00 | 0.98 |
| Humans | 11 | 117 | 1 | 0 | 0.92 | 1.00 | 0.96 |
| Impacts | 6 | 121 | 0 | 2 | 1.00 | 0.75 | 0.86 |
| Motorcycles | 21 | 106 | 1 | 1 | 0.95 | 0.95 | 0.95 |
| Heavy vehicles | 24 | 102 | 2 | 1 | 0.92 | 0.96 | 0.94 |
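
The precision, recall and F1 columns in the table above follow from the per-class counts of true positives, false positives and false negatives. A minimal sketch of that calculation is given below, using the standard definitions; the counts are copied from the table, while the function and variable names are hypothetical.

```python
# (true positives, true negatives, false positives, false negatives) per class,
# copied from the table above.
counts = {
    "Alarms": (9, 119, 0, 1),
    "Ambient": (25, 104, 0, 0),
    "Applause": (7, 122, 0, 0),
    "Airplane": (21, 107, 1, 0),
    "Humans": (11, 117, 1, 0),
    "Impacts": (6, 121, 0, 2),
    "Motorcycles": (21, 106, 1, 1),
    "Heavy vehicles": (24, 102, 2, 1),
}


def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple:
    """Standard one-vs-rest precision, recall and F1 for a single class."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


for label, (tp, tn, fp, fn) in counts.items():
    p, r, f1 = precision_recall_f1(tp, fp, fn)
    print(f"{label:15s} precision={p:.2f} recall={r:.2f} F1={f1:.2f}")
```

True negatives do not enter any of the three metrics, which is why a class such as impacts can have a high true-negative count and still show a recall of 0.75.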

| Model/Approach | Dataset | Accuracy (%) | F1-Score | Model Size (MB) | Inference Time (ms) | Reference |
|---|---|---|---|---|---|---|
| ESC-NAS | UrbanSound8K | 93.2 | 0.92 | 10 | 330 | [10] |
| BSN-ESC | UrbanSound8K | 90.8 | 0.88 | 12 | 360 | [12] |
| CNN-ESC (light) | ESC-50 | 92.4 | 0.91 | 15 | 400 | [27] |
| TinyML-YAMNet (proposed) | Custom Bogotá Dataset | 94.1 | 0.93 | 6.0 | 200 | This work |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Remolina Soto, M.S.; Amaya Guzmán, B.; Aya-Parra, P.A.; Perdomo, O.J.; Becerra-Fernandez, M.; Sarmiento-Rojas, J. Intelligent Classification of Urban Noise Sources Using TinyML: Towards Efficient Noise Management in Smart Cities. Sensors 2025, 25, 6361. https://doi.org/10.3390/s25206361
