Highlights

What are the main findings?

- The FLEX-SFL framework introduces dynamic, device-aware adaptive model segmentation, entropy-driven client selection, and hierarchical local asynchronous aggregation mechanisms, improving training efficiency and scalability in edge heterogeneous environments.

- Extensive experiments demonstrate that FLEX-SFL outperforms state-of-the-art federated and split federated learning methods in terms of accuracy, convergence speed, and resource efficiency across multiple datasets.

What are the implications of the main findings?

- FLEX-SFL provides a practical solution to the challenges posed by system and statistical heterogeneity in federated learning, making it suitable for large-scale edge deployments in real-world intelligent systems.

- The proposed mechanisms can be extended to enhance the scalability and adaptability of other federated learning frameworks, potentially improving edge computing applications in fields like IoT and healthcare.

Abstract

The deployment of Federated Learning (FL) in edge environments is often impeded by system heterogeneity, non-independent and identically distributed (non-IID) data, and constrained communication resources, which collectively hinder training efficiency and scalability. To address these challenges, this paper presents FLEX-SFL, a flexible and efficient split federated learning framework that jointly optimizes model partitioning, client selection, and communication scheduling. FLEX-SFL incorporates three coordinated mechanisms: a device-aware adaptive segmentation strategy that dynamically adjusts model partition points based on client computational capacity to mitigate straggler effects; an entropy-driven client selection algorithm that promotes data representativeness by leveraging label distribution entropy; and a hierarchical local asynchronous aggregation scheme that enables asynchronous intra-cluster and inter-cluster model updates to improve training throughput and reduce communication latency. We theoretically establish the convergence properties of FLEX-SFL under convex settings and analyze the influence of local update frequency and client participation on convergence bounds. Extensive experiments on benchmark datasets including FMNIST, CIFAR-10, and CIFAR-100 demonstrate that FLEX-SFL consistently outperforms state-of-the-art FL and split FL baselines in terms of model accuracy, convergence speed, and resource efficiency, particularly under high degrees of statistical and system heterogeneity. These results validate the effectiveness and practicality of FLEX-SFL for real-world edge intelligent systems.

1. Introduction

The rapid proliferation of intelligent edge terminals, along with the increasing demand for data privacy and real-time decision-making, has led to the rise of collaborative distributed learning as a key enabler for the evolution of edge intelligence in sensor networks and IoT systems [1]. Federated Learning (FL), a prominent privacy-preserving collaborative learning paradigm, facilitates the joint training of a global model across distributed devices without requiring the transfer of raw data, thus enhancing privacy protection. However, FL faces two major challenges in practical deployment, particularly in sensor networks. The first, is system heterogeneity, where edge devices exhibit significant variations in terms of computational power, storage capacity, and network bandwidth, leading to inefficiencies in training and model aggregation. The second is statistical heterogeneity, where the data collected by devices is often non-independent and identically distributed (non-IID), causing slow convergence and unstable performance, which are critical issues in sensor-driven environments where data diversity is high [2]. These challenges significantly hinder the scalability and effectiveness of FL in large-scale edge networks, such as those commonly found in sensor and IoT systems.

To address these issues, Split Learning (SL) has been introduced, which partitions deep learning models between clients and servers, helping to reduce the computational burden on resource-constrained edge devices. While SL improves the participation of low-performance devices, its reliance on serial communication and lack of parallel optimization limits its ability to support large-scale, multi-terminal collaboration. As a result, Split Federated Learning (SFL), which combines the strengths of both FL and SL, has emerged as a promising solution. SFL aims to optimize model partitioning and federated aggregation while ensuring data privacy across diverse devices [3]. Despite its potential, existing SFL approaches are hindered by limitations such as static model partitioning, inadequate client selection strategies, and heavy synchronization dependencies in the training process, which compromise their adaptability and efficiency in highly heterogeneous edge environments, like those seen in sensor networks [4].

To address the above issues, this paper proposes a flexible and efficient federated split learning framework for edge heterogeneous environments, named FLEX-SFL (Flexible and Efficient Split Federated Learning), tailored for heterogeneous edge environments. The framework integrates three complementary mechanisms focusing on adaptive model partitioning, representative client participation, and efficient hierarchical aggregation:

(1) Device-aware adaptive model segmentation (DAS): This mechanism dynamically determines the optimal model split point for each client based on its computing and communication capabilities, mitigating the “straggler” effect and improving resource utilization;

(2) Entropy-driven client selection strategy (EDCS): This mechanism measures data representativeness via label-distribution entropy and applies a lightweight heuristic selection algorithm to enhance data diversity and global generalization;

(3) Hierarchical local asynchronous aggregation mechanism (HiLo-Agg): This mechanism employs a two-level aggregation structure that decouples local client updates from global synchronization, effectively reducing communication delay and accelerating convergence.

The main contributions of this paper are as follows:

- A unified flexible Split Federated Learning framework (FLEX-SFL) is proposed to jointly address system heterogeneity, statistical heterogeneity, and communication bottlenecks in edge environments;

- An adaptive segmentation mechanism (DAS) is designed to personalize model partitioning according to each client’s resource profile, enhancing scalability and training efficiency;

- An entropy-driven client selection strategy (EDCS) is introduced to achieve better balance between representativeness and efficiency under non-IID data;

- A hierarchical local asynchronous aggregation mechanism (HiLo-Agg) is developed to enable asynchronous multi-level aggregation, alleviating synchronization delays while preserving global model consistency;

- Comprehensive experiments on multiple non-IID benchmarks (FMNIST, CIFAR-10, CIFAR-100) verify that FLEX-SFL achieves superior accuracy, faster convergence, and lower communication cost compared with state-of-the-art SFL methods.

FLEX-SFL provides a practical and generalizable framework for building adaptive and communication-efficient edge intelligence systems, with promising potential for real-world IoT deployments.

2. Related Work

This section reviews the main research paradigms and representative works in the field of federated intelligence around the core technical directions of this paper, with a focus on FL, SL, and their fusion SFL, to lay the foundation for the proposed FLEX-SFL framework.

2.1. Federated Learning

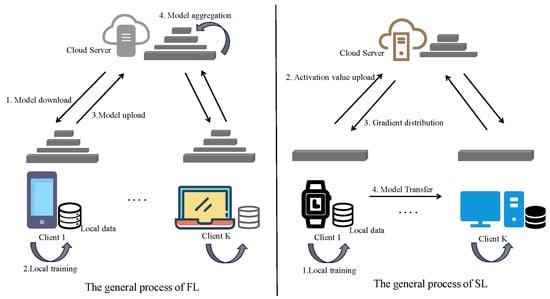

FL is a typical distributed collaborative learning framework. Its core principle is that clients train submodels on local data without centralizing data, and only upload model gradients or parameters to the server for aggregation, as shown on the left side of Figure 1 for its general process. This approach effectively alleviates data silos and privacy leakage issues, and is widely applied in scenarios such as healthcare [5], finance [6], and smart terminals [7]. Its standard optimization objective can be formalized as

where is the global model parameter, represents the loss function of the -th client, is the total number of clients, and is the data proportion of client .

Figure 1.

The general processes of federated learning and split learning.

Despite FL’s strong privacy protection and system scalability, it faces two key challenges in practical deployment:

- System heterogeneity: Significant variations in computing power, energy consumption, storage, and communication quality among clients often cause low-performance devices to slow down the overall training process, leading to the “straggler effect.”

- Statistical heterogeneity: Data distributions across clients generally exhibit non-independent and identically distributed (non-IID) characteristics, making local models prone to bias and affecting the convergence stability and generalization ability of the global model.

To address these challenges, academia has proposed a series of optimization methods. For statistical heterogeneity, Li et al. [8] proposed FedProx, which introduces a regularization term into the local optimization objective to limit model drift; Mora and Zhou et al. [9,10] mitigated non-IID effects by constructing a proxy dataset on the server side for post-training recalibration of local models. For system heterogeneity, FedBuff and AFL adopted asynchronous update mechanisms to improve training parallelism [11,12]; Maciel et al. [13] pre-screened participable devices based on client status (e.g., battery level and connection quality); Zhu and Zhao et al. [14,15] minimized waiting time and energy consumption through bandwidth scheduling strategies.

Although the above methods have achieved optimization effects in specific dimensions, most focus on a single heterogeneous factor and lack a unified mechanism for collaborative optimization of system resources, data distribution, and communication efficiency, making them difficult to adapt to the high dynamics and heterogeneity of real-world edge scenarios.

2.2. Split Learning

Furthermore, with the rapid proliferation of smart terminals (e.g., smartwatches, wristbands, wearable devices) and the substantial increase in task complexity and model parameter scale, edge devices face ever-growing computational and communication burdens when participating in deep learning model training [16]. For instance, ResNet18, ResNet50, and ResNet101 contain approximately 11.2 million, 25.6 million, and 44.5 million parameters, respectively, rendering full-model training unfeasible for resource-constrained devices [17]. He et al. demonstrated that FL exhibits superior communication efficiency when the number of clients is small or the model size is limited; however, in scenarios with a large client base or high model complexity, FL’s communication overhead escalates rapidly, leading to a marked decrease in efficiency.

To alleviate the participation barriers for lightweight devices, Gupta et al. [18] proposed the SL paradigm, whose general process is illustrated on the right side of Figure 1. The core principle involves partitioning deep models into client-side and server-side segments: clients are responsible for performing forward propagation and uploading activation values, while the server manages backward propagation and parameter updates. This mechanism significantly reduces terminal computational and storage pressures through structural computation offloading, making it suitable for edge devices deploying deep models.

However, SL inherently relies on a serial communication architecture, necessitating sequential interaction between multiple clients and the server. This lack of parallelism in the training process renders it inadequate for supporting large-scale multi-client concurrent scenarios. Additionally, due to its fundamental nature as a single-device or non-federated architecture, SL demonstrates limited capability in handling multi-source non-IID data distributions and lacks cross-client modeling capabilities. Consequently, while SL excels in adapting to lightweight devices, its generalization ability, communication efficiency, and collaborative training capacity remain insufficient to meet the practical demands of complex edge systems [19].

2.3. Split Federated Learning

To simultaneously leverage the collaborative training capabilities of federated learning and the resource decoupling features of split learning, Split Federated Learning (SFL) has emerged as a critical development direction in distributed intelligent modeling [20]. In this paradigm, the model is partitioned into client-side submodels and server-side submodels, allowing multiple clients to participate in training in parallel while the server employs federated aggregation strategies to integrate uploaded information from all parties. This approach ensures data privacy while achieving friendly adaptation to resource-constrained devices. SFL reduces terminal load through structural partitioning and enhances model generalization performance via aggregation mechanisms, demonstrating significant engineering deployment potential.

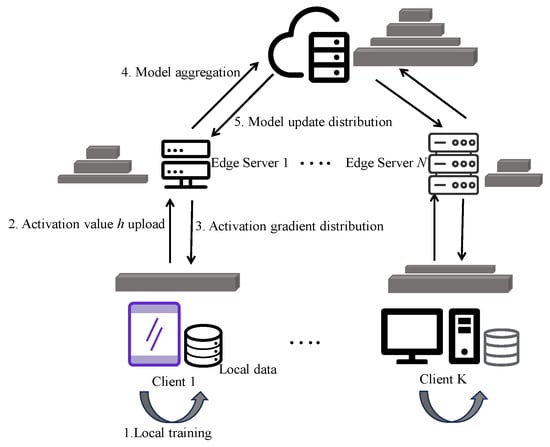

Figure 2 illustrates the basic training workflow of SFL. Taking a typical deep neural network as an example, the model is divided into a client-side submodel and a server-side submodel . The complete training process consists of four steps: (1) the client receives local input samples and computes intermediate activation values ; (2) is transmitted to the server, which performs and calculates the loss ; (3) the server performs backpropagation based on the loss to update and sends the gradient back to the client; (4) the client uses this gradient to continue backpropagation and update .

Figure 2.

The general process of split federated learning.

To enhance SFL’s adaptability and communication efficiency, numerous studies have expanded and optimized its training mechanisms. The SplitFed series, as a representative work, first proposed the “dual-side aggregation” strategy (SFL-V1), which synchronously aggregates client-side and server-side models. However, this method relies heavily on full-synchronous updates across all devices, leading to high communication latency. The subsequent SFL-V2 introduced an asynchronous mechanism, significantly improving training stability and delay tolerance [21].

Building on these foundations, researchers have explored optimizations in model structure design and aggregation mechanisms. SplitMix proposed a tunable partitioning mechanism that allows clients to select model splitting points according to their resource conditions, enhancing flexibility but lacking adaptive coordination across clients [22]. Cluster-HSFL introduced a cluster-based hierarchical training strategy, effectively alleviating the communication load of central servers, yet the static clustering configuration limited responsiveness to dynamic device variations [23]. FedLite employs Product Quantization (PQ) to compress activations and a gradient correction module to reduce quantization errors, thereby improving communication efficiency; however, model accuracy degraded under highly non-IID data distributions [24]. HSFL utilizes a Multi-Armed Bandit (MAB-BC-BN2) strategy that dynamically selected clients based on channel quality and local update magnitude, which improved resource utilization but introduced non-negligible control overhead [25]. CHEESE incorporates a helper–client mechanism that divides models into smaller subsegments for low-capability devices and adopts a ring topology for asynchronous collaboration, improving inclusiveness but occasionally leading to unbalanced convergence across clients. FedCST combines pruning and clustering strategies to mitigate training fluctuations caused by unstable client participation; however, aggressive pruning can reduce the model’s representation capacity [26].

While the above methods have achieved certain improvements in model flexibility and communication efficiency, existing SFL solutions still face three prominent issues in large-scale edge heterogeneous scenarios:

- Model partitioning remains predominantly static, lacking dynamic adaptive mechanisms based on device capabilities, which hinders personalized training efficiency;

- Most client selection strategies are random or round-robin, failing to effectively measure data representativeness and restricting global model training;

- Synchronous communication structures limit training concurrency, prone to blocking in weak-connection or high-latency devices, and reducing system throughput.

Although some studies have attempted to alleviate these issues through asynchronous communication, personalized modeling, distillation, and structural alignment, a universally applicable optimization framework with clear architecture, coordinated mechanisms, and the simultaneous addressing of system and statistical heterogeneities remains lacking.

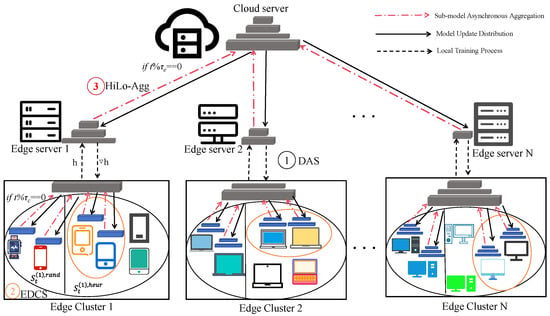

3. System Framework and Design Scheme

To address the triple challenges of system heterogeneity, statistical heterogeneity, and communication overhead in edge intelligent systems, this paper proposes FLEX-SFL (Flexible and Efficient Split Federated Learning), a flexible and efficient optimization framework for split federated learning. Centering on three key issues—model partitioning, client selection, and model aggregation—the framework includes three core mechanisms: device-aware adaptive segmentation (DAS), entropy-driven client selection (EDCS), and hierarchical local asynchronous aggregation (HiLo-Agg). These mechanisms collaborate to achieve system resource adaptation, data representativeness enhancement, and communication efficiency improvement. The overall framework is illustrated in Figure 3.

Figure 3.

Schematic diagram of the FLEX-SFL framework.

In FLEX-SFL, the DAS module dynamically determines model partition points for each client based on its computing power status and aggregates devices with consistent partitioning structures into the same edge cluster, ensuring structural uniformity for personalized modeling and intra-cluster aggregation. The EDCS mechanism employs a “two-stage selection” strategy: first, random sampling to ensure device diversity, followed by heuristic screening based on label entropy to enhance the representativeness of selected clients’ data distributions. HiLo-Agg adopts a local–global dual-layer asynchronous aggregation architecture, enabling local asynchronous updates between clients and edge servers, and global aggregation between edge and central servers. This breaks through the synchronous communication bottleneck and enhances training throughput and system robustness. The main symbols used in this paper and their meanings are listed in Table 1.

Table 1.

Summary of notation.

3.1. Device-Aware Adaptive Segmentation

In practical deployments of FL, edge devices exhibit significant disparities in computing capabilities, where low-performance nodes often become the “performance bottleneck” of the system, forcing an extension of the training cycle. To mitigate this “straggler effect,” FLEX-SFL introduces DAS, a dynamic model partitioning strategy based on computing power and latency awareness. Its core objective is to adjust model partition positions on demand according to device capabilities, thereby achieving balanced allocation of training loads.

3.1.1. Optimization Problem Modeling and Solution

Consider a system with clients forming the set , where the computing capability of the -th client is denoted as . In our implementation, the computing capability of each client is pre-determined based on its CPU floating-point performance, which remains fixed during training to reflect the static hardware heterogeneity across devices. The deep model to be trained consists of layers, which is partitioned into a client-side submodel (comprising the first layers) and an edge server submodel (comprising layers to ).

The total delay of one training round primarily consists of three components: client-side forward propagation, activation value upload, and backpropagation, with edge server computation delay being negligible. Therefore, we model the training delay optimization problem as minimizing the maximum training time across the system:

where represents the proportion of training computation undertaken by client , and is the total computation of the full model. By introducing an auxiliary variable , the above Min-Max problem can be transformed into the following linear programming form:

Solving this programming problem yields the optimal computation proportion:

where is the optimal model partition point for client . This strategy achieves personalized allocation of training tasks based on device computing power, significantly enhancing the overall throughput of system training.

3.1.2. Homogeneous Aggregation-Based Edge Cluster Partitioning Mechanism

Although DAS enables dynamic model partitioning according to device heterogeneity, personalized model structures introduce a critical issue: inconsistent submodel parameter dimensions across clients, which prevents direct model parameter aggregation and affects the consistency and convergence stability of the global model [27].

To address this heterogeneous aggregation barrier, FLEX-SFL designs an edge cluster partitioning mechanism. This mechanism aims to retain the flexible partitioning advantages of DAS while grouping clients with identical or similar partitioning structures into the same edge cluster. Edge servers within each cluster are responsible for corresponding submodel training and local aggregation, thereby achieving model structure consistency at the local level and ensuring aggregation stability.

Let the initial partition point set be , where represents the original model partition point of client . Denote the maximum and minimum values in this set as

Assume edge servers are deployed in the system (i.e., supporting the creation of edge clusters). The interval is uniformly divided into non-overlapping subintervals, with each interval width defined as

The -th subinterval is defined as

For any client , its partition point is assigned to subinterval and the distances to the upper and lower bounds of this interval are calculated as

According to the “minimum distance principle”, is discretized to the nearest boundary value to obtain the final partition point:

Based on this, the final partition point set is defined as . Clients with the same final partition point are assigned to the same edge cluster:

This mechanism ensures that clients within each edge cluster have consistent submodel structures, facilitating local training and parameter aggregation within clusters. It also supports asynchronous parallel collaboration across clusters, effectively balancing model flexibility and aggregation feasibility. The device-aware adaptive segmentation (DAS) process is outlined in Algorithm 1.

| Algorithm 1. DAS |

| Input: Client partition point set , number of edge server |

| Output: Final discrete partition point set for each client, edge cluster partition |

| result |

| 1: Calculate the maximum and minimum partition points and |

| 2: Compute the interval width using Equation (6) |

| 3: Initialize |

| 4: for each client do |

| 5: Determine the subinterval containing the original partition point |

| 6: Calculate and using Equation (8), discretize the partition point using Equation (9) |

| 7: Add to |

| 8: end for |

| 9: Construct the edge cluster set using Equation (10) |

| 10: return and |

3.2. Entropy-Driven Client Selection for Data Heterogeneity

In FL, significant differences in data distributions among clients often lead to inconsistent update directions of local models, affecting the convergence and generalization ability of the global model. The “client drift” problem caused by this statistical heterogeneity is a critical bottleneck restricting system performance.

To address this issue, FLEX-SFL includes an entropy-driven client selection (EDCS) mechanism, which uses label information entropy as a metric for data diversity and combines a “random + heuristic” strategy to effectively improve the representativeness of client selection and enhance the stability and efficiency of model training.

3.2.1. Label Entropy Modeling and Diversity Measurement

Consider an edge cluster containing a client set . The label count vector of client is

where represents the number of samples in the -th class, and is the total number of classes. The corresponding label distribution (probability vector) is

where is the total number of samples of client . The information entropy (label diversity score) of client is defined as

where is a constant to prevent logarithmic singularity, typically set to .

3.2.2. Two-Stage Client Selection Process

To mitigate the bias effect caused by data imbalance, the optimization goal of this strategy is to select a subset from the candidate client set such that the label distribution of the subset is as close as possible to the global data distribution, i.e.,

where denotes the selected client set, is the data distribution of the selected clients, is the global distribution, and represents the KL divergence.

In the -th training round, the goal is to select a subset from to improve the label coverage of the selected set. The total selection size is controlled by the global participation rate :

- First Stage: Random Selection

Let the random sampling ratio be . First, randomly select clients from to form an initial set: , . The remaining candidate set is .

- 2.

- Second Stage: Greedy Entropy-Driven Selection

Select an additional clients from using information entropy improvement as the heuristic criterion. Define the accumulated label vector of the selected clients as

For each candidate client :

The proportion of the -th class samples in the merged vector is

The merged label entropy is

In each iteration, the client with the maximum is selected to join the final set until clients are selected. The final selected set is

The complexity of the random selection stage is . For the greedy selection stage, since the entropy change of at most clients (each with a -dimensional vector entropy) is calculated in each iteration, and clients need to be selected, the total complexity is approximately

Additionally, the greedy strategy involves only single-round vector additions that can be optimized via caching, making the practical complexity approximate to . Compared with traditional client selection methods based on KL divergence minimization , this significantly reduces complexity and is more suitable for resource-constrained edge computing environments [28]. The lightweight heuristic in EDCS evaluates each candidate client’s contribution by estimating the change in information entropy on its local data, which serves as a proxy for data representativeness. This procedure involves only a small local forward pass and simple entropy computation, thus introducing negligible additional cost. The detailed process of EDCS is outlined in Algorithm 2.

| Algorithm 2. EDCS |

| Input: edge cluster Candidate set , label distribution , participation rate , |

| random ratio |

| Output: Selected client set for edge cluster in round |

| 1: Set , |

| 2: , |

| 3: //Initialize accumulated label count vector |

| 4: for each client do |

| 5: for to do |

| 6: // Accumulate label counts |

| 7: end for |

| 8: end for |

| 9: Initialize |

| 10: while do |

| 11: for each do |

| 12: calculate merged entropy using Equation (19) |

| 13: Select client |

| 14: Update: , , |

| 15: end for |

| 16: end while |

| 17: return |

3.3. Hierarchical Asynchronous Dual-End Aggregation Mechanism

To enhance the adaptability and efficiency of federated training in edge heterogeneous environments, a hierarchical asynchronous collaborative training scheme is further proposed, which enables a flexible and efficient training process within the three-tier architecture of client-edge server-central server. The core of this mechanism lies in the following three aspects: First, the aggregation processes on the client side and server side are asynchronously decoupled, allowing the system to proceed in parallel and reduce waiting latency. Second, the introduction of a local aggregation mechanism within edge clusters helps improve training concurrency and alleviate centralization bottlenecks. Third, by setting the aggregation cycles for clients and servers, the global synchronization rhythm is uniformly controlled, ensuring the consistency of the global model and its convergence path.

At the beginning of each training round, taking the edge cluster as an example, clients determine partition points through the DAS module, each holding a front-end submodel , while the edge server is responsible for the back-end submodel .

In the -th round, client performs the following steps:

(1) Local mini-batch data is used to perform forward propagation through to generate activation values ;

(2) is sent to the edge server , which completes the forward and backward propagation of the back-end model

(3) To reduce communication frequency, the server caches and repeats training times;

(4) Backpropagation gradients are sent to the client to update its submodel .

3.3.1. Local Aggregation of Client Submodels

After every rounds of training, the edge server performs local aggregation on the client submodels within the cluster. Let client have a local sample size , then its aggregation weight is defined as

The aggregation result of client submodels in the -th edge cluster is

The aggregated model is broadcast to all to synchronously update their local copies.

3.3.2. Global Aggregation of Edge Server Submodels

After every rounds of training, the system performs global aggregation of edge server models. Let the total data volume of edge cluster be

Its aggregation weight is

The corresponding global server model is

This model is then delivered to all edge servers for the next training round.

Notably, the aggregation of client submodels and server submodels are independent and asynchronous in time, avoiding communication blockages caused by full synchronization. Define

as the global synchronization period. When the number of training rounds reaches , the system integrates the latest client submodels and the global server model to construct a complete global model by concatenation:

This model is used for evaluation and inference in the current phase. Combining the design of the HiLo-Agg mechanism with the strategies in Algorithms 1 and 2, the overall training process of FLEX-SFL is outlined in Algorithm 3.

Although asynchronous aggregation can potentially introduce stale updates and increase gradient variance, HiLo-Agg mitigates these effects through its hierarchical design. Intra-cluster local aggregation at the edge servers reduces client-side noise before updates reach the cloud, the configurable client/edge/server aggregation cycles limit excessive staleness by controlling synchronization rhythm, and server-side caching with repeated local training further stabilizes updates by reducing dependence on single-round activations.

| Algorithm 3. FLEX-SFL |

| Input: Client set , number of edge servers , aggregation periods , , total global |

| training rounds , repeats training times |

| Output: the global model at global round |

| 1: , // Define global training period |

| 2: Initialize all model parameters |

| 3: for = 1 to do |

| 4: Invoke Algorithm 1(DAS) to determine partition points and generate |

| model structures |

| 5: Assign clients with identical structures to edge clusters |

| 6: for each edge cluster do |

| 7: Invoke Algorithm 2 (EDCS) to select participant set |

| 8: for each client do |

| 9: Generate activation values |

| 10: Upload to edge server |

| 11: edge Server-side repeats training |

| 12: Return gradients to update client model |

| 13: end for |

| 14: end for |

| 15: if mod == 0 then |

| 16: Aggregate all using Equation (23) to obtain , and broadcast to clients in the |

| cluster |

| 17: end if |

| 18: if mod == 0 then |

| 19: Aggregate all using Equation (26) to obtain , broadcast to all edge servers |

| 20: end if |

| 21: if mod == 0 then |

| 22: Concatenate and using Equation (28) to generate |

| 23: end if |

| 24: end for |

| 25: |

| 26: return |

4. Convergence Analysis

In this section, convergence analysis of the proposed FLEX-SFL framework is conducted.

Assumption 1.

(L-Smoothness):

Each client’s local loss function is -smooth, i.e., there exists a constant, such that for all ,

Assumption 2.

(Unbiasedness and Bounded Variance of Stochastic Gradients): For the stochastic gradients (of client ) and (of edge server ), the following conditions are satisfied:

Assumption 3.

(Boundedness of Stochastic Gradients):

Lemma 1.

(Local Training Error Squared Deviation Bound): Under Assumptions 1–3, if the number of local training steps is and the learning rate satisfies , then for any communication round, after continuously trainingsteps, the local model of client(Lemma C.5 in Reference [21]):

Theorem 1.

Under the conditions of Assumptions 1–3 and Lemma 1, consider the FLEX-SFL framework with a general non-convex objective function. Let the number of update steps per round for clients and edge servers be and , respectively, the client participation rate be , and a fixed learning rate be adopted. After rounds of iterations, the following convergence result holds:

where , denotes the initial point, , and .

Proof of Theorem 1.

Take the full expectation of the smoothness inequality in Assumption 1:

where .

(1) For the second term , by leveraging unbiasedness and converting it into the cumulative sum over all clients, we have:

For terms and , the following holds:

After iterating over the above equation and performing decomposition on it, we have

Substitute Equation (39) into ; thus, can be further expressed as

where . Let ; meanwhile, by substituting the conclusion of Lemma 1, we further have

Substitute the result of Equation (43) into Equation (42), and combine with the result of ; thus, we finally have

(2) For the third term , its expansion is given by

After performing processing on and , we have

Considering independence, Term is negligible. By substituting the results of Equations (44) and (45) into Equation (43), we thus obtain the expression for Term as follows:

(3) Substitute the relational inequalities satisfied by Term and Term into the initial expression (34); thus, we have

where .

Meanwhile, by summing the above equation on both sides for respectively, we have

Further perform the method of splitting terms and canceling out

Let and . Since , by transforming the above equation, we have

When the learning rate is set to , we finally obtain

Equation (51) has conformed to the expression form of Theorem 1, and the proof is completed. □

By analyzing the result of Equation (51), it can be concluded that the FLEX-SFL framework can ultimately achieve a function value convergence rate of the under non-convex conditions. Furthermore, compared with random client selection, the EDCS mechanism in FLEX-SFL achieves a higher score in terms of data distribution diversity. Under the same conditions, this mechanism leads to a higher participation rate , a smaller stochastic gradient bias , and a smaller upper bound ; consequently, the upper bound of the actual final convergence error will be smaller.

5. Experiments

This section aims to evaluate the performance of the proposed FLEX-SFL framework in typical edge heterogeneous environments and validate its adaptability and efficiency advantages under the dual challenges of statistical heterogeneity and system heterogeneity. All experiments were conducted on a local computing platform equipped with an AMD Ryzen 9 7940HX CPU and an NVIDIA RTX 4070 GPU, running the Windows 11 operating system, within the programming environment of Python 3.9 and the deep learning framework of PyTorch 1.13.

5.1. Datasets and Configurations

To systematically evaluate the adaptability of FLEX-SFL under different task complexities and heterogeneous environments, three widely used image classification datasets were selected: FMNIST, CIFAR-10, and CIFAR-100. These datasets cover grayscale and color images, low-dimensional and high-dimensional features, and multi-class and fine-grained tasks, making them representative and challenging. The details are as follows:

- FMNIST: A grayscale image dataset consisting of 10 classes of fashion items with 28 × 28 pixel size, used for medium- to low-complexity image recognition tasks.

- CIFAR-10: Contains 10 classes of 32 × 32 color images (e.g., airplanes, cars, cats), used for general object classification.

- CIFAR-100: Structurally similar to CIFAR-10 but with 100 finer classes, suitable for evaluating modeling capabilities in high-dimensional multi-class scenarios.

The model structures and training parameters configured for different datasets are shown in Table 2. FMNIST uses a lightweight convolutional neural network (2 convolutional layers + 2 fully connected layers), while CIFAR-10 and CIFAR-100 adopt VGG-16 and VGG-19, respectively, to adapt to their complexity differences. The datasets and configurations used in the experiment are shown in Table 2.

Table 2.

Datasets and configurations used in the experiment.

A total of 100 clients were simulated, with the client participation rate set to (i.e., 20 clients were randomly selected for training in each round), and the number of edge servers was 3 to support client cluster aggregation under heterogeneous partitioning. The following are the corresponding hyperparameter settings in the HiLo-Agg mechanism:

- Local training and aggregation: Clients perform local aggregation within edge clusters after every rounds of local training.

- Global aggregation of edge servers: Global aggregation of edge server submodels is performed every rounds.

- Server-side caching mechanism: After receiving client activation values, edge servers repeatedly train times to reduce communication frequency (i.e., the server updates the model 10 times using the same activation values in each communication round).

Additionally, to enhance heterogeneity, two types of strategies were designed for the experiments:

(a) Label distribution heterogeneity: Each client contained only 2 classes (FMNIST/CIFAR-10) or 20 classes (CIFAR-100) to simulate the locality bias in data collection.

(b) Data quantity imbalance: Client sample sizes followed a power-law distribution, reflecting the typical phenomenon of uneven data distribution in real-world terminals.

5.2. Comparative Methods

To comprehensively evaluate the effectiveness of FLEX-SFL in heterogeneous edge scenarios, six representative federated optimization methods were selected as comparison baselines, covering classic FL and SFL paradigms. The performance of each method on different datasets is shown in the table below:

(1) FedAvg: As a standard Federated Learning (FL) method, this method completes collaborative modeling through local training and parameter averaging, but is vulnerable to client drift in heterogeneous scenarios.

(2) FedProx: On the basis of FedAvg, this method introduces a regularized constraint term to mitigate model deviation caused by non-IID (non-independently and identically distributed) data and improves convergence stability under statistical heterogeneity. In this paper, the coefficient of the proximal term is set to 0.3.

(3) MOON (Model-Contrastive Federated Learning): Integrating the idea of contrastive learning, this method alleviates model bias under heterogeneous data distribution by enhancing the consistency of representation between local and global models.

(4) SplitFed: A typical synchronous communication Split Learning framework, this method splits the model into two parts, which possesses both the resource adaptability of SL and the distributed advantages of FL, but lacks a client selection mechanism.

(5) SplitMix (Split Mixing) [22]: This method supports customized model splitting according to requirements, allowing clients to flexibly splice local submodels from multiple modules and enabling per-round reconstruction. However, it lacks an asynchronous communication mode.

(6) FedRich [29]: This is a state-of-the-art SFL framework that clusters clients based on capability metrics (e.g., computing power, bandwidth) and selects clients by calculating the loss between the selected data distribution and the edge server’s data distribution.

To ensure fair comparison, all methods involving model partitioning (SplitFed, SplitMix, FedRich, and FLEX-SFL) adopt a unified partitioning strategy. The first 1–3 convolutional modules are designated as client-side submodels, with remaining layers as edge server submodels. This configuration standardizes model partitioning positions, eliminating interference from partitioning strategy differences and focusing on core distinctions in aggregation mechanisms, client selection, and heterogeneous adaptability.

5.3. Experimental Results

5.3.1. Performance Across Different Datasets

To comprehensively evaluate the applicability and advantages of the proposed FLEX-SFL framework in various heterogeneous task scenarios, systematic tests were conducted on three datasets—FMNIST, CIFAR-10, and CIFAR-100—comparing FLEX-SFL with six existing baseline methods. In FLEX-SFL’s heuristic selection mechanism, the random selection ratio was set to to balance diversity and representativeness, with all methods trained under consistent configurations to ensure fair comparison.

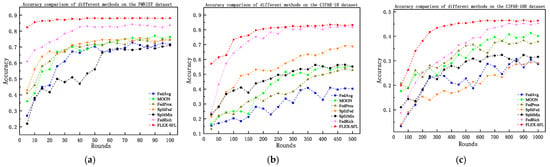

Table 3 lists the test accuracies of each method after 100, 500, and 1000 training rounds, while Figure 4 illustrates the accuracy trends with training rounds. The results show that FLEX-SFL achieved optimal performance on all three datasets, validating its generalization capability in both system and statistical heterogeneous environments.

Table 3.

The final accuracy of different methods on the FMNIST, CIFAR-10, and CIFAR-100 datasets.

Figure 4.

The accuracy variation of different methods across different datasets. (a) FMNIST; (b) CIFAR-10; (c) CIFAR-100.

On the FMNIST dataset, FLEX-SFL achieved a final accuracy of 88.1%, outperforming traditional federated learning methods—FedAvg (71.4%), FedProx (73.6%), and MOON (76.3%)—by 16.7, 14.5, and 11.8 percentage points (pp), respectively, and surpassing Split Learning (SL)-based methods SplitFed (74.7%) and SplitMix (71.1%) (which suffer from static model partitioning and a synchronous communication-induced inability to handle device computing power disparities) by 13.4 and 17 pp. Even compared with the state-of-the-art (SOTA) method FedRich (83.6%), FLEX-SFL still maintained a 4.5 percentage point (pp) advantage in final accuracy.. On the CIFAR-10 dataset, FLEX-SFL led all methods, with 83.8% accuracy (a 2.1 pp improvement over FedRich (81.7%)). Traditional FL methods (FedAvg, FedProx) yield accuracies below 53% due to their incompetence in processing highly heterogeneous data, while SplitFed (68.8%), though validating model partitioning effectiveness, faces communication bottlenecks from synchronous aggregation. By contrast, FLEX-SFL enhances training stability via entropy-driven client selection (to expand sample diversity coverage) and hierarchical asynchronous aggregation (to reduce latency). In the high-complexity CIFAR-100 scenario (characterized by fine-grained categories and extremely unbalanced data distribution), FLEX-SFL still outperformed all methods, with 46.4% accuracy (1.5 pp higher than FedRich (44.9%)), whereas traditional methods exhibited severe performance degradation (e.g., SplitFed only reached 29.1%). FLEX-SFL addresses high-dimensional heterogeneity challenges through device-aware adaptive segmentation (for balancing computing loads) and edge cluster-based local aggregation (for enhancing model consistency).

5.3.2. Comparison of Convergence Rates

To further verify the engineering feasibility and execution efficiency of FLEX-SFL in practical deployment scenarios, this section takes the target accuracy from Section 5.3.1 as the benchmark (setting thresholds of 70% for FMNIST, 50% for CIFAR-10, and 30% for CIFAR-100) and compares FLEX-SFL with representative split federated learning methods (SplitFed, SplitMix, FedRich, etc.) in terms of training rounds and cumulative running time, with results shown in Table 4.

Table 4.

Comparison of communication rounds (round) and actual running time (s) for different split federated methods to reach threshold accuracy on FMNIST, CIFAR-10, and CIFAR-100.

On FMNIST, FLEX-SFL reached 70% accuracy in only 3 communication rounds (90.9% fewer than SplitFed (33 rounds), 76.9% fewer than FedRich (13 rounds)) and took 10.27 s (86.4% shorter than SplitFed (75.57 s), 92.8% shorter than SplitMix (143.39 s), 56.1% shorter than FedRich (23.36 s)), with this advantage stemming from device-aware adaptive segmentation, which assigns lightweight submodels (e.g., the first two convolutional layers) to low-computing-power devices and complex layers to high-computing-power ones. In the CIFAR-10 task, it achieved 50% accuracy in 17 rounds (85.0% fewer than SplitFed (113 rounds), 74.2% fewer than FedRich (66 rounds)) and ran for 22.16 s (88.7% shorter than SplitFed (195.32 s), 96.4% shorter than SplitMix (623.43 s), 91.5% shorter than FedRich (262.13 s)), driven by entropy-driven client selection (screening representative clients via label entropy to reduce local deviation) and hierarchical asynchronous aggregation (avoiding full-synchronous communication blockages). On the high-complexity CIFAR-100, FLEX-SFL hit 30% accuracy in 118 rounds (87.4% fewer than SplitFed (938 rounds), 62.8% fewer than FedRich (317 rounds)) and took 243.33 s (86.3% shorter than SplitFed (1781.52 s), 71.0% shorter than SplitMix (836.51 s), 73.4% shorter than FedRich (913.26 s)), benefiting from edge cluster partitioning (reducing cross-cluster communication via intra-cluster aggregation) and server-side caching (reusing activation values to boost single-round effective computation by 10×).

5.3.3. Resource Consumption

- (1)

- Theoretical Analysis

The FLEX-SFL framework is based on the split federated learning paradigm, offloading the computational and storage burdens of deep models to edge servers. Therefore, resource consumption only needs to consider the communication and computational overhead on the client side.

- Communication Overhead Analysis:

Communication overhead consists of two components: submodel parameter transmission and feature activation value transmission. Submodel Parameter Transmission Overhead: Clients upload gradients and download aggregated models every rounds (intra-cluster aggregation period). The single-client overhead per transmission is (where is the submodel proportion and is the size of the full model parameters). Thus, the total overhead for all clients in the system is , where is the average submodel proportion of the -th cluster, and is the number of clients in the cluster.

Feature Activation Value Transmission Overhead: In each local round, clients need to upload activation values. The single-client overhead is (where is the sampling rate, and is the feature dimension). The total overhead for all clients in a global round is .

Summing the two components, the total communication resource consumption in one global round is .

- Computational Overhead Analysis:

Computational overhead only considers the local training load on clients. Assuming the computation consumption for a client to train a full model is , and the computation amount for each local round is , the total computational overhead for all clients in one round is .

- (2)

- Experimental Analysis

To evaluate the efficiency of FLEX-SFL in terms of resource usage, we conducted a comprehensive comparison with three representative split learning-based frameworks: SplitFed, SplitMix, and FedRich. All methods share consistent experimental configurations, and for FLEX-SFL, the local aggregation interval and the global aggregation interval were used, following the default setup in FedRich [29], to ensure fairness.

- Per-Round Communication and Computation Costs

Table 5 (upper half) presents the average communication and computation overhead per global round across three datasets. For communication cost, FedRich achieved the lowest transmission overhead due to its lightweight client–server interaction design, consuming only 0.76 MB per round on FMNIST. In contrast, FLEX-SFL incurred higher communication costs (e.g., 2.96 MB on FMNIST), approximately 3.9× that of FedRich. This increase stems from the HiLo-Agg architecture in FLEX-SFL, which introduces additional intra-cluster transmissions and repeated server-side computations to enable asynchronous training and mitigate straggler effects.

Table 5.

Per-round and cumulative communication and computation costs to reach target accuracy on three datasets.

However, FLEX-SFL significantly reduces the client-side computation burden due to its dynamic segmentation (DAS) and edge offloading mechanism. On all datasets, FLEX-SFL exhibited the lowest per-round computation cost, e.g., 2.43 MFLOPs on FMNIST, outperforming FedRich (2.7 MFLOPs) and SplitFed (3.81 MFLOPs). This efficiency gain is primarily attributed to the adaptive submodel allocation, which assigns lighter computational loads to resource-constrained clients while delegating heavier components to edge servers.

- Total Resource Consumption to Reach Target Accuracy

The lower part of Table 5 reports the total communication and computation cost required for each method to reach the predefined accuracy thresholds: 70% for FMNIST, 50% for CIFAR-10, and 30% for CIFAR-100.

Thanks to its faster convergence rate, FLEX-SFL achieved substantial savings in overall resource consumption. On FMNIST, it only required 8.81 MB in communication and 7.29 MFLOPs in computation to reach 70% accuracy, which are 10.1% and 79.2% lower than the requirements for FedRich, respectively. Similar trends were observed on CIFAR-10 and CIFAR-100. Although the per-round communication overhead of FLEX-SFL is higher, the reduced number of required training rounds significantly offsets this cost. For example, on CIFAR-100, FLEX-SFL completed the task in 118 rounds, whereas FedRich took 317 rounds, leading to a 48.5% reduction in total communication (3044.4 MB vs. 6041.7 MB estimated if scaled) and a 67.9% reduction in computation (3406.7 MFLOPs vs. 10616.3 MFLOPs).

These results demonstrate that FLEX-SFL, despite higher per-round transmission, achieved superior overall efficiency due to its enhanced convergence behavior and adaptive training mechanisms.

5.4. Hyperparameter Impact Exploration

This subsection investigates the impact of hyperparameters on the FLEX-SFL framework, focusing on the aggregation intervals of the HiLo-Agg mechanism and the random selection ratio of the EDCS strategy. Experiments were conducted on the FMNIST dataset with 100 training rounds, adjusting one variable at a time to record test accuracy and quantify the influence of parameters on convergence efficiency and model performance.

5.4.1. Aggregation Intervals and

FLEX-SFL’s hierarchical asynchronous aggregation mechanism achieves a dynamic balance between communication efficiency and model consistency by adjusting the client aggregation period and edge server aggregation period . Grid search was performed on the FMNIST dataset , with the random selection ratio fixed at , and the average accuracy of the last 10 rounds recorded as the performance metric (Table 6).

Table 6.

Accuracy under different aggregation frequencies and on the FMNIST dataset.

Horizontal analysis: When , as increased from 1 to 8, accuracy decreased from 0.8941 to 0.8344, a drop of 5.97%. This indicates that reducing edge server aggregation frequency leads to delayed global model updates and insufficient information fusion, consistent with the theoretical conclusion in the convergence analysis that “aggregation delay terms dominate the error upper bound” (Theorem 1). Therefore, shortening can effectively improve model synchronization efficiency and suppress error accumulation.

Vertical comparison: Under , as increased from 1 to 8, accuracy first increased then decreased (0.8814→0.8941→0.8923). Moderate increases in enhance model expressiveness through local training, but excessive prolongation amplifies local bias and noise. Experiments showed that the optimal accuracy (0.8941) was achieved when and . When and , this parameter setting is equivalent to synchronous hierarchical aggregation—specifically, it corresponds to the ablation experiment where the HiLo-Agg mechanism was not used. The accuracy under this setting was 0.8814, which is 1.27 percentage points lower than that of the optimal combination. This verifies the effectiveness of the asynchronous decoupled aggregation mechanism.

Thus, optimizing aggregation intervals requires balancing local training depth and global information synchronization. Combinations of shorter (e.g., 1–2 rounds) and moderate (e.g., 4 rounds) significantly enhance FLEX-SFL’s performance.

5.4.2. Random Participation Ratio

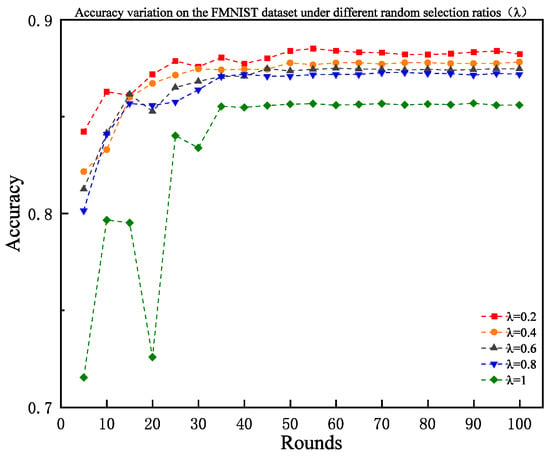

FLEX-SFL’s EDCS module employs a two-stage client selection mechanism of “random sampling + entropy-driven screening,” where the random participation ratio determines the balance between candidate set diversity and heuristic optimization space. A larger value of ensures the initial candidate set covers more types of devices, aiding in capturing global features of data distributions but compressing the optimization space for entropy screening in the second stage. A smaller focuses the candidate set on high-entropy samples, improving screening accuracy but potentially reducing sample diversity.

To quantify the impact of on model performance, experiments were conducted on the FMNIST dataset with , fixing , , and selecting seven clients per edge cluster per round. When , the selection strategy degenerated to pure random sampling, equivalent to an ablation experiment disabling EDCS.

Figure 5 illustrates the test accuracy trends under different , with final results of 0.8823, 0.8782, 0.8747, 0.8719, and 0.856, respectively. The results show that completely random selection yielded the worst performance, at 0.856, while combinations of lower randomness and high-proportion entropy-driven screening enhanced sample quality while maintaining data diversity, balancing client selection efficiency and accuracy. These findings provide a basis for parameter configuration in practical applications, advocating “priority for heuristic screening with moderate randomness retention.”

Figure 5.

Accuracy variation under different random selection ratios on the FMNIST dataset.

6. Conclusions

To address the critical challenges of device heterogeneity, statistical non-IID data, and communication inefficiency in edge intelligent systems, this paper proposes FLEX-SFL, a flexible and efficient optimization framework for split federated learning. Centered around three core aspects—adaptive model structuring, representative client selection, and hierarchical asynchronous aggregation—FLEX-SFL integrates a device-aware adaptive segmentation (DAS) strategy, an entropy-driven client selection (EDCS) mechanism, and a hierarchical local asynchronous aggregation (HiLo-Agg) scheme to enable collaborative optimization under multidimensional heterogeneity.

From a theoretical perspective, the convergence analysis results confirm that FLEX-SFL achieves favorable global convergence guarantees under non-convex objectives, and further reveals how parameters such as participation ratio and local training steps influence the convergence upper bound. Empirical results on diverse non-IID datasets, including FMNIST, CIFAR-10, and CIFAR-100, demonstrate that FLEX-SFL consistently outperforms existing state-of-the-art approaches in terms of model accuracy, convergence speed, and resource efficiency, showcasing strong adaptability and deployment viability in heterogeneous edge scenarios.

Future work will focus on extending FLEX-SFL to heterogeneous IoT edge platforms, such as Raspberry Pi and NVIDIA Jetson clusters, to experimentally validate its scalability and robustness under real-world resource-constrained conditions. Furthermore, we plan to investigate its integration with advanced paradigms, including federated distillation and self-supervised learning for broader applications in industrial IoT and intelligent healthcare.

Author Contributions

Conceptualization, H.Y.; methodology, H.Y.; software, H.Y.; validation, H.Y.; formal analysis, H.Y.; investigation, H.Y. and J.F.; resources, H.D. and Y.J.; data curation, Y.S.; writing—original: draft preparation, H.Y. and Y.S.; writing—review and editing, H.Y. and J.F.; visualization, H.Y.; supervision, H.Y.; project administration, E.X.; funding acquisition, Y.S. and H.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Scientific Research Foundation of the Education Department of Yunnan Province, China (Project number: 2025Y0670).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Niknam, S.; Dhillon, H.S.; Reed, J.H. Federated learning for wireless communications: Motivation, opportunities, and challenges. IEEE Commun. Mag. 2020, 58, 46–51. [Google Scholar] [CrossRef]

- Yuan, L.; Wang, Z.; Sun, L.; Yu, P.S.; Brinton, C.G. Decentralized Federated Learning: A Survey and Perspective. IEEE Internet Things J. 2024, 11, 34617–34638. [Google Scholar] [CrossRef]

- Xu, C.; Li, J.; Liu, Y.; Ling, Y.; Wen, M. Accelerating split federated learning over wireless communication networks. IEEE Trans. Wirel. Commun. 2024, 23, 5587–5599. [Google Scholar] [CrossRef]

- Mohammadabadi, S.M.S.; Zawad, S.; Yan, F.; Yang, L. Speed up federated learning in heterogeneous environments: A dynamic tiering approach. IEEE Internet Things J. 2024, 12, 5026–5035. [Google Scholar] [CrossRef]

- Rauniyar, A.; Hagos, D.H.; Jha, D.; Håkegård, J.E.; Rawat, D.B. Federated learning for medical applications: A taxonomy, current trends, challenges, and future research directions. IEEE Internet Things J. 2024, 11, 7374–7398. [Google Scholar] [CrossRef]

- Yang, X.; Yu, H.; Gao, X.; Wang, H.; Zhang, J.; Li, T. Federated continual learning via knowledge fusion: A survey. IEEE Trans. Knowl. Data Eng. 2024, 36, 3832–3850. [Google Scholar] [CrossRef]

- Zuo, S.; Xie, Y.; Wu, L.; Wu, J. ApaPRFL: Robust privacy-preserving federated learning scheme against poisoning adversaries for intelligent devices using edge computing. IEEE Trans. Consum. Electron. 2024, 70, 725–734. [Google Scholar] [CrossRef]

- Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated optimization in heterogeneous networks. Proc. Mach. Learn. Syst. 2020, 2, 429–450. [Google Scholar]

- Mora, A.; Fantini, D.; Bellavista, P. Federated Learning Algorithms with Heterogeneous Data Distributions: An Empirical Evaluation. In Proceedings of the IEEE/ACM 7th Symposium on Edge Computing (SEC), Seattle, WA, USA, 5–8 December 2022; pp. 336–341. [Google Scholar]

- Zhou, X.; Lei, X.; Yang, C.; Shi, Y.; Zhang, X.; Shi, J. Handling data heterogeneity for IoT devices in federated learning: A knowledge fusion approach. IEEE Internet Things J. 2024, 11, 8090–8104. [Google Scholar] [CrossRef]

- Toghani, M.T.; Uribe, C.A. Unbounded gradients in federated learning with buffered asynchronous aggregation. In Proceedings of the 2022 58th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 27–30 September 2022; pp. 1–8. [Google Scholar]

- Zhao, C.; Gao, Z.; Wang, Q.; Xiao, K.; Mo, Z. AFL: An adaptively federated multitask learning for model sharing in industrial IoT. IEEE Internet Things J. 2022, 9, 17080–17088. [Google Scholar] [CrossRef]

- Maciel, F.; Souza, A.M.D.; Bittencourt, L.F.; Villas, L.A.; Braun, T. Federated learning energy saving through client selection. Pervasive Mob. Comput. 2024, 103, 101948. [Google Scholar] [CrossRef]

- Zhu, G.; Wang, Y.; Huang, K. Broadband analog aggregation for low-latency federated edge learning. IEEE Trans. Wirel. Commun. 2019, 19, 491–506. [Google Scholar] [CrossRef]

- You, L.; Zhao, X.; Cao, R.; Shao, Y.; Fu, L. Broadband digital over-the-air computation for wireless federated edge learning. IEEE Trans. Mob. Comput. 2024, 23, 5212–5228. [Google Scholar] [CrossRef]

- Zheng, P.; Zhu, Y.; Hu, Y.; Zhang, Z.; Schmeink, A. Federated learning in heterogeneous networks with unreliable communication. IEEE Trans. Wirel. Commun. 2024, 23, 3823–3838. [Google Scholar] [CrossRef]

- Ali, I.; Muzammil, M.; Haq, I.U.; Amir, M.; Abdullah, S. Deep feature selection and decision level fusion for lungs nodule classification. IEEE Access 2021, 9, 18962–18973. [Google Scholar] [CrossRef]

- Gupta, O.; Raskar, R. Distributed learning of deep neural network over multiple agents. J. Netw. Comput. Appl. 2018, 116, 1–8. [Google Scholar] [CrossRef]

- Tedeschini, B.C.; Brambilla, M.; Nicoli, M. Split consensus federated learning: An approach for distributed training and inference. IEEE Access 2024, 12, 119535–119549. [Google Scholar] [CrossRef]

- Lin, Z.; Chen, Z.; Tonglam, C.; Chen, X.H.; Gao, Y. Hierarchical split federated learning: Convergence analysis and system optimization. IEEE Trans. Mob. Comput. 2025, 24, 9352–9367. [Google Scholar] [CrossRef]

- Han, P.; Tian, G.; Tang, M.; Liu, X. Convergence analysis of split federated learning on heterogeneous data. arXiv 2024, arXiv:2402.15166. [Google Scholar] [CrossRef]

- Hong, J.Y.; Wang, H.T.; Wang, Z.Y.; Zhou, J.Y. Efficient split-mix federated learning for on-demand and In-Situ customization. arXiv 2022, arXiv:2203.09747. [Google Scholar]

- Zhang, S.; Tu, H.; Li, Z.; Liu, S.; Li, S.; Wu, W.; Shen, X.S. Cluster-HSFL: A Cluster-Based Hybrid Split and Federated Learning. In Proceedings of the 2023 IEEE/CIC International Conference on Communications in China (ICCC), Dalian, China, 10–12 August 2023; pp. 1–2. [Google Scholar]

- Wang, J.Y.; Qi, H.; Rawat, A.S.; Waghmare, S.; Yu, F.X.; Joshi, G. FedLite: A scalable approach for federated learning on resource-constrained clients. arXiv 2022, arXiv:2201.11865. [Google Scholar]

- Liu, X.; Deng, Y.; Mahmoodi, T. Wireless distributed learning: A new hybrid split and federated learning approach. IEEE Trans. Wirel. Commun. 2023, 22, 2650–2665. [Google Scholar] [CrossRef]

- Wang, Z.; Lin, H.; Liu, Q.; Zhang, Y.; Liu, X. FedCST: Federated learning on heterogeneous resource-constrained devices using clustering and split training. In Proceedings of the 2024 IEEE 24th International Conference on Software Quality, Reliability, and Security Companion (QRS-C), Cambridge, UK, 1–5 July 2024; pp. 786–792. [Google Scholar]

- Gao, X.; Hou, L.; Chen, B.; Yao, X.; Suo, Z. Compressive-learning-based federated learning for intelligent IoT with cloud–edge collaboration. IEEE Internet Things J. 2025, 12, 2291–2294. [Google Scholar] [CrossRef]

- Lee, H.; Seo, D. FedLC: Optimizing federated learning in Non-IID data via label-wise clustering. IEEE Access 2023, 11, 42082–42095. [Google Scholar] [CrossRef]

- Yang, H.; Xi, W.; Wang, Z.; Shen, Y.; Ji, X.Y.; Sun, C. FedRich: Towards efficient federated learning for heterogeneous clients using heuristic scheduling. Inf. Sci. 2023, 645, 119360. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).