Design and Implementation of a YOLOv2 Accelerator on a Zynq-7000 FPGA

Abstract

1. Introduction

1.1. Motivations

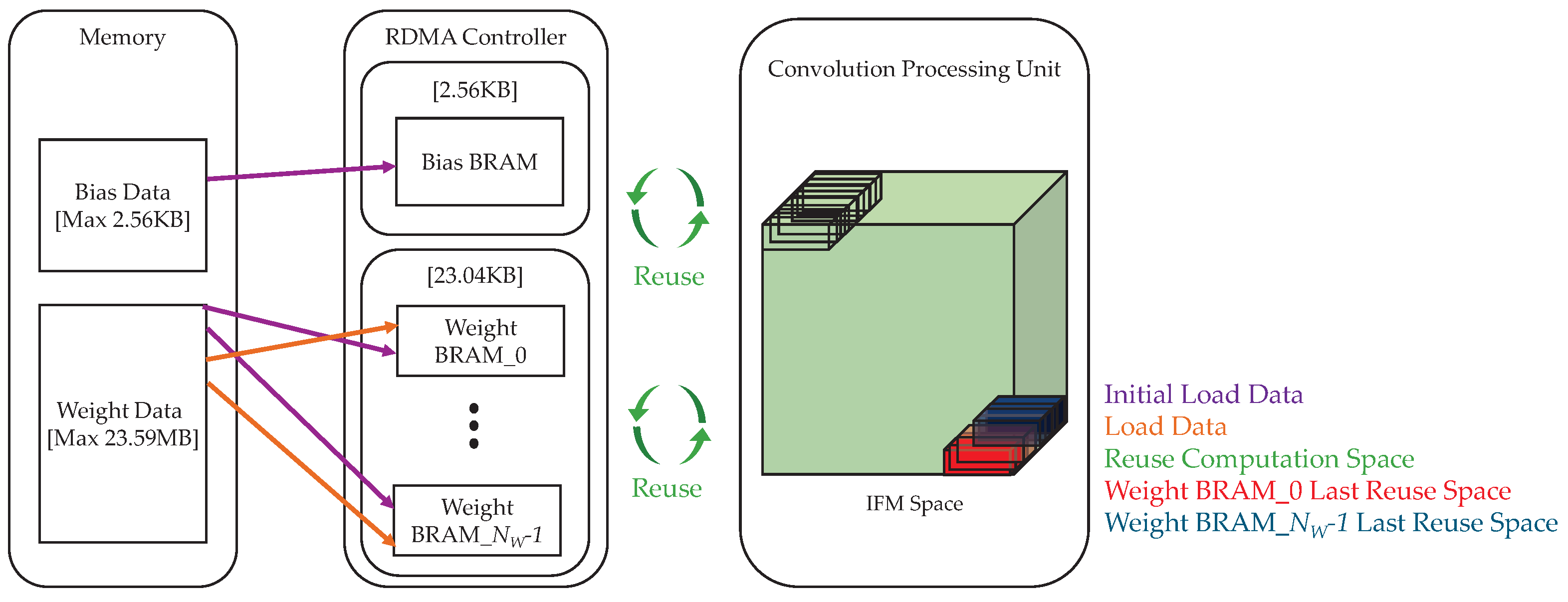

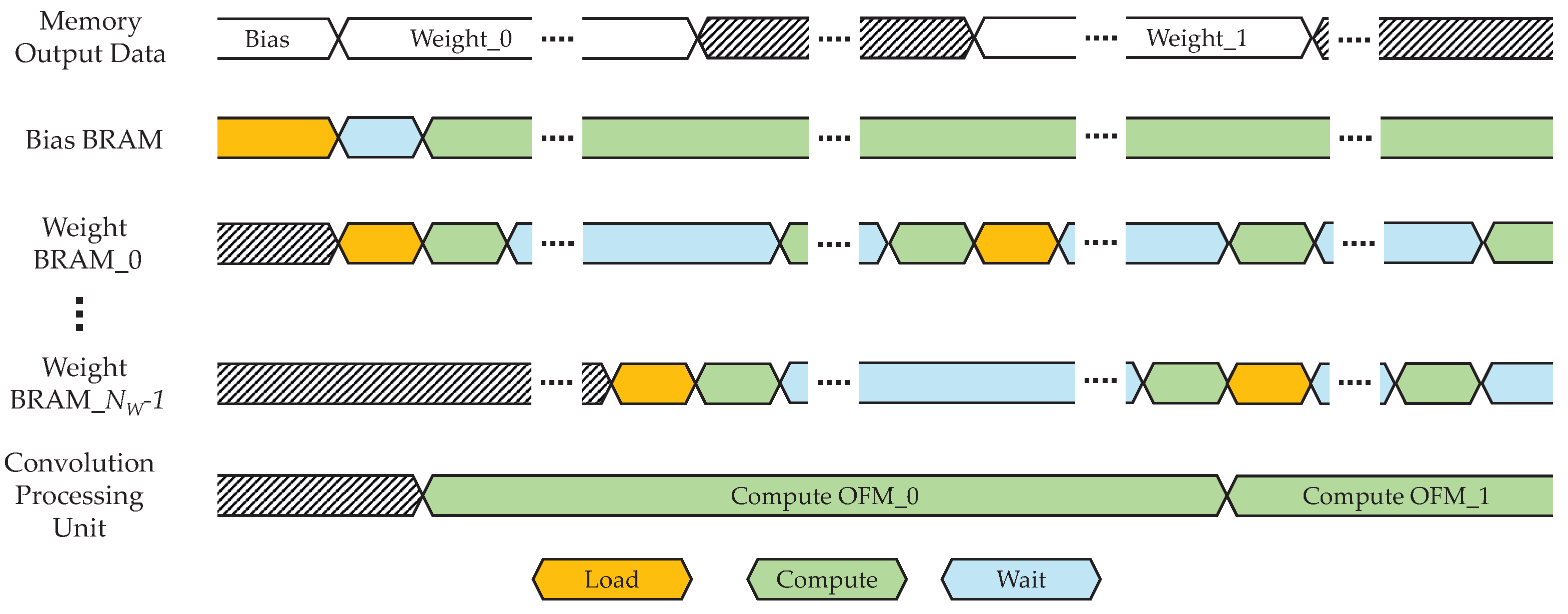

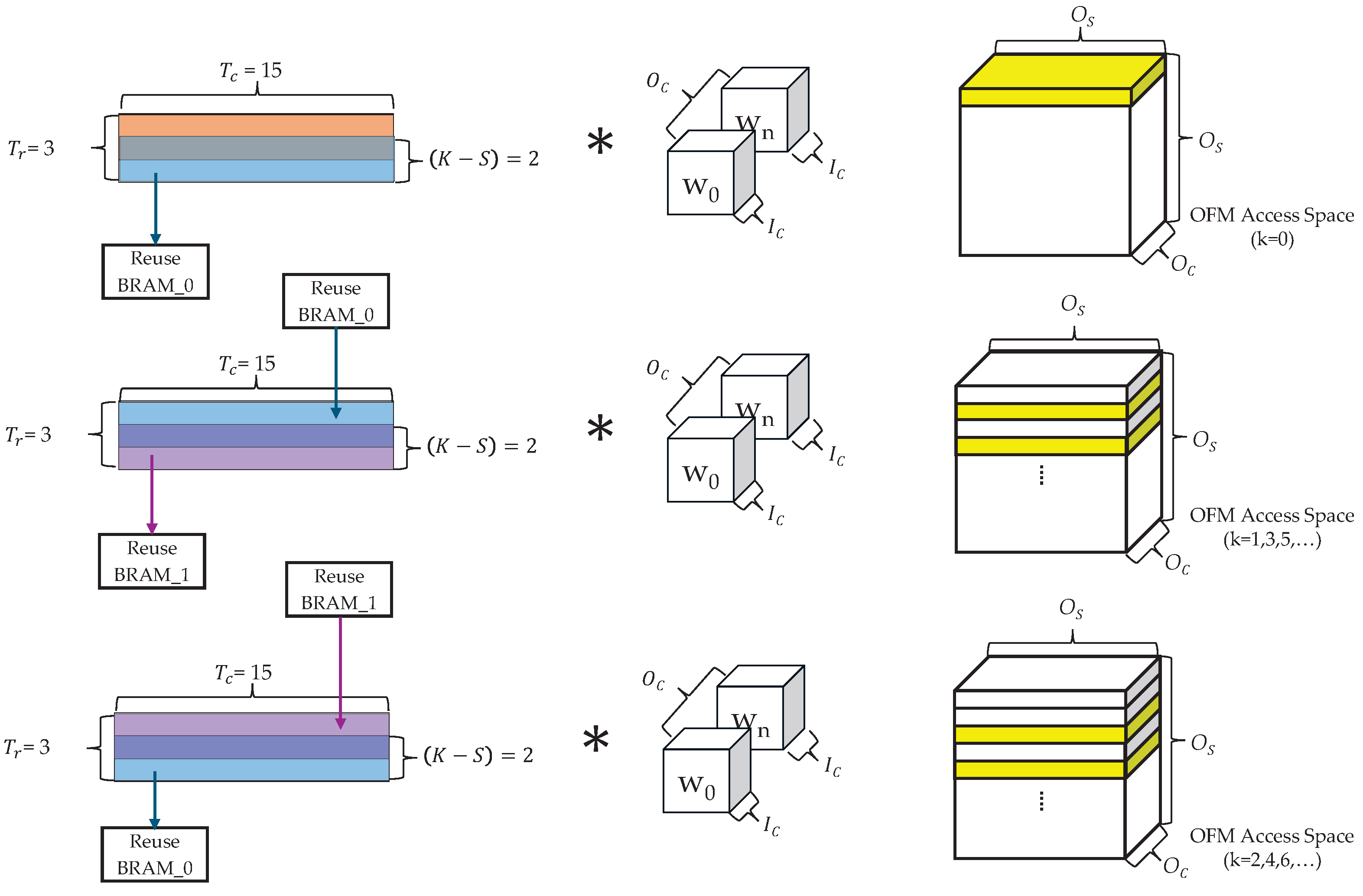

- We optimize the filter reuse strategy, IFM data reuse, tiling parameters, and pipeline architecture for resource-constrained environments. In particular, we adopt 16-bit integer (INT16) quantization to make efficient use of the limited BRAM on the target platform. This enables an optimized filter reuse structure using 25.6 KB of BRAM and a line-buffer-based IFM reuse structure using 153.6 KB of BRAM. In addition, we define tiling parameters that minimize hardware complexity and reconfigure the pipeline controller with a stall mechanism to ensure continuous data flow.

- We propose a complete accelerator architecture built on the optimized structures. The optimization particularly involves hardware parameters that determine the BRAM size. The architecture comprises six controllers and nine processing units, with its operational flow systematically described through the FSM states of the main controller—Idle, Start, MP, Conv, and Done—demonstrating efficient control of the convolution and max pooling operations.

- We implement the proposed accelerator on an XC7Z020 SoC and perform a system-level evaluation to verify its efficiency. The experimental results show that INT16 quantization yields a negligible accuracy loss of approximately 0.2% compared with the 32-bit floating-point (FP32) baseline. Furthermore, compared with other accelerators implemented on the same SoC, our design achieves superior resource efficiency, reducing flip-flop (FF) and DSP utilization by up to 26 and 15 percentage points, respectively.
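
The INT16 quantization mentioned in the contributions can be illustrated with a minimal fixed-point sketch. The text does not specify the quantization scheme, so the symmetric fixed-point format, the `FRAC_BITS` value, and the function names below are assumptions made purely for illustration.

```python
# Minimal fixed-point INT16 sketch (assumed scheme, not the paper's exact method).
INT16_MIN, INT16_MAX = -32768, 32767
FRAC_BITS = 8  # hypothetical number of fractional bits


def quantize_int16(value: float, frac_bits: int = FRAC_BITS) -> int:
    """Round to the nearest fixed-point step and saturate to the INT16 range."""
    scaled = round(value * (1 << frac_bits))
    return max(INT16_MIN, min(INT16_MAX, scaled))


def dequantize_int16(q: int, frac_bits: int = FRAC_BITS) -> float:
    """Map a fixed-point INT16 value back to a float."""
    return q / (1 << frac_bits)


# Illustrative values only; they are not taken from the trained model.
weights = [0.7312, -1.25, 0.0039, 3.999]
restored = [dequantize_int16(quantize_int16(w)) for w in weights]
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("max round-trip error:", max_error)
```

For in-range values, the round-trip error is bounded by half a quantization step, which is one way to reason about why a 16-bit format can stay close to an FP32 baseline.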

1.2. Organization

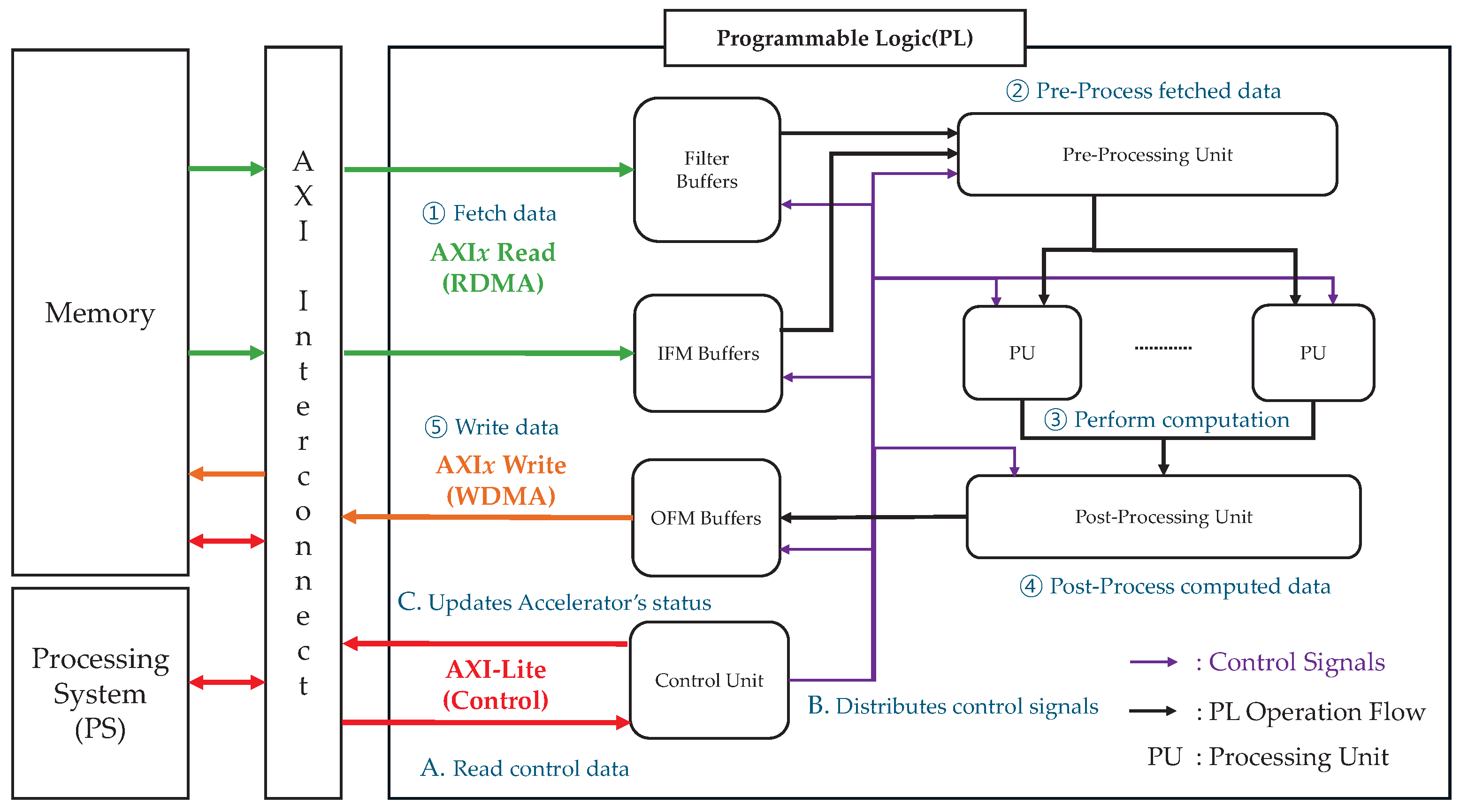

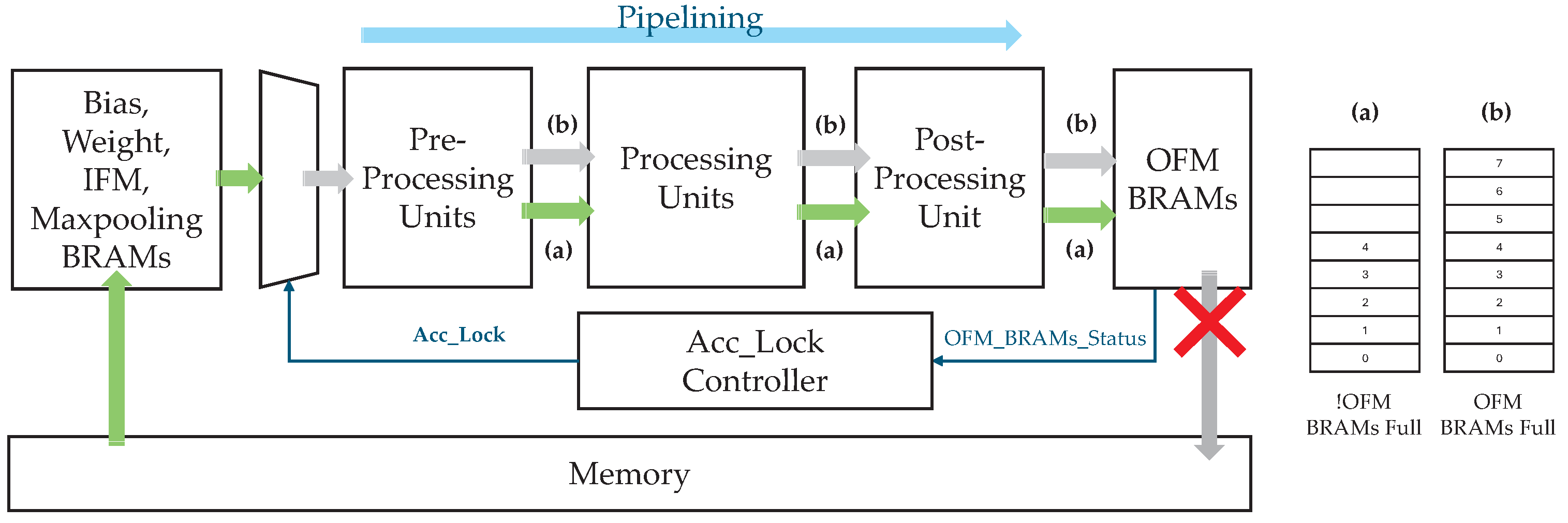

2. Hardware Architecture

2.1. Microarchitecture

- ① The filter and IFM data stored in the external memory are fetched via read direct memory access (RDMA) over the AXI interconnect and stored in on-chip buffers for reuse and tiling.

- ② The pre-processing unit processes the fetched data and forwards it to the PUs.

- ③ Each PU handles a specific data segment and begins its execution as soon as its data becomes available. Computations within each PU are performed in a parallel and pipelined manner. When applicable, the results of filter operations are accumulated in the internal accumulator of the PU.

- ④ After completing its operations, each PU transfers its output to the post-processing unit, which further processes the data and stores it in the OFM buffer.

- ⑤ Once sufficient output data has been accumulated in the OFM buffer, it is written back to the external memory via write direct memory access (WDMA) over the AXI interconnect.
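
The accumulation described in step ③ can be sketched in software as follows. This is a simplified model, not the RTL: the tile length `TILE`, the helper names, and the sample data are hypothetical. It only shows that accumulating tile-wise partial sums in a PU-style accumulator gives the same result as one full multiply-accumulate pass.

```python
# Software model of a PU accumulating partial sums across tiles (illustrative only).
TILE = 4  # hypothetical tile length


def pu_mac(ifm_tile, weight_tile, acc=0):
    """Multiply-accumulate one tile on top of the running accumulator value."""
    for x, w in zip(ifm_tile, weight_tile):
        acc += x * w
    return acc


def split(seq, size):
    """Cut a flat sequence into consecutive tiles of the given size."""
    return [seq[i:i + size] for i in range(0, len(seq), size)]


# Integer samples standing in for quantized IFM and weight values.
ifm = [3, -1, 4, 1, -5, 9, 2, -6]
weights = [2, 7, -1, 8, 2, -8, 1, 8]

acc = 0
for ifm_tile, weight_tile in zip(split(ifm, TILE), split(weights, TILE)):
    acc = pu_mac(ifm_tile, weight_tile, acc)  # accumulator carried between tiles

direct = sum(x * w for x, w in zip(ifm, weights))
print(acc, direct)
```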

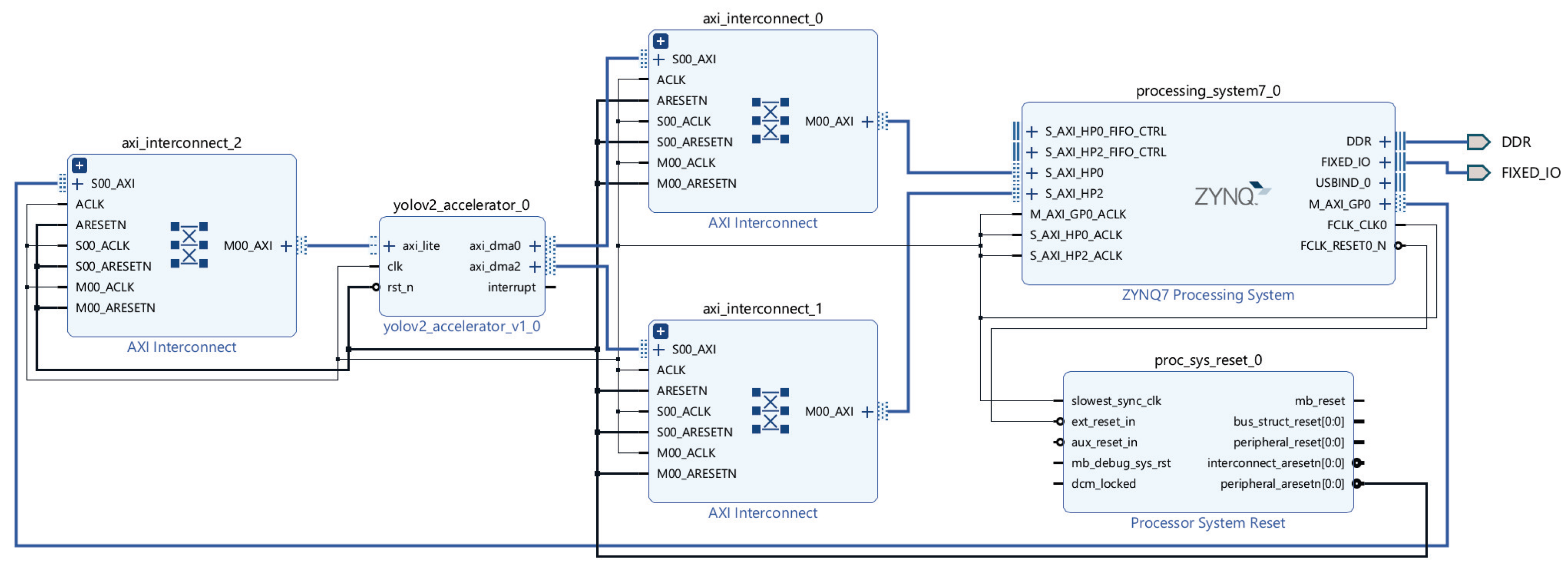

2.2. Zynq-7000 AXI Interconnect

- (A) Via AXI-Lite, the PS sends control data (such as current layer information, target memory addresses for each buffer’s DMA operation, and accelerator status) to the control unit.

- (B) The control unit distributes appropriate control signals to each buffer and PU based on this information, initiating the accelerator’s operation.

- (C) Subsequently, the control unit continuously updates the accelerator’s status and reports it back to the PS, enabling real-time monitoring.

3. Proposed Architecture

3.1. Constraints

3.2. Filter Reuse

3.3. IFM Reuse

3.4. Convolution Utilization

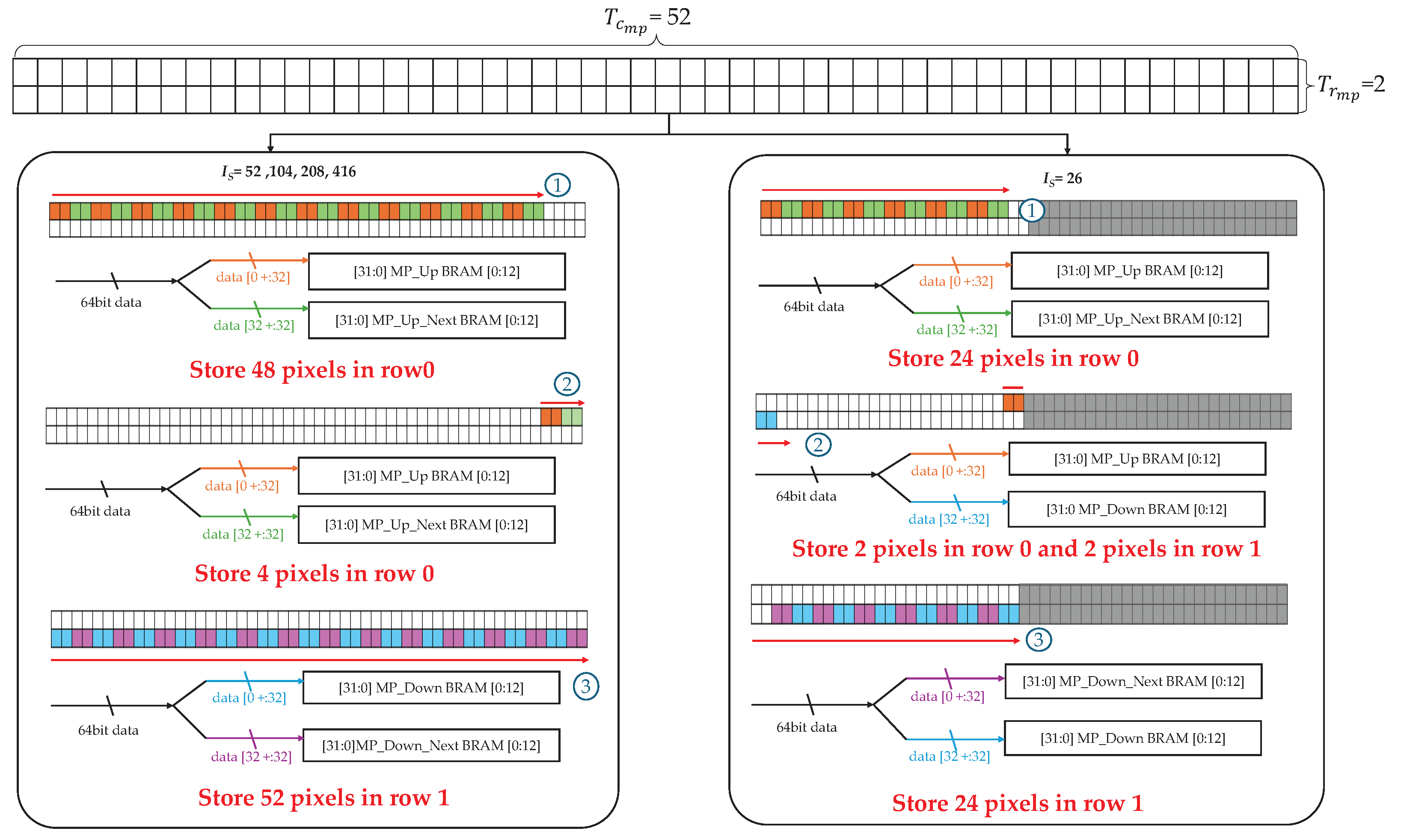

3.5. Max Pooling Utilization

- ① For , regardless of the tile size, the first two pixels of the four transferred per cycle are stored in MP_Up BRAM, and the remaining two are stored in MP_Up_Next BRAM. This process continues until 48 pixels in row 0 are filled. For , the same method is applied until 24 pixels of row 0 are stored.

- ② For , the remainder of row 0 is filled in the same manner as in Step ①. For , however, the last two of the four pixels belong to the next row and are therefore stored in the MP_Down BRAM instead of the MP_Up_Next BRAM.

- ③ For , the first two pixels of the following row are stored in the MP_Down BRAM, and the subsequent two pixels are stored in MP_Down_Next BRAM. By contrast, for , the storage order is reversed until the last column of row 1 is filled.

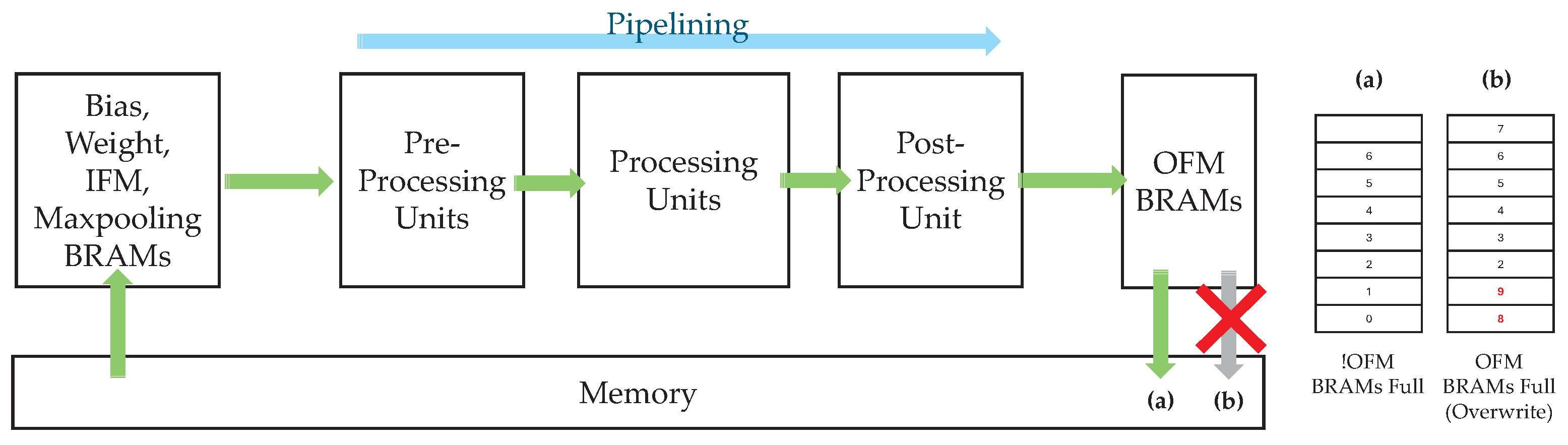

3.6. Acc_Lock Controller

3.7. Proposed Accelerator

3.7.1. Main Controller FSM States

3.7.2. FSM State Transitions

- Idle → Start: Triggered when Acc_start is received from the PS via AXI-Lite.

- Start → MP/Conv: Determined by Conv_layer; 0 for MP, 1 for Conv.

- MP/Conv → Done: Triggered when WDMA0 completes data transfers and issues Acc_done.

- Done → Idle: Triggered when all units are ready and Acc_ready is asserted.
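
The transitions listed above can be modeled as a small software state machine. This is a behavioral sketch only: the state and signal names (`Acc_start`, `Conv_layer`, `Acc_done`, `Acc_ready`) come from the text, while the Python function names and the single-step timing are assumptions.

```python
from enum import Enum


class State(Enum):
    IDLE = "Idle"
    START = "Start"
    MP = "MP"
    CONV = "Conv"
    DONE = "Done"


def next_state(state, acc_start=False, conv_layer=0, acc_done=False, acc_ready=False):
    """Return the next main-controller state for the given input signals."""
    if state is State.IDLE:
        return State.START if acc_start else State.IDLE
    if state is State.START:
        # Conv_layer selects the operation: 0 for max pooling, 1 for convolution.
        return State.CONV if conv_layer == 1 else State.MP
    if state in (State.MP, State.CONV):
        return State.DONE if acc_done else state
    if state is State.DONE:
        return State.IDLE if acc_ready else State.DONE
    raise ValueError(f"unknown state: {state}")


def run_layer(conv_layer):
    """Walk one layer through the FSM, asserting each trigger in turn."""
    trace = [State.IDLE]
    trace.append(next_state(trace[-1], acc_start=True))
    trace.append(next_state(trace[-1], conv_layer=conv_layer))
    trace.append(next_state(trace[-1], acc_done=True))
    trace.append(next_state(trace[-1], acc_ready=True))
    return trace


print([s.value for s in run_layer(conv_layer=1)])
print([s.value for s in run_layer(conv_layer=0)])
```

Without its trigger, each state holds, which mirrors the way the controller waits in MP or Conv until WDMA0 reports completion.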

3.7.3. Configurable Hardware Parameters

- Number of weight BRAMs: Increasing this parameter reduces the initial computational latency by enabling faster weight loading.

- Number of IFM BRAMs: Increasing this parameter reduces latency by overlapping IFM loading with weight/bias loading and allows IFM prefetching during reuse-only computation phases for convolution.

- Number of OFM BRAMs: Increasing this parameter reduces the probability of Acc_Lock activation, thereby lowering stall-induced latency.
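
The effect of the OFM BRAM count on stalls can be illustrated with a toy cycle-level model. The model is an assumption for illustration and does not reproduce the actual Acc_Lock logic: the PU produces one packet per cycle, the write-back side drains up to `drain_burst` packets every `drain_period` cycles, and a cycle counts as a stall whenever all OFM buffers are full. Both parameter names and their values are hypothetical.

```python
def count_stalls(num_ofm_brams, cycles=1000, drain_period=4, drain_burst=4):
    """Count cycles in which the producer must stall because every OFM buffer is full."""
    occupancy = 0
    stalls = 0
    for t in range(cycles):
        # Write-back side: drain a burst at the start of every period.
        if t % drain_period == 0:
            occupancy -= min(occupancy, drain_burst)
        # Producer side: store one packet, or stall if no buffer is free.
        if occupancy < num_ofm_brams:
            occupancy += 1
        else:
            stalls += 1
    return stalls


for n in (1, 2, 3, 4):
    print(n, "OFM buffers ->", count_stalls(n), "stall cycles")
```

In this toy setting the stall count falls as buffers are added and reaches zero once the buffers can absorb a full drain period, which is the qualitative trend the parameter description states.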

4. FPGA Evaluation

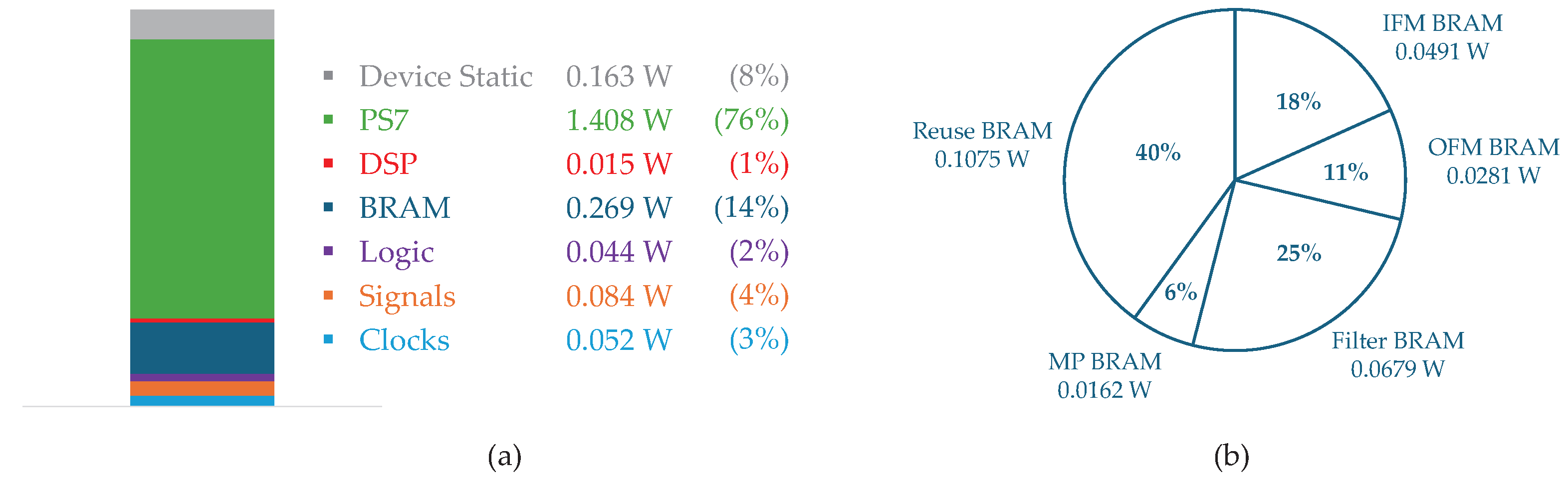

4.1. RTL Synthesis and Implementation

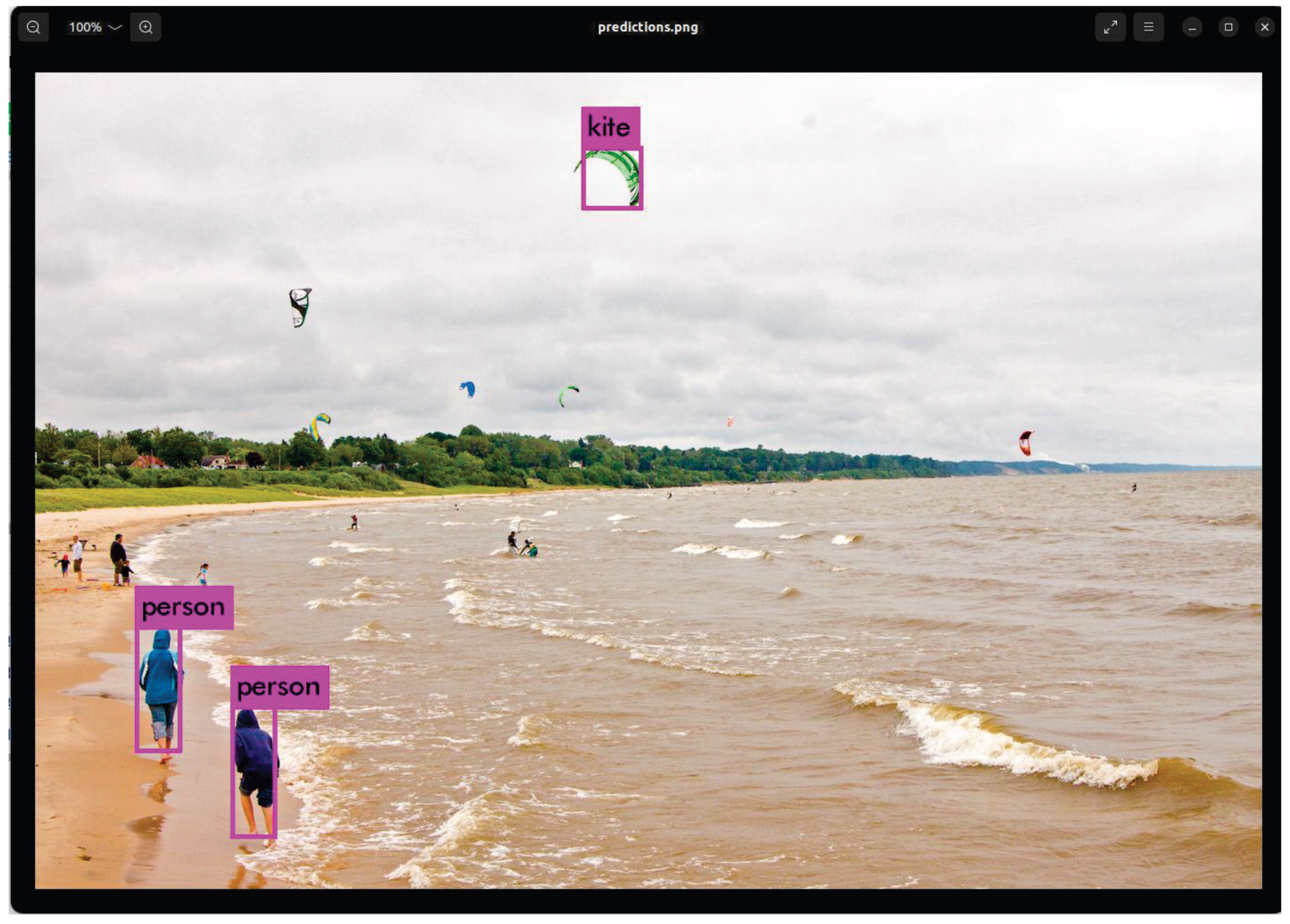

4.2. System-Level Evaluation

4.3. Comparison with Other FPGA Implementations

4.4. Remarks

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

2. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

3. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

4. Tan, F.; Zhai, M.; Zhai, C. Foreign Object Detection in Urban Rail Transit Based on Deep Differentiation Segmentation Neural Network. Heliyon 2024, 10, e37072.

5. Tang, Y.; Yi, J.; Tan, F. Facial Micro-Expression Recognition Method based on CNN and Transformer Mixed Model. Int. J. Biom. 2024, 16, 463–477.

6. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

7. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

8. Cai, Y.; Li, H.; Yuan, G.; Niu, W.; Li, Y.; Tang, X.; Ren, B.; Wang, Y. YOLObile: Real-Time Object Detection on Mobile Devices via Compression-Compilation Co-Design. arXiv 2020, arXiv:2009.05697.

9. Cheng, T.; Song, L.; Ge, Y.; Liu, W.; Wang, X.; Shan, Y. YOLO-World: Real-Time Open-Vocabulary Object Detection. arXiv 2024, arXiv:2401.17270.

10. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

11. Bochkovskiy, A.; Wang, C.; Liao, H.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

12. Tervén, J.; Córdova-Esparza, D.-M.; Romero-González, J.-A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

13. Bagherzadeh, S.; Daryanavard, H.; Semati, M.R. A Novel Multiplier-Less Convolution Core for YOLO CNN ASIC Implementation. J. Real Time Image Proc. 2024, 45, 1–15.

14. Yap, J.W.; Yussof, Z.M.; Salim, S.I.; Lim, K.C. Fixed Point Implementation of Tiny-YOLO-v2 Using OpenCL on FPGA. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 506–512.

15. Herrmann, V.; Knapheide, J.; Steinert, F.; Stabernack, B. A YOLOv3-Tiny FPGA Architecture Using a Reconfigurable Hardware Accelerator for Real-Time Region of Interest Detection. In Proceedings of the Euromicro Conference on Digital System Design, Maspalomas, Spain, 7–9 September 2022; pp. 453–460.

16. Krishnamoorthi, R. Quantizing deep convolutional networks for efficient inference: A whitepaper. arXiv 2018, arXiv:1806.08342.

17. Danilowicz, M.; Kryjak, T. Real-Time Multi-Object Tracking Using YOLOv8 and SORT on a SoC FPGA. In Proceedings of the Applied Reconfigurable Computing, Architectures, Tools, and Applications, Seville, Spain, 9–11 April 2025; pp. 214–230.

18. Zhao, B.; Wang, Y.; Zhang, H.; Zhang, J.; Chen, Y.; Yang, Y. 4-bit CNN Quantization Method with Compact LUT-Based Multiplier Implementation on FPGA. IEEE Trans. Instrum. Meas. 2023, 72, 2008110.

19. Chang, S.-E.; Li, Y.; Sun, M.; Shi, R.; So, H.K.H.; Qian, X.; Wang, Y.; Lin, X. Mix and Match: A Novel FPGA-Centric Deep Neural Network Quantization Framework. In Proceedings of the IEEE International Symposium on High-Performance Computer Architecture, Seoul, Republic of Korea, 27 February–3 March 2021; pp. 208–220.

20. Li, Z.; Wang, J. An Improved Algorithm for Deep Learning YOLO Network Based on Xilinx ZYNQ FPGA. In Proceedings of the International Conference on Culture-oriented Science & Technology, Beijing, China, 28–30 August 2020; pp. 1–4.

21. Yang, X.; Zhuang, C.; Feng, W.; Yang, Z.; Wang, Q. FPGA Implementation of a Deep Learning Acceleration Core Architecture for Image Target Detection. Appl. Sci. 2023, 13, 4144.

22. Xu, G.; Zhao, W.; Ren, Z.; Chen, Z.; Gao, J. Design and Implementation of the High-Performance YOLO Accelerator Based on Zynq FPGA. In Proceedings of the International Conference on Electronics and Information Technology, Chengdu, China, 15–18 March 2024; pp. 1–6.

23. Valadanzoj, Z.; Daryanavard, H.; Harifi, A. High-Speed YOLOv4-Tiny Hardware Accelerator for Self-Driving Automotive. J. Supercomput. 2024, 80, 6699–6724.

24. Chen, Y.-H.; Krishna, T.; Emer, J.S.; Sze, V. Eyeriss: An Energy-Efficient Reconfigurable Accelerator for Deep Convolutional Neural Networks. IEEE J. Solid State Circuits 2017, 52, 127–138.

25. Yan, Z.; Zhang, B.; Wang, D. An FPGA-Based YOLOv5 Accelerator for Real-Time Industrial Vision Applications. Micromachines 2024, 15, 1164.

26. Huang, H.; Liu, Z.; Chen, T.; Hu, X.; Zhang, Q.; Xiong, X. Design Space Exploration for YOLO Neural Network Accelerator. Electronics 2020, 9, 1921.

27. Zhang, N.; Wei, X.; Chen, H.; Liu, W. FPGA Implementation for CNN-Based Optical Remote Sensing Object Detection. Electronics 2021, 10, 282.

28. Chen, X.; Li, J.; Zhao, Y. Hardware Resource and Computational Density Efficient CNN Accelerator Design Based on FPGA. In Proceedings of the IEEE International Conference on Integrated Circuits, Technologies and Applications, Zhuhai, China, 1–3 December 2021; pp. 243–244.

29. Sha, X.; Yanagisawa, M.; Shi, Y. An FPGA-Based YOLOv6 Accelerator for High-Throughput and Energy-Efficient Object Detection. IEICE Trans. Fundam. 2025, 180, 473–481.

30. dhm2013724. yolov2_xilinx_fpga. Available online: https://github.com/dhm2013724/yolov2_xilinx_fpga (accessed on 5 August 2025).

31. Adiono, T.; Putra, A.; Sutisna, N.; Syafalni, I.; Mulyawan, R. Low Latency YOLOv3-Tiny Accelerator for Low-Cost FPGA Using General Matrix Multiplication Principle. IEEE Access 2021, 9, 141890–141913.

32. Bao, K.; Xie, T.; Feng, W.; Yu, C. Power-Efficient FPGA Accelerator Based on Winograd for YOLO. IEEE Access 2020, 8, 174549–174563.

33. Nakahara, H.; Yonekawa, H.; Fujii, T.; Sato, S. A Lightweight YOLOv2: A Binarized CNN with a Parallel Support Vector Regression for an FPGA. In Proceedings of the ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 25–27 February 2018; pp. 31–40.

34. ARM. AMBA AXI and ACE Protocol Specification; ARM IHI 0022H.b; ARM Limited: Cambridge, UK, 2021; Available online: https://developer.arm.com/documentation/ihi0022/latest/ (accessed on 5 August 2025).

35. Xilinx Inc. Vivado Design Suite User Guide: Design Analysis and Closure Techniques (UG906); v2023.2; Xilinx Inc.: San Jose, CA, USA, 2023; Available online: https://docs.amd.com/r/en-US/ug906-vivado-design-analysis (accessed on 5 August 2025).

36. Digilent Inc. Zybo Z7 Getting Started Guide; Digilent Inc.: Pullman, WA, USA, 2023; Available online: https://digilent.com/reference/programmable-logic/zybo-z7/start (accessed on 5 August 2025).

37. Wen, L.; Du, D.; Cai, Z.; Lei, Z.; Chang, M.-C.; Qi, H.; Lim, J.; Yang, M.-H.; Lyu, S. UA-DETRAC: A New Benchmark and Protocol for Multi-Object Tracking. In Proceedings of the 2020 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 1182–1191.

38. Xilinx Inc. Zynq-7000 SoC Technical Reference Manual (UG585); v2023.2; Xilinx Inc.: San Jose, CA, USA, 2023; Available online: https://docs.amd.com/r/en-US/ug585-zynq-7000-SoC-TRM/Functional-Description (accessed on 5 August 2025).

39. Xilinx Inc. PetaLinux Tools Reference Guide (UG1144); v2023.2; Xilinx Inc.: San Jose, CA, USA, 2023; Available online: https://docs.amd.com/r/2023.2-English/ug1144-petalinux-tools-reference-guide (accessed on 2 October 2025).

40. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

41. Li, P.; Che, C. Mapping YOLOv4-Tiny on FPGA-Based DNN Accelerator by Using Dynamic Fixed-Point Method. In Proceedings of the International Symposium on Parallel Architectures, Algorithms and Programming, Xi’an, China, 24–26 September 2021; pp. 248–253.

42. Yu, Z.; Bouganis, C.S. A Parameterisable FPGA-Tailored Architecture for YOLOv3-Tiny. In Proceedings of the Applied Reconfigurable Computing, Architectures, Tools, and Applications, Toledo, Spain, 1–3 April 2020; pp. 330–344.

43. Zhai, J.; Li, B.; Lv, S.; Zhou, Q. FPGA-Based Vehicle Detection and Tracking Accelerator. Sensors 2023, 23, 2208.

44. Zhang, S.; Cao, J.; Zhang, Q.; Zhang, Q.; Zhang, Y.; Wang, Y. An FPGA-Based Reconfigurable CNN Accelerator for YOLO. In Proceedings of the 2020 IEEE 3rd International Conference on Electronics Technology (ICET), Chengdu, China, 8–12 May 2020; pp. 74–78.

| Variables | Definition |
|---|---|
| | Size of IFM (13/26/52/104/208/416) |
| | Size of IFM channel |
| | Size of OFM |
| | Size of OFM channel |
| | Size of quantization in bytes [Bytes] |
| | Bias channel size |
| K | Kernel size |
| S | Stride size |
| | Number of filters |
| | Number of IFM reuse BRAM addresses |
| | Number of weight BRAMs |
| | Number of IFM BRAMs |
| | Number of OFM BRAMs |
| | Size of tiles in the row direction |
| | Size of tiles in the column direction |
| | Size of tiles in the row direction for max pooling |
| | Size of tiles in the column direction for max pooling |
| | Number of flip-flops required for the tile storage |
| | Capacity of the IFM reuse BRAM [Bytes] |
| | The MAC count for a single tile |

| Abbreviation | Definition |
|---|---|
| LUT | Look-Up Table |
| FF | Flip-Flop |
| BRAM | 36 Kb Block Random Access Memory |
| DSP | Digital Signal Processing |

| | Maximum IFM (Bytes) | Maximum Bias (Bytes) | Maximum Weight (Bytes) |
|---|---|---|---|
| Required bytes | | | |
| 32 bit | 22.15 MB | 5.12 KB | 47.19 MB |
| 16 bit | 11.08 MB | 2.56 KB | 23.59 MB (= 1024 × 23.04 KB) |
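
The byte counts in this table can be reproduced with a short calculation. The closed-form expressions are missing from the table as shown, so the element counts below (a 416 × 416 × 32 IFM, 1280 bias values, and 1024 filters of 3 × 3 × 1280 weights) are assumptions. They were chosen because they reproduce every listed figure, including the stated identity 23.59 MB = 1024 × 23.04 KB.

```python
# Assumed element counts that reproduce the table (not taken from the source formulas).
IFM_ELEMENTS = 416 * 416 * 32          # largest feature map: width x height x channels
BIAS_ELEMENTS = 1280                   # largest bias channel size
WEIGHTS_PER_FILTER = 3 * 3 * 1280      # kernel x kernel x input channels
NUM_FILTERS = 1024


def required_bytes(elements: int, bytes_per_value: int) -> int:
    """Storage needed for `elements` values at the given quantization width."""
    return elements * bytes_per_value


for label, width in (("32 bit", 4), ("16 bit", 2)):
    ifm = required_bytes(IFM_ELEMENTS, width)
    bias = required_bytes(BIAS_ELEMENTS, width)
    weight = required_bytes(WEIGHTS_PER_FILTER * NUM_FILTERS, width)
    print(f"{label}: IFM {ifm / 1e6:.2f} MB, bias {bias / 1e3:.2f} KB, "
          f"weight {weight / 1e6:.2f} MB")

# One filter plus the bias at 16 bit: 23.04 KB + 2.56 KB = 25.6 KB.
per_filter_plus_bias = (required_bytes(WEIGHTS_PER_FILTER, 2)
                        + required_bytes(BIAS_ELEMENTS, 2))
print(f"one filter + bias at 16 bit: {per_filter_plus_bias / 1e3:.1f} KB")
```

Under these assumptions, the weights of a single filter plus the bias occupy 25.6 KB at 16 bits, which equals the filter reuse BRAM size quoted in the Introduction.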

| Steps | Processing Step (IFM Row) | PU Data Source | Reuse BRAM_0 b State | Reuse BRAM_1 b State | Number of IFM Data Stored |

|---|---|---|---|---|---|

| ① | P a ← 0; 0 to | Memory | Read | Idle | × |

| ② | 0 to | Memory | Read | Idle | × |

| ③ | P ← P + 1; P × () to P × ( + | Memory + BRAM_0 | Write | Read | × |

| ④ | P × () to P × () + | Memory + BRAM_0 | Write | Read | × |

| ⑤ | P ← P + 1; P × () to P × () + | Memory + BRAM_1 | Read | Write | × |

| ⑥ | P × () to P × () + | Memory + BRAM_1 | Read | Write | × |

| Convolution Resource Utilization [%] | 100% | ||||||

| 50% | 100% | ||||||

| 25% | 50% | 100% | |||||

| 12.5% | 25% | 50% | 100% | ||||

| 6.25% | 12.5% | 25% | 50% | 100% | |||

| 3.125% | 6.25% | 12.5% | 25% | 50% | 100% | ||

| Maximum | 40 | 80 | 160 | 320 | 640 | 1280 | |

| 133.76 KB | 133.4 KB | 135.68 KB | 138.24 KB | 143.36 KB | 153.6 KB |

| 3 | 4 | 5 | 6 | |||||

| 117 | 720 | 234 | 960 | 351 | 1200 | 468 | 1440 | |

| 234 | 1344 | 468 | 1792 | 702 | 2240 | 936 | 2688 | |

| State | Name | Description |
|---|---|---|
| 1 | Idle | Initial state of the accelerator. The main controller receives the layer configuration and Acc_start signal from the PS via AXI-Lite. |
| 2 | Start | Preparation stage. Based on the received layer information, the main controller generates control data and issues signals to other controllers and PUs. |
| 3-1 | MP | Max pooling stage. The RDMA2 controller loads IFM data into MP BRAMs, which are processed through the max pooling pre-processing unit, max pooling processing unit, and post-processing unit. OFM data are grouped into 64-bit packets, stored in OFM BRAMs, and then transferred to external memory via WDMA0. The Acc_Lock controller monitors OFM BRAM occupancy to manage pipeline flow. |
| 3-2 | Conv | Convolution stage. The RDMA0 controller loads bias and weight data into their respective BRAMs, while the RDMA2 controller loads IFM data. Pre-processed data are sent to one of the two convolution PUs, followed by the Leaky ReLU PU and post-processing unit. Processed OFM data are grouped, stored in OFM BRAMs, and transferred to memory via WDMA0. Pipeline flow is regulated by the Acc_Lock controller. |
| 4 | Done | Completion stage. The main controller sends Acc_done to the PS via AXI-Lite and resets all units in preparation for the next layer. |

| Resource | Used | Available | Utilization [%] |
|---|---|---|---|
| LUT | 26,147 | 53,200 | 49.15 |
| FF | 17,605 | 106,400 | 16.55 |
| BRAM | 98 | 140 | 70.00 |
| DSP | 126 | 220 | 57.27 |
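
As a quick consistency check, the utilization column can be recomputed from the Used and Available columns. The figures are copied from the table; the dictionary layout and function name are illustrative.

```python
# (used, available) pairs copied from the resource table.
RESOURCES = {
    "LUT": (26_147, 53_200),
    "FF": (17_605, 106_400),
    "BRAM": (98, 140),
    "DSP": (126, 220),
}


def utilization_percent(used: int, available: int) -> float:
    """Utilization as a percentage, rounded to two decimals as in the table."""
    return round(100.0 * used / available, 2)


for name, (used, available) in RESOURCES.items():
    print(f"{name}: {utilization_percent(used, available):.2f}%")
```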

| | [41] | [42] | [43] | [44] | This Work |
|---|---|---|---|---|---|
| Target FPGA | ZedBoard | ZedBoard | Zynq-7000 SoCs | ZCU 102 | Zybo-Z7-20 |
| Model | YOLOv4-Tiny | YOLOv3-Tiny | YOLOv3 | YOLOv2 | YOLOv2 |
| Dataset | COCO | COCO | UA-DETRAC | COCO | COCO |
| mAP (%) | 40.2 | 30.9 | 71.1 | 48.1 | 48.1 |
| Model GFLOPs | 7.5 | 5.6 | 19.5 | 29.5 | 29.5 |
| LUT | 31 K (58%) | 26 K (49%) | 38 K (71%) | 95 K (35%) | 26 K (49%) |
| FF | 31 K (29%) | 46 K (43%) | 43 K (40%) | 90 K (17%) | 17 K (17%) |
| BRAM (36 Kb) | 132 (94%) | 92.5 (66%) | 132.5 (94%) | 245.5 (27%) | 98 (70%) |
| DSP | 149 (67%) | 160 (72%) | 144 (65%) | 609 (24%) | 126 (57%) |
| Frequency [MHz] | 100 | 100 | 230 | 300 | 100 |
| Latency [ms] | 18,025 | 532 | 310 | 288 | 12,639 |
| GOPS | 0.4 | 10.5 | 62.9 | 102.43 | 2.33 |
| GOPS/DSP | 0.003 | 0.07 | 0.437 | 0.168 | 0.018 |
| Energy [mJ] | 42,900 | 1787.52 | 471.2 | 3398.4 | 25,720.37 |
| Power [W] | 1.994 | 3.4 | 1.52 | 11.8 | 2.035 |
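
The derived rows for the "This Work" column can be checked with a few lines of arithmetic. The table's footnotes defining GOPS and energy are not present here, so the formulas below (GOPS as model GFLOPs divided by latency, energy as power times latency, and GOPS/DSP computed from the rounded GOPS value) are assumptions. They are used because they reproduce the reported 2.33 GOPS, 0.018 GOPS/DSP, and 25,720.37 mJ.

```python
# Figures for the "This Work" column, copied from the comparison table.
MODEL_GFLOPS = 29.5
LATENCY_MS = 12_639
DSP_USED = 126
POWER_W = 2.035


def throughput_gops(model_gflops: float, latency_ms: float) -> float:
    """Assumed definition: operations per inference divided by inference time."""
    return model_gflops / (latency_ms / 1000.0)


def energy_mj(power_w: float, latency_ms: float) -> float:
    """Assumed definition: energy per inference (W x ms = mJ)."""
    return power_w * latency_ms


gops = throughput_gops(MODEL_GFLOPS, LATENCY_MS)
gops_per_dsp = round(gops, 2) / DSP_USED   # uses the rounded GOPS, as the table appears to
energy = energy_mj(POWER_W, LATENCY_MS)

print(f"GOPS: {gops:.2f}")
print(f"GOPS/DSP: {gops_per_dsp:.3f}")
print(f"Energy: {energy:.2f} mJ")
```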


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, H.; Kim, T.-K. Design and Implementation of a YOLOv2 Accelerator on a Zynq-7000 FPGA. Sensors 2025, 25, 6359. https://doi.org/10.3390/s25206359
