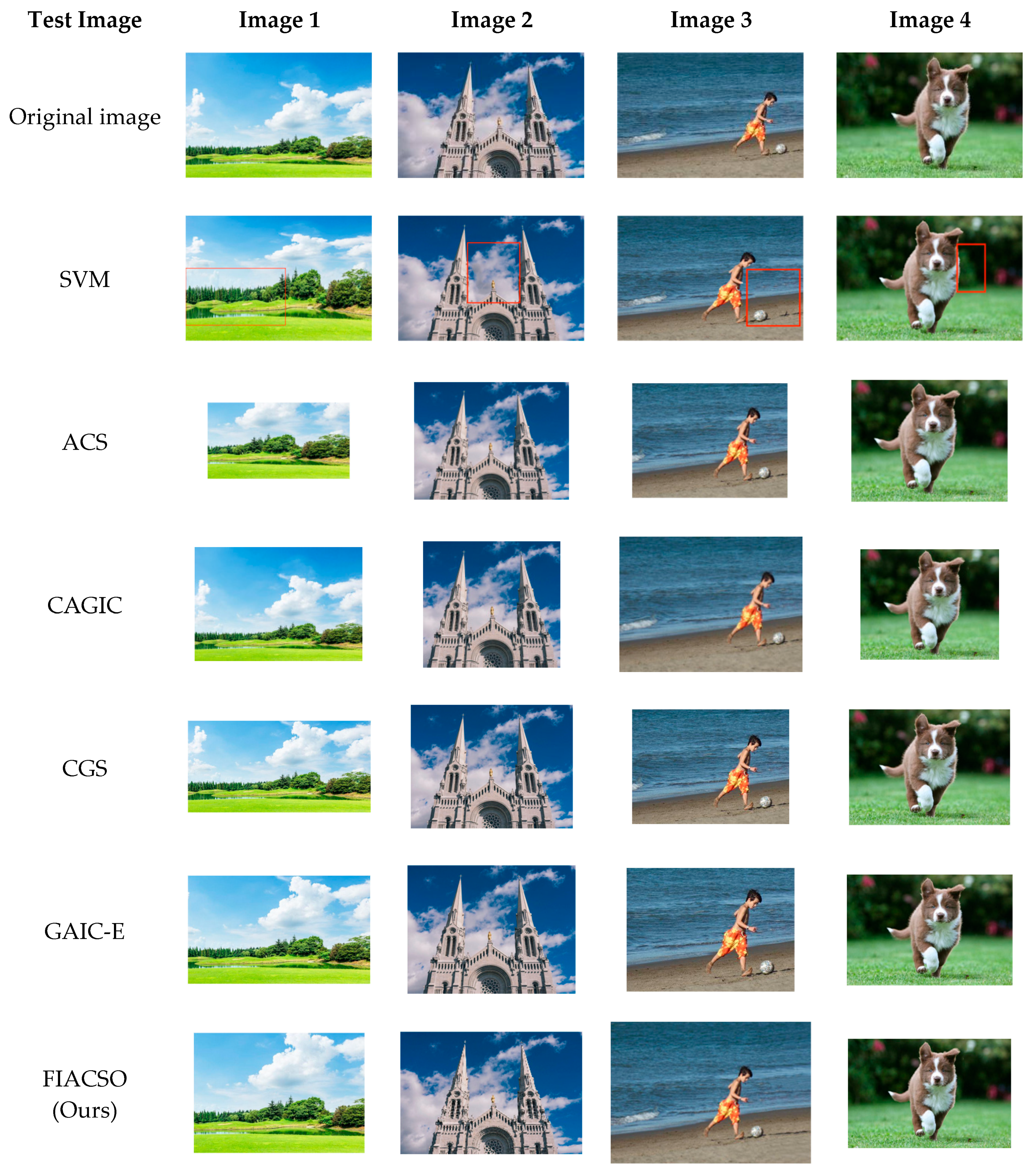

2.1. Main Ideas

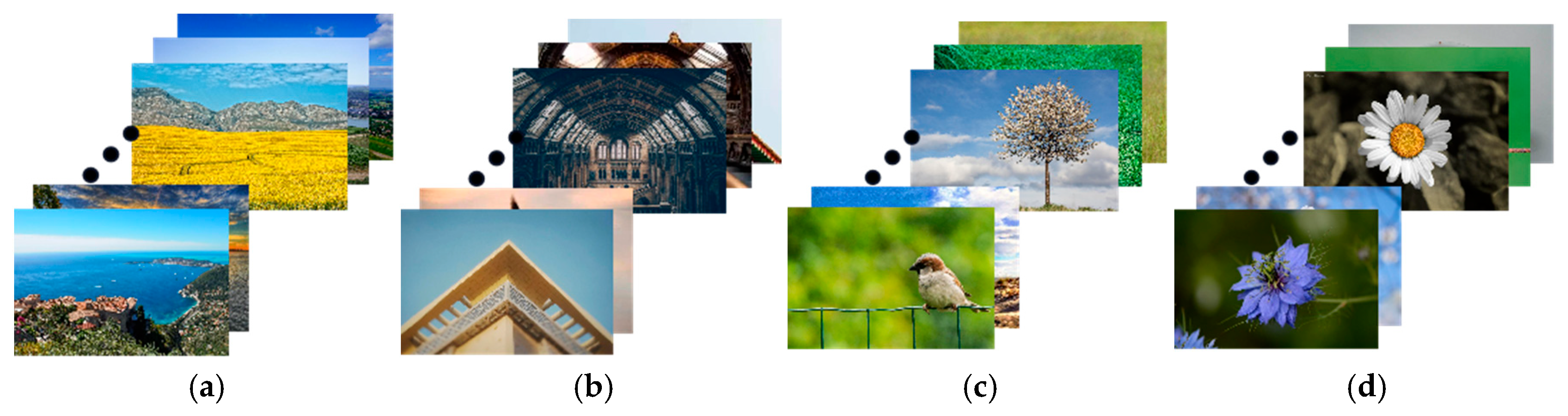

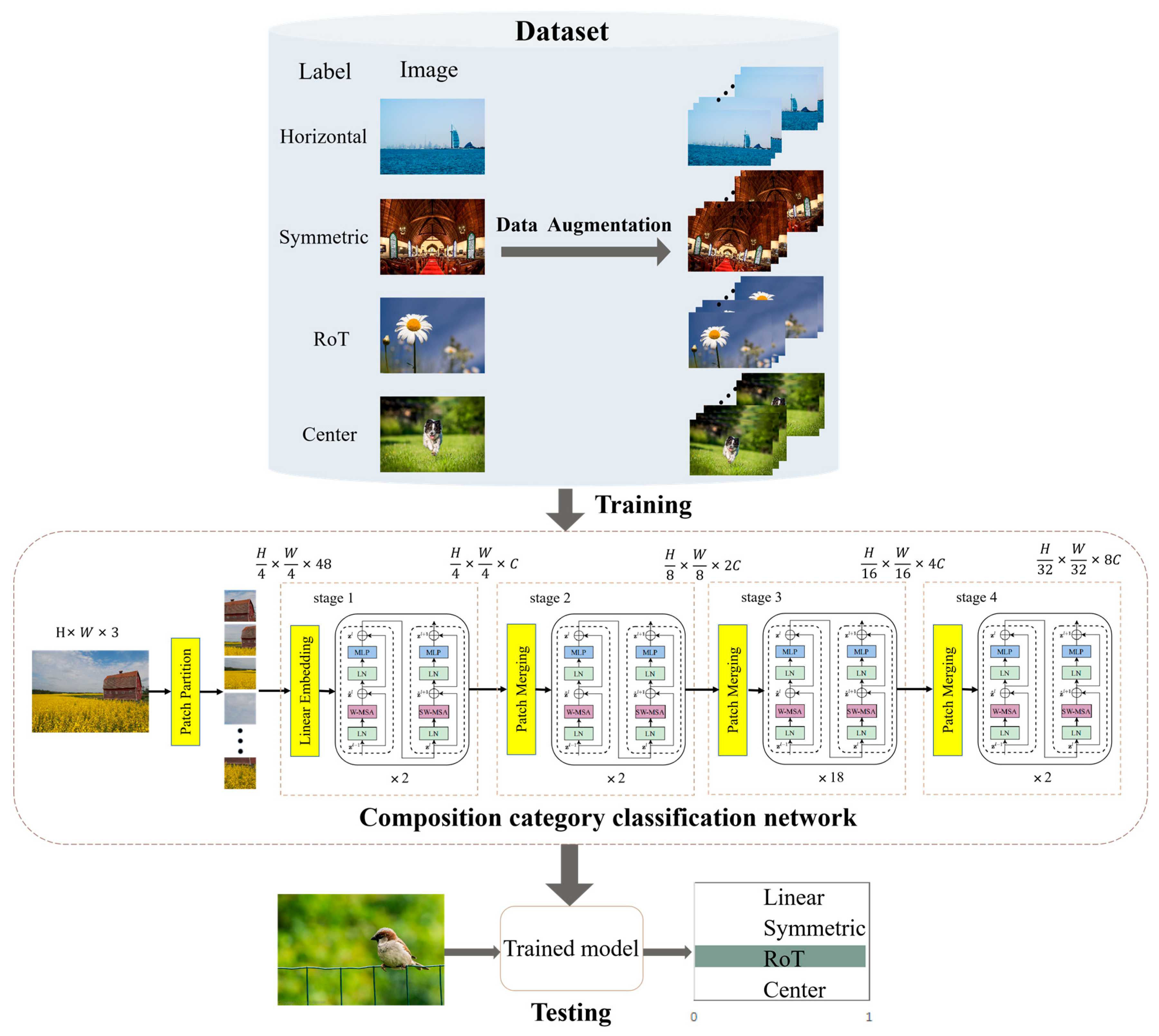

Different themes and scenarios call for different composition techniques. For instance, landscape photography frequently uses linear or symmetrical composition, while portrait photography leans towards central composition or the rule of thirds (RoT). This paper addresses the optimization of two distinct types of images: those with main semantic lines and those featuring salient objects. Both types are pervasive in practical applications and play a pivotal role in the aesthetic appeal of images.

For images featuring main semantic lines, the main semantic line plays a crucial role in directing visual attention and emphasizing the structure of the image. As depicted in Figure 1, when optimizing the composition of such images, the role of semantic lines can be further emphasized, enabling viewers to focus more on the theme and understand the content of the image.

For images containing salient objects, the salient object serves as the central element of composition, capturing viewers’ attention at its core. As depicted in Figure 2, optimizing the composition of such images aids in accentuating the salient object, granting it a more prominent presence within the frame.

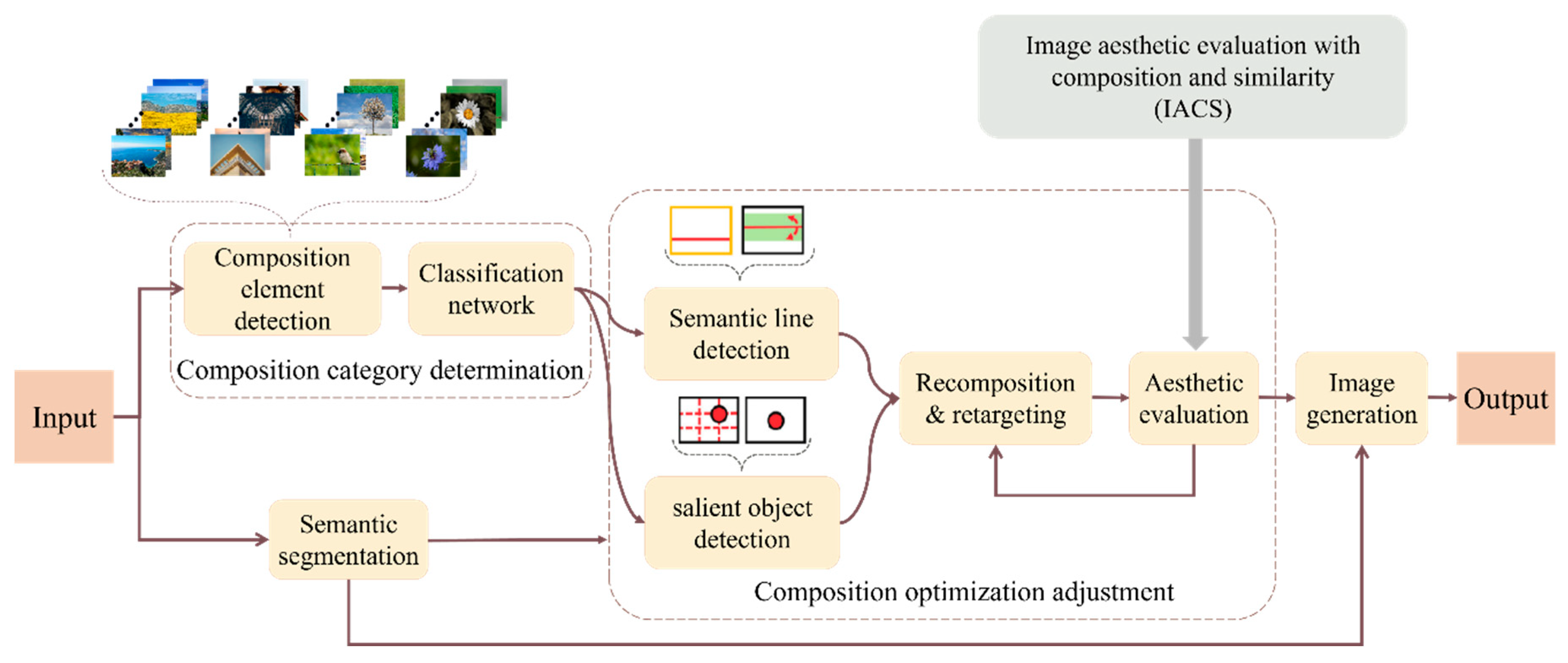

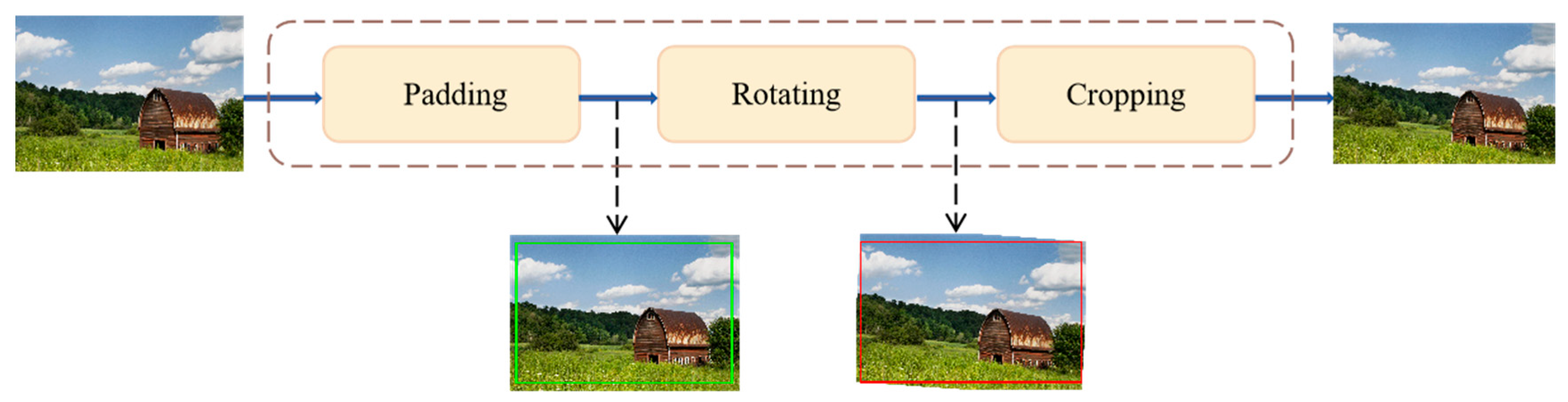

The FIACSO process for improving the predicted aesthetic score, which integrates composition and similarity, is proposed in this paper and illustrated in Figure 3. As Figure 3 shows, FIACSO primarily comprises the following components.

Composition category determination: In this section, a composition category determination network was designed to detect the composition category of the input image. This network was capable of flexibly identifying complex composition characteristics during the composition category prediction phase and provided confidence scores for adherence to composition rules, thereby demonstrating high accuracy and generalization capabilities.

Image aesthetic evaluation with composition and similarity (IACS): In this section, a unified function was used to balance composition aesthetics and image similarity. The evaluation of composition aesthetics focused on the distance between the main semantic line or salient object and the nearest rule-of-thirds line or central line. For images with prominent semantic lines, a modified Hough transform was employed to detect the main semantic line. For images containing salient objects, a salient object detection method based on LCSF was utilized to determine the salient object region. To evaluate the similarity to the original image, edge similarity computed from Canny edge maps was combined with SSIM.

Composition optimization adjustment: This section focuses on maximizing the aesthetic evaluation of composition while preserving the original semantic and structural information of the image. It involves a series of steps, including content-aware rotation, determining the position of the main semantic line or salient object, and gradually adjusting this position using the IACS method. The ultimate goal is to achieve the highest possible composition aesthetic evaluation.

The main objective of this study is to investigate methods for optimizing image composition. Therefore, special emphasis is placed on three key elements: determining the categories of composition, employing the IACS method for aesthetic evaluation, and making adjustments for composition optimization. Semantic segmentation and image generation methods are not the primary focus of this study. Instead, well-established methods are adopted for both tasks in subsequent experiments. The Swin-Base [13] was utilized as the semantic segmentation network model, while the DeepSIM [14], a generative adversarial network trained on single images, was employed for image generation.

2.2. Problem Definition

Unless otherwise specified in the Rules, the relevant definitions are as follows:

: Original input image.

: Optimized output image.

: Composition category.

: Composition aesthetic evaluation.

: Similarity evaluation.

: Comprehensive evaluation.

: Semantic segmentation result of image.

: Intermediate image during the optimization process.

: Weight parameter, with a value range of [0, 1].

: Length of the semantic line.

: Minimum length threshold of the semantic line.

: Pixel spacing between points on the semantic line.

: Maximum pixel spacing threshold.

: The collection of candidate main semantic lines.

The main semantic line.

: The line of thirds or center line closest to line .

: The region of the salient object.

: The center point of .

: The intersection point closest to the line of thirds or the center line, nearest to point .

: The central axis of .

: The line of thirds or center line closest to line .

: Euclidean distance.

,, Luminance, contrast, and structure.

: The mean of .

: The mean of .

: The standard deviation of .

: The standard deviation of .

: The covariance between and .

: The edge detection results of the image during the optimization process.

: The edge detection results of the semantic segmentation result image.

: The standard deviation of .

: The standard deviation of .

: The covariance between and .

, , : Ensure the stability of calculations by incorporating constants to prevent instability when the denominator approaches zero.

: The initial IACS score.

: The initial number of datasets.

: The number of datasets after data augmentation.

The original image is first assigned a composition category and then semantically segmented to obtain a segmentation result image that contains the composition information. Subsequently, the image composition is incrementally adjusted using the IACS method, resulting in an adjusted image. Finally, a generative adversarial network combines the original image with the adjusted image to produce the optimized result image. This process enhances the composition aesthetics while preserving the content and features of the original image.

2.4. Image Aesthetic Evaluation with Composition and Similarity (IACS)

To ensure the quality of output images, optimization focused on two essential attributes. Firstly, the image composition was optimized according to specific compositional rules. Secondly, the optimized image preserved as much information from the original image as possible while minimizing visual flaws or distortions. To address these requirements, an IACS method was proposed. This method combines the aesthetics of image composition and the similarity between the final and the original images into a unified function, the comprehensive evaluation A, defined in Equation (1).

In Equation (1), one term represents the evaluation of the compositional aesthetics of the optimized output image, and the other represents the similarity assessment between the optimized output image and the input image. A weight parameter in [0, 1] regulates the impact of these two elements. The objective is to maximize the comprehensive evaluation. A higher weight enhances the compositional quality of the output, while a lower weight maintains closer similarity to the input image.

The weight was determined as 0.5 through the sensitivity analysis presented in Section 3.4.1. This value maximizes both the IACS comprehensive score (A = 0.80 ± 0.04) and the subjective preference rate (82 ± 4%), achieving an optimal balance between compositional aesthetic enhancement and original content preservation.
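The trade-off controlled by the weight can be illustrated with a minimal sketch. It assumes that Equation (1) is a convex combination of the two evaluations, which matches the description above but is not confirmed by the surviving text; the function names `iacs_score` and `best_candidate` are hypothetical.

```python
def iacs_score(composition, similarity, weight=0.5):
    """Combine composition aesthetics and similarity.

    ASSUMPTION: Equation (1) is taken to be a convex combination,
    weight * composition + (1 - weight) * similarity.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * composition + (1.0 - weight) * similarity


def best_candidate(candidates, weight=0.5):
    """Return the (composition, similarity) pair with the highest combined score."""
    return max(candidates, key=lambda c: iacs_score(c[0], c[1], weight))


# Three hypothetical intermediate images, scored as (composition, similarity).
candidates = [(0.9, 0.5), (0.6, 0.95), (0.8, 0.8)]
balanced = best_candidate(candidates, weight=0.5)
composition_only = best_candidate(candidates, weight=1.0)
```

With the weight at 0.5 the balanced candidate wins, whereas a weight of 1.0 ignores similarity and selects the candidate with the strongest composition regardless of distortion.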

2.4.1. Composition Aesthetic Evaluation Based on Main Semantic Line

Linear and symmetric compositions are particularly suited for images with semantic lines, including landscapes or architectural scenes. A direct and effective method to evaluate such compositions is to detect the main semantic line and assess its aesthetic quality with the composition aesthetic evaluation.

Since the results of semantic segmentation may include multiple semantic lines, it is necessary to identify one main semantic line based on its position for aesthetic evaluation.

The Hough Transform [18] can detect semantic lines, including short and discontinuous ones. The core of the Hough Transform for line detection relies on the Hough theorem, which maps line detection in the image space ($x$, $y$) to peak detection in the parameter space ($\rho$, $\theta$). The mathematical expression of the Hough theorem for line detection is given in Equation (2):

$$\rho = x\cos\theta + y\sin\theta \tag{2}$$

where $\rho$ denotes the perpendicular distance from the origin of the image coordinate system to the straight line, and $\theta$ denotes the angle between the perpendicular line and the x-axis. In Hough space, each pixel ($x$, $y$) in the image space corresponds to a sinusoidal curve; the intersection of multiple sinusoidal curves in Hough space indicates that these pixels belong to the same straight line in the image space.
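The voting idea behind Equation (2) can be sketched in a few lines of standard-library Python. The accumulator resolution (1-pixel distance bins, 1-degree angle steps) and the function names are illustrative choices, not values taken from this paper.

```python
import math


def hough_rho(x, y, theta):
    """Equation (2): perpendicular distance of the line through (x, y) with normal angle theta."""
    return x * math.cos(theta) + y * math.sin(theta)


def hough_peak(points, n_theta=180):
    """Vote in a coarse (rho, theta) accumulator and return the strongest cell."""
    votes = {}
    for x, y in points:
        for k in range(n_theta):
            theta = math.pi * k / n_theta          # theta sampled in [0, pi)
            rho = round(hough_rho(x, y, theta))    # 1-pixel rho bins
            votes[(rho, k)] = votes.get((rho, k), 0) + 1
    (rho, k), count = max(votes.items(), key=lambda item: item[1])
    return rho, 180.0 * k / n_theta, count


# 100 pixels on the horizontal line y = 5.
points = [(x, 5) for x in range(100)]
rho, theta_deg, count = hough_peak(points)
```

Every pixel contributes one sinusoid to the accumulator, and only the cell at a distance of 5 and an angle of 90 degrees is crossed by all of them, so it collects all 100 votes.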

However, the standard Hough Transform may detect short or discontinuous lines that are not suitable as main semantic lines. Thus, this paper modified the Hough Transform as follows:

Semantic Line Length Filtering: To exclude overly short semantic lines, a minimum length threshold was established. Any detected semantic line is considered only if its length meets the minimum length criterion of Equation (3).

Pixel Spacing Threshold: To ensure continuity of semantic lines, a maximum pixel spacing threshold was established. For a segment formed by two pixels on the line, their distance must satisfy the maximum spacing criterion of Equation (4).

Determining the Main Semantic Line: Based on the filtering conditions from the first two steps, a semantic line that meets both conditions is selected from the candidate set as the main semantic line.
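The two filters can be sketched as follows. This is a minimal illustration, assuming each candidate line is an ordered list of pixel coordinates and that both thresholds are inclusive; choosing the longest surviving line is an assumption made here for definiteness, since the text only states that a line meeting both conditions is selected. All function names are hypothetical.

```python
import math


def segment_length(points):
    """Euclidean distance between the first and last point of a candidate line."""
    (x0, y0), (x1, y1) = points[0], points[-1]
    return math.hypot(x1 - x0, y1 - y0)


def max_gap(points):
    """Largest distance between consecutive points on a candidate line."""
    return max(math.dist(p, q) for p, q in zip(points, points[1:]))


def select_main_line(candidates, min_length, max_spacing):
    """Keep lines that pass both filters; return the longest survivor or None.

    ASSUMPTION: ties between surviving lines are resolved by length.
    """
    kept = [c for c in candidates
            if len(c) >= 2
            and segment_length(c) >= min_length
            and max_gap(c) <= max_spacing]
    return max(kept, key=segment_length, default=None)


short = [(0, 0), (3, 0)]                                # too short
gappy = [(0, 10), (5, 10), (60, 10), (100, 10)]         # long but discontinuous
good = [(0, 20), (10, 20), (20, 20), (30, 20), (40, 20)]
main_line = select_main_line([short, gappy, good], min_length=30, max_spacing=15)
```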

In evaluating composition aesthetics based on the main semantic line, the image was first converted to grayscale and processed through Canny edge detection [19] to produce a binary image. The modified Hough Transform was then used to detect the main semantic line. Finally, the compositional aesthetic evaluation is calculated by utilizing the Euclidean distance between the main semantic line and the nearest rule-of-thirds line or central line.

For linear composition, the alignment of the main semantic line with both horizontal and vertical rule-of-thirds lines is evaluated, and the axis yielding the smaller normalized distance is selected. The corresponding compositional aesthetic score is given by Equation (5), which is expressed in terms of the width and height of the image, the main semantic line, and the perpendicular Euclidean distance between lines.

For symmetrical composition, the alignment of the main semantic line with both the vertical and horizontal central lines is evaluated, and the corresponding compositional aesthetic score is given by Equation (6).
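A distance-based score of this kind can be sketched as below. The exact forms of Equations (5) and (6) are not reproduced here, so the sketch assumes the score is one minus the distance to the nearest target line, normalized by the image extent along the relevant axis. It further assumes the main semantic line is axis-aligned (for example after content-aware rotation) and is described by an orientation and a pixel offset. The function name and parameters are hypothetical.

```python
def line_score(offset, orientation, width, height, targets=(1 / 3, 2 / 3)):
    """Score an axis-aligned main semantic line against target lines.

    ASSUMPTION: score = 1 - normalized distance to the nearest target line.
    `targets` are fractions of the image extent: thirds for linear
    composition, (0.5,) for symmetrical composition.
    """
    if orientation not in ("horizontal", "vertical"):
        raise ValueError("orientation must be 'horizontal' or 'vertical'")
    # A horizontal line is positioned along the image height, a vertical one along the width.
    extent = height if orientation == "horizontal" else width
    position = offset / extent
    return 1.0 - min(abs(position - t) for t in targets)


on_third = line_score(200, "horizontal", width=900, height=600)
mid_frame = line_score(300, "horizontal", width=900, height=600)
symmetric = line_score(450, "vertical", width=900, height=600, targets=(0.5,))
```

Under these assumptions a horizon sitting exactly on the upper third scores 1, while the same horizon in the middle of the frame scores lower for linear composition but would score 1 against the central line.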

2.4.2. Composition Aesthetic Evaluation Based on Salient Object

RoT and central composition are widely used for photos with clear foreground salient objects. Such images are particularly common in personal photo collections, for example pictures of family members, friends, pets, and interesting objects such as flowers.

Detecting the salient object region is crucial for this type of composition and subsequent optimization. To this end, a salient object detection method based on LCSF was proposed to accurately distinguish between the foreground and background of an image, aiming to highlight the salient object region. The method involved processing the semantic segmentation results using a Gaussian filter and converting the image to the Lab color space. The luminance channel was then subjected to threshold segmentation and morphological operations to calculate salience features and extract the maximum features.

The calculation of salience features based on the luminance channel is given by Equation (7). For each pixel (x, y), the salience feature value is computed from the luminance value of the pixel in the Lab color space and the luminance values in a 3 × 3 local neighborhood centered at that pixel, which contains 9 pixels in total. A rectifying operation is used to suppress negative differences and retain pixels with higher luminance than the local average.

Subsequently, the GrabCut algorithm [20] was used to segment the image, extract the foreground, and multiply it by the salience features. The fusion process of the foreground region and salience features is given by Equation (8), which combines the foreground mask output by GrabCut with the salience features to produce the final salient object image. This formula enhances salient features in the foreground region through pixel-wise multiplication while suppressing background noise, effectively enhancing the visibility of the salient object region in the image.
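The salience computation and the mask fusion described for Equations (7) and (8) can be sketched in plain Python. The sketch assumes the salience value is the luminance minus the mean of its 3 × 3 neighborhood, clipped at zero, and that border pixels are handled by replicating the edge; the border rule is an assumption, and the foreground mask is supplied by hand here rather than produced by GrabCut.

```python
def salience_map(lum):
    """Local-contrast salience: max(0, L(x, y) - mean of the 3x3 neighborhood).

    ASSUMPTION: border pixels replicate the nearest edge value.
    """
    h, w = len(lum), len(lum[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += lum[yy][xx]
            out[y][x] = max(0.0, lum[y][x] - total / 9.0)
    return out


def fuse(mask, salience):
    """Pixel-wise product of a binary foreground mask and the salience map."""
    return [[m * s for m, s in zip(mask_row, sal_row)]
            for mask_row, sal_row in zip(mask, salience)]


# A single bright pixel on a dark background.
lum = [[10, 10, 10],
       [10, 100, 10],
       [10, 10, 10]]
sal = salience_map(lum)
fused = fuse([[0, 0, 0], [0, 1, 0], [0, 0, 0]], sal)
```

Only the bright center pixel exceeds its local average, so it is the only pixel with non-zero salience, and the mask keeps it because it lies in the foreground.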

The LCSF-based salient object region detection method proposed in this study is outlined in Algorithm 1.

Algorithm 1. Salient object region detection based on LCSF

Based on the detected salient object region, two elements need to be considered when calculating compositional aesthetics. The first element is the distance from the salient object to the four rule-of-thirds intersection points or to the center point of the image. The second element is whether the salient object is placed along the rule-of-thirds lines or the center line. For an image, the compositional aesthetic score is calculated according to Equation (9), which combines one term for each of these two elements. The parameter α ∈ [0, 1] controls the relative influence of the two terms. Through the sensitivity analysis presented in Section 3.4.2, α = 1/3 was identified as optimal: it prioritizes one term while retaining a moderate weight for the other, resulting in the highest average evaluation score (0.83 ± 0.07) and the highest subjective aesthetic score (4.2 ± 0.2).

For the rule-of-thirds composition, the term for the first element is given by Equation (10), which is based on the center point of the salient object region and the rule-of-thirds intersection point closest to it.

For central composition, the term for the first element is given by Equation (11), which is based on the center point of the salient object region and the center point of the image.

According to the above formulas, when the center of the main object in the image aligns with one of the intersection points, the value of this term is 1.

For most images with prominent salient objects, including a person or a tall building, the central axis is nearly vertical. Therefore, this paper calculates the central axis by dividing the salient object into two equal regions using a vertical line.

For rule-of-thirds composition, the term for the second element is given by Equation (12), which is based on the central axis of the salient object region and the rule-of-thirds line closest to it.

For central composition, the term for the second element is given by Equation (13), which is based on the central axis of the salient object region and the center line of the image.
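The structure of Equations (9) to (13) can be illustrated with a hedged sketch. It assumes that each term equals one minus a normalized distance (consistent with the statement that a perfectly aligned object scores 1), that point distances are normalized by the image diagonal, and that α weights the point term while 1 − α weights the line term. None of these choices is confirmed by the surviving text, and all names are hypothetical.

```python
import math

THIRDS = (1 / 3, 2 / 3)


def point_term(cx, cy, width, height, central=False):
    """1 - normalized distance from the object center to the nearest target point.

    ASSUMPTION: distances are normalized by the image diagonal.
    """
    fractions = (0.5,) if central else THIRDS
    targets = [(fx * width, fy * height) for fx in fractions for fy in fractions]
    nearest = min(math.dist((cx, cy), t) for t in targets)
    return 1.0 - nearest / math.hypot(width, height)


def line_term(axis_x, width, central=False):
    """1 - normalized distance from the vertical central axis to the nearest target line."""
    fractions = (0.5,) if central else THIRDS
    return 1.0 - min(abs(axis_x / width - f) for f in fractions)


def salient_score(cx, cy, axis_x, width, height, alpha=1 / 3, central=False):
    """Weighted sum of the two terms.

    ASSUMPTION: alpha weights the point term and (1 - alpha) the line term.
    """
    return (alpha * point_term(cx, cy, width, height, central)
            + (1.0 - alpha) * line_term(axis_x, width, central))


# Object centered on the upper-left rule-of-thirds intersection of a 900 x 600 image.
aligned = salient_score(300, 200, 300, width=900, height=600)
# The same object in the middle of the frame, scored against both rule sets.
centered_rot = salient_score(450, 300, 450, width=900, height=600)
centered_central = salient_score(450, 300, 450, width=900, height=600, central=True)
```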

2.4.3. Evaluation of Similarity to Original Images

Image retargeting is a technique used to adjust the position of semantic lines or foreground salient objects in order to enhance compositional aesthetics. During this process, visual distortion affects the image, particularly when dealing with images featuring complex background structures. To manage this distortion within an acceptable range, this paper employed similarity measurement to quantify the visual variances between the optimized and original images.

While traditional quality metrics like mean square error (MSE) are computationally simple, they fail to accurately reflect visual quality from the perceptual standpoint. Hence, SSIM [21] has been widely used to assess perceptual image similarity. Unlike MSE, SSIM is a perceptual model that aligns more closely with human visual perception. SSIM is calculated according to Equation (14), which combines three terms that respectively represent the luminance, contrast, and structure comparisons between the two images.

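The SSIM value typically ranges from 0 to 1, with values closer to 1 indicating greater similarity between the two images. For two images $x$ and $y$, the individual modules are calculated using Equation (15):

$$l(x, y) = \frac{2\mu_x\mu_y + C_1}{\mu_x^2 + \mu_y^2 + C_1}, \quad c(x, y) = \frac{2\sigma_x\sigma_y + C_2}{\sigma_x^2 + \sigma_y^2 + C_2}, \quad s(x, y) = \frac{\sigma_{xy} + C_3}{\sigma_x\sigma_y + C_3} \tag{15}$$

where $\mu_x$ and $\mu_y$ respectively represent the means of $x$ and $y$, while $\sigma_x$ and $\sigma_y$ represent their respective standard deviations. $\sigma_{xy}$ represents the covariance between $x$ and $y$. $C_1$, $C_2$ and $C_3$ are constants used to ensure computational stability and prevent instability when the denominator approaches zero.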
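The three SSIM modules can be computed directly from image statistics. The sketch below is a simplification: it treats each image as a flat list of intensities and uses global statistics over the whole image, whereas SSIM is usually evaluated over local windows and averaged. The constants follow the standard SSIM convention (0.01 and 0.03 scaled by a dynamic range of 255), which is assumed here rather than taken from the equations of this paper.

```python
import math


def ssim_terms(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """Return (luminance, contrast, structure) for two equal-length intensity lists.

    ASSUMPTION: global statistics instead of sliding windows; standard constants.
    """
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    c3 = c2 / 2.0
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    sx, sy = math.sqrt(vx), math.sqrt(vy)
    luminance = (2 * mx * my + c1) / (mx ** 2 + my ** 2 + c1)
    contrast = (2 * sx * sy + c2) / (vx + vy + c2)
    structure = (cov + c3) / (sx * sy + c3)
    return luminance, contrast, structure


ramp = [10, 50, 90, 130]
same = ssim_terms(ramp, ramp)
flipped = ssim_terms(ramp, ramp[::-1])
```

Comparing an image with itself gives 1 for every module. Reversing the ramp leaves the mean and spread unchanged, so luminance and contrast stay at 1, but the covariance becomes negative and the structure term drops below zero.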

However, the structural term of SSIM does not adequately capture edge structures, making it difficult to measure differences in edge and contour structure information between images [22]. Because the human visual system is most sensitive to edge and contour structure information, this paper improves upon SSIM by adding a measure of edge similarity. Canny edge detection was applied to both the semantic segmentation result image and the image obtained during the optimization process to generate edge maps. The edge similarity is then computed with Equation (16) from the standard deviations of the two edge detection result images and their covariance, together with a constant that ensures computational stability.
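An edge similarity of this kind can be sketched as follows. The sketch assumes Equation (16) mirrors the structure term of SSIM, that is, the covariance of the two edge maps plus a constant, divided by the product of their standard deviations plus the same constant. The default constant of 1e-4 is likewise an assumption about where the stability constant reported for the experiments is applied. Edge maps are represented as flat lists of 0 and 1 rather than Canny output.

```python
import math


def edge_similarity(edges_a, edges_b, c=1e-4):
    """Correlation-style similarity between two binary edge maps.

    ASSUMPTION: form (cov + c) / (std_a * std_b + c), analogous to the SSIM structure term.
    """
    n = len(edges_a)
    ma, mb = sum(edges_a) / n, sum(edges_b) / n
    sa = math.sqrt(sum((a - ma) ** 2 for a in edges_a) / n)
    sb = math.sqrt(sum((b - mb) ** 2 for b in edges_b) / n)
    cov = sum((a - ma) * (b - mb) for a, b in zip(edges_a, edges_b)) / n
    return (cov + c) / (sa * sb + c)


edges = [0, 1, 1, 0, 0, 1]
inverted = [1 - e for e in edges]
blank = [0] * 6

identical = edge_similarity(edges, edges)
opposite = edge_similarity(edges, inverted)
both_blank = edge_similarity(blank, blank)
```

The blank case shows why the constant matters: with no edges in either map, both standard deviations are zero, and without the constant the ratio would be undefined.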

By combining Equations (14)–(16), the similarity between the images is calculated as shown in Equation (17). In Equation (17), the three parameters that control the relative importance of the three components were set to 1. A further parameter in [0, 1] governs the influence of image structure and edges, and its value is set to 0.5 to balance the two factors. Typically, the constants are defined as $C_1 = (K_1 L)^2$, $C_2 = (K_2 L)^2$, and $C_3 = C_2/2$, where $K_1 = 0.01$, $K_2 = 0.03$, and $L = 255$. In this experiment, a constant of $1 \times 10^{-4}$ was introduced to ensure numerical stability.