Abstract

Depth completion aims to reconstruct dense depth maps from sparse LiDAR measurements guided by RGB images. Although BPNet enhanced depth structure perception through a bilateral propagation module and achieved state-of-the-art performance at the time, there is still room for improvement in leveraging 3D geometric priors and adaptively fusing heterogeneous modalities. To this end, we propose GAC-Net, a Geometric–Attention Fusion Network that enhances geometric representation and cross-modal fusion. Specifically, we design a dual-branch PointNet++-S encoder, in which two PointNet++ modules with different receptive fields extract scale-aware geometric features from the back-projected sparse point cloud. These features are then fused using a channel attention mechanism to form a robust global 3D representation. A Channel Attention-Based Feature Fusion Module (CAFFM) is further introduced to adaptively integrate this geometric prior with RGB and depth features. Experiments on the KITTI depth completion benchmark demonstrate the effectiveness of GAC-Net, which achieves an RMSE of 680.82 mm and ranks first among all peer-reviewed methods at the time of submission.

1. Introduction

Depth completion [] is a fundamental perception task that aims to reconstruct dense and accurate depth maps from sparse sensor inputs. It plays a critical role in various applications, such as autonomous driving [,,,,,,,,,] and sensor-based environmental understanding [,]. However, due to hardware constraints and occlusions, LiDAR sensors typically produce sparse and unevenly distributed depth maps. For example, a 64-line LiDAR provides valid measurements for only about 4% of image pixels [,,], which poses significant challenges for downstream 3D perception tasks []. Although the KITTI dataset [] remains the most widely used benchmark for depth completion, recent works such as RSUD20K [] emphasize the importance of dataset diversity and robustness evaluation under diverse conditions, further underscoring the need for methods with strong generalization ability.

To overcome this challenge, mainstream approaches [,] typically adopt multi-modal fusion strategies by combining sparse depth maps with RGB images. Many existing methods [,,] follow a two-stage architecture that performs early or late fusion of depth and image features. However, these approaches struggle with distinguishing between valid and missing observations and often fail to effectively process the irregular distribution of sparse depth values []. A representative improvement, BPNet [], introduces a three-stage framework that begins with a bilateral propagation module to generate an initial dense depth prior, followed by a multi-modal fusion stage and a final refinement stage. Nevertheless, its fusion module relies on a conventional U-Net backbone [] and lacks explicit modeling of 3D geometric priors and adaptive integration of heterogeneous features.

To address these limitations, we propose GAC-Net, a Geometric–Attention Fusion Network that significantly improves depth completion performance by explicitly modeling 3D geometric priors and enhancing the multi-modal fusion mechanism. Specifically, GAC-Net incorporates two key modules. First, a dual-branch PointNet++-S encoder is designed with two parallel PointNet++ [] branches of different receptive fields to extract scale-aware geometric features from the back-projected sparse point cloud. These features are fused using a channel attention mechanism [] to form a robust global geometric representation. Second, a Channel Attention-Based Feature Fusion Module (CAFFM) is introduced to adaptively recalibrate and integrate the global 3D priors with RGB-depth features, improving the flexibility and accuracy of the fusion process.

The main contributions of this study are summarized as follows:

- We propose a dual-branch PointNet++-S encoder to extract scale-aware geometric features from sparse point clouds and form a robust 3D representation;

- We design a Channel Attention-Based Feature Fusion Module (CAFFM) to adaptively fuse geometric priors with RGB-depth features;

- Extensive experiments on the KITTI depth completion benchmark demonstrate that GAC-Net outperforms BPNet and other published methods in accuracy, robustness, and structural preservation, ranking first among peer-reviewed methods on the official leaderboard at the time of submission.

2. Related Work

2.1. Two-Dimensional-Based Depth Completion

Early deep learning methods for depth completion typically adopt a single-stage architecture [,,], where sparse depth maps and RGB images are concatenated and processed by a shared convolutional encoder-decoder network. For example, the Sparse-to-Dense [] framework proposed by Ma et al. fuses LiDAR inputs and monocular images through a unified backbone. Although these approaches are structurally simple and computationally efficient, they often produce overly smooth depth maps and fail to preserve fine geometric details around object boundaries.

To address these limitations, subsequent studies introduced two-stage frameworks [,,,,,,,,], which first perform multi-modal feature fusion, followed by a refinement module for post-processing. CSPN [] and CSPN++ [], for instance, leverage convolutional spatial propagation networks to revisit the original sparse but accurate depth measurements and enhance edge sharpness. More recently, CFormer [] extends this line of research by employing a CNN–Transformer hybrid encoder to extract and fuse RGB-depth features, followed by a refinement stage for fine-grained detail recovery. These methods gained popularity for their effectiveness in boundary preservation. However, directly applying convolution to sparse inputs compromises fusion quality, and this issue is difficult to resolve in the post-processing stage.

To further improve depth estimation quality, BPNet [] proposes a three-stage architecture by adding a bilateral propagation module before fusion. This module generates an initial dense depth map from sparse input, providing structured geometric priors that alleviate data sparsity and enhance the overall completion performance, achieving state-of-the-art results at the time.

Nevertheless, all the above methods are fundamentally 2D-guided and lack explicit modeling of 3D spatial structure, which limits their ability to fully exploit the geometric information inherently embedded in sparse depth measurements.

2.2. 2D–3D Joint Depth Completion: Geometric, Multi-View, and Transformer Approaches

To better exploit the 3D structural information embedded in sparse depth data, recent studies [,,,,,,,,,,] have explored three major directions: geometry-based approaches, multi-view/BEV representations, and Transformer-based cross-modal reasoning, each contributing complementary strengths to depth completion performance.

Geometry-based approaches can be further divided into three categories. The first category involves estimating surface normals as intermediate cues to ensure local geometric coherence. For instance, DeepLiDAR [] and Depth-Normal [] leverage normal estimation to improve local consistency during the completion process. The second category employs graph-based representations to capture geometric and contextual relationships. Typical works, such as ACMNet [] and GraphCSPN [], use graph neural networks to model spatial affinities and guide the effective fusion of RGB and depth features. The third category adopts point-based modeling by treating sparse LiDAR measurements as 3D point clouds. Notable examples include FuseNet [] and PointDC [], which directly extract 3D geometric features from the reprojected point cloud branch and combine them with image features for joint depth estimation.

Beyond explicit geometry, multi-view or bird’s-eye-view (BEV) representations are leveraged to capture global 3D structural consistency. For example, BEV@DC [] constructs a BEV representation of sparse depth measurements to encode global spatial context while preserving fine-grained local geometry. More recently, TPVD [] decomposes sparse depth maps into multiple perspective views that are subsequently reprojected and fused in 2D space, thereby reconstructing dense depth maps with improved global consistency.

Another emerging line of research explores Transformer-based architectures for cross-modal reasoning. The Dual-Attention Fusion model [] employs a CNN–Transformer hybrid encoder and introduces a Dual-Attention Fusion module that combines convolutional spatial/channel attention with Transformer-based cross-modal attention. DeCoTR [] further advances this line by formulating depth completion purely as a cross-modal Transformer reasoning problem, leveraging global attention to integrate RGB and depth features. More recently, HTMNet [] extends this paradigm by coupling Transformer with a Mamba-based state-space bottleneck, representing the first attempt to introduce state-space modeling into depth completion and demonstrating promising results in handling challenging scenarios such as transparent and reflective objects.

Despite these advancements, existing methods still face several challenges. Geometry-based approaches often struggle to balance local precision and global consistency, multi-view/BEV-based representations increase computational cost, and Transformer-based frameworks, while powerful in modeling cross-modal relationships, generally involve complex architectures and heavy computational overhead. In contrast, our method adopts a point-based geometric encoding to explicitly exploit 3D structure and integrates it with an SE-based attention fusion module, thereby achieving a better balance between accuracy and efficiency for robust depth completion.

3. Methods

GAC-Net is designed to fully exploit the rich texture cues from RGB images and the accurate yet incomplete geometric priors from sparse depth data to generate high-quality dense depth maps. Let $I$ denote the input RGB image, $S$ the sparse depth map, and $D$ the predicted dense depth map.

As illustrated in Figure 1 and Figure 2, GAC-Net builds upon the multi-scale, three-stage architecture proposed in BPNet [], which performs coarse-to-fine depth prediction over six hierarchical scales (from scale-5 to scale-0). At each scale, depth prediction is performed in three sequential stages:

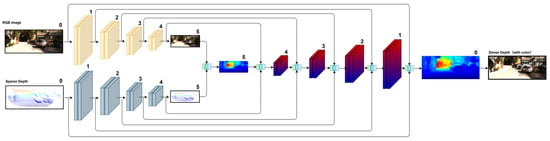

Figure 1.

Overview of the multi-scale processing mechanism in GAC-Net.

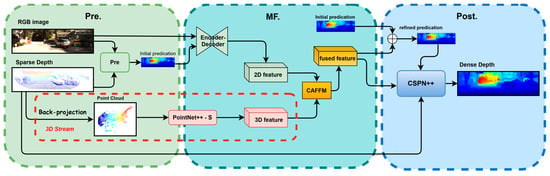

Figure 2.

Overview of the three-stage network.

- (1) Pre-processing stage: A bilateral propagation module generates an initial dense depth map from the sparse input, providing a more structured estimate for subsequent fusion;

- (2) Enhanced multi-modal fusion: A U-Net backbone [] extracts multi-scale 2D features, while the proposed dual-branch PointNet++-S encodes density-adaptive local and contextual 3D priors. These features are adaptively fused through the proposed Channel Attention-Based Feature Fusion Module (CAFFM), which reweights channels to enhance cross-modal consistency;

- (3) Refinement stage: Finally, residual learning [] and CSPN++ [] are employed to iteratively refine the dense depth, enforcing local consistency and recovering fine-grained structures.

Figure 1 illustrates the progressive coarse-to-fine improvement of the depth map across scales, while Figure 2 shows the internal three-stage architecture within each resolution. The following sections present each module in detail (Section 3.1, Section 3.2 and Section 3.3) and describe the multi-scale training loss (Section 3.4).

3.1. Preprocessing via Bilateral Propagation

To generate a structured initial dense depth map from sparse input, we adopt the bilateral propagation module originally proposed by BPNet []. This module propagates valid depth measurements by dynamically aggregating neighboring pixels based on spatial proximity and radiometric similarity, effectively mitigating ambiguity caused by sparse sampling.

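Given the input sparse depth map $S$, the initial dense depth $D^{\mathrm{init}}$ can be computed as follows:

$$D^{\mathrm{init}}_{i} = \sum_{j \in \mathcal{N}(i)} \omega_{ij}\, S_{j}$$

Here, $\mathcal{N}(i)$ denotes the set of neighboring pixels for pixel $i$, and $\omega_{ij}$ are adaptive weights reflecting both spatial distance and color similarity.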

This propagation provides a spatially structured initial estimate that serves as a reliable basis for the subsequent U-Net [] encoding and the proposed PointNet++-S-based geometry extraction. It should be noted that, consistent with BPNet [], the multi-scale coarse-to-fine strategy and the refinement stage remain unchanged, while our main contribution focuses on the 3D geometric modeling and enhanced multi-modal fusion modules described in Section 3.2 and Section 3.3.
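The weighted aggregation described in this section can be sketched for a single pixel as follows. This is only an illustration under stated assumptions: the weights here are hand-crafted Gaussians on pixel distance and intensity difference, normalized to sum to one, whereas the bilateral propagation module in BPNet predicts its weights with a network. The function name `propagate_pixel` and the parameters `radius`, `sigma_s`, and `sigma_c` are hypothetical.

```python
import math

def propagate_pixel(i, sparse, gray, radius=2, sigma_s=1.5, sigma_c=0.1):
    """Estimate depth at pixel i=(row, col) from valid sparse neighbours.

    sparse: 2D list of depths, 0.0 marks a missing measurement.
    gray:   2D list of intensities in [0, 1] (a stand-in for RGB).
    Weights are hand-crafted Gaussians (an assumption, not learned).
    """
    r0, c0 = i
    num, den = 0.0, 0.0
    for r in range(max(0, r0 - radius), min(len(sparse), r0 + radius + 1)):
        for c in range(max(0, c0 - radius), min(len(sparse[0]), c0 + radius + 1)):
            if sparse[r][c] <= 0.0:
                continue  # only valid measurements contribute
            d2 = (r - r0) ** 2 + (c - c0) ** 2            # spatial distance
            dc2 = (gray[r][c] - gray[r0][c0]) ** 2        # intensity difference
            w = math.exp(-d2 / (2 * sigma_s ** 2)) * math.exp(-dc2 / (2 * sigma_c ** 2))
            num += w * sparse[r][c]
            den += w
    # Dividing by the weight sum normalizes the weights to sum to one.
    return num / den if den > 0 else 0.0
```

With a neighbor on each side of an intensity edge, the estimate is dominated by the neighbor that looks like the target pixel, which is the behavior that keeps propagated depth from bleeding across object boundaries.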

3.2. Enhanced Multi-Modal Feature Fusion

To further enhance the robustness and accuracy of depth completion, GAC-Net improves the conventional multi-modal fusion design by explicitly incorporating global 3D geometric priors and a channel attention mechanism for adaptive cross-modal integration. Specifically, the proposed module combines a U-Net [] backbone for multi-scale 2D feature extraction, a dual-branch PointNet++-S encoder for multi-scale 3D geometry representation, and a Channel Attention-Based Feature Fusion Module (CAFFM) to adaptively fuse the 2D and 3D features. The detailed structure of these components and their interaction is shown in Figure 3.

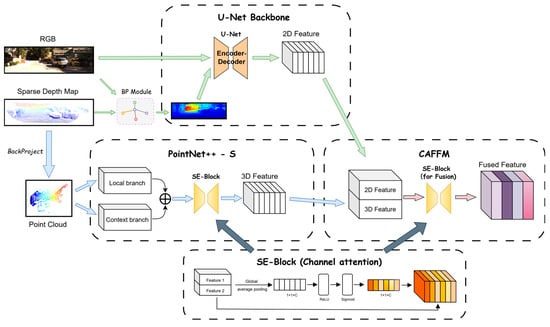

Figure 3.

The architecture of the proposed 2D–3D feature extraction and fusion pipeline. The pipeline consists of a U-Net backbone for multi-scale 2D feature extraction, a dual-branch PointNet++-S module for density-adaptive 3D geometric modeling from back-projected point clouds, and a Channel Attention-Based Feature Fusion Module (CAFFM) for adaptive integration of 2D and 3D features. SE-Blocks are employed in both 3D encoding and feature fusion stages to enhance channel-wise interactions.

3.2.1. U-Net Backbone for 2D Feature Fusion

In the multi-modal fusion stage, the RGB image $I$ and the initial dense depth map $D^{\mathrm{init}}$ generated by the bilateral propagation module are concatenated to form the 2D input tensor:

$$X_{2D} = \mathrm{Concat}(I, D^{\mathrm{init}})$$

A U-Net [] encoder-decoder backbone is then employed to extract hierarchical multi-scale 2D features $F_{2D}$:

$$F_{2D} = \mathrm{UNet}(X_{2D})$$

This architecture progressively captures local texture, edge, and structural context through down-sampling and restores spatial resolution with skip connections during up-sampling. The resulting feature maps provide rich 2D cues for robust multi-modal fusion.

3.2.2. Dual-Branch PointNet++-S Encoder for 3D Geometry Representation

To capture expressive geometric features and maintain robustness under varying sparsity conditions, we propose a dual-branch PointNet++-S encoder, where S stands for Scale-aware, indicating its ability to extract multi-scale 3D representations from sparse depth inputs.

Let $P = \{p_k\}_{k=1}^{N} \subset \mathbb{R}^{3}$ denote the 3D point cloud obtained by back-projecting valid sparse depth pixels using the camera intrinsics. The encoder consists of two parallel PointNet++ [] branches with distinct receptive field sizes, designed to extract both local and contextual geometric features.
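The back-projection step can be illustrated with the standard pinhole camera model. This is a minimal sketch, assuming the intrinsics are given as focal lengths `fx`, `fy` and principal point `cx`, `cy`, and that a depth of zero marks a missing pixel; the function name `back_project` is hypothetical.

```python
def back_project(sparse, fx, fy, cx, cy):
    """Lift valid depth pixels (depth > 0) to 3D camera coordinates.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, Z = depth,
    where u is the column index and v is the row index.
    """
    points = []
    for v, row in enumerate(sparse):
        for u, z in enumerate(row):
            if z > 0:
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

depth = [[0.0, 10.0],
         [4.0, 0.0]]
cloud = back_project(depth, fx=2.0, fy=2.0, cx=0.0, cy=0.0)
# cloud == [(5.0, 0.0, 10.0), (0.0, 2.0, 4.0)]
```

Only the valid pixels become points, so the size of the resulting cloud varies with the input sparsity.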

The local branch aggregates fine-grained neighborhood information via two Set Abstraction (SA) layers:

$$F_{\mathrm{local}} = \mathrm{SA}^{l}_{2}\big(\mathrm{SA}^{l}_{1}(P)\big)$$

where each SA layer samples a fixed number of neighboring points within a predefined radius.

Specifically, each Set Abstraction (SA) layer is defined by a tuple $(M, r, n)$, where:

- $M$ is the number of center points sampled from the point cloud using farthest point sampling (FPS);

- $r$ is the ball query radius used to group neighboring points around each center;

- $n$ is the number of neighboring points selected within radius $r$ for local feature aggregation.

These parameters control the receptive field and granularity of geometric information captured at each SA layer.
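The sampling and grouping performed by one SA layer can be sketched as below. This is a simplified illustration rather than the PointNet++ implementation: it omits the shared MLP and max pooling that follow grouping, and it does not pad groups to a fixed size. The function names and the choice of the first point as the initial center are assumptions.

```python
import math

def farthest_point_sampling(points, m):
    """Pick m center indices; each new center is the point farthest from all chosen so far."""
    centers = [0]  # start from the first point (an arbitrary choice)
    dist = [math.dist(p, points[0]) for p in points]
    while len(centers) < min(m, len(points)):
        nxt = max(range(len(points)), key=lambda k: dist[k])
        centers.append(nxt)
        # Track each point's distance to its nearest chosen center.
        dist = [min(d, math.dist(p, points[nxt])) for d, p in zip(dist, points)]
    return centers

def ball_query(points, center, radius, n):
    """Return indices of up to n points lying within `radius` of `center`."""
    inside = [k for k, p in enumerate(points) if math.dist(p, center) <= radius]
    return inside[:n]

pts = [(0, 0, 0), (1, 0, 0), (10, 0, 0), (11, 0, 0)]
centers = farthest_point_sampling(pts, 2)        # [0, 3]
group = ball_query(pts, pts[3], radius=1.5, n=8)  # [2, 3]
```

A small radius with few neighbors yields fine local groups, while a larger radius with more neighbors covers broader context, which is the distinction between the two branches.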

The context branch uses larger receptive fields to model broader scene-level geometry, with $\mathrm{SA}^{c}$ denoting its stacked SA layers:

$$F_{\mathrm{context}} = \mathrm{SA}^{c}(P)$$

The outputs from both branches are concatenated to form a unified multi-scale 3D feature:

$$F_{3D} = \mathrm{Concat}(F_{\mathrm{local}}, F_{\mathrm{context}})$$

To emphasize informative channels, we apply a squeeze-and-excitation (SE) block [] for channel-wise recalibration. The SE block first performs global average pooling:

$$z_{3D} = \mathrm{GAP}(F_{3D})$$

This channel descriptor is passed through two fully connected layers with ReLU ($\delta$) and Sigmoid ($\sigma$) activations to compute attention weights:

$$w_{3D} = \sigma\big(W_{2}\,\delta(W_{1} z_{3D})\big)$$

The recalibrated global 3D feature is then obtained via channel-wise scaling:

$$\tilde{F}_{3D} = w_{3D} \odot F_{3D}$$

where $\odot$ denotes element-wise multiplication. Finally, $\tilde{F}_{3D}$ is broadcast spatially to match the dimensions of the 2D feature map for cross-modal fusion in the next stage.
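The channel recalibration of the 3D feature can be sketched as follows. The sketch assumes each branch has already been reduced to a global feature vector, so that global average pooling leaves the concatenated vector unchanged; the weight matrices `w1` and `w2` stand in for the two learned fully connected layers, and all names are hypothetical.

```python
import math

def se_weights(z, w1, w2):
    """Channel attention: sigmoid(W2 @ relu(W1 @ z)) for a channel descriptor z."""
    hidden = [max(0.0, sum(a * b for a, b in zip(row, z))) for row in w1]
    return [1.0 / (1.0 + math.exp(-sum(a * b for a, b in zip(row, hidden))))
            for row in w2]

def recalibrate_3d(f_local, f_context, w1, w2):
    """Concatenate two global branch vectors and rescale each channel."""
    f3d = list(f_local) + list(f_context)  # channel concatenation
    z = f3d                                # pooling a global vector returns it unchanged
    w = se_weights(z, w1, w2)
    return [wc * x for wc, x in zip(w, f3d)]

# Toy weights: one hidden unit, two output channels.
out = recalibrate_3d([1.0], [2.0], w1=[[1.0, 1.0]], w2=[[1.0], [-1.0]])
```

In this toy case the first channel receives a weight close to one and the second a weight close to zero, showing how the block can favor one branch over the other on a per-channel basis.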

3.2.3. Channel Attention-Based Feature Fusion Module (CAFFM)

To integrate complementary local 2D texture features and global 3D geometric priors, we propose a Channel Attention-Based Feature Fusion Module (CAFFM). Let $F_{2D} \in \mathbb{R}^{C_{2D} \times H \times W}$ denote the multi-scale 2D feature map extracted by the U-Net [] backbone, and $\tilde{F}_{3D} \in \mathbb{R}^{C_{3D}}$ represent the recalibrated global 3D feature vector obtained from the PointNet++-S encoder.

To enable effective fusion, the 3D feature is first broadcast spatially and concatenated with the 2D feature along the channel dimension:

$$F_{\mathrm{cat}} = \mathrm{Concat}(F_{2D}, \hat{F}_{3D})$$

where $\hat{F}_{3D} \in \mathbb{R}^{C_{3D} \times H \times W}$ denotes the spatially broadcast version of $\tilde{F}_{3D}$.

A squeeze-and-excitation (SE) block [] is then applied to adaptively learn the fusion attention. First, global average pooling compresses the spatial information of each channel:

$$z_{c} = \frac{1}{HW} \sum_{u=1}^{H} \sum_{v=1}^{W} F_{\mathrm{cat}}(c, u, v)$$

where $c = 1, \dots, C_{2D} + C_{3D}$.

This channel descriptor is passed through two fully connected layers with ReLU ($\delta$) and Sigmoid ($\sigma$) activations to produce the channel attention weights:

$$w_{f} = \sigma\big(W_{4}\,\delta(W_{3} z)\big)$$

The final fused feature map is recalibrated via channel-wise scaling:

$$F_{\mathrm{fused}} = w_{f} \odot F_{\mathrm{cat}}$$

where $\odot$ denotes element-wise multiplication across channels.

This adaptively fused representation is subsequently passed to the refinement stage to generate the final high-quality dense depth map.
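The fusion steps of CAFFM (broadcast, concatenate, squeeze, excite, scale) can be sketched in plain Python on nested lists. This is an illustrative sketch only: feature maps are stored as `[channel][row][col]`, the matrices `w1` and `w2` stand in for the learned fully connected layers, and the function name `caffm` is hypothetical.

```python
import math

def caffm(f2d, f3d_vec, w1, w2):
    """Fuse a 2D map f2d[C2][H][W] with a global 3D vector f3d_vec[C3]."""
    h, wd = len(f2d[0]), len(f2d[0][0])
    # Broadcast each 3D channel to a constant H x W plane, then concatenate.
    f3d_map = [[[v] * wd for _ in range(h)] for v in f3d_vec]
    f_cat = list(f2d) + f3d_map
    # Squeeze: global average pooling per channel.
    z = [sum(sum(row) for row in ch) / (h * wd) for ch in f_cat]
    # Excitation: fully connected + ReLU, then fully connected + Sigmoid.
    hidden = [max(0.0, sum(a * b for a, b in zip(r, z))) for r in w1]
    attn = [1.0 / (1.0 + math.exp(-sum(a * b for a, b in zip(r, hidden))))
            for r in w2]
    # Scale every channel by its attention weight.
    return [[[a * x for x in row] for row in ch] for a, ch in zip(attn, f_cat)]

# One 2D channel (1 x 2 map) and one 3D channel; zero weights give attention 0.5.
fused = caffm([[[1.0, 3.0]]], [4.0], w1=[[0.0, 0.0]], w2=[[0.0], [0.0]])
# fused == [[[0.5, 1.5]], [[2.0, 2.0]]]
```

Because the attention vector spans both the 2D and the broadcast 3D channels, the module can raise or lower the contribution of each modality channel by channel instead of treating the concatenation uniformly.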

3.2.4. Pseudocode for Enhanced Multi-Modal Feature Fusion

To provide a concise overview of the proposed fusion mechanism, we summarize the workflow in Algorithm 1. At a given scale $s$, the inputs include the RGB image $I_s$, the sparse depth $S_s$, and the pre-processed dense depth $D^{\mathrm{init}}_{s}$ obtained from the bilateral propagation (Section 3.1). The process first extracts 3D features from the back-projected point cloud using a dual-branch PointNet++-S with SE-based recalibration, while 2D features are encoded from the concatenated RGB-depth input using a U-Net encoder. Next, the 3D features are spatially broadcast to the image grid and fused with the 2D features through the CAFFM module with channel-attention weighting, producing the enhanced multi-modal feature $F^{s}_{\mathrm{fused}}$. This procedure corresponds to Equations (2)–(15).

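Algorithm 1: Enhanced Multi-Modal Feature Fusion at scale $s$ (Equations (2)–(15))

Input: RGB image $I_s$; sparse depth map $S_s$; pre-processed dense depth $D^{\mathrm{init}}_{s}$ (from BP); camera intrinsics $K$

Output: Fused feature map $F^{s}_{\mathrm{fused}}$

Notation: $\mathrm{Concat}(\cdot)$: channel concatenation; $\odot$: channel-wise multiplication; $\mathrm{GAP}(\cdot)$: global average pooling; $\delta$: ReLU; $\sigma$: Sigmoid; $D^{\mathrm{init}}_{s}$: dense depth pre-processed via Bilateral Propagation

Procedure:

- 1: Step 1: Back-projection to 3D
- 2: Construct sparse point cloud $P_s$ from the valid pixels of $S_s$ using $K$.
- 3: Step 2: 2D feature encoding
- 4: Form 2D input $X_{2D} = \mathrm{Concat}(I_s, D^{\mathrm{init}}_{s})$
- 5: $F_{2D} = \mathrm{UNet}(X_{2D})$
- 6: Step 3: Dual-branch PointNet++-S
- 7: For each branch $b \in \{\mathrm{local}, \mathrm{context}\}$, starting from $F_{b} = P_s$:
- 8: For each SA layer with config $(M, r, n)$:
- 9: $F_{b} \leftarrow \mathrm{SA}(F_{b};\, M, r, n)$
- 10: End for
- 11: End for
- 12: Aggregate to multi-scale 3D feature $F_{3D} = \mathrm{Concat}(F_{\mathrm{local}}, F_{\mathrm{context}})$
- 13: Step 4: Channel recalibration on 3D
- 14: $z_{3D} = \mathrm{GAP}(F_{3D})$;
- 15: $w_{3D} = \sigma(W_{2}\,\delta(W_{1} z_{3D}))$;
- 16: $\tilde{F}_{3D} = w_{3D} \odot F_{3D}$.
- 17: Step 5: Spatial broadcasting of 3D feature
- 18: Broadcast $\tilde{F}_{3D}$ over the image grid to obtain $\hat{F}_{3D}$
- 19: Step 6: CAFFM: channel-attention fusion
- 20: $F_{\mathrm{cat}} = \mathrm{Concat}(F_{2D}, \hat{F}_{3D})$;
- 21: $z = \mathrm{GAP}(F_{\mathrm{cat}})$;
- 22: $w_{f} = \sigma(W_{4}\,\delta(W_{3} z))$;
- 23: $F^{s}_{\mathrm{fused}} = w_{f} \odot F_{\mathrm{cat}}$.
- 24: return $F^{s}_{\mathrm{fused}}$.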

3.3. Depth Refinement

Although the fused depth map captures most scene structures, it may still suffer from local inconsistencies and blurred boundaries. To address this, we adopt a combination of residual learning [] and CSPN++ [] in the refinement stage.

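Specifically, residual learning [] corrects global prediction errors using the fused feature $F_{\mathrm{fused}}$:

$$D^{(0)} = D^{\mathrm{init}} + \mathcal{R}(F_{\mathrm{fused}})$$

where $D^{\mathrm{init}}$ is the initial dense depth from Section 3.1 and $\mathcal{R}$ denotes the residual regressor. Subsequently, CSPN++ [] refines local details by iteratively propagating depth values:

$$D^{(t+1)}_{i} = \sum_{j \in \mathcal{N}(i) \cup \{i\}} \kappa_{ij}\, D^{(t)}_{j}$$

where $\kappa_{ij}$ are learned affinity weights.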

This refinement process preserves clear depth boundaries, improves the structural integrity of ambiguous regions, and enhances the overall accuracy of the predicted depth map, thereby supporting more precise estimation in the subsequent stages of the multi-scale framework.
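The two refinement steps, a residual correction followed by iterative affinity-weighted propagation, can be sketched on a single image row. This is a deliberately reduced illustration: it uses a fixed three-pixel neighborhood and a fixed number of iterations, whereas CSPN++ additionally adapts kernel sizes and iteration counts. The function name `refine` and the border handling are assumptions.

```python
def refine(depth, residual, affinity, steps=2):
    """Add a predicted residual, then run `steps` rounds of propagation on a 1D row.

    affinity[i] = (left, self, right) weights for pixel i, assumed to sum to 1.
    """
    d = [a + b for a, b in zip(depth, residual)]
    for _ in range(steps):
        nxt = []
        for i, (wl, ws, wr) in enumerate(affinity):
            left = d[max(i - 1, 0)]              # replicate border values
            right = d[min(i + 1, len(d) - 1)]
            nxt.append(wl * left + ws * d[i] + wr * right)
        d = nxt
    return d

row = refine([0.0, 3.0, 0.0], [0.0, 1.0, 0.0],
             affinity=[(0.25, 0.5, 0.25)] * 3, steps=1)
# row == [1.0, 2.0, 1.0]
```

Affinities concentrated on the center pixel keep sharp boundaries intact, while more evenly spread affinities smooth the estimate, so learning them per pixel lets the network choose between the two behaviors locally.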

3.4. Loss Function

To supervise the training of GAC-Net in an end-to-end manner, we adopt a multi-scale hybrid loss that combines the mean squared error (L2) and the mean absolute error (L1) terms, providing robust guidance for both global consistency and local structural accuracy. Specifically, for each predicted depth map $D_s$ at scale $s$, we apply a bilinear up-sampling operator $\mathcal{U}$ to match the ground truth resolution and compute the loss within valid pixel regions. The total training loss is defined as follows:

$$\mathcal{L} = \sum_{s=0}^{5} \lambda_{s}\, \frac{1}{|\mathcal{V}|} \sum_{i \in \mathcal{V}} \Big( \big(\mathcal{U}(D_{s})_{i} - D^{gt}_{i}\big)^{2} + \big|\mathcal{U}(D_{s})_{i} - D^{gt}_{i}\big| \Big)$$

where $D^{gt}$ denotes the ground truth dense depth map, $D_s$ is the predicted depth at scale $s$, $\mathcal{U}$ is the bilinear up-sampling operator, $\mathcal{V}$ is the set of valid pixels in $D^{gt}$, and $\lambda_s$ is a scale-dependent weight whose value follows the setting in BPNet [].

This hybrid loss ensures that GAC-Net effectively minimizes both global depth estimation errors and local fine-grained residuals across multiple resolutions, producing high-quality dense depth maps even under sparse and noisy input conditions.
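A multi-scale loss of this kind can be sketched as below. The sketch makes several assumptions: predictions are taken to be already up-sampled to the ground-truth resolution and flattened, the L2 and L1 terms are weighted equally, and the per-scale weights passed in are placeholders rather than the values used in the paper.

```python
def hybrid_loss(preds, gt, weights):
    """Sum over scales of weight * (MSE + MAE) on valid ground-truth pixels.

    preds:   list of flat predictions, one per scale, at ground-truth size.
    gt:      flat ground-truth list; 0.0 marks pixels without ground truth.
    weights: one scalar per scale (hypothetical values).
    """
    valid = [k for k, g in enumerate(gt) if g > 0]
    total = 0.0
    for pred, lam in zip(preds, weights):
        l2 = sum((pred[k] - gt[k]) ** 2 for k in valid) / len(valid)
        l1 = sum(abs(pred[k] - gt[k]) for k in valid) / len(valid)
        total += lam * (l2 + l1)
    return total

gt = [2.0, 0.0, 4.0]                      # the middle pixel has no ground truth
preds = [[3.0, 9.0, 4.0], [2.0, 0.0, 2.0]]
loss = hybrid_loss(preds, gt, weights=[1.0, 0.5])
# loss == 2.5
```

The masked pixel contributes nothing even though the first prediction is far off there, which matters on KITTI because the ground truth is itself only semi-dense.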

4. Experiment

4.1. Experiment Setting

4.1.1. Datasets

All experiments are conducted on the KITTI Depth Completion (DC) benchmark [], which is widely used to evaluate outdoor depth completion performance. The dataset is collected from an autonomous driving platform, with the ground truth depth generated by temporally registering multiple LiDAR scans and refined using stereo image pairs. It consists of 86,898 training frames, 1000 validation frames, and 1000 test frames. Following common practice, we crop the upper region of roughly 100 pixels (where LiDAR data is typically missing), reducing the original resolution of 1216 × 352 to 1216 × 256 during training. At inference, the full resolution is used for evaluation.

4.1.2. Training Details

We implement GAC-Net using PyTorch and train it on a workstation equipped with four NVIDIA RTX 4090 GPUs. The sparse depth map is first back-projected and processed with the proposed dual-branch PointNet++-S encoder to extract a robust global geometric feature.

Following common practice in recent depth completion works [,], we use the AdamW optimizer with a weight decay of 0.05 and apply gradient clipping with a maximum L2 norm of 0.1. The learning rate is scheduled using a warm-up and cosine annealing strategy: it starts at 1/40 of the maximum value ($2.5 \times 10^{-4}$), increases linearly to the maximum during an initial warm-up phase, and then decays to 10% of the maximum following a cosine curve for the remainder of training. The batch size is set to 2 per GPU. The model is trained for 30 epochs. Random brightness and contrast augmentation is applied to RGB images during training.
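The warm-up and cosine annealing schedule can be written as a small function of the iteration index. This sketch reads $2.5 \times 10^{-4}$ as the maximum learning rate, and the warm-up fraction `warmup_frac` is a placeholder, since the fraction used in training is not stated here; the function name is hypothetical.

```python
import math

def learning_rate(step, total_steps, max_lr=2.5e-4, warmup_frac=0.1):
    """Linear warm-up from max_lr/40 to max_lr, then cosine decay to 0.1 * max_lr.

    warmup_frac is a placeholder value, not taken from the paper.
    """
    warmup_steps = max(1, int(warmup_frac * total_steps))
    if step < warmup_steps:
        start = max_lr / 40
        return start + (max_lr - start) * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    floor = 0.1 * max_lr
    return floor + 0.5 * (max_lr - floor) * (1 + math.cos(math.pi * progress))

schedule = [learning_rate(s, 1000) for s in (0, 100, 1000)]
# approximately [6.25e-06, 2.5e-04, 2.5e-05]
```

In PyTorch, a function of this shape, divided by the maximum rate, could be supplied to `torch.optim.lr_scheduler.LambdaLR` as the multiplicative factor.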

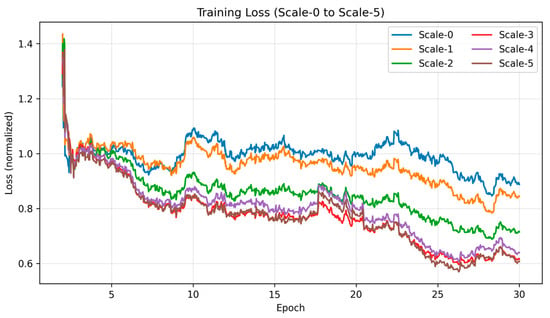

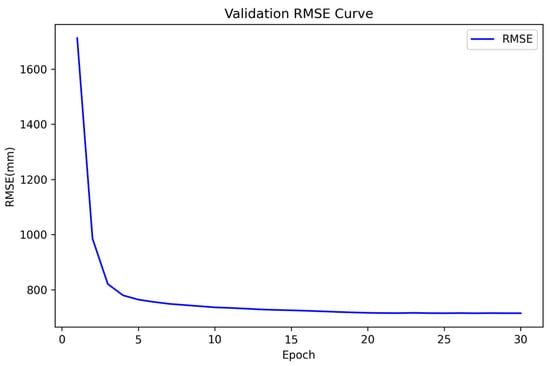

To further illustrate the convergence process, Figure 4 presents the normalized training curves of the per-scale losses (Scale-0 to Scale-5), which are plotted together for clarity. All curves consistently decrease and converge to a stable plateau after sufficient iterations. Figure 5 shows the validation curve of the main evaluation metric (RMSE), which decreases smoothly and stabilizes after approximately 20 epochs.

Figure 4.

Normalized training curves of the per-scale losses (Scale-0 to Scale-5) during training. All curves decrease consistently and converge to a stable plateau after sufficient iterations.

Figure 5.

Validation curve of the main evaluation metric, RMSE. The curve decreases smoothly and reaches a stable plateau after approximately 20 epochs.

4.1.3. Metrics

Four standard metrics are used to evaluate performance: root mean squared error (RMSE, in mm), mean absolute error (MAE, in mm), root mean squared error of inverse depth (iRMSE, in 1/km), and mean absolute error of inverse depth (iMAE, in 1/km). Among these, RMSE is the primary metric used for ranking on the official KITTI benchmark.

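The metrics are defined as follows, where $\mathcal{V}$ is the set of pixels with valid ground truth, $d_i$ is the predicted depth, and $d^{gt}_i$ is the ground truth depth at pixel $i$:

$$
\begin{aligned}
\mathrm{RMSE} &= \sqrt{\frac{1}{|\mathcal{V}|} \sum_{i \in \mathcal{V}} \big(d_i - d^{gt}_i\big)^{2}}, &
\mathrm{MAE} &= \frac{1}{|\mathcal{V}|} \sum_{i \in \mathcal{V}} \big|d_i - d^{gt}_i\big|, \\
\mathrm{iRMSE} &= \sqrt{\frac{1}{|\mathcal{V}|} \sum_{i \in \mathcal{V}} \Big(\frac{1}{d_i} - \frac{1}{d^{gt}_i}\Big)^{2}}, &
\mathrm{iMAE} &= \frac{1}{|\mathcal{V}|} \sum_{i \in \mathcal{V}} \Big|\frac{1}{d_i} - \frac{1}{d^{gt}_i}\Big|.
\end{aligned}
$$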
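The four metrics can be computed together as in the sketch below, which assumes depths are given in millimetres as flat lists, that a ground truth of zero marks an invalid pixel, and that predictions are strictly positive. The function name `depth_metrics` is hypothetical.

```python
import math

def depth_metrics(pred_mm, gt_mm):
    """Return (RMSE mm, MAE mm, iRMSE 1/km, iMAE 1/km) over pixels with gt > 0."""
    pairs = [(p, g) for p, g in zip(pred_mm, gt_mm) if g > 0]
    n = len(pairs)
    err = [p - g for p, g in pairs]
    # 1 km = 1e6 mm, so inverse depth in 1/km is 1e6 / depth_mm.
    inv_err = [1e6 / p - 1e6 / g for p, g in pairs]
    rmse = math.sqrt(sum(e * e for e in err) / n)
    mae = sum(abs(e) for e in err) / n
    irmse = math.sqrt(sum(e * e for e in inv_err) / n)
    imae = sum(abs(e) for e in inv_err) / n
    return rmse, mae, irmse, imae

# Two valid pixels (8 m and 20 m ground truth); the third has no ground truth.
rmse, mae, irmse, imae = depth_metrics([10000.0, 20000.0, 5.0],
                                       [8000.0, 20000.0, 0.0])
```

Here a 2 m error at 8 m range gives an MAE of 1000 mm and an iMAE of 12.5 1/km. The inverse-depth metrics weight errors on nearby surfaces more heavily than the same absolute error far away.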

4.2. Comparison with SoTA Methods

To comprehensively validate the superiority of the proposed GAC-Net, we conduct comparison experiments from both quantitative and qualitative perspectives.

4.2.1. Quantitative Comparison

Table 1 summarizes the performance of GAC-Net and representative state-of-the-art (SOTA) methods. The upper section lists purely 2D-based approaches, while the lower section includes 2D–3D joint methods that incorporate geometric priors from LiDAR point clouds.

Table 1.

Quantitative results on the KITTI dataset.

Our GAC-Net achieves the lowest RMSE of 680.82 mm, surpassing BPNet [] (684.90 mm), TPVD [] (693.97 mm), and other recent methods. This result demonstrates the effectiveness of our enhanced multi-modal fusion framework, particularly the integration of scale-aware 3D geometry via PointNet++-S and adaptive channel-wise fusion through CAFFM.

In addition to RMSE, GAC-Net also achieves competitive performance on other metrics, including MAE, iRMSE, and iMAE, indicating both global accuracy and robustness in local structures. Notably, GAC-Net ranks first among all peer-reviewed methods on the official KITTI leaderboard at the time of submission.

In contrast to early 2D-based methods such as CSPN++ [] and PENet [], which primarily rely on image-guided convolutions, GAC-Net leverages explicit 3D geometric encoding to address the irregularity and sparsity of LiDAR inputs. While 2D methods can effectively propagate depth in high-texture regions, they often fail to capture reliable geometric structures in textureless or occluded areas, leading to blurred or distorted reconstructions. By integrating point-based representations through the PointNet++-S encoder, our model significantly improves structural completeness and robustness in such challenging scenarios.

Compared with recent 2D–3D joint methods such as TPVD [] and DeCoTR [], GAC-Net adopts a more modular and geometry-driven design. TPVD [] decomposes the sparse input into multiple perspective views to enhance structural awareness, but it also introduces repeated projection steps, which tend to increase error accumulation and processing complexity. DeCoTR [], on the other hand, integrates 2D and 3D features via global attention, facilitating long-range interactions across modalities. However, both approaches involve complex processing pipelines. In contrast, GAC-Net focuses on scale-aware point-based geometry encoding and adaptive channel-wise fusion, yielding lower RMSE while maintaining architectural clarity and generalizability across varying input sparsity levels.

4.2.2. Qualitative Comparison

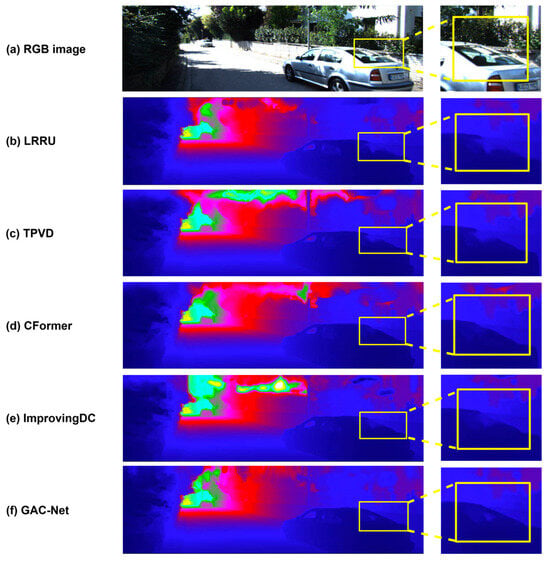

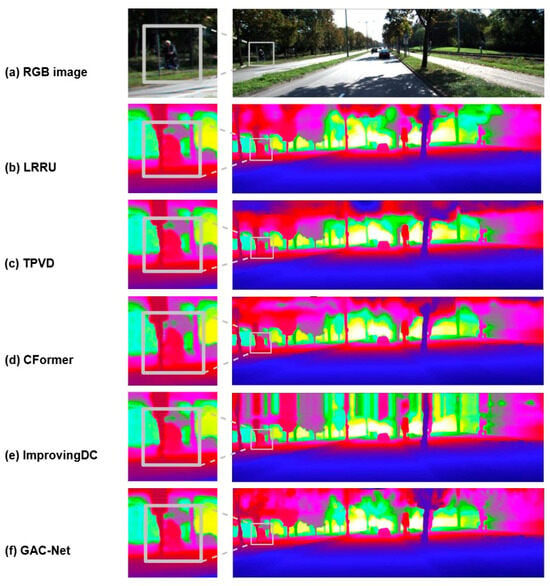

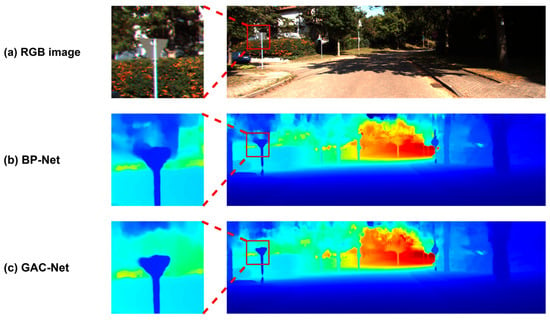

We further conduct visual comparisons to assess the perceptual quality of the predicted depth maps. As shown in Figure 6, Figure 7 and Figure 8, GAC-Net is compared with four representative state-of-the-art methods: LRRU [], TPVD [], CFormer [], and ImprovingDC [], as well as its baseline model BPNet [].

Figure 6.

Qualitative comparison with state-of-the-art methods (LRRU [], TPVD [], CFormer [] and ImprovingDC []) on preserving the structural completeness of objects, highlighting the reconstruction of full vehicles (e.g., rear windshield and boundary contours) under sparse input.

Figure 7.

Visual comparison of local structural details among state-of-the-art methods (LRRU [], TPVD [], CFormer [] and ImprovingDC []), with a focus on edge sharpness and boundary preservation for objects such as traffic signs and cyclists.

Figure 8.

Visual comparison between BPNet [] and our GAC-Net.

Figure 6 focuses on the reconstruction of large-scale foreground objects, particularly a complete vehicle. The zoom-in region highlights GAC-Net’s ability to recover the rear windshield and its boundary with higher completeness and clarity, while other methods either produce incomplete structures (e.g., LRRU []) or blurred and collapsed outlines (e.g., TPVD [] and CFormer []). This illustrates GAC-Net’s superior capability in handling spatially sparse but structurally significant areas.

Figure 7 compares the preservation of fine structures such as thin poles and distant pedestrians. In the zoomed views, GAC-Net demonstrates sharper edges and better geometric integrity, whereas other methods show common issues such as edge blurring, structural breakage, or background fusion. For instance, TPVD [] exhibits strong edge diffusion, and ImprovingDC [] fails to recover the basic shape of the pedestrian’s head. These results indicate that GAC-Net can effectively retain high-frequency details even under highly sparse input conditions.

Figure 8 provides a direct comparison between GAC-Net and BPNet [], verifying the practical improvements brought by our proposed modules. In the zoom-in regions, GAC-Net exhibits sharper, more continuous, and more complete reconstructions of edge structures such as street signs and tree trunks. By contrast, BPNet [] suffers from elongated contours and edge spreading. These differences confirm that the incorporation of 3D geometric encoding and channel-aware fusion in GAC-Net significantly enhances its ability to reconstruct complex scene elements.

In summary, the differences observed in the zoom-in regions clearly demonstrate that GAC-Net achieves more complete, sharper, and geometrically consistent depth estimation results across various object types, including large foreground objects (e.g., vehicles), mid-scale structures (e.g., poles), and fine, distant elements (e.g., pedestrians).

4.3. Ablation Studies

To comprehensively evaluate the contributions of each core module in GAC-Net, we conduct ablation studies on the KITTI validation set []. Specifically, we analyze the impact of the geometric encoding module (PointNet++-S) and the channel-aware feature fusion module (CAFFM). The experimental results are summarized in Table 2 and Table 3, respectively.

Table 2.

Ablation studies on PointNet++-S.

Table 3.

Ablation studies on CAFFM.

4.3.1. Effectiveness of PointNet++-S

Table 2 shows the performance gain brought by incorporating the PointNet++-S module. Starting from a baseline model (i) that only adopts the original multi-modal fusion pipeline without any 3D encoder or attention mechanism, we observe an RMSE of 719.08 mm and an MAE of 204.36 mm. By adding a standard PointNet++ encoder (ii), the performance improves to 714.34 mm RMSE and 200.12 mm MAE, confirming the benefit of introducing explicit 3D geometric priors. Furthermore, replacing the vanilla encoder with our proposed dual-branch PointNet++-S module (iii) leads to the best performance (RMSE: 711.40 mm, MAE: 197.63 mm), demonstrating the effectiveness of the scale-aware geometric extraction strategy.

4.3.2. Effectiveness of CAFFM

As shown in Table 3, we investigate different feature fusion strategies to validate the advantage of our CAFFM module. The baseline model (i) uses element-wise addition for combining local and contextual features, yielding 716.69 mm RMSE and 202.54 mm MAE. Switching to channel-wise concatenation (ii) slightly improves the performance to 715.06 mm RMSE and 201.36 mm MAE. Finally, applying our proposed channel-aware feature fusion module (CAFFM) (iii) further reduces the RMSE to 711.40 mm and MAE to 197.63 mm. This confirms that CAFFM enables richer feature interactions and enhances overall depth completion accuracy.

4.3.3. Visual Comparison of Ablation Results

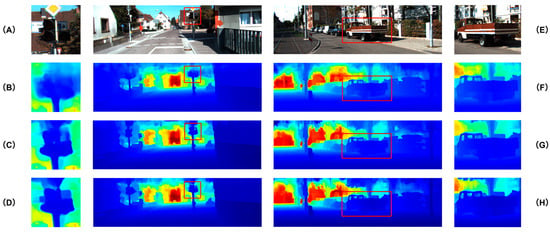

To complement the quantitative results in Table 2 and Table 3, Figure 9 provides visual comparisons of ablation experiments on representative KITTI samples. As shown on the left (A–D), the baseline model without PointNet++-S and CAFFM produces blurry boundaries, where the traffic sign is merged with the background. Adding PointNet++-S improves geometric consistency, but without CAFFM, the edges remain less distinct. The complete GAC-Net successfully separates the sign from the background, yielding sharper and clearer boundaries. On the right (E–H), a larger-scale scene further demonstrates that both PointNet++-S and CAFFM contribute to reconstructing the complete structure of the vehicle, with the full GAC-Net achieving the most accurate and visually coherent depth maps. These qualitative results are consistent with the quantitative improvements reported in the ablation study.

Figure 9.

Visual comparison of ablation experiments on the KITTI validation set []. (A–D) show a local region around a traffic sign and its background, while (E–H) present a large-scale scene containing a vehicle. From top to bottom: RGB input image (A,E), baseline model without PointNet++-S and CAFFM (B,F), variant with PointNet++-S but simple concatenation instead of CAFFM (C,G), and the full GAC-Net with both PointNet++-S and CAFFM (D,H).

4.4. Sparsity Level Analysis

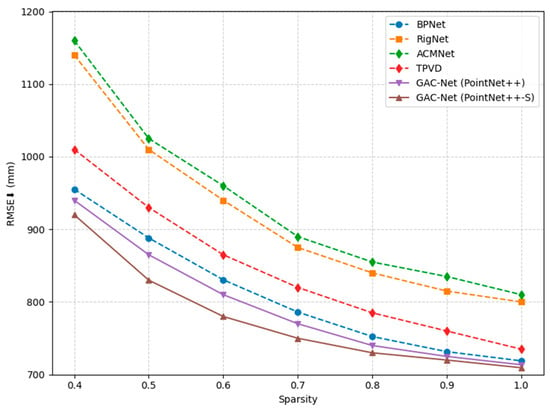

To assess the robustness of our method under varying input sparsity levels, we simulate different degrees of depth sparsity by uniformly sampling valid pixels from the original sparse depth maps. Following standard practice [,], the sampling ratio (the fraction of valid pixels that is retained) is varied from 0.4 to 1.0, where 1.0 corresponds to the original sparse input.
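The sub-sampling procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions: invalid pixels are encoded as 0, sampling is uniform at random without replacement, and the function name `subsample_depth` and the toy depth map are hypothetical rather than taken from the evaluation code.

```python
import numpy as np


def subsample_depth(sparse_depth: np.ndarray, keep_ratio: float,
                    rng: np.random.Generator) -> np.ndarray:
    """Keep a uniformly sampled fraction of the valid (non-zero) depth pixels."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must lie in (0, 1]")
    valid = np.flatnonzero(sparse_depth > 0)          # flat indices of valid pixels
    n_keep = int(round(keep_ratio * valid.size))
    kept = rng.choice(valid, size=n_keep, replace=False)
    out = np.zeros_like(sparse_depth)                 # everything else becomes invalid
    out.flat[kept] = sparse_depth.flat[kept]
    return out


rng = np.random.default_rng(0)
depth = np.zeros((10, 10), dtype=np.float32)
depth.flat[::5] = 10.0                                # toy map with 20 valid pixels

counts = {}
for ratio in (0.4, 0.6, 0.8, 1.0):
    reduced = subsample_depth(depth, ratio, rng)
    counts[ratio] = int(np.count_nonzero(reduced))
    print(f"ratio {ratio:.1f}: {counts[ratio]} valid pixels")
```

A ratio of 1.0 returns the original measurements unchanged, matching the convention used in the experiment.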

The results are presented in Figure 10, where our GAC-Net variants are compared against four representative methods: BPNet [], TPVD [], RigNet [], and ACMNet []. As expected, the RMSE of every method degrades noticeably as the input becomes sparser. However, both versions of GAC-Net consistently outperform the other approaches across all sparsity levels.

Figure 10.

Comparison of RMSE (mm) across different input depth sparsity levels.

Notably, GAC-Net (PointNet++-S) achieves the lowest RMSE in every case, including the more challenging sparsity conditions (e.g., 0.4–0.6). This supports the effectiveness of our scale-aware dual-branch PointNet++-S module, which captures both local geometric detail and global spatial structure. These results indicate that our method retains higher accuracy as the input becomes sparser, suggesting stronger robustness to the reduced LiDAR density encountered under real-world constraints.

4.5. Complexity Analysis

To further evaluate the efficiency of our framework, we compare the model complexity of GAC-Net with BPNet [] and the Transformer-based Dual-Attention Fusion model []. The results are summarized in Table 4. As shown, our method contains 91.07 M parameters, which is slightly higher than BPNet [] (89.87 M) due to the additional PointNet++-S branch for 3D geometric feature extraction. This design, however, only introduces a marginal increase in inference time (0.17 s vs. 0.16 s), demonstrating that the added geometric modeling branch does not significantly impact efficiency.

Table 4.

Comparison of model complexity.

Compared with the Transformer-based Dual-Attention Fusion model, GAC-Net runs faster (0.17 s vs. 0.191 s), despite having more parameters (91.07 M vs. 31.38 M). This advantage mainly stems from the lightweight SE-based CAFFM fusion module, which effectively avoids the runtime overhead introduced by Transformer modules.

Overall, the results indicate that GAC-Net achieves a favorable balance between accuracy and efficiency. Although not strictly real-time, the design demonstrates that incorporating 3D modeling through the PointNet++-S branch and the SE-based CAFFM fusion module can be achieved with only marginal computational overhead.
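The overhead figures discussed in this section can be restated as absolute differences and throughput. The snippet below is a small worked calculation using only the parameter counts and inference times quoted in the text; the variable and function names are our own, chosen for illustration.

```python
# (parameters in millions, inference time in seconds) as quoted in the text.
complexity = {
    "BPNet": (89.87, 0.16),
    "Dual-Attention Fusion": (31.38, 0.191),
    "GAC-Net": (91.07, 0.17),
}


def frames_per_second(seconds_per_frame: float) -> float:
    """Convert a per-frame inference time into throughput."""
    return 1.0 / seconds_per_frame


gac_params, gac_time = complexity["GAC-Net"]
bp_params, bp_time = complexity["BPNet"]

extra_params_m = gac_params - bp_params             # added parameters (millions)
extra_latency_ms = (gac_time - bp_time) * 1000.0    # added time per frame (ms)
fps = {name: frames_per_second(t) for name, (_, t) in complexity.items()}

print(f"extra parameters vs. BPNet: {extra_params_m:.2f} M")
print(f"extra latency vs. BPNet: {extra_latency_ms:.0f} ms per frame")
for name, value in fps.items():
    print(f"{name}: {value:.2f} frames/s")
```

Under these figures, the PointNet++-S branch adds about 1.2 M parameters and 10 ms per frame over BPNet, and GAC-Net processes roughly 5.9 frames per second, which is consistent with the statement that it is not strictly real-time.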

5. Conclusions

This paper presented GAC-Net, a geometric attention fusion framework for image-guided depth completion. Building on the multi-scale, three-stage pipeline of BPNet, GAC-Net introduces two key modules: (i) a dual-branch PointNet++-S encoder that extracts scale-aware 3D geometric features from back-projected sparse point clouds and (ii) a Channel Attention-Based Feature Fusion Module (CAFFM) that adaptively integrates geometric priors with RGB-depth features. These designs enhance explicit 3D modeling and cross-modal alignment, leading to improved preservation of both global structures and fine details. On the KITTI benchmark, GAC-Net achieves an RMSE of 680.82 mm, ranking first among peer-reviewed methods at the time of submission.

We further analyzed model complexity and showed that, although GAC-Net includes an additional 3D branch, the inference overhead relative to BPNet remains marginal (0.17 s vs. 0.16 s), and that GAC-Net offers a favorable trade-off between accuracy and efficiency compared with the Transformer-based baseline.

Limitations and future work:

- (1) Efficiency under deployment constraints. Although not strictly real-time, our results indicate that incorporating 3D modeling through the PointNet++-S branch and the SE-based CAFFM fusion module can be achieved with only modest computational overhead. Future work will explore hardware-friendly acceleration (e.g., TensorRT, mixed precision), model compression (pruning/quantization/distillation), and lightweight design choices (e.g., streamlined multi-scale stages and operator fusion) to further reduce latency and memory footprint. We will also investigate efficient cross-modal reasoning modules (e.g., state-space models such as Mamba or linear-attention variants) under strict efficiency constraints.

- (2) Generalization beyond KITTI. To strengthen external validity, we plan to evaluate cross-dataset performance and domain transfer (e.g., additional outdoor/indoor benchmarks) and study robustness to sparsity, noise, transparency/reflectivity, and calibration shifts. In particular, recent benchmarks such as RSUD20K highlight the importance of dataset diversity and robustness evaluation under diverse environmental conditions, which will guide our future exploration of generalization across challenging scenarios.

- (3) Temporal and system aspects. We will extend GAC-Net to sequential/streaming depth completion with temporal consistency and conduct system-level measurements (FLOPs and inference memory on diverse hardware, including embedded platforms), providing a more comprehensive view of computational costs for practical deployment.

Author Contributions

K.Z.: methodology, software, validation, formal analysis, investigation, resources, data curation, writing—original draft preparation, visualization. C.L.: software. M.S. and L.Z.: writing—review and editing, supervision. X.G.: conceptualization, funding acquisition, project administration. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Research and Development Program Project of Zhejiang Province (No. 2024C01109), the Scientific Research Innovation Fund for Postgraduates of Zhejiang University of Science and Technology (No. 2022yjskc08), and the General Scientific Research Project in Zhejiang Province (No. Y202249472).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Xie, Z.; Yu, X.; Gao, X.; Li, K.; Shen, S. Recent Advances in Conventional and Deep Learning-Based Depth Completion: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 3395–3415.

- Huang, Z.; Lv, C.; Xing, Y.; Wu, J. Multi-Modal Sensor Fusion-Based Deep Neural Network for End-to-End Autonomous Driving With Scene Understanding. IEEE Sens. J. 2021, 21, 11781–11790.

- Song, Z.; Lu, J.; Yao, Y.; Zhang, J. Self-Supervised Depth Completion from Direct Visual-LiDAR Odometry in Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2022, 23, 11654–11665.

- Bai, L.; Zhao, Y.; Elhousni, M.; Huang, X. DepthNet: Real-Time LiDAR Point Cloud Depth Completion for Autonomous Vehicles. IEEE Access 2020, 8, 227825–227833.

- Gofer, E.; Praisler, S.; Gilboa, G. Adaptive LiDAR Sampling and Depth Completion Using Ensemble Variance. IEEE Trans. Image Process. 2021, 30, 8900–8912.

- Cui, Y.; Chen, R.; Chu, W.; Chen, L.; Tian, D.; Li, Y.; Cao, D. Deep Learning for Image and Point Cloud Fusion in Autonomous Driving: A Review. IEEE Trans. Intell. Transp. Syst. 2022, 23, 722–739.

- Su, S.; Wu, J. GeometryFormer: Semi-convolutional transformer integrated with geometric perception for depth completion in autonomous driving scenes. Sensors 2024, 24, 8066.

- Zou, N.; Xiang, Z.; Chen, Y.; Chen, S.; Qiao, C. Simultaneous Semantic Segmentation and Depth Completion with Constraint of Boundary. Sensors 2020, 20, 635.

- Jeong, Y.; Park, J.; Cho, D.; Hwang, Y.; Choi, S.B.; Kweon, I.S. Lightweight Depth Completion Network with Local Similarity-Preserving Knowledge Distillation. Sensors 2022, 22, 7388.

- Pan, J.; Zhong, S.; Yue, T.; Yin, Y.; Tang, Y. Multi-Task Foreground-Aware Network with Depth Completion for Enhanced RGB-D Fusion Object Detection Based on Transformer. Sensors 2024, 24, 2374.

- El-Yabroudi, M.Z.; Abdel-Qader, I.; Bazuin, B.J.; Abudayyeh, O.; Chabaan, R.C. Guided Depth Completion with Instance Segmentation Fusion in Autonomous Driving Applications. Sensors 2022, 22, 9578.

- Zhai, D.-H.; Yu, S.; Wang, W.; Guan, Y.; Xia, Y. TCRNet: Transparent Object Depth Completion with Cascade Refinements. IEEE Trans. Autom. Sci. Eng. 2025, 22, 1893–1912.

- Wang, M.; Huang, R.; Liu, Y.; Li, Y.; Xie, W. suLPCC: A Novel LiDAR Point Cloud Compression Framework for Scene Understanding Tasks. IEEE Trans. Ind. Inform. 2025, 21, 3816–3827.

- Lu, H.; Xu, S.; Cao, S. SGTBN: Generating Dense Depth Maps from Single-Line LiDAR. IEEE Sens. J. 2021, 21, 19091–19100.

- Chen, Z.; Wang, H.; Wu, L.; Zhou, Y.; Wu, D. Spatiotemporal Guided Self-Supervised Depth Completion from LiDAR and Monocular Camera. In Proceedings of the 2020 IEEE International Conference on Visual Communications and Image Processing (VCIP), Shenzhen, China, 1–4 December 2020; pp. 54–57.

- Wang, Y.; Dai, Y.; Liu, Q.; Yang, P.; Sun, J.; Li, B. CU-Net: LiDAR Depth-Only Completion with Coupled U-Net. IEEE Robot. Autom. Lett. 2022, 7, 11476–11483.

- Fan, Y.-C.; Zheng, L.-J.; Liu, Y.-C. 3D Environment Measurement and Reconstruction Based on LiDAR. In Proceedings of the 2018 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Houston, TX, USA, 14–17 May 2018; pp. 1–4.

- Uhrig, J.; Schneider, N.; Schneider, L.; Franke, U.; Brox, T.; Geiger, A. Sparsity Invariant CNNs. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; IEEE: New York, NY, USA, 2017; pp. 11–20.

- Zunair, H.; Khan, S.; Hamza, A.B. RSUD20K: A dataset for road scene understanding in autonomous driving. In Proceedings of the 2024 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 27–30 October 2024; IEEE: New York, NY, USA, 2024; pp. 708–714.

- Tang, J.; Tian, F.-P.; Feng, W.; Li, J.; Tan, P. Learning Guided Convolutional Network for Depth Completion. IEEE Trans. Image Process. 2021, 30, 1116–1129.

- Hu, M.; Wang, S.; Li, B.; Ning, S.; Fan, L.; Gong, X. PENet: Towards Precise and Efficient Image Guided Depth Completion. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13656–13662.

- Cheng, X.; Wang, P.; Yang, R. Learning Depth with Convolutional Spatial Propagation Network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2361–2379.

- Cheng, X.; Wang, P.; Guan, C.; Yang, R. CSPN++: Learning Context and Resource Aware Convolutional Spatial Propagation Networks for Depth Completion. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 10615–10622.

- Lin, Y.; Cheng, T.; Zhong, Q.; Zhou, W.; Yang, H. Dynamic Spatial Propagation Network for Depth Completion. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 1638–1646.

- Liu, L.; Liao, Y.; Wang, Y.; Geiger, A.; Liu, Y. Learning Steering Kernels for Guided Depth Completion. IEEE Trans. Image Process. 2021, 30, 2850–2861.

- Tang, J.; Tian, F.-P.; An, B.; Li, J.; Tan, P. Bilateral Propagation Network for Depth Completion. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 9763–9772.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30, pp. 5099–5108.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Imran, S.; Long, Y.; Liu, X.; Morris, D. Depth Coefficients for Depth Completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 12438–12447.

- Ma, F.; Karaman, S. Sparse-to-Dense: Depth Prediction from Sparse Depth Samples and a Single Image. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 4796–4803.

- Zhang, Y.; Guo, X.; Poggi, M.; Zhu, Z.; Huang, G.; Mattoccia, S. CompletionFormer: Depth Completion with Convolutions and Vision Transformers. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; IEEE: New York, NY, USA, 2023; pp. 18527–18536.

- Park, J.; Joo, K.; Hu, Z.; Liu, C.K.; So Kweon, I. Non-local Spatial Propagation Network for Depth Completion. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 120–136.

- Chen, D.; Huang, T.; Song, Z.; Deng, S.; Jia, T. Agg-Net: Attention Guided Gated-Convolutional Network for Depth Image Completion. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 8853–8862.

- Yan, Z.; Wang, K.; Li, X.; Zhang, Z.; Li, J.; Yang, J. RigNet: Repetitive Image Guided Network for Depth Completion. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 214–230.

- Yan, Z.; Li, X.; Hui, L.; Zhang, Z.; Li, J.; Yang, J. RigNet++: Semantic Assisted Repetitive Image Guided Network for Depth Completion. arXiv 2023, arXiv:2309.00655.

- Nazir, D.; Pagani, A.; Liwicki, M.; Stricker, D.; Afzal, M.Z. SemAttNet: Toward Attention-Based Semantic Aware Guided Depth Completion. IEEE Access 2022, 10, 120781–120791.

- Qiu, J.; Cui, Z.; Zhang, Y.; Zhang, X.; Liu, S.; Zeng, B.; Pollefeys, M. DeepLiDAR: Deep Surface Normal Guided Depth Prediction for Outdoor Scene from Sparse LiDAR Data and Single Color Image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 3308–3317.

- Xu, Y.; Zhu, X.; Shi, J.; Zhang, G.; Bao, H.; Li, H. Depth Completion from Sparse LiDAR Data with Depth-Normal Constraints. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2811–2820.

- Zhao, S.; Gong, M.; Fu, H.; Tao, D. Adaptive Context-Aware Multi-Modal Network for Depth Completion. IEEE Trans. Image Process. 2021, 30, 5264–5276.

- Liu, X.; Shao, X.; Wang, B.; Li, Y.; Wang, S. GraphCSPN: Geometry-aware depth completion via dynamic GCNs. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 90–107.

- Chen, Y.; Yang, B.; Liang, M.; Urtasun, R. Learning Joint 2D-3D Representations for Depth Completion. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10022–10031.

- Yu, Z.; Sheng, Z.; Zhou, Z.; Luo, L.; Cao, S.-Y.; Gu, H.; Zhang, H.; Shen, H.-L. Aggregating Feature Point Cloud for Depth Completion. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 8698–8709.

- Zhou, W.; Yan, X.; Liao, Y.; Lin, Y.; Huang, J.; Zhao, G.; Cui, S.; Li, Z. BEV@DC: Bird’s-Eye View Assisted Training for Depth Completion. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 9233–9242.

- Yan, Z.; Lin, Y.; Wang, K.; Zheng, Y.; Wang, Y.; Zhang, Z.; Li, J.; Yang, J. Tri-Perspective View Decomposition for Geometry-Aware Depth Completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 4874–4884.

- Wang, S.; Jiang, F.; Gong, X. A Transformer-Based Image-Guided Depth-Completion Model with Dual-Attention Fusion Module. Sensors 2024, 24, 6270.

- Shi, Y.; Singh, M.K.; Cai, H.; Porikli, F. DeCoTR: Enhancing Depth Completion with 2D and 3D Attentions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 10736–10746.

- Xie, G.; Zhang, Y.; Jiang, Z.; Liu, Y.; Xie, Z.; Cao, B.; Liu, H. HTMNet: A Hybrid Network with Transformer-Mamba Bottleneck Multimodal Fusion for Transparent and Reflective Objects Depth Completion. arXiv 2025, arXiv:2505.20904.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wang, Y.; Zhang, G.; Wang, S.; Li, B.; Liu, Q.; Hui, L.; Dai, Y. Improving Depth Completion via Depth Feature Upsampling. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 21104–21113.

- Imran, S.; Liu, X.; Morris, D. Depth Completion with Twin Surface Extrapolation at Occlusion Boundaries. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; IEEE: New York, NY, USA, 2021; pp. 2583–2592.

- Wang, Y.; Li, B.; Zhang, G.; Liu, Q.; Gao, T.; Dai, Y. LRRU: Long-Short Range Recurrent Updating Networks for Depth Completion. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 9388–9398.

- Huynh, L.; Nguyen, P.; Matas, J.; Rahtu, E.; Heikkilä, J. Boosting Monocular Depth Estimation with Lightweight 3D Point Fusion. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; IEEE: New York, NY, USA, 2021; pp. 12747–12756.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).