Active Touch Sensing for Robust Hole Detection in Assembly Tasks

Abstract

1. Introduction

2. The State of the Art

3. Materials and Methods

3.1. Map Registration

Algorithm 1: Map registration algorithm using touch sensing

- TouchFloor is a function that moves the robot from the initial position along the z-axis of the map coordinate system until it touches the surface of the object. It also computes the z-coordinate of the touch point in the map coordinate system. This calculation involves a transformation with a constant offset that defines the z-component of the map coordinate system origin expressed in robot coordinates. Note that the x- and y-coordinates of the map coordinate system origin are unknown.

- GetRegion returns the region index based on the measured z-coordinate at the contact point.

- GetPoint returns the point of a given set that is closest to the centroid of a given region.

- RegisterRegions returns the region composed of all points that satisfy the registration condition.
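The interplay of these functions can be illustrated with a minimal sketch. It assumes a discretized height map in which a region is the set of grid cells sharing one height, and it narrows down the unknown start cell by keeping only the candidates that are consistent with every touch. The toy plate, the snake_case names, and the consistency update are illustrative assumptions, not the authors' implementation.

```python
from typing import Dict, List, Optional, Sequence, Set, Tuple

Cell = Tuple[int, int]
HeightMap = Dict[Cell, int]


def build_height_map() -> HeightMap:
    """Hypothetical 6x6 plate: flat base (0), a raised block (5), one hole (-10)."""
    height_map = {(x, y): 0 for x in range(6) for y in range(6)}
    for x in (1, 2):
        for y in (1, 2, 3):
            height_map[(x, y)] = 5
    height_map[(4, 4)] = -10
    return height_map


def touch_floor(height_map: HeightMap, cell: Cell) -> Optional[int]:
    """Simulated touch: the measured height at `cell`, or None off the map."""
    return height_map.get(cell)


def get_region(height_map: HeightMap, z: Optional[int]) -> Set[Cell]:
    """All map cells whose height equals the measured z."""
    return {c for c, h in height_map.items() if h == z}


def get_point(region: Set[Cell]) -> Cell:
    """Cell of `region` closest to its centroid (ties broken by sort order)."""
    cx = sum(x for x, _ in region) / len(region)
    cy = sum(y for _, y in region) / len(region)
    return min(sorted(region), key=lambda c: (c[0] - cx) ** 2 + (c[1] - cy) ** 2)


def register(
    height_map: HeightMap,
    true_start: Cell,
    displacements: Sequence[Cell],
) -> Tuple[Set[Cell], List[int]]:
    """Shrink the set of possible start cells using successive touches.

    `displacements` are measured from the first touch point. `true_start`
    is used only to simulate the touches; the estimator never reads it.
    """
    candidates = get_region(height_map, touch_floor(height_map, true_start))
    history = [len(candidates)]
    for dx, dy in displacements:
        if len(candidates) <= 1:
            break
        z = touch_floor(height_map, (true_start[0] + dx, true_start[1] + dy))
        # Keep a start cell c only if the map height at c + d matches the
        # measurement (same as intersecting with the touched region shifted by -d).
        candidates = {
            c for c in candidates if height_map.get((c[0] + dx, c[1] + dy)) == z
        }
        history.append(len(candidates))
    return candidates, history


if __name__ == "__main__":
    plate = build_height_map()
    found, sizes = register(plate, (3, 2), [(-1, 0), (-1, -2), (1, 2)])
    print(found, sizes, get_point(found))
```

In this toy setting the displacements are fixed in advance; choosing each next touch to split the remaining candidates as evenly as possible would typically reduce the number of touches.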

3.2. Map Registration with Unknown Object Base Plane Height

- Area() returns the area of the region passed as its argument.

- rand(m,n) returns an m × n matrix of random numbers.

- CountFeasibleRegions returns the number of feasible candidate regions, i.e., regions with area greater than 0.
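As a rough illustration of these three helpers, the sketch below again treats a region as a set of grid cells. The cell size, the uniform distribution on [0, 1) used by `rand`, and the list-of-sets representation of the candidate regions are all assumptions made for this example; the source does not specify them.

```python
import random
from typing import List, Optional, Set, Tuple

Cell = Tuple[int, int]


def area(region: Set[Cell], cell_area: float = 1.0) -> float:
    """Area of a region represented as grid cells of equal size (assumed)."""
    return len(region) * cell_area


def rand(m: int, n: int, rng: Optional[random.Random] = None) -> List[List[float]]:
    """m x n matrix of random numbers; uniform on [0, 1) is an assumption."""
    rng = rng or random.Random()
    return [[rng.random() for _ in range(n)] for _ in range(m)]


def count_feasible_regions(candidates: List[Set[Cell]]) -> int:
    """Number of candidate regions with area greater than 0."""
    return sum(1 for region in candidates if area(region) > 0)
```

Under this representation an empty set is an infeasible candidate, so `count_feasible_regions` simply counts the non-empty sets.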

Algorithm 2: Map registration with unknown object base plane height using touch sensing

3.3. Probabilistic Map Registration

4. Experimental Results

4.1. Inserting the Pin into the Socket

4.2. Inserting the Task Board Probe into the Socket

4.3. Inserting a Peg into a Hole on a Conical Surface

1. Define the verification plane: Construct a plane orthogonal to the estimated hole direction vector (i.e., the insertion axis).

2. Select directional vectors: Choose an arbitrary unit vector in the verification plane. Then compute a second unit vector in the same plane, orthogonal to the first.

3. Configure robot compliance: Set the robot’s impedance controller to be compliant along both vectors. The stiffness should be low enough to permit minor displacements without triggering safety thresholds, while still allowing detection of mechanical constraints.

4. Execute test motions: Apply small, controlled displacements along both vectors, and monitor the actual end-effector response.

5. Evaluate motion response:

   - If no displacement is observed in either direction, the end-effector is physically constrained, indicating that the peg has entered the hole.

   - If displacement occurs in at least one direction, the contact is not constrained, suggesting that the peg is outside the hole.

6. Confirm or reject hole contact: Based on the observed response, classify the contact as a successful or unsuccessful insertion attempt.
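The geometric part of this procedure can be sketched as follows. The code builds two orthonormal test directions in the plane orthogonal to the hole axis and classifies the contact from the measured displacements. The function names and the displacement threshold are hypothetical; the source states only that "no displacement" indicates a constrained peg, so a concrete threshold would have to be tuned for the robot and controller in use.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(v: Vec) -> Vec:
    norm = math.sqrt(dot(v, v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return (v[0] / norm, v[1] / norm, v[2] / norm)


def verification_basis(hole_dir: Vec) -> Tuple[Vec, Vec]:
    """Two orthogonal unit vectors spanning the plane orthogonal to hole_dir."""
    n = normalize(hole_dir)
    # Use the world axis least aligned with n so the cross product is well conditioned.
    axes = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    helper = min(axes, key=lambda e: abs(dot(n, e)))
    v1 = normalize(cross(n, helper))
    v2 = cross(n, v1)  # already unit length because n and v1 are orthonormal
    return v1, v2


def peg_in_hole(disp_v1: float, disp_v2: float, threshold: float) -> bool:
    """True if the end-effector barely moved along both test directions.

    `threshold` (same unit as the displacements) is a hypothetical tuning
    parameter standing in for "no displacement observed".
    """
    return abs(disp_v1) < threshold and abs(disp_v2) < threshold
```

For example, with displacements measured in metres, `peg_in_hole(0.0001, 0.0002, threshold=0.001)` classifies the contact as constrained, while a 5 mm slip along either direction does not.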

4.4. Inserting the Task Board Connector into the Socket with Continuous Search

4.5. Summary of Experimental Results

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

- The initial touch point defines the initial region.

- At each iteration step, the algorithm computes the displacement, and the robot touches a new region.

- The candidate region is updated at each iteration step. Then, for all iteration steps:

1. the updated candidate region is a strict subset of the previous candidate region (strict subset property);

2. the initial touch point, given in the map coordinate system, is contained in the selection region.

- The first property ensures monotonic shrinkage.

- The second property ensures that the true initial point is never eliminated.
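One way to make this argument concrete is the following sketch. The notation is assumed for illustration, since the original symbols are not available here: $p_0$ is the initial touch point in map coordinates, $d_k$ is the displacement from $p_0$ at step $k$, $R_k$ is the map region identified by the $k$-th touch, and $S_k$ is the candidate region for $p_0$.

```latex
% Assumed notation: p_0 initial touch point, d_k displacement from p_0,
% R_k region identified by the k-th touch, S_k candidate region for p_0.
\begin{align}
  S_0 &= R_0, \\
  S_k &= S_{k-1} \cap \{\, p : p + d_k \in R_k \,\}, \qquad k \ge 1 .
\end{align}
% Shrinkage: an intersection is never larger than either of its operands.
\begin{equation}
  S_k \subseteq S_{k-1} .
\end{equation}
% Containment, by induction: p_0 \in S_0 = R_0, and the k-th touch takes place
% at p_0 + d_k, so p_0 + d_k \in R_k whenever the region is identified correctly.
\begin{equation}
  p_0 \in S_{k-1} \ \text{and} \ p_0 + d_k \in R_k
  \;\Longrightarrow\; p_0 \in S_k .
\end{equation}
```

Under these assumptions the inclusion is strict whenever at least one point of $S_{k-1}$ is inconsistent with the $k$-th touch.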

| Experiment | Trials | Success Rate | Avg. Attem. | Std. Dev. | Avg. Time | Notes |

|---|---|---|---|---|---|---|

| Audio Pin Random Search (Baseline) | 100 | 100% | 37.37 | 36.55 | 71.0 s | No prior knowledge used |

| Audio Pin Insertion (Deterministic) | 100 | 100% | 5.83 | 2.04 | 11.1 s | Basic algorithm with known object height |

| Audio Pin + Height Estimation | 100 | 100% | 6.37 | 2.53 | 12.1 s | Includes z-height search step |

| Audio Pin (Noisy, Deterministic) | 100 | 85% | 6.78 | 3.0 | 12.8 s | Sensitive to uncertainty; occasional failure |

| Audio Pin (Noisy, Probabilistic) | 100 | 100% | 6.76 | 2.32 | 12.8 s | Robust under position and map uncertainty |

| Task Board Probe | 100 | 100% | 4.07 | 1.18 | 8.7 s | Rich geometry improves convergence |

| Cone With a Hole at the Top | 100 | 100% | 3.78 | 0.84 | 7.9 s | Inclined object planes improve convergence |

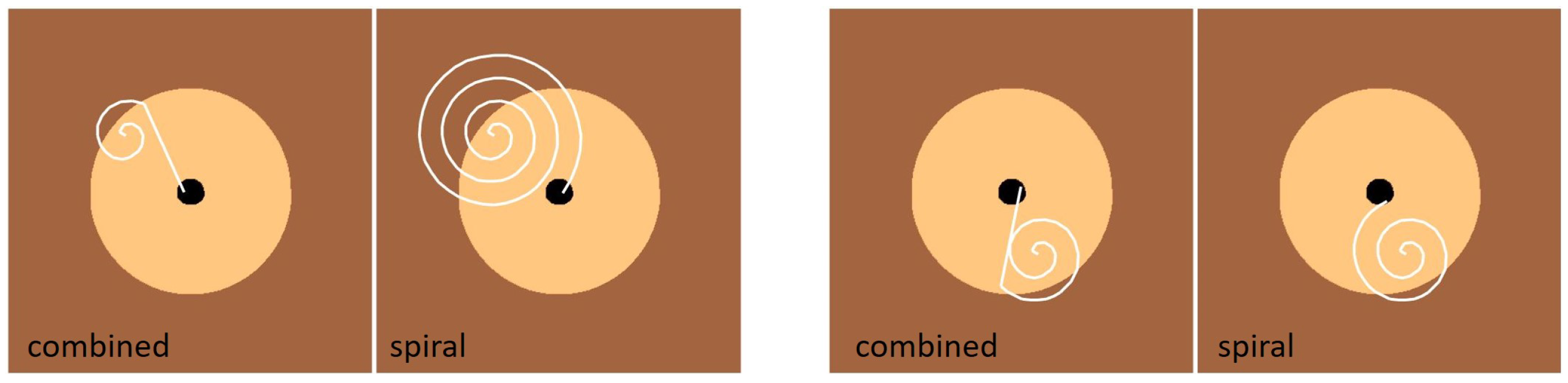

| Task Board Connector (Combined Search) | 20 | 100% | — | — | 7.8 s | Spiral + map registration; robust to par. settings |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nemec, B.; Simonič, M.; Ude, A. Active Touch Sensing for Robust Hole Detection in Assembly Tasks. Sensors 2025, 25, 4567. https://doi.org/10.3390/s25154567
