Abstract

The proliferation of malicious social bots poses severe threats to cybersecurity and social media information ecosystems. Existing detection methods often overlook the semantic value and emotional cues conveyed by emojis in user-generated tweets. To address this gap, we propose ESA-BotRGCN, an emoji-driven multi-modal detection framework that integrates semantic enhancement, sentiment analysis, and multi-dimensional feature modeling. Specifically, we first establish emoji–text mapping relationships using the Emoji Library, leverage GPT-4 to improve textual coherence, and generate tweet embeddings via RoBERTa. Subsequently, seven sentiment-based features are extracted to quantify statistical disparities in emotional expression patterns between bot and human accounts. An attention gating mechanism is further designed to dynamically fuse these sentiment features with user description, tweet content, numerical attributes, and categorical features. Finally, a Relational Graph Convolutional Network (RGCN) is employed to model heterogeneous social topology for robust bot detection. Experimental results on the TwiBot-20 benchmark dataset demonstrate that our method achieves a superior accuracy of 87.46%, significantly outperforming baseline models and validating the effectiveness of emoji-driven semantic and sentiment enhancement strategies.

1. Introduction

Social media platforms such as Twitter (now renamed X) and Facebook have emerged as pivotal channels through which users worldwide access news, engage in public discourse, and interact emotionally, owing to their real-time dissemination capabilities and low-barrier communication mechanisms [1]. According to Meta Group’s financial reports [2], its monthly active users doubled from 1.5 billion in 2015 to 3 billion in 2023. However, this thriving social ecosystem coexists with the rampant proliferation of malicious social bot accounts. Social bots are automated programs designed to perform social media activities [4]. A study by the University of Southern California revealed that 15% of Twitter accounts are bots [3], with 78.6% implicated in disseminating disinformation [5]. These bots mimic genuine user behaviors to establish a systematic attack chain: “fabricating pseudo-consensus → inducing group polarization → manipulating decision-making processes” [6]. A notable example occurred during the 2016 U.S. presidential election, where the Botometer system [7] identified over 280,000 bot accounts persistently spreading political misinformation, accounting for one-third of candidate-endorsing tweets [8]. Such activities distorted voter perceptions and severely undermined electoral integrity. Consequently, social bot detection has attracted the attention of researchers.

Current mainstream detection methodologies face multifaceted technical challenges. First, their capability to identify highly anthropomorphic behaviors remains limited; social bots now emulate human-like information propagation patterns, significantly degrading the performance of detection models reliant on static shallow statistical features [9]. Second, bots increasingly exploit social network analysis techniques to construct and optimize their relational networks, rendering their interactions indistinguishable from authentic users [10]. These technological advancements have made existing detection methods ineffective in identifying malicious bot accounts, thus creating an urgent demand for a new detection framework to tackle this challenge.

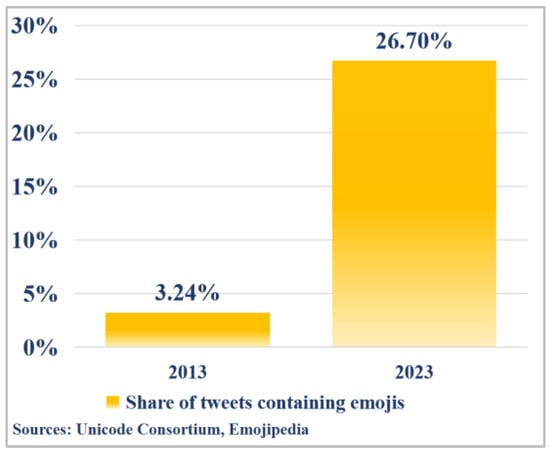

In social bot detection, tweets are among the most critical feature sources. However, most existing detection methods dismiss emojis as noise and routinely remove them during preprocessing [11]. In reality, emojis not only significantly enhance the semantic interpretability of tweets but also provide emotional cues absent from plain text [12,13,14,15,16]. According to a report from Emojipedia, the proportion of tweets containing emojis on Twitter surged from 3.24% in 2013 to 26.7% in 2023 [17]. As illustrated in Figure 1, this corresponds to a 724% increase in emoji usage frequency over the decade, underscoring the growing role of emojis as core carriers of nuanced semantics and affective signals. Take the tweet “Let’s enjoy some [emoji] and watch [emoji] at the [emoji] later!” as an example. When it is analyzed solely through its textual content, the meaning is ambiguous. Integrating the emojis with the text resolves the semantic uncertainty and reveals a clear contextual narrative. This contrast shows that emojis encode rich semantic information and are therefore valuable for tweet interpretation.

Figure 1. Emoji use in tweets (2013 vs. 2023).

Beyond semantic enrichment, emojis excel at conveying emotional information that text alone often fails to capture. Particularly in informal short texts such as comments, they serve as critical indicators of user sentiment. Taking the tweet “It’s raining on my picnic day.  ” as an example, if the emojis are ignored, it expresses the negative emotions of disappointment and chagrin after the plan is ruined. However, after adding the “

” as an example, if the emojis are ignored, it expresses the negative emotions of disappointment and chagrin after the plan is ruined. However, after adding the “ ” emojis, the sentiment of the sentence undergoes a reversal, conveying an easygoing and adaptable attitude of “Since it’s raining and the picnic can’t happen, might as well have a drink and then go to sleep”, thus transforming into a positive sentiment. To meet escalating demands for emotional articulation, the Unicode Consortium has expanded emoji categories through iterative updates. As illustrated in Figure 2, official data reveal a 35-fold increase in emoji diversity from 2005 to 2025 [18], with an annual growth rate of 42.7%. This expansion substantially elevates emojis’ research value in sentiment analysis.

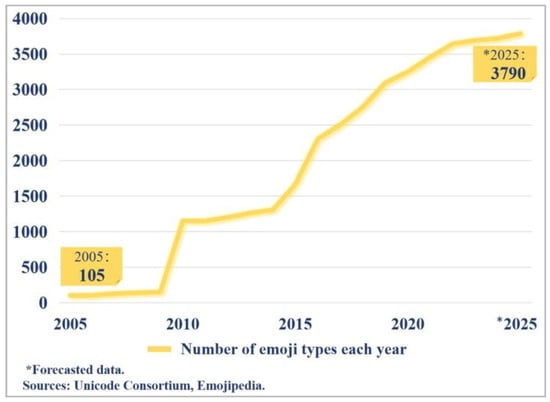

” emojis, the sentiment of the sentence undergoes a reversal, conveying an easygoing and adaptable attitude of “Since it’s raining and the picnic can’t happen, might as well have a drink and then go to sleep”, thus transforming into a positive sentiment. To meet escalating demands for emotional articulation, the Unicode Consortium has expanded emoji categories through iterative updates. As illustrated in Figure 2, official data reveal a 35-fold increase in emoji diversity from 2005 to 2025 [18], with an annual growth rate of 42.7%. This expansion substantially elevates emojis’ research value in sentiment analysis.

” as an example, if the emojis are ignored, it expresses the negative emotions of disappointment and chagrin after the plan is ruined. However, after adding the “

” as an example, if the emojis are ignored, it expresses the negative emotions of disappointment and chagrin after the plan is ruined. However, after adding the “ ” emojis, the sentiment of the sentence undergoes a reversal, conveying an easygoing and adaptable attitude of “Since it’s raining and the picnic can’t happen, might as well have a drink and then go to sleep”, thus transforming into a positive sentiment. To meet escalating demands for emotional articulation, the Unicode Consortium has expanded emoji categories through iterative updates. As illustrated in Figure 2, official data reveal a 35-fold increase in emoji diversity from 2005 to 2025 [18], with an annual growth rate of 42.7%. This expansion substantially elevates emojis’ research value in sentiment analysis.

” emojis, the sentiment of the sentence undergoes a reversal, conveying an easygoing and adaptable attitude of “Since it’s raining and the picnic can’t happen, might as well have a drink and then go to sleep”, thus transforming into a positive sentiment. To meet escalating demands for emotional articulation, the Unicode Consortium has expanded emoji categories through iterative updates. As illustrated in Figure 2, official data reveal a 35-fold increase in emoji diversity from 2005 to 2025 [18], with an annual growth rate of 42.7%. This expansion substantially elevates emojis’ research value in sentiment analysis.

Figure 2. Growth of emoji types (2005–2025).

To address the aforementioned challenges, we propose ESA-BotRGCN, an emoji-driven multi-modal detection framework, with its core innovations spanning five key dimensions:

- We utilize the Emoji Library to convert emojis into textual descriptions, refine the coherence of transformed tweets via GPT-4, and generate semantically and sentiment-enriched tweet embeddings using RoBERTa.

- We propose a seven-dimensional sentiment feature quantification framework, leveraging foundational sentiment metrics, including positive, negative, and neutral sentiment values and complexity measures, to further capture statistical discrepancies between bot accounts and genuine users in terms of sentiment polarity span, dynamic volatility, and expression consistency.

- We develop an attention gating mechanism that adaptively integrates sentiment features, user descriptions, tweet content, numerical attributes, and categorical features into a globally context-aware unified feature representation.

- We construct a Relational Graph Convolutional Network (RGCN) that models user-following relationships over the graph topology in order to capture bot-specific behavioral patterns.

- We conduct extensive experiments on the TwiBot-20 and Cresci-15 datasets, demonstrating our model’s superiority through significant improvements in accuracy, F1-score, and MCC over mainstream baselines.

2. Related Work

Research on social bot detection has evolved over decades and can be grouped into four methodological paradigms. Content-based methods analyze lexical distributions, metadata (e.g., URL ratios), and basic statistical patterns in user-generated text to build detection models. Behavior-based methods focus on identifying anomalies in temporal user activity patterns (e.g., posting frequency, active hours) or interaction behaviors (e.g., retweet/reply chains). Deep learning approaches leverage neural architectures such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) to automatically extract deep features from user content, significantly enhancing detection performance in complex scenarios. Graph neural network (GNN)-based methods model user social relationship networks (e.g., follow/retweet graphs), employing Graph Convolutional Networks or Graph Attention Networks to capture latent structural correlations. The following sections systematically review the core advancements and technical characteristics of these approaches.

2.1. Content-Based Approaches

Content-based social bot detection methods primarily rely on analyzing statistical patterns, metadata, and linguistic styles of user-generated text to construct classification models. Early studies extracted shallow features such as keyword frequency, URL ratio, and special character usage and combined these with machine learning algorithms for preliminary detection.

Sivanesh et al. [19] proposed a multidimensional feature-based approach for identifying social bots. This method integrates both account attributes and behavioral features to distinguish bots from real users. Specifically, they treat user ID and account creation time as core features while also incorporating auxiliary features such as the posting time and source of the user’s most recent 50 tweets. A probabilistic mathematical model is then used to calculate the likelihood that a user is a bot, enabling precise discrimination between bots and genuine users. Kantepe et al. [20] utilized accounts suspended by Twitter (now renamed X), extracting 62 different features by analyzing tweets, profile information, and temporal behaviors. These features were then used for classification with logistic regression (LR), multinomial Naive Bayes, support vector machines (SVM), and gradient boosting (GB). ESC [21] extracted over 100 feature indicators by combining three groups of features: user attributes, Twitter behavioral data, and textual features. Dedicated classifiers were trained for each type of bot, and their predictions were aggregated using a max-rule strategy.
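The max-rule aggregation mentioned for ESC can be illustrated with a minimal sketch. It assumes that each specialised classifier outputs a probability that the account is a bot of its type; the bot-type names, the scores, and the 0.5 threshold are hypothetical and are not taken from [21].

```python
from typing import Dict, Tuple


def max_rule(bot_scores: Dict[str, float], threshold: float = 0.5) -> Tuple[float, str, bool]:
    """Aggregate per-bot-type classifier scores with the max rule.

    bot_scores maps a (hypothetical) bot-type name to the probability that
    the account is a bot of that type, as produced by that type's classifier.
    """
    if not bot_scores:
        raise ValueError("at least one classifier score is required")
    # The ensemble score is the largest score among the specialised classifiers.
    best_type = max(bot_scores, key=bot_scores.get)
    score = bot_scores[best_type]
    return score, best_type, score >= threshold


# Hypothetical scores from three specialised classifiers for one account.
scores = {"spammer": 0.12, "fake_follower": 0.81, "political": 0.34}
score, bot_type, is_bot = max_rule(scores)
```

Under this rule, an account is flagged as soon as any single specialised classifier is confident, which suits a setting where each classifier only recognises one family of bots.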

While content-based approaches have achieved initial success in bot detection, their core limitation lies in the over-reliance on static and shallow features, coupled with a systematic neglect of the semantic and sentiment values embedded in emojis. Methods such as those proposed by Sivanesh [19] and Kantepe [20], despite extracting extensive metadata and text statistical features, commonly discard emojis as noise during preprocessing. This practice results in the loss of critical semantic cues and sentiment expression patterns, significantly undermining their effectiveness against modern, highly anthropomorphic bots capable of dynamic evasion. Moreover, their reliance on manually engineered features restricts generalization capabilities when faced with rapidly evolving bot strategies, struggling to capture deeper statistical disparities such as sentiment complexity and polarity span. Research by Grimme et al. [22] indicates that in several mature detection tools, features like keyword frequency and timing contribute minimally to detection performance. Due to their simplicity and regularity, these features can be easily learned and mimicked by bot developers, allowing bots to evade detection by adjusting such attributes [23]. In contrast, ESA-BotRGCN fundamentally addresses these issues through innovative emoji–text mapping and GPT-4 coherence optimization. By generating tweet embeddings rich in semantic and sentiment information using RoBERTa and designing seven-dimensional sentiment features, our method not only preserves emoji information but also captures more nuanced and discriminative user behavioral patterns.

2.2. Behavior-Based Approaches

Although content-based methods demonstrated promising results in early studies, their reliance on manually engineered features limits their generalization capability. To address this limitation, researchers have shifted toward in-depth exploration of user behavioral patterns, thereby establishing a behavior-based detection paradigm. These approaches aim to distinguish social bots from real users by analyzing user activity patterns and interaction behaviors on social platforms to identify anomalies. The central assumption is that bot accounts exhibit statistically measurable deviations in their temporal behaviors (e.g., posting intervals, activity cycles) and interactive behaviors (e.g., retweeting, commenting chains).

In the work by Ruan et al. [24], user behaviors were categorized into outward and inward actions based on their influence on others. By computing the variance of these behaviors, the authors estimated the likelihood of an account being compromised, enabling effective detection of hijacked accounts. Amato et al. [25] modeled behavioral sequences (such as logins, message posts, and photo sharing) using Markov chains to capture typical behavioral patterns of real users. Deviations from these patterns were then used to identify anomalies, effectively detecting social bots. Cresci et al. [26,27] proposed a novel unsupervised detection method that encodes user behavior sequences along a timeline into DNA-like strings, where each type of tweet is mapped to a nucleotide: original tweet → A, retweet → C, and reply → T. The similarity between these behavioral “DNA sequences” is then measured using the Longest Common Subsequence (LCS) algorithm, and similarity scores are used to differentiate between real users and bots. Peng et al. [28] introduced UnDBot, an unsupervised detection framework grounded in the principles of structural information theory. This method leverages the structural properties of social networks and performs community detection by minimizing structural entropy. It is particularly effective at identifying bot clusters engaged in coordinated attacks. Their study further explores the applicability and robustness of the method across social networks of varying sizes and types.
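The behavioral “DNA” idea described above can be sketched in a few lines. The action-to-letter mapping follows the text; the action names, the two toy timelines, and the normalisation by the longer sequence are assumptions made for illustration and may differ from the procedure in [26,27].

```python
from typing import Iterable

# Mapping stated in the text: original tweet -> A, retweet -> C, reply -> T.
ACTION_TO_BASE = {"tweet": "A", "retweet": "C", "reply": "T"}


def encode_timeline(actions: Iterable[str]) -> str:
    """Encode a chronologically ordered list of actions as a DNA-like string."""
    return "".join(ACTION_TO_BASE[a] for a in actions)


def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for ch_a in a:
        curr = [0]
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def similarity(a: str, b: str) -> float:
    """LCS length divided by the longer sequence length (an assumed normalisation)."""
    if not a or not b:
        return 0.0
    return lcs_length(a, b) / max(len(a), len(b))


# Two toy timelines (hypothetical data).
user_1 = encode_timeline(["tweet", "retweet", "retweet", "reply", "tweet"])
user_2 = encode_timeline(["tweet", "retweet", "reply", "tweet", "tweet"])
score = similarity(user_1, user_2)
```

Accounts driven by the same automation script tend to produce long shared subsequences, so unusually high pairwise similarity within a group is the signal this kind of method looks for.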

Behavior-based methods identify anomalies through temporal sequences and interaction patterns, circumventing some limitations of content analysis. However, their primary weakness lies in the complete stripping away or superficial utilization of the semantic content of tweets. For instance, Cresci’s pioneering “DNA sequence” method [26,27], while effective in capturing anomalies in behavioral sequences, entirely neglects the semantic information and sentiment tendencies embedded in tweet texts (including emojis). Peng’s UnDBot [28], focused on structural community detection, underutilizes individual-level content features such as sentiment expression. Such methods may fail against bots that closely mimic human behavior patterns but exhibit statistical deviations in sentiment expression (e.g., low sentiment volatility and excessively high consistency). In contrast, ESA-BotRGCN overcomes this limitation by deeply fusing content with behavioral/topological information: it not only models social relationship topologies using RGCN but, crucially, takes semantically and sentimentally enhanced tweet content and specially designed seven-dimensional sentiment features as core inputs. Through an attention mechanism, it dynamically fuses these with behavioral/structural features, enabling joint modeling of users’ multi-dimensional abnormal patterns and achieving stronger discriminative capabilities.

2.3. Deep Learning-Based Approaches

To overcome the limitations of traditional methods that heavily rely on feature engineering, deep learning techniques have enabled a qualitative leap in detection capabilities through end-to-end learning. Deep learning-based social bot detection methods automatically extract high-level semantic and behavioral features via neural networks, significantly improving performance in complex scenarios. The core strength of such methods lies in their ability to bypass the limitations of traditional manual feature engineering by directly learning discriminative patterns from raw data.

Ilias et al. [29] proposed a deep learning model that integrates an embedding layer, bidirectional LSTM, and dense layers, and was among the first to introduce an attention mechanism into the context of social bot detection. In 2020, Wu et al. [30] introduced a novel social spammer detection method based on xDeepFM, which extracted 30 features from multiple dimensions, including user behavior, content, and social relationships. The xDeepFM model was then employed to model these features for identifying malicious users on the Sina Weibo platform. In 2021, Wu et al. [31] further developed an innovative detection framework named DABot. This framework utilizes a residual network to mitigate the vanishing gradient problem in deep neural network training, thereby enhancing training efficiency and model stability. Additionally, a bidirectional gated recurrent unit (BiGRU) was introduced to capture bidirectional dependencies in user behavior sequences, enabling more accurate modeling of user activity patterns. The framework also incorporates an attention mechanism to emphasize critical features and enhance the model’s sensitivity to important information. Arin et al. [32] proposed a deep learning architecture consisting of three Long Short-Term Memory (LSTM) models and a fully connected layer to capture the complex activity patterns of humans and bots on social media. Due to the architecture involving the integration of multiple components across different hierarchical levels, the authors explored three distinct training strategies to effectively optimize each component. Fazil et al. [33] presented a deep neural network model, DeepSBD, which performs comprehensive multimodal modeling—including user profiles, temporal sequences, activity patterns, and content information—for efficient detection of bot accounts. 
This model employs a two-layer stacked BiLSTM architecture to jointly model user timelines and behavioral patterns, while a Deep Convolutional Neural Network (CNN) is used to extract semantic features from content. Furthermore, an attention mechanism is integrated to dynamically optimize the feature fusion process, significantly enhancing model discrimination in complex scenarios. Najari et al. [34] proposed a GAN-based detection framework, GANBOT, which introduces LSTM layers as a shared module between the generator and discriminator. This design not only addresses the convergence issues commonly associated with traditional SeqGAN in text generation tasks but also enables the classifier to directly access enriched information regarding bot behavior patterns from the generator, thereby providing a novel technical paradigm for social bot detection. Feng et al. [35] used three state-of-the-art large language models (LLMs), including Mistral-7B, LLaMA2-70B, and ChatGPT, for direct social bot detection. On the TwiBot-20 dataset, the accuracies were 60.9%, 66.1%, and 63.2%, respectively. LLMs bring new opportunities for social bot detection, yet their performance still needs to be improved.

Deep learning methods have achieved remarkable progress in automatic feature learning, surpassing traditional manual feature engineering. However, they still have significant deficiencies in handling the semantic and sentiment values of emojis and in efficiently fusing multimodal heterogeneous features. Most existing models remove emojis outright or perform only simple replacements during text preprocessing, failing to exploit their potential as crucial sentiment carriers. Although LLM-based methods [35] have shown promise, their accuracy in social bot detection is still far lower than that of specialized models, and they incur high computational costs. In contrast, ESA-BotRGCN introduces two innovations. First, it designs a dedicated process for the semantic enhancement of emojis and the quantification of sentiment features, ensuring that deep models can make full use of emoji information. Second, it introduces an attention gating mechanism that dynamically learns to fuse sentiment features, text content, user attributes, numerical/categorical features, and graph-structure information, significantly improving the effectiveness of multimodal information fusion and the discriminative ability of the model.

2.4. Graph Neural Network-Based Approaches

Unlike deep learning methods that focus primarily on textual analysis, graph neural networks (GNNs) are designed to capture collective behavioral patterns from social relational networks, making the two approaches complementary in modality. These methods model the topological structure of users’ social networks to detect anomalous interaction patterns associated with bot accounts. A key advantage of GNNs lies in their ability to directly process non-Euclidean data (e.g., follower or retweet graphs) and to reveal hidden structural features through neighborhood information aggregation via message-passing mechanisms.

In 2021, Feng et al. [36] introduced BotRGCN, marking the first application of RGCN to the modeling of relational heterogeneous graphs. By capturing multi-type interactions among users—such as follows and retweets—they constructed a heterogeneous information network in which users serve as nodes and various interaction types as edges, thereby enhancing account detection performance. In 2022, Feng et al. [37] further enhanced the model architecture by introducing a relational graph Transformer module, which leverages self-attention mechanisms to dynamically model heterogeneous influences between users, thereby addressing the traditional RGCN’s limitation in capturing complex relational dependencies. Li et al. [38] proposed SybilFlyover, a detection model based on heterogeneous graphs for identifying fake accounts. This approach represents the complex interactions among multi-type entities using a directed heterogeneous social network graph. A prompt learning strategy is employed to inject content-based social information into the model for enhanced state modeling. Finally, transformer-based mechanisms are used to process the social graph and identify nodes indicative of Sybil accounts. Peng et al. [39] proposed a Domain-Aware Multi-Relational Graph Neural Network (DA-MRG), which constructs a multi-relational graph based on user features and interaction types to generate graph embeddings and employs a domain-aware classifier to distinguish bot accounts. To address the behavioral similarities of bots across different social networks, they designed a joint learning framework that enables privacy-preserving cross-platform data sharing, thereby enhancing detection performance. Wang et al. [40] proposed an unsupervised social bot detection method, BotDCGC, based on deep contrastive graph clustering. 
The method employs a graph attention encoder to extract node embeddings by integrating account features with topological structure information and utilizes an inner product decoder to reconstruct the network. Structural contrastive learning is used to enhance the discriminability of node embeddings, while similarity-based calculations generate high-confidence pseudo-labels that dynamically guide the joint optimization of embedding learning and clustering, thereby improving detection performance.
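For reference, the relational graph convolution underlying RGCN-based detectors such as BotRGCN is commonly written as shown here. This is the standard formulation from the RGCN literature rather than an equation taken from this paper, and the symbols are introduced only for illustration.

$$h_i^{(l+1)} = \sigma\left( W_0^{(l)} h_i^{(l)} + \sum_{r \in \mathcal{R}} \sum_{j \in \mathcal{N}_i^{r}} \frac{1}{c_{i,r}} W_r^{(l)} h_j^{(l)} \right)$$

Here $h_i^{(l)}$ is the representation of user $i$ at layer $l$, $\mathcal{R}$ is the set of relation types (for example, following and follower), $\mathcal{N}_i^{r}$ is the set of neighbors of $i$ under relation $r$, $c_{i,r}$ is a normalization constant such as $|\mathcal{N}_i^{r}|$, $W_r^{(l)}$ and $W_0^{(l)}$ are relation-specific and self-connection weight matrices, and $\sigma$ is a nonlinearity. Keeping a separate $W_r^{(l)}$ per relation is what allows the layer to treat different edge types differently.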

Graph neural network (GNN) methods have demonstrated significant advantages in capturing group behavioral patterns and detecting coordinated bots by explicitly modeling social relationship topologies. However, existing GNN approaches generally suffer from two limitations. First, they underutilize or coarsely fuse the rich content and attribute features of nodes. Their node representations are relatively simplistic and fail to fully integrate semantically and sentimentally enhanced tweet content, user descriptions, and specially quantified high-order sentiment features [41]. Second, most methods construct homogeneous graphs, overlooking heterogeneous topological graphs that contain diverse social relationships. In contrast, ESA-BotRGCN makes two advances. First, it uses highly informative node features as the input to the RGCN. Second, it constructs a heterogeneous social topology graph. Together, these allow the model to leverage both the multimodal intrinsic attributes of nodes and their social topological context, which is particularly effective in scenarios with diverse social relationships, thereby enhancing detection accuracy.

3. ESA-BotRGCN Model Architecture

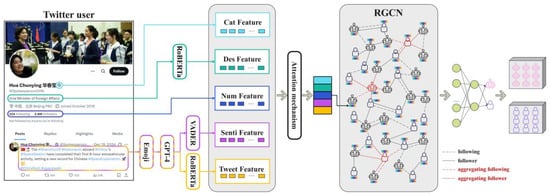

As discussed above, existing social bot detection methods suffer from two major limitations. First, the rich semantic and emotional expressive value of emojis is often overlooked: most studies treat them as noise and remove them during preprocessing, which discards critical features [11]. Second, bot accounts and genuine users differ statistically in their emotional expression patterns, including emotional complexity, polarity span, dynamic fluctuations, and expression consistency, yet these discriminative features have not been fully explored [42]. To address these issues, this study proposes ESA-BotRGCN, a relational graph convolutional network model enhanced by emoji-driven sentiment and semantics, which dynamically integrates multi-dimensional features to improve the accuracy and robustness of social bot detection. Figure 3 illustrates the architecture of the proposed model.

Figure 3. ESA-BotRGCN model diagram.

The detection method proposed in this study achieves end-to-end modeling through multi-stage collaborative processing. First, tweets undergo preprocessing: emojis are converted into corresponding textual descriptions using the Emoji Library, and the GPT-4 model is then employed to refine the textual coherence of the tweets. Next, the RoBERTa model is used to generate tweet embeddings that integrate emoji semantics, thereby addressing the limitations of traditional word vectors in representing the semantic information conveyed by emojis and enhancing the expressiveness of sentiment features. Subsequently, seven sentiment-based features are extracted from the preprocessed tweets to capture statistical differences in emotional expression patterns between bot and human accounts. ESA-BotRGCN then jointly encodes tweet features, sentiment-based features, user description features, numerical attributes, and categorical features. Through an attention gating mechanism, the model dynamically adjusts the weights of these heterogeneous features to generate a globally context-aware unified feature vector. This comprehensive feature vector is fed into an RGCN, where two layers of graph convolution integrate the topological structure of user social relationships, and dropout is applied to suppress overfitting. Finally, the output features are mapped to a high-dimensional space via a linear layer, and the probability that an account is a bot is computed using the Softmax function. Model parameters are optimized with the Adam optimizer by backpropagating the gradients of the cross-entropy loss, yielding the final classification results.
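The fusion step in this pipeline can be sketched as follows. The exact gate formulation is not given in this section, so the scoring rule here (a dot product with a query vector, followed by softmax) is an assumption, and the toy vectors and the `query` parameter are hypothetical. In the actual model the inputs would be learned projections of each modality to a common dimension.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def gated_fusion(features: Dict[str, Vector], query: Vector) -> Tuple[Vector, Dict[str, float]]:
    """Fuse same-sized feature vectors with attention weights.

    Each modality gets a scalar score (dot product with the query), the
    scores are normalised with softmax, and the fused vector is the
    weighted sum of the modality vectors.
    """
    names = list(features)
    weights = softmax([dot(query, features[n]) for n in names])
    fused = [
        sum(w * features[n][k] for w, n in zip(weights, names))
        for k in range(len(query))
    ]
    return fused, dict(zip(names, weights))


# Toy 3-dimensional inputs for the five modalities named in the text.
features = {
    "sentiment": [0.9, 0.1, 0.0],
    "description": [0.2, 0.4, 0.1],
    "tweet": [0.5, 0.5, 0.5],
    "numerical": [0.0, 0.3, 0.7],
    "categorical": [0.1, 0.0, 0.2],
}
query = [1.0, 0.0, 0.0]  # hypothetical query emphasising the first dimension
fused, weights = gated_fusion(features, query)
```

Because the weights sum to one, the fused vector stays on the same scale as its inputs, and the per-modality weights can be inspected to see which feature group dominated for a given account.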

3.1. Emoji Preprocessing

As discussed above, existing social bot detection studies often treat emojis as noise and remove them during preprocessing [11], resulting in a severe loss of semantic and emotional cues in tweets. Particularly in short tweets, a single emoji can significantly alter the meaning of the entire tweet. To address this issue, this study employs emoji-to-text mapping and language model optimization to fully preserve the semantic and emotional value of emojis. First, each tweet is defined as an ordered joint structure of textual tokens and emojis:

$$T = (W, E), \quad W = (w_1, w_2, \dots, w_n), \quad E = (e_1, e_2, \dots, e_m)$$

In this formula, $W$ denotes the ordered sequence of textual tokens in the tweet, with $n$ being the total number of words, and $E$ denotes the ordered sequence of emojis in the tweet, where $m$ is the total number of emojis. The preprocessing workflow includes the following steps:

3.1.1. Emoji Mapping

First, emoji detection is performed on the tweet. If no emojis are detected, no further processing is applied. If emojis are present, each emoji $e_j$ is converted into its corresponding textual description $d_j$ using a predefined mapping table $M$:

$$d_j = M(e_j)$$

This study compared candidate libraries in terms of Unicode coverage, multilingual description support, semantic mapping accuracy, and update frequency, and found that Emoji Library outperformed both Emojiswitch Library and EmojiBase Library. It fully covers Unicode 15.0, including the emojis added in 2025, whereas Emojiswitch only covers up to Unicode 13.0 and EmojiBase covers up to 14.0 with some missing variants. Additionally, Emoji Library provides long-text descriptions in multiple languages, such as English and Chinese, while the other two only support English descriptions. Finally, Emoji Library maintains quarterly updates to keep pace with official Unicode releases, which makes it more adaptable to the semantic evolution of emojis than Emojiswitch’s annual updates and EmojiBase’s irregular updates. Therefore, this study selected the Emoji Library to construct the emoji–text mapping table. Table 1 lists 10 typical mapping examples.

Table 1.

Examples of popular emoji and their text mapping.

The mapped tweet is expressed as follows:

3.1.2. Text Coherence Optimization

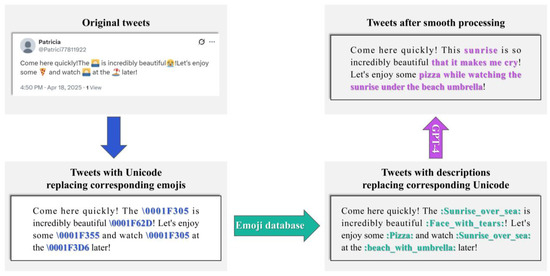

Tweets whose emojis have been converted to text often suffer from poor textual coherence and semantic ambiguity. For example, the tweet “Let’s go to the beach! 🏖️” becomes “Let’s go to the beach! :beach_with_umbrella:” after conversion, resulting in semantic discontinuity. To address this, this study uses the GPT-4 model for semantic reconstruction with the following prompt: “You are a language analysis expert. Analyze the tweet provided to you. The emojis in the tweet have been converted into corresponding text label embeddings. Please make the tweet coherent without changing its original meaning, and ensure the length of the generated tweet does not exceed 200% of the original tweet.” As illustrated in Figure 4, this process lets tweets fully express their semantic and emotional information. For instance, the tweet above is reconstructed as “Let’s go to the beach and enjoy the sun under an umbrella!”.

Figure 4.

Tweet processing flow: from emojis to Unicode and descriptions using GPT-4.
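The reconstruction step can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the prompt text is quoted from Section 3.1.2, but the message layout, the character-based reading of the 200% limit, and the fallback to the mapped tweet are assumptions, and the model call itself is deliberately left out.

```python
# Sketch only: builds the chat payload; the actual GPT-4 call is omitted.
SYSTEM_PROMPT = (
    "You are a language analysis expert. Analyze the tweet provided to you. "
    "The emojis in the tweet have been converted into corresponding text label "
    "embeddings. Please make the tweet coherent without changing its original "
    "meaning, and ensure the length of the generated tweet does not exceed 200% "
    "of the original tweet."
)


def build_messages(mapped_tweet: str) -> list[dict]:
    """Return a system/user message pair in the common chat-completion layout."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": mapped_tweet},
    ]


def within_length_budget(original: str, rewritten: str, ratio: float = 2.0) -> bool:
    """Check the 200% cap, measured in characters (an assumption)."""
    return len(rewritten) <= ratio * len(original)


def accept_or_fallback(original: str, rewritten: str) -> str:
    """Keep the rewrite only if it respects the cap (hypothetical policy)."""
    return rewritten if within_length_budget(original, rewritten) else original
```

Checking the cap after generation is a defensive choice: a language model may not always follow a length instruction, so the mapped tweet is kept when the rewrite is too long.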

3.1.3. Handling of Abnormal Emojis

For rare or custom emojis not included in the Emoji Library, this study retains their original Unicode encoding and appends the special token “[UNK]” as a placeholder to avoid information loss. Subsequently, all modified tweets are fed into the model for training instead of the original tweets.
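The mapping and fallback rules in Sections 3.1.1 and 3.1.3 can be sketched as below. The three-entry table is a hypothetical excerpt (not the paper's Table 1 and not the Emoji Library itself), and the regular expression is a rough detector that only covers common pictograph blocks, so it may also flag some non-emoji symbols.

```python
import re

# Hypothetical excerpt of an emoji-to-text mapping table.
EMOJI_TO_TEXT = {
    "\U0001F3D6": ":beach_with_umbrella:",     # beach with umbrella
    "\U0001F602": ":face_with_tears_of_joy:",  # face with tears of joy
    "\u2764": ":red_heart:",                   # red heart
}

# Rough emoji detector (assumption): common pictograph and symbol blocks only.
EMOJI_PATTERN = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
VARIATION_SELECTOR = "\uFE0F"  # invisible "emoji presentation" marker


def map_emojis(tweet: str) -> str:
    """Replace known emojis with text labels; tag unknown ones with [UNK]."""
    if not EMOJI_PATTERN.search(tweet):
        return tweet  # no emojis detected: leave the tweet untouched
    tweet = tweet.replace(VARIATION_SELECTOR, "")

    def replace(match: re.Match) -> str:
        char = match.group(0)
        # Unknown emojis keep their original code point plus a placeholder.
        return EMOJI_TO_TEXT.get(char, char + "[UNK]")

    return EMOJI_PATTERN.sub(replace, tweet)
```

With this table, a tweet ending in the beach emoji is rewritten to end in `:beach_with_umbrella:`, while an emoji missing from the table is kept and followed by `[UNK]`.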

3.2. Sentiment-Based Features Processing

Sentiment-based features capture statistical differences in emotional expression patterns between bot and human accounts by quantifying the sentiment dynamics in user tweets. This study proposes seven sentiment features: four foundational ones (positive, negative, and neutral sentiment values, plus sentiment complexity) and three advanced features derived from them (sentiment polarity span, volatility, and consistency). These features significantly enhance discriminative power. The specific definitions are as follows:

3.2.1. Basic Sentiments and Complexity

This study employs VADER [43] for analyzing basic sentiment values. The selection of VADER is attributed to three advantages it possesses. First, VADER is adaptable to the characteristics of social media texts. It can effectively handle fragmented content such as short texts and informal language (e.g., slang) in tweets. Its built-in sentiment dictionary contains a large number of commonly used social media words (e.g., “OMG”). Second, VADER does not require a large amount of labeled data. It is lightweight and efficient, enabling sentiment analysis solely through predefined sentiment dictionaries and grammatical rules. It has low computational costs and is easy to deploy, making it suitable for processing large-scale tweet data. Third, VADER can calculate sentiment scores by decomposing elements such as sentiment words, negation words, and intensifier words in the text. The results have clear interpretability, facilitating the analysis of differences in sentiment expression between bots and real users. In recent years, VADER has seen many typical and effective application cases. For example, Francesco et al. [44] applied VADER to investigate the sentiment experiences among e-sports spectators, and Anny et al. [45] used VADER to classify sentiments and discovered the correlation between Twitter sentiments and the price movements of Bitcoin. VADER is highly compatible with the multimodal sentiment feature extraction requirements of this study. Therefore, VADER is adopted to analyze basic sentiment values, and the specific process is as follows:

Conduct sentiment analysis on each tweet of the user. Based on a predefined sentiment dictionary, which contains the positive, negative, and neutral sentiment intensity values for each word, combined with context-adjustment rules (such as negative words, emphasis words, punctuation marks, etc.), extract the positive sentiment value (), negative sentiment value (), neutral sentiment value (), and sentiment complexity ():

In this formula, i denotes the i-th tweet, j represents the j-th word in the i-th tweet, and M is the total number of words in the i-th tweet. and , respectively, denote the positive and negative sentiment intensities of each word , and represents the contextual adjustment factor.

Given that both and always hold, the neutral sentiment value corresponds to the residual portion not covered by positive or negative sentiments.

Sentiment complexity , which quantifies the diversity and volatility of emotional expression, is measured via entropy or standard deviation as follows:

In this formula, is the normalized proportion of each sentiment category. Finally, the mean of sentiment features across all tweets from users is computed to derive the user-level sentiment feature vector:

In this formula, i denotes the i-th tweet; N is the total number of tweets by the user; and reflect the overall positive and negative emotional tendencies of the user, respectively; characterizes the degree of emotional neutrality; and measures the complexity of emotional expression.
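As a rough illustration of the last two steps, the sketch below computes an entropy-based sentiment complexity and the user-level mean. It assumes the entropy variant (with natural logarithm) of the "entropy or standard deviation" description, and it takes per-tweet positive, negative, and neutral scores as plain numbers; in practice these would come from a VADER analyzer, which is not called here.

```python
import math


def sentiment_complexity(pos: float, neg: float, neu: float) -> float:
    """Shannon entropy of the normalized sentiment proportions (assumed variant)."""
    total = pos + neg + neu
    if total <= 0:
        return 0.0
    entropy = 0.0
    for score in (pos, neg, neu):
        p = score / total  # normalized proportion of this sentiment category
        if p > 0:
            entropy -= p * math.log(p)
    return entropy


def user_level_features(tweet_scores):
    """Average (pos, neg, neu, complexity) over all tweets of one user."""
    if not tweet_scores:
        raise ValueError("at least one tweet is required")
    rows = [(p, n, u, sentiment_complexity(p, n, u)) for p, n, u in tweet_scores]
    return tuple(sum(column) / len(rows) for column in zip(*rows))
```

A tweet with a single dominant sentiment yields a complexity of 0, while an even split across the three categories yields the maximum, ln 3.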

3.2.2. Sentiment Polarity Span

To capture the range of sentiment fluctuations in sentiment expressions, the sentiment polarity span is defined as the absolute difference between the maximum positive sentiment value and the maximum negative sentiment value across all tweets from the same user:

Here, both maxima are positive values. This metric quantifies the extremity of a user’s emotional expression. Real users experience diverse life events (such as celebrating victories and encountering setbacks), so their positive and negative emotions fluctuate significantly and the polarity span is usually relatively high. Bots, restricted by task objectives (such as concentrated propaganda or attacks), keep their emotional expression within a specific polarity range, so their polarity span is significantly lower. Even if attackers attempt to simulate the human emotional range, doing so disrupts task consistency and reduces attack efficiency. Therefore, this feature can significantly improve bot detection performance.

3.2.3. Sentiment Volatility

To further quantify the volatility of emotional expression, sentiment volatility is introduced, measured as the standard deviation of sentiment complexity across a user’s tweets:

Sentiment volatility reveals differences in the randomness of emotional evolution. Human emotions, triggered by immediate events, fluctuate irregularly (such as a shift from anger to surprise caused by breaking news), so the sentiment complexity of real users varies widely and their volatility is high. In contrast, bots generate content programmatically, so their sentiment complexity is more concentrated and their volatility is low. If bots forcibly inject random fluctuations to evade detection, semantic coherence declines and traces of automated generation are exposed.

3.2.4. Sentiment Consistency

Sentiment consistency is defined as the mean cosine similarity between the sentiment vectors of adjacent tweets, capturing the continuity of a user’s emotional expression:

$$S_{con} = \frac{1}{N-1} \sum_{i=1}^{N-1} \frac{\mathbf{s}_i \cdot \mathbf{s}_{i+1}}{\lVert \mathbf{s}_i \rVert \, \lVert \mathbf{s}_{i+1} \rVert}$$

In this formula, $\mathbf{s}_i$ represents the sentiment vector of the i-th tweet and N is the total number of tweets by the user. Bot accounts generate purposeful content in batches. For example, sending 50 consecutive advertising tweets leads to highly similar sentiment between adjacent tweets ($S_{con}$ approaches 1). In contrast, humans switch topics more frequently than bots (such as shifting from sports discussions to food sharing), and their sentiment expression is more random, resulting in lower sentiment consistency. If a bot deliberately reduces consistency, it must sacrifice task focus, which significantly weakens its propagation effect.

The sentiment polarity span, sentiment volatility, and sentiment consistency form a triangular constraint on sentiment expression that provides a joint defense advantage. If a bot adjusts one feature (such as increasing the polarity span to imitate humans), it simultaneously disrupts the others (such as causing an abnormal increase in volatility or a break in consistency), forming an “impossible triangle” for detection evasion.
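The three advanced features and the resulting seven-dimensional vector can be sketched as follows, under several assumptions. The polarity span follows the prose definition literally (absolute difference of the two maxima); whether the original equation treats the negative maximum as a signed quantity cannot be recovered from the text. The population standard deviation, the value returned for a single-tweet user, and the ordering of the vector are also illustrative choices.

```python
import math
import statistics


def polarity_span(pos_scores, neg_scores):
    """Absolute difference between the maximum positive and negative values."""
    return abs(max(pos_scores) - max(neg_scores))


def cosine(u, v):
    """Cosine similarity; defined as 0.0 when either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def consistency(vectors):
    """Mean cosine similarity between sentiment vectors of adjacent tweets."""
    pairs = list(zip(vectors, vectors[1:]))
    if not pairs:
        return 0.0  # single tweet: no adjacent pair (assumed convention)
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


def sentiment_vector(tweets):
    """tweets: list of (pos, neg, neu, complexity) tuples for one user."""
    pos, neg, neu, comp = (list(column) for column in zip(*tweets))
    basic = [statistics.fmean(column) for column in (pos, neg, neu, comp)]
    span = polarity_span(pos, neg)
    volatility = statistics.pstdev(comp)  # population std (assumption)
    cons = consistency(list(zip(pos, neg, neu)))
    return basic + [span, volatility, cons]
```

A user who repeats the same purely positive tweet gets zero volatility and a consistency of 1, matching the batch-posting pattern described for bots.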

Finally, the sentiment feature vector is constructed by concatenating the above metrics:

3.3. User Node Feature Processing

ESA-BotRGCN aims to leverage multi-modal user information to address the challenge of bot account camouflage, enabling accurate identification of malicious bot accounts and preventing the spread of misinformation. Specifically, ESA-BotRGCN jointly encodes five categories of features: sentiment-based features, user description features, tweet content features, numerical attributes, and categorical features. In this section, the encoding process of the latter four types of features will be elaborated in detail.

3.3.1. User Description Feature

User description features are extracted from the personal bio on the account homepage. To capture their semantic information, a pre-trained RoBERTa model is employed for encoding. First, the user description text is input into RoBERTa to generate word-level embedding vectors:

In this formula, represents the user description embedding, i denotes the i-th word in the description, L is the total number of words, and is the embedding dimension of RoBERTa. The user description representation vector is then derived as follows:

In this formula, and are learnable parameters, is the activation function, and D is the embedding dimension for Twitter users. In subsequent sections of this paper, Leaky-ReLU is adopted as .

3.3.2. Tweet Content Feature

After emoji preprocessing (Section 3.1), the semantic and emotional information of tweets is enhanced. The processed tweets are encoded using RoBERTa in a manner similar to the above. First, RoBERTa generates an embedding vector for each tweet. The mean of all tweet embeddings for a user is then computed to obtain the user-level tweet representation:

In this formula, N is the total number of tweets by the user.

3.3.3. Numerical Features

Numerical attributes refer to measurable metrics that quantify user behaviors and attributes, as listed in Table 2. These features provide numerical descriptions of user behavioral patterns and preferences. The numerical attributes are processed using Multi-Layer Perceptrons (MLPs) and graph neural networks (GNNs). Specifically, 10 numerical features are first directly retrieved from the Twitter API, with the first three being emoji-related. Z-score normalization is then applied to eliminate scale differences, followed by a fully connected layer to derive the user’s numerical feature representation .

Table 2.

User’s numerical features.

3.3.4. Categorical Features

Categorical features are qualitative indicators describing user attributes, as shown in Table 3. These features are typically represented in binary form (yes/no). Similar to numerical attributes, we avoid manual feature engineering and instead encode them using MLPs and GNNs. Specifically, 11 categorical features are directly retrieved from the Twitter API. One-hot encoding is applied to convert these features into sparse vectors, which are then concatenated and transformed via a fully connected layer with Leaky-ReLU activation, yielding the user categorical feature representation .

Table 3.

User’s categorical features.
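The preprocessing applied before the fully connected layers in Sections 3.3.3 and 3.3.4 can be illustrated with a small sketch. It assumes population statistics for the Z-score and a two-slot one-hot layout for each yes/no attribute; the learned layers themselves are omitted.

```python
import statistics


def z_score(values):
    """Standardize one numerical feature across users."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]  # constant feature carries no signal
    return [(value - mean) / std for value in values]


def one_hot_binary(flags):
    """Encode yes/no attributes as concatenated one-hot pairs.

    False -> [1.0, 0.0], True -> [0.0, 1.0] (layout is an assumption).
    """
    encoded = []
    for flag in flags:
        encoded.extend([0.0, 1.0] if flag else [1.0, 0.0])
    return encoded
```

In a real pipeline the mean and standard deviation would normally be estimated on the training split only and reused for the validation and test users.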

3.4. Features Fusion

The proposed model achieves multi-modal feature fusion through feature encoding and an attention mechanism. Given the set of user node features, which represent sentiment-based features, user descriptions, tweet content, numerical attributes, and categorical features, the fusion process is defined as follows:

3.4.1. Feature Alignment and Nonlinear Transformation

Heterogeneous features are projected into a unified dimensional space via fully connected layers to eliminate dimensional discrepancies and introduce nonlinear expressive capabilities:

In this formula, is the learnable weight matrix, is the bias term, D = 256 is the unified embedding dimension, and Leaky-ReLU mitigates gradient vanishing.

3.4.2. Single-Head Attention Weight Allocation

A single-head attention mechanism dynamically evaluates feature importance through query–key interactions:

In this formula, is a parameter matrix and is a learnable global query vector that captures cross-feature global dependencies.

3.4.3. Context-Aware Feature Fusion

A globally integrated feature vector is generated via weighted summation and fed into the subsequent RGCN module for social graph modeling:

Through this approach, the model dynamically adjusts the influence of each feature during training, thereby enhancing the discernment of feature importance. This fusion method enables the model to effectively integrate information from diverse sources and deliver superior performance in complex tasks.
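A minimal sketch of the attention-weighted fusion is given below. It assumes the score of each feature is the dot product between the global query vector and a linearly projected key, followed by a softmax over the features; the exact scoring function of the original equation, including any scaling or extra nonlinearity, is not recoverable from the text, and the tiny dimensions are for illustration only.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def attention_fuse(features, key_matrix, query):
    """Fuse aligned feature vectors with single-head attention weights.

    features: list of equally sized vectors (one per modality)
    key_matrix: projection applied to each feature to form its key
    query: global query vector (learned in the paper, fixed here)
    """
    keys = [matvec(key_matrix, feature) for feature in features]
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(features[0])
    fused = [
        sum(weight * feature[d] for weight, feature in zip(weights, features))
        for d in range(dim)
    ]
    return fused, weights
```

Because the weights sum to 1, the fused vector stays in the same unified space as the inputs and can be passed directly to the graph module.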

3.5. Heterogeneous Social Graph Modeling

To address the limitations of traditional graph neural networks in modeling multi-relational data, this study employs RGCN [46] for deep feature extraction from heterogeneous social graphs. We construct a heterogeneous information network in which the node set represents user accounts and the edge set includes two relationship types that characterize active and passive follow behaviors between users. Each user node is associated with a feature vector derived from the attention-based fusion in Section 3.4. RGCN integrates rich contextual information from nodes through efficient message passing and aggregation mechanisms. During this process, RGCN considers not only the intrinsic features of each node but also its neighborhood information to generate context-aware node representations. Stacking multiple layers progressively expands the receptive field, capturing global topological patterns. Furthermore, social networks are typically large-scale and sparse, with limited node connections, which poses challenges for information propagation. RGCN overcomes these limitations via a dynamic relation-aware edge weighting mechanism, which updates relationship weights and relation-specific embeddings to address potential information gaps in the graph. The graph convolution operations of RGCN are implemented as follows.

3.5.1. Node Initialization

The user feature vector undergoes linear transformation and activation function to generate the initial hidden representation:

In this formula, is a learnable weight matrix, is a bias term, and is the Leaky-ReLU activation function.

3.5.2. Relational Graph Convolution

At the l-th layer, the representation of node is updated as follows:

Here, denotes the set of neighbors of under relation r, and and are the weight matrices for self-connections and relationship r, respectively.
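The update rule can be sketched in plain Python as one relational graph convolution step. The sketch assumes the commonly used mean normalization over each relation's neighbor set and a Leaky-ReLU slope of 0.01; neither is stated in the text above, and the relation names used in the usage note are illustrative.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def leaky_relu(value, slope=0.01):
    """Leaky-ReLU with an assumed negative slope."""
    return value if value > 0 else slope * value


def rgcn_layer(hidden, neighbors_by_relation, self_weight, relation_weights):
    """Apply one relational graph convolution step.

    hidden: dict mapping node -> feature vector
    neighbors_by_relation: dict mapping relation -> {node: [neighbor, ...]}
    self_weight: weight matrix for the self-connection
    relation_weights: dict mapping relation -> weight matrix
    """
    updated = {}
    for node, features in hidden.items():
        message = matvec(self_weight, features)  # self-connection term
        for relation, adjacency in neighbors_by_relation.items():
            neighbors = adjacency.get(node, [])
            if not neighbors:
                continue
            weight = relation_weights[relation]
            for neighbor in neighbors:
                contribution = matvec(weight, hidden[neighbor])
                # Mean aggregation over this relation's neighbors (assumption).
                message = [
                    m + c / len(neighbors) for m, c in zip(message, contribution)
                ]
        updated[node] = [leaky_relu(m) for m in message]
    return updated
```

For two users who follow each other, with identity weight matrices, each node's new representation is simply its own vector plus its neighbor's vector.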

3.5.3. Multi-Layer Perceptron (MLP) Enhancement

Stacked RGCN layers are interleaved with MLPs to further refine node representations and expand the receptive field:

In this formula, and are learnable parameters. The final node representation is mapped to a high-dimensional discriminative space via the MLP.

3.6. Learning and Optimization

ESA-BotRGCN formulates social bot detection as a binary classification task, where the label of a user account denotes a genuine user (0) or a social bot (1). This section details the classification mechanism and loss function design.

3.6.1. Classifier Design

The model leverages context-aware node representations , extracted by the RGCN, to construct the classifier. First, the node representation is mapped to a 2D space via a fully connected layer:

In this formula, is the weight matrix and is the bias term. The class probability distribution is then computed using the Softmax function:

3.6.2. Loss Function

The total loss combines cross-entropy loss and a weight decay term to balance classification accuracy and model complexity:

The first part is the cross-entropy loss, which measures the deviation between the predicted probability and the true label to evaluate the accuracy of the prediction. The second part is the weight decay term. represents the summation over all labeled users, represents the summation over all learnable parameters in the ESA-BotRGCN framework, and is the decay coefficient used to control the model parameters and suppress overfitting.
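The classifier output and the combined objective can be illustrated as follows. The sketch assumes the cross-entropy is summed (not averaged) over labeled users and that the decay term is the decay coefficient times the sum of squared parameters; parameters are passed as a flat list of numbers for simplicity.

```python
import math


def softmax(logits):
    """Numerically stable softmax over the two class logits."""
    peak = max(logits)
    exps = [math.exp(logit - peak) for logit in logits]
    total = sum(exps)
    return [value / total for value in exps]


def total_loss(logits_per_user, labels, parameters, decay):
    """Cross-entropy over labeled users plus an L2 weight decay term.

    logits_per_user: list of [human_logit, bot_logit] pairs
    labels: list of 0 (genuine user) or 1 (social bot)
    parameters: flat list of learnable weights
    decay: weight decay coefficient
    """
    cross_entropy = 0.0
    for logits, label in zip(logits_per_user, labels):
        probabilities = softmax(logits)
        cross_entropy -= math.log(probabilities[label])
    l2_penalty = sum(weight * weight for weight in parameters)
    return cross_entropy + decay * l2_penalty
```

With equal logits the classifier is maximally uncertain, so a single user contributes ln 2 to the cross-entropy regardless of the label.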

4. Experiments

4.1. Experimental Setup

4.1.1. Dataset

This study uses the publicly available Twitter bot detection benchmark datasets TwiBot-20 [47] and Cresci-15 [48] to conduct experimental verification.

The TwiBot-20 dataset contains 229,580 user nodes, 33,488,192 tweet texts, 8,723,736 user attribute data entries, and 455,958 bidirectional follow relationships. Following the original data partitioning strategy, we employed a stratified sampling method to divide the dataset into training (80%), validation (10%), and test (10%) sets, ensuring balanced class distribution. Furthermore, based on user nodes and follow relationships, we constructed a heterogeneous graph structure where nodes represent user entities and edges represent unidirectional follow behaviors, ultimately generating a social network topology comprising 229,580 nodes and 227,979 directed edges.

The Cresci-15 dataset consists of five sub-datasets, with a total of 5301 users and 7,086,134 edges. All accounts are labeled, among which 3351 accounts are marked as bot accounts. Similar to the TwiBot-20 dataset, we divide it into a training set (80%), a validation set (10%), and a test set (10%).
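A stratified 80/10/10 split of the kind described above can be sketched with the standard library. The function name, the fixed seed, and the rounding of split sizes are illustrative choices, not details taken from the datasets' official tooling.

```python
import random
from collections import defaultdict


def stratified_split(labels, ratios=(0.8, 0.1, 0.1), seed=0):
    """Split sample indices into train/validation/test per class.

    labels: list of class labels, one per user
    ratios: train and validation fractions; the rest goes to test
    """
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed for reproducibility
    train, validation, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = int(len(indices) * ratios[0])
        n_validation = int(len(indices) * ratios[1])
        train += indices[:n_train]
        validation += indices[n_train:n_train + n_validation]
        test += indices[n_train + n_validation:]
    return train, validation, test
```

Splitting each class separately keeps the bot-to-human ratio roughly equal across the three subsets, which is the point of stratification.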

4.1.2. Evaluation Metrics

To comprehensively evaluate model performance, this study adopts the following three metrics.

- Accuracy: Measures the overall proportion of correct classifications.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

In this formula, TP (true positive) denotes the number of correctly identified bot accounts, TN (true negative) represents the number of correctly identified genuine users, and FP (false positive) and FN (false negative) indicate the numbers of genuine users misclassified as bots and bots misclassified as genuine users, respectively.

- F1-score [49]: The harmonic mean of precision and recall, suitable for scenarios requiring balanced class distributions.

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

In this formula, the meanings of precision and recall are as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

- MCC (Matthews Correlation Coefficient) [50]: A statistical measure that synthesizes all four elements of the confusion matrix, robust to class imbalance.

$$\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$$

MCC’s range is [−1, 1], where a value closer to 1 indicates superior model performance.

The aforementioned metrics evaluate the model’s classification capabilities from different dimensions. Accuracy reflects overall correctness, the F1-score balances class sensitivity, and MCC mitigates the impact of class distribution skew. Their combination can comprehensively validate the robustness of the detection framework.
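The three metrics can be computed directly from the confusion-matrix counts, as in the short sketch below. The zero-denominator conventions (returning 0.0) are an assumption added for robustness.

```python
import math


def classification_metrics(tp, tn, fp, fn):
    """Return accuracy, F1-score, and MCC from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denominator if denominator else 0.0
    return {"accuracy": accuracy, "f1": f1, "mcc": mcc}
```

For example, with the made-up counts TP = 40, TN = 45, FP = 5, and FN = 10, accuracy is 0.85 and the F1-score is 80/95, or about 0.842.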

4.1.3. Baseline Methods

We selected 10 mainstream social bot detection methods as baseline models for performance comparison with ESA-BotRGCN, covering diverse technical approaches including traditional machine learning, deep learning, and graph neural networks. The specific methods are as follows.

- Lee et al. [51]: A Random Forest-based model that integrates multi-dimensional features such as user-following networks and tweet content for detection.

- Yang et al. [52]: Employs lightweight metadata combined with a Random Forest algorithm to achieve efficient tweet stream analysis.

- Kudugunta et al. [53]: Leverages a contextual Long Short-Term Memory (LSTM) network to jointly model tweet content and metadata for tweet-level bot detection.

- Wei et al. [54]: Utilizes a three-layer Bidirectional Long Short-Term Memory (BiLSTM) with word embeddings to identify social bots on Twitter.

- Miller et al. [55]: Treats bot detection as an anomaly detection problem rather than classification, introducing 95 lexical features extracted from tweet text.

- Cresci et al. [26]: Innovatively encodes user behaviors into DNA-like sequences and distinguishes bots from humans via Longest Common Subsequence (LCS) similarity.

- Botometer [7]: A widely used public Twitter detection tool that employs over 1000 features.

- Alhosseini et al. [56]: Detects social bots using a Graph Convolutional Neural Network (GCNN) by leveraging node features and aggregated neighborhood node features.

- SATAR [57]: A self-supervised representation learning framework capable of generalizing by jointly utilizing user semantics, attributes, and neighborhood information.

- BotRGCN [36]: Addresses bot detection by constructing a heterogeneous graph from Twitter user-follow relationships and applying an RGCN.

4.2. Comparative Experiments

This section validates the effectiveness of each module in the ESA-BotRGCN model through four sets of ablation experiments, including the impact of emoji preprocessing, sentiment feature integration, the attention mechanism, and the selection of graph neural network architecture and its layer count on detection performance. Additionally, we have conducted statistical significance analysis under different feature settings.

4.2.1. Emoji Preprocessing Ablation Study

To validate the importance of emojis in tweet semantics and sentiment analysis, this section compares the impact of four tweet input formats on detection performance under the framework of using seven-dimensional advanced sentiment features and a two-layer RGCN-based graph neural network architecture.

- Original tweets: Raw tweets without any emoji processing.

- Emoji-mapped tweets: Tweets where emojis are replaced with corresponding textual descriptions from the Emoji Library.

- GPT-4 optimized tweets: Emoji-mapped tweets further refined by GPT-4 for textual coherence.

- Qwen-2.5 optimized tweets: Emoji-mapped tweets refined for textual coherence by Alibaba’s Qwen-2.5, using the same prompt as GPT-4.

As shown in Table 4, the original tweets yielded the lowest detection performance due to the neglect of emoji semantics and emotional cues. Mapping emojis to textual descriptions via the Emoji Library enabled the model to capture semantic and emotional associations, significantly improving performance. Tweets optimized by large language models (LLMs) further enhance text coherence, and GPT-4, in particular, achieves even better results. This enables the model to more accurately extract semantic and sentiment features, thereby attaining optimal performance. Experimental results demonstrate that semantic reconstruction and coherence optimization of emojis play a critical role in improving detection accuracy.

Table 4.

Emoji preprocessing ablation study results.

Notably, while LLMs improve text coherence and enhance detection accuracy, their computational cost should be taken into account: the inference time for 10,000 tweets on an A100 GPU is 2.1 h (Qwen-2.5) and 2.6 h (GPT-4). This trade-off between performance and cost makes LLMs suitable for scenarios where detection accuracy is prioritized over real-time deployment.

4.2.2. Sentiment Feature Ablation Study

To validate the contribution of sentiment features, this section conducts ablation experiments under three configurations, each employing preprocessed tweets and a two-layer RGCN-based graph neural network architecture.

- No sentiment features: Only user descriptions, tweet content, numerical attributes, and categorical features are used.

- Basic sentiment features: Incorporates positive, negative, and neutral sentiment polarities, as well as sentiment complexity.

- Advanced sentiment features: On the basis of basic sentiment features, three additional features are added—polarity span, volatility, and consistency.

As shown in Table 5, the model performance significantly declined when no sentiment features were used, indicating that sentiment features are critical clues for distinguishing bots from genuine users. After introducing four basic sentiment features, accuracy was partially improved, and further incorporating polarity span, volatility, and consistency into the seven-dimensional sentiment features significantly enhanced the model’s ability to capture emotional expression patterns, ultimately achieving an accuracy of 87.46%. The experiments demonstrate that these seven sentiment features can effectively reflect the differences in emotional expression between bot accounts and genuine users, thereby significantly enhancing the model’s discriminative capability.

Table 5.

Sentiment feature ablation study results.

4.2.3. Selection of Graph Neural Network Architecture and Its Layers

To validate the advantages of RGCN in modeling heterogeneous social graphs, this study compares the detection performance of different graph neural network architectures, each employing preprocessed tweets and seven-dimensional advanced sentiment features.

- Graph Attention Network (GAT): Dynamically assigns edge weights through an attention mechanism.

- Graph Convolutional Network (GCN): Models homogeneous social relationships without distinguishing node or edge types.

- Fully Connected Neural Network: Applies nonlinear transformations via Multi-Layer Perceptrons.

As shown in Table 6, although GAT introduces an attention mechanism, it is not sufficiently stable when modeling complex relationships. The GCN model ignores relational heterogeneity and cannot differentiate between active and passive following behaviors, resulting in suboptimal performance. The fully connected neural network achieves relatively high detection accuracy through feature fusion, but it is essentially a node-level feature processing model and cannot capture the implicit topological structure of social networks. As social interaction density increases, connections between users become ever closer, and dynamic changes in network topology place higher demands on the model. The multi-layer message-passing mechanism of RGCN gradually aggregates multi-order neighbor information and maintains stable extraction of topological features in dense networks, elevating social bot detection from “individual behavior recognition” to “group structure understanding”. In the experiment, RGCN achieved the best performance, demonstrating its importance to the framework.

Table 6.

GNN architecture selection ablation study results.

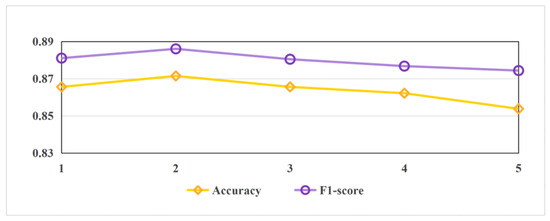

To further investigate the impact of RGCN layer depth on model performance, this study ran comparative experiments on RGCN architectures with varying numbers of layers. As shown in Figure 5, the 1-layer RGCN failed to adequately aggregate global topological information due to its limited receptive field, while models with three or more layers suffered from performance degradation caused by over-smoothing. The 2-layer RGCN achieved the best balance between parameter efficiency and feature representation capability, supporting the current architectural design.

Figure 5.

Performance comparison of RGCN with varying layer numbers.

4.2.4. Ablation Experiment on Attention Mechanism

To verify the effectiveness of the attention gating mechanism in multi-modal feature fusion, this section designs the following two sets of comparative experiments while keeping other variables unchanged (that is, all use preprocessed tweets, seven-dimensional advanced emotional features, and a two-layer RGCN architecture).

- Without-attention mechanism: Directly concatenate five types of feature vectors (sentiment features, user descriptions, tweet content, numerical features, and categorical features) to replace attention-weighted fusion.

- Complete-attention mechanism: Use the attention gating network proposed in Section 3.4 of this paper for dynamic feature fusion.

The experimental results are shown in Table 7. After removing the attention mechanism, the performance of ESA-BotRGCN declines across all metrics, indicating that simple feature concatenation cannot effectively distinguish feature importance. By allocating weights dynamically, the attention mechanism lets ESA-BotRGCN adjust the influence of each feature during training, which allows the model to integrate information from different sources more effectively and perform better in complex tasks.

Table 7.

Attention mechanism ablation experiment results.

4.2.5. Statistical Significance Analysis of Different Feature Settings

To further validate the independent and joint contributions of emotional features and emoji preprocessing to performance enhancement, this section compares the performance of three model variants against the complete ESA-BotRGCN model and applies the Welch’s t-test to assess whether the observed differences are statistically significant. Specifically, the following three simplified model settings were used for comparison:

- Without emotion and emojis: sentiment features and emoji preprocessing were removed, retaining only the other feature modalities.

- Use only sentiment features: Retain the seven-dimensional sentiment features and remove the text enhancement processing for emojis.

- Use only emojis: Perform emoji-to-text mapping and GPT-4 semantic optimization, but do not introduce sentiment features.

As shown in Table 8, the average performance of all three simplified variants differs significantly from that of the complete model. The “without emotion and emojis” version exhibited the largest performance gap (t = −16.82, p < 0.00001), indicating an extremely significant difference and highlighting the importance of integrating both sentiment and emoji-related features. In addition, introducing either sentiment features alone (t = −9.25, p = 0.000099) or emoji preprocessing alone (t = −8.09, p = 0.000045) also resulted in statistically significant improvements, thereby confirming that both information sources contribute independently to model performance.

Table 8.

Significance analysis of different feature settings.

It is worth noting that the t-value in a Welch’s t-test measures the difference between the means of two groups, standardized by the standard error of that difference, without assuming equal variances. A negative t-value indicates that the complete model outperforms the comparison version. The corresponding p-value represents the probability of observing a difference at least as extreme as the measured one under the null hypothesis (i.e., that the two versions have the same mean performance). When the p-value is substantially lower than the commonly accepted threshold (e.g., 0.05), the observed effect is unlikely to be explained by random variation alone, which provides strong statistical evidence to reject the null hypothesis and support the alternative: that the proposed feature processing yields a positive and significant impact on model performance.
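The computation behind the t-value can be sketched as follows. This is a minimal illustration of the standard Welch statistic and the Welch–Satterthwaite degrees of freedom, not the authors' evaluation script; the function name `welch_t` and the accuracy values are hypothetical and do not come from Table 8. The variant is passed first so that a negative t-value means the complete model scores higher, matching the sign convention described above.

```python
import math
from statistics import mean, variance


def welch_t(variant_scores, full_scores):
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    t = (mean(variant) - mean(full)) / sqrt(s_v^2 / n_v + s_f^2 / n_f)
    """
    n_v, n_f = len(variant_scores), len(full_scores)
    # Per-group squared standard errors, using sample variances (n - 1).
    se2_v = variance(variant_scores) / n_v
    se2_f = variance(full_scores) / n_f
    t = (mean(variant_scores) - mean(full_scores)) / math.sqrt(se2_v + se2_f)
    df = (se2_v + se2_f) ** 2 / (se2_v ** 2 / (n_v - 1) + se2_f ** 2 / (n_f - 1))
    return t, df


# Hypothetical accuracies from repeated runs (illustrative only).
variant = [0.84, 0.85, 0.86]
full = [0.87, 0.88, 0.89]
t_value, dof = welch_t(variant, full)
print(f"t = {t_value:.3f}, df = {dof:.2f}")
```

The p-value is then obtained from the t-distribution with `dof` degrees of freedom; a library routine such as SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the whole test in one call.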

4.3. Performance Comparison

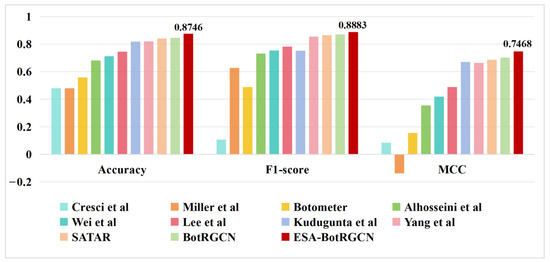

Table 9 shows the social bot detection performance of ESA-BotRGCN compared with the baseline models from Section 4.1.3 on the TwiBot-20 dataset. Table 10 shows the social bot detection performance of ESA-BotRGCN and seven mainstream baseline models on the Cresci-15 dataset. The results in Figure 6 demonstrate that ESA-BotRGCN significantly outperforms all other methods on TwiBot-20, confirming its general effectiveness in Twitter social bot detection tasks. Additionally, ESA-BotRGCN achieves superior performance compared to baseline methods that also leverage user-follow relationships, such as Alhosseini et al. and SATAR, with MCC improvements of 39.25% and 6.05%, respectively. This indicates that ESA-BotRGCN better exploits follow relationships by situating users within their social contexts.
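Because the comparison above is expressed in terms of MCC, a short sketch of how MCC follows from confusion-matrix counts may be useful. It uses the standard definition of the Matthews Correlation Coefficient; the function name `mcc`, the convention of returning 0 when the denominator vanishes, and the counts in the usage lines are illustrative assumptions, not values from Table 9 or Table 10.

```python
import math


def mcc(tp, tn, fp, fn):
    """Matthews Correlation Coefficient from confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        # Assumed convention: undefined cases are reported as 0.
        return 0.0
    return (tp * tn - fp * fn) / denom


# Illustrative counts only.
print(mcc(tp=50, tn=50, fp=0, fn=0))     # perfect classifier
print(mcc(tp=25, tn=25, fp=25, fn=25))   # no better than chance
```

Unlike accuracy, MCC uses all four cells of the confusion matrix, so it remains informative when the bot and human classes are imbalanced.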

Table 9.

Bot detection performance on the TwiBot-20 benchmark.

Table 10.

Bot detection performance on the Cresci-15 benchmark.

Figure 6.

Comparison of classification performance metrics for various models on TwiBot-20 [27,51,52,53,54,55,56].

5. Conclusions

To address the issue that existing social bot detection methods commonly overlook the semantic and emotional value of emojis, we propose ESA-BotRGCN, an emoji-driven end-to-end detection framework. First, the framework introduces an emoji–text enhancement strategy that combines GPT-4-based text coherence optimization with RoBERTa embeddings, addressing the loss of emoji semantics and emotional value in traditional methods. Second, we design seven sentiment quantification metrics to systematically capture statistical differences in emotional expression patterns between bot and human accounts. Third, an attention gating fusion mechanism is constructed to dynamically integrate diverse user features. Finally, an RGCN is used to model heterogeneous social topologies. Together, these four components substantially improve detection robustness. Experiments on the TwiBot-20 benchmark dataset demonstrate that ESA-BotRGCN outperforms ten mainstream baseline models, highlighting the effectiveness of our emoji-driven multi-modal feature fusion and heterogeneous relationship modeling. Future work will focus on expanding multi-modal features by incorporating user-generated images, audio, and video content to enhance the model’s ability to identify camouflaged behaviors.

Author Contributions

Conceptualization, K.Z. and X.W.; methodology, K.Z. and X.W.; software, Z.L. and K.Z.; validation, Z.L.; formal analysis, K.Z. and Z.L.; investigation, Z.L.; resources, X.W.; data curation, Z.L.; writing—original draft preparation, K.Z.; writing—review and editing, K.Z., X.W., and Z.L.; visualization, K.Z.; supervision, X.W.; project administration, K.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ESA-BotRGCN | Emoji-Driven Sentiment Analysis for Social Bot Detection with Relational Graph Convolutional Network |
| RGCN | Relational Graph Convolutional Network |
| MCC | Matthews Correlation Coefficient |
| CNNs | Convolutional Neural Networks |
| RNNs | Recurrent Neural Networks |
| GNN | graph neural network |
| URL | Uniform Resource Locator |
| LR | logistic regression |
| SVM | support vector machine |
| GB | gradient boosting |
| LCS | Longest Common Subsequence |
| LSTM | Long Short-Term Memory |
| BiGRU | bidirectional gated recurrent unit |
| UNK | unknown (placeholder for rare emojis) |
| MLPs | Multi-Layer Perceptrons |
| API | Application Programming Interface |
| TP | true positive |
| TN | true negative |
| FP | false positive |
| FN | false negative |
| BiLSTM | Bidirectional Long Short-Term Memory |
| GCNN | Graph Convolutional Neural Network |
| GAT | Graph Attention Network |
| GCN | Graph Convolutional Network |
| Adam | Adaptive Moment Estimation |
| Leaky-ReLU | Leaky Rectified Linear Unit |

References

- Krithiga, R.; Ilavarasan, E. A Comprehensive Survey of Spam Profile Detection Methods in Online Social Networks. J. Phys. Conf. Ser. 2019, 1362, 12111.

- Meta Investor Platforms. Meta Reports Fourth Quarter and Full Year 2023 Results; Initiates Quarterly Dividend: New York, NY, USA, 2024.

- Varol, O.; Ferrara, E.; Davis, C.A.; Menczer, F.; Flammini, A. Online human-bot interactions: Detection, estimation, and characterization. Proc. Int. AAAI Conf. Web Soc. Media 2017, 11, 280–289.

- Shafahi, M.; Kempers, L.; Afsarmanesh, H. Phishing through social bots on Twitter. In Proceedings of the 4th IEEE International Conference on Big Data, Big Data 2016, Washington, DC, USA, 5–8 December 2016; Institute of Electrical and Electronics Engineers Inc.: Washington, DC, USA, 2016; pp. 3703–3712.

- Hajli, N.; Saeed, U.; Tajvidi, M.; Shirazi, F. Social Bots and the Spread of Disinformation in Social Media: The Challenges of Artificial Intelligence. Br. J. Manag. 2022, 33, 1238–1253.

- Chinnaiah, V.; Kiliroor, C.C. Heterogeneous feature analysis on twitter data set for identification of spam messages. Int. Arab. J. Inf. Technol. 2022, 19, 38–44.

- Yang, K.-C.; Ferrara, E.; Menczer, F. Botometer 101: Social bot practicum for computational social scientists. J. Comput. Soc. Sci. 2022, 5, 1511–1528.

- Weedon, J.; Nuland, W.; Stamos, A. Information Operations and Facebook; Facebook, Inc.: Menlo Park, CA, USA, 2017.

- Ferrara, E. Social bot detection in the age of ChatGPT: Challenges and opportunities. First Monday 2023, 28, 13185.

- Yang, Y.; Yang, R.; Li, Y.; Cui, K.; Yang, Z.; Wang, Y.; Xu, J.; Xie, H. RoSGAS: Adaptive Social Bot Detection with Reinforced Self-supervised GNN Architecture Search. ACM Trans. Web 2023, 17, 1–31.

- Cappallo, S.; Svetlichnaya, S.; Garrigues, P.; Mensink, T.; Snoek, C.G.M. New Modality: Emoji Challenges in Prediction, Anticipation, and Retrieval. IEEE Trans. Multimed. 2019, 21, 402–415.

- Zhao, J.; Dong, L.; Wu, J.; Xu, K. MoodLens: An Emoticon-Based Sentiment Analysis System for Chinese Tweets; ACM: New York, NY, USA, 2012; pp. 1528–1531.

- Liu, K.-L.; Li, W.-J.; Guo, M. Emoticon Smoothed Language Models for Twitter Sentiment Analysis. Proc. AAAI Conf. Artif. Intell. 2021, 26, 1678–1684.

- Alexander, H.; Bal, D.; Flavius, F.; Bal, M.; Franciska De, J.; Kaymak, U. Exploiting emoticons in polarity classification of text. J. Web Eng. 2015, 14, 22.

- Boia, M.; Faltings, B.; Musat, C.-C.; Pu, P. A :) Is Worth a Thousand Words: How People Attach Sentiment to Emoticons and Words in Tweets. In Proceedings of the 2013 International Conference on Social Computing, Alexandria, VA, USA, 8–14 September 2013; pp. 345–350.

- Wang, Q.; Wen, Z.; Ding, K.; Liang, B.; Xu, R. Cross-Domain Sentiment Analysis via Disentangled Representation and Prototypical Learning. IEEE Trans. Affect. Comput. 2025, 16, 264–276.

- Share of Posts on Twitter Containing Emojis Worldwide in July 2013 and March 2023. Available online: https://www.statista.com/statistics/1399380/tweets-containing-emojis/ (accessed on 2 July 2023).

- In 2025, Global Emoji Count Could Grow to 3790. Available online: https://www.statista.com/chart/17275/number-of-emojis-from-1995-bis-2019/ (accessed on 17 July 2024).

- Sivanesh, S.; Kavin, K.; Hassan, A.A. Frustrate Twitter from automation: How far a user can be trusted? In Proceedings of the 2013 International Conference on Human Computer Interactions (ICHCI), Chennai, India, 23–24 August 2013; pp. 1–5.

- Kantepe, M.; Ganiz, M.C. Preprocessing framework for Twitter bot detection. In Proceedings of the 2017 International Conference on Computer Science and Engineering (UBMK), Antalya, Turkey, 5–8 October 2017; pp. 630–634.

- Sayyadiharikandeh, M.; Varol, O.; Kai-Cheng, Y.; Flammini, A.; Menczer, F. Detection of Novel Social Bots by Ensembles of Specialized Classifiers. arXiv 2020.

- Grimme, C.; Preuss, M.; Adam, L.; Trautmann, H. Social Bots: Human-Like by Means of Human Control? Big Data 2017, 5, 279–293.

- Grimme, C.; Assenmacher, D.; Adam, L. Changing Perspectives: Is It Sufficient to Detect Social Bots? In Proceedings of the Social Computing and Social Media, Las Vegas, NV, USA, 15–20 July 2018; Meiselwitz, G., Ed.; Springer International Publishing AG: Cham, Switzerland, 2018; Volume 10913, pp. 445–461.

- Xin, R.; Zhenyu, W.; Haining, W.; Jajodia, S. Profiling Online Social Behaviors for Compromised Account Detection. IEEE Trans. Inf. Forensics Secur. 2016, 11, 176–187.

- Amato, F.; Castiglione, A.; De Santo, A.; Moscato, V.; Picariello, A.; Persia, F.; Sperlí, G. Recognizing human behaviours in online social networks. Comput. Secur. 2018, 74, 355–370.

- Cresci, S.; Di Pietro, R.; Petrocchi, M.; Spognardi, A.; Tesconi, M. DNA-Inspired Online Behavioral Modeling and Its Application to Spambot Detection. IEEE Intell. Syst. 2016, 31, 58–64.

- Cresci, S.; Pietro, R.D.; Petrocchi, M.; Spognardi, A.; Tesconi, M. Social Fingerprinting: Detection of Spambot Groups Through DNA-Inspired Behavioral Modeling. IEEE Trans. Dependable Secur. Comput. 2018, 15, 561–576.

- Peng, H.; Zhang, J.; Huang, X.; Hao, Z.; Li, A.; Yu, Z.; Yu, P.S. Unsupervised Social Bot Detection via Structural Information Theory. ACM Trans. Inf. Syst. 2024, 42, 1–42.

- Ilias, L.; Roussaki, I. Detecting malicious activity in Twitter using deep learning techniques. Appl. Soft Comput. 2021, 107, 107360.

- Wu, Y.; Fang, Y.; Shang, S.; Wei, L.; Jin, J.; Wang, H. Detecting Social Spammers in Sina Weibo Using Extreme Deep Factorization Machine; Zhang, Y., Wang, H., Zhou, R., Beek, W., Huang, Z., Eds.; Springer International Publishing AG: Cham, Switzerland, 2020; Volume 12342, pp. 170–182.

- Wu, Y.; Fang, Y.; Shang, S.; Jin, J.; Wei, L.; Wang, H. A novel framework for detecting social bots with deep neural networks and active learning. Knowl.-Based Syst. 2021, 211, 106525.

- Arin, E.; Kutlu, M. Deep Learning Based Social Bot Detection on Twitter. IEEE Trans. Inf. Forensics Secur. 2023, 18, 1763–1772.

- Fazil, M.; Sah, A.K.; Abulaish, M. DeepSBD: A Deep Neural Network Model With Attention Mechanism for SocialBot Detection. IEEE Trans. Inf. Forensics Secur. 2021, 16, 4211–4223.

- Najari, S.; Salehi, M.; Farahbakhsh, R. GANBOT: A GAN-based framework for social bot detection. Soc. Netw. Anal. Min. 2022, 12, 1–11.

- Feng, S.; Wan, H.; Wang, N.; Tan, Z.; Luo, M.; Tsvetkov, Y. What Does the Bot Say? Opportunities and Risks of Large Language Models in Social Media Bot Detection. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024.

- Feng, S.; Wan, H.; Wang, N.; Luo, M. BotRGCN: Twitter bot detection with relational graph convolutional networks. In Proceedings of the 2021 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Eindhoven, The Netherlands, 8–11 November 2021; Cuzzocrea, A., Coscia, M., Shu, K., Eds.; ACM: New York, NY, USA, 2021; pp. 236–239.

- Feng, S.; Tan, Z.; Li, R.; Luo, M. Heterogeneity-Aware Twitter Bot Detection with Relational Graph Transformers. Proc. AAAI Conf. Artif. Intell. 2022, 36, 3977–3985.