Abstract

Underwater images often exhibit low contrast, blurred and small targets, object clustering, and considerable variations in object morphology. Under these conditions, traditional detection methods are prone to missed detections and false positives. Furthermore, because underwater robots have constrained memory and limited computing power, underwater object detection demands lightweight models. We therefore propose BSE-YOLO, an enhanced lightweight model based on YOLOv10n. First, we replace the original neck with an improved Bidirectional Feature Pyramid Network (BiFPN) to reduce parameters. Second, we propose a Multi-Scale Attention Synergy Module (MASM) to enhance the model’s perception of difficult features and focus it on important regions. Finally, we integrate Efficient Multi-Scale Attention (EMA) into the backbone and neck to improve feature extraction and fusion. Experimental results show that BSE-YOLO reaches 83.7% mAP@0.5 on URPC2020 and 83.9% mAP@0.5 on DUO with only 2.47 M parameters. Compared with the baseline YOLOv10n, BSE-YOLO improves mAP@0.5 by 2.2% and 3.0%, respectively, while reducing the number of parameters by approximately 0.2 M. BSE-YOLO thus achieves a good balance between accuracy and model size, providing an effective solution for underwater object detection.

1. Introduction

The ocean covers 71% of Earth’s surface, playing a crucial role in global climate, species survival, and ecological balance, and serving as an essential resource for human civilization. Underwater object detection represents a challenging yet promising field within computer vision, offering significant applications in marine environmental monitoring, ecological preservation, and resource exploration. Recent advancements in autonomous underwater vehicles (AUVs) and unmanned underwater vehicles (UUVs) have substantially enhanced the availability and quality of underwater images [1,2]. However, as shown in Figure 1, due to the effects of light attenuation and scattering, underwater images are plagued by issues such as low contrast, noise, and color distortion [3,4].

Figure 1.

The underwater images have characteristics such as low contrast, noise and color distortion.

In this study, we address two primary challenges in underwater object detection: (1) significant variations in object shape and size, together with blurred, tiny, and clustered objects; and (2) low contrast, which makes it difficult to distinguish objects from their backgrounds. These factors severely degrade detection performance, causing false detections and missed targets. When confronted with these issues, traditional object detection methods often fail to achieve the accuracy and speed required by modern applications. Moreover, although this study mainly targets low contrast and the difficulty of detecting small and blurred objects, other degradation factors such as noise and color distortion are not fully resolved and remain important challenges in underwater object detection.

In recent years, deep learning has developed rapidly and been widely applied in fields such as defect detection, underwater image enhancement, and object recognition [5,6,7,8]. Deep-learning-based object detection algorithms fall into two main categories: one-stage methods and two-stage methods. One-stage algorithms, such as the You Only Look Once (YOLO) series [9,10,11,12,13] and SSD [14], directly classify and locate objects in the input image and therefore offer faster detection. Two-stage algorithms, such as R-CNN [15], Fast R-CNN [16], and Faster R-CNN [17], first generate candidate regions and then perform classification and localization refinement on these regions. Compared with one-stage methods, they typically achieve higher detection accuracy at the cost of processing speed.

Most current research concentrates on improving detection accuracy, but often at the cost of more parameters and higher computational complexity. Underwater equipment, however, usually has limited storage capacity and processing power. Therefore, when improving detection accuracy, the parameter count and computational complexity must also be considered to achieve an effective balance between accuracy and model size.

This paper introduces BSE-YOLO, a lightweight underwater object detection model that integrates an improved Bidirectional Feature Pyramid Network (BiFPN) [18]; a modified C2f structure named Synergistic C2f (S_C2f), which applies the proposed Multi-Scale Attention Synergy Module (MASM); and the Efficient Multi-Scale Attention (EMA) module [19]. BSE-YOLO mainly aims to improve the perception of difficult samples and of small, blurred, and clustered objects, thereby enhancing detection accuracy and multi-scale robustness. In addition, it aims to keep the parameter count and computational complexity unchanged or lower, balancing accuracy against model size. The main contributions of this paper are summarized as follows:

(1) We replace the original neck of YOLOv10n with an improved BiFPN, reducing parameters and computational cost while improving the model’s feature fusion capability.

(2) We propose MASM and apply it to the bottleneck of C2f to build a modified C2f structure named Synergistic C2f (S_C2f), which dynamically adjusts feature maps, improves the focus on critical regions, and enhances spatial feature extraction and fusion through depthwise separable convolutions.

(3) We integrate EMA into the backbone and neck to strengthen the focus on multi-scale features and further improve detection accuracy.

2. Related Work

2.1. Underwater Object Detection

Due to factors such as low contrast, noise, target aggregation, blurriness, small size, and complex morphological variations of underwater objects, traditional object detection algorithms typically degrade when applied to underwater images. Meanwhile, with its rapid advancement, deep learning has gradually replaced traditional algorithms and offers effective solutions for underwater object detection. Chen et al. [20] proposed a Sample-WeIghted hyPEr Network (SWIPENET), which generates multiple high-resolution and semantically rich feature maps to significantly enhance small-target detection, thereby effectively addressing blurriness and noise in underwater images. Liu et al. [21] proposed an underwater target detection algorithm based on Faster R-CNN that addresses common issues such as low contrast and target blurriness, reducing missed detections and false alarms and improving detection accuracy and efficiency. Pan et al. [22] proposed an improved multi-scale residual neural network (M-ResNet), which uses multi-scale operations to improve the accuracy of detecting underwater objects of different sizes. Gao et al. [23] developed PE-Transformer, which includes a detection module with flexible, adaptive point representation and a new weighted loss function. This design captures semantic details of small underwater targets in complex environments, improving detection accuracy while promoting network convergence.

In addition, the YOLO series maintains relatively fast detection speed while achieving high detection accuracy. Cai et al. [24] proposed an underwater detection method that simultaneously trains two YOLOv5-based detectors within a weakly supervised learning framework; the detectors teach each other by selecting the clearer samples observed during training. Zhang et al. [25] proposed an enhanced lightweight YOLOv4-Lite model that integrates MobileNetv2, depthwise separable convolutions, and an improved Attention Feature Fusion Module (AFFM). This approach significantly reduces computational overhead during training while preserving high detection accuracy, achieving a good balance between precision and inference speed. Liu et al. [26] developed TC-YOLO on the basis of YOLOv5s by incorporating transformer self-attention and coordinate attention into both the backbone and the neck. They also proposed an optimal transport label assignment strategy to enhance feature extraction and improve the utilization efficiency of training data. Feng et al. [27] designed CEH-YOLO by integrating high-order deformable attention (HDA), enhanced spatial pyramid fast pooling (ESPPF), and customized composite detection (CD) modules, achieving strong performance in underwater small object detection. These studies primarily concentrate on improving detection accuracy in complex scenarios, with less emphasis on making the model lightweight; there therefore remains room to further optimize the trade-off between detection efficiency and accuracy.

2.2. Attention Mechanism

The human visual system naturally identifies salient regions in complex scenes. Emulating this capability, the attention mechanism dynamically adjusts weights based on input image features [28], enabling the model to prioritize critical information under limited computational resources. In underwater detection tasks, objects are typically small and dense, and several researchers have found that adding attention mechanisms to existing networks can significantly improve the detection accuracy of underwater objects. Wang et al. [29] integrated Channel and Spatial Fusion Attention (CSFA) into YOLOv5, which maintains focus on spatial information while enhancing the extraction of key features. Yan et al. [30] incorporated CBAM into YOLOv7; by weighting and enhancing features in both the spatial and channel dimensions, this approach captures local correlations in feature information, refines the model’s focus on feature details, and consequently improves detection accuracy.

According to the dimensions they focus on and the features they emphasize, attention mechanisms are typically categorized into three types: spatial attention, channel attention, and hybrid attention. Spatial attention enables the model to focus on the spatial positions in the image that are most relevant to the current task. For example, Spatial Transformer Networks (STNs) [31] spatially transform deformed data and automatically capture salient features from critical regions. Channel attention assigns a different weight to each channel to reflect its importance, enabling the model to select the features most relevant to the current task and thereby use features more efficiently. Squeeze-and-Excitation Networks (SENet) [32] exemplify channel attention. The Convolutional Block Attention Module (CBAM) [33], mentioned above, is a classical hybrid attention mechanism that applies channel and spatial attention sequentially and has been widely used in convolutional neural network architectures. Beyond these traditional mechanisms, pixel attention operates at a finer granularity by assigning a weight to each pixel, so that the model concentrates on key information in the image while disregarding irrelevant background or noise. For example, the pixel-wise contextual attention network (PiCANet) proposed by Liu et al. [34] generates an attention map for each pixel, where each attention weight reflects the contextual relevance at the corresponding spatial position. Several researchers are currently exploring the synergy between different types of attention to leverage their respective advantages and improve performance in visual tasks. Si et al. [35] proposed a spatial–channel synergistic attention module (SCSA) by investigating the synergy between spatial and channel attention.

Inspired by [35], we propose a Multi-Scale Attention Synergy Module (MASM), which integrates channel, spatial, and pixel attention. MASM is utilized to develop the S_C2f structure. Furthermore, we propose BSE-YOLO, a model that combines BiFPN, S_C2f, and EMA. Compared with YOLOv10n, our BSE-YOLO achieves higher detection accuracy for underwater objects while reducing parameters.

3. Methods

3.1. The Architecture of YOLOv10n

You Only Look Once (YOLO) is a representative one-stage detector that balances detection speed and accuracy. It reformulates object detection as a regression task and directly predicts object categories and locations. YOLOv10 [36] is a series of real-time, end-to-end YOLO detectors comprising six variants: YOLOv10n, YOLOv10s, YOLOv10m, YOLOv10b, YOLOv10l, and YOLOv10x. We select YOLOv10n, which has the fewest parameters, as the baseline and further optimize it to be more lightweight.

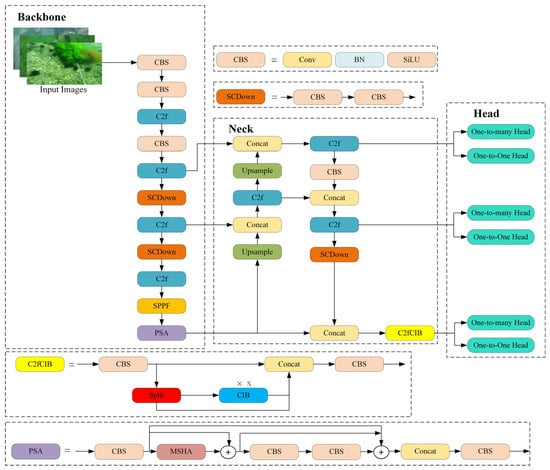

As shown in Figure 2, YOLOv10n consists of three parts: backbone, neck, and head. The backbone extracts multi-scale features from the input image and mainly consists of CBS modules, SCDown modules, C2f modules, a fast spatial pyramid pooling (SPPF) module, and a partial self-attention (PSA) module. Each CBS block is composed of a convolution (Conv) layer, a batch normalization (BN) layer, and a SiLU activation function. The SCDown module is made up of two convolution layers: the first adjusts the number of channels, and the second performs downsampling. The C2f module consists of two convolution layers and several bottleneck blocks. The SPPF module applies 5 × 5 max pooling three times in series, which is equivalent to pooling the input feature map with kernels of 5 × 5, 9 × 9, and 13 × 13; it then concatenates the input with the pooled feature maps to capture multi-scale features. The PSA module consists of four convolution layers and multi-head self-attention (MHSA). The neck fuses and enhances the multi-scale features extracted by the backbone, using the Feature Pyramid Network (FPN) and Path Aggregation Network (PAN) to integrate semantic and spatial information from multiple feature maps and thereby improve detection performance for targets of various sizes. The fused feature maps are then passed to the detection head, whose primary function is to predict object locations and categories.
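Why three serial 5 × 5 poolings give 5 × 5, 9 × 9, and 13 × 13 receptive fields can be checked with a minimal sketch. It assumes stride-1 max pooling with "same" padding in which padded positions never win the max (as with negative-infinity padding), and it works on a 1D signal for readability; because 2D max pooling with a square window is separable per axis, the same argument carries over to feature maps. The function names `max_pool_1d` and `sppf_branches` are illustrative, not taken from any YOLO code base.

```python
def max_pool_1d(x, k):
    """Stride-1 max pooling with 'same' padding; out-of-range positions are ignored."""
    r = k // 2
    n = len(x)
    return [max(x[max(0, i - r): min(n, i + r + 1)]) for i in range(n)]


def sppf_branches(x, k=5):
    """Return the three serial pooling outputs an SPPF-style block concatenates with x."""
    y1 = max_pool_1d(x, k)
    y2 = max_pool_1d(y1, k)   # two 5-wide windows compose into a 9-wide window
    y3 = max_pool_1d(y2, k)   # three compose into a 13-wide window
    return y1, y2, y3


signal = [3, -1, 4, 1, -5, 9, 2, -6, 5, 3, -5, 8, 9, -7, 9, 3]
y1, y2, y3 = sppf_branches(signal)
print(y2 == max_pool_1d(signal, 9), y3 == max_pool_1d(signal, 13))  # True True
```

The serial form reuses each intermediate result, so it needs only one small pooling kernel while still producing the three effective window sizes.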

Figure 2.

The original architecture of YOLOv10n.

3.2. The Structure of the Proposed BSE-YOLO

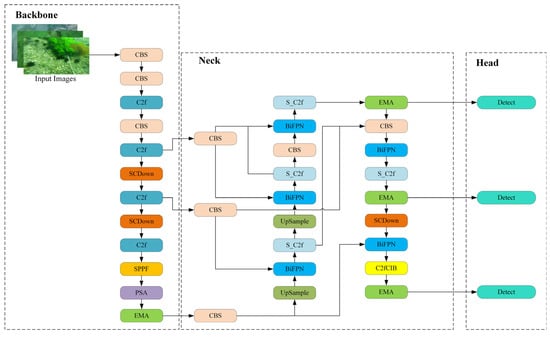

To enhance detection accuracy while making the architecture more lightweight, we propose BSE-YOLO, whose structure is illustrated in Figure 3. First, BiFPN replaces the original feature pyramid neck to improve multi-scale feature fusion and detection performance without substantial additional computational cost. Second, the proposed S_C2f structure replaces the C2f modules in the neck to enhance the model’s ability to handle complex samples with low contrast and morphological variations. Third, EMA is integrated into both the backbone and the neck to strengthen the model’s focus on multi-scale features and further improve feature extraction and fusion.

Figure 3.

The framework of the proposed BSE-YOLO.

3.2.1. Improved Bidirectional Feature Pyramid Network (BiFPN)

The Feature Pyramid Network (FPN) [37], first adopted in the YOLO series by YOLOv3, builds a pyramid of feature maps from different layers of a convolutional neural network along the bottom-up feature extraction path. A top-down fusion path then progressively merges upsampled high-level features into the lower levels, enriching their semantic information. However, during upsampling from high-level to low-level features, spatial details from low-level features are susceptible to loss. To address this issue, YOLOv4 subsequently adopted the Path Aggregation Network (PAN) [38], which adds a bottom-up path so that each level’s feature maps can effectively acquire spatial details from lower layers, complementing FPN. This strengthens the representation of low-level features and improves the model’s learning capability and localization accuracy. Feature fusion networks that combine FPN with PAN thus further improve detection performance.

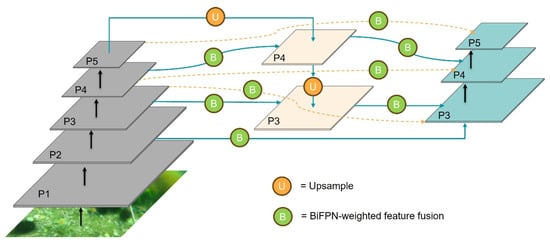

Although combining FPN and PAN has brought significant breakthroughs in object detection, there remains room for improvement in detecting small objects. Because most underwater objects are relatively small, their features are easily lost during progressive downsampling in deep neural networks. To address this issue, we introduce an improved BiFPN, as illustrated in Figure 4. BiFPN establishes bidirectional connections between the top-down and bottom-up paths, enabling more efficient information exchange and fusion of features across scales. It also replaces the simple feature summation used in traditional feature pyramid networks with a weighted fusion mechanism: by assigning a learnable weight to each input feature map, it allows the network to adaptively emphasize more informative features and suppress less relevant ones during fusion, which improves accuracy and stability. This process is described by the following formula:

$$O = \sum_{i} \frac{w_i}{\epsilon + \sum_{j} w_j} \cdot I_i \tag{1}$$

where $O$ denotes the fused output; $w_i$ represents the weight of the input feature $I_i$; and $\epsilon$ is a small constant set to 0.0001, which keeps the computation robust when the sum of weights becomes very small or zero and would otherwise cause division by zero or amplified errors.
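Formula (1) can be made concrete with a minimal pure-Python sketch of fast normalized fusion, with each feature map flattened to a list of equal length. Following the original BiFPN formulation, the weights are clipped to be non-negative with a ReLU before normalization; whether the improved BiFPN here does the same is an assumption. The function name `fast_normalized_fusion` is hypothetical.

```python
def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """O = sum_i w_i / (eps + sum_j w_j) * I_i over same-shaped (flattened) inputs."""
    if len(inputs) != len(weights):
        raise ValueError("one weight per input feature map is required")
    w = [max(0.0, wi) for wi in weights]  # ReLU keeps learnable weights non-negative (assumed)
    denom = eps + sum(w)                  # eps avoids division by zero when all weights are 0
    length = len(inputs[0])
    return [sum(wi * feat[k] for wi, feat in zip(w, inputs)) / denom for k in range(length)]


# Two toy "feature maps" fused with equal weights: close to their element-wise sum / 2.
print(fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0]))
```

Compared with a softmax over the weights, this normalization avoids exponentials, which is the efficiency argument behind the "fast" variant.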

Figure 4.

The structure of the optimized Feature Pyramid Network. “U” = upsample, “B” = BiFPN-weighted feature fusion.

In addition, nodes with only a single input, which contribute little to feature fusion, are removed. The feature map P2 is integrated into the original BiFPN, and skip connections are established between the original input and output nodes. Weighted feature fusion enhances the network’s ability to fuse multi-scale features while improving efficiency.

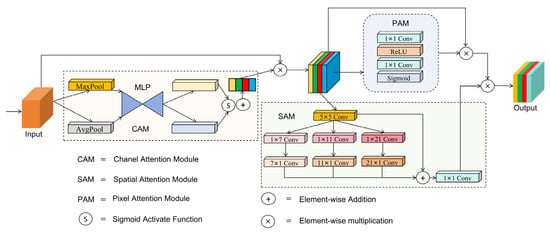

3.2.2. Multi-Scale Attention Synergy Module (MASM)

Images captured in underwater environments often exhibit low contrast and significant variations in object shape. Traditional convolutional networks tend to lose details in such images and are insensitive to object deformations; as a result, they adapt poorly to complex samples and may overlook critical changes, leading to missed detections and false alarms. We therefore propose the Multi-Scale Attention Synergy Module (MASM), which combines attention at different scales to strengthen the model’s focus on important image regions and uses depthwise separable convolutions to improve spatial feature extraction and fusion. The module aims to improve the perception of difficult features in samples.

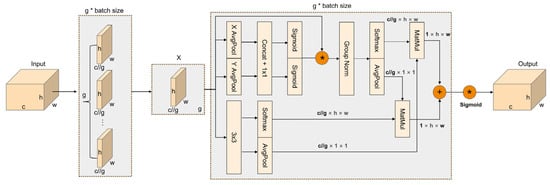

The framework of this module is shown in Figure 5. MASM integrates channel attention, spatial attention, and pixel attention into a synergistic mechanism that enhances feature representation. First, the input features are weighted by the channel attention module to obtain enhanced channel features. The spatial attention module then processes these features to refine spatial details, while the pixel attention module adjusts them at the pixel level. Finally, the output is obtained by element-wise multiplication of the spatial attention map and the output of the pixel attention branch, which improves the model’s ability to capture both global and local important features.

Figure 5.

The overall architecture of MASM.

Specifically, the channel attention module first applies adaptive average pooling and adaptive max pooling over the spatial dimensions of each channel of the input feature map. Each pooled descriptor is then passed through two 1 × 1 convolutional layers with ReLU activation and normalized by the Sigmoid function. Finally, the channel attention map, which determines the importance of each channel, is generated as a weighted sum of the average-pooling and max-pooling branch outputs. Formulas (2)–(4) formalize this module in terms of the input feature map $F$, the average-pooling and max-pooling operations applied to $F$, the 1 × 1 convolution layers, the Sigmoid function $\sigma$, two intermediate feature representations (one per pooling branch), and the final channel attention map $M_c$.
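The channel attention step can be sketched in pure Python under explicit assumptions: the two 1 × 1 convolutions are shared by the average and max branches, they have no bias (a 1 × 1 convolution on a 1 × 1 map is then a matrix-vector product), and the "weighted sum" uses hypothetical fixed weights `alpha` and `beta`. None of these details is specified in the text, and the function names are illustrative.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m, v):
    """A bias-free 1x1 convolution applied to a 1x1 map is a matrix-vector product."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def channel_attention(feat, w1, w2, alpha=0.5, beta=0.5):
    """feat: C channels, each an H x W list of lists.
    w1: (C/r) x C and w2: C x (C/r) weights of the two 1x1 convs (shared, assumed).
    alpha, beta: hypothetical weights combining the avg and max branches."""
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]
    mx = [max(max(row) for row in ch) for ch in feat]

    def branch(desc):
        hidden = [max(0.0, h) for h in matvec(w1, desc)]  # 1x1 conv + ReLU
        return [sigmoid(z) for z in matvec(w2, hidden)]   # 1x1 conv + Sigmoid

    a, m = branch(avg), branch(mx)
    return [alpha * ai + beta * mi for ai, mi in zip(a, m)]  # one weight per channel


def apply_channel_weights(feat, mc):
    """Element-wise product of each channel with its attention weight."""
    return [[[v * mc[c] for v in row] for row in ch] for c, ch in enumerate(feat)]


feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]  # C = 2, H = W = 2
mc = channel_attention(feat, w1=[[1.0, -1.0]], w2=[[1.0], [-1.0]])
print([round(v, 3) for v in mc])  # the first (active) channel gets the larger weight
```

Using both pooled descriptors lets the map react to average channel activity and to strong local peaks, which is the usual motivation for pairing average and max pooling.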

Then, the channel attention map is multiplied element-wise with the input feature map to produce the channel-weighted feature map, which is fed to both the pixel and spatial attention modules. In the pixel attention module, a pixel attention map is generated by two 1 × 1 convolutional layers with ReLU and Sigmoid activations. The module input is then multiplied by this map to highlight important pixel regions, yielding the weighted pixel attention map. This process is described in Formulas (5)–(6):

$$F' = F \otimes M_c \tag{5}$$

$$F_p = F' \otimes \sigma\left(f^{1\times1}\left(\delta\left(f^{1\times1}(F')\right)\right)\right) \tag{6}$$

where $F'$ denotes the feature map obtained by element-wise multiplication between the input feature map $F$ and the channel attention map $M_c$; $f^{1\times1}$ denotes a 1 × 1 convolution layer; $\delta$ and $\sigma$ denote the ReLU and Sigmoid functions; $\otimes$ denotes element-wise multiplication; and $F_p$ represents the final (weighted) pixel attention map.
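Pixel attention applies the same small two-layer 1 × 1 network independently at every spatial position. The sketch below assumes that the second 1 × 1 convolution outputs a single channel, so one weight is shared by all channels at a pixel; this is a common design choice for pixel attention, but the text does not state the output width. Weights are bias-free and the function name is illustrative.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def pixel_attention(feat, w1, w2):
    """feat: C x H x W nested lists (the channel-weighted map F').
    w1: hidden x C weights; w2: length-hidden weights producing one output channel (assumed).
    Returns (attention map H x W, weighted features C x H x W)."""
    C, H, W = len(feat), len(feat[0]), len(feat[0][0])
    attn = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            vec = [feat[c][i][j] for c in range(C)]  # channel vector at pixel (i, j)
            hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w1]  # 1x1 conv + ReLU
            attn[i][j] = sigmoid(sum(w * h for w, h in zip(w2, hidden)))            # 1x1 conv + Sigmoid
    weighted = [[[feat[c][i][j] * attn[i][j] for j in range(W)] for i in range(H)] for c in range(C)]
    return attn, weighted


attn, weighted = pixel_attention([[[0.0, 2.0]]], w1=[[1.0]], w2=[1.0])
print(attn)  # the brighter pixel receives the larger weight
```

Because the weight varies per pixel rather than per channel, this step can suppress background pixels that a channel-level weight would leave untouched.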

The spatial attention module applies depthwise separable convolutions with kernels of 5 × 5, 1 × 7, 7 × 1, 1 × 11, 11 × 1, 1 × 21, and 21 × 1 to the channel-weighted feature map to capture multi-scale spatial information. The results are then summed and fused via a 1 × 1 convolution to generate the spatial attention map. Finally, this attention map is multiplied element-wise by the weighted pixel attention map to generate the final output. This process is described in Formulas (7)–(9):

$$K = \{7, 11, 21\} \tag{7}$$

$$M_s = f^{1\times1}\left(\sum_{k \in K} \mathrm{DWConv}_k(F')\right) \tag{8}$$

$$F_{out} = M_s \otimes F_p \tag{9}$$

where $K$ is the set of kernel sizes used to extract spatial features at different receptive fields; $\mathrm{DWConv}_k$ denotes depthwise separable convolution with the composite kernels 5 × 5, 1 × $k$, and $k$ × 1; $F'$ is the channel-weighted feature map; $f^{1\times1}$ is a 1 × 1 convolution; $M_s$ is the spatial attention map; and $F_{out}$ is the final enhanced feature representation, obtained by element-wise multiplication ($\otimes$) of $M_s$ and the weighted pixel attention map $F_p$.
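The strip kernels (1 × k followed by k × 1) are a cheap way to obtain a large k × k receptive field. The minimal single-channel sketch below, which is what a depthwise convolution does per channel, applies a 1 × 7 and then a 7 × 1 all-ones kernel to an impulse and counts the positions it reaches. The all-ones kernels and the function names are illustrative assumptions; learned kernels would have arbitrary values.

```python
def conv2d_same(img, kernel):
    """Single-channel 2D cross-correlation, stride 1, zero padding ('same' output size).
    Applying it channel by channel is a depthwise convolution."""
    H, W = len(img), len(img[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    y, x = i + u - ph, j + v - pw
                    if 0 <= y < H and 0 <= x < W:
                        acc += kernel[u][v] * img[y][x]
            out[i][j] = acc
    return out


def strip_branch(img, k):
    """A 1 x k conv followed by a k x 1 conv (all-ones kernels for illustration)."""
    horizontal = conv2d_same(img, [[1.0] * k])
    return conv2d_same(horizontal, [[1.0] for _ in range(k)])


size, k = 15, 7
impulse = [[0.0] * size for _ in range(size)]
impulse[size // 2][size // 2] = 1.0
resp = strip_branch(impulse, k)
support = sum(1 for row in resp for val in row if val != 0.0)
print(support)       # 49: the pair reaches a full 7 x 7 neighborhood
print(2 * k, k * k)  # 14 49: weights for the strip pair vs. a dense 7 x 7 kernel
```

For k = 21 the saving grows to 42 weights instead of 441 per channel, which is why long strip kernels remain affordable in a lightweight model.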

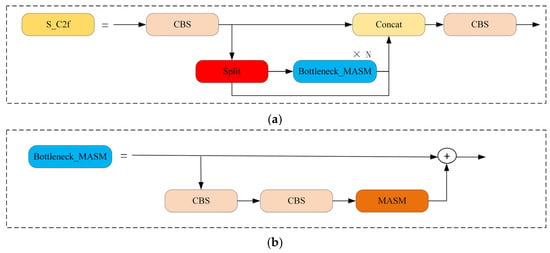

The channel attention module helps the network learn channel importance by adaptively weighting features along the channel dimension, thereby reducing the impact of redundant information. The spatial attention module enables the network to focus on important image regions by learning the significance of each spatial position while suppressing irrelevant areas. Pixel attention refines features at the pixel level, which is crucial for enhancing fine-grained features. By integrating these multi-scale attention mechanisms, MASM dynamically adjusts the feature maps in underwater object detection and thereby improves network performance. In addition, we apply MASM to the bottleneck of the C2f modules in the neck to build a synergistic C2f structure named S_C2f. Combining the characteristics of MASM, this structure efficiently handles complex sample features and improves feature fusion in the neck. S_C2f and its bottleneck are shown in Figure 6a,b.
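The split, bottleneck, and concatenate data flow of a C2f block, which S_C2f keeps while swapping each bottleneck for Bottleneck_MASM, can be sketched as follows. This follows the C2f pattern used in Ultralytics-style YOLO code; here each "feature map" is reduced to one value per channel, and `cv1`, `cv2`, and `bottleneck_masm` are hypothetical stand-ins (the internals of Bottleneck_MASM in Figure 6b are not reproduced).

```python
from typing import Callable, List

Features = List[float]  # one value per channel stands in for a full feature map
Layer = Callable[[Features], Features]


def c2f_forward(x: Features, cv1: Layer, cv2: Layer, blocks: List[Layer]) -> Features:
    """Split-bottleneck-concat data flow of a C2f block."""
    y = cv1(x)                                    # 1x1 conv expanding to 2 * c hidden channels
    half = len(y) // 2
    parts = [y[:half], y[half:]]                  # split into two c-channel chunks
    for block in blocks:                          # each bottleneck consumes the latest chunk
        parts.append(block(parts[-1]))
    concat = [v for part in parts for v in part]  # (2 + n) * c channels
    return cv2(concat)                            # 1x1 conv back to the output width


def cv1(t: Features) -> Features:
    return t + t                                  # hypothetical: duplicates channels


def cv2(t: Features) -> Features:
    return [float(len(t))]                        # hypothetical: reports its input width


def bottleneck_masm(t: Features) -> Features:
    return [v + 1.0 for v in t]                   # placeholder for Bottleneck_MASM


print(c2f_forward([1.0, 2.0], cv1, cv2, [bottleneck_masm, bottleneck_masm]))  # [8.0]
```

With c = 2 hidden channels and n = 2 bottlenecks, the final 1 × 1 convolution sees (2 + 2) × 2 = 8 channels; because every intermediate output is kept, replacing the bottleneck changes the features but not this bookkeeping.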

Figure 6.

The structure of S_C2f and Bottleneck_MASM. (a) S_C2f; (b) Bottleneck_MASM.

3.2.3. Efficient Multi-Scale Attention (EMA)

Underwater environments have complex backgrounds, and target objects are typically small and tend to appear in dense clusters. Attention enhances the network’s focus on key features while suppressing irrelevant information, thereby improving localization accuracy and boosting performance on small and densely packed objects. We therefore integrate EMA, which performs well in small object detection, into the backbone and neck of YOLOv10n to improve the network’s focus on key information in multi-scale features and further optimize feature extraction and fusion.

The EMA module is shown in Figure 7. It reshapes part of the channel dimension into the batch dimension and divides the channels into multiple groups of sub-features, so that spatial semantic information is evenly distributed across the feature groups. These features are then passed to a multi-scale parallel subnetwork that models both short- and long-range dependencies. First, two parallel 1 × 1 convolution branches, which use 1D global average pooling along the horizontal and vertical directions, and a parallel 3 × 3 convolution branch for feature extraction integrate context information into the intermediate feature maps, strengthening the network’s ability to process multi-scale and multi-dimensional features. The outputs of the two 1 × 1 branches are concatenated, passed through the Sigmoid function, and combined by element-wise multiplication to achieve cross-channel interaction. Next, 2D global average pooling is applied to the branch outputs to encode global spatial information, and the Softmax function is applied to the pooled descriptors to produce attention weights. Finally, the spatial attention map, which aggregates spatial information from multi-scale features, is generated by merging the outputs of the branches through point-by-point multiplication. The 1D global average pooling along the horizontal and vertical dimensions and the 2D global average pooling are given in Formulas (10)–(12):

$$z_c^{H}(H) = \frac{1}{W} \sum_{0 \le i < W} x_c(H, i) \tag{10}$$

$$z_c^{W}(W) = \frac{1}{H} \sum_{0 \le j < H} x_c(j, W) \tag{11}$$

$$z_c = \frac{1}{H \times W} \sum_{j}^{H} \sum_{i}^{W} x_c(j, i) \tag{12}$$

where $x_c$ indicates the input feature map at the $c$-th channel, and $H$ and $W$ refer to the height and width of the feature map, respectively.
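The three pooling operations in Formulas (10)–(12) reduce one channel to a per-row profile, a per-column profile, and a single global value. A minimal pure-Python sketch for one channel stored as an H × W list of lists follows; the function name `directional_pools` is illustrative, and the subsequent 1 × 1 convolution, Sigmoid, and Softmax steps of EMA are not included.

```python
def directional_pools(xc):
    """xc: one channel as an H x W list of lists.
    Returns (z_h, z_w, z): the average over width for each row (Formula 10),
    the average over height for each column (Formula 11), and the 2D global average (Formula 12)."""
    H, W = len(xc), len(xc[0])
    z_h = [sum(xc[h][i] for i in range(W)) / W for h in range(H)]
    z_w = [sum(xc[j][w] for j in range(H)) / H for w in range(W)]
    z = sum(sum(row) for row in xc) / (H * W)
    return z_h, z_w, z


print(directional_pools([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]))
# ([2.0, 5.0], [2.5, 3.5, 4.5], 3.5)
```

The two 1D profiles keep positional information along one axis each, whereas the 2D average discards it entirely; EMA uses the former for cross-channel interaction and the latter to encode global context.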

Figure 7.

The structure of EMA. “g” = divided groups; “X AvgPool” = 1D horizontal global pooling; “Y AvgPool” = 1D vertical global pooling.

4. Experiment and Results

4.1. Datasets

We use the URPC2020 and DUO datasets [39] to validate the proposed model. The URPC2020 dataset contains 5543 real underwater optical images, which encompass four common underwater objects: holothurian, echinus, scallops, and starfish. In our experiments, we partition this dataset into training, validation, and test sets in a 7:1:2 ratio, resulting in 3880 images for training, 554 images for validation, and 1109 images for testing. This division ensures adequate samples for robust model training, allows the validation set to assess model performance and prevent overfitting, and enables a more objective final evaluation in the test set.

The DUO dataset is derived from recent URPC competitions [40] and has been deduplicated and relabeled. Zhao et al. [39] reorganized and shared this dataset, which contains a total of 5208 images covering four categories of underwater objects: holothurian, echinus, scallops, and starfish. The dataset is partitioned into training, validation, and test sets in a 7:1:2 ratio, comprising 3645 training images, 520 validation images, and 1043 test images.
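The reported subset sizes for both datasets are consistent with a simple rounding rule: floor the training and validation counts and assign the remainder to the test set. The sketch below shows that arithmetic; it is one rule that reproduces the published numbers, not necessarily the authors’ actual splitting script, and `split_counts` is a hypothetical name.

```python
def split_counts(n, ratios=(7, 1, 2)):
    """Split n images by integer ratios: floor train and val, remainder goes to test."""
    total = sum(ratios)
    train = n * ratios[0] // total
    val = n * ratios[1] // total
    test = n - train - val
    return train, val, test


print(split_counts(5543))  # (3880, 554, 1109)  URPC2020
print(split_counts(5208))  # (3645, 520, 1043)  DUO
```

Integer arithmetic avoids floating-point surprises such as 5543 × 0.7 evaluating slightly below 3880.1.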

Both datasets reflect the complexity of real underwater environments, including low contrast that makes objects hard to distinguish from the background, small targets with varying degrees of blurriness, significant changes in object shape and size, and object aggregation. These factors substantially increase the difficulty of the detection task, and training on such data also helps improve the model’s generalization ability in complex scenarios.

4.2. Experimental Setup

PyTorch 1.12.1 was used as the deep learning framework for all experiments. The experimental environment included an AMD Ryzen 9 5900X CPU with 32 GB of memory, an NVIDIA GeForce RTX 3090 GPU (CUDA 11.3) with 24 GB of memory, and Ubuntu 16.04 with Python 3.9.16. Models were trained for 300 epochs, input images were resized to 640 × 640 pixels, and Mosaic data augmentation was applied to improve robustness. The batch size was set to 16 and the momentum to 0.937. The initial and final learning rates were both set to 0.01, and the weight decay coefficient was set to 0.0005 to avoid overfitting. Stochastic gradient descent (SGD) was used as the optimizer.
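How momentum 0.937 and weight decay 0.0005 enter each update can be illustrated with a minimal scalar sketch of one SGD step in the form used by PyTorch’s `torch.optim.SGD` with dampening 0 and without Nesterov momentum. Whether Nesterov momentum or a particular learning-rate schedule was used is not stated, so both are outside this sketch; `sgd_step` is a hypothetical name.

```python
def sgd_step(w, grad, buf, lr=0.01, momentum=0.937, weight_decay=0.0005):
    """One SGD update with L2 weight decay and momentum (dampening = 0, no Nesterov)."""
    d = grad + weight_decay * w                       # weight decay adds lambda * w to the gradient
    buf = d if buf is None else momentum * buf + d    # first step initializes the momentum buffer
    return w - lr * buf, buf


w, buf = 1.0, None
w, buf = sgd_step(w, 0.5, buf)
print(round(w, 6))  # 0.994995
w, buf = sgd_step(w, 0.5, buf)  # the buffer now accumulates about 1.94x the first step
```

With momentum this close to 1, the buffer approaches roughly 1 / (1 - 0.937) ≈ 15.9 times a constant gradient, so the effective step size is much larger than the nominal learning rate suggests.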

4.3. Evaluation Metrics

To comprehensively evaluate the performance of the proposed model on underwater object detection, we use precision (P), recall (R), mean average precision (mAP), the number of parameters (Params), giga floating-point operations (GFLOPs), and frames per second (FPS) as evaluation metrics. Params and GFLOPs measure the size and computational complexity of the model, mAP assesses detection accuracy by taking both precision and recall into account, and FPS evaluates detection speed. These indicators are calculated as follows:

$$P = \frac{TP}{TP + FP} \tag{13}$$

$$R = \frac{TP}{TP + FN} \tag{14}$$

$$mAP = \frac{1}{N} \sum_{i=1}^{N} \int_{0}^{1} P_i(R_i)\, dR_i \tag{15}$$

In Equations (13) and (14), true positives (TP) are positive samples correctly predicted as positive, false positives (FP) are negative samples incorrectly predicted as positive, and false negatives (FN) are positive samples incorrectly predicted as negative. Precision is therefore the proportion of true positives among all samples predicted as positive, and recall is the proportion of actual positive samples that are correctly predicted as positive. In Equation (15), $N$ denotes the number of categories and $i$ is the class index; $P_i$ and $R_i$ denote the precision and recall of the $i$-th class, and $\int_{0}^{1} P_i(R_i)\, dR_i$ computes the average precision (AP) of the $i$-th class.
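Precision, recall, and per-class AP can be computed as in the minimal sketch below. It assumes detections have already been matched to ground truth at a fixed IoU threshold (0.5 for mAP@0.5) and approximates the integral in Equation (15) by all-point interpolation, as in PASCAL VOC 2010 and later; COCO-style tools and some YOLO code bases use 101-point interpolation instead, so absolute values can differ slightly. Function names are illustrative.

```python
def precision(tp, fp):
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(detections, num_gt):
    """detections: (confidence, is_true_positive) pairs for one class.
    Returns the all-point interpolated area under the precision-recall curve."""
    ordered = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ordered:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 1, 0, -1):  # make precision non-increasing in recall
        mpre[i - 1] = max(mpre[i - 1], mpre[i])
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def mean_average_precision(per_class):
    """per_class: list of (detections, num_gt) tuples, one per category (N = len(per_class))."""
    return sum(average_precision(d, n) for d, n in per_class) / len(per_class)


print(average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2))  # about 0.8333
```

In the example, the first hit gives recall 0.5 at precision 1, and the second hit gives recall 1.0 at precision 2/3, so AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.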

4.4. Comparisons with Other Methods on URPC2020

We compared our model with several state-of-the-art models on the URPC2020 dataset, using mAP@0.5, mAP@0.5:0.95, parameters, and GFLOPs as evaluation metrics to quantify detection accuracy, model size, and computational cost. The results for SSD, YOLOv3, YOLOv4, YOLOv5s, YOLOX [41], YOLOv8n, and FEB-YOLO were taken from [39]. YOLOv10n, RT-DETR [42], and BSE-YOLO were trained and evaluated on the same dataset with the same training methodology.

As illustrated in Table 1, BSE-YOLO achieved a mAP@0.5 of 83.7% and a mAP@0.5:0.95 of 48.6%. This represents a significant improvement over SSD, with increases of 7.5% in mAP@0.5 and 11.1% in mAP@0.5:0.95. BSE-YOLO also outperformed YOLOv3, YOLOv4, and YOLOX, improving mAP@0.5 by 10.8%, 8.3%, and 4.0%, respectively. Compared with YOLOv8n and the baseline YOLOv10n, BSE-YOLO improved mAP@0.5 by 1.5% and 2.2%, respectively, while using approximately 0.2 M fewer parameters than YOLOv10n. Our model also attained the highest mAP@0.5 (83.7%), surpassing FEB-YOLO. Although it has more parameters and a higher computational cost than FEB-YOLO, it ranks second only to FEB-YOLO in terms of lightweight design. In summary, our model enhances detection accuracy without significantly increasing computational demands, demonstrating both high performance and practicality.

Table 1.

Comparison of different object detection models on the URPC2020 dataset.

4.5. Comparisons with Other Methods on DUO

To further validate the generalization capability of BSE-YOLO, we conducted comparative experiments on the enhanced DUO dataset using the same evaluation metrics. The result of LUW-DETR was taken from [43], and the results for SSD [14], Faster R-CNN [17], YOLOv3, YOLOv4, YOLOv7-Tiny, YOLOv8n, and FEB-YOLO were taken from [39], while YOLOv10n, RT-DETR [42], and BSE-YOLO were evaluated under the same dataset and training settings.

As shown in Table 2, BSE-YOLO achieves the highest mAP@0.5 (83.9%) and mAP@0.5:0.95 (64.2%). Its mAP@0.5 is 9.5% and 2.9% higher than those of Faster R-CNN and YOLOv7-Tiny, respectively. Relative to the baseline YOLOv10n, BSE-YOLO improves mAP@0.5 by 3.0% and mAP@0.5:0.95 by 2.5%. BSE-YOLO has more parameters and a higher computational cost than FEB-YOLO, but in return it improves both mAP@0.5 and mAP@0.5:0.95 by 1.0%. These results indicate that BSE-YOLO remains robust and accurate on a second dataset, making it effective for underwater object detection tasks.

Table 2.

Comparison of different object detection models on the DUO dataset.

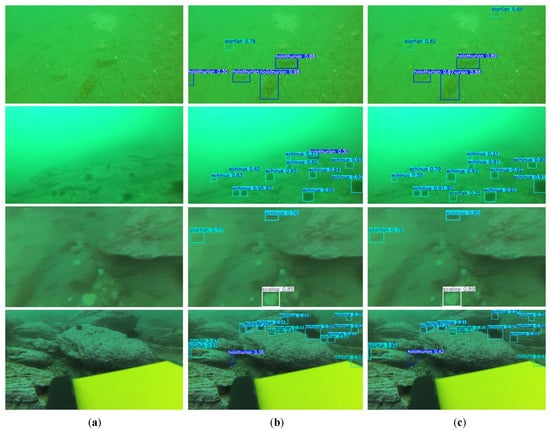

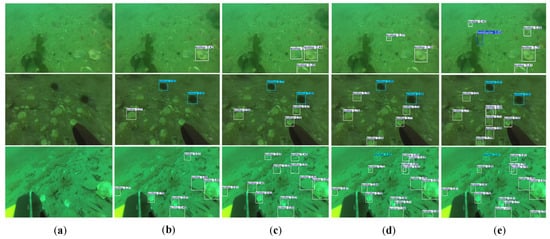

To further assess BSE-YOLO, we selected several images from the DUO test set for a qualitative comparison with the baseline YOLOv10n. As shown in Figure 8, BSE-YOLO produces more accurate detections in low-contrast images, in scenes with dense small objects, and for objects at the image edges. This suggests that BSE-YOLO perceives challenging samples better, which improves its detection accuracy in complex underwater scenes.

Figure 8.

Detection results on DUO. (a) Raw image; (b) YOLOv10n; (c) BSE-YOLO.

4.6. Ablation Study

In this section, we conduct ablation studies on the URPC2020 dataset to validate the effectiveness of each proposed module. All experiments were performed under identical conditions without pre-trained weights, using mAP@0.5, mAP@0.5:0.95, parameter count, GFLOPs, and FPS as evaluation metrics.
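The paper does not state how FPS was measured. As a minimal, framework-agnostic sketch, assuming FPS means images processed per second of wall-clock time after a few warm-up runs, a timing harness could look like the following; `infer` and `dummy_infer` are hypothetical stand-ins for a detector's forward pass. On a GPU, kernels run asynchronously, so the device must be synchronized before each clock read (in PyTorch, `torch.cuda.synchronize()`), which this sketch omits. Whether pre- and post-processing are inside the timed loop also changes the reported number.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object],
                inputs: Sequence[object], warmup: int = 10) -> float:
    """Images processed per second of wall-clock time by `infer`.

    The first `warmup` inputs are run once beforehand and excluded from
    timing, so one-off costs (lazy initialisation, cache warm-up) do not
    distort the average.
    """
    if not inputs:
        raise ValueError("need at least one input")
    for x in inputs[:warmup]:
        infer(x)
    start = time.perf_counter()
    for x in inputs:
        infer(x)
    elapsed = time.perf_counter() - start
    # Guard against a zero reading for extremely fast dummy workloads.
    return len(inputs) / max(elapsed, 1e-9)


if __name__ == "__main__":
    # Hypothetical stand-in for a detector's forward pass.
    def dummy_infer(image):
        return sum(v * v for v in image)

    images = [[float(i)] * 10_000 for i in range(50)]
    print(f"{measure_fps(dummy_infer, images, warmup=5):.1f} FPS")
```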

As shown in Table 3, we use YOLOv10n as the baseline. Replacing the neck with the improved BiFPN (B-YOLO) raises mAP@0.5 by 1.5%, although mAP@0.5:0.95 drops by 0.1%. It also reduces the parameter count by 0.27 M and the computational cost by 0.3 GFLOPs, and increases the speed from 136.4 to 144.4 FPS. The bidirectional top-down and bottom-up connections of the improved BiFPN preserve fine-grained features across scales, which improves the detection of small and blurred objects. Its weighted fusion mechanism lets the network adaptively emphasize informative features and suppress less relevant ones, which reduces confusion in scenes with clustered and overlapping objects.

Building on B-YOLO, we incorporate S_C2f into the neck (BS-YOLO). S_C2f combines channel and spatial attention modules that dynamically reweight features, strengthening the model’s focus on low-contrast regions, and its multi-scale spatial convolutions improve adaptability to shape variations across scales. Although BS-YOLO slightly increases the parameter count and computational cost, it improves mAP@0.5 by a further 0.5% and mAP@0.5:0.95 by 0.8% over B-YOLO.

Finally, we integrate EMA into both the backbone and the neck. EMA aggregates contextual information through parallel convolution branches and then applies cross-channel and spatial attention to highlight critical features and suppress irrelevant background noise, which improves the localization of small and densely packed objects. The resulting BSE-YOLO achieves the highest mAP@0.5 (83.7%) and mAP@0.5:0.95 (48.6%).

Together, BiFPN, S_C2f, and EMA form a complementary feature-processing pipeline for complex underwater scenes: BiFPN’s weighted multi-scale fusion preserves details of small and blurred objects and reduces confusion in dense scenes; S_C2f’s channel and spatial attention emphasizes low-contrast regions, while its multi-scale convolutions handle shape and scale variations; and EMA’s cross-channel and spatial attention prioritizes critical features and suppresses background noise, improving the localization of small and clustered objects.
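The weighted fusion credited to BiFPN above can be made concrete with the "fast normalized fusion" rule of the original BiFPN in EfficientDet [18]: O = Σ_i w_i·I_i / (ε + Σ_j w_j), where each learnable weight w_i is kept non-negative by a ReLU. The sketch below assumes the improved BiFPN keeps this rule, which the text does not confirm. Feature maps are flattened to plain lists and assumed to be already resized to a common resolution; in EfficientDet the fused map is then passed through a convolution, which is omitted here.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Weighted fusion of same-shaped feature maps (BiFPN-style).

    features: list of feature maps, each flattened to a list of floats and
              already resized to a common resolution.
    weights:  one learnable scalar per input feature map.
    Computes O = sum_i w_i * I_i / (eps + sum_j w_j) with w_i = ReLU(w_i).
    """
    if not features or len(features) != len(weights):
        raise ValueError("need one weight per input feature map")
    size = len(features[0])
    if any(len(f) != size for f in features):
        raise ValueError("resize all inputs to a common shape before fusing")
    w = [max(0.0, wi) for wi in weights]  # ReLU keeps every weight >= 0
    norm = eps + sum(w)                   # eps avoids division by zero
    return [sum(wi * f[k] for wi, f in zip(w, features)) / norm
            for k in range(size)]


if __name__ == "__main__":
    # Hypothetical 4-element maps from a top-down input and a lateral input.
    top_down = [0.2, 0.8, 0.5, 0.1]
    lateral = [0.6, 0.4, 0.9, 0.3]
    print(fast_normalized_fusion([top_down, lateral], [0.7, 1.3]))
```

Normalizing by the sum of the weights, rather than applying a softmax, keeps each fused value a convex combination of its inputs while avoiding exponentials, which is the efficiency argument EfficientDet gives for this form.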

Table 3.

The ablation experiments on URPC2020.

When used alone, S_C2f improves mAP@0.5 by 0.7% over the baseline. BE-YOLO, which combines the improved BiFPN with EMA, improves both mAP@0.5 and mAP@0.5:0.95 by a further 0.2% over B-YOLO. Although EMA alone does not significantly boost performance, combining it with BiFPN enables more efficient feature exchange and fusion and better handling of multi-scale features, which improves detection accuracy. Finally, after both EMA and S_C2f are incorporated, the speed of BSE-YOLO drops to 97.7 FPS, below that of the baseline. Nevertheless, it still meets real-time detection requirements, striking an effective balance between efficiency and accuracy.
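To put the reported frame rates in perspective, the arithmetic below converts them to per-frame latency and to the share of a real-time frame budget they consume. The 30 FPS threshold is an assumption chosen for illustration; the paper does not state which real-time criterion it uses.

```python
# Frame rates reported in the ablation study (Table 3).
FPS = {"YOLOv10n (baseline)": 136.4, "B-YOLO": 144.4, "BSE-YOLO": 97.7}

# Assumed real-time threshold; the paper does not state one.
REAL_TIME_FPS = 30.0


def latency_ms(fps: float) -> float:
    """Average time per frame in milliseconds for a given frame rate."""
    return 1000.0 / fps


def budget_share(fps: float, target_fps: float = REAL_TIME_FPS) -> float:
    """Fraction of the per-frame time budget at `target_fps` that is used."""
    return latency_ms(fps) / latency_ms(target_fps)


if __name__ == "__main__":
    for name, fps in FPS.items():
        print(f"{name}: {latency_ms(fps):.2f} ms/frame, "
              f"{budget_share(fps):.0%} of a {REAL_TIME_FPS:.0f} FPS budget")
```

At 97.7 FPS, BSE-YOLO needs about 10.2 ms per frame, roughly 31% of the 33.3 ms available per frame at 30 FPS, so the slowdown relative to the baseline (about 7.3 ms per frame) leaves ample headroom on the training hardware.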

As shown in Figure 9, to complement the quantitative experiments, we also performed a qualitative comparison on several images from the URPC2020 test set. Compared with YOLOv10n, BSE-YOLO recognizes and detects small objects more accurately, with fewer missed and misidentified targets. These results further indicate that BSE-YOLO achieves higher detection accuracy in underwater environments.

Figure 9.

Detection results on URPC2020. (a) Raw image; (b) YOLOv10n; (c) BSE-YOLO.

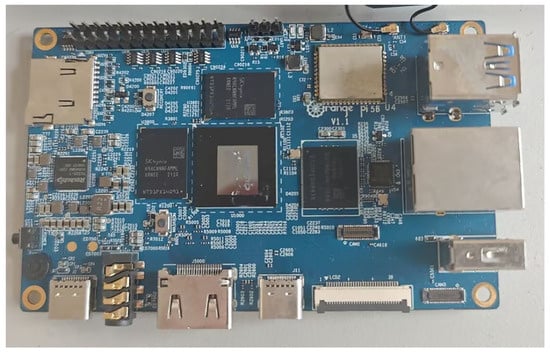

4.7. Application Test

We deployed BSE-YOLO on an embedded device to assess its real-world applicability. As shown in Figure 10, the test device is an Orange Pi 5B, equipped with an 8-core 64-bit Rockchip RK3588S processor (four Cortex-A76 cores and four Cortex-A55 cores) with a maximum frequency of 2.4 GHz. We used ONNX as the inference framework and selected YOLOv10n for comparison, testing input images of 256 × 256 and 640 × 640; detailed results are given in Table 4. Although BSE-YOLO infers slightly more slowly than YOLOv10n, it still meets the detection requirements of low-compute environments.
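The text does not describe how images were brought to the 256 × 256 and 640 × 640 test sizes. A common convention for YOLO detectors is letterboxing: resize with the aspect ratio preserved, then pad to a square. The sketch below computes that geometry and maps boxes back to source pixels, assuming this convention; the 1920 × 1080 frame size is hypothetical. It also gives a rough cost ratio between the two input sizes, since convolution FLOPs scale with the number of input pixels (attention layers, if present, scale differently, so this is approximate).

```python
def letterbox_params(src_w: int, src_h: int, dst: int = 640):
    """Scale and padding that fit a src_w x src_h image into a dst x dst square.

    The image is resized with its aspect ratio preserved and the remaining
    area is padded symmetrically (YOLO-style letterboxing).
    Returns (scale, (new_w, new_h), (left, top, right, bottom)).
    """
    scale = min(dst / src_w, dst / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_w, pad_h = dst - new_w, dst - new_h
    left, top = pad_w // 2, pad_h // 2
    return scale, (new_w, new_h), (left, top, pad_w - left, pad_h - top)


def box_to_source(box, scale: float, left: int, top: int):
    """Map an (x1, y1, x2, y2) box from letterboxed to source-image pixels."""
    x1, y1, x2, y2 = box
    return ((x1 - left) / scale, (y1 - top) / scale,
            (x2 - left) / scale, (y2 - top) / scale)


def relative_conv_cost(size_a: int, size_b: int) -> float:
    """Approximate compute ratio of convolutional layers at two square input
    sizes: convolution FLOPs grow with the number of input pixels."""
    return (size_a / size_b) ** 2


if __name__ == "__main__":
    for size in (256, 640):
        # Hypothetical 1920 x 1080 camera frame.
        scale, resized, pad = letterbox_params(1920, 1080, size)
        print(size, round(scale, 4), resized, pad)
    print(relative_conv_cost(640, 256))  # 6.25
```

By this estimate, the convolutional layers do about 6.25 times more work at 640 × 640 than at 256 × 256, which is consistent with the smaller input being attractive on a low-power board such as the Orange Pi 5B.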

Figure 10.

Orange Pi 5B.

Table 4.

Performance of BSE-YOLO on an embedded device, Orange Pi 5B.

To illustrate the practical detection performance of both models, we visualized their inference results under different input resolutions. As shown in Figure 11, both YOLOv10n and BSE-YOLO detect underwater targets effectively on the Orange Pi 5B. Panels (b) and (d) show the results of YOLOv10n with 256 × 256 and 640 × 640 inputs, respectively, and panels (c) and (e) show those of BSE-YOLO under the same conditions. BSE-YOLO achieves comparable detection accuracy with clearer target localization while keeping inference latency acceptable on the embedded system.

Figure 11.

The inference results under different input resolutions. (a) Raw image; (b) YOLOv10n with 256 × 256 input; (c) BSE-YOLO with 256 × 256 input; (d) YOLOv10n with 640 × 640 input; (e) BSE-YOLO with 640 × 640 input.

5. Conclusions

This paper proposes BSE-YOLO, a lightweight underwater object detection model based on YOLOv10n. To balance detection efficiency and accuracy, we replace the original neck with an enhanced BiFPN and design a Multi-Scale Attention Synergy Module (MASM), the core of the proposed S_C2f, which strengthens the perception of features of challenging samples and thereby substantially improves overall performance. We further integrate the EMA module into both the backbone and the neck to enhance the extraction and fusion of features at different scales, improving the recognition and detection of small objects in complex underwater environments. Experimental results show that, compared with the baseline YOLOv10n, BSE-YOLO improves mAP@0.5 by 2.2% on URPC2020 and by 3.0% on DUO. With fewer parameters than the baseline, BSE-YOLO effectively detects small objects in complex underwater environments, achieving a good balance between lightweight design and high accuracy. This study focuses mainly on improving the neck of the baseline model. Future research could optimize the backbone to strengthen feature extraction and further improve detection accuracy, or reduce the parameter count to make the model even more lightweight.

Author Contributions

Methodology, Y.W.; software, Y.W.; formal analysis, Y.W.; resources, Y.W. and H.Y.; data curation, Y.W.; writing—original draft preparation, Y.W.; writing—review and editing, H.Y. and X.S.; funding acquisition, X.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number 62276118) and the Education Science Planning Project of Jiangsu Province (grant number B/2022/01/128).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Apriliani, E.; Nurhadi, H. Ensemble and Fuzzy Kalman Filter for position estimation of an autonomous underwater vehicle based on dynamical system of AUV motion. Expert Syst. Appl. 2017, 68, 29–35.

- Lu, H.; Li, Y.; Zhang, Y.; Chen, M.; Serikawa, S.; Kim, H. Underwater optical image processing: A comprehensive review. Mob. Netw. Appl. 2017, 22, 1204–1211.

- Yeh, C.H.; Lin, C.H.; Kang, L.W.; Huang, C.H.; Lin, M.H.; Chang, C.Y.; Wang, C.C. Lightweight deep neural network for joint learning of underwater object detection and color conversion. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6129–6143.

- Xu, S.; Zhang, M.; Song, W.; Mei, H.; He, Q.; Liotta, A. A systematic review and analysis of deep learning-based underwater object detection. Neurocomputing 2023, 527, 204–232.

- Zhao, C.; Shu, X.; Yan, X.; Zuo, X.; Zhu, F. RDD-YOLO: A modified YOLO for detection of steel surface defects. Measurement 2023, 214, 112776.

- Yang, Y.; Zhang, J.; Shu, X.; Pan, L.; Zhang, M. A lightweight Transformer model for defect detection in electroluminescence images of photovoltaic cells. IEEE Access 2024, 12, 194922–194931.

- Hong, L.; Shu, X.; Wang, Q.; Ye, H.; Shi, J.; Liu, C. CCM-Net: Color compensation and coordinate attention guided underwater image enhancement with multi-scale feature aggregation. Opt. Lasers Eng. 2025, 184, 108590.

- Liu, C.; Shu, X.; Xu, D.; Shi, J. GCCF: A lightweight and scalable network for underwater image enhancement. Eng. Appl. Artif. Intell. 2024, 128, 107462.

- Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Farhadi, A.; Redmon, J. YOLOv3: An incremental improvement. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; Springer: Berlin/Heidelberg, Germany, 2018; Volume 1804, pp. 1–6.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10781–10790.

- Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient multi-scale attention module with cross-spatial learning. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Chen, L.; Zhou, F.; Wang, S.; Dong, J.; Li, N.; Ma, H.; Wang, X.; Zhou, H. SWIPENET: Object detection in noisy underwater scenes. Pattern Recognit. 2022, 132, 108926.

- Liu, J.; Liu, S.; Xu, S.; Zhou, C. Two-stage underwater object detection network using Swin Transformer. IEEE Access 2022, 10, 117235–117247.

- Pan, T.S.; Huang, H.C.; Lee, J.C.; Chen, C.H. Multi-scale ResNet for real-time underwater object detection. Signal Image Video Process. 2021, 15, 941–949.

- Gao, J.; Zhang, Y.; Geng, X.; Tang, H.; Bhatti, U.A. PE-Transformer: Path enhanced transformer for improving underwater object detection. Expert Syst. Appl. 2024, 246, 123253.

- Cai, S.; Li, G.; Shan, Y. Underwater object detection using collaborative weakly supervision. Comput. Electr. Eng. 2022, 102, 108159.

- Zhang, M.; Xu, S.; Song, W.; He, Q.; Wei, Q. Lightweight underwater object detection based on YOLO v4 and multi-scale attentional feature fusion. Remote Sens. 2021, 13, 4706.

- Liu, K.; Peng, L.; Tang, S. Underwater object detection using TC-YOLO with attention mechanisms. Sensors 2023, 23, 2567.

- Feng, J.; Jin, T. CEH-YOLO: A composite enhanced YOLO-based model for underwater object detection. Ecol. Inform. 2024, 82, 102758.

- Guo, M.H.; Xu, T.X.; Liu, J.J.; Liu, Z.N.; Jiang, P.T.; Mu, T.J.; Zhang, S.H.; Martin, R.R.; Cheng, M.M.; Hu, S.M. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368.

- Wang, X.; Xue, G.; Huang, S.; Liu, Y. Underwater object detection algorithm based on adding channel and spatial fusion attention mechanism. J. Mar. Sci. Eng. 2023, 11, 1116.

- Yan, J.; Zhou, Z.; Zhou, D.; Su, B.; Xuanyuan, Z.; Tang, J.; Lai, Y.; Chen, J.; Liang, W. Underwater object detection algorithm based on attention mechanism and cross-stage partial fast spatial pyramidal pooling. Front. Mar. Sci. 2022, 9, 1056300.

- Jaderberg, M.; Simonyan, K.; Zisserman, A. Spatial transformer networks. Adv. Neural Inf. Process. Syst. 2015, 28, 2017–2025.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 3–19.

- Liu, N.; Han, J.; Yang, M.H. PiCANet: Learning pixel-wise contextual attention for saliency detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3089–3098.

- Si, Y.; Xu, H.; Zhu, X.; Zhang, W.; Dong, Y.; Chen, Y.; Li, H. SCSA: Exploring the synergistic effects between spatial and channel attention. arXiv 2024, arXiv:2407.05128.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2025, 37, 107984–108011.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Zhao, Y.; Sun, F.; Wu, X. FEB-YOLOv8: A multi-scale lightweight detection model for underwater object detection. PLoS ONE 2024, 19, e0311173.

- Liu, C.; Li, H.; Wang, S.; Zhu, M.; Wang, D.; Fan, X. A dataset and benchmark of underwater object detection for robot picking. In Proceedings of the 2021 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shenzhen, China, 5–9 July 2021; pp. 1–6.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- Li, N.; Ding, B.; Yang, G.; Ni, S.; Wang, M. Lightweight LUW-DETR for efficient underwater benthic organism detection. Vis. Comput. 2025, 1–16.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).