Immersive Robot Teleoperation Based on User Gestures in Mixed Reality Space †

Abstract

1. Introduction

2. Previous Research

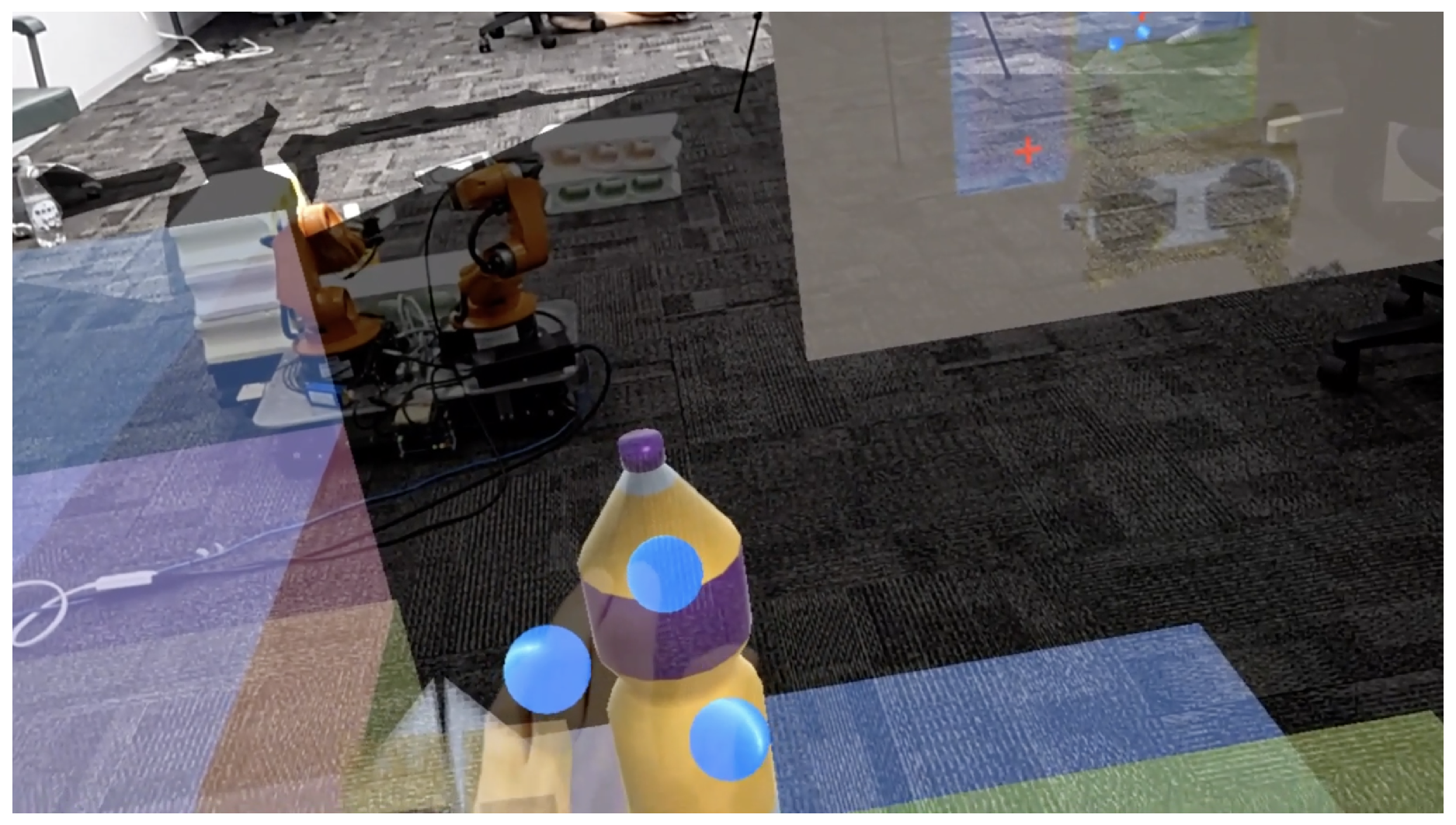

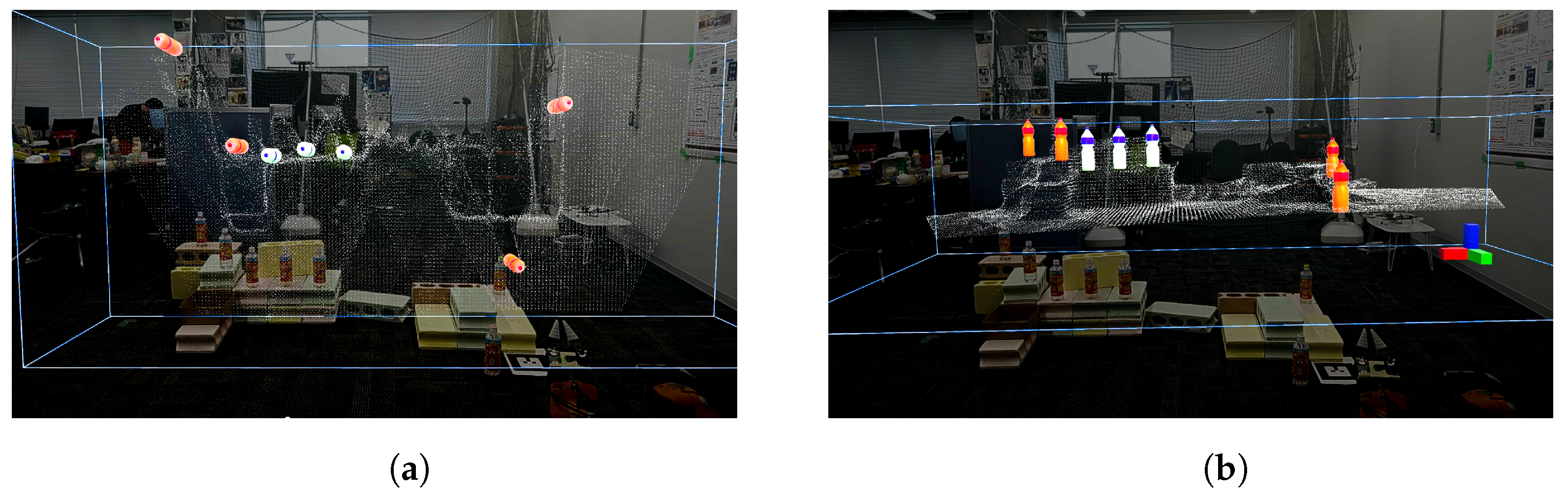

3. Immersive Robot Teleoperation System Based on MR Object Manipulation (IRT-MRO)

Integrating MR Space and Robot System for Human–Robot Interaction

4. Components of Intuitive Robot Operation Based on Mixed Reality Operation (IRO-MRO)

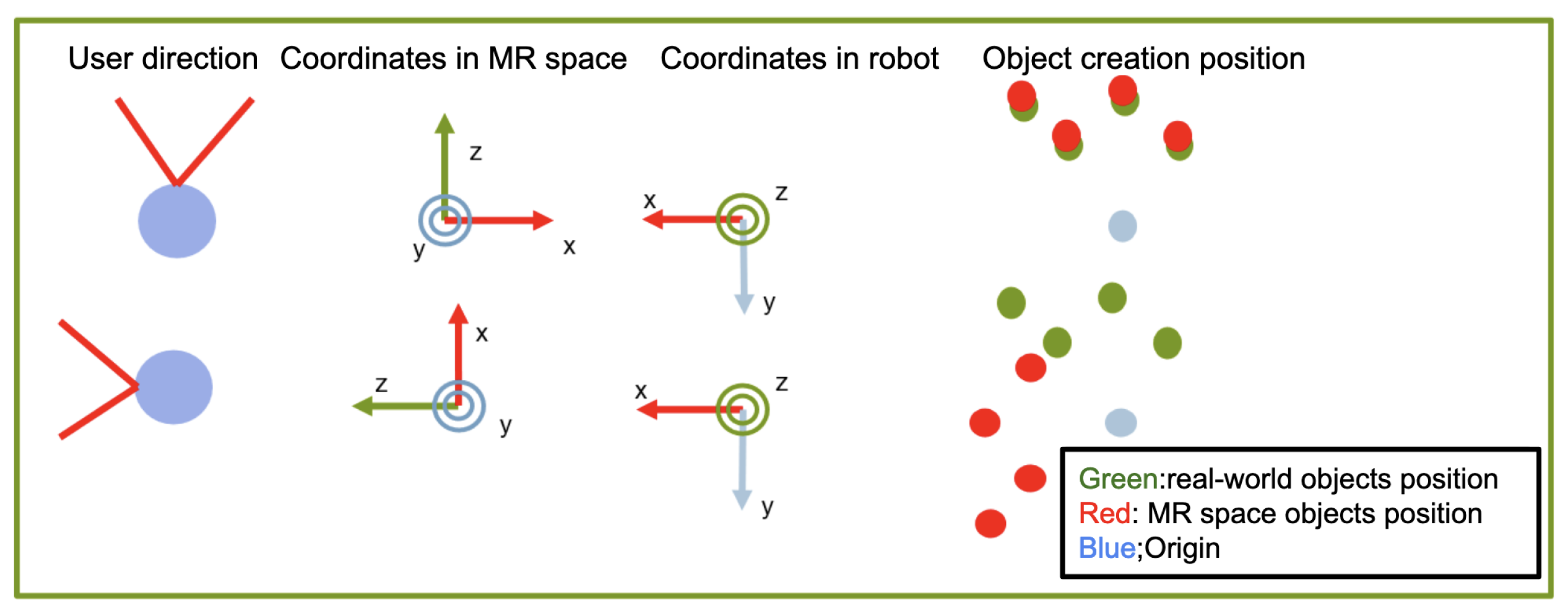

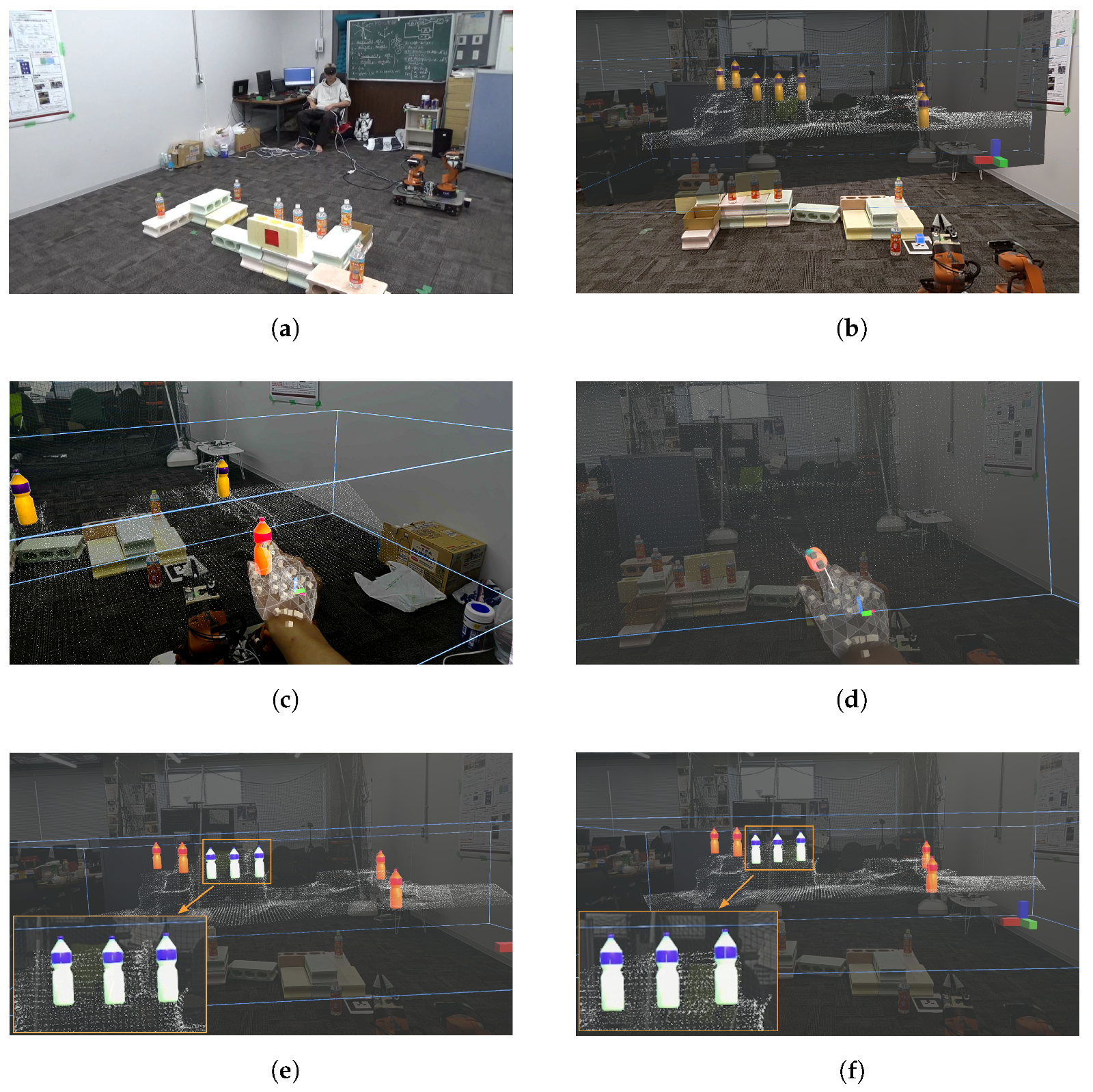

4.1. Definition of MR Space by Coordinate Transformation to Real Space

4.2. Aligning the Origin of MR User’s Viewpoint and Real Robot Using AR Markers

4.3. Automatic Generation of MR Objects

- (1) Object detection: Physical objects are detected using YOLOv5 to obtain their labels and bounding boxes.

- (2) Object tracking: The DeepSORT object tracking algorithm [30] associates the bounding box of each physical object with a unique ID.

- (3) Depth estimation: A RealSense camera provides the depth at the center coordinates of each physical object’s bounding box.

- (4) Camera and world coordinate transformation: Because the bounding boxes are expressed in camera coordinates, the depth information is used to convert them to world coordinates, giving the world coordinates of each bounding box.

- (5) World to robot-base conversion: The coordinates are transformed into the base coordinate frame of the YouBot using the homogeneous transformation matrix of each joint.

- (6) MR object generation: MR objects are predefined, and for each physical object the MR object with the same label is generated.

- (7) Object placement: The MR object is generated at an angle of 10 to 20 degrees from the horizontal plane, taking into account the user’s natural head movements during interaction.

- (8) Position update: The location and ID of each physical object are continuously updated and associated with the generated MR object.

- (9) User interaction: The positions of the physical objects and the MR objects are updated only while the user has an MR object selected. Updates stop when the user places the MR object and resume when the user selects it again.

- (10) Top-view object placement visualization: The arrangement of MR objects in the MR space is visualized from a top-down perspective.
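Steps (3) to (5) can be illustrated with a minimal sketch, assuming a pinhole camera model. The intrinsics (`FX`, `FY`, `CX`, `CY`), the camera-to-base transform `T_BASE_CAMERA`, and all function names are hypothetical placeholders rather than values or APIs from the system described here. A real setup would read the intrinsics from the RealSense device and compose the transform from the robot's joint transforms.

```python
import math

def bbox_center(box):
    """Center pixel (u, v) of a bounding box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)

def deproject(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in metres into the camera frame (pinhole model)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)

def transform(T, point):
    """Apply a 4x4 homogeneous transform (row-major nested lists) to a 3D point."""
    h = (point[0], point[1], point[2], 1.0)
    return tuple(sum(T[r][c] * h[c] for c in range(4)) for r in range(3))

# Hypothetical intrinsics for a 640x480 image (assumption, not from the paper).
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# Hypothetical camera pose in the robot base frame: axes aligned with the base,
# camera origin 0.5 m above the base origin (assumption, for illustration only).
T_BASE_CAMERA = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.5],
    [0.0, 0.0, 0.0, 1.0],
]

u, v = bbox_center((360, 220, 400, 260))        # detection box from step (1)
p_camera = deproject(u, v, 1.2, FX, FY, CX, CY)  # depth from step (3)
p_base = transform(T_BASE_CAMERA, p_camera)      # steps (4) and (5) combined
```

Keeping the transform as a single 4x4 matrix means that a chain of frames (camera, world, robot base) reduces to one matrix product computed once per frame, which is then applied to every detected object.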

4.4. Map-Integrated Calibration by Manipulating MR Objects in MR Space

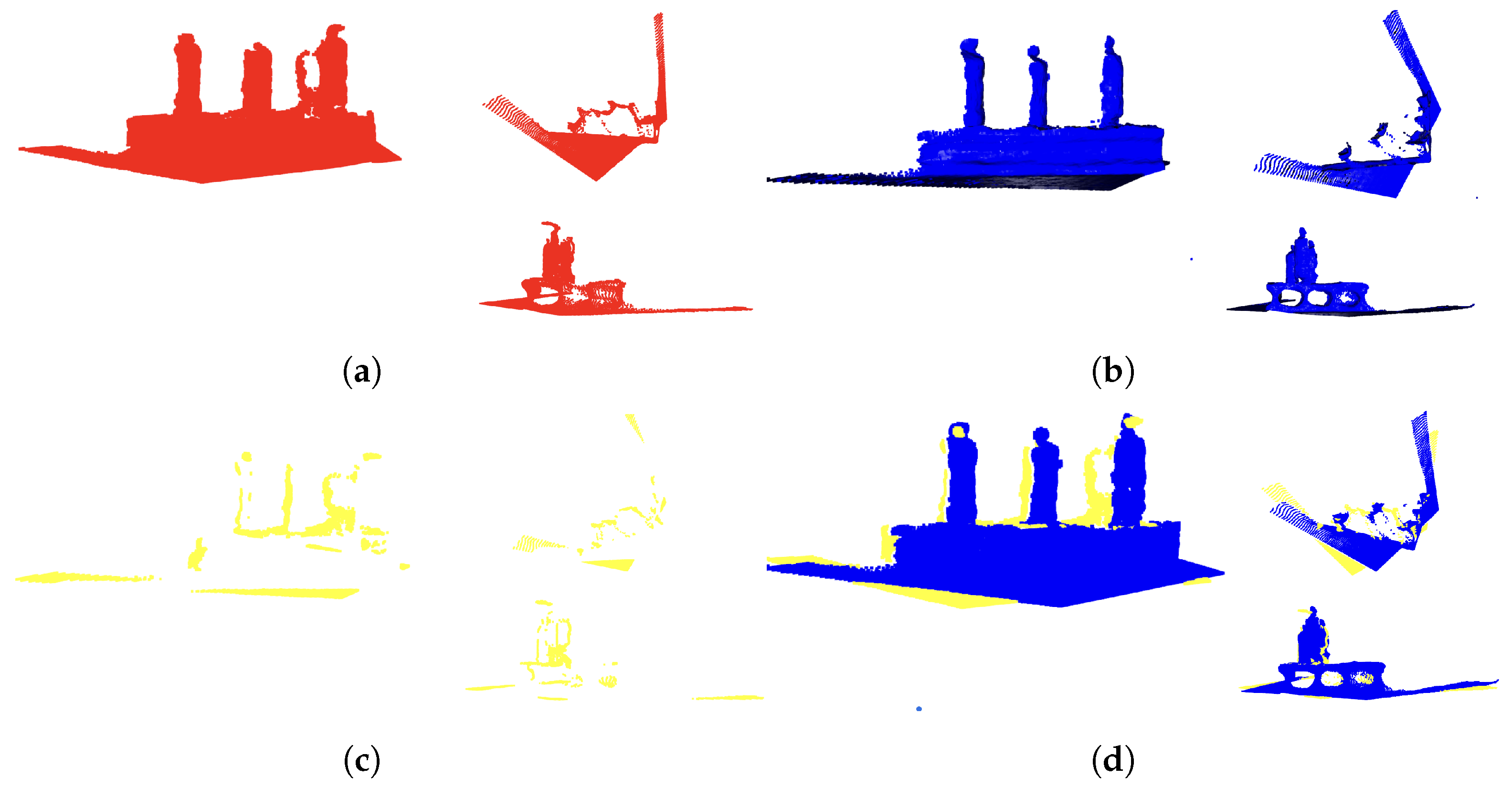

Point Cloud Synthesis

- (1) Point cloud acquisition and preprocessing: The initial point cloud is acquired from the RealSense device at the start of the scanning process, and further point clouds are acquired as scanning continues. Preprocessing steps such as noise removal are applied to improve data quality, yielding a processed version of each point cloud.

- (2) Global registration: Global registration is performed between the preprocessed point clouds using the Random Sample Consensus (RANSAC) algorithm [31] to achieve a coarse alignment. The transformation, consisting of translation p and rotation q, is estimated by minimizing an error function over the sampled point set, where the error is measured between each sampled point in one point cloud and its closest point in the other point cloud.

- (3) Registration refinement: The Iterative Closest Point (ICP) algorithm [32] is applied to further refine the alignment. Starting from the transformation obtained from RANSAC, the error function is minimized to fine-tune the transformation, consisting of translation and rotation.

- (4) Extraction of non-overlapping point clouds: Extracting non-overlapping points is a critical step when synthesizing and updating point clouds from continuous scans, especially on devices with limited processing power. HoloLens2 is well suited to mixed reality applications but has limited onboard processing resources, so only the non-overlapping points are extracted to maintain real-time performance and responsiveness. Using a KD-tree, for each point in the newly acquired point cloud, the nearest neighbor in the existing point cloud is searched within a fixed radius. If no point is found within this radius, the point is added to the non-overlapping point cloud.

- (5) Point cloud merging and delivery: The non-overlapping point cloud is merged with the existing point cloud, which is updated accordingly. The resulting point cloud is then delivered to the MR device and added to the MR space. This process efficiently integrates consecutive point cloud data and enables the generation of detailed point clouds in real time. Figure 7 shows an example of merging two point clouds containing 122,147 and 117,037 points; simply concatenating them would give 239,184 points. In contrast, the non-overlapping point cloud contains only 14,552 points, and the merged point cloud (d) contains 131,589 points, about 45% fewer than simple concatenation, which lightens the point cloud processing load on HoloLens2.
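The non-overlap extraction and merge in steps (4) and (5) can be sketched as follows. This is a minimal pure-Python illustration, assuming both point clouds are already registered in the same frame. The function names, the small hand-written KD-tree, and the 1 cm radius are illustrative assumptions, and a production system would more likely rely on an optimized KD-tree from a point cloud library.

```python
import math

def build_kdtree(points, depth=0):
    """Build a 3D KD-tree as nested tuples: (point, axis, left, right)."""
    if not points:
        return None
    axis = depth % 3
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2
    return (
        ordered[mid],
        axis,
        build_kdtree(ordered[:mid], depth + 1),
        build_kdtree(ordered[mid + 1:], depth + 1),
    )

def has_neighbor(node, query, radius):
    """Return True if any tree point lies within `radius` of `query`."""
    if node is None:
        return False
    point, axis, left, right = node
    if math.dist(point, query) <= radius:
        return True
    diff = query[axis] - point[axis]
    near, far = (left, right) if diff < 0 else (right, left)
    if has_neighbor(near, query, radius):
        return True
    # The far subtree can only contain a hit if the splitting plane is within the radius.
    return abs(diff) <= radius and has_neighbor(far, query, radius)

def merge_non_overlapping(existing, new, radius):
    """Keep only the new points with no existing neighbor within `radius`, then merge."""
    tree = build_kdtree(list(existing))
    fresh = [q for q in new if not has_neighbor(tree, q, radius)]
    return fresh, list(existing) + fresh

# Toy data: two of the four new points duplicate existing ones to within 1 cm.
existing_cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
new_cloud = [(0.004, 0.0, 0.0), (1.0, 0.003, 0.0), (2.0, 0.0, 0.0), (0.0, 1.0, 0.5)]
fresh_points, merged_cloud = merge_non_overlapping(existing_cloud, new_cloud, radius=0.01)
```

Only `fresh_points` would need to be transmitted to the headset in this sketch, which is the source of the reduction in data volume.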

4.5. Map-Integrated Calibration

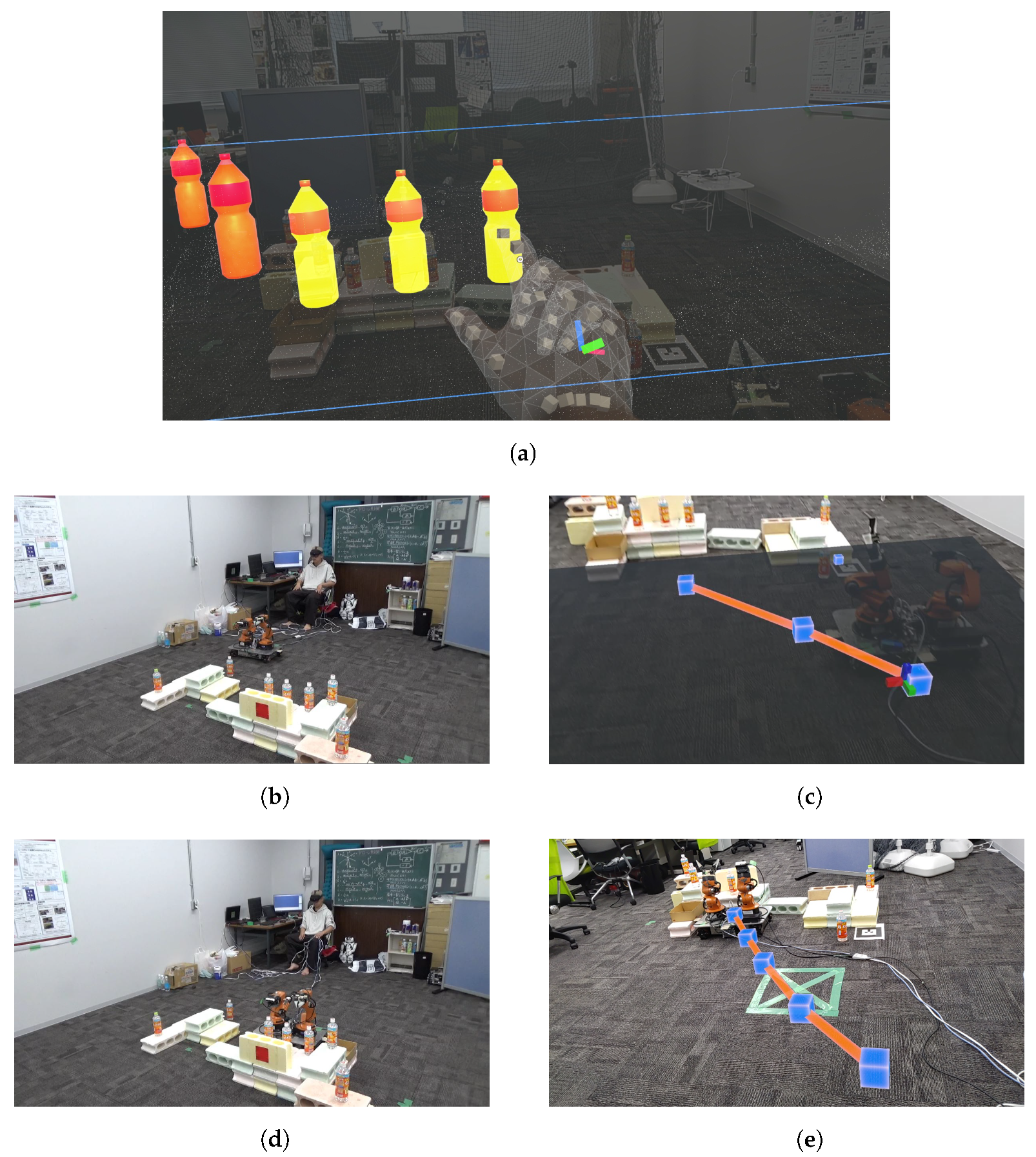

4.6. Route Planning and Navigation of Mobile Robot Using MR Space

4.6.1. Route Planning Process

- (1) Setting the destination: The user specifies the robot’s goal position using the MR interface. For example, if the user wishes to retrieve three bottles, as shown in Figure 13a, the robot must move to a position (shown in Figure 13b) from which it can grasp them. The user selects multiple objects to pick, and the selected objects are highlighted in yellow (Figure 13c). The convex hull of the selected objects’ coordinates is computed, and the centroid of this hull is used as the starting point of the search. The optimal position is then calculated, taking into account the maximum reach of the arm and the safety distance to obstacles. The candidate position p of the mobile base’s centroid is expressed in terms of the hull centroid and two search parameters: a distance r from the centroid, which varies within a bounded range, and an angle that defines the direction of the searched position. The optimal position is chosen such that the distance from the initial position is minimized. This is formulated as an optimization problem in which p must be within the maximum reach distance D of, and at least the safety distance S away from, each object. Here, P denotes the set of coordinates of the selected MR objects, and each coordinate in P corresponds to the position of an individual object selected by the user. By solving this optimization problem, the robot can access the target object group efficiently and safely. The resulting position is the closest to the selected objects, and if multiple optimal positions exist, the one with the shortest distance from the initial position is chosen. In this way, the user only has to select the target objects, and the robot automatically calculates the position from which to reach them.

- (2) Route planning: Once the destination is set, the system uses a graph-based environmental model to determine the optimal route from the robot’s current position to the destination. Dijkstra’s algorithm is applied to select the route that minimizes the total cost between nodes.

- (3) Route visualization: The calculated route is visualized in real time in the MR space, allowing the user to see the route in 3D through their MR device, as shown in Figure 14.
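Steps (1) and (2) can be sketched as follows, under stated assumptions. The goal search below samples candidate positions on rings around the selected objects and keeps the feasible one nearest to the robot's initial position. For brevity it uses the mean of the object coordinates in place of the convex hull centroid, and the sampled radii, the number of angles, and all function names are hypothetical. The route search is a textbook Dijkstra implementation over a hand-made graph, not the environmental model used by the system.

```python
import heapq
import math

def choose_base_position(objects, start, reach, safety, radii, n_angles=36):
    """Sample positions around the objects; return the feasible one closest to `start`."""
    # Simplification: mean of the points instead of the convex hull centroid.
    cx = sum(x for x, _ in objects) / len(objects)
    cy = sum(y for _, y in objects) / len(objects)
    best, best_cost = None, math.inf
    for r in radii:
        for k in range(n_angles):
            theta = 2.0 * math.pi * k / n_angles
            p = (cx + r * math.cos(theta), cy + r * math.sin(theta))
            dists = [math.dist(p, o) for o in objects]
            # Feasible: every object within reach D and no object closer than S.
            if max(dists) <= reach and min(dists) >= safety:
                cost = math.dist(p, start)
                if cost < best_cost:
                    best, best_cost = p, cost
    return best

def dijkstra(graph, source, target):
    """Shortest path on {node: [(neighbor, cost), ...]}; returns (cost, path)."""
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == target:
            break
        if d > dist.get(node, math.inf):
            continue  # stale heap entry
        for nxt, cost in graph.get(node, []):
            nd = d + cost
            if nd < dist.get(nxt, math.inf):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if target not in dist:
        return math.inf, []
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]

# Three bottles in a row, robot starting at the origin (toy values).
bottles = [(2.0, 0.0), (2.0, 0.4), (2.0, -0.4)]
goal = choose_base_position(bottles, start=(0.0, 0.0), reach=0.8, safety=0.3,
                            radii=[0.3, 0.4, 0.5, 0.6])

# Toy waypoint graph with edge costs in metres.
graph = {
    "A": [("B", 1.0), ("C", 4.0)],
    "B": [("C", 1.5), ("D", 5.0)],
    "C": [("D", 1.0)],
    "D": [],
}
route_cost, route = dijkstra(graph, "A", "D")  # (3.5, ["A", "B", "C", "D"])
```

In this toy case the selected goal lies on the side of the bottles facing the robot, since that is the feasible candidate nearest to the start.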

4.6.2. User-Driven Route Modification

- (1) Initiating route modification: The user selects the specific waypoints they wish to modify using the MR device.

- (2) Adjusting waypoints: The user directly drags and drops the selected waypoints to new positions in the MR space, modifying the route in real time.

- (3) Updating the route: When the position of a waypoint is changed, the system automatically recalculates the route and displays the updated path in the MR space.

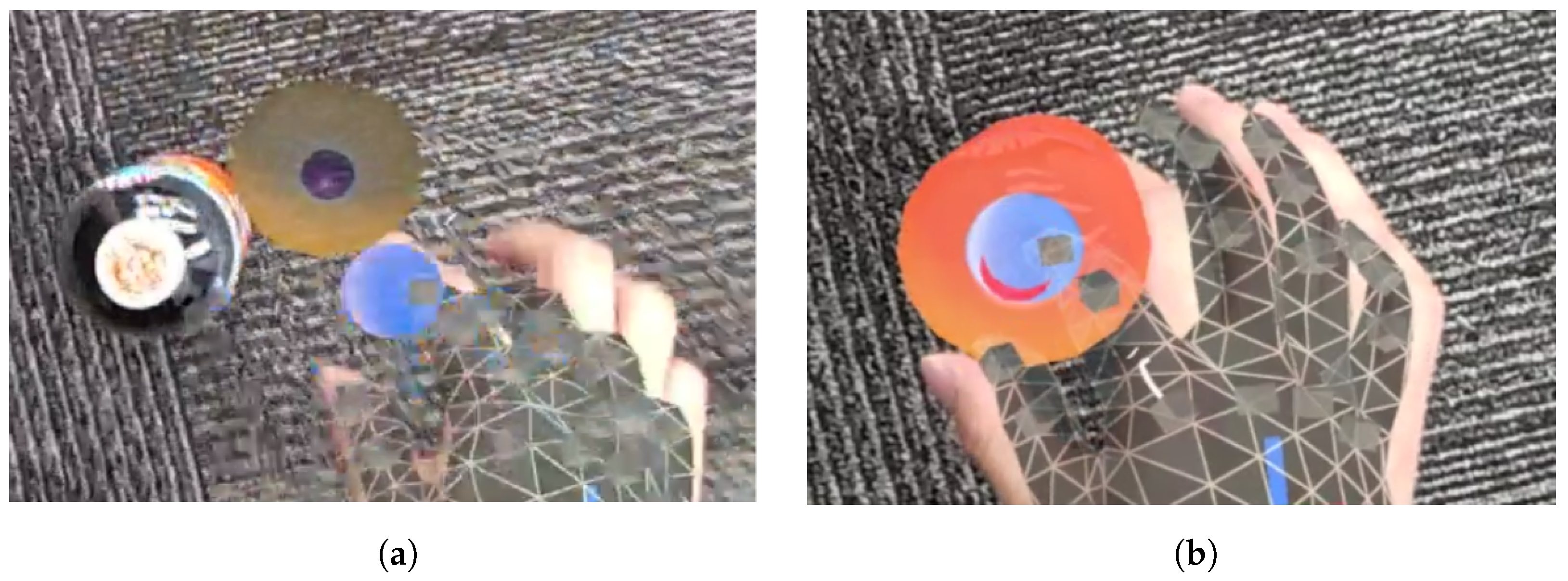

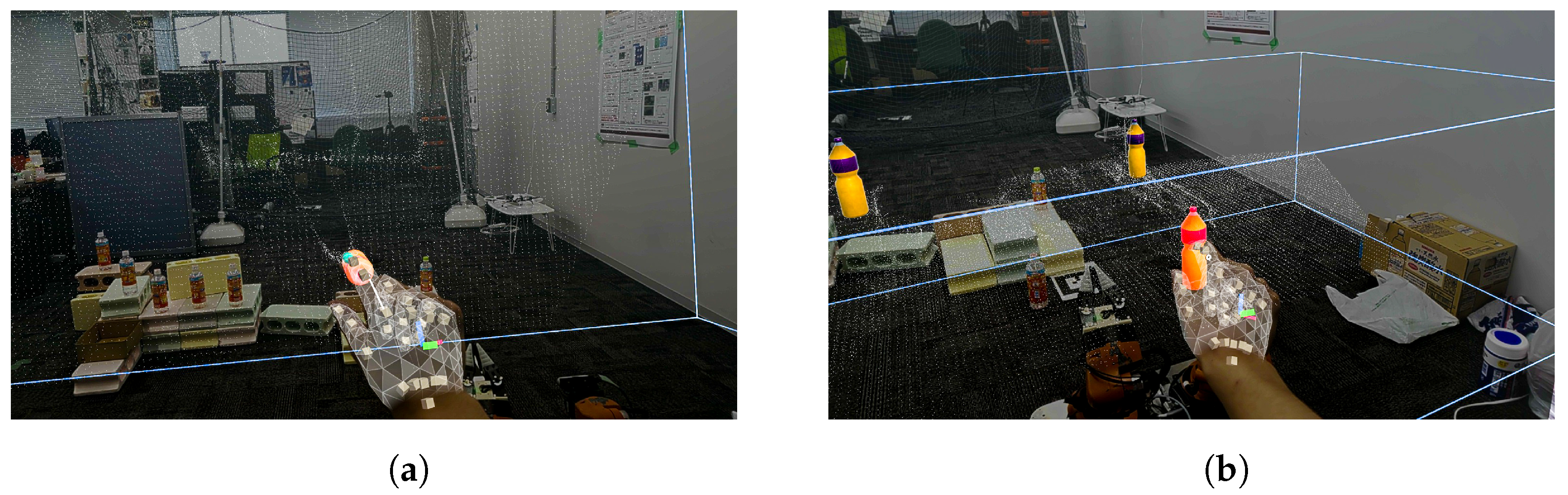

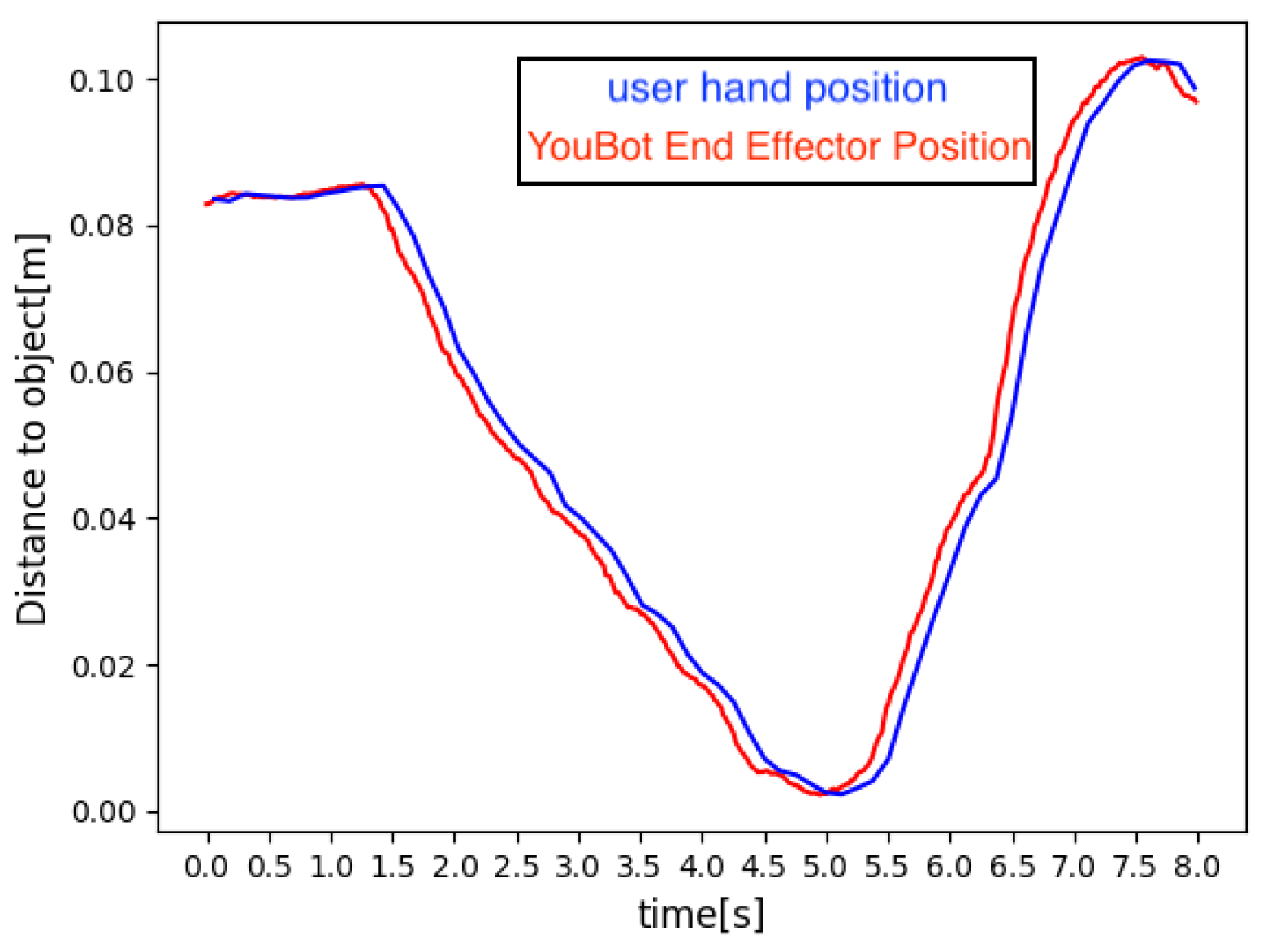

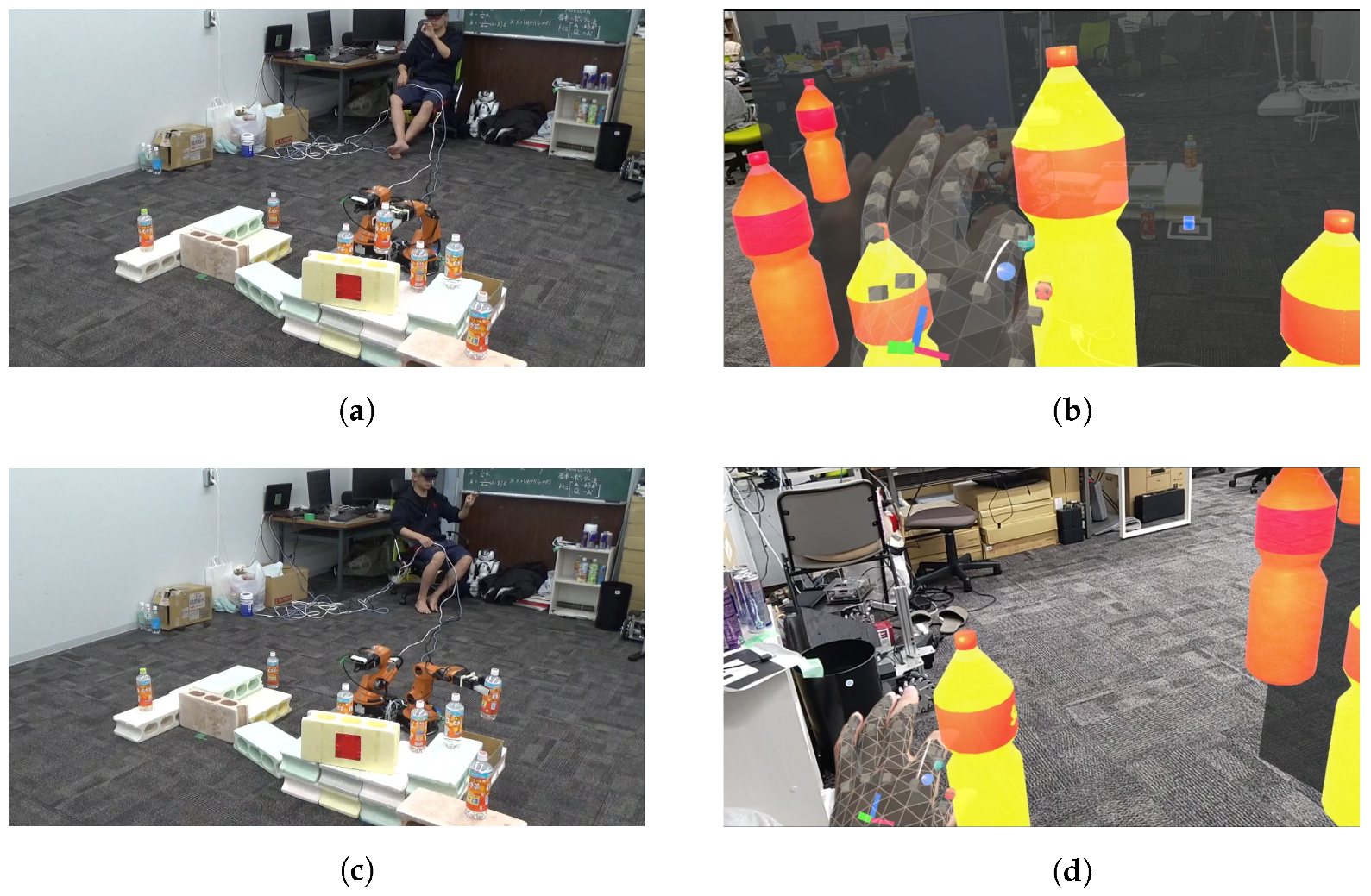

4.7. Real-Time Operation of Robot Arm by Hand Tracking

5. Verification of Alignment between MR Objects and Real Objects

5.1. Experimental Setup

5.2. Experimental Results

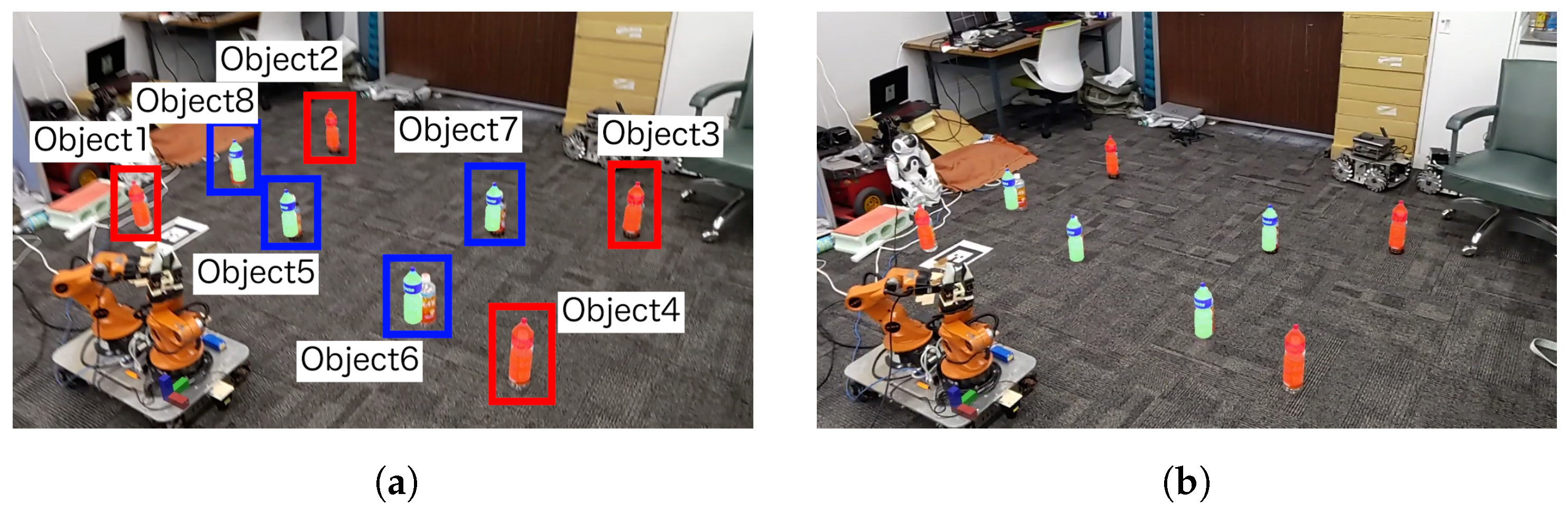

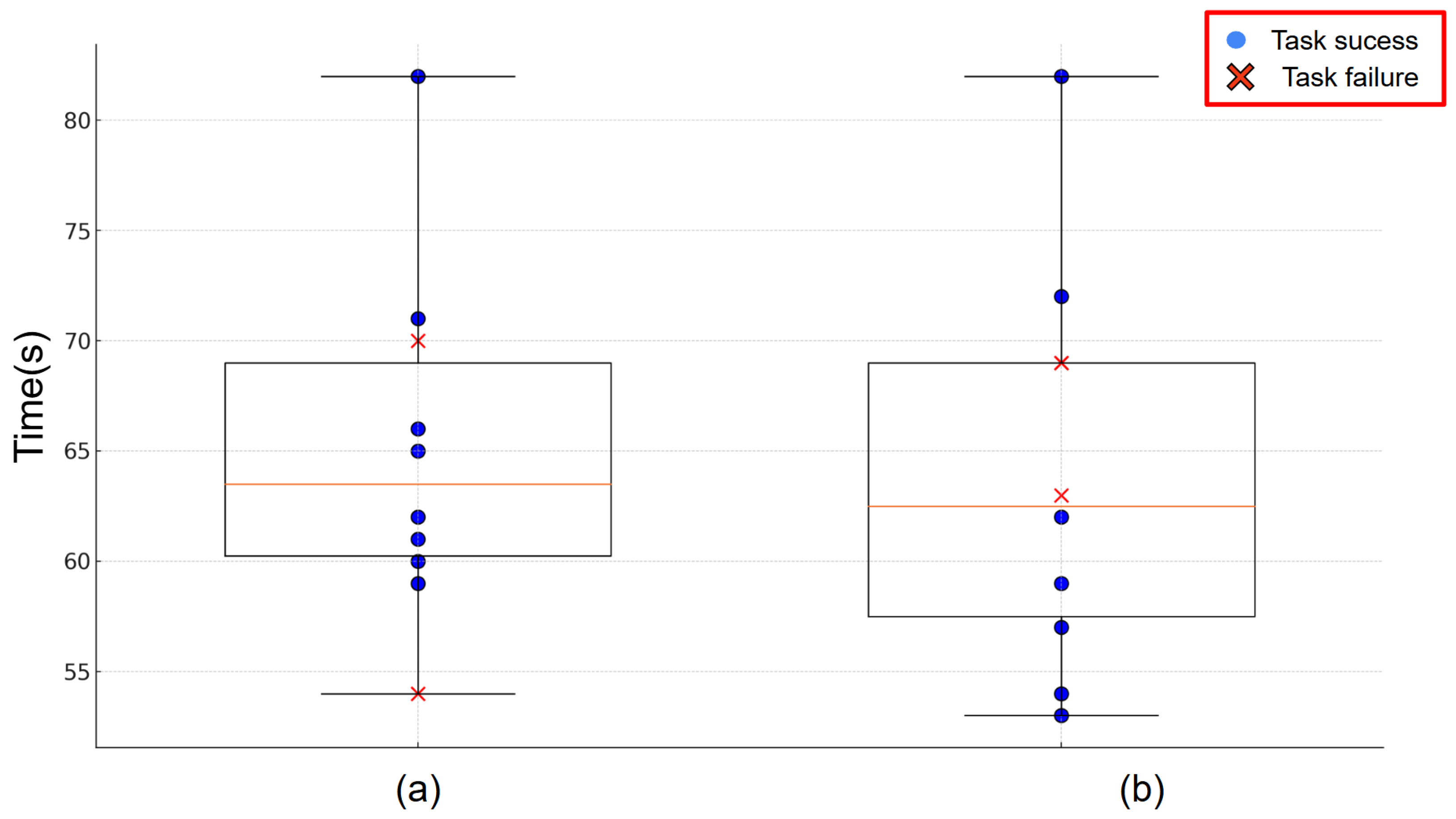

6. Verification Using Pick-and-Place Experiments

6.1. Experimental Setup

6.2. Experimental Results

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhang, T.; McCarthy, Z.; Jowl, O.; Lee, D.; Chen, X.; Goldberg, K.; Abbeel, P. Deep Imitation Learning for Complex Manipulation Tasks from Virtual Reality Teleoperation. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 5628–5635.

- Sereno, M.; Wang, X.; Besancon, L.; Mcguffin, M.J.; Isenberg, T. Collaborative Work in Augmented Reality: A Survey. IEEE Trans. Vis. Comput. Graph. 2020, 72, 2530–2549.

- Ens, B.; Lanir, J.; Tang, A.; Bateman, S.; Lee, G.; Piumsomboon, T.; Billinghurst, M. Revisiting Collaboration through Mixed Reality: The Evolution of Groupware. Int. J. Hum. Comput. Stud. 2019, 131, 81–98.

- Belen, R.A.J.D.; Nguyen, H.; Filonik, D.; del Favero, D.; Bednarz, T. A Systematic Review of the Current State of Collaborative Mixed Reality Technologies: 2013–2018. AIMS Electron. Electr. Eng. 2019, 3, 181–223.

- Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, E77-D, 1321–1329.

- Demian, A.; Ostanin, M.; Klimchik, A. Dynamic Object Grasping in Human-Robot Cooperation Based on Mixed-Reality. In Proceedings of the 2021 International Conference “Nonlinearity, Information and Robotics” (NIR), Innopolis, Russia, 26–29 August 2021; pp. 1–5.

- Ostanin, M.; Klimchik, A. Interactive Robot Programming Using Mixed-Reality. IFAC-PapersOnLine 2018, 51, 50–55.

- Ostanin, M.; Yagfarov, R.; Klimchik, A. Interactive Robots Control Using Mixed Reality. IFAC-PapersOnLine 2019, 52, 695–700.

- Delmerico, J.; Poranne, R.; Bogo, F. Spatial Computing and Intuitive Interaction: Bringing Mixed Reality and Robotics Together. IEEE Robot. Autom. Mag. 2022, 24, 45–57.

- Sun, D.; Kiselev, A.; Liao, Q.; Stoyanov, T. A New Mixed-Reality-Based Teleoperation System for Telepresence and Maneuverability Enhancement. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 55–67.

- Esaki, H.; Sekiyama, K. Human-Robot Interaction System based on MR Object Manipulation. In Proceedings of the 2023 62nd Annual Conference of the Society of Instrument and Control Engineers (SICE), Tsu, Japan, 6–9 September 2023; pp. 598–603.

- Norman, D.A.; Draper, S.W. User Centered System Design: New Perspectives on Human-Computer Interaction; L. Erlbaum Associates Inc.: Mahwah, NJ, USA, 1986.

- Dinh, T.Q.; Yoon, J.I.; Marco, J.; Jennings, P.; Ahn, K.K.; Ha, C. Sensorless Force Feedback Joystick Control for Teleoperation of Construction Equipment. Int. J. Precis. Eng. Manuf. 2017, 18, 955–969.

- Truong, D.Q.; Truong, B.N.M.; Trung, N.T.; Nahian, S.A.; Ahn, K.K. Force Reflecting Joystick Control for Applications to Bilateral Teleoperation in Construction Machinery. Int. J. Precis. Eng. Manuf. 2017, 18, 301–315.

- Komatsu, R.; Fujii, H.; Tamura, Y.; Yamashita, A.; Asama, H. Free Viewpoint Image Generation System Using Fisheye Cameras and a Laser Rangefinder for Indoor Robot Teleoperation. ROBOMECH J. 2020, 7, 15.

- Nakanishi, J.; Itadera, S.; Aoyama, T.; Hasegawa, Y. Towards the Development of an Intuitive Teleoperation System for Human Support Robot Using a VR Device. Adv. Robot. 2020, 34, 1239–1253.

- Meeker, C.; Rasmussen, T.; Ciocarlie, M. Intuitive Hand Teleoperation by Novice Operators Using a Continuous Teleoperation Subspace. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, Australia, 21–25 May 2018; pp. 5821–5827.

- Yew, A.W.W.; Ong, S.K.; Nee, A.Y.C. Immersive Augmented Reality Environment for the Teleoperation of Maintenance Robots. Procedia CIRP 2017, 61, 305–310.

- Ellis, S.R.; Adelstein, B.D.; Welch, R.B. Kinesthetic Compensation for Misalignment of Teleoperator Controls through Cross-Modal Transfer of Movement Coordinates. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Baltimore, MD, USA, 29 September–4 October 2002; Volume 46, pp. 1551–1555.

- Ribeiro, L.G.; Suominen, O.J.; Durmush, A.; Peltonen, S.; Morales, E.R.; Gotchev, A. Retro-Reflective-Marker-Aided Target Pose Estimation in a Safety-Critical Environment. Appl. Sci. 2021, 11, 3.

- Bejczy, B.; Bozyil, R.; Vaiekauskas, E.; Petersen, S.B.K.; Bogh, S.; Hjorth, S.S.; Hansen, E.B. Mixed Reality Interface for Improving Mobile Manipulator Teleoperation in Contamination Critical Applications. Procedia Manuf. 2017, 51, 620–626.

- Triantafyllidis, E.; McGreavy, C.; Gu, J.; Li, Z. Study of Multimodal Interfaces and the Improvements on Teleoperation. IEEE Access 2020, 8, 78213–78227.

- Marques, B.; Silva, S.S.; Alves, J.; Araujo, T.; Dias, P.M.; Santos, B.S. A Conceptual Model and Taxonomy for Collaborative Augmented Reality. IEEE Trans. Vis. Comput. Graph. 2021, 28, 5113–5133.

- Marques, B.; Teixeira, A.; Silva, S.; Alves, J.; Dias, P.; Santos, B.S. A Critical Analysis on Remote Collaboration Mediated by Augmented Reality: Making a Case for Improved Characterization and Evaluation of the Collaborative Process. Comput. Graph. 2022, 102, 619–633.

- Nakamura, K.; Tohashi, K.; Funayama, Y.; Harasawa, H.; Ogawa, J. Dual-Arm Robot Teleoperation Support with the Virtual World. Artif. Life Robot. 2020, 2, 286–293.

- Whitney, D.; Rosen, E.; Ullman, D.; Phillips, E.; Tellex, S. ROS Reality: A Virtual Reality Framework Using Consumer-Grade Hardware for ROS-Enabled Robots. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 5018–5025.

- Whitney, D.; Rosen, E.; Phillips, E.; Konidaris, G.; Tellex, S. Comparing Robot Grasping Teleoperation across Desktop and Virtual Reality with ROS Reality. In Proceedings of the Robotics Research: The 18th International Symposium ISRR, Puerto Varas, Chile, 11–14 December 2017; pp. 335–350.

- Delpreto, J.; Lipton, J.I.; Sanneman, L.; Fay, A.J.; Fourie, C.; Choi, C.; Rus, D. Helping Robots Learn: A Human-Robot Master-Apprentice Model Using Demonstrations via Virtual Reality Teleoperation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 10226–10233.

- Britton, N.; Yoshida, K.; Walker, J.; Nagatani, K.; Taylor, G.; Dauphin, L. Lunar Micro Rover Design for Exploration through Virtual Reality Tele-Operation. In Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2015; Volume 105, pp. 259–272.

- Wojke, N.; Bewley, A. Simple Online and Realtime Tracking with a Deep Association Metric. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 99, 3645–3649.

- Raguram, R.; Chum, O.; Pollefeys, M.; Matas, J.; Frahm, J. USAC: A Universal Framework for Random Sample Consensus. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2022–2038.

- Rusinkiewicz, S.; Levoy, M. Efficient Variants of the ICP Algorithm. In Proceedings of the Third International Conference on 3-D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May–1 June 2001; pp. 145–152.

- Moré, J.J. The Levenberg-Marquardt Algorithm: Implementation and Theory. In Numerical Analysis; Springer: Berlin/Heidelberg, Germany, 1978; Volume 630, pp. 105–116.

- Nakamura, K.; Sekiyama, K. Robot Symbolic Motion Planning and Task Execution Based on Mixed Reality Operation. IEEE Access 2023, 11, 112753–112763.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Esaki, H.; Sekiyama, K. Immersive Robot Teleoperation Based on User Gestures in Mixed Reality Space. Sensors 2024, 24, 5073. https://doi.org/10.3390/s24155073
