Abstract

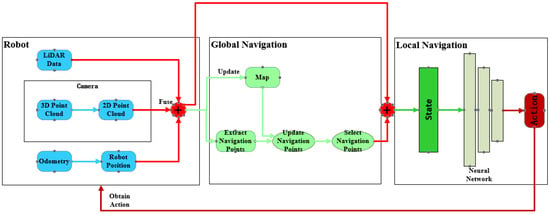

In the domain of mobile robot navigation, conventional path-planning algorithms typically rely on predefined rules and prior map information, which significantly limits them in unknown, complex environments. With the rapid development of artificial intelligence, deep reinforcement learning (DRL) algorithms have proven effective across a wide range of application scenarios. In this study, we introduce a self-exploration and navigation approach based on a deep reinforcement learning framework to address the navigation challenges that mobile robots face in unfamiliar environments. First, we fuse data from the robot’s onboard lidar and camera and combine them with odometer readings and target coordinates to form the instantaneous state of the decision environment. Next, a deep neural network processes these composite inputs to generate motion control strategies, which are integrated into the local planning component of the robot’s navigation stack. Finally, we employ a novel heuristic function that combines map information with the global objective to select the optimal local navigation points, guiding the robot progressively toward its global target point. In experiments in complex, unknown environments without predefined map information, our method outperforms comparable navigation methods.

1. Introduction

As Simultaneous Localization and Mapping (SLAM) has matured, high-precision localization and robust mapping have become achievable. SLAM systems rely on operators controlling measurement devices to collect positional data and environmental features from which maps are constructed [1]. In unknown environments, a human operator can speed up a device’s arrival at a target point by drawing on experience and an understanding of the environment to plan a path. However, this approach is constrained by labor costs, potential hazards, and physical limitations. Automated exploration and navigation path-planning techniques have therefore become a research hotspot. Current research focuses on integrating autonomous navigation with mapping, where mobile robots use map information to plan a path to the target point autonomously via a planner [2]. Algorithms such as A*, Dijkstra [3], RRT, and RRT* [4] use existing map information to plan optimal or near-optimal paths to the destination [5]. However, because they require a map in advance, these methods become impractical in unknown environments. As deep reinforcement learning has been applied in robotics, its ability to learn decision-making policies through interaction has made it a viable option for autonomous navigation. By using deep reinforcement learning algorithms to emulate human characteristics and intelligence [6], robots learn action policies conditioned on the current environmental state, which allows them to navigate effectively even in unknown environments after training in diverse environments [7]. This makes deep reinforcement learning particularly suitable for the autonomous navigation of mobile robots. However, popular deep reinforcement learning algorithms such as DDPG [8], TD3 [9,10], and SAC [11] accept only limited input state dimensions, and their action policies depend only on the current environmental information, which can trap the policy in local optima or cause action loops or stagnation [12].
Additionally, when the target point is beyond the training range, the robot may fail to navigate correctly. In such cases, intermediate nodes need to be selected between the mobile robot and the target point, gradually approaching the target by continuously selecting intermediate nodes. Heuristic functions play a crucial role in this process, synthesizing various information in the robot’s surrounding environment to score different intermediate nodes and select the optimal navigation node [13].
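To make the role of such a heuristic concrete, the sketch below scores each candidate intermediate node by the distance the robot must travel to reach it plus a weighted straight-line distance from the node to the global target, and selects the lowest score. This is a generic A*-style heuristic chosen for illustration, not the CPTD function proposed in this work; the function names and the weight `w_goal` are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def score_candidate(robot: Point, candidate: Point, goal: Point, w_goal: float = 1.0) -> float:
    """Lower is better: cost to reach the candidate plus weighted remaining distance to the goal."""
    return math.dist(robot, candidate) + w_goal * math.dist(candidate, goal)


def select_waypoint(robot: Point, candidates: List[Point], goal: Point, w_goal: float = 1.0) -> Point:
    """Return the candidate intermediate node with the lowest heuristic score."""
    if not candidates:
        raise ValueError("no candidate navigation points available")
    return min(candidates, key=lambda c: score_candidate(robot, c, goal, w_goal))


# Example: robot at the origin, global target 10 m ahead on the x-axis.
waypoint = select_waypoint((0.0, 0.0), [(2.0, 2.0), (3.0, 0.0), (0.0, 3.0)], (10.0, 0.0))
print(waypoint)  # (3.0, 0.0)
```

Raising `w_goal` above 1 makes the selection greedier toward the target; once the robot reaches the chosen node, the candidates are re-extracted and the selection repeats.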

Deep reinforcement learning requires real-time state information and environmental perception data from the robot during training, which come primarily from the sensors mounted on the robot. Lidar and cameras, the robot’s primary perception devices, detect obstacles and capture information about the surrounding environment, while the odometer provides the robot’s position as well as its relative distance and azimuth angle to the target point. Lidar can be categorized into 2D and 3D types; both offer high precision in object detection and are insensitive to changes in lighting conditions [14]. A 2D lidar, limited to its scanning plane, cannot detect obstacles above or below that plane, whereas a 3D lidar can identify obstacles in multiple dimensions but at a higher cost. Although cameras are cheaper and provide rich visual information, their performance depends strongly on lighting conditions, making it difficult to obtain clear images in strong or dim light [15]. Fusing lidar and camera data combines their complementary strengths, overcoming their respective limitations and improving the performance of the perception system [16].
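The relative distance and azimuth mentioned above can be derived from the odometry pose (x, y, yaw) and the target coordinates, and then concatenated with range readings to form the policy input. The sketch below assumes a state layout of range bins followed by goal distance, goal bearing, and the previous velocity command, a layout common in lidar-based DRL navigation; the exact state vector of this work is not given in this section, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def goal_polar(x: float, y: float, yaw: float, gx: float, gy: float):
    """Distance and bearing (rad, wrapped to [-pi, pi)) from the robot pose to the goal."""
    dx, dy = gx - x, gy - y
    distance = math.hypot(dx, dy)
    bearing = math.atan2(dy, dx) - yaw
    bearing = (bearing + math.pi) % (2.0 * math.pi) - math.pi  # wrap the angle
    return distance, bearing


def build_state(scan_bins: Sequence[float], x: float, y: float, yaw: float,
                gx: float, gy: float, last_v: float, last_w: float) -> List[float]:
    """Hypothetical state layout: fused range bins + goal polar coordinates + previous action."""
    distance, bearing = goal_polar(x, y, yaw, gx, gy)
    return list(scan_bins) + [distance, bearing, last_v, last_w]
```

Expressing the goal in the robot’s own polar frame keeps the input invariant to where the episode starts, which is one reason this representation is popular for learned local planners.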

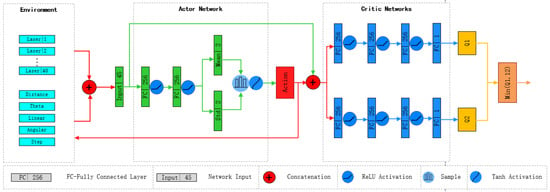

Therefore, we propose an autonomous navigation system that guides mobile robots to target locations without relying on prior information. The system extracts all potential navigation nodes around the robot and evaluates them to select the optimal one. A Soft Actor–Critic (SAC) deep reinforcement learning network then processes the sensor inputs to generate motion strategies that reach the selected navigation point, guiding the robot step by step to the global target point. The system does not require pre-mapping of unknown environments, and its node-selection strategy effectively reduces the risk of falling into local optima. The main contributions of this work are summarized as follows:

- Designed and implemented an algorithm based on the Soft Actor–Critic (SAC) network architecture, providing continuous action outputs for a four-wheeled mobile robot to navigate toward local goals.

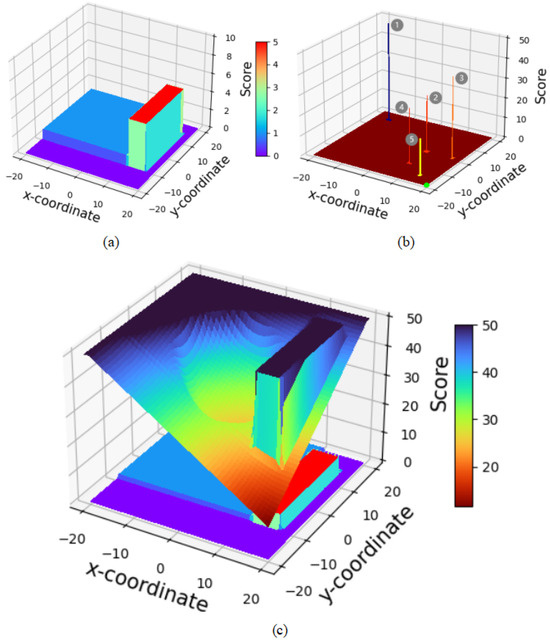

- Proposed a Candidate Point-Target Distance (CPTD) method, an improved heuristic algorithm that integrates a heuristic evaluation function into the navigation system of a four-wheeled mobile robot. This function is used to assess navigation path points and guide the robot toward the global target point.

- Introduced a Bird’s-Eye View (BEV) mapping and data-level fusion approach, integrating a 2D lidar sensor with a camera to identify obstacles at different heights in the environment, thereby enhancing the robot’s environmental perception capabilities.
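One simple way to realize data-level lidar and camera fusion is to project the depth camera’s point cloud into a bird’s-eye view, collapse the points within the robot’s height band into a pseudo laser scan that uses the same angular bins as the 2D lidar, and take the element-wise minimum of the two scans. Obstacles above or below the lidar plane then still appear as ranges. The sketch below assumes the point cloud has already been transformed into the robot frame; the height band, field of view, and function names are hypothetical, and it illustrates the idea rather than the exact BEV pipeline of this work.

```python
import numpy as np


def points_to_scan(points, angle_min, angle_max, n_bins, z_min, z_max, max_range):
    """Collapse 3D points (robot frame: x forward, y left, z up) into a 2D pseudo-scan.

    Points whose height lies in [z_min, z_max] are projected onto the ground plane
    (a bird's-eye view), and the nearest range in each angular bin is kept.
    """
    pts = np.asarray(points, dtype=float).reshape(-1, 3)
    pts = pts[(pts[:, 2] >= z_min) & (pts[:, 2] <= z_max)]
    scan = np.full(n_bins, max_range, dtype=float)
    angles = np.arctan2(pts[:, 1], pts[:, 0])
    ranges = np.minimum(np.hypot(pts[:, 0], pts[:, 1]), max_range)
    in_fov = (angles >= angle_min) & (angles < angle_max)
    bins = ((angles[in_fov] - angle_min) / (angle_max - angle_min) * n_bins).astype(int)
    bins = np.clip(bins, 0, n_bins - 1)
    np.minimum.at(scan, bins, ranges[in_fov])  # nearest obstacle per angular bin
    return scan


def fuse_scans(lidar_scan, camera_scan):
    """Data-level fusion: keep the closer obstacle reported by either sensor."""
    return np.minimum(np.asarray(lidar_scan, dtype=float), np.asarray(camera_scan, dtype=float))


cloud = [(1.0, 0.0, 0.1), (2.0, 0.0, 0.1), (0.5, 0.0, 1.5)]  # last point is above the band
cam = points_to_scan(cloud, -np.pi / 2, np.pi / 2, 4, 0.05, 0.5, 10.0)
print(fuse_scans([3.0, 3.0, 3.0, 3.0], cam))  # [3. 3. 1. 3.]
```

Because bins outside the camera’s narrower field of view stay at `max_range`, the minimum leaves the lidar reading untouched there, so the fusion only ever adds obstacles.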

2. Related Work

With the spread of deep learning algorithms in robotics, researchers have begun to apply deep learning to robot exploration and navigation, where it has proven effective for robotic motion decision-making problems. In [17], Kontoudis et al. proposed an online motion planning algorithm that combines RRT and Q-learning, optimizing policies and costs for continuous-time linear systems via model-free Q-functions and integral reinforcement learning; terminal state evaluation and local replanning were also introduced to improve real-time performance and adaptability to obstacles. Marchesini et al. [18] combined DDQN with parallel asynchronous training and multi-batch prioritized experience replay, reducing training time while maintaining success rates and demonstrating its potential as a viable alternative for mapless navigation. In [19], Dong et al. proposed an improved DDPG algorithm that dynamically adjusts the exploration factor using limited prior knowledge and an ε-greedy algorithm, accelerating the training of deep reinforcement learning and reducing the number of trial-and-error attempts. Li et al. [20] proposed an improved TD3 algorithm that incorporates prioritized experience replay and a dynamic delayed update strategy, which reduced the impact of value estimation errors, improved the success rate of path planning, and shortened training time.
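Prioritized experience replay, used in [18] and [20], samples stored transitions in proportion to the magnitude of their temporal-difference (TD) error rather than uniformly. The sketch below shows the standard proportional variant with an importance-sampling correction; the exponents `alpha` and `beta` and the function names are illustrative assumptions, not settings reported by those works.

```python
import numpy as np


def per_probabilities(td_errors, alpha=0.6, eps=1e-6):
    """Proportional prioritization: P(i) = p_i^alpha / sum_k p_k^alpha, with p_i = |delta_i| + eps."""
    priorities = (np.abs(np.asarray(td_errors, dtype=float)) + eps) ** alpha
    return priorities / priorities.sum()


def importance_weights(probs, indices, beta=0.4):
    """Importance-sampling weights w_i = (N * P(i))^-beta, normalized by their maximum."""
    w = (len(probs) * probs[indices]) ** (-beta)
    return w / w.max()


rng = np.random.default_rng(0)
probs = per_probabilities([0.1, 1.0, 3.0])
batch = rng.choice(len(probs), size=2, p=probs)  # large-TD-error transitions are drawn more often
weights = importance_weights(probs, batch)       # scale each sample's loss to correct the bias
```

The weights counteract the bias introduced by non-uniform sampling; `beta` is usually annealed toward 1 over training.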

The methods above rely on a single sensor; although they can perceive the environment and navigate correctly, they are at risk when they encounter special types of obstacles. In [21,22], ultrasonic sensors were combined with cameras, and the camera data and ultrasonic measurements were merged using occupancy grid algorithms. This enabled the robot to detect obstacles efficiently and reach its target accurately. However, because of the wide beam angle of ultrasonic sensors, protruding objects on walls can be misidentified when the robot navigates close to them. Zhang et al. [23] fused lidar and RGB-D camera data to improve the accuracy of 2D grid maps and then used an improved A* algorithm for mapping and navigation. In [24], Theodoridou et al. integrated RGB-D and lidar data to achieve 3D human pose estimation and tracking, allowing the robot to navigate without invading people’s personal space. In [25], lidar and RGB-D camera data were combined, and GPU-based parallel computation was used to implement an asynchronous update algorithm, improving the efficiency of obstacle recognition and data processing. Despite its advantages in efficiency and learning performance, this algorithm risks overfitting, especially when training data are limited or the environment is highly dynamic.
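Occupancy-grid fusion of camera and ultrasonic data, as described for [21,22], is commonly implemented with a log-odds update in which each sensor’s occupancy probability for a cell adds evidence to that cell’s belief. A minimal single-cell sketch follows; the conditional-independence assumption and the probability values are illustrative and are not taken from those works.

```python
import math


def logit(p: float) -> float:
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def fuse_cell(prior: float, sensor_probs) -> float:
    """Combine per-sensor occupancy probabilities for one grid cell in log-odds form.

    Assumes the sensors are conditionally independent given the cell state, so each
    measurement adds its log-odds evidence relative to the prior.
    """
    log_odds = logit(prior)
    for p in sensor_probs:
        log_odds += logit(p) - logit(prior)
    return 1.0 / (1.0 + math.exp(-log_odds))


# Camera and ultrasonic sensor both report 0.7 for a cell with an uninformed 0.5 prior.
print(round(fuse_cell(0.5, [0.7, 0.7]), 4))  # 0.8448
```

Two moderately confident, agreeing sensors yield a more confident belief, while contradictory readings (0.7 and 0.3) cancel back to the prior.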

In summary, deep learning techniques perform well on modular tasks but have limitations as global end-to-end solutions, and multiple technical challenges typically arise when transferring from simulated environments to real-world scenarios. To address goal-oriented exploration, this study proposes an efficient learning strategy that transforms depth camera data, fuses it with lidar data, and combines the result with a global navigation strategy. The resulting navigation system aims to reach a predetermined global target location autonomously in unknown environments, without preset detailed paths, while actively recognizing and avoiding obstacles along the way. Through this integration, the system can make more efficient use of the precise distance measurements of lidar to achieve comprehensive environmental perception and accurate navigation.

4. Experiments and Results

4.1. Device and Parameter Settings

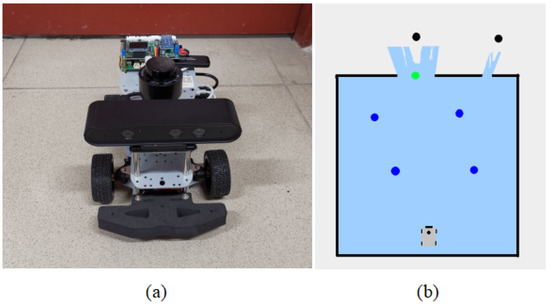

The experiments were run on a computer equipped with an NVIDIA RTX 3060 graphics card (Santa Clara, CA, USA), 64 GB of RAM, and an Intel Core i5-12490F CPU (Santa Clara, CA, USA), which executed the deep reinforcement learning algorithms to learn local navigation strategies. The SAC network was trained in an environment built in the Gazebo simulation software (Gazebo 9). During training, each episode ended when the robot reached the target, collided, or executed 500 steps. The maximum linear velocity of the robot was set to 1 m/s, and the maximum angular velocity was set to 1 rad/s. For candidate navigation points, the internal and external discount limits for the heuristic function were set to m and m, respectively. The calculation of environmental information for candidate points was set to internal.

In the real-world experiments, the platform was an Ackermann mobile robot, shown in Figure 3a. It was equipped with a Jetson Orin Nano 4G microcomputer running Ubuntu 20.04 to execute the autonomous navigation programs, as well as a Leishen N10P lidar sensor (Shenzhen, China) and an Astra_s stereo depth camera (Shenzhen, China) for object recognition in the environment. A laptop running Ubuntu 18.04, equipped with an NVIDIA RTX 3060 graphics card, 16 GB of RAM, and an AMD Ryzen7 5800H CPU (Sunnyvale, CA, USA), was used to monitor and control the robot remotely.
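The episode termination rule and velocity limits above can be sketched as follows. The 500-step limit and the 1 m/s and 1 rad/s bounds come from this section; the forward-only action mapping, the goal and collision thresholds, and the function names are assumptions made for illustration.

```python
V_MAX = 1.0      # maximum linear velocity, m/s (from the training setup)
W_MAX = 1.0      # maximum angular velocity, rad/s (from the training setup)
MAX_STEPS = 500  # episode step limit (from the training setup)


def scale_action(action):
    """Map a tanh-squashed policy output in [-1, 1]^2 to (v, w) commands.

    Assumption: forward-only driving, so the first component is shifted to [0, V_MAX].
    """
    a_v, a_w = (max(-1.0, min(1.0, float(a))) for a in action)
    v = (a_v + 1.0) / 2.0 * V_MAX
    w = a_w * W_MAX
    return v, w


def episode_status(dist_to_goal, min_range, step, goal_radius=0.3, collision_range=0.35):
    """Return (done, reason); goal_radius and collision_range (m) are hypothetical thresholds."""
    if dist_to_goal < goal_radius:
        return True, "goal"
    if min_range < collision_range:
        return True, "collision"
    if step >= MAX_STEPS:
        return True, "timeout"
    return False, None
```

Checking the goal before the collision test means a robot that reaches the target while close to an obstacle is still credited with arrival; the opposite ordering is an equally valid design choice.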

4.2. Network Training

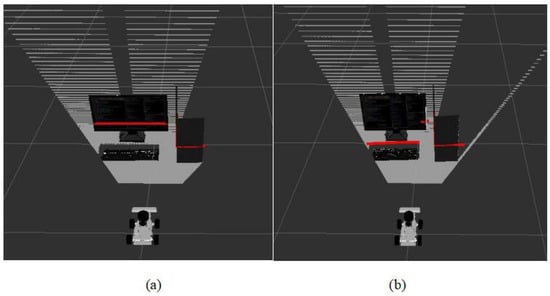

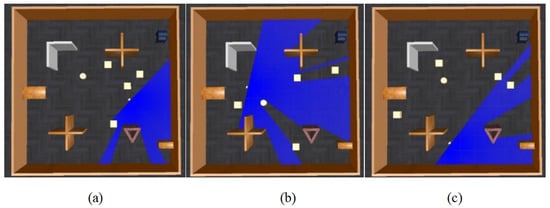

To enable the robot to avoid obstacles autonomously in real-world environments, it must learn a generalized obstacle avoidance strategy from sensor data. To improve the generalizability of the learned policy and diversify exploration, Gaussian noise is added to the sensor readings and to the executed actions. In addition, to simulate dynamic real-world obstacles and improve the robot’s ability to handle complex scenarios, the simulated training environment contains a moving cylindrical obstacle and four boxes that change randomly in each training episode. These elements mimic the dynamism and uncertainty of the real world, allowing the robot to learn more robust and adaptable navigation strategies. The robot model trained in simulation matches the physical robot, and the robot’s starting position and target position are randomized at the beginning of each episode. Figure 6 shows an example of the training environment in the Gazebo simulation software.

Figure 6.

Example of the training environment in the Gazebo simulation software. The blue lines are the laser beams. The cylinder is a moving obstacle that moves vertically, and the four boxes are obstacles that change randomly in each episode.
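The noise injection and per-episode obstacle randomization described above can be sketched as follows. The noise standard deviations, arena size, clearance, and function names are hypothetical, since this section does not report them; the moving cylinder would be driven separately by the simulator.

```python
import numpy as np


def noisy_scan(scan, sigma, max_range, rng):
    """Add zero-mean Gaussian noise to range readings and keep them physically valid."""
    scan = np.asarray(scan, dtype=float)
    return np.clip(scan + rng.normal(0.0, sigma, size=scan.shape), 0.0, max_range)


def noisy_action(action, sigma, rng):
    """Perturb the normalized action before execution, staying within [-1, 1]."""
    action = np.asarray(action, dtype=float)
    return np.clip(action + rng.normal(0.0, sigma, size=action.shape), -1.0, 1.0)


def random_box_positions(n_boxes, half_width, keep_clear, clearance, rng, max_tries=1000):
    """Sample box centres in a square arena, away from points such as the start and the goal."""
    boxes = []
    for _ in range(max_tries):
        if len(boxes) == n_boxes:
            break
        p = rng.uniform(-half_width, half_width, size=2)
        if all(np.hypot(*(p - np.asarray(c, dtype=float))) >= clearance for c in keep_clear):
            boxes.append(p)
    if len(boxes) < n_boxes:
        raise RuntimeError("could not place all boxes; relax the clearance")
    return np.array(boxes)


rng = np.random.default_rng(42)
boxes = random_box_positions(4, 4.5, keep_clear=[(0.0, 0.0), (3.0, 3.0)], clearance=1.0, rng=rng)
```

Clipping after adding noise keeps perturbed readings and commands inside the ranges the policy sees at deployment, so the noise diversifies training without producing impossible inputs.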

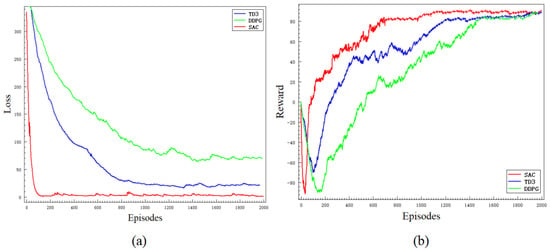

Figure 7 shows the loss and reward convergence curves of the SAC, TD3, and DDPG algorithms during training, all using the same sensor data fusion, reward function design, and training environment described above. Each algorithm was trained for approximately 2000 episodes. As Figure 7 shows, SAC converges notably faster than the other two algorithms, indicating superior learning efficiency and performance in this setting. Because the environment is dynamic, both the loss and reward curves fluctuate considerably.

Figure 7.

Training results of the deep reinforcement learning networks. The red line is the SAC algorithm, the blue line is the TD3 algorithm, and the green line is the DDPG algorithm.
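For reference, SAC’s critic target combines a clipped double-Q estimate, as in TD3, with an entropy bonus weighted by a temperature α [26]; the resulting pressure to keep exploring is one commonly cited reason for SAC’s sample efficiency, although the curves above do not isolate that factor. The sketch uses NumPy arrays in place of network outputs; γ, α, τ and the function names are illustrative, not the hyperparameters used in this work.

```python
import numpy as np


def soft_q_target(reward, done, next_q1, next_q2, next_log_prob, gamma=0.99, alpha=0.2):
    """Soft Bellman target used to train both SAC critics.

    y = r + gamma * (1 - done) * (min(Q1'(s', a'), Q2'(s', a')) - alpha * log pi(a' | s')),
    where a' is sampled from the current policy at the next state s'.
    """
    next_value = np.minimum(next_q1, next_q2) - alpha * next_log_prob
    return reward + gamma * (1.0 - done) * next_value


def polyak_update(target_params, online_params, tau=0.005):
    """Slowly track the online critic: theta_target <- (1 - tau) * theta_target + tau * theta."""
    return [(1.0 - tau) * t + tau * o for t, o in zip(target_params, online_params)]
```

Setting `alpha = 0` recovers a TD3-style clipped double-Q target (without TD3’s target-policy smoothing), which makes the entropy term the main algorithmic difference in the critic update.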

4.3. Autonomous Exploration and Navigation

To quantitatively evaluate the effectiveness and efficiency of the proposed method in autonomous navigation tasks, we compared it with several existing indoor navigation algorithms. First, the SAC network was run without a global planner; this configuration is referred to as the Local Deep Reinforcement Learning (L-DRL) method. Second, to evaluate the heuristic evaluation function, we compared a TD3 local policy combined with the CPTD framework against the global navigation method of [10], which uses the heuristic navigation algorithm of [13]; these are referred to as NTD3 and OTD3, respectively. Third, because non-learning path-planning algorithms struggle to perform autonomous exploration and navigation without prior map information, the neural network in our framework was replaced with the ROS local planner package; this is referred to as the LP method. Finally, to establish a performance benchmark, control experiments were conducted with the Dijkstra algorithm on a complete map.

Each algorithm was run for five trials in each of three different environments. To evaluate transfer from simulation to the real world, Experiment 1 was performed in the Gazebo simulation software, while Experiments 2 and 3 were conducted in real-world environments. The recorded data included the traveled distance (D, in meters), the travel time (T, in seconds), and the number of successful goal arrivals (Arrive). From these data, we calculated the average traveled distance (Av.D) and average travel time (Av.T), along with the maximum (Max.D, Max.T) and minimum (Min.D, Min.T) values of distance and time.
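The per-method statistics described above can be computed from the logged trials as in the sketch below. The record layout and function name are hypothetical, and this section does not state whether the tables average over all trials or only successful ones, so the sketch assumes all trials.

```python
from statistics import mean


def summarize_trials(trials):
    """Aggregate per-trial records into the metrics reported in the result tables.

    Each trial is a dict with 'D' (traveled distance, m), 'T' (travel time, s) and
    'arrived' (bool). Assumption: averages and extrema are taken over all trials.
    """
    distances = [t["D"] for t in trials]
    times = [t["T"] for t in trials]
    return {
        "Arrive": sum(1 for t in trials if t["arrived"]),
        "Av.D": mean(distances), "Max.D": max(distances), "Min.D": min(distances),
        "Av.T": mean(times), "Max.T": max(times), "Min.T": min(times),
    }
```

Reporting the extrema alongside the mean exposes run-to-run variability, which matters here because the learned policies and the dynamic scenes both introduce randomness.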

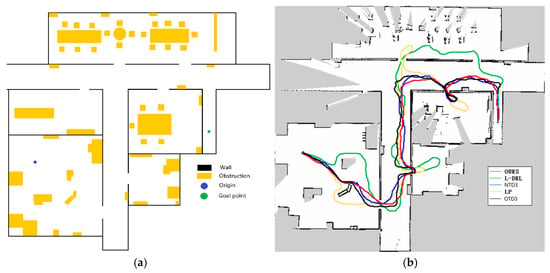

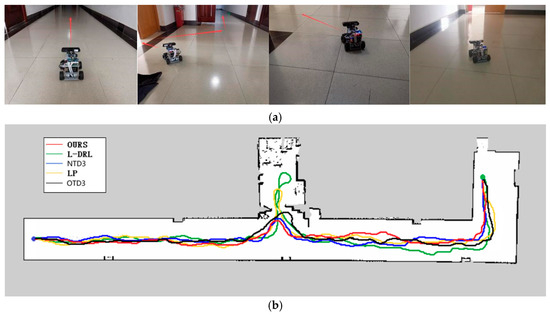

The first experimental environment, shown in Figure 8, was designed with dense obstacles and multiple local optimum regions. In this experiment, the proposed method navigated efficiently and precisely, guiding the robot to the designated global target point. The NTD3 algorithm traveled a similar path length but required more travel time than the proposed method. Because the CPTD heuristic takes the distances of candidate points into account, NTD3 traveled a shorter path than OTD3. The LP method was prone to becoming trapped in local optima and needed more time to replan, which resulted in longer travel distances. The L-DRL method looped when it reached local optimum regions, especially in narrow gaps between obstacles, and ultimately required human intervention to guide it out of these areas into open space. Detailed experimental data are provided in Table 2.

Figure 8.

Environment and autonomous navigation path for Experiment 1. (a) is the description of the experimental environment, and (b) is an example of the autonomous navigation path.

Table 2.

Detailed experimental data for Experiment 1.

The second experimental environment, shown in Figure 9, consists mainly of a narrow corridor with smooth walls and few internal obstacles. The global target point is located at (33, −5). In this environment, every method reached the global target point, but the methods differed in traveled path length and travel time. The proposed method reached the global target point quickly while keeping the travel distance to a minimum. Although NTD3 traveled a similar distance to our method, the lower learning efficiency of TD3 compared with SAC over the same training period made it slower to execute certain actions. The path traveled by OTD3 was again longer than that of NTD3. The LP method took longer because it had to wait for the next navigation point to be calculated. The L-DRL method, by contrast, was prone to falling into local optima and tended to enter and explore side paths. The detailed experimental data are given in Table 3.

Figure 9.

Environment and autonomous navigation path for Experiment 2. (a) is the description of the experimental environment, and (b) is an example of the autonomous navigation path.

Table 3.

Detailed experimental data for Experiment 2.

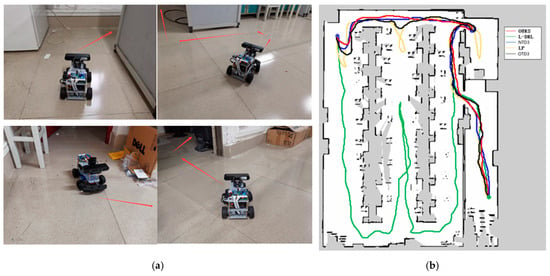

The third experiment, shown in Figure 10, was conducted in a more complex environment with numerous obstacles such as desks, chairs, cardboard boxes, and keyboards. Near the keyboards in particular, the feasible pathways are narrow, and if the robot fails to recognize a keyboard, it may collide with it and become stuck. The proposed method reached the designated global target point in the shortest time, with a comparatively short travel path. Although NTD3 traveled a similar path length, its decision-making took longer. OTD3 performed similarly to NTD3, but its path was slightly longer, so it required more time. The LP method tended to become trapped in local optima; although it eventually escaped, its overall time and travel distance were longer. Lacking global planning capability, the L-DRL method tended to loop between the aisles of desks and could not escape local optima, ultimately requiring human intervention to guide it into new areas. Detailed experimental data are provided in Table 4.

Figure 10.

Environment and autonomous navigation path for Experiment 3. (a) is the description of the experimental environment, and (b) is an example of the autonomous navigation path.

Table 4.

Detailed experimental data for Experiment 3.

Taken together, the experimental results show that the proposed method performs consistently in both simulation and real-world environments. In simple environments, as well as in complex environments with multiple local optima and numerous obstacles, it shows clear performance advantages over the TD3-based solutions and the planning-based methods. Although the TD3-based methods performed similarly to the proposed method in some respects, the proposed method converged faster and learned more efficiently within the same number of training cycles. Compared with planning-based methods, the neural network-driven policy can learn a wider range of motion patterns, enabling quicker escape from local optima. Compared with the heuristic scoring method of [13], the proposed CPTD method reaches the global target faster, yielding quicker and more efficient global navigation.

5. Conclusions

In this study, we propose an autonomous robot navigation system based on deep reinforcement learning. The system uses the SAC algorithm as a local planner and combines it with a heuristic-based global planner to improve the efficiency and accuracy of the robot’s global path planning. For obstacle detection and recognition, we present a strategy that fuses lidar and camera data, improving the robot’s accuracy in identifying obstacles in complex environments and enabling it to navigate effectively through narrow spaces. The neural network module allows the robot to perform autonomous navigation tasks without pre-planning or prior information, while the embedded global navigation strategy compensates, to some extent, for the shortcomings of neural networks in global planning. Experimental results show that the performance of the system approaches the optimal solution achievable by non-learning planning algorithms with prior map information. We hope this system will inspire further research on neural network-based autonomous navigation systems, advancing mobile robot and autonomous driving technology.

Author Contributions

Conceptualization, Y.O.; methodology, Y.O.; software, Y.O. and Y.C.; analysis, Y.O. and Y.C.; writing—original draft preparation, Y.O. and Y.C.; writing—review and editing, Y.O., Y.S. and T.Q.; supervision, T.Q.; funding acquisition, T.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Guangxi Key Research and Development Plan Project (Grant No. AB24010274 and No. AD24010061).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

No data were used for the research described in the article.

Acknowledgments

The authors would like to thank all the subjects and staff who participated in this experiment.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Singandhupe, A.; La, H.M. A review of SLAM techniques and security in autonomous driving. In Proceedings of the 2019 Third IEEE International Conference on Robotic Computing (IRC), Naples, Italy, 25–27 February 2019; pp. 602–607.

2. Aggarwal, S.; Kumar, N. Path planning techniques for unmanned aerial vehicles: A review, solutions, and challenges. Comput. Commun. 2020, 149, 270–299.

3. Zhang, Z.; Zhao, Z. A multiple mobile robots path planning algorithm based on A-star and Dijkstra algorithm. Int. J. Smart Home 2014, 8, 75–86.

4. Naderi, K.; Rajamäki, J.; Hämäläinen, P. RT-RRT*: A real-time path planning algorithm based on RRT. In Proceedings of the 8th ACM SIGGRAPH Conference on Motion in Games, Paris, France, 16–18 November 2015; pp. 113–118.

5. Patle, B.; Pandey, A.; Parhi, D.; Jagadeesh, A. A review: On path planning strategies for navigation of mobile robot. Def. Technol. 2019, 15, 582–606.

6. Shi, F.; Zhou, F.; Liu, H.; Chen, L.; Ning, H. Survey and Tutorial on Hybrid Human-Artificial Intelligence. Tsinghua Sci. Technol. 2022, 28, 486–499.

7. Hao, X.; Xu, C.; Xie, L.; Li, H. Optimizing the perceptual quality of time-domain speech enhancement with reinforcement learning. Tsinghua Sci. Technol. 2022, 27, 939–947.

8. Bouhamed, O.; Ghazzai, H.; Besbes, H.; Massoud, Y. Autonomous UAV navigation: A DDPG-based deep reinforcement learning approach. In Proceedings of the 2020 IEEE International Symposium on Circuits and Systems (ISCAS), Seville, Spain, 12–14 October 2020; pp. 1–5.

9. Gao, J.; Ye, W.; Guo, J.; Li, Z. Deep reinforcement learning for indoor mobile robot path planning. Sensors 2020, 20, 5493.

10. Cimurs, R.; Suh, I.H.; Lee, J.H. Goal-driven autonomous exploration through deep reinforcement learning. IEEE Robot. Autom. Lett. 2021, 7, 730–737.

11. Yang, L.; Bi, J.; Yuan, H. Intelligent Path Planning for Mobile Robots Based on SAC Algorithm. J. Syst. Simul. 2023, 35, 1726–1736.

12. Morales, E.F.; Murrieta-Cid, R.; Becerra, I.; Esquivel-Basaldua, M.A. A survey on deep learning and deep reinforcement learning in robotics with a tutorial on deep reinforcement learning. Intell. Serv. Robot. 2021, 14, 773–805.

13. Cimurs, R.; Suh, I.H.; Lee, J.H. Information-based heuristics for learned goal-driven exploration and mapping. In Proceedings of the 2021 18th International Conference on Ubiquitous Robots (UR), Gangneung, Republic of Korea, 12–14 July 2021; pp. 571–578.

14. Jiang, S.; Wang, S.; Yi, Z.; Zhang, M.; Lv, X. Autonomous navigation system of greenhouse mobile robot based on 3D lidar and 2D lidar SLAM. Front. Plant Sci. 2022, 13, 815218.

15. Zhao, X.; Sun, P.; Xu, Z.; Min, H.; Yu, H. Fusion of 3D LIDAR and camera data for object detection in autonomous vehicle applications. IEEE Sens. J. 2020, 20, 4901–4913.

16. Gatesichapakorn, S.; Takamatsu, J.; Ruchanurucks, M. ROS based autonomous mobile robot navigation using 2D LiDAR and RGB-D camera. In Proceedings of the 2019 First International Symposium on Instrumentation, Control, Artificial Intelligence, and Robotics (ICA-SYMP), Bangkok, Thailand, 16–18 January 2019; pp. 151–154.

17. Kontoudis, G.P.; Vamvoudakis, K.G. Kinodynamic motion planning with continuous-time Q-learning: An online, model-free, and safe navigation framework. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3803–3817.

18. Marchesini, E.; Farinelli, A. Discrete deep reinforcement learning for mapless navigation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 10688–10694.

19. Dong, Y.; Zou, X. Mobile robot path planning based on improved DDPG reinforcement learning algorithm. In Proceedings of the 2020 IEEE 11th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 16–18 October 2020; pp. 52–56.

20. Li, P.; Wang, Y.; Gao, Z. Path planning of mobile robot based on improved TD3 algorithm. In Proceedings of the 2022 IEEE International Conference on Mechatronics and Automation (ICMA), Guilin, China, 7–10 August 2022; pp. 715–720.

21. Aman, M.S.; Mahmud, M.A.; Jiang, H.; Abdelgawad, A.; Yelamarthi, K. A sensor fusion methodology for obstacle avoidance robot. In Proceedings of the 2016 IEEE International Conference on Electro Information Technology (EIT), Grand Forks, ND, USA, 19–21 May 2016; pp. 0458–0463.

22. Forouher, D.; Besselmann, M.G.; Maehle, E. Sensor fusion of depth camera and ultrasound data for obstacle detection and robot navigation. In Proceedings of the 2016 14th International Conference on Control, Automation, Robotics and Vision (ICARCV), Phuket, Thailand, 13–15 November 2016; pp. 1–6.

23. Zhang, B.; Zhang, J. Robot Mapping and Navigation System Based on Multi-sensor Fusion. In Proceedings of the 2021 4th International Conference on Artificial Intelligence and Big Data (ICAIBD), Chengdu, China, 28–31 May 2021; pp. 632–636.

24. Theodoridou, C.; Antonopoulos, D.; Kargakos, A.; Kostavelis, I.; Giakoumis, D.; Tzovaras, D. Robot Navigation in Human Populated Unknown Environments based on Visual-Laser Sensor Fusion. In Proceedings of the 15th International Conference on PErvasive Technologies Related to Assistive Environments, Corfu, Greece, 29 June–1 July 2022; pp. 336–342.

25. Surmann, H.; Jestel, C.; Marchel, R.; Musberg, F.; Elhadj, H.; Ardani, M. Deep reinforcement learning for real autonomous mobile robot navigation in indoor environments. arXiv 2020, arXiv:2005.13857.

26. Haarnoja, T.; Zhou, A.; Hartikainen, K.; Tucker, G.; Ha, S.; Tan, J.; Kumar, V.; Zhu, H.; Gupta, A.; Abbeel, P.; et al. Soft actor-critic algorithms and applications. arXiv 2018, arXiv:1812.05905.

27. Sun, H.; Zhang, W.; Yu, R.; Zhang, Y. Motion planning for mobile robots—Focusing on deep reinforcement learning: A systematic review. IEEE Access 2021, 9, 69061–69081.

28. Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 1861–1870.

29. Icarte, R.T.; Klassen, T.Q.; Valenzano, R.; McIlraith, S.A. Reward machines: Exploiting reward function structure in reinforcement learning. J. Artif. Intell. Res. 2022, 73, 173–208.

30. Durrant-Whyte, H.; Henderson, T.C. Multisensor data fusion. In Springer Handbook of Robotics; Springer: Cham, Switzerland, 2016; pp. 867–896.

31. Zhu, Z.; Zhang, Y.; Chen, H.; Dong, Y.; Zhao, S.; Ding, W.; Zhong, J.; Zheng, S. Understanding the Robustness of 3D Object Detection With Bird’s-Eye-View Representations in Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 21600–21610.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).