Abstract

The inability to see makes moving around very difficult for visually impaired persons. Because of their limited mobility, they also struggle to protect themselves from moving and stationary objects. Given the substantial rise in the population of people with vision impairments in recent years, a growing body of research has been devoted to the development of assistive technologies. This review paper highlights the state-of-the-art assistive technologies, tools, and systems for improving the daily lives of visually impaired people. Multi-modal mobility assistance solutions are also evaluated for both indoor and outdoor environments. Finally, an analysis of several approaches is provided, along with recommendations for future work.

1. Introduction

At least 2.2 billion individuals worldwide have a vision impairment, according to the World Health Organisation (WHO). Conditions that can cause vision impairment and blindness include diabetic retinopathy, cataracts, age-related macular degeneration, glaucoma, and refractive errors [1]. Visual impairment greatly affects quality of life, including a person's capacity for employment and interpersonal interaction [2]. Because the number of visually impaired people is rising rapidly, there is an urgent need for efficient assistance. Most visually impaired persons use some form of assistive technology in their everyday activities [3].

For people with low vision, a navigation system is essential, since it can help them avoid obstructions and give them precise directions to their destination. Creating navigation systems that guide visually impaired people through indoor and/or outdoor environments, so that they can move through unfamiliar surroundings, is a difficult problem. Researchers are still working to create a system that would enable visually impaired individuals to be more independent and aware of their environment [4].

Advances in information technology (IT), particularly in mobile technology, are expanding the potential of IT-based assistive solutions to enhance the quality of life of visually impaired people. Technology can increase people's ability to live independently and participate fully in society [5], and IT-based assistive technologies can offer help of wide scope and variety. The paradigm of humanistic computer interaction, in which technology blends into society, has grown in popularity [6].

Studies conducted over the past few years have produced significant advances and have refined the classification of assistive devices now in widespread use. Travel-assistance technologies are often divided into three categories. According to Elmannai and Elleithy [7], electronic travel aids (ETAs) collect and transmit environmental information to the user, electronic orientation aids (EOAs) give directions to pedestrians in unfamiliar areas, and position locator devices (PLDs) pinpoint the exact location of the holder. Tapu et al. [8] proposed a different classification of assistive technologies: perceptual tools, which replace vision with input from the other senses, and conceptual tools, which build orientation strategies to represent the environment and prepare for unforeseen circumstances during navigation.

To assist visually impaired people, assistive devices require three components. The first is the navigation module, also known as wayfinding, which Kandalan and Namuduri [9] described as the sequence of effective movements needed to reach a desired location. It starts from the user's initial location, which is continuously updated. This component should ensure safe navigation and arrival within a reasonable time frame. It should operate both indoors and outdoors, in different lighting and weather conditions (day/night, rain/sun, etc.), regardless of whether the place has been visited before, and it should perform real-time analysis without compromising robustness or accuracy.
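The wayfinding definition above, a sequence of movements computed from a continuously updated start location, maps naturally onto shortest-path search over a map graph. The following is a minimal Python sketch that assumes the indoor map is a hypothetical graph of named waypoints with walking distances in metres; Dijkstra's algorithm is used here for illustration and is not claimed to be the method of [9]. Re-planning as the user moves amounts to calling the function again from the newly observed location.

```python
import heapq

def shortest_route(graph, start, goal):
    """Dijkstra's algorithm over a waypoint graph.

    graph: {node: {neighbour: walking_cost_m, ...}, ...}
    Returns (total_cost, [start, ..., goal]), or (inf, []) if unreachable.
    """
    queue = [(0.0, start, [start])]   # (cost so far, node, path to node)
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, step in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + step, neighbour, path + [neighbour]))
    return float("inf"), []

# Hypothetical floor map: waypoint names and distances are illustrative only.
floor_map = {
    "entrance": {"lobby": 5.0},
    "lobby": {"entrance": 5.0, "corridor_a": 8.0, "stairs": 12.0},
    "corridor_a": {"lobby": 8.0, "room_101": 4.0},
    "stairs": {"lobby": 12.0, "room_101": 6.0},
    "room_101": {"corridor_a": 4.0, "stairs": 6.0},
}

print(shortest_route(floor_map, "entrance", "room_101"))
# (17.0, ['entrance', 'lobby', 'corridor_a', 'room_101'])

# When localization reports a new position, simply re-plan from there.
print(shortest_route(floor_map, "stairs", "room_101"))
# (6.0, ['stairs', 'room_101'])
```

A graph of waypoints keeps the route as a list of discrete steps, which is convenient for turning each edge into one spoken or tactile instruction.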

The second component is object detection. Its primary purpose is to let the user avoid hazards by issuing helpful warnings. It has to identify both stationary and moving obstacles, together with their positions (ideally well ahead of the user), characteristics, and distances, so that it can give prompt feedback. To help visually impaired people develop cognitive maps of a location and gain a thorough grasp of their surroundings, object detection should also offer scene descriptions on request.
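To make the ordering of warnings concrete, here is a small Python sketch of one possible post-processing step: it keeps only obstacles inside the user's walking corridor and within range, then announces the nearest first. The `Detection` fields, the 5 m range, the 30° corridor, and the message wording are all hypothetical; a real system would obtain labels and distances from its own detector and depth source.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str          # object class, as reported by some detector (assumed)
    bearing_deg: float  # angle from the walking direction; negative = left
    distance_m: float   # estimated distance to the object
    moving: bool        # True if the object was tracked as moving

def prioritise(detections, max_range_m=5.0, corridor_deg=30.0):
    """Keep obstacles inside the walking corridor and range, nearest first."""
    ahead = [d for d in detections
             if abs(d.bearing_deg) <= corridor_deg and d.distance_m <= max_range_m]
    # At equal distance, moving objects come first (a hypothetical rule).
    return sorted(ahead, key=lambda d: (d.distance_m, not d.moving))

def warning(d):
    """Short spoken-style warning for one detection."""
    if abs(d.bearing_deg) < 10:
        side = "ahead"
    else:
        side = "left" if d.bearing_deg < 0 else "right"
    kind = "moving " if d.moving else ""
    return f"{kind}{d.label} {side}, {d.distance_m:.1f} metres"

scene = [
    Detection("chair", 25.0, 3.2, False),
    Detection("bicycle", -5.0, 2.0, True),
    Detection("car", 70.0, 4.0, True),    # outside the walking corridor
    Detection("door", 0.0, 8.0, False),   # beyond the warning range
]
for d in prioritise(scene):
    print(warning(d))
# moving bicycle ahead, 2.0 metres
# chair right, 3.2 metres
```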

The final components are the user-controlled tools and the human–machine interface. These consist of devices that gather data, process it, and return the results to the user. Various combinations are possible, and they must be selected based on several factors, including the wearability of the devices, user preference, and the computational approach.

Assistive technology exists to support people with impairments. Mobile assistive systems let visually impaired people benefit from discreet, lightweight, and portable assistance delivered through widely used devices.

Khan et al. [10] carried out a comprehensive assessment of recent developments in indoor navigation, covering state-of-the-art techniques, difficulties, and potential directions. They classified existing research, evaluated article quality, and identified key issues and solutions. Messaoudi et al. [11] reviewed technologies and tools for the visually impaired, focusing on advancements in mobility aids. They explored localization methods, obstacle recognition, and feedback mechanisms, and discussed specific tools such as smart canes and voice-operated devices. Isazade [12] provided an extensive review of current navigation technologies designed to assist visually impaired individuals, covering mobile applications, web services, and various assistive devices, and highlighted the importance of these technologies in enhancing accessibility. Tripathi et al. [13] carried out a systematic review of technologies that help the visually impaired navigate around obstacles. They categorized the technologies into vision-based, non-vision-based, and hybrid systems, identified gaps in current research, and suggested improvements for future navigation aids, emphasizing the integration of advanced technologies to give visually impaired people safer and more effective navigation options. Soliman et al. [14] surveyed recent advancements in assistive technology for the visually impaired. They reviewed systems in terms of their purpose, hardware components, and algorithms, and categorized them into environment identification and navigation assistance systems. Zahabi et al. [15] carried out a thorough review to define criteria for designing navigation applications suited to people with disabilities, systematically examining both research projects and commercially available applications.

The reviews highlighted above have several shortcomings. Some lack a comparative analysis of user experience, long-term effectiveness, efficiency, scalability, reliability, cost-effectiveness, accessibility, and the integration of these technologies into daily life. We also observed that the limitations and drawbacks of the systems were not always examined. Finally, some reviews omit a comparative analysis with existing systems and therefore do not identify potential areas for improvement.

Given the rapid advancement of technology and changing societal needs, we considered another review necessary: it can address gaps in the current literature by incorporating the latest developments in assistive technologies, their integration, and user-centric design approaches. We examined how well these technologies work and how accessible they are in real situations, taking into account the varied needs of people with disabilities. Such a review should benefit researchers and developers who aim to improve navigation aids and raise the standard of living of people with impairments.

This research was motivated by the desire to enhance the mobility and independence of visually impaired individuals in various settings. As technology advances, new solutions with potential advantages as electronic travel aids and assistive gadgets continue to emerge. We looked for weaknesses in existing systems and for difficulties in navigation and in integrating these tools and technologies. By assessing current technologies and user experiences, this paper can guide future developments, helping ensure that new systems not only use state-of-the-art technology but are also tailored to the practical needs of visually impaired people. To this end, we provide a comprehensive overview of the most recent developments and research in mobile technology, Internet of Things (IoT) devices, and artificial intelligence (computer vision) for the visually impaired.

In this paper, we review the most recent articles on navigation systems for visually impaired people. To do this, we examined recently developed systems built with a range of tools and technologies, and we highlight their limitations and efficiency. The contributions of this work are summarized as follows: (i) a comparison of different technologies and assistive tools for visually impaired people; (ii) an analysis of artificial-intelligence-based object detection systems for the visually impaired; and (iii) an analysis of Internet of Things (IoT)-based navigation systems for visually impaired persons.

The remainder of this paper is organized as follows: Section 2 discusses assistive tools, technologies, and systems for the visually impaired and classifies assistive systems based on their technologies. Section 5 presents discussions and recommendations, and Section 6 gives the conclusions.

2. Assistive Technologies for Visually Impaired Persons

Navigation technologies for the visually impaired can improve their users' mobility and spatial awareness, provide real-time information about their surroundings, identify obstacles, and recommend the best routes. These systems incorporate several components, including sensors, algorithms, and feedback mechanisms. To compensate for the absence of visual cues, this comprehensive approach combines hardware and software solutions.

2.1. Sensing Inputs

Assistive tools for visually impaired people frequently use depth cameras, Bluetooth beacons, radio frequency identification (RFID), ultrasonic sensors, infrared sensors, general-purpose cameras (including mobile phone cameras), and other sensors.

2.1.1. Smartphones

Smartphones can capture compact, high-resolution images. However, a standard smartphone camera does not provide depth information, which prevents image-based algorithms from determining an object's distance from the user. Consequently, during navigation, general camera views are typically processed only to identify obstructions in front of the user.

2.1.2. Radio Frequency Identification (RFID)

RFID is a technique in which a reader uses radio waves to detect smart labels or tags with embedded digital data. The technology suffers from slow read rates, tag collisions, variable signal accuracy, communication interruptions, and similar issues. Furthermore, in the context of navigation, the user must be informed of the position of the RFID reader [16].

2.1.3. Depth Camera

A depth camera provides distance measurements in addition to image data. Among depth-camera recognition systems, the Microsoft Kinect sensor [17] is frequently used as the main recognition hardware in depth-based vision analysis. For obstacle detection, depth images provide more detail than two-dimensional photographs alone. One of the primary drawbacks of Kinect cameras is that they cannot function reliably in brightly lit areas. Besides Kinect, light detection and ranging (LiDAR) cameras are another type of depth camera used for depth analysis. Both Kinect and LiDAR-based devices have the drawback of being very large, which makes them difficult to carry and operate while navigating.
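As a minimal sketch of how a depth image yields the obstacle distance that a plain camera cannot, the function below returns the nearest valid reading in the central part of the frame. It assumes depth is given in metres as a plain 2-D list and that missing readings are stored as 0 (a common convention, but an assumption here); the region size and the 0.3 m validity floor are hypothetical parameters.

```python
def nearest_obstacle(depth_m, roi_fraction=0.5, min_valid=0.3):
    """Smallest valid depth (metres) in the centred region of a depth image.

    depth_m: 2-D list of floats; values below min_valid (including 0 for
    "no reading") are ignored. roi_fraction sets the width and height of
    the centred region examined. Returns None if no valid pixel is found.
    """
    rows, cols = len(depth_m), len(depth_m[0])
    r0 = int(rows * (1 - roi_fraction) / 2)   # top edge of the region
    c0 = int(cols * (1 - roi_fraction) / 2)   # left edge of the region
    r1, c1 = rows - r0, cols - c0             # symmetric bottom/right edges
    valid = [v for row in depth_m[r0:r1] for v in row[c0:c1] if v >= min_valid]
    return min(valid) if valid else None

frame = [
    [4.0, 4.1, 4.2, 0.9],
    [4.0, 2.5, 0.0, 4.3],   # 0.0 = no reading
    [3.9, 1.8, 3.0, 4.4],
    [0.5, 3.8, 3.7, 4.5],
]
print(nearest_obstacle(frame))                    # 1.8 (centre 2x2 only)
print(nearest_obstacle(frame, roi_fraction=1.0))  # 0.5 (whole frame)
```

Restricting the search to the centre of the frame is a simple stand-in for "the path ahead"; the corner readings of 0.9 m and 0.5 m are ignored by default.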

2.1.4. Infrared (IR) Sensor

An IR sensor can either detect or emit an IR signal to sense certain properties of its environment. In addition to detecting motion, infrared sensors can measure the amount of heat an object emits. A disadvantage of IR-based gadgets is their susceptibility to interference from both artificial and natural light. Because many tags need to be installed and maintained, IR-based systems are also expensive to install [18].

2.1.5. Ultrasonic Sensor

Ultrasonic sensors measure the distance to obstacles, and the systems built on them give the user an audible or vibrational signal indicating that an object is ahead. The sensors emit high-frequency sound and detect obstacles from its reflection. To make navigation easier, some of these systems deliver suggestions or instructions in vibrotactile form. One drawback is that, because of the ultrasonic beam's broad angle, these systems cannot locate obstructions precisely. In addition, they cannot distinguish between different kinds of obstacles, such as a bicycle and a car [19].
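Ultrasonic ranging relies on time of flight: the pulse travels to the obstacle and back, so the distance is half the round trip, d = v·t/2, where v is the speed of sound (about 343 m/s in air at 20 °C, commonly approximated as 331.3 + 0.606·T m/s). The sketch below also estimates how wide an idealized conical beam is at a given range, which illustrates the precision problem mentioned above; the 30° beam angle in the example is a hypothetical value, not the specification of any particular sensor.

```python
import math

def speed_of_sound(temp_c=20.0):
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c

def echo_distance_m(echo_time_s, temp_c=20.0):
    """Distance to the reflecting obstacle; the echo covers the path twice."""
    return speed_of_sound(temp_c) * echo_time_s / 2.0

def beam_width_m(distance_m, beam_angle_deg):
    """Width of an idealised conical beam at the given distance."""
    return 2.0 * distance_m * math.tan(math.radians(beam_angle_deg / 2.0))

print(round(echo_distance_m(0.0058), 2))   # 1.0  (a 5.8 ms echo is about 1 m away)
print(round(beam_width_m(2.0, 30.0), 2))   # 1.07 (anything in a ~1 m wide patch triggers it)
```

The second result shows why a single ultrasonic reading says how far the nearest reflector is, but not exactly where it lies across the beam.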

2.1.6. RGB-D Sensor

RGB-D-based navigation systems are built on a range-extending RGB-D sensor. Consumer RGB-D cameras gather range data that supports range-based floor segmentation, while the RGB sensor identifies colors and objects. The user interface relies on sound-map data and audio directions or instructions [20].

2.2. Feedback

During navigation, obstacles can be identified through visual or non-visual sensing inputs. Users then need to receive notifications along with directional signals. Three common forms of feedback are vibration, sound, and touch. Some systems combine these, giving the user a multi-modal choice of how to receive navigation signals.

2.2.1. Audio Feedback

Navigation systems typically provide audio feedback through speakers or earbuds. Audio feedback can distract users if they are overloaded with information, and it can also be problematic if the audio cues make them oblivious to background noise [21]. Several navigation systems partly mitigate this issue with noise-reduction headphones, so that the user can hear the audio stream without interference [22].

2.2.2. Tactile Feedback

Some navigation systems employ tactile feedback, which is delivered to the user's fingers, hand, arm, foot, or any other part of the body where pressure can be felt. Feeling pressure at different locations on the body lets the user avoid obstacles and receive navigational cues. Unlike audio feedback, tactile feedback keeps the user's attention from being distracted by ambient noise [23].

2.2.3. Vibration Feedback

Given the widespread use of smartphones in navigation system design, various systems have provided navigation feedback through the phone's vibration function [24]. Different vibration patterns can convey directional cues.
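One way to encode directions in vibration is a small vocabulary of pulse patterns selected by the angle between the user's heading and the planned route. The sketch below is hypothetical throughout: the pattern timings, the 15° tolerance, the 150° turn-around threshold, and the sign convention (positive means the route lies to the right) are illustrative choices. Each pattern is a list of alternating pause and vibrate durations in milliseconds, a form that can be mapped onto a phone's vibration function.

```python
# Hypothetical pattern vocabulary: alternating pause/vibrate durations in ms.
PATTERNS = {
    "straight": [0, 150],                          # one short pulse
    "left": [0, 150, 100, 150],                    # two short pulses
    "right": [0, 500],                             # one long pulse
    "turn_around": [0, 500, 150, 500, 150, 500],   # three long pulses
}

def direction_cue(heading_error_deg, tolerance_deg=15.0):
    """Name the cue for the angle between the user's heading and the route.

    Positive angles mean the route lies to the user's right (assumed convention).
    """
    err = (heading_error_deg + 180.0) % 360.0 - 180.0   # wrap into [-180, 180)
    if abs(err) <= tolerance_deg:
        return "straight"
    if abs(err) >= 150.0:
        return "turn_around"
    return "right" if err > 0 else "left"

def vibration_pattern(heading_error_deg):
    """Timing list to hand to the device's vibration function."""
    return PATTERNS[direction_cue(heading_error_deg)]

print(direction_cue(350.0), vibration_pattern(350.0))   # straight [0, 150]
print(direction_cue(-60.0), vibration_pattern(-60.0))   # left [0, 150, 100, 150]
```

Keeping the vocabulary small and the patterns clearly distinct (count of pulses, short versus long) is what makes them learnable without looking at the device.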

2.2.4. Haptic Feedback

WeWalk, a commercial device, uses an ultrasonic sensor to help visually impaired people detect obstacles above chest level [25]. It can also be paired via Bluetooth with a mobile app. The device communicates with the user through haptic feedback in the form of vibration, making them aware of their surroundings. SmartCane [26], another commercially available device, uses haptic feedback and vibration, together with light and ultrasonic sensors, to alert visually impaired users to their surroundings. The authors of [27] described a technique that helps visually impaired people use haptic inputs.

2.3. RFID-Based Map-Reading

RFID helps people with vision impairments participate in both indoor and outdoor activities. RFID-based systems use inexpensive, energy-efficient sensors to help visually impaired people navigate their environment. However, because of its short communication range, RFID cannot be used across large open outdoor areas. RFID technology [28] supports self-navigation for impaired individuals in indoor environments; this method was developed to handle indoor navigation issues while accounting for the dynamics and accuracy requirements of different settings.
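In RFID-based indoor navigation, each tag read typically becomes a lookup into a table that links tag IDs to locations and guidance. The Python sketch below assumes a hypothetical in-memory table (the tag IDs, place names, and messages are invented for illustration) and stays silent when the reader keeps detecting the same tag, so the user is not told the same thing repeatedly.

```python
# Hypothetical tag database: tag ID -> (location name, spoken guidance).
TAG_MAP = {
    "E200-0001": ("Main entrance", "Lobby is 5 metres straight ahead."),
    "E200-0002": ("Lobby", "Lift on your left, stairs on your right."),
    "E200-0003": ("Lift, floor 1", "Room 101 is the second door on the right."),
}

def on_tag_read(tag_id, last_location=None):
    """Turn one RFID read into (current_location, message_or_None).

    Unknown tags leave the location unchanged; re-reading the tag of the
    current location produces no new message.
    """
    entry = TAG_MAP.get(tag_id)
    if entry is None:
        return last_location, None
    location, guidance = entry
    if location == last_location:
        return location, None
    return location, f"{location}. {guidance}"

location, message = on_tag_read("E200-0001")
print(message)                            # Main entrance. Lobby is 5 metres straight ahead.
print(on_tag_read("E200-0001", location)) # ('Main entrance', None)
```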

2.4. Communication Networks

Ultra-wideband (UWB) sensors, Bluetooth, cellular communication networks, and Wi-Fi networks are among the wireless-network-based methods used for indoor localization and navigation [29]. Wireless-network-based indoor localization is quite user-friendly for visually impaired individuals. Mobile phones can connect with one another through a cellular network system [30]. One study [31] reported that Cell-ID, which works in most cellular networks, is an easy technique for localizing cellular devices. Another study [32] proposed a hybrid strategy that combines Bluetooth, wireless local area networks, and cellular communication networks to enhance indoor navigation and positioning performance. However, because of the limited range of radio frequency signals and the dependence on cellular tower placement, such positioning is unreliable and has a large navigational error.
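Cell-ID localization essentially reports the known position of the serving cell, so its error is on the order of the cell size, whereas short-range sources such as Bluetooth beacons are far tighter. A hybrid scheme can weight each source by its uncertainty. The sketch below uses inverse-variance weighting, a generic fusion technique chosen here for illustration; it is not claimed to be the method of [32], and the example positions and error figures are hypothetical. It assumes independent estimates expressed in one local coordinate frame in metres.

```python
def fuse_positions(estimates):
    """Inverse-variance weighted average of independent 2-D position estimates.

    estimates: list of (x_m, y_m, sigma_m), where sigma_m is the standard
    error of that source. Returns (x, y, sigma) of the fused estimate.
    """
    weights = [1.0 / (s * s) for _, _, s in estimates]
    total = sum(weights)
    x = sum(w * e[0] for w, e in zip(weights, estimates)) / total
    y = sum(w * e[1] for w, e in zip(weights, estimates)) / total
    return x, y, (1.0 / total) ** 0.5   # fused error is below every input error

# Hypothetical readings: Cell-ID (tower position, ~200 m error),
# Wi-Fi (~5 m error), and a Bluetooth beacon (~2 m error).
fused = fuse_positions([
    (100.0, 40.0, 200.0),
    (12.0, 8.0, 5.0),
    (10.0, 6.0, 2.0),
])
print(tuple(round(v, 2) for v in fused))   # (10.28, 6.28, 1.86)
```

The coarse Cell-ID estimate barely moves the result, which matches the observation above that cellular positioning alone carries a large navigational error.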

3. Assistive Tools for the Visually Impaired

According to [33], assistive tools have several drawbacks related to user participation and program adaptation, concerning mobility, comfort, adaptability, and the availability of multiple feedback options. As a result, visually impaired people may not have accepted the technology, and its intended users may not have adopted it for various reasons.

3.1. Braille Signs

Following directions is difficult for people with visual impairments, who must often memorize routes, so navigation assistance is the primary remedy. Braille signage is now installed in many public areas, including emergency rooms, train stations, educational facilities, doors, elevators, and other parts of the infrastructure, to make navigation easier for people with visual impairments.

3.2. Smart Cane

Smart canes help visually impaired people navigate their environment and recognize objects in front of them regardless of size, which is difficult with traditional walking sticks. When an intelligent guiding cane detects an obstacle, its built-in sound module emits an alert. The cane can also help determine whether an area is bright or dark [34]. An innovative indoor cane navigation system based on cloud networks and IoT was presented in [35]. The cloud network is connected to an IoT scanner, and the intelligent cane navigation system gathers data and transfers it to the cloud.
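The smart-cane behaviour described above (an audible alert when an obstacle is near, a bright/dark indication, and readings sent to a cloud service) can be sketched as a single loop step plus a telemetry payload. The thresholds, field names, and JSON layout below are hypothetical; real canes define their own sensors, protocols, and cloud interfaces.

```python
import json
import time

OBSTACLE_ALERT_M = 1.0   # hypothetical distance at which the cane beeps
DARK_LUX = 10.0          # hypothetical light level below which an area counts as dark

def cane_step(distance_m, light_lux):
    """One pass of a hypothetical smart-cane loop; returns the alerts to emit.

    distance_m is None when the range sensor received no echo.
    """
    alerts = []
    if distance_m is not None and distance_m <= OBSTACLE_ALERT_M:
        alerts.append("beep")
    alerts.append("dark" if light_lux < DARK_LUX else "bright")
    return alerts

def telemetry(cane_id, distance_m, light_lux, timestamp=None):
    """Serialise one reading for upload; the field names are illustrative only."""
    return json.dumps({
        "cane_id": cane_id,
        "distance_m": distance_m,
        "light_lux": light_lux,
        "timestamp": time.time() if timestamp is None else timestamp,
    })

print(cane_step(0.6, 300.0))   # ['beep', 'bright']
print(cane_step(None, 3.0))    # ['dark']
print(telemetry("cane-7", 0.6, 300.0, timestamp=0))
```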

3.3. Images into Sound

Depth sensors produce images, which sighted people would normally interpret visually. Because sound can direct people accurately, several designs translate this spatial data into sound. Rehrl et al. [36] presented a method to enhance navigation in the absence of vision. Nair et al. [37] described a straightforward yet effective technique for sound-based image identification that lets visually impaired people see with their ears.
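Column-scanning sonification, used for example by The vOICe system, is one widely known way to "see with the ears": the image is swept left to right, vertical position becomes pitch, and brightness becomes loudness. The sketch below implements that general scheme in plain Python; it is not claimed to be the technique of [36] or [37], and the sample rate, sweep speed, and frequency range are hypothetical values. Writing the samples to a WAV file or audio device is omitted to keep it self-contained.

```python
import math

def sonify(image, sample_rate=8000, column_s=0.05, f_low=500.0, f_high=5000.0):
    """Turn a grayscale image (rows of brightness values in 0..1) into audio samples.

    Columns are played left to right, each for column_s seconds. Every row
    drives one sine tone: the top row is highest in pitch, the bottom row
    lowest, and brighter pixels make their tone louder.
    """
    rows = len(image)
    # Exponential spacing so that equal row steps sound like equal pitch steps.
    freqs = [f_high * (f_low / f_high) ** (r / max(rows - 1, 1)) for r in range(rows)]
    n = int(sample_rate * column_s)           # samples per column
    samples = []
    for c in range(len(image[0])):
        for i in range(n):
            t = (c * n + i) / sample_rate
            mix = sum(image[r][c] * math.sin(2 * math.pi * freqs[r] * t) for r in range(rows))
            samples.append(mix / rows)        # normalise so |sample| <= 1
    return samples

# A bright diagonal from top-left to bottom-right: the tone falls in pitch
# as the sweep moves to the right.
diagonal = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
]
audio = sonify(diagonal)
print(len(audio))   # 1600 (4 columns x 400 samples)
```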

3.4. Blind Audio Guidance

A blind audio guidance system is an embedded system that uses an ultrasonic sensor to measure distance, an infrared receiver sensor to detect objects, and an automatic voice recognition sound system to provide aural instructions. The ultrasonic sensors first detect the reflected signals, and the resulting measurements are then translated into audio output.

3.5. Voice and Vibration

Because visually impaired people have a heightened sensitivity to sound, this type of navigation system alerts them through voice and vibration, both indoors and outdoors. Users can stay alert while moving about and can choose among several intensity levels [38].
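A combined voice-and-vibration alert with user-selectable intensity can be sketched as follows. Vibration strength grows as the obstacle gets closer and is scaled by the level the user has chosen, while a short spoken message gives the distance. The level names, their scaling factors, the 4 m range, and the message wording are hypothetical, not taken from [38].

```python
# Hypothetical user-selectable intensity levels (fraction of full output).
INTENSITY_LEVELS = {"low": 0.4, "medium": 0.7, "high": 1.0}

def dual_alert(distance_m, level="medium", max_range_m=4.0):
    """Return (spoken_text, vibration_strength) for an obstacle at distance_m.

    Strength rises linearly from 0 at max_range_m to the chosen level's
    maximum at contact. Beyond max_range_m there is no alert: (None, 0.0).
    """
    if distance_m > max_range_m:
        return None, 0.0
    closeness = 1.0 - distance_m / max_range_m   # 0 at max range, 1 at contact
    strength = round(INTENSITY_LEVELS[level] * closeness, 2)
    return f"Obstacle in {distance_m:.1f} metres", strength

print(dual_alert(5.0))           # (None, 0.0)
print(dual_alert(2.0, "high"))   # ('Obstacle in 2.0 metres', 0.5)
print(dual_alert(1.0, "low"))    # ('Obstacle in 1.0 metres', 0.3)
```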

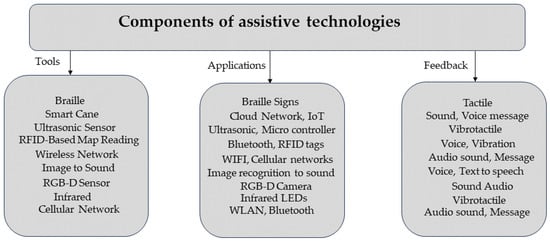

Figure 1. Tools and technologies for visually impaired persons, highlighting assistive navigational components.

5. Discussions and Recommendations

5.1. Discussions

Although a great deal of research has been performed on navigation systems to help visually impaired people, not all of these systems are in use. Most of the strategies make sense in principle, but in practice they may be too difficult or time-consuming for users. This assessment covered sensing techniques, object recognition, physical hardware, and cost-effectiveness.

We observed that many systems opted for audio feedback, while some made use of multi- or dual-mode feedback. Selecting the right feedback mechanism is, among other things, what distinguishes a superior navigation system. However, users should not be limited to a single feedback mode, because in certain circumstances one technique may be more effective than another. For instance, auditory feedback is not a good fit in a loud metropolitan setting.

This study highlights the assistive technologies available to the visually impaired, along with their capabilities and safety. Assistive devices such as smart canes can help visually impaired people become more independent. Deep learning methods such as YOLO, SSD, and Faster R-CNN have enabled real-time object detection and recognition, and location determination can be integrated with built-in GPS, increasing the efficiency and potential of these technologies.

5.2. Recommendations

The primary goal of navigation is to arrive at a destination safely. During navigation, visually impaired persons may want to be notified of changes in their surroundings, such as traffic jams, barriers, or warning situations. Users should be given the appropriate amount of information about their surroundings at the appropriate time [69].
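Delivering the appropriate amount of information at the appropriate time can be approximated by rate-limiting repeated messages while letting urgent warnings through immediately. This is a minimal sketch of one such policy; the 5 s cooldown and the `urgent` flag are assumptions, not recommendations taken from [69].

```python
class NotificationThrottle:
    """Suppress repeats of the same message within a cooldown window.

    Urgent messages always pass, so safety warnings are never delayed.
    """

    def __init__(self, cooldown_s=5.0):
        self.cooldown_s = cooldown_s
        self._last_sent = {}   # message -> time it was last announced

    def should_announce(self, message, now_s, urgent=False):
        last = self._last_sent.get(message)
        if urgent or last is None or now_s - last >= self.cooldown_s:
            self._last_sent[message] = now_s
            return True
        return False

throttle = NotificationThrottle(cooldown_s=5.0)
print(throttle.should_announce("Crossing ahead", 0.0))   # True
print(throttle.should_announce("Crossing ahead", 2.0))   # False (repeated too soon)
print(throttle.should_announce("Crossing ahead", 5.0))   # True
```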

A system that offers only one type of feedback may not be helpful in many situations. Some people rely on tactile or vibratory modes, while others rely on auditory modes, and the most suitable mode may also change with the circumstances. If multiple feedback modalities are available, users are free to select one that suits their circumstances or surroundings, and the system will function better across a variety of settings. Users would therefore benefit from a multi-modal feedback system [70].
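A context-dependent choice among feedback modes can be as simple as filtering the user's own preference list against the current conditions. The sketch below drops audio when ambient noise is high, echoing the loud-street example in the discussion, and falls back to vibration so that some channel always remains. The 70 dB threshold and the mode names are hypothetical.

```python
def choose_modalities(ambient_db, user_prefs, loud_threshold_db=70.0):
    """Return the feedback channels to use in the current context.

    user_prefs: the user's preferred channels in priority order,
                e.g. ["audio", "vibration"].
    Audio is skipped when ambient noise is at or above loud_threshold_db,
    since it is likely to be masked. If nothing is left, fall back to
    vibration so the user is never without feedback.
    """
    chosen = [mode for mode in user_prefs
              if not (mode == "audio" and ambient_db >= loud_threshold_db)]
    return chosen or ["vibration"]

print(choose_modalities(45.0, ["audio", "vibration"]))  # ['audio', 'vibration']
print(choose_modalities(85.0, ["audio", "vibration"]))  # ['vibration']
print(choose_modalities(85.0, ["audio"]))               # ['vibration']
```

Respecting the user's ordering first and only then applying context rules keeps the user in control, which is the point of offering several modalities.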

When designing a navigation system for the visually impaired, one of the most crucial user-centric considerations is the time required to become familiar with the system. Most navigation-assistive technologies on the market today require visually impaired users to master their use. A new system should therefore be easy to learn and should not be overly complicated.

Many current systems are bulky or cumbersome, and portability is another key factor influencing the usefulness and user adoption of these devices. Even though many systems are linked to mobile phones, using them may not be as simple as one might think. A system should be affordable and portable enough for easy use [71]. It should not feel like a heavy burden to the user, since this might cause great discomfort.

All things considered, the development of assistive devices for the visually impaired is an important field of study, and with ongoing technological progress, millions of individuals might see an improvement in their standard of living.

6. Conclusions

This paper presents a number of the most recent assistive technologies for the visually impaired in the areas of artificial intelligence (computer vision), IoT devices, and mobile devices. The most recent developments in navigation systems, tools, and technologies have also been discussed here.

Our review examined the indoor and outdoor assistive navigation techniques used by visually impaired people and analyzed numerous tools and technologies that can help them. We also reviewed other studies published on the same subject, examining their limitations and drawbacks, datasets, and methodologies. This review indicates that this field of study is having a significant influence on both the research community and society at large.

Author Contributions

Conceptualisation, G.I.O., T.A. and N.R.; methodology, G.I.O., T.A. and N.R.; formal analysis, G.I.O., T.A. and N.R.; investigation, G.I.O., T.A. and N.R.; writing—original draft preparation, G.I.O., T.A. and N.R.; writing—review and editing, G.I.O., T.A. and N.R.; supervision, N.R.; project administration, N.R. All authors have read and agreed to the published version of the manuscript.

Funding

The authors extend their appreciation to the King Salman Center for Disability Research for funding this work through Research Group No. KSRG-2023-374.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- WHO. Blindness and Vision Impairment. 2023. Available online: https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment (accessed on 9 January 2024).

- Fernandes, H.; Costa, P.; Filipe, V.; Paredes, H.; Barroso, J. A review of assistive spatial orientation and navigation technologies for the visually impaired. Univers. Access Inf. Soc. 2019, 18, 155–168. [Google Scholar] [CrossRef]

- ATIA. What Is AT?-Assistive Technology Industry Association. 2023. Available online: https://www.atia.org/home/at-resources/what-is-at/ (accessed on 9 January 2024).

- Khan, S.; Nazir, S.; Khan, H.U. Analysis of navigation assistants for blind and visually impaired people: A systematic review. IEEE Access 2021, 9, 26712–26734. [Google Scholar] [CrossRef]

- Steel, E.J.; de Witte, L.P. Advances in European assistive technology service delivery and recommendations for further improvement. Technol. Disabil. 2011, 23, 131–138. [Google Scholar] [CrossRef]

- Zdravkova, K.; Krasniqi, V.; Dalipi, F.; Ferati, M. Cutting-edge communication and learning assistive technologies for disabled children: An artificial intelligence perspective. Front. Artif. Intell. 2022, 5, 970430. [Google Scholar] [CrossRef] [PubMed]

- Elmannai, W.; Elleithy, K. Sensor-based assistive devices for visually-impaired people: Current status, challenges, and future directions. Sensors 2017, 17, 565. [Google Scholar] [CrossRef] [PubMed]

- Tapu, R.; Mocanu, B.; Zaharia, T. Wearable assistive devices for visually impaired: A state of the art survey. Pattern Recognit. Lett. 2020, 137, 37–52. [Google Scholar] [CrossRef]

- Kandalan, R.N.; Namuduri, K. Techniques for constructing indoor navigation systems for the visually impaired: A review. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 492–506. [Google Scholar] [CrossRef]

- Khan, D.; Cheng, Z.; Uchiyama, H.; Ali, S.; Asshad, M.; Kiyokawa, K. Recent advances in vision-based indoor navigation: A systematic literature review. Comput. Graph. 2022, 104, 24–45. [Google Scholar] [CrossRef]

- Messaoudi, M.D.; Menelas, B.A.J.; Mcheick, H. Review of Navigation Assistive Tools and Technologies for the Visually Impaired. Sensors 2022, 22, 7888. [Google Scholar] [CrossRef]

- Isazade, V. Advancement in navigation technologies and their potential for the visually impaired: A comprehensive review. Spat. Inf. Res. 2023, 31, 547–558. [Google Scholar] [CrossRef]

- Tripathi, S.; Singh, S.; Tanya, T.; Kapoor, S.; Saini, A.S. Analysis of Obstruction Avoidance Assistants to Enhance the Mobility of Visually Impaired Person: A Systematic Review. In Proceedings of the 2023 International Conference on Artificial Intelligence and Smart Communication (AISC), Greater Noida, India, 27–29 January 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 134–142. [Google Scholar]

- Soliman, I.; Ahmed, A.H.; Nassr, A.; AbdelRaheem, M. Recent Advances in Assistive Systems for Blind and Visually Impaired Persons: A Survey. In Proceedings of the 2023 2nd International Conference on Mechatronics and Electrical Engineering (MEEE), Abu Dhabi, United Arab Emirates, 10–12 February 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 69–73. [Google Scholar]

- Zahabi, M.; Zheng, X.; Maredia, A.; Shahini, F. Design of navigation applications for people with disabilities: A review of literature and guideline formulation. Int. J. Hum.-Interact. 2023, 39, 2942–2964. [Google Scholar] [CrossRef]

- Hunt, V.D.; Puglia, A.; Puglia, M. RFID: A Guide to Radio Frequency Identification; John Wiley & Sons: Hoboken, NJ, USA, 2007. [Google Scholar]

- Filipe, V.; Fernandes, F.; Fernandes, H.; Sousa, A.; Paredes, H.; Barroso, J. Blind navigation support system based on Microsoft Kinect. Procedia Comput. Sci. 2012, 14, 94–101. [Google Scholar] [CrossRef]

- Moreira, A.J.; Valadas, R.T.; de Oliveira Duarte, A. Reducing the effects of artificial light interference in wireless infrared transmission systems. In Proceedings of the IEE Colloquium on Optical Free Space Communication Links, London, UK, 19 February 1996; IET: Stevenage, UK, 1996; pp. 1–9. [Google Scholar]

- Fallah, N.; Apostolopoulos, I.; Bekris, K.; Folmer, E. Indoor human navigation systems: A survey. Interact. Comput. 2013, 25, 21–33. [Google Scholar]

- Bhatlawande, S.S.; Mukhopadhyay, J.; Mahadevappa, M. Ultrasonic spectacles and waist-belt for visually impaired and blind person. In Proceedings of the 2012 National Conference on Communications (NCC), Kharagpur, India, 3–5 February 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1–4.

- Dakopoulos, D.; Bourbakis, N.G. Wearable obstacle avoidance electronic travel aids for blind: A survey. IEEE Trans. Syst. Man, Cybern. Part C (Appl. Rev.) 2009, 40, 25–35.

- Cai, Z.; Richards, D.G.; Lenhardt, M.L.; Madsen, A.G. Response of human skull to bone-conducted sound in the audiometric-ultrasonic range. Int. Tinnitus J. 2002, 8, 3–8.

- Fadell, A.; Hodge, A.; Zadesky, S.; Lindahl, A.; Guetta, A. Tactile Feedback in an Electronic Device. U.S. Patent 8373549, 12 February 2013.

- Brewster, S.; Chohan, F.; Brown, L. Tactile feedback for mobile interactions. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 159–162.

- Karkar, A.; Al-Maadeed, S. Mobile assistive technologies for visual impaired users: A survey. In Proceedings of the 2018 International Conference on Computer and Applications (ICCA), Beirut, Lebanon, 25–26 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 427–433.

- Phung, S.L.; Le, M.C.; Bouzerdoum, A. Pedestrian lane detection in unstructured scenes for assistive navigation. Comput. Vis. Image Underst. 2016, 149, 186–196.

- Singh, L.S.; Mazumder, P.B.; Sharma, G. Comparison of drug susceptibility pattern of Mycobacterium tuberculosis assayed by MODS (Microscopic-observation drug-susceptibility) with that of PM (proportion method) from clinical isolates of North East India. IOSR J. Pharm. IOSRPHR 2014, 4, 1–6.

- Bai, J.; Liu, D.; Su, G.; Fu, Z. A cloud and vision-based navigation system used for blind people. In Proceedings of the 2017 International Conference on Artificial Intelligence, Automation and Control Technologies, Wuhan, China, 7–9 April 2017; pp. 1–6.

- Sahoo, N.; Lin, H.W.; Chang, Y.H. Design and implementation of a walking stick aid for visually challenged people. Sensors 2019, 19, 130.

- Electro Schematics. How Does Mobile Phone Communication Work? 2024. Available online: https://www.electroschematics.com/mobile-phone-how-it-works/ (accessed on 17 January 2024).

- Higuchi, H.; Harada, A.; Iwahashi, T.; Usui, S.; Sawamoto, J.; Kanda, J.; Wakimoto, K.; Tanaka, S. Network-based nationwide RTK-GPS and indoor navigation intended for seamless location based services. In Proceedings of the 2004 National Technical Meeting of the Institute of Navigation, San Diego, CA, USA, 26–28 January 2004; pp. 167–174.

- Guerrero, L.A.; Vasquez, F.; Ochoa, S.F. An indoor navigation system for the visually impaired. Sensors 2012, 12, 8236–8258.

- Chen, H.; Wang, K.; Yang, K. Improving RealSense by fusing color stereo vision and infrared stereo vision for the visually impaired. In Proceedings of the 1st International Conference on Information Science and Systems, Jeju, Republic of Korea, 27–29 April 2018; pp. 142–146.

- Dian, Z.; Kezhong, L.; Rui, M. A precise RFID indoor localization system with sensor network assistance. China Commun. 2015, 12, 13–22.

- Park, S.; Choi, I.M.; Kim, S.S.; Kim, S.M. A portable mid-range localization system using infrared LEDs for visually impaired people. Infrared Phys. Technol. 2014, 67, 583–589.

- Rehrl, K.; Leitinger, S.; Bruntsch, S.; Mentz, H.J. Assisting orientation and guidance for multimodal travelers in situations of modal change. In Proceedings of the 2005 IEEE Intelligent Transportation Systems, Vienna, Austria, 16 September 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 407–412.

- Rehrl, K.; Göll, N.; Leitinger, S.; Bruntsch, S.; Mentz, H.J. Smartphone-based information and navigation aids for public transport travellers. In Location Based Services and TeleCartography; Springer: Berlin/Heidelberg, Germany, 2007; pp. 525–544.

- Liu, Z.; Li, C.; Wu, D.; Dai, W.; Geng, S.; Ding, Q. A wireless sensor network based personnel positioning scheme in coal mines with blind areas. Sensors 2010, 10, 9891–9918.

- Tapu, R.; Mocanu, B.; Zaharia, T. DEEP-SEE: Joint object detection, tracking and recognition with application to visually impaired navigational assistance. Sensors 2017, 17, 2473.

- Saleh, K.; Zeineldin, R.A.; Hossny, M.; Nahavandi, S.; El-Fishawy, N.A. Navigational path detection for the visually impaired using fully convolutional networks. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1399–1404.

- Joshi, R.C.; Yadav, S.; Dutta, M.K.; Travieso-Gonzalez, C.M. Efficient multi-object detection and smart navigation using artificial intelligence for visually impaired people. Entropy 2020, 22, 941.

- Parvadhavardhni, R.; Santoshi, P.; Posonia, A.M. Blind Navigation Support System using Raspberry Pi & YOLO. In Proceedings of the 2023 2nd International Conference on Applied Artificial Intelligence and Computing (ICAAIC), Salem, India, 4–6 May 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1323–1329.

- Cornacchia, M.; Kakillioglu, B.; Zheng, Y.; Velipasalar, S. Deep learning-based obstacle detection and classification with portable uncalibrated patterned light. IEEE Sens. J. 2018, 18, 8416–8425.

- Jiang, B.; Yang, J.; Lv, Z.; Song, H. Wearable vision assistance system based on binocular sensors for visually impaired users. IEEE Internet Things J. 2018, 6, 1375–1383.

- Masud, U.; Saeed, T.; Malaikah, H.M.; Islam, F.U.; Abbas, G. Smart assistive system for visually impaired people obstruction avoidance through object detection and classification. IEEE Access 2022, 10, 13428–13441.

- Lin, S.; Cheng, R.; Wang, K.; Yang, K. Visual localizer: Outdoor localization based on convnet descriptor and global optimization for visually impaired pedestrians. Sensors 2018, 18, 2476.

- Srikanteswara, R.; Reddy, M.C.; Himateja, M.; Kumar, K.M. Object Detection and Voice Guidance for the Visually Impaired Using a Smart App. In Proceedings of the Recent Advances in Artificial Intelligence and Data Engineering: Select Proceedings of AIDE, Udupi, India, 23–24 May 2019; Springer: Berlin/Heidelberg, Germany, 2022; pp. 133–144.

- Jaiswar, L.; Yadav, A.; Dutta, M.K.; Travieso-González, C.; Esteban-Hernández, L. Transfer learning based computer vision technology for assisting visually impaired for detection of common places. In Proceedings of the 3rd International Conference on Applications of Intelligent Systems, Las Palmas de Gran Canaria, Spain, 7–9 January 2020; pp. 1–6.

- Lee, D.; Cho, J. Automatic object detection algorithm-based braille image generation system for the recognition of real-life obstacles for visually impaired people. Sensors 2022, 22, 1601.

- Neha, F.F.; Shakib, K.H. Development of a Smartphone-based Real Time Outdoor Navigational System for Visually Impaired People. In Proceedings of the 2021 International Conference on Information and Communication Technology for Sustainable Development (ICICT4SD), Dhaka, Bangladesh, 27–28 February 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 305–310.

- Chen, Q.; Wu, L.; Chen, Z.; Lin, P.; Cheng, S.; Wu, Z. Smartphone based outdoor navigation and obstacle avoidance system for the visually impaired. In Multi-Disciplinary Trends in Artificial Intelligence, Proceedings of the 13th International Conference, MIWAI 2019, Kuala Lumpur, Malaysia, 17–19 November 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 26–37.

- Denizgez, T.M.; Kamiloğlu, O.; Kul, S.; Sayar, A. Guiding visually impaired people to find an object by using image to speech over the smart phone cameras. In Proceedings of the 2021 International Conference on INnovations in Intelligent SysTems and Applications (INISTA), Kocaeli, Turkey, 25–27 August 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–5.

- See, A.R.; Sasing, B.G.; Advincula, W.D. A smartphone-based mobility assistant using depth imaging for visually impaired and blind. Appl. Sci. 2022, 12, 2802.

- Salunkhe, A.; Raut, M.; Santra, S.; Bhagwat, S. Android-based object recognition application for visually impaired. In Proceedings of the ITM Web of Conferences, Navi Mumbai, India, 14–15 July 2021; EDP Sciences: Les Ulis, France, 2021; Volume 40, p. 03001.

- Saquib, Z.; Murari, V.; Bhargav, S.N. BlinDar: An invisible eye for the blind people making life easy for the blind with Internet of Things (IoT). In Proceedings of the 2017 2nd IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT), Bangalore, India, 19–20 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 71–75.

- Kunta, V.; Tuniki, C.; Sairam, U. Multi-functional blind stick for visually impaired people. In Proceedings of the 2020 5th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 10–12 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 895–899.

- Chava, T.; Srinivas, A.T.; Sai, A.L.; Rachapudi, V. IoT based Smart Shoe for the Blind. In Proceedings of the 2021 6th International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 20–22 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 220–223.

- Kumar, N.A.; Thangal, Y.H.; Beevi, K.S. IoT enabled navigation system for blind. In Proceedings of the 2019 IEEE R10 Humanitarian Technology Conference (R10-HTC), Depok, West Java, Indonesia, 12–14 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 186–189.

- Rahman, M.W.; Tashfia, S.S.; Islam, R.; Hasan, M.M.; Sultan, S.I.; Mia, S.; Rahman, M.M. The architectural design of smart blind assistant using IoT with deep learning paradigm. Internet Things 2021, 13, 100344.

- Krishnan, R.S.; Narayanan, K.L.; Murali, S.M.; Sangeetha, A.; Ram, C.R.S.; Robinson, Y.H. IoT based blind people monitoring system for visually impaired care homes. In Proceedings of the 2021 5th International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 3–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 505–509.

- Pathak, A.; Adil, M.; Rafa, T.S.; Ferdoush, J.; Mahmud, A. An IoT based voice controlled blind stick to guide blind people. Int. J. Eng. Invent. 2020, 9, 9–14.

- Mala, N.S.; Thushara, S.S.; Subbiah, S. Navigation gadget for visually impaired based on IoT. In Proceedings of the 2017 2nd International Conference on Computing and Communications Technologies (ICCCT), Chennai, India, 23–24 February 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 334–338.

- Zhou, M.; Li, W.; Zhou, B. An IOT system design for blind. In Proceedings of the 2017 14th Web Information Systems and Applications Conference (WISA), Liuzhou, China, 11–12 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 90–92.

- Rahman, M.W.; Islam, R.; Harun-Ar-Rashid, M. IoT based blind person’s stick. Int. J. Comput. Appl. 2018, 182, 19–21.

- Chaurasia, M.A.; Rasool, S.; Afroze, M.; Jalal, S.A.; Zareen, R.; Fatima, U.; Begum, S.; Ahmed, M.M.; Baig, M.H.; Aziz, A. Automated navigation system with indoor assistance for blind. In Contactless Healthcare Facilitation and Commodity Delivery Management during COVID 19 Pandemic; Springer: Singapore, 2022; pp. 119–128.

- Messaoudi, M.D.; Menelas, B.A.J.; Mcheick, H. Autonomous smart white cane navigation system for indoor usage. Technologies 2020, 8, 37.

- Saud, S.N.; Raya, L.; Abdullah, M.I.; Isa, M.Z.A. Smart navigation aids for blind and vision impairment people. In Proceedings of the International Conference on Computational Intelligence in Information System, Tokyo, Japan, 20–22 November 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 54–62.

- Electricity-Magnetism. Ultrasonic Sensors | How It Works, Application & Advantages. Available online: https://www.electricity-magnetism.org/ultrasonic-sensors/ (accessed on 8 May 2024).

- Chanana, P.; Paul, R.; Balakrishnan, M.; Rao, P. Assistive technology solutions for aiding travel of pedestrians with visual impairment. J. Rehabil. Assist. Technol. Eng. 2017, 4, 2055668317725993.

- Real, S.; Araujo, A. Navigation systems for the blind and visually impaired: Past work, challenges, and open problems. Sensors 2019, 19, 3404.

- El-Taher, F.E.Z.; Taha, A.; Courtney, J.; Mckeever, S. A systematic review of urban navigation systems for visually impaired people. Sensors 2021, 21, 3103.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).