Abstract

Graph convolutional neural network architectures combine feature extraction and convolutional layers for hyperspectral image classification. To improve graph convolutional network (GCN) classification of hyperspectral images, an adaptive neighborhood aggregation method based on statistical variance is proposed, which integrates the spatial information of each pixel with its spectral signature. The spatial-spectral information is integrated into the adjacency matrix and processed by a single-layer GCN. The algorithm employs an adaptive neighborhood selection criterion that adapts to the class region to which each pixel belongs. Compared with fixed window-based feature extraction, this method effectively captures spectral and spatial features with variable pixel neighborhood sizes. Experimental results on the Indian Pines, Houston University, and Botswana Hyperion hyperspectral image datasets show that the proposed adaptive neighborhood GCN (AN-GCN) significantly improves classification accuracy. For example, the overall accuracy on the Houston University data increases from 81.71% (MiniGCN) to 97.88% (AN-GCN). Furthermore, AN-GCN classifies hyperspectral images of rice seeds exposed to high day and night temperatures, demonstrating its ability to discriminate seeds under increased ambient temperature treatments.

1. Introduction

Hyperspectral images (HSIs) contain rich spectral and spatial information useful for material identification. Recently, many deep learning methods have been developed that increase the classification accuracy and segmentation precision for these images [1]. One of these approaches combines graphs with deep learning. Many non-Euclidean data structures can be represented as graphs [2,3]. Sophisticated sensing and imaging technologies now make it possible to acquire complex image datasets whose high information content calls for non-Euclidean representations, and graph theory combined with deep learning has proved effective for processing such images. Building on convolutional and deep networks, Graph Convolutional Networks (GCNs) have been developed and applied to images [4]. An image with a regular domain (a regular grid in Euclidean space) is represented as a graph in which each pixel is a node and edges connect adjacent nodes [5]. GCNs can model long-range spatial relationships in HSIs that CNNs fail to capture [6]. One of the most important steps in developing a GCN is generating the adjacency matrix and deriving the Laplacian [7,8,9]. The adjacency matrix of an undirected graph represents the relationships between vertices, in this case pixels. Qin et al. [10] proposed a GCN method that markedly improved HSI classification accuracy by multiplying the spatial distance between nodes by an adjacency matrix containing spectral signature information. Mou et al. [11] built the graph using correlation to measure the similarity among pixels, thereby identifying pixels belonging to the same category, and their two-layer GCN improved the classification results.
A drawback of GCNs is the computational cost of constructing the adjacency matrix. To address this problem, the authors of [12] constructed the adjacency matrix using only the pixels within a patch, which contains rich local spatial information, instead of all the pixels in the image. Another important contribution to reducing the computational cost is MiniGCN, proposed by Hong et al. [6], which allows large-scale GCNs to be trained with small batches. Its adjacency matrix is constructed with K-nearest neighbors, taking the 10 nearest neighbors of the central pixel into consideration. Many authors build the adjacency matrix of an HSI with k-nearest neighbors [13,14] because the method is easy to understand and apply, but it does not sufficiently capture the spectral characteristics of hyperspectral data [15]. The superpixel approach [16,17] creates the graph from superpixel regions to reduce the graph size.

Some authors have implemented threshold-based neighbor selection, for example, in hyperspectral unmixing applications [18]. Others have used adaptive shapes [19] in classification methods that do not involve GCNs. In this work, we propose AN-GCN, a novel way of adaptively creating the adjacency matrix based on neighbor selection. The general idea is to iterate over each pixel and, using a variance measure, aggregate the neighbors that belong to the same class as the central pixel to build the adjacency matrix. The algorithm selects a different number of neighbors for each pixel, thereby adapting to the spatial variability of the class to which the pixel belongs, with the aim of improving classification and resolving the uncertainty that arises at border pixels. The construction of the adjacency matrix is a crucial step for a GCN to succeed in HSI classification [13], because the graph representation of the HSI encoded in the adjacency matrix significantly affects the classification result. The performance of AN-GCN is compared with GCN methods that do not include additional machine learning stages in their architecture. Recently, hyperspectral images have been used in agriculture for the analysis of rice seeds [20,21,22], because accurate non-destructive phenotype measurement of seeds helps evaluate seed quality and contributes to improved agricultural production [23]. Hyperspectral classification of rice seeds also saves labor and time, since these assessments are conventionally performed manually by expert inspectors [20,24]. To our knowledge, hyperspectral rice seed images have so far been classified only with machine learning methods other than GCNs, and no GCN-based method has been reported.
To test the effectiveness of AN-GCN in classifying hyperspectral images of rice seeds, less than 10% of the rice image data is used for training, and the remainder is used for testing and validation. The main contributions of this work are (1) a novel way of computing the adjacency matrix using adaptive spatial neighborhood aggregation to improve the performance of GCNs in HSI classification; and (2) the classification of HSIs of rice seeds grown under high temperatures using the AN-GCN approach.

2. Materials and Methods

This section presents the AN-GCN method, in which the adjacency matrix is constructed by adaptive neighbor selection. The goal is to characterize the homogeneity between pixels for better discrimination between classes, since pixels within a homogeneous region most likely belong to the same class. Homogeneity is measured with a variance metric [25]:

$$\mathrm{Var}_R = \frac{1}{n} \sum_{i=1}^{n} \left(x_i - \bar{x}\right)^2 \quad (1)$$

where $x_i$ are the pixel intensity values per band that belong to a radius R, $\bar{x}$ is their mean, and n is the number of bands in each pixel.

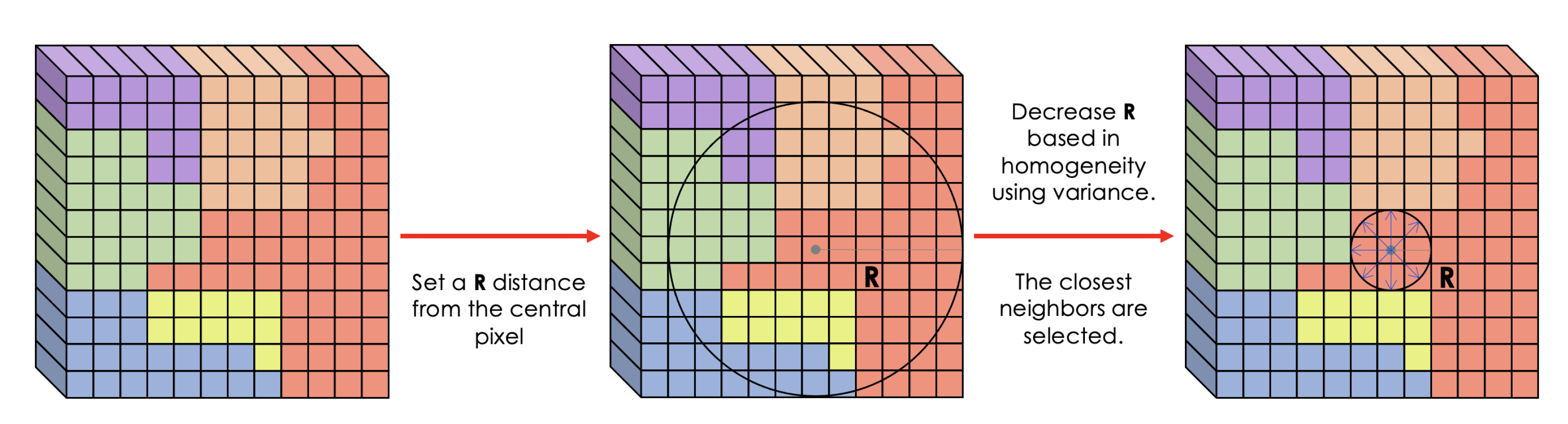

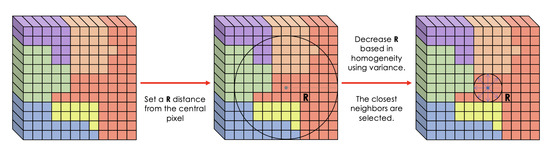

A radius R is selected based on unit distances in spatial coordinates, as shown in the scheme of Figure 1. If R = 1, the algorithm selects only the four closest neighbors; a larger radius selects more neighbors. The initial radius is set to R = 100. To select homogeneous regions, the variance measure is applied to the area covered by radius R, and the threshold values listed in Table 1 for the different HSIs are used to decide when this area must be reduced, so that only pixels from homogeneous neighborhoods are selected.

Figure 1.

Scheme of neighbors selection using a variance radius.

Table 1.

Threshold values for selecting neighborhood pixels for each HSI.

If the variance is larger than the threshold value, the coverage radius is decreased until the variance falls below the threshold. Once the variance is smaller than the threshold, the selected neighbors meet the criterion and the pixels in the neighborhood are taken to belong to the same class as the center pixel, which supports class discrimination.
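The radius-shrinking rule just described can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the homogeneity measure is taken as the per-band variance across the pixels inside the disk, averaged over bands (one reading of the variance metric), the radius is reduced in unit steps, and the helper names `disk_offsets`, `neighborhood_variance`, and `adaptive_neighbors` are hypothetical. With R = 1 the disk contains exactly the four closest neighbors, matching the text.

```python
import numpy as np


def disk_offsets(radius):
    """(dy, dx) offsets within Euclidean distance `radius`, excluding the center (0, 0)."""
    r = int(radius)
    dy, dx = np.mgrid[-r:r + 1, -r:r + 1]
    inside = (dy ** 2 + dx ** 2 <= radius ** 2) & ~((dy == 0) & (dx == 0))
    return np.stack([dy[inside], dx[inside]], axis=1)


def neighborhood_variance(cube, row, col, offsets):
    """Homogeneity of the center pixel plus its in-image neighbors.

    Assumed measure: variance across pixels computed per band, then averaged over bands.
    Returns the variance and the (row, col) coordinates of the neighbors (center excluded).
    """
    h, w, _ = cube.shape
    rows, cols = row + offsets[:, 0], col + offsets[:, 1]
    valid = (rows >= 0) & (rows < h) & (cols >= 0) & (cols < w)
    rows, cols = rows[valid], cols[valid]
    pixels = np.concatenate([cube[row, col][None, :], cube[rows, cols]], axis=0)
    return float(pixels.var(axis=0).mean()), np.stack([rows, cols], axis=1)


def adaptive_neighbors(cube, row, col, threshold, r_init=100):
    """Shrink the radius from r_init in unit steps until the variance is <= threshold."""
    for radius in range(r_init, 0, -1):
        var, nbrs = neighborhood_variance(cube, row, col, disk_offsets(radius))
        if var <= threshold:
            return nbrs, radius
    return np.empty((0, 2), dtype=int), 0   # no homogeneous neighborhood found


# Toy cube with two flat regions: columns 0-4 hold 0.0, columns 5-9 hold 1.0.
cube = np.zeros((10, 10, 3))
cube[:, 5:, :] = 1.0
nbrs, r = adaptive_neighbors(cube, row=5, col=1, threshold=0.01)
print(r)  # 3: the largest disk around (5, 1) that stays inside the left region
```

Shrinking one unit at a time is the simplest schedule consistent with the description; a real implementation over a full scene would likely cache the offsets and vectorize across pixels.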

The threshold value for each HSI is determined by calculating the average variance of sets of pixels that are known to belong to the same class; a single threshold is then chosen for all classes of that HSI, so the threshold differs from one HSI to another. Neighbor selection is applied to every pixel of the HSI, and each pixel obtains its own neighborhood depending on the homogeneity of the surrounding region. Once the neighbors of each center pixel are selected, the adjacency matrix is built using the radial basis function (RBF).

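$$A_{ij} = \exp\left(-\frac{\lVert \mathbf{x}_i - \mathbf{x}_j \rVert^2}{\sigma^2}\right) \quad (2)$$

where $\mathbf{x}_i$ and $\mathbf{x}_j$ are the pixel intensity vectors (one value per band) for pixels i and j, and $\sigma$ is a control parameter. $A_{ij}$ is computed for the pixels j in the selected neighborhood of pixel i and is zero otherwise.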
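A dense-matrix sketch of the RBF weighting follows, assuming the neighbor lists come from an adaptive selection step like the one above, with pixel (row, col) mapped to node index `row * width + col`. The function name `rbf_adjacency` is hypothetical, and symmetrizing A is our assumption: adaptive neighborhoods need not be mutual, while the graph is treated as undirected.

```python
import numpy as np


def rbf_adjacency(features, neighbors, sigma):
    """Dense RBF adjacency restricted to the selected neighborhoods.

    features:  (N, bands) pixel spectra, one row per graph node.
    neighbors: neighbors[i] lists the node indices selected for node i.
    sigma:     RBF control parameter.
    """
    n = features.shape[0]
    adj = np.zeros((n, n))
    for i, nbrs in enumerate(neighbors):
        for j in nbrs:
            weight = np.exp(-np.sum((features[i] - features[j]) ** 2) / sigma ** 2)
            adj[i, j] = weight
            adj[j, i] = weight   # assumption: symmetrize so the graph stays undirected
    return adj


features = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
A = rbf_adjacency(features, neighbors=[[1], [0, 2], [1]], sigma=1.0)
# A[0, 1] = exp(-1), A[1, 2] = exp(-5), A[0, 2] = 0 (nodes 0 and 2 are not neighbors)
```

For full scenes a sparse matrix (for example from `scipy.sparse`) would be preferable, since each row has only as many nonzeros as selected neighbors.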

After the adjacency matrix is constructed, the GCN algorithm is applied. The Laplacian matrix computed from the adaptive neighborhood adjacency matrix measures how much a function defined on the graph changes at each node (the graph gradient). The symmetric normalized Laplacian is

$$L = I - D^{-1/2} A D^{-1/2} \quad (3)$$

where I is the identity matrix, D is the diagonal matrix of node degrees ($D_{ii} = \sum_j A_{ij}$), and A is the adjacency matrix. The normalized Laplacian is real, symmetric, and positive semi-definite; because it is real and symmetric, it can be diagonalized by the Fourier basis U, and Equation (3) can be written as $L = U \Lambda U^{T}$, where U is the matrix of eigenvectors and $\Lambda$ is the diagonal matrix of eigenvalues $\lambda_i$. The eigenvectors satisfy the orthonormality property $U^{T} U = I$. The graph Fourier transform of a graph signal X is defined as $\mathcal{F}(X) = U^{T} X$ and its inverse as $\mathcal{F}^{-1}(\hat{X}) = U \hat{X}$, where X is the feature vector of all nodes of the graph. The graph Fourier transform projects the input graph signal onto an orthonormal space whose basis is formed by the eigenvectors of the normalized graph Laplacian [5]. In graph signal processing, the convolution of a graph signal X with a filter g is defined as:

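$$X *_{G} g = \mathcal{F}^{-1}\left(\mathcal{F}(X) \odot \mathcal{F}(g)\right) = U\left(U^{T} X \odot U^{T} g\right) \quad (4)$$

where ⊙ is the element-wise product. Defining the filter as $g_\theta = \mathrm{diag}(U^{T} g)$, the convolution in Equation (4) simplifies to

$$X *_{G} g_\theta = U g_\theta U^{T} X \quad (5)$$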

Because the eigendecomposition required by Equation (5) is computationally expensive, and because the filter $g_\theta$ is not localized (it may involve nodes far away from the central node), Hammond et al. [26] approximated $g_\theta$ with a truncated expansion of Chebyshev polynomials up to order K. The ChebNet kernel is defined as

$$g_{\theta'}(\Lambda) \approx \sum_{i=0}^{K} \theta'_i \, T_i(\tilde{\Lambda}) \quad (6)$$

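where i indexes the neighborhood order, from 0 up to the largest order K, $\theta'_i$ are the Chebyshev coefficients, $T_i$ is the Chebyshev polynomial of order i, and $\tilde{\Lambda}$ is the rescaled eigenvalue matrix

$$\tilde{\Lambda} = \frac{2}{\lambda_{\max}} \Lambda - I \quad (7)$$

Substituting the filter of Equation (6) into the convolution of Equation (5) gives

$$X *_{G} g_{\theta'} \approx U \left(\sum_{i=0}^{K} \theta'_i \, T_i(\tilde{\Lambda})\right) U^{T} X \quad (8)$$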

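Since $(U \Lambda U^{T})^{i} = U \Lambda^{i} U^{T}$, Equation (8) can be written as

$$X *_{G} g_{\theta'} \approx \sum_{i=0}^{K} \theta'_i \, T_i(\tilde{L}) \, X \quad (9)$$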

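where $\tilde{L} = \frac{2}{\lambda_{\max}} L - I$. Taking K = 1 and approximating the largest eigenvalue of L by $\lambda_{\max} \approx 2$ [27], Equation (9) can be written as:

$$X *_{G} g_{\theta'} \approx \theta'_0 \, T_0(\tilde{L}) X + \theta'_1 \, T_1(\tilde{L}) X \quad (10)$$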

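where $T_0(\tilde{L}) = I$ and $T_1(\tilde{L}) = \tilde{L} = L - I$, so Equation (10) becomes:

$$X *_{G} g_{\theta'} \approx \theta'_0 X + \theta'_1 (L - I) X \quad (11)$$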

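To avoid overfitting, GCN uses a single parameter $\theta = \theta'_0 = -\theta'_1$. Substituting Equation (3) for L in Equation (11) yields:

$$X *_{G} g_{\theta} \approx \theta \left(I + D^{-1/2} A D^{-1/2}\right) X \quad (12)$$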

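Repeated application of the operator $I + D^{-1/2} A D^{-1/2}$, whose eigenvalues lie in [0, 2], can cause numerical instabilities, so the renormalization proposed in [27] is used, where $\tilde{A} = A + I$ and $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$. During training, the weights of the hidden layer are updated iteratively until the output of the GCN converges to the target classes. With this renormalization, the propagation rule for GCNs is:

$$Z = \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} X \Theta \quad (13)$$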

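where $\Theta$ is a matrix of filter parameters (weights) and Z is the convolved output. Most articles on GCNs write the propagation rule as follows:

$$H^{(l+1)} = h\left(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)} + b^{(l)}\right) \quad (14)$$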

where the matrix $H^{(l)}$ denotes the feature output of the lth layer (the input to layer l + 1), h is the activation function, and $W^{(l)}$ and $b^{(l)}$ are the learned weights and biases.
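To make the renormalization and the layer-wise propagation rule concrete, the NumPy sketch below implements the normalized Laplacian, the renormalized adjacency $\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2}$, and one GCN layer. ReLU as the activation h is an assumption, and the function names are hypothetical. The eigenvalue check on a small path graph illustrates why $\lambda_{\max} \approx 2$ is a reasonable approximation: the spectrum of the symmetric normalized Laplacian lies in [0, 2].

```python
import numpy as np


def normalized_laplacian(adj):
    """Symmetric normalized Laplacian L = I - D^{-1/2} A D^{-1/2}."""
    deg = adj.sum(axis=1)
    d_inv_sqrt = np.zeros_like(deg)
    d_inv_sqrt[deg > 0] = deg[deg > 0] ** -0.5   # isolated nodes contribute no A term
    return np.eye(adj.shape[0]) - d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]


def renormalized_adjacency(adj):
    """Renormalization trick: D~^{-1/2} (A + I) D~^{-1/2}, with D~_ii = sum_j (A + I)_ij."""
    a_tilde = adj + np.eye(adj.shape[0])
    d_inv_sqrt = a_tilde.sum(axis=1) ** -0.5
    return d_inv_sqrt[:, None] * a_tilde * d_inv_sqrt[None, :]


def gcn_layer(feats, adj, weight, bias):
    """One propagation step H^{(l+1)} = h(A_hat H^{(l)} W^{(l)} + b^{(l)}) with h = ReLU (assumed)."""
    return np.maximum(renormalized_adjacency(adj) @ feats @ weight + bias, 0.0)


# Small path graph 0 - 1 - 2 with unit edge weights.
adj = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]])
eigvals = np.linalg.eigvalsh(normalized_laplacian(adj))
print(eigvals.min() >= -1e-9, eigvals.max() <= 2 + 1e-9)   # spectrum lies in [0, 2]
```

In a trainable model, `weight` and `bias` would be parameters of a framework such as PyTorch; the fixed arrays here only show the algebra.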

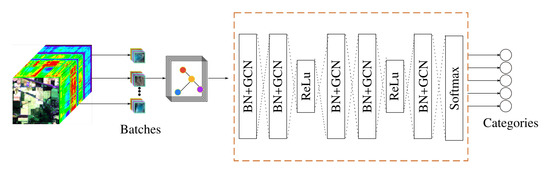

To reduce the computational cost of AN-GCN, the mini-batch processing of MiniGCN [6] is followed. Batches of pixels are extracted as in CNNs, and a subgraph is constructed for each batch from the adjacency matrix A. Applying the propagation rule of Equation (14) to each subgraph gives:

$$H_s^{(l+1)} = h\left(\tilde{D}_s^{-1/2} \tilde{A}_s \tilde{D}_s^{-1/2} H_s^{(l)} W^{(l)} + b^{(l)}\right) \quad (15)$$

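where $s = 1, \ldots, P$ indexes the batches of pixels (subgraphs) used for network training, and $\tilde{A}_s$ and $\tilde{D}_s$ are the renormalized adjacency and degree matrices of the sth subgraph. The final output of the propagation rule joins all the subgraph outputs:

$$H^{(l+1)} = \left[H_1^{(l+1)}, H_2^{(l+1)}, \ldots, H_P^{(l+1)}\right] \quad (16)$$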
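The batch-wise propagation can be sketched as below, assuming random batches drawn from a permutation of the nodes and subgraphs induced by each batch, so that edges between different batches are dropped. ReLU and the name `minibatch_gcn_layer` are illustrative assumptions rather than the MiniGCN code. Outputs are written back in the original node order, which is one way of joining the subgraph outputs.

```python
import numpy as np


def renormalized_adjacency(adj):
    """D~^{-1/2} (A + I) D~^{-1/2} for a (sub)graph adjacency."""
    a_tilde = adj + np.eye(adj.shape[0])
    d_inv_sqrt = a_tilde.sum(axis=1) ** -0.5
    return d_inv_sqrt[:, None] * a_tilde * d_inv_sqrt[None, :]


def minibatch_gcn_layer(feats, adj, weight, bias, batch_size, seed=0):
    """Apply the propagation rule to each induced subgraph and join the outputs."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(feats.shape[0])
    out = np.empty((feats.shape[0], weight.shape[1]))
    for start in range(0, len(order), batch_size):
        idx = order[start:start + batch_size]
        sub_adj = adj[np.ix_(idx, idx)]            # A_s: only edges inside this batch
        z = renormalized_adjacency(sub_adj) @ feats[idx] @ weight + bias
        out[idx] = np.maximum(z, 0.0)              # ReLU (assumed activation)
    return out
```

With `batch_size` equal to the number of nodes, the result matches the full-graph layer; smaller batches trade cross-batch edges for memory.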

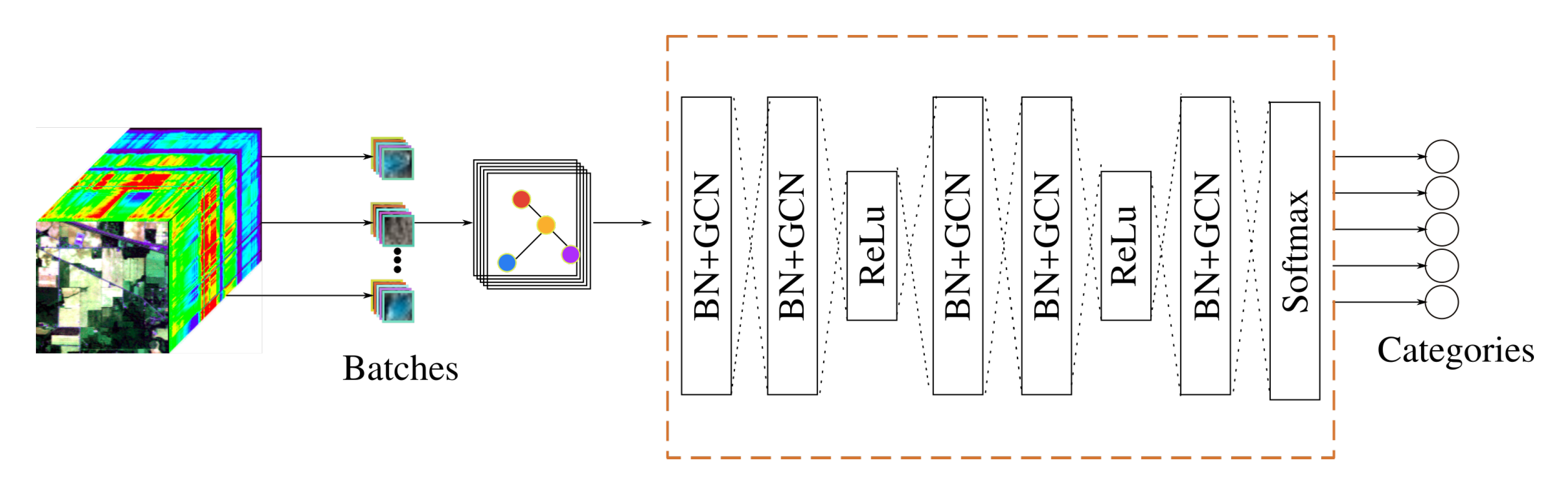

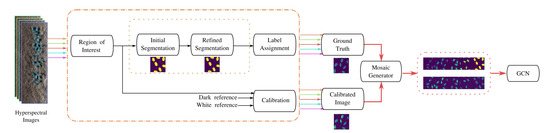

The pseudocode for creating the adjacency matrix for AN-GCN is given in Algorithm 1, and the pseudocode for AN-GCN is given in Algorithm 2. Figure 2 shows the architecture of AN-GCN. Batch normalization is applied before the GCN layer.

Algorithm 1.

Adjacency matrix for AN-GCN.

Figure 2.

AN-GCN architecture.

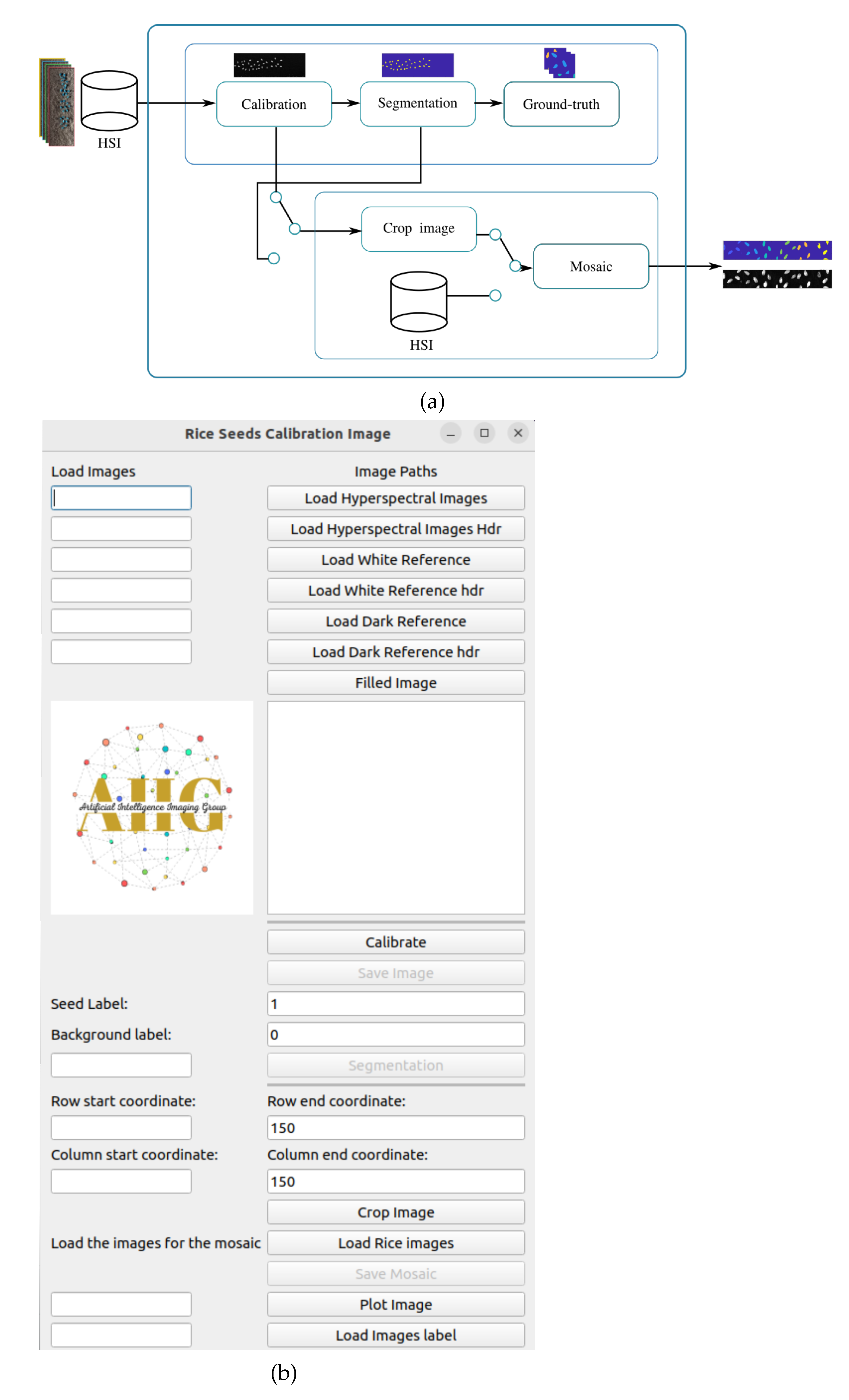

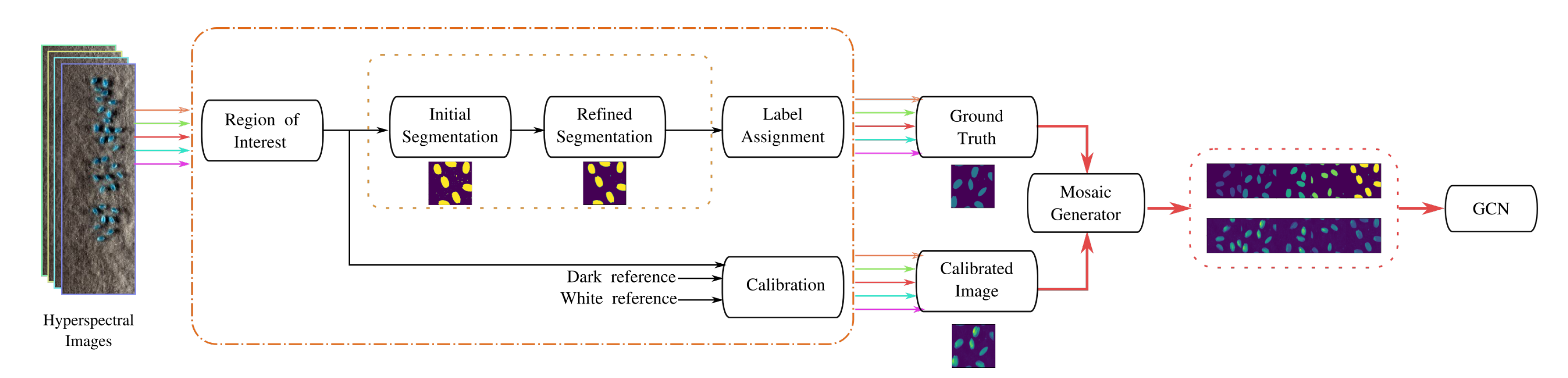

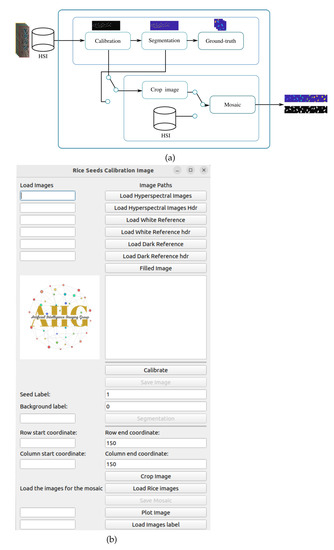

A graphical user interface (GUI) is developed for constructing the image and ground truth mosaics used for pixel-based classification of rice seed HSIs. The hyperspectral rice seed images are calibrated using the workflow illustrated in Figure 3a. The input is the rice seed hypercube, a three-dimensional array.

Algorithm 2.

Pseudocode of AN-GCN for HSI classification.

Figure 3.

(a) Workflow for HSI seed images and ground truth mosaic construction, (b) HSI seed calibration application.

$$I_c = \frac{I_0 - I_{\mathrm{dark}}}{I_{\mathrm{white}} - I_{\mathrm{dark}}}$$

where $I_c$ represents the calibrated image, $I_0$ is the raw image, $I_{\mathrm{dark}}$ is the dark reference, and $I_{\mathrm{white}}$ is the white reference acquired by the sensor. After calibration, the image is segmented in two steps. First, the Otsu thresholding algorithm is applied to separate the seeds from the background. Second, the segmentation is refined using a Gaussian filter with a standard deviation σ, and labeling is performed using 2-connectivity. The “ground truth block” generates the labels for the seeds and the background. In addition, a “crop image block” is available to select a Region Of Interest (ROI) from a calibrated or segmented input image. Once the images are cropped, a parallel block creates the mosaics for training and classification with the GCN architecture. A Hyperspectral Seed Application has been developed to perform these processes. The GUI of the application, illustrated in Figure 3b, is implemented in Python using the PyQt5 library.
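The calibration and the first segmentation step can be sketched with NumPy alone. This is a hedged illustration, not the application's code: `calibrate` applies the dark/white flat-field correction, `otsu_threshold` is a from-scratch Otsu implementation (a library routine such as scikit-image's would normally be used), and thresholding a single band image, for example the band mean, is an assumption.

```python
import numpy as np


def calibrate(raw, dark, white, eps=1e-8):
    """Flat-field calibration (raw - dark) / (white - dark), per pixel and band."""
    return (raw - dark) / np.maximum(white - dark, eps)


def otsu_threshold(image, nbins=256):
    """Otsu's method: the bin center that maximizes the between-class variance."""
    hist, edges = np.histogram(image.ravel(), bins=nbins)
    hist = hist.astype(float)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                    # pixel count at or below each bin
    w1 = w0[-1] - w0                        # pixel count above each bin
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return centers[np.argmax(between)]


# Hypothetical usage on a calibrated cube of shape (rows, cols, bands):
#   band_image = calibrate(raw, dark, white).mean(axis=2)
#   seed_mask = band_image > otsu_threshold(band_image)
```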

The GUI is operated as follows. The user uploads the HSI of the seeds and the white and dark references in the provided widgets. Buttons are provided for calibrating and saving the image. The user can assign categorical labels to the seed classes, based, for example, on the temperatures to which the seeds were exposed or on seed variety. Once the integer labels are selected, the images are labeled and saved. The GUI also provides a cropping function: because HSIs are large, the user can crop an image by specifying the row and column coordinates enclosing the seed. Finally, a mosaic of seed images from different categories is created by concatenating the individual images horizontally or vertically. A button is provided for visualizing the images at any stage.

Datasets

Four hyperspectral image datasets are used to test the proposed AN-GCN method. The training set is generated by randomly selecting pixels from each class; class 0, which corresponds to the background, is not used for training. Once the training and testing pixels are selected, the entries of the adjacency matrix corresponding to those pixels are extracted. Table 2 gives the specifications of each dataset.
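A minimal sketch of this split, assuming a fixed number of training pixels per class (the per-class counts in the dataset tables would replace `n_train_per_class` in practice) and a flattened row-major pixel indexing shared with the adjacency matrix. Both function names are hypothetical.

```python
import numpy as np


def split_pixels(labels, n_train_per_class, seed=0):
    """Randomly pick n_train_per_class pixels per class; class 0 (background) is skipped."""
    rng = np.random.default_rng(seed)
    flat = labels.ravel()
    train, test = [], []
    for c in np.unique(flat):
        if c == 0:
            continue
        idx = rng.permutation(np.flatnonzero(flat == c))
        train.append(idx[:n_train_per_class])
        test.append(idx[n_train_per_class:])
    return np.concatenate(train), np.concatenate(test)


def training_subgraph(adj, train_idx):
    """Rows and columns of the adjacency matrix that belong to the selected pixels."""
    return adj[np.ix_(train_idx, train_idx)]
```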

Table 2.

Hyperspectral dataset specifications.

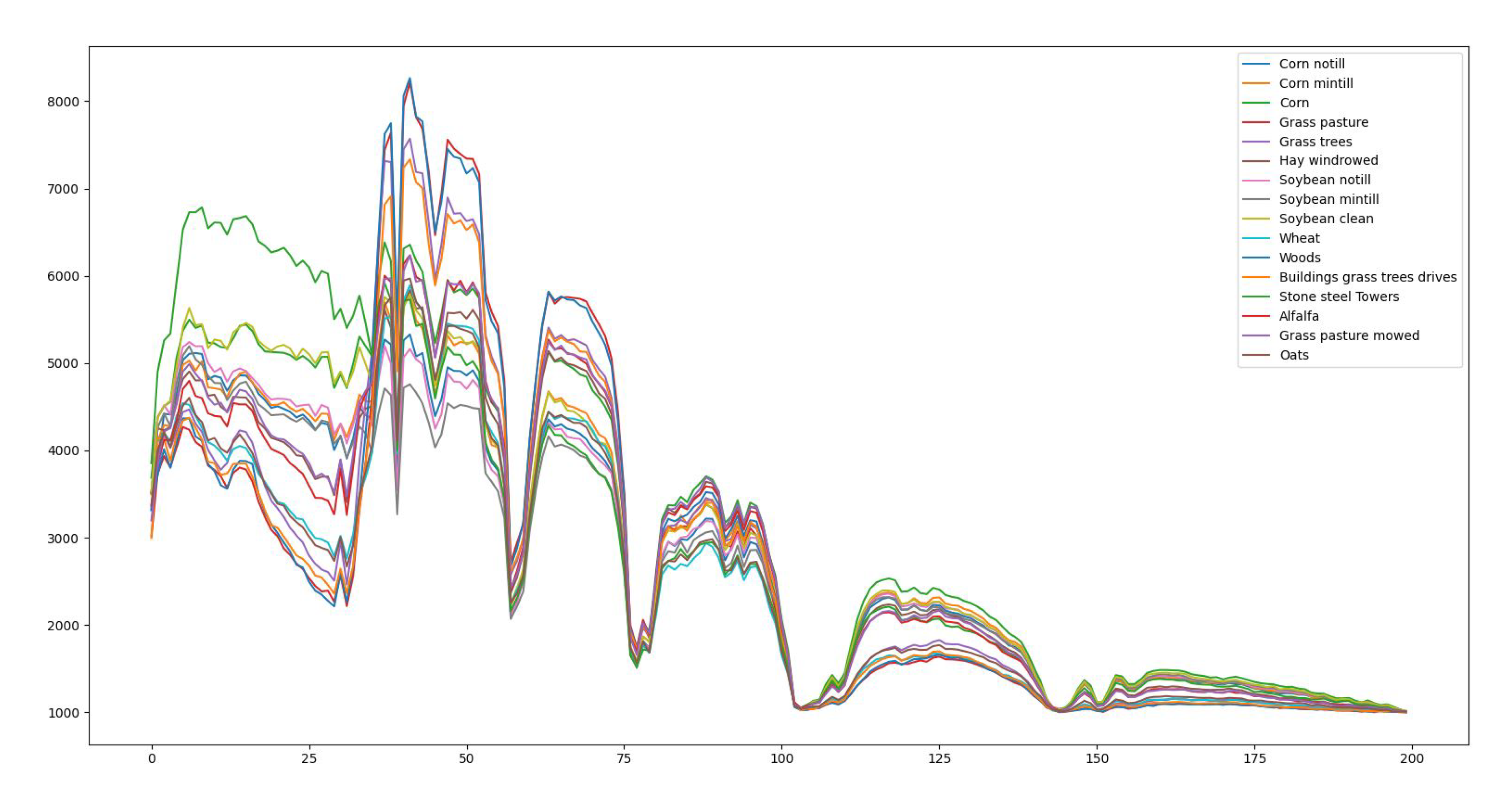

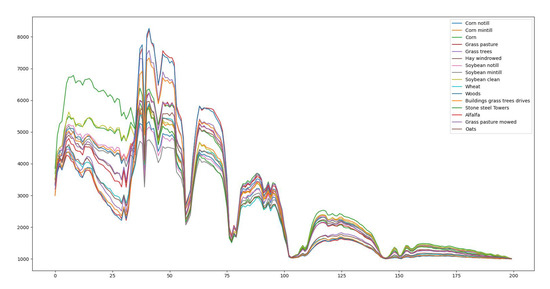

(1) Indian Pines dataset: This scene was acquired over the Indian Pines test site in north-western Indiana by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor. Each band contains 145 × 145 pixels, with a total of 224 bands. The scene contains 16 classes, including crops, vegetation, smaller roads, highways, and low-density housing; they are named in Table 3 along with the training and testing sets used for AN-GCN. The spectrum of one pixel from each of the 16 classes is shown in Figure 4. Twenty noisy bands affected by water absorption are removed, leaving a total of 200 bands.

Figure 4.

Spectrum of each material in the Indian Pines hyperspectral image.

Table 3.

Number of training and testing samples for the different classes in Indian Pine dataset.

(2) University of Houston dataset: This hyperspectral dataset was acquired with an ITRES-CASI sensor. It contains 144 spectral bands between 380 and 1050 nm, with a spatial domain of 349 × 1905 pixels per band and a spatial resolution of 2.5 m. The data cover the University of Houston campus, including land cover and urban regions, with a total of 15 classes, which are listed in Table 4 along with the training and testing sets used for AN-GCN.

Table 4.

Number of training and testing samples for the different classes in Houston University dataset.

(3) Botswana dataset: This scene was acquired over the Okavango Delta, Botswana, in 2001–2004 by the Hyperion sensor on board the EO-1 satellite. It contains 242 bands between 400 and 2500 nm. After the noisy bands are removed, 145 bands remain [10–55, 82–97, 102–119, 134–164, 187–220]. Each band contains 1476 × 256 pixels with a spatial resolution of 30 m.

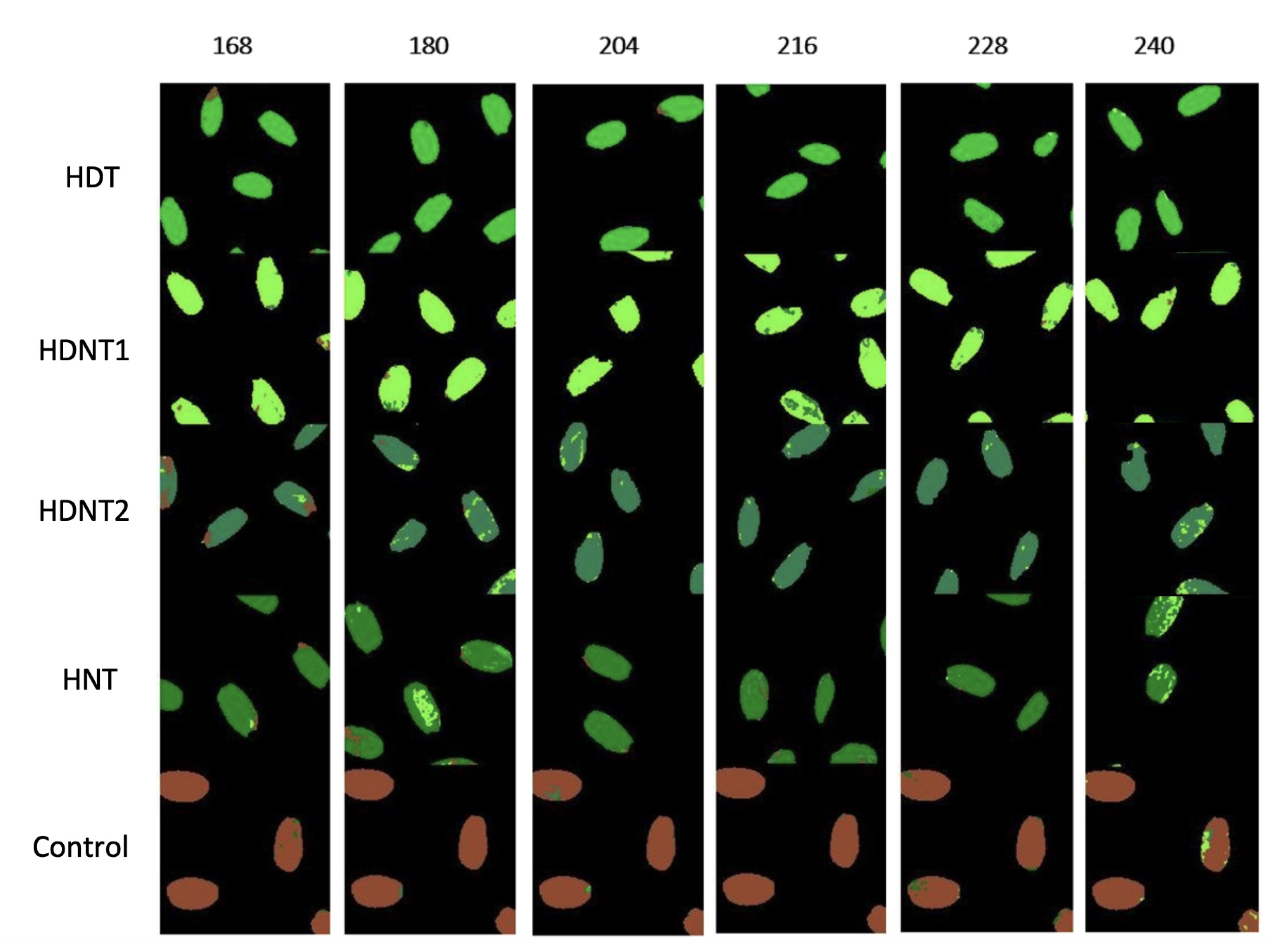

(4) Rice seeds dataset: Hyperspectral images of rice seeds grown under high day/night temperature environments and a control environment were acquired with a high-performance line-scan image spectrograph (Micro-Hyperspec Imaging Sensors, Extended VNIR version) [23]. This sensor covers the spectral range from 600 to 1700 nm. The dataset contains 268 bands with 150 × 900 pixels per band. The four temperature treatments are listed in Table 5.

Table 5.

Temperature treatments for rice seed HSI dataset.

The rice seed images are calibrated using the workflow outlined in Figure 5, which is composed of five stages. The first stage reads the input image and selects a region of interest (ROI) containing rice samples. Once the ROI is obtained, an initial segmentation based on histogram selection is performed: the rice seeds are separated from the background when their intensities fall within a selected intensity interval.

Figure 5.

Workflow for preprocessing of rice seed hyperspectral images.

The initial segmentation extracts the rice seeds in the regions of interest. However, some isolated pixels belonging to the background class remain within the rice seed region. To remove these pixels, a Gaussian filter with standard deviation σ is applied to the cropped image. In addition, similar regions are joined by connected component labeling with k-connectivity; here, a 3 × 3 kernel with 2-connectivity is used to connect disconnected regions and assign a label to each. Once the segmentation is refined, the label assignment stage assigns a label to each pixel from the tuple (0, class number), where 0 represents the background.
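The refinement stage can be sketched as follows, assuming that "2-connectivity" follows the scikit-image convention, where connectivity 2 in 2D means the full 3 × 3 (8-connected) neighborhood. The separable Gaussian blur and breadth-first labeling are simple stand-ins for library routines such as `scipy.ndimage.gaussian_filter` and `skimage.measure.label`; the function names are hypothetical.

```python
from collections import deque

import numpy as np


def gaussian_smooth(image, sigma):
    """Separable Gaussian blur with reflect padding; output has the input's shape."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(image, radius, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def label_components(mask):
    """Label 8-connected foreground regions (full 3 x 3 neighborhood); background stays 0."""
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=int)
    current = 0
    for r0 in range(h):
        for c0 in range(w):
            if mask[r0, c0] and labels[r0, c0] == 0:
                current += 1
                labels[r0, c0] = current
                queue = deque([(r0, c0)])
                while queue:
                    r, c = queue.popleft()
                    for dr in (-1, 0, 1):
                        for dc in (-1, 0, 1):
                            rr, cc = r + dr, c + dc
                            if 0 <= rr < h and 0 <= cc < w and mask[rr, cc] and labels[rr, cc] == 0:
                                labels[rr, cc] = current
                                queue.append((rr, cc))
    return labels, current


def assign_labels(mask, class_number):
    """Ground truth for one image: 0 for background, class_number for seed pixels."""
    return np.where(mask, class_number, 0)
```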

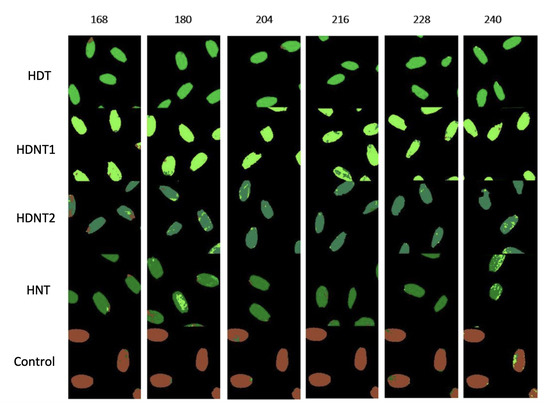

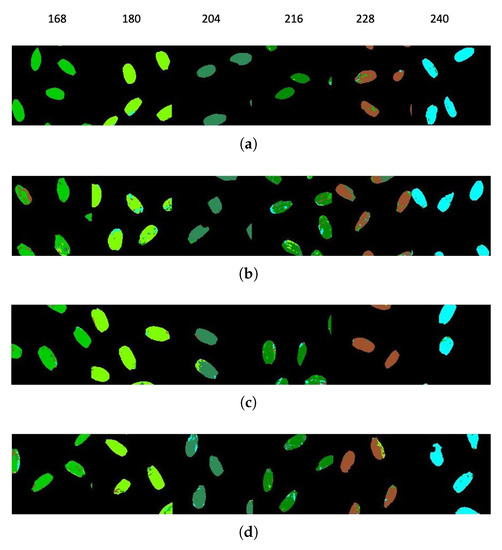

Two types of classification were performed on the rice seed HSIs. The first classifies the treatments at a fixed exposure time: for a given time, each treatment is a class, numbered 1 for HDT, 2 for HDNT1, 3 for HDNT2, 4 for HNT, and 5 for Control. The second classifies the exposure times within the same treatment, with classes numbered 1 for 168 h, 2 for 180 h, 3 for 204 h, 4 for 216 h, 5 for 228 h, and 6 for 240 h.

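The images are calibrated using the Shafer model [28], described in Equation (17):

$$\rho = C_\lambda \, \frac{M - W_0}{W - W_0} \Bigg|_{W_0 = B} = C_\lambda \, \frac{M - B}{W - B} \quad (17)$$

where $\rho$ is the calibrated reflectance, $C_\lambda$ is the constant reflection value at a predetermined wavelength, M is the measurement of reflection, W is the white reference obtained from the calibration of a Teflon tile, and B is the black reference.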

The rice seed HSI preprocessing workflow is applied to the images of all five classes. The last stage is the mosaic generator, which stitches the five ground truth images and the five calibrated HSIs into two mosaics, respectively. These two mosaics are then input to the GCN for classification.
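The mosaic stage can be sketched as a concatenation of the calibrated cubes and of their label maps, using the treatment class numbers given above. The dictionary `TREATMENT_LABELS` and the function `build_mosaics` are hypothetical names; horizontal stitching (`axis=1`) assumes all images share the same number of rows.

```python
import numpy as np

# Class numbers for the treatment-wise classification, as listed in the text.
TREATMENT_LABELS = {"HDT": 1, "HDNT1": 2, "HDNT2": 3, "HNT": 4, "Control": 5}


def build_mosaics(cubes, masks, class_numbers, axis=1):
    """Stitch calibrated cubes (H, W, bands) and their seed masks into two mosaics.

    axis=1 concatenates horizontally (all images share H); axis=0 stacks vertically.
    """
    ground_truths = [np.where(mask, c, 0) for mask, c in zip(masks, class_numbers)]
    return np.concatenate(cubes, axis=axis), np.concatenate(ground_truths, axis=axis)
```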

3. Results

The performance of the AN-GCN method is evaluated by comparing its classification results with pure GCN-based results reported by other authors in the literature, especially MiniGCN. Three metrics, Overall Accuracy (OA), Average Accuracy (AA), and the Kappa score, are used for this comparison. Accuracies improved by AN-GCN are highlighted in bold in the tables. To further test the effectiveness of AN-GCN, several tests are performed with a fixed number of nearest neighbors and compared with the proposed adaptive neighbor method.
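For reference, the three metrics can be computed from a confusion matrix as sketched below, using the standard definitions: OA is the fraction of correctly classified test pixels, AA is the mean of the per-class accuracies (recalls), and Cohen's Kappa corrects OA for chance agreement. The function name is hypothetical.

```python
import numpy as np


def classification_metrics(y_true, y_pred):
    """Return (OA, AA, Kappa) from integer class labels."""
    classes = np.unique(np.concatenate([y_true, y_pred]))
    index = {c: k for k, c in enumerate(classes)}
    cm = np.zeros((len(classes), len(classes)))      # rows: true class, cols: predicted
    for t, p in zip(y_true, y_pred):
        cm[index[t], index[p]] += 1
    total = cm.sum()
    oa = np.trace(cm) / total
    present = cm.sum(axis=1) > 0                     # ignore classes absent from y_true
    aa = (np.diag(cm)[present] / cm.sum(axis=1)[present]).mean()
    pe = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / total ** 2   # chance agreement
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa


oa, aa, kappa = classification_metrics(np.array([1, 1, 1, 1, 2, 2]), np.array([1, 1, 1, 2, 2, 2]))
# oa = 5/6, aa = (3/4 + 2/2) / 2 = 0.875, kappa = (5/6 - 0.5) / (1 - 0.5) = 2/3
```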

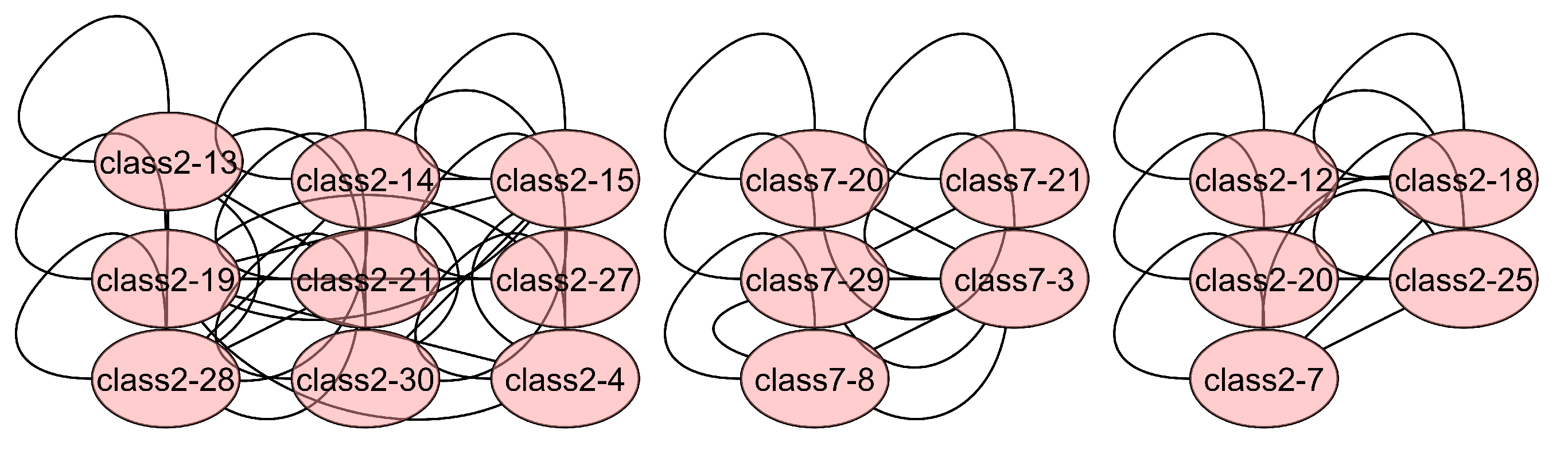

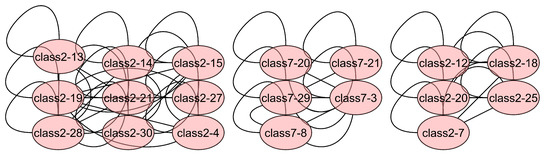

To demonstrate that the adaptive neighbor selection method aggregates pixels that belong to the same class, the Laplacian matrix used for training on the Botswana scene is plotted. This scene was chosen because its matrix is small compared with those of the other HSIs, so the subgraphs belonging to the classes can be plotted. Figure 6 shows a portion of the matrix, in which the subgraphs (pixels) belonging to the same class can be visualized. For example, pixels 20, 21, 29, 3, and 8 belong to class 7, which corresponds to the Hippo grass class.

Figure 6.

Subgraphs of Laplacian matrix from Botswana dataset.

A test is carried out to show that the construction of the adjacency matrix influences the final classification. For this test, the Indian Pines scene is used as a reference, and the adjacency matrix is first built with a fixed number of neighbors, starting with the 4 nearest neighbors of each pixel. The matrix is then built with 8, 12, and 20 neighbors, and finally with the proposed method. Each matrix is used in the GCN classification model with the same training parameters shown in Algorithm 2, and the resulting Overall Accuracy (OA), Average Accuracy (AA), and Kappa score for the different numbers of neighbors are shown in Table 6.
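A fixed-k baseline of the kind compared in this test might look like the sketch below. The text does not state whether "nearest" is measured spatially or spectrally; this sketch assumes Euclidean distance between pixel spectra with RBF edge weights, and symmetrizes the result so that every node keeps at least k edges. The function name is hypothetical.

```python
import numpy as np


def knn_rbf_adjacency(features, k, sigma):
    """Connect each node to its k nearest nodes (Euclidean distance between spectra)."""
    sq = np.sum(features ** 2, axis=1)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2 * features @ features.T, 0.0)
    np.fill_diagonal(dist2, np.inf)                  # a node is not its own neighbor
    nearest = np.argsort(dist2, axis=1)[:, :k]
    adj = np.zeros_like(dist2)
    rows = np.repeat(np.arange(features.shape[0]), k)
    cols = nearest.ravel()
    adj[rows, cols] = np.exp(-dist2[rows, cols] / sigma ** 2)
    return np.maximum(adj, adj.T)                    # symmetrize (undirected graph)


# Hypothetical sweep mirroring the test: k in (4, 8, 12, 20), each graph fed to the same GCN.
```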

Table 6.

Comparison of classification using different K-nearest neighbors in Indian Pines dataset.

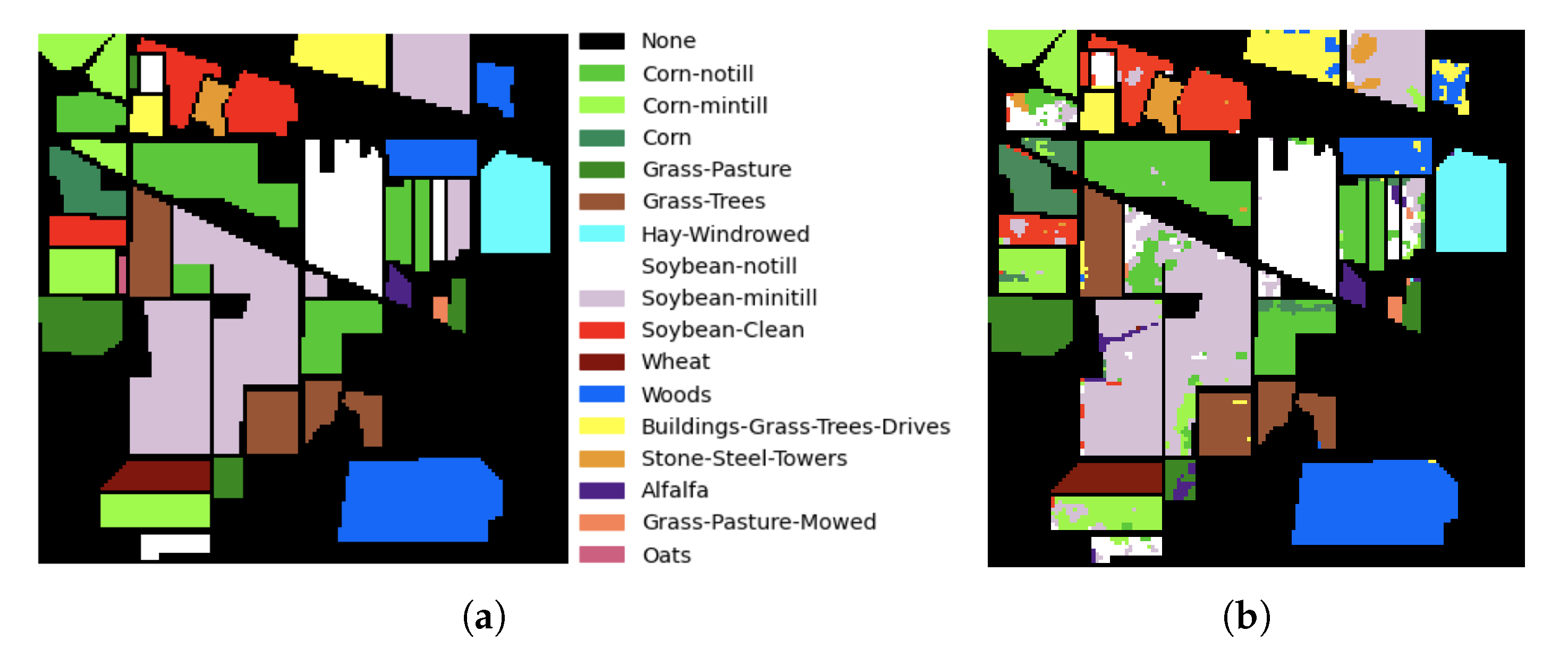

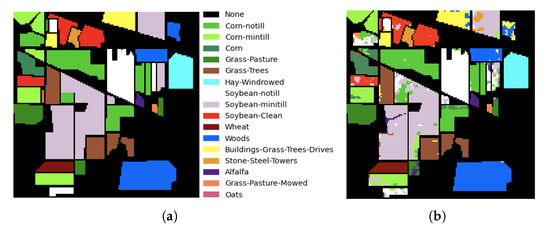

For the construction of the adjacency matrix for the Indian Pines scene, a variance threshold of 0.16 is used for neighbor selection. The classification results are shown in Table 7; perfect classification is obtained for the wheat, stone-steel-towers, alfalfa, grass-pasture-mowed, and oats classes. Figure 7 shows the Indian Pines classification map, in which the classification results for the different pixels can be seen.

Table 7.

Classification performance (%) of various GCN methods for Indian Pines dataset.

Figure 7.

Classification map in Indian Pine dataset. (a) Groundtruth map. (b) AN-GCN.

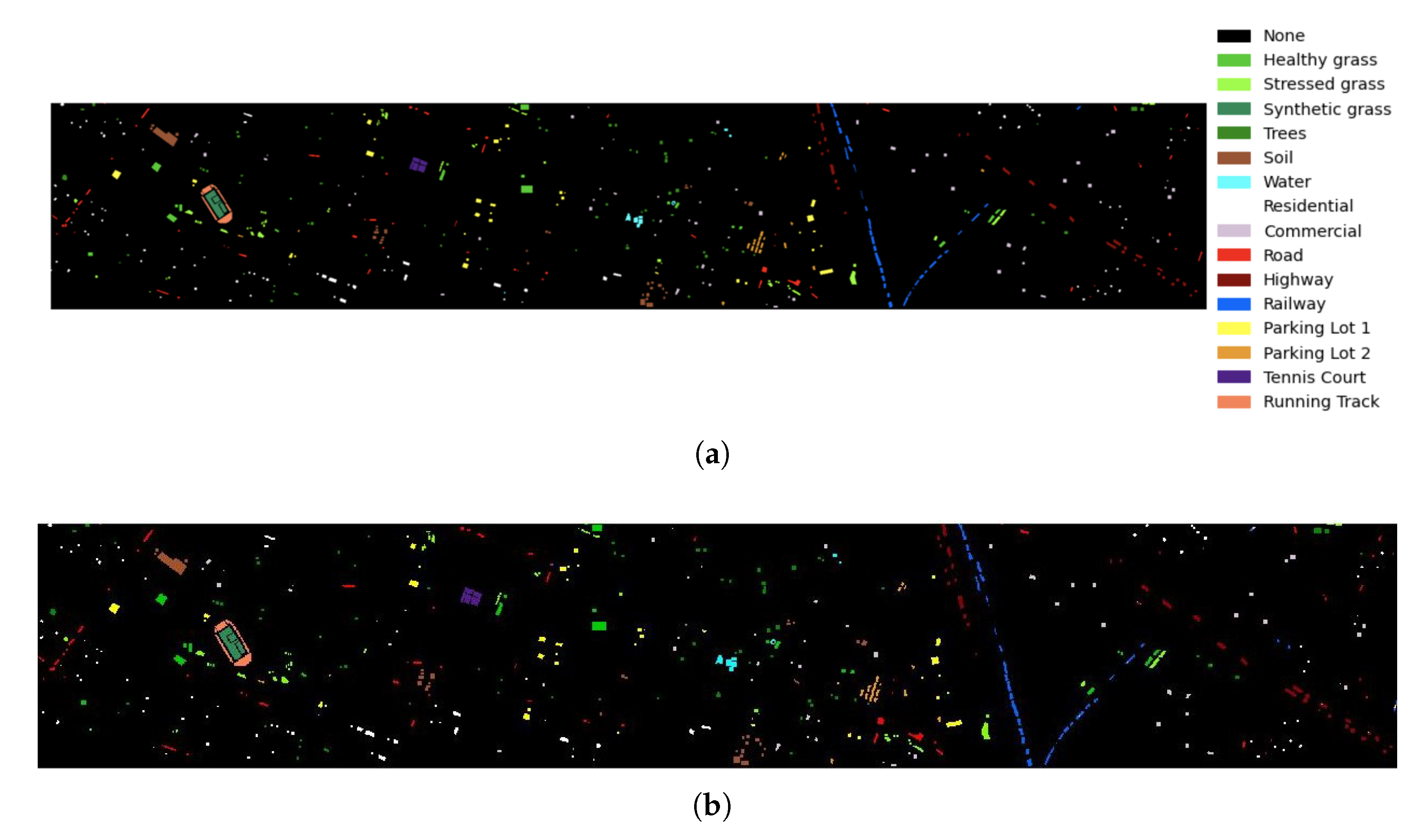

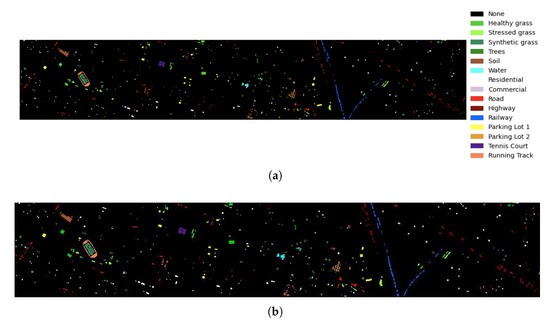

The results obtained for the Houston University dataset are reported in Table 8. AN-GCN obtains the best OA, AA, and Kappa score compared with the other reported GCN methods. Perfect classification is obtained for the healthy grass, synthetic grass, soil, water, tennis court, and running track classes.

Table 8.

Classification performance (%) of various GCN methods for Houston University dataset.

Figure 8 shows the classification map of the AN-GCN method for the Houston University scene: Figure 8a is the ground truth, and Figure 8b is the classification map obtained with the proposed model.

Figure 8.

Classification map for Houston University. (a) Groundtruth map. (b) AN-GCN.

Table 9 shows the training and testing sets used for the Botswana HSI and reports the classification accuracies obtained for this dataset. The adjacency matrix is constructed with a threshold of 0.024, as listed in Table 1. AN-GCN obtains the highest accuracy for 13 of the 14 classes that make up the Botswana scene, and the highest overall values, AA (99.2%), OA (99.11%), and Kappa score (0.9904), are obtained with AN-GCN.

Table 9.

Number of training and testing samples for the different classes and classification performance (%) of various GCN methods for Botswana dataset.

The classification results for the rice seed HSIs are shown in Table 10 and Table 11. Table 10 gives the classification results by treatment for a specific exposure time: the exposure times of 204 and 228 h have the best OA, AA, and Kappa score values, and 240 h has the lowest.

Table 10.

Classification performance for rice HSI image datasets for different temperature treatments.

Table 11.

Classification performance for rice HSI datasets for different exposure times.

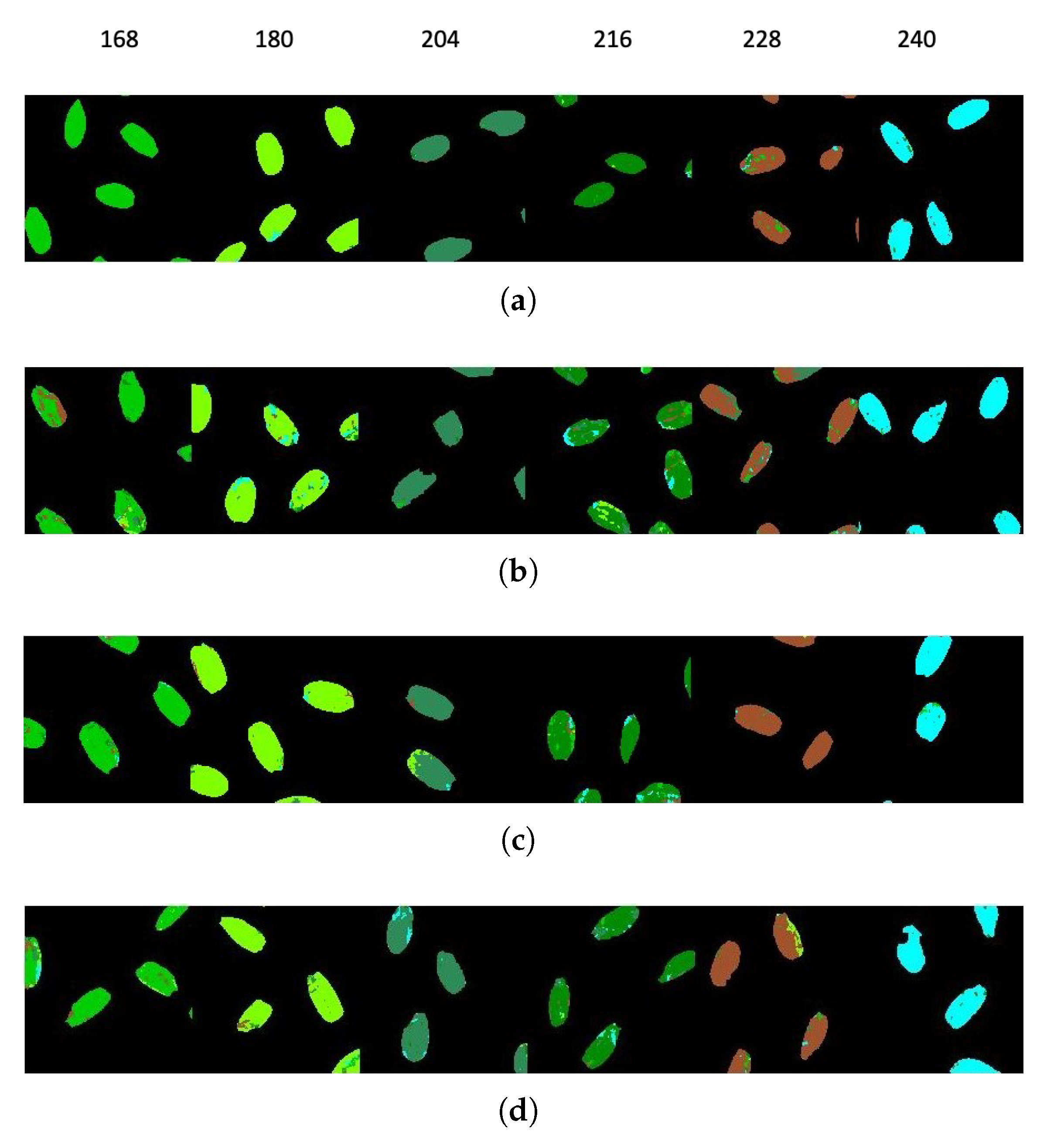

Table 11 corresponds to the classification results for the different exposure times for the same treatment. The HDT treatment obtained the best OA, AA, and Kappa score classification values.

Figure 9 shows the classification map for the different classes at a specific time and Figure 10 shows the classification map for the different exposure times for the same treatment.

Figure 9.

Classification map of HSIs of rice seeds from different hours of exposure for each temperature treatment.

Figure 10.

Classification map for rice HSI datasets for different temperature treatments: (a) HDT, (b) HDNT1, (c) HNT, (d) HDNT2.

4. Discussion

4.1. Indian Pines

The results obtained for the classification of the Indian Pines scene are reported in Table 7. AN-GCN yields a considerable improvement in classification accuracy over the other GCN methods, especially the reported MiniGCN methods [6,29,30], improving the accuracy by more than 13 percent and confirming the importance of adjacency matrix construction. Incorporating neighborhood information adaptively results in better discrimination between classes, especially in the boundary regions between classes. For visual comparison, the Indian Pines ground truth is shown in Figure 7a and the AN-GCN classification map in Figure 7b. Compared with the ground truth, some pixels of the Woods class (blue) are misclassified as Buildings-Grass-Trees-Drives (yellow) because these classes have similar spectral signatures. Overall, however, the AN-GCN classification map closely matches the ground truth, with few misclassification errors.

Table 6 gives the results obtained for the test samples from the Indian Pines scene, showing that adaptive spatial neighbor selection performs better than fixed neighborhood selection. Another way to check that adaptive neighbors improve class discrimination is to plot the training Laplacian matrix. Figure 6 shows a subgraph of the Laplacian matrix for the Botswana dataset. Subgraphs group pixels that belong to the same class, showing that adaptive neighbors aggregate pixels of the same class and prevent pixels of different classes from being grouped together, which improves GCN training and the final HSI classification results.

4.2. Houston University

The training and testing sets and the classification accuracies obtained for the Houston University dataset are reported in Table 8. The results show an increase in classification accuracy with respect to the other GCN-based methods. The lowest per-class accuracy for AN-GCN is 90.88%, for the parking lot 2 class. The Commercial class has the lowest classification accuracy for almost all methods, a behavior also reported in [16]; with AN-GCN, however, this class reaches a higher accuracy of 97.06%. Figure 8a,b shows the ground truth and the classification map obtained with AN-GCN, respectively. Because of the high classification performance of AN-GCN, misclassified pixels are difficult to see, although a few can be identified on the right side of the classification map when it is examined in detail.

4.3. Botswana

The classification results for the Botswana scene are given in Table 9, along with the numbers of training and testing pixels used. AN-GCN achieves higher overall accuracy, average accuracy, and Kappa score than the other methods on this scene. Only a few GCN classification results have been reported for the Botswana dataset; nevertheless, AN-GCN classifies it efficiently.

4.4. Rice Seeds

The GCN architecture performs well in classifying rice seed HSIs from different temperature treatments, as well as in classifying seeds into six subclasses of temperature exposure duration. The GCN adaptively integrates spatial and spectral information in a pixel-based classification, giving good classification performance for all four temperature treatments as well as for the subclasses of exposure duration.

The classification results in Table 11 are lower than those in Table 10. This is expected, since here the different exposure times are taken from the same treatment. Even so, the proposed method discriminates the exposure times within a treatment, showing that the rice seeds change as the exposure time to high temperature increases. The rice seed HSIs from the highest day and night temperatures of 36/32 °C give the lowest accuracy, suggesting that higher temperatures alter the spectral-spatial characteristics of the seeds so drastically that they become hard to discriminate from other treatment classes.

By comparison, the authors of [23] obtained a classification accuracy of 97.5% for only two classes using a 3D CNN trained on 80% of the data, whereas AN-GCN classifies four treatment classes and six exposure-duration classes using only 10% of the data for training, with satisfactory results.

5. Conclusions

This paper presents a new statistics-based neighborhood aggregation method that improves the graph representation of high-dimensional spectral datasets such as hyperspectral images. The method performs better than recent state-of-the-art GCN implementations for hyperspectral image classification. It adapts to the spatial variability of the neighborhood of each pixel and therefore improves discriminability in localized regions. Classification accuracy increases by 4% on the University of Houston and Botswana datasets and by 1% on the Indian Pines dataset. AN-GCN successfully characterizes the intrinsic spatial-spectral properties of rice seeds grown under higher than normal temperatures and classifies hyperspectral images of these seeds with high precision using less than 10% of the data for training, making AN-GCN a promising alternative to CNN-based methods for agricultural applications.

Author Contributions

Conceptualization, J.O., V.M., E.A. and H.W.; methodology, J.O., V.M. and E.A.; software, J.O. and E.A.; validation, J.O. and E.A.; formal analysis, J.O.; investigation, V.M. and J.O.; resources, B.K.D., H.W. and V.M.; data curation, B.K.D., J.O. and E.A.; writing—original draft preparation, J.O. and V.M.; writing—review and editing, V.M.; visualization, J.O. and E.A.; supervision, V.M. and H.W.; project administration, V.M. and H.W.; funding acquisition, H.W. and V.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Science Foundation (NSF), grant number 1736192.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The Indian Pines dataset is available at https://www.ehu.eus/ccwintco/index.php?title=Hyperspectral_Remote_Sensing_Scenes#Indian_Pines (accessed on 20 October 2022).

Acknowledgments

This work was funded and supported by the National Science Foundation (Grant No. 1736192) under a supplement award (H.W. and V.M.). The authors thank doctoral students Sergio David Manzanarez, David Tatis Posada and Victor Diaz Martinez of UPRM for their help with coding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Gogineni, R.; Chaturvedi, A. Hyperspectral image classification. In Processing and Analysis of Hyperspectral Data; IntechOpen: London, UK, 2019.

2. Asif, N.; Sarker, Y.; Chakrabortty, R.; Ryan, M.; Ahamed, M.; Saha, D.; Badal, F.; Das, S.; Ali, M.; Moyeen, S.; et al. Graph Neural Network: A Comprehensive Review on Non-Euclidean Space. IEEE Access 2021, 9, 60588–60606.

3. Miller, B.; Bliss, N.; Wolfe, P. Toward signal processing theory for graphs and non-Euclidean data. In Proceedings of the 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 14–19 March 2010; pp. 5414–5417.

4. Ren, H.; Lu, W.; Xiao, Y.; Chang, X.; Wang, X.; Dong, Z.; Fang, D. Graph convolutional networks in language and vision: A survey. Knowl.-Based Syst. 2022, 251, 109250.

5. Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P. A Comprehensive Survey on Graph Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4–24.

6. Hong, D.; Gao, L.; Yao, J.; Zhang, B.; Plaza, A.; Chanussot, J. Graph Convolutional Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5966–5978.

7. Ma, F.; Gao, F.; Sun, J.; Zhou, H.; Hussain, A. Attention Graph Convolution Network for Image Segmentation in Big SAR Imagery Data. Remote Sens. 2019, 11, 2586.

8. Wan, S.; Gong, C.; Zhong, P.; Du, B.; Zhang, L.; Yang, J. Multi-scale Dynamic Graph Convolutional Network for Hyperspectral Image Classification. arXiv 2019, arXiv:1905.06133.

9. Jia, S.; Jiang, S.; Zhang, S.; Xu, M.; Jia, X. Graph-in-Graph Convolutional Network for Hyperspectral Image Classification. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–15.

10. Qin, A.; Shang, Z.; Tian, J.; Wang, Y.; Zhang, T.; Tang, Y. Spectral–Spatial Graph Convolutional Networks for Semisupervised Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2019, 16, 241–245.

11. Mou, L.; Lu, X.; Li, X.; Zhu, X. Nonlocal Graph Convolutional Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8246–8257.

12. Ma, Z.; Jiang, Z.; Zhang, H. Hyperspectral Image Classification Using Feature Fusion Hypergraph Convolution Neural Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

13. Pu, S.; Wu, Y.; Sun, X.; Sun, X. Hyperspectral Image Classification with Localized Graph Convolutional Filtering. Remote Sens. 2021, 13, 526.

14. Liu, B.; Gao, K.; Yu, A.; Guo, W.; Wang, R.; Zuo, X. Semisupervised graph convolutional network for hyperspectral image classification. J. Appl. Remote Sens. 2020, 14, 026516.

15. Bai, J.; Ding, B.; Xiao, Z.; Jiao, L.; Chen, H.; Regan, A. Hyperspectral Image Classification Based on Deep Attention Graph Convolutional Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

16. Wan, S.; Gong, C.; Zhong, P.; Pan, S.; Li, G.; Yang, J. Hyperspectral Image Classification With Context-Aware Dynamic Graph Convolutional Network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 597–612.

17. Huang, Y.; Zhou, X.; Xi, B.; Li, J.; Kang, J.; Tang, S.; Chen, Z.; Hong, W. Diverse-Region Hyperspectral Image Classification via Superpixelwise Graph Convolution Technique. Remote Sens. 2022, 14, 2907.

18. Li, Z.; Chen, J.; Rahardja, S. Graph construction for hyperspectral data unmixing. In Hyperspectral Imaging in Agriculture, Food and Environment; IntechOpen: London, UK, 2018.

19. Hu, Y.; An, R.; Wang, B.; Xing, F.; Ju, F. Shape adaptive neighborhood information-based semi-supervised learning for hyperspectral image classification. Remote Sens. 2020, 12, 2976.

20. Fabiyi, S.; Vu, H.; Tachtatzis, C.; Murray, P.; Harle, D.; Dao, T.; Andonovic, I.; Ren, J.; Marshall, S. Varietal Classification of Rice Seeds Using RGB and Hyperspectral Images. IEEE Access 2020, 8, 22493–22505.

21. Thu Hong, P.; Thanh Hai, T.; Lan, L.; Hoang, V.; Hai, V.; Nguyen, T. Comparative Study on Vision Based Rice Seed Varieties Identification. In Proceedings of the 2015 Seventh International Conference on Knowledge and Systems Engineering (KSE), Ho Chi Minh City, Vietnam, 8–10 October 2015; pp. 377–382.

22. Liu, Z.; Cheng, F.; Ying, Y.; Rao, X. Identification of rice seed varieties using neural network. J. Zhejiang Univ. Sci. B 2005, 6, 1095–1100.

23. Gao, T.; Chandran, A.; Paul, P.; Walia, H.; Yu, H. HyperSeed: An End-to-End Method to Process Hyperspectral Images of Seeds. Sensors 2021, 21, 8184.

24. Lu, B.; Dao, P.; Liu, J.; He, Y.; Shang, J. Recent advances of hyperspectral imaging technology and applications in agriculture. Remote Sens. 2020, 12, 2659.

25. Johnson, B.; Xie, Z. Unsupervised image segmentation evaluation and refinement using a multi-scale approach. ISPRS J. Photogramm. Remote Sens. 2011, 66, 473–483.

26. Hammond, D.; Vandergheynst, P.; Gribonval, R. Wavelets on graphs via spectral graph theory. Appl. Comput. Harmon. Anal. 2011, 30, 129–150.

27. Kipf, T.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

28. Polder, G.; Heijden, G.; Keizer, L.; Young, I. Calibration and Characterisation of Imaging Spectrographs. J. Near Infrared Spectrosc. 2003, 11, 193–210.

29. Zhang, C.; Wang, J.; Yao, K. Global Random Graph Convolution Network for Hyperspectral Image Classification. Remote Sens. 2021, 13, 2285.

30. Zhang, M.; Luo, H.; Song, W.; Mei, H.; Su, C. Spectral-Spatial Offset Graph Convolutional Networks for Hyperspectral Image Classification. Remote Sens. 2021, 13, 4342.

31. Wan, S.; Pan, S.; Zhong, P.; Chang, X.; Yang, J.; Gong, C. Dual Interactive Graph Convolutional Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

32. Chen, R.; Li, G.; Dai, C. DRGCN: Dual Residual Graph Convolutional Network for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).