Abstract

Facial expression recognition is crucial for understanding human emotions and nonverbal communication. With the growing prevalence of facial recognition technology and its various applications, accurate and efficient facial expression recognition has become a significant research area. However, most previous methods have focused on designing unique deep-learning architectures while overlooking the loss function. This study presents a new loss function that considers inter- and intra-class variations simultaneously and can be applied to CNN architectures for facial expression recognition. More concretely, this loss function reduces the intra-class variations by minimizing the distances between the deep features and their corresponding class centers. It also increases the inter-class variations by maximizing the distances between deep features and their non-corresponding class centers, and the distances between different class centers. Numerical results on several benchmark facial expression databases, namely Cohn-Kanade Plus, Oulu-CASIA, MMI, and FER2013, demonstrate the capability of the proposed loss function compared with existing ones.

1. Introduction

Facial expressions are critical and natural signals of human emotions and intentions. Therefore, various facial expression recognition (FER) methods have been studied and applied in fields such as virtual reality (VR) [1], human-robot interaction (HRI) [2], advanced driver assistant systems (ADAS) [3], and disease prevention support systems (DPSS) [4].

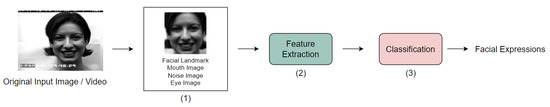

Typical FER methods include three stages: (1) facial-component detection, (2) feature point extraction, and (3) facial expression classification. Facial-component detection extracts a facial region from an input image so that features such as the eyes and nose can be obtained from the detected facial components. More recently, studies have shown that feature extraction can be classified into spatial [5,6] and temporal [7] feature extraction. Generally, the expression classifier and feature extraction are vital for the accuracy of FER. Many classifiers have been developed for facial expression (FE) classification, including the Bayesian Classifier [8], Hidden Markov Model (HMM) [9], Adaboost [10], and Support Vector Machine (SVM) [11]. Figure 1 shows the details of the conventional FER process.

Figure 1.

Pipeline of the FER system.

Recent developments in deep learning have achieved significant advancements in computer vision and image processing [12,13,14,15]. Among the deep learning methods, the Convolutional Neural Network (CNN) has been proven capable of reducing the dependency on analytical models and preprocessing techniques by enabling “end-to-end” direct learning from input images. For example, feature extraction and recognition are jointly learned using deep learning methods [16,17,18].

FER is highly sensitive to intra-class variation caused by age, gender, illuminance, and facial pose [19]. In addition, because FER datasets are limited and small, training a CNN to extract the salient features that represent the facial expressions in a facial image is problematic. Several methods have been explored to overcome this problem [20]. Examples include transfer learning [21], which addresses overfitting on small training datasets, and ensemble architectures [22] and hybrid variant input approaches [23], which extract discriminative features. Notably, most of these approaches concentrated on designing new deep learning architectures and overlooked the loss function. Additionally, the limited training datasets remain a challenge in improving FER performance.

One method of extracting salient features from limited datasets is to change the traditional loss function of the CNN architecture to reduce the intra-class variation and increase the inter-class variation of the deep features, thereby creating discriminative features. Typically, CNN-based FER optimizes the softmax loss function, which penalizes misclassified samples and thereby encourages features of different classes to be distinct. The softmax layer is crucial for ensuring that the learned features of various classes remain distinguishable. However, severe intra-class variation remains challenging. Advanced loss functions can be used to address this problem. Generally, advanced loss functions are divided into two categories: angular-distance-based methods (L-Softmax [24], AM-Softmax [25]) and Euclidean-distance-based methods (contrastive loss [26], triplet loss [27], center loss [28]).

The angular-distance-based losses make the learned features potentially separable with a larger angular/cosine distance. These losses were reformulated from the original softmax loss to encourage inter-class separability and intra-class compactness of the learned features. However, they converge with difficulty when trained on complex datasets such as those used for FER.

The Euclidean-distance-based losses, in turn, embed the input images in the Euclidean space to decrease intra-class variation and increase inter-class variation. Contrastive and triplet losses increased memory load and training time owing to the complex recombination of training samples. Center loss updated the class centers by reducing the distance between the deep features and their corresponding class centers. Nevertheless, it disregarded inter-class variation, thus limiting the FER performance improvement.

To summarize, the existing loss functions for CNN-based FER face the following challenges: (1) difficulty in converging on complex training datasets, (2) high memory consumption and long training time, and (3) disregard of inter-class variation.

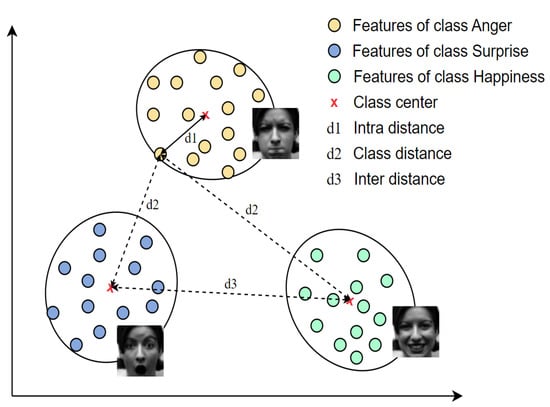

Given the above analysis, this study presents a variant loss that minimizes the distances between the deep features and their corresponding class centers while maximizing the distances between the deep features and their non-corresponding class centers and the distances between different class centers. Figure 2 illustrates the concept of the proposed loss function. The proposed loss function was assessed on four well-known benchmark facial expression databases: the Cohn-Kanade Plus (CK+) [29], Oulu-CASIA [30], MMI [31], and FER2013 [32] databases. The contributions of this study can be summarized as follows:

Figure 2.

Visualization of the proposed loss for one batch of images in the Euclidean space. Supposing that the batch contains the three classes anger, happiness, and surprise, the proposed loss function aims to reduce the intra-class distance and enlarge the inter-class distance and the distance between class centers (best viewed in color).

- A new loss function is proposed to simultaneously consider inter- and intra-class variations, which enables CNN-based FER methods to achieve impressive performance.

- The proposed loss function can be easily optimized with various CNN architectures on diverse databases to learn discriminative deep features for the FER problem.

- Comprehensive experiments on benchmark databases are conducted to show that the auxiliary CNN architectures trained with the proposed loss function perform better than those trained with existing loss functions.

The remainder of this paper is organized into four sections. Section 2 summarizes previous loss functions and auxiliary CNN architectures. Section 3 describes the proposed loss function that simultaneously considers intra- and inter-class variations. Section 4 analyzes the simulation results, and Section 5 states the conclusions.

2. Related Work

2.1. Previous Loss Functions

The softmax loss is good at increasing the inter-class variation but cannot decrease the intra-class variation. To tackle this problem, several loss functions have been introduced to reduce the intra-class variation. Most representatively, L-Softmax loss [24] is an improvement over the conventional softmax loss, enabling inter-class separability and intra-class compactness between learned features. With an adjustable margin value, L-Softmax could define a learning task of flexible difficulty for CNNs. It also mitigated overfitting while leveraging the powerful learning capacity of deep and wide architectures. Nevertheless, when the training dataset contains many different subjects, L-Softmax converges with more difficulty than the softmax loss. AM-Softmax loss [25] applied an additive margin to the target logit of the softmax loss, with features and weights normalized. Although it was intuitively appealing and more interpretable than L-Softmax [24], selecting the margin hyperparameter was challenging.
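As a rough illustration of the additive-margin idea described above, the following sketch computes an AM-Softmax-style loss for a single sample: the feature and class weights are L2-normalized, and a margin is subtracted from the target cosine before scaling. The function names and the default scale `s` and margin `m` are illustrative assumptions, not settings used in this study.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def am_softmax_loss(feature, class_weights, target, s=30.0, m=0.35):
    """Additive-margin softmax loss for one sample (illustrative sketch).

    feature       : deep feature vector
    class_weights : one weight vector per class
    target        : index of the ground-truth class
    s, m          : scale and additive margin (assumed example values)
    """
    f = l2_normalize(feature)
    # Cosine similarity between the normalized feature and each class weight.
    cosines = [sum(a * b for a, b in zip(f, l2_normalize(w)))
               for w in class_weights]
    # Only the target logit is penalized by the margin.
    logits = [s * (c - m) if j == target else s * c
              for j, c in enumerate(cosines)]
    # Numerically stable log-sum-exp.
    top = max(logits)
    log_sum = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum - logits[target]
```

With `m = 0` the function reduces to a softmax loss on normalized, scaled cosines; a larger `m` makes the same sample harder to classify, which is where the sensitivity to the margin hyperparameter comes from.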

Contrastive [26] and triplet losses [27] adopted a pair training technique. In particular, the contrastive loss used positive and negative pairs: its gradients attracted positive pairs and repelled negative ones. Meanwhile, triplet loss reduced the distance between an anchor and a positive sample and increased the distance between the anchor and a negative sample of a different identity. Training with these losses remained challenging because effective training samples had to be selected. Center loss [28] decreased intra-class variations during training by penalizing the distances between deep features and their corresponding class centers. If CNNs are trained with center loss alone, the deep features and class centers might degenerate to zero. In that case, the center loss becomes very small, yet the features are not discriminative. Thus, the center loss should be jointly supervised with the softmax loss during training. However, storing a center for each identity doubled the memory required by the last CNN layer.
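The three Euclidean-distance-based losses discussed above can be sketched in a few lines. This is a minimal illustration in their commonly stated forms; the function names and margin values are assumptions chosen for the example.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def contrastive_loss(a, b, same_class, margin=1.0):
    """Attract a positive pair; repel a negative pair until it is `margin` apart."""
    d = math.sqrt(sq_dist(a, b))
    if same_class:
        return 0.5 * d ** 2
    return 0.5 * max(0.0, margin - d) ** 2


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Require the anchor to be closer to the positive than to the negative."""
    return max(0.0, sq_dist(anchor, positive) - sq_dist(anchor, negative) + margin)


def center_loss(features, labels, centers):
    """Half the summed squared distance of each feature to its own class center."""
    return 0.5 * sum(sq_dist(f, centers[y]) for f, y in zip(features, labels))
```

Note that `center_loss` only involves each feature's own center, which is why it reduces intra-class variation without acting on inter-class variation.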

Range loss [33] was proposed to effectively use the whole long-tailed dataset in the training procedure. The range loss was optimized jointly with softmax loss as supervisory signals to train CNNs. However, the optimization strategy could be challenging because softmax loss requires uniform distribution among all the classes, and the ability to improve inter-class differences within each mini-batch was restricted. Marginal loss [34] could decrease the intra-class variances and enlarge the inter-class distances by focusing on the marginal samples. The marginal loss, combined with the softmax loss to jointly supervise CNN training, could greatly improve the discriminative capacity of deep features for efficient facial recognition. Even so, limited age variance in the training data could significantly reduce performance when the age gap was large.

According to Table 1, while existing loss functions achieved promising performance, there is still room for improvement. To this end, this study proposes a variant loss that minimizes the distances between the deep features and their corresponding class centers while maximizing the distances between the deep features and their non-corresponding class centers and the distances between different class centers. The proposed loss function is easy to adopt in CNN-based FER methods.

Table 1.

The properties of previous loss functions in deep facial recognition.

2.2. Auxiliary CNN Architectures

Given an input image or feature, classification models predict specific labels. In this study, six popular CNN architectures are trained using various loss functions to evaluate the feasibility of the proposed loss function. First, AlexNet [35] has eight layers comprising five convolutional layers and three fully connected layers combined with dropout techniques. Its simplicity and moderate depth made its training fast.

To improve the classification performance, InceptionNet [36] was designed based on the Inception module, which aggregated four parallel branches: three convolution branches with different kernel sizes (1 × 1, 3 × 3, and 5 × 5) and a max-pooling branch. InceptionNet contained 22 layers, including nine Inception modules stacked on top of each other. This design increased the width of the network and adaptability to various scales.

Deep networks also suffer from the vanishing gradient problem, which impedes accuracy. ResNet [37] addressed this problem by adding skip connections from the input to the output of the convolutional layers. The residual block contained two 3 × 3 convolutional layers, each followed by batch normalization and a ReLU activation function. ResNet-18 was selected for training with the compared loss functions in this study.

DenseNet [38] proposed dense blocks and transition layers. Dense blocks concatenated the output of the previous layer as the input of the next. In this way, a feed-forward nature could be maintained. However, the number of channels increased as layers were concatenated. The transition layers controlled the size of the features through a 1 × 1 convolution. Moreover, the height and width of the features were reduced through an average pooling layer.

Recently, MobileNetV3 [39] has been applied to mobile and embedded devices owing to its lightweight design. It was based on a combination of a hardware-aware network architecture search (NAS) algorithm and the squeeze-and-excitation (SE) module [40]. The block-wise search algorithm (MnasNet [41]) was employed to identify global network structures, and then the layer-wise search algorithm (NetAdapt [42]) was employed to adjust individual layers. MobileNetV3 inserted the SE module to build channel-wise attention. The hard-sigmoid function was used in place of the conventional sigmoid in the SE module for more efficient calculation. In addition, the hard-swish function was adopted instead of ReLU to improve non-linearity.
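For reference, the hard-sigmoid and hard-swish activations mentioned above are piecewise-linear approximations built from ReLU6. A minimal scalar sketch (the function names are chosen here for illustration):

```python
def relu6(x):
    """ReLU clipped to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)


def hard_sigmoid(x):
    """Piecewise-linear approximation of the sigmoid: ReLU6(x + 3) / 6."""
    return relu6(x + 3.0) / 6.0


def hard_swish(x):
    """Piecewise-linear approximation of swish: x * ReLU6(x + 3) / 6."""
    return x * hard_sigmoid(x)
```

Both functions avoid the exponential required by the ordinary sigmoid, which is the source of the efficiency gain on mobile hardware.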

Finally, ResNeSt [43] is an improved version of ResNet. It combined channel-wise attention with multi-path representation into a unified Split-Attention block. These Split-Attention blocks were stacked to follow the concept of residual learning from the ResNet model [37]. This architecture enhanced learned feature representations for multiple high-level vision tasks, including object detection, image classification, and semantic segmentation. Moreover, it was reported that ResNeSt enabled the acceleration of training and was computationally efficient.

3. Proposed Method

As mentioned previously, a variant loss is proposed to minimize the distance between the deep features and their corresponding class centers as well as maximize the distances of deep features with their non-corresponding class centers, and the distances between different class centers. The new loss function is expressed as follows:

In this expression, each training sample i consists of an input facial-expression image and its label; d is the dimension of the deep features. The CNN extracts a deep feature from each input image, and each class has a center computed from the deep features that share its label. M is the number of training samples in the batch; N is the number of classes; the indices j, m, and n refer to the j-th, m-th, and n-th class centers of the deep features, respectively. Two tolerance parameters guarantee that the denominators are greater than zero, and two hyperparameters balance the loss terms.

The first term is similar to the center loss and tends to reduce the distance between the deep features and their corresponding class centers. The second and third terms tend to increase the distance between the deep features and their non-corresponding class centers and between class centers, respectively. By minimizing the proposed loss function, the intra-class variations of the deep features are reduced, whereas the inter-class variations continue to increase.
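One form that is consistent with the three terms described above can be written as follows. This is a sketch under stated assumptions rather than the exact published expression: the symbols $f(x_i)$ (deep feature of the $i$-th image), $c_k$ (center of class $k$), $\alpha$, $\beta$ (balancing hyperparameters), and $\delta_1$, $\delta_2$ (tolerance parameters) are notation assumed here, and the reciprocal form of the second and third terms is inferred from the remark that the tolerance parameters keep the denominators positive.

```latex
L_{v} = \frac{1}{2}\sum_{i=1}^{M}\left\lVert f(x_i) - c_{y_i}\right\rVert_2^2
      + \alpha \sum_{i=1}^{M}\sum_{\substack{j=1 \\ j \neq y_i}}^{N}
        \frac{1}{\left\lVert f(x_i) - c_j\right\rVert_2^2 + \delta_1}
      + \beta \sum_{m=1}^{N}\sum_{\substack{n=1 \\ n \neq m}}^{N}
        \frac{1}{\left\lVert c_m - c_n\right\rVert_2^2 + \delta_2}
```

Under this form, minimizing the first term pulls features toward their own centers, while minimizing the reciprocal terms pushes features away from other centers and pushes the centers apart.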

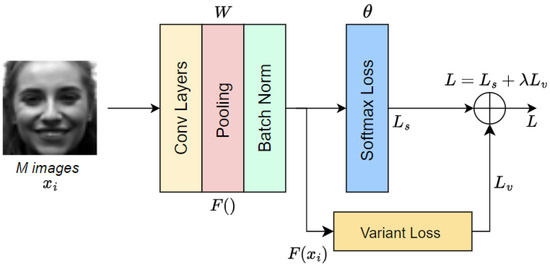

The softmax loss is good at increasing the inter-class variation, and it is tractable and easy to optimize. Therefore, the proposed loss function is applied to the batch data in each iteration and trained jointly with the softmax loss. In addition, the most powerful networks tend to combine specific loss functions such that the supervision signals are more successfully backpropagated, mitigating the training difficulty and improving the robustness of network training [44]. In our study, the overall loss function for training the CNN is computed as the weighted sum of the softmax and variant losses. In short, the overall loss function is expressed as follows:

where is a hyperparameter used for balancing the softmax and the variant losses. The overall system of the CNNs using the proposed loss is illustrated in Figure 3.
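The weighted combination described above can be sketched in plain Python. The variant-loss form used here (a center-loss term plus two reciprocal-distance terms) is an assumption inferred from the textual description, and all names and default values (`lam`, `alpha`, `beta`, `delta1`, `delta2`) are placeholders rather than the study's notation.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def softmax_loss(logits, label):
    """Cross-entropy of a softmax over `logits` for one sample."""
    top = max(logits)
    return top + math.log(sum(math.exp(z - top) for z in logits)) - logits[label]


def variant_loss(features, labels, centers,
                 alpha=0.4, beta=0.6, delta1=1e-3, delta2=1e-3):
    """Assumed form: intra-class term + two reciprocal inter-class terms."""
    # Term 1: distance of each feature to its own class center.
    intra = 0.5 * sum(sq_dist(f, centers[y]) for f, y in zip(features, labels))
    # Term 2: reciprocal distance of each feature to every other center.
    inter = sum(1.0 / (sq_dist(f, c) + delta1)
                for f, y in zip(features, labels)
                for j, c in enumerate(centers) if j != y)
    # Term 3: reciprocal distance between every ordered pair of centers.
    between = sum(1.0 / (sq_dist(cm, cn) + delta2)
                  for m, cm in enumerate(centers)
                  for n, cn in enumerate(centers) if n != m)
    return intra + alpha * inter + beta * between


def overall_loss(logits_batch, features, labels, centers, lam=0.001):
    """Softmax loss over the batch plus the weighted variant loss."""
    ce = sum(softmax_loss(z, y) for z, y in zip(logits_batch, labels))
    return ce + lam * variant_loss(features, labels, centers)
```

In this sketch the variant loss is small when features sit on well-separated centers and grows when features drift from their centers or the centers move closer together.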

Figure 3.

Overall system CNNs using the proposed loss function.

In this method, the network parameters include CNN parameters W and the softmax loss parameters are updated in mini-batches. Only the gradient of is needed to update the softmax loss parameters because the does not affect it. The gradient of variant loss is used to update W. The gradient of with respect to the is calculated as follows:

In addition, the class center is calculated by averaging the features in the same class and updating in each iteration. The centers are updated as follows:

where is the learning rate of class centers.

The update of the k-th class center can be computed as a derivative of the variant loss with respect to the class center :

where and are defined as

CNNs can be trained utilizing standard stochastic gradient descent (SGD) [45]. The hyperparameters of CNNs contain a batch size M, the number of training iterations T, the learning rates of the weight parameter , the learning rates of the class centers , and balanced terms of the loss function , , . First, the parameters of the CNNs are initialized W, the softmax loss parameters , and class center . In each iteration, M training images are passed into the CNNs to obtain the output of the last fully-connected layer in each batch. The overall loss of the model and derivative of the loss functions with respect to the output of the last fully-connected layers are calculated to update the parameters of the CNNs. is independent of the variant loss; therefore, only the softmax loss is considered. Furthermore, the gradient of variant loss is used to update W. The update process of W and are separated with different derivatives. Finally, the derivative of the variant loss with respect to class center is calculated to update the class center with the learning rate of the class centers . The training process for CNNs with proposed loss is interpreted in Algorithm 1.

| Algorithm 1 Training process for CNNs with proposed loss |

Input: Training images , batch size M, number of training iterations T, learning rates of weight parameter , learning rate of class centers , hyper-parameters , , . Initialization: the CNNs parameters W, the softmax loss parameters , the class centers , the iteration t = 0.

End of the algorithm: The CNNs parameters W, the softmax loss parameters |
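The class-center update step of the training process can be illustrated as plain gradient descent on the centers. The sketch below assumes a variant loss made of a center-loss term plus two reciprocal-distance terms (an assumption inferred from the description of the three terms) and derives the gradient with respect to one center analytically; `gamma`, `alpha`, `beta`, `delta1`, and `delta2` are placeholder names for the center learning rate, balancing hyperparameters, and tolerance parameters.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def variant_loss(features, labels, centers, alpha, beta, delta1, delta2):
    """Assumed loss form: intra-class term + two reciprocal inter-class terms."""
    intra = 0.5 * sum(sq_dist(f, centers[y]) for f, y in zip(features, labels))
    inter = sum(1.0 / (sq_dist(f, c) + delta1)
                for f, y in zip(features, labels)
                for j, c in enumerate(centers) if j != y)
    between = sum(1.0 / (sq_dist(cm, cn) + delta2)
                  for m, cm in enumerate(centers)
                  for n, cn in enumerate(centers) if n != m)
    return intra + alpha * inter + beta * between


def center_gradient(k, features, labels, centers, alpha, beta, delta1, delta2):
    """Gradient of the assumed variant loss with respect to the k-th center."""
    ck = centers[k]
    grad = [0.0] * len(ck)
    for f, y in zip(features, labels):
        if y == k:
            scale = 1.0  # descent pulls the center toward its own features
        else:
            # derivative of alpha / (||f - ck||^2 + delta1)
            scale = -2.0 * alpha / (sq_dist(f, ck) + delta1) ** 2
        for t in range(len(ck)):
            grad[t] += scale * (ck[t] - f[t])
    for n, cn in enumerate(centers):
        if n != k:
            # ck appears in both ordered pairs (k, n) and (n, k), hence 4 = 2 * 2
            scale = -4.0 * beta / (sq_dist(ck, cn) + delta2) ** 2
            for t in range(len(ck)):
                grad[t] += scale * (ck[t] - cn[t])
    return grad


def update_centers(features, labels, centers, gamma, **hp):
    """One gradient-descent step on all class centers with learning rate gamma."""
    grads = [center_gradient(k, features, labels, centers, **hp)
             for k in range(len(centers))]
    return [[c - gamma * g for c, g in zip(ck, gk)]
            for ck, gk in zip(centers, grads)]
```

In a full training loop, this step would follow the update of the CNN and softmax parameters within each mini-batch iteration.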

4. Experiments

4.1. Experimental Setup

The performance of the proposed method was evaluated on four benchmark facial expression databases: three from a laboratory environment, namely, Cohn-Kanade Plus (CK+) [29], Oulu-CASIA [30], and MMI [31]; and one from a wild environment, FER2013 [32]. A 10-fold cross-validation strategy was employed for model evaluation on the small and imbalanced datasets, namely CK+, MMI, and Oulu-CASIA. The amount of data for training depends on several factors, such as the task’s complexity, the data’s diversity, the desired output, the data quality, and the deep model architecture. In this study, each of these databases was strictly divided into a training set (90%) and a testing set (10%). In contrast, FER2013 is a large-scale dataset, so the training and evaluation processes were conducted on its provided splits. To prevent overfitting issues, we carefully chose the appropriate weight based on the learning process of the model to achieve a satisfactory performance. Several sample images derived from these databases are illustrated in Figure 4. The details of the databases and the number of images for each emotion are presented in Table 2.
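A 10-fold split of this kind can be sketched as follows. Because the experiments are described as subject-independent, the sketch assumes that folds are formed over subject identifiers so that no subject appears in both the training and testing sets of a fold; the function name, the round-robin assignment, and the fixed seed are illustrative choices.

```python
import random


def subject_folds(subject_ids, n_folds=10, seed=0):
    """Split sample indices into folds such that subjects never overlap.

    subject_ids : one subject identifier per image
    returns     : list of (train_indices, test_indices), one pair per fold
    """
    subjects = sorted(set(subject_ids))
    random.Random(seed).shuffle(subjects)
    # Assign whole subjects to folds in round-robin order.
    fold_of = {s: i % n_folds for i, s in enumerate(subjects)}
    folds = []
    for k in range(n_folds):
        test = [i for i, s in enumerate(subject_ids) if fold_of[s] == k]
        train = [i for i, s in enumerate(subject_ids) if fold_of[s] != k]
        folds.append((train, test))
    return folds
```

When every subject contributes a similar number of images, each fold yields roughly a 90%/10% train/test division.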

Figure 4.

Example face images from CK+ (top), Oulu-CASIA (center), and MMI (bottom) databases. The facial expressions from left to right convey anger, contempt, disgust, fear, happiness, sadness, and surprise. Oulu-CASIA and MMI contain no contempt images.

Table 2.

Number of images for each emotion: anger (An), contempt (Co), disgust (Di), fear (Fe), happiness (Ha), sadness (Sa), surprise (Su), neutral (Ne).

To minimize the variations in face scale and in-plane rotation, faces were detected and aligned from the original databases using the OpenCV library with Haar–Cascade detection [46]. The aligned facial images were resized to 64 × 64 pixels. Moreover, intensity equalization was used to enhance the contrast in facial images. Data augmentation was used to overcome the restricted number of training images in the FER problem: the facial images were flipped, and each image and its flipped counterpart were rotated by −15°, −10°, −5°, 5°, 10°, and 15°. The training databases were thus augmented 14 times using the original, flipped, six rotated, and six rotated-and-flipped images. The rotated facial images are shown in Figure 5.
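The 14-fold augmentation can be illustrated with a dependency-free sketch on images stored as nested lists. The nearest-neighbour rotation here is a stand-in chosen to keep the example self-contained; it is an assumption and not necessarily the interpolation used in the experiments, and the function names are hypothetical.

```python
import math

ANGLES = (-15, -10, -5, 5, 10, 15)


def flip_horizontal(img):
    """Mirror an image (list of rows) left to right."""
    return [row[::-1] for row in img]


def rotate(img, degrees):
    """Nearest-neighbour rotation about the image centre; uncovered pixels become 0."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = math.radians(degrees)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Map each output pixel back to its source location.
            sx = cos_t * (x - cx) + sin_t * (y - cy) + cx
            sy = -sin_t * (x - cx) + cos_t * (y - cy) + cy
            ix, iy = int(round(sx)), int(round(sy))
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out


def augment(img):
    """Return the original, flipped, six rotated, and six rotated-and-flipped images."""
    variants = []
    for base in (img, flip_horizontal(img)):
        variants.append(base)
        variants.extend(rotate(base, a) for a in ANGLES)
    return variants
```

Each input image therefore yields 2 × (1 + 6) = 14 training images.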

Figure 5.

Example rotated images from CK+ database. The facial expressions from left to right convey anger, contempt, disgust, fear, happiness, sadness, and surprise. The rotation degrees from top to bottom are −5, −10, −15, 5, 10, 15°.

The proposed loss function was compared with softmax, center [28], range [33], and marginal losses [34] using the same CNN architectures to demonstrate the effectiveness of the proposed loss function. Accuracy is a crucial quantitative metric to evaluate the performance of the proposed method, which can be calculated as follows:
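Assuming the standard definition, accuracy is the number of correctly classified samples divided by the total number of samples. The sketch below computes it from a confusion matrix whose rows are ground-truth labels and whose columns are predictions; the function names are illustrative.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Count samples per (ground-truth row, predicted column) pair."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracy(cm):
    """Overall accuracy: diagonal sum divided by the total count."""
    correct = sum(cm[k][k] for k in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def per_class_accuracy(cm):
    """Fraction of each ground-truth class that was predicted correctly."""
    return [row[k] / sum(row) if sum(row) else 0.0
            for k, row in enumerate(cm)]
```

The per-class values correspond to the diagonal of a row-normalized confusion matrix.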

The experiment was conducted in a subject-independent scenario. The CNN architectures were processed with 64 images in each batch. The training was performed using the standard SGD technique to optimize the loss functions. The hyper-parameter was used to balance the softmax and variant losses. and were utilized to balance among these losses in the variant loss, and controlled the learning rate of the class center . All of these factors affect the performance of our model. In this experiment, the values = 0.001, = 0.4, = 0.6, = = 0.001 were empirically selected for the proposed loss. For the center, marginal, and range losses, was set to 0.001. The detailed specifications of the implemented environment are shown in Table 3.

Table 3.

Configuration information of the experimental environment.

4.2. Experimental Results

(1) Results on Cohn-Kanade Plus (CK+) database: The CK+ is a representative laboratory-controlled database for FER. It comprises 593 image sequences collected from 123 participants. A total of 327 of these image sequences, from 118 subjects, have one of seven emotion labels: anger, contempt, disgust, fear, happiness, sadness, and surprise. Each image sequence starts with a neutral face and ends with the peak emotion. To collect additional data, the last three frames of each sequence were collected and associated with the provided labels. Therefore, a database containing 981 experimental images was constructed. The images were primarily grayscale and digitized to a 640 × 490 or 640 × 480 resolution.

The average recognition accuracy of the methods based on the loss functions and CNN architectures is listed in Table 4. The accuracy of the proposed loss function was superior to that of the others for all six CNN architectures. For the same loss functions, the accuracy of ResNet was the highest, followed by those of MobileNetV3, ResNeSt, InceptionNet, AlexNet, and DenseNet. Overall, the proposed loss produced an average recognition accuracy of 94.89% for the seven expressions using ResNet.

Table 4.

Performance comparison on the CK+ database in terms of the seven expressions.

Table 5 presents the confusion matrix [47] of ResNet optimized using the proposed loss function. The accuracy for the contempt, disgust, happiness, and surprise labels was high. Notably, happiness had the highest accuracy at 99.5%, followed closely by surprise, disgust, and contempt at 98.4%, 97.7%, and 93.4%, respectively. The accuracies for the anger, fear, and sadness labels were lower than those of the other emotions because of their visual similarity.

Table 5.

Confusion matrix of ResNet optimized with the proposed loss on the CK+ database. The labels in the leftmost column and on top represent the ground truth and the prediction results, respectively.

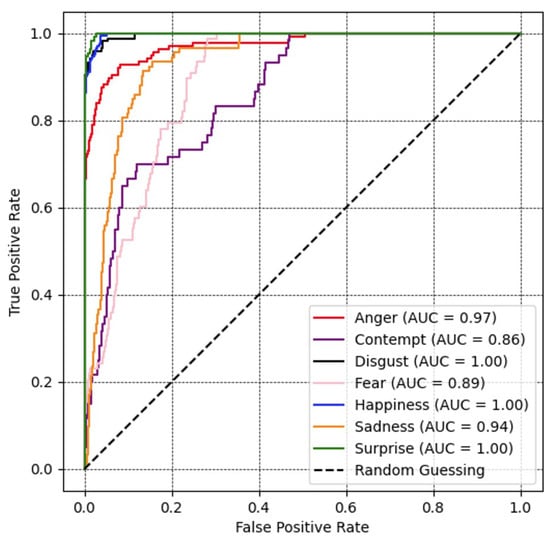

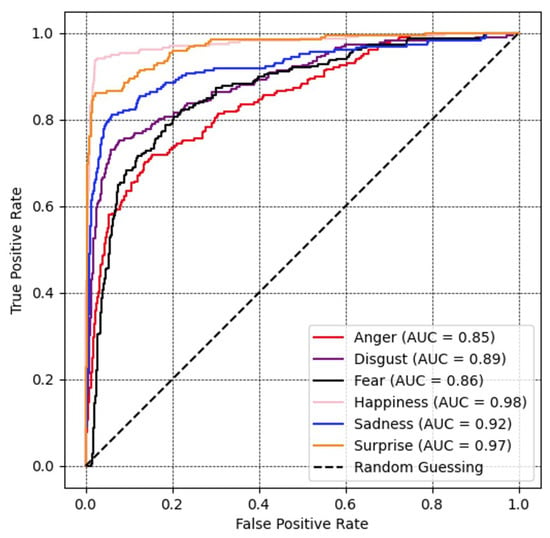

A receiver operating characteristic (ROC) curve [48] and the corresponding area under the curve (AUC) for all expression classes are illustrated in Figure 6. A higher AUC signifies an improved ability of the model to differentiate between classes. The AUC values for the disgust, happiness, and surprise labels reach 100%. The remaining classes also attain relatively high AUC values of 97%, 94%, 89%, and 86% for anger, sadness, fear, and contempt, respectively.
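For a multi-class problem, a per-class AUC is typically computed one-vs-rest. The sketch below uses the rank interpretation of the AUC, namely the probability that a randomly chosen sample of the class receives a higher score than a randomly chosen sample of any other class (ties count as one half). The function name and arguments are illustrative, and the quadratic pairwise loop is only meant for small examples.

```python
def auc_one_vs_rest(scores, labels, positive):
    """AUC for one class against all others via pairwise comparisons.

    scores   : model score for the `positive` class, one per sample
    labels   : ground-truth class of each sample
    positive : class treated as the positive class
    """
    pos = [s for s, y in zip(scores, labels) if y == positive]
    neg = [s for s, y in zip(scores, labels) if y != positive]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

A value of 1.0 means that every sample of the class is ranked above every sample of the other classes, while 0.5 corresponds to a random ranking.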

Figure 6.

Recognition performance portrayed as ROC curves and corresponding area under the curve (AUC) for all expression recognition performances with ResNet optimized with the proposed loss on the CK+ database.

(2) Results on Oulu-CASIA database: The Oulu-CASIA database includes 2880 image sequences obtained from 80 participants using a visible light (VIS) imaging system under normal illumination conditions. Six emotion labels were assigned to each image sequence: anger, disgust, fear, happiness, sadness, and surprise. Like the Cohn-Kanade Plus database, the image sequence started with a neutral face and ended with the peak emotion. For each image sequence, the last three frames were collected as the peak frames of the labeled expression. The imaging hardware was operated at 25 fps with an image resolution of 320 × 240 pixels.

The average recognition accuracy of the methods is listed in Table 6. The performance of the proposed loss function was comparable to that of previous ones. Specifically, the proposed loss function achieved an average recognition accuracy of 77.61% for the six expressions using the ResNet architecture.

Table 6.

Performance comparison on the Oulu-CASIA database in terms of the six expressions.

Table 7 presents the confusion matrix of ResNet trained with the proposed loss function. The accuracy was highest for the happiness and surprise labels, at 92.1% and 84.0%, respectively. The accuracy for anger, disgust, fear, and sadness was lower, at 66.2%, 70.5%, 76.3%, and 76.5%, respectively.

Table 7.

Confusion matrix of ResNet optimized with the proposed loss function on the Oulu-CASIA database. The labels in the leftmost column and on top represent the ground truth and the prediction results, respectively.

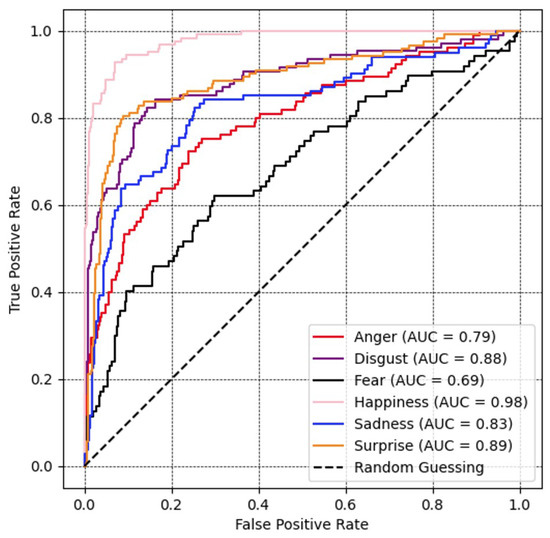

Figure 7 shows the receiver operating characteristic (ROC) curves, which verify the performance of the recognition model. The AUC values for all emotion labels were relatively high. The happiness, surprise, and sadness classes reached an AUC of over 90%, followed by disgust, fear, and anger at 89%, 86%, and 85%, respectively.

Figure 7.

Recognition performance portrayed as ROC curves and corresponding area under the curve (AUC) for all expression recognition performances with ResNet optimized with the proposed loss function on the Oulu-CASIA database.

(3) Results on MMI database: The laboratory-controlled MMI database comprises 312 image sequences collected from 30 participants. A total of 213 image sequences were labeled with six facial expressions: anger, disgust, fear, happiness, sadness, and surprise. Moreover, 208 sequences from 30 participants were captured in frontal view. The spatial resolution was 720 × 576 pixels, and the videos were recorded at 24 fps. Unlike the Cohn-Kanade Plus and Oulu-CASIA databases, the MMI database features image sequences labeled as onset-apex-offset. Therefore, the sequences started with a neutral expression, peaked near the middle, and returned to a neutral expression. The location of the peak expression frame was not provided. Furthermore, the MMI database presented challenging conditions, particularly large interpersonal variations. Three middle frames were chosen as the peak expression frames in each image sequence to conduct a subject-independent cross-validation scenario.

Table 8 lists the average recognition accuracy of the methods. Our loss function outperformed all the other loss functions. Specifically, the proposed loss function achieved an average recognition accuracy of 67.43% for the six expressions using the MobileNetV3 architecture.

Table 8.

Performance comparison on the MMI database in terms of the six expressions.

Table 9 presents the percentages in the confusion matrix of MobileNetV3 optimized with the proposed loss function. The accuracy for all emotions was under 80.0%, except for happiness and surprise, which obtained 89.7% and 81.3%, respectively. This may be due to the number of images in each class. For example, fear had the fewest labeled images and a low accuracy of 31.0%. A similar effect was observed for anger, disgust, and sadness.

Table 9.

Confusion matrix of MobileNetV3 optimized with the proposed loss function on the MMI database. The labels in the leftmost column and on top represent the ground truth and prediction results, respectively.

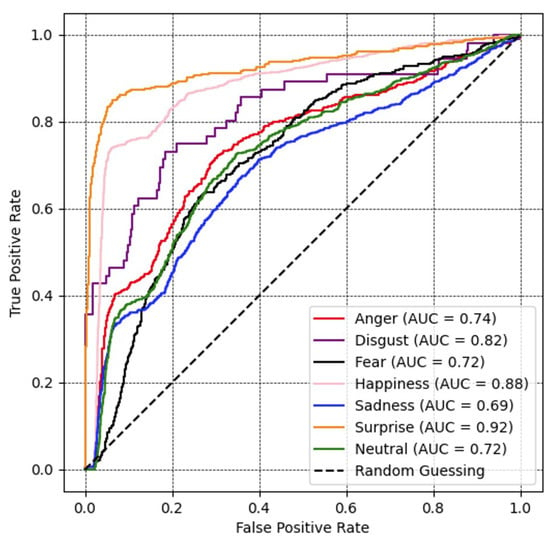

The ROC curves and corresponding AUC values for all facial expression classes are presented in Figure 8. The AUC values of the fear and anger classes are the lowest, at 69% and 79%, respectively. The values for sadness, disgust, surprise, and happiness were higher, at 83%, 88%, 89%, and 98%, respectively.

Figure 8.

Recognition performance portrayed as ROC curves and corresponding area under the curve (AUC) for all expression recognition performances with MobileNetV3 optimized with the proposed loss on the MMI database.

(4) Results on FER2013 database: FER2013 is a large-scale, unconstrained database automatically collected by the Google image search API. It includes 35,887 images with a relatively low resolution of 48 × 48 pixels, which are labeled with one of seven emotion labels: anger, disgust, fear, happiness, sadness, surprise, and neutral. The training set comprises 28,709 examples. The public test set consists of 3589 examples; the remaining 3589 images are used as a private test set.

Table 10 lists the average recognition accuracy of all the methods. The accuracy of the proposed loss function exceeds that of the others for all CNN architectures except AlexNet. The proposed loss function achieved a peak average recognition accuracy of 61.05% for the seven expressions using the ResNeSt architecture.

Table 10.

Performance comparison on the FER2013 database in terms of the seven expressions.

The confusion matrix of ResNeSt, which was trained with the proposed loss function, is presented in Table 11. The happiness percentage was highest at 80.7%, followed by surprise at 77.4%. The others obtained relatively low prediction ratios.

Table 11.

Confusion matrix of ResNeSt optimized with the proposed loss function on the FER2013 database. The labels in the leftmost column and on top represent the ground truth and prediction results, respectively.

Figure 9 depicts the ROC curves, where the AUC for all emotions was over 70%, except for sadness at 69%. The surprise class has the highest AUC value at 92%, followed by happiness, disgust, anger, fear, and neutral at 88%, 82%, 74%, 72%, and 72%, respectively.

Figure 9.

ROC curves and corresponding area under the curve (AUC) for each expression class, obtained with ResNeSt optimized with the proposed loss function on the FER2013 database.
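The per-class AUC values reported for the ROC curves can be illustrated with a one-vs-rest computation, in which each expression class is treated in turn as the positive class and all other classes as negative. The sketch below is an assumption about how such values may be obtained, not the evaluation code of this study; it uses the rank interpretation of the AUC (the probability that a positive sample is scored above a negative one), and the softmax outputs are hypothetical.

```python
def binary_auc(scores, labels):
    """AUC as the fraction of positive/negative pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def one_vs_rest_auc(probabilities, y_true, num_classes):
    """Per-class AUC: class k is positive, every other class is negative."""
    aucs = []
    for k in range(num_classes):
        scores = [row[k] for row in probabilities]
        labels = [1 if y == k else 0 for y in y_true]
        aucs.append(binary_auc(scores, labels))
    return aucs


# Hypothetical softmax outputs for four samples and three classes.
probabilities = [
    [0.7, 0.2, 0.1],
    [0.4, 0.5, 0.1],
    [0.2, 0.3, 0.5],
    [0.5, 0.3, 0.2],
]
y_true = [0, 1, 2, 1]

aucs = one_vs_rest_auc(probabilities, y_true, num_classes=3)
```

The pairwise loop is quadratic in the number of samples and is written for clarity; a sort-based implementation would be preferable for a test set of several thousand images.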

4.3. Training Time

Training time is essential for evaluating the computational complexity of deep learning networks trained with specific loss functions. This section compares the training time of the auxiliary CNN architectures with the existing and proposed loss functions. Notably, all networks were trained on a single GPU. Depending on the dataset and network architecture, the number of iterations was set empirically to achieve convergence with the corresponding loss function. After the data had been pre-processed, we measured the training time as T = T1 − T0, where T0 is the time at the start of the first iteration and T1 is the time at the end of the final iteration. As presented in Table 12, the softmax loss trained the fastest because its mathematical function has only one term, followed closely by the center and proposed loss functions. The range and marginal loss functions required the longest training times among the compared methods because their more complex mathematical functions produced a time-consuming backpropagation process. In summary, only the softmax and center losses were marginally faster than the proposed method, whereas the proposed method achieved superior performance compared with these loss functions. Therefore, the proposed method is computationally efficient and meets practical requirements.
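The measurement T = T1 − T0 described above can be sketched as follows. This is a minimal illustration that assumes a generic training step; `timed_training` and `dummy_train_step` are hypothetical names, and the dummy step merely stands in for one forward and backward pass.

```python
import time


def timed_training(train_step, num_iterations):
    """Run num_iterations training steps and return T = T1 - T0 in seconds."""
    t0 = time.perf_counter()  # T0: start of the first iteration
    for iteration in range(num_iterations):
        train_step(iteration)
    t1 = time.perf_counter()  # T1: end of the final iteration
    return t1 - t0


def dummy_train_step(iteration):
    """Hypothetical stand-in for one forward/backward pass."""
    sum(i * i for i in range(1000))


# Pre-processing would happen before this call, outside the timed region.
elapsed = timed_training(dummy_train_step, num_iterations=100)
print(f"Training time T = {elapsed:.4f} s")
```

When the training step launches GPU work asynchronously, as PyTorch does, the device should be synchronized (for example with `torch.cuda.synchronize()`) before each clock reading; otherwise T1 may be recorded before the final iteration has actually finished.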

Table 12.

Training time (s) comparison of the auxiliary CNN architectures with different loss functions.

To summarize, the computational cost of the loss function in deep learning is critical. The loss function is evaluated at every training iteration, so a computationally expensive loss function slows down the training process and can become a bottleneck, especially for large datasets. In addition, the design of the loss function depends on the intended purpose of the output. Therefore, a good trade-off between computational cost and accuracy is desired.

5. Conclusions

Although a loss function can drive network learning, it has received little attention as a means of improving facial expression recognition (FER) performance. This study presents a new loss function that allows simultaneous consideration of inter- and intra-class variations to be applied to CNN architectures for FER. More specifically, this loss function minimizes the distances between the deep features and their corresponding class centers, and maximizes both the distances between the deep features and their non-corresponding class centers and the distances between different class centers. In addition, the proposed loss function improves the testing accuracy on the benchmark FER databases compared with several other loss functions. Overall, this study demonstrates that the choice of loss function strongly affects the performance of deep learning networks, even when their architecture is unchanged. While the proposed loss function achieved impressive performance, it has not completely solved the unbalanced data problem. To overcome this issue, we plan to apply resampling methods, namely undersampling the majority classes and oversampling the minority classes, and to use cost-sensitive learning to focus on the minority classes. In addition, we would like to extend the experiments to facial expression recognition in real-time conditions with a wider variety of emotions (e.g., embarrassment, adoration, nostalgia, satisfaction, and pride). Currently, the application of the proposed loss function to facial expression recognition for masked faces is under investigation and is expected to achieve promising performance.
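To make the three distance quantities summarized above concrete, the following sketch computes them for a small batch. It is an illustration under stated assumptions only: squared Euclidean distance is assumed, the function names and toy values are hypothetical, and the way the terms are weighted and combined into the final loss is defined by the loss equation of this paper and is not reproduced here.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def distance_terms(features, labels, centers):
    """Return the three distance sums described in the text.

    intra:        feature to its own class center (to be minimized)
    inter_feat:   feature to every other class center (to be maximized)
    inter_center: between each pair of distinct class centers (to be maximized)
    """
    intra = 0.0
    inter_feat = 0.0
    for x, y in zip(features, labels):
        for k, c in enumerate(centers):
            d = squared_distance(x, c)
            if k == y:
                intra += d
            else:
                inter_feat += d
    inter_center = 0.0
    for j in range(len(centers)):
        for k in range(j + 1, len(centers)):
            inter_center += squared_distance(centers[j], centers[k])
    return intra, inter_feat, inter_center


# Hypothetical 2-D deep features, labels, and class centers.
features = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
labels = [0, 1, 1]
centers = [[1.0, 1.0], [0.0, 2.0]]

intra, inter_feat, inter_center = distance_terms(features, labels, centers)
```

A loss of this kind decreases as `intra` shrinks and as `inter_feat` and `inter_center` grow, which is the behavior the conclusion attributes to the proposed loss function.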

Author Contributions

Conceptualization, methodology, T.-D.P.; resources, investigation, analysis, writing-original draft preparation, M.-T.D.; software, Q.-T.H.; validation, project administration, S.L.; supervision, writing-review and editing, M.-C.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Korea Institute for Advancement of Technology (KIAT) grant funded by the Korean Government, Ministry of Trade, Industry and Energy (MOTIE) (HRD Program for Industrial Innovation) under Grant P0017011; in part by the Industrial Technology Challenge Track of MOTIE/Korea Evaluation Institute of Industrial Technology (KEIT) under Grant 20012624; in part by the Research and Development Program of MOTIE; and in part by KEIT under Grant RS-2023-00232192.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jourabloo, A.; De la Torre, F.; Saragih, J.; Wei, S.E.; Lombardi, S.; Wang, T.L.; Belko, D.; Trimble, A.; Badino, H. Robust egocentric photo-realistic facial expression transfer for virtual reality. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 20323–20332.

- Putro, M.D.; Nguyen, D.L.; Jo, K.H. A Fast CPU Real-Time Facial Expression Detector Using Sequential Attention Network for Human–Robot Interaction. IEEE Trans. Ind. Inf. 2022, 18, 7665–7674.

- Xiao, H.; Li, W.; Zeng, G.; Wu, Y.; Xue, J.; Zhang, J.; Li, C.; Guo, G. On-road driver emotion recognition using facial expression. Appl. Sci. 2022, 12, 807.

- Farkhod, A.; Abdusalomov, A.B.; Mukhiddinov, M.; Cho, Y.I. Development of Real-Time Landmark-Based Emotion Recognition CNN for Masked Faces. Sensors 2022, 22, 8704.

- Zhao, G.; Pietikainen, M. Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 915–928.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; IEEE: New York, NY, USA, 2005; Volume 1, pp. 886–893.

- Niese, R.; Al-Hamadi, A.; Farag, A.; Neumann, H.; Michaelis, B. Facial expression recognition based on geometric and optical flow features in colour image sequences. IET Comput. Vis. 2012, 6, 79–89.

- Moghaddam, B.; Jebara, T.; Pentland, A. Bayesian face recognition. Pattern Recognit. 2000, 33, 1771–1782.

- Liu, J.; Zhang, L.; Chen, X.; Niu, J. Facial landmark automatic identification from three dimensional (3D) data by using Hidden Markov Model (HMM). Int. J. Ind. Ergon. 2017, 57, 10–22.

- Chen, L.; Li, M.; Su, W.; Wu, M.; Hirota, K.; Pedrycz, W. Adaptive feature selection-based AdaBoost-KNN with direct optimization for dynamic emotion recognition in human–robot interaction. IEEE Trans. Emerg. Top. Comput. Intell. 2019, 5, 205–213.

- Kotsia, I.; Pitas, I. Facial expression recognition in image sequences using geometric deformation features and support vector machines. IEEE Trans. Image Process. 2006, 16, 172–187.

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6999–7019.

- Duong, M.T.; Hong, M.C. EBSD-Net: Enhancing Brightness and Suppressing Degradation for Low-light Color Image using Deep Networks. In Proceedings of the IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Yeosu, Republic of Korea, 26–28 October 2022; pp. 1–4.

- Hoang, H.A.; Yoo, M. 3ONet: 3D Detector for Occluded Object under Obstructed Conditions. IEEE Sens. J. 2023, 23, 18879–18892.

- Karnati, M.; Seal, A.; Bhattacharjee, D.; Yazidi, A.; Krejcar, O. Understanding deep learning techniques for recognition of human emotions using facial expressions: A comprehensive survey. IEEE Trans. Instrum. Meas. 2023, 72, 1–31.

- Villanueva, M.G.; Zavala, S.R. Deep neural network architecture: Application for facial expression recognition. IEEE Latin Am. Trans. 2020, 18, 1311–1319.

- Ge, H.; Zhu, Z.; Dai, Y.; Wang, B.; Wu, X. Facial expression recognition based on deep learning. Comput. Methods Progr. Biomed. 2022, 215, 106621.

- Lee, D.H.; Yoo, J.H. CNN Learning Strategy for Recognizing Facial Expressions. IEEE Access 2023, 11, 70865–70872.

- Wu, B.F.; Lin, C.H. Adaptive feature mapping for customizing deep learning based facial expression recognition model. IEEE Access 2018, 6, 12451–12461.

- Li, S.; Deng, W. Deep facial expression recognition: A survey. IEEE Trans. Affect. Comput. 2020, 13, 1195–1215.

- Akhand, M.; Roy, S.; Siddique, N.; Kamal, M.A.S.; Shimamura, T. Facial emotion recognition using transfer learning in the deep CNN. Electronics 2021, 10, 1036.

- Renda, A.; Barsacchi, M.; Bechini, A.; Marcelloni, F. Comparing ensemble strategies for deep learning: An application to facial expression recognition. Expert Syst. Appl. 2019, 136, 1–11.

- Liu, C.; Hirota, K.; Ma, J.; Jia, Z.; Dai, Y. Facial expression recognition using hybrid features of pixel and geometry. IEEE Access 2021, 9, 18876–18889.

- Liu, W.; Wen, Y.; Yu, Z.; Yang, M. Large-margin softmax loss for convolutional neural networks. Proc. Int. Conf. Mach. Learn. 2016, 2, 507–516.

- Wang, F.; Cheng, J.; Liu, W.; Liu, H. Additive margin softmax for face verification. IEEE Signal Process. Lett. 2018, 25, 926–930.

- Sun, Y.; Chen, Y.; Wang, X.; Tang, X. Deep learning face representation by joint identification-verification. In Advances in Neural Information Processing Systems; Curran: Red Hook, NY, USA, 2014; Volume 27.

- Schroff, F.; Kalenichenko, D.; Philbin, J. Facenet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Cham, Switzerland, 2016; pp. 499–515.

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended cohn-kanade dataset (ck+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the IEEE Computer Society Conference Computer Vision and Pattern Recognition Workshops, San Francisco, CA, USA, 13–18 June 2010; IEEE: New York, NY, USA, 2010; pp. 94–101.

- Zhao, G.; Huang, X.; Taini, M.; Li, S.Z.; Pietikäinen, M. Facial expression recognition from near-infrared videos. Image Vis. Comput. 2011, 29, 607–619.

- Pantic, M.; Valstar, M.; Rademaker, R.; Maat, L. Web-based database for facial expression analysis. In Proceedings of the IEEE International Conference Multimedia Expo, Amsterdam, The Netherlands, 6 July 2005; IEEE: New York, NY, USA, 2005; pp. 317–321.

- Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in representation learning: A report on three machine learning contests. In Proceedings of the International Conference Neural Information Processing (ICONIP 2013), Daegu, Republic of Korea, 3–7 November 2013; Part III 20. pp. 117–124.

- Zhang, X.; Fang, Z.; Wen, Y.; Li, Z.; Qiao, Y. Range loss for deep face recognition with long-tailed training data. In Proceedings of the IEEE/CVF International Conference Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5409–5418.

- Deng, J.; Zhou, Y.; Zafeiriou, S. Marginal loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 60–68.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25, pp. 1097–1105.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Tan, M.; Chen, B.; Pang, R.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q.V. Mnasnet: Platform-aware neural architecture search for mobile. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2820–2828.

- Yang, T.J.; Howard, A.; Chen, B.; Zhang, X.; Go, A.; Sandler, M.; Sze, V.; Adam, H. Netadapt: Platform-aware neural network adaptation for mobile applications. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 285–300.

- Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Lin, H.; Zhang, Z.; Sun, Y.; He, T.; Mueller, J.; Manmatha, R.; et al. Resnest: Split-attention networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, New Orleans, LA, USA, 19–20 June 2022; pp. 2736–2746.

- Duong, M.T.; Lee, S.; Hong, M.C. DMT-Net: Deep Multiple Networks for Low-light Image Enhancement Based on Retinex Model. IEEE Access 2023, 11, 132147–132161.

- Robbins, H.; Monro, S. A Stochastic Approximation Method. Ann. Math. Stat. 1951, 22, 400–407.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the IEEE/CVF International Conference Computer Vision, Kauai, HI, USA, 8–14 December 2001; IEEE: New York, NY, USA, 2001; Volume 1, pp. 511–518.

- Susmaga, R. Confusion matrix visualization. In Proceedings of the Intelligent Information Processing and Web Mining, Zakopane, Poland, 17–20 May 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 107–116.

- Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).