Abstract

The well-known cloud computing paradigm is being extended by the idea of fog computing, in which computing nodes are placed closer to end users to allow task processing under tighter latency requirements. However, the offloading of tasks (from end devices to either the cloud or the fog nodes) should be designed with the energy consumed by both transmission and computation taken into account. The task allocation procedure can be challenging given the large number of arriving tasks with various computational, communication and delay requirements, and the large number of computing nodes with various communication and computing capabilities. In this paper, we propose an optimal task allocation procedure that minimizes the energy consumed for a set of users connected wirelessly to a network composed of fog nodes (FNs) located at access points (APs) and cloud nodes (CNs). We optimize the assignment of APs and computing nodes to offloaded tasks as well as the operating frequencies of the FNs. The considered problem is formulated as a Mixed-Integer Nonlinear Programming problem. The utilized energy consumption and delay models, as well as their parameters related to both computation and communication costs, reflect the characteristics of real devices. The obtained results show that it is profitable to split the processing of tasks between multiple FNs and the cloud, often choosing different nodes for transmission and computation. The proposed algorithm finds the optimal allocations and outperforms all the considered alternative allocation strategies, resulting in the lowest energy consumption and task rejection rate. Moreover, a heuristic algorithm that decouples the optimization of wireless transmission from computations and wired transmission is proposed. It finds optimal or close-to-optimal solutions for all of the studied scenarios.

1. Introduction

1.1. Motivation

Fog, loosely defined as “a cloud closer to the ground” [1] or “an extension, not a replacement, of the cloud” [2], is a computing and networking paradigm that aims to bring computational, storage and networking resources close to the edge of the network [3]. It provides access to these resources through geographically distributed fog nodes (FNs).

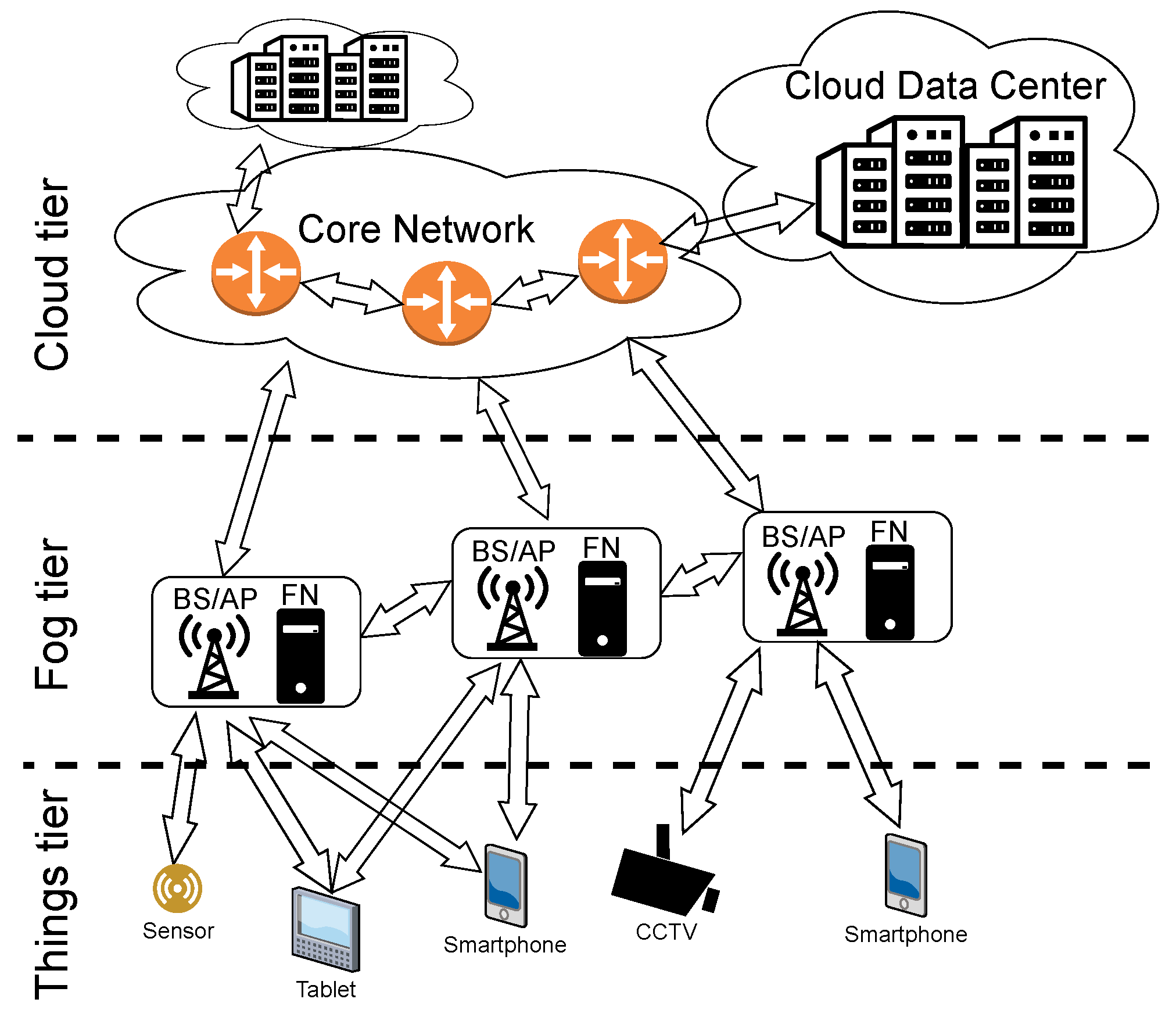

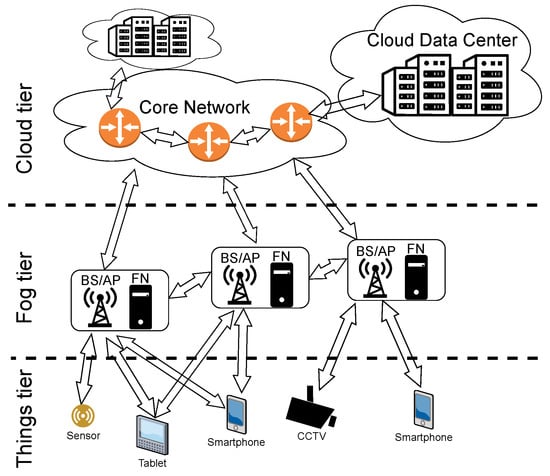

A fog network can be used for offloading computational tasks from end users to other nodes in the network. Energy and time spent on transmission can be saved when information is processed in one of the nearby FNs rather than in a remote cloud data center (DC). However, cloud DCs are expected to be more energy-efficient in terms of computation due to their scale (Google, for example, reports that its cloud services are carbon neutral [4]). How, then, should computational tasks be distributed over the computing nodes? In this work, we take a holistic view of modeling and optimizing the costs related to offloading. Both wired and wireless networks are covered, starting from the end users and going through the FNs and the core network to the cloud. An example of such a network, divided into tiers, is shown in Figure 1.

Figure 1.

Example fog network architecture.

An example scenario where computational resources provided by FN can be used to efficiently process information is controlling and predicting air quality [5]. Multiple sensors with limited computational capacities can send required data to nearby FN.

1.2. Related Work

Previous research on task allocation for energy-efficient fog networks includes costs only in selected parts of these networks. In [6,7,8,9,10], computational requests can be distributed between various combinations of mobile devices (MDs), one or more nearby FNs and a remote cloud. These studies optimize energy consumption either alone [7,10] or in addition to other parameters [6,8,9]. However, they consider energy consumption only from the perspective of the MDs. In contrast, we look at the total energy spent on computation as well as on wireless and wired transmission in the entire network.

Other studies, similarly to ours, examine energy consumption within the fog network but ignore, e.g., costs related to transmission between different FNs (FN-FN) [11,12,13], transmission between MDs and FNs (MD-FN) [11,12,13] or transmission between FNs and the cloud (FN-CN) [13,14]. In some studies, the possibility of FN-FN [14,15] and FN-CN [15] is not considered at all. In [11,12,13,14,16], computational requests are not examined individually, but as aggregated data. In our work, each request is characterized by its own set of parameters such as size, computational complexity and delay requirement. Moreover, no optimization problem related to processing requests is proposed in [12,16].

A summary of related articles in contrast to our work is presented in Table 1. Rows MD-FN, FN-FN and FN-CN represent costs related to transmission between nodes while rows MD, FN and CN represent costs related to computations at given nodes. The notation used is as follows: Optim. means that energy and delay are optimized, Cons. means that these are considered in calculations and Ign. means that these are ignored or assumed negligible. N/A means that in a network modeled in a given work, there is no possibility of such transmission/computation—energy and delay costs are not applicable. E stands for energy and D stands for delay. If both are considered/optimized/ignored this notation is skipped. Sets means the allocation of sets of individual requests (characterized by size, required computations, etc.) is considered. Flow, on the other hand, means that the requests are not considered individually but as a total bitrate, rate of computations, etc., that have to be completed.

Table 1.

Comparison with related works.

This work extends [17] with the following novel aspects: (i) optimizing the wireless connection of end devices to the fog tier; (ii) introducing an additional set of transmission allocation variables to the optimization problem and its solution; (iii) providing an analytical solution to the proposed problem; (iv) examining the effectiveness of new heuristic algorithms with constraints on either wired or wireless transmission.

1.3. Contribution and Work Outline

The main contribution of this work is a complete analysis of the energy required to satisfy a computation request. A sophisticated nonlinear optimization problem is formulated with the objective of minimizing the energy consumed for the computation and transportation of tasks under delay constraints. We propose a solution by dividing the problem into subproblems where optimal values of CPU frequencies, transmission paths and allocations of computational tasks to nodes are found. Unlike similar works which depend on various heuristics, we propose an analytical approach that guarantees that we find the optimal solution.

2. Network Model

Let us present the three-tier network model used in this work. In the bottom tier of the network, there is a set of MDs (e.g., smartphones) with specific computational requests. We assume that serving these tasks requires offloading them to one of the FNs or CNs, which constitute the second and the third tier, respectively. The MDs cannot process these tasks on their own because of energy or computational limitations. The MDs send computational requests using wireless transmission to one of the nearby FNs. As shown in Figure 1, FNs are located at base stations (BSs) or access points (APs), close to the end users. Then, each task can be processed either in one of the FNs out of set or in the cloud tier (set of CN). Unlike the MDs, nodes in the fog and cloud tiers of the network are interconnected with wired communication technology.

The model shown in this work extends the one used in [17]. The notation used for modelling the network is shown in Table 2.

2.1. Computational Requests

Let be a numbered set of all time instances at which MDs offload computational requests. Let be a set of all requests that MDs try to offload at time . The following parameters characterize each computing request :

- MD , which offloads the task (letters in superscript are used throughout this work as upper indices, not exponents, e.g., does not denote m to the power of r);

- Size in bits;

- Arithmetic intensity in FLOP/bit;

- Ratio of the size of the result of the processed task r to the size of the offloaded task r;

- Maximum tolerated delay .

Let us define a binary variable that shows where the request is computed, i.e., equals 1 if is computed at node and 0 otherwise. Similarly, let us define a binary variable that indicates if request r is wirelessly transmitted from MD to FN .

Table 2.

The notation used for modeling the network and defining the optimization problem.

| Symbol | Description |
|---|---|
|  | set of all considered time instances, when one or more computational requests arrive |
|  | set of all Mobile/End Devices |
|  | set of all Fog Nodes |
|  | set of all Cloud Nodes |
|  | set of all computational requests arriving at a given time instance |
|  | size of request |
|  | computational complexity of request |
|  | MD which offloads the request |
|  | output-to-input data size ratio of request |
|  | maximum tolerated delay requirement for request |
|  | time at which request arrives in the network |
|  | energy-per-bit cost of transmitting data between nodes x and y |
|  | number of FLOPs performed per single clock cycle at node |
|  | link bitrate between nodes x and y |
|  | fiberline distance to CN |
|  | a parameter characterizing delay depending on distance |
|  | minimum clock frequency of node |
|  | maximum clock frequency of node |
|  | parameters of the power model of CPU installed in node |
|  | time at which node finishes computing its last task |
|  | variable showing whether request is computed at node |
|  | variable showing whether request is transmitted wirelessly to node |
|  | clock frequency of node |
|  | energy efficiency (FLOPS per Watt) characterizing node |
|  | power consumption related to computations at node |
|  | energy spent on transmission and processing of request |
|  | energy spent in the network on processing request |
|  | energy spent on transmission of request |
|  | energy spent on wireless/wired transmission of request |
|  | energy cost for transmission of request between nodes x and y |
|  | energy cost of processing request at node |
|  | total delay of request |
|  | delay caused by transmitting request |
|  | wireless/wired delay of request |
|  | delay of transmission of request between nodes x and y |
|  | uplink delay of transmitting request to node , provided that |
|  | queuing delay of request |
|  | queuing delay of request at node , provided that |
|  | computational delay caused by processing request |
|  | computational delay caused by processing request at node |

2.2. Energy Consumption

The energy consumption model is divided into two parts: computation (processing of data) and communication (transmission of data). Energy spent on computing request equals:

where is the energy spent on computing request at node and is the computational efficiency of node given in FLOPS per watt [18]. For CN, we assume constant CPU clock frequency and efficiency . For FN, depends on CPU frequency of node , number of FLOP performed within a single clock cycle of CPU [19] and on power consumption of CPU. is obtained by modeling as a polynomial function of using four parameters , , and derived from [20]:

This representation provides the flexibility to cover various models of CPU. The clock frequency must lie within the range of possible frequencies of CPU in node , i.e., .

The energy spent on the transmission of request is the sum of energies resulting from wireless () and wired () communication:

The energy spent on wireless transmission of request equals:

where is the energy required to transmit request from MD to FN and return the calculation result in the reverse direction, while is the energy-per-bit cost of this transmission. is the size (in bits) of results transmitted back to MD .

The energy spent on wired transmission of request equals:

where is the energy required to transmit request between FN and node , while is the energy-per-bit cost of this transmission. Energy-per-bit cost can be derived from [21], where the power consumption of networking equipment increases linearly with load starting from idle power. This relation can also be seen in measurements of core routers [22,23]. There is no wired communication between nodes if the request is processed at the same node to which it is wirelessly transmitted by the MD, i.e., .

The total energy spent on offloading request is given by:
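As a rough illustration of the energy model in this section, the sketch below computes the three energy components for a single request. It rests on assumptions that are not stated explicitly in the text: the CPU power model is taken to be a cubic polynomial in the clock frequency (the text only says a polynomial with four parameters), the same per-bit cost is applied to the uplink and to the returned result, and all names (`Request`, `cpu_power`, `total_energy`, and so on) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Request:
    size_bits: float   # size of the offloaded request, in bits
    intensity: float   # arithmetic intensity, in FLOP/bit
    out_ratio: float   # size of the result divided by the size of the request
    max_delay: float   # maximum tolerated delay, in seconds


def cpu_power(f_hz, p):
    """Assumed cubic power model P(f) = p3*f^3 + p2*f^2 + p1*f + p0, in watts."""
    p3, p2, p1, p0 = p
    return ((p3 * f_hz + p2) * f_hz + p1) * f_hz + p0


def efficiency(f_hz, flop_per_cycle, p):
    """Computational efficiency in FLOPS per watt at clock frequency f_hz."""
    return flop_per_cycle * f_hz / cpu_power(f_hz, p)


def computation_energy(req, eff_flops_per_watt):
    """Energy in joules: required FLOPs divided by FLOPS per watt."""
    return req.size_bits * req.intensity / eff_flops_per_watt


def transmission_energy(req, joule_per_bit):
    """Energy to send the request and return its result over one link."""
    return joule_per_bit * req.size_bits * (1.0 + req.out_ratio)


def total_energy(req, eff, wireless_jpb, wired_jpb, same_node):
    """Computation + wireless + wired energy; no wired hop if the request
    is computed at the node that received it wirelessly."""
    wired = 0.0 if same_node else transmission_energy(req, wired_jpb)
    return (computation_energy(req, eff)
            + transmission_energy(req, wireless_jpb)
            + wired)
```

For example, a 1 Mbit request with 100 FLOP/bit and an output ratio of 0.5, computed at 1 GFLOPS/W, costs 0.1 J for computation under this sketch; the transmission terms then depend on the per-bit costs of the chosen links.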

2.3. Delay

Three components form the delay model: communication, processing and queuing. The delay caused by computing request equals:

where is the time required to compute request at node .

The delay caused by communication can be further subdivided into wireless () and wired () delay:

The delay caused by wireless transmission of request equals:

where is the time required to transmit request between MD and FN , while is the bitrate of this transmission between FN l and MD .

The delay caused by wired transmission of request equals:

where is the time required to transmit request between FN and node . The model for calculation of differs depending on whether node n is an FN or a CN. It is assumed that cloud data centers are located away from the rest of the network (hundreds or even thousands of kilometers away) which requires the distance-related delay to be modeled. The delay caused by transmitting request between (to and from) FN and cloud node is:

where is the link bitrate in the backhaul and backbone network between nodes l and n, while is the fiberline distance to CN . The parameter indicates the rate at which delay increases with distance [24].

For transmission between FNs, we assume the delay caused by the distance between them ( in Equation (11)) is negligible—well below 1 ms as we use a value of for parameter [24]—and therefore we ignore it. Delay caused by communication between FN and for request equals:

The special case is when the request r is received wirelessly at FN n and the same node is used for processing. In this case, no wired communication delay is expected, i.e., .

Even more significant differences can be observed while modeling queuing delays for requests processed in the fog tier and in the cloud tier of the network. This stems from the fact that clouds are assumed to have huge (practically infinite) computational resources with parallel-computing capabilities and there is no need to queue multiple requests served by the CN . They can be processed simultaneously. Meanwhile, if multiple requests are sent to the same FN for processing in a short time span, additional delays may occur due to congestion of computational requests (an arriving request cannot be processed until processing of all the previous requests has been completed). We define a scheduling variable to represent the point in time at which the last request scheduled at FN is finished processing. The queuing delay of request , transmitted wirelessly to node , for computations being carried at node equals:

where is the uplink delay of transmitting request r to node n through FN l. has nonzero values when . In such cases, the request r arrives at node n at time . It is kept in a queue until time , when processing of another request (or requests) ends. For each node , always equals zero—due to the parallel processing powers of the cloud, each request may be computed right away, regardless of how many requests are already being processed. Queuing delay of request is:

Finally, the total delay of processing request equals the sum of delays related to computation, transmission and queuing:
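The delay components described in this section can be sketched as follows. This is only an illustration under assumptions: the transmission delay is taken as the number of bits sent in both directions divided by the link bitrate, the distance term is applied only on the path to a cloud node and uses a round-trip slope in seconds per kilometer, and the function names are hypothetical.

```python
def computation_delay(size_bits, intensity, f_hz, flop_per_cycle):
    """Seconds needed to execute size*intensity FLOPs at f*s FLOP per second."""
    return size_bits * intensity / (f_hz * flop_per_cycle)


def link_delay(size_bits, out_ratio, bitrate_bps):
    """Time to send the request and return the result over one link."""
    return size_bits * (1.0 + out_ratio) / bitrate_bps


def wired_delay(size_bits, out_ratio, bitrate_bps, same_node=False,
                distance_km=0.0, delay_per_km=0.0):
    """Wired delay; the distance term is meant for cloud nodes only."""
    if same_node:  # the request is computed where it was received
        return 0.0
    return (link_delay(size_bits, out_ratio, bitrate_bps)
            + distance_km * delay_per_km)


def total_delay(comp, wireless, wired, queue):
    """Sum of computation, transmission and queuing delays."""
    return comp + wireless + wired + queue
```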

2.4. Updating Scheduling Variables in the Fog

Since no requests are processed when a simulation starts, we set Then, for each , after allocations and are determined, the times are updated for every according to when computation of requests offloaded to the FN are scheduled to finish:
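A minimal sketch of the queuing delay and of the scheduling update for one fog node is given below. It assumes that a request becomes ready at its arrival time plus the uplink delay, and that the node's finish time is then advanced by the waiting time and the computation time; the function names are hypothetical.

```python
def queuing_delay(arrival, uplink_delay, node_free_at, is_cloud):
    """Waiting time at the computing node before processing can start."""
    if is_cloud:                      # cloud nodes process requests in parallel
        return 0.0
    ready = arrival + uplink_delay    # time at which the request reaches the node
    return max(0.0, node_free_at - ready)


def schedule(arrival, uplink_delay, comp_delay, node_free_at):
    """Return (queuing delay, new finish time) for a request placed on an FN."""
    wait = queuing_delay(arrival, uplink_delay, node_free_at, is_cloud=False)
    finish = arrival + uplink_delay + wait + comp_delay
    return wait, finish
```

With the finish time initialized to zero at the start of a simulation, calling `schedule` once per allocated request and storing the returned finish time reproduces the bookkeeping described above.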

3. Optimization Problem

Our defined problem seeks to minimize the total energy cost of offloading all requests that enter the network at time , that is to find:

subject to:

where , and are the optimal values of allocation variables and and CPU clock frequencies , respectively. Constraints (18) guarantee that each request must be processed at exactly one FN or CN. Constraints (19) stipulate that no more than a single request can be processed at a given FN at a given time. Constraints (20) guarantee that for each request, a single FN will be used for wireless connectivity. Constraints (21) guarantee that the total delay must not be greater than the maximum acceptable one. Constraints (22) show the lower and upper bounds of CPU frequency at each FN. Finally, according to Constraints (23) and (24), decision variables and take only binary values.

There exist sets of requests for which the optimization cannot be solved (e.g., there is no feasible allocation of requests so that each request is processed (18) while fulfilling its delay requirement (21)). In such a scenario, we decide to reject requests for which (21) cannot be satisfied rather than ending the optimization without finding a solution (which would translate into rejecting all requests ). The remaining requests (set , where denotes the set of rejected requests) are then subjected to the optimization.

4. Problem Solution

In this section we provide a step-by-step solution to the optimization problem. In short, we first find minimum operating frequencies at which delay requirements of offloaded requests are met. Then, we find optimal operating frequencies which minimize energy consumption spent on computations for given combinations of nodes and requests. At this point combinations which cannot satisfy delay requirements are known. Then, the nodes to which wireless transmission energy costs are the lowest are found. Finally, we assign requests to nodes for computing to minimize the total energy consumption. This linear assignment problem is solved with the Hungarian algorithm [25,26]. Notation used in our solution is summarized in Table 3.

4.1. Auxiliary Variables

Let us define the auxiliary variable as the CPU frequency of node where request is allocated while node is the node to which r is wirelessly transmitted ( = 1). The relation between and is given by . Similarly, determines which node request is wirelessly transmitted to provided that it is allocated to ( = 1) and . Moreover, let be the total delay of request provided that it is computed at node ( = 1) and node be the node to which r is wirelessly transmitted ( = 1).

Table 3.

Additional notation used in the problem solution.

| Symbol | Description |
|---|---|
|  | variable showing whether request is transmitted wirelessly to node , provided that |
|  | clock frequency of node , provided that and |
|  | total delay of , provided that and |
|  | computational delay of , provided that and |
|  | energy spent on processing of request , provided that and |
|  | set of requests rejected due to delay requirements |
|  | set of not rejected requests |

4.2. Finding Optimal Frequencies

Let us rewrite the objective (17) by expanding the total energy into parts caused by computations, wireless transmission (between MD and node l) and wired transmission (between nodes l and n):

Out of these three parts, is the only one that depends on frequencies . The goal of this step is to find , i.e., values of which minimize for all possible values of and . The only constraints that depend on values of are (21) and (22).

Its derivative with respect to equals:

The function is continuous and differentiable for positive (the only discontinuity is at ). Therefore, its extrema in a given interval can only be found at the bounds of this interval or for points at which the derivative equals zero. has a cubic function in the numerator, so it has at most three real roots.

Now, we find for , , by finding the minimum of in the interval . The corresponding minimum energy costs are as follows:

For values , , for which , constraints (21) and (22) cannot both be satisfied, so we set to infinity. For computations in clouds , we do not optimize the frequency (, ). For values , , for which , constraint (21) cannot be satisfied, i.e., we set to infinity.
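The frequency selection step can be illustrated with the sketch below. It assumes a cubic power model P(f) = p3 f^3 + p2 f^2 + p1 f + p0, for which the energy of a fixed workload is proportional to P(f)/f and the numerator of its derivative is the cubic 2 p3 f^3 + p2 f^2 - p0. The candidate frequencies are the bounds of the feasible interval and the stationary points inside it; the roots are located here by a simple grid scan with bisection rather than by a closed-form solution. All names and the example coefficients are hypothetical.

```python
import math


def power(f, p):
    """Assumed cubic CPU power model P(f) = p3*f^3 + p2*f^2 + p1*f + p0."""
    p3, p2, p1, p0 = p
    return ((p3 * f + p2) * f + p1) * f + p0


def stationary_points(p, lo, hi, steps=1000):
    """Roots of 2*p3*f^3 + p2*f^2 - p0 (numerator of d/df [P(f)/f]) in [lo, hi]."""
    p3, p2, _, p0 = p

    def g(f):
        return 2 * p3 * f**3 + p2 * f**2 - p0

    roots = []
    for i in range(steps):
        a = lo + (hi - lo) * i / steps
        b = lo + (hi - lo) * (i + 1) / steps
        if g(a) * g(b) <= 0:             # sign change: refine by bisection
            for _ in range(60):
                mid = 0.5 * (a + b)
                if g(a) * g(mid) <= 0:
                    b = mid
                else:
                    a = mid
            roots.append(0.5 * (a + b))
    return roots


def best_frequency(flops, s, p, f_min, f_max, delay_budget):
    """Return (frequency, energy) minimizing computation energy, or (None, inf)."""
    if delay_budget <= 0:
        return None, math.inf
    lo = max(f_min, flops / (s * delay_budget))   # slowest clock meeting the deadline
    if lo > f_max:
        return None, math.inf                     # delay and frequency bounds conflict

    def energy(f):
        return flops * power(f, p) / (s * f)      # power times computing time

    candidates = [lo, f_max] + stationary_points(p, lo, f_max)
    f_best = min(candidates, key=energy)
    return f_best, energy(f_best)
```

Here `delay_budget` stands for the part of the delay requirement that remains after transmission and queuing delays are subtracted. With the illustrative coefficients `p = (1e-27, 0, 0, 16)`, the unconstrained optimum lies at 2 GHz; a tighter budget pushes the chosen frequency up to the lowest value that still meets the deadline, and an infeasible budget yields an infinite cost.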

Each request for which the following occurs:

cannot be fully processed within their delay requirements regardless of chosen computation/transmission nodes. All such requests are therefore rejected. The remaining optimization is performed over , where is the set of rejected requests.

4.3. Transmission Allocation

The auxiliary matrix can be obtained. For each task and each computing node , the goal is to choose node , which minimizes the sum of energy spent on computations (calculated and optimized in the previous step) and transmission (depending directly on ), i.e., to find:

while satisfying (20) and (24). This is equivalent to finding nodes l, which minimize the expression .
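For a single request and a single computing node, the choice of the receiving FN can be sketched as a minimum over candidate nodes. The dictionaries and node labels below are hypothetical inputs; infinite entries mark combinations that cannot meet the delay requirement.

```python
import math


def best_access_node(comp_energy, wireless_energy, wired_energy):
    """Pick the fog node that minimizes computation + wireless + wired energy.

    Each argument maps a candidate receiving fog node to an energy in joules
    for one fixed request and one fixed computing node.
    """
    totals = {ap: comp_energy[ap] + wireless_energy[ap] + wired_energy[ap]
              for ap in comp_energy}
    ap_best = min(totals, key=totals.get)
    return ap_best, totals[ap_best]


# Illustrative values only: fn3 is closest but cannot meet the deadline.
comp = {"fn1": 0.75, "fn2": 0.75, "fn3": math.inf}
wireless = {"fn1": 0.30, "fn2": 0.10, "fn3": 0.05}
wired = {"fn1": 0.0, "fn2": 0.003, "fn3": 0.003}
choice, cost = best_access_node(comp, wireless, wired)
```

Repeating this for every pair of request and computing node fills the auxiliary cost matrix used in the next step.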

4.4. Computation Allocation

The vector can now be obtained by solving the simplified problem:

subject to (18), (19) and (23). This corresponds to the linear assignment problem [25]: each request is assigned to one and only one node . The cost matrix has rows and columns. The columns representing processing at FNs are used once, as each FN can serve one request at a time, while the columns representing processing at CNs are replicated so that multiple requests can be assigned to the same CN simultaneously. The Hungarian algorithm [25,26] is used to solve this problem.
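The construction of the cost matrix can be sketched as follows. To keep the example dependency-free, the assignment itself is solved by exhaustive enumeration, which is only practical for very small instances and is a stand-in for the Hungarian algorithm used in the paper (in Python, `scipy.optimize.linear_sum_assignment` would be a typical replacement). The function names and the example costs are hypothetical.

```python
import itertools
import math


def build_cost_matrix(fog_cost, cloud_cost):
    """fog_cost[r][j]: energy of request r at fog node j;
    cloud_cost[r][k]: energy of request r at cloud node k.
    Each cloud column is repeated once per request, so a cloud node can take
    every request, while each fog column can be used at most once."""
    n_req = len(fog_cost)
    labels = [("fog", j) for j in range(len(fog_cost[0]))]
    for k in range(len(cloud_cost[0])):
        labels.extend([("cloud", k)] * n_req)
    matrix = []
    for r in range(n_req):
        row = list(fog_cost[r])
        for k in range(len(cloud_cost[0])):
            row.extend([cloud_cost[r][k]] * n_req)
        matrix.append(row)
    return matrix, labels


def assign(matrix):
    """Exhaustive linear assignment: one distinct column per row."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    best_cols, best_cost = None, math.inf
    for cols in itertools.permutations(range(n_cols), n_rows):
        cost = sum(matrix[r][c] for r, c in enumerate(cols))
        if cost < best_cost:
            best_cols, best_cost = cols, cost
    return best_cols, best_cost
```

With three requests, two fog nodes and one cloud node, the matrix has three rows and five columns (two fog columns plus three copies of the cloud column), so all three requests could be sent to the cloud if that were cheapest.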

5. Results

Results obtained from computer (MATLAB) simulations and their setup are presented in this section. While the main goal is to serve all the incoming requests within the allowed latency constraints with minimum energy, requests that cannot be served are assigned a virtually infinite energy cost. This facilitates a fair comparison of various request allocation strategies using only the distribution of energy consumed per offloaded request. Therefore, we choose medians, percentiles and CDFs as evaluation metrics.

For the purpose of computing medians and other percentiles in this section, the energy costs of rejected requests are set to positive infinity. Such an approach (as well as using other fixed values or omitting rejected requests entirely) would have a considerably larger impact on averages. Medians and percentiles avoid the bias that unserved requests introduce into average values.
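The effect of assigning an infinite cost to rejected requests can be seen in a small example. The energy values below are hypothetical, and the nearest-rank percentile is one of several common definitions, chosen here for simplicity.

```python
import math
import statistics


def percentile(values, q):
    """Nearest-rank percentile (0 < q <= 100) of a list of energy costs."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical per-request energy costs in joules; inf marks rejected requests.
costs = [1.2, 0.8, 1.5, math.inf, 1.0, 0.9, 1.1, 1.3, math.inf, 1.4]

median_cost = statistics.median(costs)   # finite: fewer than half are rejected
p75_cost = percentile(costs, 75)         # finite: fewer than 25% are rejected
p90_cost = percentile(costs, 90)         # infinite: more than 10% are rejected
mean_cost = sum(costs) / len(costs)      # infinite as soon as one request is rejected
```

A percentile therefore stays finite as long as the share of rejected requests is below the complementary fraction, which is why a percentile curve on a plot ends at the point where the rejection rate crosses that threshold.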

5.1. Scenario Overview

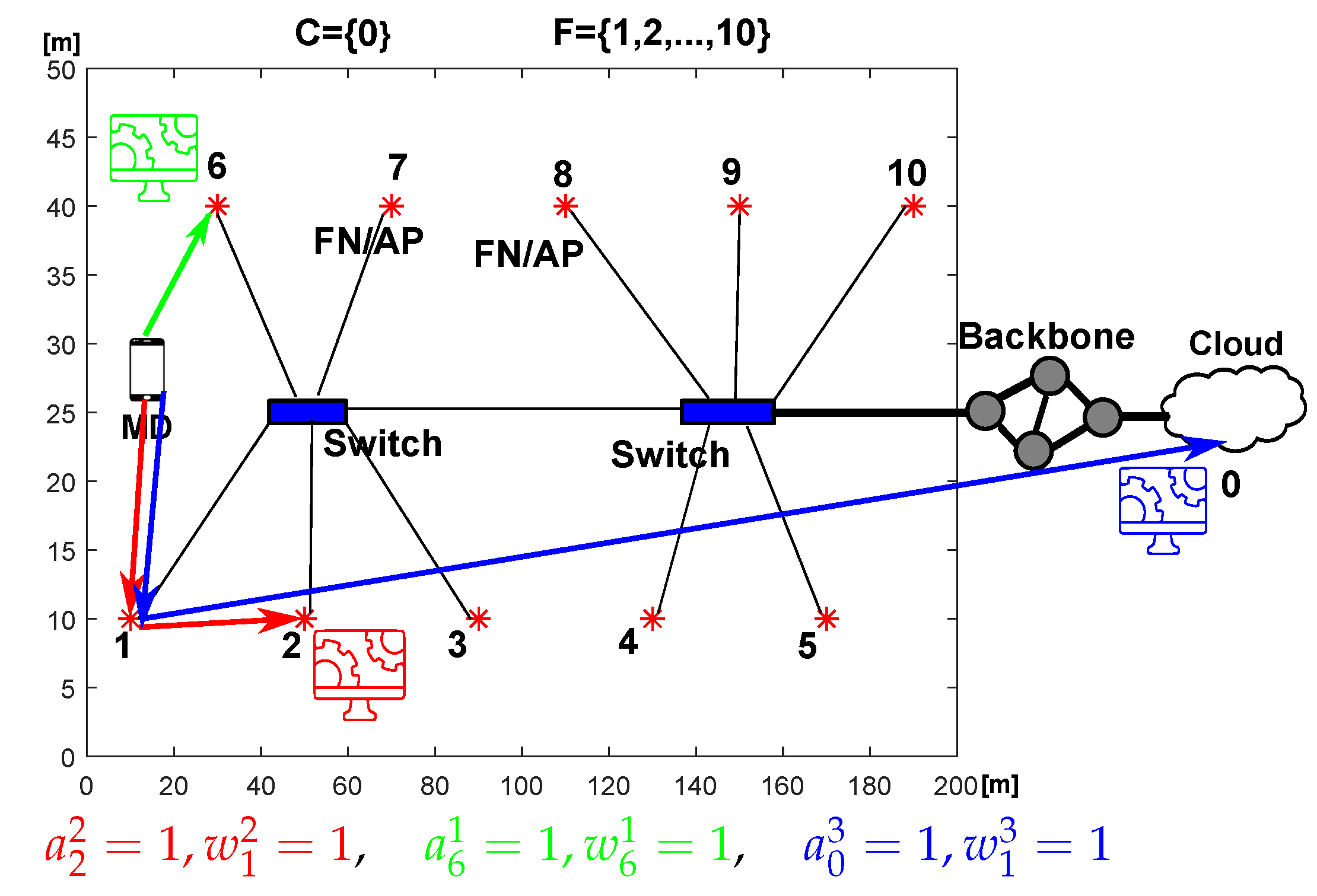

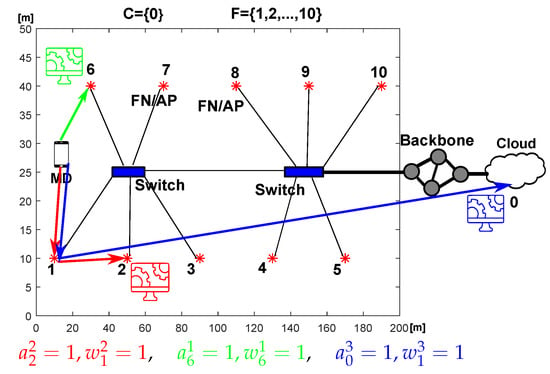

Let us consider a network with 10 FNs and a cloud DC. Simulation parameters are summarized in Table 4. Figure 2 shows a connection diagram between these FNs and the cloud. The examined environment represents a commercial facility such as an airport, where the end users (MDs) want to have their requests processed. Moreover, Figure 2 presents three examples of request allocations: (i) a request computed in the same FN that serves as its AP, (ii) a request computed in another FN and (iii) a request offloaded to the cloud. The corresponding values of binary variables and are presented in Figure 2.

Table 4.

Simulation parameters.

Figure 2.

Diagram of the considered network composed of 10 FNs and a cloud with three examples of request allocations.

Requests—between 5 and 10 new computational requests with uniform distribution at time appear. These requests appear at random locations within the area of the examined network (with uniform distribution in both dimensions). The value is generated as a random delay after the previous time instance . The difference is chosen to be a random variable of exponential distribution with an average value of . The requests have randomly assigned values of their parameters (size, arithmetic intensity, delay requirement) in ranges shown in Table 4 with uniform distribution.

Computations—each FN has computational resources and a frequency–power relationship of a single Intel Core i5-2500K as its CPU. Data relating frequency, voltage and power consumption of i5-2500K are taken from [27] and inserted into Equation (2) adapted from [20] to obtain values for , , and . The parameter s equals 16 for this CPU [19]. The resulting computational efficiency is the highest ( GFLOPS/W) at frequency GHz.

To simulate a scenario with varying computational efficiencies of nodes, we multiply the resulting computational efficiency (2) by random values from the range generated independently for each node .

As for the computational capability of the cloud, its CPUs are parameterized according to the Intel Xeon Phi family commonly used in computer clusters [18,32] run at constant frequency GHz characterized with [19].

Wireless transmission—the power consumption model of the wireless transmission is based on [29] and depends on the data rate and path loss. We use values derived for ASUS USB-N10 WiFi card. The path loss values are determined using the model from Section 3.1 of [31] for a commercial area and frequency closest to (20 dB for frequency ). The wireless link uses a maximum available rate that depends on the minimum sensitivity specified in Section 19.3.19.2 of [30] for a given modulation and coding scheme. It ranges from 6.5 Mbps (BPSK, 1/2) at −82 dBm to 65 Mbps (64-QAM, 5/6) at −64 dBm. The energy-per-bit cost is obtained by dividing the power by the wireless link data rate.
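The rate selection and the resulting energy-per-bit cost can be sketched as a table lookup. Only the first and last rows of the table below are quoted in the text; the intermediate rows are assumed to be the 20 MHz, single-stream entries of the standard and should be verified against [30]. The power value used in the example is hypothetical, as are the function names.

```python
# (minimum sensitivity in dBm, data rate in Mbps).
# First and last rows are from the text; the rest are assumed from the standard.
MCS_TABLE = [(-82, 6.5), (-79, 13.0), (-77, 19.5), (-74, 26.0),
             (-70, 39.0), (-66, 52.0), (-65, 58.5), (-64, 65.0)]


def max_rate_mbps(rx_power_dbm):
    """Highest rate whose sensitivity threshold is met, or None if out of range."""
    feasible = [rate for sens, rate in MCS_TABLE if rx_power_dbm >= sens]
    return max(feasible) if feasible else None


def energy_per_bit(power_w, rx_power_dbm):
    """Interface power divided by the achievable data rate, in joules per bit."""
    rate = max_rate_mbps(rx_power_dbm)
    if rate is None:
        return float("inf")   # no usable modulation and coding scheme
    return power_w / (rate * 1e6)
```

The received power would be obtained from the transmit power and the path loss of the chosen model; a higher path loss lowers the achievable rate and therefore raises both the delay and the energy-per-bit cost.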

Wired transmission—in order to derive energy-per-bit cost of transmitting requests from one node to another (i.e., from to ), we need to add costs induced in all devices through which it flows. For the power consumption of a single networking device, the linear model from [21] is used. It includes idle power and active power that scales with load C (in bits/second) by parameter (in Joules/bit):

where denotes maximum power consumption and denotes maximum load. The energy-per-bit cost of transmitting data is equal to the sum of parameters of all network devices through which the data flows between nodes l and n. In this work, we assume . The FNs are assumed to be interconnected with 1 Gbps Ethernet. The power consumption of Ethernet switches is set according to [28,33]. Each switch can serve up to 6 FNs on the LAN side with 1 Gbps links (star topology) and can be connected to the 10G EPON on the WAN side. The cost-per-bit of transmission through each of these switches equals 2 nJ/bit (82 W at 1 Gbps throughput versus 80 W with no traffic). The configuration can be seen in Figure 2, which shows 10 FNs connected with 2 switches.

For the connection between the fog tier of the network and the cloud, it is assumed that the data flow through multiple nodes. Olbrich et al. [24] use geographically locatable nodes (over 250 nodes around the globe) to derive multiple path characteristics. Their results show that the RTT of a packet is, on average, 1.5 times longer than an estimation based only on fiberline distance (the speed of light in optic fiber m/s, in vacuum m/s). The measured RTT has a slope of s/km. We assign this s/km value to parameter . The Cloud DC is assumed to be located 2000 km away from the rest of the network. It is estimated that the energy-per-bit cost of transmitting data through the backbone network to the Cloud is equal to nJ/bit based on 12 Juniper T1600 routers—each with cost-per-bit equal nJ/bit [12,22] and a 10G EPON gateway with 0.3 nJ/bit cost [34]. While there is other equipment through which the data flow within the core network (e.g., optical amplifiers), the value 12.66 nJ/bit is chosen to represent the whole energy spent on transmission. Therefore, nJ/bit for (2 or 3 depending on the logical distance between l and the switch with the WAN connection).
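The arithmetic behind the wired per-bit costs can be checked with a few lines. The sketch takes the slope of the linear power model as the per-bit cost of a device and sums the costs of the devices on a path; the per-router value is simply the one implied by the totals quoted above. Function and variable names are hypothetical.

```python
def energy_per_bit(p_max_w, p_idle_w, max_load_bps):
    """Slope of the linear power model: joules per transmitted bit."""
    return (p_max_w - p_idle_w) / max_load_bps


def path_cost(device_costs):
    """Per-bit cost of a path: sum over all devices the data passes through."""
    return sum(device_costs)


switch_cost = energy_per_bit(82.0, 80.0, 1e9)        # 2 nJ/bit per Ethernet switch

# Backbone path to the cloud: 12 core routers plus one 10G EPON gateway.
BACKBONE_TOTAL = 12.66e-9
GATEWAY_COST = 0.3e-9
router_cost = (BACKBONE_TOTAL - GATEWAY_COST) / 12   # value implied by the totals

two_switch_path = path_cost([switch_cost, switch_cost])
```

Under these figures, each core router contributes 1.03 nJ/bit, and a request passing through both Ethernet switches between two FNs costs 4 nJ/bit.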

5.2. Baseline/Suboptimal Solutions

To test the effectiveness of the proposed algorithm (Full Optimization, shortened on plots to Full Optim), we compare it with four simpler task allocation methods. A summary of these methods is shown in Table 5.

Table 5.

Comparison of examined algorithms.

Exhaustive Search—all possible variations of allocations are verified. While this baseline approach finds the optimal solution, its running time scales exponentially with the number of requests. The optimal frequencies of CPU are calculated as in Full Optimization.

Cloud Only—all requests are transmitted to and processed in the cloud tier of the network. The optimal transmission allocation is obtained using a simplified version of the Full Optimization.

No Migrate—the nodes in the fog tier and cloud tier of the network cannot transmit tasks between themselves, i.e., the FN to which the request r is sent from the MD is the one that computes it ().

Closest Wireless—in this approach, requests are always transmitted wirelessly to the closest node (the one with the lowest path loss). Then, the rest of the optimization is performed as in Full Optimization. The difference lies mostly in the step described in Equation (33)—in Full Optimization the set of allocation variables is found to minimize total transmission + computation costs, while in Closest Wireless each is found separately, minimizing “only” the wireless transmission costs.

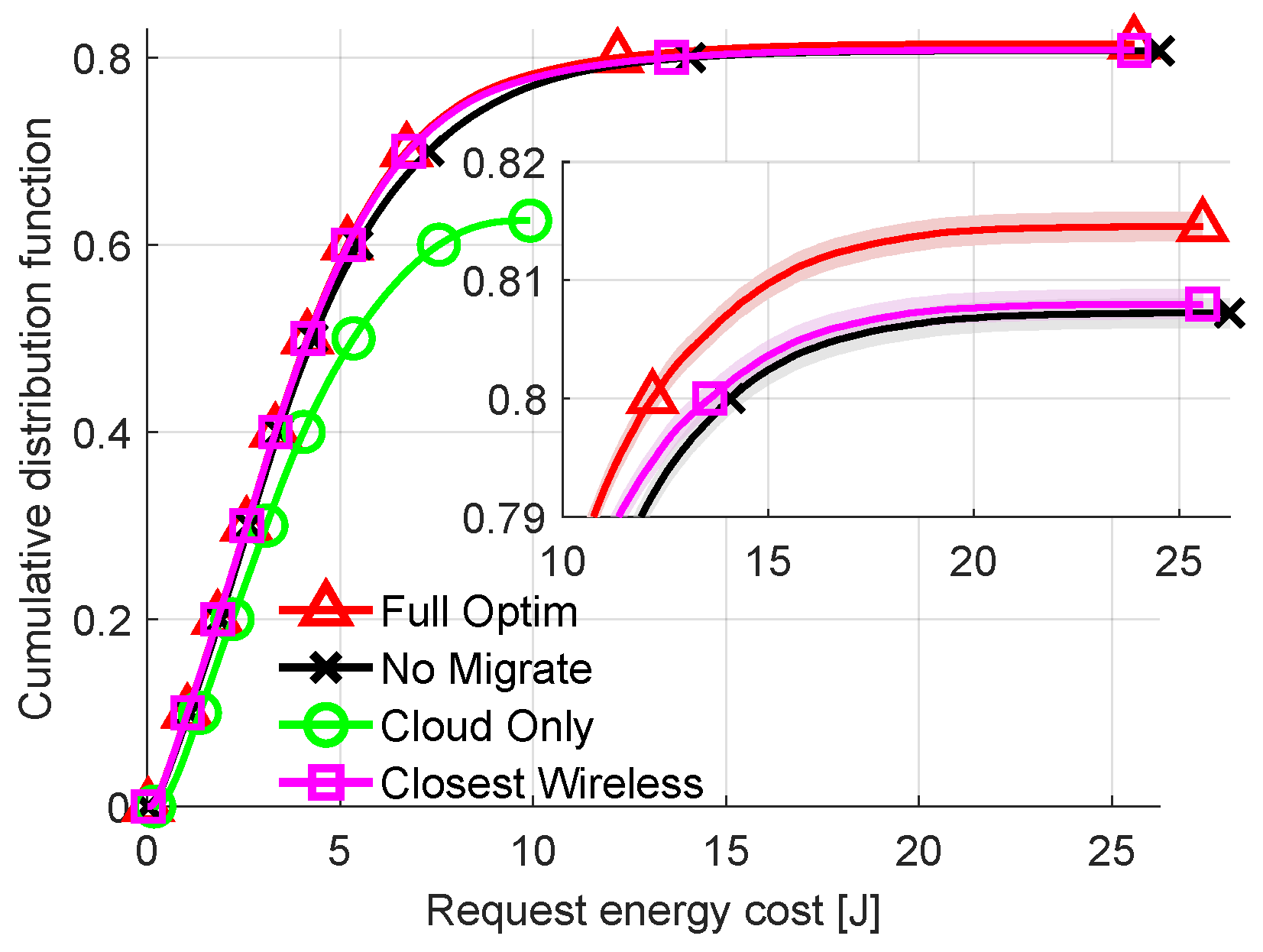

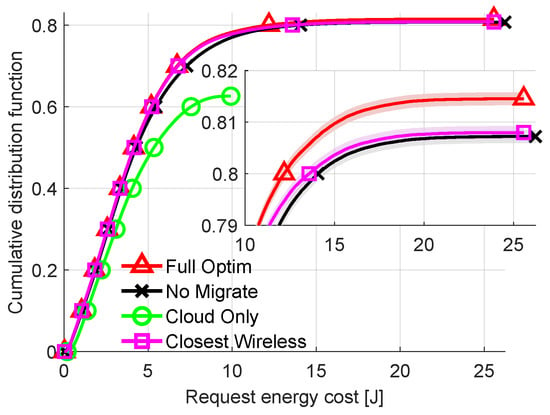

For clarity, not all of these solutions are plotted on every graph in this section. For the vast majority of tested parameter setups, the results of Closest Wireless are indistinguishable (within 0.1%) from the optimal results of Full Optimization. Therefore, they are omitted from all plots except Figure 7, where the difference between the two is visible. Shaded areas around the results for each solution show 95% confidence intervals.

5.3. Comparison with Exhaustive Search and All Possible Allocations

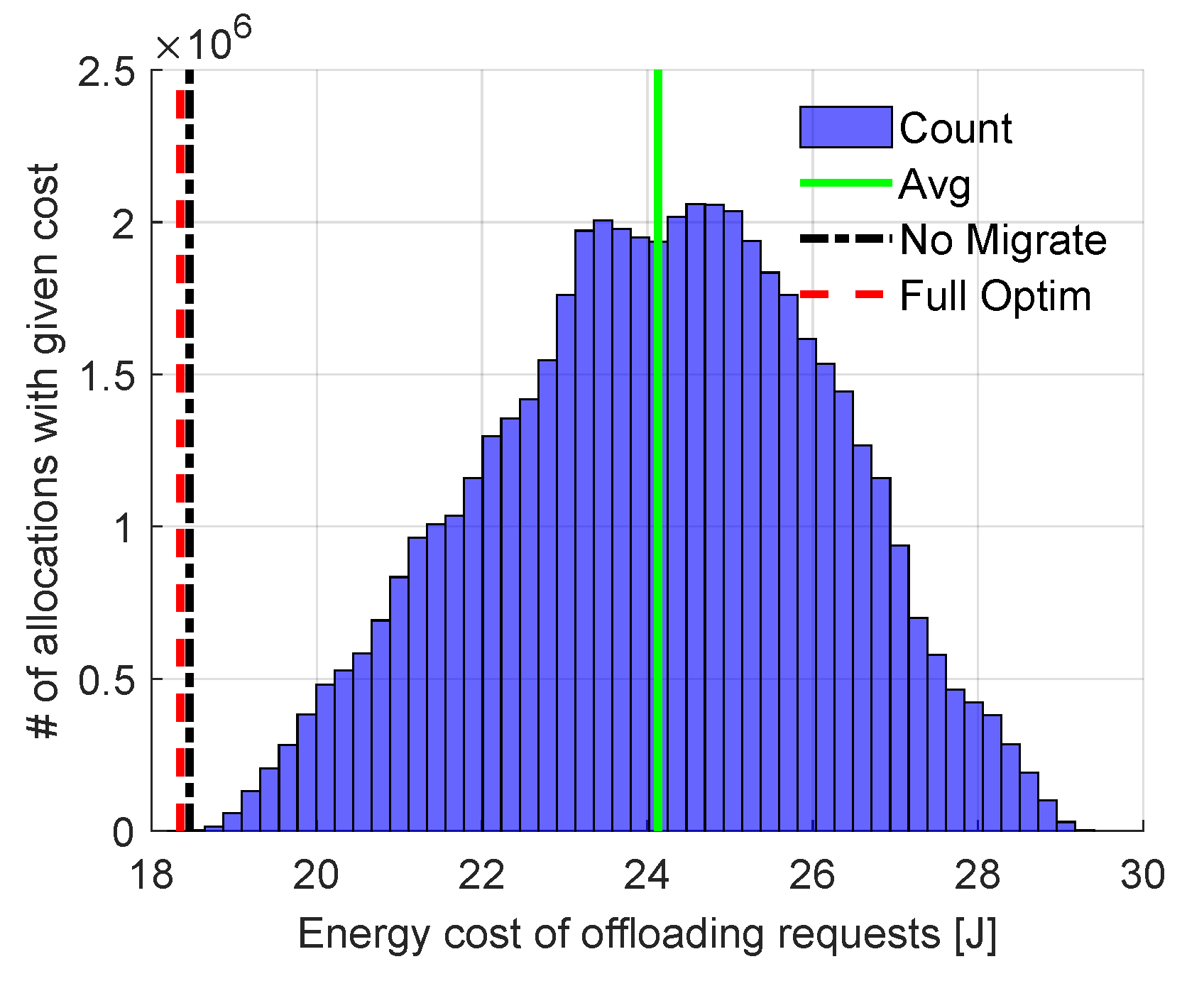

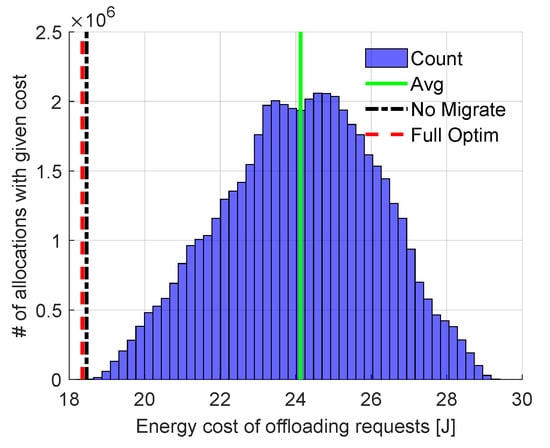

First, let us compare results obtained from our Full Optimization with those resulting from Exhaustive Search to validate the ability of our algorithm to find the minimum total energy cost. A set of four computational requests is considered. The size of this set is limited due to the high computational complexity of Exhaustive Search. These requests have to be allocated among 10 FNs (allocation in the cloud is not considered in this example to highlight the importance of optimization within the fog tier). There are 50,400,000 possible allocations in total (10^4 = 10,000 for transmission times 10 × 9 × 8 × 7 = 5040 for computation), with energy consumption varying from 18.3 J to more than 29.4 J, as presented in Figure 3. The results obtained by Full Optimization (red dashed line) and No Migrate (black dotted–dashed line) are also shown. Full Optimization does indeed find the same solution as Exhaustive Search. The solution found by No Migrate results in a slightly higher energy cost. Still, both solutions provide an energy cost significantly lower than the average cost of all possible allocations. Clearly, an algorithm that assigns requests to nodes randomly would not be efficient in terms of energy cost.
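The size of the search space in this example can be reproduced with a short calculation, assuming that any FN may receive any request wirelessly while each FN computes at most one of the four requests at a time.

```python
import math

n_fog, n_req = 10, 4

transmission_options = n_fog ** n_req           # any FN may receive any request
computation_options = math.perm(n_fog, n_req)   # each FN computes at most one request
total_allocations = transmission_options * computation_options
```

The product grows quickly: already with five requests it exceeds three billion allocations, which is why Exhaustive Search is used only as a small-scale reference.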

Figure 3.

Comparison of our (Full Optim) solution with the No Migrate solution and all possible allocations from exhaustive search (blue bars; average value marked with solid green line).

5.4. Impact of Network Parameters

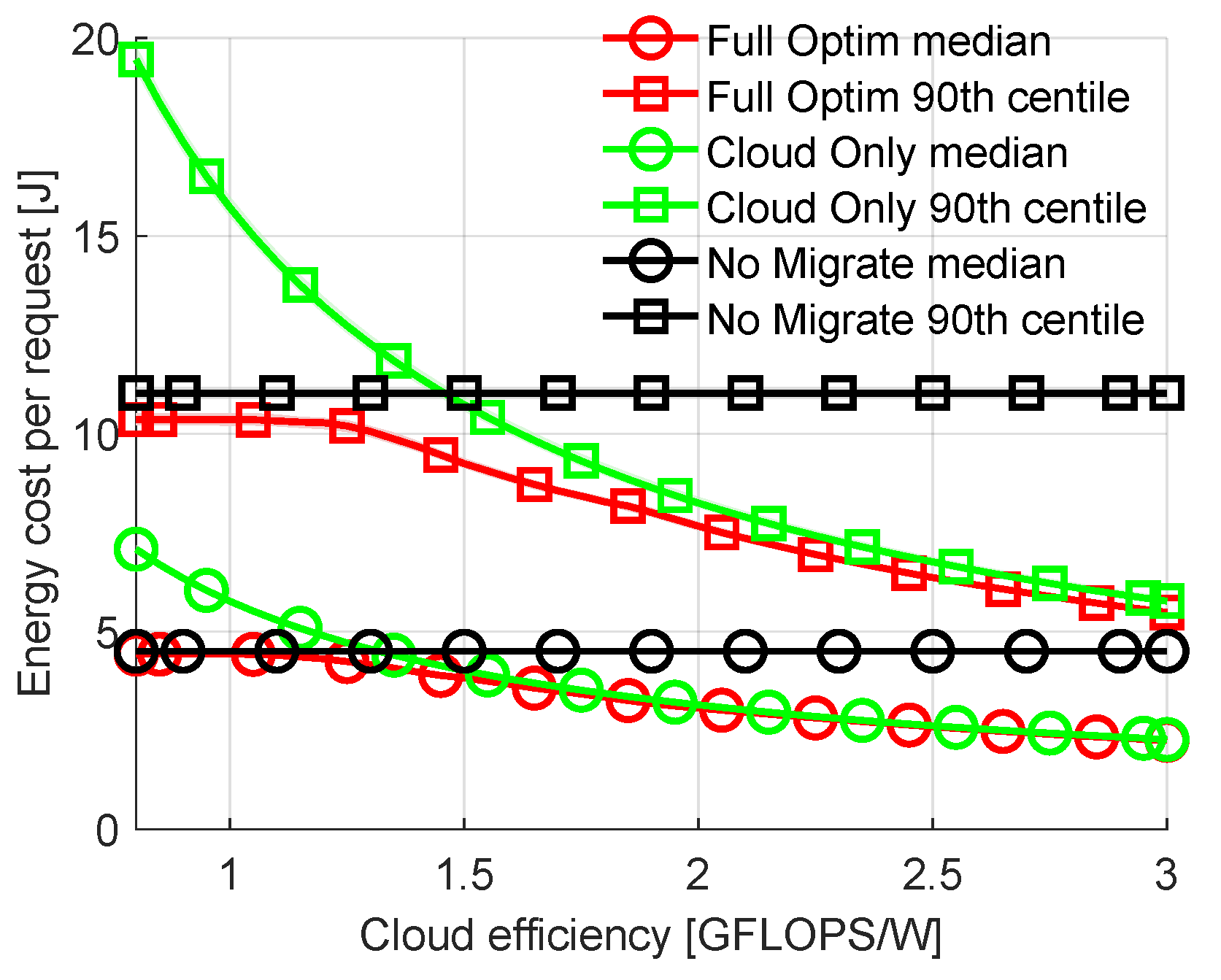

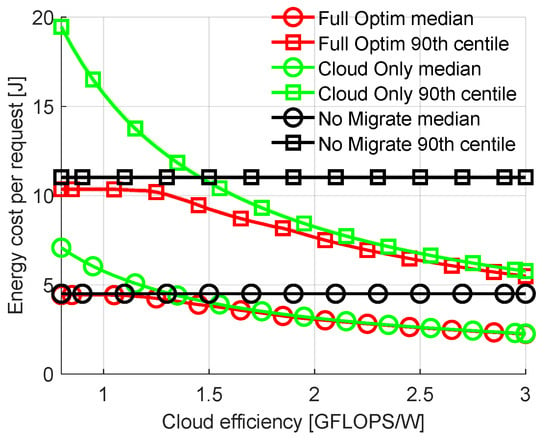

Now let us examine the impact of the computational efficiency of the cloud on energy costs and allocations in the full network. Let us sweep this efficiency from 0.8 to 3.0 GFLOPS/W (the efficiency of the 500 most powerful commercially available computer clusters ranges from 0.19 GFLOPS/W to 39.4 GFLOPS/W, with a median of 4.04 GFLOPS/W [35]). Figure 4 shows the median and the 90th percentile of the total energy costs spent on transmission and computation of offloaded requests. It can be seen that the energy costs of Cloud Only are significantly higher than those of Full Optimization for the lowest values of cloud efficiency, while the differences between No Migrate and Full Optimization are small. In all cases, our proposed solution requires less energy per request than No Migrate. As cloud efficiency increases, the cost of Cloud Only allocation decreases. At the same time, this allows Full Optimization to offload more tasks to the cloud, decreasing the energy consumption. The differences between the 90th percentiles are significantly higher than those between medians, showing that the gains of Full Optimization are highest for the most difficult requests. For an extremely efficient or extremely inefficient cloud, the requests will be computed mostly in the cloud or mostly in the fog nodes, respectively. Therefore, for other results in this section, cloud efficiency is set to 1.3 GFLOPS/W, a value for which offloading decisions are not as straightforward as for significantly higher or lower values.

Figure 4.

Comparison of energy cost per request with varied computational efficiency of cloud.
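
To make the efficiency unit concrete: 1 GFLOPS/W equals 1 GFLOP per joule, so the computation energy of a request is its workload divided by the efficiency. The following minimal sketch assumes a hypothetical 10 GFLOP request and ignores transmission energy and idle power, so it only illustrates the computation part of the costs shown in Figure 4.

```python
def computation_energy_j(workload_gflop, efficiency_gflops_per_w):
    """Energy in joules needed to process a workload.

    1 GFLOPS/W = 1 GFLOP/s per J/s = 1 GFLOP per joule.
    """
    if efficiency_gflops_per_w <= 0:
        raise ValueError("efficiency must be positive")
    return workload_gflop / efficiency_gflops_per_w


# Sweep endpoints and the default cloud efficiency used in this section.
for efficiency in (0.8, 1.3, 3.0):
    # The 10 GFLOP workload is a made-up example value.
    energy = computation_energy_j(10.0, efficiency)
    print(f"{efficiency:.1f} GFLOPS/W -> {energy:.2f} J")
```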

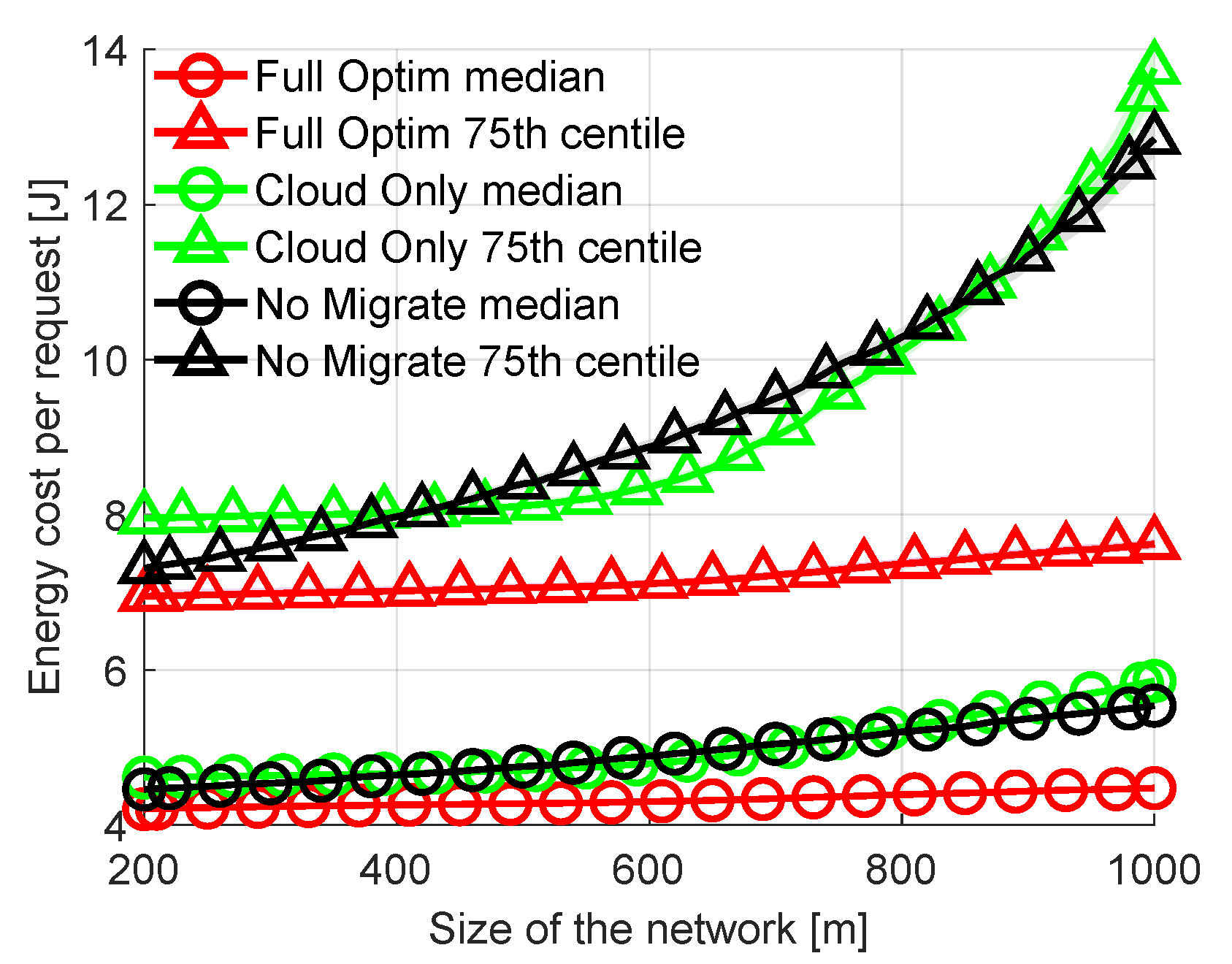

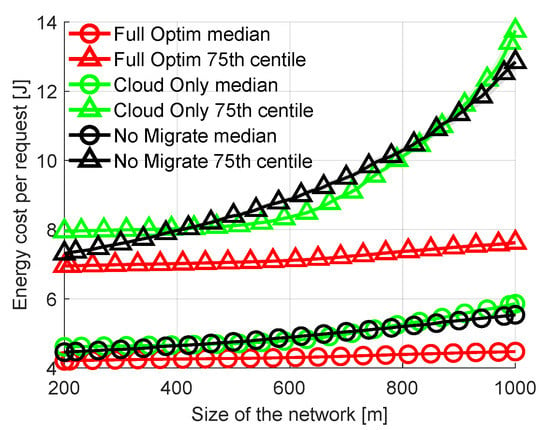

Another network parameter that can impact the costs and offloading decisions is the physical size of the network. The network shown in Figure 2 (10 FNs distributed over a hall) is used by default. Now let us vary the physical size of the network while maintaining the same number of FNs. This affects the distance between MDs and FNs. The greater the distance, the higher the path loss and the energy-per-bit cost of wireless transmission. At the same time, the higher the path loss, the lower the wireless transmission rate. In Figure 5, the length of the area covered by the network is swept from the initial 200 m up to 1000 m. As the length (the longer of the two dimensions) changes, the ratios of the distances between all FNs to the area perimeter remain constant. The results in Figure 5 clearly show that the energy cost per request increases with the size of the network. The increase is significant for No Migrate, as an MD is often “forced” to wirelessly send requests to more distant nodes if the close nodes are busy processing other requests or are not efficient enough. The rejection rates also increase: from 3.3% at 200 m to 8.6% at 1000 m for Full Optimization, from 3.8% to 21.8% for No Migrate and from 6.5% to 23.7% for Cloud Only. The difference in energy costs between Full Optimization and the other methods becomes more apparent with increasing distances within the network.

Figure 5.

Comparison of energy consumption per request with varied size of area covered by the network.
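
The link between distance, path loss, data rate and energy per bit can be sketched with a generic log-distance path loss model and the Shannon capacity. This is a stand-in for illustration only: it is not the indoor propagation and transceiver model used in this paper, and all parameter values (path loss exponent, reference loss, transmit power, noise level, bandwidth) are hypothetical.

```python
import math


def path_loss_db(distance_m, exponent=3.0, ref_loss_db=40.0, ref_distance_m=1.0):
    """Log-distance path loss; exponent and reference loss are assumed values."""
    return ref_loss_db + 10.0 * exponent * math.log10(distance_m / ref_distance_m)


def shannon_rate_bps(distance_m, tx_power_dbm=20.0, noise_dbm=-90.0, bandwidth_hz=20e6):
    """Upper bound on the data rate for the SNR implied by the path loss."""
    snr_db = tx_power_dbm - path_loss_db(distance_m) - noise_dbm
    return bandwidth_hz * math.log2(1.0 + 10.0 ** (snr_db / 10.0))


def energy_per_bit_j(distance_m, tx_power_dbm=20.0):
    """Radiated energy per bit; circuit power is ignored in this sketch."""
    tx_power_w = 10.0 ** ((tx_power_dbm - 30.0) / 10.0)
    return tx_power_w / shannon_rate_bps(distance_m, tx_power_dbm)


for distance in (10.0, 50.0, 100.0):
    print(
        f"{distance:6.1f} m: loss {path_loss_db(distance):6.1f} dB, "
        f"rate {shannon_rate_bps(distance) / 1e6:7.1f} Mb/s, "
        f"energy {energy_per_bit_j(distance) * 1e9:6.2f} nJ/bit"
    )
```

With a fixed transmit power, a longer link lowers the achievable rate, so each bit occupies the transmitter for longer and costs more energy, which is the qualitative trend visible in Figure 5.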

5.5. Impact of Traffic Parameters

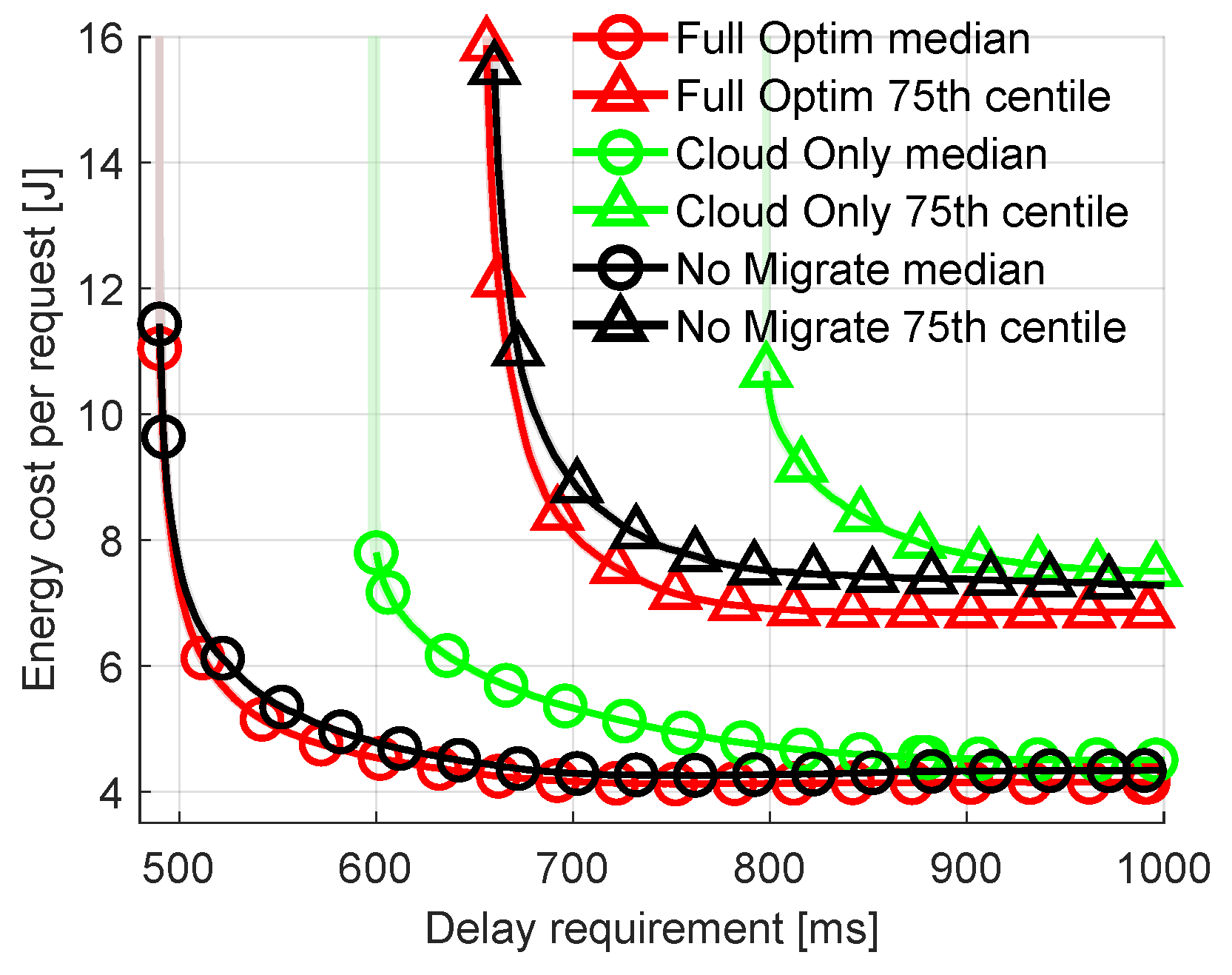

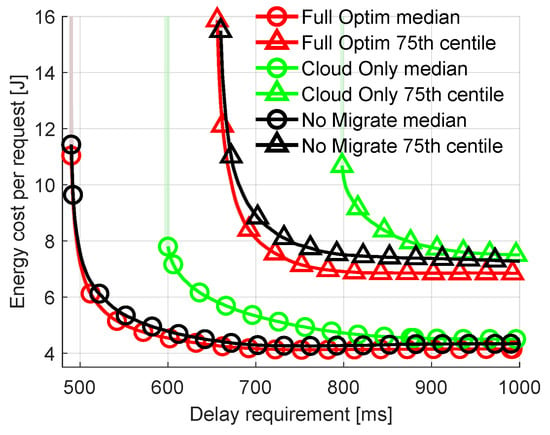

Let us now vary the parameters characterizing the requests offloaded to the network. For the previous results, these parameters are random, as shown in Table 4. First, let us look at the impact of the delay requirement. It is fixed for all the incoming requests. The other parameters (e.g., arrival rate, arithmetic intensity) are generated in the same way as described in Section 5.1. Figure 6 plots the median and the 75th percentile of energy costs spent on offloading requests as a function of the delay requirement (between 500 and 1000 ms) of these requests. There are a few key observations: (i) the percentage of rejected requests increases with stricter delay requirements, (ii) the energy cost increases with stricter delay requirements, (iii) Cloud Only is particularly poorly suited for delay-sensitive applications. Observation (i) is self-explanatory. The shorter the time constraint, the harder it is to successfully offload the task, compute it and transmit the results back within this time. This can be seen in the plot, where the respective lines terminate in the middle of the plot as a result of the virtually infinite energy cost of a request that is not successfully computed. For example, the green line representing the 75th percentile of Cloud Only terminates at 800 ms. This means that for delay requirements lower than 800 ms more than 25% of requests are rejected. Observation (ii) is an effect of the higher CPU frequency required at the FN to fulfill stricter delay requirements. This results in decreased CPU efficiency and increased energy consumption. Observation (iii) stems from the additional transmission delay caused by sending requests to the distant cloud.
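
Observation (ii) can be illustrated with a textbook DVFS model. The sketch below assumes dynamic power proportional to the cube of the clock frequency, which is a common simplification and not necessarily the CPU model fitted in this paper. The constant `kappa`, the cycle count and the time budgets are hypothetical.

```python
def min_frequency_hz(cycles, compute_budget_s):
    """Lowest clock frequency that finishes the workload within the budget."""
    if compute_budget_s <= 0:
        raise ValueError("no time left for computation")
    return cycles / compute_budget_s


def dynamic_energy_j(cycles, frequency_hz, kappa=1e-27):
    """Energy under the assumed model P = kappa * f**3.

    Run time is cycles / f, so E = P * t = kappa * cycles * f**2.
    """
    return kappa * cycles * frequency_hz ** 2


cycles = 1e9  # made-up workload
for budget_s in (1.0, 0.5):
    frequency = min_frequency_hz(cycles, budget_s)
    energy = dynamic_energy_j(cycles, frequency)
    print(f"budget {budget_s:.1f} s -> {frequency / 1e9:.1f} GHz, {energy:.1f} J")
```

Under this assumed model, halving the time available for computation doubles the required frequency and quadruples the energy for the same workload.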

Figure 6.

Comparison of energy consumption per request with varied delay requirement of requests.
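
The terminating lines in Figure 6 follow directly from treating a rejected request as having infinite energy cost. A minimal sketch of this convention, using a nearest-rank percentile and made-up cost values (the helper name `percentile` is ours):

```python
import math


def percentile(costs, q):
    """Nearest-rank percentile; rejected requests are encoded as math.inf."""
    ordered = sorted(costs)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


# Ten requests with a made-up cost of 1 J each; rejected ones cost infinity.
two_rejected = [1.0] * 8 + [math.inf] * 2    # 20% rejected
three_rejected = [1.0] * 7 + [math.inf] * 3  # 30% rejected

print(percentile(two_rejected, 75))    # finite: the line is still drawn
print(percentile(three_rejected, 75))  # infinite: the line terminates
print(percentile(three_rejected, 50))  # the median remains finite
```

As soon as more than 25% of the requests are rejected, the 75th percentile becomes infinite and can no longer be plotted, while the median survives until the rejection rate exceeds 50%.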

To further analyze the difference between allocation strategies, CDFs of energy costs are plotted in Figure 7 for a fixed delay requirement of 700 ms for all requests. Unlike the previous plots, Figure 7 includes results from the Closest Wireless algorithm. In all previous plots, the resulting energy costs of Closest Wireless are not shown, since they are either identical to those of Full Optimization or within 0.1% of them. Lowering the delay requirement creates a scenario where sending the request wirelessly to the nearest (cheapest) AP/FN and then finding the optimal node for computation may not result in the optimal solution. Figure 7 shows that Full Optimization manages to successfully offload nearly 81% of all requests. This is the highest share among all the compared methods, about 0.5 percentage points more than Closest Wireless. The methods differ mostly at high percentiles of energy costs. The worst solution is Cloud Only, which rejects nearly 40% of all requests. Since the difference between Closest Wireless and Full Optimization is relatively small, Closest Wireless can be treated as a promising suboptimal solution that decreases algorithm complexity while maintaining efficiency. This can change if the considered wireless technology, e.g., 5G NR, provides a higher data rate and higher energy efficiency. However, this requires energy consumption models of 5G terminals to be available.

Figure 7.

Comparison of energy consumption per request (CDF). Delay requirement: 700 ms.
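
The gap between a joint search and the decoupled Closest Wireless idea can be reproduced on a toy single-request example. The sketch below is not the authors' implementation: it ignores contention between requests and CPU frequency tuning, and all energy (J) and delay (s) values are made up. AP 0 is the nearest AP, and node `i` is co-located with AP `i`.

```python
import math
from itertools import product

# Hypothetical per-AP wireless costs and per-node computation costs.
WIRELESS_E = [1.0, 1.4]
WIRELESS_D = [0.20, 0.25]
WIRED_E = [[0.0, 0.1], [0.1, 0.0]]    # wired hop from AP a to node n
WIRED_D = [[0.0, 0.15], [0.15, 0.0]]
COMP_E = [2.0, 1.5]
COMP_D = [0.6, 0.4]


def cheapest_feasible(aps, deadline_s):
    """Lowest-energy (AP, node) pair among the given APs that meets the deadline."""
    best_energy, best_choice = math.inf, None
    for ap, node in product(aps, range(len(COMP_E))):
        delay = WIRELESS_D[ap] + WIRED_D[ap][node] + COMP_D[node]
        if delay > deadline_s:
            continue  # this pair violates the delay requirement
        energy = WIRELESS_E[ap] + WIRED_E[ap][node] + COMP_E[node]
        if energy < best_energy:
            best_energy, best_choice = energy, (ap, node)
    return best_energy, best_choice


def joint_search(deadline_s):
    """Search over all (AP, node) pairs at once."""
    return cheapest_feasible(range(len(WIRELESS_E)), deadline_s)


def closest_wireless(deadline_s):
    """Fix the cheapest wireless link first, then pick the computing node."""
    nearest_ap = min(range(len(WIRELESS_E)), key=WIRELESS_E.__getitem__)
    return cheapest_feasible([nearest_ap], deadline_s)


for deadline in (1.0, 0.7):
    print(deadline, joint_search(deadline), closest_wireless(deadline))
```

With the relaxed 1.0 s deadline both strategies pick the same pair. With the 0.7 s deadline, the wired hop from the nearest AP to the faster node takes too long, so the decoupled strategy rejects the request, while the joint search still finds a feasible allocation through the more distant AP.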

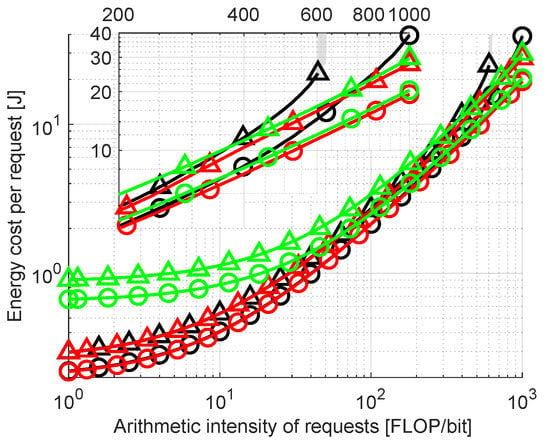

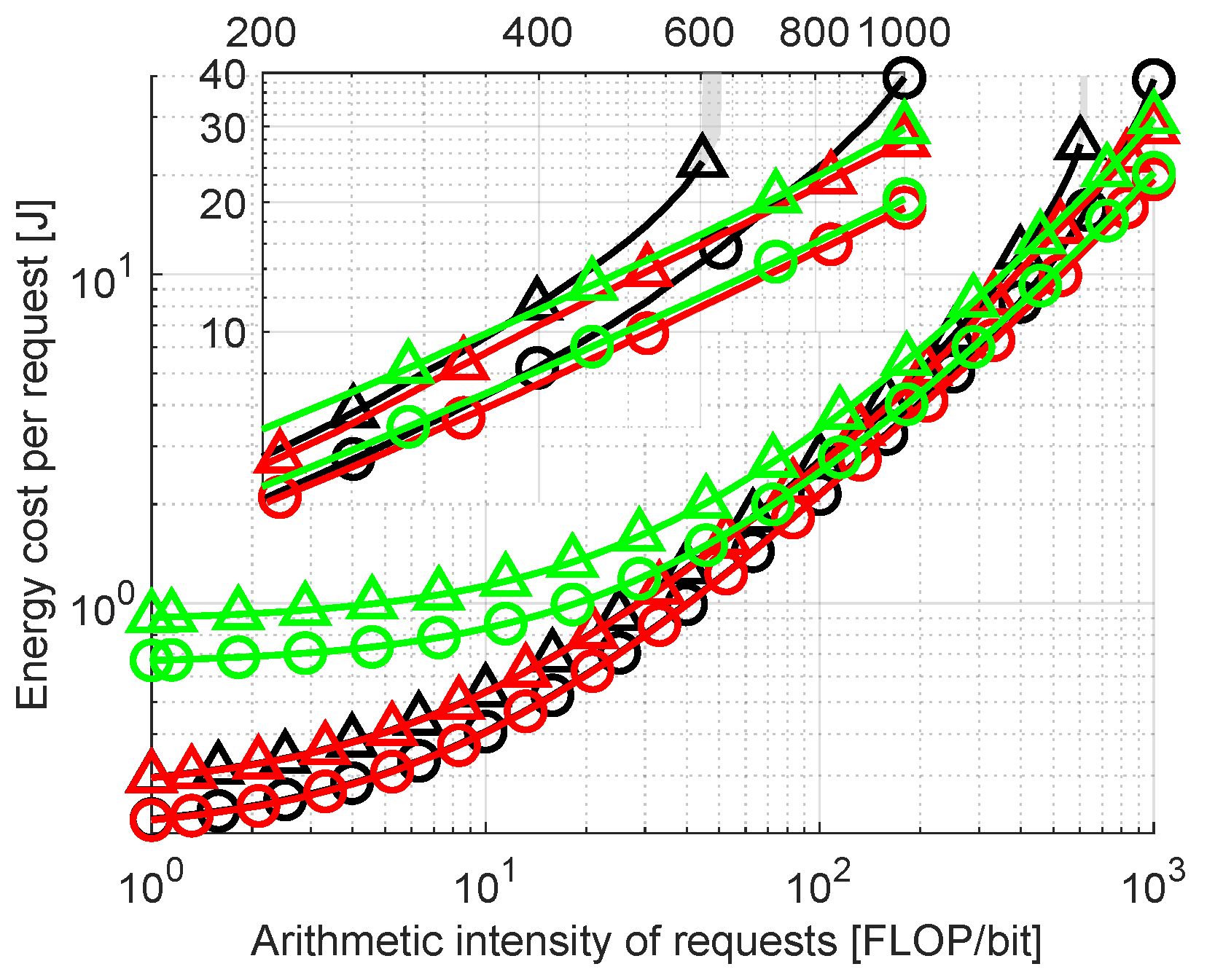

Finally, the impact of the arithmetic intensity of offloaded requests is examined. This parameter determines how many computations are needed to process a given request relative to its size. The median and 75th percentile of energy costs for arithmetic intensity swept over a range of values (in FLOP/bit) are plotted in Figure 8. As expected, the energy cost increases with rising intensity. The higher costs of Cloud Only allocation at low intensity can be attributed to costs related to transmission (which do not directly depend on arithmetic intensity). Such requests can be computed more efficiently in FNs, which is commonly the result of the Full Optimization method. Energy costs (both median and 75th percentile) of No Migrate are within 10% of those of Full Optimization, except for values above 300 FLOP/bit, where the cost of No Migrate rises steeply. Rejection rates for Full Optimization are 1.1% for 1 FLOP/bit, 1.8% for 100 FLOP/bit and 11.7% for 1000 FLOP/bit. For Cloud Only, the values equal 2.9%, 4.4% and 20%, respectively. For No Migrate, they also start at 1.1% for 1 FLOP/bit and 1.8% for 100 FLOP/bit but reach 46.7% for 1000 FLOP/bit.
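
The role of arithmetic intensity can be made explicit with a small calculation: the workload in FLOP is the request size in bits multiplied by the intensity, while the transmission cost depends on the size only. The sketch below uses a hypothetical request size and energy-per-bit value, and a single fixed computation efficiency, so it is a simplification of the model used in this paper.

```python
def offload_energy_j(size_bits, intensity_flop_per_bit, energy_per_bit_j, gflops_per_watt):
    """Split the energy of one offloaded request into transmission and computation."""
    # Transmission cost scales with the request size only.
    transmission = size_bits * energy_per_bit_j
    # Workload in FLOP grows linearly with arithmetic intensity.
    workload_flop = size_bits * intensity_flop_per_bit
    computation = workload_flop / (gflops_per_watt * 1e9)
    return transmission, computation


# Made-up 1 Mbit request and 100 nJ/bit link; 1.3 GFLOPS/W as in Section 5.4.
for intensity in (1, 100, 1000):
    transmission, computation = offload_energy_j(1e6, intensity, 1e-7, 1.3)
    print(f"{intensity:5d} FLOP/bit: tx {transmission:.3f} J, compute {computation:.4f} J")
```

At low intensity the transmission term dominates, which is consistent with Cloud Only being penalized by its longer transmission path. At high intensity the computation term dominates and the efficiency of the chosen computing node matters most.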

6. Discussion

We investigate the minimization of energy spent on offloading computational tasks in fog networks. Our model includes delay and energy costs resulting from computation as well as wireless and wired transmission. The proposed computational task allocation algorithm, Full Optimization, successfully minimizes energy consumption while satisfying delay constraints. All the considered degrees of freedom, i.e., AP selection, computing node selection and FN CPU frequency tuning, increase system performance. However, the precise gain depends on the specific network configuration and the specification of the computational requests. When compared with the No Migrate solution, the biggest performance improvements can be seen when offloaded tasks have high arithmetic intensity or when a large area covered by the network causes higher path loss (up to 50% lower energy consumption). Compared with performing all computations in the cloud, our solution is much better suited for requests with strict delay requirements and low arithmetic intensity. We also propose a heuristic approach, called Closest Wireless, that allocates wireless transmission independently. This simplified algorithm provides optimal solutions for almost all considered scenarios. Its performance is slightly worse for requests with strict delay requirements: it manages to satisfy the delay constraints of 0.8% fewer requests compared to Full Optimization at 700 ms.

The limitations of this work include relying on energy consumption and delay models characterizing equipment in the network. Considering various devices available in the market, the models may not be accurate for all of them. Moreover, this work assumes some simplifications. Each request can only be computed at one node, while each FN can simultaneously process only one request. Future work includes extension of the setup with other wireless technologies, e.g., 5G NR. However, this requires reliable power consumption models for terminals of these technologies. Furthermore, metaheuristics targeting low execution times while finding close-to-optimal solutions may be an interesting research option. Another possible direction is adding a pricing mechanism to the network. This would incentivize FN and CN to prioritize processing certain requests and provide a price–delay trade-off.

Author Contributions

Conceptualization, B.K., F.I., P.K. and H.B.; methodology, B.B., B.K., F.I. and P.K.; software, B.K. and B.B.; validation, P.K., B.B. and F.I.; writing—original draft preparation, B.K., F.I., P.K. and B.B.; writing—review and editing, B.K., F.I., H.B. and P.K.; visualization, B.K.; supervision, F.I., H.B. and P.K.; project administration, H.B., F.I. and B.B.; funding acquisition, H.B. and B.B. All authors have read and agreed to the published version of the manuscript.

Funding

The presented work has been funded by the Polish Ministry of Education and Science within the bailout supporting development of young scientists in 2021/22 within task “Optimization of the operation of wireless networks and compression of test data” and by the National Science Centre in Poland within the FitNets project no. 2021/41/N/ST7/03941 on “Fresh and Green Cellular IoT Edge Computing Networks—FitNets”.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

| Abbreviation | Definition |
|---|---|
| AWS | Amazon Web Services |
| AP | Access Point |
| API | Application Programming Interface |
| BAN | Body Area Network |
| BS | Base Station |
| BSN | Body Sensor Network |
| CDN | Content Delivery Network |
| CDF | Cumulative Distribution Function |
| CPE | Customer Premises Equipment |
| CN | Cloud Node |
| CPU | Central Processing Unit |
| C-RAN | Cloud Radio Access Network |
| DC | Data Center |
| DVFS | Dynamic Voltage and Frequency Scaling |
| EA | Energy-Aware |
| ECG | Electrocardiogram |
| EEFFRA | Energy-EFFicient Resource Allocation |
| EH | Energy Harvesting |
| ETSI | European Telecommunications Standards Institute |
| EPON | Ethernet Passive Optical Network |
| FI | Fog Instance |
| FLOP | Floating Point Operation |
| FLOPS | Floating Point Operations per Second |
| FN | Fog Node |
| F-RAN | Fog Radio Access Network |
| GPS | Global Positioning System |
| GSM | Global System for Mobile communications |
| HD | High Definition |
| HT | Higher Throughput |
| IBStC | If Busy Send to Cloud |
| IBKiF | If Busy Keep in FN |
| IBStOF | If Busy Send to Other FN |
| IEEE | Institute of Electrical and Electronics Engineers |
| ICT | Information and Communication Technology |
| IoE | Internet of Everything |
| IoT | Internet of Things |
| IP | Internet Protocol |
| KKT | Karush–Kuhn–Tucker |
| LAN | Local Area Network |
| LC | Low-Complexity |
| LTE | Long Term Evolution |
| MCC | Mobile Cloud Computing |
| MINLP | Mixed Integer Nonlinear Programming |
| MD | Mobile Device |
| MEC | Mobile/Multi-Access Edge Computing |
| MIB | Management Interface Base |
| nDC | nano Data Center |
| NFV | Network Function Virtualization |
| NR | New Radio |
| OSI | Open Systems Interconnection |
| PA | Power-Aware |
| PC | Personal Computer |
| PGN | Portable Game Notation |
| QoE | Quality of Experience |
| QoS | Quality of Service |
| RAM | Random Access Memory |
| RAN | Radio Access Network |
| RFC | Request for Comments |
| RRH | Remote Radio Head |
| RTT | Round-Trip Time |
| SCA | Successive Convex Approximation |
| SCN | Small Cell Network |
| SDN | Software Defined Network |
| SDR | SemiDefinite Relaxation |
| SINR | Signal to Interference-plus-Noise Ratio |
| SM | Sleep Mode |
| SOA | Service Oriented Architecture |
| TDM | Time Division Multiplexing |
| TE | Traffic Engineering |
| TM | Traffic Matrix |
| TM | Traffic Matrices |
| V2V | Vehicle-to-Vehicle |
| V2X | Vehicle-to-Anything |
| VC | Virtual Cluster |
| VM | Virtual Machine |
| WAN | Wide Area Network |
| WDM | Wavelength Division Multiplexing |
| WE | WeekEnd day |
| WLAN | Wireless Local Area Network |

References

1. Mouradian, C.; Naboulsi, D.; Yangui, S.; Glitho, R.H.; Morrow, M.J.; Polakos, P.A. A Comprehensive Survey on Fog Computing: State-of-the-Art and Research Challenges. IEEE Commun. Surv. Tutor. 2018, 20, 416–464.
2. Shih, Y.; Chung, W.; Pang, A.; Chiu, T.; Wei, H. Enabling Low-Latency Applications in Fog-Radio Access Networks. IEEE Netw. 2017, 31, 52–58.
3. Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog Computing and Its Role in the Internet of Things. In Proceedings of the Mobile Cloud Computing (MCC) Workshop, Helsinki, Finland, 17 August 2012.
4. Google. GOOGLE Environmental Report: 2019. Technical Report, Google. 2019. Available online: https://www.gstatic.com/gumdrop/sustainability/google-2019-environmental-report.pdf (accessed on 11 April 2022).
5. Ojagh, S.; Cauteruccio, F.; Terracina, G.; Liang, S.H. Enhanced air quality prediction by edge-based spatiotemporal data preprocessing. Comput. Electr. Eng. 2021, 96, 107572.
6. Dinh, T.Q.; Tang, J.; La, Q.D.; Quek, T.Q.S. Offloading in Mobile Edge Computing: Task Allocation and Computational Frequency Scaling. IEEE Trans. Commun. 2017, 65, 3571–3584.
7. You, C.; Huang, K.; Chae, H.; Kim, B.H. Energy-Efficient Resource Allocation for Mobile-Edge Computation Offloading. IEEE Trans. Wirel. Commun. 2017, 16, 1397–1411.
8. Liu, L.; Chang, Z.; Guo, X. Socially Aware Dynamic Computation Offloading Scheme for Fog Computing System With Energy Harvesting Devices. IEEE Internet Things J. 2018, 5, 1869–1879.
9. Bai, W.; Qian, C. Deep Reinforcement Learning for Joint Offloading and Resource Allocation in Fog Computing. In Proceedings of the 2021 IEEE 12th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 20–21 August 2021; pp. 131–134.
10. Vu, T.T.; Nguyen, D.N.; Hoang, D.T.; Dutkiewicz, E.; Nguyen, T.V. Optimal Energy Efficiency with Delay Constraints for Multi-Layer Cooperative Fog Computing Networks. IEEE Trans. Commun. 2021, 69, 3911–3929.
11. Deng, R.; Lu, R.; Lai, C.; Luan, T.H.; Liang, H. Optimal Workload Allocation in Fog-Cloud Computing Toward Balanced Delay and Power Consumption. IEEE Internet Things J. 2016, 3, 1171–1181.
12. Kopras, B.; Idzikowski, F.; Kryszkiewicz, P. Power Consumption and Delay in Wired Parts of Fog Computing Networks. In Proceedings of the 2019 IEEE Sustainability through ICT Summit (StICT), Montreal, QC, Canada, 18–19 June 2019.
13. Vakilian, S.; Fanian, A. Enhancing Users’ Quality of Experienced with Minimum Energy Consumption by Fog Nodes Cooperation in Internet of Things. In Proceedings of the 2020 28th Iranian Conference on Electrical Engineering (ICEE), Tabriz, Iran, 4–6 August 2020.
14. Khumalo, N.; Oyerinde, O.; Mfupe, L. Reinforcement Learning-based Computation Resource Allocation Scheme for 5G Fog-Radio Access Network. In Proceedings of the 2020 Fifth International Conference on Fog and Mobile Edge Computing (FMEC), Paris, France, 20–23 April 2020; pp. 353–355.
15. Ghanavati, S.; Abawajy, J.; Izadi, D. An Energy Aware Task Scheduling Model Using Ant-Mating Optimization in Fog Computing Environment. IEEE Trans. Serv. Comput. 2022, 15, 2007–2017.
16. Sarkar, S.; Chatterjee, S.; Misra, S. Assessment of the Suitability of Fog Computing in the Context of Internet of Things. IEEE Trans. Cloud Comput. 2018, 6, 46–59.
17. Kopras, B.; Bossy, B.; Idzikowski, F.; Kryszkiewicz, P.; Bogucka, H. Task Allocation for Energy Optimization in Fog Computing Networks With Latency Constraints. IEEE Trans. Commun. 2022, 70, 8229–8243.
18. Strohmaier, E.; Dongarra, J.; Simon, H.; Martin, M. Green500 List for June 2020. Available online: https://www.top500.org/lists/green500/2020/06/ (accessed on 7 April 2022).
19. Dolbeau, R. Theoretical peak FLOPS per instruction set: A tutorial. J. Supercomput. 2018, 74, 1341–1377.
20. Park, S.; Park, J.; Shin, D.; Wang, Y.; Xie, Q.; Pedram, M.; Chang, N. Accurate Modeling of the Delay and Energy Overhead of Dynamic Voltage and Frequency Scaling in Modern Microprocessors. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2013, 32, 695–708.
21. Jalali, F.; Hinton, K.; Ayre, R.; Alpcan, T.; Tucker, R.S. Fog Computing May Help to Save Energy in Cloud Computing. IEEE J. Sel. Areas Commun. 2016, 34, 1728–1739.
22. Van Heddeghem, W.; Idzikowski, F.; Le Rouzic, E.; Mazeas, J.Y.; Poignant, H.; Salaun, S.; Lannoo, B.; Colle, D. Evaluation of power rating of core network equipment in practical deployments. In Proceedings of the OnlineGreenComm, Online, 25–28 September 2012.
23. Van Heddeghem, W.; Idzikowski, F.; Vereecken, W.; Colle, D.; Pickavet, M.; Demeester, P. Power consumption modeling in optical multilayer networks. Photonic Netw. Commun. 2012, 24, 86–102.
24. Olbrich, M.; Nadolni, F.; Idzikowski, F.; Woesner, H. Measurements of Path Characteristics in PlanetLab; Technical Report TKN-09-005; TU Berlin: Berlin, Germany, 2009.
25. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.
26. Edmonds, J.; Karp, R.M. Theoretical Improvements in Algorithmic Efficiency for Network Flow Problems. J. ACM 1972, 19, 248–264.
27. Wong, H. A Comparison of Intel’s 32 nm and 22 nm Core i5 CPUs: Power, Voltage, Temperature and Frequency. 2012. Available online: http://blog.stuffedcow.net/2012/10/intel32nm-22nm-core-i5-comparison/ (accessed on 7 April 2022).
28. Gunaratne, C.; Christensen, K.; Nordman, B. Managing energy consumption costs in desktop PCs and LAN switches with proxying, split TCP connections and scaling of link speed. Int. J. Netw. Manag. 2005, 15, 297–310.
29. Kryszkiewicz, P.; Kliks, A.; Kulacz, L.; Bossy, B. Stochastic Power Consumption Model of Wireless Transceivers. Sensors 2020, 20, 4704.
30. IEEE Standard 802.11-2020; IEEE Standard for Information Technology–Telecommunications and Information Exchange between Systems—Local and Metropolitan Area Networks–Specific Requirements—Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications. IEEE: Piscataway, NJ, USA, 2021.
31. ITU. Propagation Data and Prediction Methods for the Planning of Indoor Radiocommunication Systems and Radio Local Area Networks in the Frequency Range 300 MHz to 450 GHz; ITU: Geneva, Switzerland, 2019.
32. Intel. Intel Delivers New Architecture for Discovery with Intel Xeon Phi Coprocessor. 2012. Available online: https://newsroom.intel.com/news-releases/intel-delivers-new-architecture-for-discovery-with-intel-xeon-phi-coprocessors/ (accessed on 7 April 2022).
33. Gunaratne, C.; Christensen, K.; Nordman, B.; Suen, S. Reducing the Energy Consumption of Ethernet with Adaptive Link Rate (ALR). IEEE Trans. Comput. 2008, 57, 448–461.
34. European Commission, Joint Research Centre; Bertoldi, P. EU Code of Conduct on Energy Consumption of Broadband Equipment: Version 6; Publications Office of the European Union: Luxembourg, 2017.
35. Strohmaier, E.; Dongarra, J.; Simon, H.; Martin, M. Green500 List for November 2021. Available online: https://www.top500.org/lists/green500/list/2021/11/ (accessed on 7 April 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).