Abstract

This study addresses the challenges faced by individuals with upper limb disadvantages in operating power wheelchair joysticks by utilizing the extended Function–Behavior–Structure (FBS) model to identify design requirements for an alternative wheelchair control system. A gaze-controlled wheelchair system is proposed based on design requirements derived from the extended FBS model and prioritized using the MoSCoW method. The system relies on the user’s natural gaze and comprises three layers: perception, decision making, and execution. The perception layer senses and acquires information from the environment, including user eye movements and driving context. The decision-making layer processes this information to determine the user’s intended direction, while the execution layer controls the wheelchair’s movement accordingly. The system’s effectiveness was validated through indoor field testing, with an average driving drift of less than 20 cm across participants. Additionally, the user experience scale revealed overall positive user experiences and perceptions of the system’s usability, ease of use, and satisfaction.

1. Introduction

The World Health Organization estimates that approximately 15% of the global population has some form of disability, with 2–4% experiencing severe functional difficulties [1]. Danemayer et al. [2] reported that the use of mobility devices (including prostheses, wheelchairs, intelligent power wheelchairs, etc.) ranged from 0.9 to 17.6% among patients with mobility challenges. As one of the most prevalent mobility aids, wheelchairs can significantly enhance the quality of life for people with disabilities and facilitate their participation in social activities.

However, individuals with hand or upper limb disadvantages may find it challenging to use conventional power wheelchairs. Cojocaru et al. [3] found that over half of powered wheelchair users experienced difficulties learning to maneuver a conventional powered wheelchair. To improve mobility for people with disabilities and promote dignified participation in social activities, researchers have explored various wheelchair interaction methods. A comprehensive guide by Marco et al. [4] explores the utilization of computer vision in healthcare to enhance the quality of life for individuals with disabilities. Moreover, a range of interaction techniques has been employed in power wheelchair human–computer interactions, such as voice control [5,6,7], Sip-and-Puff interfaces [8], Brain–Computer interfaces [9,10,11], Tongue Drive Systems [12], EMG-based interfaces [13,14], and eye-control interfaces [15,16,17]. These methods reduce the physical, perceptual, and cognitive skills required to operate a power wheelchair for a broader range of people with disabilities. However, some interaction methods face limitations in their commercialization potential due to factors such as the high environmental impact, control overloading, difficulty of use, and high cost.

With the widespread recognition and acceptance of user-centered design in recent years, humanized power wheelchair interaction systems have become the trend. Eye control is an intuitive and natural input method that many researchers have utilized in designing power wheelchairs. Power wheelchairs based on visual input are divided into blink-controlled and gaze-controlled. In the case of blink-controlled power wheelchairs, blinks are often used as signals to start and stop the system or to indicate the desired direction based on the number of blinks. Li et al. [18] designed an EOG-based switch with blinking as a condition for switching. If the user blinks in sync with the blinking of the switch button, the blink is judged to be intentional, and an on/off command is issued. Huang et al. [19] proposed a wheelchair robotic arm system that utilizes EEG and EOG signals. In their approach, blinking and eyebrow raising were employed as preselection and validation steps, respectively. The button can only trigger the corresponding command after it has been preselected and verified. Choudhari et al. [20] proposed a wheelchair system using one, two, and three voluntary blinks to control different commands: a single voluntary blink for forward and stop, two voluntary blinks for a left turn, and three voluntary blinks for a right turn. Blink-controlled power wheelchairs have a high degree of command accuracy, but frequent blinking may disrupt the user experience.

Eye-tracking wheelchair interaction systems using gaze control can be categorized into two types: gaze at the screen and gaze at the environment. Some researchers [17,21,22] have proposed systems that drive wheelchairs by gazing at a screen. In such systems, users can control the wheelchair by gazing at buttons or modules on a display, such as forward, left, right, back, and stop. Sunny et al. [23] developed a gaze control architecture for a wheelchair with a graphical user interface and a 6DoF robotic arm. The user can use the assisted robotic arm with the help of eye tracking and control the wheelchair to move by gazing at the wheelchair control interface on the graphical interface, which includes four buttons: forward, left, right, and backward. Although driving the wheelchair by looking at buttons on the display can increase the reliability of interpreting users’ intentions, there is a risk that users will need to shift their gaze from the environment to the display during use. Dahmani et al. [15] employed convolutional neural networks by inputting images of users’ eyes and processing them to determine the gaze direction to direct the wheelchair. However, this method uses two small cameras fixed to the frames of glasses to capture images of users’ eyes, with cameras facing users’ pupils, potentially causing discomfort. Ishizuka et al. [24] proposed a system based on gaze detection and environment recognition to enable movement in unknown environments by combining gaze information from eye tracking and obstacle information from LiDAR. However, the study’s experimental results primarily showed results related to turning, without elaborating on the overall movement effect.

Previous research indicates that eye-tracking technology is a natural and intuitive input method for individuals with disabilities to operate power wheelchairs, thereby improving their quality of life. However, gaps in research remain in terms of analyzing user requirements and the overall design process. To address these gaps, this study develops a gaze-controlled system for power wheelchairs using the extended FBS model and the MoSCoW method. The study analyzes the genuine needs of disabled users and describes the entire design process systematically. The system uses an eye-tracking device to detect users’ gaze positions and determine the wheelchair’s direction of motion. The system’s development and evaluation proceeded in three phases, with aims and methods detailed in Table 1. In the first phase, literature research and user interviews identify potential user needs, and functional requirements are then defined through the extended FBS model. In the second phase, requirements are prioritized using the MoSCoW method, and the wheelchair system is designed to meet the M-level and S-level requirements. The third phase encompasses system evaluation.

Table 1.

Overview of the aims and methods of the three phases of the current study.

In the subsequent sections, we introduce and discuss each of these three phases.

2. Defining the Functional Requirements of the System

In this section, we conducted literature research and user interviews to gain a preliminary understanding of user needs for wheelchair interaction systems. To better address user needs, we employed the extended FBS model to analyze and transform the preliminary requirements, resulting in more scientific and objective user requirements. Throughout this process, we took into account the user’s basic situation, daily challenges, and expectations for future wheelchairs.

2.1. Method

2.1.1. Literature Research

Yuan et al. [25] employed the Analytic Hierarchy Process (AHP) and Kano Model to categorize wheelchairs into three levels based on their features and characteristics. The three levels identified were low-level wheelchairs, which offer basic dimensions and affordability; mid-level wheelchairs, which emphasize comfort and cost effectiveness; and high-level wheelchairs, which provide comfort, optimal functionality, and innovation. Meanwhile, Rice et al. [26] examined falls among wheelchair users, many of whom require assistance to recover and may remain on the floor for 10 minutes or longer. Moreover, the researchers [27] conducted semi-structured interviews with a cohort of 20 wheelchair users, revealing that 70% of the participants expressed fear of falling, while 80% of them acknowledged requiring assistance for recovery. Pellichero et al. [28] also investigated wheelchair use safety. Frank et al. [29] conducted a study on the pain and discomfort experienced by power wheelchair users who believed their pain was related to the wheelchair. Additionally, Viswanathan et al. [30] conducted surveys and found that wheelchair users want the ability to choose different levels of smart wheelchair control based on their physical condition and scenarios while expressing concerns about safety. In a cross-sectional study based on personal interviews, Sarour et al. [31] identified safety, comfort, and weight as the paramount concerns among wheelchair users. Table 2 provides a comprehensive summary of these requirements. Users prioritize several key factors that greatly influence their wheelchair experience. Ensuring safety, optimizing comfort, maintaining cost effectiveness, and facilitating easy operation are the primary considerations that underscore their essential needs. By addressing these aspects, wheelchair designs can better meet the expectations and requirements of users, leading to enhanced satisfaction and overall usability.

Table 2.

Summary of requirements.

2.1.2. User Interviews

We interviewed two long-term users of power wheelchairs via online and telephone platforms. One participant had paraplegia while the other had progressive muscular dystrophy. Both individuals had more than 4 years of experience using wheelchairs and had used three different models. During the interviews, we inquired about their personal background, wheelchair usage experience, and future expectations for wheelchairs. Participants reported that wheelchairs are necessary for daily activities and provide significant assistance in daily life. Their primary concerns included collisions, rollovers, and obstacles encountered due to poor control and complex driving situations. They expressed a desire for future wheelchairs to be safer, more easily controllable, and more cost-effective. With respect to the gaze-controlled wheelchair, they expected it to be safe, accurate, easy to control, comfortable, visually appealing, and affordable.

2.1.3. The Extended FBS Model

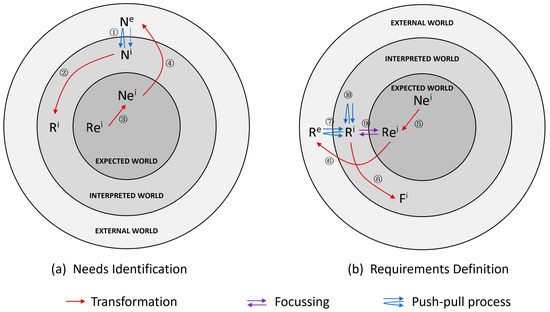

To circumvent the limitations of a traditional user requirement analysis through research and interviews, this study employed the Extended Function–Behavior–Structure (FBS) model for analyzing potential user requirements during the early stages of system design, providing a reference for subsequent design processes. Gero et al. [32,33] initially proposed the FBS framework, which describes design activities by connecting the external world, interpreted world, and expected world through three variables: Function (F), Behavior (B), and Structure (S). The outcome of a design activity is a change in the external world, brought about by focusing on the goals set in the expected world. Cascini et al. [34] further developed and refined the model by integrating two new variables, Needs (N) and Requirements (R), within the FBS framework’s three worlds, ultimately proposing the Extended FBS model, as illustrated in Figure 1.

Figure 1.

The Extended FBS model.

In this context, ‘Ne’ denotes ‘Needs in the External World’, while ‘Ni’ signifies ‘Needs in the Interpreted World’ and ‘Nei’ represents ‘Needs in the Expected World’. Similarly, ‘Re’ stands for ‘Requirements in the External World’, ‘Ri’ refers to ‘Requirements in the Interpreted World’, and ‘Rei’ corresponds to ‘Requirements in the Expected World’. Lastly, ‘Fi’ is indicative of ‘Functions in the Interpreted World’.

In the Extended FBS model, needs represent an expression of a perceived undesirable or ideal situation, which can be extracted by observing user behavior or perceived or assumed by the designer. Requirements refer to measurable attributes associated with one or more needs. The Extended FBS model describes the processes of need identification and requirement definition, consisting of the following steps:

- ① Investigating user needs Ne in the external world and generating interpretations of needs Ni.

- ② Transforming Ni into a preliminary requirement Ri.

- ③ Translating the initially expected requirements Rei into Nei, ensuring that needs the user did not state are considered.

- ④ Transforming Nei into Ne and then verifying the expected requirements with the user and, if negative feedback is received, reinterpreting Ne through steps ① and ②.

- ⑤ Transforming Nei into Rei variables.

- ⑥ Expanding Rei into an equal or greater number of Re.

- ⑦ Deriving and interpreting Re into Ri (with the help of design experience).

- ⑧ Transforming part of Ri into Fi.

- ⑨ Further focusing on Ri as Rei to obtain the initial design requirements.

- ⑩ Refining Ri through design experience and further derivation of new design requirements.

2.2. Results

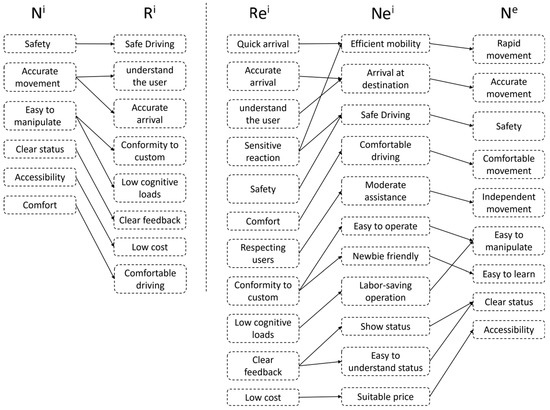

To avoid the drawbacks of a traditional user needs analysis relying solely on research and interviews, this study employed the Extended FBS model to analyze the results of the literature research and user interviews. First, the external user needs Ne obtained from the research are interpreted as Ni and transformed into Ri, which allows for the aggregation of needs by category. Second, Rei is transformed into Nei by fully considering the necessary requirements not mentioned by the user, and the expected requirements are then verified with the user. The results of the need identification based on the Extended FBS model are illustrated in Figure 2.

Figure 2.

The results of the need identification.

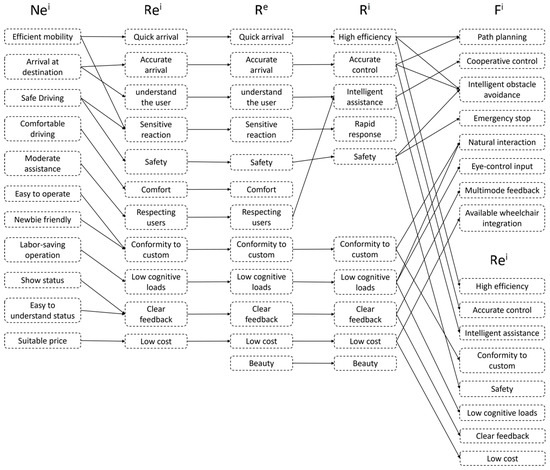

Upon obtaining Nei, the design requirements are defined, as illustrated in Figure 3. First, Rei is transformed and expanded into Re; second, Re is derived into Ri with the assistance of design experience; finally, a portion of Ri is converted into Fi, and Ri is further refined into Rei to obtain the initial design requirements.

Figure 3.

The results of the requirement definition.

According to Figure 3, users’ needs for the wheelchair include high efficiency, accurate control, intelligent assistance, safety, custom conformity, a low load, clear feedback, and a low cost. The corresponding functions include path planning, collaborative control, intelligent obstacle avoidance, an emergency stop, natural interaction, eye-control input, multimodal feedback, and integration with existing wheelchairs.

2.3. Summary of This Section

This section identified the needs and challenges of wheelchair users through literature research and user interviews. To ensure the objectivity and accuracy of the user requirements, we applied the extended FBS model to analyze and transform the preliminary requirements. Based on this, we established that the ideal wheelchair should satisfy the following needs: high efficiency, accurate control, intelligent assistance, safety, custom conformity, a low load, clear feedback, and a low cost. Additionally, users expect the wheelchair to provide several functions, including path planning, collaborative control, intelligent obstacle avoidance, an emergency stop, natural interaction, eye-control input, multimodal feedback, and integration with existing wheelchairs.

3. Realizing the Functional Requirements of the System

In this section, we detail the design and development of a prototype wheelchair system based on the previously established requirements. We first prioritized the Fi and Rei identified earlier using the MoSCoW method. This categorization delineated the “Must Have” and “Should Have” requirements, which subsequently guided the conceptualization and prototyping of the required wheelchair functionality.

3.1. Method

Upon obtaining the initial design requirements, it was important to prioritize them to facilitate subsequent design and prototyping. To do so, we employed the MoSCoW method, a qualitative technique widely used in the industry. The MoSCoW method categorizes requirements into four priority levels: Must Have, Should Have, Could Have, and Won’t Have [35]. Must Have requirements are deemed crucial for the success of the project, and they must be implemented in the final product. Should Have requirements are important but not as critical as Must Have requirements, and they should be implemented if the time and budget allow. Could Have requirements are desirable but not essential, and they can be implemented if the time and budget permit. Won’t Have requirements are not included in the current project scope but may be considered in future iterations. Table 3 outlines the specific details of the prioritization process and the requirements categorized under each priority level. By using the MoSCoW method, we were able to prioritize the design requirements effectively and ensure that the final product met the essential needs of our users.

Table 3.

MoSCoW method.

3.2. Results

3.2.1. Priority of Requirements

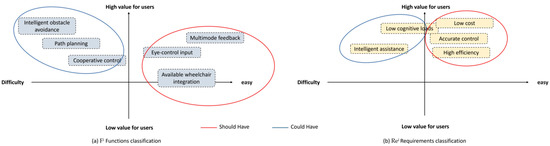

To prioritize these requirements, we adopted the MoSCoW method, taking into account five factors: value, feasibility of implementation, impact on user satisfaction, cost effectiveness in terms of resources, time, and costs, and timeliness.

- Value: Assess the importance of each requirement to the user to determine its level of importance in relation to the overall solution.

- Feasibility: The feasibility of implementing a requirement is assessed by considering factors such as implementation difficulty, technical feasibility, and resource constraints.

- User satisfaction: The impact of a requirement on the user experience is taken into account, with a focus on assessing its contribution to enhancing user satisfaction.

- Cost effectiveness: Resources, time, and costs needed to fulfill the requirements are evaluated, along with careful consideration of their economic benefits in the context of the overall solution.

- Timeliness: The urgency and time required to fulfill a requirement are carefully considered to determine its appropriate placement within the solution development cycle.

Based on these factors, we first categorized the requirements into two levels: M-level requirements and S&C-level requirements, as illustrated in Table 4. S-level and C-level requirements were then separated with the assistance of the four quadrants of the value–difficulty rule, as shown in Figure 4. The final MoSCoW requirement prioritization is displayed in Table 5. The Must Have requirements of the system include conformity to custom, safety, and clear feedback, which are essential in ensuring the safety and satisfaction of the users. Additionally, the Must Have functions include natural interaction and an emergency stop, which allow for intuitive and safe control of the wheelchair in various situations. On the other hand, the Should Have requirements include high efficiency, accurate control, and a low cost, which are necessary to ensure that the system is practical and accessible to a wide range of users. Correspondingly, the Should Have functions include integration with existing wheelchairs, multimodal feedback, and eye-control input. By meeting these requirements and functions, the developed system can provide a reliable and efficient solution for individuals with mobility limitations, improving their quality of life and independence.

Table 4.

Fi and Rei initially identified according to the MoSCoW method.

Figure 4.

The four quadrants of value–difficulty rule.

Table 5.

Final requirement prioritization.

According to the MoSCoW method, the M-level requirements are conformity to custom, safety, and clear feedback. The M-level functions are natural interaction and an emergency stop. Natural interaction can be realized through eye-control input, which is one of the S-level functions. Additionally, eye-control input through gazing at the environment is not only consistent with the user’s driving habits but also enhances user safety by avoiding distraction from computer interfaces. The emergency stop function allows the user to stop the wheelchair autonomously under any circumstances to avoid accidental collisions. Clear feedback can be achieved through multimodal feedback (an S-level function), with the status of the wheelchair fed back to the user in real time through vibration and audio. In addition, the S-level requirements include high efficiency, accurate control, and a low cost, which means that the structure of the system must be simple and must allow integration into an existing wheelchair (an S-level function) to reduce unnecessary costs. At the same time, information transmission between devices should be efficient. Natural interaction, feedback, and efficiency can be evaluated using the System Usability Scale (SUS). Meanwhile, efficiency, accurate control, and safety can be gauged through simulated driving experiments, considering metrics such as the driving time, average drift, and the number of collisions or other accidents. Additionally, user satisfaction can be measured using satisfaction scales, facilitating an assessment of conformity to user customs.
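The prioritization described above can be written down as a small lookup table. The following is a minimal sketch, not part of the prototype software: the item names and their M/S levels are taken from the text, while the `Priority` enum and the helper functions are hypothetical names introduced for illustration. Items whose level appears only in Table 5 are left out.

```python
from enum import Enum


class Priority(Enum):
    MUST = "M"
    SHOULD = "S"


# Items and levels as stated in the text. Items whose level is given
# only in Table 5 are intentionally omitted from this sketch.
PRIORITIES = {
    "conformity to custom": Priority.MUST,
    "safety": Priority.MUST,
    "clear feedback": Priority.MUST,
    "natural interaction": Priority.MUST,
    "emergency stop": Priority.MUST,
    "high efficiency": Priority.SHOULD,
    "accurate control": Priority.SHOULD,
    "low cost": Priority.SHOULD,
    "integration with existing wheelchairs": Priority.SHOULD,
    "multimodal feedback": Priority.SHOULD,
    "eye-control input": Priority.SHOULD,
}


def items_at(level: Priority) -> list[str]:
    """Return the items assigned to one priority level, in insertion order."""
    return [name for name, p in PRIORITIES.items() if p is level]


def build_scope() -> list[str]:
    """Prototype scope: all Must Have items first, then all Should Have items."""
    return items_at(Priority.MUST) + items_at(Priority.SHOULD)
```

Calling `build_scope()` returns the eleven M-level and S-level items that the prototype is designed around, with the Must Have items listed first.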

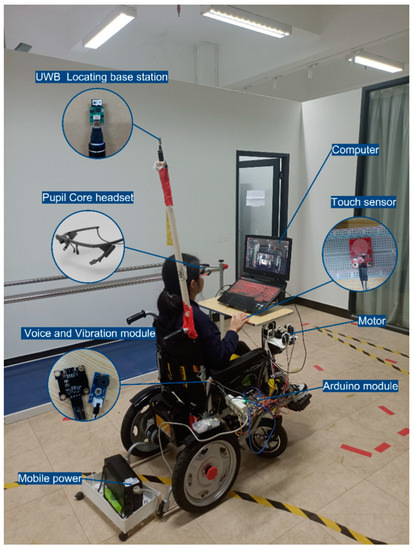

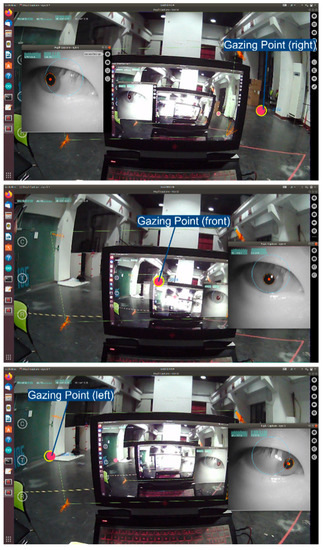

3.2.2. Sensors

The proposed gaze-controlled wheelchair system is based on a commercially available power wheelchair modified with a touch sensor, a control module, an audio and vibration module, and an eye-tracking module, enabling control through gaze interaction. Figure 5 displays the sensors used. The eye tracker (Pupil Core headset) features a world camera and an eye camera. The Pupil Core headset serves as the user interface for the proposed interactive system, detecting the user’s gaze point in the environment to control the wheelchair’s direction of travel, as illustrated in Figure 6. The audio and vibration module uses vibrating pads and a small buzzer to provide multimodal feedback on system states such as wake-up, arrival, and emergency stop.

Figure 5.

Proposed wheelchair prototype.

Figure 6.

Eye tracker to obtain the gazing point.

The control module comprises an Arduino module and four MG995 servo motors, which control the wheelchair’s movement based on the gaze information obtained from the eye-tracking device. For instance, when the user gazes forward, the control module drives the wheelchair forward. When the user gazes left (right), the control module steers the wheelchair left (right).
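The mapping from gaze to driving command can be illustrated with a short sketch. It is not the authors’ implementation: it assumes the gaze point is available as a normalized horizontal coordinate in the scene image together with a confidence value, and the thresholds and the function name `gaze_to_command` are hypothetical.

```python
from typing import Optional

# Hypothetical thresholds on the normalized horizontal gaze coordinate
# (0.0 = left edge of the scene image, 1.0 = right edge).
LEFT_BOUNDARY = 0.4
RIGHT_BOUNDARY = 0.6
MIN_CONFIDENCE = 0.6  # assumed cut-off for discarding unreliable samples


def gaze_to_command(gaze_x: float, confidence: float) -> Optional[str]:
    """Map one gaze sample to 'left', 'forward', or 'right'.

    Returns None when the sample is unreliable or outside the image.
    """
    if confidence < MIN_CONFIDENCE or not 0.0 <= gaze_x <= 1.0:
        return None
    if gaze_x < LEFT_BOUNDARY:
        return "left"
    if gaze_x > RIGHT_BOUNDARY:
        return "right"
    return "forward"
```

Returning `None` for low-confidence samples lets the caller keep the previous command or stop, rather than steering on noise such as blinks.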

The touch sensor can be mounted anywhere on the gaze-controlled wheelchair, so users can position it according to their residual capacity. Applying pressure to the sensor wakes up the wheelchair, and releasing the pressure activates the emergency stop function. Further sensor details are shown in Table 6.

Table 6.

Details of the sensors.

3.2.3. Gaze-Controlled Wheelchair System Process

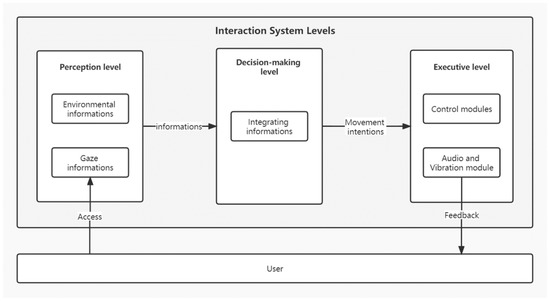

The proposed gaze-controlled wheelchair system, based on the natural gaze of the environment, consists of three layers: perception, decision making, and execution. The perception layer is primarily responsible for sensing and acquiring surrounding information, including the user’s eye movements and driving environment information. The decision layer processes the information and data from the perception layer, integrating and analyzing the collected information to determine the end user’s intended movement (go forward, turn left, or turn right). The execution layer controls the wheelchair’s direction of movement, as determined by the decision layer, through the control module. Simultaneously, it provides feedback to the user about the wheelchair’s driving status through the audio and vibration module, as illustrated in Figure 7.

Figure 7.

Interaction system level diagram.

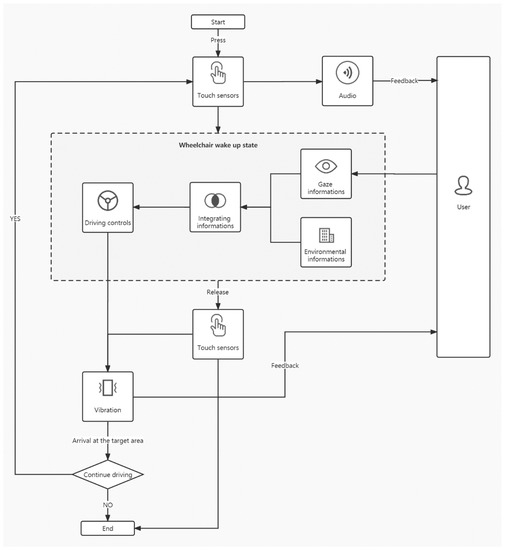

The interaction system’s functional design is divided into two main modules: wake-up/emergency stop and driving. Prioritizing safety as the most critical M-level requirement, the wake-up/emergency stop control program’s logic supersedes that of the driving module. Only when the user applies pressure to the touch sensors can the gaze-controlled wheelchair be awakened and transition into the driving phase; the wheelchair receives the wake-up command while the user is informed of the current state through audio feedback. In any driving state, the user can release the pressure applied to the touch sensor to quickly disengage the wheelchair from the driving state and initiate an emergency stop for the user’s safety. Simultaneously, vibration feedback informs the user that the wheelchair has transitioned from the driving state to the stop state, as shown in Figure 8. To ensure clear and multimodal feedback, employing various feedback methods is crucial to alert the user when the wheelchair starts or stops, helping to prevent user confusion and satisfying the clear feedback requirement.

Figure 8.

Flowchart of the interactive system.

The driving module is the main functional module. It covers most of the MoSCoW requirements, such as controlling the wheelchair in a natural, interactive way that conforms to the user’s habits and efficiently capturing the user’s driving intention to drive the power wheelchair to the target area. Specifically, the system detects the user’s eye movements and determines the position of the user’s gaze point in the environment using the eye-tracking device. After obtaining the user’s intended movement, the control module drives the gaze-controlled wheelchair toward the target area. When the wheelchair reaches the target area, it stops and emits vibration feedback to indicate arrival. The user can then gaze at the next target, causing the wheelchair to move again. By gazing at a series of nearby targets, the user moves the wheelchair repeatedly over short distances and thereby covers long distances.
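The interplay of the two modules can be sketched as a small state machine. This is an illustrative model under stated assumptions rather than the prototype’s firmware: the state names, the `step` inputs, and the feedback strings are hypothetical. What it does take from the text is that the touch sensor is evaluated before anything else, so releasing it overrides the driving logic.

```python
from enum import Enum, auto


class State(Enum):
    ASLEEP = auto()   # touch sensor not pressed
    WAITING = auto()  # awake, waiting for a gaze target
    DRIVING = auto()  # moving toward the gaze target


class WheelchairController:
    """Toy model of the wake-up/emergency-stop and driving logic."""

    def __init__(self) -> None:
        self.state = State.ASLEEP
        self.feedback: list[str] = []  # log of feedback events, for inspection

    def step(self, touch_pressed: bool, gaze_target: bool = False,
             target_reached: bool = False) -> State:
        # The touch sensor is checked first, so releasing it always
        # takes precedence over the driving logic below.
        if not touch_pressed:
            if self.state is State.DRIVING:
                self.feedback.append("vibration: emergency stop")
            self.state = State.ASLEEP
        elif self.state is State.ASLEEP:
            self.state = State.WAITING
            self.feedback.append("audio: awake")
        elif self.state is State.WAITING and gaze_target:
            self.state = State.DRIVING
        elif self.state is State.DRIVING and target_reached:
            self.state = State.WAITING
            self.feedback.append("vibration: arrived")
        return self.state
```

A long trip is then a repetition of the waiting, driving, and arriving steps while the touch sensor stays pressed.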

3.2.4. Implementation of the Gaze-Controlled Wheelchair System

We used MATLAB to collect and analyze the gaze information obtained from the eye tracker.

Following the collection of gaze information, we established a connection between the eye tracker and the Arduino board. The Arduino board evaluated the received data and determined the appropriate action based on the user’s gaze: turn left, turn right, or move forward.

To translate these decisions into physical movement, the Arduino board drove the motors that move the wheelchair joystick, steering the wheelchair in the intended direction. As the user approached the target position, they could release the touch sensor to indicate their intention to halt. In response, the Arduino board commanded the servo to bring the wheelchair to a stop, concluding the movement.

Through this integration of software and hardware components, the proposed system translated the user’s gaze information into responsive control of the wheelchair.

4. Evaluation and Results

This section focuses on testing and evaluating the prototype of the gaze-controlled wheelchair interaction system. To assess the effectiveness, usability, ease of use, and usefulness of the prototype, we conducted both an indoor simulated driving test and a System Usability Scale (SUS) evaluation. These tests aimed to evaluate the system’s performance and its ability to meet users’ needs, providing valuable feedback for future development and improvement.

4.1. Method

4.1.1. Participants

In the third phase of the study, 14 participants (8 females and 6 males) were included. All participants had a normal or corrected-to-normal visual acuity greater than 4.5. Given that the system is currently in an early research phase, all participants enlisted for this simulation trial were individuals without disadvantages. This precaution was taken to preclude potential harm to users with disadvantages, considering the nascent state of the product.

4.1.2. Experimental Site

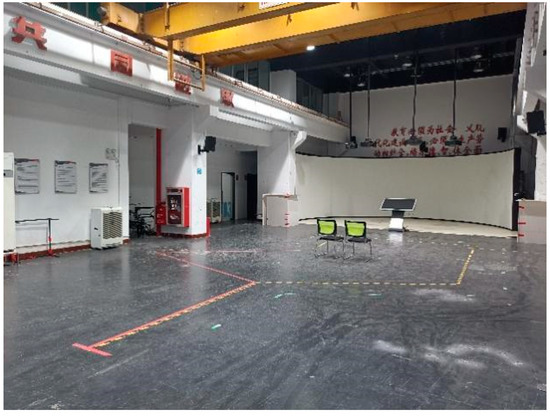

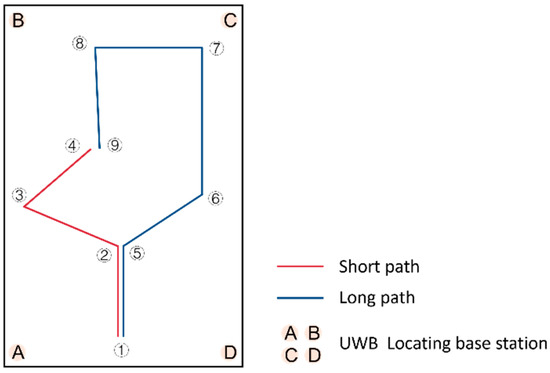

We consulted the publication by MacPhee et al. [36] for guidance on the arrangement of the experimental site. We selected an experimental site measuring 800 cm × 1215 cm. Based on daily driving needs, a short and a long experimental path were planned and marked on the ground with red and yellow–black tape, including acute, obtuse, and right-angle turns. While these two paths may not cover all scenarios of wheelchair users’ daily travel, they represent common paths. The experimental site is shown in Figure 9, and the site plan and planned paths are shown in Figure 10. The start and end points, along with the turning points, in the planned paths are sequentially labeled with numbers to facilitate further discussion and analysis.

Figure 9.

Photograph of the experimental site.

Figure 10.

Schematic diagram of the site.

4.1.3. Positioning Method

The subject’s travel path was recorded using the two-dimensional positioning mode of the Ultra-Wideband (UWB) indoor positioning system. An indoor positioning module was placed at each of the four corners of the experimental site and on the wheelchair, as shown in Figure 10. The positioning module at point A was marked as the primary base station, while B, C, and D were marked as secondary base stations. The positioning module on the wheelchair was marked as a tag. During the experiment, all base stations remained stationary. The tag moved with the wheelchair and communicated with the four base stations, transmitting location information in real time to the primary base station, which was connected to a computer to output the tag’s location information.
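As a hedged illustration of how recorded tag positions can be compared against a planned path, the sketch below computes the mean distance from each recorded position to the nearest segment of a path given as waypoints. The nearest-segment distance is an assumption made for this example; the study does not specify how deviation is computed, and the function names are hypothetical.

```python
import math

Point = tuple[float, float]


def point_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment a-b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Projection parameter, clamped so the foot point stays on the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def mean_drift(track: list[Point], path: list[Point]) -> float:
    """Mean distance from each recorded position to the nearest path segment."""
    if not track or len(path) < 2:
        raise ValueError("need at least one position and two waypoints")
    segments = list(zip(path, path[1:]))
    return sum(
        min(point_to_segment(p, a, b) for a, b in segments) for p in track
    ) / len(track)
```

The result is in the same unit as the input coordinates, so positions logged in centimetres give a deviation in centimetres.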

4.1.4. Evaluation Indicators

- Time

The time factor, serving as a critical evaluation indicator, corresponds to the duration required for a user to perform a particular task using the system. This quantitative metric provides an objective assessment of both system efficiency and user proficiency.

- 2.

- Drift

Another critical performance measure is drift, which measures the system’s precision. It is defined as the average deviation from the target or expected result, thus providing insights into the accuracy of the system’s performance.

- 3.

- System usability scale

SUS is a tool for assessing the usability of a product or system. It was developed in 1996 by John Brooke [37]. The scale consists of ten declarative sentences, and users are asked to rate their agreement with each sentence after using the product or system. The odd-numbered items on the scale have positive statements, while the even-numbered items have negative statements. SUS is highly versatile and can be used to measure a wide range of user interfaces. In the field of power wheelchair research, many researchers have used SUS to score proposed systems.

Callejas-Cuervo et al. [38] designed a prototype wheelchair with head movement control and tested it using SUS. The final mean SUS score for the 10 subjects was 78. Guedira et al. [39] proposed a haptic interface for manipulating a wheelchair and recruited three wheelchair users to test it and rate it with SUS. Panchea et al. [40] conducted a qualitative and quantitative study of an intelligent power wheelchair using SUS; their touch-controlled wheelchair with a graphical interface received a mean SUS score of 68.

- Satisfaction questionnaire

The satisfaction questionnaire is an instrumental tool to quantify user satisfaction. It helps in understanding how well the system meets user expectations and provides a subjective measure of the system’s performance from the user’s perspective.

4.1.5. Procedure

The specific procedure of the experiment was as follows:

Experiment description and operation instruction. The staff explained the experiment’s purpose, task, procedure, and precautions, helped the subject put on the eye tracker, calibrated it, and instructed the subject on the essential operation of the wheelchair and the feedback method.

Path driving. The subjects were asked to complete two driving tasks of differing difficulty. First, the subject drove the prototype wheelchair along a short path from the initial point ① to the endpoint ④. Then, they followed a long path from the initial point ① to the endpoint ⑨. Each subject was instructed to drive the gaze-controlled wheelchair along the short path once and subsequently complete two trials along the long path. Throughout, the staff observed and recorded each subject’s driving behavior and duration and ensured their safety.

Evaluation and reporting. At the end of the experiment, each participant was invited to fill in a user experience evaluation form and report on their experience of driving the gaze-controlled wheelchair.

4.2. Results

4.2.1. Driving Function Evaluation

In the gaze-controlled wheelchair system driving evaluation experiment, 14 subjects successfully reached the endpoint in 42 path-driving tests. The observed and recorded driving conditions of the subjects are shown in Table 7. To minimize the influence of accidental conditions on the experimental results, the average driving time was calculated after removing the maximum and minimum values for the two paths, respectively. As displayed in Table 7 and Table 8, all subjects were generally able to drive normally during the driving sessions. The mean time for short-distance driving was 1.13 min, and the mean time for long-distance driving was 1.79 min.
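The averaging step described above, dropping the largest and smallest values before taking the mean, can be sketched as follows. The function name `trimmed_mean` and the sample times are hypothetical and are not taken from Table 7 or Table 8.

```python
def trimmed_mean(times):
    """Return the mean after removing one maximum and one minimum value."""
    if len(times) < 3:
        raise ValueError("need at least three values to trim both extremes")
    ordered = sorted(times)
    kept = ordered[1:-1]  # drop the smallest and the largest value
    return sum(kept) / len(kept)


# Hypothetical driving times in minutes, for illustration only.
example_times = [1.0, 1.2, 0.9, 2.5, 1.1]
average = trimmed_mean(example_times)
```

In this example the outlying 2.5 min run and the fastest 0.9 min run are both excluded, so a single unusual trial does not dominate the reported mean.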

Table 7.

Driving times for short routes for 14 subjects.

Table 8.

Driving times for long routes for 14 subjects.

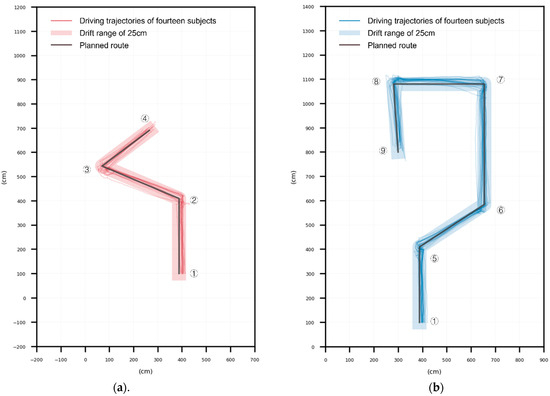

The UWB positioner located the wheelchair at a frequency of 10 Hz and transmitted its position coordinates to the computer in real time. After the experiment, the recorded coordinates of the wheelchair were compared with the coordinates of the intended route, and the drift between the two was computed. The average drifts for the 14 subjects for the 14 short and 20 long paths are shown in Table 9 and Table 10. From Table 9, the minimum drift for the short path was 9.37 cm, the maximum drift was 34.26 cm, and the average drift for the 10 tests was 19.49 cm. From Table 10, the minimum drift for the long path was 7.98 cm, the maximum drift was 33.99 cm, and the average drift over 20 tests was 15.72 cm.
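One way to compute such a drift value is to average, over all recorded samples, the distance from each sample to the nearest point on the intended route. The sketch below takes that approach under stated assumptions: the paper does not specify the exact distance definition, so the nearest-point-on-polyline rule, the function names, and the sample coordinates are all hypothetical.

```python
from math import hypot


def point_to_segment(p, a, b):
    """Shortest distance from point p to the line segment a-b."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return hypot(px - (ax + t * dx), py - (ay + t * dy))


def mean_drift(samples, route):
    """Average distance from recorded samples to a polyline route."""
    if not samples or len(route) < 2:
        raise ValueError("need at least one sample and two route points")
    segments = list(zip(route, route[1:]))
    distances = [
        min(point_to_segment(p, a, b) for a, b in segments) for p in samples
    ]
    return sum(distances) / len(distances)


# Hypothetical route (meters) with one right-angle turn, and four samples.
route = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0)]
samples = [(1.0, 0.1), (2.0, -0.2), (4.3, 1.0), (4.0, 2.0)]
drift_m = mean_drift(samples, route)
```

At a 10 Hz sampling rate, a run of about one minute would contribute roughly 600 samples to this average.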

Table 9.

The average drift of short path driving for 14 subjects.

Table 10.

The average drift of long path driving for 14 subjects.

The average drift for the short path (19.49 cm) was greater than that for the long path (15.72 cm). According to the post-test reports, the larger drift in the short path trajectories was due to the subjects’ initial unfamiliarity with the prototype gaze-controlled wheelchair interaction. During long path driving, the subjects could operate the gaze-controlled wheelchair more consistently and smoothly because of their experience with short path driving and their increased familiarity with the system’s operation, feedback, and delay times.

Figure 11a displays the short path driving trajectories of the 14 subjects (14 runs in total), while Figure 11b shows their long path driving trajectories (28 runs in total). Figure 11 indicates that all subjects followed the expected route and completed the driving evaluation. However, the recorded trajectories are offset from the intended route at point ①. This is because each driving test started with the wheelchair’s center aligned with point ①, whereas the positioning tag was fixed to the right rear of the wheelchair; consequently, the trajectory at point ① appears shifted to the right. Additionally, some trajectories showed large drifts, especially around the turning points at locations ②, ③, ⑤, ⑦, and ⑧. Field observations and post-experiment feedback from the subjects revealed two main reasons for the turn deviations: (1) the laptop was improperly positioned, obstructing the subjects’ view and making it difficult for them to determine whether they had reached the target position, and (2) jitter generated while the wheelchair was moving introduced some deviation into the recorded track.
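The rightward shift at point ① follows from simple rigid-body geometry: a tag mounted away from the wheelchair’s center reports a position displaced by its mounting offset, rotated by the current heading. The sketch below is illustrative only. The offset values, the heading convention (counterclockwise from the +x axis), and the name `tag_position` are assumptions, not measurements or code from the study.

```python
from math import cos, sin, radians


def tag_position(center, heading_deg, offset):
    """World position of a tag mounted at a fixed offset on the wheelchair.

    center      -- (x, y) of the wheelchair center in meters
    heading_deg -- driving direction, counterclockwise from the +x axis
    offset      -- (forward, left) mounting offset in the wheelchair frame
    """
    cx, cy = center
    forward, left = offset
    h = radians(heading_deg)
    # Rotate the body-frame offset into the world frame, then translate.
    x = cx + forward * cos(h) - left * sin(h)
    y = cy + forward * sin(h) + left * cos(h)
    return x, y


# Hypothetical mounting: 0.30 m behind and 0.25 m to the right of center.
REAR_RIGHT = (-0.30, -0.25)
# Wheelchair center on the start point, facing along +y.
tag_x, tag_y = tag_position((0.0, 0.0), 90.0, REAR_RIGHT)
```

With the wheelchair facing +y, the tag is reported 0.25 m toward +x (the driver’s right) and 0.30 m behind the start point, even though the wheelchair center sits exactly on it.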

Figure 11.

Driving trajectories of 14 subjects. (a) Short path driving trajectories of 14 subjects and (b) long path driving trajectories of 14 subjects.

4.2.2. User Experience Evaluation

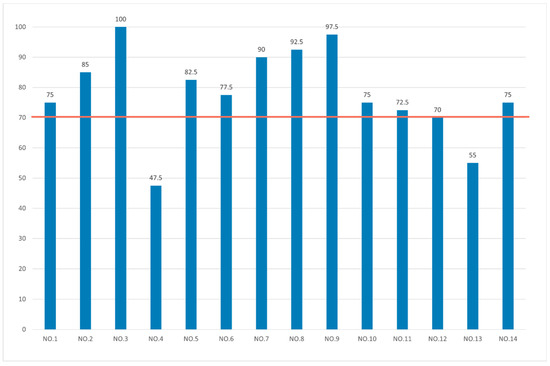

Upon completing the experiment, the subjects were asked to fill out a SUS questionnaire based on their experience using the device, as well as a satisfaction questionnaire. The SUS consists of 10 questions, each rated on a scale of 1 to 5. To calculate the SUS score, a base value ranging from 0 to 4 points was first determined for each question. For the positively worded, odd-numbered questions, the base value equaled the rating minus 1. For the negatively worded, even-numbered questions, the base value equaled 5 minus the rating. Finally, the base values of the 10 questions were summed and multiplied by 2.5 to obtain a SUS score between 0 and 100. According to a previous study [41], SUS scores can be interpreted as follows: a score below 50 is unacceptable, a score of 50–70 is marginal, and a score of 70 or higher is acceptable.
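The scoring rule above can be written compactly in code. The following is a minimal sketch, not the authors’ analysis script: the function names and the example ratings are hypothetical, and the middle band is labeled "marginal" following the terminology of Bangor et al.

```python
def sus_score(ratings):
    """Compute a SUS score (0-100) from ten ratings on a 1-5 scale."""
    if len(ratings) != 10:
        raise ValueError("SUS requires exactly ten ratings")
    total = 0
    for number, rating in enumerate(ratings, start=1):
        if not 1 <= rating <= 5:
            raise ValueError("each rating must be between 1 and 5")
        if number % 2 == 1:
            total += rating - 1  # odd items are positively worded
        else:
            total += 5 - rating  # even items are negatively worded
    return total * 2.5


def acceptability(score):
    """Map a SUS score to the three bands quoted in the text."""
    if score < 50:
        return "unacceptable"
    if score < 70:
        return "marginal"
    return "acceptable"


# Hypothetical questionnaire: agrees with positive items, disagrees with negative.
example = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
score = sus_score(example)
band = acceptability(score)
```

Because each base value is an integer from 0 to 4, an individual SUS score is always a multiple of 2.5; only averages across subjects, such as a group mean, take other values.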

The results indicated that the mean SUS score for the 14 subjects was 78.21. Based on the findings by Bangor et al. [41,42], a system is considered to have good usability when its SUS score exceeds 71.4. Figure 12 presents the SUS scores of all participants, with the orange line representing the threshold of 70 points. The graph clearly indicates that the majority of the 14 participants scored above 70, reflecting favorable usability perceptions. However, it is worth noting that two participants scored below 70, suggesting a lower level of perceived usability in their assessment. Through post-experimental interviews, we identified the following reasons for these lower scores:

- The eye tracker occasionally failed to capture the gaze position after pressing the touch sensor (due to the subject blinking or gazing too far out of position), causing the system to report an error.

- The eye tracker was positioned too close to the eyes, resulting in discomfort during extended use.

- The voice and vibration feedback was not prominent enough, so it did not give the subjects a sufficient sense of security.

Figure 12.

SUS scores for each subject.

Ten of the fourteen subjects scored over 74.1 on the SUS, indicating that they found the system’s usability acceptable and reasonable. The subjects generally reported that the gaze-controlled wheelchair interaction was easy to understand and learn, and it effectively achieved the intended mobility improvements.

The satisfaction questionnaire comprised three questions rated on a 5-point Likert scale, as illustrated in Table 11. Based on the results in Table 11, the mean score for the three questions was 4.36, suggesting that the subjects were quite satisfied with the system and would be willing to recommend it to friends in need. The proposed gaze-controlled wheelchair system fulfilled the subjects’ expectations.

Table 11.

Results of the satisfaction scale.

Based on this feedback, the future optimization directions for the gaze-controlled wheelchair are (1) shortening the delay time and improving the system’s responsiveness while maintaining system stability, and (2) further optimizing the interaction mode to reduce the load on the user’s head and eyes, as well as improving the feedback system so that it provides distinct feedback for different states, such as starting and turning.

4.2.3. Discussion

The proposed gaze-controlled wheelchair system aims to support individuals with upper limb dysfunction who encounter difficulties using traditional power wheelchairs with joysticks. Our system, founded on the extended FBS model and the MoSCoW method, enables natural gaze interaction for wheelchair control, eliminating the need for a joystick. Furthermore, the system addresses user safety and feedback concerns through an emergency stop module, as well as voice and vibration modules. This design ensures responsiveness to user needs and concerns, promoting enhanced comfort and control. The efficacy of the proposed gaze-controlled wheelchair interaction system was demonstrated through an experiment involving 14 healthy participants. The collective results indicated a promising solution for disabled users. The participants expressed optimism regarding the system’s usefulness and ease of use, which allowed them to quickly learn to operate it and navigate to their desired destinations. Additionally, they proposed performance improvements for the gaze-controlled wheelchair, such as reducing the delay time and enhancing ride comfort.

The project is currently in its preliminary development stage. Healthy volunteers were selected to participate in the experiment to mitigate potential risks to disabled individuals due to the product’s immaturity. While this may impact the authenticity of the experimental results, it enables preliminary verification of the system. In the future, we plan to optimize the system’s functions and performance and involve users with limited upper limb mobility in the experiments to derive more accurate conclusions.

During the experiment, ensuring the accuracy and effectiveness of the eye-tracking device and program operation was of paramount importance. Consequently, a laptop was placed in front of the participants for device calibration and testing. Regrettably, the laptop’s presence interfered with the users’ gaze behavior, forcing them to crane their necks to view the path ahead. In future work, we intend to explore alternative calibration methods or reposition the laptop to prevent such interference, and we will endeavor to minimize any other sources of interference that may affect users’ gaze behavior. These improvements will facilitate more accurate and reliable experimental results, enabling a deeper understanding of the system’s capabilities and limitations.

5. Conclusions

This study presents a gaze-controlled system for power wheelchairs, utilizing the extended FBS model and the MoSCoW method. We began by conducting a comprehensive analysis of user requirements derived from literature research and user interviews, according to the extended FBS model. Subsequently, the MoSCoW method was used to prioritize the identified user requirements and sort them into “Must Have” and “Should Have” categories. Based on these requirements, we developed an intuitive and efficient gaze-controlled system for power wheelchairs, designed to assist individuals who struggle to use a joystick because of manual control challenges.

The proposed system aims to reduce complexity while addressing users’ essential needs. To achieve this, critical components such as eye trackers, control modules, audio and vibration modules, and touch sensors are integrated into existing power wheelchairs. The system utilizes a three-level approach of perception, decision making, and execution to allow for the continuous analysis of the user’s intention to move short distances, thus enabling long-distance driving. The system’s effectiveness was verified through gaze-controlled wheelchair driving experiments in a simulated indoor environment. All participants successfully followed the designated route, exhibiting an average drift of less than 20 cm. Moreover, the SUS results suggest that the system possesses good usability, while the satisfaction scale indicates that the subjects expressed high levels of satisfaction with the system.

However, our study has certain limitations. Firstly, due to the impact of COVID-19 and the product being in the initial development stage, we recruited healthy individuals whose hands were bound as subjects. Secondly, the system was tested and evaluated exclusively indoors, limiting the assessment of its effectiveness and user experience in outdoor environments. Moreover, the study presents only a preliminary application of the extended FBS model without in-depth optimization and integration.

Despite these limitations, our research successfully demonstrates the process of moving from user research to requirements analysis, prototype design and implementation, and testing. By employing the extended FBS model and the MoSCoW method, we extracted and prioritized users’ real requirements, culminating in a valuable concept prototype. We also conducted simulation tests to confirm the system’s effectiveness, usability, and user satisfaction. In future research, we plan to address these limitations and develop a wheelchair system that is more beneficial, easy to use, and accessible for individuals facing manual control challenges.

Author Contributions

Conceptualization, X.Z. and J.L.; methodology, L.J.; software, Q.H. and Z.S.; validation, X.Z., J.Z. and X.L.; investigation, L.J. and J.Z.; resources, X.Z.; data curation, Q.H. and Z.S.; writing—original draft preparation, J.L.; writing—review and editing, X.Z. and D.-B.L.; funding acquisition, X.Z. and D.-B.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially supported by the 1st Batch of 2021 MOE of PRC Industry-University Collaborative Education Program (Program No. 202101042021, Kingfar-CES “Human Factors and Ergonomics” Program), the Humanity and Social Science Youth Foundation of the Ministry of Education of China (grant number: 18YJCZH249), the Guangzhou Science and Technology Planning Project (grant number: 201904010241), and the Humanity Design and Engineering Research Team (grant number: 263303306).

Institutional Review Board Statement

This study was approved by the Institutional Review Board of Guangdong University of Technology.

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

Available upon request.

Acknowledgments

We would like to thank Lanxin Hui for her contributions in the earlier stages of this project.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Krahn, G.L. WHO world report on disability: A review. Disabil. Health J. 2011, 4, 141–142.

2. Danemayer, J.; Boggs, D.; Ramos, V.D.; Smith, E.; Kular, A.; Bhot, W.; Ramos-Barajas, F.; Polack, S.; Holloway, C. Estimating need and coverage for five priority assistive products: A systematic review of global population-based research. BMJ Glob. Health 2022, 7, e007662.

3. Cojocaru, D.; Manta, L.F.; Vladu, I.C.; Dragomir, A.; Mariniuc, A.M. Using an eye gaze new combined approach to control a wheelchair movement. In Proceedings of the 2019 23rd International Conference on System Theory, Control and Computing (ICSTCC), Sinaia, Romania, 9–11 October 2019; pp. 626–631.

4. Marco, L.; Farinella, G.M. Computer Vision for Assistive Healthcare; Academic Press: Cambridge, MA, USA, 2018.

5. Abdulghani, M.M.; Al-Aubidy, K.M.; Ali, M.M.; Hamarsheh, Q.J. Wheelchair neuro fuzzy control and tracking system based on voice recognition. Sensors 2020, 20, 2872.

6. Sharifuddin, M.S.I.; Nordin, S.; Ali, A.M. Voice control intelligent wheelchair movement using CNNs. In Proceedings of the 2019 1st International Conference on Artificial Intelligence and Data Sciences (AiDAS), Ipoh, Malaysia, 19 September 2019; pp. 40–43.

7. Iskanderani, A.I.; Tamim, F.R.; Rana, M.M.; Ahmed, W.; Mehedi, I.M.; Aljohani, A.J.; Latif, A.; Shaikh, S.A.L.; Shorfuzzaman, M.; Akther, F. Voice Controlled Artificial Intelligent Smart Wheelchair. In Proceedings of the 2020 8th International Conference on Intelligent and Advanced Systems (ICIAS), Kuching, Malaysia, 13–15 July 2021; pp. 1–5.

8. Grewal, H.S.; Matthews, A.; Tea, R.; Contractor, V.; George, K. Sip-and-Puff Autonomous Wheelchair for Individuals with Severe Disabilities. In Proceedings of the 2018 9th IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 8–10 November 2018; pp. 705–710.

9. Tang, X.; Li, W.; Li, X.; Ma, W.; Dang, X. Motor imagery EEG recognition based on conditional optimization empirical mode decomposition and multi-scale convolutional neural network. Expert Syst. Appl. 2020, 149, 113285.

10. Palumbo, A.; Gramigna, V.; Calabrese, B.; Ielpo, N. Motor-imagery EEG-based BCIs in wheelchair movement and control: A systematic literature review. Sensors 2021, 21, 6285.

11. Saha, S.; Mamun, K.A.; Ahmed, K.; Mostafa, R.; Naik, G.R.; Darvishi, S.; Khandoker, A.H.; Baumert, M. Progress in Brain Computer Interface: Challenges and Opportunities. Front. Syst. Neurosci. 2021, 15, 578875.

12. Kim, J.; Park, H.; Bruce, J.; Sutton, E.; Rowles, D.; Pucci, D.; Holbrook, J.; Minocha, J.; Nardone, B.; West, D.; et al. The Tongue Enables Computer and Wheelchair Control for People with Spinal Cord Injury. Sci. Transl. Med. 2013, 5, 213ra166.

13. Vogel, J.; Hagengruber, A.; Iskandar, M.; Quere, G.; Leipscher, U.; Bustamante, S.; Dietrich, A.; Höppner, H.; Leidner, D.; Albu-Schäffer, A. EDAN: An EMG-controlled daily assistant to help people with physical disabilities. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 4183–4190.

14. Yulianto, E.; Indrato, T.B.; Nugraha, B.T.M.; Suharyati, S. Wheelchair for Quadriplegic Patient with Electromyography Signal Control Wireless. Int. J. Online Biomed. Eng. 2020, 16, 94–115.

15. Dahmani, M.; Chowdhury, M.E.H.; Khandakar, A.; Rahman, T.; Al-Jayyousi, K.; Hefny, A.; Kiranyaz, S. An Intelligent and Low-Cost Eye-Tracking System for Motorized Wheelchair Control. Sensors 2020, 20, 3936.

16. Antoniou, E.; Bozios, P.; Christou, V.; Tzimourta, K.D.; Kalafatakis, K.; Tsipouras, M.G.; Giannakeas, N.; Tzallas, A.T. EEG-Based Eye Movement Recognition Using Brain–Computer Interface and Random Forests. Sensors 2021, 21, 2339.

17. Maule, L.; Luchetti, A.; Zanetti, M.; Tomasin, P.; Pertile, M.; Tavernini, M.; Guandalini, G.M.A.; De Cecco, M. RoboEye, an Efficient, Reliable and Safe Semi-Autonomous Gaze Driven Wheelchair for Domestic Use. Technologies 2021, 9, 16.

18. Li, Y.; He, S.; Huang, Q.; Gu, Z.; Yu, Z.L. A EOG-based switch and its application for “start/stop” control of a wheelchair. Neurocomputing 2018, 275, 1350–1357.

19. Huang, Q.; Zhang, Z.; Yu, T.; He, S.; Li, Y. An EEG-/EOG-based hybrid brain-computer interface: Application on controlling an integrated wheelchair robotic arm system. Front. Neurosci. 2019, 13, 1243.

20. Choudhari, A.M.; Porwal, P.; Jonnalagedda, V.; Mériaudeau, F. An Electrooculography based Human Machine Interface for wheelchair control. Biocybern. Biomed. Eng. 2019, 39, 673–685.

21. Maule, L.; Zanetti, M.; Luchetti, A.; Tomasin, P.; Dallapiccola, M.; Covre, N.; Guandalini, G.; De Cecco, M. Wheelchair driving strategies: A comparison between standard joystick and gaze-based control. Assist. Technol. 2022, 35, 180–192.

22. Letaief, M.; Rezzoug, N.; Gorce, P. Comparison between joystick- and gaze-controlled electric wheelchair during narrow doorway crossing: Feasibility study and movement analysis. Assist. Technol. 2021, 33, 26–37.

23. Sunny, M.S.H.; Zarif, M.I.I.; Rulik, I.; Sanjuan, J.; Rahman, M.H.; Ahamed, S.I.; Wang, I.; Schultz, K.; Brahmi, B. Eye-gaze control of a wheelchair mounted 6DOF assistive robot for activities of daily living. J. Neuroeng. Rehabil. 2021, 18, 173.

24. Ishizuka, A.; Yorozu, A.; Takahashi, M. Driving Control of a Powered Wheelchair Considering Uncertainty of Gaze Input in an Unknown Environment. Appl. Sci. 2018, 8, 267.

25. Yuan, Y.; Guan, T. Design of individualized wheelchairs using AHP and Kano model. Adv. Mech. Eng. 2014, 6, 242034.

26. Rice, L.A.; Fliflet, A.; Frechette, M.; Brokenshire, R.; Abou, L.; Presti, P.; Mahajan, H.; Sosnoff, J.; Rogers, W.A. Insights on an automated fall detection device designed for older adult wheelchair and scooter users: A qualitative study. Disabil. Health J. 2022, 15, 101207.

27. Rice, L.A.; Peters, J.; Sung, J.; Bartlo, W.D.; Sosnoff, J.J. Perceptions of Fall Circumstances, Recovery Methods, and Community Participation in Manual Wheelchair Users. Am. J. Phys. Med. Rehabil. 2019, 98, 649–656.

28. Pellichero, A.; Best, K.L.; Routhier, F.; Viswanathan, P.; Wang, R.H.; Miller, W.C. Blind spot sensor systems for power wheelchairs: Obstacle detection accuracy, cognitive task load, and perceived usefulness among older adults. Disabil. Rehabil. Assist. Technol. 2021, 1–9.

29. Frank, A.O.; De Souza, L.H.; Frank, J.L.; Neophytou, C. The pain experiences of powered wheelchair users. Disabil. Rehabil. 2011, 34, 770–778.

30. Viswanathan, P.; Zambalde, E.P.; Foley, G.; Graham, J.L.; Wang, R.H.; Adhikari, B.; Mackworth, A.K.; Mihailidis, A.; Miller, W.C.; Mitchell, I.M. Intelligent wheelchair control strategies for older adults with cognitive impairment: User attitudes, needs, and preferences. Auton. Robot. 2016, 41, 539–554.

31. Sarour, M.; Jacob, T.; Kram, N. Wheelchair satisfaction among elderly Arab and Jewish patients—A cross-sectional survey. Disabil. Rehabil. Assist. Technol. 2023, 18, 363–368.

32. Gero, J.S. Design prototypes: A knowledge representation schema for design. AI Mag. 1990, 11, 26.

33. Gero, J.S.; Kannengiesser, U. The situated function–behaviour–structure framework. Des. Stud. 2004, 25, 373–391.

34. Cascini, G.; Fantoni, G.; Montagna, F. Situating needs and requirements in the FBS framework. Des. Stud. 2013, 34, 636–662.

35. del Sagrado, J.; del Águila, I.M. Assisted requirements selection by clustering. Requir. Eng. 2021, 26, 167–184.

36. MacPhee, A.H.; Kirby, R.L.; Coolen, A.L.; Smith, C.; MacLeod, D.A.; Dupuis, D.J. Wheelchair skills training program: A randomized clinical trial of wheelchair users undergoing initial rehabilitation. Arch. Phys. Med. Rehabil. 2004, 85, 41–50.

37. Brooke, J. SUS: A ’Quick and Dirty’ Usability Scale. Usability Eval. Ind. 1996, 189, 4–7.

38. Callejas-Cuervo, M.; González-Cely, A.X.; Bastos-Filho, T. Design and implementation of a position, speed and orientation fuzzy controller using a motion capture system to operate a wheelchair prototype. Sensors 2021, 21, 4344.

39. Guedira, Y.; Bimbard, F.; Françoise, J.; Farcy, R.; Bellik, Y. Tactile Interface to Steer Power Wheelchairs: A Preliminary Evaluation with Wheelchair Users. In Proceedings of the Computers Helping People with Special Needs: 16th International Conference, ICCHP 2018, Linz, Austria, 11–13 July 2018; pp. 424–431.

40. Panchea, A.M.; Todam Nguepnang, N.; Kairy, D.; Ferland, F. Usability Evaluation of the SmartWheeler through Qualitative and Quantitative Studies. Sensors 2022, 22, 5627.

41. Bangor, A.; Kortum, P.T.; Miller, J.T. An Empirical Evaluation of the System Usability Scale. Int. J. Human Comput. Interact. 2008, 24, 574–594.

42. Bangor, A.; Kortum, P.; Miller, J. Determining what individual SUS scores mean: Adding an adjective rating scale. J. Usability Stud. 2009, 4, 114–123.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).