Abstract

Contrastive learning methods for sequential recommendation randomly augment user sequences to alleviate the data sparsity problem. However, random augmentation does not guarantee that the augmented positive views remain semantically similar to the original sequence. To address this issue, we propose graph neural network-guided contrastive learning for sequential recommendation (GC4SRec). The guided process employs graph neural networks to obtain user embeddings, an encoder to determine the importance score of each item, and two data augmentation methods (mask and crop) to construct contrastive views based on the importance scores. Experiments on three publicly available datasets show that GC4SRec improves the hit ratio (HR) and normalized discounted cumulative gain (NDCG) metrics by 1.4% and 1.7%, respectively. These results indicate that the model enhances recommendation performance and mitigates the data sparsity problem.

1. Introduction

Recommender systems play a key role in e-commerce, video streaming, music platforms, and many other online services, as they address the problem of information overload. Research on sequential recommendation is of great importance and has gained widespread attention in recent years. Given a sequence of user behaviors, sequential recommendation captures the transition patterns between consecutive items and predicts the next item that the user may be interested in. GRU4Rec [1] uses recurrent neural networks (RNNs) to model the sequential behavior of users. SASRec [2] uses a self-attention mechanism to capture high-level information from user behavior. Additionally, recommendation algorithms based on graph neural networks (GNNs) convert each behavior sequence into a sequence graph.

Self-supervised learning (SSL) mines self-supervised signals from the sequences themselves to mitigate data sparsity. Contrastive learning, a typical self-supervised learning method, has received increasing attention in various fields. It constructs positive and negative samples from the data and learns representations that maximize the agreement between positive pairs while minimizing the agreement between negative pairs. S3Rec [3] and CL4SRec [4] perform data-level augmentation on user behavior sequences, whereas DuoRec [5] performs model-level augmentation.

The above contrastive learning methods obtain self-supervised signals solely from the sequences themselves. Because each user behavior sequence contains a limited number of items, the self-supervised signals obtained from it are insufficient. Moreover, S3Rec and CL4SRec generate contrastive views through simple data augmentation methods (such as item crop) applied to user-item interaction sequences, resulting in low diversity of contrastive views and weak self-supervised signals. To address these issues, the main contributions of this paper are as follows:

- (1) We use graph neural networks to capture local context information and an encoder to extract sequence context information, which together generate an importance score for each item.

- (2) We guide the generation of positive views for contrastive learning with graph neural networks, so that the positive views remain semantically similar to the original sequence.

- (3) We generate positive views with two data augmentation methods, mask and crop, based on the importance score of each item.

2. Related Work

2.1. Sequential Recommendation

Sequential recommendation predicts the next item that a user may purchase by learning from the user's behavior sequence. Early research modeled the relationships between items with Markov chains (MC), which infer the next item to be purchased from the item(s) the user purchased previously. For instance, FPMC [6] captures sequential patterns through first-order Markov chains, and this idea was later extended to high-order Markov chains. With the development of deep neural networks, the focus of sequential recommendation research has shifted towards neural models. RNN-based methods [7,8] treat user behavior sequences as a sequence modeling problem and apply recurrent neural networks to capture sequential transition patterns, while CNN-based algorithms [9] treat the sequence as an image and model it with convolutional networks.
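To make the Markov-chain idea concrete, the following Python sketch estimates first-order transition probabilities by counting item-to-item transitions and ranks the items that most often follow the user's last item. It is a toy illustration under simplifying assumptions, not FPMC itself (FPMC additionally factorizes personalized transition matrices), and the function names are hypothetical.

```python
from collections import Counter, defaultdict


def fit_transitions(sequences):
    """Count first-order item-to-item transitions over all user sequences."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev_item, next_item in zip(seq, seq[1:]):
            counts[prev_item][next_item] += 1
    return counts


def predict_next(counts, last_item, k=3):
    """Rank up to k candidate items by the estimated P(next item | last_item)."""
    followers = counts.get(last_item)
    if not followers:
        return []
    total = sum(followers.values())
    # Sort by descending count, breaking ties by item identifier.
    ranked = sorted(followers.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(item, c / total) for item, c in ranked[:k]]


sequences = [["a", "b", "c"], ["a", "b", "d"], ["b", "c", "a"]]
model = fit_transitions(sequences)
print(predict_next(model, "b"))  # → [('c', 0.6666666666666666), ('d', 0.3333333333333333)]
```

Ties are broken by item identifier so that the ranking is deterministic; an unseen last item yields an empty list.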

Inspired by the effectiveness of self-attention in natural language processing (NLP), SASRec was the first to apply the attention mechanism to sequential recommendation, learning item transition patterns by stacking multi-head attention blocks. MIND [10] utilizes dynamic routing to obtain multiple interests of users. GNN-based models [11,12,13] are used to capture structural information in behavior sequences. GC-SAN [14] captures rich local dependencies through a graph neural network and learns long-term dependencies for each sequence through the self-attention mechanism.

2.2. Contrastive Learning

The main idea of contrastive learning is to learn informative representations by contrasting positive and negative views. This approach has led to significant achievements in computer vision [15], natural language processing [16], and graph neural networks [17]. Recently, some studies have introduced contrastive learning into recommender systems [18]. For example, SGL [19] uses node self-discrimination as a self-supervised task, providing auxiliary signals for existing GNN-based recommendation models. SEPT [20] designs a socially aware self-supervised framework that learns self-supervised signals from the user-item bipartite graph and the social network graph. S3Rec designs four auxiliary self-supervised objectives based on mutual information maximization to learn data representations. CL4SRec applies three kinds of data augmentation (i.e., crop, mask, and reorder) to generate positive examples. DuoRec proposes a model-level augmentation method and adopts a supervised positive sampling strategy to capture self-supervised signals in the sequence.

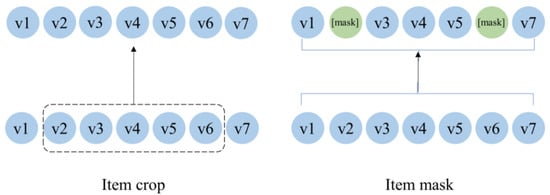

As shown in Figure 1, we use two augmentation methods: mask and crop. The item masking strategy randomly masks items and replaces them with a special token [mask]. The idea behind this method is that a user's intent is relatively stable over a period of time, so even if some items are masked, the main intent is still preserved in the remaining items. Random crop is a common data augmentation technique for increasing the variety of images in computer vision; it creates a random sub-region of an original image to help the model generalize better. Inspired by random cropping of images, we use item crop augmentation for the contrastive learning task in sequential recommendation.

Figure 1.

Augmentation methods.
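The random mask and crop operations described above can be sketched as follows. The sketch assumes CL4SRec-style operators, where crop keeps a random contiguous subsequence; the ratio parameters `gamma` and `eta` and the function names are illustrative assumptions rather than the settings used in this paper.

```python
import random

MASK = "[mask]"


def random_mask(seq, gamma, rng):
    """Replace a random fraction gamma of the items with the [mask] token."""
    n_mask = int(gamma * len(seq))
    positions = set(rng.sample(range(len(seq)), n_mask))
    return [MASK if i in positions else item for i, item in enumerate(seq)]


def random_crop(seq, eta, rng):
    """Keep a random contiguous subsequence covering a fraction eta of the items."""
    length = max(1, int(eta * len(seq)))
    start = rng.randint(0, len(seq) - length)  # randint is inclusive on both ends
    return seq[start:start + length]


rng = random.Random(0)
seq = [10, 11, 12, 13, 14, 15]
print(random_mask(seq, 0.5, rng))
print(random_crop(seq, 0.5, rng))
```

Both operators ignore item importance entirely, which is exactly the weakness the guided variants in Section 3 aim to address.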

2.3. Guided Method

Recent research has found that data-driven recommender systems may pose serious threats to users and society, such as spreading fake news on social media to manipulate public opinion, or inferring private information from recommendation results. Therefore, to mitigate the negative impacts of recommender systems and increase public trust in the technology, the trustworthiness of recommender systems is receiving increasing attention. Some studies address explainable recommender systems [21], but these methods mainly explain why the algorithm recommends certain items. GC4SRec instead draws on general-purpose explanation methods [22,23], which were originally designed to identify the input features that explain a model's output. Applying these methods to sequential recommendation makes it possible to identify the historical items in a user sequence that explain the predicted next item and to assign importance scores to them. For example, saliency [23] obtains attribution scores for input features by back-propagating the gradient of the model output with respect to those features. Attention-based mechanisms model the relative importance of items through attention weights; however, using attention as an explanation is controversial.
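As a dependency-free illustration of gradient-based saliency, the sketch below estimates the gradient of a toy scoring function with respect to every dimension of every item embedding using central finite differences. The scoring function (a dot product between the mean item embedding and a target embedding) is a hypothetical stand-in for a trained model; a real implementation would obtain the same gradients with automatic differentiation.

```python
def toy_score(item_embs, target):
    """Hypothetical next-item score: mean item embedding dotted with a target embedding."""
    d = len(target)
    mean = [sum(e[j] for e in item_embs) / len(item_embs) for j in range(d)]
    return sum(m * t for m, t in zip(mean, target))


def saliency(f, item_embs, eps=1e-5):
    """Central-difference estimate of df/de[i][j] for every item i and dimension j."""
    grads = []
    for i, emb in enumerate(item_embs):
        row = []
        for j in range(len(emb)):
            plus = [list(e) for e in item_embs]
            minus = [list(e) for e in item_embs]
            plus[i][j] += eps
            minus[i][j] -= eps
            row.append((f(plus) - f(minus)) / (2 * eps))
        grads.append(row)
    return grads


target = [1.0, -2.0]
embs = [[0.5, 0.1], [0.2, 0.3], [0.9, -0.4]]
g = saliency(lambda e: toy_score(e, target), embs)
print(g)
```

For this linear toy score the exact gradient for every item is target / n, here [1/3, -2/3], which the finite-difference estimate reproduces up to rounding error.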

3. GC4SRec

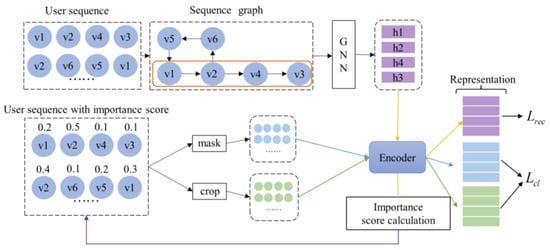

The sequential recommendation model GC4SRec proposed in this paper, whose structure is shown in Figure 2, consists of two phases: the graph neural network-guided phase and the contrastive learning phase. In the guided phase, the model transforms the user sequence into a graph, learns contextual information about the user sequence through a GNN, and generates an importance score for each item in the sequence. In the contrastive learning phase, the model applies data augmentation guided by the importance scores to generate positive views.

Figure 2.

The framework of the model.

3.1. Graph Neural Network-Guided Approach

Sequential recommendation predicts what users want to click or buy next based on their behavior sequences. Let $U$ be the set of users and $V$ be the set of items. For each user $u \in U$, the behavior sequence is represented as a series of clicks $s_u = [v_{u,1}, v_{u,2}, \ldots, v_{u,n}]$, where $v_{u,t} \in V$ denotes the item clicked by user $u$ at time step $t$.

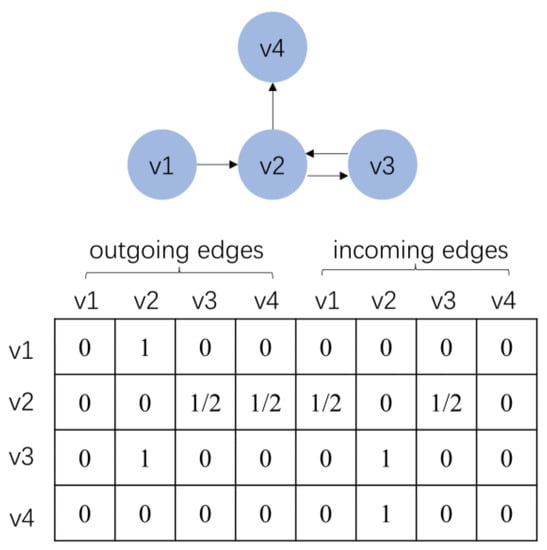

The user behavior sequences can be constructed as sequence graphs. Given a sequence $s$, its graph is $\mathcal{G}_s = (\mathcal{V}_s, \mathcal{E}_s)$, where each item $v_{s,i} \in \mathcal{V}_s$ is a node of the graph and $(v_{s,i-1}, v_{s,i}) \in \mathcal{E}_s$ is an edge, indicating that the user clicked on item $v_{s,i}$ after item $v_{s,i-1}$ in sequence $s$. Since some items may appear in the sequence repeatedly, we assign each edge a normalized weight, calculated as the number of occurrences of the edge divided by the outdegree of the edge's start node. The connection matrix $\mathbf{A}_s$ holds the weighted connections of the outgoing and incoming edges of the session graph; the graph and matrix corresponding to an example sequence are shown in Figure 3.

Figure 3.

Sessions of a session graph and the connection matrix.
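A minimal sketch of the sequence-graph construction is given below. It assumes that the out-degree of a node counts every occurrence of its outgoing edges and, following SR-GNN-style implementations, normalizes the incoming matrix by the in-degree of each edge's end node; these choices and the function name are assumptions, since only the out-degree normalization is stated above.

```python
from collections import Counter


def build_session_graph(seq):
    """Return (nodes, A_out, A_in) for a click sequence (SR-GNN-style sketch)."""
    nodes = list(dict.fromkeys(seq))  # unique items in first-occurrence order
    idx = {v: i for i, v in enumerate(nodes)}
    n = len(nodes)
    edges = Counter(zip(seq, seq[1:]))  # occurrence count of each directed edge
    out_deg, in_deg = Counter(), Counter()
    for (u, v), c in edges.items():
        out_deg[u] += c
        in_deg[v] += c
    A_out = [[0.0] * n for _ in range(n)]
    A_in = [[0.0] * n for _ in range(n)]
    for (u, v), c in edges.items():
        A_out[idx[u]][idx[v]] = c / out_deg[u]  # normalized by the start node's out-degree
        A_in[idx[v]][idx[u]] = c / in_deg[v]    # assumed: normalized by the end node's in-degree
    return nodes, A_out, A_in


nodes, A_out, A_in = build_session_graph([1, 2, 3, 2, 4])
A_s = [out_row + in_row for out_row, in_row in zip(A_out, A_in)]
print(nodes)
print(A_s)
```

For the sequence [1, 2, 3, 2, 4], item 2 is followed once by item 3 and once by item 4, so both of its outgoing edges receive weight 0.5. Concatenating each row of the two matrices gives an n × 2n connection matrix.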

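GNNs are well suited for sequential recommendation because they automatically extract the features of the sequence graph while considering rich node connections. Let $\mathbf{v}_t \in \mathbb{R}^{d}$ (treated as a row vector) denote the embedding vector of node $v_{s,t}$. The information propagation between different nodes can be formalized as:

$$\mathbf{a}_t = \operatorname{Concat}\left(\mathbf{M}_t^{I}\left(\mathbf{V}\mathbf{W}_a^{I} + \mathbf{b}^{I}\right),\ \mathbf{M}_t^{O}\left(\mathbf{V}\mathbf{W}_a^{O} + \mathbf{b}^{O}\right)\right)$$

where $\mathbf{V} = [\mathbf{v}_1, \ldots, \mathbf{v}_n]^{\top} \in \mathbb{R}^{n \times d}$ stacks the node embeddings as rows, $\mathbf{W}_a^{I}, \mathbf{W}_a^{O} \in \mathbb{R}^{d \times d}$ are the parameter matrices, $\mathbf{b}^{I}, \mathbf{b}^{O} \in \mathbb{R}^{d}$ are the bias vectors, $\mathbf{M}_t^{I}, \mathbf{M}_t^{O} \in \mathbb{R}^{1 \times n}$ are the $t$-th rows of the incoming and outgoing connection matrices corresponding to node $v_{s,t}$, and $\mathbf{a}_t \in \mathbb{R}^{1 \times 2d}$ extracts the contextual information of the neighborhood of $v_{s,t}$. Then, we feed $\mathbf{a}_t$ and $\mathbf{v}_t$ into the GNN. The final output of the GNN layer is calculated as follows:

$$\mathbf{z}_t = \sigma(\mathbf{a}_t\mathbf{W}_z + \mathbf{v}_t\mathbf{P}_z)$$

$$\mathbf{r}_t = \sigma(\mathbf{a}_t\mathbf{W}_r + \mathbf{v}_t\mathbf{P}_r)$$

$$\tilde{\mathbf{h}}_t = \tanh\left(\mathbf{a}_t\mathbf{W}_h + (\mathbf{r}_t \odot \mathbf{v}_t)\mathbf{P}_h\right)$$

$$\mathbf{h}_t = (1 - \mathbf{z}_t) \odot \mathbf{v}_t + \mathbf{z}_t \odot \tilde{\mathbf{h}}_t$$

where $\mathbf{W}_z, \mathbf{W}_r, \mathbf{W}_h \in \mathbb{R}^{2d \times d}$ and $\mathbf{P}_z, \mathbf{P}_r, \mathbf{P}_h \in \mathbb{R}^{d \times d}$ are trainable parameters, $\sigma(\cdot)$ is the sigmoid function, and $\odot$ is element-wise multiplication. $\mathbf{z}_t$ and $\mathbf{r}_t$ are the update gate and reset gate, respectively, which decide what information is preserved and what is discarded. After the sequence is fed into the GNN, the embedding vectors of all nodes in the sequence graph, $\mathbf{H} = [\mathbf{h}_1, \ldots, \mathbf{h}_n]^{\top} \in \mathbb{R}^{n \times d}$, are obtained. They are then fed into the self-attention layer to capture the global user preference.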
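The gated GNN update described above (update gate, reset gate, and candidate state) can be traced with the following pure-Python sketch for a single node. It assumes the standard GRU-style gate formulation used by SR-GNN and GC-SAN; the dimensions, random weights, and helper names are illustrative, and a practical implementation would use a tensor library and update all nodes in a batch.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def vadd(x, y):
    """Element-wise sum of two vectors."""
    return [a + b for a, b in zip(x, y)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def ggnn_update(a_t, v_t, Wz, Pz, Wr, Pr, Wh, Ph):
    """One gated node update.

    a_t: neighborhood message of length 2d; v_t: current node embedding of length d.
    W* are stored as d rows of length 2d and P* as d rows of length d, i.e. the
    transposes of the row-vector parameter matrices.
    """
    z = [sigmoid(s) for s in vadd(matvec(Wz, a_t), matvec(Pz, v_t))]  # update gate
    r = [sigmoid(s) for s in vadd(matvec(Wr, a_t), matvec(Pr, v_t))]  # reset gate
    gated = [ri * vi for ri, vi in zip(r, v_t)]  # reset gate applied element-wise
    h_tilde = [math.tanh(s) for s in vadd(matvec(Wh, a_t), matvec(Ph, gated))]
    # Interpolate between the old state and the candidate state.
    return [(1 - zi) * vi + zi * hi for zi, vi, hi in zip(z, v_t, h_tilde)]


d = 2
rng = random.Random(0)


def rand_matrix(rows, cols):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


Wz, Wr, Wh = (rand_matrix(d, 2 * d) for _ in range(3))
Pz, Pr, Ph = (rand_matrix(d, d) for _ in range(3))
print(ggnn_update([0.2, -0.1, 0.4, 0.0], [1.0, -2.0], Wz, Pz, Wr, Pr, Wh, Ph))
```

With all-zero weights both gates equal 0.5 and the candidate state is zero, so the update simply halves the node embedding; this is a convenient sanity check.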

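Given the node embeddings $\mathbf{H}$ produced by the GNN, the self-attention layer computes

$$\mathbf{F} = \operatorname{softmax}\left(\frac{(\mathbf{H}\mathbf{W}^{Q})(\mathbf{H}\mathbf{W}^{K})^{\top}}{\sqrt{d}}\right)\mathbf{H}\mathbf{W}^{V}$$

where $\mathbf{W}^{Q}, \mathbf{W}^{K}, \mathbf{W}^{V} \in \mathbb{R}^{d \times d}$ are the projection matrices. On this basis, we apply a two-layer feed-forward network to give the model non-linearity, and add a residual connection after the feed-forward network so that the model can more easily leverage low-layer information:

$$\mathbf{E} = \operatorname{ReLU}(\mathbf{F}\mathbf{W}_1 + \mathbf{b}_1)\mathbf{W}_2 + \mathbf{b}_2 + \mathbf{F}$$

where $\mathbf{W}_1, \mathbf{W}_2$ are $d \times d$ matrices and $\mathbf{b}_1, \mathbf{b}_2$ are $d$-dimensional vectors. Denoting the above self-attention block by $\mathbf{E} = \operatorname{SAN}(\mathbf{H})$, the multi-layer self-attention network is defined as follows:

$$\mathbf{E}^{(1)} = \operatorname{SAN}(\mathbf{H}), \qquad \mathbf{E}^{(l)} = \operatorname{SAN}(\mathbf{E}^{(l-1)}), \quad l = 2, \ldots, L$$

$\mathbf{E}^{(L)}$ is the output of the multi-layer self-attention network. Finally, the next-click probability of each candidate item is predicted as follows:

$$\hat{y}_i = \operatorname{softmax}\left(\mathbf{E}^{(L)}_n \mathbf{v}_i^{\top}\right)$$

where $\mathbf{E}^{(L)}_n$ is the last row of $\mathbf{E}^{(L)}$, $\hat{y}_i$ denotes the recommendation probability of item $v_i$, and $\mathbf{v}_i$ is the item embedding of $v_i$.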
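The following sketch implements a single-head version of scaled dot-product self-attention and the softmax scoring of candidate items. It is a simplified illustration under stated assumptions: it omits multi-head projections, the feed-forward network, residual connections, and layer stacking, and it leaves the choice of sequence representation to the caller (here, the last row of the attention output). All function names are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def self_attention(H, WQ, WK, WV):
    """Single-head attention: softmax(Q K^T / sqrt(d)) V with Q = H WQ, K = H WK, V = H WV."""
    Q, K, V = matmul(H, WQ), matmul(H, WK), matmul(H, WV)
    d = len(Q[0])
    out = []
    for q in Q:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K])
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


def next_item_probs(seq_repr, item_embs):
    """Softmax over dot-product scores between a sequence representation and each item embedding."""
    return softmax([sum(a * b for a, b in zip(seq_repr, v)) for v in item_embs])


H = [[0.1, 0.4], [0.3, -0.2], [0.5, 0.0]]
I2 = [[1.0, 0.0], [0.0, 1.0]]
F = self_attention(H, I2, I2, I2)
print(next_item_probs(F[-1], [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]))
```

Each output row is a convex combination of the value rows, so with identity projections and orthonormal inputs every row sums to 1.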

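To obtain the importance score of each item in the user sequence, let $\mathbf{w}_s = [w_{s,1}, w_{s,2}, \ldots, w_{s,n}]$ be the importance scores of the items in sequence $s$, where $w_{s,i}$ denotes the importance score of item $v_{s,i}$. In the item embedding matrix $[\mathbf{e}_{s,1}, \ldots, \mathbf{e}_{s,n}]$, where $\mathbf{e}_{s,i} \in \mathbb{R}^{d}$ is the embedding of item $v_{s,i}$, the $d$-dimensional importance score of $v_{s,i}$ is denoted by $\mathbf{g}_{s,i} = [g_{s,i,1}, \ldots, g_{s,i,d}]$. By summing and normalizing the $d$-dimensional importance score, the importance score of $v_{s,i}$ is obtained as:

$$w_{s,i} = \frac{\sum_{j=1}^{d} g_{s,i,j}}{\sum_{l=1}^{n} \sum_{j=1}^{d} g_{s,l,j}}$$

The value of $w_{s,i}$ lies in [0, 1] and indicates the importance of $v_{s,i}$ in session $s$. Importance scores are relative: all importance scores in a session sum to 1.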
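The summation and normalization step can be sketched as follows. Because every score must lie in [0, 1] and the scores of a session must sum to 1, the sketch assumes that the absolute values of the d-dimensional scores are summed; it also falls back to uniform scores when every entry is zero. Both choices are assumptions, as is the function name.

```python
def importance_scores(dim_scores):
    """Turn per-dimension scores (one row of length d per item) into importances summing to 1.

    Assumption: absolute values are summed so that every importance is non-negative.
    """
    per_item = [sum(abs(x) for x in row) for row in dim_scores]
    total = sum(per_item)
    if total == 0:
        # Assumed fallback: no signal, so treat all items as equally important.
        return [1.0 / len(per_item)] * len(per_item)
    return [s / total for s in per_item]


print(importance_scores([[1.0, -1.0], [0.5, 0.5], [0.0, 0.0]]))
# → [0.6666666666666666, 0.3333333333333333, 0.0]
```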

3.2. Contrastive Learning

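The model uses two augmentation methods: mask and crop. In the crop operation, the model removes items with low importance scores. Assume that in session $s$ the model removes the $k$ lowest-scoring items, where $k = \lfloor \mu \cdot |s| \rfloor$ for a hyper-parameter $\mu \in (0, 1)$, and let $s^{\mathrm{low}}_{k}$ denote the subsequence composed of the $k$ lowest-scoring items in session $s$. The guided positive and negative views are defined as follows:

$$s^{+}_{\mathrm{crop}} = s \setminus s^{\mathrm{low}}_{k}, \qquad s^{-}_{\mathrm{crop}} = s^{\mathrm{low}}_{k}$$

where $s \setminus s^{\mathrm{low}}_{k}$ keeps the remaining items in their original order.

For the mask operation on session $s$, which hides items with low importance scores, the positive view is defined as follows:

$$s^{+}_{\mathrm{mask}} = [\tilde{v}_{s,1}, \ldots, \tilde{v}_{s,n}], \qquad \tilde{v}_{s,i} = \begin{cases} [\mathrm{mask}], & v_{s,i} \in s^{\mathrm{low}}_{k} \\ v_{s,i}, & \text{otherwise} \end{cases}$$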
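A minimal sketch of the guided crop and mask operations is shown below, assuming k = ⌊μ · |s|⌋ for a ratio hyper-parameter μ and breaking score ties by position; the symbol μ, the tie-breaking rule, and the function names are illustrative assumptions. The crop returns both the remaining sequence and the removed low-importance subsequence, and the remaining items keep their original order.

```python
import math

MASK = "[mask]"


def lowest_k_positions(scores, mu):
    """Positions of the k = floor(mu * n) lowest-scoring items (ties broken by position)."""
    k = math.floor(mu * len(scores))
    order = sorted(range(len(scores)), key=lambda i: (scores[i], i))
    return set(order[:k])


def guided_crop(seq, scores, mu):
    """Drop the k least important items; return (positive view, removed subsequence)."""
    low = lowest_k_positions(scores, mu)
    positive = [v for i, v in enumerate(seq) if i not in low]
    removed = [v for i, v in enumerate(seq) if i in low]
    return positive, removed


def guided_mask(seq, scores, mu):
    """Replace the k least important items with the [mask] token."""
    low = lowest_k_positions(scores, mu)
    return [MASK if i in low else v for i, v in enumerate(seq)]


seq = ["a", "b", "c", "d", "e"]
scores = [0.30, 0.05, 0.25, 0.10, 0.30]
print(guided_crop(seq, scores, 0.4))  # → (['a', 'c', 'e'], ['b', 'd'])
print(guided_mask(seq, scores, 0.4))  # → ['a', '[mask]', 'c', '[mask]', 'e']
```

Unlike the random operators, both views here are deterministic given the importance scores, so the high-importance items always survive augmentation.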

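GC4SRec is based on CL4SRec, and the guided operations are used to generate the positive and negative examples for contrastive learning. The loss function consists of two parts, a recommendation loss and a guided contrastive loss:

$$\mathcal{L} = \mathcal{L}_{\mathrm{rec}} + \mathcal{L}_{\mathrm{cl}}$$

$$\mathcal{L}_{\mathrm{rec}} = -\log \frac{\exp(\mathbf{s}^{\top}\mathbf{v}^{+})}{\exp(\mathbf{s}^{\top}\mathbf{v}^{+}) + \sum_{v^{-} \in \mathcal{V}^{-}} \exp(\mathbf{s}^{\top}\mathbf{v}^{-})}$$

$$\mathcal{L}_{\mathrm{cl}} = -\log \frac{\exp(\operatorname{sim}(\tilde{\mathbf{s}}^{m}, \tilde{\mathbf{s}}^{c}))}{\exp(\operatorname{sim}(\tilde{\mathbf{s}}^{m}, \tilde{\mathbf{s}}^{c})) + \sum_{\mathbf{s}^{-} \in S^{-}} \exp(\operatorname{sim}(\tilde{\mathbf{s}}^{m}, \mathbf{s}^{-}))}$$

where $\mathbf{s}$ is the embedding representation of the session, $\mathbf{v}^{+}$ is the embedding of the next item $v^{+}$ to be clicked in the session, and $\mathbf{v}^{-}$ is the embedding of a negative item $v^{-} \in \mathcal{V}^{-}$. $\tilde{\mathbf{s}}^{m}$ and $\tilde{\mathbf{s}}^{c}$ are the representations of the contrastive views generated by mask and crop on the sequence, respectively, $S^{-}$ denotes the set of negative view representations, and $\operatorname{sim}(\cdot, \cdot)$ is the similarity function.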
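The two loss terms can be sketched with a single numerically stable cross-entropy helper. The sketch assumes CL4SRec-style forms, namely a sampled-softmax recommendation loss with dot-product scores and an InfoNCE contrastive loss between the mask view and the crop view with dot-product similarity; the negatives are supplied by the caller (for example, other sequences in the mini-batch), and the temperature parameter and all function names are assumptions.

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def softmax_ce(pos_logit, neg_logits):
    """-log(exp(pos) / (exp(pos) + sum(exp(neg)))), computed with log-sum-exp."""
    logits = [pos_logit] + list(neg_logits)
    m = max(logits)
    log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denom - pos_logit


def rec_loss(seq_repr, pos_item, neg_items):
    """Sampled-softmax next-item loss with dot-product scores."""
    return softmax_ce(dot(seq_repr, pos_item), [dot(seq_repr, v) for v in neg_items])


def contrastive_loss(view_mask, view_crop, negatives, temperature=1.0):
    """InfoNCE: pull the two augmented views together and push the negatives away."""
    pos = dot(view_mask, view_crop) / temperature
    negs = [dot(view_mask, n) / temperature for n in negatives]
    return softmax_ce(pos, negs)


s = [1.0, 0.0]
total = rec_loss(s, [2.0, 0.0], [[0.0, 1.0]]) + contrastive_loss(s, [0.9, 0.1], [[-1.0, 0.0]])
print(total)
```

Subtracting the maximum logit before exponentiating keeps the computation stable for large scores; the loss is zero when there are no negatives, since the positive then takes all the probability mass.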

4. Experiment

4.1. Experiment Settings

For all models with learnable embedding layers, the embedding dimension is set to 64 and the training batch size to 256. For each baseline model, all other hyper-parameters are set according to the optimal settings reported in the original paper. For GC4SRec, all parameters are initialized from a truncated normal distribution in the range [−0.01, 0.01] and optimized with the Adam optimizer at a learning rate of 0.001. We tune the hyper-parameter that controls how many items are cropped or masked over the range 0.1 to 0.9 with a step size of 0.1.

4.2. Dataset

We conducted experiments on three public datasets collected from real-world platforms. The first is MovieLens-1M, which is widely used for evaluating recommendation algorithms. The other two, Beauty and Sports, were obtained from Amazon, one of the largest e-commerce platforms in the world, and are split by top-level product category.

For dataset preprocessing, we converted all numeric ratings or the presence of a review to “1” and all other entries to “0”. To guarantee that each user and item has enough interactions, we kept only the “5-core” datasets, iteratively discarding users and items with fewer than 5 interaction records. The dataset statistics are shown in Table 1:

Table 1.

Datasets.
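The iterative 5-core filtering described above can be sketched as follows. Removing a sparse item can push a user below the threshold (and vice versa), so the filter repeats until no further interaction is removed. The sketch assumes the interactions have already been binarized into (user, item) pairs; the function name is hypothetical.

```python
from collections import Counter


def k_core_filter(interactions, k=5):
    """Iteratively drop users and items with fewer than k interactions."""
    data = list(interactions)
    while True:
        user_counts = Counter(u for u, _ in data)
        item_counts = Counter(i for _, i in data)
        kept = [(u, i) for u, i in data
                if user_counts[u] >= k and item_counts[i] >= k]
        if len(kept) == len(data):  # nothing removed in this pass: converged
            return kept
        data = kept


pairs = [("u1", "a"), ("u1", "b"), ("u2", "a"), ("u2", "b"), ("u3", "a"), ("u3", "c")]
print(k_core_filter(pairs, k=2))
```

In this toy example with k = 2, item c is dropped first, which leaves user u3 with a single interaction, so u3 is removed in the second pass.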

4.3. Evaluation

For each user, the last interacted item was used as test data, the item immediately before it was used as validation data, and the remaining items were used for training. The model ranked all items that the user has not interacted with according to their predicted scores. We employed hit ratio (HR) and normalized discounted cumulative gain (NDCG), which are widely used in related work, to evaluate the performance of each method. HR measures whether the ground-truth item appears in the top-K list, while NDCG additionally accounts for its position in the ranking. In this work, we report HR and NDCG with K = 5 and 10.
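Under this leave-one-out protocol each user has exactly one held-out item, so HR@K and NDCG@K reduce to the simple per-user formulas sketched below; the reported values are averages over users. The function names are illustrative, and the ranked list is assumed to contain only items the user has not interacted with.

```python
import math


def hit_ratio_at_k(ranked_items, target, k):
    """1.0 if the held-out item is among the top-k recommendations, else 0.0."""
    return 1.0 if target in ranked_items[:k] else 0.0


def ndcg_at_k(ranked_items, target, k):
    """With one relevant item, NDCG@k = 1 / log2(rank + 1) for a 1-based rank <= k, else 0."""
    top = ranked_items[:k]
    if target not in top:
        return 0.0
    rank = top.index(target) + 1
    return 1.0 / math.log2(rank + 1)


ranked = ["x", "y", "z", "w"]
print(hit_ratio_at_k(ranked, "y", 5), ndcg_at_k(ranked, "y", 5))
```

Because the ideal ranking places the single relevant item first, the ideal DCG equals 1 and no further normalization is needed.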

4.4. Baselines

To demonstrate the effectiveness of the model, GC4SRec was compared with six recommendation algorithms on three datasets. The six baselines are introduced as follows:

GRU4Rec [1]: Uses gated recurrent units to model user sequences, mitigating the vanishing-gradient problem that arises when backpropagating through long-term dependencies.

GC-SAN [14]: Dynamically constructs graph structures of sequences and captures local dependencies through graph neural networks.

SASRec [2]: Uses the self-attention mechanism to model the user interaction sequence to capture the user’s dynamic interest.

S3Rec(MIP) [3]: Uses self-supervised learning to capture intrinsic correlations in the data; this variant uses masked item prediction (MIP) as its data augmentation to alleviate data sparsity.

CL4SRec [4]: Uses a contrastive learning framework to derive self-supervised signals from raw user behavior sequences, which helps extract more meaningful user patterns and encode better user representations.

DuoRec [5]: Proposes a dropout-based model-level augmentation and selects sequences of the same target item as hard positive samples.

4.5. Overall Performance Comparison

For each baseline, the embedding size was set to 64, and all other hyper-parameter settings were kept the same as in CL4SRec. The experimental results are shown in Table 2:

Table 2.

Experimental result on datasets for different models.

GC4SRec consistently outperforms the state-of-the-art baselines on all datasets. Averaged over all metrics and datasets, GC4SRec outperforms DuoRec by 2.8% and CL4SRec by 28%. These results show that self-supervised learning combined with GNN guidance achieves better performance: for contrastive learning, higher-quality positive views lead to better representations of user behavior sequences. The experiments also show that the contrastive learning methods outperform BERT4Rec, which indicates that contrastive learning can effectively alleviate data sparsity and improve recommendation performance.

GC-SAN achieves competitive performance in some cases, which confirms the effectiveness of applying GNNs to sequential recommendation. GC4SRec captures structural information in user behavior sequences with a GNN while also considering the sequential information of user sequences, and therefore achieves better performance.

5. Ablation Study

5.1. Necessity of Graph Neural Network-Guided Approach

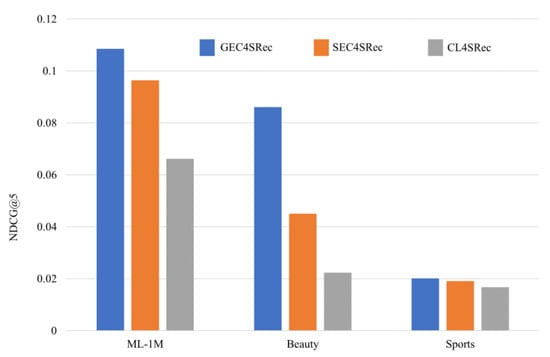

To verify the effect of the graph neural network-guided process on model performance, we compare GC4SRec with two variants: SC4SRec, which uses the attention mechanism instead of the graph neural network to generate importance scores, and CL4SRec, which removes the importance-score generation process and therefore augments sequences randomly. The results are shown in Figure 4.

Figure 4.

Ablation experiments with the necessity of a guided method.

Figure 4 shows the evaluation results. The guided process generates more accurate contrastive views and therefore performs better than CL4SRec, which generates contrastive views randomly. The graph neural network also generates better item importance scores than the self-attention mechanism, because it captures the structural information of user behavior sequences.

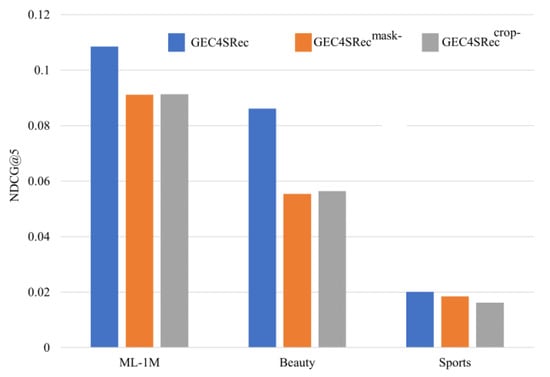

5.2. Necessity of Data Augmentation Methods

The common data augmentation methods for contrastive learning include mask and crop. To verify the impact of these two data augmentation methods on the model performance, experiments were conducted on three datasets with NDCG@5, and the experimental results are shown in Figure 5:

Figure 5.

Ablation experiments with data augmentation methods.

GC4SRec$_{\text{mask-}}$ denotes GC4SRec with the mask data augmentation method removed, and GC4SRec$_{\text{crop-}}$ denotes GC4SRec with the crop data augmentation method removed. On all three datasets, removing either data augmentation method decreases the recommendation metrics, indicating that both methods improve recommendation performance.

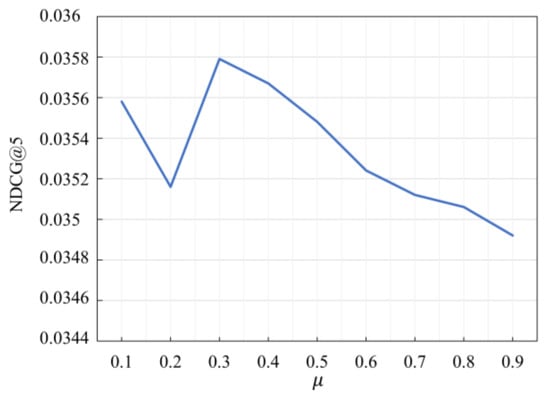

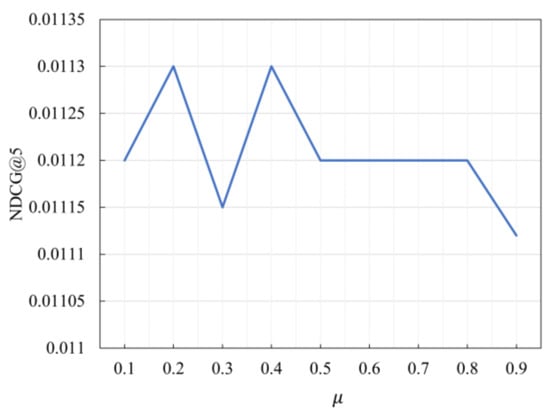

5.3. Study of Hyper-Parameter

During data augmentation, we crop or mask the $k$ items with the lowest importance scores, where $k = \lfloor \mu \cdot |s| \rfloor$. The hyper-parameter $\mu$ therefore determines how many of the highest-scoring items are kept in the positive view under the guided method. We use grid search to tune $\mu$; in the experiments, its value was varied from 0.1 to 0.9 for validation, and the results are shown in Figure 6 and Figure 7:

Figure 6.

NDCG@5 with different $\mu$ settings on the Beauty dataset.

Figure 7.

NDCG@5 with different $\mu$ settings on the Sports dataset.

Figure 6 and Figure 7 show that the value of $\mu$ strongly affects model performance. On the Beauty dataset, the optimal value of $\mu$ is 0.3; on the Sports dataset, performance is best when $\mu$ is 0.2. Therefore, $\mu$ should be tuned separately for each dataset to achieve optimal performance.

6. Conclusions

This paper investigates how to generate higher-quality contrastive views in sequential recommendation using a guided approach. We propose graph neural network-guided contrastive learning for sequential recommendation (GC4SRec), which uses graph neural networks to generate item importance scores and constructs contrastive views based on these scores. Experiments on three datasets show that the model effectively improves recommendation performance. To the best of our knowledge, our work is the first to apply graph neural networks to address the problem of inconsistent positive views. In future work, we will investigate extracting global contextual representations of users from a global graph and combining the global context with the sequence context to generate user representations for item recommendation. The global context contains more global information, which can enrich user representations, generate more accurate views for users, and improve recommendation performance.

Author Contributions

Conceptualization, X.-Y.Y. and F.X.; methodology, X.-Y.Y. and F.X.; data curation, J.Y.; formal analysis, D.-X.W.; investigation, J.Y. and Z.-Y.L.; validation, F.X., D.-X.W. and X.-Y.Y.; writing—original draft, all authors; Writing—review and editing, all authors; supervision, X.-Y.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 62262064, 61862060), the Natural Science Foundation of Xinjiang Uygur Autonomous Region of China (Grant No. 2022D01C56), the Education Department Project of Xinjiang Uygur Autonomous Region (Grant No. XJEDU2016S035), and the Doctoral Research Start-up Foundation of Xinjiang University (Grant No. BS150257, 202212140030).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This study did not generate new data; all experiments use publicly available datasets.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hidasi, B.; Karatzoglou, A.; Baltrunas, L.; Tikk, D. Session-based recommendations with recurrent neural networks. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016; ACM: New York, NY, USA, 2016; pp. 1–10.

- Kang, W.-C.; McAuley, J. Self-attentive sequential recommendation. In Proceedings of the 2018 IEEE International Conference on Data Mining (ICDM), Singapore, 17–20 November 2018; ACM: New York, NY, USA, 2018; pp. 197–206.

- Zhou, K.; Wang, H.; Zhao, W.X.; Zhu, Y.; Wang, S.; Zhang, F.; Wang, Z.; Wen, J.-R. S3-Rec: Self-supervised learning for sequential recommendation with mutual information maximization. In Proceedings of the International Conference on Information and Knowledge Management, Virtual Event, 19–23 October 2020; ACM: New York, NY, USA, 2020; pp. 1893–1902.

- Xie, X.; Sun, F.; Liu, Z.; Wu, S.; Gao, J.; Ding, B.; Cui, B. Contrastive learning for sequential recommendation. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR’21), Montréal, QC, Canada, 11–15 July 2021; ACM: New York, NY, USA, 2021; pp. 1–11.

- Qiu, R.H.; Huang, Z.; Yin, H.Z.; Wang, Z.J. Contrastive learning for representation degeneration problem in sequential recommendation. In Proceedings of the 15th ACM International Conference on Web Search and Data Mining, Tempe, AZ, USA, 21–25 February 2022; ACM: New York, NY, USA, 2022; pp. 813–823.

- He, R.N.; McAuley, J. Fusing similarity models with Markov chains for sparse sequential recommendation. In Proceedings of the IEEE International Conference on Data Mining, Barcelona, Spain, 12–15 December 2017; ACM: New York, NY, USA, 2017; pp. 191–200.

- Wu, Y.C.; Ahmed, A.; Beutel, A.; Smola, A.J.; Jing, H. Recurrent recommender networks. In Proceedings of the International Conference on Web Search and Data Mining, Cambridge, UK, 6–10 February 2017; ACM: New York, NY, USA, 2017; pp. 495–503.

- Xu, C.F.; Zhao, P.P.; Liu, Y.C.; Xu, J.J.; Sheng, V.S.; Liu, Y.C.; Cui, Z.M.; Zhou, X.F.; Xiong, H. Recurrent convolutional neural network for sequential recommendation. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; ACM: New York, NY, USA, 2019; pp. 3398–3404.

- Tang, J.; Wang, K. Personalized top-n sequential recommendation via convolutional sequence embedding. In Proceedings of the International Conference on Web Search and Data Mining, Marina Del Rey, CA, USA, 5–9 February 2018; ACM: New York, NY, USA, 2018; pp. 1–9.

- Li, C.; Wu, M.M.; Zhao, H.; Liu, Z.Y.; Xu, Y.C.; Huang, P.P.; Kang, G.L.; Chen, Q.W.; Li, W.; Lee, D.L. Multi-interest network with dynamic routing for recommendation at Tmall. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; ACM: New York, NY, USA, 2019; pp. 1–9.

- Zhang, J.N.; Shi, X.J.; Zhao, S.L.; King, I. STAR-GCN: Stacked and reconstructed graph convolutional networks for recommender systems. In Proceedings of the International Joint Conference on Artificial Intelligence, Vienna, Austria, 8–10 August 2019; ACM: New York, NY, USA, 2019; pp. 4264–4270.

- Chang, J.X.; Gao, C.; He, X.N.; Jin, D.P.; Li, Y. Bundle recommendation and generation with graph neural networks. In Proceedings of the 43rd International Conference on Research and Development in Information Retrieval, Xi’an, China, 25–30 July 2020; ACM: New York, NY, USA, 2020; pp. 1–14.

- Chang, J.X.; Gao, C.; He, X.N.; Jin, D.P.; Li, Y. Multi-behavior recommendation with graph convolutional networks. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 25–30 July 2020; ACM: New York, NY, USA, 2020; pp. 659–668.

- Xu, C.F.; Zhao, P.P.; Liu, Y.C.; Sheng, V.S.; Xu, J.J.; Zhuang, F.Z.; Fang, J.H.; Zhou, X.F. Graph contextualized self-attention network for session-based recommendation. In Proceedings of the International Joint Conference on Artificial Intelligence, Vienna, Austria, 8–10 August 2019; ACM: New York, NY, USA, 2019; pp. 3940–3946.

- Yang, Z.H.; Cheng, Y.; Liu, Y.; Sun, M.S. Reducing word omission errors in neural machine translation: A contrastive learning approach. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; ACM: New York, NY, USA, 2019; pp. 6191–6196.

- Zhu, Y.T.; Nie, J.Y.; Dou, Z.C.; Ma, Z.Y.; Zhang, X.Y.; Du, P.; Zuo, X.C.; Jiang, H. Contrastive learning of user behavior sequence for context-aware document ranking. In Proceedings of the International Conference on Information and Knowledge Management, Virtual Event, 1–5 November 2021; ACM: New York, NY, USA, 2021; pp. 2780–2791.

- Chu, G.Y.; Wang, X.; Shi, C.; Jiang, X.Q. CuCo: Graph representation with curriculum contrastive learning. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 19–27 August 2021; IJCAI: Vienna, Austria, 2021; pp. 2300–2306.

- Qiu, R.H.; Zhang, Z.; Yin, H.Z. Memory augmented multi-instance contrastive predictive coding for sequential recommendation. In Proceedings of the International Conference on Data Mining, Auckland, New Zealand, 7–10 December 2021; ACM: New York, NY, USA, 2021; pp. 1–10.

- Wu, J.C.; Wang, X.; Feng, F.L.; He, X.N.; Chen, L.; Lian, J.X.; Xie, X. Self-supervised graph learning for recommendation. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 11–15 July 2021; ACM: New York, NY, USA, 2021; pp. 726–735.

- Yu, J.L.; Yin, H.Z.; Gao, M.; Xia, X.; Zhang, X.L.; Viet Hung, N.Q. Socially-aware self-supervised tri-training for recommendation. In Proceedings of the International Conference on Knowledge Discovery and Data Mining, Virtual Event, 14–18 August 2021; ACM: New York, NY, USA, 2021; pp. 2084–2092.

- Zhang, Y.F.; Chen, X. Explainable recommendation: A survey and new perspectives. Found. Trends Inf. Retr. 2020, 14, 1–101.

- Sundararajan, M.; Taly, A.; Yan, Q.Q. Axiomatic attribution for deep networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; ACM: New York, NY, USA, 2017; pp. 1–11.

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; ACM: New York, NY, USA, 2014; pp. 1–11.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).