Abstract

Currently, the use of Unmanned Aerial Vehicles (UAVs) in natural and complex environments is increasing, because they are appropriate and affordable solutions for supporting tasks such as rescue, forestry, and agriculture by collecting and analyzing high-resolution monocular images. Autonomous navigation at low altitudes is an important area of research, as it would allow monitoring parts of the crop that are occluded by their own foliage or by other plants. This task is difficult because of the large number of obstacles that might be encountered in the drone's path. Generating high-quality depth maps is one way to provide real-time obstacle detection and collision avoidance for autonomous UAVs. In this paper, we present a comparative analysis of four supervised deep neural networks, and of a combination of two of them, for monocular depth map estimation on images captured at low altitudes in simulated natural environments. Our results show that the Boosting Monocular Depth network performs best in terms of depth map accuracy, thanks to its ability to process the same image at different scales and thus avoid losing fine details.

1. Introduction

In recent years, the importance of Unmanned Aerial Vehicles (UAVs) has been increasing in sectors such as search and rescue [1], precision agriculture [2], and forestry [3], as they can capture and process different types of data in real time to monitor the environment in which they are moving.

Deep Learning (DL) has proven to be an excellent alternative to achieve autonomous navigation of UAVs, solving a variety of tasks in the areas of sensing, planning, mapping, and control.

One of the main unsolved problems is giving UAVs the ability to navigate autonomously at low altitudes in confined and cluttered spaces using cameras as the main sensor.

UAVs with only monocular cameras must be able to detect obstacles to prevent collisions; therefore, it is necessary to create depth maps from RGB images in order to determine the free space to navigate safely. This problem is further complicated when the drone is navigating in a natural environment, due to very thin obstacles such as tree branches or soft obstacles such as large leaves or bushes. Such obstacles can destabilize or damage the UAVs.

Vision-based navigation is a promising approach to autonomous navigation [4]. First, visual sensors provide a wealth of information about the environment; second, cameras are well suited to perceiving dynamic environments; third, some cameras are cheaper than other types of sensors [5].

Depth estimation refers to the process of recovering the 3D information of a scene from the 2D information captured by cameras [6]. A variety of commercial 3D sensors can provide depth information; for example, binocular cameras or LiDAR sensors obtain accurate depth, but their memory and processing requirements are challenging for many onboard applications. In addition, these sensors are very expensive.

A potential solution to these problems of 3D sensors is to use monocular cameras to create depth maps. Single-view depth estimation has recently advanced considerably in accuracy and speed, and the number of approaches published in the literature has grown accordingly.

In the specific case of autonomous navigation for drones in outdoor forested environments, Loquercio et al. [6] demonstrated that a neural network for autonomous flight can be trained in simulated environments and perform well in similar real scenarios. However, the creation of such simulated environments can be very time-consuming and there is currently no large set of images with a variety of plants in different settings, such as what may exist in agriculture or forestry.

For this reason, in this work, we evaluated how well four monocular depth estimation models, pre-trained on a mixture of diverse datasets, infer the depth of RGB images of natural and unstructured scenarios. These models were chosen because they are trained in a supervised learning framework. We analyzed whether existing datasets and network models for depth estimation can generalize to complex natural environments, which are challenging because of the presence of very thin obstacles.

The main contributions of this paper are:

- A qualitative and quantitative analysis of the performance of four state-of-the-art neural networks for monocular depth estimation on synthetic images of complex forested environments.

- We propose a new model that combines the GLPDepth and Boosting Monocular Depth networks, which increases the accuracy of the depth maps compared with GLPDepth alone and decreases the inference time compared with the original Boosting Monocular Depth structure.

- A discussion of the main open challenges of monocular depth estimation with neural networks in natural environments.

The rest of this paper is structured as follows. Section 2 introduces the related work. Section 3 explains the network architectures. Section 4 analyzes the comparison of the monocular depth estimation networks. Section 5 enumerates currently open challenges, and in Section 6, we present the conclusions.

2. Related Work

During the last decade, DL has produced good results in various areas such as image recognition, medical imaging, and speech recognition. DL is a subfield of machine learning based on Artificial Neural Networks (ANNs); what distinguishes it is the use of “deeper” networks that build a hierarchical representation of the data through many stacked layers, such as convolutional layers. This depth gives DL models a greater learning capacity and, thus, higher performance and precision [7].

The main strength of DL is automatic feature extraction from raw data: features at higher levels of the hierarchy are composed from lower-level features. Thanks to this hierarchical structure and large learning capacity, DL is flexible and adaptable to a wide variety of highly complex problems [8].

Convolutional Neural Networks (CNNs) are architectures in which input images pass through successive hidden layers that learn patterns hierarchically. For this reason, CNN-based models are widely used for collision avoidance [9], especially in the stages related to environment perception and depth estimation [10].

Additionally, with the advance of CNNs and publicly available large-scale datasets, monocular depth estimation has improved significantly. Recent works have achieved more accurate results with fewer computational and energy resources. Accurate depth maps can play an important role in understanding 3D scene geometry, particularly in cost-sensitive applications [11,12].

2.1. Monocular Depth Estimation Networks

In recent years, the literature on monocular depth estimation has grown and has shifted toward deep learning. Khan et al. [11] and Dong et al. [12] presented two excellent reviews of the state of the art in monocular depth estimation. Depending on how they are trained, deep learning models for monocular depth estimation can be divided into supervised, unsupervised, and semi-supervised models. We focus on supervised methods, which are trained with ground-truth depth, and assess their capacity to generalize to other types of environments. Therefore, we reviewed the most recently published papers on supervised deep neural networks.

Ranftl et al. [13] trained a robust monocular depth estimation neural network named MiDaS on several datasets obtained from diverse environments. They developed new loss functions that are invariant to the main sources of incompatibility between datasets, such as the unknown scale and shift of their depth annotations. To prove generalization, they used zero-shot cross-dataset transfer, i.e., evaluation on six datasets that were not seen during training. They demonstrated that a model properly trained on a rich and diverse set of images from different sources outperforms state-of-the-art methods in a variety of settings.
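To make the idea of a loss that is invariant to dataset-specific scale and shift concrete, the following minimal sketch (assuming NumPy; the function names are ours, not those of the MiDaS code) aligns a prediction to the ground truth with a closed-form least-squares scale $s$ and shift $t$ and only then measures the error. It is written in the spirit of the scale-and-shift-invariant losses of [13], not as their exact formulation, which differs in its details (for example, it operates on inverse depth).

```python
import numpy as np

def align_scale_shift(pred, target, mask):
    """Least-squares scale s and shift t minimizing sum((s*pred + t - target)^2) over mask."""
    p = pred[mask].ravel()
    g = target[mask].ravel()
    # Design matrix [p, 1] so that A @ [s, t] approximates g
    A = np.stack([p, np.ones_like(p)], axis=1)
    (s, t), *_ = np.linalg.lstsq(A, g, rcond=None)
    return s, t

def ssi_mae(pred, target, mask):
    """Mean absolute error after scale-and-shift alignment (illustrative sketch)."""
    s, t = align_scale_shift(pred, target, mask)
    aligned = s * pred + t
    return np.abs(aligned[mask] - target[mask]).mean()
```

Because a prediction that differs from the ground truth only by an affine transform scores zero, datasets annotated up to an unknown scale and shift can, in principle, be mixed during training.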

The R-MSFM network [14] is a self-supervised recurrent multi-scale feature modulation network: it extracts per-pixel features, performs multi-scale feature modulation, and iteratively updates an inverse depth through a parameter-shared decoder at a fixed resolution. Updating at a fixed resolution avoids propagating errors from low to high resolution, and the feature modulation keeps the representations semantically richer and spatially more accurate.

The Boosting Monocular Depth network [15] merges estimates computed at different resolutions, and therefore with different amounts of context, to generate multi-megapixel depth maps with a high level of detail using a previously trained model. In the experiments, two pre-trained models, MiDaS [13] and SGR [16], were used as base estimators for depth estimation at high and low resolution; the two resulting maps were then combined by a Pix2Pix network [17] with a 10-layer U-net [18] as a generator. The LeReS model [19] was later also adopted as the base depth estimator; at the time, it achieved state-of-the-art performance, with AbsRel = 0.09 on the NYU dataset.

The GLPDepth architecture [20] uses both global and local contexts throughout the network to generate depth maps. Its transformer encoder learns global dependencies and captures multi-scale features, whereas the decoder converts the extracted features into a depth map using a selective feature fusion module, which helps to preserve the structure of the scene and to recover fine details.
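The selective feature fusion idea can be sketched as follows, assuming NumPy and following our reading of [20]: the local (skip) and global (decoder) features are concatenated, mapped to a two-channel attention map with a sigmoid, and each feature is weighted by its own channel before the two are summed. For brevity, the learned convolutions and normalization layers of the original module are replaced by per-pixel linear maps (equivalent to 1 × 1 convolutions), and the weight arrays are hypothetical placeholders rather than trained parameters.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def selective_feature_fusion(f_local, f_global, w_hidden, w_attn):
    """Simplified selective feature fusion (sketch, not the GLPDepth code).

    f_local, f_global: (H, W, C) feature maps.
    w_hidden: (2C, K) and w_attn: (K, 2) are hypothetical weights standing in for
    the learned convolutions; here they act per pixel, like 1x1 convolutions.
    """
    x = np.concatenate([f_local, f_global], axis=-1)  # (H, W, 2C)
    h = np.maximum(x @ w_hidden, 0.0)                 # per-pixel linear map + ReLU
    attn = sigmoid(h @ w_attn)                        # (H, W, 2), values in (0, 1)
    # Weight each feature by its own attention channel, then sum the two
    return f_local * attn[..., :1] + f_global * attn[..., 1:]
```

With all attention logits at zero, both channels equal 0.5 and the module reduces to a plain average of the two features; learning the attention lets the network favor local detail near edges and global context elsewhere.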

In a different direction, Kendall and Gal [21] proposed a Bayesian deep learning framework to estimate depth, in which they modeled two types of uncertainty: aleatoric (concerning noise in the observations) and epistemic (related to the model parameters), which makes the networks perform better despite being trained on noisy data. Modeling uncertainty is very important in computer vision tasks to know whether the network generates reliable predictions. Reliable depth maps are also required for autonomous vehicles to make safe and accurate decisions in their environment.
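As an illustration of the two uncertainty types, the sketch below (assuming NumPy; all names are ours) implements the heteroscedastic regression loss of [21], $\mathcal{L} = \frac{1}{N}\sum_i \tfrac{1}{2} e^{-s_i} (y_i - \hat{y}_i)^2 + \tfrac{1}{2} s_i$, in which the network predicts $s_i = \log\sigma_i^2$ per pixel alongside the depth, and it combines $T$ stochastic forward passes with dropout enabled (Monte Carlo dropout) into a predictive mean, an epistemic variance, and an aleatoric variance. The network that would produce these arrays is assumed and not shown.

```python
import numpy as np

def aleatoric_loss(y_true, y_pred, log_var):
    """Heteroscedastic regression loss; log_var = log(sigma^2) is predicted per pixel."""
    # Large predicted variance down-weights the residual but is penalized by 0.5 * log_var
    return np.mean(0.5 * np.exp(-log_var) * (y_true - y_pred) ** 2 + 0.5 * log_var)

def mc_dropout_uncertainty(sample_means, sample_log_vars):
    """Combine T dropout-enabled forward passes (assumed to come from some network).

    sample_means, sample_log_vars: arrays of shape (T, H, W).
    Returns the predictive mean, epistemic variance, and aleatoric variance.
    """
    mean = sample_means.mean(axis=0)
    epistemic = sample_means.var(axis=0)              # spread of the T predictions
    aleatoric = np.exp(sample_log_vars).mean(axis=0)  # average predicted noise variance
    return mean, epistemic, aleatoric
```

The total predictive variance is the sum of the last two outputs; keeping them separate shows whether an unreliable pixel is due to noisy observations or to inputs the model has not learned well.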

2.2. Datasets for Monocular Depth Map Estimation

So far, research on autonomous navigation of UAVs has focused on indoor, urban, and wooded environments. However, most of the publicly available outdoor datasets do not correspond to low-altitude flights. The datasets traditionally used to train obstacle detection models [6,22,23,24,25,26,27,28,29,30,31,32,33,34] are KITTI [35] and NYU-v2 [36]. The IDSIA dataset [37] can be considered a pioneer in the use of images of natural environments, specifically of forest trails.

In addition to these datasets, images obtained from simulators such as Gazebo [38] or AirSim [39] can be used to compensate for the lack of data. The TartanAir dataset [40] is one of the best sources of data collected from photorealistic simulations of forest environments. TartanAir deliberately focuses on challenging environments with changing light conditions, adverse weather, and dynamic objects. Another alternative is the Mid-Air dataset [41], which was also captured by a simulated drone flying at low altitude in natural environments.

3. Monocular Depth Estimation Networks Analyzed in This Work

The networks selected for this analysis are those that have reported the best state-of-the-art results to date. Table 1 lists the AbsRel (Absolute Relative Error) and RMSE (Root-Mean-Square Error) values reported for the networks described above. However, each work carried out its experiments on different datasets, so the values are difficult to compare. Therefore, we evaluated all the networks on a single dataset containing images of complex natural environments collected by a simulated drone flying at low altitude.

Table 1.

Comparison of monocular depth map estimation networks. The metric values correspond to those reported in their respective publications.

Ranftl et al. [13] demonstrated that a network trained on different datasets can generate better depth estimates. In their experiments, they started from the experimental configuration of [42] and used a multi-scale ResNet-based architecture to predict depth from a single image. They also evaluated the influence of the encoder by swapping in different encoders, such as ResNet-101, ResNeXt-101, and DenseNet-161, and showed that network performance increased with higher-capacity encoders. Thus, in subsequent tests, they used ResNeXt-101-WSL, a ResNeXt-101 version pre-trained on a massive corpus of Weakly-Supervised Data (WSL). After this evaluation of the encoder, their new model, MiDaS, was evaluated zero-shot on six datasets not seen during training (DIW, ETH3D, Sintel, KITTI, NYU, and TUM). The model performed best on the ETH3D dataset according to the absolute relative error (AbsRel).

The MiDaS network has been continuously upgraded, notably to the MiDaS v3.0 DPT version, which uses the Dense Prediction Transformer (DPT) model of [43]. DPT is built on a vision transformer whose self-attention layers use queries, keys, and values to compute the relationship between every pair of tokens in the sequence, so every stage has a global receptive field.
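The query-key-value mechanism mentioned above can be made concrete with single-head scaled dot-product self-attention. The NumPy sketch below is a generic illustration, not DPT code: the projection matrices `w_q`, `w_k`, and `w_v` are hypothetical placeholders, and multi-head attention, positional embeddings, and normalization layers are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a token sequence.

    tokens: (N, D) patch embeddings; w_q, w_k: (D, d_k); w_v: (D, d_v).
    """
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (N, N): every token against every token
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights
```

The `(N, N)` weight matrix is what lets every image patch attend to every other patch, which is why transformer encoders have a global receptive field from the first layer onwards, unlike a CNN whose receptive field grows layer by layer.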

The architecture proposed in [14] consists of four main components: (a) a depth encoder that extracts per-pixel representations with ResNet18 without its last two blocks, which produces multi-scale features with an input resolution of ; (b) a shared-parameter depth decoder that iteratively updates a zero-initialized inverse depth, preventing spatial inaccuracies at the coarse level from propagating to the fine level; (c) a parameter-learned upsampling module that adaptively upsamples the estimated inverse depth, preserving its motion boundaries; and (d) a Multi-Scale Feature Modulation (MSFM) component that modulates the content of the multi-scale feature maps, maintaining semantically richer and spatially more accurate representations for each iterative update. This architecture reduces the number of parameters to 3.8M, making it more suitable for memory-limited scenarios and able to process 640 × 192 videos at 44 frames per second on an RTX2060 GPU.

In [15], an algorithm called double estimation was proposed, in which two depth estimates of the same image at different resolutions are merged to obtain a consistent structure with high-frequency details. Their experiments showed that at low resolutions, depth estimates exhibit a consistent scene structure but lose high-frequency details, whereas at high resolutions, fine details are well preserved but the scene structure starts to show inconsistencies. To combine the features of both estimates, they used Pix2Pix4DepthModel, which has a Pix2Pix architecture [17] with U-net layers [18] as a generator and a “PatchGAN” convolutional classifier (which penalizes structure only at the scale of image patches) as a discriminator. Both the generator and the discriminator use modules of the form Convolution-BatchNorm-ReLU. The entire process can be described in three steps. First, the network generates a base estimate of the whole image using double estimation. Then, patch selection starts by tiling the image at the base resolution with a tile size equal to the receptive field size and a overlap; for each patch, a depth estimate is again generated with the double estimation algorithm. Finally, the patch estimates are merged onto the base estimate one by one to produce a more detailed depth map.
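The patch stage of this pipeline can be sketched as follows, assuming NumPy. `tile_coordinates` tiles an image with square tiles and a fractional overlap (`overlap_frac` is an illustrative parameter, not the value used in [15]), and `merge_patches` pastes patch estimates onto the base estimate one by one. The learned Pix2Pix merge of [15] is replaced here by a simple stand-in of our own: each patch is aligned to the current map with a least-squares scale and shift and then averaged with it.

```python
import numpy as np

def tile_coordinates(height, width, tile, overlap_frac):
    """Top-left corners of square tiles covering the image with a given overlap.

    overlap_frac (fraction of a tile shared with its neighbor) is illustrative.
    """
    stride = max(1, int(round(tile * (1.0 - overlap_frac))))
    ys = list(range(0, max(height - tile, 0) + 1, stride))
    xs = list(range(0, max(width - tile, 0) + 1, stride))
    # Make sure the last row/column of tiles reaches the image border
    if ys[-1] + tile < height:
        ys.append(height - tile)
    if xs[-1] + tile < width:
        xs.append(width - tile)
    return [(y, x) for y in ys for x in xs]

def merge_patches(base, patch_estimates):
    """Merge ((y, x), patch) estimates onto the base map one by one.

    Stand-in for the learned merge network: align each patch to the current
    map with a least-squares scale/shift, then average inside the window.
    """
    merged = base.astype(float).copy()
    for (y, x), patch in patch_estimates:
        h, w = patch.shape
        window = merged[y:y + h, x:x + w]
        A = np.stack([patch.ravel(), np.ones(patch.size)], axis=1)
        (s, t), *_ = np.linalg.lstsq(A, window.ravel(), rcond=None)
        merged[y:y + h, x:x + w] = 0.5 * (window + (s * patch + t))
    return merged
```

In a full pipeline, each patch would come from running double estimation on the corresponding image crop with the chosen base network; the alignment step matters because a network run on a crop returns depth at an arbitrary scale and shift relative to the whole-image estimate.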

In [20], a new architecture composed mainly of an encoder, a decoder, and skip connections with feature fusion modules was proposed. The encoder aims to exploit rich global information, modeling long-range dependencies and capturing multi-scale context features with a hierarchical transformer [44], which allows the network to enlarge its receptive field. The input image is embedded as a sequence of patches with a 3 × 3 convolution; these patches are then fed to transformer blocks, each made up of several sets of self-attention layers and an MLP-Conv-MLP layer with a residual skip connection. In the lightweight decoder, the channel dimension of the feature is reduced to with a 1 × 1 convolution; then, consecutive bilinear upsampling expands the feature to size . Finally, the output goes through two convolution layers and a sigmoid function to predict the depth map , which is multiplied by the maximum depth value to scale it to meters. To further exploit fine local structures, skip connections with the proposed selective feature fusion module were added; they provide features with smaller receptive fields that focus on short-range information.
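The decoder head described above can be sketched in NumPy under simplifying assumptions of ours: the 1 × 1 channel reduction is a matrix product over the channel axis, the consecutive bilinear upsampling steps are collapsed into a single bilinear resize (align-corners convention), the two convolution layers are replaced by per-pixel linear maps, and all weights and `max_depth` are hypothetical placeholders. The point is the last step: the sigmoid bounds the output to (0, 1), and multiplying by the maximum depth turns it into meters.

```python
import numpy as np

def bilinear_resize(feat, out_h, out_w):
    """Bilinear resize of an (h, w, C) array using the align-corners convention."""
    h, w, _ = feat.shape
    ys = np.linspace(0, h - 1, out_h)
    xs = np.linspace(0, w - 1, out_w)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[:, None, None]
    wx = (xs - x0)[None, :, None]
    top = feat[y0][:, x0] * (1 - wx) + feat[y0][:, x1] * wx
    bottom = feat[y1][:, x0] * (1 - wx) + feat[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy

def decoder_head(bottleneck, w_reduce, w_conv1, w_conv2, out_hw, max_depth):
    """Simplified GLPDepth-style head (sketch); all weights are hypothetical."""
    x = bottleneck @ w_reduce             # 1x1 convolution: reduce channel dimension
    x = bilinear_resize(x, *out_hw)       # upsample to the output resolution
    x = np.maximum(x @ w_conv1, 0.0)      # stand-in for the first convolution (+ ReLU)
    x = x @ w_conv2                       # stand-in for the second convolution -> 1 channel
    depth = max_depth / (1.0 + np.exp(-x))  # sigmoid in (0, 1), scaled to meters
    return depth[..., 0]
```

Bounding the output this way means the network can never predict depths beyond `max_depth`, so that value has to be chosen to match the range of the target scenes.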

4. Comparison of the Monocular Depth Estimation Networks

The experiments were performed on the public TartanAir dataset [40], which consists of different trajectories of a drone in simulated natural environments. We used the trajectories that contain elements of interest to us, such as various types of plants (trees, bushes, and grass) and farm infrastructure (fences, lights, poles, and walls).

4.1. TartanAir Dataset

The three selected trajectories were: Gascola, Season Forest, and Season Forest Winter. Each of the trajectories is composed of the depth, RGB, and segmentation images obtained by a stereo camera. Table 2 shows the details of each dataset by trajectory.

Table 2.

Details of the content of selected TartanAir trajectories.

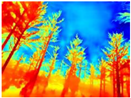

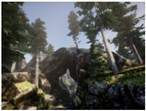

The main visual characteristics of the three selected environments are described below:

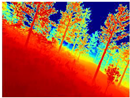

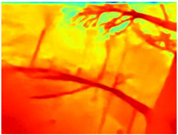

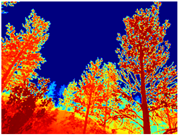

Gascola: A wooded environment with several rocky areas, mossy regions, and areas with different species of pines. Images were captured in daylight in the morning (see row 1 of Table 3).

Table 3.

Examples of RGB images from the TartanAir dataset: Gascola (a), Season Forest (b), and Season Forest Winter (c) trajectories.

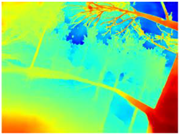

Season Forest: A wooded environment in the autumn season. Row 2 of Table 3 shows different species of trees with shades of autumn in the trunks and leaves, with effects of falling leaves. The lighting was at noon, so there are shadows under the trees.

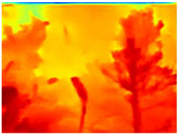

Season Forest Winter: A forest area in the winter season; it contains different species of trees showing only the trunks and branches in a greyish shade and covered with snow. Row 3 of Table 3 shows that the capture was made at dusk, so there are many shadows in the area around the trees.

4.2. Metrics for Monocular Depth Estimation

To evaluate the performance of the networks, we used the following state-of-the-art metrics: Absolute Relative Difference (AbsRel), Root-Mean-Square Error (RMSE), RMSE (log), Square Relative Error (SqRel), and Delta Thresholds ($\delta$), that is, the percentage of pixels whose relative error is under a threshold controlled by a constant $thr$ [11]. These metrics are defined as follows, respectively:

$$\mathrm{AbsRel} = \frac{1}{N}\sum_{i=1}^{N}\frac{|d_i - d_i^*|}{d_i^*} \quad (1)$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(d_i - d_i^*\right)^2} \quad (2)$$

$$\mathrm{RMSE}_{\log} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\log d_i - \log d_i^*\right)^2} \quad (3)$$

$$\mathrm{SqRel} = \frac{1}{N}\sum_{i=1}^{N}\frac{\left(d_i - d_i^*\right)^2}{d_i^*} \quad (4)$$

$$\delta = \frac{1}{N}\left|\left\{ i : \max\left(\frac{d_i}{d_i^*}, \frac{d_i^*}{d_i}\right) < thr \right\}\right|, \quad thr \in \{1.25, 1.25^2, 1.25^3\} \quad (5)$$

where $d_i^*$ and $d_i$ are the ground-truth and predicted depth at pixel $i$, respectively, and $N$ is the total number of pixels. In (5), accuracy is computed against a predefined threshold [30,31]: a pixel counts as a positive sample when the ratio between its ground-truth depth $d_i^*$ and its predicted depth $d_i$ is close to 1. Although these statistics are good indicators of the general quality of a predicted depth map, they can be misleading. Moreover, it is highly relevant that depth discontinuities be precisely located. Therefore, Xian et al. [16] proposed the ORD metric (ordinal relation error in depth space) for zero-shot cross-dataset evaluation. This ordinal error is a general metric of the ordinal accuracy of a depth map and can be used directly with different sources of ground truth. The ordinal error can be defined as:

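$$\mathrm{ORD} = \frac{\sum_{k} \omega_k \, \mathbb{1}\left(\ell_k(p) \neq \ell_k(p^*)\right)}{\sum_{k} \omega_k} \quad (6)$$

where $\omega_k$ is set to 1, and the ordinal relationships $\ell_k(p)$ and $\ell_k(p^*)$ of the $k$-th point pair $(i_k, j_k)$ are computed using Equation (7).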

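$$\ell_k(p) = \begin{cases} +1, & p_{i_k}/p_{j_k} \geq 1 + \tau \\ -1, & p_{i_k}/p_{j_k} \leq \dfrac{1}{1 + \tau} \\ 0, & \text{otherwise} \end{cases} \quad (7)$$

where τ is a tolerance threshold, and $p^*$ denotes the ground-truth pseudo-depth value. When a pair of points is close in depth, i.e., $\ell_k = 0$, the corresponding ranking loss of [16] encourages the predicted $p_{i_k}$ and $p_{j_k}$ to be the same; otherwise, the difference between $p_{i_k}$ and $p_{j_k}$ must be large to minimize the loss.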
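The pixel-wise metrics (AbsRel, SqRel, RMSE, RMSE (log), and the δ thresholds) and the pair-based ORD error translate directly into NumPy. The sketch below assumes positive depths in the same units for prediction and ground truth and a pre-selected array of point pairs for ORD; the default tolerance `tau=0.03` is an illustrative choice, and all pair weights are set to 1. Networks that output relative inverse depth (such as MiDaS) would first need to be aligned and converted to depth before these numbers are meaningful.

```python
import numpy as np

def depth_metrics(gt, pred, mask=None, base=1.25):
    """Standard monocular depth metrics; gt and pred are positive depths in the same units."""
    if mask is None:
        mask = gt > 0
    g, p = gt[mask], pred[mask]
    ratio = np.maximum(g / p, p / g)
    return {
        "AbsRel": np.mean(np.abs(p - g) / g),
        "SqRel": np.mean((p - g) ** 2 / g),
        "RMSE": np.sqrt(np.mean((p - g) ** 2)),
        "RMSElog": np.sqrt(np.mean((np.log(p) - np.log(g)) ** 2)),
        "delta1": np.mean(ratio < base),
        "delta2": np.mean(ratio < base ** 2),
        "delta3": np.mean(ratio < base ** 3),
    }

def ordinal_label(a, b, tau):
    """+1 if a/b >= 1 + tau, -1 if a/b <= 1/(1 + tau), and 0 otherwise."""
    r = a / b
    return np.where(r >= 1 + tau, 1, np.where(r <= 1 / (1 + tau), -1, 0))

def ord_error(gt, pred, pairs, tau=0.03):
    """Fraction of point pairs whose ordinal labels disagree (all weights = 1).

    pairs: integer array of shape (K, 2, 2) holding ((yi, xi), (yj, xj)).
    """
    (yi, xi), (yj, xj) = pairs[:, 0].T, pairs[:, 1].T
    l_gt = ordinal_label(gt[yi, xi], gt[yj, xj], tau)
    l_pred = ordinal_label(pred[yi, xi], pred[yj, xj], tau)
    return np.mean(l_gt != l_pred)
```

For example, a prediction that is uniformly 1.5 times the ground truth has AbsRel = 0.5 and fails the first δ threshold everywhere, yet its ORD error is 0, because scaling preserves every ordinal relationship; this is why the two families of metrics are complementary.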

Moreover, Miangoleh et al. [15] proposed a variation of the ordinal relation error, which they called the Depth Discontinuity Disagreement Ratio (D³R), to measure the quality of high-frequency depth estimates. Instead of using random points for ordinal comparison as in [16], they used the centers of superpixels computed from the ground-truth depth maps, together with neighboring centroids, to compare depth discontinuities. This metric therefore focuses on boundary accuracy, capturing performance around high-frequency details.
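A simplified, dependency-free variant of this idea is sketched below, assuming NumPy. Two substitutions are ours and should be read as assumptions: the centers of a regular grid of cells stand in for the superpixel centroids of [15], and only neighboring pairs whose ground-truth ordinal label is nonzero (that is, pairs that straddle a depth discontinuity) are counted. The ordinal label is +1, −1, or 0 depending on whether the depth ratio of a pair exceeds $1+\tau$, falls below $1/(1+\tau)$, or lies in between.

```python
import numpy as np

def ordinal_label(a, b, tau):
    """+1 if a/b >= 1 + tau, -1 if a/b <= 1/(1 + tau), and 0 otherwise."""
    r = a / b
    return np.where(r >= 1 + tau, 1, np.where(r <= 1 / (1 + tau), -1, 0))

def d3r_like(gt, pred, cell=8, tau=0.03):
    """Simplified D3R-style score: ordinal disagreement between neighboring
    sample points that straddle a ground-truth depth discontinuity.

    Assumption: a regular grid of cell centers replaces superpixel centroids.
    """
    ys = np.arange(cell // 2, gt.shape[0], cell)
    xs = np.arange(cell // 2, gt.shape[1], cell)
    g, p = gt[np.ix_(ys, xs)], pred[np.ix_(ys, xs)]

    def neighbor_pairs(m):
        # Horizontal neighbors followed by vertical neighbors, in a fixed order
        a = np.concatenate([m[:, :-1].ravel(), m[:-1, :].ravel()])
        b = np.concatenate([m[:, 1:].ravel(), m[1:, :].ravel()])
        return a, b

    l_gt = ordinal_label(*neighbor_pairs(g), tau)
    l_pred = ordinal_label(*neighbor_pairs(p), tau)
    edge = l_gt != 0  # keep only pairs across a ground-truth discontinuity
    if not edge.any():
        return 0.0
    return float(np.mean(l_gt[edge] != l_pred[edge]))
```

A prediction that blurs a thin branch into the background flattens the depth ratio across its boundary, so the predicted label drops to 0 and the pair counts as a disagreement even if the pixel-wise errors are small.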

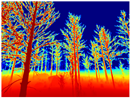

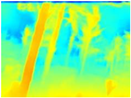

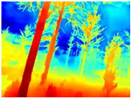

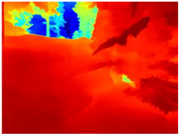

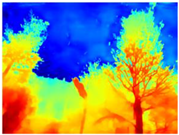

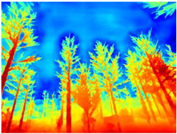

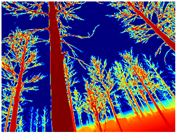

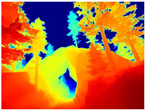

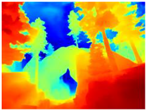

4.3. Qualitative Analysis of Networks

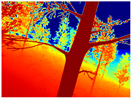

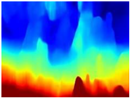

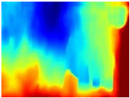

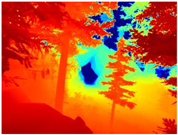

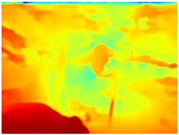

We used some of the RGB images from the three trajectories to estimate their respective depth maps using the pretrained networks: R-MSFM, MiDaS, GLPDepth, and Boosting Monocular Depth (MiDaS). To perform the qualitative analysis, the inferred depth maps were compared with the ground truth of each dataset.

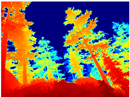

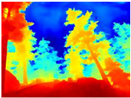

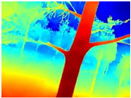

Table 4 illustrates the depth maps obtained from the networks for nine test images representative of the different natural obstacles in the environments. The R-MSFM network can delineate nearby obstacles and the free space in the scene. However, in areas of the image with several leafy branches at different depths, the network treats them as a single object, generating very large areas marked as obstacles.

Table 4.

Depth maps of forested environments generated from state-of-the-art monocular depth networks.

We used the MiDaS network in its hybrid version with the DPT model because it detects fine details much better than the R-MSFM network. Tree trunks are well defined, but the depth of branches with dense foliage and of distant objects is not always estimated correctly.

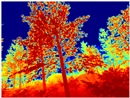

In the depth maps obtained with the GLPDepth network, branches and leaves appear thicker than they really are, which could provide a safety margin when navigating between trees. Judging by the narrow color range of the depth maps produced by this network, GLPDepth has some difficulty determining the depth of the different objects in some images of the Gascola and Season Forest Winter trajectories.

Finally, the Boosting Monocular Depth network using the first version of MiDaS produces depth maps in which all the obstacles are detected; in some scenarios where branches are in the foreground, they are well defined and their thickness is reflected. This is promising, as it could support trajectory planning.

In summary, the above experimental results show that GLPDepth and Boosting Monocular Depth (MiDaS) are the best options to detect obstacles caused by trunks and branches with dense or sparse foliage, regardless of how close or far they are.

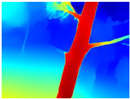

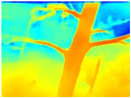

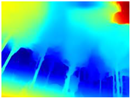

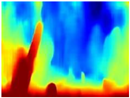

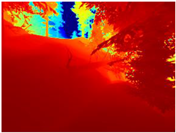

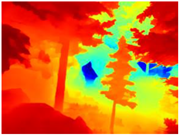

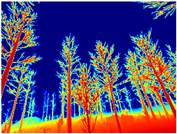

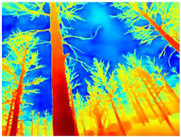

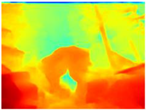

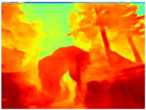

Recently, it has been shown that replacing MiDaS with LeReS in the Boosting architecture improves depth map generation [45]. Hence, we also compared GLPDepth with Boosting Monocular Depth (LeReS).

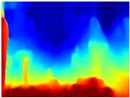

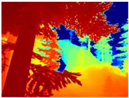

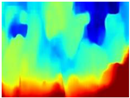

Table 5 displays the depth maps obtained by the two networks. As in the previous test, GLPDepth detects fine details, but they are not well delimited, so branches and leaves appear thicker in the map than they really are. Meanwhile, Boosting Monocular Depth (LeReS) shows a great improvement in detecting fine details compared to GLPDepth: the thickness of branches, trunks, and leaves matches what is visible in the RGB images. In addition, this combination better differentiates the various depths of the objects.

Table 5.

Depth maps from GLPDepth and Boosting Monocular Depth (LeReS).

From these results, we observed that the dataset used to train each neural network significantly influences the results. The GLPDepth network was trained on the NYU Depth V2 dataset, which contains images of various indoor scenes, and is, therefore, severely affected by the light and shadows of outdoor environments. Boosting Monocular Depth (MiDaS) was trained to transfer fine-grained details from the high-resolution input to the low-resolution input using Middlebury2014 (23 high-resolution image pairs of indoor scenes) and Ibims-1 (high-quality RGB-D images of indoor scenes), whereas Boosting Monocular Depth (LeReS) was trained on various RGB-D image datasets, providing depth maps with better-defined fine details that are not affected by shadows or lighting.

4.4. Quantitative Analysis of Networks

To measure the performance and inference times of the neural networks, we used a computer with a 2.70 GHz Intel Core i5-11400H CPU, 16 GB of RAM, an NVIDIA GeForce RTX 3050 graphics card with 8 GB, and the Windows 10 operating system for all tests.
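Runtime numbers depend on how they are measured. One common way to time a model is sketched here in plain Python with a hypothetical `model_fn` callable; it is a generic illustration, not necessarily the procedure behind the runtimes reported in this section. A few warm-up calls are discarded, and the wall-clock time of each remaining call is measured with `time.perf_counter`.

```python
import statistics
import time

def time_inference(model_fn, inputs, warmup=3):
    """Mean and standard deviation of per-input latency in seconds (sketch).

    model_fn: hypothetical callable mapping one input to a depth map. On a GPU it
    should block until the result is ready (e.g. by synchronizing the device);
    otherwise asynchronous kernel launches are under-timed.
    """
    for x in inputs[:warmup]:
        model_fn(x)  # warm-up: exclude one-off costs such as memory allocation
    times = []
    for x in inputs:
        start = time.perf_counter()
        model_fn(x)
        times.append(time.perf_counter() - start)
    spread = statistics.stdev(times) if len(times) > 1 else 0.0
    return statistics.mean(times), spread
```

Reporting the spread alongside the mean helps when comparing models whose cost varies per image, such as patch-based pipelines whose number of patches depends on the content.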

The results obtained on the three trajectories are shown in Table 6. Error metrics are better when they tend to 0, and accuracy metrics are better when they are closer to 1. The GLPDepth network gives the best results in the accuracy metrics, and the Boosting Monocular Depth network shows the best results in the other metrics. In the Season Forest and Season Forest Winter trajectories, the Boosting Monocular Depth network achieves the best results both in the accuracy metrics and in the other error metrics.

Table 6.

Comparison results of depth estimation on the three trajectories (Gascola, Season Forest, and Season Forest Winter); the values in bold are the best results using GLPDepth and Boosting Monocular Depth (LeReS).

The quantitative results confirm the observations made in the qualitative analysis: the GLPDepth network is good at detecting obstacles, as shown by the accuracy threshold metrics, whose values are comparable to those obtained with Boosting Monocular Depth (LeReS). However, this network does not provide a good estimation of depth, because its ORD and D³R values increase considerably in the three trajectories analyzed.

The datasets on which the networks were trained may affect their performance in the forested environments under study. The GLPDepth network was trained with indoor images, whereas images from a variety of environments were used to train the Boosting network.

The inference times of GLPDepth and Boosting Monocular Depth (LeReS) on the three trajectories are shown in Table 7. GLPDepth is much faster than Boosting Monocular Depth (LeReS) in all cases. This large difference arises because Boosting has to extract patches from the image, estimate the depth map of each of them, and finally merge them all into a base depth estimate generated from the whole image.
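A back-of-the-envelope count makes this difference concrete. Assuming, as described in Section 3, that double estimation costs two forward passes of the depth network (low and high resolution) and is run once on the whole image and once per patch, the sketch below gives an upper bound on depth-network passes when every tile of a regular grid is kept. The real method selects only a subset of tiles, the cost of the merge network is ignored, and the tile size and overlap in the example are illustrative, not the values used in our experiments.

```python
import math

def boosting_forward_passes(height, width, tile, overlap_frac):
    """Upper bound on depth-network passes for a Boosting-style pipeline (sketch).

    Assumes every tile of a regular grid becomes a patch and that each double
    estimation costs two passes; returns (passes, number_of_patches).
    """
    stride = max(1, round(tile * (1 - overlap_frac)))
    rows = max(1, math.ceil((height - tile) / stride) + 1)
    cols = max(1, math.ceil((width - tile) / stride) + 1)
    n_patches = rows * cols
    # One double estimation for the base image plus one per patch
    return 2 * (1 + n_patches), n_patches

passes, patches = boosting_forward_passes(100, 150, 64, 1 / 3)
```

For a 100 × 150 image with 64-pixel tiles and one-third overlap, this gives 6 patches and 14 passes, versus a single pass for GLPDepth. Using a faster base network reduces the cost of each pass but not their number, which is consistent with a Boosting-based model remaining slower than a single-pass network.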

Table 7.

Inference runtime comparison on the three trajectories (Gascola, Season Forest, and Season Forest Winter); the values in bold are the best results using GLPDepth and Boosting Monocular Depth (LeReS).

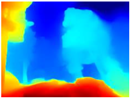

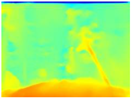

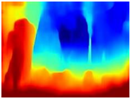

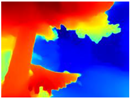

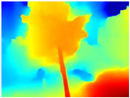

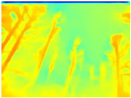

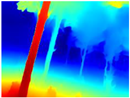

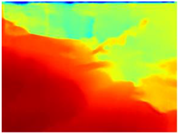

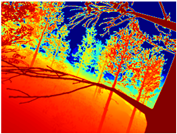

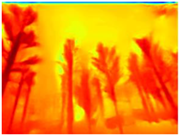

To obtain short inference times while preserving estimation accuracy, we combined the Boosting Monocular Depth network with GLPDepth, using GLPDepth as the base depth estimator inside Boosting; we refer to this model as Boosting Monocular Depth (GLPDepth). We tested this new model only on the Gascola trajectory; the resulting depth maps are shown in Table 8. The new model highlights fine details in the depth maps better than GLPDepth. However, Boosting Monocular Depth (LeReS) still generates better depth maps, with well-defined fine details, than the other networks. Boosting Monocular Depth (GLPDepth) shares GLPDepth's difficulty in correctly inferring object depths, so its depth maps also show a very narrow range of colors.

Table 8.

Comparison of depth maps generated by GLPDepth, Boosting Monocular Depth (LeReS), and Boosting Monocular Depth (GLPDepth).

The performance metrics obtained with Boosting Monocular Depth (GLPDepth) are shown in Table 9. This network achieves better values in the accuracy metrics, whereas Boosting Monocular Depth (LeReS) achieves the best results in the other metrics.

Table 9.

Comparison results of depth estimation on the Gascola trajectory; the values in bold are the best results using GLPDepth and Boosting Monocular Depth (GLPDepth).

The inference times obtained using the Gascola trajectory are shown in Table 10. Boosting Monocular Depth (GLPDepth) shows a decrease in inference times compared to Boosting Monocular Depth (LeReS). Nevertheless, these times are still high compared to those generated by GLPDepth.

Table 10.

Inference runtime comparison on Gascola trajectory; the values in bold are the best results using Boosting Monocular Depth with LeReS and GLPDepth.

6. Conclusions

Deep networks for monocular depth estimation have recently made major advances, with improvements in both the accuracy and the inference times of the models. However, many problems remain to be solved in low-altitude aerial navigation.

We reviewed four state-of-the-art neural networks for monocular depth estimation, with the main idea of using depth maps for obstacle detection by a UAV in complex natural environments. We found that GLPDepth and Boosting Monocular Depth performed well at detecting fine details in natural environments, such as thin branches and small leaves. Nevertheless, Boosting Monocular Depth had high inference times, and GLPDepth did not correctly infer the thickness of objects.

We proposed the combination of the GLPDepth network with the Boosting Monocular Depth network for the creation of depth maps. With this new model, inference times were reduced but the accuracy did not improve much compared to Boosting Monocular Depth (LeReS).

We identified some challenges related to the creation of depth maps for autonomous drone navigation in heavily vegetated environments. We also noted the lack of natural environment datasets for training deep neural networks.

Author Contributions

Conceptualization, A.R.-L. and J.F.-P.; methodology, A.R.-L.; software, A.R.-L. and J.F.-P.; validation, A.R.-L., A.M.-S., J.F.-P., and R.P.-E.; formal analysis, A.R.-L., A.M.-S., J.F.-P., and R.P.-E.; investigation, A.R.-L. and J.F.-P.; resources, A.R.-L., A.M.-S., J.F.-P., and R.P.-E.; writing—original draft preparation, A.R.-L.; writing—review and editing, A.M.-S., J.F.-P., and R.P.-E.; visualization, A.R.-L.; supervision, J.F.-P.; project administration, A.M.-S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We gratefully acknowledge the use of the TecNM/Centro Nacional de Investigación y Desarrollo Tecnológico (CENIDET) facility in carrying out this work.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| UAVs | Unmanned Aerial Vehicles |
| DL | Deep Learning |
| ANN | Artificial Neural Network |
| FE | Feature Engineering |
| CNN | Convolutional Neural Network |
| WSL | Weakly Supervised Data |
| DPT | Dense Prediction Transformer |
| MSFM | Multi-Scale Feature Modulation |
| GPU | Graphics Processing Unit |
| SFF | Selective Feature Fusion |

References

- Alsamhi, S.H.; Shvetsov, A.V.; Kumar, S.; Shvetsova, S.V.; Alhartomi, M.A.; Hawbani, A.; Rajput, N.S.; Srivastava, S.; Saif, A.; Nyangaresi, V.O. UAV Computing-Assisted Search and Rescue Mission Framework for Disaster and Harsh Environment Mitigation. Drones 2022, 6, 154.

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for precision agriculture. Information 2019, 10, 349.

- Diez, Y.; Kentsch, S.; Fukuda, M.; Caceres, M.L.L.; Moritake, K.; Cabezas, M. Deep learning in forestry using UAV-acquired RGB data: A practical review. Remote Sens. 2021, 13, 2387.

- Yasuda, Y.D.V.; Martins, L.E.G.; Cappabianco, F.A.M. Autonomous visual navigation for mobile robots: A systematic literature review. ACM Comput. Surv. 2020, 53, 1–34.

- Lu, Y.; Xue, Z.; Xia, G.-S.; Zhang, L. A survey on vision-based UAV navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32.

- Loquercio, A.; Kaufmann, E.; Ranftl, R.; Müller, M.; Koltun, V.; Scaramuzza, D. Learning high-speed flight in the wild. Sci. Robot. 2021, 6, 59.

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; pp. 1–8.

- Amer, K.; Samy, M.; Shaker, M.; ElHelw, M. Deep convolutional neural network-based autonomous drone navigation. arXiv 2019.

- Tai, L.; Li, S.; Liu, M. A deep-network solution towards model-less obstacle avoidance. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 2759–2764.

- Khan, F.; Salahuddin, S.; Javidnia, H. Deep learning-based monocular depth estimation methods—A state-of-the-art review. Sensors 2020, 20, 2272.

- Dong, X.; Garratt, M.A.; Anavatti, S.G.; Abbass, H.A. Towards Real-Time Monocular Depth Estimation for Robotics: A Survey. arXiv 2021.

- Ranftl, R.; Lasinger, K.; Hafner, D.; Schindler, K.; Koltun, V. Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-Shot Cross-Dataset Transfer. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1623–1637.

- Zhou, Z.; Fan, X.; Shi, P.; Xin, Y. R-MSFM: Recurrent Multi-Scale Feature Modulation for Monocular Depth Estimating. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

- Miangoleh, S.H.M.; Dille, S.; Mai, L.; Paris, S.; Aksoy, Y. Boosting Monocular Depth Estimation Models to High-resolution via Content-adaptive Multi-Resolution Merging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

- Xian, K.; Zhang, J.; Wang, O.; Mai, L.; Lin, Z.; Cao, Z. Structure-Guided Ranking Loss for Single Image Depth Prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Lecture Notes in Computer Science, Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer: Cham, Switzerland, 2015; Volume 9351.

- Yin, W.; Zhang, J.; Wang, O.; Niklaus, S.; Mai, L.; Chen, S.; Shen, C. Learning to Recover 3D Scene Shape from a Single Image. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

- Kim, D.; Ga, W.; Ahn, P.; Joo, D.; Chun, S.; Kim, J. Global-Local Path Networks for Monocular Depth Estimation with Vertical CutDepth. arXiv 2022.

- Kendall, A.; Gal, Y. What uncertainties do we need in Bayesian deep learning for computer vision? Adv. Neural Inf. Proc. Syst. 2017, 30, 5574–5584.

- Teed, Z.; Deng, J. DeepV2D: Video to depth with differentiable structure from motion. In Proceedings of the International Conference on Learning Representations (ICLR), 2020.

- Chen, Y.; Zhao, H.; Hu, Z.; Peng, J. Attention-based context aggregation network for monocular depth estimation. Int. J. Mach. Learn. Cybern. 2021, 12, 1583–1596.

- Alhashim, I.; Wonka, P. High quality monocular depth estimation via transfer learning. arXiv 2018.

- Yin, W.; Liu, Y.; Shen, C.; Yan, Y. Enforcing geometric constraints of virtual normal for depth prediction. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Lee, J.H.; Han, M.K.; Ko, D.W.; Suh, I.H. From big to small: Multi-scale local planar guidance for monocular depth estimation. arXiv 2019.

- Wofk, D.; Ma, F.; Yang, T.J.; Karaman, S.; Sze, V. FastDepth: Fast monocular depth estimation on embedded systems. In Proceedings of the IEEE International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019.

- Zhao, S.; Fu, H.; Gong, M.; Tao, D. Geometry-aware symmetric domain adaptation for monocular depth estimation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Goldman, M.; Hassner, T.; Avidan, S. Learn stereo, infer mono: Siamese networks for self-supervised, monocular, depth estimation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Andraghetti, L.; Myriokefalitakis, P.; Dovesi, P.L.; Luque, B.; Poggi, M.; Pieropan, A.; Mattoccia, S. Enhancing Self-Supervised Monocular Depth Estimation with Traditional Visual Odometry. In Proceedings of the 2019 International Conference on 3D Vision (3DV), Quebec City, QC, Canada, 16–19 September 2019.

- Garg, R.; Vijay Kumar, B.G.; Carneiro, G.; Reid, I. Unsupervised CNN for single view depth estimation: Geometry to the rescue. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; Volume 9912, pp. 740–756.

- Eigen, D.; Fergus, R. Predicting Depth, Surface Normals and Semantic Labels with a Common Multi-scale Convolutional Architecture. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2650–2658.

- Tosi, F.; Aleotti, F.; Poggi, M.; Mattoccia, S. Learning monocular depth estimation infusing traditional stereo knowledge. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Guizilini, V.; Ambrus, R.; Pillai, S.; Gaidon, A. 3D Packing for Self-Supervised Monocular Depth Estimation. arXiv 2019.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets Robotics: The KITTI Dataset. Int. J. Robot. Res. 2013, 32.

- Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from RGBD images. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7576, Part 5.

- Giusti, A.; Guzzi, J.; Cireşan, D.C.; He, F.-L.; Rodríguez, J.P.; Fontana, F.; Faessler, M.; Forster, C.; Schmidhuber, J.; Caro, G.D.; et al. A Machine Learning Approach to Visual Perception of Forest Trails for Mobile Robots. IEEE Robot. Autom. Lett. 2016, 1, 661–667.

- Howard, A.; Koenig, N. Gazebo: Robot simulation made easy. Open Robot. Found. 2002. Available online: https://gazebosim.org/home (accessed on 19 September 2021).

- Microsoft Research. AirSim. 2021. Available online: https://microsoft.github.io/AirSim/ (accessed on 5 June 2021).

- Wang, W.; Zhu, D.; Wang, X.; Hu, Y.; Qiu, Y.; Wang, C.; Hu, Y.; Kapoor, A.; Scherer, S. TartanAir: A Dataset to Push the Limits of Visual SLAM. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021.

- Fonder, M.; Van Droogenbroeck, M. Mid-air: A multi-modal dataset for extremely low altitude drone flights. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Xian, K.; Shen, C.; Cao, Z.; Lu, H.; Xiao, Y.; Li, R.; Luo, Z. Monocular Relative Depth Perception with Web Stereo Data Supervision. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Ranftl, R.; Bochkovskiy, A.; Koltun, V. Vision Transformers for Dense Prediction. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. arXiv 2021.

- Miangoleh, S.H.M.; Dille, S.; Mai, L.; Paris, S.; Aksoy, Y. GitHub repository of Boosting Monocular Depth Network. Available online: https://github.com/compphoto/BoostingMonocularDepth (accessed on 15 October 2022).

- De Sousa Ribeiro, F.; Calivá, F.; Swainson, M.; Gudmundsson, K.; Leontidis, G.; Kollias, S. Deep Bayesian self-training. Neural Comput. Appl. 2020, 32, 4275–4291.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).