Abstract

To address two problems that make feature learning on 3D point cloud data ineffective, namely the fixed convolutional kernels of standard convolutional neural networks and the isotropy of features, this paper proposes a point cloud processing method based on a graph convolution multilayer perceptron, named GC-MLP. Unlike traditional local aggregation operations, the algorithm generates an adaptive kernel from dynamically learned point features so that it can adapt to the structure of the object; that is, the algorithm first adaptively assigns different weights to adjacent points according to the relationships captured between them. Local information interaction is then performed with the convolutional layers through a weight-sharing multilayer perceptron. Experimental results on benchmark datasets for different tasks (ModelNet40, ShapeNet Part and S3DIS) show that the proposed algorithm achieves state-of-the-art performance in both point cloud classification and segmentation.

1. Introduction

With the substantial improvement in the performance of 3D scanning equipment, 3D data are used in a growing range of computer vision applications, including remote sensing, autonomous driving and robotics. In contrast with regularly arranged two-dimensional images, three-dimensional point cloud data are collections of points in space that are sparse, irregular and unordered. Therefore, methods that work directly on the raw point cloud sensor data to effectively and efficiently improve on traditional optical remote sensing imagery in road segmentation, 3D urban modeling, forest monitoring and similar tasks have attracted extensive attention.

To address these problems, researchers have proposed many methods, which can be roughly divided into three categories: multi-view-based, voxel-based and point-based. Multi-view-based methods [1,2] project three-dimensional data onto regular two-dimensional images and then apply two-dimensional convolutions. However, some spatial information is lost during projection. Early voxel-based methods [3,4,5] quantize irregular three-dimensional data into a regular grid and then apply three-dimensional convolutions to extract features. However, this quantization greatly increases the computational cost. Point-based methods [6,7,8] directly take a sparse or dense point set as input and use a multilayer perceptron (MLP) to extract features.

Recently, some studies have applied convolutions directly to irregular point cloud data by designing dedicated convolution kernels [9,10]. These methods assign kernel weights to local neighboring points, which regularizes the local point cloud so that convolution can be applied. In addition, some works establish the topological relationships between points through a graph structure [11,12,13] and then perform convolution on the graph. However, in point clouds with complex density variations, different neighboring points have different feature correspondences, so a fixed, isotropic convolution kernel yields poor results.

In this paper, we propose an adaptive weight graph convolution multilayer perceptron, named GC-MLP. The main contributions of this paper can be summarized as follows:

- (a) We propose a point cloud processing method based on an adaptive weight graph convolution multilayer perceptron. The adaptive weight method greatly improves the flexibility of the algorithm by defining convolution filters at arbitrary relative positions and then computing aggregation weights over all adjacent points.

- (b) We propose a convolution multilayer perceptron that improves generalization through sparse convolutional connections and improves model capacity through the dynamic weights and global receptive field of the MLP. Experimental results show that the proposed algorithm achieves state-of-the-art results on both classification and segmentation benchmark datasets.

2. Related Work

In 2D images, pixels are arranged on a regular grid and can be processed with operations such as convolution. In contrast, a 3D point cloud is unordered and irregular in 3D space; it is essentially a set of points. Current learning-based methods for 3D point cloud processing fall mainly into three types: projection-based, voxel-based and point-based networks.

2.1. Projection-Based Networks

Owing to the success of deep learning in 2D image processing, early works [1,2,14] projected point clouds onto 2D images from multiple views and then used conventional convolutions for feature learning. However, point cloud data are irregular in three-dimensional space while two-dimensional images are regular, so projecting 3D data onto 2D images causes some information loss. In a recent study, Tatarchenko et al. [15] proposed tangent convolutions: the network first projects the local surface geometry around each point onto its tangent plane and then convolves the resulting tangent images. Lin et al. [16] proposed FPConv, a flattening-based point convolution that first maps the local point cloud onto a two-dimensional plane through interpolation and then uses conventional convolutions to extract features. Lang et al. [17] proposed PointPillars, a new encoder that divides the 3D point cloud on the x–y plane into uniform vertical columns (pillars), converts them into a sparse pseudo-image through a learnable encoder and finally applies convolutions to this pseudo-image to extract features. To reduce the required computing resources, Li et al. [18] proposed a transformation function that aggregates point clouds into a 2D image space and then applies an affine transformation; convolution is then applied in the transformed image space.

2.2. Voxel-Based Networks

Voxel-based methods convert irregular 3D point cloud data into regular 3D data [19,20] and then perform 3D convolution on them to extract features. However, the conversion loses spatial information, and memory and computation grow sharply as the voxel resolution increases. To address these problems, recent studies have used sparse structures to obtain finer grids with better performance. For example, OctNet [21] and Kd-Net [22] partition point clouds by building trees. However, quantization of the voxel grid still causes some information loss. Park et al. [23] effectively improved computational efficiency by using lightweight self-attention to encode a voxel hashing architecture.
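The memory issue can be illustrated with a minimal dense voxelization sketch. It assumes the points are already normalized to the unit cube, and `voxelize` is a hypothetical helper rather than the implementation of any cited network. The dense grid grows as the cube of the resolution, while the number of occupied cells can never exceed the number of points, and points that fall into the same cell are merged, which is the quantization loss mentioned above.

```python
import numpy as np

def voxelize(points: np.ndarray, resolution: int) -> np.ndarray:
    """Quantize points in [0, 1)^3 into a dense boolean occupancy grid (hypothetical helper)."""
    # Integer cell index per point; all points falling into the same cell collapse into one voxel.
    idx = np.clip((points * resolution).astype(int), 0, resolution - 1)
    grid = np.zeros((resolution, resolution, resolution), dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    return grid

rng = np.random.default_rng(0)
pts = rng.random((2048, 3))  # synthetic cloud, already normalized to the unit cube
for r in (16, 32, 64, 128):
    grid = voxelize(pts, r)
    # Dense storage grows as r**3, but at most 2048 cells can ever be occupied.
    print(f"resolution {r}: {grid.size} cells, {int(grid.sum())} occupied")
```

Sparse structures such as the octrees and k-d trees mentioned above exploit exactly this gap by storing only the occupied cells.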

2.3. Point-Based Networks

Unlike methods that regularize the data through 2D projection or 3D voxel quantization, point-based networks feed point cloud data directly into deep networks as features [24,25,26,27,28]. PointNet [29] was the first to use an MLP to extract features from each point independently and then aggregate a global feature through a symmetric function (global max pooling). However, because this structure does not capture local features, its ability to recognize fine-grained categories and to generalize to complex scenes is limited. Recent studies [30,31,32] have proposed further ways of capturing local features, such as multi-scale features and weighted features. Among them, Qi et al. [33] iteratively extracted local features from the point cloud based on spatial distances, which effectively addressed the lack of local structure in PointNet. To better capture features at different scales, Wang et al. [34] proposed a multi-scale fusion module that adaptively weighs information at different scales and builds fast-descent branches that provide richer gradient information.
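The permutation invariance that PointNet obtains from a shared per-point MLP followed by global max pooling can be checked numerically. The sketch below uses random weights and illustrative layer widths (3 → 64 → 128); these are assumptions, not PointNet's trained configuration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical shared-MLP weights (3 -> 64 -> 128), applied identically to every point.
W1, b1 = rng.standard_normal((3, 64)), rng.standard_normal(64)
W2, b2 = rng.standard_normal((64, 128)), rng.standard_normal(128)

def shared_mlp(points: np.ndarray) -> np.ndarray:
    h = np.maximum(points @ W1 + b1, 0.0)   # ReLU, computed for each point independently
    return np.maximum(h @ W2 + b2, 0.0)     # (N, 128) per-point features

def global_feature(points: np.ndarray) -> np.ndarray:
    # Symmetric aggregation: the max over the point axis does not depend on point order.
    return shared_mlp(points).max(axis=0)

pts = rng.standard_normal((1024, 3))
perm = rng.permutation(len(pts))
print(np.allclose(global_feature(pts), global_feature(pts[perm])))  # True
```

Because each point is processed in isolation before the max, the network cannot see how neighboring points relate to each other, which is the missing local structure discussed above.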

Local feature learning methods that apply continuous convolutions directly to point cloud data have also achieved good results. PointCNN [35] transforms the local input point cloud into regularized data through an X-transformation (X-Conv) and then performs convolution. SpiderCNN [36] extends convolution from regular grids to irregular point sets that can be embedded in R^n by parameterizing a family of convolution filters, which capture informative local features. KPConv [9] gathers local neighborhoods through a radius search and extracts features with flexible kernel point convolutions that use an arbitrary number of kernel points. RS-CNN [37] first performs random sampling to establish a neighborhood set around each sampled point and then calculates the distance between each neighbor and the center point of its neighborhood, bringing this local geometric relation into the convolution as a prior. ShellNet [38] divides the neighbors of each sampled point into concentric shells, extracts features from the points within each shell and finally aggregates the local features of the different shells. Qiu et al. [39] proposed a geometric back-projection network for point cloud classification, which uses an error-correcting feedback structure to fully capture the local features of point clouds.

Some studies treat the points of a point cloud as the vertices of a graph [40,41,42] and generate directed edges from the neighborhood of each point. DGCNN [12] captures local information through EdgeConv and dynamically updates the k-nearest-neighbor graph at each layer. However, EdgeConv only considers the distance between a point and each of its neighbors, ignoring the direction vectors of adjacent points, and therefore loses part of the local geometric information. Wang et al. [11] proposed graph attention convolution, which dynamically assigns attention weights to neighboring points by combining their spatial positions and feature attributes. ECC [43] proposes an edge-conditioned convolution on graphs, in which the filter weights are conditioned on the edge values and dynamically generated for each specific input. SPG [44] captures contextual relationships by building a superpoint graph and then performs convolutional learning on it. Landrieu et al. [45] proposed a graph-structured contrastive loss, combined with a cross-partition weighting strategy, to generate point embeddings with high contrast at object boundaries. Lin et al. [13] proposed a 3D graph convolutional network for point cloud analysis in which the shapes and weights of the convolution kernels are learned during training and graph max pooling captures features at different scales. Yang et al. [46] proposed a continuous CRF graph convolution (CRFConv) to construct an encoder–decoder network, in which the CRFConv embedded in the decoding layers recovers details of high-level features lost in the encoding phase, enhancing the network's localization ability.
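A minimal sketch of EdgeConv-style edge features, written with NumPy for illustration: the k nearest neighbors of each point (the point itself included) define its edges, each edge carries [x_i, x_j − x_i], and a shared edge function followed by max pooling over the k edges produces the new point feature. The single random linear layer with ReLU stands in for DGCNN's learned edge MLP, and for brevity the graph is built once on coordinates, whereas DGCNN rebuilds it in feature space at every layer.

```python
import numpy as np

def knn(x: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k nearest neighbors of each row of x (for distinct points, itself first)."""
    d2 = ((x[:, None, :] - x[None, :, :]) ** 2).sum(-1)  # (N, N) squared distances
    return np.argsort(d2, axis=1)[:, :k]

def edge_features(x: np.ndarray, k: int) -> np.ndarray:
    """EdgeConv-style features [x_i, x_j - x_i] for every edge (i, j); shape (N, k, 2C)."""
    idx = knn(x, k)
    xi = np.repeat(x[:, None, :], k, axis=1)   # center feature copied once per neighbor
    xj = x[idx]                                 # neighbor features
    return np.concatenate([xi, xj - xi], axis=-1)

rng = np.random.default_rng(0)
x = rng.standard_normal((256, 3))
e = edge_features(x, k=20)                      # (256, 20, 6)
W = rng.standard_normal((6, 64))                # stand-in for the learned edge MLP
out = np.maximum(e @ W, 0.0).max(axis=1)        # ReLU, then max over the 20 edges -> (256, 64)
```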

3. Methods

In this section, we first review the general formulation of direct point processing (Section 3.1) and then introduce the adaptive weight graph convolution multilayer perceptron (AWConvMLP) (Section 3.2). Finally, we describe the convolution multilayer perceptron (ConvMLP) (Section 3.3).

3.1. Overview

Dataset: Consider a point cloud set $P = \{p_i\}_{i=1}^{N}$ with N points and its corresponding point cloud features $F = \{f_i\}_{i=1}^{N}$, where $p_i$ contains the coordinates and color information of the i-th point and $f_i$ is its corresponding feature.

General formulation: Given the input point cloud, the local aggregation network first computes, for each point $p_i$, the relative position $p_j - p_i$ and the feature $f_j$ of each adjacent point $p_j$. A transformation function $T(\cdot)$ then converts these local neighbor features into a new feature map, and a channel-wise symmetric aggregation function $S(\cdot)$ finally aggregates them:

$$f_i' = S\left(\left\{ T\left(p_j - p_i,\; f_j\right) \;\middle|\; p_j \in \mathcal{N}(p_i) \right\}\right)$$

where $\mathcal{N}(p_i)$ denotes the set of nearest neighbors of point $p_i$. The symmetric aggregation function S is usually the maximum, average or sum.
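The general formulation can be written as a short function in which the transformation T and the symmetric function S are pluggable. This is a sketch under stated assumptions: p holds only xyz coordinates, the neighborhood is a k-nearest-neighbor set, and `local_aggregate` and `linear_T` are hypothetical names. The usage instantiates T as a single linear map of the concatenated relative position and neighbor feature.

```python
import numpy as np

def local_aggregate(p, f, k, transform, reduce=np.max):
    """f_i' = S({T(p_j - p_i, f_j) : p_j in N(p_i)}), with N(p_i) the k nearest neighbors."""
    d2 = ((p[:, None, :] - p[None, :, :]) ** 2).sum(-1)
    idx = np.argsort(d2, axis=1)[:, :k]          # (N, k) neighbor indices
    rel = p[idx] - p[:, None, :]                 # (N, k, 3) relative positions p_j - p_i
    g = transform(rel, f[idx])                   # (N, k, C') transformed neighbor features
    return reduce(g, axis=1)                     # symmetric aggregation S over the neighbors

rng = np.random.default_rng(0)
p = rng.standard_normal((512, 3))                # coordinates
f = rng.standard_normal((512, 32))               # per-point features
W = rng.standard_normal((3 + 32, 64))            # hypothetical weights of T

def linear_T(rel, fj):
    return np.concatenate([rel, fj], axis=-1) @ W

out_max = local_aggregate(p, f, k=16, transform=linear_T, reduce=np.max)
out_mean = local_aggregate(p, f, k=16, transform=linear_T, reduce=np.mean)
```

Swapping `reduce` for `np.max`, `np.mean` or `np.sum` gives the three symmetric functions named above; all of them make the output independent of neighbor order.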

3.2. Adaptive Weight Graph Convolution Multilayer Perceptron

A point $p_i$ (point P in Figure 1) and its adjacent spatial points form a graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$, where $\mathcal{V}$ and $\mathcal{E}$ represent the sets of vertices and edges, respectively. We denote by $k$ the number of neighbors of point $p_i$, including the point itself.

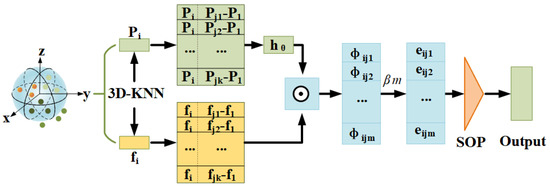

First, we design a convolutional filter at arbitrary relative positions so that it focuses on the most relevant parts of the neighborhood (as shown in Figure 1), enabling the convolutional kernel to adapt dynamically to the structure of the object:

$$\hat{e}_{ijm} = g_m\left(\Delta f_{ij}\right), \qquad \Delta f_{ij} = \left[f_i,\; f_j - f_i\right]$$

where $\hat{e}_{ijm}$ ($m = 1, \dots, M$) represents the weights of the M filters and $g_m(\cdot)$ is the feature mapping function. Here, we use an MLP whose width goes from dim to 2·dim and back to dim. $\Delta f_{ij}$ combines the graph vertex feature with its relative position, and $[\cdot, \cdot]$ is the concatenation operation.

Figure 1.

Processing flow of AWConvMLP. The local aggregation network first extracts the features of target point P and convolves its relative positions, then calculates the aggregation weights of the convolution kernels in all dimensions, and finally extracts features with a channel-wise symmetric aggregation function (max, average or sum; max pooling is used here [12,42]).

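The aggregation weight of each filter on each adjacent point is then computed as:

$$h_{ijm} = \phi\left(\left\langle \hat{e}_{ijm},\; \Delta p_{ij} \right\rangle\right)$$

where $\hat{e}_{ijm}$ is the m-th adaptive filter of edge $(i, j)$, $\phi(\cdot)$ represents the point-wise MLP, $\langle \cdot, \cdot \rangle$ is the inner product of two vectors, and $\Delta p_{ij}$ is correspondingly defined as $[p_i,\; p_j - p_i]$.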

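Finally, we define the output feature of point $p_i$ by applying an aggregation function to all edge features in its neighborhood:

$$f_i' = \max_{j \in \mathcal{N}(i)} h_{ij}$$

where $h_{ij}$ denotes the edge feature between point i and its neighbor j, and $\max(\cdot)$ represents the max pooling function.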
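The three AWConvMLP steps described above (filter generation, aggregation weights, max pooling) can be combined in a compact NumPy sketch. It rests on explicit assumptions: the filters are generated by a two-layer MLP with LeakyReLU from the concatenation [f_i, f_j − f_i]; the hidden width is twice the input width; the generator outputs M filters of size 6 (instead of going "back to dim") so that they can be dotted with the relative-position term [p_i, p_j − p_i]; and the point-wise MLP applied to the inner products is reduced to a LeakyReLU. `adaptive_graph_conv` and its parameters are hypothetical and are not the authors' code.

```python
import numpy as np

def knn_idx(p: np.ndarray, k: int) -> np.ndarray:
    """k nearest neighbors of every point, the point itself included."""
    d2 = ((p[:, None, :] - p[None, :, :]) ** 2).sum(-1)
    return np.argsort(d2, axis=1)[:, :k]

def leaky_relu(z: np.ndarray, slope: float = 0.2) -> np.ndarray:
    return np.where(z > 0, z, slope * z)

def adaptive_graph_conv(p, f, k, params):
    """Sketch of an adaptive-kernel graph convolution: (N, 3), (N, D) -> (N, M)."""
    W1, W2, M = params["W1"], params["W2"], params["M"]
    idx = knn_idx(p, k)                                    # (N, k)
    fi = np.repeat(f[:, None, :], k, axis=1)               # (N, k, D)
    df = np.concatenate([fi, f[idx] - fi], axis=-1)        # [f_i, f_j - f_i], (N, k, 2D)
    pi = np.repeat(p[:, None, :], k, axis=1)               # (N, k, 3)
    dp = np.concatenate([pi, p[idx] - pi], axis=-1)        # [p_i, p_j - p_i], (N, k, 6)
    # Kernel generator: one 6-D filter per output channel m and per edge (i, j).
    e = (leaky_relu(df @ W1) @ W2).reshape(df.shape[0], k, M, 6)   # (N, k, M, 6)
    # Aggregation weight of filter m on edge (i, j): inner product of filter and dp.
    h = leaky_relu(np.einsum("nkmc,nkc->nkm", e, dp))      # (N, k, M)
    return h.max(axis=1)                                   # max pooling over the k neighbors

rng = np.random.default_rng(0)
N, D, M, k = 128, 16, 32, 20
params = {
    "W1": 0.1 * rng.standard_normal((2 * D, 4 * D)),      # hidden width = 2 x input width (assumed)
    "W2": 0.1 * rng.standard_normal((4 * D, M * 6)),
    "M": M,
}
p = rng.standard_normal((N, 3))
f = rng.standard_normal((N, D))
out = adaptive_graph_conv(p, f, k, params)                 # (128, 32)
```

Unlike a fixed kernel, the weights `e` differ for every edge because they are computed from that edge's features, which is what lets the operator adapt to local structure.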

3.3. Convolution Multilayer Perceptron

Traditional convolution with a fixed kernel uses local connections, which makes it computationally efficient, but it performs poorly in complex environments. The point-wise MLP structure, in contrast, has a large model capacity, but its local generalization ability is limited.

To combine the strengths of both, we propose ConvMLP, which improves generalization through sparse connections and improves model capacity through dynamic weights and a point-wise MLP.

First, the feature matrix output by the previous stage is used as the input of the model, and the graph structure matrix is obtained through the graph network. This matrix is then fed into a convolutional residual multilayer perceptron, and finally the graph convolution multilayer perceptron feature is output after max pooling.

The ConvMLP layer thus combines the graph network, which provides the graph structure matrix; a convolution operation followed by an activation function, which yields the j-th feature vector after convolution; a normalization function; and a channel MLP, which produces the feature vector output by the ConvMLP layer.

4. Experimental Results and Analysis

We evaluate our model on three datasets covering point cloud classification, part segmentation and semantic scene segmentation. For classification, we use the ModelNet40 benchmark [47]. For object part segmentation, we use the ShapeNet Part dataset [48]. For semantic scene segmentation, we use the Stanford Large-Scale 3D Indoor Spaces (S3DIS) dataset [49].

4.1. Classification

Data: We train and evaluate the proposed classification model on the ModelNet40 dataset and compare the results with those of previous work. ModelNet40 is a synthetic dataset of 12,311 computer-aided design (CAD) models from 40 categories, given as triangular meshes; 9843 samples are used for training and 2468 for testing. As evaluation metrics, we use the mean per-class accuracy (mAcc) and the overall accuracy (OA) across all test samples.
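The two classification metrics weight classes differently: OA counts every test sample equally, whereas mAcc first computes the accuracy of each class and then averages over classes, so rare classes weigh as much as frequent ones. A minimal sketch with made-up toy labels:

```python
import numpy as np

def overall_accuracy(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Fraction of all samples classified correctly."""
    return float(np.mean(y_true == y_pred))

def mean_class_accuracy(y_true: np.ndarray, y_pred: np.ndarray, num_classes: int) -> float:
    """Average of per-class accuracies over the classes present in y_true."""
    accs = [np.mean(y_pred[y_true == c] == c) for c in range(num_classes) if np.any(y_true == c)]
    return float(np.mean(accs))

y_true = np.array([0, 0, 0, 0, 1, 2])
y_pred = np.array([0, 0, 0, 0, 0, 2])
print(overall_accuracy(y_true, y_pred))        # 5/6, about 0.833
print(mean_class_accuracy(y_true, y_pred, 3))  # (1 + 0 + 1) / 3, about 0.667
```

Missing the single sample of class 1 costs only one sixth of OA but one third of mAcc.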

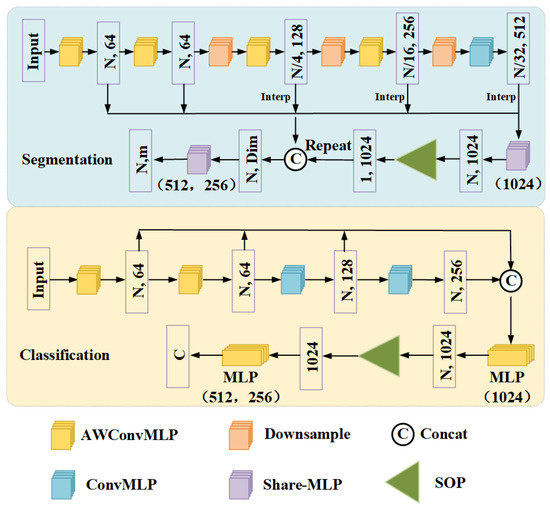

Network configuration: The network structure of the classification model is shown in Figure 2. GC-MLP replaces the first two and the last two of the four EdgeConv layers in DGCNN with AWConvMLP and ConvMLP, respectively. The number of nearest neighbors k is set to 20 in every layer. Shortcut connections use a shared fully connected layer (1024 units) to aggregate multi-scale global features, where the global feature of each layer is obtained through max pooling. The SGD optimizer uses an initial learning rate of 0.03 and a momentum of 0.9. Each layer uses batch normalization and LeakyReLU as the normalization and activation functions, respectively. The dropout rate is set to 0.5. All models are trained for 250 epochs with a batch size of 32. We implement and train the network with PyTorch [50] on an RTX 3090 GPU. Hyperparameters for the other tasks are chosen in a similar manner.

Figure 2.

GC-MLP network structures for the classification and segmentation tasks. The convolution in the convolution layer is a standard convolution operation. The first two layers of the segmentation model use plain AWConvMLP, the last three layers use downsampling followed by AWConvMLP, and linear interpolation with skip connections is finally used to upsample and aggregate the features of each layer. In the figure, the marked feature dimension is 2112 (2048 + 64 for the category vector) in part segmentation and 2048 in semantic segmentation; the classification model uses a dynamic structure.

Results: To make the comparison clearer, we also list the input data type and number of points used by each method. The classification results are shown in Table 1. Compared with recent methods, the proposed model achieves state-of-the-art performance on this dataset. Compared with CSANet, our algorithm improves mAcc and OA by 1.1% and 0.6%, respectively. Compared with MFNet, it is slightly lower in mAcc but 0.3% higher in OA. Compared with DGCNN, it improves mAcc by 0.8% and OA by 0.5%.

Table 1.

Classification results on ModelNet40 dataset.

4.2. Part Segmentation

Data: We train and evaluate the proposed part segmentation model on the ShapeNet Part dataset and compare the results with those of previous work. The dataset contains 16,881 3D models from 16 object categories (14,007 for training and 2874 for testing), annotated with 50 part labels in total; each category has 2–6 parts, and 2048 points are sampled from each shape. As evaluation metrics, we use the class mean IoU (mcIoU), averaged over categories, and the instance mean IoU (mIoU), averaged over all shapes.
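The difference between mcIoU and instance mIoU can be made concrete with a small sketch. It assumes the evaluation convention popularized by PointNet: the IoU of a shape is the mean over the parts of its category, and a part absent from both the ground truth and the prediction counts as IoU 1. The toy labels are invented for illustration.

```python
import numpy as np

def shape_iou(gt: np.ndarray, pred: np.ndarray, part_ids) -> float:
    """Mean IoU over the parts of one shape's category."""
    ious = []
    for part in part_ids:
        inter = np.sum((gt == part) & (pred == part))
        union = np.sum((gt == part) | (pred == part))
        ious.append(1.0 if union == 0 else inter / union)   # absent part counts as 1
    return float(np.mean(ious))

def part_seg_metrics(shapes):
    """shapes: list of (category, gt_labels, pred_labels, part_ids) -> (mcIoU, instance mIoU)."""
    per_category, all_ious = {}, []
    for category, gt, pred, part_ids in shapes:
        iou = shape_iou(np.asarray(gt), np.asarray(pred), part_ids)
        per_category.setdefault(category, []).append(iou)
        all_ious.append(iou)
    mc_iou = float(np.mean([np.mean(v) for v in per_category.values()]))  # average of category means
    instance_iou = float(np.mean(all_ious))                               # average over all shapes
    return mc_iou, instance_iou

# Three perfectly segmented mugs and one poorly segmented airplane.
shapes = [("mug", [0, 1], [0, 1], [0, 1])] * 3 + [("airplane", [2, 2, 3, 3], [2, 2, 2, 2], [2, 3])]
print(part_seg_metrics(shapes))  # (0.625, 0.8125)
```

The single airplane drags the category-averaged mcIoU down much more than the shape-averaged mIoU, which is why both are reported.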

Network configuration: The network structure of the part segmentation model is shown in Figure 2. We replace the EdgeConv layers of DGCNN with AWConvMLP, transition-down (downsampling) layers and ConvMLP. Other settings, such as the SGD optimizer, normalization, activation function and dropout rate, are the same as for the classification task.

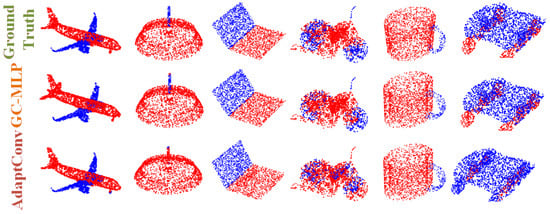

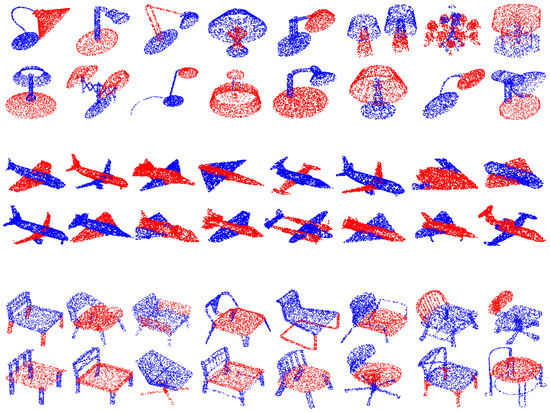

Results: The part segmentation results are shown in Table 2. Visualizations of the part segmentation results are shown in Figure 3, with further examples in Figure 4. Table 2 shows that, compared with recent methods, the proposed model achieves state-of-the-art performance on this dataset; compared with DGCNN, it improves mcIoU by 1.3% and mIoU by 0.9%. Figure 3 shows that the part segmentation predictions of GC-MLP are clean and close to the ground truth.

Table 2.

Part segmentation results on the ShapeNet Part dataset, evaluated as the mean class IoU (mcIoU, %) and mean instance IoU (mIoU, %).

Figure 3.

Visualization of shape part segmentation results on ShapeNet Part. The first row is the ground truth, and the second row shows the predictions of our GC-MLP. From left to right: airplane, chair, earphone, lamp, laptop, motorbike, mug and skateboard.

Figure 4.

Visualization of the part segmentation results for the lamps, airplanes and chairs.

4.3. Indoor Scene Segmentation

Data: We evaluate the proposed semantic segmentation model on the real-world S3DIS dataset and compare the results with those of previous work. The dataset contains 6 indoor areas (between 23 and 68 rooms per area), 11 scene types (e.g., office, conference room, open space) and 13 semantic categories (e.g., ceiling, wall, chair), for a total of 272 rooms. As the evaluation metric, we use the mean class-wise intersection over union (mIoU).
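For scene segmentation, the class-wise IoU is usually accumulated over all evaluated points through a confusion matrix and then averaged over the classes (13 for S3DIS). A minimal sketch with toy labels, assuming integer class labels in the range [0, num_classes):

```python
import numpy as np

def confusion_matrix(gt: np.ndarray, pred: np.ndarray, num_classes: int) -> np.ndarray:
    """cm[a, b] = number of points with ground truth a that were predicted as b."""
    counts = np.bincount(num_classes * gt + pred, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

def class_iou(cm: np.ndarray) -> np.ndarray:
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp        # predicted as the class but belonging to another class
    fn = cm.sum(axis=1) - tp        # belonging to the class but predicted as another class
    denom = tp + fp + fn
    # Classes absent from both ground truth and prediction get 0 here; conventions vary.
    return np.divide(tp, denom, out=np.zeros_like(tp), where=denom > 0)

gt = np.array([0, 0, 1, 1, 2])
pred = np.array([0, 1, 1, 1, 2])
iou = class_iou(confusion_matrix(gt, pred, 3))
print(iou, iou.mean())   # per-class IoU 0.5, 0.667, 1.0; mIoU about 0.722
```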

Network configuration: We follow the same settings as a prior study [9], in which each point is represented as a 6D vector (XYZ, RGB). We perform six-fold cross-validation over the six areas, with each fold using five areas for training and one for validation. Because the other areas overlap with one another whereas Area 5 does not, we report the metrics on Area 5 separately.
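The cross-validation protocol amounts to a simple split over the six area indices. The sketch below is illustrative only, and `area_folds` is a hypothetical helper.

```python
AREAS = [1, 2, 3, 4, 5, 6]

def area_folds(areas=AREAS):
    """Six folds: each area is held out once and the remaining five are used for training."""
    return [([a for a in areas if a != test], test) for test in areas]

# The Area 5 protocol uses only the fold whose held-out area is 5.
area5_train, area5_test = next(fold for fold in area_folds() if fold[1] == 5)
print(area5_train, area5_test)   # [1, 2, 3, 4, 6] 5
```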

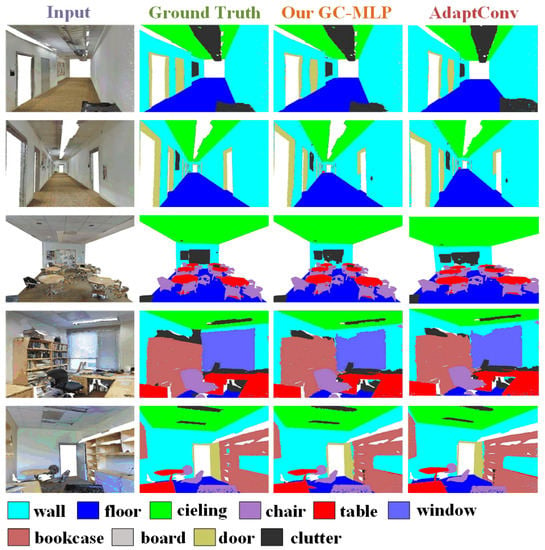

Results: The semantic segmentation results are shown in Table 3, and visualizations are shown in Figure 5. Table 3 shows that, compared with recent methods, the proposed model achieves state-of-the-art performance on this dataset; compared with DGCNN, it improves mIoU by 1.3%. Figure 5 shows that the semantic segmentation predictions of GC-MLP are very close to the ground truth.

Table 3.

Semantic segmentation results on S3DIS dataset evaluated on Area 5. We report the mean classwise IoU (mIoU(%)).

Figure 5.

Visualization of semantic segmentation results on S3DIS Area-5. The first column shows original scene inputs, the second column shows the ground truth annotations, and the third column shows the scenes segmented by our proposed model GC-MLP.

4.4. Ablation Studies

To verify the design choices of the proposed structure, we conducted comparative experiments with different graph convolution structures on the ModelNet40 dataset; the results are shown in Table 4.

Table 4.

Comparison of classification results of different schemes based on ModelNet40 dataset.

AWConvMLP and ConvMLP: Keeping all other parameters unchanged and replacing only the graph convolution module, we compare the proposed model with standard graph convolution (DGCNN), ConvMLP and AWConvMLP. The results are shown in Table 4.

Table 4 shows that GC-MLP, which combines AWConvMLP and ConvMLP, outperforms the other variants.

AWConvMLP vs. ConvMLP: Inspired by the channel MLP, we add an MLP to the graph convolution and test combinations of convolution and MLP in the first two and the last two layers; the results are shown in Table 4.

Table 4 shows that combining the convolution with the MLP improves the results. This is attributed to the local information interaction between the MLP and the convolution layer, which compensates for part of the loss of spatial information.

4.5. Robustness Test

To evaluate the robustness of the proposed model, we tested its sensitivity to point cloud density and to the number of nearest neighbors on the ModelNet40 dataset.

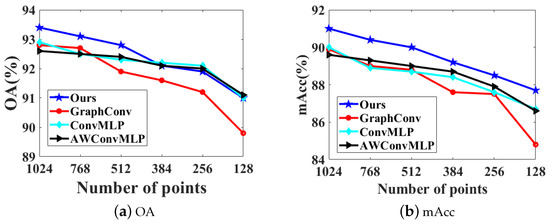

For the point cloud density experiment, with all other parameters fixed, we randomly sample input point clouds with sizes ranging from 128 to 1024 points and compare the proposed model with other graph convolution structures. The results are shown in Figure 6.

Figure 6.

Robustness test on ModelNet40 for classification. GraphConv denotes the standard graph convolution network. ConvMLP denotes the ablation in which the AWConvMLP layers of GC-MLP are replaced with ConvMLP. AWConvMLP denotes the ablation in which the ConvMLP layers of GC-MLP are replaced with AWConvMLP.

Figure 6 shows that the proposed model is more robust to changes in point cloud density than the other graph convolution structures.
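The density test can be reproduced in spirit by randomly subsampling each test cloud before evaluation. In the sketch below the exact list of point counts is an assumption (the text only states the range from 128 to 1024), the cloud is a random stand-in for a ModelNet40 shape, and the classifier call is left as a comment because it depends on the trained model.

```python
import numpy as np

def subsample(points: np.ndarray, n: int, rng: np.random.Generator) -> np.ndarray:
    """Randomly keep n distinct points of a cloud (sampling without replacement)."""
    idx = rng.choice(len(points), size=n, replace=False)
    return points[idx]

rng = np.random.default_rng(0)
cloud = rng.standard_normal((1024, 3))          # stand-in for a ModelNet40 test shape
for n in (1024, 768, 512, 384, 256, 128):       # assumed density levels
    sparse = subsample(cloud, n, rng)
    # logits = model(sparse)  # evaluate the trained classifier here (hypothetical call)
    print(n, sparse.shape)
```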

For the nearest neighbor experiment, with all other parameters fixed, we select a series of representative values of k from 5 to 40 and compare the results using 1024 input points. The results are shown in Table 5.

Table 5.

Results of our classification network with different numbers k of nearest neighbors.

Table 5 shows that the model performs best with k = 20. When k is reduced below this value, the receptive field becomes too small and performance decreases. Conversely, once k exceeds a certain level, further increasing it incorporates information from neighboring local regions, and performance also decreases.
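One way to see why k controls the receptive field is to measure how far away the k-th nearest neighbor lies on average. The sketch uses a synthetic uniform cloud of 1024 points (an assumption; real shapes are not uniform) and counts the point itself as its first neighbor, as in the graph definition of Section 3.2.

```python
import numpy as np

def mean_knn_radius(points: np.ndarray, k: int) -> float:
    """Mean distance from each point to its k-th nearest neighbor (the point itself is the 1st)."""
    d = np.sqrt(((points[:, None, :] - points[None, :, :]) ** 2).sum(-1))  # (N, N) distances
    return float(np.sort(d, axis=1)[:, k - 1].mean())

rng = np.random.default_rng(0)
pts = rng.random((1024, 3))        # synthetic uniform cloud in the unit cube
for k in (5, 10, 20, 40):
    print(k, round(mean_knn_radius(pts, k), 4))
```

The neighborhood radius grows steadily with k, so a small k sees too little context while a large k starts to reach into adjacent regions.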

4.6. Efficiency

To assess the complexity of the proposed model, we report its number of parameters and its accuracy on the ModelNet40 classification task. Table 6 compares these figures with those of representative recent methods.

Table 6.

The number of parameters and overall accuracy of different models.

Table 6 shows that, compared with the other models, the proposed model has fewer parameters while achieving state-of-the-art classification performance.
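Parameter counts such as those in Table 6 can be checked by hand for the MLP and 1×1 convolution layers used here. The sketch assumes layers without bias followed by batch normalization, a common choice in DGCNN-style code but an assumption with respect to GC-MLP; the helper names are hypothetical. In PyTorch, the same total for a built model is obtained with `sum(p.numel() for p in model.parameters())`.

```python
def linear_params(c_in: int, c_out: int, bias: bool = True) -> int:
    """Weights of a fully connected layer or 1x1 convolution, plus an optional bias."""
    return c_in * c_out + (c_out if bias else 0)

def batchnorm_params(c: int) -> int:
    # Learnable scale and shift; running mean and variance are buffers, not parameters.
    return 2 * c

def mlp_params(widths, bias: bool = False, bn: bool = True) -> int:
    """Total learnable parameters of a stack of layers with the given channel widths."""
    total = 0
    for c_in, c_out in zip(widths[:-1], widths[1:]):
        total += linear_params(c_in, c_out, bias) + (batchnorm_params(c_out) if bn else 0)
    return total

print(mlp_params([6, 64, 64]))   # 6*64 + 128 + 64*64 + 128 = 4736
```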

5. Conclusions

In this paper, we propose a graph convolution multilayer perceptron method for 3D point cloud feature learning. Experimental results show that the proposed algorithm not only performs well on benchmark datasets for point cloud classification and segmentation, but also has good robustness and efficiency. AWConvMLP learns features from the most relevant parts of the neighborhood so that the convolution kernel at any relative position can dynamically adapt to the structure of the object, which effectively improves the flexibility of point cloud convolution; global feature learning is then carried out through the weight-sharing multilayer perceptron. ConvMLP improves generalization through sparse connections and improves model capacity through dynamic weights and a global receptive field.

Author Contributions

Conceptualization, Y.W. and G.G.; methodology, Y.W., G.G. and Q.Z.; resources, Y.W. and P.Z.; writing—original draft preparation, Y.W. and Z.L.; writing—review and editing, Y.W., G.G., Q.Z., P.Z., Z.L. and R.F.; visualization, Q.Z. and R.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key projects of National Natural Science Foundation of China: 61731015, 62271393; National key research and development plan: 2020YFC1523301, 2020YFC1523303, 2019YFC1521103; Key research and development plan of Qinghai Province: 2020-SF-140; Key industrial chain projects in Shaanxi Province: 2019ZDLSF07-02, 2019ZDLGY10-01.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Restrictions apply to the availability of these data. The S3DIS data were obtained from Stanford University and accessed on 2 November 2021; they are available at https://goo.gl/forms/4SoGp4KtH1jfRqEj2/ with the permission of Stanford University. Publicly available datasets were also analyzed in this study; they were accessed on 2 November 2021 and can be found at http://modelnet.cs.princeton.edu/ and https://www.shapenet.org/.

Acknowledgments

The authors want to thank Stanford University for providing the experimental datasets.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Su, H.; Maji, S.; Kalogerakis, E.; Learned-Miller, E. Multi-view convolutional neural networks for 3d shape recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 945–953. [Google Scholar]

- Kanezaki, A.; Matsushita, Y.; Nishida, Y. Rotationnet: Joint object categorization and pose estimation using multiviews from unsupervised viewpoints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5010–5019. [Google Scholar]

- Ben-Shabat, Y.; Lindenbaum, M.; Fischer, A. 3dmfv: Three-dimensional point cloud classification in real-time using convolutional neural networks. IEEE Robot. Autom. Lett. 2018, 3, 3145–3152. [Google Scholar] [CrossRef]

- Meng, H.Y.; Gao, L.; Lai, Y.K.; Manocha, D. Vv-net: Voxel vae net with group convolutions for point cloud segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 8500–8508. [Google Scholar]

- Maturana, D.; Scherer, S. Voxnet: A 3d convolutional neural network for real-time object recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 922–928. [Google Scholar]

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. Randla-net: Efficient semantic segmentation of large-scale point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11108–11117. [Google Scholar]

- Huang, Q.; Wang, W.; Neumann, U. Recurrent slice networks for 3d segmentation of point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2626–2635. [Google Scholar]

- Jiang, L.; Zhao, H.; Liu, S.; Shen, X.; Fu, C.W.; Jia, J. Hierarchical point-edge interaction network for point cloud semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 10433–10441. [Google Scholar]

- Thomas, H.; Qi, C.R.; Deschaud, J.E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. Kpconv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 6411–6420. [Google Scholar]

- Qiu, S.; Anwar, S.; Barnes, N. Dense-resolution network for point cloud classification and segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021; pp. 3813–3822. [Google Scholar]

- Wang, L.; Huang, Y.; Hou, Y.; Zhang, S.; Shan, J. Graph attention convolution for point cloud semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10296–10305. [Google Scholar]

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic graph cnn for learning on point clouds. ACM Trans. Graph. (Tog) 2019, 38, 1–12. [Google Scholar] [CrossRef]

- Lin, Z.H.; Huang, S.Y.; Wang, Y.C.F. Convolution in the cloud: Learning deformable kernels in 3d graph convolution networks for point cloud analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1800–1809. [Google Scholar]

- Yu, T.; Meng, J.; Yuan, J. Multi-view harmonized bilinear network for 3d object recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 186–194. [Google Scholar]

- Tatarchenko, M.; Park, J.; Koltun, V.; Zhou, Q.Y. Tangent convolutions for dense prediction in 3D. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3887–3896.

- Lin, Y.; Yan, Z.; Huang, H.; Du, D.; Liu, L.; Cui, S.; Han, X. FPConv: Learning local flattening for point convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4293–4302.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

- Li, H.T.; Todd, Z.; Bielski, N.; Carroll, F. 3D LiDAR point-cloud projection operator and transfer machine learning for effective road surface features detection and segmentation. Vis. Comput. 2022, 38, 1759–1774.

- Le, T.; Duan, Y. PointGrid: A deep network for 3D shape understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9204–9214.

- Choy, C.; Gwak, J.; Savarese, S. 4D spatio-temporal ConvNets: Minkowski convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3075–3084.

- Riegler, G.; Osman Ulusoy, A.; Geiger, A. OctNet: Learning deep 3D representations at high resolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3577–3586.

- Klokov, R.; Lempitsky, V. Escape from cells: Deep Kd-networks for the recognition of 3D point cloud models. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 863–872.

- Park, C.; Jeong, Y.; Cho, M.; Park, J. Fast point transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 16949–16958.

- Yang, J.; Zhang, Q.; Ni, B.; Li, L.; Liu, J.; Zhou, M.; Tian, Q. Modeling point clouds with self-attention and Gumbel subset sampling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3323–3332.

- Zhao, H.; Jiang, L.; Fu, C.W.; Jia, J. PointWeb: Enhancing local neighborhood features for point cloud processing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5565–5573.

- Sheikh, M.; Asghar, M.A.; Bibi, R.; Malik, M.N.; Shorfuzzaman, M.; Mehmood, R.M.; Kim, S.H. DFT-Net: Deep feature transformation based network for object categorization and part segmentation in 3-dimensional point clouds. Sensors 2022, 22, 2512.

- Han, X.F.; Jin, Y.F.; Cheng, H.X.; Xiao, G.Q. Dual transformer for point cloud analysis. IEEE Trans. Multimed. 2022, 1–10.

- Yan, X.; Zheng, C.; Li, Z.; Wang, S.; Cui, S. PointASNL: Robust point clouds processing using nonlocal neural networks with adaptive sampling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5589–5598.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Li, J.; Chen, B.M.; Lee, G.H. SO-Net: Self-organizing network for point cloud analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9397–9406.

- Xu, M.; Zhang, J.; Zhou, Z.; Xu, M.; Qi, X.; Qiao, Y. Learning geometry-disentangled representation for complementary understanding of 3D object point cloud. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 3056–3064.

- Xu, M.; Zhou, Z.; Qiao, Y. Geometry sharing network for 3D point cloud classification and segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12500–12507.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 30, 5105–5114.

- Wang, G.; Zhai, Q.; Liu, H. Cross self-attention network for 3D point cloud. Knowl.-Based Syst. 2022, 247, 108769.

- Li, Y.; Bu, R.; Sun, M.; Wu, W.; Di, X.; Chen, B. PointCNN: Convolution on X-transformed points. Adv. Neural Inf. Process. Syst. 2018, 31. Available online: https://proceedings.neurips.cc/paper/2018/hash/f5f8590cd58a54e94377e6ae2eded4d9-Abstract.html (accessed on 24 October 2022).

- Xu, Y.; Fan, T.; Xu, M.; Zeng, L.; Qiao, Y. SpiderCNN: Deep learning on point sets with parameterized convolutional filters. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 87–102.

- Liu, Y.; Fan, B.; Xiang, S.; Pan, C. Relation-shape convolutional neural network for point cloud analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8895–8904.

- Zhang, Z.; Hua, B.S.; Yeung, S.K. ShellNet: Efficient point cloud convolutional neural networks using concentric shells statistics. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1607–1616.

- Qiu, S.; Anwar, S.; Barnes, N. Geometric back-projection network for point cloud classification. IEEE Trans. Multimed. 2021, 24, 1943–1955.

- Lei, H.; Akhtar, N.; Mian, A. Spherical kernel for efficient graph convolution on 3D point clouds. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3664–3680.

- Li, Y.; Chen, H.; Cui, Z.; Timofte, R.; Pollefeys, M.; Chirikjian, G.S.; Van Gool, L. Towards efficient graph convolutional networks for point cloud handling. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–18 October 2021; pp. 3752–3762.

- Zhou, H.; Feng, Y.; Fang, M.; Wei, M.; Qin, J.; Lu, T. Adaptive graph convolution for point cloud analysis. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–18 October 2021; pp. 4965–4974.

- Simonovsky, M.; Komodakis, N. Dynamic edge-conditioned filters in convolutional neural networks on graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3693–3702.

- Landrieu, L.; Simonovsky, M. Large-scale point cloud semantic segmentation with superpoint graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4558–4567.

- Landrieu, L.; Boussaha, M. Point cloud oversegmentation with graph-structured deep metric learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7440–7449.

- Yang, F.; Davoine, F.; Wang, H.; Jin, Z. Continuous conditional random field convolution for point cloud segmentation. Pattern Recognit. 2022, 122, 108357.

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

- Yi, L.; Kim, V.G.; Ceylan, D.; Shen, I.C.; Yan, M.; Su, H.; Lu, C.; Huang, Q.; Sheffer, A.; Guibas, L. A scalable active framework for region annotation in 3D shape collections. ACM Trans. Graph. 2016, 35, 1–12.

- Armeni, I.; Sener, O.; Zamir, A.R.; Jiang, H.; Brilakis, I.; Fischer, M.; Savarese, S. 3D semantic parsing of large-scale indoor spaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1534–1543.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32. Available online: https://proceedings.neurips.cc/paper/2019/hash/bdbca288fee7f92f2bfa9f7012727740-Abstract.html (accessed on 24 October 2022).

- Qi, C.R.; Su, H.; Nießner, M.; Dai, A.; Yan, M.; Guibas, L.J. Volumetric and multi-view CNNs for object classification on 3D data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5648–5656.

- Wang, C.; Samari, B.; Siddiqi, K. Local spectral graph convolution for point set feature learning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 52–66.

- Li, Y.; Lin, Q.; Zhang, Z.; Zhang, L.; Chen, D.; Shuang, F. MFNet: Multi-level feature extraction and fusion network for large-scale point cloud classification. Remote Sens. 2022, 14, 5707.

- Tchapmi, L.; Choy, C.; Armeni, I.; Gwak, J.; Savarese, S. SEGCloud: Semantic segmentation of 3D point clouds. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 537–547.

- Wang, S.; Suo, S.; Ma, W.C.; Pokrovsky, A.; Urtasun, R. Deep parametric continuous convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2589–2597.

- Liu, Z.; Hu, H.; Cao, Y.; Zhang, Z.; Tong, X. A closer look at local aggregation operators in point cloud analysis. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 326–342.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).