Abstract

In robot-assisted ultrasound-guided needle biopsy, it is essential to calibrate the ultrasound probe and to perform hand-eye calibration of the robot in order to establish a link between intra-operatively acquired ultrasound images and robot-assisted needle insertion. Based on a high-precision optical tracking system, novel methods for ultrasound probe and robot hand-eye calibration are proposed. Specifically, we first fix optically trackable markers to the ultrasound probe and to the robot, respectively. We then design a five-wire phantom to calibrate the ultrasound probe. Finally, we propose an effective hand-eye calibration method that takes advantage of the steady movement of the robot and requires neither an additional calibration frame nor solving the AX = XB equation. After calibration, our system allows for in situ definition of target lesions and aiming trajectories from intra-operatively acquired ultrasound images in order to align the robot for precise needle biopsy. Comprehensive experiments were conducted to evaluate the accuracy of the individual components of our system as well as the overall system accuracy. Experimental results demonstrated the efficacy of the proposed methods.

1. Introduction

Needle biopsy is a well-established procedure that allows for examination of abnormal tissue within the body. For example, percutaneous needle biopsy of suspected primary bone neoplasms is a well-established procedure in specialist centers [1]. Fine needle biopsy has long been established as an accurate and safe procedure for tissue diagnosis of breast mass [2,3]. Amniocentesis is a technique for withdrawing amniotic fluid from the uterine cavity using a needle [4,5,6,7]. Often, these procedures are performed under image guidance. Although some of the needle biopsy procedures can be guided using imaging modalities such as fluoroscopy, CT, MRI, single-photon emission computed tomography (SPECT), positron emission tomography (PET), and optical imaging, there are procedures such as amniocentesis which require continuous ultrasound (US) guidance when taking the safety of the mother and the baby into consideration. US is regarded as one of the most common imaging modalities for needle biopsy guidance as it is relatively cheap, readily available, and uses no ionizing radiation.

US-guided needle biopsies are often performed with hand-held or stereotactic biopsy procedures, which are operator-dependent. Moreover, such procedures require extensive training, are difficult to regulate, and are more challenging to perform when the lesions are small. Consequently, hand-held US-guided biopsies do not always yield ideal results.

To address these challenges, one of the proposed technologies is to integrate a robotic system with US imaging [3,8]. In such a robot-assisted, US-guided needle biopsy system, it is essential to calibrate the US probe and to perform hand-eye calibration of the robot in order to establish a link between intra-operatively acquired US images and robot-assisted needle insertion. In this paper, based on a high-precision optical tracking system, we propose novel methods for US probe and robot hand-eye calibration. Specifically, we first fix optically trackable markers to the US probe and to the robot, respectively. We then design a five-wire phantom to calibrate the US probe. Finally, we propose an effective hand-eye calibration method that takes advantage of the steady movement of the robot without the need to solve the AX = XB equation. After calibration, our system allows for in situ definition of target lesions and aiming trajectories from intra-operatively acquired US images in order to align the robot for precise needle biopsy. The contributions of our paper can be summarized as follows:

- We design a five-wire phantom. Based on this phantom, we propose a novel method for ultrasound probe calibration.

- We propose an effective method for hand-eye calibration which, unlike previous work, requires neither solving the AX = XB equation nor an additional calibration frame.

- Comprehensive experiments are conducted to evaluate the efficacy of the proposed calibration methods as well as the overall system accuracy.

Related Work

Different robotic systems have been developed for US-guided procedures. The robot has to know the spatial information of the target lesion and the aiming trajectory from the US image in order to realize the needle biopsy. The performance of the needle biopsy is dependent upon the image-to-robot registration accuracy.

Rapid and accurate US probe calibration depends on a well-designed phantom, which is expected to reduce the operation time and to improve the accuracy level. There exist different types of calibration phantom [9]. When a point phantom or plane phantom is used, it is very difficult to align the scan probe with the targets [10,11]. Moreover, these methods rely on a manual segmentation that is time-consuming and labor-intensive. The N-wire phantom was designed to solve the alignment problem [12,13,14]. However, it heavily depends on the known geometry constraint [15], which cannot be precisely satisfied considering the errors in fiducial detections from US images. To address the problem, arbitrary wire phantoms were proposed [16,17].

Hand-eye calibration aims to determine the transformation between a vision system and a robot arm system. Hand-eye calibration methods differ according to the kind of vision device used and where it is mounted [18]. Generally, an additional calibration frame is required for the hand-eye calibration to identify the extrinsic and intrinsic parameters of the camera [19,20]. Furthermore, the problem is commonly addressed by solving an equation of the form AX = XB, which formulates the closed-loop system [21]. Different methods and solutions have been developed, including simultaneous closed-form solutions [22], separable closed-form solutions [23], and iterative solutions [24]. The first autonomous hand-eye calibration was proposed by Bennett et al. [25] to identify all parameters of the internal models of both the camera and the robot arm system by an interactive identification method. There also exist methods that identify the hand-eye transformation by recognizing movement trajectories of the reference frame corresponding to fixed robot poses [26]. In such methods, it is critical to choose appropriate poses and movement trajectories in order to realize a rapid and reliable calibration.

2. Overview of Our Robot-Assisted Ultrasound-Guided Needle Biopsy System

Our robot-assisted US-guided needle biopsy system consists of a master computer equipped with a frame grabber (DVI2USB 3.0, Epiphan Systems Inc., Ottawa, ON, Canada), a US machine (ACUSON OXANA2, Siemens Healthcare GmbH, Marburg, Germany) with a 45-mm linear array probe of 9L4 Transducer (Siemens Medical Solutions USA Inc., Pennsylvania, CA, USA), an optical tracking camera (Polaris Vega XT, Northern Digital Inc., Ontario, ON, Canada), and a robot arm (UR 5e, Universal Robots Inc., Odense, Denmark) with a biopsy guide. Via the frame grabber, the master computer grabs real-time US images at a frequency of 10 Hz. It also communicates with the tracking camera to get the poses of the different tracking frames, and with the remote controller of the UR robot in order to realize a steady movement and to receive feedback information, such as robot poses.

During a needle biopsy procedure, the target lesion and the aiming trajectory are planned in the US image grabbed by the master computer. Then, the pose of the guide is adjusted to align with the planned biopsy trajectory. Thus, it is essential to determine the spatial transformation from the two-dimensional (2D) US imaging space to the three-dimensional (3D) robot space, as shown in Figure 1. The transformation can be obtained via three different calibration procedures: US probe calibration, hand-eye calibration, and TCP (Tool Center Point) calibration.

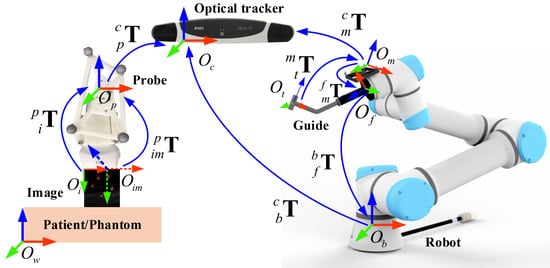

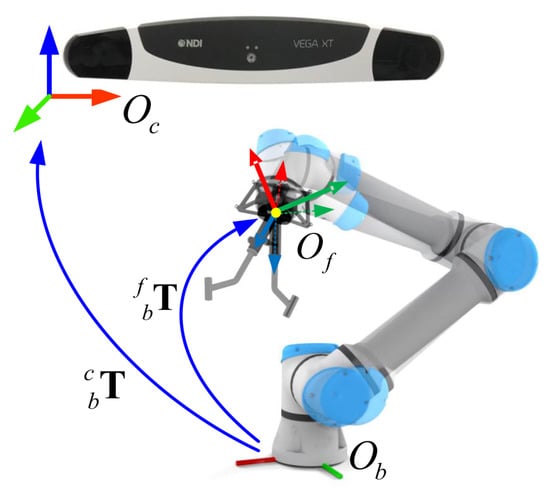

Figure 1.

The involved coordinate systems in our robot-assisted US-guided biopsy system. During a needle biopsy procedure, the pose of the guide is adjusted to align with the biopsy trajectory planned in an acquired US image. See the main text for detailed descriptions.

A robot-assisted ultrasound-guided needle biopsy procedure involves the following coordinate systems (COS), as shown in Figure 1: the 3D COS of the optical tracking camera; the 3D COS of the reference frame on the end effector; the 3D COS of the robotic flange; the 3D COS of the guiding tube; the 3D COS of the robot base; the 2D COS of the US image; the 3D COS of the plane in which the US image is located; the 3D COS of the reference frame attached to the US probe; and the 3D COS of the reference frame attached to the patient/phantom. At any time, the poses of the different tracking frames with respect to the tracking camera are known, such as those of the reference frames on the end effector, the US probe, and the patient/phantom. At the same time, the pose of the robotic flange with respect to the robot base is known. This transformation information can be retrieved from the API (Application Programming Interface) of the associated devices.

A biopsy trajectory can be defined from an intra-operatively acquired US image by a target point and a unit vector that indicates the direction of the trajectory. To simplify the derivation and expression, the planned trajectory in the image COS is written in a format of a matrix, as:

The planned trajectory in the robot-base COS is presented by , which is obtained by the following chain of transformations:

where represents the homogeneous transformation of the tracking camera COS relative to the robot-base COS and is determined by:

where represents the homogeneous transformation of the flange COS relative to the robot-base COS, and is the inverse of , which is the homogeneous transformation of the COS of the reference frame on the end effector relative to the tracking camera COS .
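The chain of transformations described above can be sketched numerically. The snippet below is a minimal illustration rather than the system's actual software. It assumes 4×4 homogeneous matrices, pixel coordinates ordered as (column, row), and a chain that passes from the image through the probe reference frame and the tracking camera to the robot base. All names (`make_transform`, `camera_in_base`, `trajectory_to_base`, and the `T_a_b` arguments, read as "pose of b expressed in a") are hypothetical.

```python
import numpy as np

def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(R, float)
    T[:3, 3] = np.asarray(t, float)
    return T

def camera_in_base(T_base_flange, T_flange_marker, T_cam_marker):
    """Pose of the tracking camera in the robot-base COS.

    T_base_flange   : flange pose reported by the robot
    T_flange_marker : hand-eye matrix (end-effector reference frame in the flange COS)
    T_cam_marker    : tracked pose of the end-effector reference frame
    """
    return T_base_flange @ T_flange_marker @ np.linalg.inv(T_cam_marker)

def trajectory_to_base(target_px, direction_px, T_probe_image, T_cam_probe, T_base_cam):
    """Map a planned target (pixels) and in-plane direction to the robot-base COS."""
    T = T_base_cam @ T_cam_probe @ T_probe_image
    # A point has homogeneous coordinate 1, a direction has 0.
    p = T @ np.array([target_px[0], target_px[1], 0.0, 1.0])
    d = T @ np.array([direction_px[0], direction_px[1], 0.0, 0.0])
    # The image-to-probe matrix contains pixel scaling, so re-normalise the direction.
    return p[:3], d[:3] / np.linalg.norm(d[:3])
```

Treating the direction with a homogeneous coordinate of 0 keeps it unaffected by the translations in the chain, which is why only the target point moves when the probe or the camera is shifted.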

Similar to the definition of the planned trajectory, pose of the center line of the guiding tube in the robot-base COS can be defined by , which is defined by two end points of the center line, and :

To realize the robotic assistance for needle biopsy, the robot is controlled to provide a corresponding pose, so that the center axis of the guiding tube is aligned with the planned trajectory, which can be modeled as:

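The complete system requires three spatial transformations: the transformation from the US image COS to the COS of the reference frame attached to the US probe, which is obtained by US probe calibration; the hand-eye transformation, which is obtained by hand-eye calibration; and the transformation of the guiding tube COS relative to the COS of the reference frame on the end effector, which is obtained by TCP (Tool Center Point) calibration. The accuracy of these spatial calibrations affects the biopsy accuracy. Below, we present details about the three calibration procedures.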

3. Calibration Methods

3.1. US Probe Calibration

The US probe calibration matrix is used to transform a pixel in the 2D US imaging space to the 3D COS of the reference frame attached to the US probe. This transformation matrix is determined by a calibration procedure as described below.

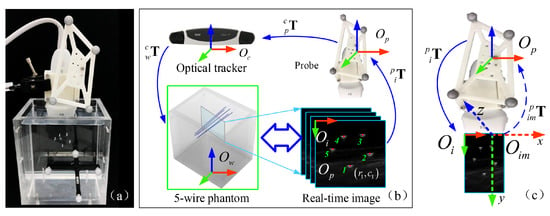

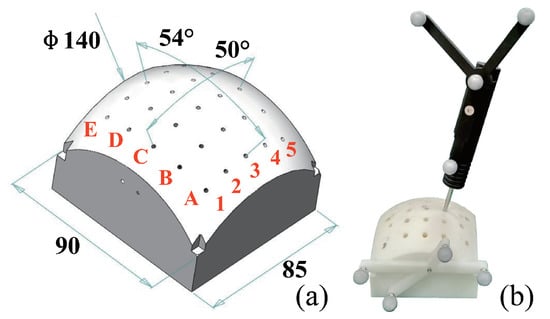

To calibrate , we design a five-wire phantom. The wire phantom uses five pieces of nylon wires with a diameter of mm, as shown in Figure 2. These wires are designed not to be parallel to each other and are submersed in a water tank. During the US probe calibration process, we fix the scanning depth of the US to 5 cm, and the focus depth to cm, which are selected based on typical clinical scenarios. The COS of the wire phantom is defined by fixing an optical reference frame to the phantom.

Figure 2.

Schematic view of the US probe calibration based on a five-wire phantom; (a) experimental setup with a five-wire phantom; (b) spatial transformations involved in US probe calibration; (c) a schematic illustration on how to transform a pixel in the 2D US imaging space to the 3D-COS of the reference frame attached to the US probe.

The transformation can be represented as:

where the scaling matrix describes the relationship between the local 2D US image COS and the 3D COS , which defines the local COS of the plane where the US image is located (see Figure 2 for details); is the rigid body transformation between the 3D COS and the 3D COS of the reference frame attached to the US probe.

The scaling matrix has the form:

where and represent the scaling parameters (mm/pixel) in the x- and y-direction, respectively; and defines the translation between the origins of the local 2D US image COS and the 3D COS . We can multiply into to get , which has the form:

where , , , and is the sum of the translation components of matrices and . Thus, is determined by , and , which are all vectors. Below, we present details on how to compute these three vectors.
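To make the composition concrete, the following sketch multiplies a rigid transform by a scaling matrix and reads off the three vectors that define the calibration. It is only an illustration under stated assumptions: the image column index is taken as the x-coordinate and the row index as the y-coordinate, and the names `compose_probe_calibration`, `pixel_to_probe`, `u_x`, `u_y`, and `u_t` are hypothetical.

```python
import numpy as np

def compose_probe_calibration(sx, sy, t_s, R, t_r):
    """Combine scaling (mm/pixel) and a rigid transform into three 3-vectors.

    sx, sy : scaling in the x- and y-direction (mm/pixel)
    t_s    : 2D offset between the image origin and the image-plane COS origin (mm)
    R, t_r : rotation and translation from the image-plane COS to the probe frame
    """
    T_s = np.array([[sx, 0.0, 0.0, t_s[0]],
                    [0.0, sy, 0.0, t_s[1]],
                    [0.0, 0.0, 1.0, 0.0],
                    [0.0, 0.0, 0.0, 1.0]])
    T_r = np.eye(4)
    T_r[:3, :3] = np.asarray(R, float)
    T_r[:3, 3] = np.asarray(t_r, float)
    T = T_r @ T_s
    # Columns 0 and 1 are the scaled in-plane axes; column 3 is the combined translation.
    return T[:3, 0], T[:3, 1], T[:3, 3]

def pixel_to_probe(c, r, u_x, u_y, u_t):
    """3D position, in the probe reference frame, of the pixel at column c, row r."""
    return u_x * c + u_y * r + u_t
```

Because every pixel lies in the image plane, the third column of the product never contributes, which is why three vectors are enough to describe the calibration.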

The intersections between the US image plane and the wires are used to derive the transformation . They are extracted from acquired US images by a semi-automatic point recognition algorithm [27]. Every detected intersection point is expressed as , where r and c indicate the location of a pixel at the r-th row and c-th column in the image. With , the position of any intersection point can be transformed to the 3D COS , as:

The image-based points {} can be further transformed to the 3D COS via the transformations of the reference frame attached to the phantom and the reference frame attached to the US probe with respect to the tracking camera:

The intersection point is on a straight wire which is rigidly attached to the phantom and can be modeled in the phantom COS as:

where is a coefficient matrix of a line equation in the phantom COS , which can be determined if we know two points on the wire. This is done by digitizing the two end points of the wire using a tracked pointer. By combining (13) and (14), we have:

The point recognition algorithm [27] generates detection points that contain noise. To account for such detection noise, we compute the calibration parameters by solving the following optimization problem:

where represents the location of the intersection pixel between the j-th wire with the i-th US image. is the corresponding known coefficient matrix of the j-th wire in the phantom COS . After obtaining , we can compute transformation according to (8) to finish the US probe calibration.
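One way to solve such a problem is linear least squares, because the reconstructed point is linear in the three unknown vectors and the point-to-line distance can be written with a projection matrix. The sketch below is an assumption about how the optimization could be set up, not necessarily the solver used in this work. The function name `calibrate_probe` and its arguments are hypothetical, and the pose of the probe reference frame in the phantom frame is assumed to have been computed beforehand from the two tracked poses.

```python
import numpy as np

def calibrate_probe(pixels, poses, wire_points, wire_dirs, wire_ids):
    """Estimate (u_x, u_y, u_t) so that pixel (c, r) maps to
    u_x * c + u_y * r + u_t in the probe reference frame.

    pixels      : list of (c, r) wire intersections detected in the US images
    poses       : list of (R, t), pose of the probe reference frame in the
                  phantom frame for the image each pixel came from
    wire_points : one 3D point per wire, in the phantom frame
    wire_dirs   : direction vector of each wire, in the phantom frame
    wire_ids    : index of the wire each pixel belongs to
    """
    rows, rhs = [], []
    eye = np.eye(3)
    for (c, r), (R, t), j in zip(pixels, poses, wire_ids):
        d = np.asarray(wire_dirs[j], float)
        d = d / np.linalg.norm(d)
        # P removes the component along the wire, leaving the offset from the line.
        P = eye - np.outer(d, d)
        # A @ theta = u_x * c + u_y * r + u_t with theta = [u_x, u_y, u_t].
        A = np.hstack([c * eye, r * eye, eye])
        rows.append(P @ R @ A)
        rhs.append(P @ (np.asarray(wire_points[j], float) - t))
    theta, *_ = np.linalg.lstsq(np.vstack(rows), np.concatenate(rhs), rcond=None)
    return theta[0:3], theta[3:6], theta[6:9]
```

Each detected point contributes two independent constraints, so the nine unknowns need probe poses with varied orientation and wires that are not parallel, which is consistent with the phantom design described above.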

3.2. Hand-Eye Calibration

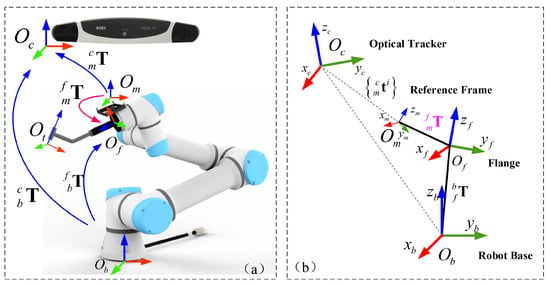

The hand-eye calibration is to establish the spatial transformation between the optical tracking camera and the robot. In this work, the hand-eye calibration is to derive the transformation of the optical reference frame attached to the end effector with respect to the robot base. Generally, the hand-eye transformation is represented by a homogeneous matrix , which is composed of a rotation matrix and a translation vector . Our hand-eye calibration procedure involves four 3D COSs as shown in Figure 3, including the robot-base COS , the flange COS , the tracking camera COS , and the COS of reference frame attached to the end effector.

Figure 3.

A schematic illustration of spatial transformations involved in the hand-eye calibration. (a) the set up; (b) the coordinate systems.

A conventional way to solve the hand-eye calibration problem requires solving the AX = XB equation. In this study, instead of solving this equation, we propose a novel hand-eye calibration method that takes advantage of the steady movement of the robot and needs no additional calibration frame. Specifically, we observe that the orientation of the reference frame changes only if the flange rotates. By controlling the flange to move along two different types of trajectories, and by tracking the poses of the reference frame attached to the robot with respect to the tracking camera during the movement, we can compute the rotation matrix and the translation vector of the hand-eye calibration matrix separately.

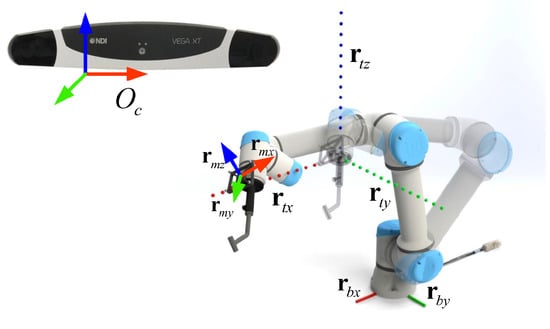

By definition, each column vector of a rotation matrix gives the components of one coordinate axis of a COS expressed in another COS. As shown in Figure 4, it is feasible to move the flange along the three coordinate axes of the robot-base COS while keeping its orientation unchanged. Consequently, three line trajectories of the reference frame are recorded by the tracking camera, which can be used to compute the three column vectors of the rotation matrix, respectively. In detail, we compute three unit vectors from the recorded trajectories, which represent the directions of the three coordinate axes of the robot-base COS in the tracking camera COS.

Figure 4.

Movement trajectories of the reference frame for identifying the three column vectors, , , and , of the rotation matrix . During the movement, we keep the orientation of the flange unchanged under the observation of the tracking camera.

Hence, the rotation matrix can be written as:

Considering the potential tracking errors, we decompose (17) with singular value decomposition (SVD) to preserve the orthogonality. The result (17) is:

where indicates the matrix determinant, and is the sign function.
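The step from recorded trajectories to an orthogonal rotation matrix can be sketched as follows. This is a minimal illustration that assumes each trajectory is an ordered array of tracked 3D positions and that the line direction is taken from a principal-component fit, which the text does not specify. The function names `line_direction`, `nearest_rotation`, and `base_axes_in_camera` are hypothetical.

```python
import numpy as np

def line_direction(points):
    """Unit direction of the best-fit line through ordered 3D points."""
    pts = np.asarray(points, float)
    centered = pts - pts.mean(axis=0)
    _, _, vt = np.linalg.svd(centered)
    d = vt[0]
    # Orient the direction from the first recorded point to the last one.
    if np.dot(d, pts[-1] - pts[0]) < 0:
        d = -d
    return d

def nearest_rotation(M):
    """Closest rotation matrix to M in the Frobenius sense, via SVD."""
    U, _, Vt = np.linalg.svd(M)
    # The sign of the determinant guards against returning a reflection.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    return U @ D @ Vt

def base_axes_in_camera(traj_x, traj_y, traj_z):
    """Rotation whose columns are the robot-base axes seen by the tracking camera."""
    M = np.column_stack([line_direction(t) for t in (traj_x, traj_y, traj_z)])
    return nearest_rotation(M)
```

With noisy tracking data the three fitted directions are not exactly perpendicular, so the projection changes them slightly while guaranteeing a proper rotation with determinant +1.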

Then, the rotation matrix can be obtained through a chain of spatial transformations:

where the right subscript i indicates the i-th points in the movement trajectories. is the inverse of . is the orientation matrix of the reference frame attached to the robot with respect to the tracking camera.

Following (19), each point on the trajectories will give a different when taking tracking errors into consideration. We define a matrix by column vectors , , and of , as well as a column vector by column vectors , , and of the rotation matrix . Because a rotation matrix is orthogonal, we further optimize the hand-eye calibration by using a least-squares fitting, as:

where

is a zero vector.

We can then obtain the rotation matrix in terms of , and preserve its orthogonality by using SVD.
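As an illustration of this step, the sketch below computes one hand-eye rotation estimate per recorded pose through the chain of rotations and then combines the estimates. The combination shown here, an element-wise mean followed by an SVD projection, is a common least-squares choice that is swapped in for illustration and may differ in detail from the fitting used in this work. The name `hand_eye_rotation` and the `R_a_b` arguments (orientation of b expressed in a) are hypothetical.

```python
import numpy as np

def nearest_rotation(M):
    """Closest rotation matrix to M in the Frobenius sense, via SVD."""
    U, _, Vt = np.linalg.svd(M)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    return U @ D @ Vt

def hand_eye_rotation(R_base_flange_list, R_cam_base, R_cam_marker_list):
    """Rotation of the end-effector reference frame relative to the flange.

    R_base_flange_list : flange orientations reported by the robot
    R_cam_base         : robot-base axes expressed in the tracking camera COS
    R_cam_marker_list  : tracked orientations of the reference frame
    """
    estimates = [
        # flange <- base <- camera <- reference frame
        R_bf.T @ R_cam_base.T @ R_cm
        for R_bf, R_cm in zip(R_base_flange_list, R_cam_marker_list)
    ]
    # Averaging the estimates and re-projecting restores orthogonality.
    return nearest_rotation(np.mean(estimates, axis=0))
```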

After obtaining the rotation matrix , we can compute the rotation matrix at any time point. Now, we need to compute the translation vector , which represents the offset of the origin of the COS relative to the flange COS . This is done by controlling the movement of the robot such that the flange is rotated around its origin and by maintaining a fixed relationship between the camera and the robot base during the movement. Then, considering two different poses indexed by i and j in the rotational trajectory, we have:

As we are only interested in the translational part, we can decompose all the homogeneous transformations according to the block operation of the matrix to obtain:

In deriving the above equations, as shown in Figure 5, we use two properties: (1) the flange is rotated around its origin, so the position of the flange origin in the robot-base COS is constant, and (2) we maintain a fixed relationship between the camera and the robot base, so the rotation and the translation between the tracking camera COS and the robot-base COS are constant. With a simple mathematical manipulation, we have:

Figure 5.

Rotating around the origin of the flange COS. The yellow point indicates the origin of the flange COS. During the rotation, we maintain a fixed relationship between the camera and the robot base.

In the above equation, the only unknown is the translation vector of the hand-eye calibration matrix; all other elements are either known or can be retrieved from the corresponding device's API. As for the rotation matrix, we can improve the translation vector calibration by using a least-squares fitting.
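The least-squares estimate of the translation can be sketched as below. The sketch relies on the two properties stated above: differencing two poses cancels the constant flange origin and the constant camera-to-base translation, which leaves a linear equation in the unknown offset. The function name `hand_eye_translation` and its arguments are hypothetical, and the camera-to-base rotation is assumed to be available from the rotation step.

```python
import numpy as np
from itertools import combinations

def hand_eye_translation(R_base_flange_list, marker_pos_cam_list, R_base_cam):
    """Offset of the reference-frame origin expressed in the flange COS.

    R_base_flange_list  : flange orientations while rotating about the flange origin
    marker_pos_cam_list : tracked positions of the reference-frame origin (camera COS)
    R_base_cam          : orientation of the tracking camera in the robot-base COS
    """
    A, b = [], []
    for i, j in combinations(range(len(R_base_flange_list)), 2):
        # (R_i - R_j) t = R_base_cam (c_i - c_j) for every pair of poses.
        A.append(R_base_flange_list[i] - R_base_flange_list[j])
        b.append(R_base_cam @ (np.asarray(marker_pos_cam_list[i], float)
                               - np.asarray(marker_pos_cam_list[j], float)))
    t, *_ = np.linalg.lstsq(np.vstack(A), np.concatenate(b), rcond=None)
    return t
```

A single pair of poses leaves the component along the rotation axis undetermined, so the flange should be rotated about at least two different axes for the stacked system to have full rank.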

3.3. TCP Calibration

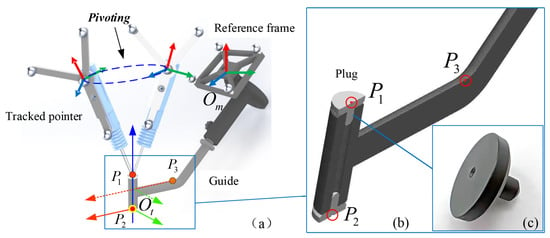

We need to conduct the TCP calibration in order to realize closed-loop vision control of the pose of the guide under the tracking camera. The TCP calibration is a procedure to estimate the transformation of the COS defined on the guiding tube relative to the COS of the reference frame attached to the end effector. In this calibration procedure, three COSs are utilized: the tracking camera COS, the local COS of the guiding tube, and the COS of the reference frame attached to the end effector, as shown in Figure 6.

Figure 6.

TCP calibration by using a tracked pointer. (a) Positions of points , and are obtained by pivoting, which are used to build the local COS of the guiding tube. Specifically, the origin of the local COS is located at . (b) Two points and are at the center of plugs inserted into the guiding tube, and the third point is on the guide. (c) A plug is designed for digitizing the end point of the guiding tube.

As shown in Figure 6, the local COS of the guiding tube can be determined by three points, where and are two end points on the center axis of the guiding tube, and is a point on the guide. In order to determine two end points, plugs with a sharp indent are designed and inserted into the guiding tube. We then obtain the positions of these three points by using a tracked pointer pivoting at the corresponding indent.

In the local COS , the origin is defined by the point , the z-axis is determined by and , and the x-z plane is the plane containing the three points. The coordinate axes can be modeled by the three points. , , and are all column vectors. We further obtain the homogeneous transformation by its origin and coordinate axes as:

where

We then combine with the pose of the reference frame to obtain the transformation as:
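The construction of the guiding-tube frame from three digitized points, and its combination with the tracked pose of the reference frame, can be sketched as follows. This is an illustration only: taking the first end point as the origin and pointing the x-axis toward the side of the third point are assumptions about the sign conventions, and the names `guide_frame_in_camera` and `tcp_transform` are hypothetical.

```python
import numpy as np

def guide_frame_in_camera(p1, p2, p3):
    """Pose of the guiding-tube COS in the tracking camera COS.

    p1, p2 : end points on the center axis of the guiding tube
    p3     : additional point on the guide (fixes the x-z plane)
    """
    p1, p2, p3 = (np.asarray(p, float) for p in (p1, p2, p3))
    z = (p2 - p1) / np.linalg.norm(p2 - p1)
    # y is normal to the plane through the three points, so x and z lie in it.
    y = np.cross(z, p3 - p1)
    y = y / np.linalg.norm(y)
    x = np.cross(y, z)
    T = np.eye(4)
    T[:3, 0], T[:3, 1], T[:3, 2], T[:3, 3] = x, y, z, p1
    return T

def tcp_transform(T_cam_marker, T_cam_guide):
    """Guiding-tube COS expressed in the end-effector reference frame."""
    return np.linalg.inv(T_cam_marker) @ T_cam_guide
```

The three points must not be collinear; otherwise the cross product vanishes and the x-z plane is undefined.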

4. Evaluations and Experiments

4.1. Performance Evaluation

For the robot-assisted needle biopsy, the target point and the direction vector of the trajectory are planned in an acquired US image. By the transformation , they are transformed from the US imaging space into the physical space. Hence, the results of the spatial calibrations affect the system performance. The US probe calibration affects the recognition and reconstruction of the planned trajectory, while the hand-eye calibration and the TCP calibration affect the accuracy of the robot control.

Accuracy evaluation on the US probe calibration is conducted by comparing reconstructed points, lines, and planes with the corresponding ground truth. With the aid of the tracking camera, the detected points in US images are reconstructed in the phantom space. The deviation between the recognized points and the digitized wire, which is used as the ground truth, and the incline angle between the fitted line and the digitized wire are used to evaluate the calibrations.

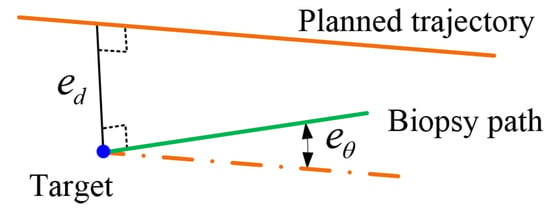

For the robotic system, the system performance is quantified by the deviations between the actual path and the planned biopsy trajectory. The deviations consist of the incline angle (unit: °), as well as the distance (unit: mm) from the planned target point to the biopsy path, as shown in Figure 7.

Figure 7.

Metrics used to evaluate the accuracy including the angle as well as the distance between two spatial lines.
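Both metrics can be computed directly from the line parameters. The sketch below is a minimal illustration; treating the lines as undirected, so that the angle falls between 0° and 90°, is an assumption, and the function names `incline_angle_deg` and `point_to_line_distance` are hypothetical.

```python
import numpy as np

def incline_angle_deg(d_planned, d_actual):
    """Angle in degrees between two lines given by their direction vectors."""
    a = np.asarray(d_planned, float)
    b = np.asarray(d_actual, float)
    # abs() ignores the sense of the directions; clip() guards against round-off.
    cos_angle = abs(np.dot(a, b)) / (np.linalg.norm(a) * np.linalg.norm(b))
    return np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0)))

def point_to_line_distance(point, line_point, line_dir):
    """Shortest distance from a target point to the biopsy path."""
    d = np.asarray(line_dir, float)
    d = d / np.linalg.norm(d)
    v = np.asarray(point, float) - np.asarray(line_point, float)
    # Subtract the component of v along the line and measure what remains.
    return np.linalg.norm(v - np.dot(v, d) * d)
```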

4.2. Validation of the US Probe Calibration

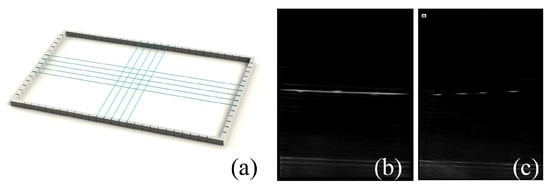

As shown in Figure 8, a plane-wire phantom was designed to verify the US probe calibration. Five longitudinal wires (LWs) and five transverse wires (TWs) were woven on a supporting frame, which was submerged in a water tank. The diameter of the wires was mm. The spacing between the parallel wires was about 10 mm. We used the same semi-automatic point recognition algorithm [27] as in the probe calibration to recognize the intersection points between the US image plane and the validation wire phantom, which were represented as a set of pixels. We also established the line equations of the validation wire phantom using a tracked pointer, which were used as the ground truth.

Figure 8.

Validation wire phantom. (a) a supporting frame with crossing wires; (b) one of the US images intersecting with a transverse wire; (c) one of US images intersecting with longitudinal wires.

4.3. Validation of Hand-Eye Calibration

A plastic phantom fabricated by 3D printing was used to validate the hand-eye calibration and the TCP calibration. The phantom had a dimension of mm . In addition, the phantom was designed with drilling trajectories. As shown in Figure 9, the locations of the drilling trajectories inside the plastic phantom were coded in alphanumeric form. The robot was controlled to align a mm drill bit with the planned trajectory. After drilling, a tracked pointer was used to digitize the drilled paths.

Figure 9.

Plastic phantom for verifying hand-eye calibration. (a) geometric parameters; (b) using a tracked pointer to digitize actual drilling paths inside the phantom.

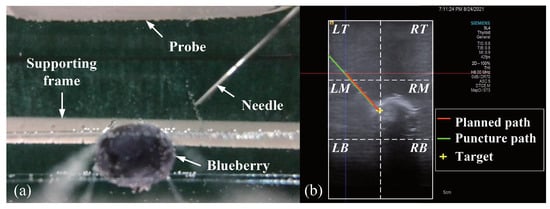

4.4. Blueberry Biopsy Experiments

We designed biopsy experiments on a blueberry submerged in a water tank, as shown in Figure 10. The target blueberry had a size of mm mm, and the biopsy needle had a diameter of mm. We divided the water tank into blocks and fixed the blueberry in the lower four blocks to simulate deep-seated lesions. Moreover, the incline angle of the planned trajectory was varied over the range to . The biopsy path can be tracked in real time by the ultrasound system. Thus, the biopsy accuracy was quantified by path deviations.

Figure 10.

A schematic illustration of the setup for the blueberry biopsy experiments (a) and a schematic view of the partition of the water tank (b).

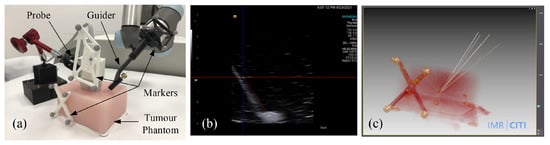

4.5. Tumor Phantom Biopsy Experiments

We further conducted biopsy experiments on a soft tumor phantom (LYDMED, China) to validate the potential of the proposed system for tumor biopsy. The soft tumor phantom is made of silicone rubber and has a size of mm , as shown in Figure 11.

Figure 11.

Soft tumor phantom biopsy experiments. (a) experimental setup including a robot arm with the guide, the soft tumor phantom with a reference frame attached, and the biopsy needle; (b) US image of a biopsy needle inserted into the tumor phantom; (c) CT image of the phantom after needle insertions.

There is a simulated tumor with a diameter of about 10 mm embedded inside the soft phantom. In addition, an optical reference frame was fixed to the phantom. The planned trajectories in the COS of the reference frame were obtained by using the method introduced in [28] and were treated as the ground truth. A needle with a diameter of mm was inserted into the phantom through the guide and was kept inside the phantom. We repeated the same procedure six times, each time with a different target point and aiming trajectory. After needle insertion, we obtained a CT scan of the phantom. The biopsy accuracy was then measured in the 3D CT imaging space.

5. Experimental Results

5.1. US Probe Calibration

During the US probe calibration, we acquired 110 frames of US images, of which 90 images were used as the data set to derive the transformation , and the others were used as the test data to evaluate the calibration accuracy. We used the test set to reconstruct the five-wire phantom. The distance between the detected points and the adjacent wires, and the incline angle of the reconstructed lines, are presented in Table 1. An average incline angle of and an average distance of mm were found.

Table 1.

Results of the US probe calibration.

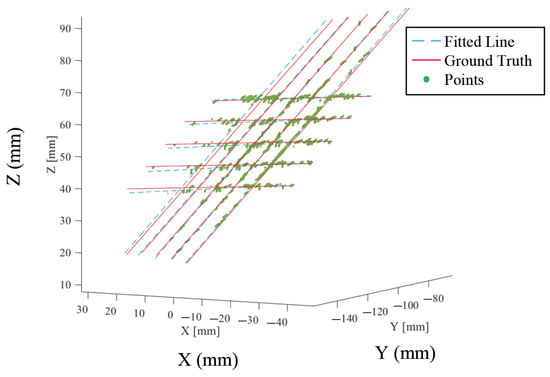

5.2. Validation of US Probe Calibration

For the US probe calibration validation, 176 frames of images were acquired, and 26,868 intersection points were detected from these images, which were used to reconstruct the plane phantom, as shown in Figure 12. The incline angle of the normal vector of the fitted plane was . The mean distance between the corresponding position of the detected points and the wires, and the mean incline angle between the fitted lines, are presented in Table 2. From this table, one can see that our US probe calibration method achieved sub-millimeter and sub-degree accuracy, which were accurate enough for our applications.

Figure 12.

Validation of the US probe calibration. Red lines indicate the ground truth wires while blue lines are fitted lines. Green points are the points detected from the US images.

Table 2.

Results of the experiments on validation of US probe calibration.

5.3. Validation of Hand-Eye Calibration

In the hand-eye calibration validation experiments, the distance and the incline angle between the drilling path and the planned trajectory are presented in Table 3. Specifically, we found that the mean distance deviation was mm and the maximum distance deviation mm. The mean and the maximum incline angle were and , respectively. The relatively large angular error might be caused by the vibration of the guide during drilling.

Table 3.

Results of the experiments on validation of hand-eye calibration.

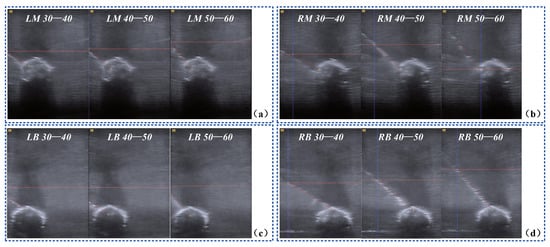

5.4. Blueberry Biopsy Experiments

As shown in Figure 13, we quantified the deviations of the targets and the trajectories when the blueberry was submerged in different blocks of the water tank. The experimental results of the 72 biopsies on a blueberry are presented in Table 4. An average distance error of mm and an average angular error of were found. Over the 72 biopsies, the success rate was .

Figure 13.

US images of the biopsy on a blueberry. (a) biopsy on the targets in the left middle block from three different angles; (b) biopsy on the targets in the right middle block from three different angles; (c) biopsy on the targets in the left bottom block from three different angles; (d) biopsy on the targets in the right bottom block from three different angles.

Table 4.

Results of the blueberry biopsy experiments.

5.5. Tumor Phantom Biopsy Experiments

The overall system performance was evaluated by needle biopsy on a tumor phantom. Results of the tumor phantom experiment are presented in Table 5. The success rate of the needle biopsy into the tumor was . An average distance error of mm and an average angular error of were found. We attributed the relatively large errors to the elastic deformation of the biopsy needle during insertion. Nonetheless, the achieved accuracy is good enough for the target applications and is better than the results achieved by most of the state-of-the-art methods [2,3,8,13,29].

Table 5.

Results of the tumor phantom biopsy experiments.

5.6. Comparison with the State-of-the-Art (SOTA) Methods

For US probe calibration, we compared the reconstruction accuracy with SOTA methods using other types of phantoms, including the method introduced by Wen et al. [15], the method based on an eight-wire phantom [17], the method based on an N-wire phantom [13], the method based on a pyramid phantom [14], and the method based on a Z-wire phantom [9]. In terms of the mean reconstruction accuracy, our method achieved the best result. Table 6 shows the comparison results.

Table 6.

Comparison with other SOTA US probe calibration methods.

Additionally, we compared our method with other SOTA biopsy methods, including the method introduced by Tanaiutchawoot et al. [30], the method introduced by Treepong et al. [29], and the method introduced by Chevrie et al. [31]. Table 7 shows the comparison results, where the type of phantom, the achieved accuracy, and the biopsy success rate of each method are presented. From this table, one can see that our method achieved the best result in terms of both the accuracy and the biopsy success rate.

Table 7.

Comparison with other SOTA biopsy methods. “-” indicates that the corresponding data are not available.

6. Discussion

Previous studies of needle biopsy have emphasized the applications of fluoroscopy and CT as imaging modalities [32,33]. Compared with these imaging modalities, US has a major advantage in that it exposes neither the patient nor the staff to ionizing radiation. In addition, robot systems have the advantage of ensuring stability and accuracy [30,34]. Taking advantage of an ultrasound system and a robot arm, we developed and validated a robot-assisted system for safe needle biopsy.

Three spatial calibration methods, including US probe calibration, hand-eye calibration, and TCP calibration, were developed for the robot-assisted biopsy system to realize a rapid registration of patient-image-robot. We validated the US probe calibration by reconstruction analysis of wire phantoms. Our method also achieved a higher accuracy than previously reported results [13,15,16,35]. Different from previous works [10,12,17], our US probe calibration is not dependent upon the known geometric parameters, which makes it easier to manufacture a calibration phantom. We further investigated a combination of the hand-eye calibration and TCP calibration by drilling experiments.

The proposed hand-eye calibration method merits further discussion. Our method does not need to solve the equation "AX = XB", as required by previously introduced hand-eye calibration methods [36]. Compared with methods that depend on iterative solutions [24,25] or probabilistic models [22,37], our method is much faster. It also eliminates the need for an additional calibration frame, as required in [19,20]. Our hand-eye calibration transformation is derived from the movement trajectories of the reference frame attached to the end effector, taking advantage of the steady movement of the robot.
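For context, the conventional formulation that the proposed method avoids is commonly written as AX = XB, where A and B are paired relative motions of two rigidly linked frames and X is the unknown fixed transformation between them. The following minimal sketch only illustrates what that constraint means on synthetic data; it is not the authors' procedure. All names (`rigid`, `invert`, `matmul`, `max_abs_diff`) and all numeric values are hypothetical and chosen purely for illustration.

```python
import math

def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def rigid(axis, angle, t):
    """Homogeneous transform: rotation about a coordinate axis plus translation t."""
    c, s = math.cos(angle), math.sin(angle)
    rot = {
        "x": [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]],
        "y": [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]],
        "z": [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]],
    }[axis]
    return [rot[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def invert(T):
    """Invert a rigid transform using [R t]^-1 = [R^T, -R^T t]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [-sum(Rt[i][k] * T[k][3] for k in range(3)) for i in range(3)]
    return [Rt[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def max_abs_diff(a, b):
    """Largest element-wise absolute difference between two 4x4 matrices."""
    return max(abs(a[i][j] - b[i][j]) for i in range(4) for j in range(4))

# Hypothetical ground-truth transform X between the two rigidly linked frames.
X = rigid("z", 0.3, [10.0, -5.0, 20.0])
# Hypothetical relative motion B observed in one frame.
B = rigid("x", 0.5, [1.0, 2.0, 3.0])
# The matching relative motion A in the other frame, consistent with X.
A = matmul(matmul(X, B), invert(X))

# The true X satisfies AX = XB up to floating-point error.
residual = max_abs_diff(matmul(A, X), matmul(X, B))

# A perturbed candidate (rotation off by 0.1 rad) violates the constraint.
X_wrong = rigid("z", 0.4, [10.0, -5.0, 20.0])
residual_wrong = max_abs_diff(matmul(A, X_wrong), matmul(X_wrong, B))

print(f"residual with true X:      {residual:.2e}")
print(f"residual with perturbed X: {residual_wrong:.2e}")
```

Conventional solvers search for the X that minimizes such residuals over many motion pairs, which is the step the trajectory-based derivation described above is reported to avoid.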

Our study has limitations. First, we did not consider the influence of respiratory motion, which may degrade the performance of the proposed system. Second, the accuracy of the proposed system was affected by the elastic deformation and friction of the target object, which is consistent with the finding reported in [31]. Nonetheless, results from our comprehensive experiments demonstrated that the proposed robot-assisted system could achieve sub-millimeter accuracy.

7. Conclusions

In this paper, we developed a robot-assisted system for ultrasound-guided needle biopsy. Specifically, based on a high-precision optical tracking system, we proposed novel methods for US probe calibration and for robot hand-eye calibration. Our US probe calibration method was based on a five-wire phantom and achieved sub-millimeter and sub-degree calibration accuracy. We additionally proposed an effective method for robot hand-eye calibration that takes advantage of the steady movement of the robot and does not need to solve the equation AX = XB. We conducted comprehensive experiments to evaluate the accuracy of the different calibration methods as well as the overall system accuracy. The results demonstrate that the proposed robot-assisted system has great potential in various clinical applications.

Author Contributions

Conceptualization, G.Z.; Data curation, J.L., W.S. and Y.Z.; Formal analysis, J.L.; Funding acquisition, G.Z.; Investigation, J.L., W.S. and Y.Z.; Methodology, J.L. and G.Z.; Project administration, G.Z.; Software, J.L. and W.S.; Supervision, G.Z.; Validation, J.L., W.S. and Y.Z.; Visualization, J.L.; Writing—original draft, J.L.; Writing—review and editing, G.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported partially by the National Natural Science Foundation of China (U20A20199) and by the Shanghai Municipal Science and Technology Commission (20511105205).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of School of Biomedical Engineering, Shanghai Jiao Tong University, China (Approval No. 2020031, approved on 8 May 2020).

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| 2D | Two-dimensional |
| 3D | Three-dimensional |
| API | Application programming interface |
| COS | Coordinate system |
| LB | Left bottom |
| LM | Left middle |
| LW | Longitudinal wire |
| RB | Right bottom |
| RM | Right middle |
| SVD | Singular value decomposition |
| TCP | Tool center point |
| TW | Transverse wire |
| US | Ultrasound |

References

- Saifuddin, A.; Mitchell, R.; Burnett, S.J.D.; Sandison, A.; Pringle, J.A.S. Ultrasound-Guided Needle Biopsy of Primary Bone Tumours. J. Bone Jt. Surg. Br. 2000, 82-B, 50–54.

- Mallapragada, V.G.; Sarkar, N.; Podder, T.K. Robot-Assisted Real-Time Tumor Manipulation for Breast Biopsy. IEEE Trans. Robot. 2009, 25, 316–324.

- Mallapragada, V.; Sarkar, N.; Podder, T.K. Toward a Robot-Assisted Breast Intervention System. IEEE ASME Trans. Mechatron. 2011, 16, 1011–1020.

- Bibin, L.; Anquez, J.; de la Plata Alcalde, J.P.; Boubekeur, T.; Angelini, E.D.; Bloch, I. Whole-Body Pregnant Woman Modeling by Digital Geometry Processing with Detailed Uterofetal Unit Based on Medical Images. IEEE Trans. Biomed. Eng. 2010, 57, 2346–2358.

- Tara, F.; Lotfalizadeh, M.; Moeindarbari, S. The Effect of Diagnostic Amniocentesis and Its Complications on Early Spontaneous Abortion. Electron. Physician 2016, 8, 2787–2792.

- Connolly, K.A.; Eddleman, K.A. Amniocentesis: A Contemporary Review. World J. Obstet. Gynecol. 2016, 5, 58–65.

- Goto, M.; Nakamura, M.; Takita, H.; Sekizawa, A. Study for Risks of Amniocentesis in Anterior Placenta Compared to Placenta of Other Locations. Taiwan J. Obstet. Gynecol. 2021, 60, 690–694.

- Lim, S.; Jun, C.; Chang, D.; Petrisor, D.; Han, M.; Stoianovici, D. Robotic Transrectal Ultrasound Guided Prostate Biopsy. IEEE Trans. Biomed. Eng. 2019, 66, 2527–2537.

- Hsu, P.-W.; Prager, R.W.; Gee, A.H.; Treece, G.M. Real-Time Freehand 3D Ultrasound Calibration. Ultrasound Med. Biol. 2008, 34, 239–251.

- Amin, D.V.; Kanade, T.; Jaramaz, B.; DiGioia, A.M.; Nikou, C.; LaBarca, R.S.; Moody, J.E. Calibration Method for Determining the Physical Location of the Ultrasound Image Plane. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention, Utrecht, The Netherlands, 14–17 October 2001; pp. 940–947.

- Prager, R.W.; Rohling, R.N.; Gee, A.H.; Berman, L. Rapid Calibration for 3D Freehand Ultrasound. Ultrasound Med. Biol. 1998, 24, 855–869.

- Najafi, M.; Afsham, N.; Abolmaesumi, P.; Rohling, R. A Closed-Form Differential Formulation for Ultrasound Spatial Calibration: Multi-Wedge Phantom. Ultrasound Med. Biol. 2014, 40, 2231–2243.

- Carbajal, G.; Lasso, A.; Gómez, Á.; Fichtinger, G. Improving N-Wire Phantom-Based Freehand Ultrasound Calibration. Int. J. CARS 2013, 8, 1063–1072.

- Lindseth, F.; Tangen, G.A.; Langø, T.; Bang, J. Probe Calibration for Freehand 3D Ultrasound. Ultrasound Med. Biol. 2003, 29, 1607–1623.

- Wen, T.; Wang, C.; Zhang, Y.; Zhou, S. A Novel Ultrasound Probe Spatial Calibration Method Using a Combined Phantom and Stylus. Ultrasound Med. Biol. 2020, 46, 2079–2089.

- Shen, C.; Lyu, L.; Wang, G.; Wu, J. A Method for Ultrasound Probe Calibration Based on Arbitrary Wire Phantom. Cogent Eng. 2019, 6, 1592739.

- Ahmad, A.; Cavusoglu, M.C.; Bebek, O. Calibration of 2D Ultrasound in 3D Space for Robotic Biopsies. In Proceedings of the 2015 International Conference on Advanced Robotics (ICAR), Istanbul, Turkey, 27–31 July 2015; pp. 40–46.

- Liu, G.; Li, C.; Li, G.; Yu, X.; Li, L. Automatic Space Calibration of a Medical Robot System. In Proceedings of the 2016 IEEE International Conference on Mechatronics and Automation, Harbin, Heilongjiang, China, 7–10 August 2016; pp. 465–470.

- Horaud, R.; Dornaika, F. Hand-Eye Calibration. Int. J. Robot. Res. 1995, 14, 195–210.

- Ding, X.; Wang, Y.; Wang, Y.; Xu, K. A Review of Structures, Verification, and Calibration Technologies of Space Robotic Systems for on-Orbit Servicing. Sci. China Technol. Sci. 2021, 64, 462–480.

- Shah, M.; Eastman, R.D.; Hong, T. An Overview of Robot-Sensor Calibration Methods for Evaluation of Perception Systems. In Proceedings of the Workshop on Performance Metrics for Intelligent Systems, College Park, MD, USA, 20–22 March 2012; pp. 15–20.

- Li, H.; Ma, Q.; Wang, T.; Chirikjian, G.S. Simultaneous Hand-Eye and Robot-World Calibration by Solving the AX=YB Problem Without Correspondence. IEEE Robot. Autom. Lett. 2016, 1, 145–152.

- Zhang, H. Hand/Eye Calibration for Electronic Assembly Robots. IEEE Trans. Robot. Autom. 1998, 14, 612–616.

- Zhang, Z.; Zhang, L.; Yang, G.-Z. A Computationally Efficient Method for Hand-Eye Calibration. Int. J. CARS 2017, 12, 1775–1787.

- Bennett, D.J.; Geiger, D.; Hollerbach, J.M. Autonomous Robot Calibration for Hand-Eye Coordination. Int. J. Robot. Res. 1991, 10, 550–559.

- Liu, B.; Zhang, F.; Qu, X.; Shi, X. A Rapid Coordinate Transformation Method Applied in Industrial Robot Calibration Based on Characteristic Line Coincidence. Sensors 2016, 16, 239.

- Qu, Z.; Zhang, L. Research on Image Segmentation Based on the Improved Otsu Algorithm. In Proceedings of the 2010 Second International Conference on Intelligent Human-Machine Systems and Cybernetics, Nanjing, China, 26–28 August 2010; Volume 2, pp. 228–231.

- Gao, Y.; Zhao, Y.; Xie, L.; Zheng, G. A Projector-Based Augmented Reality Navigation System for Computer-Assisted Surgery. Sensors 2021, 21, 2931.

- Treepong, B.; Tanaiutchawoot, N.; Wiratkapun, C.; Suthakorn, J. On the Design and Development of a Breast Biopsy Navigation System: Path Generation Algorithm and System with Its GUI Evaluation. In Proceedings of the IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), Valencia, Spain, 1–4 June 2014; pp. 273–276.

- Tanaiutchawoot, N.; Wiratkapan, C.; Treepong, B.; Suthakorn, J. On the Design of a Biopsy Needle-Holding Robot for a Novel Breast Biopsy Robotic Navigation System. In Proceedings of the 4th Annual IEEE International Conference on Cyber Technology in Automation, Control and Intelligent, Hong Kong, China, 4–7 June 2014; pp. 480–484.

- Chevrie, J.; Krupa, A.; Babel, M. Real-Time Teleoperation of Flexible Beveled-Tip Needle Insertion Using Haptic Force Feedback and 3D Ultrasound Guidance. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 2700–2706.

- Aström, K.G.; Ahlström, K.H.; Magnusson, A. CT-Guided Transsternal Core Biopsy of Anterior Mediastinal Masses. Radiology 1996, 199, 564–567.

- Yilmaz, A.; Üskül, T.B.; Bayramgürler, B.; Baran, R. Cell Type Accuracy of Transthoracic Fine Needle Aspiration Material in Primary Lung Cancer. Respirology 2001, 6, 91–94.

- Blasier, R.B. The Problem of the Aging Surgeon: When Surgeon Age Becomes a Surgical Risk Factor. Clin. Orthop. Relat. Res. 2009, 467, 402–411.

- Kim, C.; Chang, D.; Petrisor, D.; Chirikjian, G.; Han, M.; Stoianovici, D. Ultrasound Probe and Needle-Guide Calibration for Robotic Ultrasound Scanning and Needle Targeting. IEEE Trans. Biomed. Eng. 2013, 60, 1728–1734.

- Grossmann, B.; Krüger, V. Continuous Hand-Eye Calibration Using 3D Points. In Proceedings of the 2017 IEEE 15th International Conference on Industrial Informatics (INDIN), Emden, Germany, 24–26 July 2017; pp. 311–318.

- Ma, Q.; Li, H.; Chirikjian, G.S. New Probabilistic Approaches to the AX = XB Hand-Eye Calibration without Correspondence. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 4365–4371.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).