Abstract

Over a billion people around the world are disabled, among whom 253 million are visually impaired or blind, and this number is increasing greatly due to ageing, chronic diseases, and poor environments and health. Despite many proposals, current devices and systems lack maturity and do not fully meet user requirements or achieve user satisfaction. Increased research activity in this field is required to encourage the development, commercialization, and widespread acceptance of low-cost, affordable assistive technologies for visual impairment and other disabilities. This paper proposes a novel approach that uses a LiDAR with a servo motor and an ultrasonic sensor to collect data and predict objects using deep learning for environment perception and navigation. We implemented this approach in a pair of smart glasses, called LidSonic V2.0, that identify obstacles for the visually impaired. The LidSonic system consists of an Arduino Uno edge computing device integrated into the smart glasses and a smartphone app, which communicate via Bluetooth. The Arduino gathers data, operates the sensors on the smart glasses, detects obstacles using simple data processing, and provides buzzer feedback to visually impaired users. The smartphone application collects data from the Arduino, detects and classifies objects in the spatial environment, and gives the user spoken feedback on the detected objects. Compared with image-processing-based glasses, LidSonic requires far less processing time and energy because it classifies obstacles using simple LiDAR data consisting of several integer measurements. We comprehensively describe the proposed system's hardware and software design, having constructed prototype implementations and tested them in real-world environments. Built using the open platforms WEKA and TensorFlow, the entire LidSonic system uses affordable off-the-shelf sensors and a microcontroller board costing less than USD 80.
Essentially, we provide the design of an inexpensive, miniature green device that can be built into, or mounted on, any pair of glasses or even a wheelchair to help the visually impaired. Our approach enables faster inference and decision-making using relatively low energy with smaller data sizes, as well as faster communications for edge, fog, and cloud computing.

1. Introduction

There are over 1 billion disabled people in the world today, comprising 15% of the world population, and according to the World Health Organization (WHO), this number is increasing greatly due to ageing, chronic diseases, and poor environments and health [1]. WHO defines disability as having three dimensions, "impairment in a person's body structure or function, or mental functioning; activity limitation; and participation restrictions in normal daily activities", and states that disability "results from the interaction between individuals with a health condition with personal and environmental factors" [2]. Cambridge Dictionary defines disability as "not having one or more of the physical or mental abilities that most people have" [3]. Wikipedia defines physical disability as "a limitation on a person's physical functioning, mobility, dexterity, or stamina" [4]. Disabilities can relate to various human functions, including hearing, mobility, communication, intellectual ability, learning, and vision [5]. In the UK, 14.6 million people are disabled [6], forming over 20% of the population. In the US, 13.2% of the population, over 43 million people, were disabled according to 2019 statistics [7]. Similar statistics are found in other countries around the world, some worse than others; globally, disabled people make up about 15% of the population on average.

With 253 million people affected by visual impairment and blindness around the globe, it is the second most prevalent disability in the world after hearing loss and deafness [8]. Four terms are used to describe different degrees of vision loss and blindness: partially sighted, low vision, legally blind, and totally blind [9]. People with partial vision in one or both eyes are considered partially sighted. Low vision refers to a serious visual impairment in which visual acuity in the better-seeing eye is 20/70 or worse and cannot be improved with glasses or contact lenses. A person is considered legally blind if the vision in the better-seeing eye cannot be corrected to better than 20/200 [9]. Finally, people who are totally blind have a complete loss of vision [10]. Although vision impairment can occur at any point in life, it is more common among older people. Visual impairment can also be hereditary, in which case it is present from birth or appears in childhood [11].
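The acuity thresholds above can be made concrete with a small classifier. This is a sketch only, assuming that the input is the best-corrected Snellen acuity of the better-seeing eye and following the common convention that 20/200 or worse counts as legally blind; the function name `acuity_category` is hypothetical, and partial sight (which depends on the visual field, not only on acuity) is not modelled.

```python
from fractions import Fraction


def acuity_category(numerator: int, denominator: int, total_loss: bool = False) -> str:
    """Map best-corrected Snellen acuity of the better-seeing eye to a coarse category.

    Thresholds follow the definitions in the text: 20/70 or worse is low vision,
    20/200 or worse is legally blind. Partial sight is not modelled.
    """
    if total_loss:
        return "totally blind"
    acuity = Fraction(numerator, denominator)  # exact arithmetic, e.g. 20/200 -> 1/10
    if acuity <= Fraction(20, 200):
        return "legally blind"
    if acuity <= Fraction(20, 70):
        return "low vision"
    return "not low vision"


print(acuity_category(20, 40))   # not low vision
print(acuity_category(20, 100))  # low vision
print(acuity_category(20, 200))  # legally blind
```

Using `Fraction` keeps comparisons such as 20/70 exact, so boundary cases fall into the intended category.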

While visual impairment and blindness are among the most disabling disabilities, we know relatively little about the lives of visually impaired and blind individuals [12]. The WHO predicts that the number of people with visual impairments will increase owing to population growth and aging. Moreover, contemporary lifestyles have spawned a multitude of chronic disorders that degrade vision and other human functions [13]. Diabetes and hyperglycemia, for instance, can cause a range of health issues, including visual impairment. Several tissues of the ocular system can be affected by diabetes, and cataracts are one of the most prevalent causes of vision impairment [14].

Behavioral and neurological investigations relevant to human navigation have demonstrated that the way in which we perceive visual information is a critical part of our spatial representation [15]. It is typically hard for visually impaired individuals to orient themselves and move in an unknown location without help [16]. For example, landplane tracking is a natural mobility task for most people, but it is a challenge for individuals with poor or no eyesight. This capacity is necessary for avoiding falls and for adjusting one's position, posture, and balance [16]. The major challenges that visually impaired people confront indoors and outdoors include moving up and down staircases, low and high static and movable obstacles, wet floors, potholes, a lack of information about recognized landmarks, obstacle detection, object identification, and other dangers [17,18].

The disability and visual impairment statistics for Saudi Arabia are also alarming. Around 3% of people in Saudi Arabia reported having a disability in 2016 [19]. According to the General Authority for Statistics in Saudi Arabia, 46% of disabled people in Saudi Arabia who have a single disability are visually impaired or blind, and 2.9% of the Saudi population have disabilities that cause extreme difficulty [20]. These figures highlight the urgent need for research into the development of assistive technologies for disabilities in general, including visual impairment.

A white cane is the most popular tool used by visually impaired individuals to navigate their environments; nevertheless, it has a number of drawbacks. It requires physical contact with the environment [12]; it cannot detect obstacles above the ground, such as ladders, scaffolding, tree branches, and open windows [21]; and it can cause neuromusculoskeletal overuse injuries and syndromes that may require rehabilitation [22]. Moreover, white cane users are sometimes ostracized for social reasons [12]. In the absence of appropriate assistive technologies, visually impaired individuals must rely on family members or other people [23]. However, human guides can be unsatisfactory at times, since they may be unavailable when assistance is required [24]. Assistive technologies can help visually impaired and blind people engage with sighted people and enrich their lives [12].

Smart societies and environments are driving extraordinary technical advancements with the promise of a high quality of life [25,26,27,28]. Smart wearable technologies are creating numerous new opportunities to enhance the quality of life of all people; fitness trackers, heart rate monitors, smart glasses, smartwatches, and electronic travel aids are a few examples. The same holds true for visually impaired people: multiple devices have been developed and marketed to help them navigate their environments [29]. An electronic travel aid (ETA) is a commonly used type of mobility-enhancing assistive equipment for the visually impaired and blind. ETAs are anticipated to increasingly facilitate "independent, efficient, effective, and safe movement in new environments" [30]. ETAs can provide information about the environment by integrating multiple electronic sensors and have proven effective in improving the daily lives of visually impaired people [31]. They are available as a variety of wearable and handheld devices and may be categorized according to whether they use cellphones, sensors, or computer vision [32]. However, the acceptance rate of ETAs among the visually impaired and blind population is poor [23]. Potential users seldom adopt them because they have inadequate user interface designs, are restricted to navigation purposes, are functionally complex, heavy to carry, and expensive, and lack object recognition functionality, even in familiar indoor environments [23]. The low adoption rate does not necessarily indicate that disabled people oppose the use of ETAs; rather, it confirms that additional research is required to investigate its causes and to improve the functionality, usability, and adaptability of assistive technologies [33].
In addition, introducing unnecessarily complicated ETAs that require extensive supplementary training to learn difficult new skills is not a feasible solution [22]. Robots can assist the visually impaired in navigating from one location to another, but they are costly and pose other challenges [34]. Augmented reality has been used to magnify text and images by means of a finger-worn camera whose output is projected onto a HoloLens [35]; however, this technology is not suitable for blind people.

We developed a comprehensive understanding of the state-of-the-art requirements of, and solutions for, visually impaired assistive technologies through a detailed literature review (see Section 2) and a survey [36] of this topic. Using this knowledge, we identified the design space for assistive technologies for the visually impaired and the research gaps. We found that the design considerations for such technologies are complex and include reliability, usability, and functionality in indoor, outdoor, and dark environments; transparent object detection; hands-free operation; high-speed, real-time operation; low battery usage and energy consumption; low computation and memory requirements; low device weight; and cost effectiveness. Although several devices and systems for the visually impaired have been proposed and developed in academic and commercial settings, current devices and systems lack maturity and do not fully meet user requirements or achieve user satisfaction [18,37]. For instance, numerous camera-based and computer-based solutions have been produced; however, the computational cost and energy consumption of image processing algorithms are a concern for low-power portable or wearable devices [38]. These solutions require large storage and computational resources, including large RAMs, to process large volumes of image data. This entails substantial processing, communication, and decision-making times and drains energy and battery life. Significantly more research effort is required to bring innovation, intelligence, and user satisfaction to this crucial area.

In this paper, we propose a novel approach that uses a combination of a LiDAR with a servo motor and an ultrasonic sensor to collect data and predict objects using machine and deep learning for environment perception and navigation. We implemented this approach in a pair of smart glasses, called LidSonic V2.0, that identify obstacles for the visually impaired. The LidSonic system consists of an Arduino Uno edge computing device integrated into the smart glasses and a smartphone app, which communicate via Bluetooth. The Arduino gathers data, operates the sensors on the smart glasses, detects obstacles using simple data processing, and provides buzzer feedback to visually impaired users. The smartphone application collects data from the Arduino, detects and classifies objects in the spatial environment, and gives the user spoken feedback on the detected objects. LidSonic uses far less processing time and energy than image-processing-based glasses because it classifies obstacles using simple LiDAR data consisting of several integer measurements.
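The division of labour on the Arduino side (read the sensors, make a quick local decision, drive the buzzer, and forward compact readings to the phone) can be sketched as follows. This is an illustration in Python, not the LidSonic firmware: the alert threshold, the tone range, the linear distance-to-tone mapping, and the 16-bit packing format are all hypothetical choices.

```python
import struct

# Hypothetical parameters; the actual LidSonic thresholds are not given here.
ALERT_DISTANCE_CM = 150
MIN_TONE_HZ, MAX_TONE_HZ = 500, 2000


def buzzer_tone(lidar_cm: int, ultrasonic_cm: int) -> int:
    """Return a buzzer frequency in Hz (0 = silent); closer obstacles give higher tones."""
    nearest = min(lidar_cm, ultrasonic_cm)
    if nearest >= ALERT_DISTANCE_CM:
        return 0
    closeness = 1 - nearest / ALERT_DISTANCE_CM  # 0 at the threshold, 1 at contact
    return round(MIN_TONE_HZ + closeness * (MAX_TONE_HZ - MIN_TONE_HZ))


def pack_readings(readings: list[int]) -> bytes:
    """Pack integer readings as little-endian unsigned 16-bit values for a serial/Bluetooth link."""
    return struct.pack(f"<{len(readings)}H", *readings)


print(buzzer_tone(75, 300))          # 1250
print(len(pack_readings([75, 300])))  # 4 bytes for two readings
```

The point of the split is that the local decision needs only a comparison and a little arithmetic, while the phone receives a few bytes of integers rather than an image.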

We comprehensively describe the proposed system's hardware and software design, having constructed prototype implementations and tested them in real-world environments. Using the open platforms WEKA and TensorFlow, the entire LidSonic system was built with affordable off-the-shelf sensors and a microcontroller board costing less than USD 80. Essentially, we provide the design of an inexpensive, miniature green device that can be built into, or mounted on, any pair of glasses or even a wheelchair to help the visually impaired. Our approach affords faster inference and decision-making using relatively low energy with smaller data sizes. Smaller data sizes also benefit communications, such as those between the sensor and the processing device or with fog and cloud computing resources, because they require less bandwidth and energy and can be transferred in less time. Moreover, our approach does not require a white cane (although it can be adapted for use with one) and, therefore, allows hands-free operation.
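A rough calculation shows why small integer feature vectors help with communication. The sketch assumes, for illustration only, a feature vector of 14 values (the feature count reported for LidSonic V2.0) stored as 16-bit integers, an uncompressed 640 × 480 RGB frame for comparison, and a hypothetical effective link throughput of 100 kB/s. Real camera frames are usually compressed, which narrows but does not close the gap.

```python
# Illustrative sizes; the image resolution and link rate are assumptions, not measurements.
FEATURES = 14                # feature count reported for LidSonic V2.0
BYTES_PER_FEATURE = 2        # assume each feature fits in a 16-bit integer
IMAGE_W, IMAGE_H, CHANNELS = 640, 480, 3  # hypothetical uncompressed RGB frame
LINK_BYTES_PER_S = 100_000   # hypothetical effective Bluetooth throughput


def transfer_ms(n_bytes: int, rate: float = LINK_BYTES_PER_S) -> float:
    """Time in milliseconds to move n_bytes over a link of the given byte rate."""
    return 1000 * n_bytes / rate


lidar_bytes = FEATURES * BYTES_PER_FEATURE   # 28 bytes
image_bytes = IMAGE_W * IMAGE_H * CHANNELS   # 921,600 bytes
print(lidar_bytes, image_bytes)
print(f"{transfer_ms(lidar_bytes):.2f} ms vs {transfer_ms(image_bytes):.0f} ms")
```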

The work presented in this paper is a substantial extension of our earlier system LidSonic (V1.0) [39]. LidSonic V2.0, the new version of the system, uses both machine learning and deep learning methods for classification, whereas V1.0 uses machine learning alone. LidSonic V2.0 achieves a higher accuracy of 96%, compared with 92% for LidSonic V1.0, even though it uses fewer data features (14 compared with 45) and covers a wider vision angle (60 degrees compared with 45 degrees). Because it uses fewer features, LidSonic V2.0 requires even fewer computing resources and less energy than LidSonic V1.0. We have extended the LidSonic system with additional obstacle classes, provided an improved and extended explanation of its various system components, and conducted extensive testing with two new datasets, six machine learning models, and two deep learning models. System V2.0 was implemented using the Weka and TensorFlow platforms (providing dual options for open-source development), whereas the previous system was implemented using Weka alone. Moreover, this paper provides a much extended, completely new literature review and taxonomy of assistive technologies and solutions for the blind and visually impaired.

Earlier in this section, we noted that, although several devices and systems for the visually impaired have been developed in academic and commercial settings, current devices and systems lack maturity and do not fully meet user requirements or achieve user satisfaction. Increased research activity in this field will encourage the development, commercialization, and widespread acceptance of devices for the visually impaired. The technologies developed in this paper have high potential and are expected to open new directions for the design of smart glasses and other solutions for the visually impaired using open software tools and off-the-shelf hardware.

The paper is structured as follows. Section 2 explores relevant works in the field of assistive technologies for the visually impaired and provides a taxonomy. Section 3 gives an overview of the LidSonic V2.0 system, highlighting its user, developer, and system features. Section 4 provides a detailed illustration of the software and hardware design and implementation. The system is evaluated in Section 5. Conclusions and thoughts regarding future work are provided in Section 6.

2. Related Work

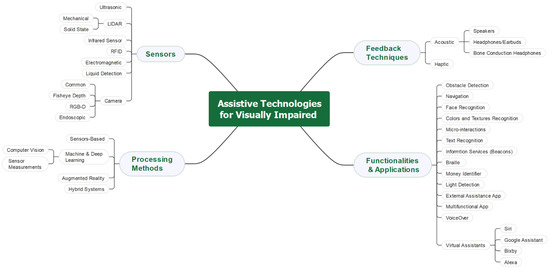

This section reviews the literature relating to this paper. Section 2.1 presents the sensor technologies and the types of sensors used in assistive tools for the visually impaired. Section 2.2 reviews the processing methods. Section 2.3 discusses the feedback techniques. A taxonomy of functions and applications is provided in Section 2.4. Section 2.5 identifies the research gap and justifies the need for this research. A taxonomy of the research on the visually impaired presented in this section is given in Figure 1. An extensive complementary review of assistive technologies for the visually impaired and blind can be found in our earlier work [39].

Figure 1.

A Taxonomy of Research on Assistive Technologies for the Visually Impaired.

2.1. Sensor Technologies and Types Used in Assistive Tools

Sensors are indispensable components of cyber-physical systems. They collect information about environmental factors, as well as non-electrical system parameters, and provide the findings as electrical signals. With the development of microelectronics, sensors have become available as compact, low-cost devices and have a wide range of applications in different fields, especially in control systems [40]. Sensors can be classified as active or passive. An active sensor requires an external excitation to operate, whereas a passive sensor detects inputs and generates output signals directly without external excitation. Sensors may also be classified by their means of detection, e.g., electric, biological, chemical, or radioactive [41].

A number of sensors have been employed in technologies for visually impaired people to address a wide range of vision-related issues. The most frequently used types of sensors in assistive devices for the visually impaired are listed in Table 1, along with their functions, types of wearables, and types of feedback.

2.1.1. Ultrasonic Sensors

An ultrasonic sensor is an electronic device that uses ultrasonic sound waves to measure the distance between the user and a target object and converts the reflected sound into an electrical signal. Fauzul and Salleh [42] developed a visual assistive technology to help visually impaired individuals navigate both indoor and outdoor settings safely and conveniently. The system has two major components: a smartphone app and an obstacle sensor. To deliver auditory cue instructions to the user, the mobile app uses the smartphone's microelectromechanical sensors, location services, and Google Maps. Ultrasonic sensors in the obstacle sensor detect objects and provide tactile feedback: the obstacle avoidance gadget attaches to a standard cane and vibrates the handle with varying intensities according to the nature of the obstructions. The spatial distance and direction from the present position to the intended location are used to produce spatial sound cues. Gearhart et al. [43] proposed a technique for identifying the position of a detected object through triangulation, using geometric relationships with scalar measurements. The authors placed two ultrasonic sensors, one on each shoulder, 10 inches apart and angled towards each other at five degrees from parallel. However, this technique is too complex to apply when several objects are in front of the sensors. A significant number of research papers on object detection have relied on ultrasonic sensors. Tudor et al. [44] proposed a wearable belt with two ultrasonic sensors and two vibration motors to direct the visually impaired away from obstacles. The authors used an Arduino Nano board with an ATmega328P microcontroller to connect and build their system. The authors of [45] found that ultrasonic sensors and vibrator devices are easily operated by Arduino UNO R3 Impatto Zero boards. Noman et al. [46] proposed a robot equipped with several ultrasonic sensors and an Arduino Mega (ATmega2560 processor) to detect obstacles, holes, and stairs. Such robots can be used in indoor environments; however, their use outdoors is not practical.
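Two of the ideas above reduce to short formulas: an ultrasonic sensor converts the echo's round-trip time into distance (halving the path because the pulse travels out and back), and two range readings from sensors a known distance apart can be triangulated into a 2D position. The sketch below is a simplified version under stated assumptions: a speed of sound of 343 m/s, both sensors facing straight ahead rather than toed in by five degrees as in Gearhart et al. [43], and a single point reflector seen by both sensors (precisely the case that breaks down with several objects). The function names are hypothetical.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 °C


def echo_distance_m(round_trip_s: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to the reflector: the pulse travels out and back, so halve the path."""
    return c * round_trip_s / 2


def triangulate(r_left: float, r_right: float, baseline: float) -> tuple[float, float]:
    """Locate a point reflector from ranges measured by sensors at (-b/2, 0) and (b/2, 0).

    Assumes both sensors face straight ahead (the 5-degree toe-in is ignored)
    and that both echoes come from the same single object.
    """
    half = baseline / 2
    # r_left^2 - r_right^2 = (x + b/2)^2 - (x - b/2)^2 = 2 * b * x
    x = (r_left**2 - r_right**2) / (2 * baseline)
    y_sq = r_left**2 - (x + half) ** 2
    if y_sq < 0:
        raise ValueError("ranges are inconsistent with a single reflector")
    return x, math.sqrt(y_sq)


baseline = 0.254  # 10 inches in metres
print(triangulate(1.0255, 1.0004, baseline))
```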

2.1.2. LiDAR Sensors

Light detection and ranging (LiDAR) is a common remote sensing technique for determining the distance to an object. Chitra et al. [47] proposed a hands-free LVU (LiDARs and Vibrotactile Units), a discrete wearable gadget that helps blind persons identify obstacles, noting that proper mobile assistance equipment is required. The proposed gadget consists of a wearable sensor strap. Liu et al. proposed HIDA, a lightweight assistance system for comprehensive indoor detection and avoidance based on 3D point cloud instance segmentation and a solid-state LiDAR sensor. The authors created a point cloud segmentation model with dual lightweight decoders for semantic and offset predictions, ensuring the system's efficiency. After 3D instance segmentation, the segmented point cloud was post-processed by removing outliers and projecting all points onto a top-view 2D map representation.
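The post-processing step described for HIDA (remove outliers, then flatten the points onto a top-view map) can be illustrated with NumPy. This is not HIDA's implementation: the per-axis z-score outlier filter, the 10 cm cell size, the 5 m extent, and the axis convention (x right, y forward, z up) are assumptions made for the sketch, and `top_view_grid` is a hypothetical name.

```python
import numpy as np


def top_view_grid(points: np.ndarray, cell: float = 0.1, extent: float = 5.0,
                  z_thresh: float = 3.0) -> np.ndarray:
    """Project 3D points (N x 3, metres; x right, y forward, z up) onto a 2D occupancy grid.

    Outliers are removed with a simple per-axis z-score test, standing in for
    whatever outlier filter the original system uses.
    """
    mean = points.mean(axis=0)
    std = points.std(axis=0) + 1e-9            # avoid division by zero on flat axes
    keep = np.all(np.abs(points - mean) / std < z_thresh, axis=1)
    pts = points[keep]

    n = int(round(2 * extent / cell))          # grid covers [-extent, extent) in x and y
    grid = np.zeros((n, n), dtype=bool)
    ix = np.floor((pts[:, 0] + extent) / cell).astype(int)
    iy = np.floor((pts[:, 1] + extent) / cell).astype(int)
    inside = (ix >= 0) & (ix < n) & (iy >= 0) & (iy < n)
    grid[iy[inside], ix[inside]] = True        # drop the height, keep the footprint
    return grid


rng = np.random.default_rng(0)
cluster = np.array([1.0, 2.0, 0.5]) + rng.normal(0, 0.01, size=(200, 3))
grid = top_view_grid(np.vstack([cluster, [[4.9, 4.9, 0.0]]]))
print(grid.shape, int(grid.sum()))
```

A z-score filter is only reasonable when outliers are rare; a radius- or neighbour-count-based filter is a common alternative for denser clutter.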

2.1.3. Infrared (IR) Sensors

An infrared (IR) sensor is an electronic device that monitors and senses the infrared radiation in its surroundings [48]. Infrared signals are similar to RFID in that both rely on remote data transfer; however, RFID uses radio waves, whereas infrared uses light signals. Air conditioner remotes and motion detectors, for example, use infrared technology, and some smartphones now come with infrared blasters that let users control any compatible device with an infrared receiver. For ranging, an IR sensor emits pulses of eye-safe light and measures the time the reflected light takes to return in order to calculate the distance. Each IR measurement is derived from thousands of separate light pulses, which yields reliable measurements even in rain, snow, fog, or dust; such measurements are difficult to capture with cameras [22]. In addition, IR offers a long range in both indoor and outdoor environments, high precision, small size, and low latency. An IR sensor can detect obstacles up to 14 m away, with a 0.5 resolution and 4 cm accuracy [12]. The beam width of IR lies between those of ultrasonic and laser sensors. A laser has a rather narrow scope and gathers a very limited amount of spatial information, which is insufficient for detecting free paths. Ultrasonic sensors, on the other hand, suffer from multiple reflections, which limits their usefulness [49].
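The pulsed ranging principle described above amounts to distance = c · t / 2 for each pulse, with many pulses combined into one reading. The sketch uses a median to combine per-pulse ranges as an assumed, simple way of suppressing sporadic returns from raindrops or dust; commercial sensors use their own, typically undocumented, processing, and the function names are hypothetical.

```python
import statistics

SPEED_OF_LIGHT_M_S = 299_792_458.0


def pulse_distance_m(round_trip_s: float) -> float:
    """Range for one light pulse: the light goes out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2


def robust_distance_m(round_trips_s: list[float]) -> float:
    """Combine many pulse returns; the median ignores a minority of spurious short returns."""
    return statistics.median(pulse_distance_m(t) for t in round_trips_s)


t_wall = 2 * 10.0 / SPEED_OF_LIGHT_M_S   # echo from a target 10 m away
t_drop = 2 * 0.5 / SPEED_OF_LIGHT_M_S    # echo from a raindrop 0.5 m away
print(robust_distance_m([t_wall] * 97 + [t_drop] * 3))
```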

2.1.4. RFID Sensors

RFID is the abbreviation of radio frequency identification. RFID transfers and receives data through radio waves: a sender transmits data to a radio receiver. The sender is commonly an RFID chip (or RFID tag) inserted into the object being read or scanned, and the receiver is an electronic device that detects the chip's data. The chip and receiver do not need to be in physical contact, because the data are broadcast and received by radio waves. RFID is appealing because of its remote capabilities, but this also makes it risky: because the chip's RFID signal may be read by anybody with an RFID reader, it can lead to an unethical and harmful situation, namely, data theft. The fact that the person using the scanner does not even have to be near the chip or tag increases the danger. Another disadvantage is that each tag has a certain range, which necessitates extensive individual testing and limits the scope. In addition, the system can easily be disabled if the tags are wrapped or covered, which prevents them from receiving radio signals [50].

An intelligent walking stick for the blind was proposed by Chaitrali et al. [51]. The proposed navigation system uses infrared sensors, RFID technology, and Android handsets to provide speech output for obstacle navigation. The stick is equipped with proximity infrared sensors, and RFID tags are embedded in public buildings as well as in the walking sticks of blind people. The stick connects to an Android phone via Bluetooth. The authors describe an Android application, under development, that provides voice navigation based on RFID tag readings and updates the server with the user's position information. Another application allows family members to access the location of the blind person via the server at any time, providing further security. A disadvantage of this approach is that it is not compact. When the intelligent stick is within range of the PCB unit, the active RFID tags immediately send location information, so the RFID sensor does not need to read it explicitly.
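The tag-driven flow in this design (read a tag, speak a location message, update the server so that family members can see the position) can be sketched as a lookup. Everything concrete here is hypothetical: the tag IDs, the messages, the `TagInfo` type, and the in-memory `position_log` dictionary that stands in for the server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TagInfo:
    location: str
    hint: str


# Hypothetical tag database; a real deployment would load this from the server.
TAG_DB = {
    "04A1B2C3": TagInfo("Library entrance", "Reception is straight ahead"),
    "04D4E5F6": TagInfo("Lift lobby, floor 1", "The lift call button is on your right"),
}


def on_tag_read(tag_id: str, user_id: str, position_log: dict[str, str]) -> str:
    """Turn a tag reading into a spoken-style message and record the user's last position."""
    info = TAG_DB.get(tag_id)
    if info is None:
        return "Unknown location"
    position_log[user_id] = info.location  # stands in for the server update family members query
    return f"You are at {info.location}. {info.hint}."


log: dict[str, str] = {}
print(on_tag_read("04A1B2C3", "user-1", log))
print(log)
```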

2.1.5. Electromagnetic Sensors (Microwave Radar)

Adopting a pulsed chirp scheme can reduce power consumption while preserving high resolution, because the spatial (range) resolution is governed by the frequency-modulation bandwidth. A pulsed signal allows the transmitter to be turned off while listening for the echo, thereby significantly reducing energy consumption [37]. A method of electronic travel assistance for blind and visually impaired persons that uses a millimeter-wave radar and a typical white cane was proposed in [52]. The system not only warns the user of possible hazards but also distinguishes between human and nonhuman targets. Because real-world situations are likely to include moving targets, a novel range alignment approach was developed to detect minute chest movements caused by physiological activity as vital evidence of human presence. The proposed system recognizes humans in complicated situations with many moving targets, giving the user comprehensive information, including the presence, location, and type of the accessible targets. The authors used a 122 GHz radar board to carry out measurements that demonstrated the system's working principle and efficacy.
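The radar relationships mentioned above can be written down directly. For a chirp, range resolution depends only on the frequency-modulation bandwidth B, as ΔR = c / (2B); pulsing the transmitter scales average power by the duty cycle; and tiny chest movements appear as phase changes, d = λΔφ / (4π), because the wave travels to the target and back. The sketch below evaluates these textbook formulas; the bandwidth and duty-cycle values in the usage note are hypothetical, and only the 122 GHz carrier comes from the text.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Range resolution of a chirp (FMCW) radar: delta_R = c / (2 B)."""
    return C / (2 * bandwidth_hz)


def average_power_w(peak_power_w: float, on_time_s: float, period_s: float) -> float:
    """A pulsed scheme transmits only part of each period, so average power scales with duty cycle."""
    return peak_power_w * on_time_s / period_s


def displacement_m(phase_change_rad: float, carrier_hz: float) -> float:
    """Radial displacement from a phase change: d = lambda * delta_phi / (4 pi) (two-way path)."""
    wavelength = C / carrier_hz
    return wavelength * phase_change_rad / (4 * math.pi)


print(range_resolution_m(1e9))             # about 0.15 m for a 1 GHz chirp
print(displacement_m(math.pi, 122e9) * 1e3)  # about 0.61 mm at 122 GHz
```

For example, transmitting for 1 ms out of every 10 ms cuts average power to one tenth of the peak, while the range resolution is unchanged because it depends only on B.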

2.1.6. Liquid Detection Sensors

Research usually involves more than one type of sensor in order to cover most of the prevalent challenges facing the visually impaired. Ikbal et al. [53] proposed a stick equipped with various kinds of sensors to assist in detecting obstacles. One of the major hazards for the visually impaired is water on the floor [54]. Therefore, the authors included a water sensor in their solution, in addition to two ultrasonic sensors to detect obstacles at 180 cm, an IR sensor to detect stairway gaps and holes in streets, and a temperature sensor for fire alerts. The authors connected all the sensors to an Arduino microcontroller board. The water sensor must come into contact with the surface of the water to provide a result, so the choice of wearable form must take this into account. Another important use of a liquid detector for the blind is a sensor placed on a cup to prevent it from spilling. To improve navigation safety, the authors of [55] present a polarized RGB-Depth (pRGB-D) framework that detects the traversable area and water hazards using polarization-color-depth-attitude information.
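A multi-sensor stick like the one by Ikbal et al. [53] ultimately turns several readings into a short list of alerts. The sketch below shows one way to structure that logic; every threshold is hypothetical, the 180 cm figure is interpreted as an ultrasonic detection range, and the IR gap check assumes the sensor points at the ground ahead, so that a longer-than-expected reading indicates a drop or hole.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration only.
OBSTACLE_RANGE_CM = 180      # interpreted as the ultrasonic detection range
EXPECTED_FLOOR_CM = 90       # IR distance to the ground when there is no gap or hole
GAP_MARGIN_CM = 15
WATER_RAW_THRESHOLD = 300    # analog reading above which the probe is assumed wet
FIRE_TEMP_C = 50.0


@dataclass
class Reading:
    ultrasonic_cm: tuple[int, int]
    ir_floor_cm: int
    water_raw: int
    temperature_c: float


def alerts(r: Reading) -> list[str]:
    """Return the alerts triggered by one set of sensor readings, in a fixed order."""
    out = []
    if min(r.ultrasonic_cm) <= OBSTACLE_RANGE_CM:
        out.append("obstacle")
    if r.ir_floor_cm > EXPECTED_FLOOR_CM + GAP_MARGIN_CM:
        out.append("stair gap or hole")
    if r.water_raw > WATER_RAW_THRESHOLD:
        out.append("wet floor")
    if r.temperature_c >= FIRE_TEMP_C:
        out.append("fire")
    return out


print(alerts(Reading((120, 400), 130, 500, 25.0)))
```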

2.1.7. Cameras

Cameras provide various functions in different technology solutions using machine learning algorithms, such as facial recognition, object recognition, and localization (see Table 1). Research has used various types of cameras, the most frequent being the common camera and the RGB-Depth (RGB-D) camera. The common camera is used mostly for facial, emotion, and obstacle recognition, whereas the RGB-D camera has been used for detecting and avoiding obstacles and for mapping to assist navigation through indoor environments. A depth image is an image channel in which each pixel encodes the distance between the image plane and the corresponding point in the RGB picture. Adding depth to standard color camera techniques increases both the precision and the density of the map. RGB-D sensors are popular in a variety of visual aid applications due to their low power consumption and low cost, as well as their resilience and high performance, as they can sense color and depth information concurrently at a smooth video frame rate. Because polarization characteristics reflect the physical properties of materials, polarization and associated imaging can be employed for material identification and target detection, in addition to color and depth [30]. Meanwhile, because different polarization states of light behave differently at the interface of an object's surface, polarization has been utilized in a variety of surface measurement techniques. Nevertheless, most industrial RGB-D sensors, such as light-coding sensors and stereo cameras, depend solely on intensity data, with polarization cues either missing or insufficient [55]. The study reported in [56] describes a 3D object identification algorithm and its implementation in a robotic navigation aid (RNA) that allows for the real-time detection of indoor items for blind people, utilizing a 3D time-of-flight camera for navigation.
After the point cloud is segmented into planar patches, a Gaussian-mixture-model-based plane classifier assigns each planar patch to a certain object model. Finally, the categorized planes are clustered into model objects using a recursive plane clustering process. The approach can also identify various non-structural elements in the indoor environment. The authors of [57] proposed a new approach to autonomous obstacle identification and classification that combines a new form of sensor, a patterned light field, with a camera. The proposed gadget is compact, portable, and inexpensive. As the sensor system is carried in natural indoor and outdoor situations over and toward various sorts of obstacles, the grid projected by the patterned light source is visible and distinguishable. The proposed solution uses deep learning techniques, including a convolutional neural-network-based classification of individual frames, to leverage these patterns without calibration. The authors improved their method by smoothing frame-based classifications across many frames using long short-term memory (LSTM) units.
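The geometric steps behind depth-based approaches such as [56] can be sketched in two parts: back-projecting a depth image into 3D points with a pinhole camera model, and fitting a plane to a patch of points. The intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical inputs, and the least-squares SVD fit shown here is a generic technique; it is not the Gaussian-mixture-model classifier or the recursive clustering used in [56].

```python
import numpy as np


def backproject(depth: np.ndarray, fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Convert a depth image (metres, H x W) into an N x 3 point cloud with a pinhole model."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row indices
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return pts[pts[:, 2] > 0]  # drop pixels with no depth reading


def fit_plane(points: np.ndarray) -> tuple[np.ndarray, float]:
    """Least-squares plane n . p + d = 0; n is the direction of least variance (last SVD row)."""
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid)
    normal = vt[-1]
    return normal, -float(normal @ centroid)


depth = np.full((4, 5), 2.0)  # a flat surface 2 m in front of the camera
normal, d = fit_plane(backproject(depth, 100.0, 100.0, 2.0, 1.5))
print(normal, d)  # normal along the optical axis (sign may vary)
```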

Table 1.

Types of Sensors Used in Assistive Devices for VI.

| Sensor Name | Works | Purpose of Sensor | No. of Sensors | Weight | Wearable/Assistive | Feedback Method |
|---|---|---|---|---|---|---|
| IR Sensors | [58] | Touch down, touch up sensor | 2 | Light | Mounted on top of a finger | Acoustic |
| | [49] | Detect obstacles, stairs | 2 | Light | Cane | Acoustic |
| IMU | [58] | Recognize gestures and sense movements | 1 | Light | Mounted on top of a finger | Acoustic |
| Ultrasonic Sensors | [23] | Detect obstacles up to chest level | 5 | Light | Cane | Acoustic, vibration |
| | [59] | Detect obstacles | 2 | High | Guide dog robot and portable robot | Acoustic |
| | [45] | Detect obstacles | 5 | Fair | Mounted on the head, legs, and arms | Buzzer, vibration |
| | [44] | Detect obstacles | 2 | Fair | Belt | Vibration |
| ToF Distance Sensors | [12] | Detect obstacles | 7 | High | Belt | Vibration belt |
| Microwave Radar | [37] | Detect obstacles | 1 | Light | Mounted on a cane | Acoustic, vibration |
| Wet Floor Detection Sensors | [53] | Detect wet floors | 1 | Light | Cane | Buzzer |
| Bluetooth | [60] | Inform about indoor environments | 3 | Light | Beacon transmitter, smartphone | Acoustic |
| Laser Pointer | [61] | Detect obstacles | 1 | Light | Belt | Vibration belt |
| Cameras | [58] | Localize the hand touch | 1 | Light | Mounted on top of a finger | Acoustic |
| | [62] | Emotion recognition | 1 | Light | Clipped on to spectacles | Vibration belt |
| | [59] | Obstacle recognition (traffic lights, cones, buses, etc.) | 2 | High | Guide dog robot and portable robot | Acoustic |
| | [63] | Localization system | 1 | Low | Head level (helmet), chest level (hung) | Location on a map |
| RGB-D Cameras | [61] | Detect obstacles | 1 | Light | Smartphone simulating a cane | Vibratory belt |
| | [64] | Avoid obstacles, localization system for indoor navigation | 1 | Light | Glass, tactile vest, smartphone | Haptic vest (4 vibration motors) |
| Endoscopic Cameras | [65] | Identify clothing colors, visual texture recognition | 1 | Light | Mounted on top of a finger | Acoustic |
| Compass | [66] | Indoor navigation | 1 | Light | Optical head-mounted (Glass) | Acoustic |

2.2. Processing Methods

Researchers have used a range of processing methods for assistive technologies. Recent years have seen an increase in the use of machine learning and deep learning methods in various applications and sectors, including healthcare [67,68,69], mobility [70,71,72], disaster management [73,74], education [75,76], governance [77], and many other fields [78]. Assistive technologies are no different and have begun to increasingly rely on machine learning methods. This section reviews some of the works on processing methods for assistive technologies, including both machine learning-based methods and other methods.

Numerous ideas and methods have been proposed to address the problems and challenges faced by blind people. Katzschmann et al. [12] incorporated several sensors and feedback motors into a belt to produce a navigation aid for visually impaired people, called Array of LiDARs and Vibrotactile Units (ALVU). The authors developed a safe navigation system that provides the user with detailed feedback about the obstacles and free areas around them. The system consists of two components: a belt with a distance sensor array and a haptic strap with an array of feedback modules. The haptic strap, worn around the upper abdomen, lets the wearer sense the distance between themselves and their surroundings. As the user approaches an obstacle, the pulse rate increases and the vibration becomes stronger; the vibration and pulses stop once the user has passed the obstacle. However, this kind of feedback is primitive: it cannot convey the type of obstacle, nor whether the obstacle actually needs to be avoided. In addition, wearing two belts may be neither easy nor comfortable for the user. Meshram et al. [23] designed NavCane, which detects and avoids obstacles from the floor up to chest level. It can also identify water on the floor, and it has a button with which the user can send automatic SMS and email alerts in emergencies. It provides two kinds of feedback: tactile feedback through vibration and auditory feedback through headphones. However, the device cannot identify the nature of objects and cannot detect obstacles above chest level.
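The distance-to-vibration behavior described for ALVU can be sketched as a simple per-sensor mapping. The Python below is a minimal illustration, not the authors' implementation: the linear ramp, the 0.3 m and 2.0 m limits, the 10 Hz maximum pulse rate, and the `Feedback`/`distance_to_feedback` names are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    intensity: float  # normalized vibration strength: 0.0 (off) to 1.0 (maximum)
    pulse_hz: float   # pulses per second; 0.0 means the motor is silent


def distance_to_feedback(distance_m: float,
                         min_range_m: float = 0.3,
                         max_range_m: float = 2.0,
                         max_pulse_hz: float = 10.0) -> Feedback:
    """Map one distance reading to feedback for its haptic module.

    Hypothetical linear ramp: silent at or beyond max_range_m, full
    strength at or below min_range_m, linear in between.
    """
    if distance_m >= max_range_m:
        # Out of range, or the obstacle has been passed: stop vibrating.
        return Feedback(0.0, 0.0)
    clamped = max(distance_m, min_range_m)
    closeness = (max_range_m - clamped) / (max_range_m - min_range_m)
    return Feedback(intensity=closeness, pulse_hz=closeness * max_pulse_hz)


# One reading per sensor in the belt array (metres); values are illustrative.
readings = [2.5, 1.2, 0.4]
commands = [distance_to_feedback(d) for d in readings]
```

Running one mapping per sensor/motor pair mirrors the array layout described above; a real device would tune the thresholds and might prefer a non-linear ramp so that changes close to the user are more noticeable.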

Hong et al. [79] proposed a solution for blind people based on two haptic wristbands that provide feedback about objects. Using a LiDAR, Chun et al. [80] proposed a detection technique that reads the distances at different angles and then predicts obstacles by comparing these readings.
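One simple way to compare LiDAR readings taken at different angles, assuming a sensor at a known height tilted down toward a flat floor, is to compare each reading with the range the floor alone would produce at that angle. This is a hypothetical sketch, not the method of [80]; the 1.6 m sensor height, the 0.15 m tolerance, and the function names are assumptions.

```python
import math


def expected_floor_distance(height_m: float, depression_deg: float) -> float:
    """Range along a beam tilted depression_deg below horizontal to a flat floor."""
    if not 0 < depression_deg <= 90:
        raise ValueError("beam must point below the horizon (0 < angle <= 90)")
    return height_m / math.sin(math.radians(depression_deg))


def classify_sweep(readings, height_m=1.6, tolerance_m=0.15):
    """Label each (depression_deg, measured_m) reading of a LiDAR sweep.

    Shorter than the expected floor range: something is in the way.
    Longer: the floor drops away (e.g. a descending step or a hole).
    """
    labels = []
    for angle_deg, measured_m in readings:
        expected_m = expected_floor_distance(height_m, angle_deg)
        if measured_m < expected_m - tolerance_m:
            labels.append("obstacle")
        elif measured_m > expected_m + tolerance_m:
            labels.append("drop")
        else:
            labels.append("floor")
    return labels
```

At 30 degrees below horizontal and a 1.6 m height, the floor should appear at 3.2 m, so a 2.0 m reading is flagged as an obstacle and a 4.0 m reading as a drop.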

Using the Internet of Things (IoT), machine learning, and embedded technologies, Mallikarjuna et al. [34] developed a low-cost visual aid system for object recognition. The image is acquired by the camera and forwarded to the Raspberry Pi, which classifies it using a model trained with the TensorFlow machine learning framework and Python. However, their technique takes a long time (5 s to 8 s) to inform the visually impaired individual about the item in front of them.

Gurumoorthy et al. [81] proposed a technique that uses the rear camera of a mobile phone to capture and analyze the scene in front of the visually impaired user. The device uses Microsoft Cognitive Services to perform the computer vision tasks, and the result is then conveyed to the user through Google TalkBack. This technique requires a mobile internet connection. Additionally, it is hard for visually impaired users to take a proper picture. A similar solution that sends the picture to the cloud for analysis was proposed in [33]; however, the authors captured the image through a camera mounted in the white cane. The authors also proposed a solution for improving visually impaired people's mobility, comprising a smart folding stick that works in tandem with a smartphone app through GPS-based interconnection mechanisms. Navigational feedback is presented as voice output to the user and, via the smartphone application, to the user's family or guardians. Rao and Singh [82] developed a computer-vision-based obstacle detection method using a fisheye camera mounted on a shoe. The image is transmitted to a mobile application that uses TensorFlow Lite to classify it and alert visually impaired users to potholes, ditches, crowded places, and staircases through a vibration notification. In addition, an ultrasonic sensor mounted on a servo at the front of the shoe detects nearby obstacles, and a vibration motor inside the shoe provides feedback.
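Ultrasonic rangers such as the shoe-mounted sensor in [82] estimate distance from the round-trip time of an echo: distance = speed of sound × echo time / 2. A minimal sketch of that conversion, plus picking the closest echo from a servo sweep, follows. The temperature model is a common linear approximation; treating a zero echo time as a timeout and all function names are assumptions.

```python
def speed_of_sound_m_s(temp_c: float = 20.0) -> float:
    # Common linear approximation for dry air near room temperature.
    return 331.3 + 0.606 * temp_c


def echo_to_distance_cm(echo_us: float, temp_c: float = 20.0) -> float:
    # The pulse travels to the obstacle and back, so halve the round trip.
    round_trip_m = speed_of_sound_m_s(temp_c) * echo_us / 1_000_000
    return round_trip_m / 2 * 100


def nearest_in_sweep(sweep: dict):
    """sweep maps servo angle (degrees) -> echo time (microseconds).

    Echo times of 0 are treated as timeouts (no echo) and ignored, which
    is an assumption about the sensor driver. Returns (angle, distance_cm)
    for the closest echo, or None if nothing was detected.
    """
    valid = {angle: t for angle, t in sweep.items() if t > 0}
    if not valid:
        return None
    angle = min(valid, key=valid.get)
    return angle, echo_to_distance_cm(valid[angle])
```

At 20 °C an echo of about 5830 µs corresponds to roughly 1 m, which shows why microsecond-resolution timing is needed for centimetre-level ranging.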

2.3. Feedback Techniques

People with normal vision depend heavily on visual feedback; they perceive more through vision than through hearing or touch. This is something that the visually impaired lack. Therefore, ETAs must provide sufficient information about the user's surroundings. Furthermore, feedback should be fast and should not interfere with hearing and touch [61].

2.3.1. Haptics

Haptic feedback offers visually impaired people an additional channel of interaction that does not interfere with other senses, such as hearing. Studies have noted that the visually impaired have better memorization and recognition of haptic tasks [83]. The advantages of haptic feedback include high privacy, because only the wearer can perceive the stimuli; usefulness in noisy environments; and the ability to extend the person's experience as an additional communication channel [84]. Buimer et al. [62] presented an experiment with a technique that recognizes facial emotions and conveys them to the user through a vibration belt. The authors conveyed six emotions by installing six vibration units in the belt. Although the technique has accuracy problems, a study with eight visually impaired participants gave satisfactory results: five found the belt easy to use and could interpret the feedback while conversing with another person, whereas the other three found it difficult to use. Gonzalez-Canete et al. proposed Tactons, identifying sixteen applications by distinct vibration signals so that they could be distinguished from one another. The authors found that haptic icons designed with musical techniques are more recognizable and easier to distinguish. In addition, adding complex vibrotactile sensations to smartphones is a significant benefit for users with any kind of sensory disability. The authors measured the recognition rates of VI and non-VI users. Non-VI users scored higher, especially when identifying applications they were familiar with; however, after a reinforcement learning stage in which some feedback was provided to the users, the recognition rate of VI users increased [84].
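The one-motor-per-emotion belt of [62] can be sketched as a lookup from a recognized emotion to a motor activation vector. This is a hypothetical illustration: the six emotion names (Ekman's basic emotions), the motor order, and the confidence threshold that keeps the belt silent on uncertain detections are assumptions, not details reported in [62].

```python
# Assumed label set and motor order: motor i signals EMOTIONS[i].
EMOTIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise")


def belt_pattern(emotion: str, confidence: float, threshold: float = 0.6) -> list:
    """Return the on/off state of each of the six belt motors.

    Detections below `threshold` leave every motor off, so that a noisy
    recognizer does not send misleading cues to the wearer.
    """
    if emotion not in EMOTIONS:
        raise ValueError(f"unknown emotion: {emotion}")
    if confidence < threshold:
        return [False] * len(EMOTIONS)
    return [name == emotion for name in EMOTIONS]
```

Gating on confidence is one way to trade missed cues for fewer wrong ones, which matters given the accuracy problems reported above.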

2.3.2. Acoustic

Re-displaying environmental information binaurally through headphones or earbuds masks vital environmental sounds on which many visually impaired people rely for safe navigation [22]. Bone-conduction helmets now enable users to receive 3D auditory feedback while leaving the ear canal open, keeping their eyes, hands, and mind free. To minimize auditory output further, the algorithm suppresses sounds that do not indicate a change, so audible output is produced only when the user encounters an obstacle, keeping potentially irritating sounds to a minimum [85].
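The "sound only on change" policy can be sketched as a small state machine that buckets distances into zones and emits a cue only when the zone changes toward an obstacle. This is a hypothetical sketch, not the algorithm of [85]; the zone edges, the cue strings, and the class name are assumptions.

```python
import bisect


class ChangeGatedAudio:
    """Emit a cue only when the obstacle situation changes.

    Distances are bucketed by zone_edges_m (hypothetical thresholds):
    zone 0 is closest; a distance at or beyond the last edge, or None,
    counts as "clear".
    """

    def __init__(self, zone_edges_m=(1.0, 2.0)):
        self.edges = sorted(zone_edges_m)
        self.clear_zone = len(self.edges)
        self.last_zone = self.clear_zone  # start with nothing ahead

    def update(self, distance_m):
        """Return a cue string, or None when the user should hear nothing."""
        if distance_m is None:
            zone = self.clear_zone
        else:
            zone = bisect.bisect_right(self.edges, distance_m)
        if zone == self.last_zone:
            return None  # unchanged: stay silent
        self.last_zone = zone
        if zone == self.clear_zone:
            return None  # obstacle passed: no sound needed
        return f"obstacle zone {zone}"
```

Feeding it the distances 3.0, 1.5, 1.4, 0.5, 0.4, 2.5, 1.8 produces only three cues (zone 1, zone 0, zone 1), instead of a sound for every reading.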

2.4. Functions and Applications

Here, we review the functions and applications that the visually impaired use to overcome everyday difficulties: obstacle detection, navigation, facial recognition, color and texture recognition, micro-interactions, text recognition, information services, and braille displays and printers. A detailed review follows.

2.4.1. Obstacle Detection

Much research has focused on obstacle detection because of its significance for the visually impaired, for whom it is a major challenge. An ETA that uses a microwave radar to detect obstacles up to head level through the sensor's vertical beam was presented in [37]. To reduce power consumption, the authors switched off the transmitter while listening for the echo. Moreover, a pulsed chirp scheme was adopted to manage the spatial resolution. To improve the precision of obstacle recognition for an indoor blind-guide robot, Du et al. [86] presented a sensor data fusion approach based on Dempster-Shafer (DS) evidence theory combined with a genetic algorithm. The system uses ultrasonic sensors, infrared sensors, and LiDAR to collect data from the surroundings. In practice, weighting and fusing evidence requires determining the weight of each piece of evidence, so the genetic algorithm is used to determine the weights of the various sensors, and these optimized weights are then used in the DS evidence fusion. Their technique achieves an accuracy of 0.94 for indoor obstacle identification. Bleau et al. [87] presented EyeCane, which can identify four kinds of obstacles: cubes, doors, posts, and steps. However, its bottom sensor failed to properly identify objects on the ground, making downward navigation more dangerous.
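Dempster's rule of combination, the core of the DS fusion used in [86], can be sketched for a two-hypothesis frame (obstacle, free). In this sketch each sensor's reliability weight is applied with Shafer's classical discounting; in [86] the weights come from a genetic algorithm, which is not reproduced here, and the example masses and weights are invented for illustration.

```python
from itertools import product

OBSTACLE = frozenset({"obstacle"})
FREE = frozenset({"free"})
THETA = OBSTACLE | FREE  # the whole frame, i.e. "don't know"


def discount(m: dict, weight: float) -> dict:
    """Shafer discounting: keep `weight` of each mass, move the rest to THETA."""
    out = {focal: weight * mass for focal, mass in m.items() if focal != THETA}
    out[THETA] = 1.0 - weight + weight * m.get(THETA, 0.0)
    return out


def combine(m1: dict, m2: dict) -> dict:
    """Dempster's rule of combination for two mass functions over THETA."""
    raw, conflict = {}, 0.0
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            raw[inter] = raw.get(inter, 0.0) + ma * mb
        else:
            conflict += ma * mb  # mass given to contradictory pairs
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    # Renormalize the non-conflicting mass so it sums to 1 again.
    return {focal: mass / (1.0 - conflict) for focal, mass in raw.items()}


# Illustrative masses for one scan position (invented numbers).
ultrasonic = {OBSTACLE: 0.8, FREE: 0.1, THETA: 0.1}
infrared = {OBSTACLE: 0.6, FREE: 0.3, THETA: 0.1}
fused = combine(discount(ultrasonic, 0.9), discount(infrared, 0.7))
```

Combining the undiscounted masses above gives a belief in "obstacle" of about 0.89, higher than either sensor alone; lowering a sensor's weight shifts its mass toward THETA and so reduces its influence on the fused result.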

2.4.2. Navigation

Navigation can be divided into two main categories, indoor and outdoor navigation, because each uses a different set of techniques. For example, the global positioning system (GPS) is not suitable for indoor localization because satellite signals become weak indoors, so GPS cannot determine whether the user is close to a building or a wall [88]. However, some studies have developed techniques that may apply to both.

AL-Madani et al. [88] adopted a fingerprinting localization algorithm with type-2 fuzzy logic for indoor navigation in rooms equipped with six BLE (Bluetooth Low Energy) beacons, with the computation performed on the smartphone. The algorithm achieved 98.2% indoor navigation precision and an average accuracy of 0.5 m. Jafri et al. [89] used Google's Project Tango to serve the visually impaired. The Unity engine's built-in functions in the Tango SDK were used to build a 3D reconstruction of the local area, and a Unity collider component attached to the user was then used for obstacle detection by determining its relationship with the reconstructed mesh. A method of indoor navigation assistance that uses an optical head-mounted display to direct the visually impaired is presented in [66]. The program creates indoor maps by monitoring a sighted person's activities inside the facility, generates and prints QR code location markers for locations of interest, and then gives blind users spoken directions. Pare et al. [90] investigated a smartphone-based sensory substitution device that provides navigation directions based on purely spatial cues in the form of horizontally spatialized sounds. The system uses several sensors to detect obstacles ahead of the user at a distance or to generate a 3D map of the environment, and it provides audio feedback to the user. The authors of [91] proposed a navigation system based on binaural bone-conducted sound, developed in the following steps to direct the user correctly to their desired point. First, the most suitable bone conduction device is identified, along with the best contact conditions between the device and the human skull. Second, using head-related transfer functions (HRTFs) acquired in the airborne sound field, the basic performance of sound localization reproduced by the chosen bone conduction device with binaural sounds is validated. A panned-sound approach was also adopted, which may accentuate the sound's perceived location.
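Fingerprinting localization of the kind used in [88] has an offline phase, in which RSSI vectors from the beacons are recorded at known positions, and an online phase that matches a live vector against those records. The sketch below uses weighted k-nearest neighbours for the matching step instead of the type-2 fuzzy logic of [88]; it is a swapped-in, simpler technique, and the function name, the k = 3 default, and the toy data are assumptions.

```python
import math


def knn_locate(fingerprints, observed, k=3):
    """Estimate (x, y) from BLE RSSI with weighted k-nearest-neighbour matching.

    fingerprints: list of ((x, y), rssi_vector) recorded offline at known points.
    observed: live RSSI vector (dBm) from the same beacons, in the same order.
    """
    # Rank reference points by Euclidean distance in signal space.
    nearest = sorted(
        (math.dist(rssi, observed), pos) for pos, rssi in fingerprints
    )[:k]
    if nearest[0][0] == 0.0:
        return nearest[0][1]  # exact match with a reference point
    # Closer fingerprints (in signal space) get proportionally more weight.
    weights = [1.0 / d for d, _ in nearest]
    total = sum(weights)
    x = sum(w * pos[0] for w, (_, pos) in zip(weights, nearest)) / total
    y = sum(w * pos[1] for w, (_, pos) in zip(weights, nearest)) / total
    return (x, y)


# Toy survey with two beacons along a 4 m corridor (values invented).
survey = [((0, 0), [-50, -70]), ((4, 0), [-70, -50]), ((2, 0), [-60, -60])]
estimate = knn_locate(survey, [-55, -65], k=2)
```

With the toy survey, a live reading halfway between the first and third fingerprints yields an estimate of about (1, 0). Real deployments need a much denser survey and some RSSI smoothing.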

iMove Around and Seeing Assistant [92,93] let users know their current location, including the street address; receive details about their immediate area (OpenStreetMap); manage their points and paths; create automated paths; navigate to a selected point or path; and exchange newly generated data with other users. The apps can be controlled with voice commands. Seeing Assistant Move has an exploration mode that uses a magnetic compass to measure direction accurately and reports it using clock hours. It also has a light source detector that allows the user to interact with devices that use a diode as an indicator. The light source detector is extremely useful for people who are fully blind; for example, it helps them avoid leaving a lamp on when leaving the house or going to sleep. To accommodate signaling devices (such as diodes and control lights), the program also has a feature that helps the user detect a blinking light, which can indicate whether a device is turned on or whether a battery level is low or high. The iMove Around app, on the other hand, records voice notes associated with the user's position, and a note is played whenever the user is near the position where it was recorded. BlindExplorer [94] uses 3D sounds as auditory stimuli, a type of feedback that helps users follow a route, head toward a destination, or keep to the right direction without needing to look at the screen or take their eyes off the ground. Right Hear [95] is a virtual accessibility assistant that helps users to easily navigate new environments. It has two modes, indoor and outdoor, and locates the visually impaired user's current position and nearby points in both. However, indoor localization is limited to supported locations.
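Reporting a compass direction in clock hours, as Seeing Assistant Move does, amounts to taking the target's bearing relative to the user's heading and rounding it to the nearest 30-degree sector. A minimal sketch, assuming both angles are compass bearings in degrees; the function name is hypothetical, and the app's actual rounding rules are not documented here.

```python
def clock_direction(heading_deg: float, target_bearing_deg: float) -> int:
    """Direction of a target as a clock hour relative to the user's heading.

    12 = straight ahead, 3 = right, 6 = behind, 9 = left. Both inputs are
    compass bearings in degrees (0 = north, increasing clockwise).
    """
    relative = (target_bearing_deg - heading_deg) % 360
    hour = int(relative / 30 + 0.5) % 12  # round half up to the nearest hour
    return 12 if hour == 0 else hour
```

For example, a user facing east (90°) with a target due north (0°) is told "9 o'clock", i.e. to their left.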

Ariadne GPS [96] has features that make it suitable for the visually impaired. Namely, it uses VoiceOver to tell the user, by touch, the names and numbers of the streets around them: by simply placing a finger on the screen while viewing and moving the map, the user hears about the streets. The user's location is at the center of the screen; everything in front of the user is in the top half of the screen, while the bottom half shows what is behind the user. The app can report the user's position at all times: when its monitoring function is activated, it informs the user about their location continuously. BlindSquare [97] is a self-voicing app that works with third-party navigation applications to provide detailed points of interest and intersections for navigating both outdoors and indoors, and it is designed especially for blind, visually impaired, deafblind, and partially sighted users. BlindSquare uses special algorithms to determine which data are most important and talks to the user through high-quality speech synthesis. The app can be controlled by voice commands; however, the Voice Command feature is a paid service that requires purchasing credits for continued use. Voice Command credits are available on the App Store as an in-app purchase.

2.4.3. Facial and Emotion Recognition

Morrison et al. [98] investigated the technological needs of the visually impaired through tactile ideation. Their findings pointed to the need of people with visual disabilities for social information, such as facial recognition and emotion recognition. Social engagement, the ability to see what others are doing, and the simulation of a variety of visual skills, such as object recognition and text recognition, were also identified as important. The importance of knowing people's emotions was discussed in [62], where the authors used computer vision to address the problem. Their system uses facial recognition to capture six basic emotions and then conveys the detected emotion to the user through a vibration belt. The proposal faced several challenges, including lighting conditions, the movements of the person opposite the user, and the need for that person to face the camera directly while pictures were captured, all of which affected recognition accuracy. The authors did not report any numerical accuracy results in their paper.

2.4.4. Color and Texture Recognition

Medeiros et al. [65] presented a finger-mounted wearable that can recognize colors and visual textures. The device includes a tiny camera and a co-located light source (an LED) placed on the finger. It lets users obtain color and texture information by touch: they glide a finger across a piece of clothing and combine their understanding of the physical material with automated audio feedback about its visual appearance. The authors used a special camera for this purpose. Visual textures were identified with a machine learning approach, while colors were identified through superpixel segmentation, supporting a better understanding of clothing appearance.

Color Inspector [99] was developed to help blind and other visually impaired people distinguish colors by analyzing live camera footage, describing the color in view, and recognizing complex colors. It supports VoiceOver, which reads the color aloud. Color Reader [100] identifies colors in real time when the camera is simply pointed at an object, and it can read colors aloud in Arabic. ColoredEye [101] provides color categories with different levels of description, such as BASIC, with 16 fundamental colors; CRAYOLA, with 134 fun pigments; and DETAILED, with 134 descriptive colors.
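Naming a color from a camera sample can be as simple as finding the nearest entry in a fixed palette. The sketch below assumes the 16 basic HTML/CSS color keywords as the palette, which is not necessarily ColoredEye's BASIC set, and uses plain squared RGB distance; perceptual color spaces such as CIELAB usually match human judgments better.

```python
# The 16 basic HTML/CSS color keywords (assumed palette).
BASIC_COLORS = {
    "black": (0, 0, 0), "silver": (192, 192, 192), "gray": (128, 128, 128),
    "white": (255, 255, 255), "maroon": (128, 0, 0), "red": (255, 0, 0),
    "purple": (128, 0, 128), "fuchsia": (255, 0, 255), "green": (0, 128, 0),
    "lime": (0, 255, 0), "olive": (128, 128, 0), "yellow": (255, 255, 0),
    "navy": (0, 0, 128), "blue": (0, 0, 255), "teal": (0, 128, 128),
    "aqua": (0, 255, 255),
}


def name_color(rgb) -> str:
    """Return the palette name closest to rgb (squared Euclidean RGB distance)."""
    def dist2(name):
        return sum((a - b) ** 2 for a, b in zip(BASIC_COLORS[name], rgb))
    return min(BASIC_COLORS, key=dist2)
```

An app would typically average a small patch around the image center before naming it, so that sensor noise in a single pixel does not flip the answer.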

2.4.5. Micro-Interactions

Micro-interactions are the animations and configuration adjustments that occur when a user interacts with something [102]. A micro-interaction has four parts. First, a trigger starts the micro-interaction. Triggers can be user-initiated, requiring the user to take an action, or system-initiated, in which case the software detects that particular conditions are met and takes action. Second, once the micro-interaction starts, rules dictate what happens next. Third, feedback, defined as whatever a user sees, hears, or feels during the micro-interaction, informs the user about what is happening. Finally, loops and modes determine the meta-rules of the micro-interaction [103]. Oh et al. [58] presented a finger-mounted device that uses physical gestures to facilitate micro-interactions in common daily applications, such as setting an alarm or finding and opening an app. The authors carried out a survey to study the efficiency of their methods.

2.4.6. Text Recognition

For text recognition, the important questions are how to use this feature and how to convey only the important information rather than all of the text detected in the surrounding area [104]. A blob is a region of pixels whose intensity differs from that of the surrounding pixels. Although MSER (maximally stable extremal regions) can detect blobs faster, the SWT (stroke width transform) algorithm can detect characters in an image without a separate learning process [104]. Shilkrot et al. [105] developed FingerReader, a reading support device that helps visually impaired people read printed text with real-time response. It is a close-up scanning device worn on the index finger that reads printed text one line at a time and provides haptic and audible feedback. The authors of [106] used a text extraction algorithm integrated with Flite text-to-speech. The technique uses a close-up camera to capture the printed text. The trimmed curves are then matched with the lines, and a 2D histogram is used to ignore repeated words. The program then forms words from the characters and passes them to OCR. As the user continues scanning, the identified words are recorded in a template, so the system keeps track of those words when a match occurs. When the user deviates from the current line, they receive audible and tactile feedback, and when the device finds no more printed text blocks, it signals the end of the line through tactile feedback. SeeNSpeak [107] supports VoiceOver and can read aloud text from photographs of books, newspapers, posters, bottles, or any other item with text. The app can also translate the detected text into a wide range of target languages.

2.4.7. Information Services

In the context of location-based services, a beacon is a tiny hardware device that allows data to be transmitted to mobile devices within a certain range. Most apps require that receivers have Bluetooth switched on and that they download the corresponding mobile app and have location services turned on. Moreover, they require that the receiver accepts the sender’s messages. Beacons are frequently mounted on walls or other surfaces in the area as small standalone devices. Beacons can be basic, sending a signal to nearby devices, but they can also be Wi-Fi- and cloud-connected, with memory and processing resources. Some are equipped with temperature and motion sensors [108]. Perakovic et al. [60] used the beacon technology to inform the visually impaired of the required information, such as notifications about possible obstacles, location of an object, and information about a facility or discounts, and to provide navigation in indoor environments.
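A common way for an app to decide that a user is "within range" of a beacon is the log-distance path-loss model, d = 10^((TxPower - RSSI) / (10 n)), where TxPower is the RSSI measured at 1 m and n is the path-loss exponent. The sketch below is illustrative and not taken from [60]: the -59 dBm calibration value, the exponent of 2, the 3 m notification radius, and the helper names are assumptions, and indoor estimates from this model are coarse.

```python
import statistics


def rssi_to_distance_m(rssi_dbm: float,
                       tx_power_dbm: float = -59.0,
                       path_loss_exponent: float = 2.0) -> float:
    """Log-distance path-loss estimate: d = 10 ** ((TxPower - RSSI) / (10 * n)).

    tx_power_dbm: RSSI expected at 1 m (a per-beacon calibration value).
    path_loss_exponent: about 2 in free space, usually higher indoors.
    """
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10 * path_loss_exponent))


def should_notify(rssi_samples, radius_m: float = 3.0, **model) -> bool:
    """Decide whether to deliver a beacon's message, using the median RSSI to damp noise."""
    distance_m = rssi_to_distance_m(statistics.median(rssi_samples), **model)
    return distance_m <= radius_m
```

With these defaults, a reading of -79 dBm corresponds to about 10 m; taking the median of several samples guards against the large swings typical of single BLE RSSI readings.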

2.4.8. Braille Display and Printer

Braille technology is an assistive technology that helps blind or visually impaired individuals to perform basic tasks, such as writing, internet searches, braille typing, text processing, chatting, file downloading, recording, electronic mail, song burning, and reading [109]. Major challenges include the high cost of some current braille technologies and the large and heavy format of some braille documents or books with embossed paper. Braille is a representation of the alphabet, numbers, marks of punctuation, and symbols, composed of cells of dots. In a cell, there are 6–8 possible dots, and a single letter, number, or punctuation mark is created by one cell.
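Because each braille cell is a pattern of up to eight dots, it maps naturally onto a bitmask, and Unicode's Braille Patterns block (U+2800 to U+28FF) encodes exactly that: dot n sets bit n - 1. A minimal sketch for uncontracted (Grade 1) English letters follows; capitals, numbers, punctuation, and contractions are out of scope, and the function names are hypothetical.

```python
# Dots for a..j in uncontracted English braille; k..t add dot 3, and
# u, v, x, y, z repeat a..e with dots 3 and 6 added. "w" is irregular.
FIRST_DECADE = ["1", "12", "14", "145", "15", "124", "1245", "125", "24", "245"]


def letter_dots(ch: str) -> str:
    """Return the raised dots (as sorted digits) for one Latin letter."""
    ch = ch.lower()
    if len(ch) != 1 or not "a" <= ch <= "z":
        raise ValueError(f"not a Latin letter: {ch!r}")
    if ch == "w":
        return "2456"
    i = ord(ch) - ord("a")
    if i < 10:
        return FIRST_DECADE[i]
    if i < 20:
        return "".join(sorted(FIRST_DECADE[i - 10] + "3"))
    return "".join(sorted(FIRST_DECADE["uvxyz".index(ch)] + "36"))


def dots_to_unicode(dots: str) -> str:
    """Encode a cell as a Unicode braille pattern: dot n sets bit n - 1."""
    mask = 0
    for d in dots:
        n = int(d)
        if not 1 <= n <= 8:
            raise ValueError(f"invalid dot number: {d}")
        mask |= 1 << (n - 1)
    return chr(0x2800 + mask)


def to_braille(word: str) -> str:
    """Transcribe a word letter by letter into Unicode braille cells."""
    return "".join(dots_to_unicode(letter_dots(c)) for c in word)
```

The same bitmask is a convenient internal representation for driving the pins of a refreshable display, since each bit corresponds to one pin of a cell.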

A braille display, often called a refreshable braille display or braille terminal, is an electromechanical device for presenting braille characters, usually by raising round-tipped pins through holes in a flat surface. The visually impaired use it instead of a monitor. Through the braille display, they can enter commands and text, and the text and images on the screen are conveyed to them by changing, or refreshing, the braille characters on the display. They can browse the internet, draft documents, and use a computer in general. A braille display can show up to 80 characters from the screen, and it is updated as the user moves the cursor around the screen using the command keys, cursor routing keys, or Windows and screen reader controls. Braille displays of 40, 70, or 80 characters are typically available; for most occupations, a 40-character display is sufficient.

Other important devices for the visually impaired, especially for learning or office work, include the refreshable braille display and the braille embosser. A braille printer, or braille embosser, uses solenoids to drive embossing pins. It receives data from computing devices and embosses the information on paper in braille, producing tactile dots on heavy paper that make written documents readable by the blind. Depending on the number of characters displayed, braille displays cost from USD 3500 to USD 15,000. In 2012, the Transforming Braille Group conducted sixty-three studies aimed at finding new ways to develop refreshable braille. Orbit Reader 20, a 20-cell refreshable braille display [110] that currently costs USD 599, is the result of these studies. However, Orbit Reader 20 is a basic version that displays a limited number of braille characters.

Braille embossers need special braille paper, which is heavier and more expensive than standard printer paper, and braille printing requires more pages than standard printing for the same volume of data. Embossers are also slow and noisy. Some braille printers can print single- or double-sided pages. Although embossers are relatively simple to use, they can be messy, and the quality of the finished product can differ somewhat from one device to another [111]. The cost of a braille printer depends on the volume of braille it produces: low-volume braille printers cost between USD 1800 and USD 5000, whereas high-volume printers cost between USD 10,000 and USD 80,000 [112].

2.4.9. Money Identifier App

Blind people have difficulty determining the value of the banknotes in their possession, and they usually ask and rely on others to discover the value of the banknotes, which exposes them to the risk of theft or fraud. Money identifier apps are a type of software that can recognize the denomination of banknotes used as currencies. The “Cash Reader: Bill Identifier” app [113] can identify a wide range of currencies. However, it requires a monthly payment subscription. MCT Money Reader [114] is another app that can recognize currencies, including Saudi Riyal, with a cost of SR 58.99 for lifetime use.

2.4.10. Light Detection

The Light Detector [115] translates any natural or artificial light source it encounters into sound. The user locates a light by pointing the phone camera toward it and hears a higher or lower tone depending on the light's intensity. This helps visually impaired users determine whether the lights at home are on or off, or whether the blinds are drawn, by moving the device up and down.
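The brightness-to-pitch behavior described for the Light Detector can be sketched as: average the luminance of a camera frame, normalize it, and map it to a frequency. The Rec. 601 luma weights are standard; the 200 Hz to 2000 Hz range, the exponential mapping, and the function names are assumptions, not the app's documented behavior.

```python
def luminance(rgb) -> float:
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b  # Rec. 601 luma weights


def brightness_to_tone_hz(pixels, low_hz: float = 200.0, high_hz: float = 2000.0) -> float:
    """Map the mean brightness of 8-bit RGB pixels to a tone frequency.

    Exponential interpolation makes equal brightness steps sound like
    roughly equal pitch steps, since pitch perception is logarithmic.
    """
    if not pixels:
        raise ValueError("no pixels to analyze")
    level = sum(luminance(p) for p in pixels) / len(pixels) / 255.0
    return low_hz * (high_hz / low_hz) ** level
```

A dark frame yields the 200 Hz floor and a fully white frame about 2000 Hz, so sweeping the phone past a lamp produces a clearly rising and falling tone.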

2.4.11. External Assistance App

Be My Eyes [116] is an app with which blind or visually impaired users can request help from sighted volunteers. Once the first sighted user accepts the request, a live audio and video call is established between the two parties. With a back-camera video call function, the sighted aide can assist the blind or visually impaired. However, the app depends on an insufficient number of volunteers and has privacy issues because the users share videos and personal information during the connection.

2.4.12. Multifunctional App

Sullivan+ (blind, visually impaired, low vision) [117] analyzes photos taken with the phone camera using its AI mode, which automatically finds the top results that match the pictures. The app supports text recognition, facial recognition, image description, color recognition, light brightness measurement, and a magnifier. However, its facial and object recognition capabilities need further improvement. Visualize-Vision AI [118] uses different neural networks and AI to identify pictures and text. The software is intended to provide visual assistance to the visually disabled, while also offering a developer mode in which various AIs can be explored. Another app that identifies objects using artificial neural networks was explored in [119]; with it, a visually impaired person can find an object by saying its name, and the app locates it.

Seeing Assistant Home [120] offers an electronic lens for partially sighted persons, color identification, light source detection, and the ability to scan and generate barcodes and QR codes. VocalEyes AI [121] can assist the visually impaired with the following functions: object recognition; reading text; describing environments; identifying label brands and logos; facial recognition; emotion classification; age recognition; and currency recognition. LetSee [122] has three functions: money recognition, which supports several currencies but not the Saudi Riyal; plastic card recognition; and light measurement tools that help users locate light sources, such as spotlights, cameras, or windows. The brighter the light, the louder the sound the user hears. TapTapSee [123] supports object recognition, barcode and QR code reading, auto-focus notification, and flash toggling. Aipoly Vision [124] offers its service, including full object recognition, for a monthly fee of USD 4.99. The software also provides currency recognition, text reading, color recognition, and light detection. However, it is supported only on designated iPhone and iPad devices.

2.4.13. VoiceOver

For visually impaired people, VoiceOver is one of the most useful functions. VoiceOver is a gesture-based screen reader that lets users operate a mobile device even if they cannot see the screen, by providing spoken descriptions of what is on the screen.

On an iPhone, for example, when the user touches the screen or drags a finger over it, VoiceOver speaks the name of whatever the user touches, including icons and text. To interact with an item, such as a button or link, or to move to another item, the user performs VoiceOver gestures. When the user moves to a new screen, VoiceOver plays a sound and then selects and speaks the name of the first item on the screen (typically in the top-left corner). VoiceOver also tells the user when the display changes to landscape or portrait orientation, when the screen dims or locks, and what is active on the lock screen when the user turns on their iPhone [125].

VoiceOver on iOS communicates with the user through a variety of “gestures”, or motions made with one or more fingers on the screen. Many gestures are location-sensitive: sliding a finger over the screen, for example, reveals the screen's visual contents as the finger passes over them, which allows visually impaired users to explore an application's actual on-screen layout. A user activates the selected element by double-tapping, much as a sighted user would double-click with a mouse. VoiceOver can also switch off the display while keeping the touch screen responsive, conserving battery life; Apple calls this function the “Screen Curtain” [126].

2.4.14. Virtual Assistant Apps (Voice Commands)

Virtual assistants can help the visually impaired by letting them control their mobile devices with voice commands. Here, we investigate three of the most popular recent virtual assistants, Siri, Google Assistant, and Bixby, and briefly describe Alexa. One of the main concerns that we explore is privacy.

Siri

Siri is a virtual assistant that uses voice queries and a natural-language user interface to answer questions, make recommendations, and perform actions by delegating requests to a set of internet services [127]. Siri assists with a range of tasks, such as making phone calls; sending messages; setting alarms, timers, and reminders; handling device settings; getting directions; scheduling events; previewing the calendar; controlling smart homes; making payments; playing music; checking facts; making calculations; and translating a phrase into another language [128]. However, some functions require visual interaction to complete, because the assistant is designed for sighted people rather than for the visually impaired. It needs improvements in order to satisfy their needs. Siri supports various languages, including Arabic.

Apple notes that Siri searches and requests are associated with a specific identifier rather than an Apple ID; thus, personal information is not stored for sale to advertisers or other organizations. Apple states that users can reset the identifier at any time by turning Siri off and back on, which effectively restarts their interaction with Siri and erases the user data associated with the old identifier. The terms state that Apple may use personal information only to provide or enhance its products, services, and ads, including in third-party applications. Personal data are not shared with third parties for those parties’ own marketing purposes. However, Apple may use, transfer, and disclose non-personal information for any purpose. For example, Apple’s websites, online services, mobile software, email messages, and third-party product ads use tracking tools that help Apple to better understand customer behavior, learn which areas of its website users access, and promote and evaluate the effectiveness of advertising and searches. Users may see advertisements based on other information in third-party applications; however, a child’s Apple ID receives non-targeted advertisements on such platforms. Apple’s terms state that protecting children is a significant priority and that Apple provides parents with the information they need to decide what is best for their child.

Google Assistant

Google Assistant is an artificial-intelligence-powered virtual assistant created by Google; it is mostly available on smartphones and smart home platforms. Google Assistant can be accessed through its website and can be downloaded from the iOS App Store and the Google Play Store. For privacy, Google Accounts are designed with on/off data controls, allowing users to choose the privacy settings that suit them. Google’s terms also note that its privacy policies change frequently as technology advances, so privacy remains an individual choice determined by the user. The terms state that Google may use users’ personal information to provide advertising services to third parties but that it does not sell users’ personal information to third parties. Moreover, the terms state that Google can show targeted ads to users, but users can change their preferences, choose whether their personal information is used to make ads more relevant to them, and turn such advertising services on or off. The terms also state that Google allows specific partners to use cookies or similar technologies to retrieve information from a user’s account or computer for advertising and measurement purposes. Google’s terms note, however, that personalized ads are not shown to children, meaning that ads are not based on information from a child’s account.

Google’s terms note that all of its services allow users to connect with other users, whether trusted or untrusted, and to exchange information with other people, such as those with whom a user chats or shares content. If a user has a Google Account, their profile name, profile photo, and the activities that they carry out on Google, or on third-party applications linked to their Google Account, may be visible to others. In addition, information about a child, including their name, photo, email address, and transactions on Google Play, can be shared with family members through Google Family Link. The terms also note that Google does not collect or use data for advertising purposes in the Google Cloud or G Suite services and that there are no advertisements in G Suite or Google Cloud Platform. Finally, Google’s terms note that it does not show users personalized advertisements based on sensitive categories, such as religion, race, health, or sexual orientation.

Bixby

Bixby [129] is an intelligent digital assistant for Samsung devices that listens and records at the user’s request and works with their favorite applications. Bixby Vision’s scene description function describes what is displayed on the screen. Personalized Bixby lets the assistant learn the user’s preferences from their usage so that it becomes more useful over time. In addition, Bixby can control smart devices through voice commands when the apps are connected to SmartThings; for example, the user can change the TV channel or turn the lights on or off.

A privacy notice describes what is recorded and saved. Bixby will not work until the user grants permission to collect the information it requires, which includes the username, birthdate, phone number, email address, device identifiers, voice commands, health information, and any information provided through the application, such as the user’s interactions with the app. In addition, some of this information can be sent to an external third party to convert voice commands into text. Some of Samsung’s services allow users to communicate with others, and those users may view information stored or displayed in the user’s account on the social networking service to which they are connected. Samsung can also use third-party tracking technology for a range of purposes, such as evaluating the usage of its services and (in combination with cookies) delivering user-relevant content and advertising. Some third parties may serve advertisements or track which advertisements users see, how frequently they see them, and how users respond. The terms note that only authorized members of Samsung’s Bixby voice service team can access and otherwise process personal data, in accordance with their job or contractual duties. Nevertheless, the terms do not reveal how industry-standard security measures, such as encryption, are used to protect sensitive data in transit or at rest [130]. Moreover, the word “Bixby” is not easy to pronounce in Arabic, and Bixby does not currently support the Arabic language.

Alexa

Alexa began as the assistant software on a smart speaker, capable of listening to users’ questions and answering them. Over time, more household gadgets were interconnected through Alexa, and they can be operated from smartphones anywhere. Amazon first built the Alexa platform to work as a digital assistant and entertainment device, but its applications grew to encompass IoT, online searching, smart office, and smart home features, substantially improving the way that the average person interacts with technology [131]. To make it easier to build interactions with Alexa, Amazon provides developers with a set of tools, APIs, reference solutions, and documentation [132].

2.5. Research Gap

Table 2 compares LidSonic V2.0 with the related works. Column 2 lists the technologies used in each work, and Column 3 gives the setting in which each work operates (indoor or outdoor). The studies are then examined for their capacity to detect transparent objects and for whether the gadget is hands-free. Column 7 records whether the device can operate at night, which is critical. We also note whether machine learning techniques were used, which forms of feedback were provided, and whether that feedback included verbal feedback. In addition, we examine processing speed, because the solution requires real-time, fast data processing, and we consider whether each gadget has low energy consumption, whether it is inexpensive, whether it requires little memory, and how much it weighs. Each of the related studies addresses, and satisfies the requirements of, some of these essential system features, whereas our work addresses all of them. To ensure maturity and robustness, further system optimization and assessment are required. A detailed comparison for LidSonic V1.0, which also applies to LidSonic V2.0, is provided in [39].

Table 2.

System Aspects and a Comparison with Related Works.

We noted earlier that, although several devices and systems for the visually impaired have been developed in academic and commercial settings, the current devices and systems lack maturity and do not completely fulfill user requirements and satisfaction. We created a low-cost, miniature green device that can be built into, or mounted on, any pair of glasses or even a wheelchair to assist the visually impaired. Our method allows for faster inference and decision-making while using relatively little energy and smaller data sizes. This paper focuses on facilitating the mobility of the visually impaired because mobility is one of the most basic and important tasks that the visually impaired must perform to be self-reliant, as explained in Section 1. The broader literature review in this section makes the reader aware of other requirements of, and solutions for, the visually impaired, with the aim of breaking down research barriers and enabling collaboration between different solution providers, leading to the integration of different solutions into holistic solutions for the visually impaired. Increased and collaborative research activity in this field will encourage the development, commercialization, and widespread acceptance of devices for the visually impaired.

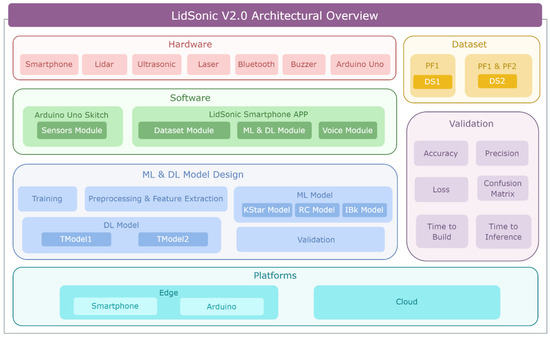

3. A High-Level View

Sections 3.1, 3.2, and 3.3 present a high-level view of the LidSonic V2.0 system from three perspectives: the user view, the developer view, and the system view, respectively. A detailed description of the system design is provided in Section 4.

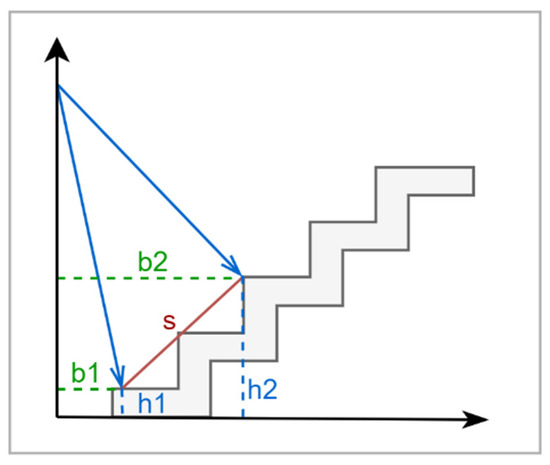

3.1. User View

Figure 2 shows the user view. The user puts on the LidSonic V2.0 gadget, which is mounted on a glasses frame, and downloads and installs the LidSonic V2.0 smartphone app. The app and the device communicate over a Bluetooth connection. LidSonic V2.0 is intensively trained in both indoor and outdoor settings. As the user walks around indoor and outdoor surroundings, the gadget can be further trained and validated. Furthermore, a family member of the visually impaired person or a volunteer may walk around with the gadget to retrain and check it as needed. The gadget has a warning system: when the user encounters an obstacle, a buzzer is activated. Additionally, the system may provide vocal feedback, such as “Ascending Stairs”, to warn the user of an impending obstacle. The user may also hear the result by pressing the screen in prediction mode. A user or their assistant can also use voice commands to label or relabel an obstacle class and create a dataset. This enables validation and refinement of the machine learning model, for example, by correcting the label of an object that was incorrectly classified.
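The voice-driven labeling workflow described above can be pictured as a small dataset in which each LiDAR scan is paired with a class name taken from a transcribed voice command. The following is a minimal Python sketch under stated assumptions: the class `ObstacleDataset`, the helper `normalize_label`, and the class list `KNOWN_CLASSES` are hypothetical (only “Ascending Stairs” appears in the text), and the speech-to-text step is assumed to have already produced the transcript string.

```python
from dataclasses import dataclass, field

# Hypothetical obstacle classes; only "ascending stairs" is named in the text.
KNOWN_CLASSES = {"ascending stairs", "descending stairs", "wall", "floor"}


def normalize_label(transcript: str) -> str:
    """Turn a transcribed voice command into a canonical class name."""
    label = " ".join(transcript.lower().split())  # ignore case and extra spaces
    if label not in KNOWN_CLASSES:
        raise ValueError(f"unknown obstacle class: {transcript!r}")
    return label


@dataclass
class ObstacleDataset:
    """Hypothetical store pairing LiDAR scans with user-supplied labels."""
    scans: list = field(default_factory=list)   # one distance vector per scan
    labels: list = field(default_factory=list)  # one class name per scan

    def add_labeled_scan(self, scan, transcript: str) -> int:
        """Append a scan labeled by voice; return its index for later edits."""
        label = normalize_label(transcript)  # validate before storing anything
        self.scans.append(list(scan))
        self.labels.append(label)
        return len(self.labels) - 1

    def relabel(self, index: int, transcript: str) -> None:
        """Correct the label of a scan that was previously misclassified."""
        self.labels[index] = normalize_label(transcript)
```

Validating a label before it is stored, rather than accepting any transcript, keeps a misheard command from silently introducing a new class into the training data.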

Figure 2.

LidSonic: A User’s View.

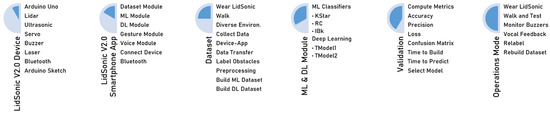

3.2. Developer View

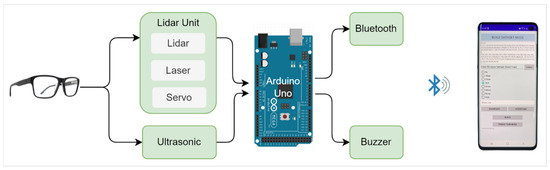

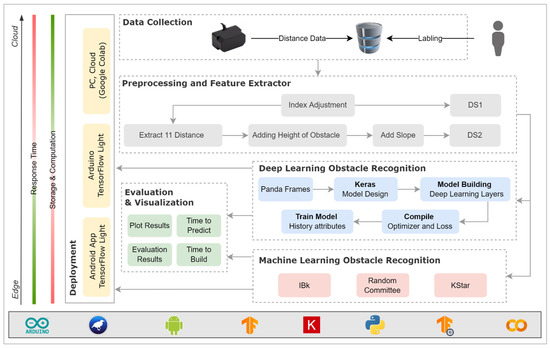

As shown in Figure 3, module development starts with the construction of the LidSonic V2.0 device. The gadget consists of a LiDAR sensor, an ultrasonic sensor, a servo motor, a buzzer, a laser, and a Bluetooth module, all connected to an Arduino Uno microcontroller board. We then wrote an Arduino sketch to integrate and control the different components (sensors and actuators) and their communication. The LidSonic V2.0 smartphone app was created with Android Studio. We created a dataset module to support dataset generation. The chosen machine learning or deep learning module was then used to build and train the models. We used the Weka library for the machine learning models and the TensorFlow framework for the deep learning models. A Bluetooth connection links the LidSonic V2.0 device and the mobile app and carries data between them. The Google speech-to-text and text-to-speech APIs were used to develop the speech module.
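Because a Bluetooth serial link delivers bytes in arbitrary chunks rather than whole messages, the app side has to reassemble complete messages before passing sensor data to the dataset or learning modules. The paper does not specify the message format, so the Python sketch below assumes a hypothetical newline-terminated text format: `S,<d1>,...,<dn>` for a LiDAR sweep and `U,<d>` for a single ultrasonic reading, with integer distances. The names `parse_frame` and `FrameReader` are illustrative and are not part of LidSonic V2.0.

```python
def parse_frame(line: str):
    """Parse one complete frame in the assumed (hypothetical) text format."""
    tag, *fields = line.strip().split(",")
    values = [int(v) for v in fields]  # distances as integers, e.g. centimetres
    if tag == "S" and values:
        return ("lidar_sweep", values)
    if tag == "U" and len(values) == 1:
        return ("ultrasonic", values[0])
    raise ValueError(f"malformed frame: {line!r}")


class FrameReader:
    """Reassemble newline-terminated frames from arbitrary Bluetooth chunks."""

    def __init__(self):
        self._pending = ""  # tail of a frame whose newline has not arrived yet

    def feed(self, chunk: bytes):
        """Add raw bytes; return every frame completed by this chunk."""
        self._pending += chunk.decode("ascii")
        # Everything before the last newline is complete; keep the rest.
        *complete, self._pending = self._pending.split("\n")
        return [parse_frame(line) for line in complete if line.strip()]
```

Buffering the unfinished tail is the key design choice: a frame split across two Bluetooth reads is parsed only once its terminating newline arrives.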

Figure 3.

LidSonic: A Developer’s View.

The developer wears the LidSonic V2.0 device and walks around to create the dataset. The device sends sensor data to the smartphone app, which classifies the obstacle data and generates the dataset. To evaluate the models, we used standard machine learning and deep learning performance metrics. The developer then wore the trained LidSonic V2.0 gadget and went for a walk to test it in operational mode, observing the system’s buzzer and vocal feedback. The dataset can be expanded and recreated by developers, users, or their assistants to increase the device’s accuracy and precision.
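Standard classification metrics typically include accuracy and per-class precision, recall, and F1 score. Weka and TensorFlow each ship their own evaluation utilities; the framework-neutral Python sketch below only illustrates how these quantities follow from lists of true and predicted obstacle labels. The function name `obstacle_metrics` and any label values used with it are hypothetical.

```python
from collections import Counter


def obstacle_metrics(y_true, y_pred):
    """Accuracy plus per-class precision, recall, and F1 from label lists."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # true positives per class
    predicted = Counter(y_pred)  # how often each class was predicted
    actual = Counter(y_true)     # how often each class actually occurred
    per_class = {}
    for c in sorted(set(y_true) | set(y_pred)):
        precision = correct[c] / predicted[c] if predicted[c] else 0.0
        recall = correct[c] / actual[c] if actual[c] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[c] = {"precision": precision, "recall": recall, "f1": f1}
    return {"accuracy": sum(correct.values()) / len(y_true), "per_class": per_class}
```

Accuracy alone can hide poor performance on an infrequent class, which is why the per-class figures are reported alongside it.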

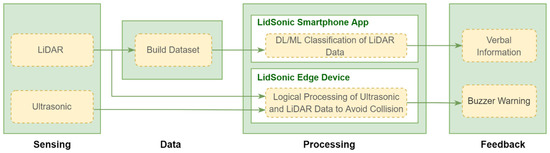

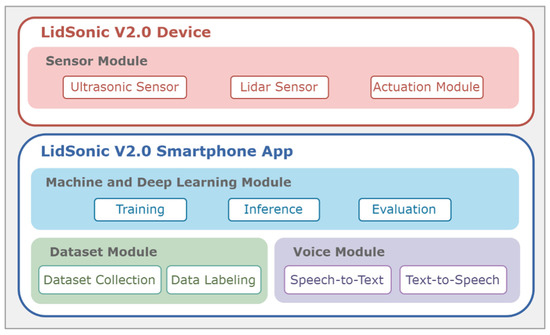

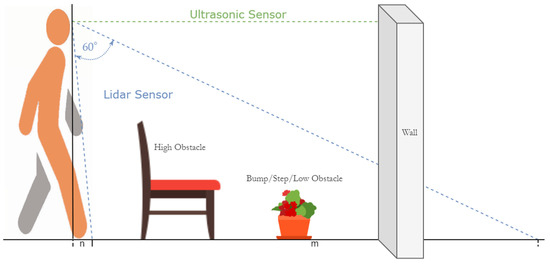

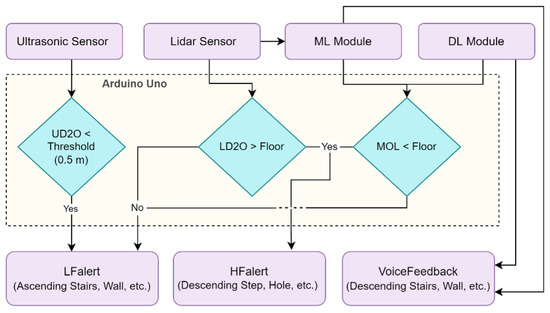

3.3. System View

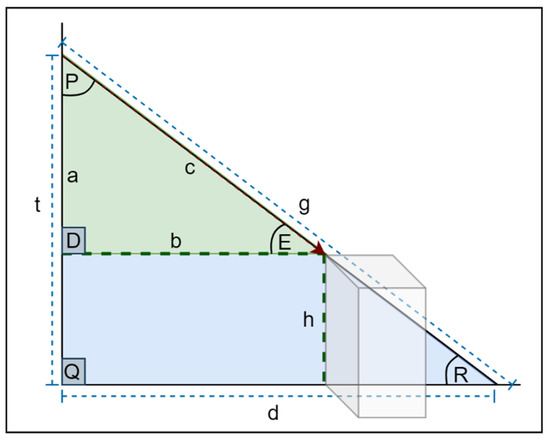

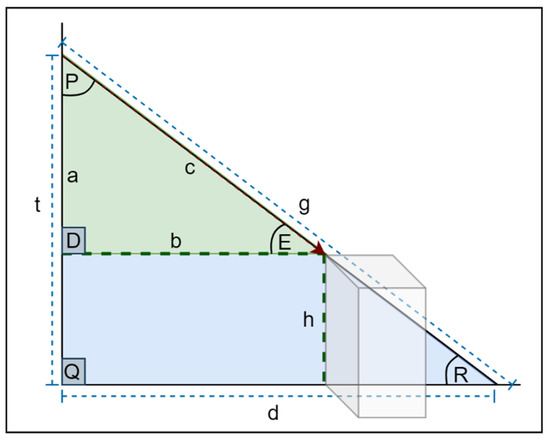

LidSonic V2.0 detects hazards in the environment using various sensors, analyzes the data through multiple channels, and issues buzzer warnings and vocal feedback. We propose a technique for detecting and recognizing obstacles that uses an edge device together with an app that collects data for recognition. Figure 4 presents a high-level functional overview of the system. Once the Bluetooth connection is established, data are collected from the LiDAR and ultrasonic sensors. If the system does not already have an obstacle dataset, one must be created; the dataset is built from LiDAR data only. The data are processed through two distinct channels. In the first channel, the Arduino unit applies simple logic: the sensors operated by the Arduino Uno controller provide the essential data that visually impaired people need to perceive the obstacles surrounding them, and the Arduino processes the ultrasonic and basic LiDAR data rapidly to give feedback through a buzzer. In the second channel, the smartphone app analyzes the LiDAR data using deep learning or machine learning techniques and produces vocal feedback. These two channels are independent of one another. The recognition process, which employs deep learning and machine learning approaches, is examined and evaluated in the sections below.
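The first channel needs no trained model; it can be pictured as a threshold check on the nearest valid reading from the two sensors. The sketch is written in Python here for readability (on the device itself, this logic would live in the Arduino sketch, which is C++). The thresholds `NEAR_CM` and `CAUTION_CM`, the buzzer pattern names, and the convention that a missing or non-positive reading means “no echo” are all assumptions, not values from the paper.

```python
# Hypothetical thresholds in centimetres; the paper does not state values.
NEAR_CM = 50
CAUTION_CM = 120


def buzzer_pattern(ultrasonic_cm, lidar_cm):
    """Channel 1 sketch: choose a buzzer pattern from the nearest valid reading."""
    # Assumption: None or a non-positive value means "no echo / no reading".
    readings = [d for d in (ultrasonic_cm, lidar_cm) if d is not None and d > 0]
    if not readings:
        return "off"
    nearest = min(readings)
    if nearest < NEAR_CM:
        return "continuous"    # obstacle very close
    if nearest < CAUTION_CM:
        return "intermittent"  # obstacle approaching
    return "off"
```

Because the check depends only on the two current readings, it can run on the microcontroller without waiting for any classification result from the smartphone, which is what keeps the buzzer channel fast and independent of the vocal channel.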

Figure 4.

LidSonic V2.0 Overview (Functional).
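The second channel maps a LiDAR sweep to an obstacle class that is then spoken to the user. LidSonic V2.0 uses Weka machine learning and TensorFlow deep learning models for this step; the Python sketch below substitutes a much simpler nearest-centroid classifier purely to show the data flow from sweep to class to spoken text. It assumes fixed-length sweeps of distances, and the functions `train_centroids`, `classify`, and `spoken_message` are hypothetical. The actual text-to-speech call (the Google API in the paper) is omitted.

```python
import math


def train_centroids(scans, labels):
    """Average the fixed-length LiDAR sweeps of each class into one centroid."""
    sums, counts = {}, {}
    for scan, label in zip(scans, labels):
        acc = sums.setdefault(label, [0.0] * len(scan))
        for i, distance in enumerate(scan):
            acc[i] += distance
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(scan, centroids):
    """Return the class whose centroid is nearest (Euclidean) to the sweep."""
    return min(centroids, key=lambda label: math.dist(scan, centroids[label]))


def spoken_message(label):
    """Text handed to a text-to-speech engine, e.g. 'Ascending Stairs'."""
    return label.title()
```

A nearest-centroid model is far weaker than the models evaluated in the paper, but it makes the contract of the channel explicit: a sweep goes in, a class name comes out, and only that short string needs to be voiced.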