Abstract

A brain-computer interface (BCI) translates a user’s thoughts such as motor imagery (MI) into the control of external devices. However, some people, who are defined as BCI illiteracy, cannot control BCI effectively. The main characteristics of BCI illiterate subjects are low classification rates and poor repeatability. To address the problem of MI-BCI illiteracy, we propose a distribution adaptation method based on multi-kernel learning to make the distribution of features between the source domain and target domain become even closer to each other, while the divisibility of categories is maximized. Inspired by the kernel trick, we adopted a multiple-kernel-based extreme learning machine to train the labeled source-domain data to find a new high-dimensional subspace that maximizes data divisibility, and then use multiple-kernel-based maximum mean discrepancy to conduct distribution adaptation to eliminate the difference in feature distribution between domains in the new subspace. In light of the high dimension of features of MI-BCI illiteracy, random forest, which can effectively handle high-dimensional features without additional cross-validation, was employed as a classifier. The proposed method was validated on an open dataset. The experimental results show that that the method we proposed suits MI-BCI illiteracy and can reduce the inter-domain differences, resulting in a reduction in the performance degradation of both cross-subjects and cross-sessions.

1. Introduction

A brain–computer interface (BCI) based on electroencephalography (EEG) enables a user to control external devices by decoding brain activities that reflect the user’s thoughts [1]. For example, a user’s motor imagery (MI) can be translated into external device control by an MI-BCI. Some subjects cannot effectively control BCI equipment, meaning that they achieve a classification accuracy of less than 70%; such subjects are referred to as BCI illiterate [2,3]. Poor repeatability is obvious with MI-BCI, which can elicit SMR underpinned by neurophysiological processes [4,5]. As shown in Figure 1, the power spectral density (PSD) of Subject 46 was quite different in each of the two sessions. However, it is generally assumed that the training samples and test samples followed the same statistical distribution when a BCI system is based on machine learning. Domain adaptation (DA), as it pertains to transfer learning, has proven to be an effective method to handle inter-domain shift [6]. To make the distribution of features between the source domain and target domain become even closer to each other, we need to adapt both the marginal distribution and the conditional distribution. Furthermore, MI-BCI illiterate subjects do not display typical brain events such as event-related desynchronization (ERD) and event-related synchronization (ERS) [7]. Their divisibility of features was low, as is shown in Figure 2. Therefore, when studying the shared model of the source domain and target domain, the maximum divisibility of features and the impact of a low classification rate should be taken into consideration alongside the inter-domain shift.

Figure 1.

The power spectral density (PSD) of Subject 46 performing motor imagery at different times: the charts are the power spectra (blue represents negative, and red represents positive). The EEG signals were provided by an open dataset with BCI illiterate subjects [8] and they were recorded in two different sessions on different days. (a) and (b) are the PSD diagrams of Subject 46 in session 1 and session 2, respectively. The classification accuracies of Subject 46 were 53% and 58% in the two sessions, respectively, so the subject was classified as BCI illiterate.

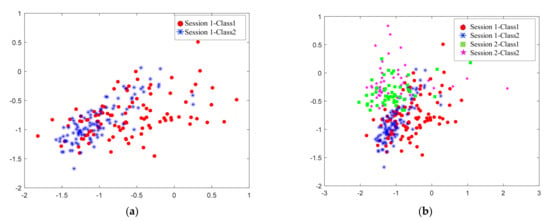

Figure 2.

The feature distribution comparison of Subject 46. The features were extracted by a common spatial pattern (CSP). (a) The distribution of features for session 1; (b) The distribution comparison of features for Sessions 1 and 2.

The goal of feature-based marginal distribution adaptation (MDA) methods is to have a common feature space in which the marginal distribution of the source domain and the target domain are as close as possible. Certain achievements have been made in the adaptation of marginal distribution in many fields including EEG signal processing with these methods. Liu et al. [9] applied transfer component analysis (TCA) in EEG-based cross-subject mental fatigue recognition. Zhang et al. [10] reduced distribution differences through an inter-domain scatter matrix based on cross-subject mental workload classification. Chai et al. [11] proposed the use of subspace alignment (SA) to transform features into a domain-invariant subspace to solve the adaptation problem of EEG-based emotion recognition. He et al. [12] applied correlation alignment (CORAL) to minimize the spatial offset when solving the problem of different set domain adaptation for BCIs. Hua et al. [13] applied geodesic flow kernel (GFK) in EEG-based cross-subject emotion recognition. With the development of transfer learning, new progress has also been made in MDA. Wei et al. [14] applied the linear weighting method to the four frequently adopted DA methods (TCA, manifold alignment, CORAL, and SA) to determine coefficients through repeated iterations using the principle of neighborhood consistency. Ma et al. [15] identified the center particle position between the two domains and aligned the center position of the source domain and the target domain by translation. A common problem of these approaches is that although they reduce the marginal distribution differences between the source domain and the target domain in the new subspace, the data categories remain indistinguishable, as displayed in Figure 3a. Considering the difficulties in classifying the features extracted by the MI-BCI illiterate subjects, the above works were probably not optimal for BCI illiteracy. We need to find a new subspace in which the divisibility of categories is maximized and the difference between domains is minimized, as displayed in Figure 3b.

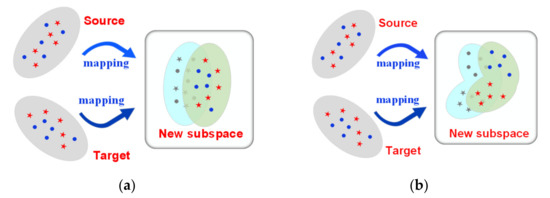

Figure 3.

The distribution adaptation. (a) Purely for marginal distribution adaptation; (b) With the features being mapped into the new space, the inter-class maximal divisibility was achieved and the distribution difference between the source domain and target domain was reduced.

Inspired by the kernel trick that data can be mapped to high-dimensional space to increase data divisibility, the kernel method provides a powerful prediction framework for learning nonlinear prediction models. Therefore, we attempted to map features to the Reproducing Kernel Hilbert Space (RKHS) to find latent features of the subjects, especially BCI illiterate subjects, in this multi-dimensional nonlinear space to improve the class divisibility. Meanwhile, to address the problem that a single kernel has relatively more limitations for wide feature distribution, instead, we applied the linear combination of a series of basic kernels. The combined kernel function could still satisfy the Mercer condition, that is, the function satisfies the symmetry and positive definiteness [16,17]. Multiple kernel based maximum mean discrepancy (MK-MMD) put forward in [18] maps both the source domain and target domain data to multiple-kernel-based RKHS and then minimizes the center distance to reduce the marginal distribution difference. Following this idea, we adopted multiple kernel learning (MKL) combined with MK-MMD to build a DA framework. DA combined with MKL was then addressed using the classifier-based DA method in many studies [19,20,21,22,23]. The method involved adding the objective function that minimized the distance between domains in the mapped feature space to the risk function with a kernel-based classifier and applied a weight parameter λ to balance the data distribution differences between the two domains and structural risk functions. This method demonstrated improved classification and generalization capabilities. However, Chen et al. [24] pointed out that the results of minimizing the risk function with the above methods depend on the parameter λ, which may sometimes sacrifice domain similarity to achieve a high classification accuracy for the source only. Zhang et al. [25] put forward a marginal distribution adaptive framework for kernel-based learning machines. This framework first maps the original features to RKHS to improve the divisibility of categories and then transfers the original data to the target domain through linear operators in the result space to make the processed data become close to the covariance of the target data. Inspired by this method, we proposed a distribution adaptation framework based on multiple kernel. Specifically, we used the source domain data to train the multiple-kernel extreme learning machine (MK-ELM) [26] and found that the multiple-kernel induced RKHS, which can maximize the divisibility of source domain feature categories. We then applied MK-MMD to align the source domain and the target domain under this result space. Features after transformation can retain the information of the original data as much as possible [27,28,29]. Therefore, the proposed method can achieve the maximal divisibility of categories and minimal shift between domains.

It is necessary to retain as much information as possible for MI-BCI illiteracy during feature extraction, so the dimension of features will be relatively high. In light of this point, we applied random forest (RF) as the classifier, which can be used without dimensionality reduction and cross validation. RF has been widely used in the field of BCI and has achieved good results [30,31,32].

Considering MI-BCI illiteracy and referring to the existing technology, we proposed a framework combined distribution adaptation with RF based on multiple kernel learning (MK-DA-RF). We then verified this framework using an open dataset containing BCI illiterate subjects [8]. The main contributions of this study are as follows.

- The source domain data were applied to train kernel-based ELM to find a subspace that could achieve the best classification effect, that is, the separability of features was the best in this new subspace;

- To overcome the limitations of a single kernel, a linear connection framework using multiple basic kernels was proposed;

- MK-MMD was applied to align the distribution of the mapped source and target domain data in this subspace.

2. Methodology

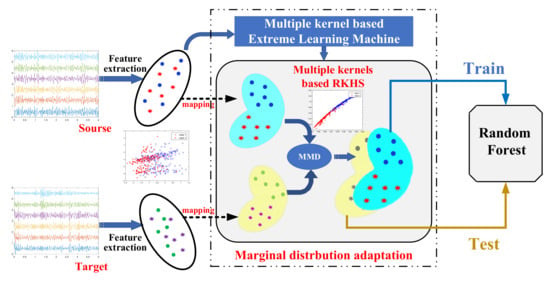

Our proposed distribution adaptation and classification framework based on multi-kernel learning is displayed in Figure 4. The extracted features of the EEG-based BCI system used for the training classifier were defined as source domain features, and the features used for testing were defined as target domain features. The source domain features were used to train the multiple-kernel ELM and the weights were then determined. Next, the kernel function that allows for the maximal inter-class divisibility after the data are mapped to the new RKHS is obtained. Then, the features of the source domain and the target domain were aligned based on MK-MMD under the new RKHS. Then, we applied the adapted training features to train the RF to obtain a suitable classifier.

Figure 4.

The joint distribution adaptation framework.

In this study, is the labeled source domain data, where is the source domain data with D as the data dimension, is the number of data points in the source domain, and is the corresponding label; is defined as the unlabeled target domain data, where is the source domain data with as the number of data points in the source domain without available labels; and Class C is contained in both the source domain and target domain.

2.1. Distribution Alignment Based on Multiple Kernel

The goal of DA is to have equal probability densities of distributions in the new subspace between the source and target domains, in other words, .

2.1.1. Multiple Kernel Expression

To overcome the limitations of a single kernel, a linear connection framework using multiple basic kernels is proposed. The mathematical expression is defined as follows:

where refers to the basic mapping function; is the corresponding kernel function; and is the coefficient.

2.1.2. Multiple-Kernel Extreme Learning Machine

Assume that there are N training samples, in other words, . According to the research by Huang et al. [33], the output of kernel based ELM for binary classification is:

where refers to connection weight, and refers to the feature mapping function.

The learning objective of ELM is to minimize the learning error and weight coefficient, which can be expressed as:

where is the training error and is a parameter set by the user and provides a tradeoff between the output weights and training error.

Based on the Karush–Kuhn–Tucker (KKT) theory and Bartlett’s theory [34], the Lagrangian function can be written as follows:

According to the solution method of the KKT and Mercer’s theorem, we set the derivative of (4) with regard to the parameters, which can be expressed as:

Subsequently, by substituting Equation (5a,b) into Equation (5c), it can thus be inferred that

By substituting Equation (6) in Equation (5a),

Combining (2) with (7), the relationship between the input and output of kernel-based ELM can be expressed as:

where:

On this basis, the single-kernel linear combination is replaced by a multiple-kernel linear combination. The target function of MK-ELM is gained combined with (1) [26]. It can be expressed as:

Herein, q = 2, , and . The Lagrangian function is:

According to the KKT theory, it can be concluded that:

By taking the derivative of (10) with respect to , we obtain:

Combining (11) with , we obtain:

By gradual iteration coefficient, is updated and the optimal coefficient is obtained.

2.1.3. Multiple Kernel Maximum Mean Discrepancy

It is assumed that ; however, there is a mapping so that . The MMD put forward in the research by Pan et al. [27] is often used as an indicator for calculating the distribution distance in the RKHS:

In combination with (1), the multiple basic mapping functions can be regarded as one mapping function after linear combination (i.e., the mapping function is defined as ) and a single kernel based MMD can form MK-MMD in this way.

If , then the kernel mapping is and the kernel matrix is . According to the Kernel PCA theory [27,35], the transformed features can be expressed as , where W is the kernel-PCA transformation matrix. By definition, this vector can retain the maximal mapped feature space information, and (14) is then written as:

where

The maximal mean difference is to be minimized in infinite-dimensional RKHS space. Combining kernel-based PCA, the problem for domain adaptation then reduces to:

where is a regular term that controls the model complexity; is a trade-off parameter; is the identity matrix; is the centering matrix, where is the column vector with all ones; and is the identity matrix. Defining A as a symmetric matrix, the Lagrangian of (16) is

Setting the derivative of (17) with regard to to zero, we have

Take the former m eigenvector of as W. The transformed feature is then expressed as:

The process of marginal distribution adaption is presented in Algorithm 1.

| Algorithm 1. Marginal Distribution Adaptation: |

| 1: Input: labeled source samples and unlabeled target samples ; several basic kernel functionsq, and C 2: Output:, 3: Initialize: and 4: repeat 5: Compute by solving (1) 6: Update by solving (11) 7: Update by (13) 8: until } 9: Compute with the obtained by solving (1) 10: Compute the eigenvector of 11: Take the former m eigenvector as W 12: Compute and with (19). |

2.2. Random Forest

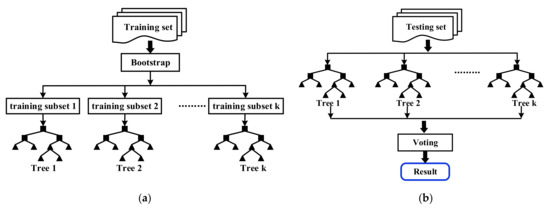

Random forest [36] is a type of ensemble learning, which is to combine several base classifiers to obtain a strong classifier with significantly superior classification performance. The principle of random forest is to obtain the final classification result by voting. The generation process of random forest is shown in Figure 5.

Figure 5.

The generation process of random forest. (a) The generation of the forest. (b) The implementation of decisions.

Suppose that there is a training set consisting of samples (i.e., ) and the corresponding feature vector with dimensions (i.e., ). We applied a random forest with decision trees, and the training steps are as follows:

- Resample randomly from the training set based on bootstrap to form a training subset ;

- Randomly extract features from of without replacement ( is set in this paper) to generate a complete decision tree without pruning;

- Repeat the above two steps times to generate decision trees, and then combine all of the decision trees to form a random forest;

- Take the test sample as the input of the random forest, and then vote on the result of each decision tree based on majority voting algorithm to obtain the classification result.

3. Results

We validated our method by an open-access dataset, namely the BMI dataset (http://gigadb.org/dataset/view/id/100542/ (accessed on 16 May 2021)). The research was provided by Lee et al. [8].

3.1. Experiment Materials and Preprocessing

The BCI system analyzed was based on Brain Amp, which utilizes 62 Ag/AgCI electrodes [8]. The EEG signals were collected at a frequency of 1000 Hz, and electrodes were placed in accordance with the international 10/20 system standard. Fifty-four subjects participated in this experiment, and none had a history of mental illness or psychoactive drugs that would affect the results of the study.

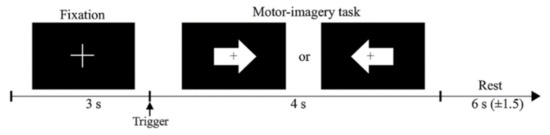

MI-BCI was tested with a dichotomous experiment in which subjects imagine their left and right hands in accordance with the directions of arrows, as shown in Figure 6. The EEG signals were recorded in two different sessions on different days. For all blocks of a session, black fixation was displayed on the screen for 3 s before each trial task began. The subjects then imagined they were performing the hand-grabbing action (grasping) in the direction specified by the visual cue. After the task, the screen display was blank for 6 s to allow the subjects to rest. There were 200 trials in one experiment per subject, half on the left and half on the right.

Figure 6.

The experimental design for binary class MI. The experimental description was provided by Lee et al. [8].

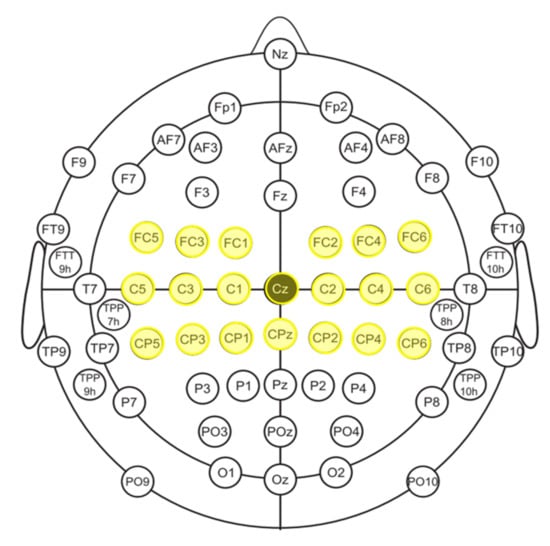

To retain the EEG information of the subjects as much as possible, as shown in Figure 7, 20-channel EEG data of the motor cortex region were selected: {FC5, FC3, FC1, FC2, FC4, FC6, C5, C3, C1, Cz, C2, C4, C6, CP5, CP3, CP1, CPz, CP2, CP4, CP6}. The EEG signals were downsampled to 100 Hz, and the 5th order Butterworth digital filter was utilized to obtain 8–30 Hz signals. Then, the range of 500–3500 s after the task started was selected. A common spatial pattern (CSP) was applied to maximize the difference between the two types of tasks. The first five dimensions of the feature vector were selected, and after that, the log-variance feature was calculated. Therefore, the CSP feature of a single trial was 1 × 10.

Figure 7.

The electrode position.

3.2. Model Generation

We verified the effect of the proposed domain adaption framework that combined MK-ELM and MK-MMD (denoted as MK-DA) on the aforementioned open dataset. First, the following three base kernel functions were chosen to form multiple-kernel ELM:

- Polynomial kernel function

- Gaussian kernel function

- Translation-invariant of wavelet kernel function

The relaxation coefficient C of the classifier was selected from The optimal parameters and of the poly-kernel function were selected from , and , respectively. The optimal parameter of the Gaussian kernel function was determined from . The optimal parameters , , and of the wavelet kernel function were searched from , respectively.

Then, random forest was used as the classifier. The number of decision trees was selected from .

3.3. Experimental Results

3.3.1. Methods for Comparison

This study primarily addressed the domain shift problem of BCI illiteracy by applying an open dataset containing BCI illiterate subjects [8] for validation. The classic method in which CSP was applied to extract features and linear discriminant analysis (LDA) was applied to classify, which was used as the reference framework.

The performance of the proposed DA method was compared with the performance of the DA methods that are widely used and known to achieve good results. At the same time, RF was employed as the classifier, which was the proposed method in this paper. To ensure fairness in the comparison, we gave the same parameter to all parts using the same operation, and the other parameters were given the optimal value according to the suggestions in the literature. The comparison DA methods were as follows:

- SA: We set the parameters referring to the research by Xiao et al. [37]. Considering the poor classification effect of BCI illiteracy, we set the subspace dimension of principal component analysis (PCA) to all to avoid information loss;

- GFK: We referred to the research by Wei et al. [38]. We determined the optimal dimension of the subspace by adopting the subspace disagreement measure (SDM) after the source domain and target domain data were determined;

- CORAL: Referring to the research by He et al. [12], we conducted a distributed computation on the feature covariance matrix of each domain and then minimized the distance between the covariance matrices of different domains;

- TCA: We referred to the research by Jayaram et al. [39]. In this experiment, when carrying out a multiple-kernel linear combination, the weight of the Gaussian kernel was generally the largest. Therefore, we chose the Gaussian kernel function and set its parameters to be the same as those of the Gaussian kernel function in MK-ELM;

- MKL: Referring to the research by Sun et al. [19] and Dai et al. [20], we combined Gaussian-kernel-based support vector machine (SVM) with MKL and applied the classifier-based DA method to optimize the target function of SVM, while minimizing the inter-domain offset based on MKL. MKL uses the three kernels above-mentioned and applied the second-order Newton method recommended by Sun et al. [18] to obtain the combination coefficients. The balance parameter was λ = 0.5. Note that the combined coefficients obtained by this method can be different from those obtained by the method proposed in this paper.

Then, the performance of the proposed classifier was compared with the performance of classifiers that are widely used in MI-BCI. The comparison classifiers were as follows:

- LDA: The reference method proposed by Lee et al. [8].

- SVM: We referred to the research by Lotte et al. [40]. We chose the Gaussian kernel function and set its parameters to be the same as those of the Gaussian kernel function in MK-ELM;

- KNN: We referred to the research by Lotte et al. [40]. We set the number .

- EEGnet: We referred to the research by Lawhern et al. [41]. We set the number of channels as 20.

- FBCNet: We referred to the research by Mane et al. [42]. We set C as 20.

3.3.2. Performance of the Domain Adaption and Classification Framework

We set the threshold value as 0.05, so p ≤ 0.05 indicates the statistical significance. During the experiment, according to the classification results obtained from the literature and the definition of BCI illiteracy, the subjects were divided into the following two groups:

- BCI (the classification result was greater than 70% in both sessions), denoted as BNI;

- BCI illiteracy (the classification result was less than 70% in both sessions), denoted as BI.

We performed experiments from two perspectives (i.e., cross-subject experiment and cross-session experiment). For preciseness, the Kruskal-Wallis test was adopted to display the statistical significance of the differences between methods.

- 1.

- Results of Cross-Subject Experiments

To ensure the simplicity of the comparison factors, both the source and target domains in this part were of the same session. Based on NBI and BI grouping, we randomly selected one subject as the source domain and another subject in the same session as the target domain in the following two ways. The first method was random sampling limited to NBI, and the second method was random sampling limited to BI. We used the proposed method DA and the control method to align the marginal distribution and employed RF as the classifier. Then, we applied different classifiers to the features adapted by MK-DA for the classification and comparison. The experiment was repeated 30 times, and the average accuracy was taken as the result. The results including the average classification accuracies (mean), standard deviation (Std), and confidence interval under 95% signification level (CI) are shown in Table 1 and Table 2.

Table 1.

A comparison of the different distribution adaptation methods for the source and target data from different subjects.

Table 2.

A comparison of the different classifiers in the cross-subject experiments.

- 2.

- Results of the Cross-Session Experiments

The experiments of the two sessions of each subject were taken as the source domain and the target domain, respectively. The results were divided into the BI group and the NBI group, and the average value of each group was taken as the result of that group. Then, the data of the source domain and the target domain were switched. The experimental verification was conducted in the same way as the cross-subject experiments, and the results including the average classification accuracies (mean), standard deviation (Std), and confidence interval under 95% signification level (CI) are shown in Table 3 and Table 4.

Table 3.

A comparison of the different distribution adaptation methods for the source and target data from different sessions.

Table 4.

A comparison of the different classifiers in the cross-session experiments.

4. Discussion

Based on the above experimental results, the rationality of the proposed method is discussed from two perspectives: cross-subject experiment and cross-session experiment.

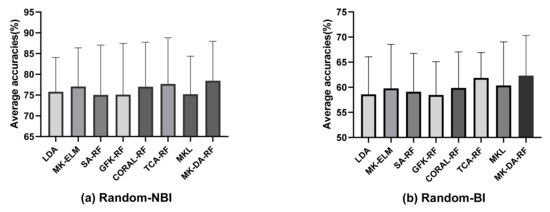

- Cross-Subject Experiments

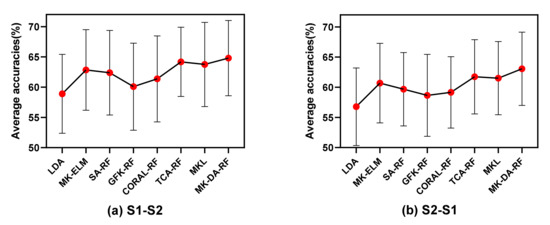

We randomly selected one subject as the subject of the training field and another subject in the same session as the target domain based on two methods, namely, the first subject was selected from those whose target domain was specified as the NBI group, and the second subject was selected from those whose target domain was specified as the BI group. When applying the proposed MK-ELM for classification, compared with LDA as the reference method, the average classification accuracies were improved, among which the biggest gain was 1.26% and 1.18%, respectively, which proved that the MK-ELM adopted in this paper increased the data divisibility in the new RKHS. Then, the competitive marginal distribution method was applied to the feature distribution adaptation. As can be seen from Figure 8, the classification accuracies of MK-DA-RF improved by 2.65% and 3.72%, respectively, compared with LDA as the reference method and by 0.78% and 0.46% compared with TCA-RF, which was the best-performing control method.

Figure 8.

The results of the different distribution adaptation methods in the cross-subject experiments.

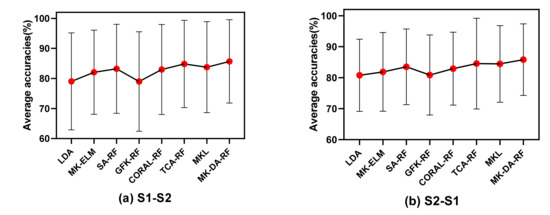

- Cross-Session Experiments

The two sessions of the same subject were chosen as the source domain and target domain, respectively. From the results of the average classification accuracy of all subjects, the classification accuracies of the proposed MK-DA-RF improved by 6.24% and 5.74%, respectively, compared with LDA as the reference method and by 0.72% and 1.31% compared with TCA-RF, which was the best-performing control method. Then, we averaged the subjects according to the NBI and BI groups. The results of group NBI and group BI are displayed in Figure 9 and Figure 10, respectively. It can be seen that the classification accuracy of MK-ELM gained an average increase of 3.93% in the two tasks compared with the reference method for the BI group, but an average increase of 2.06% for the NBI group. Since the subjects in the BI group could not effectively control the BCI, the extracted features were difficult to distinguish. The divisibility was increased after features were mapped to multiple-kernel-based RHKS by MK-ELM, which was consistent with the experimental phenomenon from the results. Then, under the adjustment of DA, the combined method of MK-ELM and MK-MMD proposed in this study significantly improved the classification accuracy. In particular, in the BI group, the average classification accuracies of MK-DA-RF were 5.9% and 6.3% higher than those of the reference method and 0.62% and 1.34% higher than those of TCA-RF, which was the best-performing control method.

Figure 9.

The results of the different distribution adaptation methods for the NBI group.

Figure 10.

The results of the different distribution adaptation methods for the BI group.

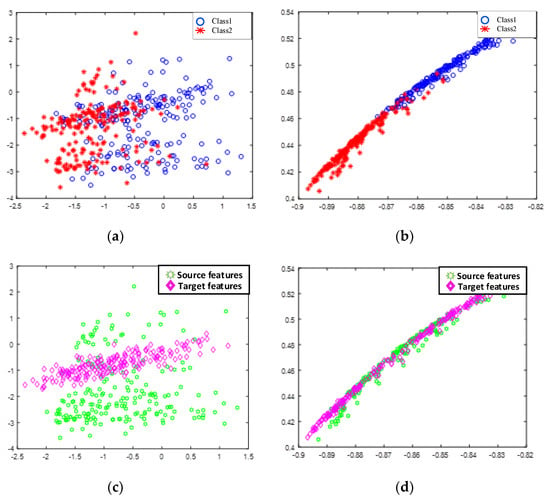

The feature distribution of the same subject before and after MDA was observed, and the first two dimensions of the feature value were taken as the X-axis and Y-axis, respectively, to check the feature distribution. Figure 11 shows the original feature distribution of Subject 19 and the feature distribution obtained with the method proposed in this study. Specifically, Figure 11a,b is the feature space distribution of class 1 (left-hand motor imagery) and class 2 (right-hand motor imagery), respectively. It can be seen that the class divisibility of the feature distribution was increased with MK-DA. Figure 11c,d refers to the feature distribution before and after MK-DA was employed. It can be seen that the feature space of the source domain and the target domain were further closer by the method proposed in this paper.

Figure 11.

The feature space distribution of Subject 22. With the reference method, Subject 22 had a classification accuracy of 68% from session 1 to session 2. With the improved method, the classification accuracy was 83%. (a) The original feature space distribution of class 1 (left-hand imagery) and class 2 (right-hand imagery) in session 1; (b) The feature space distribution of class 1 and class 2 obtained after marginal distribution adaptation session 1; (c) The original feature space distribution in session 1 (source domain) and session 2 (target domain) for all categories; (d) The feature space distribution obtained after marginal distribution adaptation in session 1 (source domain) and session 2 (target domain) for all categories.

- Performance of Random Forest

In this section, we analyzed the performance of different classifiers separately in two experiments. The performance evaluation metrics were calculated referring to the research by Giannakakis et al. [43].

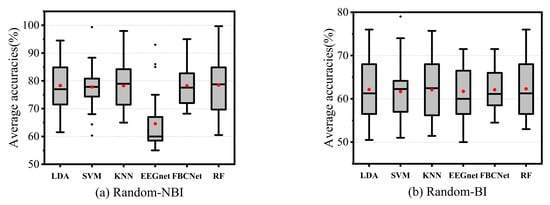

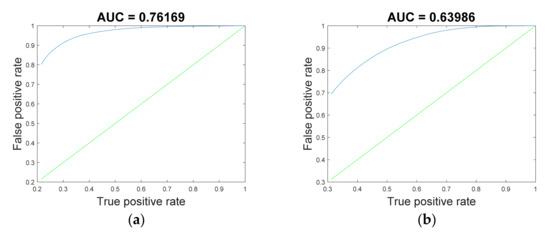

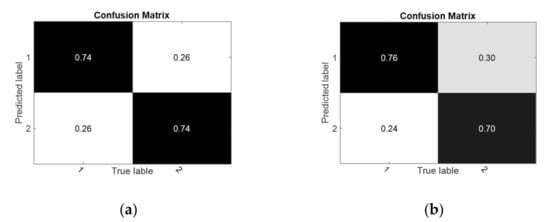

In the cross-subject experiment, as shown in Figure 12, RF achieved relatively better results in all experiments. The classification accuracies of the proposed RF improved by 0.17% and 0.17%, respectively, compared with LDA, which was the best-performing control method. The results of the performance evaluation metrics are shown in Table 5. The confusion matrices and receiver operating characteristic curves are shown in Figure 13 and Figure 14.

Figure 12.

The results of different classifiers in the cross-subject experiment.

Table 5.

The performance matrices of RF in the cross-subject experiments.

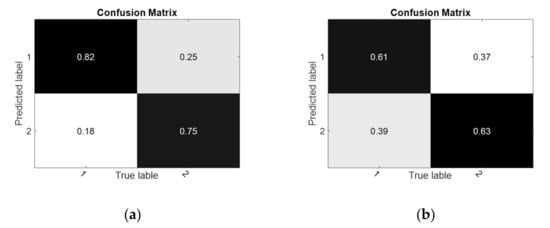

Figure 13.

The confusion matrices of RF in the cross-subject experiment. (a) Random-NBI; (b) Random-BI.

Figure 14.

Receiver operating characteristic curve of RF in the cross-subject experiment. (a) Random-NBI; (b) Random-BI.

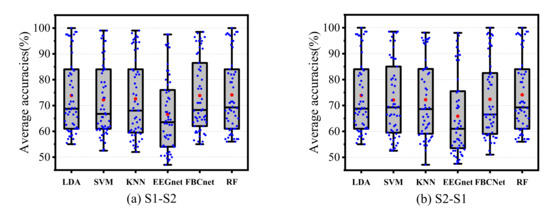

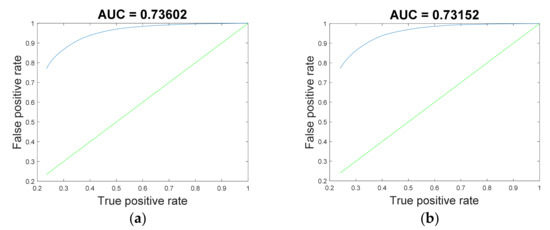

In the cross-session experiment, the results of all subjects are displayed in Figure 15. It can be seen that the classification accuracy of RF gained an average increase of 0.24% and 0.58%, respectively, in the two tasks compared with the control method with the best performance. The results of the performance evaluation metrics are shown in Table 6. The confusion matrices and receiver operating characteristic curve are shown in Figure 16 and Figure 17.

Figure 15.

The results of the different classifiers in the cross-session experiment.

Table 6.

The performance matrices of RF in the cross-session experiments.

Figure 16.

The confusion matrices of RF in the cross-session experiment. (a) S1-S2; (b) S2-S1.

Figure 17.

The receiver operating characteristic curve of RF in the cross-session experiment. (a) S1-S2; (b) S2-S1.

In particular, in all experiments, the performance of EEGnet was worse than that of the LDA without domain adaptation, so we believe that it is related to the fact that 20 channels were used, but the training data were so small that the model overfitted.

- Computational Complexity

Let and denote the number of training samples and testing samples, respectively, and each sample . Suppose we grow trees for RF. The computational complexity of each step is shown in Table 7, which is based on Liu et al. [26], Pan et al. [27], and Biau [36], where is the maximum number of iterations and is the number of base kernels. Therefore, the computational complexity of MK-DA is . Then, the computational complexity of the proposed framework is .

Table 7.

The computational complexity of each step.

- Limitations

However, there are also problems with the proposed method. First, after the features were adapted, the classification accuracies applying RF in both types of experiments were higher than the LDA after domain adaption, that is, the average classification accuracy of RF was 0.17% higher than that of LDA in the cross-subject experiment, and was 0.41% higher than that of the LDA in the cross-session experiment. However, the computational complexity of RF was significantly higher than that of the LDA. Therefore, it is necessary to combine classification accuracy with the computational complexity in selecting the suitable classifier. Second, parameters involved in the proposed framework (i.e., the relaxation coefficient C of ELM, the initial parameters of the kernels) were all selected from among a limited number of values, but the choice of the parameters would affect the classification results. Therefore, optimization methods will be suggested to solve this problem in our subsequent studies.

5. Conclusions

The method proposed in this paper aimed to address the inter-domain differences of EEG-based motor imagery BCI, especially for BCI illiteracy. It was found that BCI illiterate subjects could not effectively control the BCI due to two major problems: difficulties in classifying and poor repeatability. We proposed a domain adaption method that combines MK-ELM and MK-MMD. To demonstrate the effectiveness of the method, we performed experiments from two perspectives (i.e., cross-subject experiment and cross-session experiment). The MK-ELM achieved relatively better results than the LDA in all experiments. Meanwhile, it can be seen from the results of MK-DA that the MK-DA with each classifier achieved relatively better results in all combination forms. The average accuracies of all experiments of MK-DA combined with LDA was 3% higher than that of LDA in the cross-subject experiments, and was 5.6% higher in the cross-session experiments. Therefore, the divisibility was increased after the features were mapped to multiple-kernel-based RHKS by MK-ELM, and the domain shift decreased by MK-MMD, which was consistent with the experimental phenomenon from the results. At the same time, RF that could effectively handle high-dimensional features was employed as a classifier. It can be seen from the results of MK-DA-RF in the cross-subject experiments that the average classification accuracy of all the experiments could reach 70.4%, which was 2.7% higher than that of the reference method “CSP + LDA” and 0.3% higher than that of the best-performing control method. In the cross-session experiments, the average classification accuracy of the proposed method for all experiments could reach 73.6%, 6.1% higher than that of the reference method, and 0.4% more than that of the best-performing control method. Particularly for the BCI illiterate subjects, the average classification accuracy of all the experiments with target subjects showed that BCI illiteracy could reach 63.4% with the proposed method, which was 5.3% higher than the reference method without domain adaption. Therefore, the method proposed in this paper could achieve a significant effect in the BCI illiteracy group.

Author Contributions

Conceptualization, L.T. and D.L.; Methodology, L.T.; Software, L.T. and T.C.; Validation, L.T., T.C. and D.L.; Formal analysis, L.T.; Investigation, L.T.; Resources, Q.W.; Data curation, L.T.; Writing—original draft preparation, L.T.; Writing—review and editing, J.S.; Visualization, L.T.; Supervision, J.S. and D.L.; Project administration, J.S. and D.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number 61471140); the Fundamental Research Funds for the Central Universities (grant number IR2021222), the Sci-tech Innovation Foundation of Harbin (grant number 2016RALGJ001), and the China Scholarship Council and the Future Science and Technology Innovation Team Project of HIT (grant number 216506).

Data Availability Statement

We validated our method by an open-access dataset, namely, the BMI dataset (http://gigadb.org/dataset/view/id/100542/ (accessed on 16 May 2021)). Thus, our study did not involve any self-recorded datasets from human participants or animals. The authors declare that they have no conflicts of interest.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Pasqualotto, E.; Federici, S.; Belardinelli, M.O. Toward functioning and usable brain-computer interfaces (BCIs): A literature review. Disabil. Rehabil. Assist. Technol. 2012, 7, 89–103. [Google Scholar] [CrossRef] [PubMed]

- Blankertz, B.; Vidaurre, C. Towards a cure for BCI illiteracy: Machine learning based co-adaptive learning. BMC Neurosci. 2009, 10, P85. [Google Scholar] [CrossRef]

- Blankertz, B.; Sannelli, C.; Halder, S.; Hammer, E.M.; Kübler, A.; Müller, K.R.; Curio, G.; Dickhaus, T. Neurophysiological predictor of SMR-based BCI performance. Neuroimage 2010, 51, 1303–1309. [Google Scholar] [CrossRef] [PubMed]

- Saha, S.; Baumert, M. Intra- and Inter-subject Variability in EEG-Based Sensorimotor Brain Computer Interface: A Review. Front. Comput. Neurosci. 2020, 13, 87. [Google Scholar] [CrossRef] [PubMed]

- Kragel, P.A.; Knodt, A.R.; Hariri, A.R.; Labar, K.S. Decoding Spontaneous Emotional States in the Human Brain. PLoS Biol. 2016, 14, e2000106. [Google Scholar] [CrossRef]

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D.D. A Survey of Transfer Learning; Springer International Publishing: Berlin/Heidelberg, Germany, 2016. [Google Scholar] [CrossRef]

- Shu, X.; Yao, L.; Sheng, X.; Zhang, D.; Zhu, X. Enhanced motor imagery-based BCI performance via tactile stimulation on unilateral hand. Front. Hum. Neurosci. 2017, 11, 585. [Google Scholar] [CrossRef]

- Lee, M.H.; Kwon, O.Y.; Kim, Y.J.; Kim, H.K.; Lee, Y.E.; Williamson, J.; Fazli, S.; Lee, S.W. EEG dataset and OpenBMI toolbox for three BCI paradigms: An investigation into BCI illiteracy. Gigascience 2019, 8, giz002. [Google Scholar] [CrossRef]

- Liu, Y.; Lan, Z.; Cui, J.; Sourina, O.; Muller-Wittig, W. EEG-Based cross-subject mental fatigue recognition. In Proceedings of the 2019 International Conference on Cyberworlds (CW), Kyoto, Japan, 2–4 October 2019; pp. 247–252. [Google Scholar] [CrossRef]

- Zhang, J.; Wang, Y.; Li, S. Cross-subject mental workload classification using kernel spectral regression and transfer learning techniques. Cogn. Technol. Work 2017, 19, 587–605. [Google Scholar] [CrossRef]

- Chai, X.; Wang, Q.; Zhao, Y.; Li, Y.; Liu, D.; Liu, X.; Bai, O. A fast, efficient domain adaptation technique for cross-domain electroencephalography(EEG)-based emotion recognition. Sensors 2017, 17, 1014. [Google Scholar] [CrossRef]

- He, H.; Wu, D. Different Set Domain Adaptation for Brain-Computer Interfaces: A Label Alignment Approach. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1091–1108. [Google Scholar] [CrossRef]

- Hua, Y.; Zhong, X.; Zhang, B.; Yin, Z.; Zhang, J. Manifold feature fusion with dynamical feature selection for cross-subject emotion recognition. Brain Sci. 2021, 11, 1392. [Google Scholar] [CrossRef] [PubMed]

- Wei, H.; Ma, L.; Liu, Y.; Du, Q. Combining Multiple Classifiers for Domain Adaptation of Remote Sensing Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 1832–1847. [Google Scholar] [CrossRef]

- Ma, L.; Crawford, M.M.; Zhu, L.; Liu, Y. Centroid and Covariance Alignment-Based Domain Adaptation for Unsupervised Classification of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2305–2323. [Google Scholar] [CrossRef]

- Liu, X.; Wang, L.; Zhu, X.; Li, M.; Zhu, E.; Liu, T.; Liu, L.; Dou, Y.; Yin, J. Multiple Kernel Learning Algorithms. J. Mach. Learn. Res. 2011, 42, 1303–1316. [Google Scholar] [CrossRef]

- Bucak, S.S.; Jin, R.; Jain, A.K. Multiple kernel learning for visual object recognition: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1354–1369. [Google Scholar] [CrossRef] [PubMed]

- Long, M.; Cao, Y.; Wang, J.; Jordan, M.I. Learning transferable features with deep adaptation networks. In Proceedings of the 32nd International Conference on Machine Learning, ICML, Lille, France, 7–9 July 2015; Volume 1, pp. 97–105. [Google Scholar]

- Sun, Z.; Wang, C.; Wang, H.; Li, J. Learn multiple-kernel SVMs for domain adaptation in hyperspectral data. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1224–1228. [Google Scholar] [CrossRef]

- Dai, M.; Wang, S.; Zheng, D.; Na, R.; Zhang, S. Domain Transfer Multiple Kernel Boosting for Classification of EEG Motor Imagery Signals. IEEE Access 2019, 7, 49951–49960. [Google Scholar] [CrossRef]

- Deng, C.; Liu, X.; Li, C.; Tao, D. Active multi-kernel domain adaptation for hyperspectral image classification. Pattern Recognit. 2018, 77, 306–315. [Google Scholar] [CrossRef] [Green Version]

- Zheng, Y.; Wang, X.; Zhang, G.; Xiao, B.; Xiao, F.; Zhang, J. Multi-Kernel Coupled Projections for Domain Adaptive Dictionary Learning. IEEE Trans. Multimed. 2019, 21, 2292–2304. [Google Scholar] [CrossRef]

- Wang, W.; Wang, H.; Zhang, Z.; Zhang, C.; Gao, Y. Semi-supervised domain adaptation via Fredholm integral based kernel methods. Pattern Recognit. 2019, 85, 185–197. [Google Scholar] [CrossRef]

- Chen, X.; Lengelĺe, Ŕ. Domain adaptation transfer learning by SVM subject to a maximum-mean-discrepancy-like constraint. In Proceedings of the ICPRAM 2017—6th International Conference on Pattern Recognition Applications and Methods, Porto, Portugal, 24–26 February 2017; pp. 89–95. [Google Scholar] [CrossRef]

- Zhang, Z.; Wang, M.; Huang, Y.; Nehorai, A. Aligning Infinite-Dimensional Covariance Matrices in Reproducing Kernel Hilbert Spaces for Domain Adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3437–3445. [Google Scholar] [CrossRef]

- Liu, X.; Wang, L.; Huang, G.; Zhang, J.; Yin, J. Multiple kernel extreme learning machine. Neurocomputing 2015, 149, 253–264. [Google Scholar] [CrossRef]

- Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 2011, 22, 199–210. [Google Scholar] [CrossRef]

- Sch, B. Nonlinear Component Analysis as a Kernel Eigenvalue Problem. Neural Comput. 1998, 1319, 1299–1319. [Google Scholar]

- Long, M.; Wang, J.; Ding, G.; Sun, J.; Yu, P.S. Transfer feature learning with joint distribution adaptation. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2200–2207. [Google Scholar] [CrossRef]

- Kaeseler, R.L.; Johansson, T.W.; Struijk, L.N.S.A.; Jochumsen, M. Feature and Classification Analysis for Detection and Classification of Tongue Movements from Single-Trial Pre-Movement EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 678–687. [Google Scholar] [CrossRef]

- Torres, E.P.; Torres, E.A.; Hernández-Álvarez, M.; Yoo, S.G. Emotion Recognition Related to Stock Trading Using Machine Learning Algorithms with Feature Selection. IEEE Access 2020, 8, 199719–199732. [Google Scholar] [CrossRef]

- Bentlemsan, M.; Zemouri, E.T.; Bouchaffra, D.; Yahya-Zoubir, B.; Ferroudji, K. Random forest and filter bank common spatial patterns for EEG-based motor imagery classification. In Proceedings of the International Conference on Intelligent Systems, Modelling and Simulation, ISMS, Kuala Lumpur, Malaysia, 9–12 February 2015; pp. 235–238. [Google Scholar] [CrossRef]

- Huang, G.B.; Zhou, H.; Ding, X.; Zhang, R. Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2012, 42, 513–529. [Google Scholar] [CrossRef] [PubMed]

- Huang, G.B.; Ding, X.; Zhou, H. Optimization method based extreme learning machine for classification. Neurocomputing 2010, 74, 155–163. [Google Scholar] [CrossRef]

- Lee, J.M.; Yoo, C.K.; Choi, S.W.; Vanrolleghem, P.A.; Lee, I.B. Nonlinear process monitoring using kernel principal component analysis. Chem. Eng. Sci. 2004, 59, 223–234. [Google Scholar] [CrossRef]

- Biau, G. Analysis of a random forests model. J. Mach. Learn. Res. 2012, 13, 1063–1095. [Google Scholar]

- Xiao, T.; Liu, P.; Zhao, W.; Tang, X. Iterative landmark selection and subspace alignment for unsupervised domain adaptation. J. Electron. Imaging 2018, 27, 1. [Google Scholar] [CrossRef]

- Wei, J. Learning Discriminative Geodesic Flowkernel For Unsupervised Domain Adaption. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018. [Google Scholar]

- Jayaram, V.; Alamgir, M.; Altun, Y.; Grosse-wentrup, M. Transfer Learning in Brain-Computer Interfaces. IEEE Comput. Intell. Mag. 2016, 11, 20–31. [Google Scholar] [CrossRef]

- Lotte, F.; Congedo, M.; Lécuyer, A.; Lamarche, F.; Arnaldi, B. A review of classification algorithms for EEG-based brain-computer interfaces. J. Neural Eng. 2007, 4, R1. [Google Scholar] [CrossRef] [PubMed]

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain-computer interfaces. J. Neural Eng. 2018, 15, 056013. [Google Scholar] [CrossRef] [PubMed]

- Mane, R.; Chew, E.; Chua, K.; Ang, K.K.; Robinson, N.; Vinod, A.P.; Lee, S.-W.; Guan, C. FBCNet: A Multi-view Convolutional Neural Network for Brain-Computer Interface. arXiv 2021, arXiv:2104.01233. [Google Scholar]

- Giannakakis, G.; Trivizakis, E.; Tsiknakis, M.; Marias, K. A novel multi-kernel 1D convolutional neural network for stress recognition from ECG. In Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos, ACIIW, Cambridge, UK, 3–6 September 2019; pp. 273–276. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).