Evaluation of the Azure Kinect and Its Comparison to Kinect V1 and Kinect V2

Abstract

1. Introduction

- Warm-up time (the effect of device temperature on its precision)

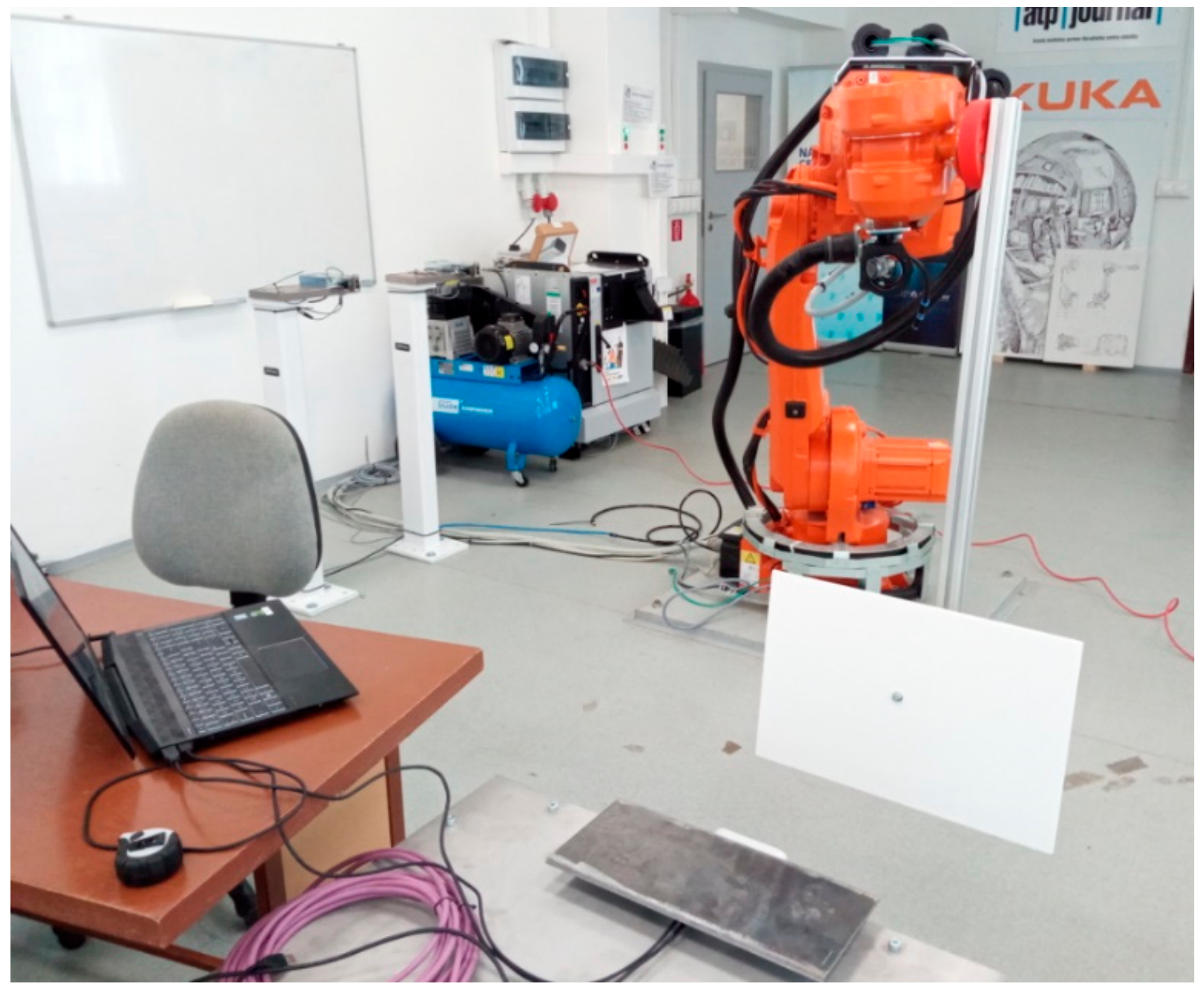

- Accuracy

- Precision

- Color and material effect on sensor performance

- Precision variability analysis

- Performance in an outdoor environment

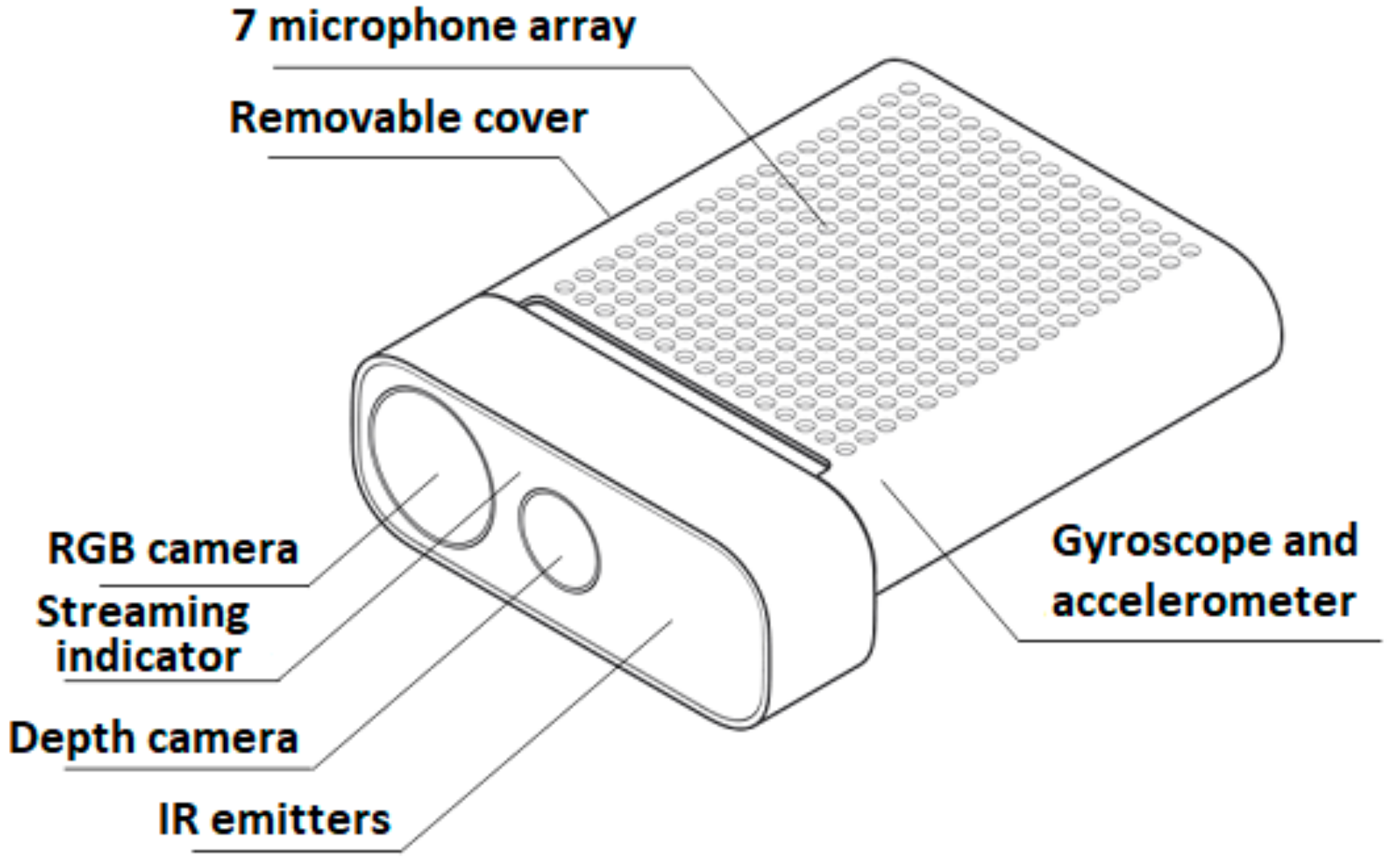

2. Kinects’ Specifications

3. Comparison of All Kinect Versions

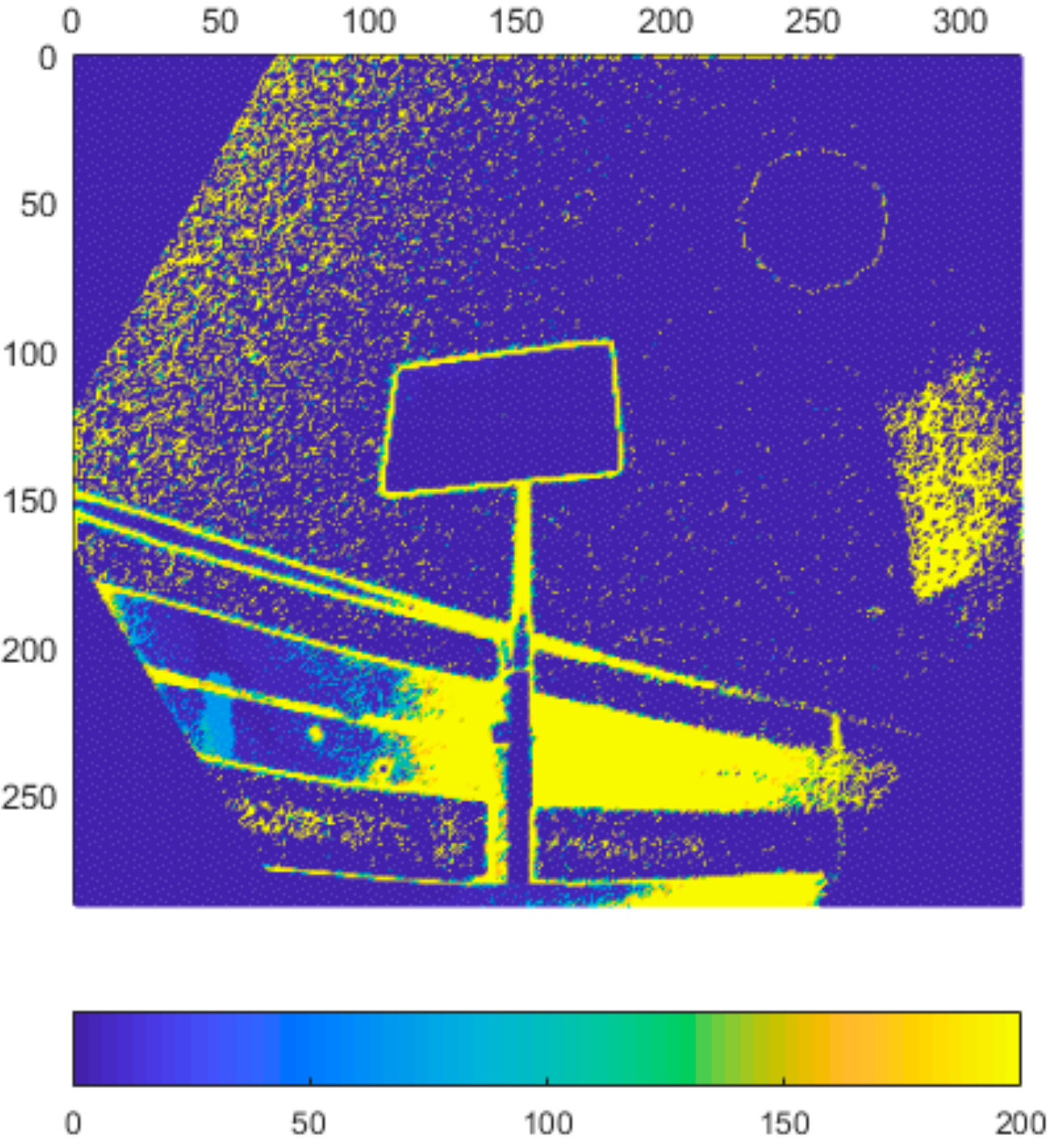

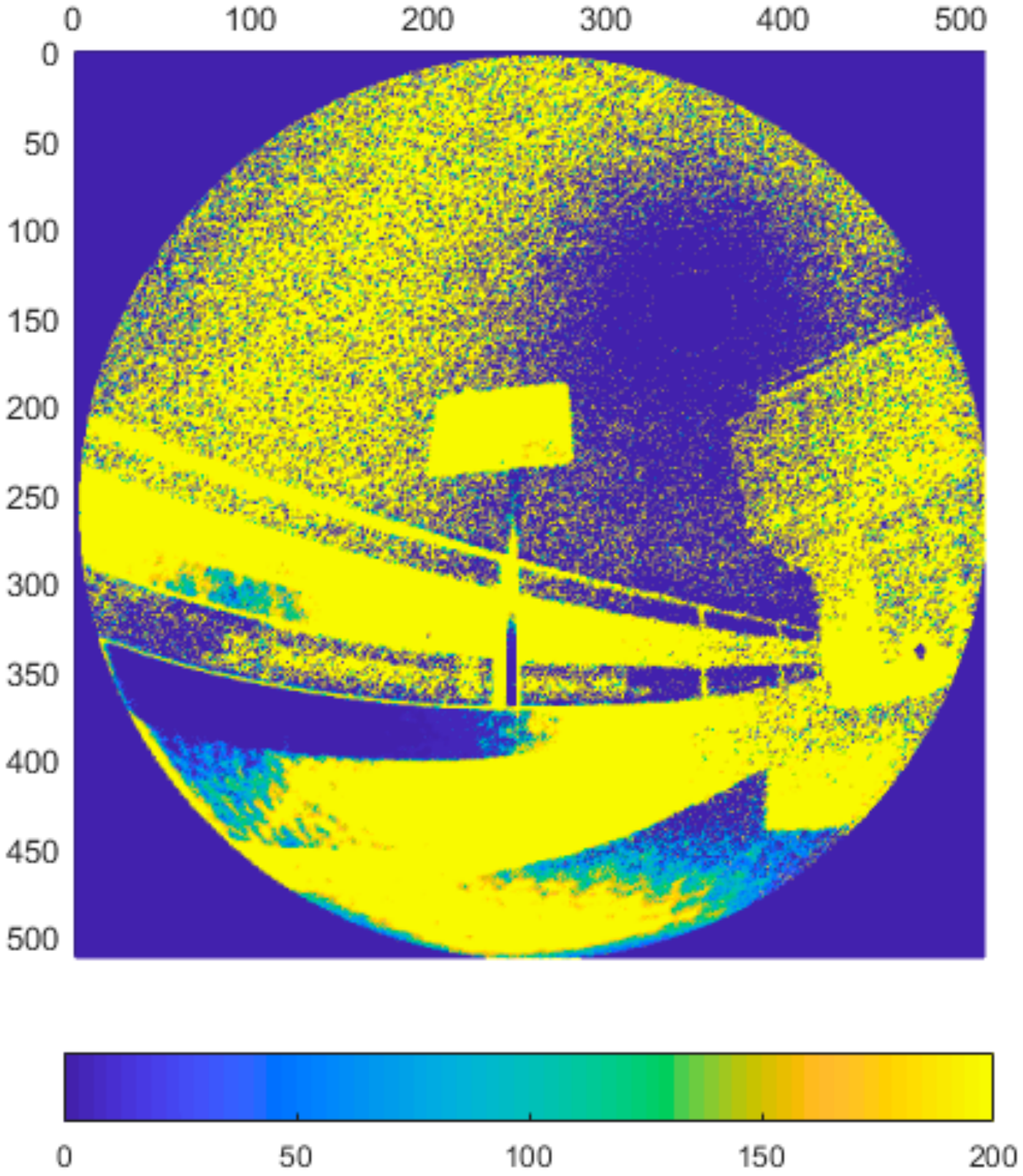

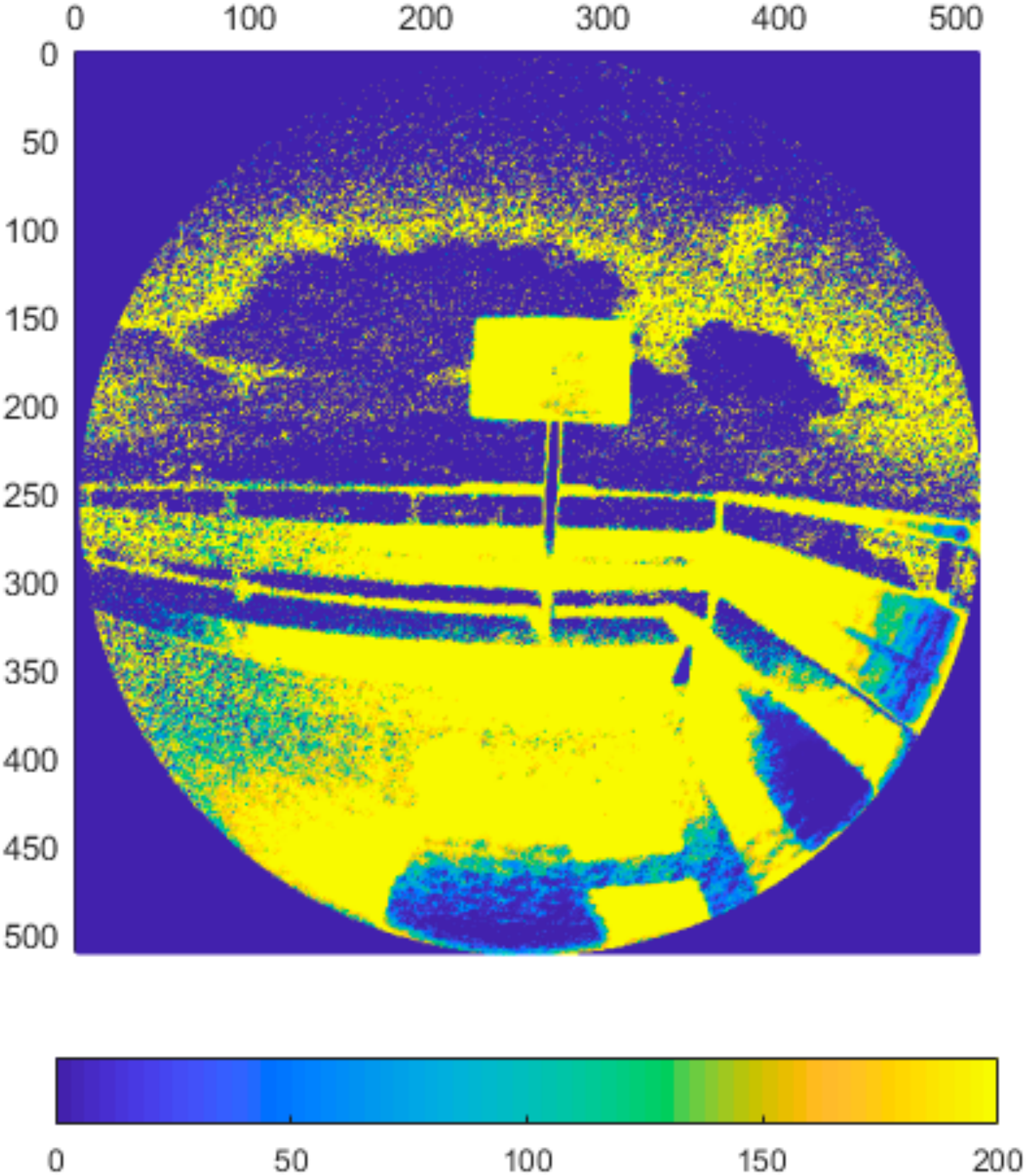

3.1. Typical Sensor Data

3.2. Experiment No. 1–Noise

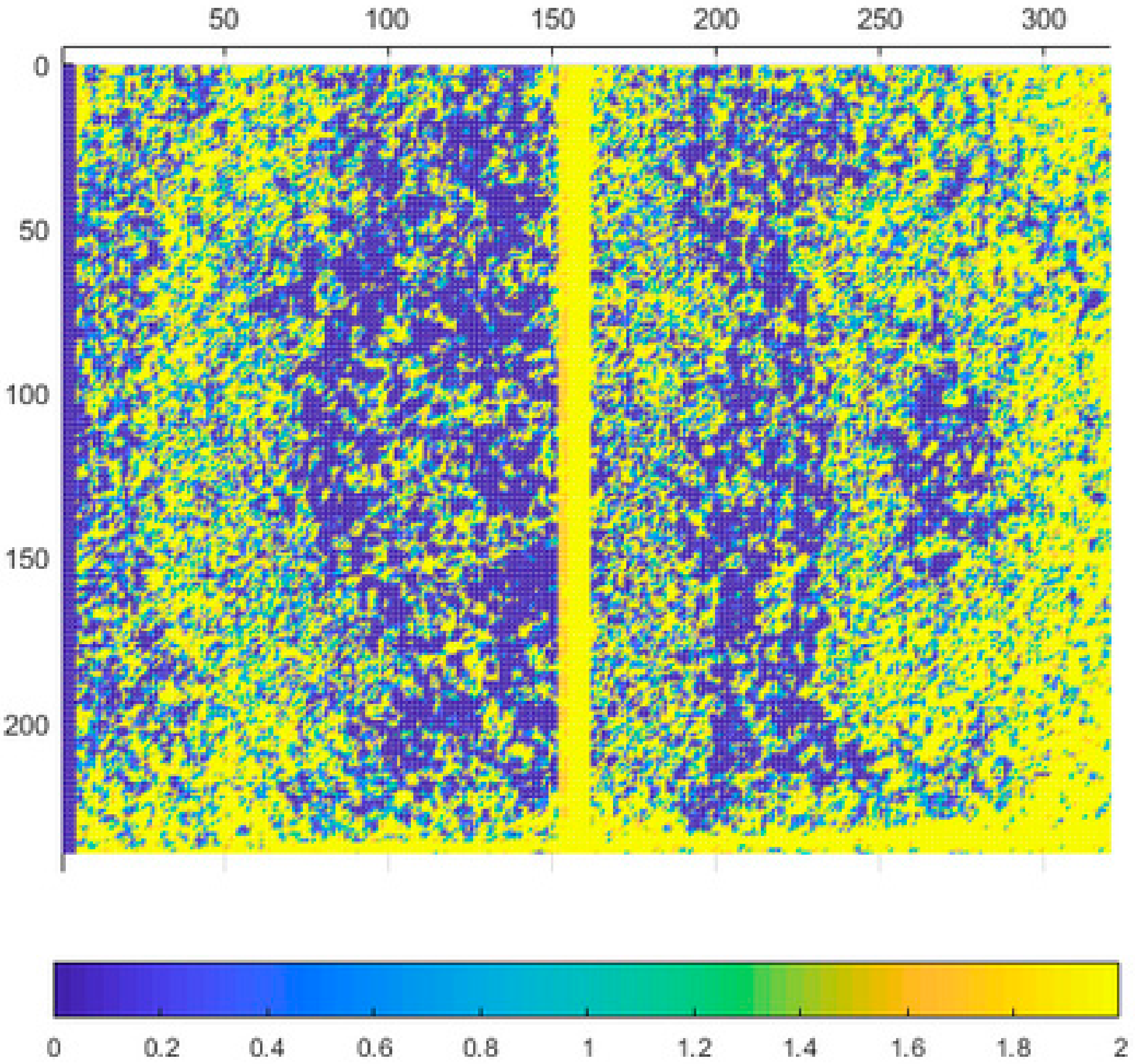

3.3. Experiment No. 2–Noise

4. Evaluation of the Azure Kinect

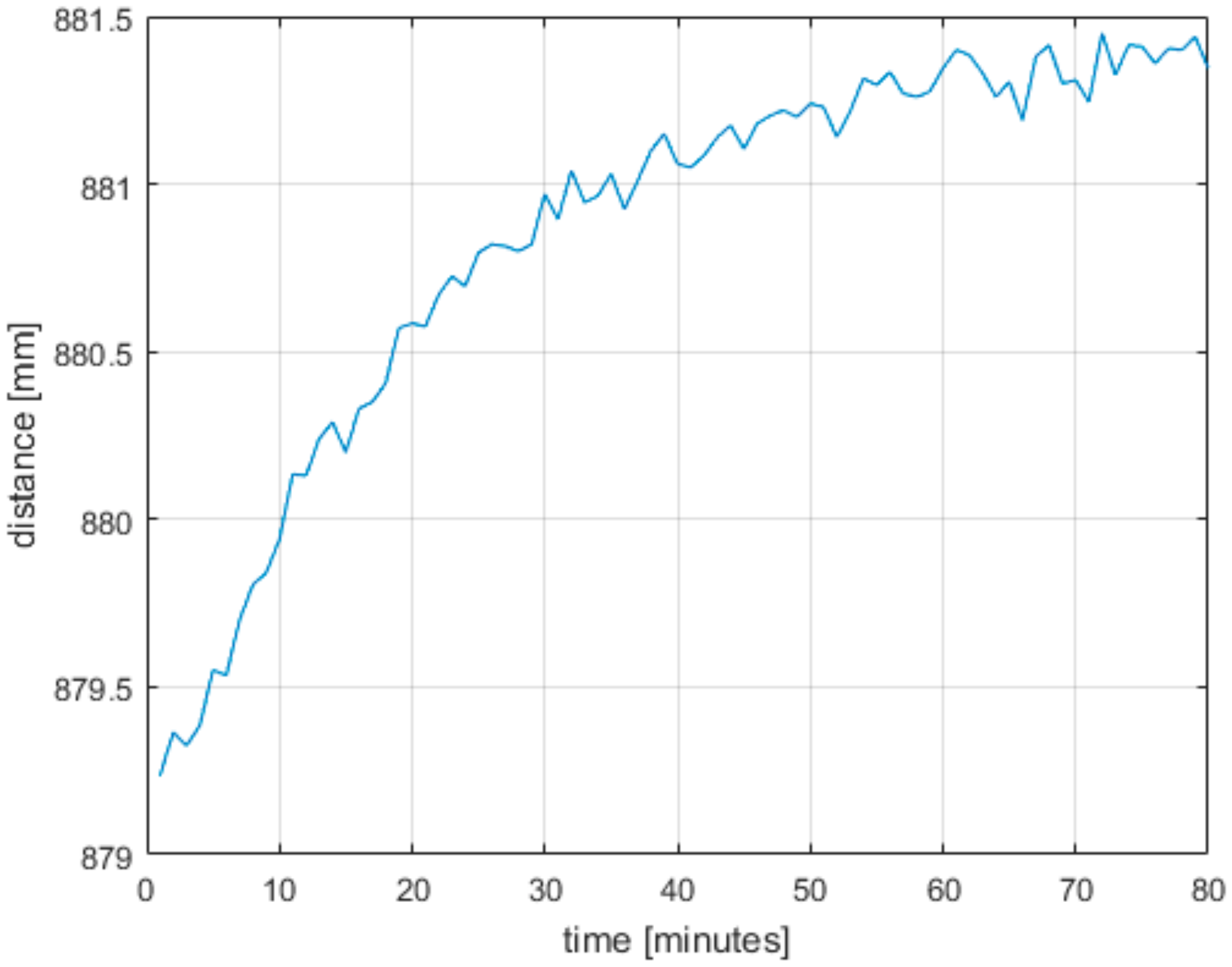

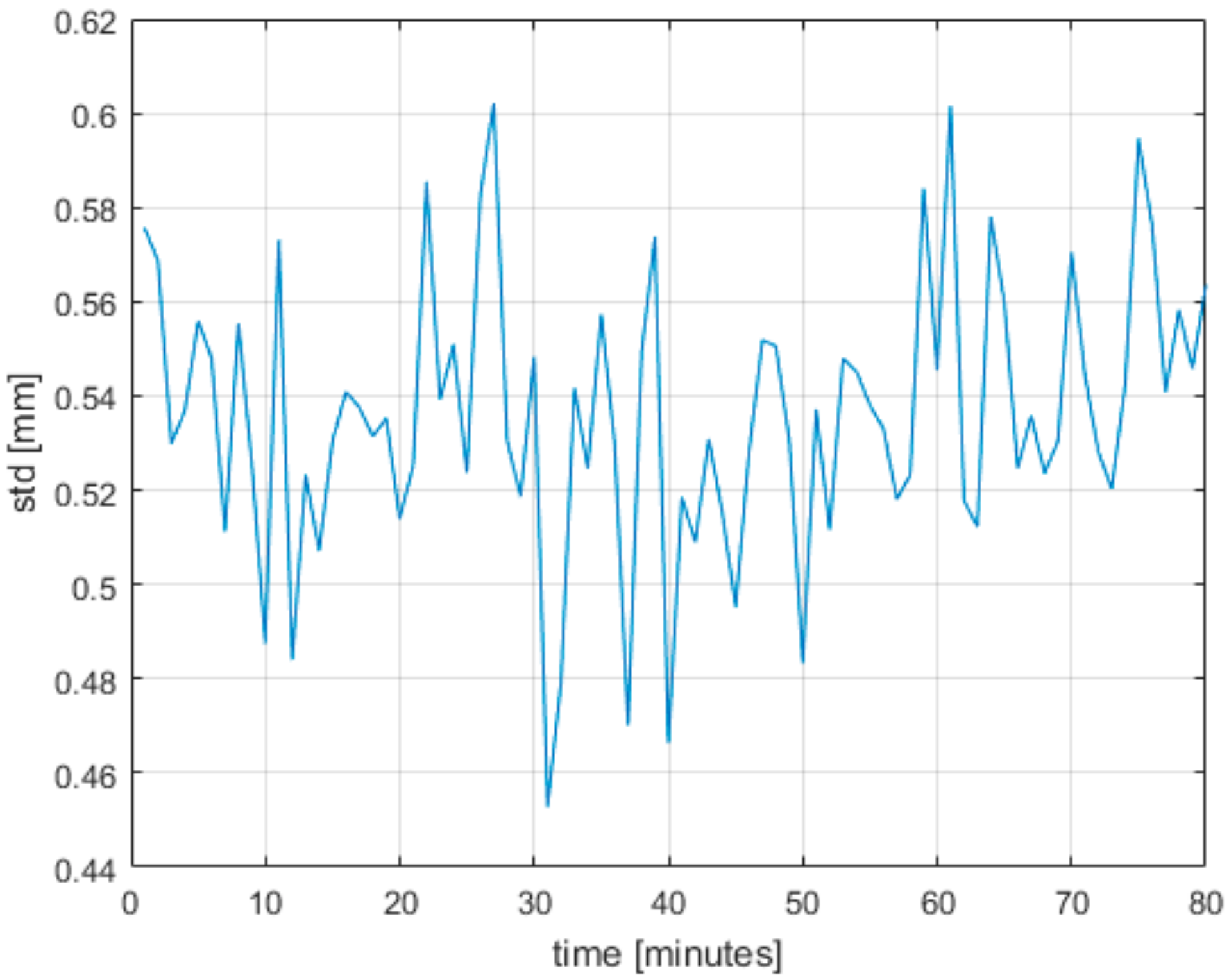

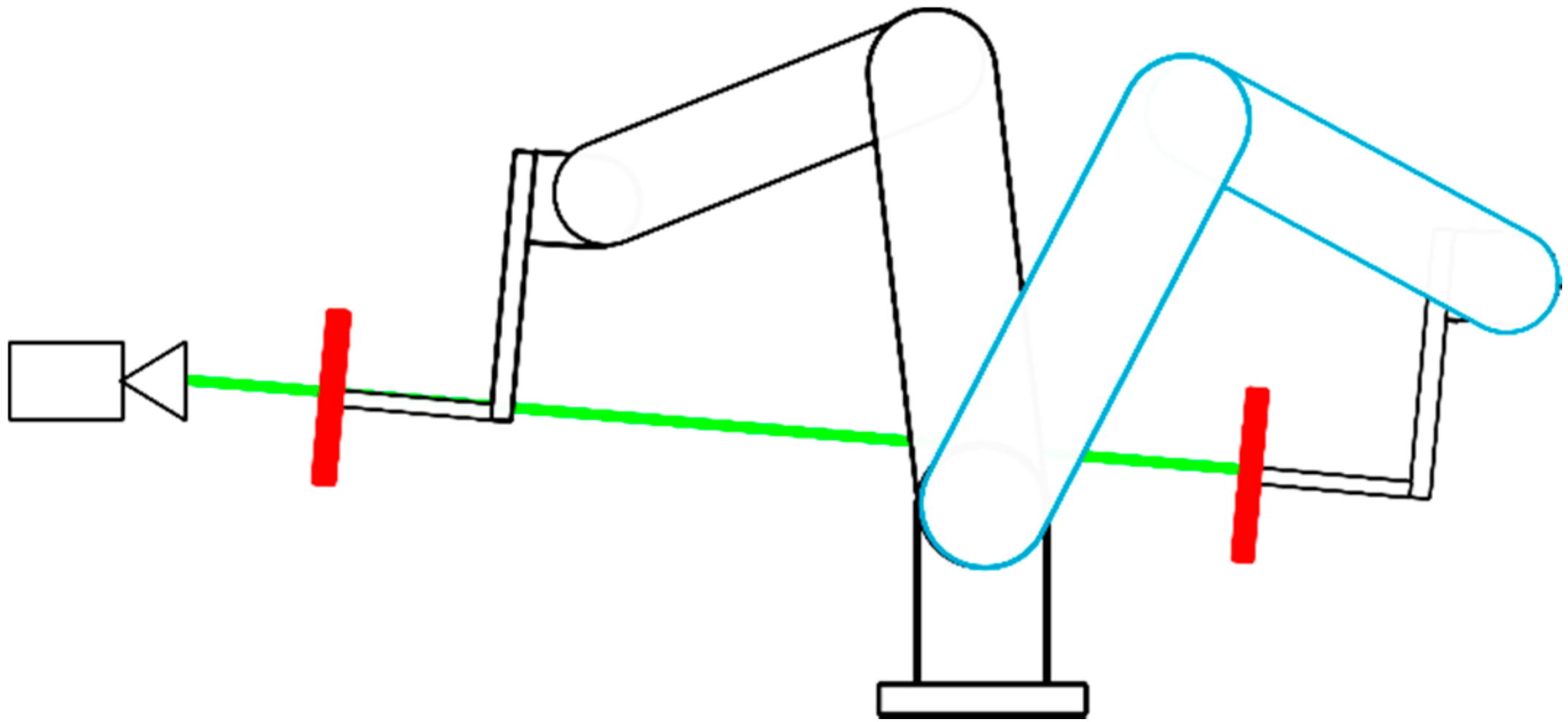

4.1. Warm-up Time

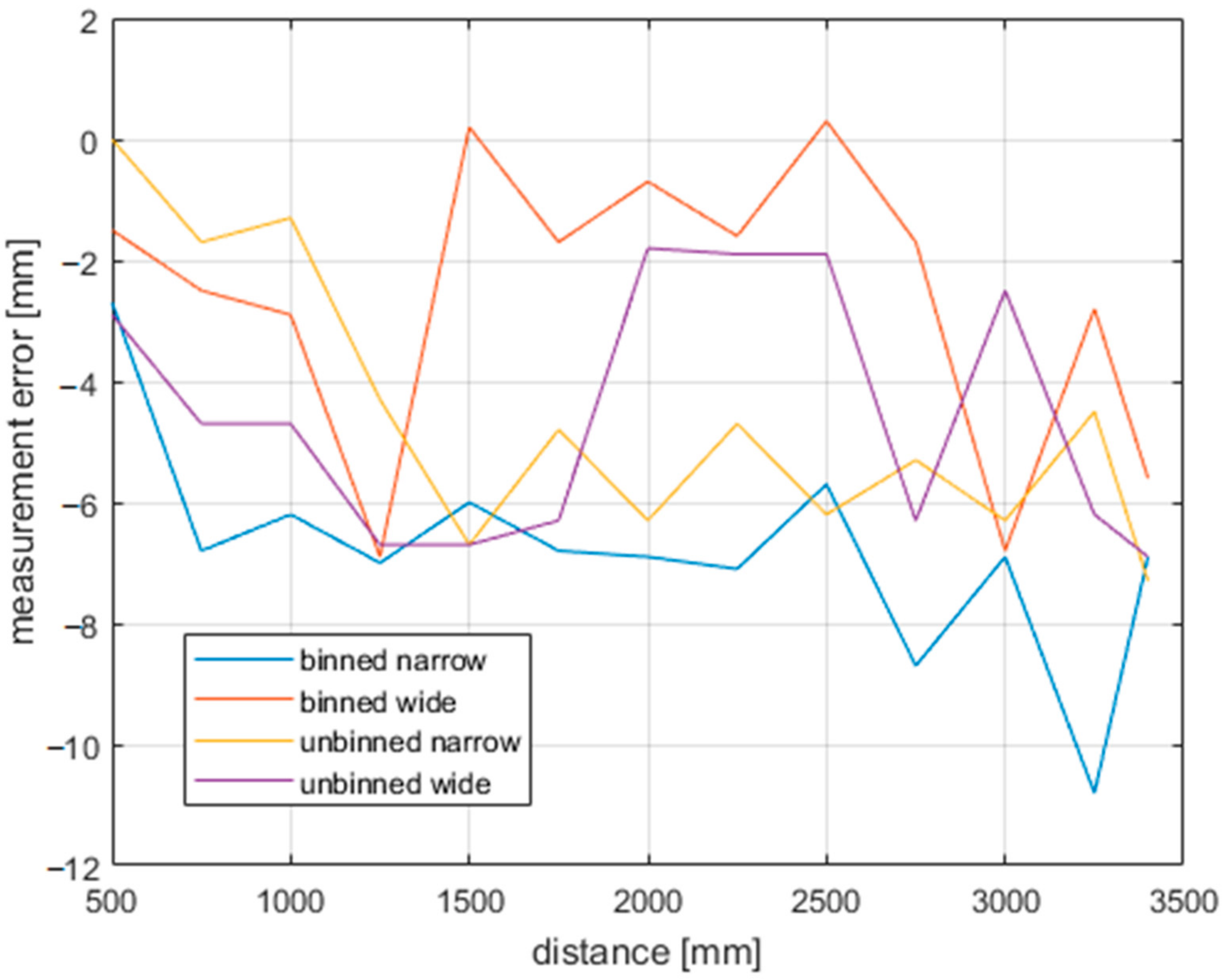

4.2. Accuracy

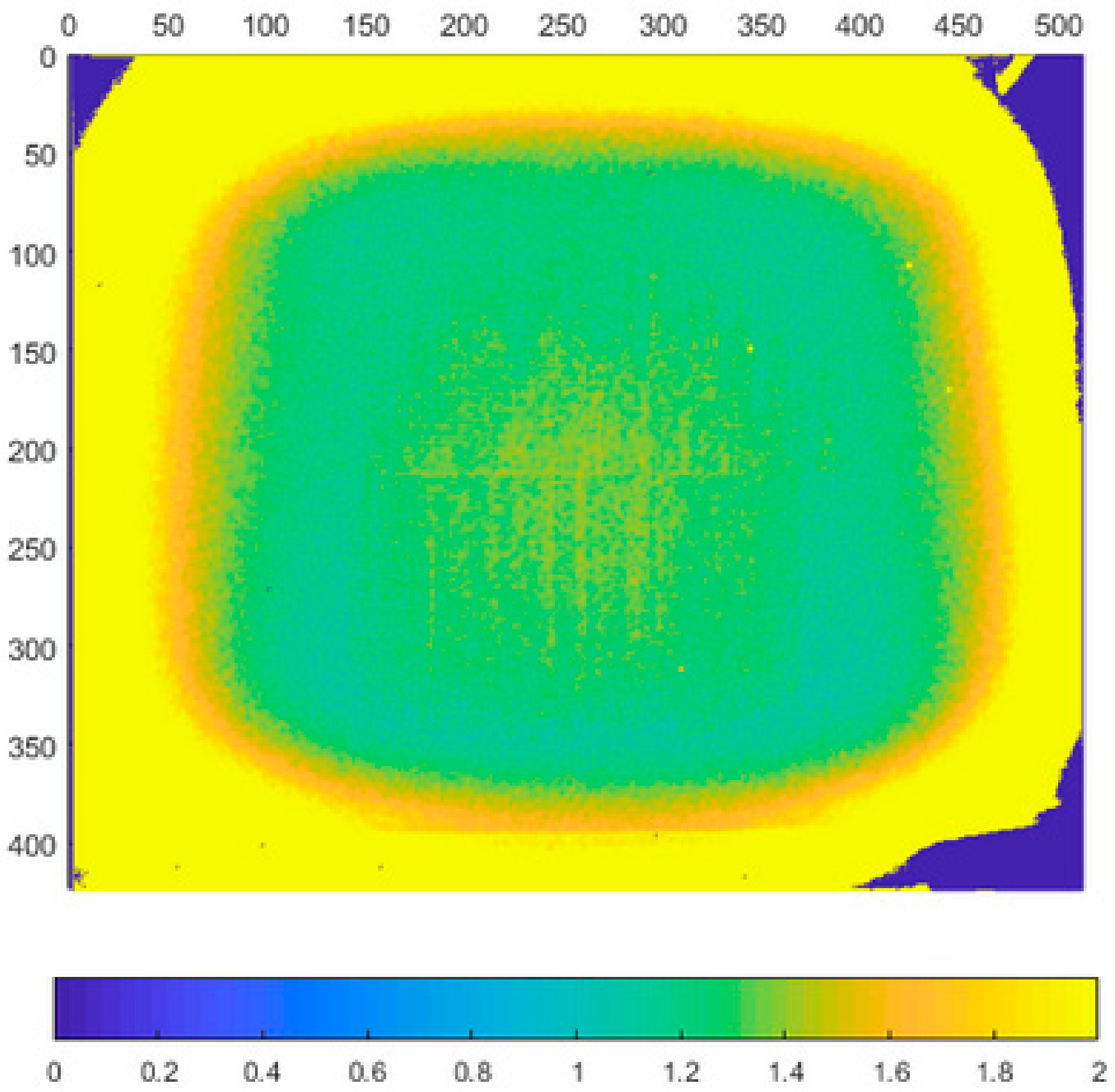

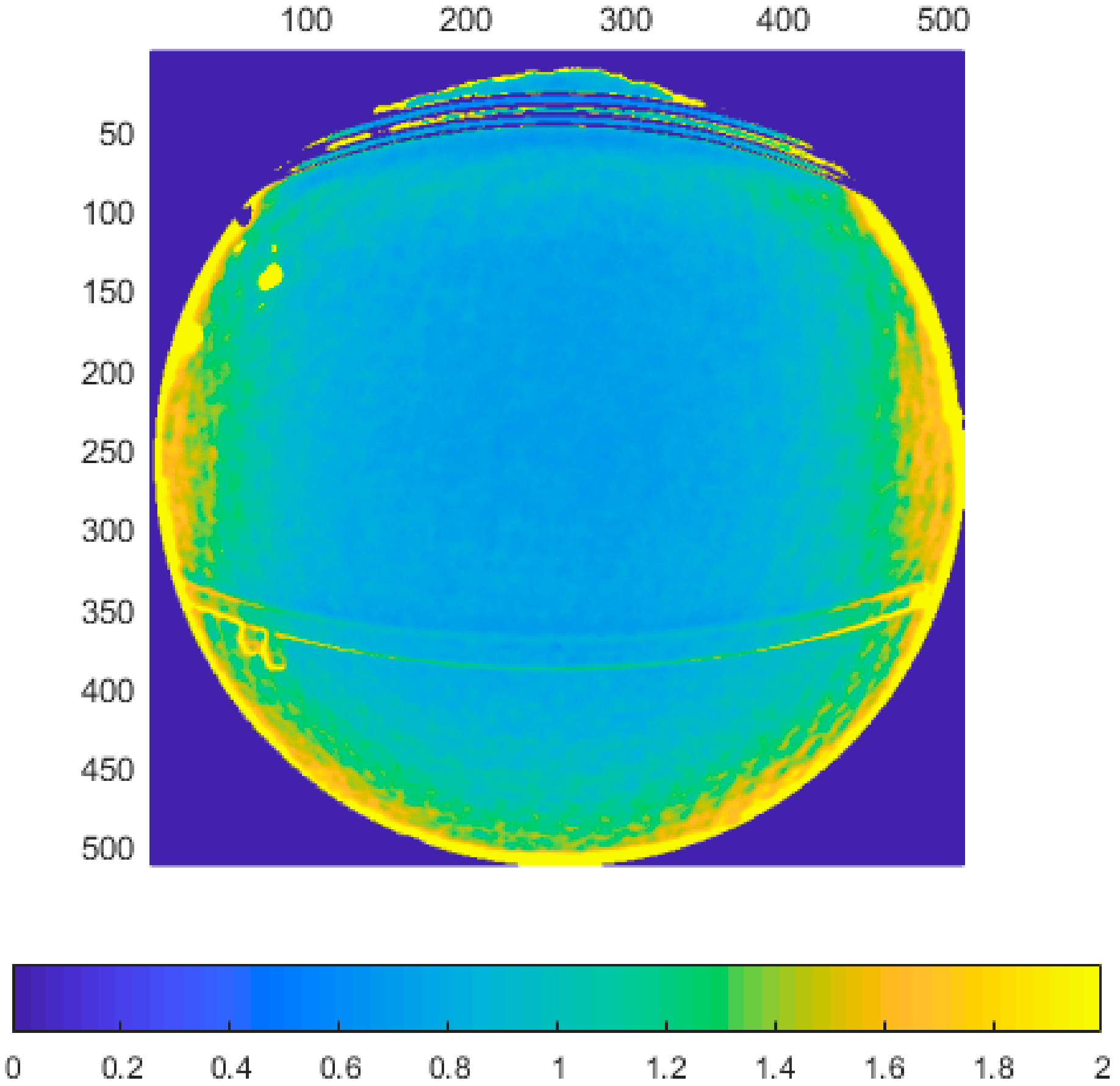

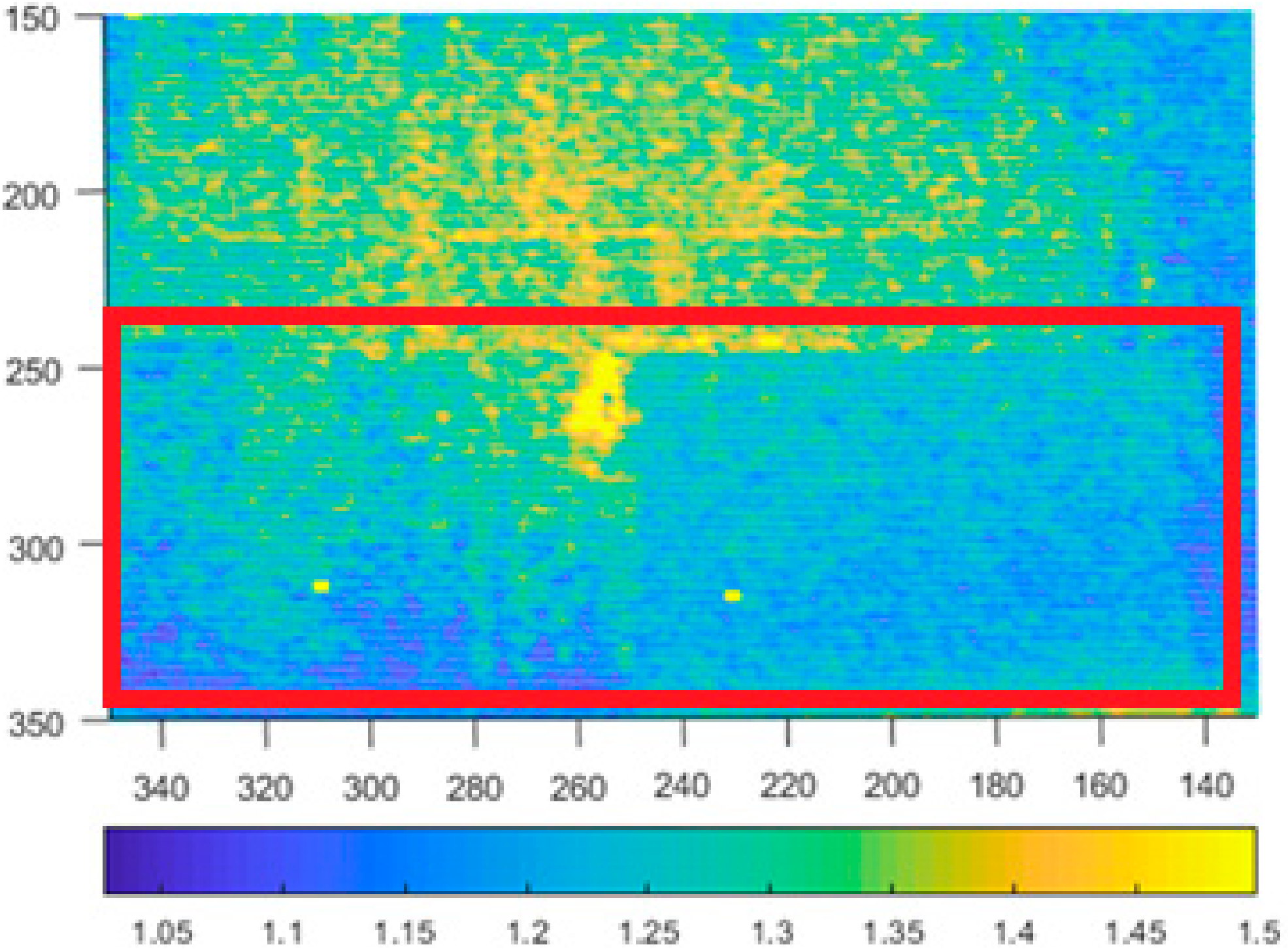

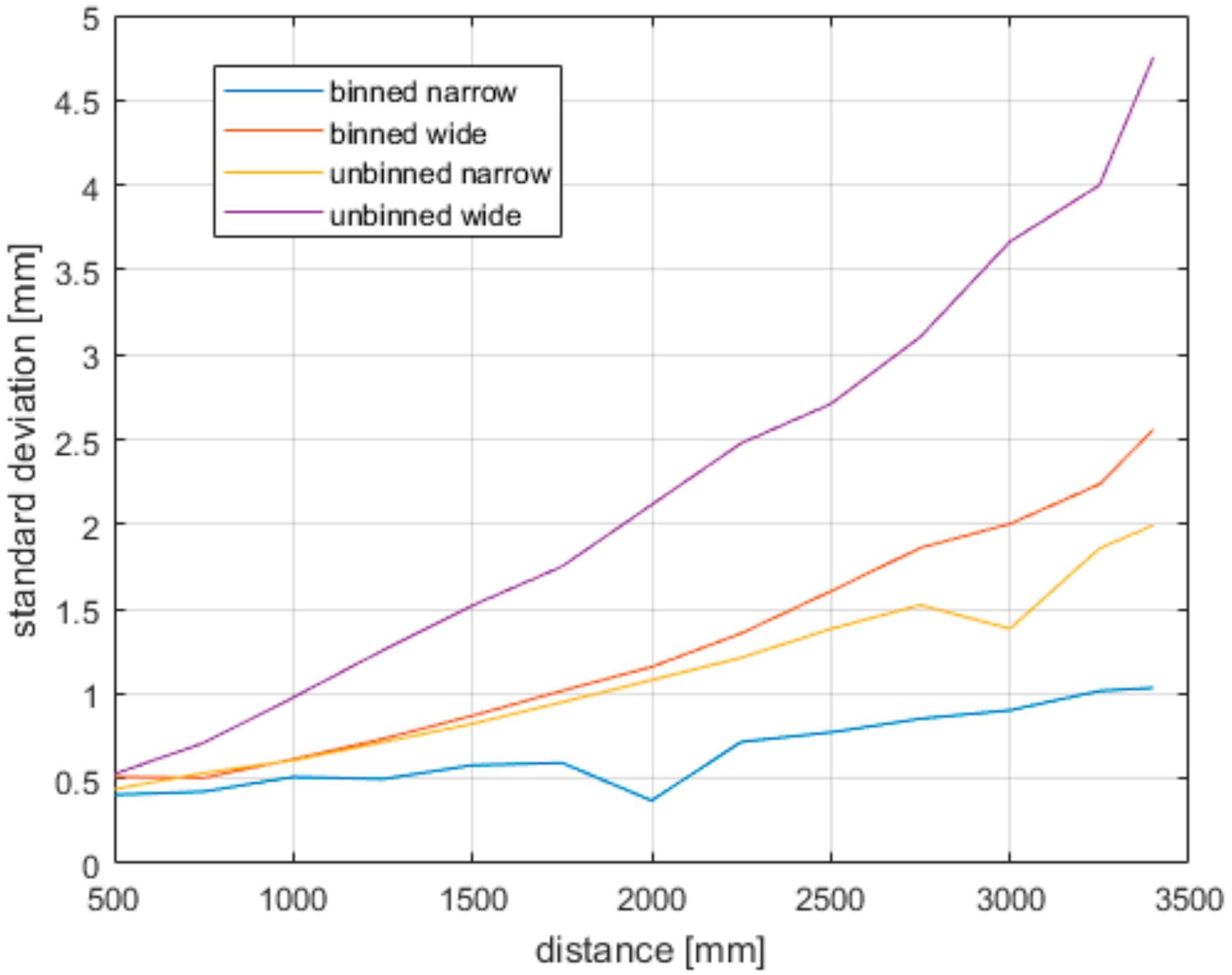

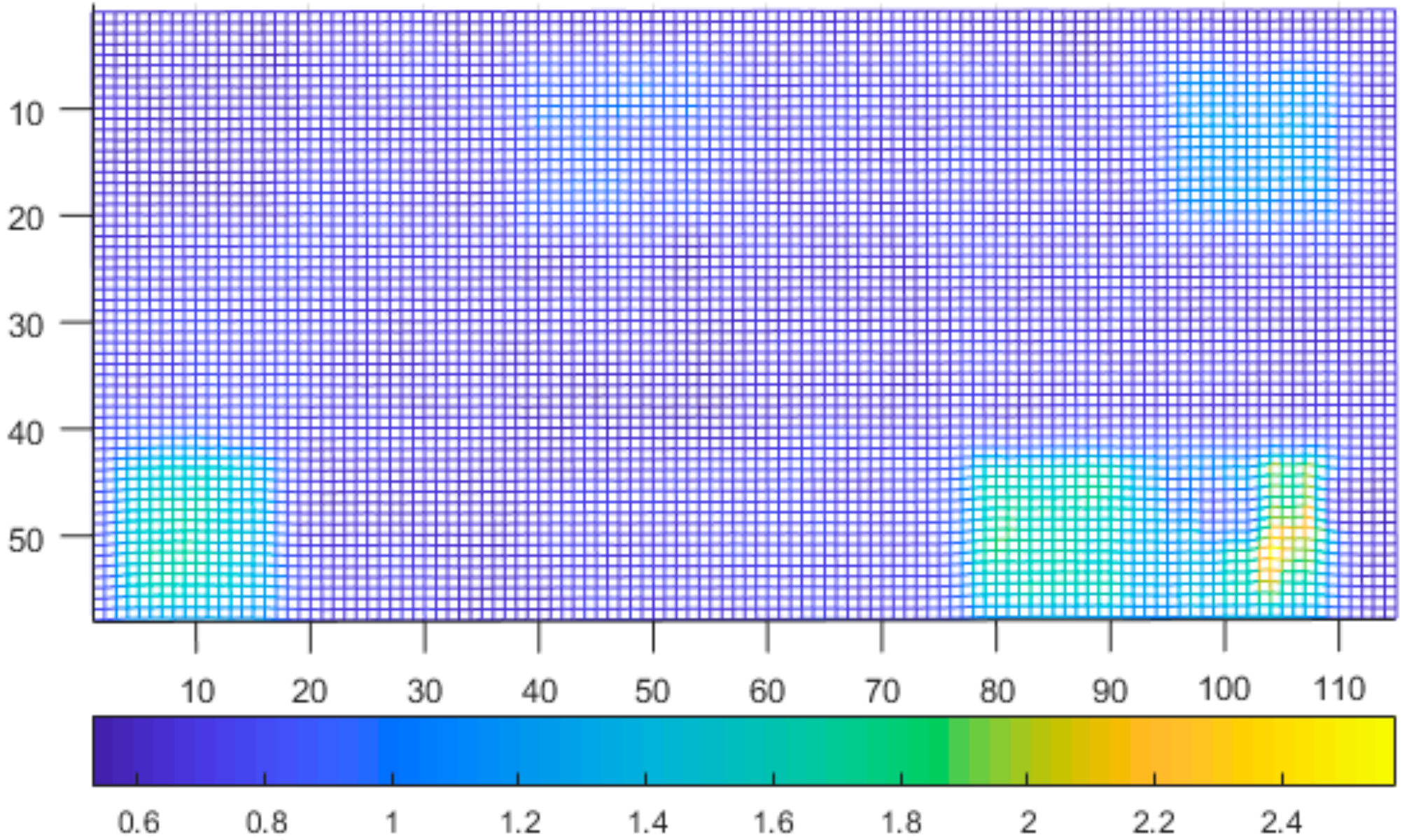

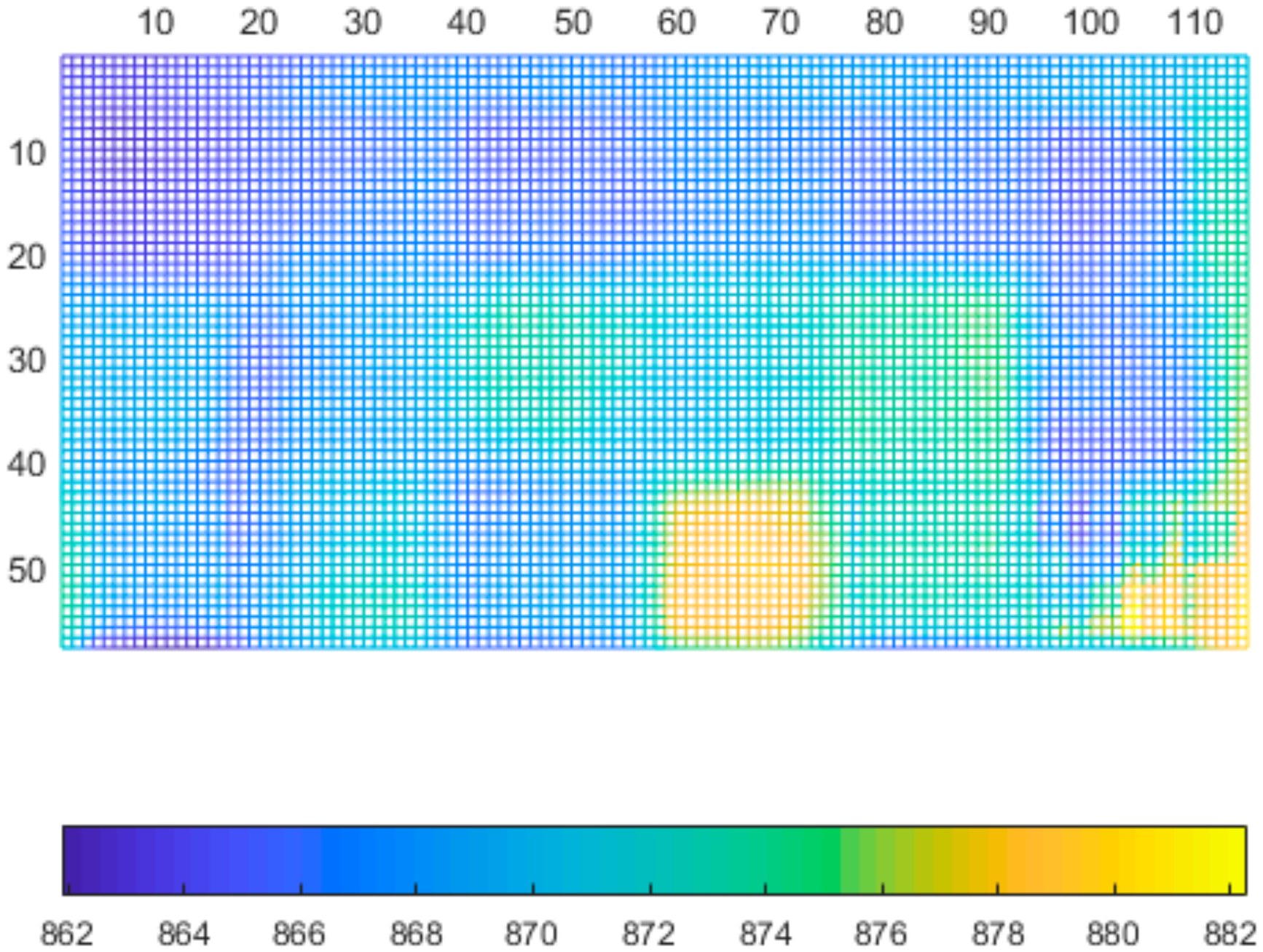

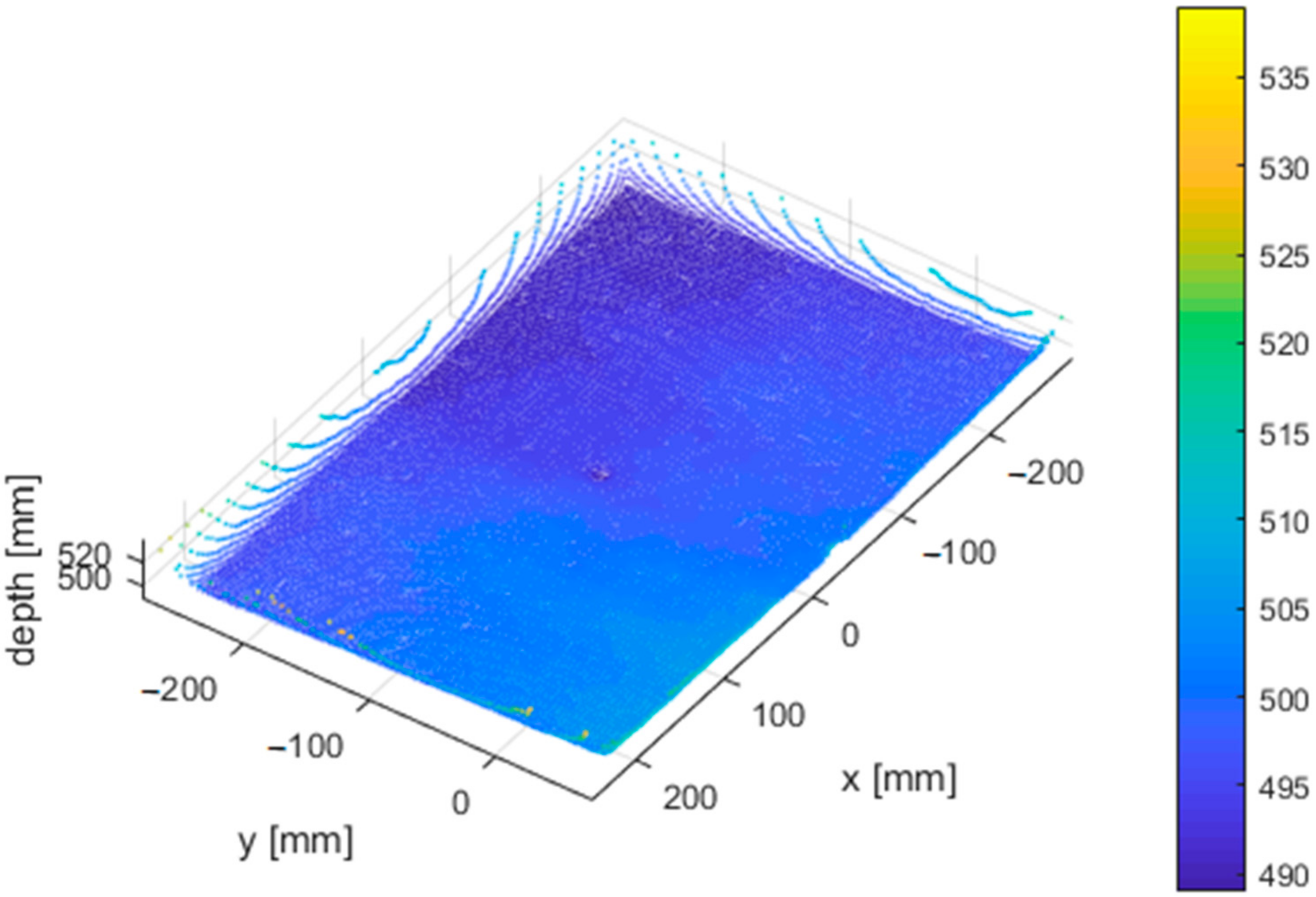

4.3. Precision

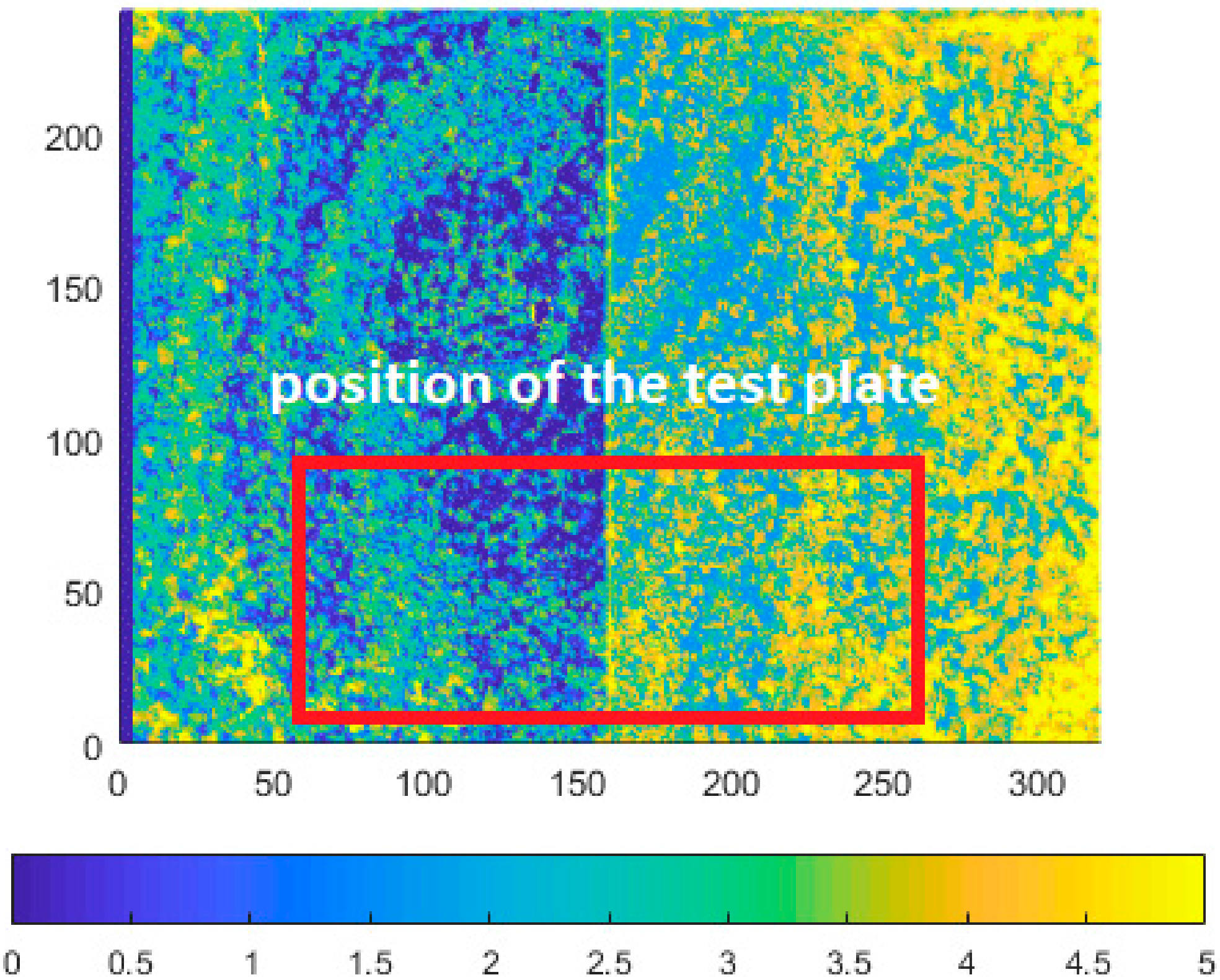

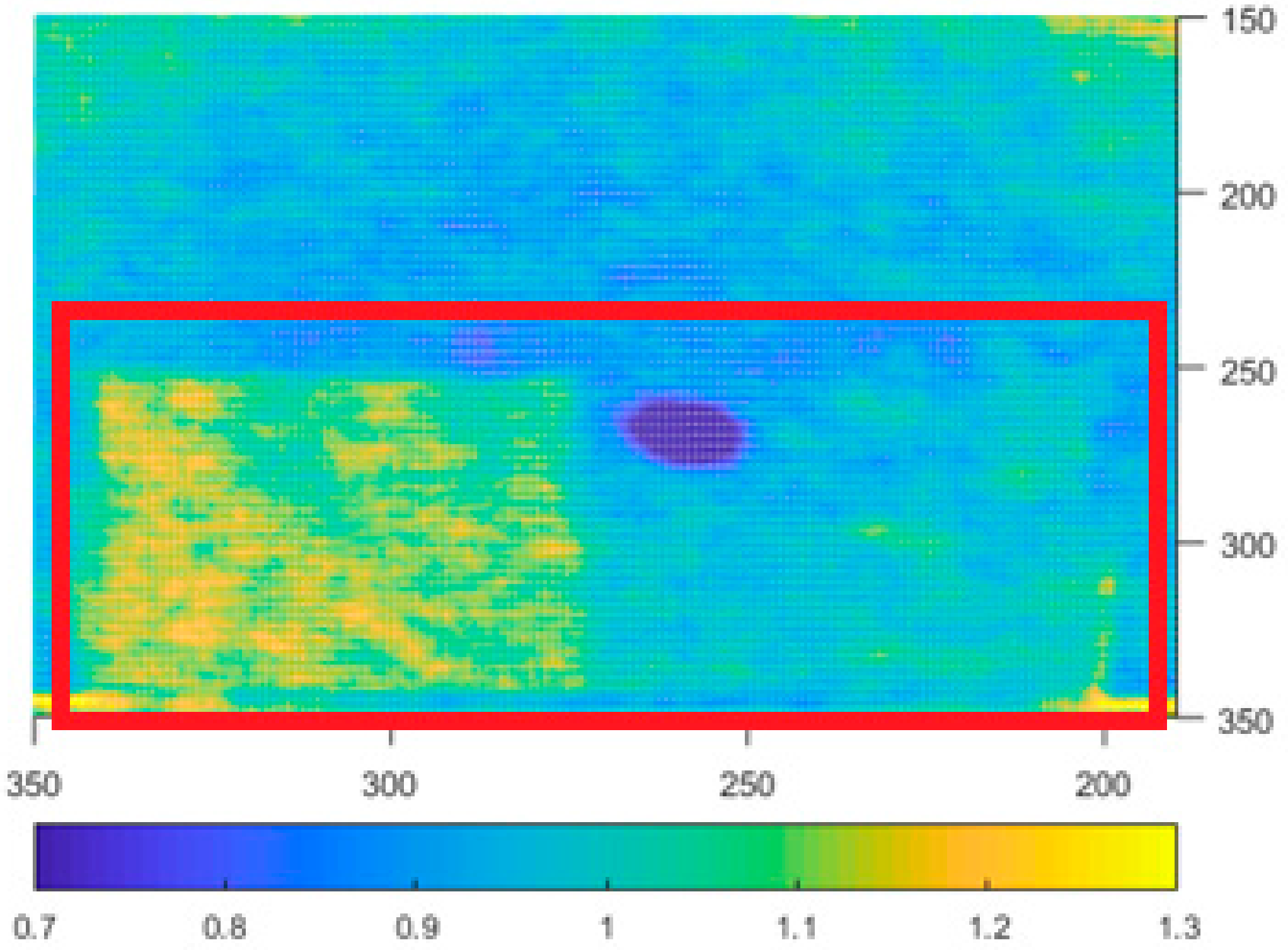

4.4. Reflectivity

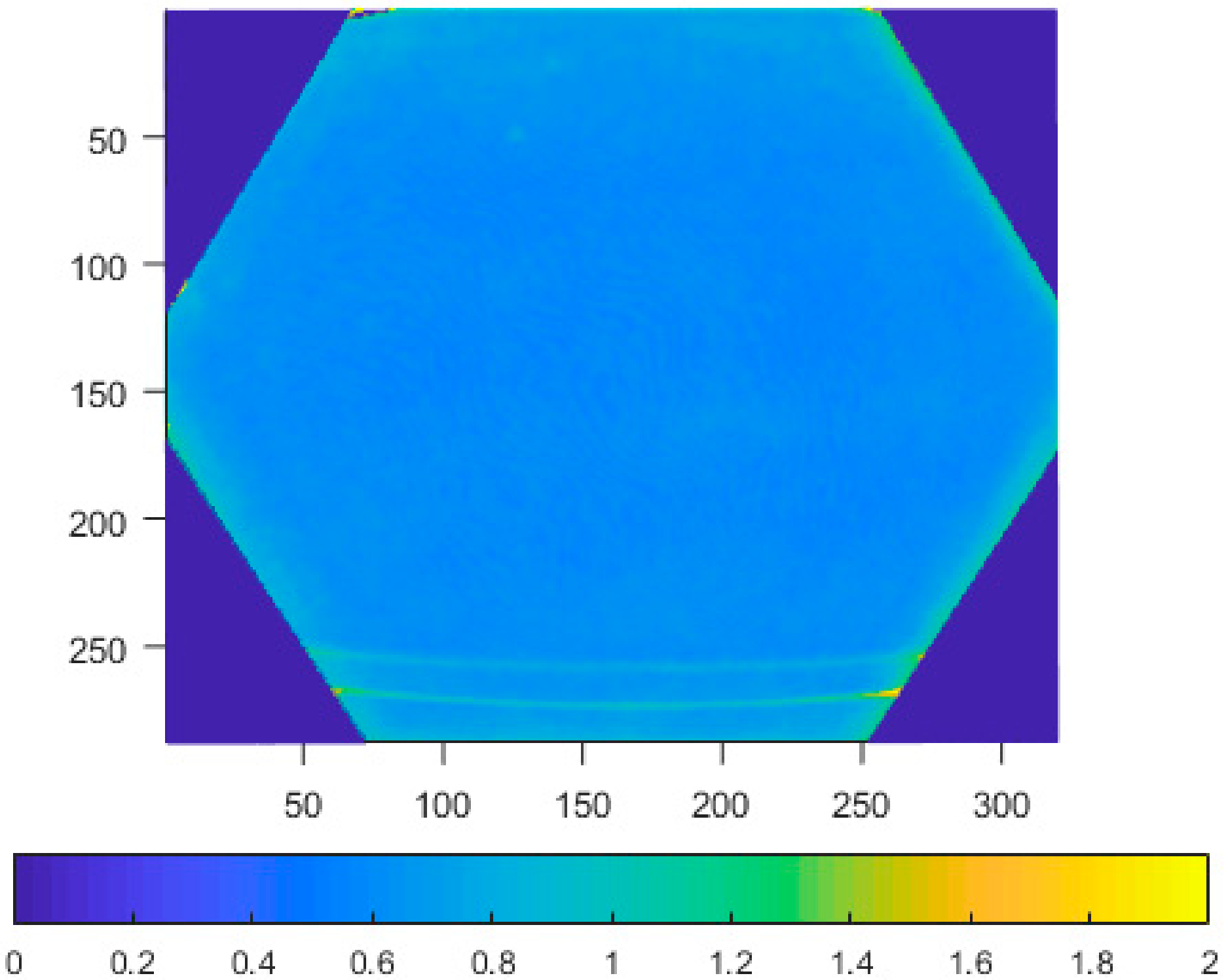

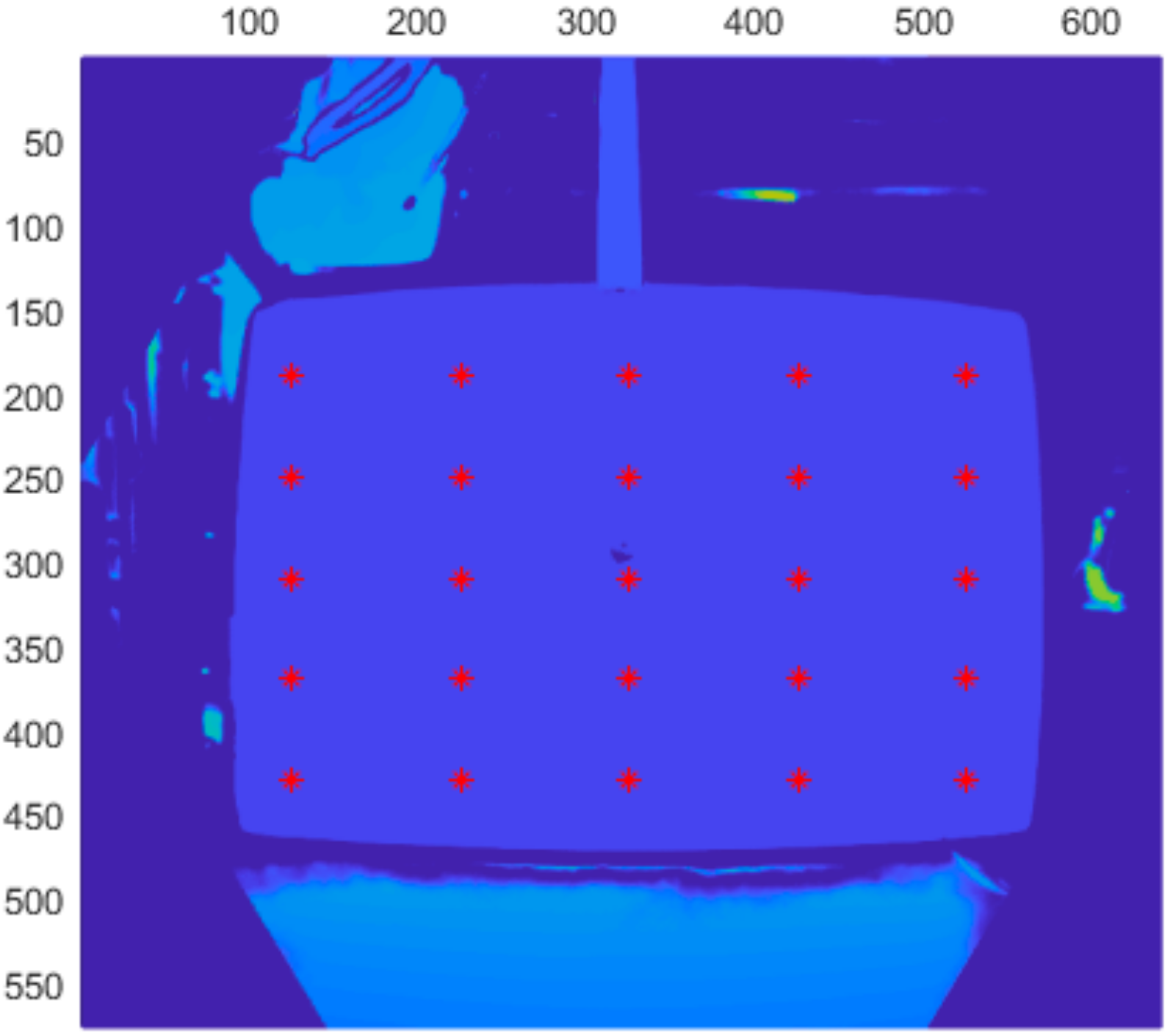

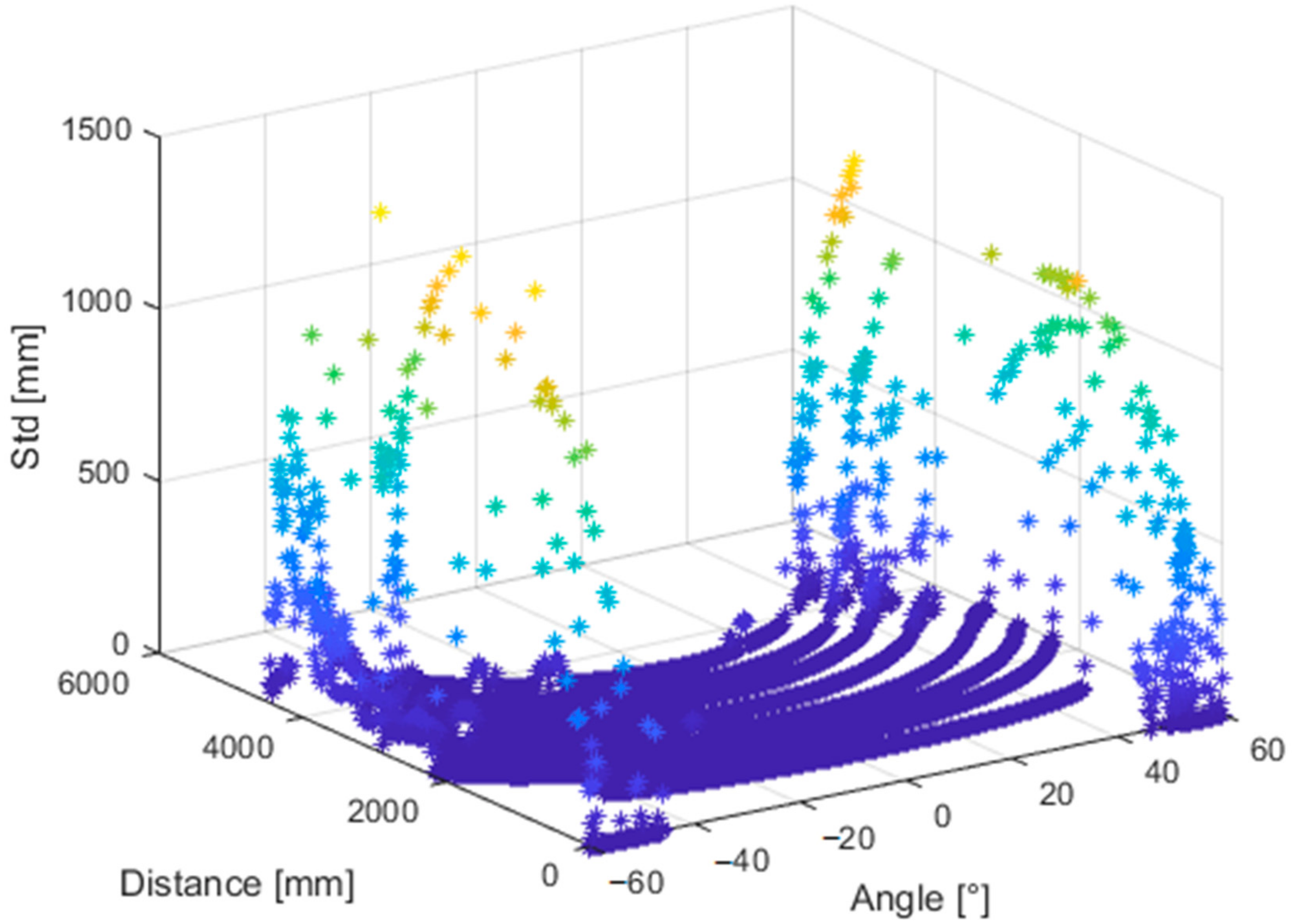

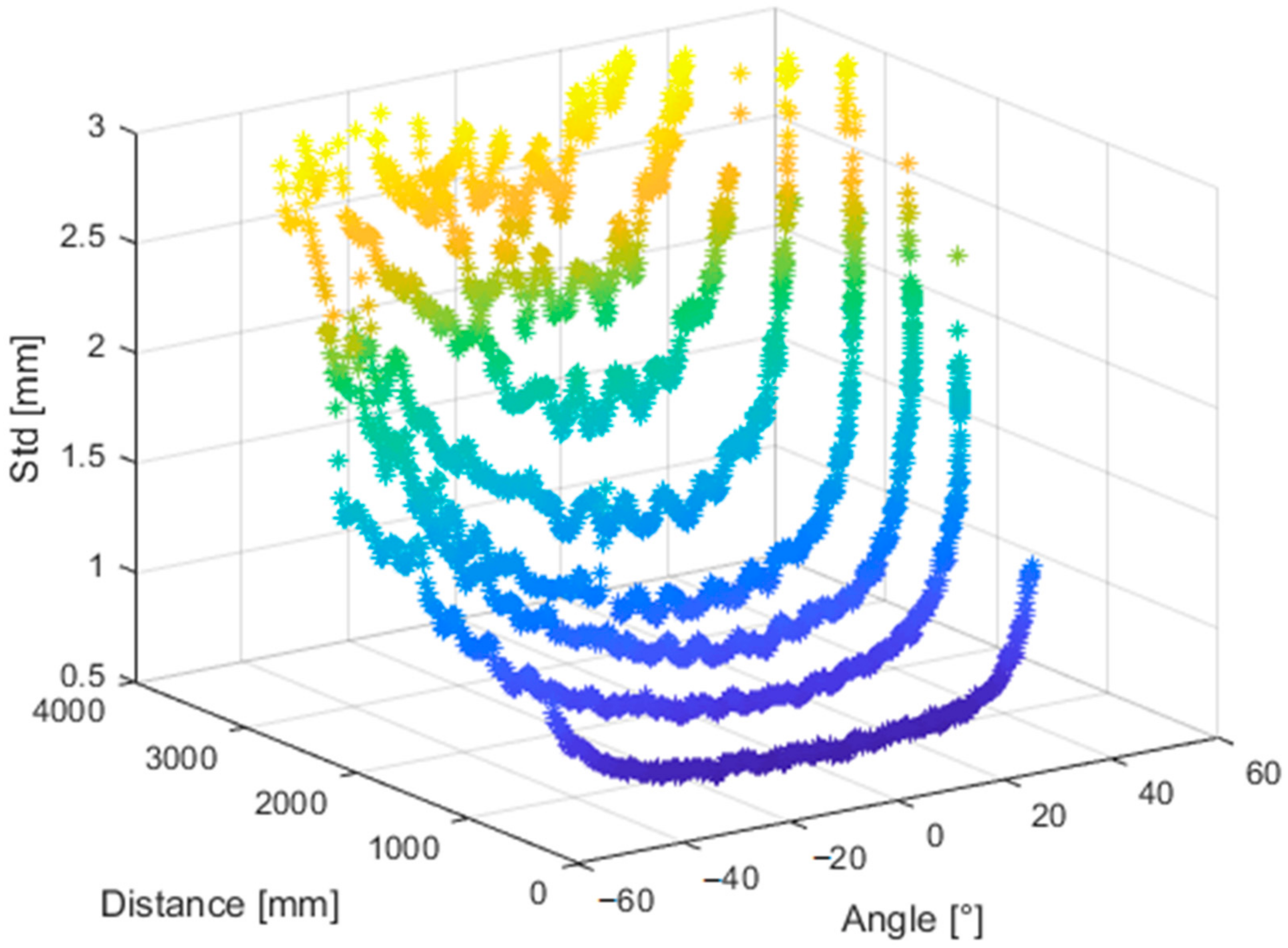

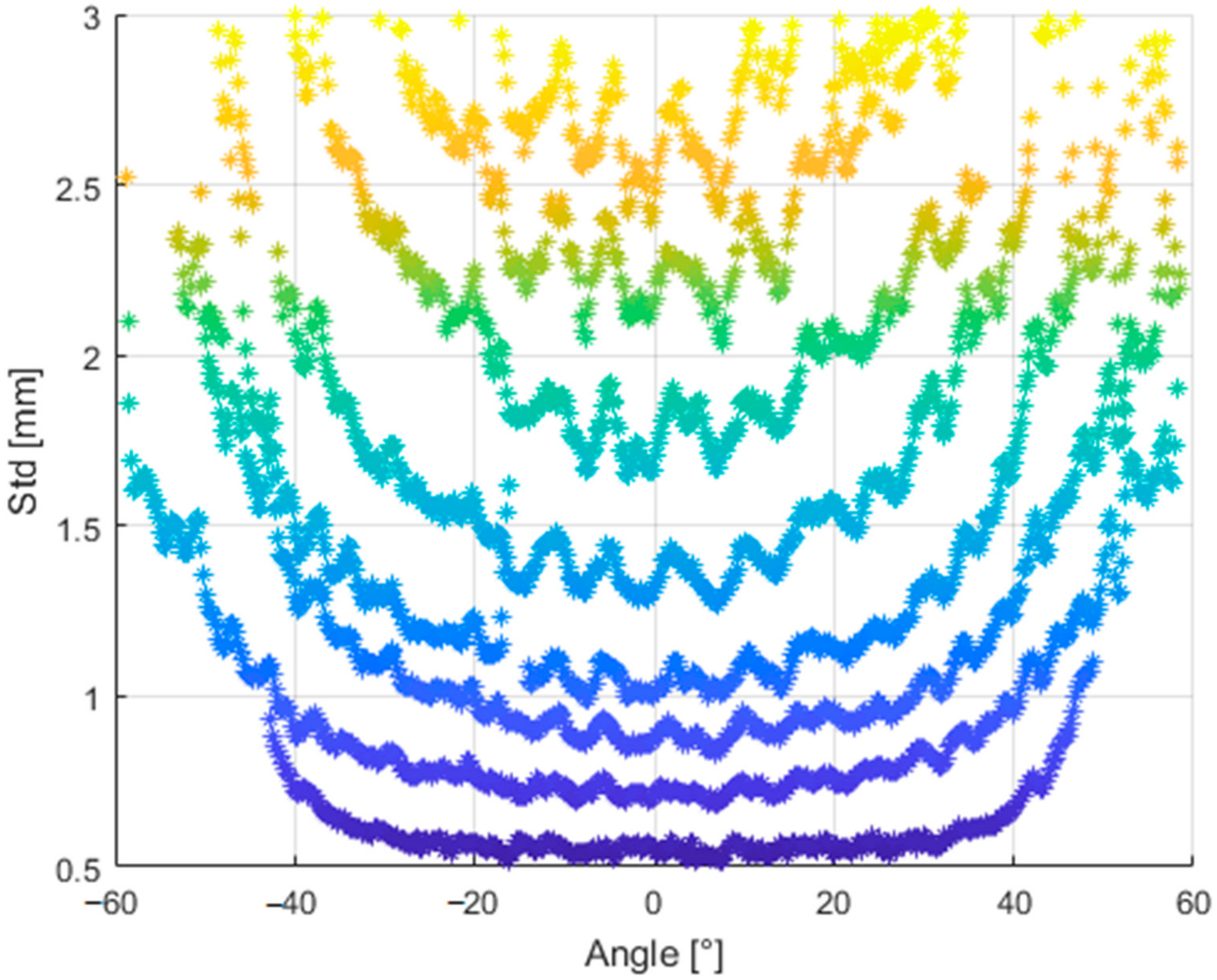

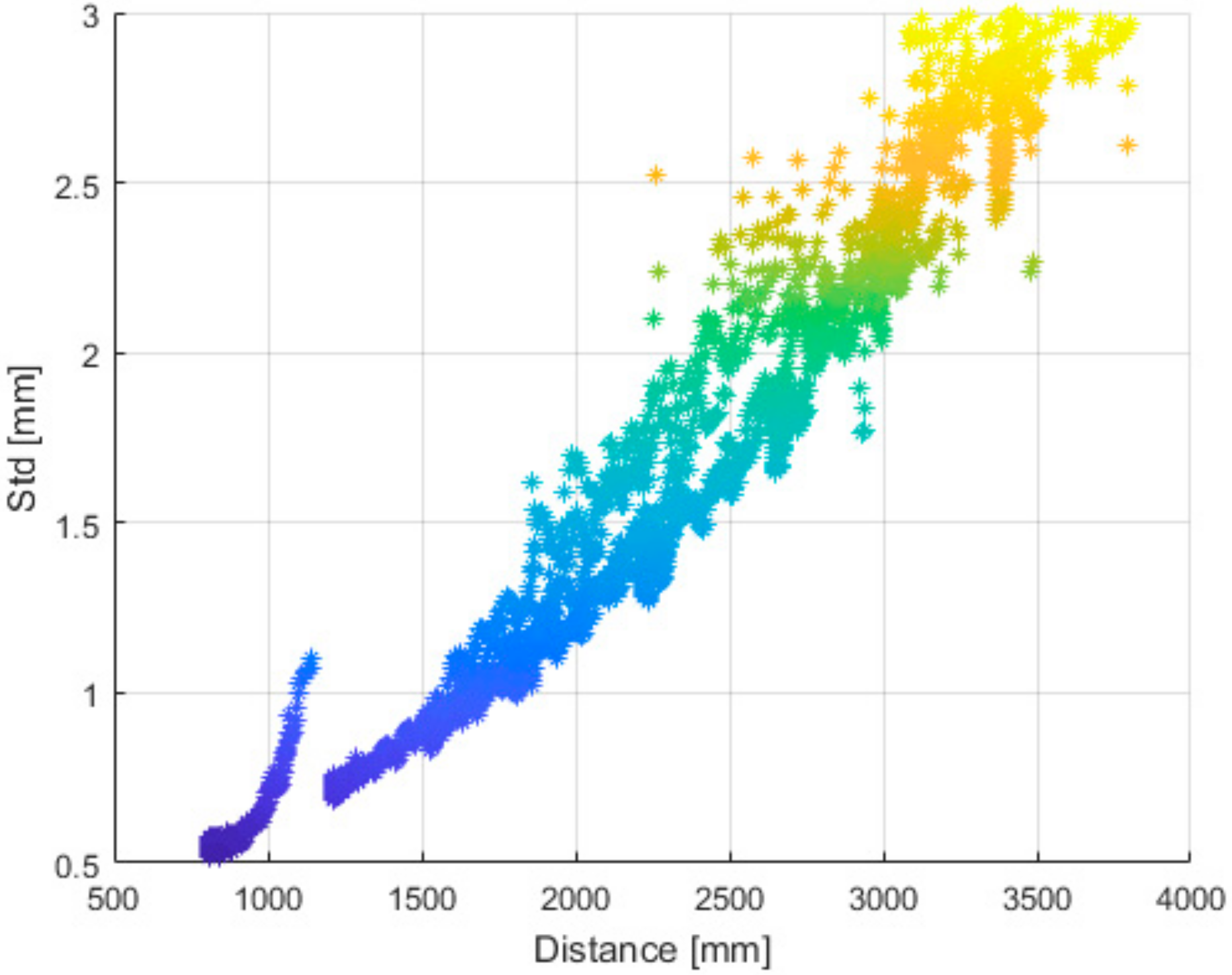

4.5. Precision Variability Analysis

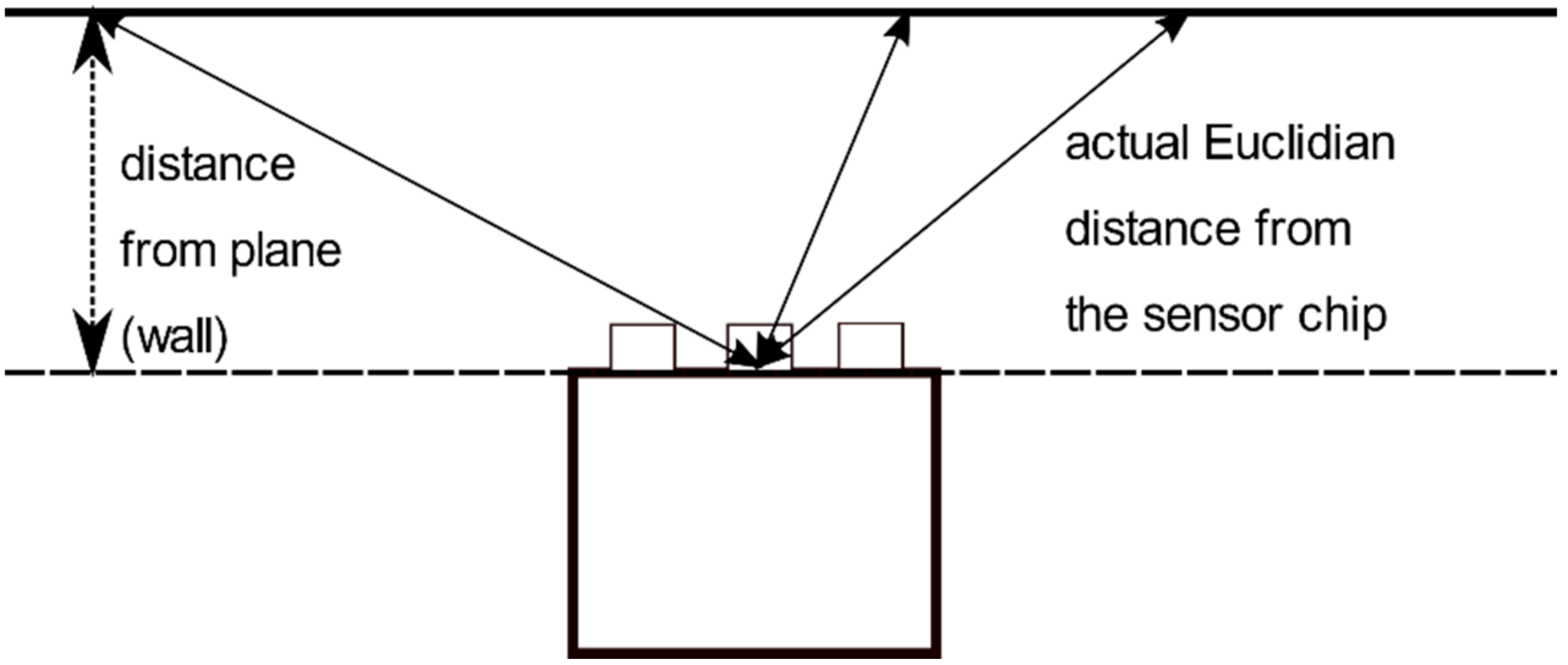

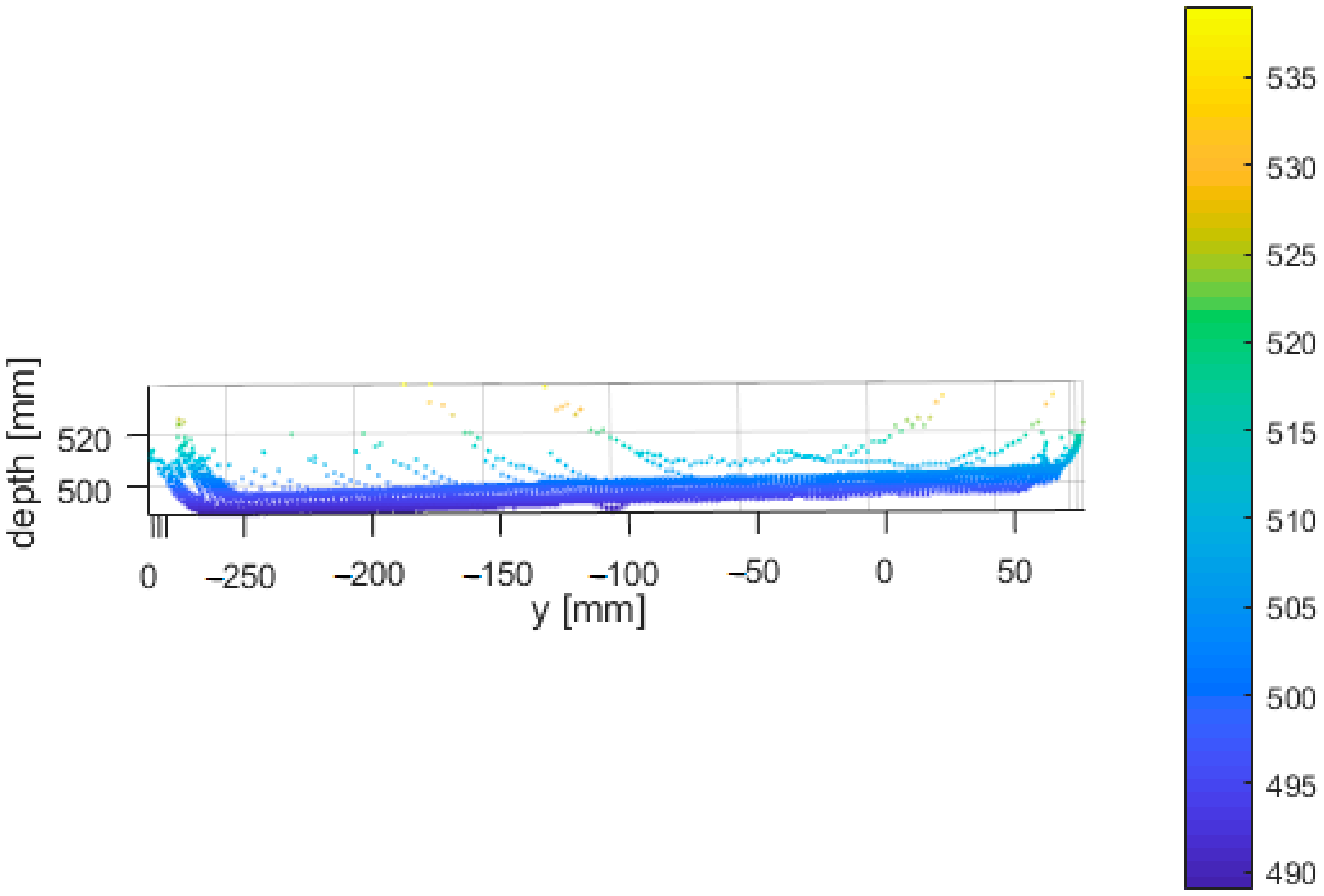

- The rise in noise is due to distance. Even though the reported distance is approximately the same, the actual Euclidean distance from the sensor chip is considerably larger toward the edges of the image (Figure 9).

- The sensor measures more precisely at the center of the image. This could be due to optical aberrations of the lens.

- The relative angle between the wall and the sensor. This angle changes from the center to the edges of the image, which changes the amount of light reflected back to the sensor and could affect measurement quality.
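The first and third explanations above can be made concrete with a simple geometric sketch. It assumes an ideal pinhole camera with no lens distortion, hypothetical intrinsics (`fx`, `fy`, `cx`, `cy` below are illustrative values, not the Azure Kinect's calibration), and that the reported value is the depth along the optical axis (Z). For a flat wall perpendicular to the optical axis, every pixel then reports the same Z, yet the ray to an edge pixel is longer and meets the wall at a larger angle.

```python
import math


def ray_geometry(u, v, depth_z, fx, fy, cx, cy):
    """Return (euclidean_distance, ray_angle_deg) for depth pixel (u, v).

    Assumptions (not taken from the article): an ideal pinhole camera with
    hypothetical intrinsics fx, fy, cx, cy, no lens distortion, and depth_z
    being the depth along the optical axis (Z), not the range along the ray.
    """
    # Normalized image coordinates of the pixel
    x_n = (u - cx) / fx
    y_n = (v - cy) / fy
    # Ratio between the ray length and its Z component
    scale = math.sqrt(1.0 + x_n * x_n + y_n * y_n)
    euclidean = depth_z * scale
    # For a flat wall perpendicular to the optical axis, the ray's angle to the
    # wall normal equals its angle to the optical axis: cos(angle) = 1 / scale.
    angle_deg = math.degrees(math.acos(1.0 / scale))
    return euclidean, angle_deg


# Hypothetical intrinsics for a 640 x 576 depth image, wall at Z = 1000 mm
FX, FY, CX, CY = 500.0, 500.0, 320.0, 288.0
for u, v in [(320, 288), (0, 0)]:
    dist, angle = ray_geometry(u, v, 1000.0, FX, FY, CX, CY)
    print(f"pixel ({u}, {v}): {dist:.1f} mm along the ray, {angle:.1f} deg to the wall normal")
```

With these assumed values, the center pixel gives 1000 mm at 0°, while the corner pixel gives roughly 1320 mm at about 41°, even though both report the same Z.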

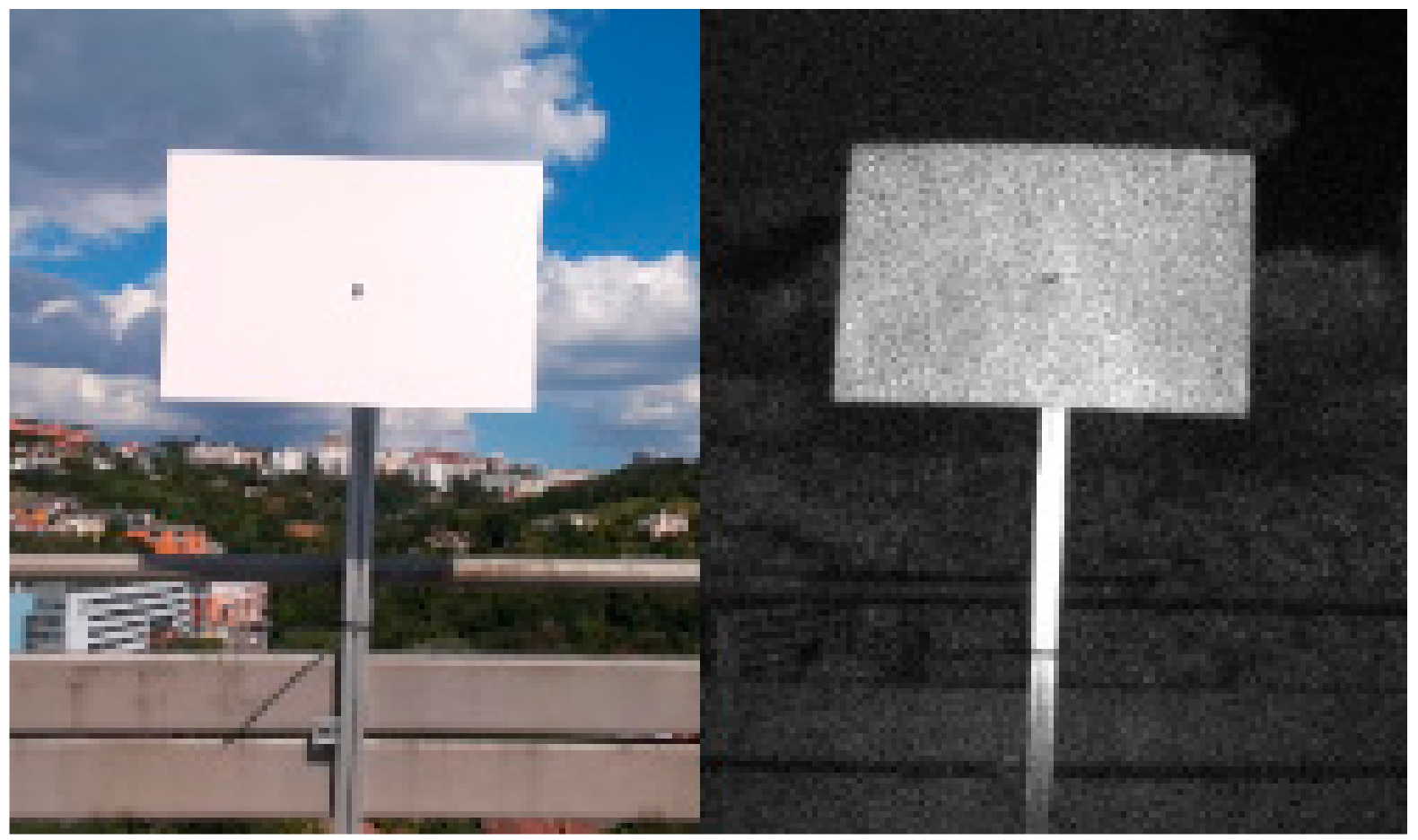

4.6. Performance in Outdoor Environment

4.7. Multipath and Flying Pixel

5. Conclusions

- Standard deviation ≤ 17 mm.

- Distance error < 11 mm + 0.1% of distance (without multipath interference).

- PROS

  - Half the weight of Kinect v2

  - No need for an external power supply (lower weight and easier installation)

  - Greater versatility: four different depth modes

  - Better angular resolution

  - Lower noise

  - Good accuracy

- CONS

  - Object reflectivity issues due to ToF technology

  - Virtually unusable in outdoor environments

  - Relatively long warm-up time (at least 40–50 min)

  - Multipath and flying pixel phenomena
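The two limits stated at the start of the conclusions can be turned into a quick check for repeated readings of a single target. This is a minimal sketch: the helper names are hypothetical, and it assumes that "distance error" means the offset of the mean reading from the true distance, which the conclusions do not spell out.

```python
import statistics

STD_LIMIT_MM = 17.0  # "Standard deviation <= 17 mm" from the conclusions


def max_distance_error_mm(distance_mm: float) -> float:
    """Distance error bound from the conclusions: 11 mm + 0.1 % of the distance."""
    return 11.0 + 0.001 * distance_mm


def within_stated_limits(readings_mm, true_distance_mm):
    """Check repeated readings of one target against both stated limits.

    Assumption: 'distance error' is taken as the offset of the mean reading
    from the true distance; at least two readings are needed for the std.
    """
    mean_error = abs(statistics.mean(readings_mm) - true_distance_mm)
    std = statistics.stdev(readings_mm)
    return mean_error < max_distance_error_mm(true_distance_mm) and std <= STD_LIMIT_MM


for d in (800, 1500, 3000):
    print(f"{d} mm: error bound {max_distance_error_mm(d):.1f} mm")

# Hypothetical readings of a target at 3000 mm (mean offset 8.75 mm)
print(within_stated_limits([3005, 3010, 3008, 3012], 3000))
```

The bound grows slowly with distance: 11.8 mm at 800 mm, 12.5 mm at 1500 mm and 14.0 mm at 3000 mm, so the fixed 11 mm term dominates over the whole specified range.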

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Elaraby, A.F.; Hamdy, A.; Rehan, M. A Kinect-Based 3D Object Detection and Recognition System with Enhanced Depth Estimation Algorithm. In Proceedings of the 2018 IEEE 9th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 1–3 November 2018; pp. 247–252.

2. Tanabe, R.; Cao, M.; Murao, T.; Hashimoto, H. Vision based object recognition of mobile robot with Kinect 3D sensor in indoor environment. In Proceedings of the 2012 Proceedings of SICE Annual Conference (SICE), Akita, Japan, 20–23 August 2012; pp. 2203–2206.

3. Manap, M.S.A.; Sahak, R.; Zabidi, A.; Yassin, I.; Tahir, N.M. Object Detection using Depth Information from Kinect Sensor. In Proceedings of the 2015 IEEE 11th International Colloquium on Signal Processing & Its Applications (CSPA), Kuala Lumpur, Malaysia, 6–8 March 2015; pp. 160–163.

4. Xin, G.X.; Zhang, X.T.; Wang, X.; Song, J. A RGBD SLAM algorithm combining ORB with PROSAC for indoor mobile robot. In Proceedings of the 2015 4th International Conference on Computer Science and Network Technology (ICCSNT), 19–20 December 2015; pp. 71–74.

5. Henry, P.; Krainin, M.; Herbst, E.; Ren, X.; Fox, D. RGB-D mapping: Using Kinect-style depth cameras for dense 3D modeling of indoor environments. Int. J. Robot. Res. 2012, 31, 647–663.

6. Ibragimov, I.Z.; Afanasyev, I.M. Comparison of ROS-based visual SLAM methods in homogeneous indoor environment. In Proceedings of the 2017 14th Workshop on Positioning, Navigation and Communications (WPNC), Bremen, Germany, 25–26 October 2017.

7. Plouffe, G.; Cretu, A. Static and dynamic hand gesture recognition in depth data using dynamic time warping. IEEE Trans. Instrum. Meas. 2016, 65, 305–316.

8. Wang, C.; Liu, Z.; Chan, S. Superpixel-based hand gesture recognition with Kinect depth camera. IEEE Trans. Multimed. 2015, 17, 29–39.

9. Ren, Z.; Yuan, J.; Meng, J.; Zhang, Z. Robust part-based hand gesture recognition using Kinect sensor. IEEE Trans. Multimed. 2013, 15, 1110–1120.

10. Avalos, J.; Cortez, S.; Vasquez, K.; Murray, V.; Ramos, O.E. Telepresence using the Kinect sensor and the NAO robot. In Proceedings of the 2016 IEEE 7th Latin American Symposium on Circuits & Systems (LASCAS), Florianopolis, Brazil, 28 February–2 March 2016; pp. 303–306.

11. Berri, R.; Wolf, D.; Osório, F.S. Telepresence Robot with Image-Based Face Tracking and 3D Perception with Human Gesture Interface Using Kinect Sensor. In Proceedings of the 2014 Joint Conference on Robotics: SBR-LARS Robotics Symposium and Robocontrol, Sao Carlos, Brazil, 18–23 October 2014; pp. 205–210.

12. Tao, G.; Archambault, P.S.; Levin, M.F. Evaluation of Kinect skeletal tracking in a virtual reality rehabilitation system for upper limb hemiparesis. In Proceedings of the 2013 International Conference on Virtual Rehabilitation (ICVR), Philadelphia, PA, USA, 26–29 August 2013; pp. 164–165.

13. Satyavolu, S.; Bruder, G.; Willemsen, P.; Steinicke, F. Analysis of IR-based virtual reality tracking using multiple Kinects. In Proceedings of the 2012 IEEE Virtual Reality (VR), Costa Mesa, CA, USA, 4–8 March 2012; pp. 149–150.

14. Gotsis, M.; Tasse, A.; Swider, M.; Lympouridis, V.; Poulos, I.C.; Thin, A.G.; Turpin, D.; Tucker, D.; Jordan-Marsh, M. Mixed reality game prototypes for upper body exercise and rehabilitation. In Proceedings of the 2012 IEEE Virtual Reality Workshops (VRW), Costa Mesa, CA, USA, 4–8 March 2012; pp. 181–182.

15. Heimann-Steinert, A.; Sattler, I.; Otte, K.; Röhling, H.M.; Mansow-Model, S.; Müller-Werdan, U. Using New Camera-Based Technologies for Gait Analysis in Older Adults in Comparison to the Established GAITRite System. Sensors 2019, 20, 125.

16. Volák, J.; Koniar, D.; Hargas, L.; Jablončík, F.; Sekel’Ova, N.; Durdík, P. RGB-D imaging used for OSAS diagnostics. 2018 ELEKTRO 2018, 1–5.

17. Guzsvinecz, T.; Szucs, V.; Sik-Lanyi, C. Suitability of the Kinect Sensor and Leap Motion Controller—A Literature Review. Sensors 2019, 19, 1072.

18. Sarbolandi, H.; Lefloch, D.; Kolb, A. Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Comput. Vis. Image Underst. 2015, 139, 1–20.

19. Smisek, J.; Jancosek, M.; Pajdla, T. 3D with Kinect. In Consumer Depth Cameras for Computer Vision; Springer: London, UK, 2013; pp. 3–25.

20. Fankhauser, P.; Bloesch, M.; Rodriguez, D.; Kaestner, R.; Hutter, M.; Siegwart, R. Kinect v2 for mobile robot navigation: Evaluation and modeling. In Proceedings of the 2015 International Conference on Advanced Robotics (ICAR), Istanbul, Turkey, 27–31 July 2015; pp. 388–394.

21. Choo, B.; Landau, M.; Devore, M.; Beling, P. Statistical Analysis-Based Error Models for the Microsoft Kinect™ Depth Sensor. Sensors 2014, 14, 17430–17450.

22. Pagliari, D.; Pinto, L. Calibration of Kinect for Xbox One and Comparison between the Two Generations of Microsoft Sensors. Sensors 2015, 15, 27569–27589.

23. Corti, A.; Giancola, S.; Mainetti, G.; Sala, R. A metrological characterization of the Kinect V2 time-of-flight camera. Robot. Auton. Syst. 2016, 75, 584–594.

24. Zennaro, S.; Munaro, M.; Milani, S.; Zanuttigh, P.; Bernardi, A.; Ghidoni, S.; Menegatti, E. Performance evaluation of the 1st and 2nd generation Kinect for multimedia applications. In Proceedings of the 2015 IEEE International Conference on Multimedia and Expo (ICME), Turin, Italy, 29 June–3 July 2015; pp. 1–6.

25. Bamji, C.S.; Mehta, S.; Thompson, B.; Elkhatib, T.; Wurster, S.; Akkaya, O.; Payne, A.; Godbaz, J.; Fenton, M.; Rajasekaran, V.; et al. 1Mpixel 65nm BSI 320MHz demodulated TOF Image sensor with 3μm global shutter pixels and analog binning. In Proceedings of the 2018 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 11–15 February 2018; pp. 94–96.

26. Wasenmüller, O.; Stricker, D. Comparison of Kinect V1 and V2 Depth Images in Terms of Accuracy and Precision. In Proceedings of the 13th Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; pp. 34–45.

| | Kinect v1 [17] | Kinect v2 [26] | Azure Kinect |
|---|---|---|---|
| Color camera resolution | 1280 × 720 px @ 12 fps; 640 × 480 px @ 30 fps | 1920 × 1080 px @ 30 fps | 3840 × 2160 px @ 30 fps |
| Depth camera resolution | 320 × 240 px @ 30 fps | 512 × 424 px @ 30 fps | NFOV unbinned: 640 × 576 px @ 30 fps; NFOV binned: 320 × 288 px @ 30 fps; WFOV unbinned: 1024 × 1024 px @ 15 fps; WFOV binned: 512 × 512 px @ 30 fps |
| Depth sensing technology | Structured light (pattern projection) | ToF (Time-of-Flight) | ToF (Time-of-Flight) |
| Field of view (depth image) | 57° H, 43° V (alt. 58.5° H, 46.6° V) | 70° H, 60° V (alt. 70.6° H, 60° V) | NFOV unbinned: 75° × 65°; NFOV binned: 75° × 65°; WFOV unbinned: 120° × 120°; WFOV binned: 120° × 120° |
| Specified measuring distance | 0.4–4 m | 0.5–4.5 m | NFOV unbinned: 0.5–3.86 m; NFOV binned: 0.5–5.46 m; WFOV unbinned: 0.25–2.21 m; WFOV binned: 0.25–2.88 m |
| Weight | 430 g (without cables and power supply); 750 g (with cables and power supply) | 610 g (without cables and power supply); 1390 g (with cables and power supply) | 440 g (without cables); 520 g (with cables; power supply not necessary) |
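The field-of-view and depth-resolution rows of the table allow a rough comparison of angular resolution. The sketch below divides each FOV by the pixel count along the same axis. This is only an average over the image: it ignores lens projection and distortion (the wide-FOV modes in particular), so the per-pixel angle actually varies across the frame. The dictionary and function names are hypothetical.

```python
# (horizontal FOV deg, vertical FOV deg), (width px, height px) from the specification table
DEPTH_MODES = {
    "Kinect v1": ((57.0, 43.0), (320, 240)),
    "Kinect v2": ((70.0, 60.0), (512, 424)),
    "Azure Kinect NFOV unbinned": ((75.0, 65.0), (640, 576)),
    "Azure Kinect NFOV binned": ((75.0, 65.0), (320, 288)),
    "Azure Kinect WFOV unbinned": ((120.0, 120.0), (1024, 1024)),
    "Azure Kinect WFOV binned": ((120.0, 120.0), (512, 512)),
}


def mean_angular_resolution(fov_deg, size_px):
    """Average degrees per pixel (FOV / pixel count) along each image axis.

    Only an average: lens projection and distortion make the per-pixel
    angle vary across the image.
    """
    (h_fov, v_fov), (width, height) = fov_deg, size_px
    return h_fov / width, v_fov / height


for name, (fov, size) in DEPTH_MODES.items():
    h, v = mean_angular_resolution(fov, size)
    print(f"{name}: {h:.3f} deg/px horizontal, {v:.3f} deg/px vertical")
```

By this average, the unbinned Azure Kinect modes come out at about 0.117°/px horizontally versus about 0.137°/px for Kinect v2 and 0.178°/px for Kinect v1, while the binned modes are coarser (about 0.234°/px).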

| Distance | Kinect v1 (320 × 240 px) | Kinect v1 (640 × 480 px) | Kinect v2 | Azure Kinect NFOV Binned | Azure Kinect NFOV Unbinned | Azure Kinect WFOV Binned | Azure Kinect WFOV Unbinned |
|---|---|---|---|---|---|---|---|
| 800 mm | 1.0907 | 1.6580 | 1.1426 | 0.5019 | 0.6132 | 0.5546 | 0.8465 |
| 1500 mm | 3.1280 | 3.6496 | 1.4016 | 0.5800 | 0.8873 | 0.8731 | 1.5388 |
| 3000 mm | 10.9928 | 13.6535 | 2.6918 | 0.9776 | 1.7824 | 2.1604 | 8.1433 |
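One way to read this table is to compare how fast each sensor's value grows between the nearest and farthest distances. The sketch below computes the ratio of the 3000 mm value to the 800 mm value for each column. It copies the numbers from the table and assumes (the table itself gives no unit) that they are depth-noise standard deviations in mm; the names are hypothetical.

```python
# Values copied from the table (800, 1500, 3000 mm rows).
# Assumption: the values are depth-noise standard deviations in mm.
NOISE = {
    "Kinect v1 (320 x 240 px)": (1.0907, 3.1280, 10.9928),
    "Kinect v1 (640 x 480 px)": (1.6580, 3.6496, 13.6535),
    "Kinect v2": (1.1426, 1.4016, 2.6918),
    "Azure Kinect NFOV Binned": (0.5019, 0.5800, 0.9776),
    "Azure Kinect NFOV Unbinned": (0.6132, 0.8873, 1.7824),
    "Azure Kinect WFOV Binned": (0.5546, 0.8731, 2.1604),
    "Azure Kinect WFOV Unbinned": (0.8465, 1.5388, 8.1433),
}


def growth_ratio(values):
    """Ratio between the value at 3000 mm and the value at 800 mm."""
    at_800, _at_1500, at_3000 = values
    return at_3000 / at_800


# Print sensors from slowest to fastest growth
for name, values in sorted(NOISE.items(), key=lambda item: growth_ratio(item[1])):
    print(f"{name}: x{growth_ratio(values):.2f} from 800 mm to 3000 mm")
```

The ratio is about 1.95 for the Azure Kinect NFOV binned mode and about 2.36 for Kinect v2, but about 9.6 for the WFOV unbinned mode and about 10.1 for Kinect v1 at 320 × 240 px, so the WFOV unbinned mode degrades with distance almost as quickly as Kinect v1.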

| Angle | 54° | 46° | 39° | 30° | 16° | 0° |
|---|---|---|---|---|---|---|
| Standard deviation (mm) | 0.5957 | 0.6005 | 0.5967 | 0.6045 | 0.6172 | 0.6264 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tölgyessy, M.; Dekan, M.; Chovanec, Ľ.; Hubinský, P. Evaluation of the Azure Kinect and Its Comparison to Kinect V1 and Kinect V2. Sensors 2021, 21, 413. https://doi.org/10.3390/s21020413
