Unmanned Aerial Vehicles (UAVs) for Physical Progress Monitoring of Construction

Abstract

1. Introduction

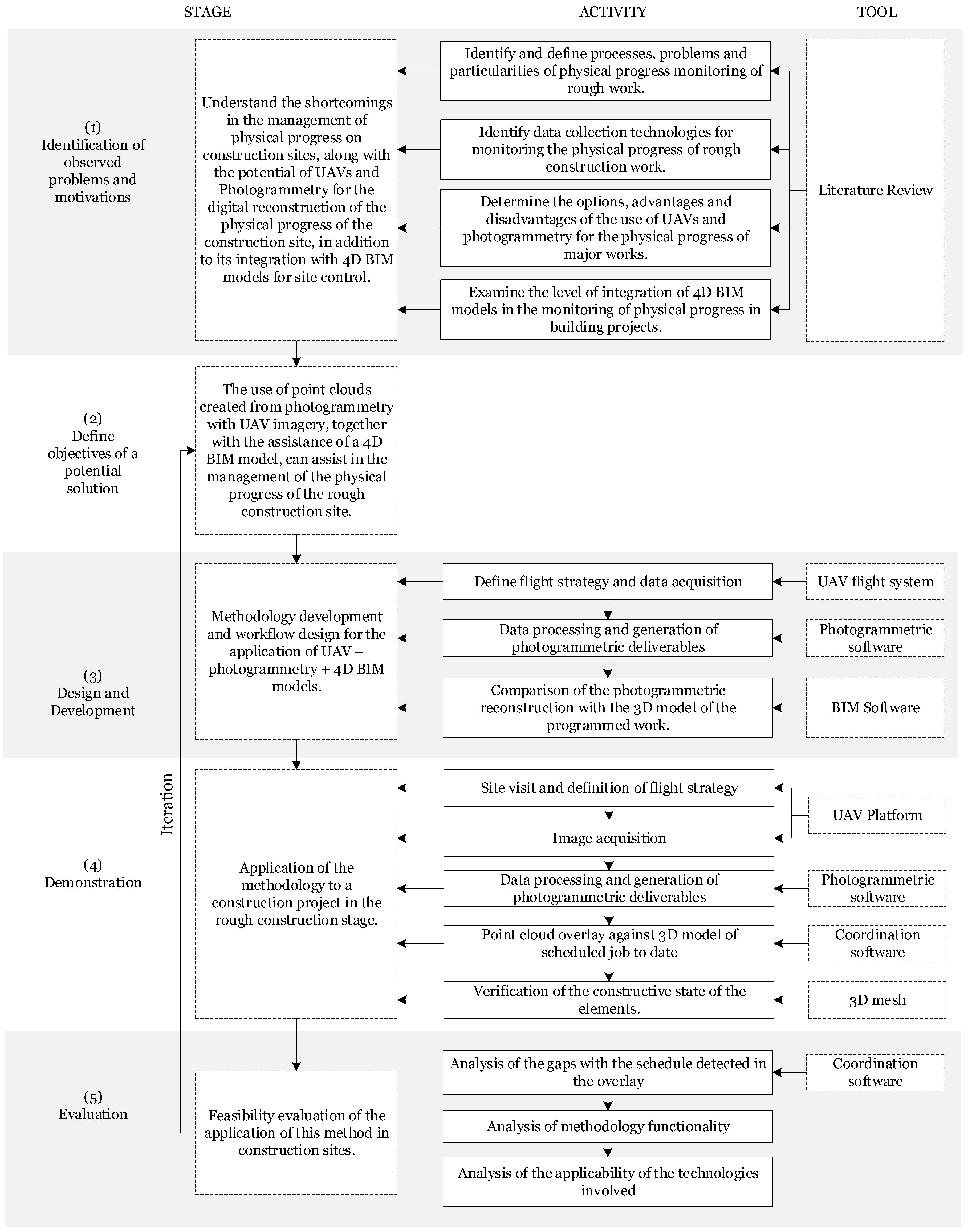

1.1. Research Methodology

- identify and define processes, problems, and particularities of physical progress monitoring of major works;

- identify technologies for data collection from the construction site;

- determine the options available with UAVs and photogrammetry for the digital reconstruction of real scenarios; and

- examine the level of integration of 4D BIM models for construction site monitoring.

1.2. Literature Review

1.2.1. Traditional Construction Monitoring

1.2.2. New Methodologies and Tools for Monitoring Construction Projects

1.2.3. Current Deficiencies and Challenges in Construction Site Monitoring

1.2.4. Image Capture and Processing Technologies under Construction

2. Methods

2.1. Definition of Flight Strategy

2.2. Data Acquisition

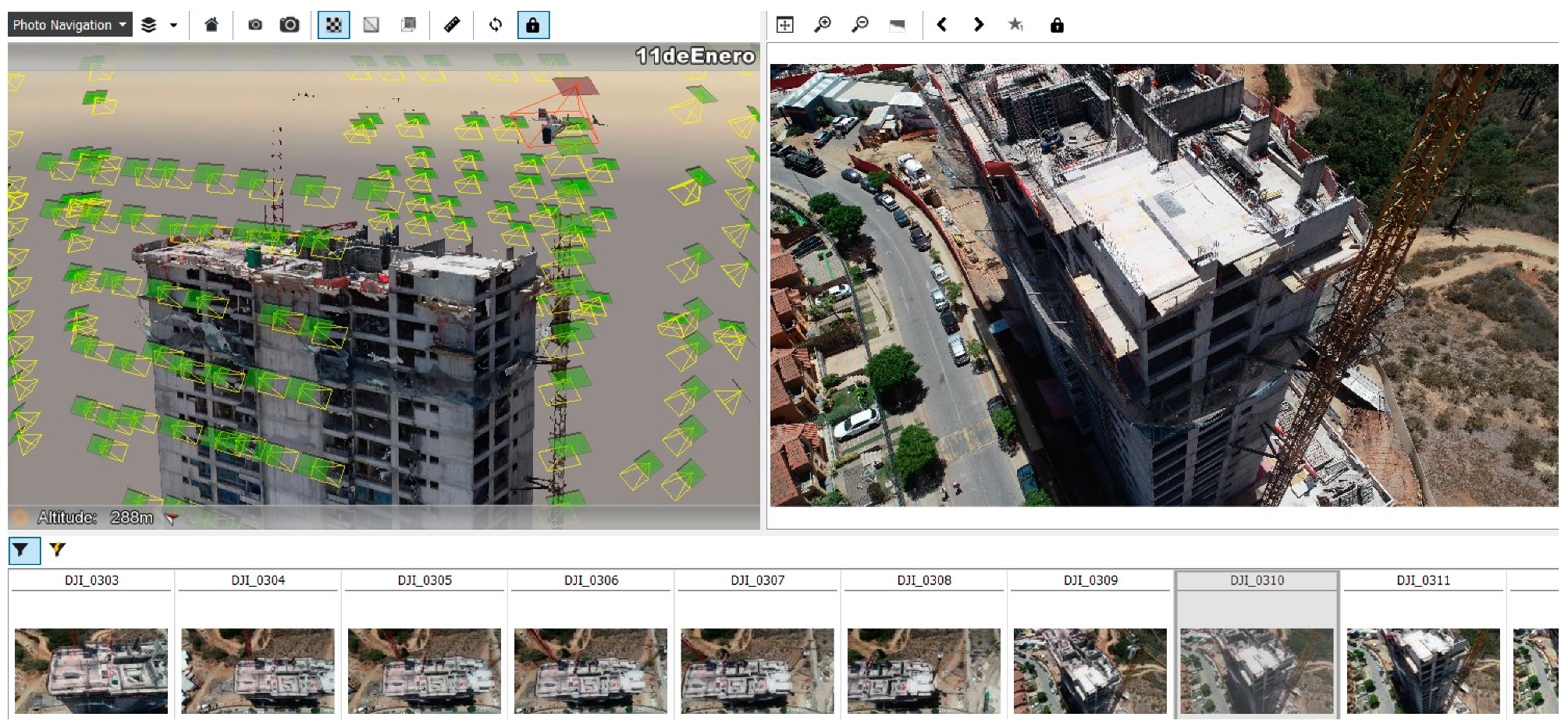

2.3. Data Processing

2.4. Coordination and Monitoring

3. Results

3.1. Definition of the Flight Strategy

- To minimize shadowed areas, flying at hours close to noon was preferable.

- The project location had a humid climate in the early morning hours and experienced an increasing wind speed after noon; this speed was even higher at the height of the working slab, making it difficult for the aircraft to fly.

- The lunch hour for the workers was between 1 p.m. and 2 p.m., leaving the working slab clear of working personnel.

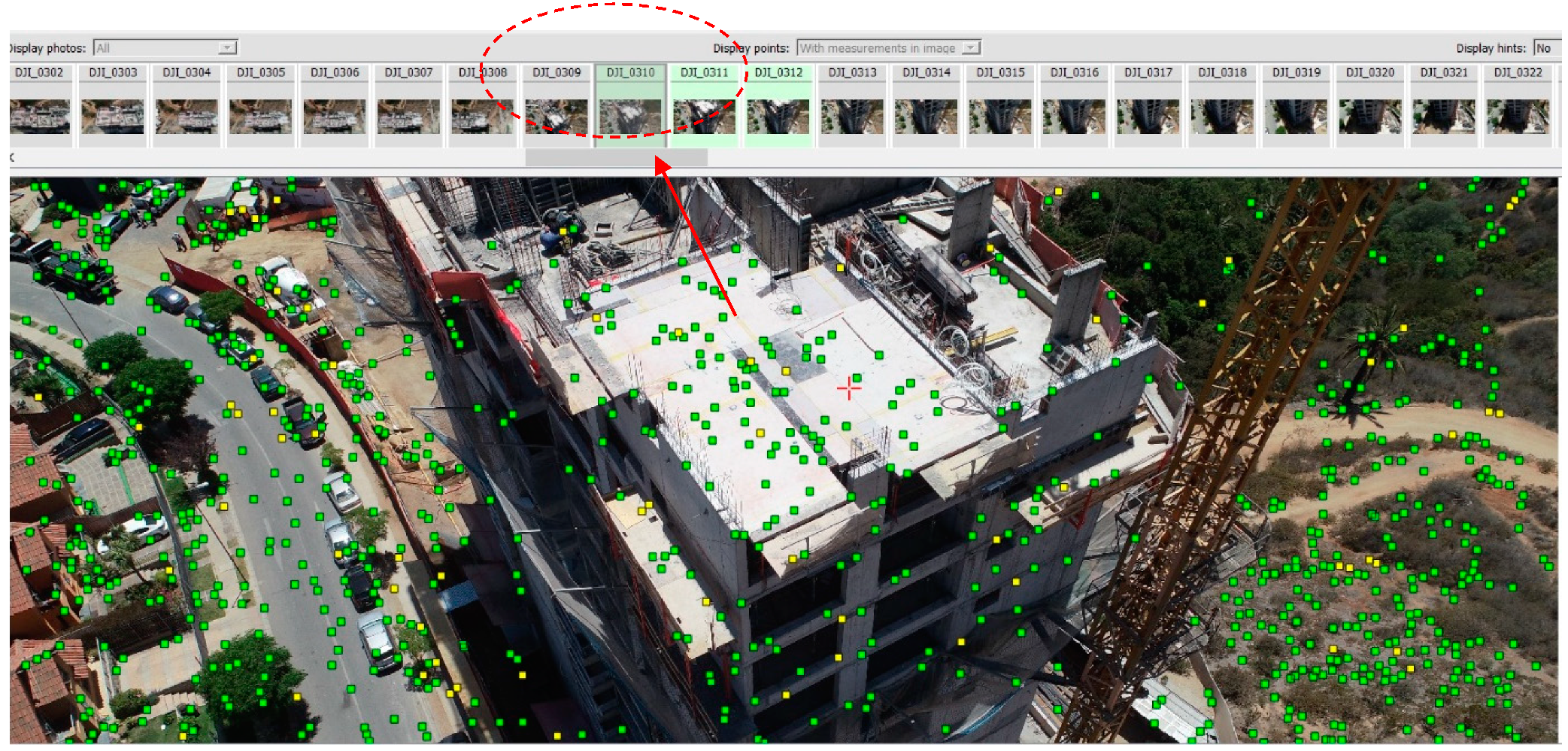

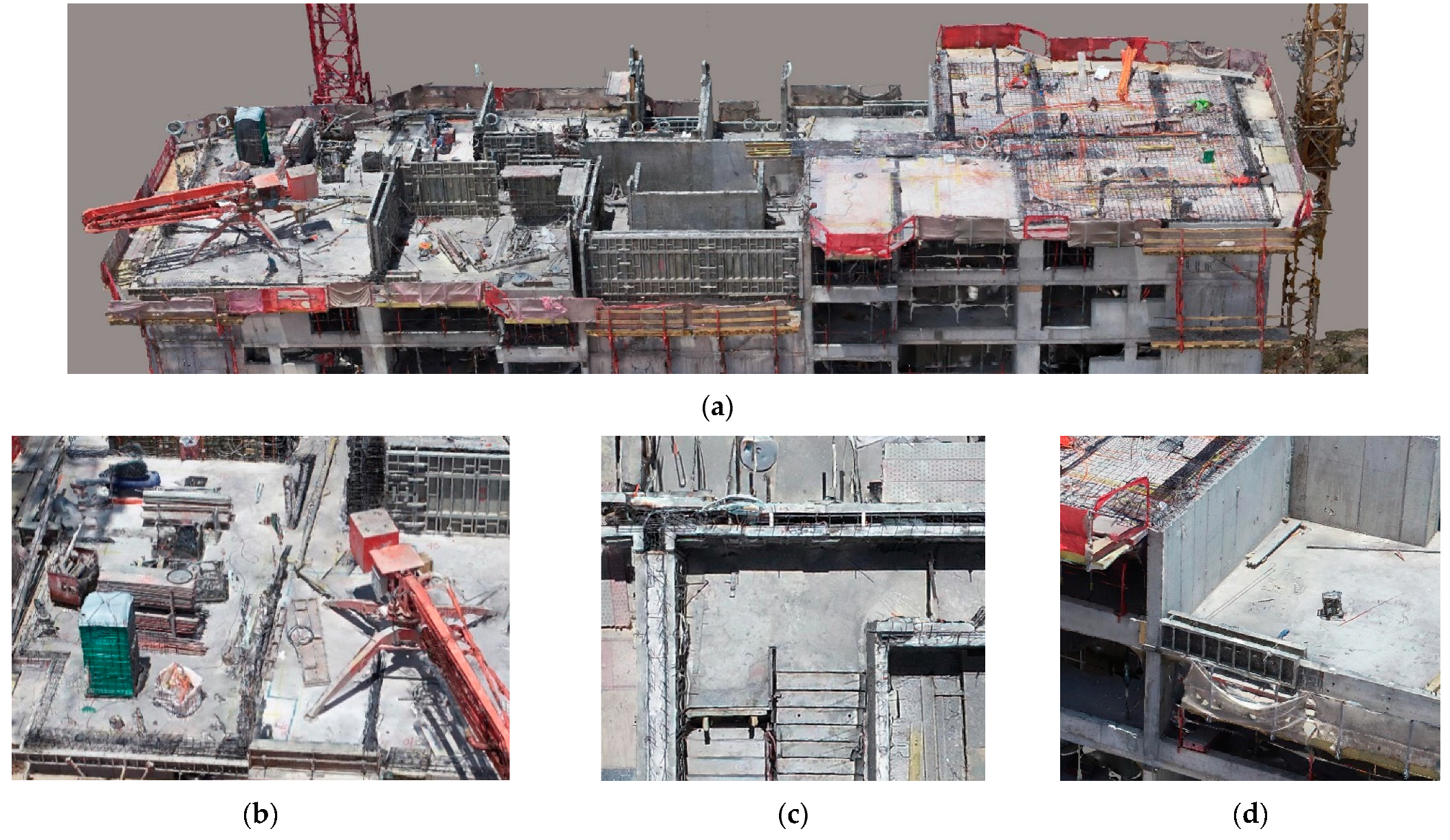

3.2. Inspection and Image Acquisition

- High accuracy level (green): RMS < 1 pixel, which ensures good reprojection.

- Medium accuracy level (yellow): 1 pixel < RMS < 3 pixels; these points can still be used as tie points, but with lower quality.

- Low accuracy level (red): RMS > 3 pixels.
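The three accuracy levels above can be expressed as a small classifier. This is a minimal sketch, not the logic of the photogrammetry software itself: the function name `accuracy_level` is hypothetical, and because the list leaves RMS values of exactly 1 or 3 pixels unassigned, the sketch assumes they belong to the medium level.

```python
def accuracy_level(rms_px: float) -> str:
    """Map a reprojection RMS error (in pixels) to an accuracy level.

    Assumption: the boundary values 1.0 and 3.0 px are treated as
    "medium", since the source thresholds do not assign them.
    """
    if rms_px < 0:
        raise ValueError("RMS error cannot be negative")
    if rms_px < 1.0:
        return "high"    # green: good reprojection
    if rms_px <= 3.0:
        return "medium"  # yellow: usable tie points, lower quality
    return "low"         # red


if __name__ == "__main__":
    # Illustrative values only, not measurements from the study.
    for value in (0.5, 1.9, 3.4):
        print(value, accuracy_level(value))
```

Keeping the thresholds in one function makes it easy to adjust the boundary handling if the processing software documents a different convention.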

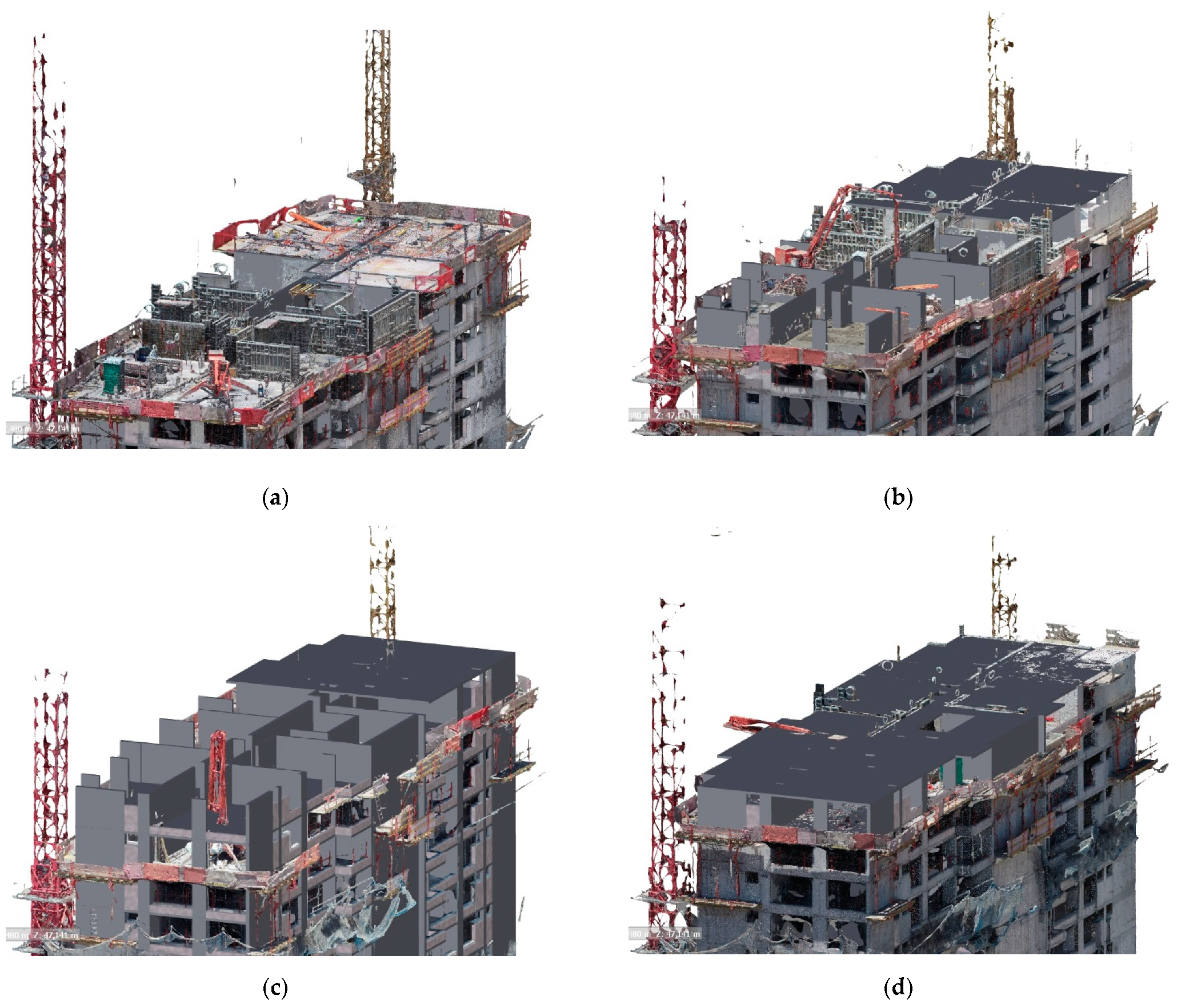

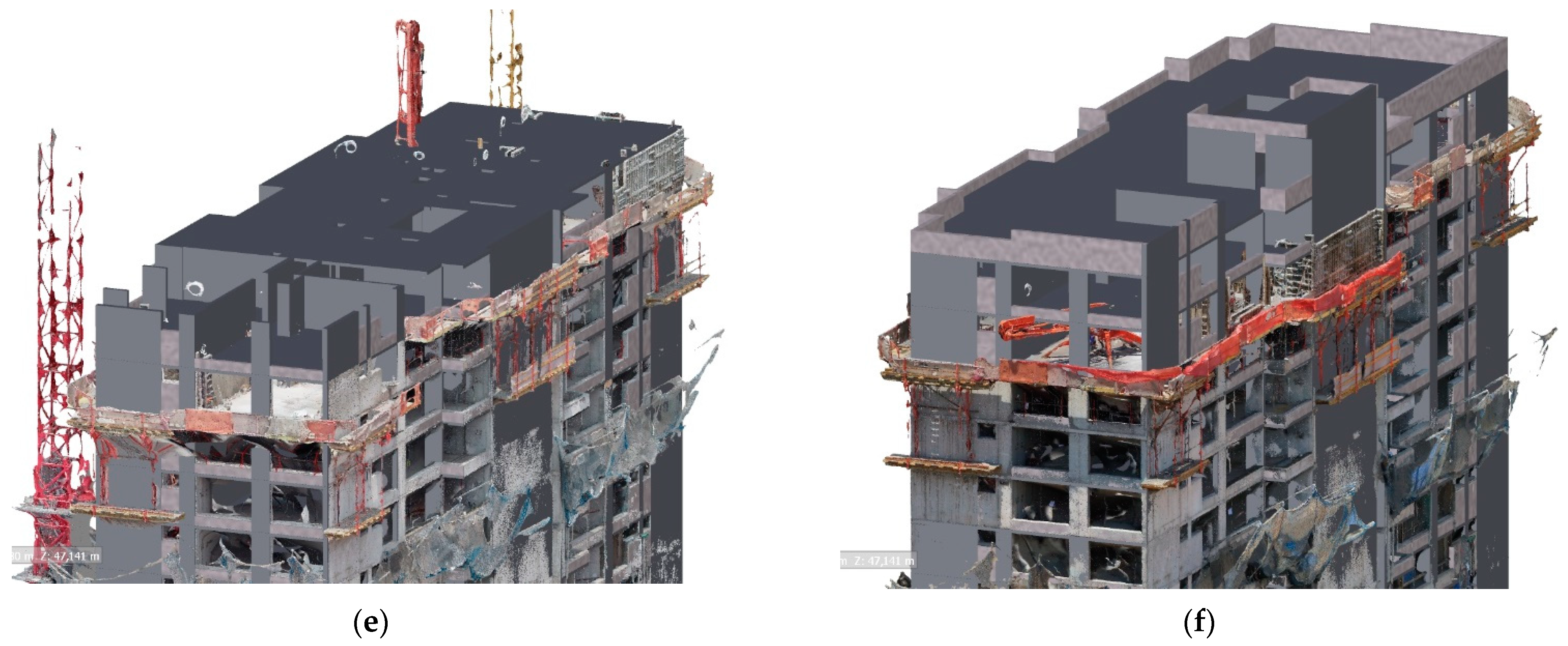

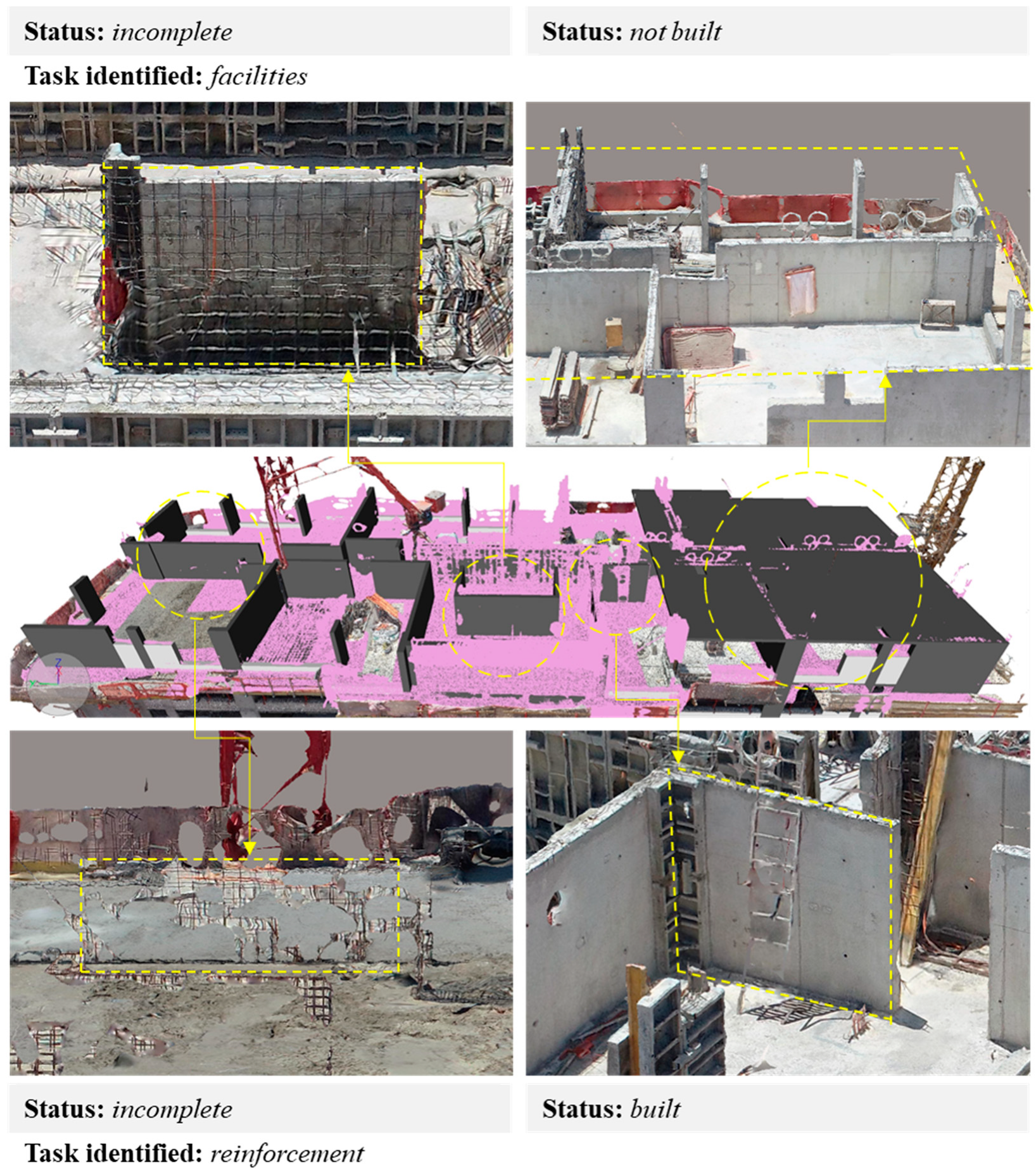

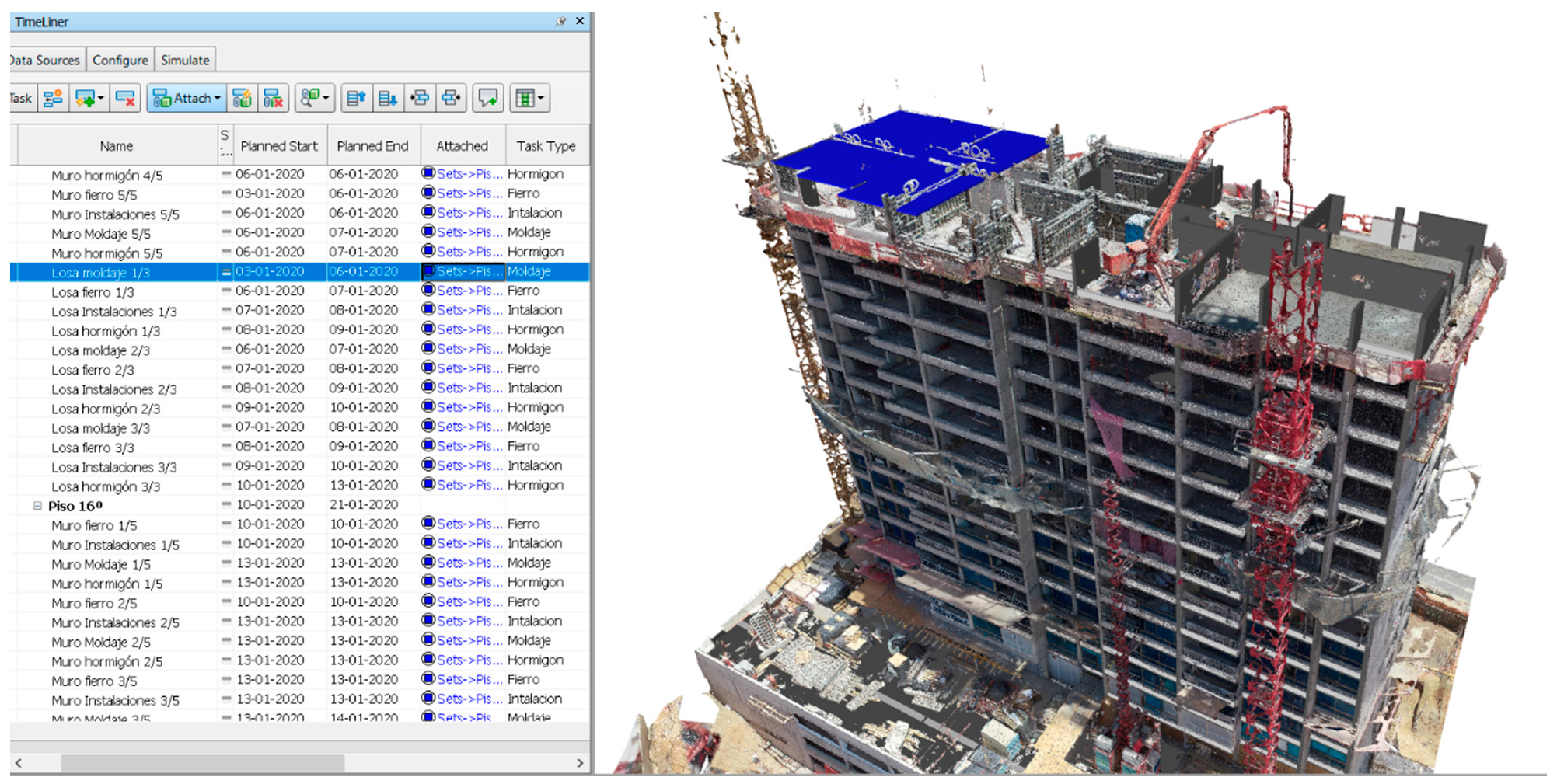

3.3. 4D Coordination and Identification of Work Performed

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Level | Development Status | Work Required |
|---|---|---|
| 1 | The project uses only 2D CAD drawings together with the schedule of activities in a Gantt chart format to monitor the physical progress of the work. | Build the 3D model from the 2D CAD drawings of the project using modeling software and associate the start date of each activity in the schedule with the corresponding elements of the model in 4D coordination software. |
| 2 | The project has a 3D BIM model representative of the final programmed state of the structure. The monitoring is performed through the usual practices of visiting, recording, and checking through the schedule. | Associate the start dates of the activities in the schedule with the corresponding parametric elements of the 3D BIM model in the coordination software. |
| 3 | The project has a 4D BIM model, composed of elements coordinated with the activities of the schedule through their start dates and durations, allowing visualization of the programmed workspace at any date of the calendar. | Generate the monitoring in the base file of the 4D BIM model. |

| Type of Interaction between the Element and the Point Cloud | Element Status |
|---|---|
| Element surface coexisting with cloud points. | Built |
| Volume of the element with cloud points inside or outside it. | Incomplete |
| Volume of the element without cloud points inside it. | Not built |
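The decision rule in the table above can be illustrated with a short sketch. It is only one possible reading of the three rows, not the authors' procedure: it assumes each BIM element is approximated by an axis-aligned box, that a point counts as "on the surface" when it lies within a tolerance `tol` of a box face, and that any point deeper inside the volume marks the element as incomplete. The function name `classify_element` and the 5 cm default tolerance are hypothetical.

```python
from typing import Iterable, Sequence


def classify_element(
    lo: Sequence[float],
    hi: Sequence[float],
    points: Iterable[Sequence[float]],
    tol: float = 0.05,  # hypothetical tolerance in metres
) -> str:
    """Classify a box-shaped element against point-cloud samples.

    lo, hi: opposite corners (x, y, z) of the element's bounding box.
    points: (x, y, z) samples from the photogrammetric point cloud.
    """
    on_surface = 0
    inside = 0
    for p in points:
        # Ignore points that fall outside the box grown by the tolerance.
        if any(c < l - tol or c > h + tol for c, l, h in zip(p, lo, hi)):
            continue
        # A point near any face of the box is counted as a surface point.
        near_face = any(
            abs(c - l) <= tol or abs(c - h) <= tol
            for c, l, h in zip(p, lo, hi)
        )
        if near_face:
            on_surface += 1
        else:
            inside += 1

    if on_surface == 0 and inside == 0:
        return "Not built"   # no cloud points in the element volume
    if inside == 0:
        return "Built"       # cloud points coincide with the surface
    return "Incomplete"      # cloud points intrude into the volume
```

For example, a 1 m cube with two samples on its top face would be reported as "Built", while a single sample at its centre would be reported as "Incomplete". A production check would need real element geometry and a minimum point count to be robust against noise.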

| Type of Use | Materiality | Vertical Construction | Height Per Floor [m] | Floor Area Per Floor [m²] | Total Area [m²] | Total Duration [days] |
|---|---|---|---|---|---|---|
| Housing | Reinforced concrete | 3 basements, 18 floors | 2.52 | 541.6 | 13,053 | 420 |

| Device | Image Resolution [MP] | Focal Length [mm] | Sensor Size (h,v) [mm] | Actual Image Size (h,v) [m] | Step (h,v) [m] |
|---|---|---|---|---|---|
| Phantom 4 Pro | 20 (5472 × 3648) | 8.8 | 12.83 × 7.22 | 21.8 × 12.3 | 4.3 × 4.3 |
| Parrot Anafi | 21 (4068 × 3456) | 3.8 | 5.92 × 5.92 | 23.4 × 23.4 | 4.6 × 8.1 |
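The relation between sensor size, focal length, and the ground area covered by one image follows from the pinhole camera model: footprint = sensor size × distance to the surface / focal length. The sketch below applies it to the camera parameters in the table. The 15 m distance is an assumption, chosen only because it approximately reproduces the tabulated image sizes; the table itself does not state it. The overlap passed to `step_m` is likewise a hypothetical parameter, and both function names are illustrative.

```python
def footprint_m(sensor_mm: float, focal_mm: float, distance_m: float) -> float:
    """Ground length covered by one image side (pinhole camera model)."""
    return sensor_mm * distance_m / focal_mm


def step_m(footprint: float, overlap: float) -> float:
    """Distance between consecutive shots for a given overlap fraction."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint * (1.0 - overlap)


if __name__ == "__main__":
    DISTANCE_M = 15.0  # assumed camera-to-surface distance, not from the source

    # Sensor sizes and focal lengths taken from the table above.
    cameras = {
        "Phantom 4 Pro": {"sensor": (12.83, 7.22), "focal": 8.8},
        "Parrot Anafi": {"sensor": (5.92, 5.92), "focal": 3.8},
    }
    for name, cam in cameras.items():
        h = footprint_m(cam["sensor"][0], cam["focal"], DISTANCE_M)
        v = footprint_m(cam["sensor"][1], cam["focal"], DISTANCE_M)
        print(f"{name}: footprint {h:.1f} m x {v:.1f} m")
```

A step can then be derived with, for example, `step_m(21.8, 0.8)` for a hypothetical 80% overlap; the overlap values actually used in the study should be taken from the flight plan rather than from this sketch.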

| Registration Day | N° 0 | N° 12 | N° 21 | N° 24 | N° 30 | N° 41 |
|---|---|---|---|---|---|---|
| Number of photos uploaded | 235 | 196 | 200 | 208 | 210 | 190 |
| Number of photos used | 210 | 196 | 200 | 208 | 209 | 189 |
| Percentage of photos used (%) | 89 | 100 | 100 | 100 | 100 | 99 |
| Processing time | 5 h 42 min | 4 h 52 min | 4 h 57 min | 5 h 10 min | 5 h 20 min | 4 h 50 min |
| GSD [mm/px] | 9.95 | 11.96 | 10.51 | 10.3 | 10.9 | 11.5 |
| Model scale | 1:30 | 1:36 | 1:32 | 1:32 | 1:32 | 1:40 |
| Image dimension | 5472 × 3078 px | 5472 × 3078 px | 5472 × 3078 px | 5472 × 3078 px | 5472 × 3078 px | 5472 × 3078 px |
| Total tie points | 60,322 | 56,597 | 45,767 | 50,645 | 55,455 | 49,788 |
| Average tie points per image | 1245 | 1333 | 1089 | 1121 | 1280 | 1289 |
| Average RMS error | 0.47 px | 0.51 px | 0.57 px | 0.55 px | 0.51 px | 0.5 px |
| Minimum RMS error | 0.01 px | 0.01 px | 0.01 px | 0.01 px | 0.01 px | 0.01 px |
| Maximum RMS error | 1.88 px | 1.87 px | 1.78 px | 1.74 px | 1.71 px | 1.8 px |
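The "Percentage of photos used" row can be reproduced directly from the two rows above it. The following sketch recomputes it from the tabulated photo counts; the variable names are illustrative, and rounding to the nearest integer is an assumption about how the published percentages were obtained.

```python
# Photo counts per registration day, copied from the table above.
registration_days = [0, 12, 21, 24, 30, 41]
photos_uploaded = [235, 196, 200, 208, 210, 190]
photos_used = [210, 196, 200, 208, 209, 189]

# Share of uploaded photos that were used, rounded to whole percent
# (assumed rounding rule).
percent_used = [
    round(100 * used / uploaded)
    for used, uploaded in zip(photos_used, photos_uploaded)
]

if __name__ == "__main__":
    for day, pct in zip(registration_days, percent_used):
        print(f"Day {day}: {pct}% of uploaded photos used")
```

Under that rounding assumption the computed values agree with the tabulated row, which is a quick consistency check on the photo counts.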

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jacob-Loyola, N.; Muñoz-La Rivera, F.; Herrera, R.F.; Atencio, E. Unmanned Aerial Vehicles (UAVs) for Physical Progress Monitoring of Construction. Sensors 2021, 21, 4227. https://doi.org/10.3390/s21124227
