IGRNet: A Deep Learning Model for Non-Invasive, Real-Time Diagnosis of Prediabetes through Electrocardiograms

Abstract

1. Introduction

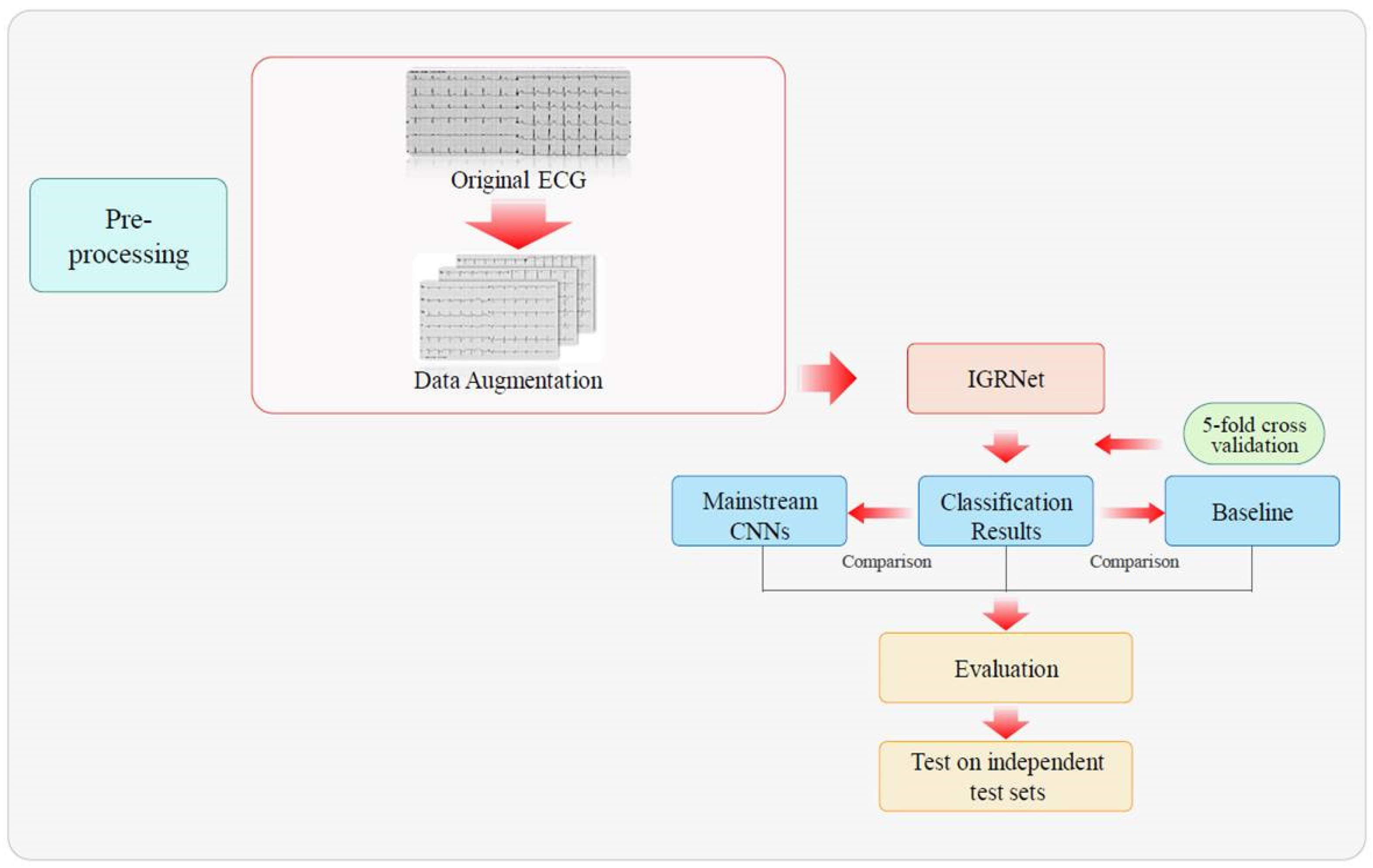

2. Materials and Methods

2.1. Acquisition and Partitioning of Datasets

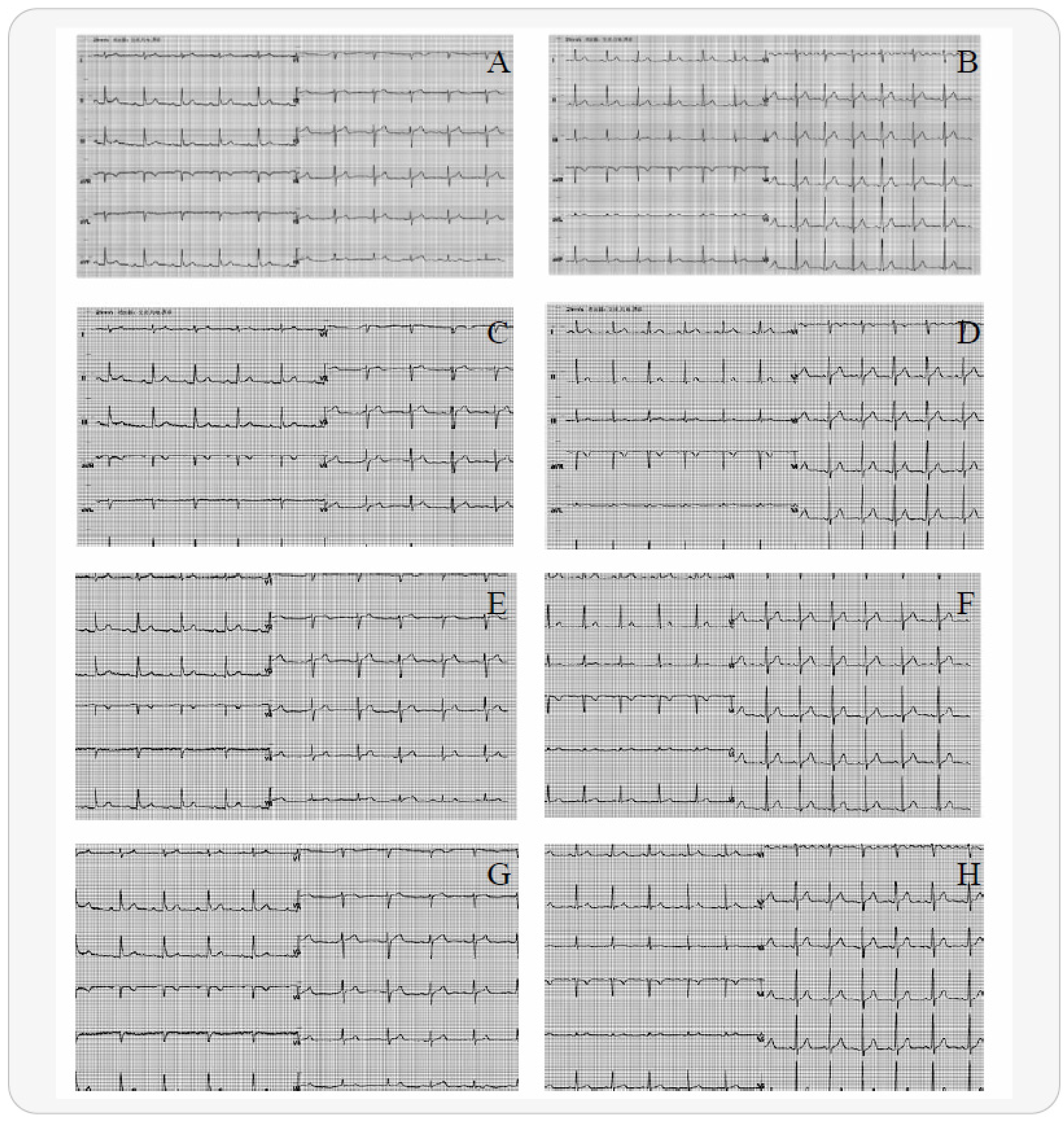

2.2. Electrocardiogram Preprocessing

2.3. Model Architectures

2.3.1. IGRNet

2.3.2. Nonlinear Activation Function in IGRNet

2.3.3. Batch Normalization (BN) in IGRNet

2.3.4. Dropout in IGRNet

2.4. Mainstream Convolutional Neural Networks

2.5. Baseline Algorithms

3. Experiment

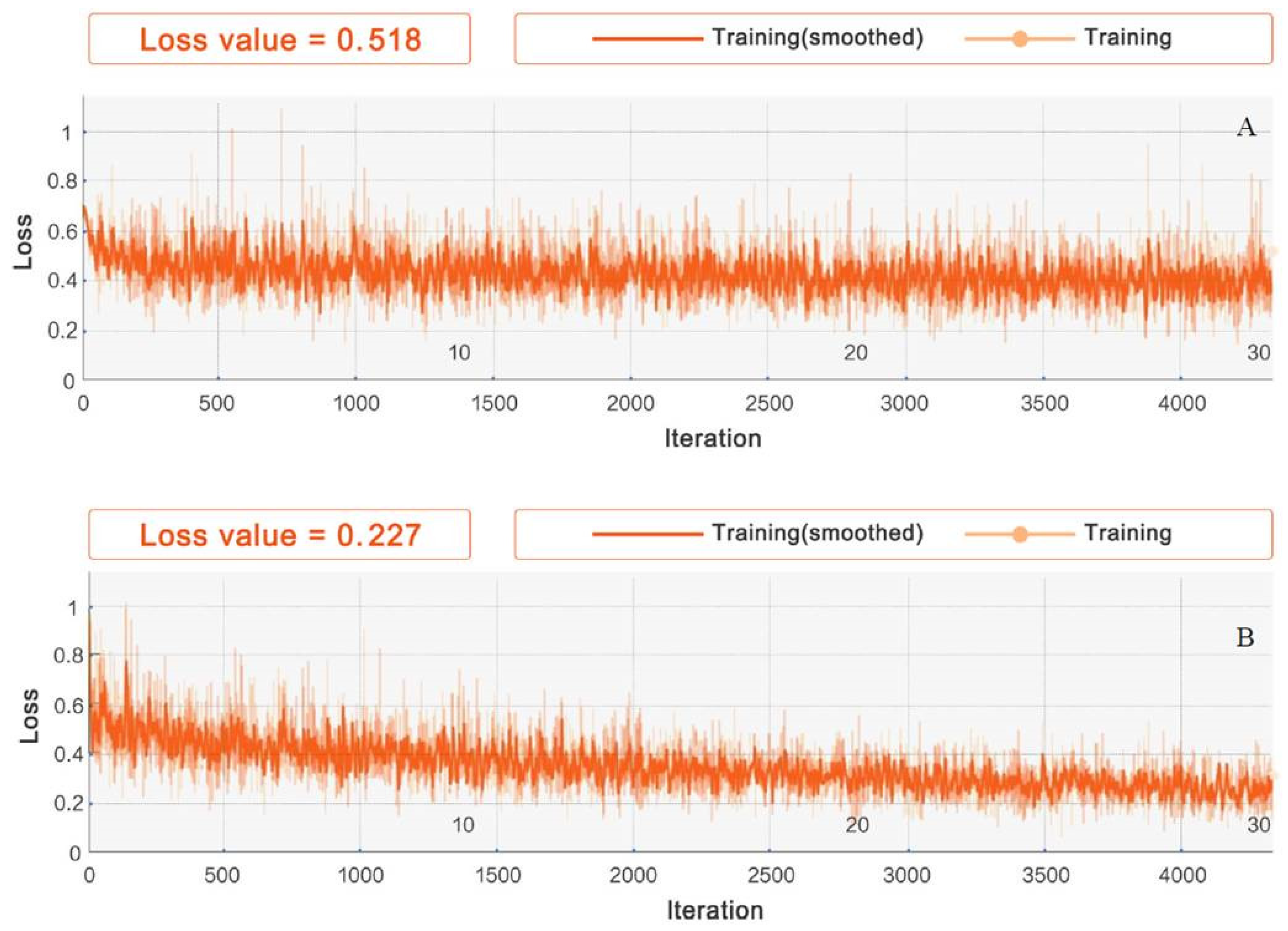

3.1. Experimental Setup

3.2. Experimental Process

- Experiment #1 On dataset_1, IGRNet was trained with each of the four activation functions (ReLU, LeakyReLU, ELU, and ClippedReLU) to find the one with the best performance and thereby improve the generalizability of the model. The four activation functions had been tuned in preliminary experiments. During training, InitialLearnRate was set to 0.0001 and L2Regularization to 0.001.

- Experiment #2 To verify the superiority of the IGRNet architecture for ECG-based prediabetes diagnosis, we compared it with two mainstream CNN models (AlexNet and GoogLeNet) on dataset_1. All models were optimized during training.

- Experiment #3 The tuned baseline models (HOG+SVM, HOG+RF, and HOG+K-NN) were also compared with IGRNet to verify the superiority of the 2D-CNN proposed in this paper.

- Experiment #4 To reduce the interference of other factors with the ECG diagnosis and further improve the performance of the model, IGRNet was cross-validated on dataset_2 through dataset_6.

- Experiment #5 To assess the true performance of IGRNet in IGR diagnosis, the models trained on dataset_1 through dataset_6 were evaluated on the independent test set_0 through test set_6, respectively. In addition, to demonstrate the advantage of the proposed model more rigorously, we also tested the other models and the different activation functions on the total independent test set (test set_0).
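
For reference, the four activation functions compared in Experiment #1 can be sketched with their textbook definitions. This is a minimal illustration, not the authors' implementation: the parameter names `scale`, `alpha`, and `ceiling` and their default values are assumptions chosen for the example, since the tuned values are not given here.

```python
import math


def relu(x: float) -> float:
    """ReLU: pass positive inputs through, map the rest to zero."""
    return max(0.0, x)


def leaky_relu(x: float, scale: float = 0.01) -> float:
    """LeakyReLU: multiply negative inputs by a small slope.

    `scale` is an illustrative default, not a value taken from the paper.
    """
    return x if x > 0 else scale * x


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: negative inputs decay smoothly toward -alpha (assumed alpha)."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def clipped_relu(x: float, ceiling: float = 10.0) -> float:
    """ClippedReLU: ReLU whose output is capped at `ceiling` (assumed value)."""
    return min(max(0.0, x), ceiling)


ACTIVATIONS = {
    "ReLU": relu,
    "LeakyReLU": leaky_relu,
    "ELU": elu,
    "ClippedReLU": clipped_relu,
}

# Evaluate every activation on a few sample inputs.
for name, fn in ACTIVATIONS.items():
    print(name, [round(fn(v), 4) for v in (-2.0, -0.5, 0.0, 0.5, 20.0)])
```

The functions differ only in how they treat negative inputs and very large positive inputs, which is the design choice Experiment #1 compares.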

3.3. Experimental Evaluation
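
The result tables report accuracy (Acc), sensitivity (Sens), specificity (Spec), and precision (Prec). The sketch below shows how these four metrics are conventionally derived from a binary confusion matrix. It assumes that IGR is the positive class (label 1) and Normal is the negative class (label 0); the helper names `confusion_counts` and `binary_metrics` are hypothetical and only serve the illustration.

```python
from typing import Dict, Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int],
                     positive: int = 1) -> Tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn) for a binary classification task."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def _ratio(num: int, den: int) -> float:
    """Divide safely; an undefined metric is reported as NaN."""
    return num / den if den else float("nan")


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute Acc, Sens, Spec, and Prec with label 1 (assumed IGR) as positive."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return {
        "Acc": _ratio(tp + tn, tp + fp + tn + fn),
        "Sens": _ratio(tp, tp + fn),   # true positive rate (recall)
        "Spec": _ratio(tn, tn + fp),   # true negative rate
        "Prec": _ratio(tp, tp + fp),   # positive predictive value
    }


# Toy labels for illustration only; not data from the study.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
print(binary_metrics(y_true, y_pred))
```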

4. Results and Discussion

4.1. Selection of Activation Functions

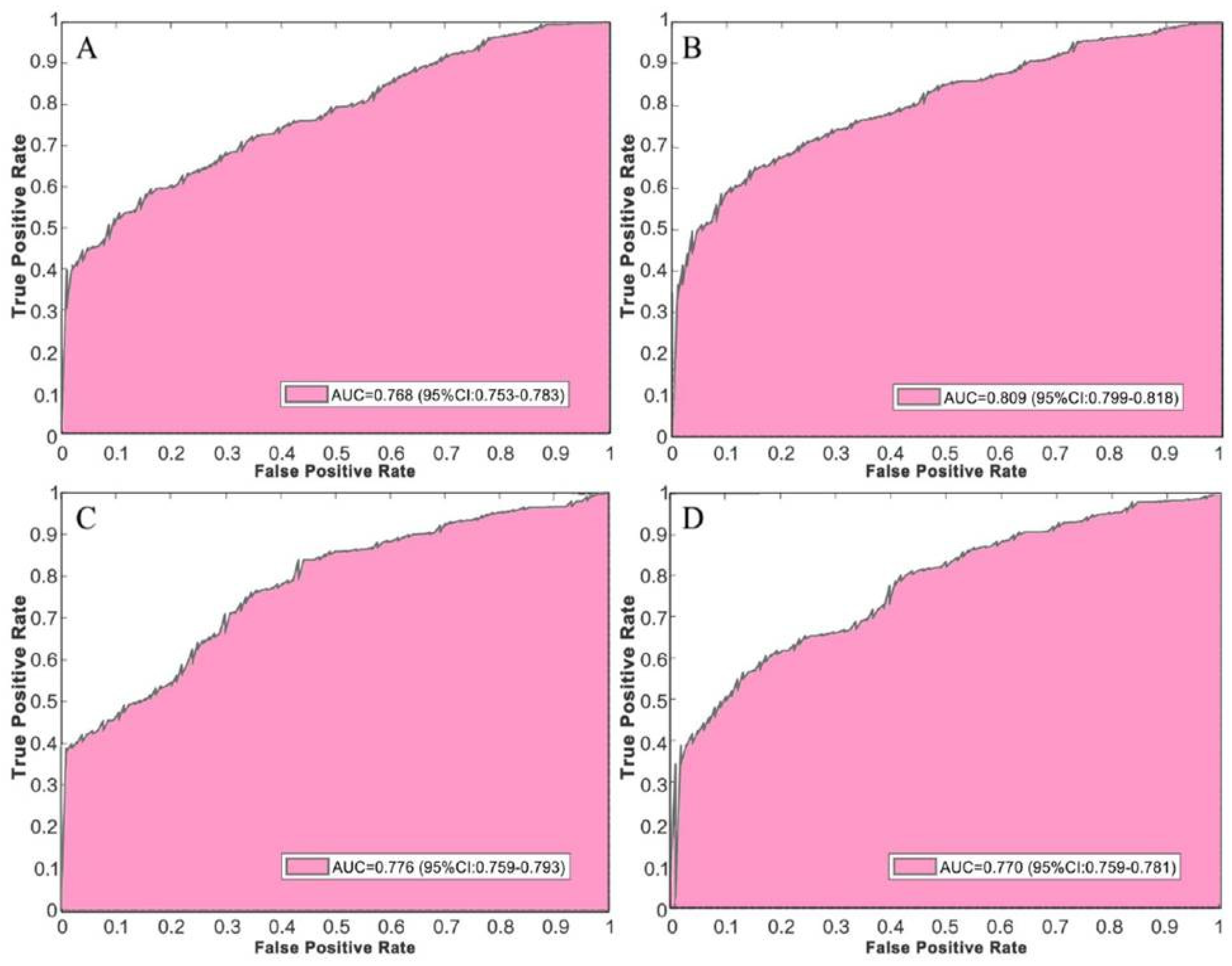

4.2. Comparison with Deep Convolutional Neural Networks

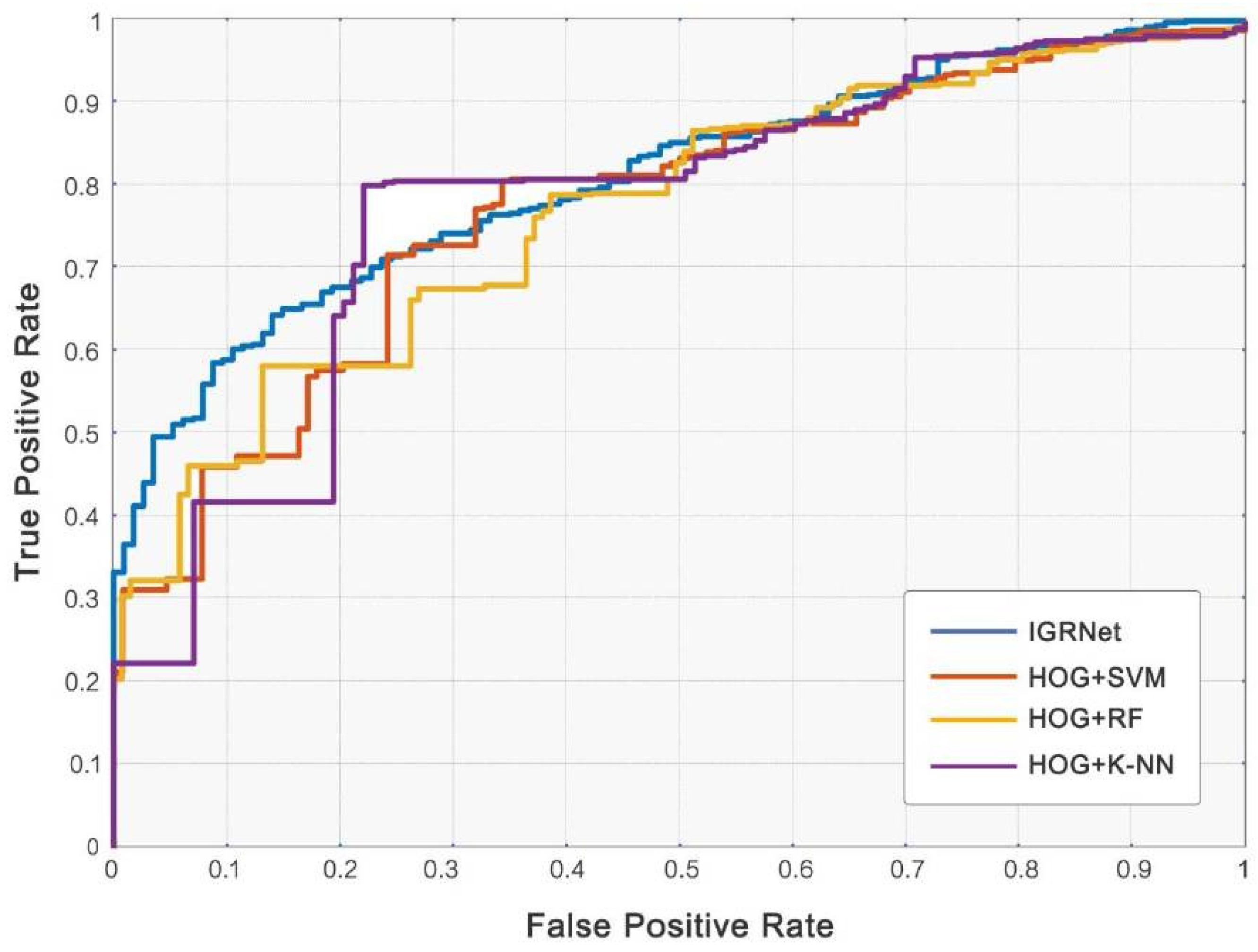

4.3. Comparison with Baseline Algorithms

4.4. Further Improvement

4.5. Test Performance on Independent Test Sets

4.6. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Dataset Name | Prerequisite | Number of Samples (Normal) | Number of Samples (IGR) |
|---|---|---|---|
| dataset_1 | Total | 1750 | 501 |
| dataset_2 | BMI ≥ 25 | 643 | 282 |
| dataset_3 | BMI < 25 | 1107 | 219 |
| dataset_4 | Men | 1043 | 361 |
| dataset_5 | Women | 707 | 140 |
| dataset_6 | Age < 60 | 1673 | 433 |
| dataset_7 | Age ≥ 60 | 77 | 68 |

| Dataset Name | Prerequisite | Number of Samples (Normal) | Number of Samples (IGR) |
|---|---|---|---|
| test set_0 | Total | 503 | 160 |
| test set_1 | Mixed | 250 | 100 |
| test set_2 | BMI ≥ 25 | 228 | 73 |
| test set_3 | BMI < 25 | 275 | 87 |
| test set_4 | Men | 269 | 89 |
| test set_5 | Women | 234 | 71 |
| test set_6 | Age < 60 | 442 | 101 |
| test set_7 | Age ≥ 60 | 61 | 59 |

| Dataset Name | Prerequisite | Number of Samples (Normal) | Number of Samples (IGR) |
|---|---|---|---|
| dataset_1 | Total | 1750 | 1503 |
| dataset_2 | BMI ≥ 25 | 1929 | 1692 |
| dataset_3 | BMI < 25 | 1660 | 1533 |
| dataset_4 | Men | 1564 | 1444 |
| dataset_5 | Women | 1414 | 1400 |
| dataset_6 | Age < 60 | 1673 | 1299 |

| Activation Function | Acc | Sens | Spec | Prec |
|---|---|---|---|---|
| ReLU | 0.795 (0.785–0.805) | 0.763 (0.759–0.768) | 0.849 (0.843–0.854) | 0.887 (0.877–0.896) |
| LeakyReLU | 0.854 (0.839–0.870) | 0.862 (0.853–0.871) | 0.865 (0.857–0.874) | 0.895 (0.882–0.907) |
| ELU | 0.839 (0.830–0.847) | 0.842 (0.839–0.846) | 0.854 (0.846–0.862) | 0.882 (0.874–0.890) |
| ClippedReLU | 0.819 (0.803–0.835) | 0.795 (0.770–0.820) | 0.898 (0.887–0.909) | 0.925 (0.911–0.939) |

| CNN Model | Acc | Sens | Spec | Prec | AUC | Training Time (s) |
|---|---|---|---|---|---|---|
| IGRNet | 0.854 (0.839–0.870) | 0.862 (0.853–0.871) | 0.865 (0.857–0.874) | 0.895 (0.882–0.907) | 0.809 (0.799–0.818) | 940.6 (901.1–980.1) |
| AlexNet | 0.807 (0.792–0.822) | 0.780 (0.753–0.807) | 0.904 (0.886–0.922) | 0.921 (0.890–0.952) | 0.787 (0.777–0.797) | 6477.2 (6341.8–6612.6) |
| GoogLeNet | 0.820 (0.802–0.838) | 0.752 (0.719–0.786) | 0.924 (0.907–0.941) | 0.906 (0.891–0.921) | 0.716 (0.698–0.733) | 8948.5 (8761.4–9135.6) |

| Classification Method | Acc | Sens | Spec | Prec | AUC | Training Time (s) |
|---|---|---|---|---|---|---|
| IGRNet | 0.854 (0.839–0.870) | 0.862 (0.853–0.871) | 0.865 (0.857–0.874) | 0.895 (0.882–0.907) | 0.809 (0.799–0.818) | 940.6 (901.1–980.1) |
| HOG+SVM | 0.809 (0.795–0.822) | 0.720 (0.703–0.737) | 0.867 (0.836–0.899) | 0.836 (0.803–0.868) | 0.772 (0.764–0.780) | 95.7 (87.5–103.9) |
| HOG+RF | 0.800 (0.774–0.827) | 0.687 (0.670–0.704) | 0.836 (0.794–0.878) | 0.842 (0.826–0.859) | 0.764 (0.749–0.780) | 98.3 (93.8–102.8) |
| HOG+K-NN | 0.824 (0.805–0.844) | 0.718 (0.698–0.739) | 0.904 (0.878–0.929) | 0.891 (0.867–0.915) | 0.775 (0.768–0.782) | 84.8 (77.1–92.5) |

| Dataset | Acc | Sens | Spec | Prec | AUC |
|---|---|---|---|---|---|
| dataset_2 | 0.914 (0.891–0.937) | 0.918 (0.899–0.937) | 0.895 (0.875–0.915) | 0.911 (0.895–0.927) | 0.854 (0.845–0.863) |
| dataset_3 | 0.927 (0.916–0.938) | 0.882 (0.853–0.911) | 0.967 (0.962–0.972) | 0.960 (0.949–0.971) | 0.861 (0.838–0.884) |
| dataset_4 | 0.869 (0.848–0.890) | 0.785 (0.780–0.790) | 0.916 (0.904–0.928) | 0.920 (0.902–0.938) | 0.844 (0.829–0.859) |
| dataset_5 | 0.878 (0.865–0.891) | 0.814 (0.800–0.828) | 0.961 (0.955–0.967) | 0.956 (0.934–0.978) | 0.851 (0.831–0.871) |
| dataset_6 | 0.888 (0.869–0.907) | 0.755 (0.752–0.758) | 0.980 (0.974–0.986) | 0.959 (0.950–0.968) | 0.858 (0.834–0.882) |
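
Experiment #4 evaluates IGRNet by cross-validation on dataset_2 through dataset_6. As a hedged illustration of how such a split can be organized, the sketch below builds stratified folds so that each fold keeps roughly the same Normal-to-IGR ratio. The number of folds (`k=5`), the seed, and the function name `stratified_k_fold` are assumptions made for the example; the source does not specify the fold count or the splitting procedure.

```python
import random
from collections import defaultdict
from typing import Hashable, Iterator, List, Sequence, Tuple


def stratified_k_fold(labels: Sequence[Hashable], k: int = 5,
                      seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, val_idx) pairs that keep class proportions per fold."""
    # Group sample indices by class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    folds: List[List[int]] = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal indices round-robin so every fold receives about 1/k of the class.
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)

    for i in range(k):
        val_idx = sorted(folds[i])
        train_idx = sorted(idx for j, fold in enumerate(folds)
                           if j != i for idx in fold)
        yield train_idx, val_idx


# Toy labels for illustration only: 0 = Normal, 1 = IGR.
labels = [0] * 20 + [1] * 10
for fold_no, (train_idx, val_idx) in enumerate(stratified_k_fold(labels), start=1):
    n_igr = sum(labels[i] for i in val_idx)
    print(f"fold {fold_no}: train={len(train_idx)} val={len(val_idx)} val_IGR={n_igr}")
```

Each sample appears in exactly one validation fold, so per-fold metrics can be averaged into a single summary for each dataset.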

| Dataset | Acc | Sens | Spec | Prec | AUC | Test Time (s) |
|---|---|---|---|---|---|---|
| test set_0 | 0.778 | 0.808 | 0.775 | 0.852 | 0.773 | 101.2 |
| test set_1 | 0.781 | 0.798 | 0.789 | 0.846 | 0.777 | 57.7 |
| test set_2 | 0.850 | 0.834 | 0.820 | 0.879 | 0.808 | 56.4 |
| test set_3 | 0.856 | 0.839 | 0.902 | 0.887 | 0.825 | 58.3 |
| test set_4 | 0.821 | 0.760 | 0.925 | 0.901 | 0.801 | 58.4 |
| test set_5 | 0.833 | 0.800 | 0.907 | 0.888 | 0.794 | 57.2 |
| test set_6 | 0.829 | 0.697 | 0.892 | 0.874 | 0.788 | 85.9 |

| Activation Function | Acc | Sens | Spec | Prec | AUC |
|---|---|---|---|---|---|
| ReLU | 0.739 | 0.687 | 0.765 | 0.819 | 0.742 |
| LeakyReLU | 0.778 | 0.808 | 0.775 | 0.852 | 0.773 |
| ELU | 0.765 | 0.784 | 0.809 | 0.822 | 0.764 |
| ClippedReLU | 0.756 | 0.799 | 0.780 | 0.834 | 0.761 |

| Model | Acc | Sens | Spec | Prec | AUC | Test Time (s) |
|---|---|---|---|---|---|---|
| IGRNet | 0.778 | 0.808 | 0.775 | 0.852 | 0.773 | 101.2 |
| AlexNet | 0.749 | 0.770 | 0.821 | 0.862 | 0.755 | 117.6 |
| GoogLeNet | 0.754 | 0.693 | 0.837 | 0.846 | 0.689 | 125.1 |
| HOG+SVM | 0.736 | 0.698 | 0.768 | 0.840 | 0.757 | 13.5 |
| HOG+RF | 0.741 | 0.685 | 0.755 | 0.853 | 0.752 | 18.8 |
| HOG+K-NN | 0.760 | 0.705 | 0.799 | 0.837 | 0.761 | 11.7 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, L.; Mu, Y.; Zhao, J.; Wang, X.; Che, H. IGRNet: A Deep Learning Model for Non-Invasive, Real-Time Diagnosis of Prediabetes through Electrocardiograms. Sensors 2020, 20, 2556. https://doi.org/10.3390/s20092556
