Voice Communication in Noisy Environments in a Smart House Using Hybrid LMS+ICA Algorithm

Abstract

1. Introduction

- To ensure control of operational and technical functions (blinds, lights, heating, cooling, and forced ventilation) in the SH rooms (living room, kitchen, dining room, and bedroom) using the KNX technology.

- To ensure recognition of individual commands for the control of operational and technical functions in SH.

- To record individual voice commands (“Light on”, “Light off”, “Turn on the washing machine”, “Turn off the washing machine”, “Dim up”, “Dim down”, “Turn on the vacuum cleaner”, “Turn off the vacuum cleaner”, “Turn on the dishwasher”, “Turn off the dishwasher”, “Fan on”, “Fan off”, “Turn on the TV”, “Turn off the TV”, “Blinds up”, “Blinds down”, “Blinds up left”, “Blinds up right”, “Blinds up middle”, “Blinds down left”, “Blinds down right”, and “Blinds down middle”).

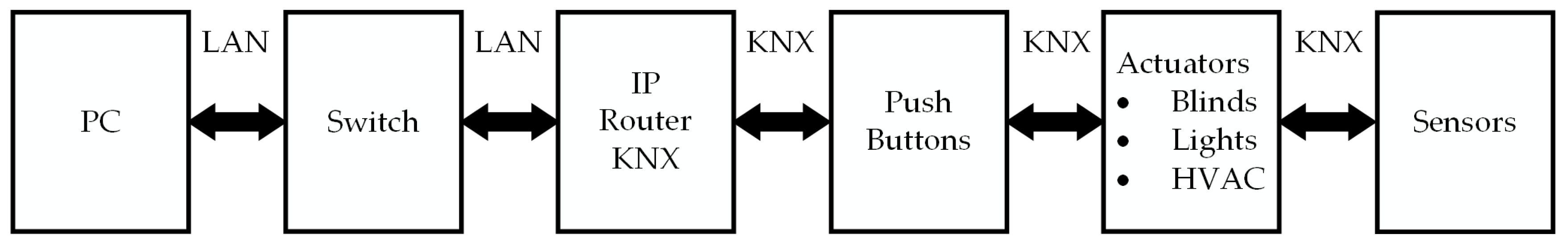

- To ensure data connectivity among the building automation technology, the sound card, and the speech recognition software tool.

- To upload sample additive interference in a real SH environment (TV, vacuum cleaner, washing machine, dishwasher, and fan).

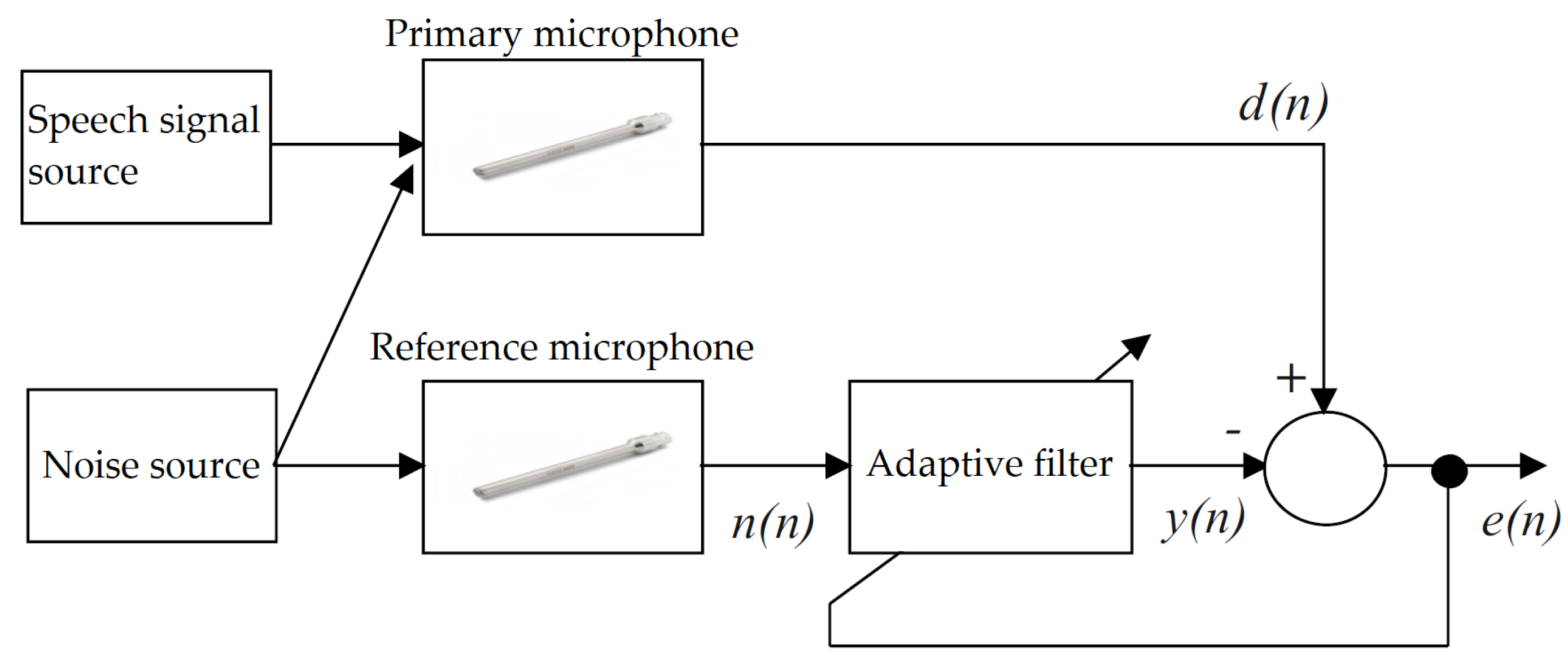

- To ensure cancelling of additive noise in the speech signal using the least mean squares (LMS) algorithm and independent component analysis (ICA).

- To visualize the aforementioned processes in a visualization software application with an SH simulation floor plan; in this work, the measurement and processing of the speech signal were implemented in the LabVIEW software tool together with a database of interference recordings.

- To ensure the highest possible recognition success rate of speech commands in a real SH environment with additive noise.

2. Related Work

- the RASTA method (RelAtive SpecTrAl) [38], and

- the Mel-frequency cepstral analysis (MFCC), for example,

- the hidden Markov models (HMM) [53], and

- artificial neural networks (ANN) [54], for example,

- feed-forward neural network (NN) with back-propagation algorithm and radial basis function neural networks [55],

- an automatic speech recognition (ASR) based approach for speech therapy of aphasic patients [56],

- fast adaptation of deep neural network based on discriminant codes for speech recognition [57],

- implementation of DNN-HMM acoustic models for phoneme recognition [58],

- combination of features in a hybrid HMM/MLP and a HMM/GMM speech recognition system [59], and

- hybrid continuous speech recognition systems by HMM, MLP and SVM [60].

3. The Hardware Equipment in SH

3.1. SH Automation with the KNX Technology

3.2. Steinberg UR44 Sound Card

3.3. RØDE NT5 Measuring Microphones

4. The Software Equipment in SH

4.1. ETS5 - KNX Technology Parametrisation

4.2. LabVIEW Graphical Development Environment

- Interactive Graphical User Interface (GUI)—the so-called front panel, which simulates the front panel of a physical device. It contains objects, such as controls and indicators, which can be used to control the running of the application, to enter parameters and to obtain information about the results processed.

- Block diagram, in which the sequence of evaluation of the individual program components (the program algorithm itself, their interconnection and parameters) is defined. Each component contains input and output connection points. The individual connection points can be connected to the elements on the panel using a wiring tool.

- Subordinate virtual instruments, the so-called subVIs. A virtual instrument (VI) has a modular, hierarchical structure: it can be used on its own as an entire program, or through its individual subVIs. Each VI has an icon, which represents it in the block diagram, and a connector whose terminals are assigned to its input and output parameters.

4.3. Speech Recognition

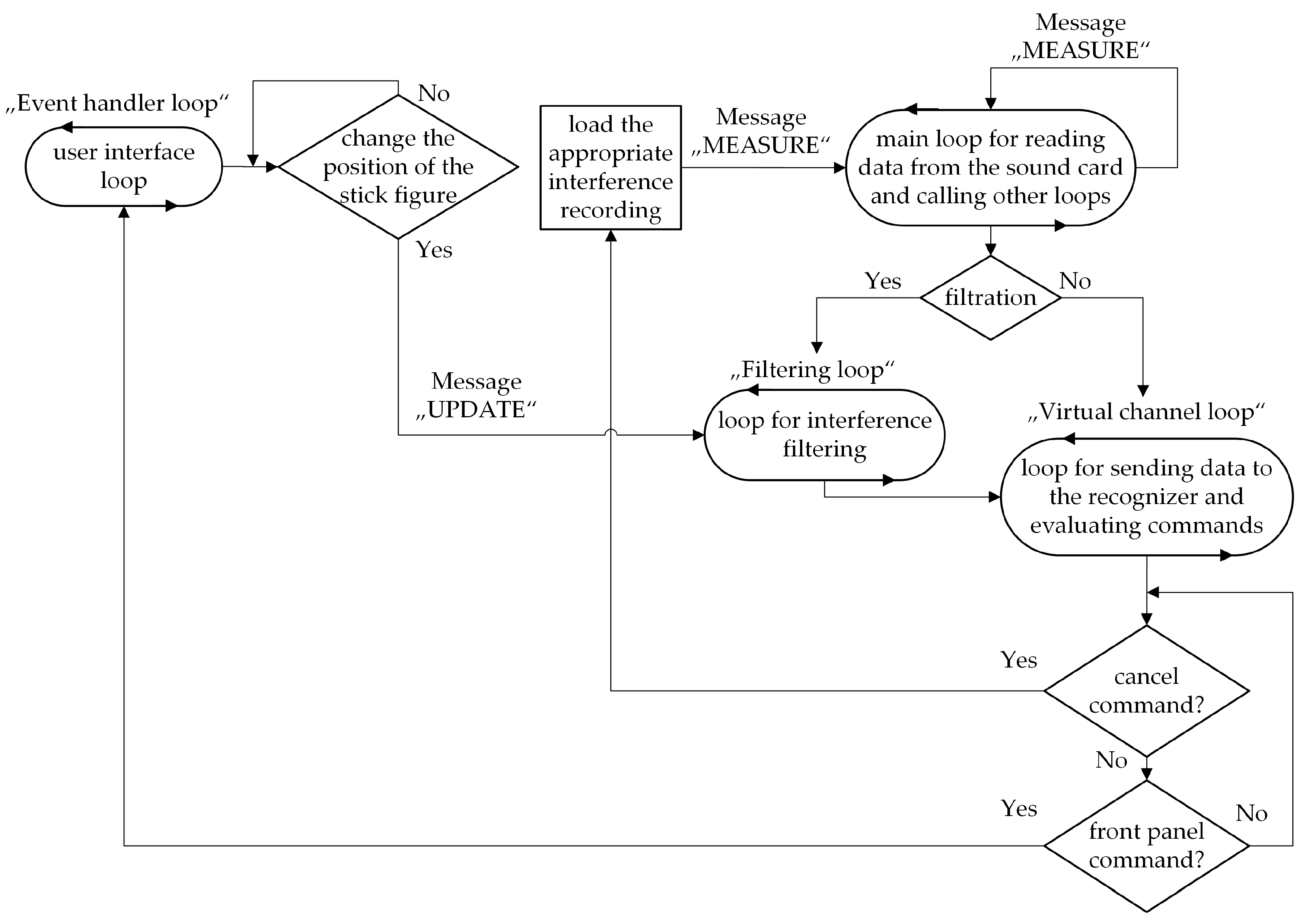

5. SW Application for Automation Voice Control in a Real SH

5.1. Visualization

5.2. Speech Recognition

5.3. Virtual Cable Connection

5.4. The Main Loop for Data Reading

5.5. Visualization of a “Smart Home”

5.6. Glossary of Commands

5.7. Application Control

6. The Mathematical Methods Used

6.1. Least Mean Squares Algorithm

6.2. Independent Component Analysis

6.3. Prerequisites for ICA Method Processing

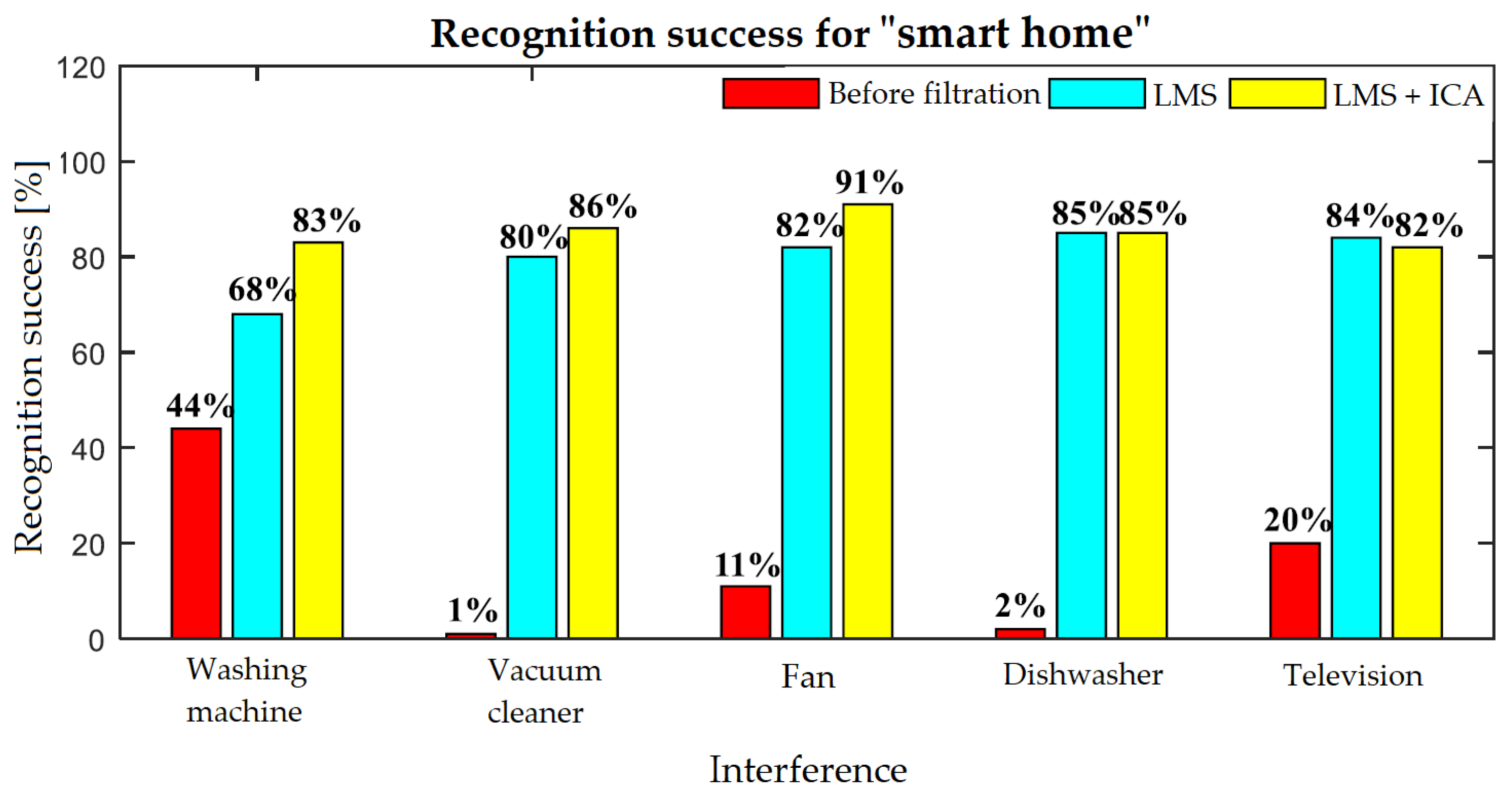

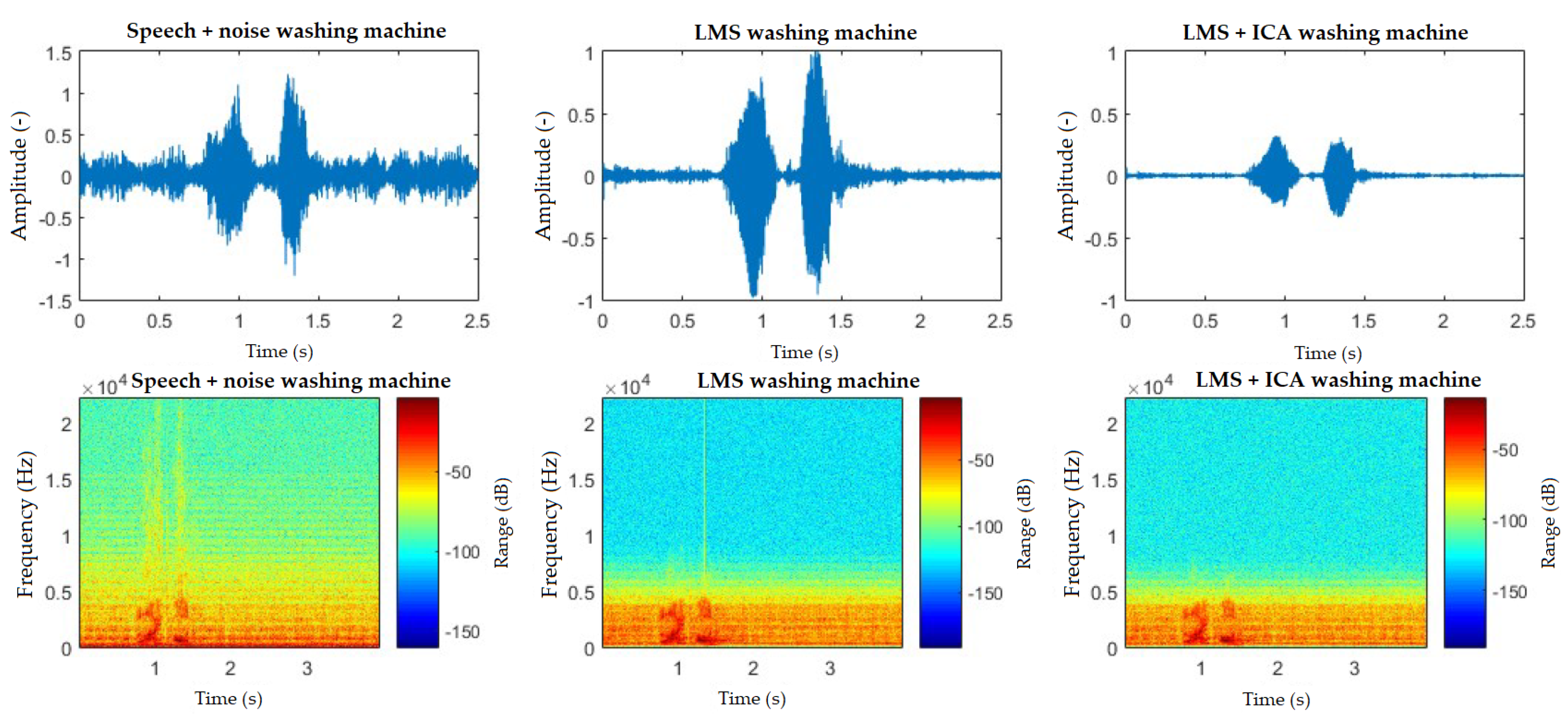

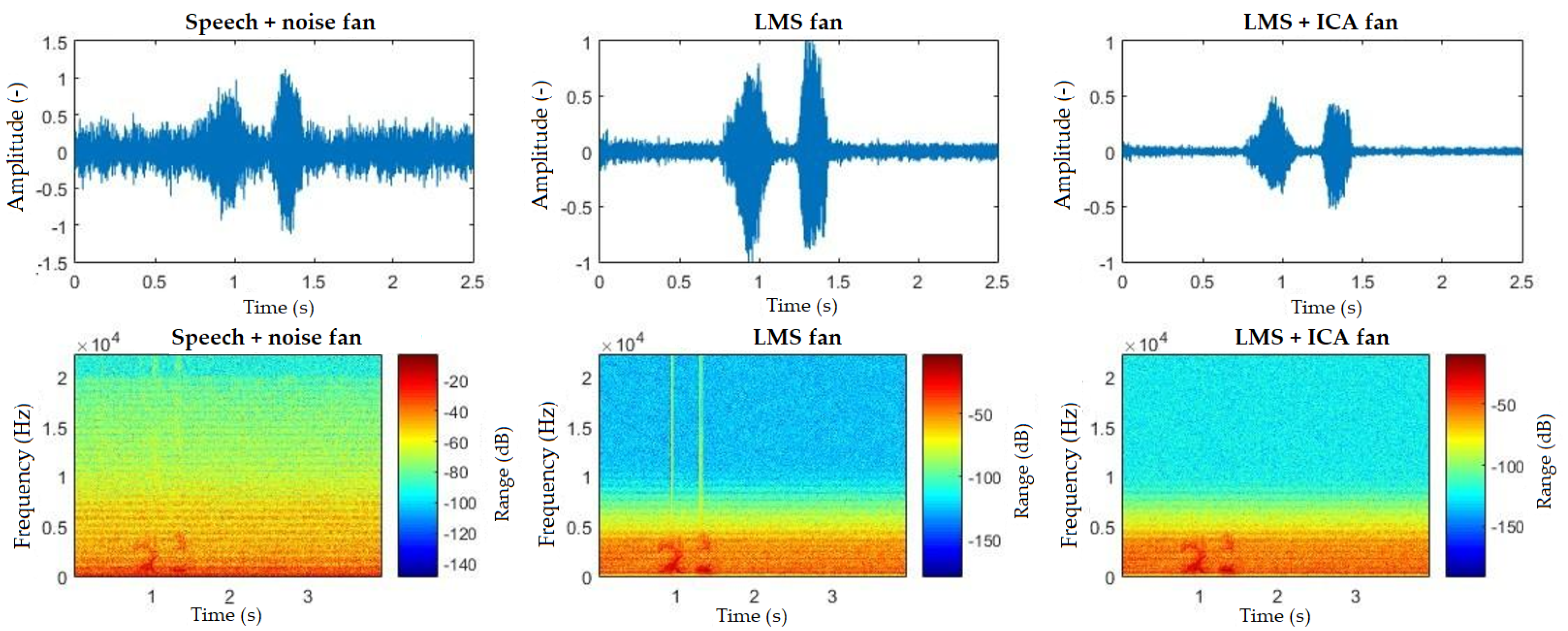

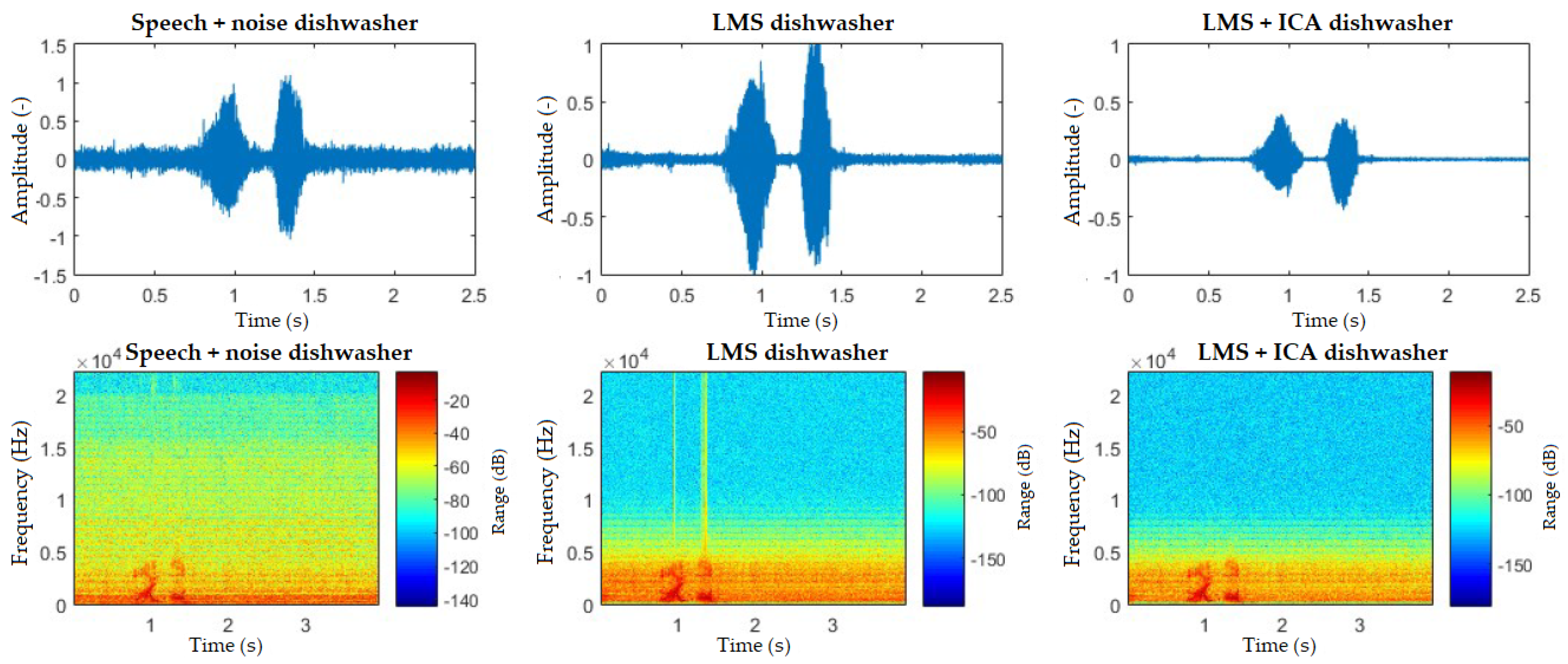

7. Experimental Part—Results

7.1. Selected Filtering Methods and Recognition Success Rate

7.2. Search for Optimal Parameter Settings for the LMS Algorithm

7.3. Independent Component Analysis

7.4. Recognition Success Rate

8. Discussion

9. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Dotihal, R.; Sopori, A.; Muku, A.; Deochake, N.; Varpe, D. Smart Homes Using Alexa and Power Line Communication in IoT: ICCNCT 2018; Springer Nature: Basel, Switzerland, 2019; pp. 241–248. [Google Scholar] [CrossRef]

- Erol, B.A.; Wallace, C.; Benavidez, P.; Jamshidi, M. Voice Activation and Control to Improve Human Robot Interactions with IoT Perspectives. In Proceedings of the 2018 World Automation Congress (WAC), Stevenson, WA, USA, 3–6 June 2018; pp. 1–5. [Google Scholar]

- Diaz, A.; Mahu, R.; Novoa, J.; Wuth, J.; Datta, J.; Yoma, N.B. Assessing the effect of visual servoing on the performance of linear microphone arrays in moving human-robot interaction scenarios. Comput. Speech Lang. 2021, 65, 101136. [Google Scholar] [CrossRef]

- Novoa, J.; Wuth, J.; Escudero, J.P.; Fredes, J.; Mahu, R.; Yoma, N.B. DNN-HMM based Automatic Speech Recognition for HRI Scenarios. In Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018. [Google Scholar] [CrossRef]

- Grout, I. Human-Computer Interaction in Remote Laboratories with the Leap Motion Controller. In Proceedings of the 15th International Conference on Remote Engineering and Virtual Instrumentation, Duesseldorf, Germany, 21–23 March 2019; pp. 405–414. [Google Scholar] [CrossRef]

- He, S.; Zhang, A.; Yan, M. Voice and Motion-Based Control System: Proof-of-Concept Implementation on Robotics via Internet-of-Things Technologies. In Proceedings of the 2019 ACM Southeast Conference, Kennesaw, GA, USA, 18–20 April 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 102–108. [Google Scholar] [CrossRef]

- Kennedy, S.; Li, H.; Wang, C.; Liu, H.; Wang, B.; Sun, W. I Can Hear Your Alexa: Voice Command Fingerprinting on Smart Home Speakers; IEEE: Washington, DC, USA, 2019; pp. 232–240. [Google Scholar] [CrossRef]

- Knight, N.J.; Kanza, S.; Cruickshank, D.; Brocklesby, W.S.; Frey, J.G. Talk2Lab: The Smart Lab of the Future. IEEE Internet Things J. 2020, 7, 8631–8640. [Google Scholar] [CrossRef]

- Kodali, R.K.; Azman, M.; Panicker, J.G. Smart Control System Solution for Smart Cities. In Proceedings of the 2018 International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery (CyberC), Zhengzhou, China, 18–20 October 2018; pp. 89–893. [Google Scholar]

- Leroy, D.; Coucke, A.; Lavril, T.; Gisselbrecht, T.; Dureau, J. Federated Learning for Keyword Spotting. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6341–6345. [Google Scholar]

- Li, Z.; Zhang, J.; Li, M.; Huang, J.; Wang, X. A Review of Smart Design Based on Interactive Experience in Building Systems. Sustainability 2020, 12, 6760. [Google Scholar] [CrossRef]

- Irwin, S. Design and Implementation of Smart Home Voice Control System based on Arduino. In Proceedings of the 2018 5th International Conference on Electrical & Electronics Engineering and Computer Science (ICEEECS 2018), Istanbul, Turkey, 3–5 May 2018. [Google Scholar] [CrossRef]

- Vanus, J.; Belesova, J.; Martinek, R.; Nedoma, J.; Fajkus, M.; Bilik, P.; Zidek, J. Monitoring of the daily living activities in smart home care. Hum. Centric Comput. Inf. Sci. 2017, 7. [Google Scholar] [CrossRef]

- Vanus, J.; Weiper, T.; Martinek, R.; Nedoma, J.; Fajkus, M.; Koval, L.; Hrbac, R. Assessment of the Quality of Speech Signal Processing Within Voice Control of Operational-Technical Functions in the Smart Home by Means of the PESQ Algorithm. IFAC-PapersOnLine 2018, 51, 202–207. [Google Scholar] [CrossRef]

- Amrutha, S.; Aravind, S.; Ansu, M.; Swathy, S.; Rajasree, R.; Priyalakshmi, S. Voice Controlled Smart Home. Int. J. Emerg. Technol. Adv. Eng. (IJETAE) 2015, 272–276. [Google Scholar]

- Kamdar, H.; Karkera, R.; Khanna, A.; Kulkarni, P.; Agrawal, S. A Review on Home Automation Using Voice Recognition. Int. J. Emerg. Technol. Adv. Eng. (IJETAE) 2017, 4, 1795–1799. [Google Scholar]

- Kango, R.; Moore, P.R.; Pu, J. Networked smart home appliances-enabling real ubiquitous culture. In Proceedings of the 3rd IEEE International Workshop on System-on-Chip for Real-Time Applications, Calgary, Alberta, 30 June–2 July 2003; pp. 76–80. [Google Scholar]

- Wang, Y.M.; Russell, W.; Arora, A.; Xu, J.; Jagannatthan, R.K. Towards dependable home networking: An experience report. In Proceedings of the International Conference on Dependable Systems and Networks (DSN 2000), New York, NY, USA, 25–28 June 2000; pp. 43–48. [Google Scholar]

- McLoughlin, I.; Sharifzadeh, H.R. Speech Recognition for Smart Homes. In Speech Recognition; Mihelic, F., Zibert, J., Eds.; IntechOpen: Rijeka, Croatia, 2008; Chapter 27. [Google Scholar] [CrossRef]

- Rabiner, L.R. Applications of voice processing to telecommunications. Proc. IEEE 1994, 82, 199–228. [Google Scholar] [CrossRef]

- Obaid, T.; Rashed, H.; Nour, A.; Rehan, M.; Hasan, M.; Tarique, M. Zigbee Based Voice Controlled Wireless Smart Home System. Int. J. Wirel. Mob. Netw. 2014, 6. [Google Scholar] [CrossRef]

- Singh, D.; Sharma Thakur, A. Voice Recognition Wireless Home Automation System Based On Zigbee. IOSR J. Electron. Commun. Eng. 2013, 22, 65–75. [Google Scholar] [CrossRef]

- Mctear, M. Spoken Dialogue Technology—Toward the Conversational User Interface; Springer Publications: Berlin/Heidelberg, Germany, 2004. [Google Scholar]

- Chevalier, H.; Ingold, C.; Kunz, C.; Moore, C.; Roven, C.; Yamron, J.; Baker, B.; Bamberg, P.; Bridle, S.; Bruce, T.; et al. Large-vocabulary speech recognition in specialized domains. In Proceedings of the 1995 International Conference on Acoustics, Speech, and Signal Processing, Detroit, MI, USA, 9–12 May 1995; Volume 1, pp. 217–220. [Google Scholar]

- Kamm, C.A.; Yang, K.; Shamieh, C.R.; Singhal, S. Speech recognition issues for directory assistance applications. In Proceedings of the 2nd IEEE Workshop on Interactive Voice Technology for Telecommunications Applications, Kyoto, Japan, 26–27 September 1994; pp. 15–19. [Google Scholar]

- Sun, H.; Shue, L.; Chen, J. Investigations into the relationship between measurable speech quality and speech recognition rate for telephony speech. In Proceedings of the 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing, Montreal, QC, Canada, 17–21 May 2004; Volume 1, pp. 1–865. [Google Scholar]

- Ravishankar, M.K. Efficient Algorithms for Speech Recognition; Technical Report; Carnegie Mellon University, Department of Computer Science: Pittsburgh, PA, USA, 1996. [Google Scholar]

- Vajpai, J.; Bora, A. Industrial applications of automatic speech recognition systems. Int. J. Eng. Res. Appl. 2016, 6, 88–95. [Google Scholar]

- Rogowski, A. Industrially oriented voice control system. Robot. Comput. Integr. Manuf. 2012, 28, 303–315. [Google Scholar] [CrossRef]

- Collins, D.W.B.R. Digital Avionics Handbook—Chapter 8: Speech Recognition and Synthesis; Electrical Engineering Handbook Series; CRC Press: Boca Raton, FL, USA, 2001. [Google Scholar]

- Rabiner, L.R. Applications of speech recognition in the area of telecommunications. In Proceedings of the 1997 IEEE Workshop on Automatic Speech Recognition and Understanding Proceedings, Santa Barbara, CA, USA, 17 December 1997; pp. 501–510. [Google Scholar]

- Mařík, V. Průmysl 4.0: Výzva pro Českou republiku, 1st ed.; Management Press: Prague, Czech Republic, 2016. [Google Scholar]

- Newsroom, C. Cyber-Physical Systems [online]. Available online: http://cyberphysicalsystems.org/ (accessed on 25 August 2020).

- Mardiana, B.; Hazura, H.; Fauziyah, S.; Zahariah, M.; Hanim, A.R.; Noor Shahida, M.K. Homes Appliances Controlled Using Speech Recognition in Wireless Network Environment. In Proceedings of the 2009 International Conference on Computer Technology and Development, Kota Kinabalu, Malaysia, 13–15 November 2009; Volume 2, pp. 285–288. [Google Scholar]

- Techopedia. Smart Device Techopedia. Available online: https://www.techopedia.com/definition/31463/smart-device (accessed on 25 August 2020).

- Schiefer, M. Smart Home Definition and Security Threats; IEEE: Magdeburg, Germany, 2015; pp. 114–118. [Google Scholar] [CrossRef]

- Kyas, O. How To Smart Home; Key Concept Press e.K.: Wyk, Germany, 2013. [Google Scholar]

- Psutka, J.; Müller, L.; Matoušek, J.; Radová, V. Mluvíme s Počítačem česky; Academia: Prague, Czech Republic, 2006; p. 752. [Google Scholar]

- Sakoe, H.; Chiba, S. Dynamic programming algorithm optimization for spoken word recognition. IEEE Trans. Acoust. Speech Signal Process. 1978, 26, 43–49. [Google Scholar] [CrossRef]

- Bellman, R.E.; Dreyfus, S.E. Applied Dynamic Programming; Princeton University Press: Princeton, NJ, USA, 2015. [Google Scholar]

- Kumar, A.; Dua, M.; Choudhary, T. Continuous hindi speech recognition using monophone based acoustic modeling. Int. J. Comput. Appl. 2014, 24, 15–19. [Google Scholar]

- Arora, S.J.; Singh, R.P. Automatic speech recognition: A review. Int. J. Comput. Appl. 2012, 60, 132–136. [Google Scholar]

- Saksamudre, S.K.; Shrishrimal, P.; Deshmukh, R. A review on different approaches for speech recognition system. Int. J. Comput. Appl. 2015, 115, 23–28. [Google Scholar]

- Hermansky, H. Perceptual linear predictive (PLP) analysis of speech. J. Acoust. Soc. Am. 1990, 87, 1738–1752. [Google Scholar] [CrossRef]

- Xie, L.; Liu, Z. A Comparative Study of Audio Features for Audio-to-Visual Conversion in Mpeg-4 Compliant Facial Animation. In Proceedings of the 2006 International Conference on Machine Learning and Cybernetics, Dalian, China, 13–16 August 2006; pp. 4359–4364. [Google Scholar]

- Garg, A.; Sharma, P. Survey on acoustic modeling and feature extraction for speech recognition. In Proceedings of the 2016 3rd International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 16–18 March 2016; pp. 2291–2295. [Google Scholar]

- Rajnoha, J.; Pollák, P. Detektory řečové aktivity na bázi perceptivní kepstrální analýzy. In České Vysoké učení Technické v Praze, Fakulta Elektrotechnická; Fakulta Elektrotechnická: Prague, Czech Republic, 2008. [Google Scholar]

- Saon, G.A.; Soltau, H. Method and System for Joint Training of Hybrid Neural Networks for Acoustic Modeling in Automatic Speech Recognition. U.S. Patent 9,665,823, 30 May 2017. [Google Scholar]

- Godino-Llorente, J.I.; Gomez-Vilda, P.; Blanco-Velasco, M. Dimensionality Reduction of a Pathological Voice Quality Assessment System Based on Gaussian Mixture Models and Short-Term Cepstral Parameters. IEEE Trans. Biomed. Eng. 2006, 53, 1943–1953. [Google Scholar] [CrossRef]

- Low, L.S.A.; Maddage, N.C.; Lech, M.; Sheeber, L.; Allen, N. Content based clinical depression detection in adolescents. In Proceedings of the 2009 17th European Signal Processing Conference, Glasgow, UK, 24–28 August 2009; pp. 2362–2366. [Google Scholar]

- Linh, L.H.; Hai, N.T.; Thuyen, N.V.; Mai, T.T.; Toi, V.V. MFCC-DTW algorithm for speech recognition in an intelligent wheelchair. In Proceedings of the 5th international conference on biomedical engineering in Vietnam, Ho Chi Minh City, Vietnam, 16–18 June 2014; pp. 417–421. [Google Scholar]

- Ittichaichareon, C.; Suksri, S.; Yingthawornsuk, T. Speech recognition using MFCC. In Proceedings of the International Conference on Computer Graphics, Simulation and Modeling, Pattaya, Thailand, 28–29 July 2012; pp. 135–138. [Google Scholar]

- Vařák, J. Možnosti hlasového ovládání bezpilotních dronů. In Bakalářská Práce; Vysoká škola Báňská—Technická Univerzita Ostrava: Ostrava, Czech Republic, 2017. [Google Scholar]

- Cutajar, M.; Gatt, E.; Grech, I.; Casha, O.; Micallef, J. Comparative study of automatic speech recognition techniques. IET Signal Process. 2013, 7, 25–46. [Google Scholar] [CrossRef]

- Gevaert, W.; Tsenov, G.; Mladenov, V. Neural networks used for speech recognition. J. Autom. Control. 2010, 20, 1–7. [Google Scholar] [CrossRef]

- Jamal, N.; Shanta, S.; Mahmud, F.; Sha’abani, M. Automatic speech recognition (ASR) based approach for speech therapy of aphasic patients: A review. AIP Conf. Proc. 2017, 1883, 020028. [Google Scholar]

- Xue, S.; Abdel-Hamid, O.; Jiang, H.; Dai, L.; Liu, Q. Fast adaptation of deep neural network based on discriminant codes for speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1713–1725. [Google Scholar]

- Romdhani, S. Implementation of Dnn-Hmm Acoustic Models for Phoneme Recognition. Ph.D. Thesis, University of Waterloo, Waterloo, ON, Canada, 2015. [Google Scholar]

- Pujol, P.; Pol, S.; Nadeu, C.; Hagen, A.; Bourlard, H. Comparison and combination of features in a hybrid HMM/MLP and a HMM/GMM speech recognition system. IEEE Trans. Speech Audio Process. 2005, 13, 14–22. [Google Scholar] [CrossRef]

- Zarrouk, E.; Ayed, Y.B.; Gargouri, F. Hybrid continuous speech recognition systems by HMM, MLP and SVM: A comparative study. Int. J. Speech Technol. 2014, 17, 223–233. [Google Scholar] [CrossRef]

- Chaudhari, A.; Dhonde, S.B. A review on speech enhancement techniques. In Proceedings of the 2015 International Conference on Pervasive Computing (ICPC), Pune, India, 8–10 January 2015; pp. 1–3. [Google Scholar]

- Upadhyay, N.; Karmakar, A. Speech enhancement using spectral subtraction-type algorithms: A comparison and simulation study. Procedia Comput. Sci. 2015, 54, 574–584. [Google Scholar] [CrossRef]

- Martinek, R. The Use of Complex Adaptive Methods of Signal Processing for Refining the Diagnostic Quality of the Abdominal Fetal Cardiogram. Ph.D. Thesis, Vysoká škola báňská—Technická Univerzita Ostrava, Ostrava, Czech Republic, 2014. [Google Scholar]

- Jan, J. Číslicová Filtrace, Analýza a Restaurace Signálů, 2nd expanded and supplemented ed.; VUTIUM: Brno, Czech Republic, 2002. [Google Scholar]

- Harding, P. Model-Based Speech Enhancement. Ph.D. Thesis, University of East Anglia, Norwich, UK, 2013. [Google Scholar]

- Loizou, P.C. Speech Enhancement: Theory and Practice; CRC Press: Boca Raton, FL, USA, 2013. [Google Scholar]

- Cole, C.; Karam, M.; Aglan, H. Increasing Additive Noise Removal in Speech Processing Using Spectral Subtraction. In Proceedings of the Fifth International Conference on Information Technology: New Generations (ITNG 2008), Las Vegas, NV, USA, 7–9 April 2008; pp. 1146–1147. [Google Scholar] [CrossRef]

- Aggarwal, R.; Singh, J.K.; Gupta, V.K.; Rathore, S.; Tiwari, M.; Khare, A. Noise reduction of speech signal using wavelet transform with modified universal threshold. Int. J. Comput. Appl. 2011, 20, 14–19. [Google Scholar] [CrossRef]

- Mihov, S.G.; Ivanov, R.M.; Popov, A.N. Denoising speech signals by wavelet transform. Annu. J. Electron. 2009, 2009, 2–5. [Google Scholar]

- Martinek, R. Využití Adaptivních Algoritmů LMS a RLS v Oblasti Adaptivního Potlačování Šumu a Rušení. ElectroScope 2013, 1, 1–8. [Google Scholar]

- Farhang-Boroujeny, B. Adaptive Filters: Theory and Applications, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2013. [Google Scholar]

- Vaseghi, S.V. Advanced Digital Signal Processing and Noise Reduction, 3rd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2008. [Google Scholar]

- Martinek, R.; Žídek, J. The Real Implementation of NLMS Channel Equalizer into the System of Software Defined Radio. Adv. Electr. Electron. Eng. 2012, 10. [Google Scholar] [CrossRef]

- Visser, E.; Otsuka, M.; Lee, T.W. A spatio-temporal speech enhancement scheme for robust speech recognition in noisy environments. Speech Commun. 2003, 41, 393–407. [Google Scholar] [CrossRef]

- Visser, E.; Lee, T.W. Speech enhancement using blind source separation and two-channel energy based speaker detection. In Proceedings of the 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP’03), Hong Kong, China, 6–10 April 2003; Volume 1, p. I. [Google Scholar]

- Hyvarinen, A.; Oja, E. A fast fixed-point algorithm for independent component analysis. Neural Comput. 1997, 9, 1483–1492. [Google Scholar] [CrossRef]

- Cichocki, A.; Amari, S.I. Adaptive Blind Signal and Image Processing: Learning Algorithms and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2002. [Google Scholar]

- Fischer, S.; Simmer, K.U. Beamforming microphone arrays for speech acquisition in noisy environments. Speech Commun. 1996, 20, 215–227. [Google Scholar] [CrossRef]

- Griffiths, L.; Jim, C. An alternative approach to linearly constrained adaptive beamforming. IEEE Trans. Antennas Propag. 1982, 30, 27–34. [Google Scholar] [CrossRef]

- Zou, Q.; Yu, Z.L.; Lin, Z. A robust algorithm for linearly constrained adaptive beamforming. IEEE Signal Process. Lett. 2004, 11, 26–29. [Google Scholar] [CrossRef]

- Vaňuš, J.; Smolon, M.; Martinek, R.; Koziorek, J.; Žídek, J.; Bilík, P. Testing of the voice communication in smart home care. Hum. Centric Comput. Inf. Sci. 2015, 5, 15. [Google Scholar]

- Wittassek, T. Virtuální Instrumentace I, 1st ed.; Vysoká škola Báňská—Technická Univerzita Ostrava: Ostrava, Czech Republic, 2014. [Google Scholar]

| Parameter | Value |
|---|---|
| Sound card type | USB |
| Number of analogue outputs | 6 |
| Number of microphone inputs | 4 |
| Number of inputs | 4 |
| Number of outputs | 4 |
| MIDI | Yes |
| Phantom power supply | +48 V DC |
| Sampling frequency | 44.1 kHz, 48 kHz, 88.2 kHz, 96 kHz, 176.4 kHz, 192 kHz |
| Resolution | up to 24 bits at the maximum sampling rate |

| Parameter | Value |
|---|---|
| Acoustic principle | Pressure gradient |
| Sound pressure level | 143 dB |
| Active electronics | J-FET impedance converter with a bipolar output buffer |
| Directional characteristics | Cardioid (kidney) |
| Frequency range | 20 Hz–20 kHz |
| Output impedance | 100 Ω |
| Power supply options | 24 V DC or 48 V DC |
| Sensitivity | −38 dB re 1 V/Pa (12 mV @ 94 dB SPL) ±2 dB |
| Equivalent noise level | 16 dBA |
| Output | XLR |
| Weight | 101 g |

| Command | Room |
|---|---|
| “Light on” | All |
| “Light off” | All |
| “Turn on the washing machine” | Bathroom |
| “Turn off the washing machine” | Bathroom |
| “Dim up” | Bedroom |
| “Dim down” | Bedroom |
| “Turn on the vacuum cleaner” | Bedroom |
| “Turn off the vacuum cleaner” | Bedroom |
| “Turn on the dishwasher” | Kitchen |
| “Turn off the dishwasher” | Kitchen |
| “Fan on” | Hall |
| “Fan off” | Hall |
| “Turn on the TV” | Living room |
| “Turn off the TV” | Living room |
| “Blinds up” | Kitchen/hall/living room/bedroom |
| “Blinds down” | Kitchen/hall/living room/bedroom |
| “Blinds up left” | Kitchen/hall/living room |
| “Blinds up right” | Kitchen/hall/living room |
| “Blinds up middle” | Kitchen/hall/living room |
| “Blinds down left” | Kitchen/hall/living room |
| “Blinds down right” | Kitchen/hall/living room |
| “Blinds down middle” | Kitchen/hall/living room |
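The command-to-room assignment in the table above can be held in a simple lookup structure. The sketch below is only an illustration: the names `COMMAND_ROOMS` and `rooms_for` are hypothetical, only a subset of the commands is listed, and the source does not state how the authors' LabVIEW application stores its glossary.

```python
# Hypothetical lookup built from a subset of the command table above.
# Keys are normalized (lower-case, single spaces); values list the rooms.
COMMAND_ROOMS = {
    "light on": ["all"],
    "light off": ["all"],
    "dim up": ["bedroom"],
    "dim down": ["bedroom"],
    "fan on": ["hall"],
    "fan off": ["hall"],
    "turn on the tv": ["living room"],
    "turn off the tv": ["living room"],
    "blinds up": ["kitchen", "hall", "living room", "bedroom"],
    "blinds up left": ["kitchen", "hall", "living room"],
}


def rooms_for(recognized_text):
    """Return the rooms in which a recognized phrase is valid, or [] if unknown."""
    # Drop surrounding whitespace and quotation marks, then normalize case and spacing.
    stripped = recognized_text.strip().strip("“”\"")
    key = " ".join(stripped.lower().split())
    return COMMAND_ROOMS.get(key, [])
```

Normalizing the recognized text before the lookup keeps the table tolerant of differences in capitalization and spacing in the recognizer output; unknown phrases return an empty list rather than raising an error.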

| Interference | Filter Length M | Convergence Constant [-] |
|---|---|---|
| Washing machine | 240 | 0.01 |
| Vacuum cleaner | 80 | 0.001 |
| Fan | 210 | 0.01 |
| Dishwasher | 40 | 0.01 |
| TV | 110 | 0.01 |
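To show how the two parameters in the table above enter the LMS algorithm, the following is a minimal sketch of an LMS adaptive noise canceller in Python with NumPy. It is not the authors' LabVIEW implementation. The function name, the argument names and the update convention w(n+1) = w(n) + μ e(n) x(n) are assumptions (some texts write the step as 2μ), as is the two-input arrangement with a primary signal (speech plus interference) and a reference signal (interference only).

```python
import numpy as np


def lms_noise_canceller(primary, reference, filter_length, mu):
    """Adaptive noise cancelling with the LMS algorithm (illustrative sketch).

    primary   -- microphone signal: speech plus filtered interference
    reference -- signal correlated with the interference only
    Returns (error, weights); the error signal is the speech estimate.
    """
    primary = np.asarray(primary, dtype=float)
    reference = np.asarray(reference, dtype=float)
    weights = np.zeros(filter_length)
    error = np.zeros(len(primary))
    for n in range(filter_length - 1, len(primary)):
        # Last `filter_length` reference samples, most recent first.
        x = reference[n - filter_length + 1:n + 1][::-1]
        noise_estimate = weights @ x
        error[n] = primary[n] - noise_estimate
        # Assumed convention: w(n+1) = w(n) + mu * e(n) * x(n).
        weights += mu * error[n] * x
    return error, weights
```

With the values listed for the fan, a call would look like `lms_noise_canceller(mic, ref, filter_length=210, mu=0.01)`, where `mic` and `ref` are placeholder arrays. Whether a given convergence constant is stable depends on the filter length and on the power of the reference signal, so the tabulated values should be read as specific to the recordings used in the experiments.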

| Parameter | Value |
|---|---|
| Method | FastICA |
| Number of components | 2 |
| Number of iterations | 1000 |
| Convergence tolerance | 0.000001 |
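The settings in the table above (two components, at most 1000 iterations, tolerance 10⁻⁶) can be tried out with a compact NumPy sketch of FastICA. Several details are assumptions not given in the source: the tanh nonlinearity, the symmetric (parallel) decorrelation, the random initialization and the function names `fast_ica` and `sym_decorrelate`. The authors' own implementation may differ.

```python
import numpy as np


def sym_decorrelate(W):
    """Symmetric decorrelation: W <- (W W^T)^(-1/2) W."""
    s, u = np.linalg.eigh(W @ W.T)
    return u @ np.diag(1.0 / np.sqrt(s)) @ u.T @ W


def fast_ica(X, n_components=2, max_iter=1000, tol=1e-6, seed=0):
    """Estimate independent components from mixtures X (n_channels, n_samples)."""
    X = np.asarray(X, dtype=float)
    X = X - X.mean(axis=1, keepdims=True)          # centre each channel
    # Whitening from the eigen-decomposition of the covariance matrix.
    d, E = np.linalg.eigh(X @ X.T / X.shape[1])
    idx = np.argsort(d)[::-1][:n_components]
    K = (E[:, idx] / np.sqrt(d[idx])).T            # whitening matrix
    Z = K @ X
    rng = np.random.default_rng(seed)
    W = sym_decorrelate(rng.standard_normal((n_components, n_components)))
    for _ in range(max_iter):
        G = np.tanh(W @ Z)                          # g(w^T z)
        G_prime = 1.0 - G ** 2                      # g'(w^T z)
        # Fixed-point update: E{z g(w^T z)} - E{g'(w^T z)} w, for all rows at once.
        W_new = G @ Z.T / Z.shape[1] - np.diag(G_prime.mean(axis=1)) @ W
        W_new = sym_decorrelate(W_new)
        # Converged when every row stays (anti)parallel to its previous value.
        change = np.max(np.abs(np.abs(np.sum(W_new * W, axis=1)) - 1.0))
        W = W_new
        if change < tol:
            break
    return W @ Z
```

ICA recovers the components only up to order, sign and scale, so a later processing stage has to decide which output channel carries the speech.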

LMS and ICA, washing machine at maximum volume:

| Command | Before [%] | LMS [%] | LMS + ICA [%] |
|---|---|---|---|
| “Light on” | 28 | 100 | 100 |
| “Light off” | 21 | 45 | 70 |
| “Turn off the washing machine” | 85 | 60 | 78 |

LMS and ICA, vacuum cleaner at maximum volume:

| Command | Before [%] | LMS [%] | LMS + ICA [%] |
|---|---|---|---|
| “Light on” | 0 | 100 | 100 |
| “Light off” | 0 | 27 | 45 |
| “Blinds down” | 0 | 95 | 100 |
| “Blinds up” | 0 | 93 | 100 |
| “Dim up” | 5 | 100 | 100 |
| “Dim down” | 3 | 100 | 100 |
| “Turn off the vacuum cleaner” | 0 | 45 | 60 |

LMS and ICA, fan at maximum volume:

| Command | Before [%] | LMS [%] | LMS + ICA [%] |
|---|---|---|---|
| “Light on” | 42 | 100 | 100 |
| “Light off” | 24 | 28 | 90 |
| “Blinds down” | 2 | 100 | 100 |
| “Blinds up” | 5 | 91 | 100 |
| “Blinds down left” | 3 | 88 | 100 |
| “Blinds down right” | 0 | 83 | 100 |
| “Blinds down middle” | 0 | 100 | 100 |
| “Blinds up left” | 6 | 95 | 100 |
| “Blinds up right” | 9 | 100 | 100 |
| “Blinds up middle” | 15 | 100 | 100 |
| “Fan off” | 18 | 18 | 13 |

LMS and ICA, dishwasher at maximum volume:

| Command | Before [%] | LMS [%] | LMS + ICA [%] |
|---|---|---|---|
| “Light on” | 0 | 100 | 100 |
| “Light off” | 0 | 65 | 66 |
| “Blinds down” | 0 | 100 | 100 |
| “Blinds up” | 0 | 100 | 100 |
| “Blinds down left” | 0 | 100 | 100 |
| “Blinds down right” | 3 | 100 | 100 |
| “Blinds up left” | 5 | 100 | 100 |
| “Blinds up right” | 0 | 100 | 100 |
| “Turn off the dishwasher” | 10 | 0 | 0 |

LMS and ICA, TV at maximum volume:

| Command | Before [%] | LMS [%] | LMS + ICA [%] |
|---|---|---|---|
| “Light on” | 60 | 100 | 100 |
| “Light off” | 0 | 74 | 68 |
| “Blinds down” | 0 | 62 | 51 |
| “Blinds up” | 0 | 74 | 60 |
| “Blinds down left” | 24 | 97 | 80 |
| “Blinds down right” | 21 | 98 | 96 |
| “Blinds down middle” | 20 | 100 | 98 |
| “Blinds up left” | 15 | 91 | 88 |
| “Blinds up right” | 8 | 74 | 90 |
| “Blinds up middle” | 42 | 100 | 98 |
| “Turn off the TV” | 27 | 52 | 69 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Martinek, R.; Vanus, J.; Nedoma, J.; Fridrich, M.; Frnda, J.; Kawala-Sterniuk, A. Voice Communication in Noisy Environments in a Smart House Using Hybrid LMS+ICA Algorithm. Sensors 2020, 20, 6022. https://doi.org/10.3390/s20216022