Abstract

In order to restore traffic videos with different degrees of haziness in a real-time and adaptive manner, this paper presents an efficient traffic video dehazing method using adaptive dark channel prior and spatial-temporal correlations. This method uses a haziness flag, based on the dark channel prior, to measure the degree of haziness in images. Then, it obtains the adaptive initial transmission value by establishing the relationship between the image contrast and the haziness flag. In addition, this method takes advantage of the spatial and temporal correlations among traffic videos to speed up the dehazing process and optimize the block structure of restored videos. Extensive experimental results show that the proposed method has superior haze-removal and color-balancing capabilities for images with different degrees of haze, and it can restore the degraded videos in real time. Our method can restore a video with a resolution of 720 × 592 at about 57 frames per second, nearly four times faster than the dark-channel-prior-based method and one time faster than the image-contrast-enhanced method.

1. Introduction

Today, traffic video analysis plays a very important role in intelligent transportation systems. It has become a common way to help people track a vehicle, as well as locate and judge an accident. Because the images captured by outdoor cameras are often affected by different weather conditions, they suffer from poor visibility and lack of contrast. In the literature, there are many enhancement and dehazing algorithms that improve different kinds of images, such as traffic videos, underwater images, and satellite imagery [1,2,3]. Hazy weather, which occurs frequently all over the world, is becoming a major obstacle to video analysis. The haze captured in the video degrades the contrast and color information and reduces the visibility. Therefore, the problem of how to efficiently and effectively remove the haze in traffic videos has attracted broad attention from both academia and industry. In general, when dealing with haze removal in traffic videos, the existing dehazing algorithms often exhibit poor real-time performance and overstretched contrast, and they may even fail to remove dense haze. The key to these problems is how to deal with images of different scenes with different degrees of haze; thus, an adaptive algorithm that can remove haze based on the image characteristics is needed. Moreover, the existing video-dehazing methods are designed to be almost universal for all videos and do not consider the characteristics of videos in particular scenarios. For traffic videos, the time continuity, lane space structure, and camera spatial locations can be effectively used to decrease computational cost.

In order to restore traffic videos with different degrees of haziness in a real-time and adaptive manner, this paper presents an efficient traffic video dehazing method using adaptive dark channel prior and spatial-temporal correlations. This method can avoid overstretched contrast after haze removal and obtain satisfactory restored results for dense hazy videos by using a novel approach involving adaptive transmission estimation. This method also takes full advantage of the temporal and spatial correlations in traffic videos to meet the requirements of real-time dehazing, such as using time continuity to set the time slice, refining transmission by characteristics of block structure, decreasing restored area according to the lane space, and simplifying the calculation of parameters by using multi-camera distribution.

2. Related Works

Essentially, videos are composed of frames; thus, a haze removal method for images can be used for videos. The image dehazing method is the most common way to restore hazy images. This method considers the inverse process of image degradation and describes the image degradation process in detail through an established physical model. The most critical step of this method is to obtain the parameters of the degradation model. Oakley et al. [4] improved the image quality by using the physical model and estimated the degradation model parameters based on a statistical model. This method is not widely used because it is only useful for gray-scale images, and acquiring the parameters requires a calibrated radar to get depth information. Narasimhan et al. [5] proposed a method to estimate the depth information by comparing two images of the same scene in different weather conditions. Chen et al. [6] used a sunny image and a foggy image as reference images to calculate the parameters. Both of these methods need suitable images to be acquired in advance, which increases the difficulty of image acquisition.

To obtain the parameters of the degradation model effectively, some dehazing methods based on prior knowledge or assumptions were proposed, and they do not need to get reference images in advance or use an additional hardware device. Therefore, these methods have better adaptability than previous methods. Based on the assumption that a haze-free image has a higher contrast than a hazy image, Tan [7] proposed a haze removal approach by maximizing the contrast of recovered scene radiance. This approach can produce a satisfactory result for haze removal in single images, but it tends to overcompensate for the reduced contrast and leads to halo effects. Fattal [8] decomposed scene radiance of an image into the albedo and shading and then estimated the scene radiance based on independent component analysis, assuming that transmission shading and surface shading are locally uncorrelated. However, this method cannot generate impressive results when the captured image is heavily obscured by fog. He et al. [9] presented a single image haze removal method by using dark channel prior, which can estimate the transmission map directly. However, when a large white area without shading exists in the images, or the images have uneven illumination, this method takes a long time to restore the hazy images. In addition, the use of the soft matting algorithm makes the computation complex. Then, Lai et al. [10] presented a haze removal method based on the difference-structure-preservation prior. In this method, the difference-structure-preservation dictionary is learned such that the local consistency features of the transmission map can be well preserved after coefficient shrinkage. Zhu et al. [11] presented a simple but effective Color Attenuation Prior (CAP) algorithm, similar to the Dark Channel Prior (DCP), which uses the difference between brightness and saturation to estimate the haze concentration and build a depth model for dehazing.

Up until now, other researchers have improved their dehazing algorithms based on the dark channel prior. Yeh et al. [12] introduced a haze removal algorithm based on region decomposition and feature fusion, which is especially suitable for hazy images with large sky regions. Li et al. [13] proposed a novel haze removal method based on sky segmentation and dark channel prior to restore images. In this method, the average image intensity of the sky region is chosen as the atmospheric light value. Wang et al. [14] designed a new method of selecting atmospheric light values to weaken the area where the dark channel prior does not work effectively. A visibility restoration method was introduced by Huang et al. [15], which consists of three modules: (i) a depth estimation module based on the dark channel prior, (ii) a color analysis module that repairs depth estimation distortion, and (iii) a visibility restoration module that generates repair results. Riaz et al. [16] proposed a new and efficient method for transmission estimation with bright-object handling capability, which uses a local average haziness value to compute the transmission of such surfaces based on the observation that the transmission of a surface is loosely connected to its neighbors.

Usually, traffic video dehazing algorithms are proposed based on single-image dehazing algorithms. However, the computational complexity makes it difficult to apply single-image dehazing algorithms directly to video dehazing. Most existing research on video dehazing aims to speed up the dehazing process. Sun et al. [17] proposed a real-time haze removal method based on bilateral filtering that processes 320 × 240 images at a speed of 20 frames per second. However, this method cannot satisfy the requirements of high-definition videos. Wang et al. [18] proposed a method based on Retinex theory that enhances image contrast in YUV color space and can process an image of 704 × 576 in 0.055 s. Kumari et al. [19] proposed an approach for dehazing images and videos based on a filtering method. The use of a gray-scale morphological operation made the approach faster, and it took only 80% of the execution time of a fast bilateral filter. Berman et al. [20,21] proposed a new haze removal method based on a non-local prior, which calculates the air-light. Their algorithm relies on the assumption that colors of a haze-free image are well approximated by a few hundred distinct colors that form tight clusters in RGB space. It performs well on a wide variety of images. However, these methods take every frame in videos as a single image, and they are completely based on image dehazing methods.

The characteristics of videos can be applied in specific video dehazing algorithms. Tarel et al. [22] proposed a video dehazing method for onboard video systems. This method can separate moving objects and driveway regions in videos and only update the depth information of moving objects. Zhang et al. [23] proposed a method based on spatial and temporal correlation that uses spatial and temporal similarity between frames to optimize the estimation of a scene depth map. Shin et al. [24] proposed an effective video dehazing technique to reduce flicker artifacts by using adaptive temporal average. However, these methods cannot remove the haze from videos in real time. Therefore, Kim et al. [25] proposed an image-dehazing method based on the image degradation model and kept a balance between image contrast enhancement and image information loss. To improve the speed of video dehazing, they adopted a video dehazing method using temporal correlation, which can reach a speed of 30 frames per second for videos with a resolution of 640 × 480. However, this method adopts a fixed initial transmission value that cannot be adapted to images with different degrees of haze, and it cannot efficiently remove dense haze in videos. Our method uses an adaptive initial transmission value based on image characteristics to handle different degrees of haze; meanwhile, it reduces the processing time through lane space separation.

3. Single-Image Dehazing Using Adaptive Dark Channel Prior

3.1. Framework of Single-Image Dehazing Method

The most common dehazing model is based on atmospheric optics [26], which can describe the degradation process of a hazy image. In [27], the modeling function is simplified, and it is represented by Equation (1):

$$I(p) = J(p)\,t(p) + A\,\bigl(1 - t(p)\bigr) \qquad (1)$$

where $p$ is a pixel in the image, $I(p)$ and $J(p)$ are the observed and haze-free images, respectively, $A$ is the global atmospheric light, and $t(p)$ is the transmission map, which describes for each pixel the proportion of the light arriving at a digital camera without scattering.
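As an illustration, the degradation model of Equation (1) and its inversion can be sketched in a few lines of Python with NumPy. This is a minimal sketch, not the implementation used in the experiments (which was written in C/C++ with OpenCV). The function names `add_haze` and `remove_haze`, the array shapes (an H × W × 3 image with an H × W transmission map), and the 8-bit value range are assumptions made for the example; the lower limit of 0.1 on the transmission is the one stated in Section 4.2.

```python
import numpy as np

def add_haze(J, t, A):
    """Forward model: I = J * t + A * (1 - t), applied per color channel."""
    t = np.asarray(t, dtype=np.float64)[..., None]  # broadcast t over the channels
    return J * t + A * (1.0 - t)

def remove_haze(I, t, A, t_min=0.1):
    """Inverse model: J = (I - A) / t + A, with t clamped from below."""
    t = np.maximum(np.asarray(t, dtype=np.float64), t_min)[..., None]
    # Values outside [0, 255] are truncated, as in an 8-bit image.
    return np.clip((I - A) / t + A, 0.0, 255.0)
```

With a known transmission map and atmospheric light, `remove_haze(add_haze(J, t, A), t, A)` returns the original image, which is why the remaining sections concentrate on estimating these two quantities.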

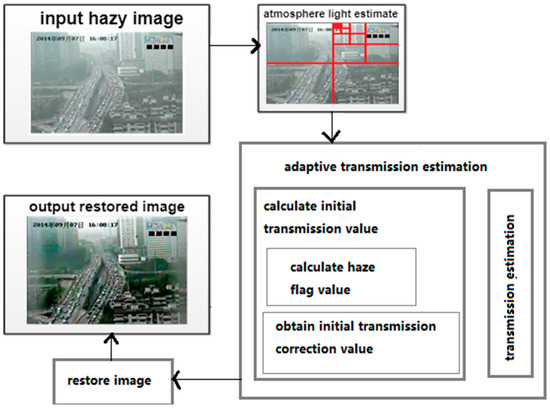

The process of haze removal for every frame of a traffic video can be divided into three steps: calculating atmospheric light, estimating the transmission map, and restoring the image. In this paper, we present a novel adaptive method for transmission map estimation, thus the dehazing algorithm can be applied to images with different degrees of haze. The framework of the single-image dehazing algorithm is shown in Figure 1.

Figure 1.

Framework of single-image dehazing method.

We use a hierarchical searching method based on quad-tree subdivision [25] to find the areas least affected by haze and to get the brightest pixel in this area. The detailed steps are as follows:

- Step 1: Divide an input image into four rectangular regions.

- Step 2: Define the score of each region as the average pixel value minus the standard deviation of the pixel values within the region.

- Step 3: Select the region with the highest score and divide it further into four smaller regions.

- Step 4: Repeat Steps 1 through 3 until the size of the selected region is smaller than a prespecified threshold. The threshold in this paper is 200; that is, the search stops when height × width of the selected region is smaller than 200.

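Finally, within the selected region, we choose as the atmospheric light the color vector that minimizes the distance $\|(I_r(p), I_g(p), I_b(p)) - (255, 255, 255)\|$, where $(I_r(p), I_g(p), I_b(p))$ is the value of pixel $p$ in the selected region.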
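The hierarchical search described above can be sketched as follows. This is an illustrative Python/NumPy version under stated assumptions: the function name `estimate_atmospheric_light` is hypothetical, the score is computed over all color channels of a region at once, and the final color is the pixel closest to pure white (255, 255, 255) in Euclidean distance.

```python
import numpy as np

def estimate_atmospheric_light(image, min_area=200):
    """Quad-tree search for the atmospheric light in an H x W x 3 image."""
    region = np.asarray(image, dtype=np.float64)
    while region.shape[0] * region.shape[1] >= min_area:
        h, w = region.shape[:2]
        if h < 2 or w < 2:
            break  # the region cannot be split any further
        quads = [region[:h // 2, :w // 2], region[:h // 2, w // 2:],
                 region[h // 2:, :w // 2], region[h // 2:, w // 2:]]
        # Score of a region: mean pixel value minus standard deviation.
        scores = [q.mean() - q.std() for q in quads]
        region = quads[int(np.argmax(scores))]
    pixels = region.reshape(-1, 3)
    # Choose the color vector with the smallest distance to (255, 255, 255).
    distances = np.linalg.norm(pixels - 255.0, axis=1)
    return pixels[int(np.argmin(distances))]
```

Subtracting the standard deviation favors bright regions that are also uniform, so a small bright object such as a headlight is less likely to be selected than a large hazy area.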

3.2. Transmission Estimation for Enhancing the Contrast of Blocks

In general, a hazy block yields low contrast, and the contrast of a restored block increases as the value of the estimated transmission decreases. We adopt the image-contrast-enhanced method [18] to maximize the contrast of the restored blocks and get the best estimated transmission value.

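The mean squared error contrast ($C_{MSE}$) [28] can define the contrast of a restored block, which is represented by Equation (2):

$$C_{MSE} = \sum_{p=1}^{N} \frac{\bigl(J_c(p) - \bar{J}_c\bigr)^2}{N} \qquad (2)$$

where $J_c(p)$ represents the RGB color channel $c \in \{r, g, b\}$ of pixel $p$ in a block of the restored image, $\bar{J}_c$ is the average value of $J_c(p)$, and $N$ is the number of pixels in a block.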

According to the assumption that the scene depths are locally similar [8,12,16], the dehazing algorithm in this paper determines a single transmission value for each block of size 32 × 32, and then gets the fixed optimal transmission value for each block. For a pixel $p$ in a block, $t(p)$ in Equation (1) can be replaced with the fixed estimated transmission $t$ of its block. Hence, $J(p)$ is represented by Equation (3):

$$J(p) = \frac{I(p) - A}{t} + A \qquad (3)$$

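If Equation (3) is put into Equation (2), $C_{MSE}$ can be represented by Equation (4):

$$C_{MSE} = \sum_{p=1}^{N} \frac{\bigl(I_c(p) - \bar{I}_c\bigr)^2}{t^2 N} \qquad (4)$$

where $\bar{I}_c$ is the average value of $I_c(p)$ in the input block. According to Equation (4), the mean squared error contrast is a decreasing function of $t$. Thus, we can select a small value of $t$ to increase the contrast of a restored block. However, the value of $t$ also determines the restored value of each pixel through Equation (3): when $t$ is too small, restored values can fall outside the valid pixel range and be truncated, which loses information.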
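The substitution of Equation (3) into Equation (2) can be written out step by step. In this sketch, $A_c$ denotes channel $c$ of the atmospheric light, $t$ the fixed transmission of the block, and bars denote block averages; the intermediate lines are a reader's derivation and are not taken from the original text.

$$
\begin{aligned}
\bar{J}_c &= \frac{\bar{I}_c - A_c}{t} + A_c, \\
J_c(p) - \bar{J}_c &= \frac{I_c(p) - \bar{I}_c}{t}, \\
C_{MSE} &= \sum_{p=1}^{N} \frac{\bigl(J_c(p) - \bar{J}_c\bigr)^2}{N}
         = \sum_{p=1}^{N} \frac{\bigl(I_c(p) - \bar{I}_c\bigr)^2}{t^2 N}.
\end{aligned}
$$

The atmospheric light cancels in the second line, so the contrast of the restored block depends on the input block only through its variance, scaled by $1/t^2$.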

When a block contains dense haze, its input pixels have a relatively narrow value range. Thus, even though it is assigned a small $t$ value, most of the restored values are not truncated, and the block can be correctly restored. On the contrary, a block without haze usually has a broad range of values for input pixels and should be assigned a larger $t$ value to reduce the information loss due to the truncation. Thus, we should not only enhance the contrast but also reduce the information loss.

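Therefore, we need quantitative measures of contrast and information integrity. The contrast cost $E_{contrast}$ and the information loss cost $E_{loss}$ were proposed by Kim et al. [25] to evaluate the contrast and information integrity, respectively.

$$E_{contrast} = -\sum_{c \in \{r,g,b\}} \sum_{p \in B} \frac{\bigl(J_c(p) - \bar{J}_c\bigr)^2}{N_B} = -\sum_{c \in \{r,g,b\}} \sum_{p \in B} \frac{\bigl(I_c(p) - \bar{I}_c\bigr)^2}{t^2 N_B} \qquad (5)$$

where $\bar{J}_c$ and $\bar{I}_c$ are the average values of $J_c(p)$ and $I_c(p)$ in block $B$, respectively, and $N_B$ is the number of pixels in $B$. Thus, we can maximize the mean squared error contrast by minimizing the value of $E_{contrast}$.

$$E_{loss} = \sum_{c \in \{r,g,b\}} \sum_{p \in B} \Bigl\{ \bigl(\min\{0, J_c(p)\}\bigr)^2 + \bigl(\max\{0, J_c(p) - 255\}\bigr)^2 \Bigr\} \qquad (6)$$

where $\min\{0, J_c(p)\}$ and $\max\{0, J_c(p) - 255\}$ denote the truncated values for output pixels due to the underflow and overflow, respectively.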

If we want to get a better restored image, the image contrast should be smoother, and the color information should be maintained as much as possible. Thus, these two factors should be considered jointly, and the overall cost function is described as Equation (7):

$$E = E_{contrast} + \lambda E_{loss} \qquad (7)$$

where $\lambda$ is a weight coefficient that controls the relative importance of the contrast cost and the information loss cost [18]. The minimum value of $E$ represents the most suitable contrast for restored images with as little color loss as possible. Finally, for each block in a hazy image, we can get an optimal transmission $t^*$ by minimizing the value of $E$. The value of $t^*$ is the transmission we use while dehazing.
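To make the trade-off concrete, the following Python/NumPy sketch searches for the block transmission that minimizes the sum of the contrast cost and the weighted information loss cost. Several details are assumptions made for the example and are not specified in the text: the function name `optimal_block_transmission`, a single-channel block (for instance the luminance), the weight `lam = 5.0`, and a search over candidate values from the initial transmission up to 1 in steps of 0.1.

```python
import numpy as np

def optimal_block_transmission(block, A, t_init, lam=5.0, step=0.1):
    """Return the candidate t in [t_init, 1] with the smallest overall cost."""
    block = np.asarray(block, dtype=np.float64)
    n = block.size
    best_t, best_cost = t_init, np.inf
    for t in np.arange(t_init, 1.0 + 1e-9, step):
        J = (block - A) / t + A                        # restored block, Equation (3)
        e_contrast = -np.sum((J - J.mean()) ** 2) / n  # negative MSE contrast
        underflow = np.minimum(0.0, J)                 # values truncated below 0
        overflow = np.maximum(0.0, J - 255.0)          # values truncated above 255
        e_loss = np.sum(underflow ** 2 + overflow ** 2)
        cost = e_contrast + lam * e_loss
        if cost < best_cost:
            best_t, best_cost = t, cost
    return float(best_t)
```

For a block with a narrow value range (dense haze), no restored value is truncated, so the smallest candidate wins; for a block that already spans the full range, the loss term dominates and the search returns a transmission close to 1.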

3.3. Adaptive Estimation of Initial Transmission

3.3.1. Calculating Image Haziness Flag

We present a haziness flag to measure the degree of haze in an image. The dark channel prior [9] can estimate the transmission of a block, which represents the luminosity of objects. The transmission has a close relationship with the degree of haze. Therefore, we adopt the average value of the transmission as the haziness flag T of an image, so that T reflects the degree of haze in the image.

The dark channel prior is based on the observation that most local blocks in haze-free outdoor images contain some pixels that have very low intensities in at least one color channel. In other words, the dark channel value of a haze-free image is close to zero [9]. For any input image $J$, the dark channel $J^{dark}$ can be expressed as Equation (8):

$$J^{dark}(p) = \min_{q \in \Omega(p)} \Bigl( \min_{c \in \{r,g,b\}} J_c(q) \Bigr) \qquad (8)$$

where $J_c$ is a color channel of $J$, $\Omega(p)$ represents a local block centered at $p$, and $q$ is a pixel in the local block $\Omega(p)$. A dark channel is the outcome of two minimum operators: $\min_{c \in \{r,g,b\}}$ is performed on each pixel, and $\min_{q \in \Omega(p)}$ is a minimum filter [9].

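Assuming that the atmospheric light $A$ is given, we can normalize the haze imaging Equation (1) by $A$ [9]:

$$\frac{I_c(p)}{A_c} = t(p)\,\frac{J_c(p)}{A_c} + 1 - t(p)$$

Since the transmission is a constant in a local block, denoted $\tilde{t}(p)$, and the value of $A_c$ is given, the dark channel operation can be given by the following equation [9]:

$$\min_{q \in \Omega(p)} \Bigl( \min_{c} \frac{I_c(q)}{A_c} \Bigr) = \tilde{t}(p) \min_{q \in \Omega(p)} \Bigl( \min_{c} \frac{J_c(q)}{A_c} \Bigr) + 1 - \tilde{t}(p) \qquad (9)$$

Using the concept of a dark channel [9], if $J$ is an outdoor haze-free image except for the sky region, the intensity of the dark channel is low and tends to be zero:

$$J^{dark}(p) = \min_{q \in \Omega(p)} \Bigl( \min_{c} J_c(q) \Bigr) = 0 \qquad (10)$$

which leads to:

$$\min_{q \in \Omega(p)} \Bigl( \min_{c} \frac{J_c(q)}{A_c} \Bigr) = 0 \qquad (11)$$

Putting Equation (11) into Equation (9), we can eliminate the multiplicative term and estimate the transmission simply by

$$\tilde{t}(p) = 1 - \min_{q \in \Omega(p)} \Bigl( \min_{c} \frac{I_c(q)}{A_c} \Bigr) \qquad (12)$$

where $\tilde{t}(p)$ is the predicted value of transmission of a block [9]. We calculate the average of $\tilde{t}$ over all blocks to obtain the average transmission T for the whole image, which is the value of the image haziness flag.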
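The computation of the haziness flag can be sketched as below. This Python/NumPy version is only an approximation of the procedure in the text: the function name `haziness_flag` is hypothetical, the local blocks are taken as non-overlapping 32 × 32 tiles (the block size used elsewhere in the paper, assumed here for the dark channel as well), and estimates are clipped to [0, 1].

```python
import numpy as np

def haziness_flag(image, A, block=32):
    """Average block-wise dark-channel transmission estimate, used as the flag T."""
    normalized = np.asarray(image, dtype=np.float64) / np.asarray(A, dtype=np.float64)
    h, w = normalized.shape[:2]
    estimates = []
    for y in range(0, h, block):
        for x in range(0, w, block):
            patch = normalized[y:y + block, x:x + block]
            # patch.min() applies both minimum operators at once:
            # over the color channels and over the pixels of the block.
            estimates.append(np.clip(1.0 - patch.min(), 0.0, 1.0))
    return float(np.mean(estimates))
```

An image whose pixels all equal the atmospheric light yields T = 0 (completely hazy), while an image containing a zero-valued pixel in every block yields T = 1 (haze-free).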

3.3.2. Correction of Initial Transmission

According to our experimental results, in a hazy image, the range of $T$ is generally between 0.4 and 0.6. Although the image haziness flag $T$ can characterize the nature of the image, taking $T$ as the initial transmission value leads to an excessive value of the optimal transmission $t^*$. Thus, we set a correction value $X$, and set $T \cdot X$ as the initial transmission value to decrease this initial value.

The structural similarity (SSIM) index is a method for predicting the perceived quality of digital television and cinematic pictures, as well as other kinds of digital images and videos. To guarantee that the restored images are closer to ground truths, we adopted the SSIM index [29] to measure the similarity between the ground truths and restored images. Because the traffic video is captured by a fixed camera, we can get a haze-free image of the same scene as a reference image in advance and compare the restored image with the reference image. The value of $T$ can be obtained directly because it is relevant to the nature of images, whereas the unknown value $X$ is calculated by the SSIM. In our experiments, we set $X$ as a series of values between 0.3 and 1.2 with an interval of 0.02. Then, we take every $X$ in this range multiplied by $T$, that is, $T \cdot X$, as the initial value of transmission and get the corresponding restored image. Finally, we find the restored image that is closest to the haze-free image based on the maximum value of the SSIM index. The corresponding value $T \cdot X$ is the optimal initial transmission value, and the corresponding correction value $X$ is the optimal correction value of the initial transmission.
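The search for the optimal correction value can be sketched as a simple grid search. The code below is illustrative only and makes several assumptions: `restore` is a hypothetical callable that maps an initial transmission value to a restored image, `best_correction` is a hypothetical name, and `global_ssim` is a simplified SSIM computed over the whole image as a single window, whereas the SSIM index of [29] is computed over local windows and averaged.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0):
    """Single-window SSIM over the whole image (a simplification of [29])."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))

def best_correction(T, reference, restore):
    """Try X = 0.30, 0.32, ..., 1.20 and keep the X giving the highest SSIM."""
    candidates = np.round(np.arange(0.30, 1.20 + 1e-9, 0.02), 2)
    scores = [global_ssim(restore(T * X), reference) for X in candidates]
    return float(candidates[int(np.argmax(scores))])
```

Because the camera is fixed, such a search only needs to be run offline against a haze-free reference image of the same scene.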

However, this method needs a haze-free image to get the optimal correction value $X$. This limits the method in practical applications; thus, it is necessary to get the correction value according to the image characteristics. After analyzing the image contrast and the haze in images, we find the relationship between the correction value of initial transmission and the image characteristics. Therefore, a relatively reasonable initial transmission correction value can be obtained directly from hazy images.

If the relatively reasonable correction value of initial transmission is $X'$, we take $T \cdot X'$ as the initial transmission value. Because the dehazing algorithm is based on the concept of enhancing the image contrast to the greatest degree, the contrast is the important indicator. The value of image haziness flag T represents the degree of haze that degrades the image contrast. Thus, the image contrast and haziness flag value should be considered simultaneously. We set $C$ as the image contrast and set $T \cdot C$ as a quantitative value representing the image characteristics. The constant value $X'$ depends on the range of value $T \cdot C$.

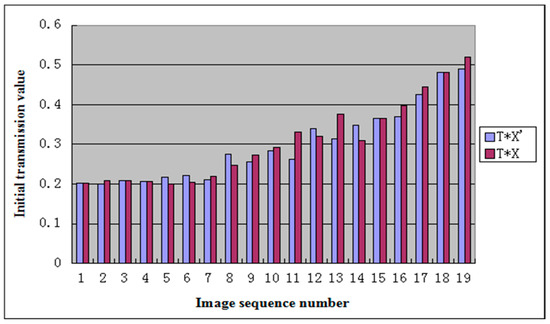

Table 1 shows the values of $X'$ for different ranges of $T \cdot C$. In Table 1, $X$ is the optimal correction value obtained by the method with reference images, and $X'$ is the relatively reasonable correction value obtained by the ranges of $T \cdot C$. In the dehazing algorithm, the initial transmission value is the key factor that affects the dehazing result. Table 1 also shows the values of $T \cdot X$ and $T \cdot X'$, which are the initial transmission values derived from the optimal correction value $X$ and the relatively reasonable correction value $X'$, respectively. Figure 2 shows the histogram of $T \cdot X$ and $T \cdot X'$, where the values of $T \cdot X$ and $T \cdot X'$ in the same group are similar, and the difference between the values in the same group does not affect the dehazing results significantly. Therefore, our method can determine a suitable initial transmission value using only the nature of images and then obtain a more adaptive transmission value.

Table 1.

The value of X′ for different ranges of T * C.

Figure 2.

The histogram of T * X and T * X′.

4. Adaptive Traffic Video Dehazing Method Using Spatial–Temporal Correlations

Compared with static traffic images, traffic videos have some unique characteristics. First, a traffic video is a collection of images with time continuity. Second, the cameras are fixed on the road and capture videos of the same scene over a long time, thus the videos are consistent in space. Therefore, we can use the correlations of spatial-temporal information to speed up traffic video dehazing.

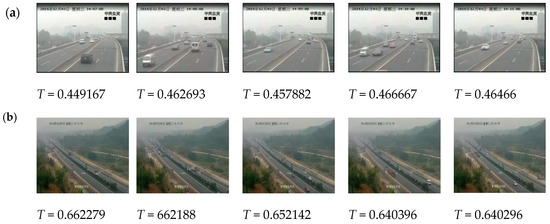

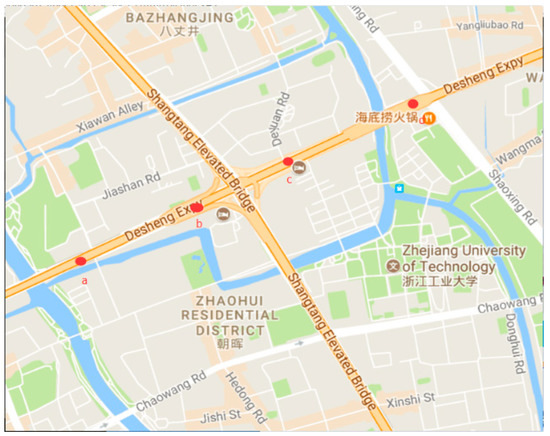

4.1. Time Continuity of Traffic Videos

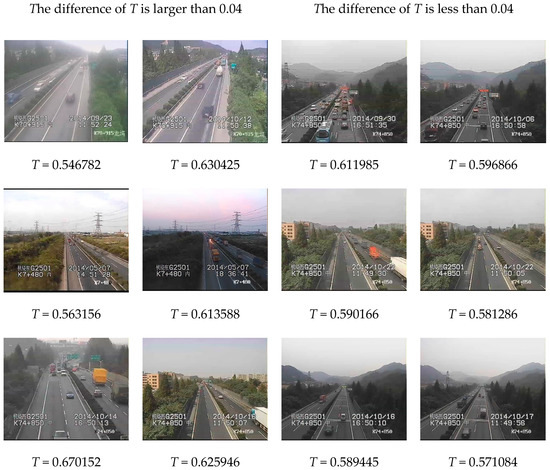

Because the cameras are fixed, the scenes in traffic videos barely change over a long period of time, and the influence of haze is stable. In our experiments, we use the traffic videos from ZhongHe elevated freeways in Hangzhou City, set a cycle of five minutes, and regard the frames in one cycle as a collection of images with the same characteristics. Figure 3 shows images taken at 1 min intervals in a 5 min cycle; the difference of $T$ among them is very small, usually less than 0.04. Figure 4 presents restored images obtained with different $T$ values, where a difference in $T$ of less than 0.04 has no obvious influence on visibility. Therefore, if the videos are captured at the same scene, the values of $T$ for the video images in a 5 min cycle are at the same level, and the cycle of 5 min is reasonable in practical application.

Figure 3.

The difference of T for the images in a 5 min cycle. The images come from different scenes (a,b).

Figure 4.

The images with different T values.

After setting the 5 min cycle, we can take the first frame of a video segment as a reference frame. We can determine the image haziness flag value $T$ and the relatively reasonable initial transmission correction value $X'$ from the reference frame and then calculate the optimal transmission $t^*$. In this way, we can speed up the dehazing processing for the traffic video. This method can avoid incorrect transmission estimation, which is caused by the changes in atmospheric light, and eliminate the discontinuity of videos after dehazing.
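The time-slice idea can be sketched as a small Python generator that re-estimates the dehazing parameters only on the reference frame of each cycle. The names `dehaze_video`, `estimate_params`, and `restore` are hypothetical placeholders for the parameter estimation and restoration steps described in this paper; only the 5 min (300 s) default cycle comes from the text.

```python
def dehaze_video(frames, fps, estimate_params, restore, cycle_seconds=300):
    """Re-estimate parameters on the first frame of each cycle and reuse them."""
    frames_per_cycle = max(1, int(round(fps * cycle_seconds)))
    params = None
    for index, frame in enumerate(frames):
        if index % frames_per_cycle == 0:
            params = estimate_params(frame)  # reference frame of this cycle
        yield restore(frame, params)
```

At 25 frames per second, for example, the estimation step would run once every 7500 frames under this scheme, and every other frame would only pay for the restoration step.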

4.2. Transmission Refinement Based on Spatial Structure

We estimate the optimal transmission based on the assumption that all pixels in a block have the same transmission. However, scene depths may vary spatially within a block, and the block-based transmission map usually has a blocking-artifact problem. Therefore, an edge-preserving filter is adopted to refine the block-based transmission map.

The single-image dehazing method using dark channel prior [9] employs the soft matting technique [30] to refine the block-based transmission map, which causes an enormous computational burden. In this paper, the guided filter method [31] is adopted to refine the transmission map, which has less computational cost. The filtered transmission $\hat{t}(p)$ is an affine combination of the guidance image $I(p)$, as shown in Equation (13):

$$\hat{t}(p) = \mathbf{s}^{T} I(p) + \psi \qquad (13)$$

where $\mathbf{s} = (s_r, s_g, s_b)^{T}$ is a scaling vector, and $\psi$ is an offset determined by the size of block. For a block $W$ in one image, the optimal parameters $\mathbf{s}^*$ and $\psi^*$ can be obtained by minimizing the difference between the transmission $t(p)$ and the filtered transmission $\hat{t}(p)$ using the least squares method as Equation (14):

$$(\mathbf{s}^*, \psi^*) = \arg\min_{\mathbf{s},\,\psi} \sum_{p \in W} \bigl(t(p) - \hat{t}(p)\bigr)^2 \qquad (14)$$

If the transmission is too small, the noise will be enhanced in the restored image [9]. Thus, the lower limit of the transmission is set to 0.1. If a window slides pixel by pixel over the entire image, multiple windows overlap at each pixel position. The centered window scheme sets the final transmission value at each pixel position to the average of all associated refined transmission values. However, this averaging blurs the final transmission map, especially around object boundaries, where the depths change abruptly: a window centered on a boundary pixel contains multiple objects with different depths, which leads to unreliable depth estimation. To overcome this problem, the shiftable window scheme [32] is employed instead of the centered window scheme. In the shiftable window scheme, the window is shifted within a block of 40 × 40, and the optimal shift position is selected depending on the smallest change of pixel values within the window. Because a shiftable window is selected for each pixel, the number of overlapping windows usually varies at different positions: the windows in smooth regions are selected more frequently than those in rough boundary regions. Thus, the shiftable window scheme can reduce the effects of unreliable transmission values derived from rough boundary regions, thereby alleviating the blurring artifacts.
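The least squares fit inside a single window can be sketched with NumPy as follows. This is a simplified illustration rather than the guided filter of [31]: it fits the affine model in one window only, without the regularization term or the averaging over overlapping (centered or shiftable) windows. The function name `refine_window` is hypothetical; the lower limit of 0.1 is the one given in the text.

```python
import numpy as np

def refine_window(t_block, guide, t_min=0.1):
    """Fit t ~ s^T I + psi inside one window and return the fitted transmission."""
    h, w = t_block.shape
    # One row per pixel: the guidance channels followed by a constant 1 for psi.
    features = np.asarray(guide, dtype=np.float64).reshape(h * w, -1)
    design = np.hstack([features, np.ones((h * w, 1))])
    target = np.asarray(t_block, dtype=np.float64).reshape(h * w)
    coeffs, *_ = np.linalg.lstsq(design, target, rcond=None)
    refined = (design @ coeffs).reshape(h, w)
    return np.maximum(refined, t_min)  # enforce the lower limit of the transmission
```

Because the fitted transmission is an affine function of the guidance image, it follows the edges of the guidance image, which is what removes the blocking artifacts of the block-based map.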

4.3. Lane Separation for Traffic Videos

After analyzing the spatial characteristics of traffic video, we found that the traffic lane is an obvious structure. In a traffic video detection system, the detected objects are mostly concentrated in the driveway regions. The areas outside lanes are not the regions of interest in traffic video processing. Therefore, we can process haze removal only in the driveway region of traffic video to reduce computing time.

However, the estimations of atmospheric light and transmission are based on the whole image. If these values are achieved only through the driveway regions, it may cause some deviations, especially when the sky occupies a large area of the image, such as the cases shown in Table 2. The larger the sky region is, the greater the deviation for the value of is. Therefore, the separated lane can be used in the last step to restore the pixels only for the driveway regions.

Table 2.

Global image and driveway.

We adopt a straight-line extraction algorithm based on the Hough transform to detect the lanes and separate the driveway region from the global image. The process of haze removal combined with the driveway region separation is described as follows:

- Calculate the global atmospheric light $A$, the value of haziness flag $T$, and the image contrast $C$, then estimate the optimal transmission map for each block in an image.

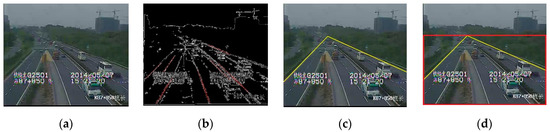

- Get the driveway region, as shown in Figure 5.

Figure 5. Lane space separation: (a) original Image; (b) lane candidates; (c) driveway boundary; (d) result for lane separation.

- Step 1: Obtain the edge information in the video through edge detection.

- Step 2: Remove obviously wrong-angle lines by Hough linear fitting, and obtain lane candidates, as shown in Figure 5b.

- Step 3: Find the far left lane and the far right lane, and set them as the driveway boundaries, then find the intersection of these two lines, as shown in Figure 5c.

- Step 4: Identify a rectangular area as the driveway region, which is composed of the boundary of the image and a horizontal line across the intersection, as shown in Figure 5c. If the intersection is outside the image, take the whole image area as the driveway region.

- Use the original pixel values and the optimal transmission of driveway region in the dehazing model to restore the image in the driveway region.
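Steps 3 and 4 and the final restoration step can be sketched in Python as follows. The sketch assumes that the two driveway boundaries have already been found (for example by a Hough transform) and are given as (slope, intercept) pairs in image coordinates with the y-axis pointing downward, that the driveway lies below the horizontal line through their intersection, and that the image is a single-channel (luminance) array. The function names `driveway_region` and `restore_driveway` are hypothetical.

```python
import numpy as np

def driveway_region(left_line, right_line, width, height):
    """Return (top, bottom, left, right) bounds of the rectangular driveway region.

    Each line is (slope, intercept), i.e. y = slope * x + intercept.
    """
    (m1, b1), (m2, b2) = left_line, right_line
    whole_image = (0, height, 0, width)
    if m1 == m2:
        return whole_image                  # parallel boundaries never intersect
    x = (b2 - b1) / (m1 - m2)
    y = m1 * x + b1
    if not (0 <= x < width and 0 <= y < height):
        return whole_image                  # intersection lies outside the image
    return (int(y), height, 0, width)       # area below the horizontal line

def restore_driveway(image, t_map, A, region, t_min=0.1):
    """Apply J = (I - A) / t + A inside the driveway region only."""
    top, bottom, left, right = region
    out = np.asarray(image, dtype=np.float64).copy()
    t = np.maximum(t_map[top:bottom, left:right], t_min)
    out[top:bottom, left:right] = np.clip(
        (out[top:bottom, left:right] - A) / t + A, 0.0, 255.0)
    return out
```

Pixels outside the region are copied unchanged, so the cost of the restoration step scales with the area of the driveway region rather than with the whole frame.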

In a traffic video detection system, each camera is located at a fixed position and captures the same traffic scenes for a long time. Based on the time continuity, the result of lane space separation for the initial frame of a traffic video can be used over a long time period. Lane space separation can decrease the area of haze removal and improve the efficiency of the dehazing algorithm. Figure 6 shows the haze removal results with and without lane separation. In this scene, the dehazing of 2000 frames needs 35.301 s without lane separation and 32.74 s with lane separation (lane space separation takes 0.182 s). Although lane separation requires some time, the operation occurs only in the first frame. Thus, the time for lane separation is shared by all frames of a traffic video. With an increasing number of frames, the efficiency of the dehazing algorithm with lane separation will be improved more significantly. Hence, if the driveway region is a larger portion of a whole image, the processing time can be decreased obviously. When real-time processing is required, even a small reduction in processing time is of practical significance.
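The reported timings can be converted into frame rates with a short calculation. The snippet below uses only the figures quoted above; it assumes that the 32.74 s total already includes the 0.182 s spent on lane separation, which the text does not state explicitly.

```python
frames = 2000
time_without = 35.301  # seconds without lane separation (from the text)
time_with = 32.74      # seconds with lane separation (assumed to include the 0.182 s)

fps_without = frames / time_without
fps_with = frames / time_with
saving = (time_without - time_with) / time_without

print(f"{fps_without:.1f} fps without, {fps_with:.1f} fps with, {saving:.1%} less time")
# prints: 56.7 fps without, 61.1 fps with, 7.3% less time
```

The frame rate without lane separation is consistent with the figure of about 57 frames per second quoted in the abstract.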

Figure 6.

Results for video dehazing with lane separation: (a) before haze removal; (b) haze removal without lane separation; (c) haze removal with lane separation.

4.4. Optimization Based on Spatial Distribution of Cameras

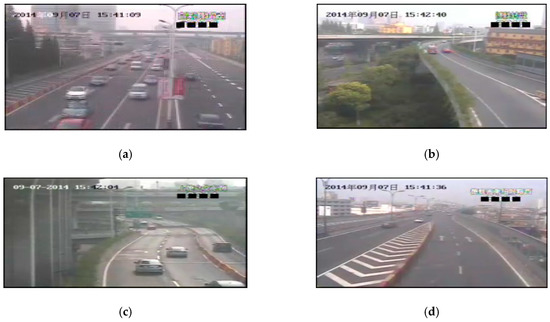

With an increasingly complex layout of transportation networks, the number of traffic monitoring cameras also increases gradually, and sometimes there are multiple cameras in the same section of road. These cameras located in close physical proximity usually have the same hardware indicators. In a traffic video detection system, multiple cameras are connected to one system. These cameras have similar characteristics according to their spatial distribution. The weather is also an index with spatial characteristics, that is, the degrees of haze are similar in nearby regions. Thus, we can use the spatial distribution information of cameras to speed up dehazing and optimize the performance of the traffic video detection system.

Figure 7 shows the images captured at the same time by four surveillance cameras on DE-elevated freeways in Hangzhou City. The locations of these cameras are shown in Figure 8, where the distance between the cameras is about 500 to 600 m. Table 3 shows the initial transmission values of these four videos. The haziness flag values T calculated from each video are shown in the first column of Table 3. We obtain a relatively proper initial transmission correction value $X'$ by using the method proposed in Section 3, and then determine the initial transmission value $T \cdot X'$. According to the results, these initial transmission values are numerically very similar; thus, exchanging them should have no obvious influence on the restored images.

Figure 7.

Example images of the nearby regions.

Figure 8.

The locations of cameras.

Table 3.

Initial transmission values for videos in nearby regions.

In traffic video dehazing, the cameras are divided into different regions according to their locations, and one camera in a region is set as the calibration camera. The images from the calibration camera are used to calculate the initial transmission value, which is also applied to other cameras in the same region. Therefore, we can avoid repeatedly calculating the values of $T$, $C$, and $X'$ for other cameras, thus improving the efficiency of haze removal. The result of haze removal with the initial transmission value obtained from the calibration camera is shown in Figure 9b, and the result using the initial transmission value obtained from the image itself is shown in Figure 9c. The two results are very similar. It takes 0.033 s to calculate the initial transmission value, which can be saved by using that of the calibration camera.

Figure 9.

Results of haze removal with and without calibration camera: (a) original image; (b) initial transmission value for calibration camera is 0.596; (c) initial transmission value for image itself is 0.578.
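The calibration-camera scheme can be illustrated with a short sketch. It is not the authors' implementation: the function name, the region and camera identifiers, and the `estimate` callback (standing in for the initial transmission estimation of Section 3) are all hypothetical. Only the value 0.596 and the 0.033 s cost are taken from the text.

```python
from typing import Callable, Dict, List


def share_initial_transmission(
    regions: Dict[str, List[str]],
    calibration: Dict[str, str],
    estimate: Callable[[str], float],
) -> Dict[str, float]:
    """Assign an initial transmission value to every camera.

    The (hypothetical) estimate() callback runs once per region, on the
    calibration camera only; every camera in that region reuses the result.
    """
    values: Dict[str, float] = {}
    for region, cameras in regions.items():
        t0 = estimate(calibration[region])  # one costly estimate per region
        for camera in cameras:
            values[camera] = t0
    return values


# Illustrative data: camera names are made up; 0.596 is the calibration
# value quoted for Figure 9b.
calls: List[str] = []


def fake_estimate(camera_id: str) -> float:
    calls.append(camera_id)  # record which cameras were actually analysed
    return 0.596


regions = {"region_a": ["cam1", "cam2", "cam3", "cam4"]}
calibration = {"region_a": "cam1"}
t0_by_camera = share_initial_transmission(regions, calibration, fake_estimate)

# Each camera that reuses the shared value skips one 0.033 s estimation.
saved_seconds = 0.033 * (len(t0_by_camera) - len(calls))
```

With four cameras in one region, only the calibration camera is analysed, so three estimations are skipped per update of the initial transmission value.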

5. Results

In the proposed method, a video sequence is first converted into a luminance-chromaticity color space, in which the Y component represents the luminance and the remaining two components represent the chromaticity. Human eyes are more sensitive to high-frequency signals than low-frequency signals and more sensitive to changes in visibility than changes in color. The chromaticity components are less affected by haze than the luminance component. Thus, we process only the luminance (Y) component to reduce computational complexity. In our experiments, we implemented each method in C/C++ with OpenCV. The source code was compiled with Microsoft Visual Studio 2010 and run on a machine with an Intel Core i5-2400 processor and 4 GB of main memory under Windows 7.
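The luminance-only idea can be sketched as follows. Several things here are assumptions rather than statements from the paper: the color space is taken to be analog YUV with BT.601 luma weights, the restoration step inverts the standard haze imaging model I = J·t + A·(1 − t), and leaving the chromaticity untouched is only one possible reading of the paragraph above. The function names and the lower bound `t_min` are hypothetical.

```python
KR, KG, KB = 0.299, 0.587, 0.114  # BT.601 luma weights (assumed color space)


def rgb_to_yuv(r: float, g: float, b: float):
    """Convert one RGB pixel (components in 0..1) to Y, U, V."""
    y = KR * r + KG * g + KB * b
    return y, 0.492 * (b - y), 0.877 * (r - y)


def yuv_to_rgb(y: float, u: float, v: float):
    """Algebraic inverse of rgb_to_yuv."""
    r = y + v / 0.877
    b = y + u / 0.492
    g = (y - KR * r - KB * b) / KG
    return r, g, b


def dehaze_luma(y: float, t: float, airlight: float, t_min: float = 0.1) -> float:
    """Invert I = J*t + A*(1 - t) for the luminance channel only.

    t_min is a hypothetical floor that keeps the division stable.
    """
    return (y - airlight) / max(t, t_min) + airlight


def dehaze_pixel(rgb, t: float, airlight: float):
    """Restore Y and keep U, V as they are (one reading of the text)."""
    y, u, v = rgb_to_yuv(*rgb)
    return yuv_to_rgb(dehaze_luma(y, t, airlight), u, v)
```

Restoring one channel instead of three is where the saving in computation comes from in this sketch.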

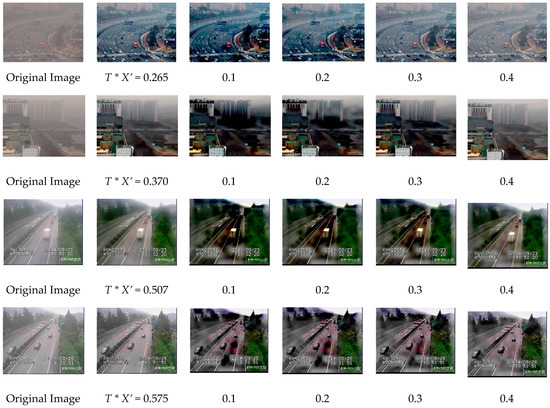

5.1. Results for Single Image Dehazing

Our adaptive method determines the initial transmission according to the image characteristics, so it can produce a more satisfactory dehazing result than a method with a fixed initial transmission. Figure 10 shows the images restored with our method under four different initial transmission values: 0.1, 0.2, 0.3, and 0.4. The experimental results show that smaller initial transmission values may produce blocks with overstretched contrast. The optimal initial transmission therefore lies between 0.2 and 0.3 for the first image, between 0.3 and 0.4 for the second image, and above 0.4 for the third and fourth images. The values that our method obtains for these images all fall within the optimal ranges. Our method therefore adapts to images with different degrees of haze.

Figure 10.

Results for different initial transmission using our adaptive method.

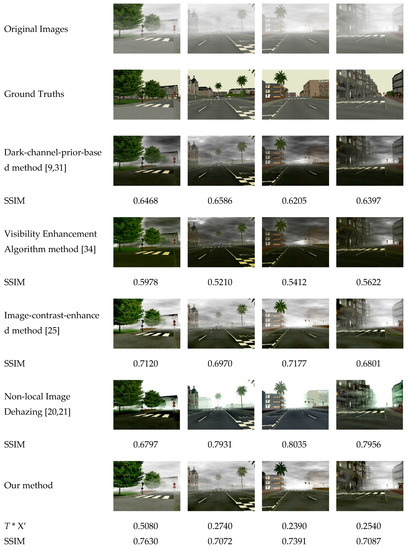

Figure 11 shows four images from the Foggy Road Image Database (FRIDA) [33] and the results of restoring them with the dark-channel-prior-based method [9,31], the visibility enhancement algorithm [34], the image-contrast-enhanced method [25], the non-local image dehazing method [20,21], and our method. The SSIM values in Figure 11 are averages over the three RGB channels. In FRIDA [33], each fog-free image is associated with several hazy images to which different kinds of fog have been added: uniform fog, heterogeneous fog, cloudy fog, and cloudy heterogeneous fog. According to the experimental results, the dark-channel-prior-based method does not remove haze satisfactorily in heterogeneous fog and cloudy heterogeneous fog, while the image-contrast-enhanced method and our method achieve more satisfactory results in these two cases. In addition, our method obtains the highest SSIM for the restored images compared with the first three methods, so the images restored by our method are more similar to the ground truth. For the non-local image dehazing method [20,21], the SSIM of some restored images may be higher than that of our method, but this method takes a longer processing time, as shown in Table 4, which lists the overall processing times of all methods. Our method is faster than the dark-channel-prior-based method [9,31] and the visibility enhancement algorithm [34]. It takes more time than the image-contrast-enhanced method [25] because it spends some time calculating the image haziness flag value and the initial transmission correction value, but its haze removal results are better. Although the non-local image dehazing method can produce more satisfactory restored images, it is too slow for real-time scenarios. In addition, its parameters usually need to be set manually for different scenes, which is not suitable for real-time traffic video processing. Furthermore, in video dehazing we can spread the time spent on calculating the haziness flag and correction value over all frames and reach a faster dehazing speed through the fusion of spatial and temporal information.

Figure 11.

Comparison of the restored images using different methods; * SSIM = structural similarity.

Table 4.

Processing times for single-image dehazing.
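The per-channel averaging of SSIM mentioned above can be illustrated with a minimal sketch. For brevity it computes SSIM from whole-image statistics, whereas the index defined in [29] is computed over local windows and then averaged, so the numbers it produces are not comparable with those in Figure 11. The function names and the toy pixel data are hypothetical; the constants K1 = 0.01 and K2 = 0.03 are the usual defaults.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]


def ssim_global(x: Sequence[float], y: Sequence[float],
                dynamic_range: float = 255.0) -> float:
    """SSIM from whole-image statistics (simplified, single window)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) * (a - mx) for a in x) / n
    vy = sum((b - my) * (b - my) for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx * mx + my * my + c1) * (vx + vy + c2))


def mean_rgb_ssim(img_a: List[Pixel], img_b: List[Pixel]) -> float:
    """Average the SSIM of the R, G, and B channels."""
    scores = [
        ssim_global([p[ch] for p in img_a], [p[ch] for p in img_b])
        for ch in range(3)
    ]
    return sum(scores) / 3.0


# Toy data: a "clear" image and a washed-out copy with reduced contrast.
clear = [(10.0, 20.0, 30.0), (200.0, 180.0, 160.0),
         (90.0, 100.0, 110.0), (50.0, 60.0, 70.0)]
hazy = [tuple(0.5 * c + 120.0 for c in p) for p in clear]

score_same = mean_rgb_ssim(clear, clear)
score_hazy = mean_rgb_ssim(clear, hazy)
```

An image compared with itself scores 1, and the washed-out copy scores lower, which is the sense in which a higher SSIM means "more similar to the ground truth".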

5.2. Results for Traffic Video Dehazing

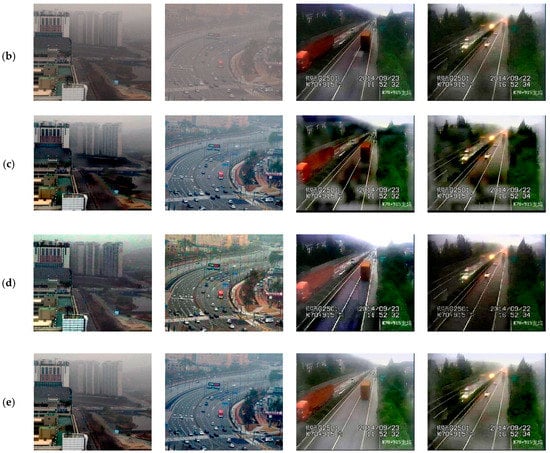

To get better restored images, we restore the whole image for the first frame of a time slice and use the area outside the lane space of that restored frame to replace the corresponding areas of the following frames. Moreover, we adopt SIMD instructions [35] and OpenMP [36] for parallel computation. Figure 12 presents a comparison of three approaches for traffic video dehazing: Figure 12a shows the original videos; Figure 12b shows the results of the dark-channel-prior-based method with guided filtering [9,31], which uses the transmission map obtained from the first frame to filter the following frames; Figure 12c shows the results of the image-contrast-enhanced method [25], whose initial transmission is a constant value of 0.3; and Figure 12d shows the results produced by our method.

The experimental results demonstrate that the image-contrast-enhanced method produces some blocks with overstretched contrast, such as in the images of groups (1), (3), and (4). In some urban scenes, the color of the driveway does not differ obviously from that of the background, as in group (1) with medium haze and group (2) with dense haze. Our method restores these videos in a manner closer to the haze-free scenes, and the driveway and the vehicles can be seen more clearly, whereas the dark-channel-prior-based method cannot deal with these videos. For suburban scenes where the trees and the road surface differ obviously in color, such as the images in group (3), which were captured in daytime, and the images in group (4), which were captured in dense haze with vehicle headlights on, our method achieves better restored results than the other two methods. In the images of group (3) restored by our method, the driveway color is more uniform. In the images of group (4) restored by our method, there are no blocks with overstretched contrast, and the color of the trees, with their hierarchical structure, is more realistic. Therefore, our method can maintain the image details and restore images that are more similar to the real scene with proper contrast.

Figure 12.

Comparison of restored videos. (a) Original Videos; (b) Dark-channel-prior-based method; (c) Image-contrast-enhanced method; (d) Non-local Image Dehazing; (e) Our method.
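The time-slice and lane-space strategy described in this subsection can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' C/C++ implementation: frames are plain 2-D lists of intensities, `lane_mask` is a hypothetical boolean map of the lane space, and `restore_pixel` stands in for the per-pixel restoration that the method derives from the first frame of the slice.

```python
from typing import Callable, List

Frame = List[List[float]]


def dehaze_time_slice(frames: List[Frame],
                      lane_mask: List[List[bool]],
                      restore_pixel: Callable[[float], float]) -> List[Frame]:
    """Restore one time slice of frames.

    The first frame is restored completely. In every later frame only the
    pixels inside the lane mask are restored; all other pixels are copied
    from the restored first frame.
    """
    first = [[restore_pixel(p) for p in row] for row in frames[0]]
    restored = [first]
    for frame in frames[1:]:
        restored.append([
            [restore_pixel(p) if in_lane else background
             for p, in_lane, background in zip(row, mask_row, first_row)]
            for row, mask_row, first_row in zip(frame, lane_mask, first)
        ])
    return restored


# Toy example: three 2x3 frames, with the lane occupying the middle column.
calls: List[float] = []


def double(p: float) -> float:
    calls.append(p)  # count how many pixels are actually restored
    return p * 2


frames = [
    [[1, 2, 3], [4, 5, 6]],
    [[7, 8, 9], [10, 11, 12]],
    [[13, 14, 15], [16, 17, 18]],
]
lane_mask = [[False, True, False], [False, True, False]]
result = dehaze_time_slice(frames, lane_mask, double)
```

In this toy case 10 pixels are restored instead of 18; the smaller the lane space relative to the frame and the longer the time slice, the larger the saving.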

As the experimental results show, our method produces better haze removal results by determining its parameters according to the image characteristics. It is also applicable to dense fog and to a variety of fog densities. Moreover, it makes the restored images more similar to the real scene and avoids overstretched contrast in the restored images. Therefore, it can solve two general problems of existing dehazing algorithms: contrast distortion after video dehazing and failure to remove dense haze.

In addition, our method exploits spatial correlation, time continuity, lane separation, and the spatial distribution of cameras to improve computational efficiency. Table 5 lists the processing time, the frames per second (fps), and the SSIM of the different methods for the video dehazing shown in Figure 12. To match actual traffic scenarios, we process the video frame by frame, and the reported data are the total processing times for 1000 frames. Our method uses the initial frame of a time slice to calculate the transmission map and atmospheric light and adopts lane separation to reduce the area to be dehazed. Unlike the other methods, the dehazing time of our method decreases as the time slice grows. According to the experimental results, our method clearly speeds up video dehazing, especially if the video has a high resolution or the driveway occupies only a small part of the whole image. Our method can restore a video with a resolution of 720 × 592 at about 57 fps, nearly four times faster than the dark-channel-prior-based method and one time faster than the image-contrast-enhanced method. Furthermore, our method obtains the highest SSIM for the restored videos compared with the other existing methods, so the videos restored by our method are more similar to the ground truth. Therefore, the proposed method not only has superior haze removing and color balancing capabilities but also restores and enhances the degraded videos in real time.

Table 5.

Comparing the performance parameters.
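The relation between total processing time, fps, and time-slice length can be made concrete with a small cost model. The model is an assumption for illustration only: it supposes that the first frame of a slice costs `full_frame_s` seconds and every later frame costs `lane_frame_s` seconds, and the two timings below are made-up values, not measurements from Table 5.

```python
def fps_from_total(frame_count: int, total_seconds: float) -> float:
    """Frames per second from a total processing time."""
    return frame_count / total_seconds


def amortized_frame_time(full_frame_s: float, lane_frame_s: float,
                         slice_len: int) -> float:
    """Average per-frame time when one full restoration is shared by a slice.

    Assumed model: the first frame costs full_frame_s, and each of the
    remaining slice_len - 1 frames costs lane_frame_s.
    """
    return (full_frame_s + (slice_len - 1) * lane_frame_s) / slice_len


# Hypothetical timings: 50 ms for a full frame, 10 ms for the lane area only.
per_frame = {n: amortized_frame_time(0.05, 0.01, n) for n in (1, 10, 50)}

# At about 57 fps, 1000 frames take roughly 1000 / 57 seconds in total.
seconds_for_1000_frames = 1000 / 57
```

Under this model the average per-frame time falls toward the lane-only cost as the slice gets longer, which matches the observation that dehazing time decreases when the time slice increases.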

6. Conclusions

Traditional haze removal methods fail to restore images with different degrees of haziness in a real-time and adaptive manner under most circumstances. To solve this problem, we propose an efficient traffic video dehazing method using adaptive dark channel prior and spatial-temporal correlations. The dark channel prior is based on the statistics of outdoor haze-free images, but it cannot adaptively estimate the initial transmission value from the degree of haze and the contrast of images. Therefore, we adopt the image-contrast-enhanced method to obtain the best estimated transmission value as the initial transmission value of the dark channel prior. The resulting image dehazing method using adaptive dark channel prior overcomes the shortcoming of existing dehazing algorithms that overstretch contrast after haze removal, and it handles images with dense haze to a satisfactory level. Additionally, we exploit the temporal-spatial correlation of traffic videos to speed up traffic video dehazing: time continuity is used to set a time slice, the characteristics of the block structure to refine the transmission, the lane space structure to reduce the restored area, and the multi-camera distribution to simplify the calculation of parameters. The experimental results show that our method restores a satisfactory image appearance, removing dense haze effectively without producing overstretched contrast. The temporal and spatial characteristics reduce the computation time, especially when dehazing multiple videos.

However, the dark channel prior is a statistical prior, and it may not work for some particular traffic videos. When the haze in a video changes rapidly, the dark channel of the scene radiance differs greatly between different times. In addition, if scene objects are inherently similar to the atmospheric light and no shadow is cast on them, the adaptive dark channel prior is invalid, because the dark channel of the scene radiance has bright values near such objects. As a result, our method may underestimate the transmission of these objects and overestimate the haze layer.
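The second limitation can be illustrated with the transmission estimate of the original dark channel prior [9], t = 1 − ω · min_c(I_c / A_c), evaluated here on single pixels for simplicity (patch size 1). This is the classic estimate rather than the adaptive variant proposed in this paper, and the pixel values, the atmospheric light, and ω = 0.95 are illustrative assumptions.

```python
from typing import Sequence


def dcp_transmission(pixel: Sequence[float], airlight: Sequence[float],
                     omega: float = 0.95) -> float:
    """Per-pixel dark-channel transmission estimate (patch size 1)."""
    dark = min(c / a for c, a in zip(pixel, airlight))
    return 1.0 - omega * dark


airlight = (0.9, 0.9, 0.9)

# A haze-free white vehicle whose color is close to the atmospheric light.
t_white_vehicle = dcp_transmission((0.88, 0.90, 0.89), airlight)

# A haze-free dark road surface.
t_road = dcp_transmission((0.20, 0.20, 0.22), airlight)
```

Although both pixels are assumed to be haze-free, the white vehicle receives a transmission close to zero while the road surface receives a high one, so the bright object is treated as if it were covered by dense haze.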

Author Contributions

Formal analysis, G.Z. and J.W.; methodology, T.D., Y.Y. and Y.S.; project administration, T.D.; validation, Y.Y.; literature search, J.W. and G.Z.; writing-original draft, T.D. and J.W.; writing-review and editing, G.Z. and Y.S.

Funding

This work is supported by the National Natural Science Foundation of China (Nos. 61672414, 61572437).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Pyka, K. Wavelet-Based Local Contrast Enhancement for Satellite, Aerial and Close Range Images. Remote Sens. 2017, 9, 25.

2. Li, R.; Pan, J.; Li, Z.; Tang, J. Single Image Dehazing via Conditional Generative Adversarial Network. In Proceedings of the CVPR Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 July 2018; pp. 8202–8211.

3. Mangeruga, M.; Bruno, F.; Cozza, M.; Agrafiotis, P.; Skarlatos, D. Guidelines for Underwater Image Enhancement Based on Benchmarking of Different Methods. Remote Sens. 2018, 10, 1652.

4. Oakley, J.P.; Satherley, B.L. Improving image quality in poor visibility conditions using a physical model for contrast degradation. IEEE Trans. Image Process. 1998, 7, 167–179.

5. Narasimhan, S.G.; Nayar, S.K. Removing weather effects from monochrome images. In Proceedings of the CVPR Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; pp. 186–193.

6. Chen, G.; Wang, T.; Zhou, H. A Novel Physics-based Method for Restoration of Foggy Day Images. J. Image Graph. 2008, 13, 888–893.

7. Tan, R.T. Visibility in bad weather from a single image. In Proceedings of the CVPR Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

8. Fattal, R. Single image dehazing. In Proceedings of the ACM Siggraph, Los Angeles, CA, USA, 11–15 August 2008; pp. 1–9.

9. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. In Proceedings of the CVPR Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1956–1963.

10. Lai, Y.; Chen, Y.; Chiou, C.; Hsu, C. Single-Image Dehazing via Optimal Transmission Map Under Scene Priors. Circuits Syst. Video Technol. 2015, 25, 1–14.

11. Zhu, Q.; Mai, J.; Shao, L. A Fast Single Image Haze Removal Algorithm Using Color Attenuation Prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

12. Yeh, C.; Kang, L.; Lee, M.; Lin, C. Haze effect removal from image via haze density estimation in optical model. Opt. Express 2013, 21, 27127–27141.

13. Li, B.; Wang, S.; Zheng, J.; Zheng, L. Single image haze removal using content-adaptive dark channel and post enhancement. IET Comput. Vis. 2014, 8, 131–140.

14. Wang, J.; He, N.; Zhang, L.; Lu, K. Single image dehazing with a physical model and dark channel prior. Neurocomputing 2015, 149, 718–728.

15. Huang, S.; Chen, B.; Wang, W. Visibility Restoration of Single Hazy Images Captured in Real-World Weather Conditions. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 1814–1824.

16. Riaz, I.; Fan, X.; Shin, H. Single image dehazing with bright object handling. IET Comput. Vis. 2016, 10, 817–827.

17. Sun, K.; Wang, B.; Zhou, Z. Real time image haze removal using bilateral filter. Trans. Beijing Inst. Technol. 2011, 31, 810–814.

18. Wang, D.; Fan, J.; Liu, Y. A foggy video images enhancement algorithm of monitoring system. J. Xian Univ. Posts Telecommun. 2012, 5, TP391.41.

19. Kumari, A.; Sahdev, S.; Sahoo, S.K. Improved single image and video dehazing using morphological operation. In Proceedings of the IEEE International Conference on VLSI Systems, Architecture, Technology and Applications, Bangalore, India, 8–10 January 2015; pp. 1–5.

20. Berman, D.; Treibitz, T.; Avidan, S. Non-Local Image Dehazing. In Proceedings of the CVPR Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682.

21. Berman, D.; Treibitz, T.; Avidan, S. Air-light Estimation using Haze-Lines. In Proceedings of the IEEE 13th International Conference on Intelligent Computer Communication and Processing, Stanford, CA, USA, 12–14 May 2017; pp. 5178–5191.

22. Tarel, J.; Hautière, N.; Cord, A.; Gruyer, D.; Halmaoui, H. Improved visibility of road scene images under heterogeneous fog. In Proceedings of the IEEE Intelligent Vehicles Symposium, San Diego, CA, USA, 21–24 June 2010; pp. 478–485.

23. Zhang, J.; Li, L.; Zhang, Y.; Yang, G.; Cao, X.; Sun, J. Video dehazing with spatial and temporal coherence. Vis. Comput. 2011, 27, 749–757.

24. Shin, D.K.; Kim, Y.M.; Park, K.T.; Lee, D.; Choi, W.; Moon, Y.S. Video dehazing without flicker artifacts using adaptive temporal average. In Proceedings of the IEEE International Symposium on Consumer Electronics, JeJu Island, Korea, 22–25 June 2014; pp. 1–2.

25. Kim, J.; Jang, W.; Sim, J.Y.; Kim, C.S. Optimized contrast enhancement for real-time image and video dehazing. J. Vis. Commun. Image Represent. 2013, 24, 410–425.

26. Narasimhan, S.G.; Nayar, S.K. Vision and the Atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

27. Pan, X.; Xie, F.; Jiang, Z.; Yin, J. Haze Removal for a Single Remote Sensing Image Based on Deformed Haze Imaging Model. IEEE Signal Process. Lett. 2015, 22, 1806–1810.

28. Peli, E. Contrast in complex images. J. Opt. Soc. Am. A 1990, 7, 2032–2040.

29. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

30. Levin, A.; Lischinski, D.; Weiss, Y. A closed-form solution to natural image matting. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 30, 228–242.

31. He, K.; Sun, J.; Tang, X. Guided image filtering. In Proceedings of the Springer ECCV European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; pp. 1–14.

32. Szeliski, R. Computer Vision: Algorithms and Applications; Springer: New York, NY, USA, 2010.

33. Foggy Road Image DAtabase FRIDA. Available online: http://www.lcpc.fr/english/products/image-databases/article/frida-foggy-road-image-database (accessed on 8 June 2012).

34. Huang, S.; Chen, B.; Cheng, Y. An Efficient Visibility Enhancement Algorithm for Road Scenes Captured by Intelligent Transportation Systems. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2321–2332.

35. Patterson, D.A.; Hennessy, J.L. Computer Organization and Design: The Hardware/Software Interface; Morgan Kaufmann Publishers: Burlington, MA, USA, 1998.

36. Chapman, B.; Jost, G.; van der Pas, R. Using OpenMP: Portable Shared Memory Parallel Programming (Scientific and Engineering Computation); MIT Press: Cambridge, MA, USA, 2008.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).