Abstract

Automatic registration of terrestrial laser scanning point clouds is a crucial but unresolved topic that is of great interest in many domains. This study combines terrestrial laser scanner with a smartphone for the coarse registration of leveled point clouds with small roll and pitch angles and height differences, which is a novel sensor combination mode for terrestrial laser scanning. The approximate distance between two neighboring scan positions is firstly calculated with smartphone GPS coordinates. Then, 2D distribution entropy is used to measure the distribution coherence between the two scans and search for the optimal initial transformation parameters. To this end, we propose a method called Iterative Minimum Entropy (IME) to correct initial transformation parameters based on two criteria: the difference between the average and minimum entropy and the deviation from the minimum entropy to the expected entropy. Finally, the presented method is evaluated using two data sets that contain tens of millions of points from panoramic and non-panoramic, vegetation-dominated and building-dominated cases and can achieve high accuracy and efficiency.

1. Introduction

The applications of terrestrial laser scanning (TLS) are continuously growing in areas such as city modeling, heritage documentation, manufacturing, and terrain surveying. The primary purpose of terrestrial laser scanning is to generate a complete surface model of the target object. However, because the limits of coverage vary and interruptions exist, a series of scans from different views are generally necessary. Hence, point clouds from various scans have their own coordinate frames. To obtain a complete model, point clouds from multiple scans must be transformed into a common uniform frame. This process is called registration.

Standard method for the registration task includes using artificial targets and semi-automatic registration. There are many types of artificial targets such as spheres [1] and planar targets [2], and such targets generally have special shapes or reflective features. When the targets are identified, transformation parameters can be calculated based on corresponding targets between different scans. One drawback of this method is that it takes too much additional time to arrange the targets in the scene. In some extreme conditions, it is impossible to place any targets. Additionally, placing artificial targets inevitably causes occlusions and disrupts the integrality of the data. Semi-automatic registration is also a commonly used registration method, which possesses a high universality and has been implemented in many commercial or opensource software packages (e.g., Riscan, Cyclone and Cloudcompare), and the corresponding points are picked manually to calculate the transformation parameters. Nevertheless, it sometimes takes much time and manpower when there are a number of scans.

To avoid the manual intervention, much research has been conducted focusing on automatic registration. Generally, automatic registration comprises two stages: coarse registration, roughly aligning scans and producing good initial pose parameters, and fine registration, obtaining final registration results with high precision. The most widely used method for fine registration is the Iterative Closest Point (ICP) algorithm introduced by Besl and McKay [3] and Chen and Medioni [4]. Transformation parameters between two scans are estimated iteratively until the sum of the squares of Euclidean distances between corresponding points converge to the minimum. Variants and optimizations have been proposed in various contexts since then, such as mathematical framework [5,6], corresponding metric [7,8], corresponding selection and weighting [9]. The drawback is that the distances will most likely converge to a local minimum without a good prior alignment. Therefore, methods to roughly align two original points in the coarse registration stage are important to the ICP algorithm.

A general line of thought for coarse registration is based on distinctive spatial elements, such as points, lines or planes. Those spatial elements generally have unique features, which are different from most others and can be extracted for correspondence searching. Scale Invariant Feature Transform (SIFT) [10] is one of the most widely used point features and can be classified as 2D key points. Bendels et al. [11] introduced SIFT for TLS points registration combing SIFT features from camera images with surface elements from range images. Barnea and Filin [12] proposed an autonomous model based on SIFT key points of panoramic images. This method addresses the registration of multiple scans. Application of the SIFT feature to reflectance images was introduced by Böhmand and Becker [13]. False matches caused by symmetry and self-similarity are filtered by checking the geometric relationship in a RANSAC filtering scheme. The SIFT feature was also used on reflectance images by Wang and Brenner [14] and Kang et al. [15,16]. Weinmann et al. [17] extracted characteristic feature points from reflectance images based on SIFT features and projected them into 3D space to calculate transformation parameters. This algorithm can achieve a high accuracy without fine registration by using 3D-to-3D geometric constraints. Besides SIFT descriptor, other image features also have been used, such as Moravec operator [18]. Methods mentioned above mainly rely on mature image processing algorithms which are efficient and convenient, but generally require large overlap areas to make sure enough correspondences. To relax the overlap requirement and adapt to the case of minimal overlap, Renaudin et al. utilized the linear features from photogrammetric and TLS dataset for the registration of multiple scans, with the coregistration of image and point cloud as a byproduct [19]. Similarly, photogrammetric linear and planar features were used for scan registration by Canaz and Habib [20], to avoid the requirement of large overlap.

In situations of strong viewpoint changes or poor intensity resolution, a method using 2D features is prone to failure; 3D features display more robust performances. Thus, many studies have focused on 3D point features for registration. Those methods extract 3D feature point sets and identify corresponding points to recover the transformation between two scans. Gelfand et al. [21] proposed an integral volume descriptor to detect feature points and match those points using an algorithm called branch-and-bound correspondence search. Theiler et al. [22] extracted Difference-of-Gaussian (DoG) and 3D Harris [23] key points from voxel-filtered datasets as input to the 4-Points Congruent Sets algorithm [24] to achieve coarse registration. Rusu et al. [25] estimated a set of 16D features called Point Feature Histograms (PFH), which are robust to scale and noise, providing good starting points for ICP. Then, Rusu et al. [26] applied some optimizations to PFH and proposed Fast Point Feature Histograms (FPFH) reducing the computation time dramatically. Examples of point features also include normal vector [27], distance between target point and center of neighboring points [28], 2.5D SIFT [29], curvature [30] and rotational projection statistics feature [31]. Stamos and Leordeanu extracted the intersection lines of neighboring planes as the primitives and calculated the transformation parameters with at least two line pairs [32]. Yao et al. presented a common framework for the automatic registration of two scans with linear or planar features and the orientation angles and distances were used to find candidate matches [33]. Yang and Zang used curves as matching primitives to find the initial transformation for point clouds with freeform surfaces, such as statues and artifacts [34]. Planes are also used for coarse registration in many studies. Theiler and Schindler [35] used intersecting planes to generate a set of virtual tie points, which are described by properties of corresponding parent planes. Then, tie points matching is guided by those descriptors. In the work of Dold and Brenner [36], planar patches were described by features including area, boundary length, bounding box and mean intensity value and matched with the help of image information. In this approach, those features of planar patches are sensitive to density and occlusions; thus, Brenner et al. [37] proposed a more robust method with planar patches. Plane triples were used instead of single patch in the matching process based on a sensible criterion. Pu et al. [38] combined the semantic features of planar patches and GPS position to derive the mathematical formulation of transformation parameters. The semantic information was also used in [39], in which Yang et al. detected features points based on semantic feature and the matching was processed by searching for corresponding triangles constructed and eliminate from the feature points. Kelbe et al. [40] calculated the transformation parameters for the forest TLS data based on the results of tree detection, which can be obtained from some previous work [41,42]. Some other geometric elements are also used in the registration, such as salient directions [43], cylindrical and polygonal objects [44], fitted objects in industrial environments [7] and other fitted geometric primitives [45]. To identify a good feature combination, Urban and Weinmann [46] presented a framework to evaluate different detector-descriptor combinations, in which five approaches are involved.

Another train of thought depends on external sensors (e.g., GPS/IMU) to record the position and orientation of each scan. Point clouds from different scans can be registered easily. This method is often used in mobile laser scanning [47,48] but can also be used in terrestrial laser scanning [38,49]. External sensors are helpful for registration of terrestrial point clouds, although the high cost of external sensors limits the application of this method.

The registration techniques described above suggest that most methods focus on detailed information extracted from point clouds to achieve the registration task. In complex scenes, feature extraction and matching are time-consuming and prone to failure if too much symmetry, self-similarity and occlusions exist. As the external sensors are quite helpful for point registration, this paper presents a novel method for the automatic coarse registration of leveled point clouds, combining terrestrial laser scanner with the smartphone, which is low-cost compared with professional sensors. This method works without synchronization between scanner and smartphone and jumps out of detailed features to register terrestrial point clouds from a macroscopic perspective. Scanner positions are roughly measured by the smartphone GPS and the distance between neighboring scanner positions is used as a translation constraint. The distribution coherence of the whole points from two scans is measured by 2D distribution entropy and used to identify optimal transformation parameters. The main contributions of this paper are as follows:

- combining the terrestrial laser scanner with smartphone for coarse registration;

- using 2D projection entropy to measure the distribution coherence between two scans; and

- presenting the Iterative Minimum Entropy (IME) algorithm to correct initial transformation parameters and reduce the effect of positioning error from the smartphone GPS.

2. Methodology

2.1. Combining the Terrestrial Laser Scanner with Smartphone

Smartphones are currently commonly used and quite popular in daily life. Most smartphones have the GPS and have previously been utilized in the processing of point clouds [50,51]. The position of a laser scanner can be measured by the GPS on a smartphone by an SDK of network maps such as Google Map or phone applications such as GPS checker, etc. To measure the scan position, a smartphone can easily be attached to the scanner when GPS data are being collected; this process does not produce a highly accurate measurement of scanner position, but is rapid and convenient.

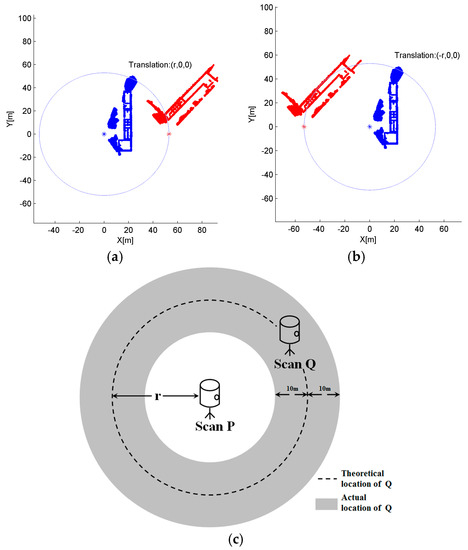

With each scan position measured, the distance between adjacent scans can be obtained. The distance measuring with smartphone GPS does not require the intervisibility between neighboring scans, bringing a favorable flexibility for the scanner set up, which is applicable to most cases, especially in the plot containing many trees or other obstacles. For two scans, P and Q, distance r between their scan positions can then be calculated. With r, it is possible to determine the relative position relation between the origins of P and Q. If P is set reference whose origin coordinate is (0, 0, 0) under its own coordinate system, then Q’s scan position will be located on the circle whose center is P and radius is r. However, because the framework of TLS data is usually related with the orientation of the scanner, e.g., the x-axis is commonly the direction of the starting laser beam on the scanner’s rotating plane, y-axis is set to the direction perpendicular to x-axis whirling counterclockwise and the direction of z-axis follows the right-hand rule in Reigl VZ-400 laser scanner used in this paper, the posture of the point cloud is thus unknown without external reference. Although the attitude parameters can be obtained by the gyroscopes, it is also required to know how the scanner constructs the frame to associate the postures for two scans, which will bring more manual intervention and make the method poor in the applicability for the data from unknown scanner. The distance r itself is not enough to recover the transformation between two scans directly, as there are many possible distributions for P and Q only with the constraint r, which is shown in Figure 1a,b. Nevertheless, the most appropriate transformation parameters can still be searched based on the spatial relation between scans P and Q, with postures considered. The problem is that smartphone GPS commonly has a location error up to 10 m, causing an error which may reach 20 m in the distance r, resulting in more uncertainty for the location of Q (as shown in Figure 1). We propose an Iterative Minimum Entropy (IME) algorithm to reduce the effect of locating error and refine the initial transformation parameters in Section 2.2.3.

Figure 1.

Relation between P and Q: (a,b) two different postures of P and Q with the same distance r, and the distance r is not enough to recover the transformation between them; and (c) the uncertainty of Q’s location.

2.2. Registration with 2D Projection Entropy

2.2.1. Searching for the Minimum Entropy in 3D Space

Information entropy [52] is the measurement of the expected value of information and represents the uncertainty of an event, which has been used in many areas, such as remote sensing image evaluation [53], classification [54] and image matching [55]. This section exploits information entropy to measure the distribution coherence of point clouds from two neighboring scans.

According to information theory, for a discrete random event with corresponding probabilities , the amount of information of X can be measured by

The more information X contains, the larger will be, with more uncertainty of X.

For two scans P and Q, different spatial distributions achieved by different transformation parameters represent different levels of point distribution uncertainty between two scans. The uncertainty of the point distribution can also be measured by entropy. As the relative position relation between P and Q can be obtained through the smartphone in Section 2.1, P and Q can be transformed into the uniform coordinate framework. In this paper, an r translation is applied to Q, where r is the coarse distance between origins of P and Q, and the origin of Q will turn into (r, 0, 0) under the coordinate framework of P. The space covered by P and Q is subdivided into a regular cuboid, and a cell C can be treated as an event. The probability that one point falls into C is

where i, j and k are the index of C with n(i, j, k) points falling into and N is the total number of points in both P and Q. The distribution entropy of the whole points thus can be calculated by

where in, jn and kn are the cell numbers along three axes x, y and z. In case of the same total number of points, higher H(P,Q) indicates more cells containing at least one point and more discrete distribution of these points. If all corresponding points in P and Q fall into the same cell, the entropy will generally achieve the minimum. If we get can identify the minimum entropy among different point distribution cases, the corresponding transformation parameters will most likely be an ideal answer. Thus, the task of registration can be transformed into finding the minimum distribution entropy of P and Q.

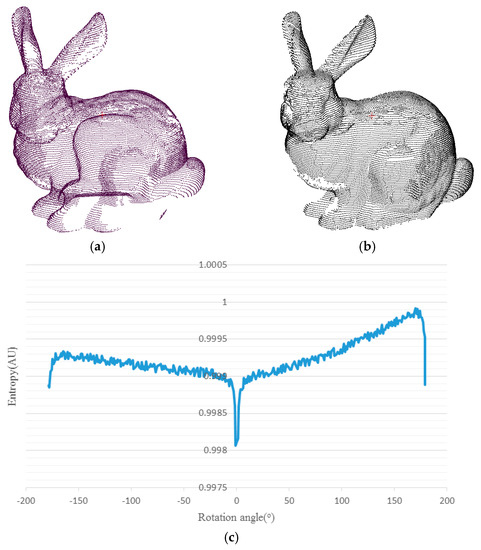

Figure 2 shows the relation between rotation angle γ and the corresponding entropy in Stanford Buddy datasets in 3D space. Points in Figure 2a,b are previously registered, and γ is the rotation angle of points in Figure 2b around Z axis. Entropy corresponding to different γ is shown in Figure 2c. Figure 2c shows that entropy achieves the minimum when γ is 0°; thus, the optimal transformation parameters can be observed in the process of searching for minimum entropy. However, for point clouds captured in real scenes, there are always three rotation parameters that must be considered simultaneously, making the search process a three-nested loop, which is time-consuming. In addition, the cuboid partition is executed in a large-scale 3D space, also making this method inefficient. Thus, some changes are essential to simplify the search process, which will be described in next section.

Figure 2.

The relation between γ and its corresponding entropy: points in (a,b) are previously registered, and the entropy is calculated when points in (b) are being rotated γ degrees around the z axis. The entropy change with γ is recorded in (c).

2.2.2. Transformation from 3D to 2D Space

To reduce the cost of searching process, we project points onto the plane of X-o-Y. On this plane, the 2D space is divided into a regular grid, with the number of points falling into every block recorded. Then, transformation parameters are detected by searching for the minimum distribution entropy on this grid.

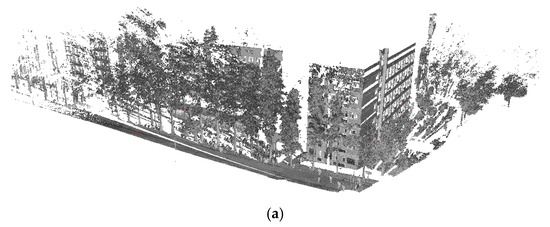

Before projecting points onto X-o-Y plane, preprocessing is applied for the original points, including ground filtering and removing small clusters. The purpose of filtering out ground points and small point clusters is to reduce the spatial extent and number of points after projection because a discrete or flying point may provide an extreme coordinate value, expand grid extent and produce more redundant empty blocks. As described by Pirotti et al. [56], ground filtering is critical to the definition of above-ground elements, which are small point clusters in this paper. Vosselman and Mass [57] presented an overview of ground filtering methods that can be divided into three primary classes. Those three methods are based on progressive densification of a triangle mesh, mathematical morphology and linear prediction and hierarchic robust interpolation. Although point filtering is a wide field, we can narrow it to the case of our research, in which ground points of high accuracy are not required. Preprocessing is conducted in three major steps:

- Ground filtering: The ground is removed using progressive densification of a triangle mesh.

- Clustering above-ground points: The clustering process is based on distance. If the distance between p and its closest point q is within the distance threshold, then p and q will be identified as the same cluster.

- Removing small clusters: In this paper, a cluster is removed if the number of points in the cluster is less than 500.

The remaining points will be projected onto the X-o-Y plane, primarily comprising architectures, vegetation and some other types of points. Typically, we follow the assumption that a laser scanner is set approximately vertical during data acquisition [37]. Sometimes it is not the case, such as scanning a high building in close distance with a tilted scanner posture, and this can be compensated by rotating the point cloud through Principal Components Analysis (PCA), as described by Polewski et al. [58]. Thus, the coordinates x and y can be directly used as the projected coordinates on X-o-Y plane and Figure 3 shows an example of the projection in this paper. Then the scan Q can be transformed into P’s coordinate framework on X-o-Y plane, with the translation of distance r (obtained in Section 2.1) and the origin of Q will turn into (r, 0).

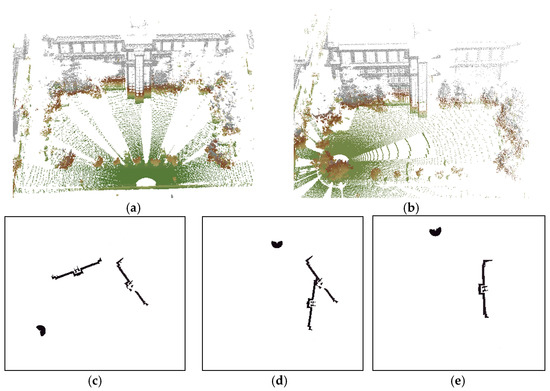

Figure 3.

Transformation from 3D to 2D: (a) original points; (b) ground filtering; (c) clustering result with small clusters colored by green; (d) filtering result and the number of points is 3,407,526; and (e) projecting result and the number of points in each block is rendered by different colors of the block center. The number of points is 27,706.

Under the same coordinate framework, the distribution entropy of P and Q can be calculated. The combined 2D space is subdivided into a regular grid of block size tG to generate a grid G(P,Q). The size of G(P,Q) can be calculated by

where , , and represent the bounding rectangle of the projected points of both P and Q. The index of the block that point p falls into can be obtained using

where and are the projected coordinates of p. The distribution entropy for P and Q can be calculated on G(P,Q) with

where is the number of points falling into the block of index (i, j) and N is the total number of points in both P and Q.

Fundamentally, the Euler distance, which is generally used in ICP, can also be utilized to measure the distribution for two scans. However, the convergence basin of ICP is usually too small [20] and it works only with an accurate description for the postures of two scans. For two coincident wall surfaces, similar distance error will be obtained as the height of the wall changes. However, the entropy will change as the height of the wall changes, as the space distribution of all the points is considered. Additionally, computing the distance error related with ICP is more time consuming than the computation of entropy, making Euler distance less practical in the coarse alignment stage.

After point projection, the registration task will convert to 2D point alignment and only the rotation angle around the z axis needs to be considered. With the origins of P and Q have been set (0, 0) and (r, 0) under P’s coordinate framework, the postures of the two scans are only related with the rotation angles around (0, 0) and (r, 0), respectively. The 2D relation between P and Q when registered will be

where P and Q rotate angles of and , respectively, around their own z axis. Thus, the 2D point alignment will convert to finding appropriate rotation parameters and to gain the minimum entropy:

where is the distribution entropy under the rotation parameters and when the distance between P and Q is r. The coordinates of P and Q need recalculation every time and vary, which is time consuming. Thus, point cloud simplification is necessary and we achieve it by grid simplification in this paper. The point cloud from P or Q is assigned to a grid of block size dG and every block is represented by its center, with the number of points falling into it recorded. Figure 4 illustrates a simple example of the searching process. Point clouds in Figure 4a,b rotate around their own origins, (0, 0) and (r, 0), and the continuous ranges of and , which are , are discretized with the interval of 1°. The rotation angles corresponding to the minimum entropy are selected to calculate the initial transformation parameters, as shown in Figure 4e.

Figure 4.

Search for the minimum entropy: (a,b) the original point clouds; (c,d) searching process; and (e) the postures corresponding to the minimum entropy.

With the assumption of the approximately vertical scanner, the z component of translation parameters between P and Q can be roughly determined based on the distance between the ground parts. Then, the relation between two registered scans P and Q can be extended back to 3D space by

where and are rotation angles around the z axis for P and Q, r is the distance between the two scan positions of P and Q and is the transformation along the z axis. The transformation from Q to P will be

2.2.3. Correcting Initial Transformation Parameters Using Iterative Minimum Entropy

As described in Section 2.1, the distance r between the scan positions of P and Q generally has an error up to 20 m. Search for the minimum entropy is not always the ideal case shown in Figure 4. In this paper, we propose a method called Iterative Minimum Entropy (IME) to correct r when searching for the minimum entropy and reduce the impact from smartphone GPS location error. The process of IME is as follows:

1 Initial distance and rotation angle detection

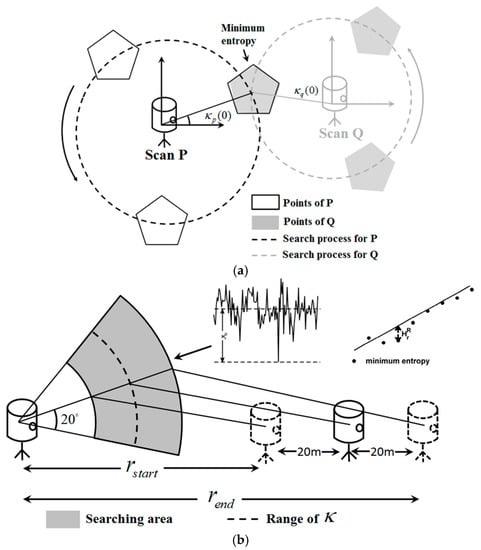

The distance r between two adjacent scans (hereafter referred to as scan distance) can be directly calculated from scan position’s GPS coordinates. As shown in Figure 5a, the angles corresponding to the minimum entropy are selected as the initial rotation parameters,

Figure 5.

Search for the initial parameters: (a) the searching process for rotation angles; and (b) the searching process for initial scan distance.

The smartphone GPS generally has an error of up to 10 m, leading to a 20-m error in scan distance r. Thus, the true value of r commonly falls in the range of [r − 20, r + 20]. This range may be unreasonable; for example, r − 20 may be negative, or r +20 may be too large to conform to the actual situation. Here, we set two thresholds rlow and rup to establish a further limit. The initial search range for scan distance can be obtained by

where is zero or a small value to ensure positive and can be easily set to or a known value the scan distance will certainly not exceed.

The aim of the initial scan distance detection is to find the distance closest to the true value in [,]. Ten sampling values are selected to help the traversal, with the interval labeled as rinterval,

For each rk, a series of entropy values are calculated within the range of , as shown in Figure 6a. With those entropy values, the difference between average and minimum entropy (Figure 6a) and the deviation from minimum entropy to expected entropy (Figure 6b), labeled as and , are calculated to evaluate rk, as shown in Figure 5b. The criterion to select the initial scan distance is

where is used to narrow the selection to some preliminary search results and larger commonly means more discriminative minimum entropy for rk. The is calculated by

where is the average entropy obtained within the preset angel range.

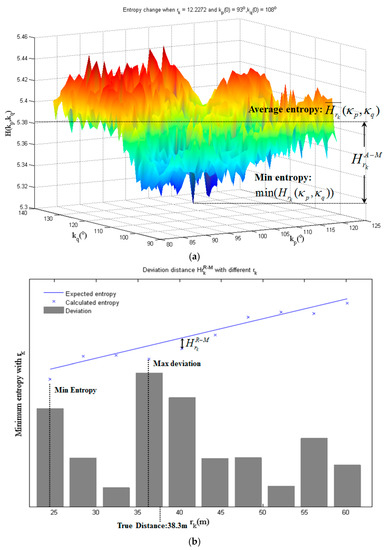

Figure 6.

Two criteria for initial distance and rotation angles selection: (a) The entropy change when = 14.2272 m and = 93°, = 108°, in S1 and S2 of the first data set in Section 3.1. The calculation of corresponding to 12.2272 m is also shown. (b) How works on S3 and S4 in the second data set in Section 3.2.

For , we find that the entropy is partially affected by the scan distance between P and Q, that will generally become smaller with a smaller rk, and the effect can be ignored when it comes to a small variation range of r. Although smaller minimum entropy commonly means the corresponding rk closer to the true value, comparing the minimum entropy directly for different rk becomes unreliable here with the largest variation range of 40 m, as shown in Figure 6b. To eliminate the effect of distance, the series of entropy values (rk sequence is obtained through Equations (16) and (17)) are selected as the reference and the relation between the entropy and distance is constructed based on linear model . We prefer rk with the largest deviation from the minimum to reference entropy, instead of rk with the minimum entropy. The deviation is calculated by

2 Iterative Minimum Entropy

With initial parameters obtained, the scan distance and rotation parameters will be corrected iteratively. In i-th iteration, the searching scope will be

where is the scan distance obtained after i − 1 times iteration and [,] will be the search region and be the search step. The rk will be traversed in [,] to find the optimal transformation parameters,

where the scan distance is selected with the minimum entropy in the iteration, because of the shrinking search scope, and the rotation parameters are calculated based on .

3 Convergence

The change of the distribution entropy between two adjacent iterations is utilized as the condition of convergence. The algorithm stops when the entropy change is less than threshold τ, which is set 0.001 in this paper.

With the scan distance and rotation angles obtained at convergence, the coarse transformation parameters can be obtained by the Equation (12). The result of coarse alignment is used as the input for ICP in fine registration stage.

3. Experiments and Discussion

Two data sets obtained by a Reigl VZ-400 scanner were used to process and evaluate the proposed approach. Data set 1 was captured on a square, in which the primary objects are plants, sculptures and buildings around the square and covered a panoramic view. Data set 2 comprised scans captured from 6 positions around a building and only covered a horizontal view of about 100°. The primary target of data set 2 is a building with some plants around it.

All scans in one data set are aligned manually and refined by standard ICP to generate reference transformation parameters. Because IME is a method for coarse registration, the result of IME is processed with the ICP algorithm to achieve a complete registration. Errors between reference and estimated transformation parameters are used to estimate the registration accuracy. In addition, root mean square deviation (RMSD) before and after ICP is used here for metric accuracy and RMSD is calculated as

As described in Section 2.2.2, there are two parameters that must be set manually: dG and tG. We also tested parameter sensitivity by changing one parameter while another remained unchanged.

3.1. Data Set 1

Data set 1 contains scans recorded at 3 different positions, as shown in Figure 7. Each scan in data set 1 contains approximately 50 million points spanning 360° horizontally and 100° vertically with a maximum range of approximately 400 m and dimensions of 537 m × 483 m × 111 m. The angular resolution is 0.02° and point density is between 8 and 80 mm. As illustrated in Figure 7, data set 1 contains a square in the middle with plants around it and buildings at a distance.

Figure 7.

View of data set 1 after registration and three scan positions.

Table 1 shows the basic information of each scan, including the number of points in different stages. Block size dG and tG for point simplification and projection are both 1 m and the number of points is obviously decreased after projecting, improving the efficiency of IME significantly. Table 2 shows the distance between each scan position, as well as the corresponding reference distance, which is calculated from the result of manual alignment. Generally, there is a significant error for each scan distance that is unstable and cannot be removed as system error.

Table 1.

Basic information of each scan.

Table 2.

Initial distance between adjacent scan positions and the corresponding reference.

Table 3 shows the registration result, including rotation and translation errors, RMSD in IME and ICP and scan distance error ∆dS. For the search scope of the scan distance, rlow is set to 0, ensuring the distance is positive, and no upper limit is set (or rup can be set to an extremely large value).

Table 3.

Errors between estimated and reference transformation parameters: ∆φ, Δω and Δκ are rotation parameter errors corresponding to the x, y and z axes, respectively; ∆x, Δy and Δz are translation errors along the x, y and z axes, respectively; RMSD is the root mean square deviation between two point clouds; and ∆dS is the error of the distance between two adjacent scan positions.

The rotation error is generally within 2°, with Δκ commonly smaller than the other two, because the rotation angle corresponding to the z axis is continuously corrected during IME iteration. Similarly, IME performs better on ∆z than the other two translation errors, demonstrating that it is feasible to directly use the distance between the ground parts along the z axis as the z component of translation for two adjacent scans. Although horizontal translation errors ∆x and ∆y are much larger than ∆z, the errors remain sufficient for a successful fine alignment, with translation errors and RMSE all reaching centimeter level after ICP. In addition, the error of scan distance also reaches centimeter or sub-centimeter level after ICP. In Table 3, we can also observe that the correlation between ∆dS and transformation errors is not absolute, although in most cases, a smaller ∆dS tends to correspond to smaller transformation errors. In conclusion, the IME algorithm is more concerned with the overall distribution of points in two adjacent scans, resulting in larger initial transformation errors than feature-based method in the stage of rough alignment, but the coarse alignment accuracy is still good enough to ensure the convergence of ICP in the fine alignment stage.

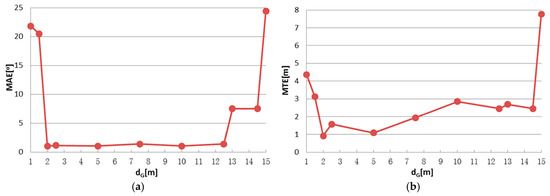

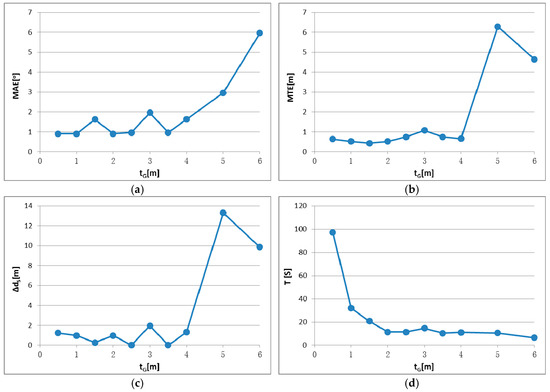

There are two parameters in IME that have to be set by the user: block size dG for the grid simplification of original points and block size tG for entropy calculation, as described in Section 2.2.2. To identify the appropriate parameter settings and test the parameter stability, registration tests were conducted using different parameter settings. Test results are shown in Figure 8, Figure 9, Figure 10 and Figure 11, in which the mean angular error (MAE), mean translation error (MTE), scan distance error (∆dS) and runtime T are analyzed. In the test, MAE and MTE are only influenced by Δκ and ∆x, ∆y, respectively, because ∆φ, Δω and ∆z are all set to fixed values during IME iteration.

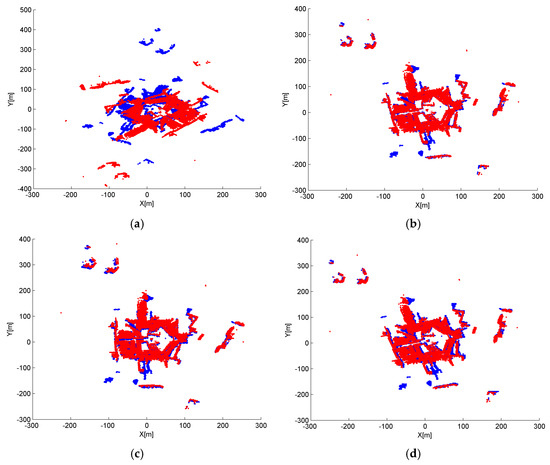

Figure 8.

IME results with different dG, when tG is fixed to 10 m: (a) mean angular error (MAE); (b) translation error (MTE); (c) scan distance error (∆dS); and (d) runtime (T).

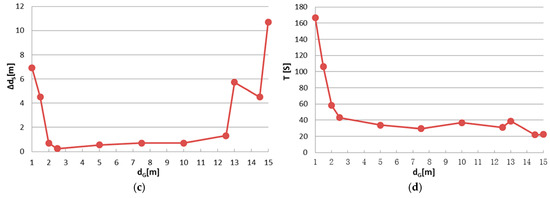

Figure 9.

Nadir view of the spatial distributions for S1 and S2 after IME when: (a) dG = 1 m; (b) dG = 2.5 m; (c) dG = 5 m; and (d) dG = 10 m, and tG is fixed to 10 m.

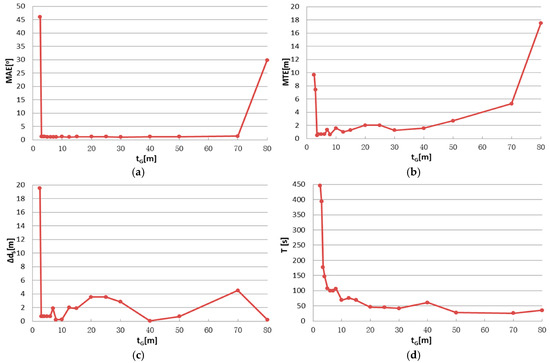

Figure 10.

IME results with different tG, when dG is fixed to 2.5 m: (a) mean angular error (MAE); (b) mean translation error (MTE); (c) scan distance error (∆dS); and (d) runtime (T).

Figure 11.

Nadir view of the spatial distributions after IME when: (a) tG = 2.5 m; (b) tG = 5 m; (c) tG = 10 m; and (d) tG = 20 m and dG is fixed to 2.5 m.

Although a smaller grid size dG or tG can retain more details from the original point cloud, we observed that incorrect transformation parameters are obtained with an MAE greater than 20° when dG is below 2 m or tG is below 3 m in Figure 8 and Figure 10. The ICP can obtain a right convergence when dG is between 2 m and 14.5 m in Figure 8. Considering the runtime and translation errors, [2.5, 7.5] will be a more appropriate variation range for dG. Figure 9 shows the projections of four rough alignment results when dG is 1 m, 2 m, 5 m and 10 m. By comparing Figure 9b,c, we observe that despite having similar angular and translation errors, slight differences remain in the results of rough alignment. IME performs better on the two buildings located in the upper left corner when dG is 5 m whereas the parameter setting dG = 2.5 m gains a greater overlap on the building located in the lower right corner. The best results are achieved when dG is approximately 50 to 100 times the average density and the stability of the rotation error is better than that of the translation error.

Figure 10 and Figure 11 show similar tendency with Figure 8 and Figure 9; thus, similar conclusions can be drawn. MAE is below 2 m when tG is in the range of [3,40], which is much wider than the that of dG. We also note that tG has more influence on the efficiency than dG, by comparing Figure 8d and Figure 10d. The best results are achieved when tG is approximately 1% to 10% of the shortest edge of the point cloud’s bounding box. In addition, tG has better error resistance than dG while the latter has better runtime stability.

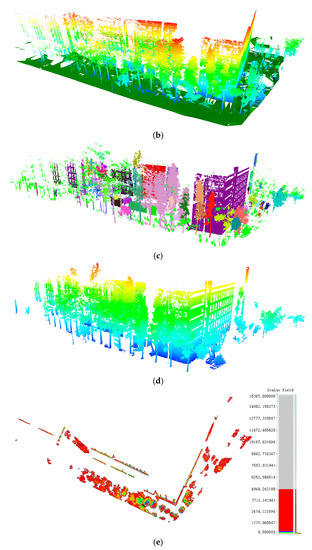

3.2. Data Set 2

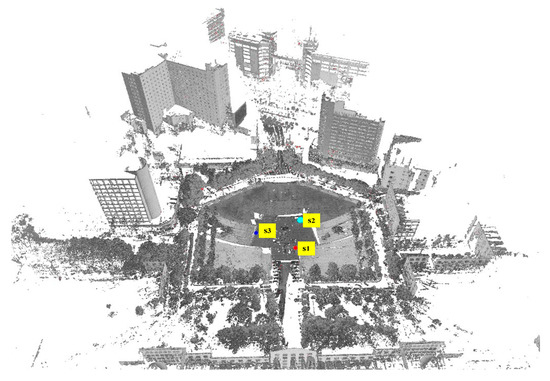

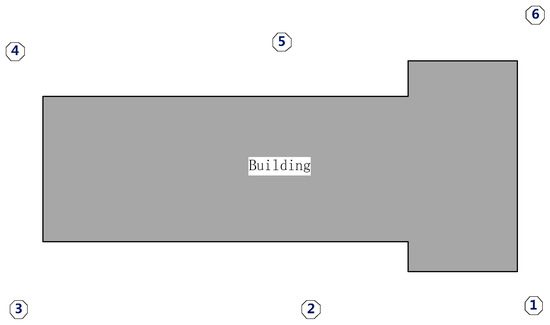

Compared with data set 1, the horizontal scanning scope of data set 2 (as shown in Figure 12) is between 60 and 100° with a lower overlap rate. Data set 2 comprises 6 scans around a building with surroundings including vegetation, roads, cars and bicycles and was acquired with dimensions ≈ 85 m × 166 m × 49 m and point density between 8 and 16 mm. The basic information is shown in Table 4, and Table 5 shows the distance between different scan pairs in data set 2. Scan pairs S1–S2 and S5–S6 have a deviation of more than 15 m from the reference values. This is because a large amount of vegetation interfered with the GPS signal when the scans were acquired.

Figure 12.

Distribution of the 6 scans.

Table 4.

Basic information of each scan.

Table 5.

Initial distance of each scan pair and the corresponding reference.

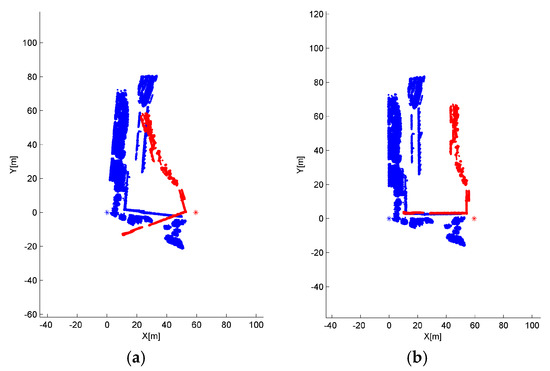

The registration test was conducted with parameter settings: dG = 0.15 m and tG = 2.5 m, recalling the conclusion of the test on dataset 1. The result is shown in Table 6, with the overlap rate of neighboring scans. The registration error of data set 2 is larger than that of data set 1 and the likely reason is the lower overlap rate between adjacent scans, resulting in the decreased accuracy of initial transformation parameters. Despite this, most results of IME remains good enough to be correctly refined by ICP in the stage of fine alignment, with the final accuracy on the order of scanner’s measurement accuracy, except the registration between S1 and S6. As shown in Table 6 and Figure 13, the IME falls into the local convergence basin for the registration of S1 and S6, probably because of the small overlap rate and the homogeneous overlapping area, containing only part of a wall and placing weak constraint on the transformation search. We also tried to use the result in Figure 13b as the input of ICP, but the z component of the translation parameters still contained a large error, for there is little overlap at the ground part. For the two nonadjacent scan pairs, S1–S3 and S4–S6, although the overlap rates are significantly lower compared with the sequential pairs, the registration results are still satisfying, with the registration errors at centimeter level.

Table 6.

Errors between estimated and reference transformation parameters.

Figure 13.

The IME result of S1–S6: (a) the Nadir view corresponding to the minimum entropy, which is a local convergence for IME; and (b) the theoretically correct posture for S1 and S2, which corresponds to the fifth small entropy.

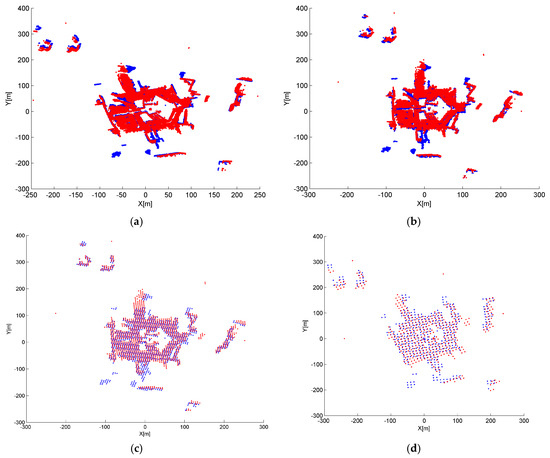

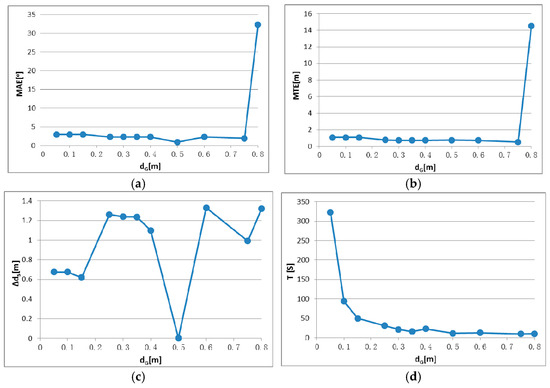

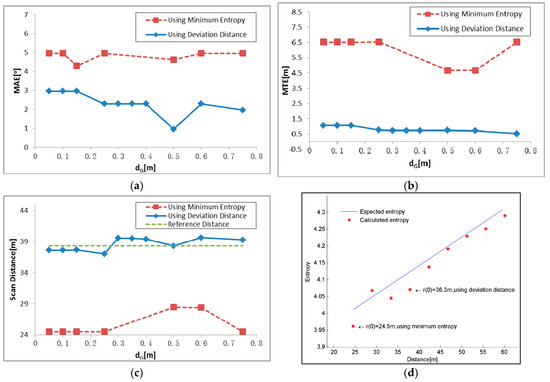

The results under different dG and tG settings are shown in Figure 14 and Figure 15. The experiment on data set 2 shows similar trends with data set 1, except for the correct result under the minimum parameter setting, dG = 0.05 m and tG = 0.05 m. Considering the error and runtime together, the best results are obtained when dG is between 0.05 m and 0.75 m, and tG is between 0.05 m and 4 m and the conclusion can be drawn similar as that of data set 1.

Figure 14.

IME results with different dG, when tG is fixed to 2.5 m: (a) mean angular error (MAE); (b) mean translation error (MTE); (c) scan distance error (∆dS); and (d) runtime (T).

Figure 15.

IME results with different tG when dG is fixed to 0.5 m: (a) mean angular error (MAE); (b) mean translation error (MTE); (c) scan distance error (∆dS); and (d) runtime (T).

The accuracies shown in Figure 14 and Figure 15 are slightly worse than in Figure 8 and Figure 10 and the range of dG and tG corresponding to correct results is smaller. This result is most likely because of the smaller dimensions of data set 2. Two distant points may fall into the same block if dG or tG is set too large compared with the edge length of the bounding box.

In addition to parameter tests, we conducted a further test to compare two initial value selecting criteria: using minimum entropy and deviation distance. This test was conducted using parameter settings: dG = 0.5 m and tG = 0.25 m, and the result is shown in Figure 16. As shown in Figure 16a–c, the results based on the deviation show a better performance, with smaller MAE and MTE and the scan distance much closer to the reference. Figure 16d shows the selecting process of scan distance. The linear function between entropy and distance is shown in the form of line, and ten candidates are labeled as points. The 2D distribution entropy shows a decreasing trend when the scan distance reduces. The minimum distance 24.5 m will be selected as the initial scan distance if the minimum entropy is used as decision criterion, as shown in Figure 16d. When the deviation is used as criterion, the initial scan distance will be 36.3 m, which is much closer to the reference scan distance 38.302 m, erasing the entropy change brought by distance variation.

Figure 16.

Comparison of two initial value selection criteria, using minimum entropy and using deviation distance: (a) MAE; (b) MTE; and (c) Scan distance; and (d) the selecting process in detail. Although the distance 24.5 m corresponds to the smallest entropy, 36.3 m, which is much closer to reference distance of 38.302 m, is selected based on deviation distance.

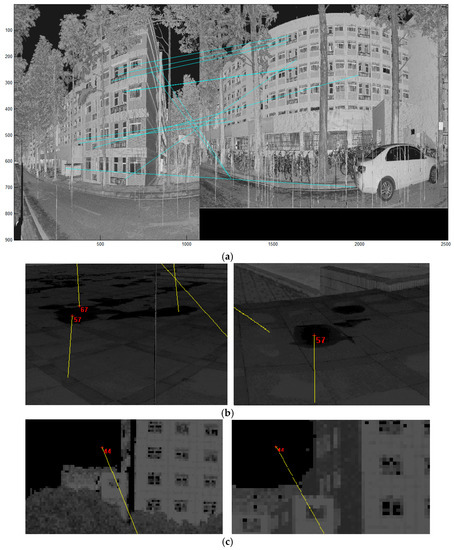

3.3. Comparison

A comparison was taken between SIFT-based method and the proposed method. In the SIFT-based method, correspondences are obtained on the reflectance image with SIFT and false matches are excluded with the branch-and-bound algorithm [19,23]. The mean angle error, mean translation error and RMSD before ICP are listed in Table 7. As shown in Figure 17, the matching rate is limited because of the viewpoint changes, self-similarity and holes, and some problems may exist, e.g., the points on background and inaccurate matching. Meanwhile, the proposed method, which is independent of detailed features, mainly focuses on the overall distribution and reduces the possibility of false registration result.

Table 7.

Comparison between the proposed method and SIFT-based method.

Figure 17.

Matching results using SIFT: (a) the result of SIFT feature matching on S1–S2 in data set 2 and the false matching is mainly because of viewpoint changes and self-similarity; (b) the false match from holes; and (c) the correct match on the background with no correspondence in the space of point cloud.

4. Conclusions

In this paper, a method called Iterative Minimum Entropy (IME) is proposed for the coarse registration of TLS point clouds, with a novel sensor combination mode of terrestrial laser scanner and smartphone. This method is based on 2D distribution entropy and the distance r between neighboring scan positions, by rotating two point clouds around their own z axis until the optimal initial transformation is reached. Since there is no synchronization between the laser scanner and smartphone, only a rough distance between neighboring scanner positions is measured using smartphone GPS. We proposed two criteria, the difference between average and minimum entropy and the deviation from minimum entropy to expected entropy, to decide the optimal initial transformation between two scans instead of directly using the minimum entropy. The method achieved high accuracy and efficiency in the two experiments we have conducted, in which panoramic and non-panoramic, vegetation-dominated and building-dominated scenes were tested. Commonly, the proposed method achieves a good result when tG is approximately 1% to 10% of the shortest edge of the bounding box and dG is approximately 50 to 100 times the average density, according to the experimental results, but the range is not absolute. It is also noticed that the IME is likely to fall into a local convergence basin when the overlapping rate is too low or most overlapping areas are of narrow distribution.

The future investigations will include efforts to deal with the small overlapping rate, which is about 5%–10%, and homogeneous overlapping areas. In addition, IME can be extended in the future using other ranging methods, e.g., Electronic Distance Measurement (EDM), or the initial distance can simply be given by the user in some special cases, such as near the subway or train tracks, where each track section has a fixed length and can be treated as the distance marker, making it a method using no prior information. However, some modifications may be needed to ensure the robustness for different manners of extension.

Acknowledgments

This work was supported by the National Science and Technology Pillar Program under Grant No. 2014BAL05B07 and the National Natural Science Foundation of China under Grant No. 41671450.

Author Contributions

Maolin Chen, Siying Wang and Youchuan Wan developed the methodology and analyzed the data; Maolin Chen and Mingwei Wang conceived and performed the experiments; Peipei He provided suggestions about the work and helped to modify the manuscript; Maolin Chen wrote the paper. All of the authors contributed to the writing and reviewing of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Franaszek, M.; Cheok, G.S.; Witzgall, C. Fast automatic registration of range images from 3D imaging systems using sphere targets. Autom. Constr. 2009, 18, 265–274. [Google Scholar] [CrossRef]

- Akca, D. Full Automatic Registration of Laser Scanner Point Clouds; Optical 3D Measurement Techniques VI: Zurich, Switzerland, 2003; pp. 330–337. [Google Scholar]

- Besl, P.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256. [Google Scholar] [CrossRef]

- Chen, Y.; Medioni, G. Object modelling by registration of multiple range images. Image Vis. Comput. 1992, 10, 145–155. [Google Scholar] [CrossRef]

- Gruen, A.; Akca, D. Least squares 3D surface and curve matching. ISPRS J. Photogramm. Remote Sens. 2005, 59, 151–174. [Google Scholar] [CrossRef]

- Pottmann, H.; Huang, Q.X.; Yang, Y.L.; Hu, S.M. Geometry and Convergence Analysis of Algorithms for Registration of 3D Shapes. Int. J. Comput. Vis. 2006, 67, 277–296. [Google Scholar] [CrossRef]

- Rabbani, T.; Dijkman, S.; van den Heuvel, F.; Vosselman, G. An integrated approach for modelling and global registration of point clouds. ISPRS J. Photogramm. Remote Sens. 2007, 61, 355–370. [Google Scholar] [CrossRef]

- Censi, A. An ICP variant using a point-to-line metric. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Pasadena, CA, USA, 19–23 May 2008; pp. 19–25.

- Gressin, A.; Mallet, C.; Demantké, J.; David, N. Towards 3D lidar point cloud registration improvement using optimal neighborhood knowledge. ISPRS J. Photogramm. Remote Sens. 2012, 3, 240–251. [Google Scholar] [CrossRef]

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Bendels, G.H.; Degener, P.; Wahl, R.; Körtgen, M.; Klein, R. Image-based registration of 3D-range data using feature surface elements. In Proceedings of the International Conference on Virtual Reality, Archaeology and Intelligent Cultural Heritage, Eurographics Association, Oudenaarde, Belgium, 7–10 December 2004; pp. 115–124.

- Barnea, S.; Filin, S. Keypoint based autonomous registration of terrestrial laser point-clouds. ISPRS J. Photogramm. Remote Sens. 2008, 63, 19–35. [Google Scholar] [CrossRef]

- Böhm, J.; Becker, S. Automatic marker-free registration of terrestrial laser scans using reflectance. In Proceedings of the Proceedings of 8th Conference on Optical 3D Measurement Techniques, Zurich, Switzerland, 9–12 July 2007; pp. 338–344.

- Wang, Z.; Brenner, C. Point based registration of terrestrial laser data using intensity and geometry features. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 583–590. [Google Scholar]

- Kang, Z.; Li, J.; Zhang, L.; Zhao, Q.; Zlatanova, S. Automatic registration of terrestrial laser scanning point clouds using panoramic reflectance images. Sensors 2009, 9, 2621–2646. [Google Scholar] [CrossRef] [PubMed]

- Kang, Z. Automatic registration of terrestrial point clouds based on panoramic reflectance images and efficient BaySAC. In Proceedings of the Eighth International Symposium on Multispectral Image Processing and Pattern Recognition, International Society for Optics and Photonics, Wuhan, China, 26–27 October 2013.

- Weinmann, M.; Weinmann, M.; Hinz, S.; Jutzi, B. Fast and automatic image-based registration of TLS data. ISPRS J. Photogramm. Remote Sens. 2011, 66, S62–S70. [Google Scholar] [CrossRef]

- Kang, Z.; Zlatanova, S.; Gorte, B. Automatic Registration of Terrestrial Scanning Data Based on Registered Imagery; Delft University of Technology: Delft, The Netherlands, 2007. [Google Scholar]

- Renaudin, E.; Habib, A.; Kersting, A.P. Feature-Based Registration of Terrestrial Laser Scans with Minimum Overlap Using Photogrammetric Data. ETRI J. 2011, 33, 517–527. [Google Scholar] [CrossRef]

- Canaz, S.; Habib, A. Photogrammetric features for the registration of terrestrial laser scans with minimum overlap. J. Geod. Geoinf. 2013, 2, 1–8. [Google Scholar] [CrossRef]

- Gelfand, N.; Mitra, N.J.; Guibas, L.J.; Pottmann, H. Robust global registration. In Proceedings of the Symposium on Geometry Processing, Vienna, Austria, 4–6 July 2005.

- Theiler, P.W.; Wegner, J.D.; Schindler, K. Keypoint-based 4-Points Congruent Sets–Automated marker-less registration of laser scans. ISPRS J. Photogramm. Remote Sens. 2014, 96, 149–163. [Google Scholar] [CrossRef]

- Rusu, R.B.; Cousins, S. 3D is here: Point cloud library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 1–4.

- Aiger, D.; Mitra, N.J.; Cohen-Or, D. 4-points congruent sets for robust pairwise surface registration. ACM Trans. Graph. 2008, 27, 85. [Google Scholar] [CrossRef]

- Rusu, R.B.; Blodow, N.; Marton, Z.C.; Beetz, M. Aligning point cloud views using persistent feature histograms. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 3384–3391.

- Rusu, R.B.; Blodow, N.; Beetz, M. Fast point feature histograms (FPFH) for 3D registration. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’09), Kobe, Japan, 12–17 May 2009; pp. 3212–3217.

- Liu, R.; Hirzinger, G. Marker-Free Automatic Matching of Range Data; Panoramic Photogrammetry Workshop, Proceedings of the ISPRS Working Group: Vienna, Austria, 2005. [Google Scholar]

- Basdogan, C.; Oztireli, A.C. A new feature-based method for robust and efficient rigid-body registration of overlapping point clouds. Vis. Comput. 2008, 24, 679–688. [Google Scholar] [CrossRef]

- Lo, T.W.R.; Siebert, J.P. Local feature extraction and matching on range images: 2.5 D SIFT. Comput. Vis. Image Underst. 2009, 113, 1235–1250. [Google Scholar] [CrossRef]

- Ge, B.; Peng, B.; Tian, Q. Registration of three-dimensional point-cloud data based on curvature map. J. Tianjin Univ. 2013, 46, 174–180. [Google Scholar]

- Guo, Y.; Sohel, F.; Bennamoun, M.; Wan, J.; Lu, M. An accurate and robust range image registration algorithm for 3D object modeling. IEEE Trans. Multimedia 2014, 16, 1377–1390. [Google Scholar] [CrossRef]

- Stamos, I.; Leordeanu, M. Automated feature-based range registration of urban scenes of large scale. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 16–22 June 2003.

- Yao, J.; Ruggeri, M.R.; Taddei, P.; Sequeira, V. Automatic scan registration using 3D linear and planar features. 3D Res. 2010, 1, 1–18. [Google Scholar] [CrossRef]

- Yang, B.; Zang, Y. Automated registration of dense terrestrial laser-scanning point clouds using curves. ISPRS J. Photogramm. Remote Sens. 2014, 95, 109–121. [Google Scholar] [CrossRef]

- Theiler, P.W.; Schindler, K. Automatic registration of terrestrial laser scanner point clouds using natural planar surfaces. ISPRS Ann. Photogr. Remote Sens. Spat. Inf. Sci. 2012, 3, 173–178. [Google Scholar] [CrossRef]

- Dold, C.; Brenner, C. Registration of terrestrial laser scanning data using planar patches and image data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 78–83. [Google Scholar]

- Brenner, C.; Dold, C.; Ripperda, N. Coarse orientation of terrestrial laser scans in urban environments. ISPRS J. Photogramm. Remote Sens. 2008, 63, 4–18. [Google Scholar] [CrossRef]

- Pu, S.; Li, J.; Guo, S. Registration of Terrestrial Laser Point Clouds by Fusing Semantic Features and GPS Positions. Acta Geod. Cartogr. Sin. 2014, 43, 545–550. [Google Scholar]

- Yang, B.; Dong, Z.; Liang, F.; Liu, Y. Automatic registration of large-scale urban scene point clouds based on semantic feature points. ISPRS J. Photogramm. Remote Sens. 2016, 113, 43–58. [Google Scholar] [CrossRef]

- Kelbe, D.; Aardt, J.V.; Romanczyk, P.; van Leeuwen, M.; Cawse-Nicholson, K. Marker-Free Registration of Forest Terrestrial Laser Scanner Data Pairs With Embedded Confidence Metrics. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1–17. [Google Scholar] [CrossRef]

- Yang, B.; Dai, W.; Dong, Z.; Liu, Y. Automatic Forest Mapping at Individual Tree Levels from Terrestrial Laser Scanning Point Clouds with a Hierarchical Minimum Cut Method. Remote Sens. 2016, 8, 372. [Google Scholar] [CrossRef]

- Wen, X.; Xu, S.; Elberink, S.O.; Vosselman, G. Individual Tree Crown Modeling and Change Detection from Airborne Lidar Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3467–3477. [Google Scholar]

- Zeisl, B.; Koser, K.; Pollefeys, M. Automatic registration of RGB-D scans via salient directions. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2808–2815.

- Chan, T.O. Cylindrical and Polygonal Object Modelling and its use in LiDAR Calibration and Point Cloud Registration. Ph.D. Thesis, University of Calgary, Calgary, AB, Canada, 2015. [Google Scholar]

- Rabbani, T.; van den Heuvel, F. Automatic point cloud registration using constrained search for corresponding objects. In Proceedings of the 7th Conference on Optical, Vienna, Austria, 3–5 October 2005; pp. 3–5.

- Urban, S.; Weinmann, M. Finding a good feature detector-descriptor combination for the 2D keypoint-based registration of TLS point clouds. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 5, 121–128. [Google Scholar] [CrossRef]

- Talaya, J.; Alamus, R.; Bosch, E.; Serra, A.; Kornus, W.; Baron, A. Integration of a terrestrial laser scanner with GPS/IMU orientation sensors. In Proceedings of the XXth ISPRS Congress, Istanbul, Turkey, 12–23 July 2004.

- Kukko, A.; Kaartinen, H.; Hyyppä, J.; Chen, Y. Multiplatform mobile laser scanning: Usability and performance. Sensors 2012, 12, 11712–11733. [Google Scholar] [CrossRef]

- Asai, T.; Kanbara, M.; Yokoya, N. 3D modeling of outdoor environments by integrating omnidirectional range and color images. In Proceedings of the Fifth International Conference on 3-D Digital Imaging and Modeling (3DIM’05), Ottawa, ON, Canada, 13–16 June 2005; pp. 447–454.

- Sirmacek, B.; Lindenbergh, R.C.; Menenti, M. Automatic registration of Iphone images to LASER point clouds of the urban structures using shape features. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Antalya, Turkey, 11–13 November 2013; pp. 265–270.

- Zhang, S.; Shan, J.; Zhang, Z.; Yan, J.; Hou, Y. Integrating Smartphone Images and Airborne LIDAR Data for Complete Urban Building Modelling. In Proceedings of the ISPRS-International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; pp. 741–747.

- Shannon, C.E. A mathematical theory of communication. ACM SIGMOBILE Mob. Comput. Commun. Rev. 2001, 5, 3–55. [Google Scholar] [CrossRef]

- Jiao, W.; Long, T.; Yang, G.; He, G. A New Method for Geometric Quality Evaluation of Remote Sensing Image Based on Information Entropy. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 63. [Google Scholar] [CrossRef]

- Samanta, D.; Sanyal, G. Classification of SAR Images Based on Entropy. Int. J. Inf. Technol. Comput. Sci. 2012, 4, 82. [Google Scholar] [CrossRef]

- Pickering, M.R.; Jia, X. Registration of multi-sensor remote sensing imagery by gradient-based optimization of cross-cumulative residual entropy. In Proceedings of the SPIE—The International Society for Optical Engineering, San Diego, CA, USA, 10–14 August 2008.

- Pirotti, F.; Guarnieri, A.; Vettore, A. Ground filtering and vegetation mapping using multi-return terrestrial laser scanning. ISPRS J. Photogramm. Remote Sens. 2013, 76, 56–63. [Google Scholar] [CrossRef]

- Vosselman, G.; Maas, H.G. Airborne and Terrestrial Laser Scanning; Whittles Publishing: Caithness, UK, 2010. [Google Scholar]

- Polewskia, P.; Ericksonc, A.; Yaoa, W.; Coopsc, N.; Krzysteka, P.; Stillab, U. Object-Based Coregistration of Terrestrial Photogrammetric and ALS Point Clouds in Forested Areas. In Proceedings of the ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; pp. 347–354.

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).