Abstract

Many aiding sensors can be used to improve the performance of an inertial navigation system. Among them, the vision sensor is of particular note because of its advantages in weight, cost, and power consumption. This paper proposes an inertial and vision integrated navigation method for poor vision navigation environments. The proposed method uses focal plane measurements of landmarks to provide position, velocity and attitude outputs even when the number of landmarks on the focal plane is insufficient for stand-alone vision navigation. The proposed method is verified through computer simulations and van tests. The results show that the proposed method gives accurate and reliable position, velocity and attitude outputs when the number of landmarks is insufficient.

1. Introduction

The inertial navigation system (INS) is a self-contained dead-reckoning navigation system that provides continuous, high-bandwidth navigation outputs with good short-term stability. However, because it dead-reckons, its errors accumulate and the accuracy of its navigation output degrades over time. To improve the performance of the INS, a navigation aid can be integrated with it. The GPS/INS integrated navigation system is one of the most widely used integrated navigation systems [1,2]. However, a GPS/INS integrated navigation system may not produce reliable navigation outputs, since GPS signals are vulnerable to interference such as jamming and spoofing [3,4]. In recent years, many alternatives to GPS, such as vision, radar, laser, ultrasonic sensors, UWB (Ultra-Wide Band) and eLoran (enhanced Long-Range Navigation), have been studied in order to provide continuous and reliable navigation outputs [4].
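To illustrate why the accuracy of an unaided INS degrades with time, the sketch below double-integrates a constant accelerometer bias along a single axis. The helper name, the 0.01 m/s² bias (roughly 1 mg) and the 10 ms step are hypothetical, and gravity, attitude errors and sensor noise are ignored; the point is only that the position error grows approximately as ½bt², so doubling the elapsed time roughly quadruples it.

```python
import numpy as np

def position_error_from_bias(bias_mps2: float, duration_s: float, dt_s: float = 0.01) -> np.ndarray:
    """Hypothetical helper: position error history (m) caused by a constant accelerometer bias.

    Single-axis illustration only; gravity, attitude errors and sensor noise are ignored.
    """
    n = int(round(duration_s / dt_s))
    vel_err = np.cumsum(np.full(n, bias_mps2 * dt_s))  # velocity error grows linearly
    pos_err = np.cumsum(vel_err * dt_s)                 # position error grows quadratically
    return pos_err

err = position_error_from_bias(0.01, 60.0)  # 0.01 m/s^2 is roughly a 1 mg bias
print(f"after 30 s: {err[2999]:.2f} m, after 60 s: {err[-1]:.2f} m")
# prints: after 30 s: 4.50 m, after 60 s: 18.00 m
```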

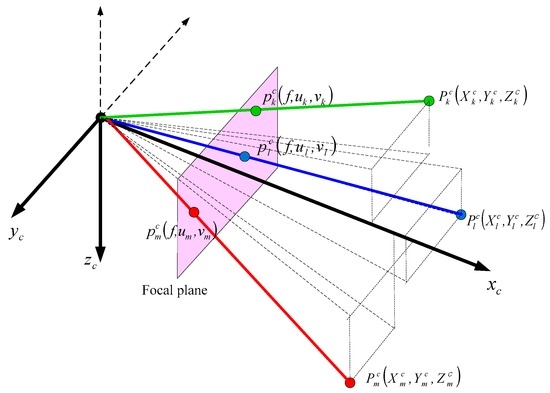

Vision sensors have recently been used for the navigation of vehicles such as cars, small low-cost airborne systems and mobile robots because of their advantages in weight, cost and power consumption [5,6,7]. Vision-based navigation methods can be classified into three categories [4,7]. The first determines the position of the vehicle by comparing the camera image with stored images or stored map information [8]. The second, called landmark-based vision navigation, determines position and attitude by calculating the directions to landmarks from their measured images [9,10]. The third, called visual odometry, determines the motion of the vehicle from successive camera images [11]. Among these three methods, the landmark-based approach is known to have the advantages of bounded navigation parameter errors and simple computation [7].
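In landmark-based vision navigation, the basic quantity is the direction from the camera to each landmark. The following is a minimal sketch assuming an ideal pinhole camera whose z-axis is the optical axis, with focal plane coordinates in the same units as the focal length; the paper's own camera model and axis conventions are not reproduced here. It converts a focal plane point into a unit line-of-sight vector and rotates it into the navigation frame with a camera-to-navigation direction cosine matrix `C_nc` that is assumed to be known. Both function names are hypothetical.

```python
import numpy as np

def line_of_sight_camera(u: float, v: float, focal_length: float) -> np.ndarray:
    """Hypothetical helper: unit vector toward a landmark in the camera frame.

    (u, v) is the landmark's focal plane point; the camera z-axis is assumed to be the optical axis.
    """
    d = np.array([u, v, focal_length], dtype=float)
    return d / np.linalg.norm(d)

def line_of_sight_nav(C_nc: np.ndarray, u: float, v: float, focal_length: float) -> np.ndarray:
    """Hypothetical helper: the same direction expressed in the navigation frame."""
    return C_nc @ line_of_sight_camera(u, v, focal_length)

# Focal plane point (3 mm, 4 mm) with a 12 mm focal length
los = line_of_sight_camera(0.003, 0.004, 0.012)  # -> [3/13, 4/13, 12/13]
```

With directions to three or more landmarks of known position, position and attitude can be solved for; with fewer, a stand-alone vision fix is unavailable.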

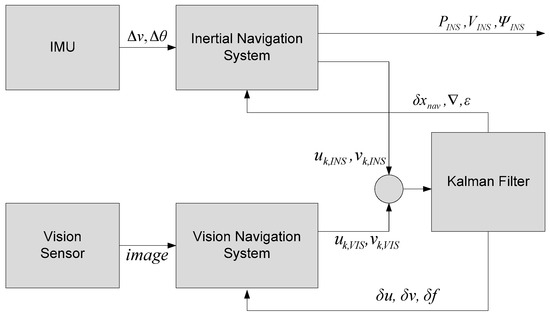

Several methods have been proposed for integrating an inertial navigation system with a vision navigation system [12,13,14,15,16]. The method in [12] uses gimbal angle and/or bearing information calculated from camera images. In this case, the integrated navigation output may not be optimal, since the inputs to the integration filter are quantities processed from the raw vision sensor measurements rather than the raw measurements themselves. When visual odometry is used in the integrated navigation system, as in [13], the error of the vision navigation output grows with time. The integrated navigation methods proposed in [14,15,16] use position and attitude, velocity, or heading information from the vision navigation system. These methods may not give reliable navigation outputs when the camera image contains too few landmarks for the vision navigation system to compute its own solution.

This paper proposes an inertial and vision integrated navigation method for poor vision environments, in which position and attitude outputs cannot be obtained from a vision navigation system because too few landmarks are visible. The proposed method uses the focal plane measurements of landmarks together with the INS outputs. Since no navigation solution from the vision navigation system is required, the proposed method can provide an integrated navigation output even when the number of landmarks in the camera image is insufficient for a vision navigation solution. In addition, since raw measurements are used in the integration filter, the navigation output may achieve better performance. Section 2 gives a brief description of landmark-based vision navigation. The proposed integration method is presented in Section 3. Results of computer simulations and vehicle experiments are given in Section 4, and concluding remarks and further studies are given in Section 5.

4. Computer Simulations and Experimental Results

The proposed method is verified through computer simulations and van tests.

4.1. Computer Simulation

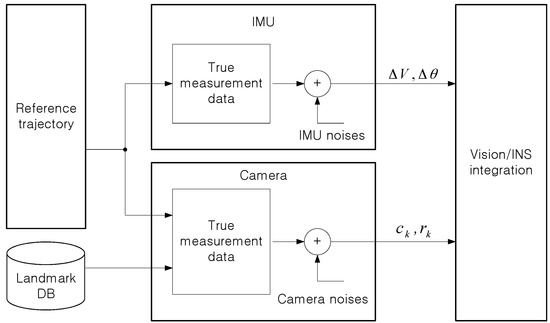

Computer simulations of the proposed integrated navigation method were carried out for a low-to-medium-grade inertial sensor and a low-cost commercial camera. Figure 3 shows the scheme of the simulations. The reference trajectory and inertial sensor data were generated using MATLAB and the INS Toolbox from GPSoft LLC. True camera measurements of the landmarks were first generated using the pinhole camera model given in Equations (1) and (2), and the focal plane measurements were then obtained by adding noise to these true measurements. Between zero and ten landmarks observable in each image were placed by a random generator. Similarly, the IMU measurement data were generated by adding noise to the true IMU measurement data. Tables 1 and 2 give the specifications of the IMU and the vision sensor used in the simulation.

Figure 3. Scheme of simulation.

Table 1. IMU specification and INS initial attitude error for simulation.

Table 2. Vision sensor specification for simulation.
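The measurement-generation step of the simulation (true pinhole projections corrupted by additive noise, with zero to ten landmarks per image) can be sketched as follows. This is an illustration under stated assumptions, not the authors' MATLAB code: the projection u = f·x/z, v = f·y/z stands in for Equations (1) and (2), whose sign and axis conventions may differ, no field-of-view check is made, and the function names, focal length, noise level and landmark distribution are all hypothetical.

```python
import numpy as np

def project_pinhole(p_cam: np.ndarray, focal_length: float) -> np.ndarray:
    """Ideal pinhole projection of camera-frame points (n, 3) to focal plane points (n, 2).

    Assumes the camera z-axis is the optical axis and every point has z > 0.
    """
    return focal_length * p_cam[:, :2] / p_cam[:, 2:3]

def simulate_frame(rng: np.random.Generator, focal_length: float, sigma: float,
                   max_landmarks: int = 10):
    """Hypothetical helper: one image with random landmarks, true and noisy projections."""
    n = int(rng.integers(0, max_landmarks + 1))  # 0..max_landmarks, inclusive
    p_cam = np.column_stack([                     # hypothetical landmark spread (m)
        rng.uniform(-50.0, 50.0, n),
        rng.uniform(-50.0, 50.0, n),
        rng.uniform(100.0, 500.0, n),
    ])
    true_uv = project_pinhole(p_cam, focal_length)
    noisy_uv = true_uv + rng.normal(0.0, sigma, size=true_uv.shape)  # additive Gaussian noise
    return p_cam, true_uv, noisy_uv

rng = np.random.default_rng(0)
# Focal length and noise in pixels (hypothetical values)
frames = [simulate_frame(rng, focal_length=800.0, sigma=1.0) for _ in range(5)]
```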

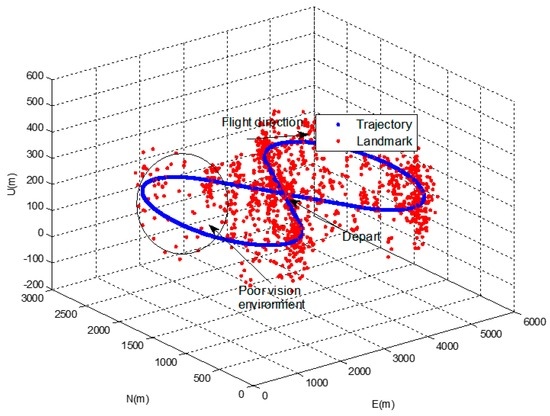

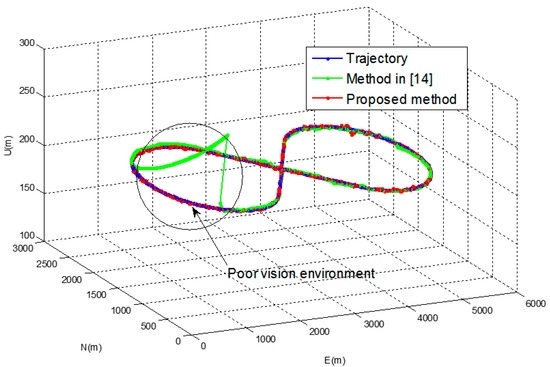

Fifty Monte Carlo simulations were performed for a figure-eight flight path at constant height, as shown in Figure 4. To create a poor vision navigation environment, fewer than three landmarks were intentionally placed at random in a specific area.

Figure 4. Vehicle trajectory and landmarks in simulation.
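A figure-eight path at constant height, like the one in Figure 4, can be generated for example with a lemniscate of Gerono. The sketch below is only one possible way to produce such a path (the paper generated its trajectory with the GPSoft INS Toolbox); the function name, amplitude, period and height are hypothetical. It returns positions and analytic velocities in a local north-east-down (NED) frame, so the constant height appears as a fixed down coordinate.

```python
import numpy as np

def figure_eight(t, amplitude_m=1000.0, period_s=300.0, down_m=-500.0):
    """Hypothetical helper: lemniscate-of-Gerono path at constant height in a local NED frame.

    Returns positions and velocities, each of shape (len(t), 3).
    """
    t = np.asarray(t, dtype=float)
    w = 2.0 * np.pi / period_s
    pos = np.column_stack([
        amplitude_m * np.sin(w * t),              # north
        0.5 * amplitude_m * np.sin(2.0 * w * t),  # east
        np.full_like(t, down_m),                  # down: constant height
    ])
    vel = np.column_stack([                       # analytic time derivatives of pos
        amplitude_m * w * np.cos(w * t),
        amplitude_m * w * np.cos(2.0 * w * t),
        np.zeros_like(t),
    ])
    return pos, vel

t = np.linspace(0.0, 300.0, 3001)  # one lap sampled at 10 Hz
pos, vel = figure_eight(t)
```

The path crosses itself at the origin at t = 0 and at half the period, which gives the eight shape.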

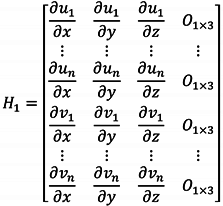

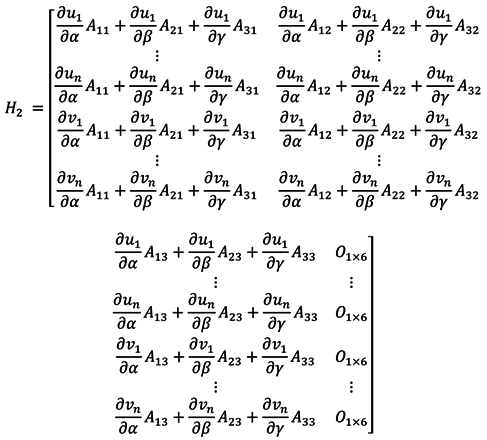

The results of the proposed method were compared with those of the integration method in [14]. In that method, the outputs of the vision navigation system are position and attitude, and the state vector is given in Equation (27).

The measurement vector is given in Equation (28).

As in loosely coupled GPS/INS integrated navigation, the method in [14] provides redundancy in the navigation output: the vision navigation system can give a stand-alone navigation output even when the INS and/or the integrated navigation system cannot. However, as described in Section 2, the vision system cannot give a navigation output when fewer than three landmarks are available on the focal plane. In this case, the performance of the integrated navigation system can deteriorate, since the integration Kalman filter cannot perform a measurement update. In the proposed method, as shown in Equation (15), a measurement update can be performed even when only one landmark is visible on the focal plane. Only the Kalman filter time update is performed when no landmarks are visible at all.
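The difference in when a measurement update is possible can be illustrated with a deliberately simplified model. In the sketch below the state is only the camera position, the camera attitude is assumed known and aligned with the navigation frame, and each visible landmark of known position contributes two focal plane rows (u, v) to an extended Kalman filter update. This is not the paper's Equation (15), whose state also includes INS and vision sensor errors; all names and values are hypothetical. `update_rows` contrasts the two schemes: a loosely coupled filter such as the one in [14] receives a six-element position/attitude measurement only when at least three landmarks are visible, whereas focal plane measurements give 2n rows for any n ≥ 1, and zero landmarks leaves only the time update.

```python
import numpy as np

MIN_LANDMARKS_FOR_VISION_FIX = 3  # stand-alone vision fix needs at least three landmarks

def update_rows(n_landmarks: int, focal_plane: bool) -> int:
    """Hypothetical helper: measurement rows available to the integration filter at one epoch."""
    if focal_plane:
        return 2 * n_landmarks  # (u, v) for each visible landmark
    return 6 if n_landmarks >= MIN_LANDMARKS_FOR_VISION_FIX else 0  # position + attitude fix

# Simplified model (hypothetical): state = camera position, camera axes = navigation axes.
def predict_uv(p, landmarks, f):
    """Pinhole predictions of the focal plane points for camera position p."""
    d = landmarks - p
    return f * d[:, :2] / d[:, 2:3]

def jacobian_uv(p, landmarks, f):
    """d(u, v)/dp, stacked as rows u1, v1, u2, v2, ..."""
    d = landmarks - p
    H = np.zeros((2 * len(d), 3))
    for i, (dx, dy, dz) in enumerate(d):
        H[2 * i] = [-f / dz, 0.0, f * dx / dz**2]
        H[2 * i + 1] = [0.0, -f / dz, f * dy / dz**2]
    return H

def focal_plane_update(x, P, z_uv, landmarks, f, sigma):
    """EKF measurement update using however many landmarks are visible."""
    if len(landmarks) == 0:
        return x, P  # no landmarks: keep the time-update (prediction) result
    H = jacobian_uv(x, landmarks, f)
    r = (z_uv - predict_uv(x, landmarks, f)).reshape(-1)  # innovation, interleaved u/v
    S = H @ P @ H.T + sigma**2 * np.eye(len(r))
    K = P @ H.T @ np.linalg.inv(S)
    return x + K @ r, (np.eye(len(x)) - K @ H) @ P

# One landmark: no vision fix exists, but a focal plane update is still possible.
f, sigma = 800.0, 1.0  # hypothetical focal length and noise (pixels)
p_true = np.zeros(3)
landmark = np.array([[10.0, 5.0, 100.0]])
z = predict_uv(p_true, landmark, f)  # noise-free measurement for illustration
x0, P0 = np.array([2.0, -1.0, 0.0]), 25.0 * np.eye(3)
x1, P1 = focal_plane_update(x0, P0, z, landmark, f, sigma)
print(update_rows(1, focal_plane=True), update_rows(1, focal_plane=False))  # 2 0
```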

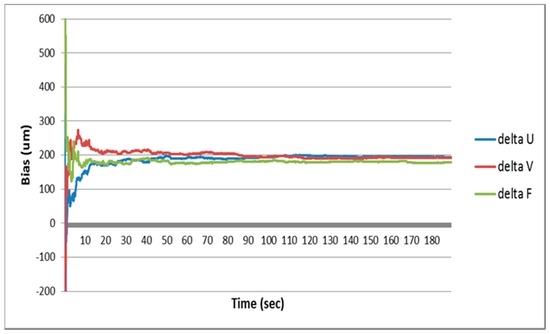

Figure 5 shows the vision sensor errors estimated by the proposed method in the simulation. The results show that the vision sensor errors are well estimated and that the performance of the vision navigation system is improved.

Figure 5. Camera sensor error estimation results of the simulation.

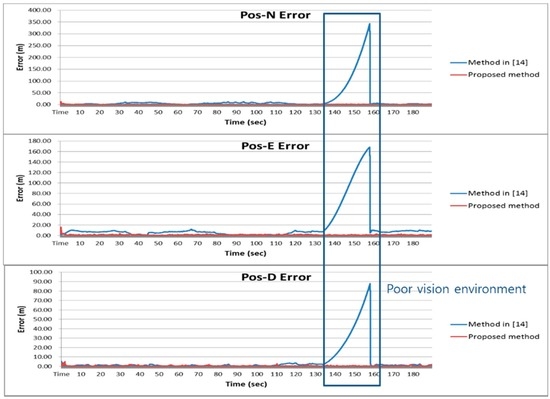

In Figure 6, the navigation results of the proposed method are compared with those of the pure INS and the method in [14]. Figure 7 shows the north, east and down position errors of the proposed method and the method in [14].

Figure 6. Navigation results of the simulation.

Figure 7. Position error of the simulation.

Table 3 gives the RMS errors of the pure INS, the method in [14] and the proposed method. The error of the pure INS grows as the navigation operation continues. The method in [14] gives relatively large navigation parameter errors in the area where the number of landmarks is insufficient for a vision navigation output. In this area, the proposed method gives approximately 50 times better position accuracy and 10 times better attitude accuracy than the method in [14].

Table 3. RMS navigation parameter error of the simulation.
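Table 3 summarizes RMS errors over the Monte Carlo runs. A minimal sketch of such a computation follows, assuming the estimates are stored as an array of shape (runs, epochs, axes) and pooled over all runs and epochs. The exact averaging behind Table 3 is not stated in this section, so the array layout, the optional epoch mask for the landmark-poor area and the helper names are hypothetical. The ratio of two methods' RMS errors gives an improvement factor of the kind quoted in the text.

```python
import numpy as np

def rms_error(estimates: np.ndarray, reference: np.ndarray, mask=None) -> np.ndarray:
    """Hypothetical helper: per-axis RMS error pooled over Monte Carlo runs and epochs.

    estimates: (runs, epochs, axes); reference: (epochs, axes) true trajectory;
    mask: optional boolean array (epochs,) selecting, e.g., the landmark-poor area.
    """
    err = estimates - reference[np.newaxis, :, :]
    if mask is not None:
        err = err[:, mask, :]
    return np.sqrt(np.mean(err**2, axis=(0, 1)))

def improvement_factor(rms_other: np.ndarray, rms_proposed: np.ndarray) -> np.ndarray:
    """Hypothetical helper: how many times smaller the proposed method's RMS error is."""
    return rms_other / rms_proposed
```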

4.2. Van Test

Figure 8 shows the experimental setup and the reference navigation system. The experimental setup consists of a camera and an IMU mounted on an optical bench. The reference navigation system, a carrier-phase differential GPS (CDGPS)/INS integrated navigation system, is installed alongside it, and its outputs are regarded as true values when evaluating the experimental results. A micro-electro-mechanical system (MEMS) IMU and a low-cost commercial camera, specified in Table 4 and Table 5, respectively, were used in the experiment. A landmark database was built in advance with the help of large-scale maps and aerial photographs.

Figure 8. Experimental setup and reference navigation system.

Table 4. IMU specification and initial attitude error for the experiment.

Table 5. Vision sensor specification for the experiment.

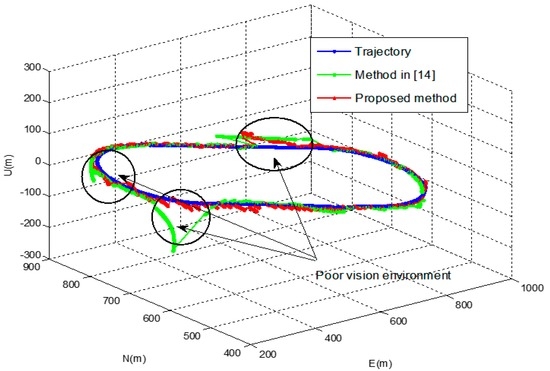

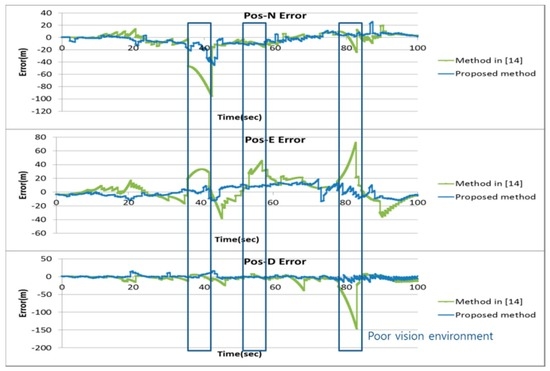

Figure 9 shows the vehicle’s reference trajectory in the experiment. The experimental results of the proposed method were compared with those of the pure INS and the method in [14]. Figures 10 and 11 show the navigation results, and Table 6 gives the RMS errors in the experiment. Consistent with the computer simulations, the experimental results show that the proposed method provides reliable solutions, with approximately five times better positioning performance than the method in [14], even in poor vision environments.

Figure 9. Vehicle’s reference trajectory in the experiment.

Figure 10. Navigation results of the experiment.

Figure 11. Position error of the experiment.

Table 6. RMS navigation parameter error of the experiment.

5. Concluding Remarks and Further Studies

This paper proposed an inertial and landmark-based vision integrated navigation method using focal plane measurements of landmarks. An integration model was derived so that the raw focal plane measurements can be used in the integration Kalman filter. The proposed method was verified through computer simulations and van tests, and its performance was compared with that of another integration method that uses the vision navigation output, i.e., the position and attitude output of a vision navigation system. The results show that the proposed method gives reliable navigation outputs even when the number of landmarks is insufficient for vision navigation.

Future work will address an integration method that uses successive images to improve navigation performance and an integration model for efficiently detecting and recognizing landmarks. Other filtering methods, such as the particle filter, the unscented Kalman filter and artificial neural network-based filtering, as well as the application of vision/INS integrated navigation to sea navigation, can also be considered.

Author Contributions

Youngsun Kim and Dong-Hwan Hwang proposed the idea of this paper; Youngsun Kim designed and performed the experiments; Youngsun Kim and Dong-Hwan Hwang analyzed the experimental data and wrote the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Biezad, D.J. Integrated Navigation and Guidance Systems, 1st ed.; American Institute of Aeronautics and Astronautics Inc.: Virginia, VA, USA, 1999; pp. 139–150.

- Groves, P.D. Principles of GNSS, Inertial, and Multisensor Integrated Navigation Systems, 1st ed.; Artech House: Boston, MA, USA, 2008; pp. 361–469.

- Ehsan, S.; McDonald-Maier, K.D. On-Board Vision Processing for Small UAVs: Time to Rethink Strategy. 2015. arXiv:1504.07021. arXiv.org e-Print archive. Available online: https://arxiv.org/abs/1504.07021 (accessed on 10 October 2016).

- Groves, P.D. The PNT boom, future trends in integrated navigation. Inside GNSS 2013, 2013, 44–49.

- Bryson, M.; Sukkarieh, S. Building a robust implementation of bearing-only inertial SLAM for a UAV. J. Field Robot. 2007, 24, 113–143.

- Veth, M.J. Navigation using images, a survey of techniques. J. Inst. Navig. 2011, 58, 127–139.

- Borenstein, J.; Everett, H.R.; Feng, L. Where am I? Sensors and Methods for Mobile Robot Positioning, 1st ed.; University of Michigan: Michigan, MI, USA, 1996.

- Thompson, W.B.; Henderson, T.C.; Colvin, T.L.; Dick, L.B.; Valiquette, C.M. Vision-based localization. In Proceedings of the 1993 Image Understanding Workshop, Maryland, MD, USA, 18–21 April 1993; pp. 491–498.

- Betke, M.; Gurvits, L. Mobile robot localization using landmarks. IEEE Trans. Robot. Autom. 1997, 13, 251–263.

- Chatterji, G.B.; Menon, P.K.; Sridhar, B. GPS/machine vision navigation systems for aircraft. IEEE Trans. Aerosp. Electron. Syst. 1997, 33, 1012–1025.

- Scaramuzza, D. Visual odometry-tutorial. IEEE Robot. Autom. Mag. 2011, 18, 80–92.

- George, M.; Sukkarieh, S. Camera aided inertial navigation in poor GPS environments. In Proceedings of the 2007 IEEE Aerospace Conference, Big Sky, MT, USA, 3–10 March 2007; pp. 1–12.

- Tardif, J.P.; George, M.; Laverne, M. A new approach to vision-aided inertial navigation. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 4161–4168.

- Kim, Y.S.; Hwang, D.-H. INS/vision navigation system considering error characteristics of landmark-based vision navigation. J. Inst. Control Robot. Syst. 2013, 19, 95–101.

- Yue, D.X.; Huang, X.S.; Tan, H.L. INS/VNS fusion based on unscented particle filter. In Proceedings of the 2007 International Conference on Wavelet Analysis and Pattern Recognition, Beijing, China, 2–4 November 2007; pp. 151–156.

- Wang, W.; Wang, D. Land vehicle navigation using odometry/INS/vision integrated system. In Proceedings of the 2008 IEEE International Conference on Cybernetics and Intelligent Systems, Chengdu, China, 21–24 September 2008; pp. 754–759.

- Meskin, D.G.; Bar-Itzhack, I.Y. A unified approach to inertial navigation system error modeling. J. Guid. Control Dyn. 1992, 15, 648–653.

- Song, G.W.; Jeon, C.B.; Yu, J. Relation of Euler angle error and rotation vector error. In Proceedings of the 1997 Conference on Control and Instrumentation, Automation, and Robotics, Seoul, Korea, 22–25 July 1997; pp. 217–222.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).