Abstract

Predicting the toxicity of drug molecules with in silico quantitative structure–activity relationship (QSAR) approaches helps guide safe drug development and accelerates the drug development process. Advances in machine learning have made this task easier and more accurate, but it is still hampered by two problems: the severely skewed distribution of active/inactive chemicals and the relatively high dimensionality of the feature space. To address both issues simultaneously, this study proposes a binary ant colony optimization feature selection algorithm, called BACO. BACO divides the labeled drug molecules into a training set and a validation set multiple times; for each division, the ant colony searches for an optimal feature group that maximizes a weighted combination of three class-imbalance performance metrics (F-measure, G-mean, and MCC) on the validation set. After all divisions have been processed, the frequency with which each feature (descriptor) appears in the optimal feature groups is calculated, and the features are ranked in descending order of frequency. Only the high-frequency features are used to train a support vector machine (SVM) and construct the structure–activity relationship (SAR) prediction model. Experimental results on the 12 datasets of the Tox21 challenge, with molecules represented by the Mordred descriptor calculator, show that BACO significantly outperforms several traditional feature selection approaches widely used in QSAR analysis. On most datasets, BACO reaches its best performance with only a few to a few dozen descriptors, which demonstrates its effectiveness and potential value in cheminformatics.

1. Introduction

During drug development, toxicity tests are generally required to guarantee patient safety [1,2]. Traditional toxicity tests are carried out in biochemistry laboratories; they are time-consuming and cause the deaths of many experimental animals [3]. In recent years, in silico quantitative structure–activity relationship (QSAR) modeling has become an effective and efficient alternative for predicting the toxicity of molecules produced during drug development [4,5,6]. With the sustained accumulation of chemical structure–activity data and the rapid development of machine learning techniques, QSAR models are becoming increasingly accurate [7,8,9].

However, practical QSAR modeling usually faces two challenges: a relatively high-dimensional feature (descriptor) space and a skewed distribution of active/inactive chemicals. Both data characteristics reduce the accuracy of QSAR models. In recent years, a number of feature selection techniques [10,11,12] and learning strategies for imbalanced data [13,14,15] have been proposed. Feature selection removes irrelevant and redundant features from the original feature space, and existing feature selection algorithms can be roughly divided into three categories: filter [10,11], wrapper [12], and embedded [16] methods. Filter methods independently estimate how strongly each feature is correlated with the class labels and then rank the features by these scores, selecting those that best discriminate between classes. These methods are generally fast, but they ignore the correlations among features. Wrapper methods select a candidate feature subset, use a specific classifier to estimate its accuracy on a previously prepared validation set, and then, based on this feedback, modify the subset with an optimization strategy; the loop continues until a subset that produces sufficiently accurate results on the validation set is found. Because every candidate subset requires training and evaluating a classifier, wrapper methods are generally time-consuming, but they take the cooperative effects among features into account. Embedded methods perform feature selection as part of classifier training, so they are highly sensitive to the structure of the underlying classifier: features selected by one classifier may be useless for another. In particular, in QSAR, existing feature selection approaches fail to effectively extract the features that maximize the performance of the learning model when the data distribution is skewed.

This issue motivated us to design a new feature selection approach for imbalanced data. In this study, we propose a novel feature selection method based on ant colony optimization [17,18,19], called the binary ant colony optimization (BACO) algorithm. BACO arranges all features in sequence between the ant nest and the food source, and there are two paths from each feature to the next: one path denotes that the next feature is selected, and the other denotes that it is discarded. Each ant that travels from the nest to the food source therefore defines a feature group. A support vector machine (SVM) classifier [20], one of the most widely used classifiers in QSAR tasks [21,22,23,24], is then trained on the extracted feature group, and the quality of the group is evaluated with a fitness function that combines three performance metrics: the F-measure, G-mean, and MCC (Matthews correlation coefficient). These three metrics are specifically designed for evaluating classification models on imbalanced data, so the fitness reflects how robust the feature group is to a skewed data distribution. In addition, to avoid overfitting and/or random results, the original training set is divided multiple times into a sub-training set and a sub-validation set. For each division, an optimal feature group is obtained by running the BACO optimization procedure. The frequency with which each feature appears across all optimal feature groups is then counted, and the features are ranked in descending order of frequency. Finally, the top-ranked features are used to train a new SVM classifier that classifies the instances in the testing set.
BACO can therefore be regarded as a hybrid feature selection method: it first conducts a wrapper feature selection procedure and then uses the results to guide a filter-style selection, with the aim of uncovering the features that are most robust to a skewed data distribution.
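The second, filter-like stage (counting how often each descriptor appears in the per-division optimal groups and keeping the most frequent ones) can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the function name `rank_by_frequency`, the tie-breaking rule (lower index first), and the example groups are hypothetical, and the per-division BACO search is assumed to have already produced the groups.

```python
from collections import Counter
from typing import Iterable, List, Sequence


def rank_by_frequency(optimal_groups: Iterable[Sequence[int]], k: int) -> List[int]:
    """Return the indices of the k features that occur most often across the
    optimal feature groups found on the individual divisions."""
    counts: Counter = Counter()
    for group in optimal_groups:
        counts.update(set(group))  # count a feature at most once per division
    # Sort by descending frequency; ties broken by lower feature index (assumption).
    ranked = sorted(counts, key=lambda f: (-counts[f], f))
    return ranked[:k]


# Hypothetical optimal groups (descriptor indices) from four divisions.
groups = [[3, 7, 12], [7, 12, 40], [7, 3, 99], [12, 7, 5]]
print(rank_by_frequency(groups, k=3))  # [7, 12, 3]
```

The selected indices would then define the reduced descriptor matrix on which the final SVM is trained.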

We apply the proposed BACO algorithm to predict the toxicity of drug molecules. Specifically, twelve datasets from the Tox21 Data Challenge [25] are used to verify the effectiveness of BACO. Among these twelve datasets, eight relate to the nuclear receptor (NR) pathway, and the other four relate to the stress response (SR) pathway. All drug molecules, originally given as SMILES strings, are transformed into quantitative descriptor representations using the Mordred descriptor calculator [26]. Under the same experimental conditions and settings, we compared BACO with five well-known feature selection methods that are widely used in QSAR [10,11]: the chi-square test (CHI) [27], Gini index (Gini) [28], minimum-redundancy-maximum-relevance (mRMR) [29], mutual information (MI) [30], and ReliefF [31]. The experimental results show that BACO clearly outperforms these competitors on the skewed Tox21 datasets when the same number of features is selected, indicating its effectiveness on unevenly distributed data. The results also show that, with BACO, the best performance on most datasets is obtained with only a few to a few dozen descriptors.

The rest of this study is organized as follows. Section 2 presents the experimental results and the corresponding discussion. Section 3 describes the Tox21 datasets, the Mordred descriptor representation, and the proposed BACO method in detail. Section 4 summarizes the findings of the study.

2. Results and Discussion

This section verifies the effectiveness and superiority of the proposed BACO algorithm experimentally. First, the results obtained with the initially screened features and with the high-frequency features acquired by BACO are compared to show the effect of feature selection. Then, under the same experimental settings, BACO is compared with several benchmark feature selection algorithms. Next, we explore the impact of the number of selected features K on BACO. Furthermore, we investigate the impact of different basic classifiers and assess the generalizability and applicability of BACO on three other imbalanced feature selection and classification tasks. Finally, the top 20 high-frequency descriptors on each Tox21 dataset are listed to explain why they help to distinguish active from inactive molecules, and we provide several suggestions on how to use them to better understand the relationship between drug toxicity and molecular structure features and to support drug molecule design. All experimental results are reported as the mean of 10 runs of randomized five-fold cross-validation, which reduces the risk that a single favorable or unfavorable partition biases the evaluation of the methods.
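One way to generate the evaluation protocol described above (10 repetitions of five-fold cross-validation) is sketched below. Stratifying the folds, so that each fold keeps roughly the original active/inactive ratio, is our assumption rather than something the text states, and the helper name `repeated_stratified_kfold` is hypothetical. In practice, scikit-learn's `RepeatedStratifiedKFold` provides equivalent splits.

```python
import random
from typing import Dict, List, Sequence, Tuple


def repeated_stratified_kfold(
    labels: Sequence[int], n_splits: int = 5, n_repeats: int = 10, seed: int = 0
) -> List[Tuple[List[int], List[int]]]:
    """Return (train_idx, test_idx) pairs for n_repeats rounds of n_splits-fold CV."""
    rng = random.Random(seed)
    # Group instance indices by class label (e.g., 1 = active, 0 = inactive).
    by_class: Dict[int, List[int]] = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    splits = []
    for _ in range(n_repeats):
        folds: List[List[int]] = [[] for _ in range(n_splits)]
        for members in by_class.values():
            shuffled = members[:]
            rng.shuffle(shuffled)
            # Deal the shuffled indices of this class round-robin into the folds.
            for pos, idx in enumerate(shuffled):
                folds[pos % n_splits].append(idx)
        for f in range(n_splits):
            test = sorted(folds[f])
            train = sorted(i for g, fold in enumerate(folds) if g != f for i in fold)
            splits.append((train, test))
    return splits


# Hypothetical imbalanced labels: 10 actives, 90 inactives.
labels = [1] * 10 + [0] * 90
splits = repeated_stratified_kfold(labels)
print(len(splits))  # 50
```

Each reported score would then be the mean over the 50 test folds.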

2.1. Comparison Between Initially Screened Features and High-Frequency Features

Because BACO performs multiple random divisions, it produces many optimal feature subsets, one per division. Each feature that appears in these subsets can help distinguish active from inactive molecules to some extent, and the features within the same subset cooperate well with each other. This raises the question of whether combining all features that appear in these optimal subsets yields sufficiently good classification performance, or whether selecting a few high-frequency features from them yields better performance. Table 1 presents the classification performance obtained with the initially screened features and with the top 20 high-frequency features acquired by BACO.

Table 1.

Performance comparison between initially screened features (covering all features emerging in K optimal feature subsets) and high-frequency features (top 20).

The results in Table 1 show that using a few high-frequency features significantly improves the performance of the classification model compared with using the initially screened features: on nearly all datasets, the classification performance of BACO improved with the top 20 features. We attribute this to two causes. First, although each initially screened feature comes from at least one optimal feature subset, each subset was optimized independently on a single division, so their union is likely to contain severe redundancy. Second, some features with weak discriminative ability also enter the initially screened feature set. In BACO, a high frequency indicates that a feature was repeatedly selected across independent divisions, which reflects both its discriminative ability and its importance. Therefore, combining a few high-frequency features improves the performance of the learning model. The results in Table 1 provide preliminary evidence of the effectiveness of BACO.

2.2. Comparison Between BACO and Several Benchmark Feature Selection Approaches

Next, we compared the performance of BACO with that of the benchmark feature selection algorithms mentioned in Section 1. To ensure a fair comparison, every feature selection algorithm selected its top 20 features. The experimental results are presented in Table 2, Table 3, Table 4, Table 5 and Table 6, where the best results are highlighted in bold.

Table 2.

Classification performance of BACO and several benchmark feature selection methods in terms of the F-measure metric.

Table 3.

Classification performance of BACO and several benchmark feature selection methods in terms of the G-mean metric.

Table 4.

Classification performance of BACO and several benchmark feature selection methods in terms of the MCC metric.

Table 5.

Classification performance of BACO and several benchmark feature selection methods in terms of the AUC metric.

Table 6.

Classification performance of BACO and several benchmark feature selection methods in terms of the PR-AUC metric.

The results in Table 2, Table 3, Table 4, Table 5 and Table 6 show that BACO yields better results than the five widely used feature selection approaches on all datasets except DS7 in terms of both the F-measure and G-mean metrics. For the MCC metric, BACO yields the best results on nine datasets but produces slightly worse results than CHI on DS8 and than mRMR on DS12. For the AUC and PR-AUC metrics, BACO yields the best results on 11 and 10 datasets, respectively, and is significantly superior to the five other feature selection methods. Notably, neither AUC nor PR-AUC is used in the optimization procedure of BACO, so their improvement indicates that the features selected by BACO genuinely improve classification performance on imbalanced data rather than merely fitting the optimized metrics. These results further demonstrate the effectiveness and superiority of BACO. They are not surprising, because the other feature selection algorithms consider neither the associations among the selected top K features nor the influence of the imbalanced data distribution. In feature selection, simply combining the individually most discriminative features often yields worse results than combining features that complement each other. In addition, an extremely imbalanced class distribution may reduce the accuracy of most traditional feature selection methods. This explains why BACO clearly outperforms the benchmark feature selection methods. We also note that, apart from mRMR, the compared methods do not remove redundant features, which explains why mRMR performs better than the other four methods.

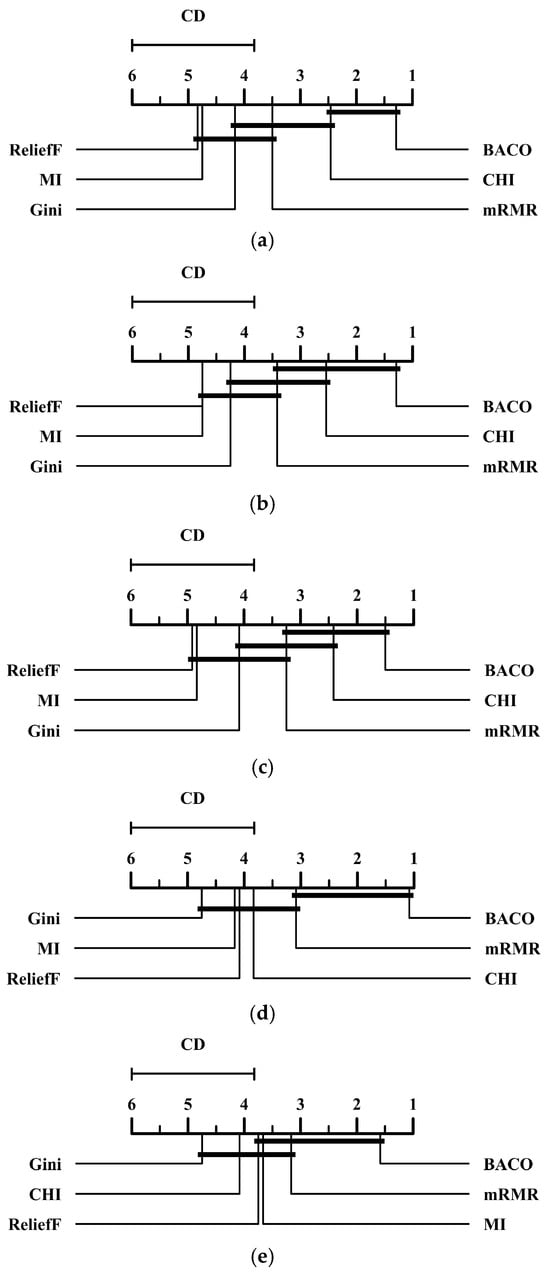

To confirm the superiority of BACO, we also conducted the Nemenyi test [32,33] on the results in Table 2, Table 3, Table 4, Table 5 and Table 6. Figure 1 presents the critical difference (CD) diagrams of the feature selection methods at a significance level of α = 0.05 for the five performance metrics. If the difference between the average rankings of two methods is smaller than the CD, the two methods are regarded as not statistically significantly different. Figure 1 shows that, in terms of both the G-mean and MCC metrics, BACO significantly outperforms all other methods except CHI and mRMR. For the F-measure metric, BACO is not significantly different only from CHI, and for the AUC metric, only from mRMR. For the PR-AUC metric, BACO significantly outperforms only two methods, namely Gini and CHI. In summary, although the superiority of BACO over a few methods is not statistically significant, BACO can still be regarded as the best option among the compared approaches because it achieves the best (lowest) average ranking on every performance metric.

Figure 1.

CD diagrams of several feature selection methods at a standard level of significance α = 0.05 in terms of five performance metrics. (a) CD diagram of the F-measure metric. (b) CD diagram of the G-mean metric. (c) CD diagram of the MCC metric. (d) CD diagram of the AUC metric. (e) CD diagram of the PR-AUC metric.
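For reference, the Nemenyi critical difference is CD = q_α·sqrt(k(k + 1)/(6N)), where k is the number of compared methods and N is the number of datasets; methods are ranked on each dataset, and their average ranks are compared. The sketch below assumes the standard tabulated q_α values for α = 0.05 (as given, for example, by Demšar, 2006); the helper names are hypothetical. With k = 6 methods and N = 12 datasets, the formula gives CD ≈ 2.177.

```python
import math
from typing import List, Sequence

# Critical values q_alpha for the Nemenyi test at alpha = 0.05,
# indexed by the number of compared methods k (tabulated in Demsar, 2006).
Q_ALPHA_005 = {2: 1.960, 3: 2.343, 4: 2.569, 5: 2.728, 6: 2.850,
               7: 2.949, 8: 3.031, 9: 3.102, 10: 3.164}


def nemenyi_cd(k: int, n_datasets: int) -> float:
    """Critical difference CD = q_alpha * sqrt(k (k + 1) / (6 N))."""
    return Q_ALPHA_005[k] * math.sqrt(k * (k + 1) / (6.0 * n_datasets))


def average_ranks(scores: Sequence[Sequence[float]]) -> List[float]:
    """Average rank of each method (rank 1 = best; higher score is better).

    scores[d][m] is the score of method m on dataset d; tied methods share the mean rank.
    """
    n_methods = len(scores[0])
    totals = [0.0] * n_methods
    for row in scores:
        order = sorted(range(n_methods), key=lambda m: -row[m])
        pos = 0
        while pos < n_methods:
            end = pos
            while end + 1 < n_methods and row[order[end + 1]] == row[order[pos]]:
                end += 1
            mean_rank = (pos + end) / 2.0 + 1.0  # ranks are 1-based
            for j in range(pos, end + 1):
                totals[order[j]] += mean_rank
            pos = end + 1
    return [t / len(scores) for t in totals]


# Six methods (BACO plus five baselines) compared on 12 datasets.
print(round(nemenyi_cd(6, 12), 3))  # 2.177
```

Two methods whose average ranks differ by more than this CD would be judged significantly different.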

2.3. Impact of the Parameter K on the Performance of BACO

In the second stage of BACO, the top K high-frequency features are extracted to train the final classification model, which raises the question of how to set K appropriately. We therefore tested the impact of K on the performance of BACO by varying it over {5, 10, 20, 30, 50, 100, 200, 300}. The results are presented in Table 7 and Tables S1 and S2, where the best results for each dataset are highlighted in bold.

Table 7.

Classification performance of BACO on the DS1~DS4 datasets with different K settings.

The results in these tables show that, on most datasets, selecting a few to a few dozen features with BACO is sufficient to yield excellent classification performance. On the DS4 dataset, for example, training the classification model on the top five features yields the best performance, which suggests that only a few descriptors are associated with whether a drug molecule is toxic. If K is set too large, weakly relevant and redundant descriptors enter the model and degrade its quality. Based on these results, we recommend setting K to no more than 100 in practical applications.

2.4. Impact of Adopting Different Basic Classifiers in BACO

Next, we deemed it necessary to investigate the impact of basic classifiers in BACO [34]. In addition to SVM, we compared the performance of four other representative classifiers, including a classification and regression tree (CART) [35], logistic regression (LR) [36], random forest (RF) [37], and XGBoost [38]. The compared results are presented in Table 8 and Tables S3 and S4; for each dataset, the best result in terms of each metric is highlighted in bold.

Table 8.

Classification performance of BACO on the DS1~DS4 datasets with different basic classifiers.

The results in Table 8 and Tables S3 and S4 show that, among the compared classifiers, SVM, RF, and XGBoost account for the best results across all combinations of performance metrics and datasets. This indicates that BACO tends to perform better when one of these three classifiers is integrated into its optimization procedure than when CART or LR is used. However, SVM, RF, and XGBoost are generally more robust and stable on imbalanced data than CART and LR, so we cannot conclude that adopting CART or LR yields worse feature selection results: the difference in classification performance may be driven by the classifiers themselves. When applying BACO to a practical feature selection task, we suggest choosing the classifier according to the requirements for classification performance and learning efficiency.

2.5. Detecting the Generalizability and Applicability of BACO

To verify the generalizability and applicability of BACO, we tested it on three other datasets: two ovarian cancer mass spectrometry datasets (Ovarian I and Ovarian II) [39] and a colon cancer microarray gene expression dataset (Colon) [40]. Both Ovarian I and Ovarian II contain 116 mass spectrometry instances derived from women's serum. The task in Ovarian I is to distinguish 16 benign samples from 100 ovarian cancer samples, while Ovarian II distinguishes the same 16 benign samples from 100 unaffected samples; in both datasets, the minority class therefore represents 13.8% of all instances. Each sample is represented by 15,154 features. The Colon dataset contains 62 samples collected from colon cancer patients: 40 tumor biopsies and 22 normal biopsies taken from healthy parts of the colons of the same patients, so the minority class represents 35.5% of all instances. Each instance is represented by 2000 features. Considering the high dimensionality and small sample sizes of these datasets, we manually tuned several default parameters: the number of iteration rounds R was increased from 10 to 100 to guarantee sufficient optimization, and the initial pheromone concentration of pathway 1 ($ph_{ini}^{1}$) was decreased so that the search rapidly focuses on a few features that are highly relevant to classification.

Similarly to the experiments conducted on the Tox21 datasets, we selected the top 20 features using various feature selection methods and then compared their performance in terms of the F-measure, G-mean, MCC, AUC, and PR-AUC. The results are presented in Table 9.

Table 9.

Classification performance comparison of BACO and several benchmark feature selection methods on three other datasets, in which the best results have been highlighted in bold.

The results in Table 9 show that, on these three high-dimensional, small-sample datasets, BACO still performs significantly better than the other feature selection methods. High dimensionality makes it easy to select spuriously significant features while missing the strongly relevant ones, and a small sample size tends to make feature selection results unstable. In BACO, these two characteristics may cause a large distribution drift between random divisions, which affects the quality and stability of feature selection; however, these issues can be effectively mitigated by decreasing the initial pheromone concentration of pathway 1 while increasing the numbers of iterations and divisions. These results suggest that BACO has strong generalizability and applicability and can maintain the quality of feature selection in various scenarios.

2.6. Discussion and Further Suggestions

Finally, to better understand the association between molecular toxicity and molecular structure, we list information about the top 20 high-frequency descriptors acquired by BACO on each dataset. This information helps to identify which structural properties, and their corresponding physicochemical or biological properties, directly induce toxicity, which in turn can help to develop more accurate prediction models and to design drug molecules without toxicity. The information about the top 20 high-frequency descriptors for all datasets except DS7 is listed in Table 10 and Tables S5–S14.

Table 10.

List of information about the top 20 high-frequency descriptors acquired by BACO on the DS1 dataset.

We note that some descriptors frequently appear in the high-frequency lists of various molecular pathway endpoints, including nG12FARing, nG12FRing, n5ARing, nBridgehead, SlogP_VSA6, and SRW05.

Among these descriptors, nG12FARing and nG12FRing both describe large ring sizes, a property that previous studies have also found to be closely associated with molecular toxicity [41,42]. Large rings substantially increase molecular size and volume, which can hinder efficient excretion via the renal or hepatic pathways, so such molecules may be retained in the body and prolong toxic effects. In addition, large ring structures tend to increase the hydrophobic surface area of a molecule, promoting non-covalent interactions with the hydrophobic regions of proteins or nucleic acids. These interactions may disrupt the structural integrity of biomolecules, leading to misfolding, aggregation, or loss of function.

n5ARing quantifies the number of five-membered aromatic rings (e.g., furan, thiophene, pyrrole, or the cyclopentadienyl anion) in a molecule. Such rings have been found to increase the potential for reactive metabolite formation, which can lead to cellular damage; to enhance binding affinity to biological targets, potentially disrupting normal cellular functions; and to influence molecular polarity and solubility, which affects the distribution and accumulation of the compound in biological systems [43].

nBridgehead denotes the number of bridgehead atoms in a molecule. Bridgehead atoms increase molecular rigidity, limiting flexibility and conformational changes; they also enhance metabolic stability and hydrophobicity and may introduce additional steric hindrance [44]. nBridgehead has been observed to help distinguish toxic from non-toxic compounds for several potential reasons: it is associated with enhanced metabolic stability and bioaccumulation, which prolong the molecule's activity in the body; it reflects structural rigidity and steric hindrance that may interfere with normal biological processes; and it influences the hydrophobicity and electronic properties of the molecule, which can lead to adverse interactions with biological targets [45].

SlogP_VSA6 describes the van der Waals surface area of atoms whose contributions to the molecular logP (SlogP) fall within a specific range, that is, of particular hydrophobic regions of the molecular surface. It therefore captures key aspects of molecular hydrophobicity and surface polarity, which directly influence the molecule's biodistribution, metabolism, and interactions with biological targets and thus its toxic potential [46,47].

SRW05 counts the self-returning walks of length five in the molecular graph. Although it is computed from the two-dimensional molecular graph, it indirectly reflects three-dimensional structural characteristics that influence the shape, flexibility, steric hindrance, and metabolic pathways of the molecule, and thereby its toxic potential [48,49].
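To make the meaning of SRW05 concrete: a self-returning walk of length five starts and ends at the same atom after five bond steps, and the total number of such walks in a graph with adjacency matrix A equals trace(A^5). The sketch below computes only this raw count on hypothetical ring graphs; whether Mordred includes hydrogens or transforms the count (for example, logarithmically) is not assumed, so it does not reproduce Mordred's exact output.

```python
from typing import List

Matrix = List[List[int]]


def mat_mult(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two square integer matrices."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def self_returning_walks(adj: Matrix, length: int) -> int:
    """Total number of closed walks of the given length, i.e., trace(A^length)."""
    n = len(adj)
    power = [[int(i == j) for j in range(n)] for i in range(n)]  # identity matrix
    for _ in range(length):
        power = mat_mult(power, adj)
    return sum(power[i][i] for i in range(n))


def ring(n: int) -> Matrix:
    """Adjacency matrix of a hypothetical n-membered ring (heavy atoms only)."""
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        adj[i][(i + 1) % n] = adj[(i + 1) % n][i] = 1
    return adj


print(self_returning_walks(ring(5), 5))  # 10 (five-membered ring)
print(self_returning_walks(ring(6), 5))  # 0 (six-membered ring)
```

A closed walk of odd length can exist only if the graph contains an odd cycle no longer than the walk, so in this raw form the length-5 count is nonzero only for molecules containing a three- or five-membered ring.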

Readers are encouraged to use the structural features and physicochemical and/or biological properties reflected by the significant descriptors acquired from BACO to research and understand the relationship between molecule structure and compound toxicity in their specific experiments.

In addition, the significant descriptors selected by BACO can be used to design and modify chemical structures with the aim of reducing molecular toxicity and accelerating drug design. Taking the descriptors discussed above as examples, we suggest the following modifications: adjusting the number of large rings to decrease the molecule's hydrophobic surface area; optimizing ring connectivity to control the number of five-membered aromatic rings, which decreases the potential for reactive metabolite formation and the likelihood of generating toxic intermediates; reducing the number of bridgehead atoms to decrease molecular rigidity and optimize the local chemical environment; optimizing hydrophobicity to balance hydrophilicity and lipophilicity; and modifying the topological structure of the molecule or introducing flexible groups to minimize interactions with off-target molecules. Further molecular modification for toxicity control and practical drug design requires QSAR analysis based on molecular toxicity information. Moreover, the descriptors acquired by BACO can only help to accelerate drug design: the actual toxicity of a designed chemical must still be measured by in vitro toxicity testing.

3. Materials and Methods

This section first describes the Tox21 datasets used in the experiments and then explains how the Mordred descriptor calculator is used to represent the drug molecules in these datasets. Finally, the BACO feature selection algorithm is introduced in detail.

3.1. Datasets and Their Representations

3.1.1. Tox21 Datasets

In this study, twelve Tox21 datasets are used. These datasets come from the Tox21 Data Challenge, an open-access resource that aims to help drug developers understand how chemicals can disrupt biological pathways and induce toxic effects. The toxic effects covered by the twelve datasets involve the nuclear receptor (NR) and stress response (SR) pathways: eight datasets relate to NR, and the other four relate to SR. Toxic effects activated through SR pathways tend to damage the liver and may even cause cancer, while toxic effects activated through NR pathways can disrupt the functions of the endocrine system.

The details of the twelve Tox21 datasets [25] are presented in Table 11, in which # molecules denotes the number of drug molecules, and # inactive and # active denote the numbers of inactive and active drug molecules in the corresponding dataset, respectively. Table 11 also gives the proportion of active molecules and a description of the molecular pathway endpoint for each dataset. All datasets are imbalanced, with the proportion of active molecules ranging from 2.6% to 15.8%, which is why class imbalance must be considered when predicting the toxicity of drug molecules.

Table 11.

Toxicity datasets used in experiments.

3.1.2. Mordred Descriptor Calculator

In this study, each drug molecule is represented as a vector of 1610 two-dimensional descriptors. These descriptors are computed with the Mordred descriptor calculation software (https://github.com/mordred-descriptor, accessed on 6 October 2024) [26], which is freely available, fast, and able to calculate descriptors for large molecules. Using Mordred, all drug molecules given as SMILES strings are transformed into quantitative descriptor representations. The specific descriptor information is given in Table 12.

Table 12.

Mordred descriptor information.

3.2. Binary Ant Colony Optimization (BACO) Feature (Descriptor) Selection Algorithm

3.2.1. Optimal Feature Group Search Using BACO

The ant colony optimization (ACO) algorithm proposed by Dorigo et al. [17] is a well-known swarm intelligence algorithm that has been widely used for various real-world optimization problems, especially discrete ones. ACO simulates the foraging behavior of ant colonies. During foraging, ants communicate indirectly by depositing pheromone along the paths they travel, and other ants tend to follow paths with higher pheromone concentrations. In addition, the pheromone evaporates over time. Through these simple mechanisms, the colony as a whole exhibits intelligent behavior that no single ant possesses.

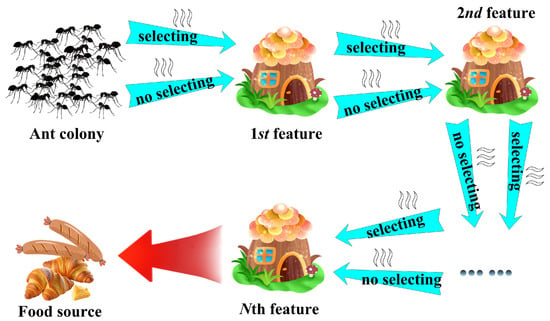

In this study, we design a binary ant colony optimization (BACO) algorithm for feature selection. The procedure of BACO is illustrated in Figure 2. Between the nest and the food source, there are N sequentially arranged sites, each corresponding to a feature. From one feature to the next, there are two alternative pathways: pathway 1 denotes that the next feature is extracted into the feature group, and pathway 2 denotes that the next feature is abandoned. When an ant travels from one feature to the next, the probability of selecting pathway j (j = 1 or 2) is calculated as follows:

$$
p_i^j = \frac{ph_i^j}{ph_i^1 + ph_i^2}
$$

where i indexes the features, and $ph_i^j$ and $p_i^j$ denote, respectively, the pheromone concentration on the jth pathway of the ith feature and the probability that an ant selects that pathway. To avoid selecting an excessive number of features into the subset, a higher initial pheromone concentration is pre-assigned to pathway 2 than to pathway 1. After all S ants have finished their journeys, S feature subsets are obtained from their pathway choices. Based on these S feature subsets, S SVM classifiers are trained on the sub-training set, and their quality is evaluated on the sub-validation set using the following fitness function:

$$
\text{fitness} = \alpha \cdot \text{F-measure} + \beta \cdot \text{G-mean} + \gamma \cdot \text{MCC}
$$

where α, β, and γ are the weights of the three performance metrics, i.e., the F-measure, G-mean, and MCC, and they sum to 1. These three metrics are calculated from the confusion matrix shown in Table 13, where TP and FN are the numbers of positive-class instances that are correctly and incorrectly classified, respectively, and TN and FP are the numbers of negative-class instances that are correctly and incorrectly classified, respectively. From these counts, the following intermediate metrics are calculated:

$$
\text{Precision} = \frac{TP}{TP + FP}
$$

$$
\text{TPR} = \text{Recall} = \frac{TP}{TP + FN}
$$

$$
\text{TNR} = \frac{TN}{TN + FP}
$$

Figure 2.

Mechanism procedure of the BACO algorithm.

Table 13.

Confusion matrix.

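Next, the F-measure, G-mean, and MCC metrics can be calculated as follows:

$$\text{F-measure} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

$$\text{G-mean} = \sqrt{\mathrm{Recall} \times \mathrm{Specificity}}$$

$$\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP+FP)(TP+FN)(TN+FP)(TN+FN)}}$$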

All three metrics are designed to evaluate the quality of a learning model on imbalanced data. The fitness function therefore rewards features that improve a model's performance on skewed data. Besides these three metrics, the area under the ROC curve (AUC) [50] and the area under the precision–recall curve (PR–AUC) [51] are also widely used to evaluate learning algorithms on imbalanced data. In this study, they are not used in the fitness function for optimization. Instead, they serve as independent measures of the quality of the selected features in our experiments.
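The following Python sketch computes the three imbalance-aware metrics from the confusion-matrix counts and combines them into a weighted fitness. It assumes the standard definitions of precision, recall (true positive rate), and specificity (true negative rate), and it treats undefined ratios as 0. The equal weights α = β = γ = 1/3 are for illustration only. The function names are hypothetical, and the weights used in the study may differ.

```python
import math


def imbalance_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """F-measure, G-mean, and MCC from confusion-matrix counts (0.0 when undefined)."""

    def safe_div(num: float, den: float) -> float:
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)         # true positive rate
    specificity = safe_div(tn, tn + fp)    # true negative rate

    f_measure = safe_div(2 * precision * recall, precision + recall)
    g_mean = math.sqrt(recall * specificity)
    mcc = safe_div(tp * tn - fp * fn,
                   math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)))
    return {"f_measure": f_measure, "g_mean": g_mean, "mcc": mcc}


def weighted_fitness(tp: int, tn: int, fp: int, fn: int,
                     alpha: float = 1 / 3, beta: float = 1 / 3,
                     gamma: float = 1 / 3) -> float:
    """alpha * F-measure + beta * G-mean + gamma * MCC, with weights summing to 1."""
    if not math.isclose(alpha + beta + gamma, 1.0):
        raise ValueError("alpha + beta + gamma must equal 1")
    m = imbalance_metrics(tp, tn, fp, fn)
    return alpha * m["f_measure"] + beta * m["g_mean"] + gamma * m["mcc"]


# Usage: 8 of 10 actives found, 10 of 90 inactives misclassified
score = weighted_fitness(tp=8, tn=80, fp=10, fn=2)
```

For these counts, the score is about 0.65, noticeably below the 0.88 accuracy of the same predictions. A classifier that labels every instance as inactive scores 0. This shows why such a fitness favors features that help detect the minority class.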

Based on the fitness evaluation, the pheromone concentration of each pathway is then updated as follows:

$$\tau_{ij}(t+1) = (1-\rho)\,\tau_{ij}(t) + \Delta\tau_{ij}(t)$$

where ρ denotes the evaporation factor, t is the iteration number of BACO, and $\Delta\tau_{ij}(t)$ represents the pheromone increment on the corresponding pathway. Only the pathways taken by the best 10% of ants receive a pheromone increment. These pathways are stored in a set E, and $\Delta\tau_{ij}$ is calculated as follows:

According to Equations (9) and (10), after each iteration, the pheromone on poor pathways is weakened through the evaporation factor ρ. Good pathways are reinforced, which raises the probability of their being selected in the next iteration. To avoid overfitting, lower and upper bounds on the pheromone concentration of each pathway are also pre-specified.

BACO repeats this procedure until the predefined number of iterations is reached. It then outputs the feature group with the highest fitness found during the whole optimization procedure.
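The iteration described in this subsection can be summarized in a compact Python sketch. It makes three assumptions:

- Pathway 1 selects a feature with probability τ_i1/(τ_i1 + τ_i2).
- All pheromones evaporate by a factor (1 − ρ) before reinforcement.
- As a hypothetical stand-in for the increment of Equation (10), each pathway taken by one of the best 10% of ants is reinforced by that ant's fitness value.

The `fitness` argument stands in for training an SVM on the training subset and scoring it on the validation subset. All parameter values are hypothetical rather than the defaults in Table 14.

```python
import random
from typing import Callable, List, Sequence, Tuple


def baco_search(
    n_features: int,
    fitness: Callable[[Sequence[int]], float],
    n_ants: int = 20,                               # S (hypothetical value)
    n_iters: int = 10,                              # R
    rho: float = 0.1,                               # evaporation factor (hypothetical)
    tau_init: Tuple[float, float] = (0.2, 0.8),     # (pathway 1: keep, pathway 2: drop)
    tau_bounds: Tuple[float, float] = (0.05, 5.0),  # (lower, upper) pheromone bounds
    elite_frac: float = 0.1,                        # best 10% of ants form the set E
    seed: int = 0,
) -> Tuple[List[int], float]:
    """Binary ACO feature search sketch; returns (best 0/1 mask, best fitness)."""
    rng = random.Random(seed)
    tau_min, tau_max = tau_bounds
    # tau[i][0]: pathway 1 (select feature i); tau[i][1]: pathway 2 (discard it)
    tau = [list(tau_init) for _ in range(n_features)]
    best_mask: List[int] = [0] * n_features
    best_fit = float("-inf")

    for _ in range(n_iters):
        ants = []
        for _ in range(n_ants):
            # Select feature i with probability tau_i1 / (tau_i1 + tau_i2)
            mask = [1 if rng.random() < t[0] / (t[0] + t[1]) else 0 for t in tau]
            ants.append((fitness(mask), mask))
        ants.sort(key=lambda a: a[0], reverse=True)
        if ants[0][0] > best_fit:
            best_fit, best_mask = ants[0][0], ants[0][1][:]

        # Evaporation: tau <- (1 - rho) * tau on every pathway
        for t in tau:
            t[0] *= 1.0 - rho
            t[1] *= 1.0 - rho
        # Reinforce pathways used by the elite ants.
        # HYPOTHETICAL increment: the ant's (non-negative) fitness value.
        n_elite = max(1, round(elite_frac * n_ants))
        for fit, mask in ants[:n_elite]:
            for i, bit in enumerate(mask):
                tau[i][0 if bit else 1] += max(fit, 0.0)
        # Keep every pheromone level within [tau_min, tau_max]
        for t in tau:
            t[0] = min(max(t[0], tau_min), tau_max)
            t[1] = min(max(t[1], tau_min), tau_max)
    return best_mask, best_fit


# Usage example with a toy fitness: features 0-2 are (hypothetically) relevant,
# and every additional feature costs a small penalty.
RELEVANT = {0, 1, 2}


def toy_fitness(mask: Sequence[int]) -> float:
    hits = sum(mask[i] for i in RELEVANT)
    extras = sum(mask) - hits
    return hits / len(RELEVANT) - 0.05 * extras


best_mask, best_fit = baco_search(n_features=10, fitness=toy_fitness)
```

The sketch keeps the best mask seen across all iterations, so it returns the highest-fitness feature group found during the whole run. With real data, each call to `fitness` would train and evaluate an SVM, and that step dominates the running time.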

3.2.2. Filter Feature Selection Based on Frequency Statistics Acquired by BACO

When a single fixed training/validation split is used, its data distribution may deviate from the true distribution, and the limited number of instances may undermine the stability of feature selection. As a result, BACO may produce an overfitted feature subset. To address this issue, we adopt a multiple-division strategy to evaluate the significance of each feature. Specifically, five-fold cross-validation is used to divide the data into training and testing sets. Each training set is then repeatedly and randomly divided into a training subset and a validation subset according to the ‘8–2 rule’, i.e., 80% of the instances are assigned to the training subset and the remaining 20% to the validation subset. BACO runs on each division and outputs its optimal feature group. Next, a filter feature selection step ranks the features by how frequently they appear in these optimal feature groups and extracts the K most frequent (i.e., most significant) features. Finally, only these K features are used to train the SVM classifier on the original training set, and its performance is verified on the testing set. Like the bootstrap approach [52], this multiple-random-division strategy is expected to produce more stable feature selection results, even on small-sample datasets.
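The multiple-division and frequency-ranking step can be sketched in Python as follows. The sketch assumes that M random 80/20 training/validation splits are drawn within each of the five outer cross-validation folds. `select` is a hypothetical callback standing in for one complete BACO run; it returns the indices of that run's optimal feature group. Only the standard library is used, and training the final SVM on the top-K features is left out.

```python
import random
from collections import Counter
from typing import Callable, List, Sequence


def frequency_ranked_features(
    n_samples: int,
    select: Callable[[List[int], List[int]], Sequence[int]],
    n_folds: int = 5,
    n_divisions: int = 3,   # M random splits per outer fold (hypothetical value)
    top_k: int = 10,        # K
    val_frac: float = 0.2,  # the '8-2 rule'
    seed: int = 0,
) -> List[List[int]]:
    """For each outer fold, return the top_k features ranked by selection frequency.

    `select(train_idx, val_idx)` is a hypothetical stand-in for one BACO run and
    must return the indices of the features in its optimal feature group.
    """
    rng = random.Random(seed)
    order = list(range(n_samples))
    rng.shuffle(order)
    folds = [order[f::n_folds] for f in range(n_folds)]

    ranked_per_fold = []
    for f in range(n_folds):
        # Outer training set = all folds except the held-out testing fold f
        outer_train = [i for g, fold in enumerate(folds) if g != f for i in fold]
        counts: Counter = Counter()
        for _ in range(n_divisions):
            shuffled = outer_train[:]
            rng.shuffle(shuffled)
            n_val = round(val_frac * len(shuffled))
            val_idx, train_idx = shuffled[:n_val], shuffled[n_val:]
            # Count each feature at most once per optimal feature group
            counts.update(set(select(train_idx, val_idx)))
        ranked_per_fold.append([feat for feat, _ in counts.most_common(top_k)])
    # In the full procedure, an SVM is then trained on each outer training set
    # using only its top-K features and tested on the held-out fold.
    return ranked_per_fold
```

With a stand-in selector such as `lambda train_idx, val_idx: [3, 7]`, every fold returns features 3 and 7. With a real BACO run, the ranking instead reflects how consistently each descriptor is chosen across divisions. That consistency is what makes the final selection more stable than any single run.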

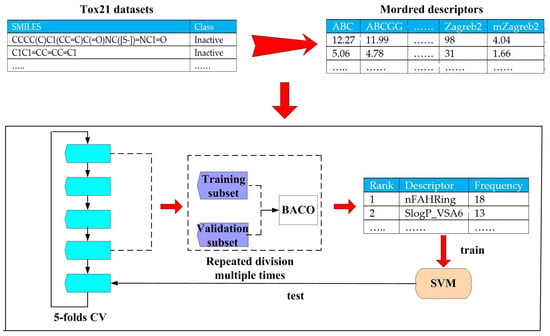

Figure 3 illustrates the complete feature selection and classification procedure for the toxicity prediction task. In essence, BACO is a filter feature selection method built on the statistics of multiple wrapper feature selection runs.

Figure 3.

Filter feature selection and toxicity prediction procedure based on BACO.

3.2.3. Default Parameter Settings and Time Complexity Analysis

Table 14 presents the default parameter settings used in this study. The number of BACO iterations R is empirically set to 10 for two reasons: (1) in most cases, this setting is sufficient for BACO to converge, and (2) the optimization process of BACO is relatively time-consuming. For the number of random divisions M, we chose a moderate value that highlights the significant features while limiting the running time.

Table 14.

Default parameter settings of the proposed BACO algorithm and SVM classifier.

Next, we analyze the time complexity of BACO in detail. In each iteration, generating the S ants costs O(NS), training their SVMs costs between O(h²NS) and O(h³NS), and obtaining their prediction performance costs O(h²NS). Here, h denotes the number of instances and N the number of features. BACO runs R iterations within each of the M random divisions. Therefore, when an SVM with an RBF kernel is used, the time complexity of BACO lies between O(h²NSRM) and O(h³NSRM), which makes it far more time-consuming than filter-based feature selection methods. In practical applications, we suggest that users tune the number of ants S, the number of iterations R, and the number of random divisions M according to the characteristics of their data to further reduce the running time. In addition, users working with large datasets should adopt classifiers with low time complexity. When the data are extremely high-dimensional, users can also lower the initial pheromone concentration of pathway 1. This substantially reduces the number of features in the subset constructed by each ant, and thus the running time of BACO. In summary, although BACO is time-consuming, we believe it is well suited to static (offline) feature selection and prediction tasks, in which accuracy matters more than real-time performance.
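The complexity argument above can be written out as a short derivation. Per iteration, the SVM training term dominates the costs of generating ants and predicting:

$$
T_{\text{iter}} = \underbrace{O(NS)}_{\text{ant generation}} + \underbrace{O(h^2NS)\ \text{to}\ O(h^3NS)}_{\text{SVM training}} + \underbrace{O(h^2NS)}_{\text{prediction}} = O(h^2NS)\ \text{to}\ O(h^3NS)
$$

$$
T_{\text{BACO}} = R \cdot M \cdot T_{\text{iter}} = O(h^2NSRM)\ \text{to}\ O(h^3NSRM)
$$

As a concrete count, assume the M divisions are repeated within each of the five outer cross-validation folds. BACO with R = 10 then trains 5 × 10 × S × M = 50SM SVMs in total, which is why reducing S, R, or M directly shortens the running time.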

3.2.4. Applicability and Limitations of BACO

In principle, BACO is applicable to a wide range of feature selection scenarios, even when the feature distribution is complex, because it evaluates the significance of features indirectly through performance feedback from the classifier. It can also be adapted to different feature selection objectives by modifying the fitness function. For example, when the class distribution is balanced, the fitness function can be replaced by accuracy. For regression tasks, it can be based on the MSE or MAE loss, negated because BACO maximizes fitness. BACO is therefore a flexible and broadly applicable feature selection algorithm.
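To illustrate how the fitness function can be swapped, the following Python sketch defines the alternatives mentioned above as hypothetical helpers: accuracy for balanced classification, and negated MSE or MAE for regression. The negation matters because BACO keeps the feature group with the highest fitness, so a loss must become a score that increases as predictions improve.

```python
from typing import Callable, Dict, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of correct predictions; suitable when classes are balanced."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def neg_mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Negated mean squared error: higher is better, as BACO's fitness requires."""
    return -sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def neg_mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Negated mean absolute error: higher is better."""
    return -sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical registry mapping a task type to the fitness BACO would maximize.
FITNESS_BY_TASK: Dict[str, Callable[[Sequence, Sequence], float]] = {
    "balanced_classification": accuracy,
    "regression_mse": neg_mse,
    "regression_mae": neg_mae,
}
```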

However, BACO has some limitations in specific application scenarios. First, it is relatively sensitive to the size of the training set. On small datasets, it tends to output a locally optimal result, although this issue can be alleviated by increasing the number of internal divisions. Second, it is difficult to apply BACO to large-scale, high-dimensional data, where striking a good balance between feature selection quality and running time is hard. Nevertheless, on datasets such as Tox21, which contain only thousands of instances and hundreds of features, BACO can produce high-quality feature selection results within a reasonable time.

4. Conclusions

In this study, we proposed BACO, a novel feature selection method based on ant colony optimization, for predicting the toxicity of drug molecules. BACO addresses two challenges simultaneously: the skewed class distribution of drug activity data and the high dimensionality of the features. To handle the skewed distribution, BACO uses as its fitness function a weighted combination of three performance metrics designed for evaluating learning models on imbalanced data. To ensure the quality and stability of feature selection, we designed a feature frequency ranking mechanism based on multiple random divisions, which effectively reduces the negative effects of locally optimal searches. The experimental results for the 12 datasets in the Tox21 challenge show that BACO significantly outperforms traditional feature selection approaches while selecting only a few to a few dozen descriptors, demonstrating its effectiveness. BACO may therefore serve as an effective tool for various molecular activity prediction tasks.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/molecules30071548/s1, Table S1: Classification performance of BACO on the DS5~DS8 datasets with different K settings; Table S2: Classification performance of BACO on the DS9~DS12 datasets with different K settings; Table S3: Classification performance of BACO on the DS5~DS8 datasets with different basic classifiers; Table S4: Classification performance of BACO on the DS9~DS12 datasets with different basic classifiers; Table S5: List of information of the top 20 high-frequency descriptors acquired by BACO on DS2 dataset; Table S6: List of information of the top 20 high-frequency descriptors acquired by BACO on DS3 dataset; Table S7: List of information of the top 20 high-frequency descriptors acquired by BACO on DS4 dataset; Table S8: List of information of the top 20 high-frequency descriptors acquired by BACO on DS5 dataset; Table S9: List of information of the top 20 high-frequency descriptors acquired by BACO on DS6 dataset; Table S10: List of information of the top 20 high-frequency descriptors acquired by BACO on DS8 dataset; Table S11: List of information of the top 20 high-frequency descriptors acquired by BACO on DS9 dataset; Table S12: List of information of the top 20 high-frequency descriptors acquired by BACO on DS10 dataset; Table S13: List of information of the top 20 high-frequency descriptors acquired by BACO on DS11 dataset; Table S14: List of information of the top 20 high-frequency descriptors acquired by BACO on DS12 dataset.

Author Contributions

Conceptualization, Y.D. and H.Y.; methodology, J.R. and H.Y.; software, Z.Z.; validation, Y.D. and J.R.; formal analysis, Z.Z.; investigation, Y.D. and J.R.; resources, Y.D.; data curation, J.R.; writing—original draft preparation, Y.D., J.R. and Z.Z.; writing—review and editing, H.Y.; visualization, Z.Z.; supervision, Y.D. and H.Y.; project administration, Y.D. and H.Y.; funding acquisition, Y.D. and H.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under grant No. 62176107 and the Natural Science Foundation of Jiangsu Province under grant No. BK2022023163.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The Tox21 datasets with SMILES descriptions used in this study can be downloaded from https://pubs.acs.org/doi/abs/10.1021/acs.jcim.0c00908, accessed on 26 September 2024. The Ovarian I and Ovarian II datasets can be downloaded from https://home.ccr.cancer.gov/ncifdaproteomics/ppatterns.asp, accessed on 26 September 2024, and the Colon dataset can be downloaded from https://github.com/sameesayeed007/Feature-Selection-For-High-Dimensional-Imbalanced-Datasets/blob/main/Datasets/colon%202000.xls, accessed on 26 September 2024.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Gupta, R.; Polaka, S.; Rajpoot, K.; Tekade, M.; Sharma, M.C.; Tekade, R.K. Importance of toxicity testing in drug discovery and research. In Pharmacokinetics and Toxicokinetic Considerations; Academic Press: Cambridge, MA, USA, 2022; pp. 117–144.

2. Kelleci Çelik, F.; Karaduman, G. In silico QSAR modeling to predict the safe use of antibiotics during pregnancy. Drug Chem. Toxicol. 2023, 46, 962–971.

3. Krewski, D.; Andersen, M.E.; Tyshenko, M.G.; Krishnan, K.; Hartung, T.; Boekelheide, K.; Wambaugh, J.F.; Jones, D.; Whelan, M.; Thomas, R.; et al. Toxicity testing in the 21st century: Progress in the past decade and future perspectives. Arch. Toxicol. 2020, 94, 1–58.

4. De, P.; Kar, S.; Ambure, P.; Roy, K. Prediction reliability of QSAR models: An overview of various validation tools. Arch. Toxicol. 2022, 96, 1279–1295.

5. Tran, T.T.V.; Surya Wibowo, A.; Tayara, H.; Chong, K.T. Artificial intelligence in drug toxicity prediction: Recent advances, challenges, and future perspectives. J. Chem. Inf. Model. 2023, 63, 2628–2643.

6. Achar, J.; Firman, J.W.; Tran, C.; Kim, D.; Cronin, M.T.; Öberg, G. Analysis of implicit and explicit uncertainties in QSAR prediction of chemical toxicity: A case study of neurotoxicity. Regul. Toxicol. Pharmacol. 2024, 154, 105716.

7. Tropsha, A. Best practices for QSAR model development, validation, and exploitation. Mol. Inform. 2010, 29, 476–488.

8. Keyvanpour, M.R.; Shirzad, M.B. An analysis of QSAR research based on machine learning concepts. Curr. Drug Discov. Technol. 2021, 18, 17–30.

9. Zhang, F.; Wang, Z.; Peijnenburg, W.J.; Vijver, M.G. Machine learning-driven QSAR models for predicting the mixture toxicity of nanoparticles. Environ. Int. 2023, 177, 108025.

10. Cerruela García, G.; Pérez-Parras Toledano, J.; de Haro García, A.; García-Pedrajas, N. Filter feature selectors in the development of binary QSAR models. SAR QSAR Environ. Res. 2019, 30, 313–345.

11. Eklund, M.; Norinder, U.; Boyer, S.; Carlsson, L. Choosing feature selection and learning algorithms in QSAR. J. Chem. Inf. Model. 2014, 54, 837–843.

12. MotieGhader, H.; Gharaghani, S.; Masoudi-Sobhanzadeh, Y.; Masoudi-Nejad, A. Sequential and mixed genetic algorithm and learning automata (SGALA, MGALA) for feature selection in QSAR. Iran. J. Pharm. Res. IJPR 2017, 16, 533.

13. Wang, Y.; Wang, B.; Jiang, J.; Guo, J.; Lai, J.; Lian, X.Y.; Wu, J. Multitask CapsNet: An imbalanced data deep learning method for predicting toxicants. ACS Omega 2021, 6, 26545–26555.

14. Idakwo, G.; Thangapandian, S.; Luttrell, J.; Li, Y.; Wang, N.; Zhou, Z.; Hong, H.; Yang, B.; Zhang, C.; Gong, P. Structure–activity relationship-based chemical classification of highly imbalanced Tox21 datasets. J. Cheminform. 2020, 12, 1–19.

15. Kim, C.; Jeong, J.; Choi, J. Effects of Class Imbalance and Data Scarcity on the Performance of Binary Classification Machine Learning Models Developed Based on ToxCast/Tox21 Assay Data. Chem. Res. Toxicol. 2022, 35, 2219–2226.

16. Guyon, I.; Weston, J.; Barnhill, S.; Vapnik, V. Gene selection for cancer classification using support vector machines. Mach. Learn. 2002, 46, 389–422.

17. Dorigo, M.; Birattari, M.; Stützle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

18. Sun, L.; Chen, Y.; Ding, W.; Xu, J. LEFSA: Label enhancement-based feature selection with adaptive neighborhood via ant colony optimization for multilabel learning. Int. J. Mach. Learn. Cybern. 2024, 15, 533–558.

19. Yu, H.; Ni, J.; Zhao, J. ACOSampling: An ant colony optimization-based undersampling method for classifying imbalanced DNA microarray data. Neurocomputing 2013, 101, 309–318.

20. Drucker, H.; Wu, D.; Vapnik, V.N. Support vector machines for spam categorization. IEEE Trans. Neural Netw. 1999, 10, 1048–1054.

21. Boczar, D.; Michalska, K. A review of machine learning and QSAR/QSPR Predictions for complexes of organic molecules with cyclodextrins. Molecules 2024, 29, 3159.

22. Rodríguez-Pérez, R.; Bajorath, J. Evolution of support vector machine and regression modeling in chemoinformatics and drug discovery. J. Comput.-Aided Mol. Des. 2022, 36, 355–362.

23. Czermiński, R.; Yasri, A.; Hartsough, D. Use of support vector machine in pattern classification: Application to QSAR studies. Quant. Struct.-Act. Relatsh. 2001, 20, 227–240.

24. Du, Z.; Wang, D.; Li, Y. Comprehensive evaluation and comparison of machine learning methods in QSAR modeling of antioxidant tripeptides. ACS Omega 2022, 7, 25760–25771.

25. Antelo-Collado, A.; Carrasco-Velar, R.; García-Pedrajas, N.; Cerruela-García, G. Effective feature selection method for class-imbalance datasets applied to chemical toxicity prediction. J. Chem. Inf. Model. 2020, 61, 76–94.

26. Moriwaki, H.; Tian, Y.S.; Kawashita, N.; Takagi, T. Mordred: A molecular descriptor calculator. J. Cheminform. 2018, 10, 4.

27. Rupapara, V.; Rustam, F.; Ishaq, A.; Lee, E.; Ashraf, I. Chi-square and PCA based feature selection for diabetes detection with ensemble classifier. Intell. Autom. Soft Comput. 2023, 36, 1931–1949.

28. Menze, B.H.; Kelm, B.M.; Masuch, R.; Himmelreich, U.; Bachert, P.; Petrich, W.; Hamprecht, F.A. A comparison of random forest and its Gini importance with standard chemometric methods for the feature selection and classification of spectral data. BMC Bioinform. 2009, 10, 213.

29. Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

30. Estévez, P.A.; Tesmer, M.; Perez, C.A.; Zurada, J.M. Normalized mutual information feature selection. IEEE Trans. Neural Netw. 2009, 20, 189–201.

31. Robnik-Šikonja, M.; Kononenko, I. Theoretical and empirical analysis of ReliefF and RReliefF. Mach. Learn. 2003, 53, 23–69.

32. Demšar, J. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 2006, 7, 1–30.

33. Garcia, S.; Fernandez, A.; Luengo, J.; Herrera, F. Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining: Experimental analysis of power. Inf. Sci. 2010, 180, 2044–2064.

34. Makarov, D.M.; Ksenofontov, A.A.; Budkov, Y.A. Consensus Modeling for Predicting Chemical Binding to Transthyretin as the Winning Solution of the Tox24 Challenge. Chem. Res. Toxicol. 2025, 38, 392–399.

35. Loh, W.Y. Classification and regression trees. Wiley Data Min. Knowl. Discov. 2011, 1, 14–23.

36. Nick, T.G.; Campbell, K.M. Logistic regression. Top. Biostat. 2007, 273–301.

37. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

38. Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

39. Petricoin, E.F.; Ardekani, A.M.; Hitt, B.A.; Levine, P.J.; Fusaro, V.A.; Steinberg, S.M.; Mills, G.B.; Simone, C.; Fishman, D.A.; Kohn, E.C.; et al. Use of proteomic patterns in serum to identify ovarian cancer. Lancet 2002, 359, 572–577.

40. Alon, U.; Barkai, N.; Notterman, D.A.; Gish, K.; Ybarra, S.; Mack, D.; Levine, A.J. Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc. Natl. Acad. Sci. USA 1999, 96, 6745–6750.

41. Srisongkram, T. Ensemble quantitative read-across structure–activity relationship algorithm for predicting skin cytotoxicity. Chem. Res. Toxicol. 2023, 36, 1961–1972.

42. Krishnan, S.R.; Roy, A.; Gromiha, M.M. Reliable method for predicting the binding affinity of RNA-small molecule interactions using machine learning. Brief. Bioinform. 2024, 25, bbae002.

43. Clare, B.W. QSAR of aromatic substances: Toxicity of polychlorodibenzofurans. J. Mol. Struct. Theochem 2006, 763, 205–213.

44. Podder, T.; Kumar, A.; Bhattacharjee, A.; Ojha, P.K. Exploring regression-based QSTR and i-QSTR modeling for ecotoxicity prediction of diverse pesticides on multiple avian species. Environ. Sci. Adv. 2023, 2, 1399–1422.

45. Kumar, A.; Ojha, P.K.; Roy, K. QSAR modeling of chronic rat toxicity of diverse organic chemicals. Comput. Toxicol. 2023, 26, 100270.

46. Edros, R.; Feng, T.W.; Dong, R.H. Utilizing machine learning techniques to predict the blood-brain barrier permeability of compounds detected using LCQTOF-MS in Malaysian Kelulut honey. SAR QSAR Environ. Res. 2023, 34, 475–500.

47. Fujimoto, T.; Gotoh, H. Prediction and chemical interpretation of singlet-oxygen-scavenging activity of small molecule compounds by using machine learning. Antioxidants 2021, 10, 1751.

48. Galvez-Llompart, M.; Hierrezuelo, J.; Blasco, M.; Zanni, R.; Galvez, J.; de Vicente, A.; Pérez-García, A.; Romero, D. Targeting bacterial growth in biofilm conditions: Rational design of novel inhibitors to mitigate clinical and food contamination using QSAR. J. Enzym. Inhib. Med. Chem. 2024, 39, 2330907.

49. Castillo-Garit, J.A.; Barigye, S.J.; Pham-The, H.; Pérez-Doñate, V.; Torrens, F.; Pérez-Giménez, F. Computational identification of chemical compounds with potential anti-Chagas activity using a classification tree. SAR QSAR Environ. Res. 2021, 32, 71–83.

50. Wang, G.; Wong, K.W.; Lu, J. AUC-based extreme learning machines for supervised and semi-supervised imbalanced classification. IEEE Trans. Syst. Man Cybern. Syst. 2020, 51, 7919–7930.

51. Saito, T.; Rehmsmeier, M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE 2015, 10, e0118432.

52. Efron, B. The bootstrap and modern statistics. J. Am. Stat. Assoc. 2000, 95, 1293–1296.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).