Abstract

Road agglomerate fog seriously threatens driving safety, making real-time fog state detection crucial for implementing reliable traffic control measures. With the advantages of an aerial perspective and a broad field of view, UAVs have emerged as a novel solution for road agglomerate fog monitoring. This paper proposes an agglomerate fog detection method based on the fusion of SURF and optical flow characteristics. To synthesize an adequate agglomerate fog sample set from a limited number of field-collected fog images, a novel network named FogGAN is presented, which injects physical cues into the generator. Taking the region of interest (ROI) for agglomerate fog detection in the UAV image as the basic unit, SURF is employed to describe static texture features, optical flow is employed to capture frame-to-frame motion characteristics, and a multi-feature fusion approach based on Bayesian theory is then introduced. Experimental results demonstrate that FogGAN generates a more realistic dataset of agglomerate fog sample images. Furthermore, the proposed SURF and optical flow fusion method achieves higher precision, recall, and F1-score on UAV perspective images than XGBoost-based and survey-informed fusion methods.

1. Introduction

Agglomerate fog is a special type of fog that is much denser and has lower visibility than ordinary fog, and it typically extends over a range of tens to hundreds of meters [1]. Because agglomerate fog can severely impair a driver's vision, it makes it difficult to discern the road conditions ahead in time and is highly prone to triggering chain-reaction traffic accidents, posing a significant threat to traffic safety. Traditional agglomerate fog monitoring methods can be classified into two types: (1) active remote sensing technology, including meteorological sensors, LiDAR, infrared imaging devices [2,3], and meteorological satellites [4]; and (2) vision-based detection technology [5,6,7,8,9,10,11,12,13,14]. However, active remote sensing technology faces challenges in signal attenuation, low spatial resolution, coverage constraints, and high implementation costs, which prevent it from being widely adopted in traffic management. Vision-based detection technology can obtain richer feature information for more accurate environment monitoring by using existing road surveillance equipment without additional investment. Hence, vision-based methods hold broader application prospects in the real-time monitoring of agglomerate fog.

Currently, vision-based research on agglomerate fog mainly focuses on (1) fog generation and removal methods [5,6], (2) recognition and tracking of traffic objects under agglomerate fog conditions [7,8,9], and (3) detection of agglomerate fog in the road environment. Approaches to agglomerate fog detection can be classified into traditional image processing methods and AI (artificial intelligence)-based methods. Traditional image processing methods primarily employ image feature extraction and classification to recognize the fog state, for example, using the gray-level co-occurrence matrix [10,11], edge detection [12], or texture analysis [13,14]. Meanwhile, AI-based methods train models on large-scale fog image datasets and then classify the fog state, with predominant models including support vector machines (SVM), clustering analysis, K-nearest neighbor (KNN), and convolutional neural networks (CNN).

In [15], the authors propose a fog-density-level classification method using an SVM framework that incorporates both global and local fog-related features, such as entropy, contrast, and dark channel information. The method achieves a classification accuracy of 85.8% and is more robust than traditional image processing methods such as edge detection or texture analysis. To compare the performance of SVM, K-NN, and CNN, the authors in [16] apply the three methods to identify foggy and clear weather images based on the gray-level co-occurrence matrix extracted from onboard images of moving ground vehicles. In [17], the authors propose an Attention-based BiLSTM-CNN (ABCNet) model, which integrates attention mechanisms with BiLSTM and CNN architectures to predict atmospheric visibility reduced by heavy fog. Current research shows that AI methods, which extract effective image features and convolutional characteristics and build trained classifiers, can achieve efficient fog classification and atmospheric visibility prediction; this approach has become a mainstream research direction.

In recent years, unmanned aerial vehicles (UAVs) have been widely applied in traffic detection, including the monitoring of traffic flow status, traffic events, and road environmental conditions, using technologies such as visual analysis, multi-sensor fusion, and edge computing [18,19,20]. In the field of fog research, the authors in [21] employed a classification module and a style transfer module to address non-uniform dense fog removal in UAV imagery without relying on paired foggy and fog-free datasets. Their classification-guided thick-fog-removal network and style transfer mechanism significantly enhanced the visual clarity and interpretability of complex foggy scenes. In [22], a multi-task framework named ODFC-YOLO was proposed, which integrated image dehazing into a YOLO detection subnetwork via end-to-end joint training. This combination significantly improved detection accuracy while maintaining high real-time performance for UAV-based object recognition in dense fog. However, existing methods primarily analyze static images and do not consider the challenges introduced by moving UAVs under dynamic flight conditions, such as motion-induced blurring, viewpoint variation, and fog density gradation. Furthermore, in real-world scenarios, the scarcity of agglomerate fog datasets limits model training performance and generalization capability.

To address these challenges, this paper develops a UAV-based framework for agglomerate fog detection, consisting of a FogGAN model that generates agglomerate fog images to alleviate data scarcity and an agglomerate fog detection model that determines the fog state through Bayesian fusion of SURF and optical flow characteristics. From the UAV aerial perspective, the proposed fusion method integrates both the static and dynamic features of UAV images and achieves higher precision, recall, and F1-score than traditional approaches.

2. Agglomerate Fog Sample Dataset Generation Method Based on GAN

Because agglomerate fog forms and dissipates rapidly, covers a limited area, and shifts location dynamically with air currents, it is difficult to acquire a substantial number of valid samples in actual road environments. This paper therefore adopts a generative adversarial network (GAN) [23] to synthesize an agglomerate fog sample dataset from a limited number of field-collected images.

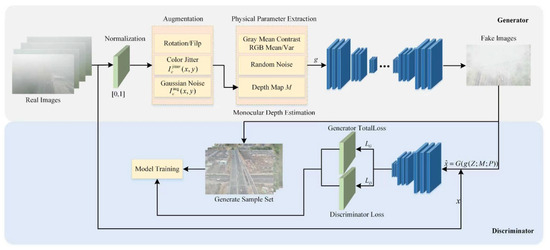

To obtain a high-quality and realistic agglomerate fog dataset, this paper proposes an improved generative adversarial network named FogGAN, in which the generator is enhanced by injecting physical image cues into its feature modulation layers for producing agglomerate fog with more authentic tonal and structural characteristics. The architecture of the FogGAN is shown in Figure 1.

Figure 1.

The generation and working principle of GAN model.

2.1. Generator

The agglomerate fog image samples acquired from actual road scenarios are linearly normalized to the unit interval [0, 1], as expressed by Equation (1):

$$\tilde{I}(x,y) = \frac{I(x,y) - I_{\min}}{I_{\max} - I_{\min}} \tag{1}$$

In Equation (1), $I_{\min}$ and $I_{\max}$ denote the minimum and maximum light intensity values of the agglomerate fog image samples, respectively.

To simulate variations in viewpoint and sensor noise characteristics in actual road scenarios, random rotation, horizontal/vertical flipping, color jittering, and Gaussian noise injection are applied to augment the agglomerate fog images.

The color jittering is expressed by Equation (2).

In Equation (2), $I_{\mathrm{cj}}$ is the color-jittered image, and the jitter terms are independent random perturbations. Color jittering modifies image properties, including brightness, contrast, and saturation.

The Gaussian noise is expressed by Equation (3):

$$I_{\mathrm{aug}}(x,y) = I_{\mathrm{cj}}(x,y) + n(x,y), \qquad n(x,y) \sim \mathcal{N}(0, \sigma^{2}) \tag{3}$$

In Equation (3), $I_{\mathrm{aug}}$ is the image after adding Gaussian noise, and $n(x,y)$ is random noise following a zero-mean Gaussian distribution with standard deviation $\sigma$, which is generally chosen within a preset range.
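A minimal NumPy sketch of the preprocessing in Equations (1)–(3) follows. It assumes an H × W × 3 RGB array; the jitter ranges, the noise level `sigma = 0.02`, the restriction to 90-degree rotations, and the clipping to [0, 1] are illustrative assumptions, not values reported in this paper.

```python
import numpy as np


def min_max_normalize(img):
    """Equation (1): linearly map intensities to the unit interval [0, 1]."""
    img = np.asarray(img, dtype=np.float64)
    i_min, i_max = img.min(), img.max()
    if i_max == i_min:  # constant image: avoid division by zero
        return np.zeros_like(img)
    return (img - i_min) / (i_max - i_min)


def color_jitter(img, rng, max_b=0.1, max_c=0.1, max_s=0.1):
    """Illustrative jitter with independent brightness, contrast and saturation factors."""
    b = 1.0 + rng.uniform(-max_b, max_b)
    c = 1.0 + rng.uniform(-max_c, max_c)
    s = 1.0 + rng.uniform(-max_s, max_s)
    out = img * b                              # brightness: global gain
    mean = out.mean()
    out = (out - mean) * c + mean              # contrast: stretch around the global mean
    gray = out.mean(axis=2, keepdims=True)     # simple channel average as gray reference
    out = (out - gray) * s + gray              # saturation: stretch around per-pixel gray
    return np.clip(out, 0.0, 1.0)


def add_gaussian_noise(img, rng, sigma=0.02):
    """Equation (3): additive zero-mean Gaussian noise with standard deviation sigma."""
    return np.clip(img + rng.normal(0.0, sigma, size=img.shape), 0.0, 1.0)


def augment(img, rng):
    """Random 90-degree rotation, random flips, color jitter, then Gaussian noise."""
    x = np.rot90(img, k=int(rng.integers(0, 4)))
    if rng.random() < 0.5:
        x = x[:, ::-1]  # horizontal flip
    if rng.random() < 0.5:
        x = x[::-1, :]  # vertical flip
    return add_gaussian_noise(color_jitter(x, rng), rng)


rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(8, 8, 3))
sample = augment(min_max_normalize(raw), rng)
```

Arbitrary-angle rotation would need interpolation (for example, an image library's rotate routine); the 90-degree version keeps the sketch dependency-free.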

These augmentations enrich the training set with diverse lighting, color balance, and noise conditions. From the augmented image, a depth map M describing the distance from each pixel to the camera is produced by a CNN-based monocular depth estimation model pretrained on UAV perspective images.

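To extract the global photometric statistics, the image is first converted into a grayscale image following the ITU-R BT.601 [24] standard by Equation (4). In this study, the photometric statistics are computed from the real images to maintain the photometric consistency of the generated images. The grayscale conversion is performed as follows:

$$Y(x,y) = 0.299\,I_{R}(x,y) + 0.587\,I_{G}(x,y) + 0.114\,I_{B}(x,y) \tag{4}$$

Referring to Equation (4), the mean gray level is calculated by Equation (5):

$$\mu_{g} = \frac{1}{HW}\sum_{y=1}^{H}\sum_{x=1}^{W} Y(x,y) \tag{5}$$

where H is the height of the image and W is the width. Further, the gray-level contrast, i.e., the standard deviation, is calculated by Equation (6):

$$\sigma_{g} = \sqrt{\frac{1}{HW}\sum_{y=1}^{H}\sum_{x=1}^{W}\big(Y(x,y) - \mu_{g}\big)^{2}} \tag{6}$$

For each color channel $c \in \{R, G, B\}$, the mean $\mu_c$ and the standard deviation $\sigma_c$ of light intensity are calculated by Equations (7) and (8), respectively:

$$\mu_{c} = \frac{1}{HW}\sum_{y=1}^{H}\sum_{x=1}^{W} I_{c}(x,y) \tag{7}$$

$$\sigma_{c} = \sqrt{\frac{1}{HW}\sum_{y=1}^{H}\sum_{x=1}^{W}\big(I_{c}(x,y) - \mu_{c}\big)^{2}} \tag{8}$$

After normalization, the eight-dimensional physical parameter vector of the agglomerate fog image sample can be expressed by Equation (9):

$$P = \big[\mu_{g}, \sigma_{g}, \mu_{R}, \mu_{G}, \mu_{B}, \sigma_{R}, \sigma_{G}, \sigma_{B}\big]^{\top} \tag{9}$$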
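The statistics in Equations (4)–(9) can be sketched in NumPy as below, assuming an RGB image already scaled to [0, 1]. The element order of P and the omission of the subsequent normalization step are assumptions made for illustration.

```python
import numpy as np

# ITU-R BT.601 luma weights for the R, G, B channels
BT601 = np.array([0.299, 0.587, 0.114])


def photometric_vector(img):
    """Return P = [mu_g, sigma_g, mu_R, mu_G, mu_B, sigma_R, sigma_G, sigma_B].

    img: H x W x 3 RGB array scaled to [0, 1]. The element order is an assumption.
    """
    img = np.asarray(img, dtype=np.float64)
    gray = img @ BT601                     # Equation (4): H x W grayscale image
    mu_g = gray.mean()                     # Equation (5): mean gray level
    sigma_g = gray.std()                   # Equation (6): population standard deviation
    mu_c = img.mean(axis=(0, 1))           # Equation (7): per-channel means
    sigma_c = img.std(axis=(0, 1))         # Equation (8): per-channel standard deviations
    return np.concatenate(([mu_g, sigma_g], mu_c, sigma_c))  # Equation (9), before normalization
```

For a pure-red image, for example, the vector starts with the BT.601 red weight 0.299 as the mean gray level and has zero contrast.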

The depth map M, the physical parameter vector P, and the random noise vector Z collectively form the input of the generator. By incorporating the physical features of the image samples, FogGAN encourages the generated agglomerate fog images to exhibit greater realism.

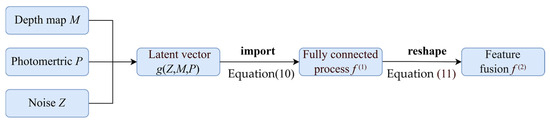

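In practice, the generator input g is instantiated as a 1-D latent vector that concatenates the embeddings of the depth map M, the photometric vector P, and the noise Z. This vectorized input g is fed to the fully connected layer in Equation (10):

$$F_{0} = W_{g}\,g + b_{g} \tag{10}$$

where $W_g$ and $b_g$ are the weight matrix and bias vector, respectively; the output $F_0$ is arranged as a C × H × W feature map for the modulation that follows.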

To achieve physics-driven fog attenuation, these features $F_0$ are further modulated by a depth-dependent transmission coefficient and a learnable mapping of the photometric parameters using Equation (11):

$$F_{1} = F_{0} \odot T + B \tag{11}$$

In Equation (11), T is a per-pixel mask of size 1 × H × W that multiplies all channels at each location, and ⊙ denotes element-wise multiplication. T is calculated from the depth map M using Equation (12), in which two positive parameters control the attenuation strength.

B is a per-channel bias of size C × 1 × 1 that is broadcast over H × W. B is calculated using Equation (13):

$$B = \mathrm{MLP}_{\theta}(P) \tag{13}$$

where θ denotes the learnable parameters of the MLP (weights and biases).
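The tensor shapes in Equation (11) are easiest to see in a small NumPy sketch. Because the exact form of Equation (12) is not reproduced here, the hypothetical `transmission` helper uses a Beer–Lambert-style exp(−β·M) stand-in with a single parameter, and the two-layer ReLU MLP in `mlp_bias` is likewise an assumed architecture; only the broadcasting pattern (per-pixel mask over all channels plus per-channel bias) follows the text.

```python
import numpy as np


def transmission(depth, beta=1.0):
    """HYPOTHETICAL stand-in for Equation (12): Beer-Lambert style t = exp(-beta * depth).

    Returns a 1 x H x W mask. The paper's form uses two positive attenuation parameters.
    """
    return np.exp(-beta * depth)[None, :, :]


def mlp_bias(p, w1, b1, w2, b2):
    """Equation (13): a two-layer ReLU MLP (assumed architecture) maps P to C channel biases."""
    hidden = np.maximum(0.0, w1 @ p + b1)
    return (w2 @ hidden + b2)[:, None, None]   # C x 1 x 1, broadcast over H x W


def modulate(f0, t, b):
    """Equation (11): F1 = F0 * T (per pixel, all channels) + B (per channel)."""
    return f0 * t + b


rng = np.random.default_rng(0)
C, H, W = 4, 5, 6
f0 = rng.normal(size=(C, H, W))              # stand-in for the reshaped FC output of Eq. (10)
depth = rng.uniform(0.0, 3.0, size=(H, W))   # depth map M
p = rng.uniform(size=8)                      # photometric vector P
w1, b1 = rng.normal(size=(16, 8)), np.zeros(16)
w2, b2 = rng.normal(size=(C, 16)), np.zeros(C)
t = transmission(depth, beta=0.5)
b = mlp_bias(p, w1, b1, w2, b2)
f1 = modulate(f0, t, b)
```

In a trainable model, these operations would be written in a deep learning framework so that the attenuation parameters and MLP weights receive gradients.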

The physical cue injection process described above is illustrated in Figure 2.

Figure 2.

Physical cue injection process.

The modulated feature is passed through L successive up-sampling blocks, each consisting of convolution, batch normalization (BatchNorm), and ReLU activation, as shown in Equation (14).

The output fake images are expressed by Equation (15).

The entire generator is trained to minimize the combined loss given by Equation (16),

where D is the discriminator, and the photometric operator extracts the same global photometric statistics defined in Equations (4)–(9). The loss terms are expectations over the generator inputs, and three scalar weights balance the individual terms.

2.2. Discriminator

The discriminator takes either a real foggy image or a fake foggy image created by the generator as input and produces a scalar describing the probability that the input is real. The discriminator architecture consists of Q down-sampling blocks and a fully connected layer, as expressed by Equations (17)–(19),

where each down-sampling block is built on a convolution with stride 2, the weights and bias of the final linear layer map the extracted features to a scalar, and the sigmoid activation converts this scalar into a probability.

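The discriminator is trained to minimize the standard binary cross-entropy loss given by Equation (20):

$$\mathcal{L}_{D} = -\mathbb{E}_{x \sim p_{\mathrm{real}}}\big[\log D(x)\big] - \mathbb{E}_{\hat{x} \sim p_{G}}\big[\log\big(1 - D(\hat{x})\big)\big] \tag{20}$$

where x is a real foggy image and $\hat{x}$ is a generated one. By alternately minimizing $\mathcal{L}_D$ with respect to the discriminator parameters and minimizing the generator loss in Equation (16) with respect to the generator parameters, the two networks play a minimax game that drives the generator to produce increasingly realistic foggy images.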
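Equation (20) can be evaluated on a batch of discriminator outputs as in the minimal NumPy sketch below. It assumes the outputs are already sigmoid probabilities; the clipping constant `eps` is an implementation assumption that keeps the logarithms finite.

```python
import numpy as np


def discriminator_bce(d_real, d_fake, eps=1e-12):
    """Equation (20): -E[log D(x)] - E[log(1 - D(x_hat))] over a batch.

    d_real, d_fake: discriminator outputs (probabilities) for real and generated images.
    """
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1.0 - d_fake)))


# An undecided discriminator (0.5 everywhere) gives 2 ln 2; a confident, correct one is near 0.
chance = discriminator_bce([0.5, 0.5], [0.5, 0.5])
confident = discriminator_bce([0.999], [0.001])
```

The value 2 ln 2 ≈ 1.386 is the loss at the theoretical equilibrium of the minimax game, where the discriminator can no longer separate real from generated images.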

3. Dense Fog Detection Method Based on SURF Feature Extraction and Optical Flow Analysis

3.1. ROI Division for UAV Perspective

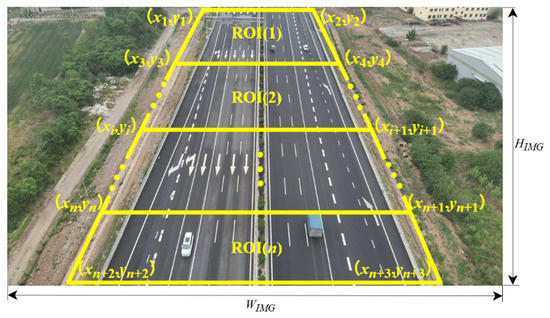

Agglomerate fog is small in spatial scale and locally distributed, whereas the forward-facing view of a cruising UAV covers a broad, distant scene. It is therefore necessary to define regions of interest (ROIs) for fog detection, which helps locate the fog accurately and reduces computational overhead. In this paper, trapezoidal ROIs along the road are preset for different image depth ranges and used in the subsequent analysis.

For an image resolution of W × H, n trapezoidal ROIs are defined according to Equation (21),

where $(x_{ij}, y_{ij})$ are the pixel coordinates of the j-th vertex of the i-th ROI (j = 1, 2, 3, 4; i = 1, 2, …, n); four horizontal position parameters denote the pixel positions of the trapezoid's top-left, top-right, bottom-left, and bottom-right base points, respectively; and W and H are the image width and height, as shown in Figure 3.
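The preset parameters of Equation (21) are not reproduced here, so the sketch below uses a hypothetical layout (`road_rois`, with made-up horizon and road-width fractions) purely to show how SURF key points can be assigned to trapezoidal ROIs to obtain per-ROI counts. Membership is decided with a convex-polygon test based on edge cross products.

```python
import numpy as np


def road_rois(width, height, n=4, horizon=0.45, far_half=0.05, near_half=0.45):
    """HYPOTHETICAL layout: n trapezoids stacked from the image bottom (near) to a horizon row (far).

    Each ROI is returned as 4 (x, y) vertices: top-left, top-right, bottom-right, bottom-left.
    The road half-width shrinks linearly from near_half * width to far_half * width.
    """
    y_h = horizon * height
    ys = np.linspace(height, y_h, n + 1)          # band boundaries, near -> far

    def half_width(y):
        a = (y - y_h) / (height - y_h)            # 1 at the bottom row, 0 at the horizon
        return (far_half + a * (near_half - far_half)) * width

    cx = width / 2.0
    rois = []
    for y_bot, y_top in zip(ys[:-1], ys[1:]):
        rois.append(np.array([[cx - half_width(y_top), y_top], [cx + half_width(y_top), y_top],
                              [cx + half_width(y_bot), y_bot], [cx - half_width(y_bot), y_bot]]))
    return rois


def inside_convex(poly, pts):
    """True for points inside or on a convex polygon (all edge cross products share a sign)."""
    pts = np.atleast_2d(np.asarray(pts, dtype=float))
    a = poly
    b = np.roll(poly, -1, axis=0)                 # next vertex for every edge
    cross = ((b[:, 0] - a[:, 0])[:, None] * (pts[:, 1] - a[:, 1][:, None])
             - (b[:, 1] - a[:, 1])[:, None] * (pts[:, 0] - a[:, 0][:, None]))
    return np.all(cross >= 0, axis=0) | np.all(cross <= 0, axis=0)


def count_keypoints(rois, keypoints):
    """Per-ROI count of key points (x, y) that fall inside each trapezoid."""
    return [int(inside_convex(r, keypoints).sum()) for r in rois]
```

Points lying exactly on a shared band boundary are counted in both adjacent ROIs by this test; a half-open rule on y would avoid double counting.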

Figure 3.

Distribution of regions of interest.

3.2. SURF Extraction of Road Agglomerate Fog

For each ROI, the SURF (speeded-up robust features) [25] algorithm is employed for robust detection and description of interest points. The integral image at any location (x, y) is defined as the cumulative sum of pixel intensities above and to the left of (x, y), as given by Equation (22):

$$I_{\Sigma}(x,y) = \sum_{x' \le x}\sum_{y' \le y} I(x', y') \tag{22}$$

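Based on the integral image acquired by Equation (22), box filters are applied to approximate the second-order Gaussian derivatives $L_{xx}$, $L_{xy}$, and $L_{yy}$ at scale s. The resulting responses are combined into the Hessian matrix, as expressed by Equation (23):

$$\mathcal{H}(x,y,s) = \begin{bmatrix} L_{xx}(x,y,s) & L_{xy}(x,y,s) \\ L_{xy}(x,y,s) & L_{yy}(x,y,s) \end{bmatrix} \tag{23}$$

The determinant of this matrix serves as the blob response; with the box-filter approximations $D_{xx}$, $D_{yy}$, and $D_{xy}$, it is computed as $\det(\mathcal{H}_{\mathrm{approx}}) = D_{xx}D_{yy} - (0.9D_{xy})^{2}$ [25]. In this paper, points whose response exceeds the threshold and is a local maximum in both the spatial and scale dimensions are defined as key points.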
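The integral-image trick behind Equations (22)–(23) can be sketched as follows: any axis-aligned box sum costs at most four look-ups, which is what makes SURF's box filters fast at every scale. The helper names are illustrative, and the 0.9 weight is the value given in the SURF paper [25].

```python
import numpy as np


def integral_image(img):
    """Equation (22): S[r, c] = sum of img[:r + 1, :c + 1] (inclusive cumulative sums)."""
    return np.asarray(img, dtype=float).cumsum(axis=0).cumsum(axis=1)


def box_sum(S, r0, c0, r1, c1):
    """Sum of img[r0:r1 + 1, c0:c1 + 1] using at most four look-ups in the integral image S."""
    total = S[r1, c1]
    if r0 > 0:
        total -= S[r0 - 1, c1]
    if c0 > 0:
        total -= S[r1, c0 - 1]
    if r0 > 0 and c0 > 0:
        total += S[r0 - 1, c0 - 1]
    return total


def hessian_response(dxx, dyy, dxy, w=0.9):
    """Approximate det(H) from box-filter responses, with the 0.9 weight used in SURF [25]."""
    return dxx * dyy - (w * dxy) ** 2
```

A box-filter response such as $D_{xx}$ is then a weighted combination of a few `box_sum` calls over adjacent rectangles, so its cost does not grow with the filter size.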

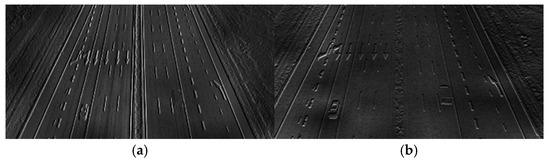

The orientation of each key point is assigned using Haar wavelet responses within a circular neighborhood around the key point. Figure 4 shows the Haar wavelet responses in the x and y directions for the same road scenario.

Figure 4.

Haar responses of the road scenario: (a) Horizontal Haar response Hx; (b) Vertical Haar response Hy.

The wavelet responses are aggregated in both the horizontal and vertical directions, and the orientation is obtained using Equation (24):

$$\theta = \operatorname{atan2}\Big(\sum H_{y},\ \sum H_{x}\Big) \tag{24}$$

where $H_x$ and $H_y$ denote the Haar wavelet responses in the horizontal and vertical directions, respectively, and atan2 is the four-quadrant inverse tangent function.
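The orientation rule can be sketched in NumPy in two ways. `dominant_orientation` follows Equation (24) as written here, summing all responses; `sliding_window_orientation` is the variant described in the original SURF paper [25], which scans π/3 sectors and keeps the one with the longest summed vector (the Gaussian weighting of responses used there is omitted for brevity).

```python
import numpy as np


def dominant_orientation(hx, hy):
    """Equation (24) as written here: atan2 of the summed vertical and horizontal responses."""
    return float(np.arctan2(np.sum(hy), np.sum(hx)))


def sliding_window_orientation(hx, hy, window=np.pi / 3, steps=72):
    """Variant from the original SURF paper: keep the pi/3 sector with the longest summed vector."""
    hx, hy = np.ravel(hx).astype(float), np.ravel(hy).astype(float)
    phi = np.mod(np.arctan2(hy, hx), 2 * np.pi)            # angle of each response vector
    best_len, best_theta = -1.0, 0.0
    for start in np.linspace(0.0, 2 * np.pi, steps, endpoint=False):
        sel = np.mod(phi - start, 2 * np.pi) < window        # responses inside this sector
        sx, sy = hx[sel].sum(), hy[sel].sum()
        if sx * sx + sy * sy > best_len:
            best_len, best_theta = sx * sx + sy * sy, float(np.arctan2(sy, sx))
    return best_theta
```

The two rules differ when responses point in several directions: with two responses at π/2 and one at π, the global sum tilts toward π, whereas the sector rule returns π/2.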

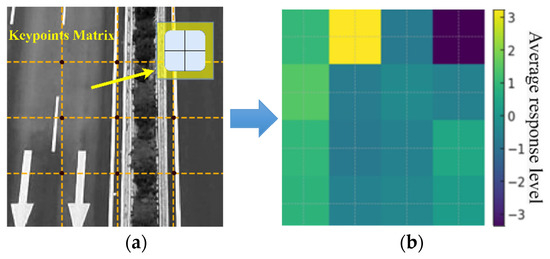

Finally, to construct the SURF descriptor, a square region centered at each key point is divided into a 4 × 4 grid of subregions, as shown in Figure 5a. Within each subregion, four statistics of the Haar responses are accumulated: $\sum d_x$, $\sum |d_x|$, $\sum d_y$, and $\sum |d_y|$, where $d_x$ and $d_y$ are the horizontal and vertical responses. Concatenating these four values from all 16 subregions yields a 64-D descriptor, as shown in Figure 5b.
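The descriptor layout can be sketched as below, assuming the Haar responses `dx` and `dy` have already been sampled on a 20 × 20 grid and rotated to the key point orientation, as in the original SURF paper [25]; the Gaussian weighting used there is omitted. Each 5 × 5 subregion contributes four sums, giving 16 × 4 = 64 values.

```python
import numpy as np


def surf_descriptor(dx, dy):
    """64-D SURF-style descriptor from oriented Haar responses on a square grid.

    The grid is split into 4 x 4 subregions; each contributes
    [sum dx, sum |dx|, sum dy, sum |dy|]. The result is scaled to unit length.
    """
    dx, dy = np.asarray(dx, dtype=float), np.asarray(dy, dtype=float)
    if dx.shape != dy.shape or dx.shape[0] % 4 or dx.shape[1] % 4:
        raise ValueError("dx and dy must share a shape divisible into a 4 x 4 grid")
    h, w = dx.shape[0] // 4, dx.shape[1] // 4
    feats = []
    for i in range(4):
        for j in range(4):
            sx = dx[i * h:(i + 1) * h, j * w:(j + 1) * w]
            sy = dy[i * h:(i + 1) * h, j * w:(j + 1) * w]
            feats.extend([sx.sum(), np.abs(sx).sum(), sy.sum(), np.abs(sy).sum()])
    v = np.asarray(feats)
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else v   # unit length gives invariance to contrast scaling
```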

Figure 5.

Construction of SURF descriptors: (a) 4 × 4 subregion response matrix; (b) Mean horizontal response per subregion.

After detection, the number of SURF key points within the i-th ROI is denoted by $N_i$ and is used as one evidential variable in the subsequent Bayesian fusion.

3.3. Optical Flow Analysis of Road Agglomerate Fog

The SURF algorithm primarily extracts static features from individual images. To improve the accuracy of agglomerate fog detection during UAV adaptive cruise, this paper further integrates optical flow to extract dynamic features. The Lucas–Kanade [26] algorithm is used to capture the dynamic features of agglomerate fog from pixel motion across consecutive image frames in each ROI.

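Under the brightness constancy assumption, pixel values exhibit minimal variation over the short time interval between consecutive frames, as expressed by Equation (25):

$$I(x, y, t) = I(x + \Delta x,\, y + \Delta y,\, t + \Delta t) \tag{25}$$

Using a first-order Taylor series expansion, the optical flow constraint equation is obtained, as expressed by Equation (26):

$$I_{x}u + I_{y}v + I_{t} = 0 \tag{26}$$

In Equation (26), $I_x$, $I_y$, and $I_t$ are the partial derivatives of image intensity with respect to the spatial coordinates (x, y) and time t, and u and v are the horizontal and vertical components of the optical flow vector, respectively.

By establishing an overdetermined system of equations from K neighboring pixels $p_1, \dots, p_K$, Equation (26) can be transformed into Equation (27), and the optical flow is obtained as the solution of a least squares problem:

$$A = \begin{bmatrix} I_{x}(p_1) & I_{y}(p_1) \\ \vdots & \vdots \\ I_{x}(p_K) & I_{y}(p_K) \end{bmatrix},\quad \mathbf{b} = -\begin{bmatrix} I_{t}(p_1) \\ \vdots \\ I_{t}(p_K) \end{bmatrix},\quad \begin{bmatrix} u \\ v \end{bmatrix} = \big(A^{\top}A\big)^{-1}A^{\top}\mathbf{b} \tag{27}$$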

Optical flow vectors are computed at the SURF key points identified in consecutive frames to capture the displacement and direction of feature motion. In agglomerate fog areas, atmospheric particles typically exhibit subtle and coherent motion, resulting in optical flow vectors with small magnitudes and consistent directions. In contrast, non-fog areas tend to display irregular and diverse motion.

Let $\mathbf{f}(x,y) = (u, v)$ denote the Lucas–Kanade flow vector at pixel (x, y) between two consecutive frames t and t + 1. Its magnitude is expressed by Equation (28):

$$m(x,y) = \lVert \mathbf{f}(x,y) \rVert = \sqrt{u^{2} + v^{2}} \tag{28}$$

The mean magnitude of the optical flow vectors in the i-th ROI is denoted by $\bar{m}_i$ and is used as the other evidential variable in the subsequent Bayesian fusion.
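Equations (25)–(28) can be sketched for a single window and a single ROI as below. The gradients `ix`, `iy`, `it` are taken as given inputs, and the minimum-eigenvalue threshold that rejects textureless windows (the aperture problem) is an assumed value whose appropriate size depends on the gradient scale.

```python
import numpy as np


def lucas_kanade_window(ix, iy, it, min_eig=1e-6):
    """Equation (27): least-squares flow (u, v) for one window of K pixels.

    ix, iy, it: spatial and temporal derivatives in the window.
    Returns None when A^T A is near-singular (textureless window / aperture problem).
    """
    A = np.stack([np.ravel(ix), np.ravel(iy)], axis=1)   # K x 2 matrix of spatial gradients
    b = -np.ravel(it)                                     # K temporal derivatives, negated
    ata = A.T @ A
    if np.linalg.eigvalsh(ata).min() < min_eig:
        return None
    return np.linalg.solve(ata, A.T @ b)                  # (A^T A)^{-1} A^T b


def mean_flow_magnitude(flows):
    """Equation (28) for each (u, v) vector, averaged over one ROI to give m_i."""
    flows = np.asarray(flows, dtype=float).reshape(-1, 2)
    if len(flows) == 0:
        return 0.0
    return float(np.mean(np.hypot(flows[:, 0], flows[:, 1])))
```

For tracking SURF key points between real frames, OpenCV's `cv2.calcOpticalFlowPyrLK` solves the same local least-squares problem iteratively over an image pyramid, which copes better with larger displacements than this single-level sketch.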

3.4. Bayesian-Based Data Fusion for Agglomerate Fog Detection

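For each ROI in a road image frame captured by the UAV, the number of SURF key points $N_i$ and the mean optical flow magnitude $\bar{m}_i$ are calculated. Their likelihoods under the hypothesis $H_1$ ("there is agglomerate fog in the ROI") are expressed by Equations (29) and (30), respectively:

$$P(N_i \mid H_1) = \frac{1}{\sqrt{2\pi}\,\sigma_{N}}\exp\!\left(-\frac{(N_i - \mu_{N})^{2}}{2\sigma_{N}^{2}}\right) \tag{29}$$

$$P(\bar{m}_i \mid H_1) = \frac{1}{\sqrt{2\pi}\,\sigma_{m}}\exp\!\left(-\frac{(\bar{m}_i - \mu_{m})^{2}}{2\sigma_{m}^{2}}\right) \tag{30}$$

where $\mu_N$ and $\sigma_N$ denote the mean and standard deviation of SURF counts, respectively, and $\mu_m$ and $\sigma_m$ are the mean and standard deviation of motion magnitudes, respectively. These parameters are derived from statistical analysis of the samples generated by FogGAN.

Assuming that $N_i$ and $\bar{m}_i$ are conditionally independent given the fog state, and denoting the prior probability of agglomerate fog by $P(H_1)$, the posterior probability of agglomerate fog can be expressed by Equation (31):

$$P(H_1 \mid N_i, \bar{m}_i) = \frac{P(N_i \mid H_1)\,P(\bar{m}_i \mid H_1)\,P(H_1)}{P(N_i, \bar{m}_i)} \tag{31}$$

When the posterior probability exceeds the preset threshold $\tau$, agglomerate fog is considered to have occurred in the ROI, as expressed by Equation (32):

$$\text{agglomerate fog in ROI } i \iff P(H_1 \mid N_i, \bar{m}_i) > \tau \tag{32}$$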
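The fusion rule in Equations (29)–(32) can be sketched in plain Python as below. Because Equation (31) normalizes by the evidence $P(N_i, \bar{m}_i)$, the sketch assumes, for illustration, that the evidence is expanded with Gaussian likelihoods under a no-fog hypothesis as well. The parameter tuples, the prior of 0.5, and the threshold are made-up values, not statistics from the FogGAN samples.

```python
import math


def gaussian_pdf(x, mu, sigma):
    """Normal density used for the likelihoods in Equations (29) and (30)."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (math.sqrt(2 * math.pi) * sigma)


def fog_posterior(n_surf, mean_flow, fog_params, clear_params, prior_fog=0.5):
    """Naive-Bayes posterior P(fog | N_i, m_i) following Equation (31).

    fog_params / clear_params: (mu_N, sigma_N, mu_m, sigma_m) under each hypothesis.
    ASSUMPTION: the evidence term is expanded with a Gaussian no-fog model.
    """
    def joint(params):
        mu_n, s_n, mu_m, s_m = params
        # conditional independence: the two likelihoods multiply
        return gaussian_pdf(n_surf, mu_n, s_n) * gaussian_pdf(mean_flow, mu_m, s_m)

    num = joint(fog_params) * prior_fog
    den = num + joint(clear_params) * (1.0 - prior_fog)
    return num / den if den > 0 else prior_fog   # fall back to the prior on underflow


def is_fog(posterior, tau=0.5):
    """Equation (32): declare agglomerate fog when the posterior exceeds tau."""
    return posterior > tau


FOG = (10.0, 5.0, 0.5, 0.3)      # hypothetical: few key points, small flow magnitudes
CLEAR = (60.0, 15.0, 2.0, 0.8)   # hypothetical: many key points, larger flow magnitudes
p_far = fog_posterior(12, 0.6, FOG, CLEAR)
```

For ROIs whose evidence lies far from both models, computing the two joint terms in log space would avoid floating-point underflow.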

4. Experimental Results and Analysis

4.1. Experimental Conditions

In this paper, a DJI Mavic 3T UAV is used to collect the original agglomerate fog images with the configuration presented in Table 1, between mileposts K470 and K480 of the Jingtai Highway. The road scenario is shown in Figure 6.

Table 1.

Parameters of the experiment.

Figure 6.

UAV and experimental scenario.

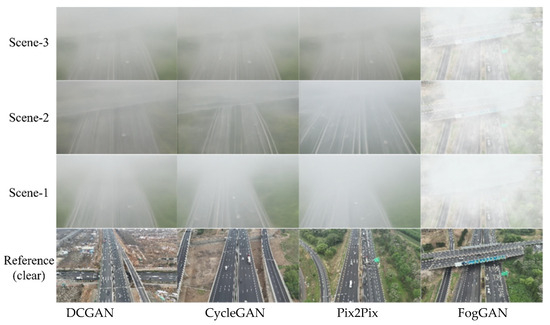

4.2. FogGAN-Based Dataset Generation Results and Analysis

To evaluate the performance of the proposed FogGAN, comparative experiments were conducted against three benchmark methods: DCGAN [27], CycleGAN [28], and Pix2Pix [29]. The visual effects of agglomerate fog images generated by different methods are presented in Figure 7.

Figure 7.

Agglomerate fog visual effects using different methods.

To quantitatively evaluate the results generated by different methods, this paper applies three metrics: peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), and Fréchet inception distance (FID). The metrics are calculated as follows:

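① PSNR: A greater PSNR indicates higher fidelity of the generated image to the reference. The PSNR is calculated by Equation (33):

$$\mathrm{PSNR} = 10\log_{10}\frac{\mathrm{MAX}_I^{2}}{\mathrm{MSE}} \tag{33}$$

where $\mathrm{MAX}_I$ is the maximum possible pixel value, and MSE is the mean squared error between the reference image X and the generated image Y, as expressed by Equation (34):

$$\mathrm{MSE} = \frac{1}{HW}\sum_{y=1}^{H}\sum_{x=1}^{W}\big(X(x,y) - Y(x,y)\big)^{2} \tag{34}$$

② SSIM: A greater SSIM indicates that the generated image is closer to the reference in luminance, contrast, and structure. The SSIM is calculated using Equation (35):

$$\mathrm{SSIM}(X,Y) = \frac{(2\mu_X\mu_Y + c_1)(2\sigma_{XY} + c_2)}{(\mu_X^{2} + \mu_Y^{2} + c_1)(\sigma_X^{2} + \sigma_Y^{2} + c_2)} \tag{35}$$

where $\mu_X$ and $\mu_Y$ are local means; $\sigma_X^2$ and $\sigma_Y^2$ are local variances; $\sigma_{XY}$ is the covariance; and $c_1 = (l_1\mathrm{MAX}_I)^2$ and $c_2 = (l_2\mathrm{MAX}_I)^2$, with $l_1 = 0.01$ and $l_2 = 0.03$.

③ FID: A lower FID indicates that the distribution of generated images is closer to that of the reference images. The FID is calculated using Equation (36):

$$\mathrm{FID} = \lVert \mu_{\mathrm{ref}} - \mu_{\mathrm{gen}} \rVert_2^{2} + \mathrm{Tr}\Big(\Sigma_{\mathrm{ref}} + \Sigma_{\mathrm{gen}} - 2\big(\Sigma_{\mathrm{ref}}\Sigma_{\mathrm{gen}}\big)^{1/2}\Big) \tag{36}$$

where $\mu_{\mathrm{ref}}$, $\Sigma_{\mathrm{ref}}$ and $\mu_{\mathrm{gen}}$, $\Sigma_{\mathrm{gen}}$ are the mean and covariance of deep features for the reference and generated images, respectively.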
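The three metrics above can be sketched in NumPy as follows, for grayscale arrays and precomputed feature matrices. Two simplifications are assumptions of this sketch: SSIM uses uniform 7 × 7 windows (the reference implementation uses an 11 × 11 Gaussian window), and FID takes feature vectors as input rather than running an Inception network itself.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def psnr(x, y, max_i=255.0):
    """Equations (33)-(34); returns inf for identical images."""
    mse = np.mean((np.asarray(x, float) - np.asarray(y, float)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(max_i ** 2 / mse))


def ssim(x, y, max_i=255.0, l1=0.01, l2=0.03, win=7):
    """Equation (35) on every win x win window (uniform weights), averaged over the image."""
    xw = sliding_window_view(np.asarray(x, float), (win, win))
    yw = sliding_window_view(np.asarray(y, float), (win, win))
    mu_x, mu_y = xw.mean(axis=(-2, -1)), yw.mean(axis=(-2, -1))
    var_x, var_y = xw.var(axis=(-2, -1)), yw.var(axis=(-2, -1))
    cov = ((xw - mu_x[..., None, None]) * (yw - mu_y[..., None, None])).mean(axis=(-2, -1))
    c1, c2 = (l1 * max_i) ** 2, (l2 * max_i) ** 2
    ssim_map = ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))
    return float(ssim_map.mean())


def _sqrtm_psd(a):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    w, v = np.linalg.eigh((a + a.T) / 2.0)
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T


def fid(feat_ref, feat_gen):
    """Equation (36) from N x d feature matrices (e.g. Inception-v3 pooled features)."""
    mu_r, mu_g = feat_ref.mean(axis=0), feat_gen.mean(axis=0)
    cov_r = np.cov(feat_ref, rowvar=False)
    cov_g = np.cov(feat_gen, rowvar=False)
    s = _sqrtm_psd(cov_r)
    # Tr((cov_r cov_g)^(1/2)) equals Tr((s cov_g s)^(1/2)), and s cov_g s is symmetric PSD
    tr_covmean = np.trace(_sqrtm_psd(s @ cov_g @ s))
    return float(np.sum((mu_r - mu_g) ** 2) + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_covmean)
```

Rewriting the matrix square root through $\Sigma_{\mathrm{ref}}^{1/2}\Sigma_{\mathrm{gen}}\Sigma_{\mathrm{ref}}^{1/2}$ keeps the computation within symmetric eigendecompositions, avoiding a general (non-symmetric) matrix square root.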

Table 2.

PSNR, SSIM, and FID of different methods.

Table 2 shows that the proposed FogGAN achieves higher PSNR and SSIM values and a lower FID than DCGAN, CycleGAN, and Pix2Pix. Because the FogGAN generator is enhanced by injecting physical image cues, the generated images are closer to the original images in pixel-level fidelity and structural features, as reflected by the higher PSNR and SSIM. Meanwhile, the lower FID indicates that FogGAN produces a more realistic overall image distribution, including the road background.

4.3. Agglomerate Fog Detection Results Based on the Fusion of SURF and Optical Flow

4.3.1. SURF Extraction Results and Analysis

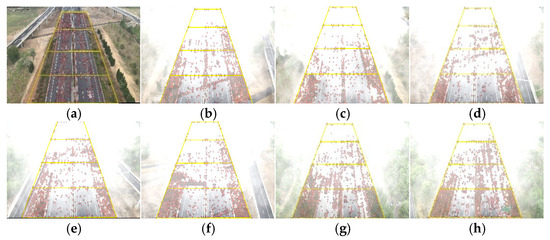

In this paper, four ROIs are configured for agglomerate fog detection. The SURF extraction results for selected samples are shown in Figure 8.

Figure 8.

SURF extraction results: (a) Reference (clear) image. (b–h) Detection results under agglomerate fog; yellow trapezoids mark the road ROIs R1–R4 (near → far), and red dots indicate SURF key points. All panels use identical detector parameters and are aligned to the reference in (a).

Table 3.

SURF distributions for different images and ROIs.

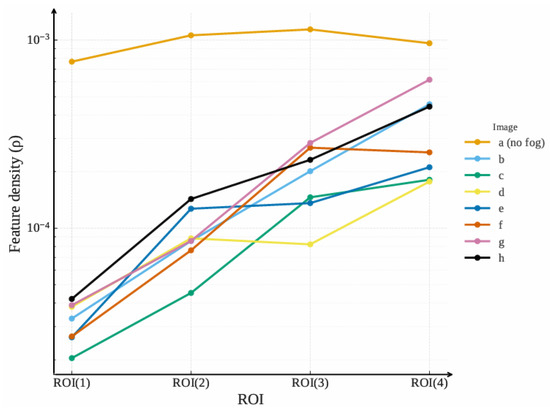

Figure 9.

SURF density variation of different samples and ROIs.

As shown in Figure 8, Figure 9, and Table 3, SURF points are sparser in long-distance ROIs and denser in short-distance ROIs. This is because the agglomerate fog concentration is higher in long-distance regions, so the image captures few features there, most of which originate from the fog itself. In contrast, visibility is relatively better in short-distance regions, enabling ground targets to generate more feature points. Therefore, the SURF key point density reflects the state of agglomerate fog in an actual scenario.

4.3.2. Optical Flow Extraction Results and Analysis

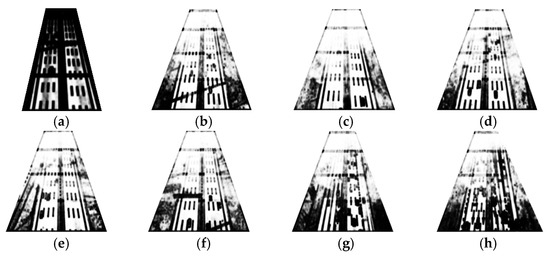

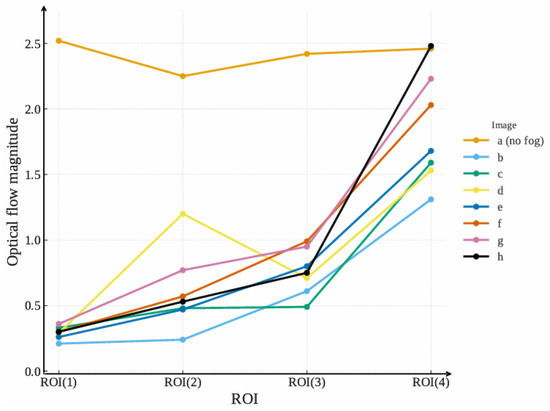

Figure 10 shows the optical flow detected between two consecutive example frames, with the optical flow magnitude represented by brightness.

Figure 10.

Optical flow extraction results: (a) Reference (clear) image. (b–h) Detection results under agglomerate fog. The visualization encodes magnitude only: darker = larger motion, brighter = smaller motion. All panels share the same contrast range for comparability and are aligned to the reference in (a).

The optical flow magnitude for different images and ROIs is presented in Table 4.

Table 4.

Optical flow magnitude distribution for different images and ROIs.

As shown in Figure 10, Figure 11, and Table 4, the optical flow magnitude is smaller in long-distance ROIs and larger in short-distance ROIs. This is because the higher agglomerate fog concentration in long-distance ROIs results in minimal pixel variation between consecutive frames captured by the moving UAV, whereas better visibility at close range leads to significant pixel changes as the UAV perspective shifts.

Figure 11.

Optical flow magnitude variation of different samples and ROIs.

4.3.3. Fusion Method Experimental Results and Analysis

Based on the above analysis, the SURF and optical flow features can each be used independently to judge the agglomerate fog state by setting suitable thresholds. Referring to Equations (28)–(30), the agglomerate fog state is detected on a dataset of 300 training samples and 100 test samples. The precision, recall, and F1-score of the SURF-based method, the optical flow-based method, and the fusion method are presented in Table 5.

Table 5.

Agglomerate fog detection performance of the proposed method.

Table 5 shows that both the SURF-based method and the optical flow-based method achieve high precision in judging the agglomerate fog state. However, the fusion of the two features performs better: precision increases by 7.3% compared with the SURF-based method and by 11.4% compared with the optical flow-based method, recall increases by 13.3% and 9.0%, respectively, and the F1-score increases by 5.1% and 7.9%, respectively.
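The precision, recall, and F1-score reported in Tables 5 and 6 follow the usual definitions. A small plain-Python helper is sketched below; treating "agglomerate fog present" as the positive class is an assumption about how the labels are encoded.

```python
def precision_recall_f1(y_true, y_pred):
    """Binary metrics with 'agglomerate fog present' (1/True) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)       # fog correctly detected
    fp = sum(1 for t, p in pairs if not t and p)   # false alarm
    fn = sum(1 for t, p in pairs if t and not p)   # missed fog
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

With three foggy and two clear test images, for example, detecting two of the fogs plus one false alarm yields precision, recall, and F1-score all equal to 2/3.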

To further evaluate the performance of the proposed method, the XGBoost-based method [13] and the survey-informed fusion method [12] are selected for comparison. All methods are tested under identical experimental settings using the same mixed dataset generated by FogGAN. The precision, recall, and F1-score are presented in Table 6.

Table 6.

Agglomerate fog detection results of different methods.

The experimental results show that the proposed SURF and optical flow fusion method achieves higher precision, recall, and F1-score than the XGBoost-based method and the survey-informed fusion method. Unlike the XGBoost-based method, which relies on a dynamic light source, and the survey-informed fusion method, which requires highly textured agglomerate fog images, the fusion of SURF and optical flow captures both the static and dynamic physical characteristics of agglomerate fog in localized ROIs, and thus performs better under the rapidly changing viewpoints of UAVs. Because optical flow detection requires comparing two consecutive images, its CPU time is longer than that of the other two methods. Nevertheless, the additional millisecond-level CPU time is not expected to substantially affect practical engineering applications of agglomerate fog detection, such as the dissemination of fog status.

5. Conclusions and Future Work

This paper proposes an improved generative adversarial network (FogGAN) to obtain a high-quality and realistic agglomerate fog dataset, in which the generator is enhanced by injecting physical image cues into its feature modulation layers. Using the data samples generated by FogGAN, a fusion method of SURF and optical flow for detecting agglomerate fog in UAV-captured road scenarios is further presented. The method describes static features using SURF and dynamic features using optical flow magnitude, achieving better agglomerate fog detection performance from the flight viewpoint of UAVs.

Future work includes the following: (1) Experiments will be further carried out under different UAV flight scenarios, such as different flight altitudes and camera resolutions, to evaluate the performance of the proposed method. (2) The integration of deep convolutional image features with SURF and optical flow will be further explored to improve the accuracy of agglomerate fog detection.

Author Contributions

Conceptualization, F.G. and H.L.; methodology, H.L.; software, F.G.; validation, F.G., M.J. and X.G.; formal analysis, F.G.; investigation, M.J.; resources, F.G.; data curation, M.Z.; writing—original draft preparation, F.G.; writing—review and editing, F.G.; visualization, F.G.; supervision, H.L.; project administration, H.L.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

The research is partially supported by Jinan City’s Self-Developed Innovative Team Project for Higher Educational Institutions (# 20233040) and Shandong Province Overseas High-Level Talent Workstation Project.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhen, I.L.; Yuan, A.; Chen, I.Z.; Hai, B.Z.; Chang, M.L.; Zong, J.Z. Research on video monitoring and early warning of expressway fog clusters. Sci. Technol. Eng. 2023, 22, 15408–15417.

- Jiang, J.; Yao, Z.; Liu, Y. Nighttime fog and low stratus detection under multi-scene and all lunar phase conditions using S-NPP/VIIRS visible and infrared channels. ISPRS J. Photogramm. Remote Sens. 2024, 218, 102–113.

- Kumar, U.; Hoos, S.; Sakthivel, T.S.; Babu, B.; Drake, C.; Seal, S. Real-time fog sensing employing roadside traffic cameras using lanthanide-doped upconverting NaYF4 nanomaterials as a contrast piece. Sens. Actuators A Phys. 2025, 382, 116131.

- Amani, M.; Mahdavi, S.; Bullock, T.; Beale, S. Automatic nighttime sea fog detection using GOES-16 imagery. Atmos. Res. 2020, 238, 104712.

- Gharatappeh, S.; Neshatfar, S.; Sekeh, S.Y.; Dhiman, V. FogGuard: Guarding YOLO against fog using perceptual loss. arXiv 2024, arXiv:2403.08939.

- Khatun, A.; Haque, M.R.; Basri, R.; Uddin, M.S. Single image dehazing: An analysis on generative adversarial network. Int. J. Comput. Sci. Netw. Secur. 2024, 24, 136–142.

- Raza, N.; Habib, M.A.; Ahmad, M.; Abbas, Q.; Aldajani, M.B.; Latif, M.A. Efficient and cost-effective vehicle detection in foggy weather for edge/fog-enabled traffic surveillance and collision avoidance systems. Comput. Mater. Contin. 2024, 81, 911.

- Meng, X.; Liu, Y.; Fan, L.; Fan, J. YOLOv5s-fog: An improved model based on YOLOv5s for object detection in foggy weather scenarios. Sensors 2023, 23, 5321.

- Wang, H.; Shi, Z.; Zhu, C. Enhanced multi-scale object detection algorithm for foggy traffic scenarios. Comput. Mater. Contin. 2025, 82, 2451.

- Asery, R.; Sunkaria, R.K.; Sharma, L.D.; Kumar, A. Fog detection using GLCM based features and SVM. In Proceedings of the 2016 Conference on Advances in Signal Processing (CASP), Pune, India, 9–11 June 2016; pp. 72–76.

- Bronte, S.; Bergasa, L.M.; Alcantarilla, P.F. Fog detection system based on computer vision techniques. In Proceedings of the 2009 12th International IEEE Conference on Intelligent Transportation Systems, St. Louis, MO, USA, 4–7 October 2009; pp. 1–6.

- Miclea, R.C.; Ungureanu, V.I.; Sandru, F.D.; Silea, I. Visibility enhancement and fog detection: Solutions presented in recent scientific papers with potential for application to mobile systems. Sensors 2021, 21, 3370.

- Huang, S.; Tan, Q.; Fan, Q.; Zhang, Z.; Zhang, Y.; Li, X. Nighttime agglomerate fog event detection considering car light glare based on video. Int. J. Transp. Sci. Technol. 2024, 19, 139–155.

- Ahmed, M.M.; Khan, M.N.; Mohamed, A.; Das, A.; Li, L. Automated Real-Time Weather Detection System Using Artificial Intelligence; Wyoming Department of Transportation: Cheyenne, WY, USA, 2023.

- Li, Z.; Zhang, S.; Fu, Z.; Meng, F.; Zhang, L. Confidence-feature fusion: A novel method for fog density estimation in object detection systems. Electronics 2025, 14, 219.

- ApinayaPrethi, K.N.; Nithya, S. Fog detection and visibility measurement using SVM. In Proceedings of the 2023 2nd International Conference on Advancements in Electrical, Electronics, Communication, Computing and Automation (ICAECA), Coimbatore, India, 16–17 June 2023; pp. 1–5.

- Li, W.; Yang, X.; Yuan, G.; Xu, D. ABCNet: A comprehensive highway visibility prediction model based on attention, Bi-LSTM and CNN. Math. Biosci. Eng. 2024, 21, 4397–4420.

- Zheng, B.; Zhou, J.; Hong, Z.; Tang, J.; Huang, X. Vehicle recognition and driving information detection with UAV video based on improved YOLOv5-DeepSORT algorithm. Sensors 2025, 25, 2788.

- Zhu, Y.; Wang, Y.; An, Y.; Yang, H.; Pan, Y. Real-time vehicle detection and urban traffic behavior analysis based on uav traffic videos on mobile devices. arXiv 2024, arXiv:2402.16246.

- Baya, C. Lidar from the Skies: A UAV-Based Approach for Efficient Object Detection and Tracking. Master's Thesis, Missouri University of Science and Technology, Rolla, MO, USA, 2025.

- Liu, Y.; Qi, W.; Huang, G.; Zhu, F.; Xiao, Y. Classification guided thick fog removal network for drone imaging: Classifycycle. Opt. Express 2023, 31, 39323–39340.

- Fang, W.; Zhang, G.; Zheng, Y.; Chen, Y. Multi-task learning for uav aerial object detection in foggy weather condition. Remote Sens. 2023, 15, 4617.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

- Rec. ITU-R BT.601-7; Studio Encoding Parameters of Digital Television for Standard 4:3 and Wide-Screen 16:9 Aspect Ratios. International Telecommunication Union: Geneva, Switzerland, 2011.

- Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up robust features. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), Vancouver, BC, Canada, 24–28 August 1981.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).