1. Introduction

Cellular automata (CA) are dynamical systems that are discrete in time, space, and state. The Game of Life [1] is a canonical cellular automaton used to investigate how simple rules evolve in discrete space and whether such rules can generate lifelike dynamics. However, it is limited to a single fixed rule set. In contrast, Wolfram cellular automata enable the exploration of emergent complexity across a diverse family of deterministic rules [2], revealing how intricate phenomena arise from elementary local interactions. While Wolfram automata operate under deterministic constraints, the Domany-Kinzel (DK) model generalizes this framework to a stochastic cellular automaton [3], facilitating the study of non-equilibrium dynamics and phase transitions in disordered systems. Cellular automata are widely used in various fields, such as fluid mechanics [4], ecosystem modeling [5], simulation of arbitrary computer algorithms [6], urban planning [7], traffic flow simulation [8], mathematics [9], stock market modeling [10], crystal growth [11], and biological modeling [12].

The intricate patterns generated by CA present formidable challenges for conventional analytical methods, motivating the adoption of advanced computational approaches. Machine learning approaches have garnered significant interest in recent years and have been widely adopted across diverse domains, such as computer vision [13,14], natural language processing [15], recommendation systems [16], finance [17], healthcare [18,19], and large language models (LLMs) [20].

Leveraging its strengths in high-dimensional data processing and nonlinear modeling, machine learning has been extensively applied in physics research. For example, in high-energy physics, particle swarm optimization and genetic algorithms have been used to autonomously optimize the hyperparameters of machine learning classifiers in data analyses [21], and HMPNet integrates HaarPooling with graph neural networks to improve quark–gluon tagging accuracy [22]. In astrophysics, a text-mining-based scientometric analysis has mapped trends in the application of machine learning to astronomy [23], and a machine learning model has been developed to predict cosmological parameters from galaxy cluster properties [24]. In quantum simulation, an ab initio machine learning protocol has been developed for the intelligent certification of quantum simulators [25], a quantum machine learning framework has been proposed for resource-efficient dynamical simulation with provable generalization guarantees [26], and a noise-aware machine learning framework has been developed for robust quantum entanglement distillation and state discrimination over noisy classical channels [27].

Machine learning has also enabled major advances in identifying distinct phases of matter. In their seminal 2016 study, Carrasquilla and Melko demonstrated that supervised machine learning can classify the ferromagnetic and paramagnetic phases of the classical Ising model [28], accurately extracting critical points and spatial correlation exponents. This pioneering work catalyzed the widespread adoption of machine learning for analyzing phase transitions across condensed matter systems. Machine learning has since been applied to more complex phase transitions, such as those in the three-dimensional Ising model [29], percolation transitions [30], topological phase transitions [31,32], and the non-equilibrium phase transitions of the Domany-Kinzel automata [33] and of even-offspring branching annihilating random walks [34].

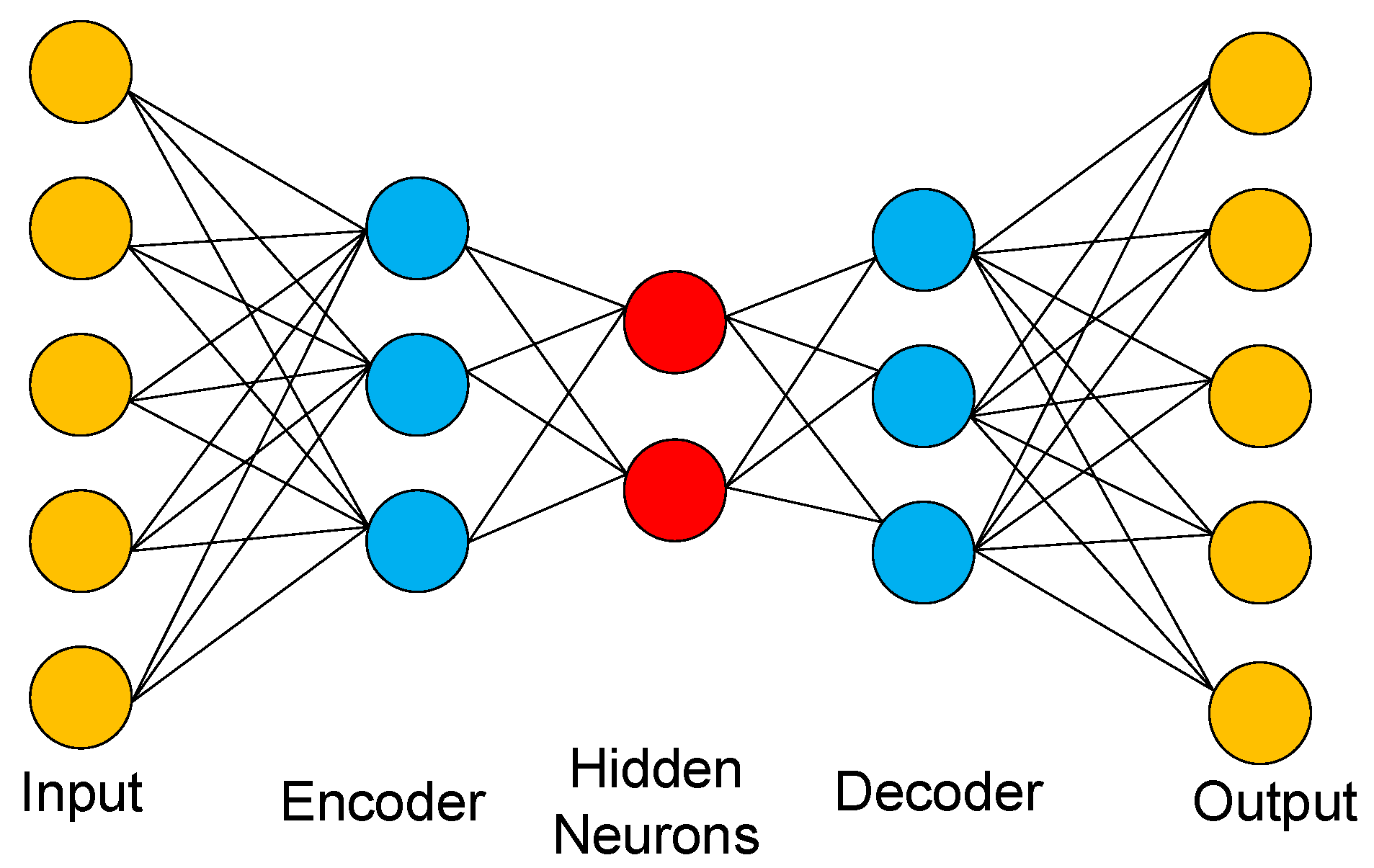

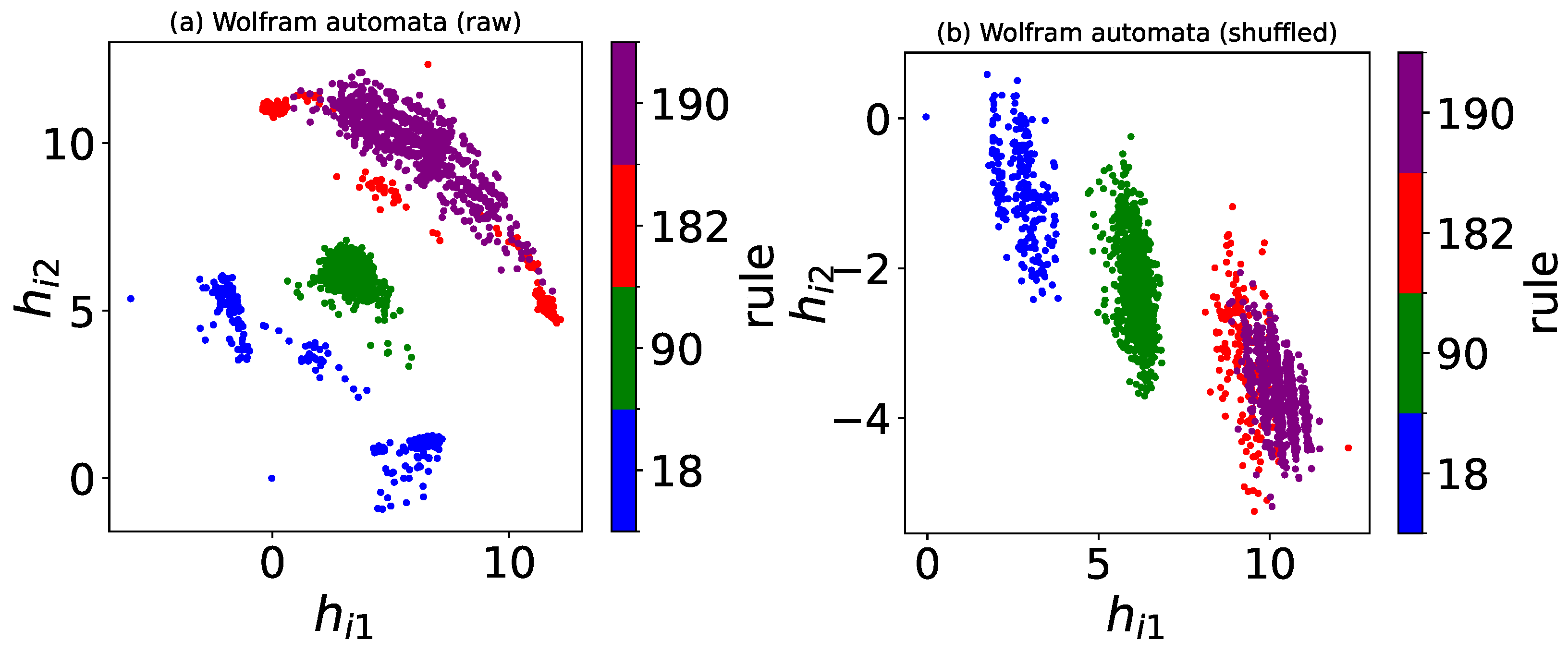

In this work, we study (1+1)-dimensional Wolfram automata using numerical computation and machine learning. Wolfram automata encompass diverse evolution rules, enabling an in-depth analysis of the dynamical evolution mechanisms of cellular systems. Simulations and numerical computations are used to investigate the fractal structures, the transient time needed to reach steady states, and the asymptotic density of Wolfram automata. Manual analysis struggles to distinguish configurations generated by complex Wolfram rules, whereas machine learning can exploit its image-classification capabilities to automate the identification of Wolfram automata. With supervised learning, a trained neural network accurately identifies configurations generated by different Wolfram rules after training on a limited set of automaton samples. In addition, two unsupervised learning methods, principal component analysis (PCA) and autoencoders, are used to classify configurations and estimate the density of Wolfram automata.

The remainder of this paper is organized as follows. In Section 2, we briefly introduce the Wolfram automata. Section 3 presents numerical computations of (1+1)-dimensional Wolfram automata, which are used to investigate their fractal structures and asymptotic density. Section 4 presents the supervised learning of (1+1)-dimensional Wolfram automata, which is used to identify the configurations of distinct Wolfram rules. Section 5 presents the unsupervised learning results for (1+1)-dimensional Wolfram automata obtained with autoencoders and PCA. Section 6 summarizes the main findings of this work.

3. Numerical Computation of Wolfram Automata

We first employ Monte Carlo simulation methods to study the Wolfram automata, leveraging randomly generated initial configurations to analyze fractal structures and self-organization phenomena. This approach specifically investigates the relationship between the asymptotic density of evolved states and the disordered initial state of the automaton. Subsequently, we utilize numerical computation to explore the evolution mechanisms of cellular automata configurations under predefined Wolfram rules.

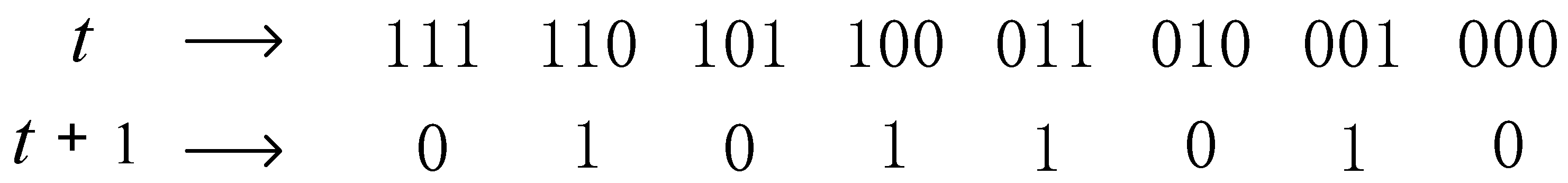

The local rules governing Wolfram automata can be formalized as Boolean functions acting on a central site and its nearest neighbors.

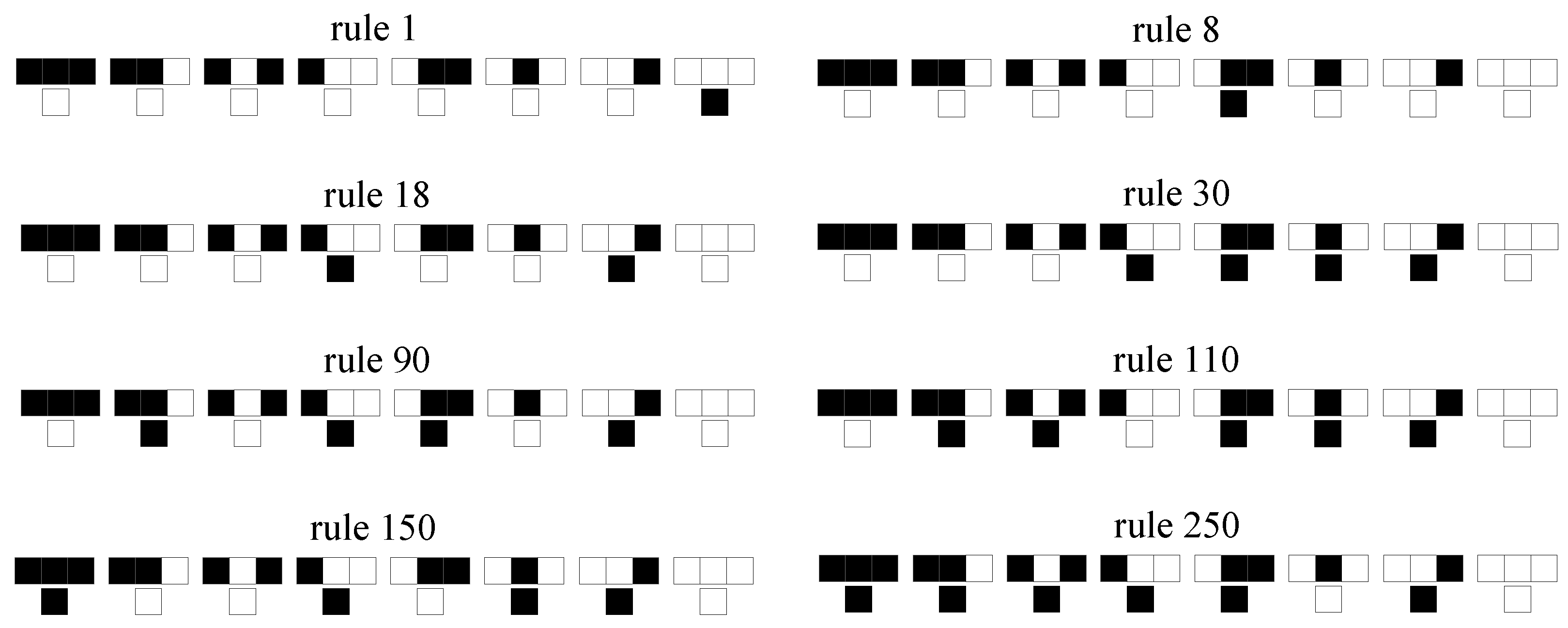

Figure 2 shows a black-and-white representation of the Wolfram rules, where black represents sites with value 1 and white represents sites with value 0. Each rule is defined by a unique Boolean expression, as listed in Table 1. For example, rule 90 is equivalent to $s_i^{t+1} = s_{i-1}^t \oplus s_{i+1}^t$, rule 18 to $s_i^{t+1} = (s_{i-1}^t \oplus s_{i+1}^t) \wedge \neg s_i^t$, rule 30 to $s_i^{t+1} = s_{i-1}^t \oplus (s_i^t \vee s_{i+1}^t)$, and rule 110 to $s_i^{t+1} = (s_i^t \wedge \neg s_{i-1}^t) \vee (s_i^t \oplus s_{i+1}^t)$. Here, $s_i^t$ denotes the state of site $i$ at time step $t$, while $s_{i-1}^t$ and $s_{i+1}^t$ represent its left and right neighbors, respectively. The Boolean operators are AND (∧), OR (∨), NOT (¬), and XOR (⊕). Compared with enumerating the eight neighborhood conditions of each rule case by case, these simplified Boolean expressions improve computational efficiency in simulations by reducing logical redundancy and enabling optimized code implementation.
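A minimal Python sketch (our own illustration, not the authors' simulation code) shows how a rule number encodes the eight neighborhood conditions. Following Wolfram's numbering, the neighborhood $(s_{i-1}^t, s_i^t, s_{i+1}^t)$ read as a 3-bit binary number $k$ selects bit $k$ of the rule number. The names `rule_output`, `BOOLEAN_FORMS`, and `matches_lookup` are hypothetical. The sketch checks Boolean forms of rules 90, 18, 30, and 110, as we write them in the code, against that lookup table on all eight neighborhoods.

```python
from itertools import product

def rule_output(rule: int, left: int, center: int, right: int) -> int:
    """Wolfram numbering: neighborhood (left, center, right) read as a
    3-bit number k selects bit k of the rule number."""
    k = (left << 2) | (center << 1) | right
    return (rule >> k) & 1

# Boolean forms on 0/1 integers: ^ is XOR, & is AND, | is OR, (1 - x) is NOT x.
BOOLEAN_FORMS = {
    90: lambda l, c, r: l ^ r,
    18: lambda l, c, r: (l ^ r) & (1 - c),
    30: lambda l, c, r: l ^ (c | r),
    110: lambda l, c, r: (c & (1 - l)) | (c ^ r),
}

def matches_lookup(rule: int) -> bool:
    """True if the Boolean form agrees with the rule table on all 8 neighborhoods."""
    form = BOOLEAN_FORMS[rule]
    return all(form(l, c, r) == rule_output(rule, l, c, r)
               for l, c, r in product((0, 1), repeat=3))

for rule in BOOLEAN_FORMS:
    print(rule, matches_lookup(rule))
```

Evaluating a Boolean form directly avoids the table lookup. In vectorized code the same expressions can be applied to whole arrays of sites at once.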

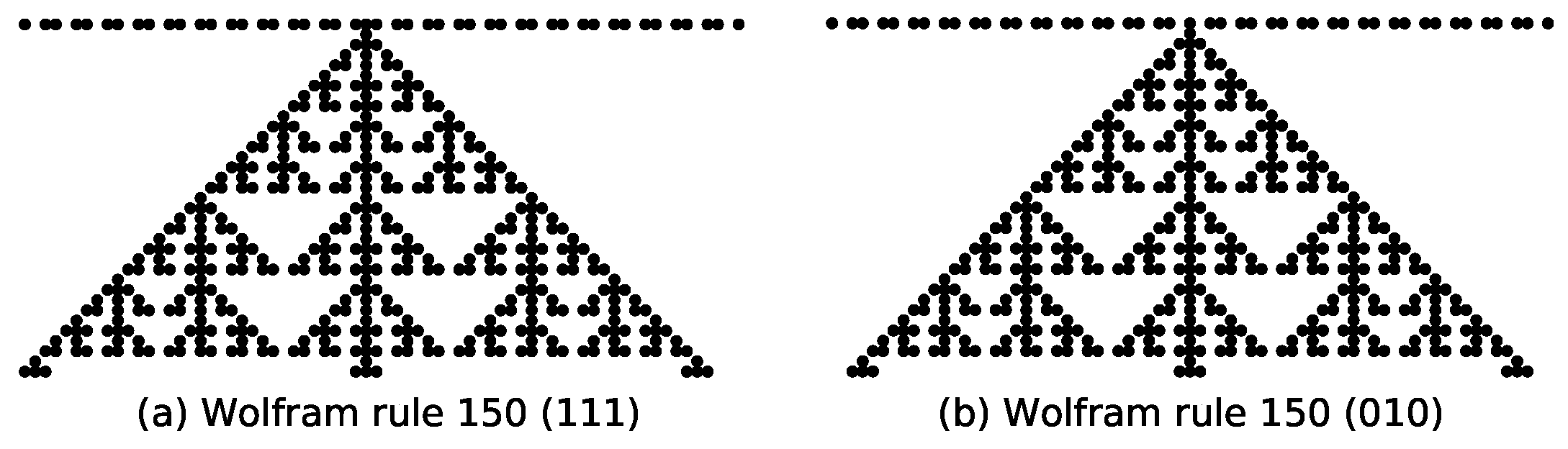

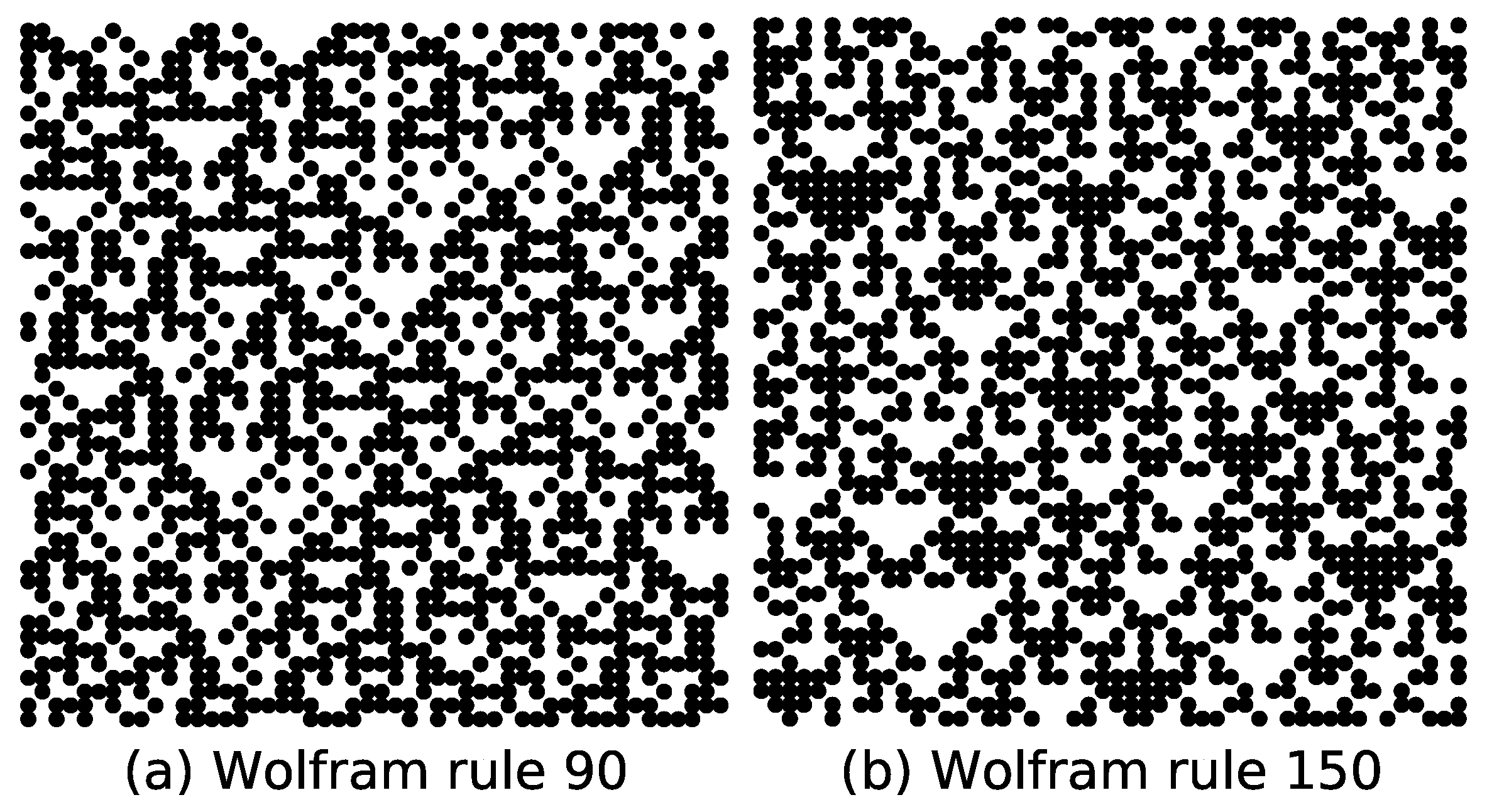

As shown in Figure 3, the cluster diagrams of the Wolfram automata evolve from an initial state with a single active site. Starting from such simple initial configurations, Wolfram automata either evolve to homogeneous states or generate self-similar fractal patterns. For example, from a single active site, rules 18 and 90 both produce the Sierpiński triangle pattern with a fractal dimension of $\ln 3/\ln 2 \approx 1.585$. Similarly, rule 150 exhibits self-similarity with a fractal dimension of 1.69 [35].
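The fractal dimension of the single-seed pattern can be estimated by box counting. The following NumPy sketch is our own illustration and is not necessarily the procedure used in the cited references. The helper names `evolve` and `box_count` and the depth of $2^7$ time steps are our choices. The sketch evolves rule 90 from one active site, counts the $2^j \times 2^j$ boxes that contain an active site, and fits the slope of $\log N$ against $\log(1/\text{box size})$.

```python
import numpy as np

def evolve(rule, initial, steps):
    """Space-time diagram (steps + 1 rows) of an elementary CA on a periodic ring."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    rows = [np.asarray(initial, dtype=np.uint8)]
    for _ in range(steps):
        s = rows[-1]
        # neighborhood index 4*s[i-1] + 2*s[i] + s[i+1]
        rows.append(table[4 * np.roll(s, 1) + 2 * s + np.roll(s, -1)])
    return np.array(rows)

def box_count(diagram, size):
    """Number of size x size boxes (aligned at the origin) containing an active site."""
    T, L = diagram.shape
    d = diagram[: T - T % size, : L - L % size]
    blocks = d.reshape(d.shape[0] // size, size, d.shape[1] // size, size)
    return int(blocks.any(axis=(1, 3)).sum())

n = 7
T, L = 2 ** n, 2 ** (n + 1)              # wide enough that the pattern never wraps
seed = np.zeros(L, dtype=np.uint8)
seed[L // 2] = 1                         # single active site, aligned with the box grid
diagram = evolve(90, seed, T - 1)        # rows t = 0, ..., T - 1
sizes = 2 ** np.arange(1, n)             # box sizes 2, 4, ..., 2**(n - 1)
counts = [box_count(diagram, int(s)) for s in sizes]
slope = np.polyfit(np.log(1.0 / sizes), np.log(counts), 1)[0]
print("box counts:", counts)
print(f"estimated dimension {slope:.3f} vs ln3/ln2 = {np.log(3) / np.log(2):.3f}")
```

For this aligned grid the counts are exactly $N(2^j) = 2 \cdot 3^{7-j}$, so the fitted slope equals $\ln 3/\ln 2$. For less regular patterns such as that of rule 150, a fit of this kind only approximates the dimension.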

Numerical analysis reveals that the emergent Sierpiński triangle structure in rules 18 and 90 depends solely on two rule conditions: the neighborhood transitions 100→1 and 001→1. Rule 90 additionally satisfies conditions such as 110→1 and 011→1, but these do not influence the Sierpiński pattern from this initial state. The property therefore extends to the other rules that share these core conditions (100→1 and 001→1) and, like rules 18 and 90, map the remaining neighborhoods occurring on this orbit (000, 010, and 101) to 0. This yields eight distinct Wolfram rules (rules 18 and 90 together with rules 26, 82, 146, 154, 210, and 218) that generate Sierpiński triangles whenever the configuration contains only one active site at some time step.
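The count of eight rules can be checked by enumeration. From a single active site, rule 90 only ever produces the neighborhoods 000, 001, 010, 100, and 101, because active sites are never adjacent. Any rule that agrees with rule 90 on these five neighborhoods therefore yields the same space-time pattern, while its outputs for 011, 110, and 111 are free. The NumPy sketch below (our own illustration; `evolve` and `single_seed_family` are hypothetical names) enumerates the $2^3 = 8$ choices and compares each rule's diagram with that of rule 90.

```python
import numpy as np

def evolve(rule, initial, steps):
    """Space-time diagram (steps + 1 rows) of an elementary CA on a periodic ring."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    rows = [np.asarray(initial, dtype=np.uint8)]
    for _ in range(steps):
        s = rows[-1]
        rows.append(table[4 * np.roll(s, 1) + 2 * s + np.roll(s, -1)])
    return np.array(rows)

def single_seed_family():
    """Rules with 100 -> 1, 001 -> 1, 000/010/101 -> 0, and any outputs for 011, 110, 111."""
    base = (1 << 0b100) | (1 << 0b001)       # bits 4 and 1: this alone is rule 18
    free = (0b011, 0b110, 0b111)             # neighborhoods never seen from a single seed
    rules = []
    for mask in range(2 ** len(free)):
        rule = base
        for j, k in enumerate(free):
            if (mask >> j) & 1:
                rule |= 1 << k
        rules.append(rule)
    return sorted(rules)

L, steps = 201, 80                           # 80 < L // 2, so the pattern never wraps
seed = np.zeros(L, dtype=np.uint8)
seed[L // 2] = 1
reference = evolve(90, seed, steps)
family = single_seed_family()
print(family)
print(all(np.array_equal(evolve(r, seed, steps), reference) for r in family))
```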

In Ref. [36], the symmetry is explained by the additive nature of cellular automaton rules. Rules 150 and 105 exhibit a distinctive symmetry because they are additive: flipping any one cell of the three-cell neighborhood flips the result, which leads to self-similar fractal structures. Similarly, rule 90 and its equivalence class {18, 26, 82, 146, 154, 210, 218} generate the Sierpiński triangle from a single active site, since on this orbit they act exactly like the additive rule 90, forming a symmetric octet. These rules are therefore equivalent under this transformation, reflecting an underlying symmetry.

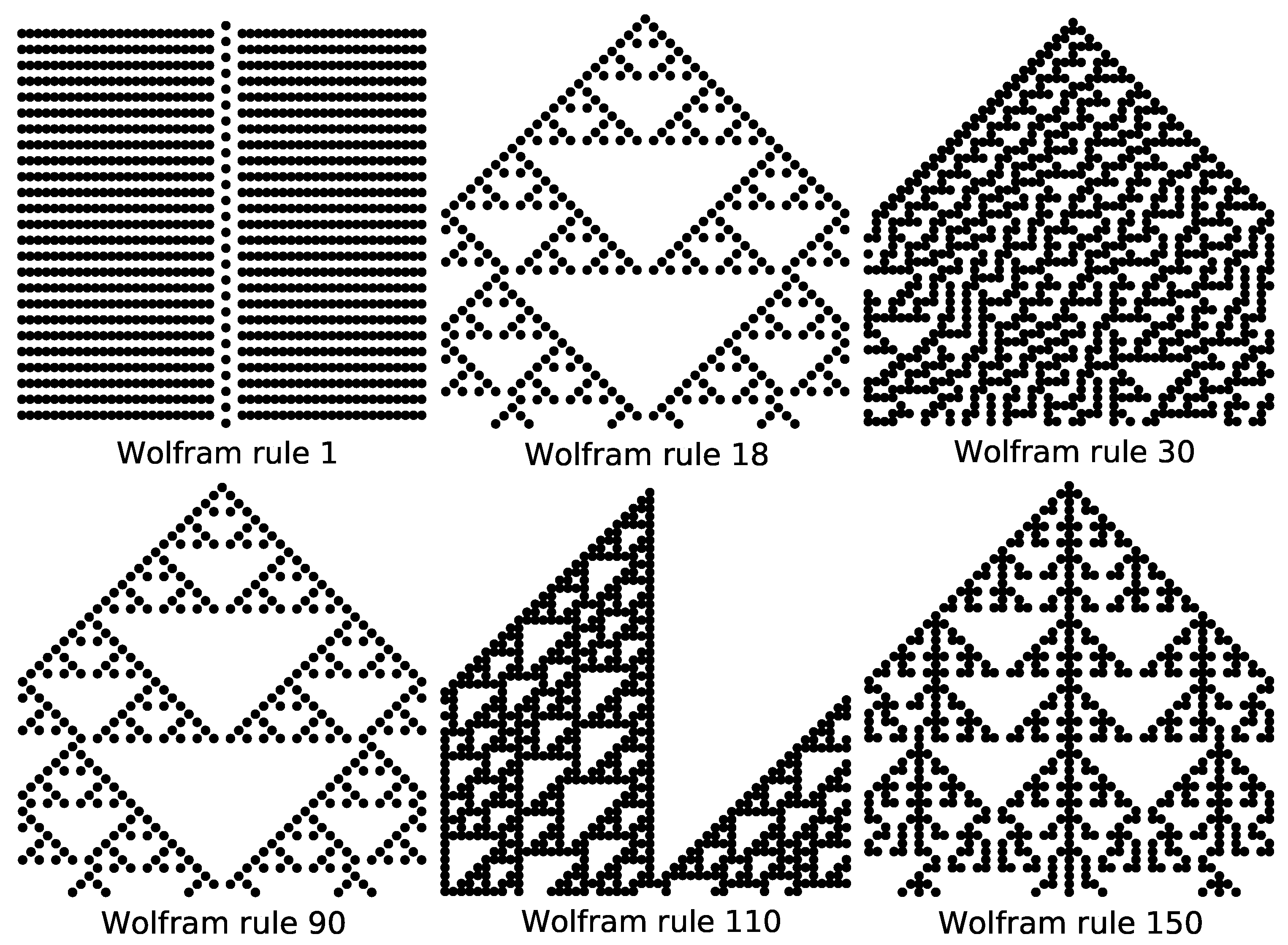

Further investigations under modified initial conditions highlight nuanced behavioral differences. For rule 90 evolving from an initial state in which the central site is 0 and all others are 1, the state transition at $t = 1$ depends exclusively on 110→1 and 011→1. This activates two central sites at this time step and ultimately forms a Sierpiński triangle with vertices removed (Figure 4a). Under identical initial conditions, the transition of rule 26 at $t = 1$ depends solely on 011→1, while that of rule 82 depends solely on 110→1. Both rules yield only one active site at this time step, and yet subsequently evolve into full Sierpiński triangles (Figure 4b,c). For specialized initial states (e.g., alternating sequences with isolated active sites), rules 146, 154, and 210 each activate only one site at $t = 1$ because of distinct rule dependencies (111→1 for rule 146, 011→1 for rule 154, and 110→1 for rule 210), yet all subsequently generate complete Sierpiński triangles (Figure 4d–f).
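The single-step behavior of rules 90, 26, and 82 can be reproduced in a few lines. The sketch below is our own illustration: `step` and `evolve_from` are hypothetical helpers, and the ring of 101 sites is an arbitrary choice. It starts from a periodic ring whose central site is 0 and all other sites are 1, and prints the offsets of the active sites at $t = 1$ relative to the center. It then checks that, after this first step, rules 26 and 82 evolve exactly as rule 90 does from the same single active site.

```python
import numpy as np

def step(rule, s):
    """One synchronous update with periodic boundaries (Wolfram numbering)."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    return table[4 * np.roll(s, 1) + 2 * s + np.roll(s, -1)]

def evolve_from(rule, s, steps):
    """Stack the configuration s and its next `steps` updates."""
    rows = [s]
    for _ in range(steps):
        rows.append(step(rule, rows[-1]))
    return np.array(rows)

L = 101
state = np.ones(L, dtype=np.uint8)
state[L // 2] = 0                      # central site 0, all other sites 1

for rule in (90, 26, 82):
    active = np.flatnonzero(step(rule, state)) - L // 2
    print(f"rule {rule}: active offsets at t = 1 -> {active.tolist()}")

for rule in (26, 82):
    s1 = step(rule, state)             # a single active site at t = 1
    same = np.array_equal(evolve_from(rule, s1, 40), evolve_from(90, s1, 40))
    print(f"rule {rule} matches rule 90 after t = 1: {same}")
```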

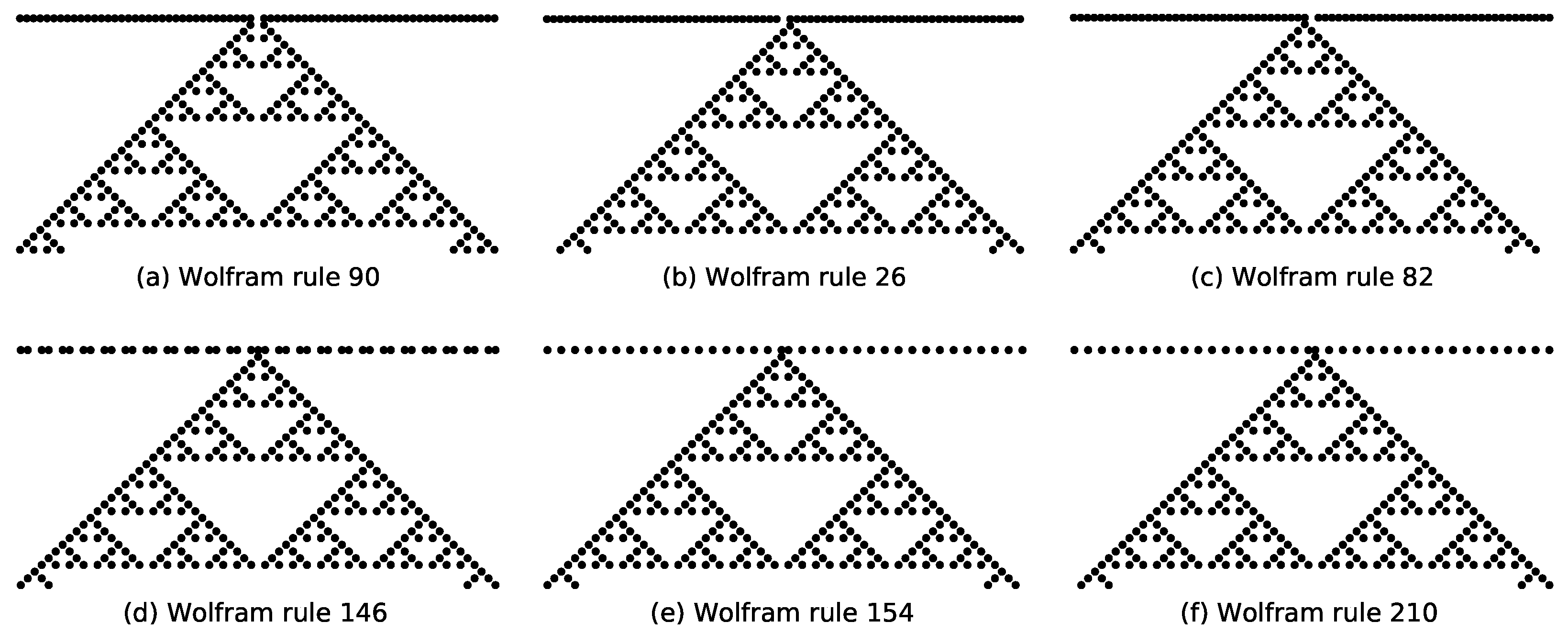

From a single active site, rule 150 generates a self-similar pattern with a fractal dimension of 1.69 [2]. Further numerical analysis reveals that whenever the configuration at some time step $t$ contains exactly one active site, the subsequent evolution of rule 150 produces self-similar patterns with the same fractal dimension of 1.69. This property holds across diverse initial states. For the initial state “…1, 1, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 0, 1, 1…”, the state transition at $t = 1$ depends solely on the rule condition 111→1, resulting in a single active site; for the initial state “…1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 1…”, the transition at $t = 1$ relies exclusively on 010→1, again yielding one active site. Despite these distinct initial configurations and localized rule dependencies, the long-term evolution of rule 150 converges to identical self-similar structures with fractal dimension 1.69, as shown in Figure 5.
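The single-site reduction for rule 150 can also be checked directly. The quoted initial states are only given as finite fragments, so the sketch below assumes that the ellipses continue the motif 1, 1, 0 periodically on a ring; this extension and the helper names are our own assumptions. The check confirms that each state leaves exactly one active site at $t = 1$. As a further check of self-similarity, we use the identity $(1 + x + x^2)^{2^k} = 1 + x^{2^k} + x^{2^{k+1}}$ over GF(2). It implies that a single active site under rule 150, the XOR of the three neighborhood cells, becomes exactly three active sites after $2^k$ further steps.

```python
import numpy as np

def step(rule, s):
    """One synchronous update on a periodic ring (Wolfram numbering)."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    return table[4 * np.roll(s, 1) + 2 * s + np.roll(s, -1)]

def ring_with_defect(defect, repeats=40):
    """Assumed periodic extension: motif 1,1,0 repeated, with `defect` inserted in the middle."""
    motif = [1, 1, 0]
    return np.array(motif * repeats + defect + motif * repeats, dtype=np.uint8)

states = {
    "a": ring_with_defect([1]),      # ..., 1, 1, 0, 1, 1, 1, 0, 1, 1, 0, ...
    "b": ring_with_defect([1, 0]),   # ..., 1, 1, 0, 1, 0, 1, 1, 0, ...
}
active_t1, count_t33 = {}, {}
for name, state in states.items():
    s = step(150, state)
    active_t1[name] = np.flatnonzero(s).tolist()
    for _ in range(32):              # 2**5 further steps from the single active site
        s = step(150, s)
    count_t33[name] = int(s.sum())
print(active_t1, count_t33)
```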

Grassberger and Wolfram conducted theoretical analyses focused primarily on complex Wolfram rules [2,37], such as rules 90 and 182, which exhibit a well-defined asymptotic density $\rho_\infty$. This asymptotic density is typically independent of the initial density. However, under specific initial conditions, for example a configuration with a single active site, rule 90 generates Sierpiński triangle patterns, so that its asymptotic density displays periodic oscillations rather than converging to a constant value. This divergence from the established $\rho_\infty$ motivates a further investigation into whether the asymptotic behavior of complex Wolfram rules at very low initial densities agrees with their behavior under disordered initial states. Notably, the steady-state density of many Wolfram rules is known to depend on the initial conditions, which calls for a systematic exploration of the relationship between the initial density and long-term statistical behavior. In this work, we use numerical simulation and analysis to study the temporal evolution of configurations in these automata and to characterize their dynamic properties.

Monte Carlo simulations of (1+1)-dimensional Wolfram automata are performed with the prescribed Wolfram rules under periodic boundary conditions. When the simulation starts from a disordered initial state in which each site is independently active (value 1) with probability $p$, the initial density $\rho_0$ equals $p$ on average.
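A minimal NumPy sketch of this Monte Carlo setup follows, assuming an elementary rule applied synchronously on a ring of $L$ sites. Each site of the initial state is set to 1 independently with probability $p$, and the density is recorded at every step. The function name `density_trajectory` and the random seed are our own choices, not taken from the paper.

```python
import numpy as np

def density_trajectory(rule, L, p, steps, rng):
    """Density rho(t), t = 0..steps, from a disordered initial state on a periodic ring."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    state = (rng.random(L) < p).astype(np.uint8)   # each site is 1 with probability p
    rho = np.empty(steps + 1)
    rho[0] = state.mean()                          # fluctuates around p for finite L
    for t in range(1, steps + 1):
        # neighborhood index 4*s[i-1] + 2*s[i] + s[i+1]; np.roll closes the ring
        state = table[4 * np.roll(state, 1) + 2 * state + np.roll(state, -1)]
        rho[t] = state.mean()
    return rho

rng = np.random.default_rng(seed=0)
rho = density_trajectory(rule=90, L=2000, p=0.5, steps=600, rng=rng)
print(f"rho(0) = {rho[0]:.3f}, mean over last 100 steps = {rho[-100:].mean():.3f}")
```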

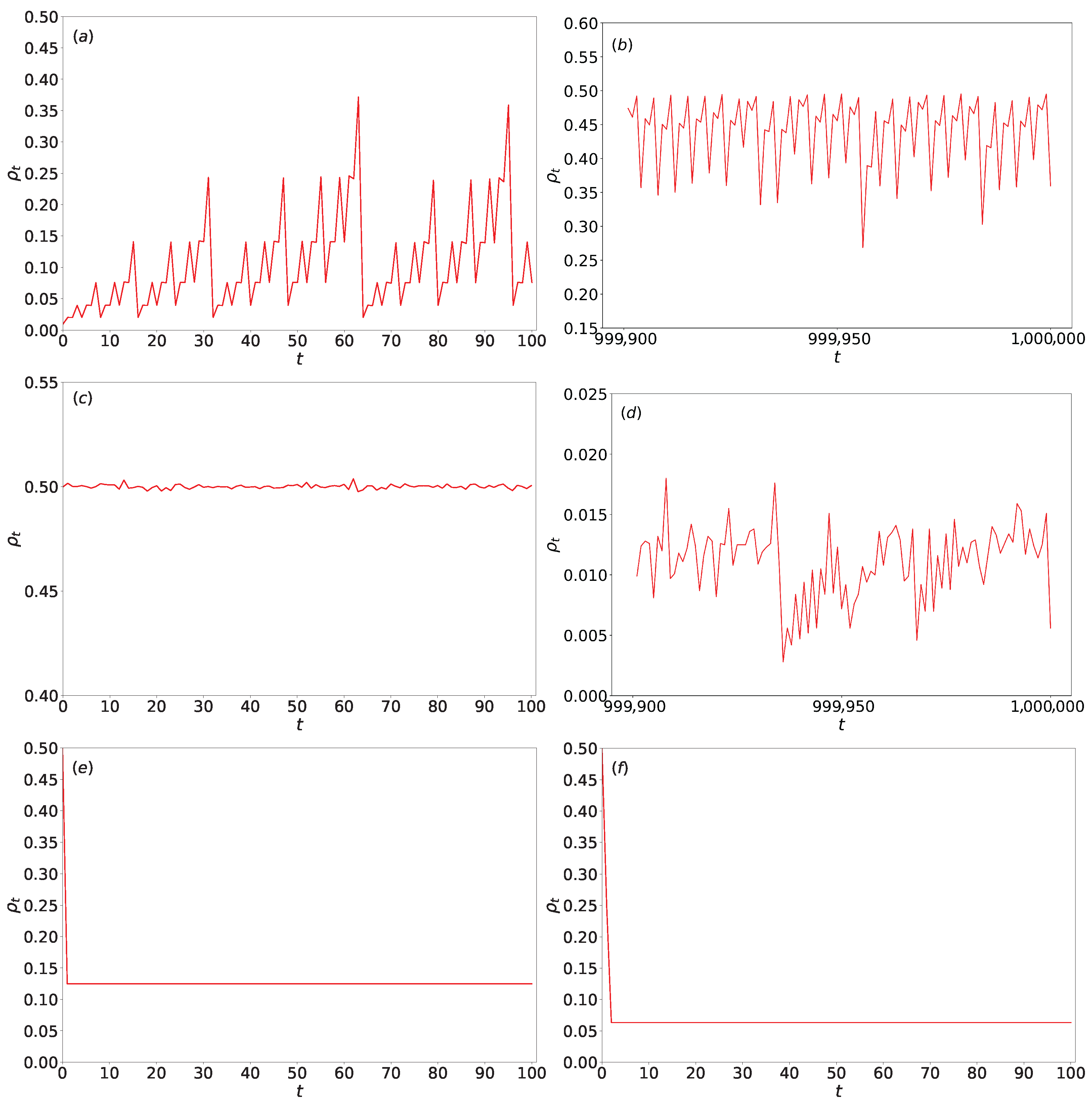

Figure 6 illustrates the temporal evolution of the density $\rho(t)$ for various Wolfram rules under different initial densities. We present rules 90, 18, 2, and 36, which exemplify distinct behavioral types within Wolfram automata. Rules 90 and 18 are complex rules with chaotic and fractal characteristics, whereas rules 2 and 36 are simple rules that act as discriminative filters for selected initial configurations and rapidly converge to stable states. This selection contrasts the density evolution of rules with different levels of complexity, thereby emphasizing our research focus.

As shown in Figure 6a,b, for rule 90 with a very low initial density ($\rho_0 = 0.01$, $L = 2000$), the density $\rho(t)$ fluctuates strongly over the first 100 time steps. During the last 100 steps of a much longer simulation, $\rho(t)$ still fluctuates strongly, with distinct periodic characteristics. Because of these large fluctuations, temporal moving averages are used here. With ensemble averaging over 100 independent initial-condition seeds, the averaged density is approximately 0.46, which is lower than the theoretical asymptotic density $\rho_\infty = 0.5$. In contrast, for rule 90 with a moderate initial density ($\rho_0 = 0.5$, $L = 2000$, $t = 100$), Figure 6c shows no significant fluctuations beyond early times: $\rho(t)$ converges rapidly to the asymptotic value of 0.5 within 600 time steps and remains stable.
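The smoothing and averaging steps can be sketched as follows. The moving-average window of 50 steps, the run length, and the reduced number of seeds are our assumptions for a quick run; the text uses 100 seeds and a much longer simulation. The helper names are hypothetical. Each trajectory is smoothed with a moving average, and the smoothed curves are then averaged over independent initial conditions.

```python
import numpy as np

def density_trajectory(rule, L, p, steps, rng):
    """Density at every time step, starting from a disordered state on a periodic ring."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    s = (rng.random(L) < p).astype(np.uint8)
    rho = [s.mean()]
    for _ in range(steps):
        s = table[4 * np.roll(s, 1) + 2 * s + np.roll(s, -1)]
        rho.append(s.mean())
    return np.array(rho)

def moving_average(x, window):
    """Average over `window` consecutive time steps (only fully covered positions are kept)."""
    return np.convolve(x, np.ones(window) / window, mode="valid")

def ensemble_average(rule, L, p, steps, window, n_seeds):
    """Smooth each trajectory, then average the smoothed curves over independent seeds."""
    curves = [moving_average(density_trajectory(rule, L, p, steps, np.random.default_rng(seed)), window)
              for seed in range(n_seeds)]
    return np.mean(curves, axis=0)

curve = ensemble_average(rule=90, L=2000, p=0.01, steps=1000, window=50, n_seeds=10)
print(f"ensemble-averaged, smoothed density at the final step: {curve[-1]:.3f}")
```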

For rule 18 with a very high initial density ($\rho_0 = 0.99$, $L = 2000$), Figure 6d shows that during the last 100 steps of a long simulation, $\rho(t)$ exhibits minor fluctuations and remains small.

In the cases of rules 2 and 36 with initial density $\rho_0 = 0.5$ (Figure 6e,f), both rules stabilize quickly: rule 2 stabilizes almost immediately, while rule 36 converges within a few time steps.

A key observation is that for complex rules such as rule 90, $\rho(t)$ stabilizes within a few hundred steps at typical initial densities (e.g., $\rho_0 = 0.5$). However, at extreme (very low or very high) initial densities, $\rho(t)$ remains lower than the theoretical asymptotic density $\rho_\infty$ even for $L = 2000$ and prolonged evolution. This discrepancy may stem from finite-size effects or from an insufficient number of time steps, although a large $t$ is used in the simulations. As the system size increases, $\rho(t)$ approaches $\rho_\infty$ more closely, as discussed in more detail below. In contrast, simple rules (such as rules 2 and 36) converge rapidly to stable densities regardless of the initial conditions.

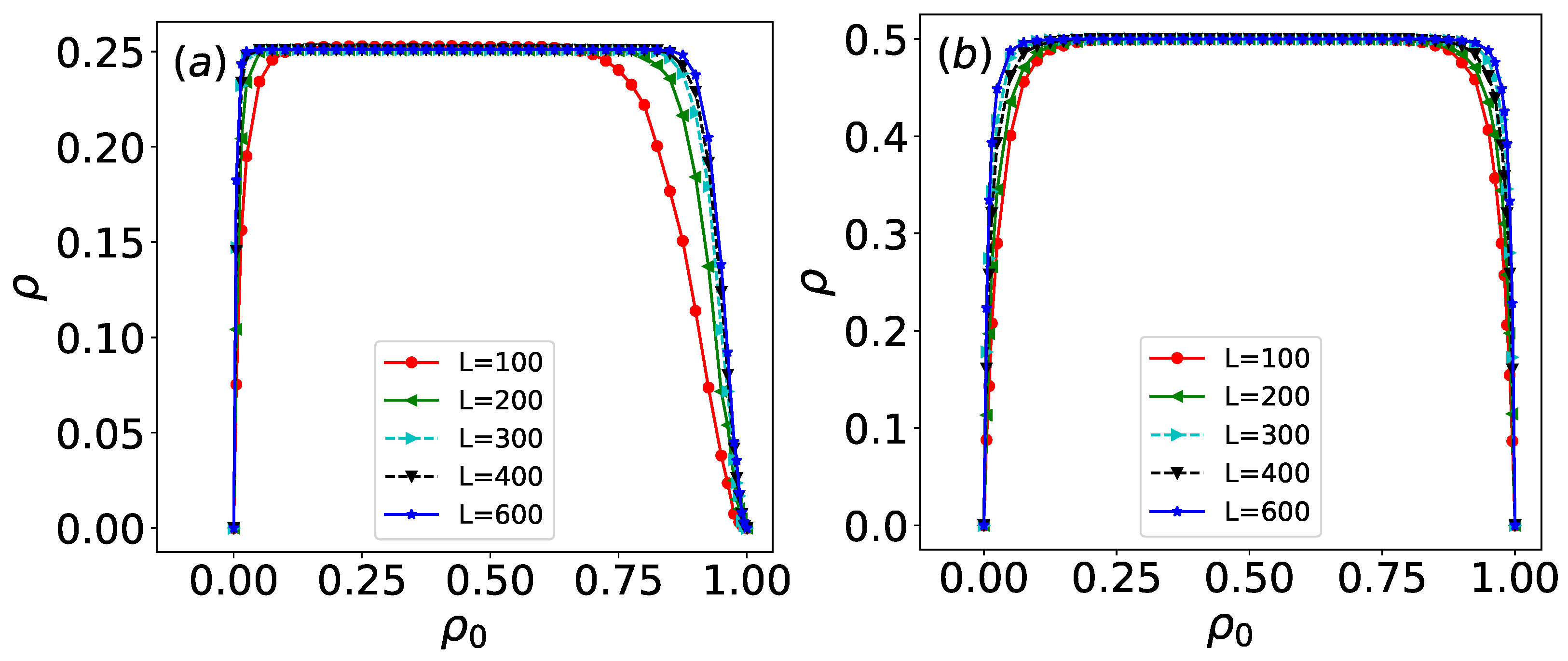

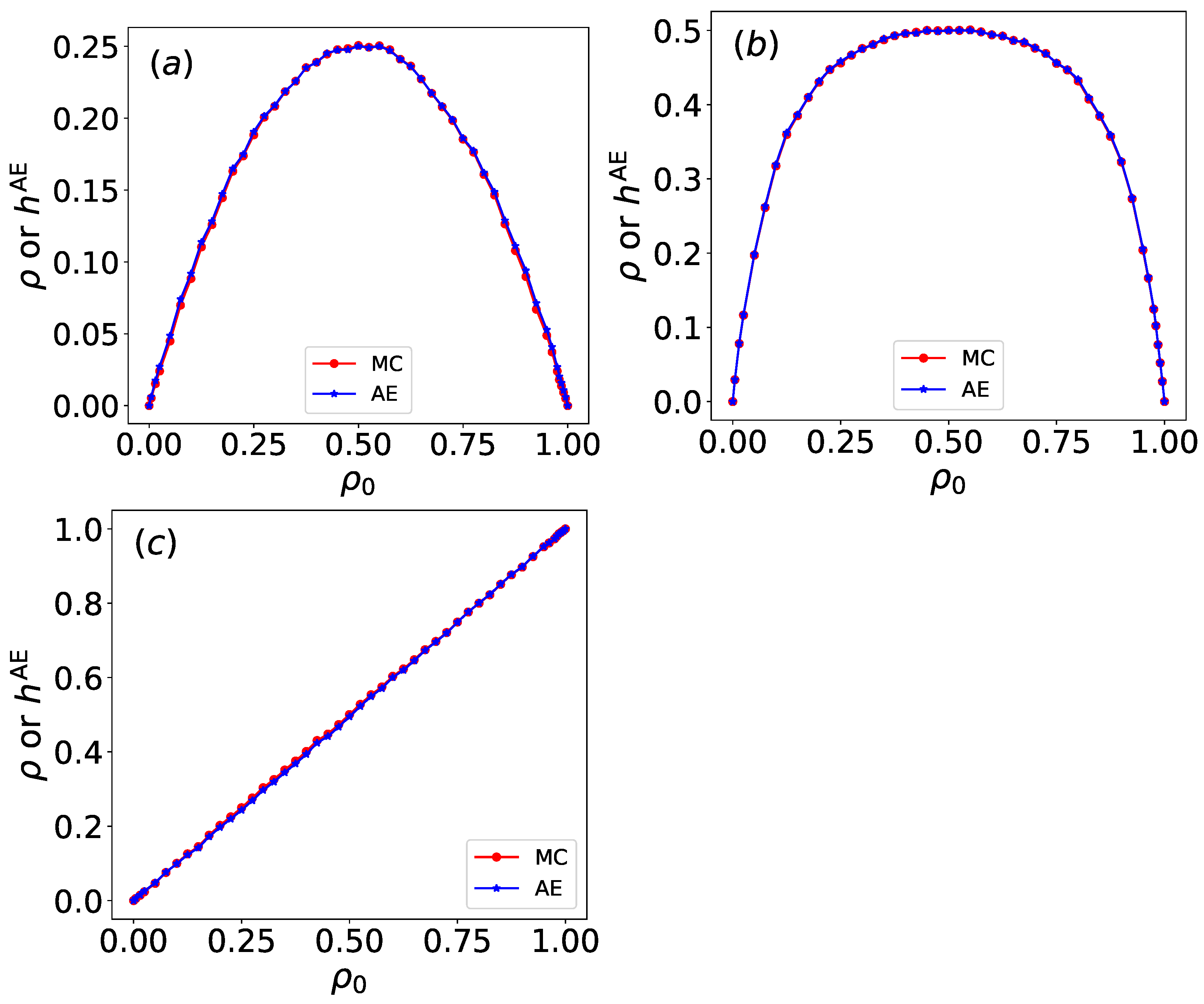

For rules 18 and 90, simulations are run on arrays of sizes $L = 100$, 200, 300, 400, and 600 for $t = 6000$ time steps, and the configurations from the last 200 time steps are used. For each initial density $\rho_0$, 1000 configurations are generated to calculate the density. Figure 7 presents the resulting long-time density of both rules under disordered initial states. For most initial densities $\rho_0$, rules 18 and 90 exhibit well-defined asymptotic densities: $\rho_\infty = 1/4$ for rule 18 and $\rho_\infty = 1/2$ for rule 90. However, at extreme initial densities ($\rho_0$ close to 0 or 1), the evolved density deviates significantly from these asymptotic values.
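The measurement behind Figure 7 can be sketched as follows. The sketch evolves the automaton from a disordered state, averages the density over the last `t_window` steps, and then averages again over independent configurations. The parameters in the example ($L = 200$, 1000 steps, 5 configurations) are scaled down from those in the text for a quick run. The function name is hypothetical.

```python
import numpy as np

def long_time_density(rule, L, p, t_total, t_window, n_configs, rng):
    """Density averaged over the last t_window of t_total steps and over n_configs initial states."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    per_config = []
    for _ in range(n_configs):
        s = (rng.random(L) < p).astype(np.uint8)
        acc = 0.0
        for t in range(1, t_total + 1):
            s = table[4 * np.roll(s, 1) + 2 * s + np.roll(s, -1)]
            if t > t_total - t_window:            # only the final window contributes
                acc += s.mean()
        per_config.append(acc / t_window)
    return float(np.mean(per_config))

rng = np.random.default_rng(1)
results = {}
for rule in (18, 90):
    for p in (0.01, 0.5, 0.99):
        results[(rule, p)] = long_time_density(rule, L=200, p=p, t_total=1000,
                                               t_window=200, n_configs=5, rng=rng)
        print(f"rule {rule:2d}, rho0 = {p:4.2f}: rho = {results[(rule, p)]:.3f}")
```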

At extremely low initial densities (characterized by very few active sites), the evolution of both rules depends primarily on the transition conditions 100→1 and 001→1. In this regime, sites evolve nearly independently. When only a single site is active in the initial state, both rules generate Sierpiński triangle patterns, resulting in densities much lower than their asymptotic values. Under such sparse initial conditions, the evolution produces numerous isolated triangular structures with large empty regions, leading to low overall density. As the initial density increases, the evolved density converges toward the asymptotic value. Conversely, at extremely high initial densities, the first time step is dominated by the condition 111→0, causing a sharp drop in density. This results in configurations similar to those observed under very low initial densities. As the initial density decreases from this extreme, the evolved density again approaches the asymptotic value. For a fixed initial density, larger system sizes (increasing L) yield densities closer to the asymptotic limit.

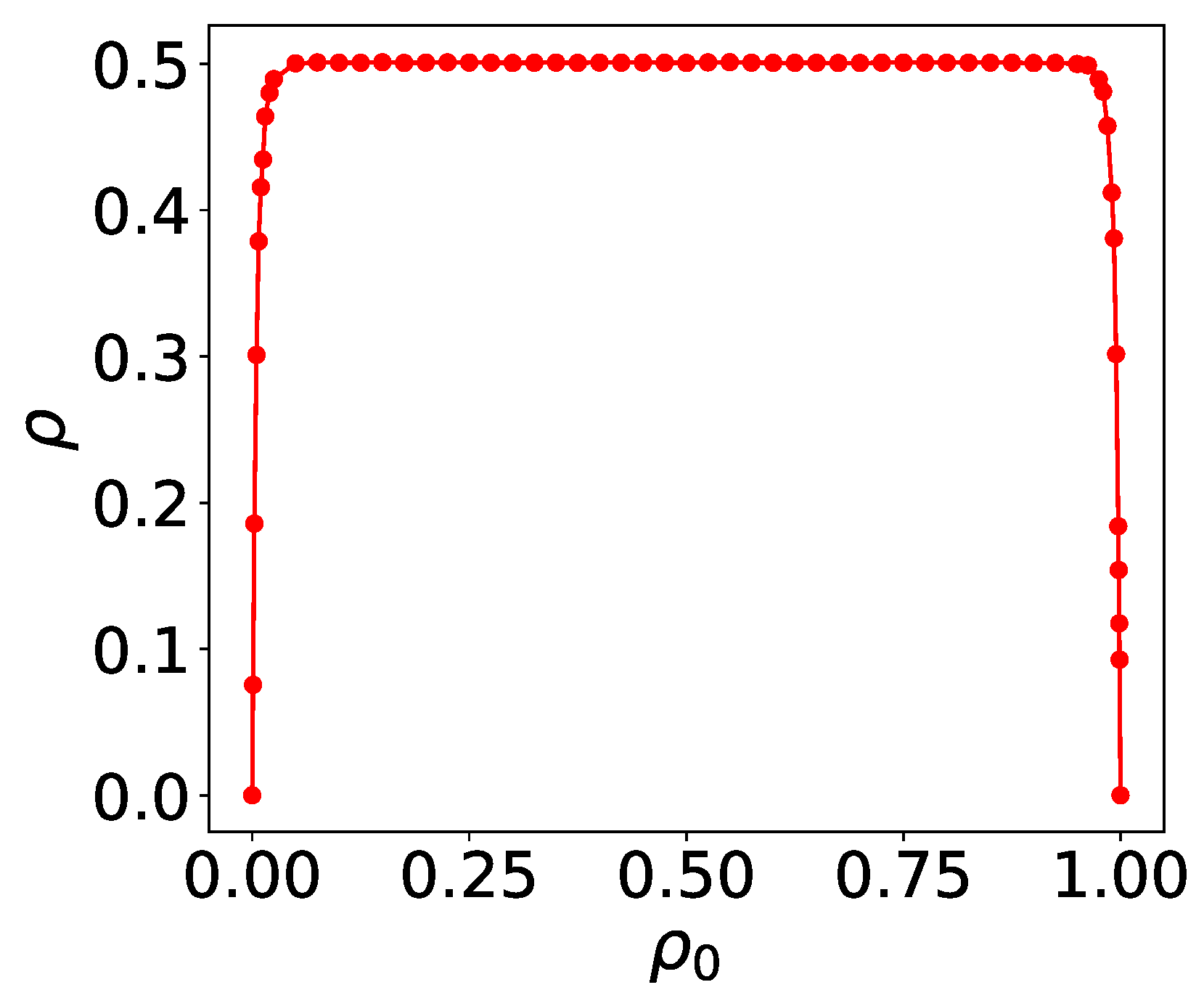

Rule 126, another complex rule, is simulated on arrays of size $L = 200$ for $t = 6000$ time steps, and the configurations from the last 200 time steps are used. For each initial density $\rho_0$, 1000 configurations are generated to calculate the density. Figure 8 presents the long-time density for disordered initial states. Unlike rules 18 and 90, rule 126 exhibits a consistent asymptotic density $\rho_\infty$ across a broader range of initial densities. This result is robust to variations in the initial density and system size.

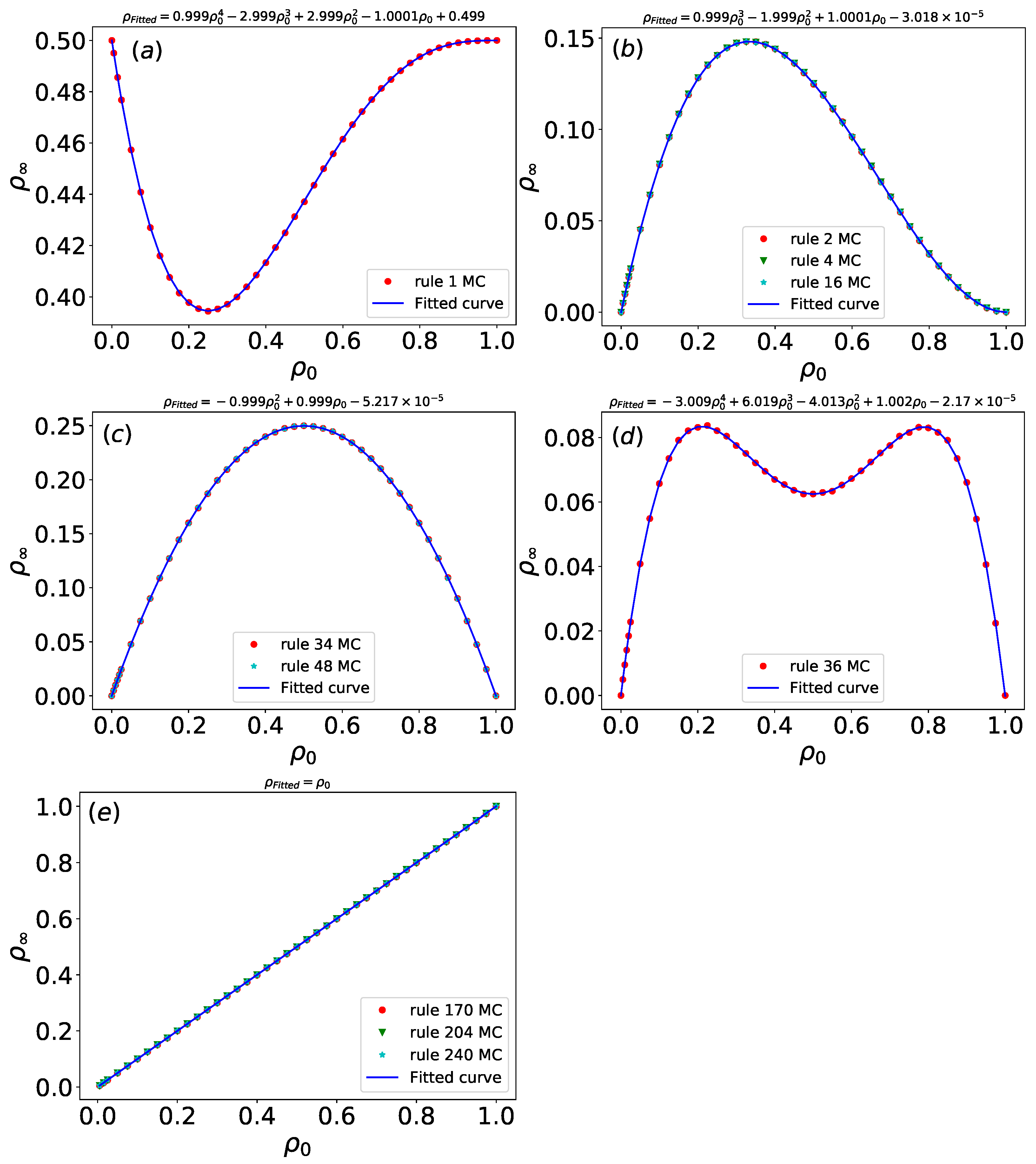

For rules 1, 2, 4, 16, 34, 48, 36, 170, 204, and 240, simulations are run on arrays of size $L = 1000$ for $t = 30$ time steps, and the configurations from the last time step are used. For each initial density $\rho_0$, 1000 configurations are generated to calculate the density. The density of these simple Wolfram rules stabilizes rapidly, and the asymptotic density $\rho_\infty$ depends on the initial density $\rho_0$. Figure 9 shows $\rho_\infty$ as a function of $\rho_0$ under disordered initial configurations for all of these rules, together with the fitted curves. A least-squares fitting procedure yields a fitted density $\rho_\infty(\rho_0)$ for each rule, and the approximate fitted expressions are summarized in Table 2.

For rule 1, whose evolution depends solely on the condition 000→1, the asymptotic density is $\rho_\infty = 0.5$ when $\rho_0$ is 0 or 1, because the configuration then alternates between all 0s and all 1s. Conventional analytical methods struggle to determine the extrema of such fitted functions, so we use the symbolic computation library SymPy (with Python 3.10) to obtain precise solutions. Specifically, the fitted density reaches a minimum of 0.395 at an intermediate initial density.

The asymptotic density curves of rules 2, 4, and 16 coincide apart from minor fluctuations, with a maximum density of 0.148 at $\rho_0 \approx 1/3$. The evolution of rule 2 depends only on 001→1, that of rule 4 only on 010→1, and that of rule 16 only on 100→1. Despite their distinct evolutionary paths under the same initial density, all three rules converge to the same asymptotic density, indicating functionally equivalent dynamical mechanisms. This suggests that these rules can serve as effective filters in certain applications.

Similarly, rules 34 and 48 exhibit identical asymptotic densities $\rho_\infty(\rho_0)$. Rule 34 depends on the conditions 101→1 and 001→1, while rule 48 depends on 101→1 and 100→1. At $\rho_0 = 0.5$, both rules reach a maximum density of 0.25.
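The extremum search with SymPy can be sketched as follows. The closed forms used here are our own derivation rather than the fitted expressions of Table 2. Under rule 2, 4, or 16, a site is active after the first step only if its neighborhood is 001, 010, or 100, respectively, which happens with probability $\rho_0(1-\rho_0)^2$. The resulting active sites are isolated and are then only shifted (rules 2 and 16) or kept in place (rule 4), so the density no longer changes. Likewise, under rules 34 and 48 a site becomes active when it is 0 and its right neighbor (rule 34) or left neighbor (rule 48) is 1, which has probability $\rho_0(1-\rho_0)$, and both rules then act as shifts.

```python
import sympy as sp

rho0 = sp.symbols("rho0", positive=True)

# Assumed closed forms rho_inf(rho0) (our derivation, explained above)
closed_forms = {
    "rules 2, 4, 16": rho0 * (1 - rho0) ** 2,
    "rules 34, 48": rho0 * (1 - rho0),
}

maxima = {}
for name, rho_inf in closed_forms.items():
    stationary = sp.solve(sp.diff(rho_inf, rho0), rho0)      # critical points
    candidates = [r for r in stationary if 0 < r < 1]         # keep interior points only
    best = max(candidates, key=lambda r: rho_inf.subs(rho0, r))
    maxima[name] = (best, rho_inf.subs(rho0, best))
    print(name, "max at rho0 =", best, "value =", maxima[name][1], "~", sp.N(maxima[name][1], 3))
```

The exact maxima, $4/27 \approx 0.148$ at $\rho_0 = 1/3$ and $1/4$ at $\rho_0 = 1/2$, are consistent with the fitted values quoted in the text.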

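For rule 36, the fitted density curve displays two local maxima and one local minimum. The update of rule 36 (101→1 and 010→1) is unchanged when every site is complemented, so the density curve is symmetric under $\rho_0 \to 1 - \rho_0$. Accordingly, the asymptotic density reaches a local minimum of 0.0625 at $\rho_0 = 0.5$, and local maxima of approximately 0.0833 occur at $\rho_0 \approx 0.21$ and $\rho_0 \approx 0.79$.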

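Rules 170, 204, and 240 all satisfy $\rho_\infty = \rho_0$. Rule 204 is the identity rule, defined by the Boolean function $s_i^{t+1} = s_i^t$, so the configuration remains unchanged over time. Rule 170 is characterized by $s_i^{t+1} = s_{i+1}^t$ and rule 240 by $s_i^{t+1} = s_{i-1}^t$, that is, uniform shifts of the configuration to the left and to the right, respectively. The asymptotic density of these rules therefore always equals the initial density, confirming that they share identical dynamical evolution mechanisms.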
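The asymptotic densities of these simple rules can be compared with closed forms in a direct simulation, using the setup described above ($L = 1000$, 30 time steps) but only 20 configurations per density instead of 1000. The closed forms are our own derivation, not the entries of Table 2. They are $\rho_\infty = \rho_0(1-\rho_0)^2$ for rules 2, 4, and 16, since a site becomes active only from neighborhood 001, 010, or 100, after which the isolated active sites merely shift or stay in place. They are $\rho_0(1-\rho_0)$ for rules 34 and 48, and $\rho_0$ for the shift and identity rules 170, 204, and 240. The names `CLOSED_FORMS` and `final_density` are hypothetical.

```python
import numpy as np

# Closed forms rho_inf(p) for initial density p (our derivation, see the lead-in)
CLOSED_FORMS = {
    2: lambda p: p * (1 - p) ** 2,
    4: lambda p: p * (1 - p) ** 2,
    16: lambda p: p * (1 - p) ** 2,
    34: lambda p: p * (1 - p),
    48: lambda p: p * (1 - p),
    170: lambda p: p,     # shift to the left
    204: lambda p: p,     # identity
    240: lambda p: p,     # shift to the right
}

def final_density(rule, L, p, steps, n_configs, rng):
    """Density after `steps` updates, averaged over n_configs disordered initial states."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    total = 0.0
    for _config in range(n_configs):
        s = (rng.random(L) < p).astype(np.uint8)
        for _t in range(steps):
            s = table[4 * np.roll(s, 1) + 2 * s + np.roll(s, -1)]
        total += s.mean()
    return total / n_configs

rng = np.random.default_rng(2)
estimates = {}
for rule, form in CLOSED_FORMS.items():
    for p in (0.2, 1 / 3, 0.5, 0.8):
        estimates[(rule, p)] = final_density(rule, L=1000, p=p, steps=30, n_configs=20, rng=rng)
        print(f"rule {rule:3d}  rho0 = {p:.2f}  simulated = {estimates[(rule, p)]:.3f}  "
              f"closed form = {form(p):.3f}")
```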

Simple Wolfram rules such as rules 2, 4, 16, 34, and 48 stabilize within a small number of time steps, with an asymptotic density that depends on the initial density $\rho_0$. Notably, these rules are governed by distinct local update conditions (for instance, rule 2 depends on 001→1, rule 4 on 010→1, and rule 16 on 100→1). Yet the rules within each group (rules 2, 4, and 16; rules 34 and 48) exhibit identical asymptotic densities at the same initial density $\rho_0$, even though their configurations evolve along different pathways. This convergence suggests that they share equivalent underlying dynamical mechanisms, irrespective of differences in their local transition functions.

4. Supervised Learning of the Wolfram Automata

Supervised learning is applied to Wolfram automata using raw configurations generated from Monte Carlo (MC) simulations of the (1+1)-dimensional Wolfram automata. Among the 256 distinct rules of (1+1)-dimensional Wolfram automata, some rapidly converge to trivial steady states (e.g., homogeneous or empty configurations) and are therefore straightforward to classify. We focus on Wolfram rules that exhibit complex structural configurations during their temporal evolution from disordered initial states. Ten typical Wolfram rules are selected: rules 6, 16, 18, 22, 36, 48, 90, 150, 182, and 190. The generated configurations are divided into a training set and a test set, and all configurations of a given rule share the same label. For the ten rules, the true label is a one-hot vector in which only the position indexing the specific rule is set to 1 and all others are 0. For instance, rule 6 corresponds to $(1,0,0,0,0,0,0,0,0,0)$, and rule 150 corresponds to $(0,0,0,0,0,0,0,1,0,0)$.
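A minimal sketch of how labeled training data could be assembled follows. It rests on three assumptions of ours: each image is a $T \times L$ space-time diagram with $T = L = 32$; the one-hot index follows the order in which the ten rules are listed above; and rules other than 16 and 36 start from density 0.5. The densities 0.33 and 0.21 are those given for rules 16 and 36 in this section. The helper names are hypothetical.

```python
import numpy as np

RULES = [6, 16, 18, 22, 36, 48, 90, 150, 182, 190]   # class index = position in this list (assumed)

def one_hot(rule, rules=RULES):
    """Label vector with 1 at the index of `rule` and 0 elsewhere."""
    label = np.zeros(len(rules), dtype=np.float32)
    label[rules.index(rule)] = 1.0
    return label

def space_time_image(rule, L, T, p, rng):
    """T x L configuration image (rows = time steps) from a disordered initial state."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    s = (rng.random(L) < p).astype(np.uint8)
    rows = [s]
    for _ in range(T - 1):
        s = table[4 * np.roll(s, 1) + 2 * s + np.roll(s, -1)]
        rows.append(s)
    return np.stack(rows).astype(np.float32)

def make_dataset(n_per_rule, L, T, densities, rng):
    """Images of shape (N, T, L, 1) and one-hot labels of shape (N, 10)."""
    images, labels = [], []
    for rule in RULES:
        p = densities.get(rule, 0.5)      # default: random initial state with p = 0.5
        for _ in range(n_per_rule):
            images.append(space_time_image(rule, L, T, p, rng))
            labels.append(one_hot(rule))
    return np.array(images)[..., None], np.array(labels)

rng = np.random.default_rng(0)
X, y = make_dataset(n_per_rule=20, L=32, T=32, densities={16: 0.33, 36: 0.21}, rng=rng)
print(X.shape, y.shape)        # (200, 32, 32, 1) (200, 10)
print(one_hot(150))
```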

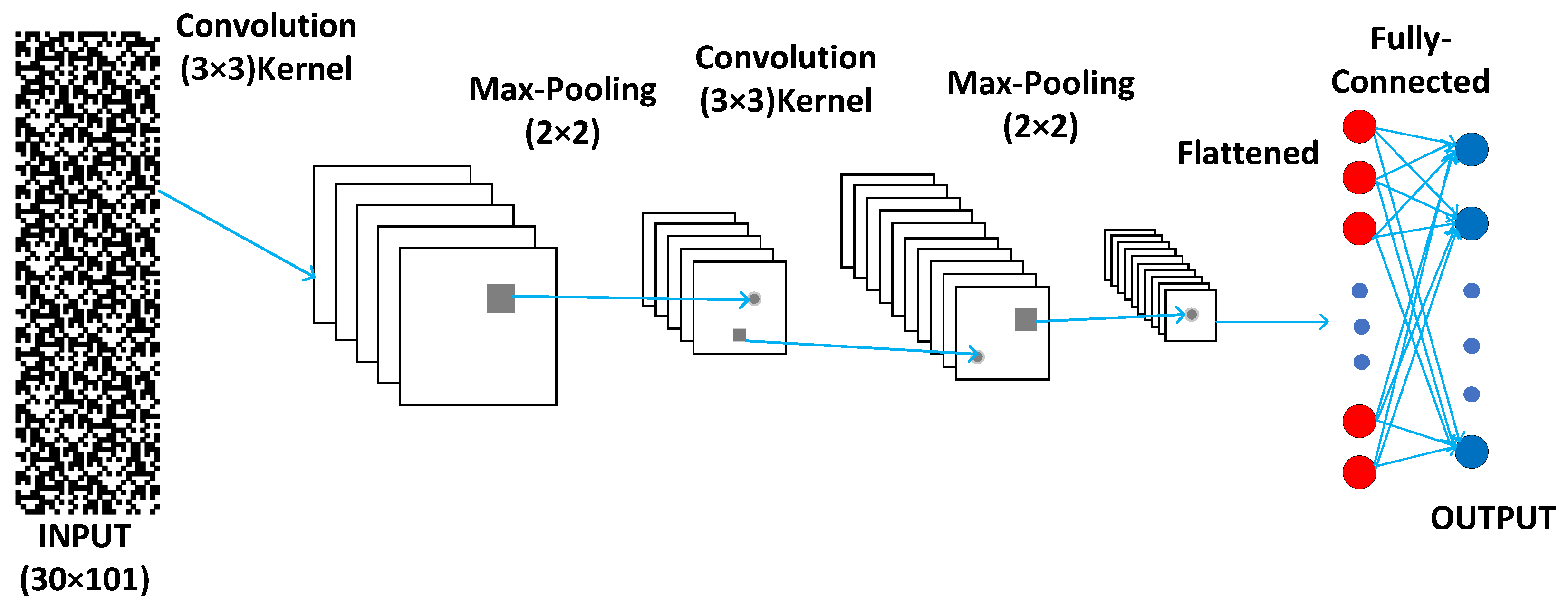

For supervised learning, we use the convolutional neural network (CNN) illustrated in Figure 10. The CNN consists of two convolutional layers, each followed by a max-pooling layer, then a fully connected layer and a softmax output layer. The first convolutional layer applies 3 × 3 kernels with sigmoid activation, extracting spatial features while introducing nonlinearity. It is followed by 2 × 2 max-pooling, which reduces dimensionality and enhances translational invariance. The second convolutional layer repeats this pattern and further refines the feature maps. The pooled features are then flattened and passed through a fully connected layer with sigmoid activation. Finally, the output layer uses softmax activation to produce the class probabilities.
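To make the layer sequence concrete, the following NumPy sketch implements only the forward pass of such a network, with random, untrained weights. The input size (32 × 32), the numbers of filters (8 and 16), the 64 hidden units, and the use of unpadded ("valid") convolutions are our assumptions, since these hyperparameters are not specified here. In practice the model would be built and trained with a deep learning framework.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv2d_valid(x, w, b):
    """Unpadded convolution (cross-correlation, as in common DL libraries).
    x: (N, H, W, C_in), w: (k, k, C_in, C_out), b: (C_out,)"""
    k = w.shape[0]
    windows = sliding_window_view(x, (k, k), axis=(1, 2))   # (N, H-k+1, W-k+1, C_in, k, k)
    return np.einsum("nhwcij,ijco->nhwo", windows, w) + b

def max_pool2x2(x):
    """2x2 max-pooling with stride 2; an odd trailing row/column is dropped."""
    n, h, w, c = x.shape
    x = x[:, : h - h % 2, : w - w % 2, :]
    return x.reshape(n, h // 2, 2, w // 2, 2, c).max(axis=(2, 4))

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def init_params(rng, in_hw=(32, 32), filters=(8, 16), hidden=64, n_classes=10):
    """Random weights; filters, hidden size and input size are illustrative assumptions."""
    h, w = in_hw
    for _ in filters:                      # each block: 3x3 valid conv, then 2x2 pooling
        h, w = (h - 2) // 2, (w - 2) // 2
    flat = h * w * filters[-1]
    g = lambda *shape: 0.1 * rng.standard_normal(shape)
    return {"w1": g(3, 3, 1, filters[0]), "b1": np.zeros(filters[0]),
            "w2": g(3, 3, filters[0], filters[1]), "b2": np.zeros(filters[1]),
            "w3": g(flat, hidden), "b3": np.zeros(hidden),
            "w4": g(hidden, n_classes), "b4": np.zeros(n_classes)}

def forward(params, x):
    """Conv-sigmoid-pool, conv-sigmoid-pool, flatten, dense-sigmoid, dense-softmax."""
    h = max_pool2x2(sigmoid(conv2d_valid(x, params["w1"], params["b1"])))
    h = max_pool2x2(sigmoid(conv2d_valid(h, params["w2"], params["b2"])))
    h = h.reshape(h.shape[0], -1)
    h = sigmoid(h @ params["w3"] + params["b3"])
    return softmax(h @ params["w4"] + params["b4"])

rng = np.random.default_rng(0)
params = init_params(rng)
x = rng.integers(0, 2, size=(4, 32, 32, 1)).astype(float)   # four binary configuration images
probs = forward(params, x)
print(probs.shape, probs.sum(axis=1))
```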

Since the network extracts features from configuration images generated by distinct rules on small systems, it is essential to select configurations that capture as much information as possible. For complex Wolfram automata such as rules 18 and 90, the density remains low over time when the initial density is very low or very high ($\rho_0$ close to 0 or 1). We therefore use random initial states in which each site is 1 with probability 0.5, so that non-trivial dynamical behavior is captured. For simple rules, the asymptotic density depends on the initial density, so the initial density is chosen to give a high asymptotic density. For example, rule 16 uses an initial density of 0.33, and rule 36 uses 0.21.

The configuration images have dimension $L \times t$, where $L$ is the system size and $t$ the number of time steps. For each rule, 2000 labeled configurations are generated for the training set and another 200 configurations for the test set. The CNN outputs are then averaged over the test set.

Categorical cross-entropy is the standard loss function for classification tasks in machine learning, and we therefore use it for Wolfram rule classification. It is defined as

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N} \log \hat{y}_{i,c_i},$$

where $N$ is the number of samples in the batch (the batch size) and $\hat{y}_{i,c_i}$ is the model’s predicted probability for the true class label $c_i$ of sample $i$.
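A minimal NumPy version of this loss follows, with integer class indices $c_i$ and a small clipping constant (our choice) to avoid $\log 0$. The name `categorical_cross_entropy` is hypothetical.

```python
import numpy as np

def categorical_cross_entropy(probs, labels, eps=1e-12):
    """Mean over the batch of -log(predicted probability of the true class).

    probs:  (N, K) array of predicted class probabilities (rows sum to 1)
    labels: (N,) array of true class indices c_i
    """
    n = probs.shape[0]
    p_true = probs[np.arange(n), labels]
    # -log p written as log(1/p); clipping keeps the logarithm finite
    return float(np.mean(np.log(1.0 / np.clip(p_true, eps, 1.0))))

labels = np.array([0, 7, 3, 3])
uniform = np.full((4, 10), 0.1)                   # a network that has learned nothing
confident = np.eye(10)[labels]                    # perfect one-hot predictions
print(categorical_cross_entropy(uniform, labels))    # about 2.303, i.e. ln 10
print(categorical_cross_entropy(confident, labels))
```

With one-hot labels $y_{ik}$ the same quantity can be written $-\frac{1}{N}\sum_i\sum_k y_{ik}\log\hat{y}_{ik}$, because only the true-class term survives the inner sum.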

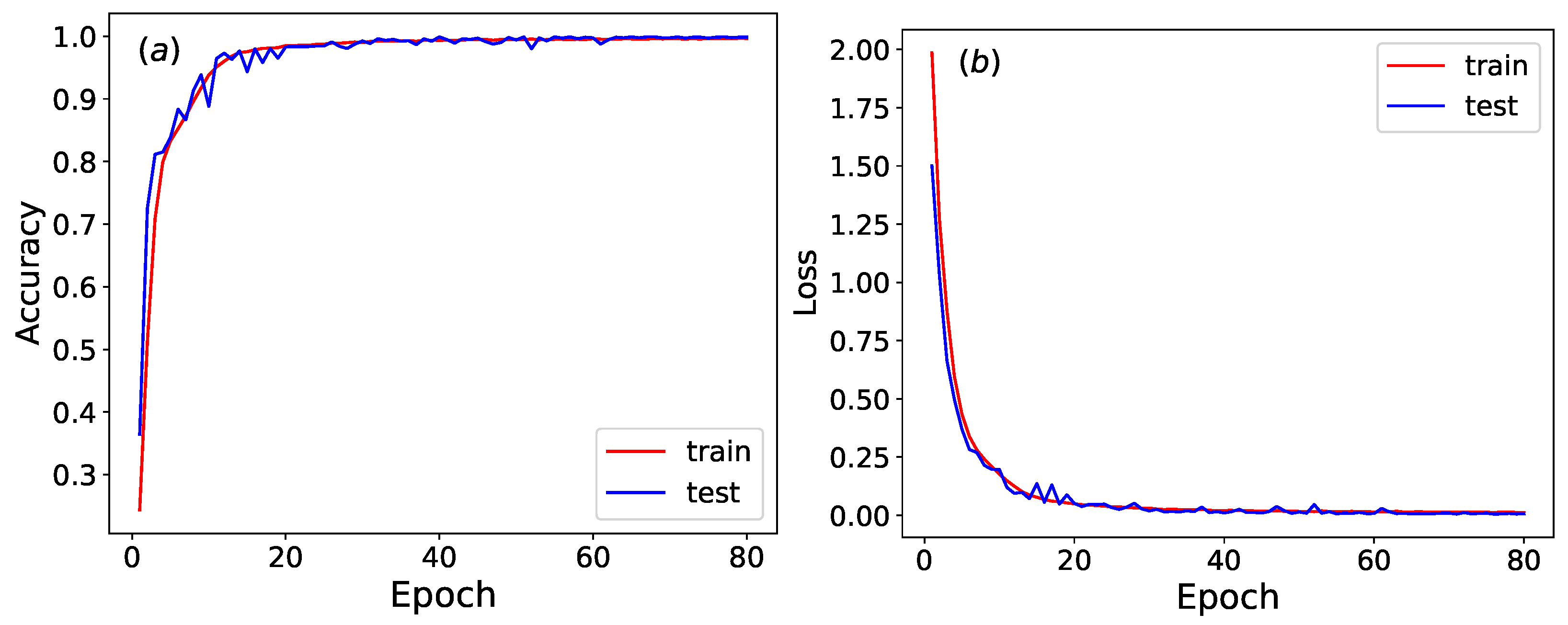

As illustrated in Figure 11, the accuracy and loss of the trained neural network stabilize around epoch 80. The CNN achieves a test accuracy of 99.85% in classifying Wolfram rules (1997 of the 2000 test configurations; see Table 3). This high recognition accuracy shows that the CNN reliably classifies Wolfram rules, even for complex rules.

A confusion matrix is a tabular tool for evaluating the performance of a classification model. Its rows represent the true classes (actual values), its columns represent the predicted classes (model outputs), the diagonal entries give the number of correctly classified samples, and the off-diagonal entries reveal specific patterns of misclassification. This structure makes it easy to see the model's accuracy for each class and the trends in its errors. Table 3 shows the confusion matrix for the CNN-based classification of Wolfram rules when the training and test sets share identical initial density conditions. The model achieves excellent classification accuracy, with nearly perfect diagonal values (200 correct predictions for most rules) and only three misclassifications out of 2000 samples.
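A confusion matrix with this row/column convention can be accumulated in a few lines of NumPy; scikit-learn's `sklearn.metrics.confusion_matrix` follows the same convention. The toy labels below are made up for illustration and are not the paper's data.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i, j] = number of samples of true class i predicted as class j."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)   # handles repeated index pairs
    return cm

y_true = [0, 0, 1, 2, 2, 2]
y_pred = [0, 1, 1, 2, 2, 0]
cm = confusion_matrix(y_true, y_pred, n_classes=3)
accuracy = np.trace(cm) / cm.sum()      # correct predictions lie on the diagonal
print(cm)
print(f"accuracy = {accuracy:.3f}")
```

The same diagonal-sum rule links the counts quoted for Table 3 to an accuracy: three misclassifications among 2000 test samples give 1997/2000 = 99.85%.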

A classification report summarizes the performance of a classification model through key metrics such as precision, recall, and F1-score. Precision, $\mathrm{TP}/(\mathrm{TP}+\mathrm{FP})$, is the proportion of correctly predicted positive cases (e.g., rule 6) among all cases predicted as positive; high precision (close to 1.0) indicates few false positives. Recall, $\mathrm{TP}/(\mathrm{TP}+\mathrm{FN})$, is the proportion of correctly predicted positive cases among all actual positive cases; high recall (close to 1.0) indicates few false negatives. The F1-score is the harmonic mean of precision and recall and provides a balanced measure of the two. It is particularly useful when the class distribution is uneven (although here all classes have equal support). Each class corresponds to the Wolfram automaton of one rule. Table 4 gives the classification report of the CNN for Wolfram rules when the training and test sets share identical initial density conditions, and it confirms the excellent performance of the CNN in classifying Wolfram automata rules.
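These per-class metrics follow directly from the confusion matrix. Precision divides the diagonal by the column sums, recall divides it by the row sums, and F1 is their harmonic mean. Below is a NumPy sketch with made-up counts, not the paper's tables; the name `per_class_metrics` is hypothetical, and scikit-learn's `classification_report` produces a similar summary.

```python
import numpy as np

def per_class_metrics(cm):
    """Precision, recall and F1 per class from a confusion matrix (rows = true, columns = predicted)."""
    cm = np.asarray(cm)
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)          # column sums: TP + FP
    actual = cm.sum(axis=1)             # row sums: TP + FN (the class support)
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1

cm = np.array([[1, 1, 0],
               [0, 1, 0],
               [1, 0, 2]])              # toy counts, not taken from the paper's tables
precision, recall, f1 = per_class_metrics(cm)
print("precision", precision.round(3))
print("recall   ", recall.round(3))
print("f1       ", f1.round(3))
```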

To verify the robustness of the neural network in recognizing Wolfram automata, test configurations with initial densities different from those of the training set are fed into the pre-trained CNN, and the accuracy in classifying the distinct rules is evaluated. The initial densities are sampled uniformly from the values 0.2, 0.4, 0.6, and 0.8, with 200 test samples generated for each rule. Tables 5 and 6 give the confusion matrix and classification report of the CNN under these mismatched initial density conditions between the training and test sets. The confusion matrix indicates an overall accuracy of 89.65% (1793 correct predictions out of 2000), with notable misclassification patterns; for example, rule 16 is frequently confused with rule 18 (41 errors) and rule 48 (19 errors). Correspondingly, the classification report shows varied scores: rules 16 and 36 have lower F1-scores (0.748 and 0.778, respectively) owing to reduced recall or precision, while rules 182 and 190 achieve perfect scores.

Compared with the case of identical initial density conditions in the training and test sets, the accuracy in classifying distinct rules declines significantly when the test densities differ from the training densities. Under different initial densities, the automata may not develop features distinctive enough for rule discrimination within the small number of time steps $t$ used, which makes classification harder. For instance, at a low initial density such as 0.2 and small time steps, rule 18 exhibits a very low density and a nearly homogeneous state, making it difficult to distinguish from other rules. Expanding the range of initial densities in the training set is therefore necessary to enhance the robustness of the CNN.

Initial densities are then drawn from a uniform distribution between 0.2 and 0.8. To ensure a sufficiently large training set, 6000 configurations are generated for each rule, and 600 configurations per rule are used for the test set. The CNN recognizes the different Wolfram rules with an accuracy of 0.985, indicating that it maintains high recognition accuracy even over a wide range of initial densities.

Tables 7 and 8 give the classification report and confusion matrix of the CNN under these expanded initial density conditions (densities sampled uniformly from 0.2 to 0.8). The overall accuracy of 0.985 (98.5%) indicates high effectiveness, and precision, recall, and F1-score are all 0.985, suggesting balanced performance across classes. The confusion matrix reveals few errors: only 85 misclassifications out of 6000 samples, concentrated in specific rule pairs. For example, rule 16 is confused with rules 18 and 48 in 25 and 21 cases, respectively. This minor confusion may stem from similar dynamical behavior under certain density conditions, but the model remains robust, as evidenced by perfect F1-scores for rules 150, 182, and 190.

The CNN thus distinguishes Wolfram rules reliably despite variations in initial density, with an accuracy of about 98.5%. These results support the model’s generalization capability, although future work could refine feature extraction for rules with lower recall (e.g., rule 16, with a recall of 0.917) to further reduce errors. This performance underscores the potential of CNNs for complex automata classification tasks.

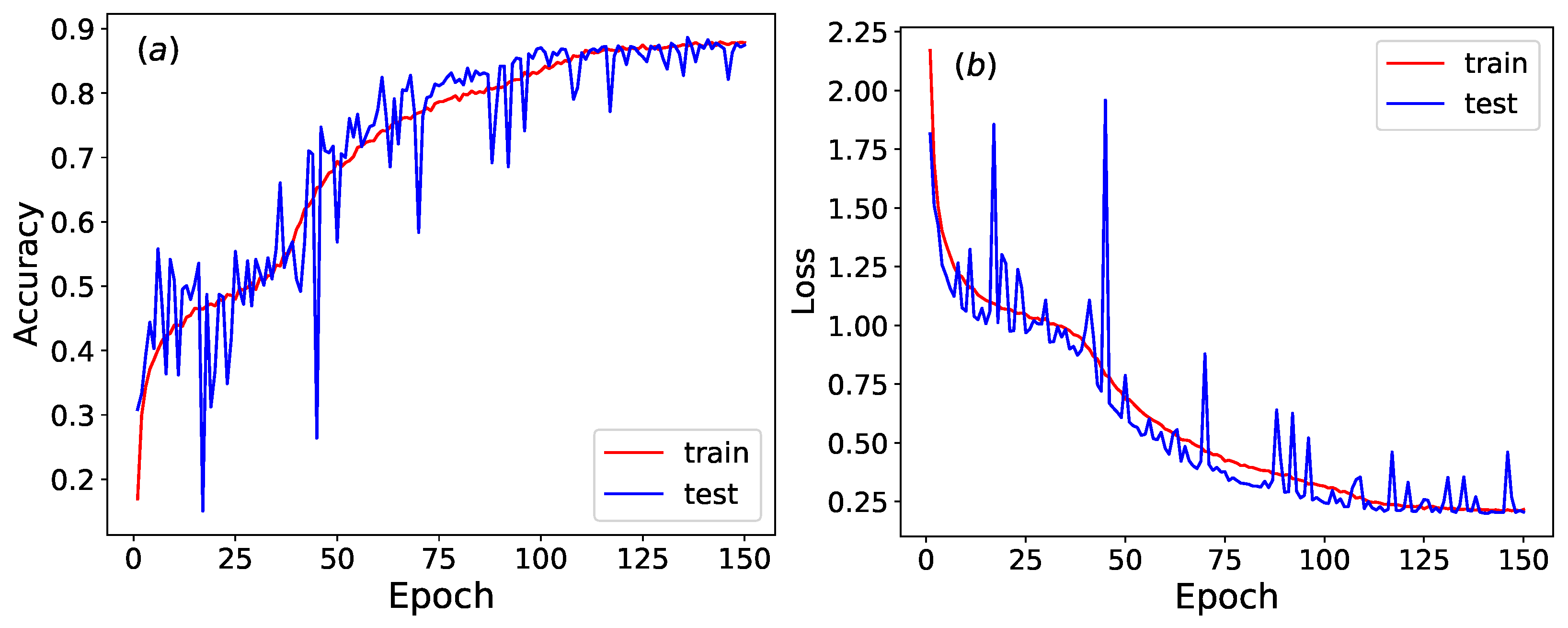

In a control experiment, the configurations in both the training and test sets are row-shuffled, while the two sets share identical initial density conditions. Row-shuffling randomly reorders the time-ordered rows of a Wolfram automaton configuration. This destroys the dynamical patterns by disrupting the causal relationships between consecutive time steps, while preserving the marginal statistics within each individual row (e.g., density and local patterns). As illustrated in Figure 12, the accuracy and loss of the trained neural network stabilize only around epoch 150 and fluctuate significantly during training.
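Row-shuffling can be sketched as a random permutation of the time axis. The NumPy example below is our own illustration; the 32 × 40 rule 90 diagram and the helper names are assumptions. The multiset of rows, and hence every per-row density, is unchanged. By contrast, the fraction of consecutive row pairs that obey the update rule equals 1 for the original diagram and generally drops after shuffling.

```python
import numpy as np

def step(rule, s):
    """One synchronous update on a periodic ring (Wolfram numbering)."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    return table[4 * np.roll(s, 1) + 2 * s + np.roll(s, -1)]

def row_shuffle(config, rng):
    """Randomly permute the rows (time steps) of a T x L configuration."""
    return config[rng.permutation(config.shape[0])]

def rule_consistency(config, rule):
    """Fraction of consecutive row pairs (t, t+1) related by one update of `rule`."""
    return float(np.mean([np.array_equal(step(rule, a), b) for a, b in zip(config[:-1], config[1:])]))

rng = np.random.default_rng(0)
rows = [(rng.random(40) < 0.5).astype(np.uint8)]
for _ in range(31):
    rows.append(step(90, rows[-1]))
config = np.array(rows)                  # 32 time steps x 40 sites
shuffled = row_shuffle(config, rng)

print("row densities preserved:",
      np.allclose(np.sort(config.mean(axis=1)), np.sort(shuffled.mean(axis=1))))
print("rule consistency:", rule_consistency(config, 90), "->", rule_consistency(shuffled, 90))
```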

Tables 9 and 10 give the confusion matrix and classification report of the CNN for classifying Wolfram rules from row-shuffled configurations. Row-shuffling disrupts the temporal sequence of the automaton’s evolution, forcing the model to rely solely on static spatial features rather than time-dependent dynamics. The overall accuracy is 0.874 (87.4%), with an F1-score of 0.873, a significant drop compared with intact temporal data. The confusion matrix reveals systematic misclassifications: for instance, rule 18 is frequently confused with rule 6 (10 errors) and rule 22 (16 errors), while rule 150 shows a low recall (0.600) because 80 of its samples are misclassified as rule 90. This suggests that although row-shuffling preserves the marginal distribution of each row (such as its density), this information alone may be insufficient to distinguish all rules. In summary, while the model maintains moderate accuracy, the errors highlight the importance of temporal dynamics in Wolfram rule classification and expose the limitations of feature learning without them.

Rules 90 and 150 share the same asymptotic density ($\rho_\infty = 0.5$) under random initial states, and their configurations are visually very similar, making manual distinction challenging, as shown in Figure 13. Nevertheless, the trained CNN identifies these rules with high accuracy. Although supervised learning relies on labeled data, that is, on prior knowledge of the Wolfram rules, the trained network can rapidly classify large volumes of Wolfram rule configurations with minimal training time. This demonstrates a key advantage of machine learning for complex spatiotemporal pattern recognition tasks.

We use a CNN as the supervised learning framework because of its effectiveness in image-like classification tasks, where it offers better efficiency and accuracy than traditional methods. A two-layer CNN architecture, rather than a deeper network, suffices to achieve strong performance on Wolfram automaton configurations of small system size. This design minimizes the number of trainable parameters and the training time while maintaining high classification accuracy.

The CNN effectively identified distinctive spatiotemporal patterns and structural correlations within the evolved configurations, even for rules that share identical asymptotic densities, such as rules 90 and 150 (both 0.5). These discriminative features include the propagation dynamics of active sites, neighborhood interaction patterns, and emergent fractal geometries. Together they form a unique “dynamical signature” for each rule. This demonstrates that supervised learning can extract physically meaningful patterns beyond scalar metrics such as density, which enables accurate classification based on structural evolution.
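A hand-crafted three-cell template score shows one way that rules 90 and 150 leave different local signatures despite equal densities. This score is a simple stand-in for what a small convolution kernel spanning two consecutive rows could detect. It is our own illustration and not one of the features the CNN actually learned. The score is the fraction of cells that equal the XOR of their neighborhood in the previous row, computed with or without the center cell. The lattice size (L = 400), the number of steps (150), and the random seed are assumptions.

```python
import numpy as np

def evolve(rule, init, steps):
    """Evolve an elementary CA with Wolfram rule number `rule` on a ring.
    Returns a (steps + 1, L) array; row t is the configuration at time t."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    rows = [np.asarray(init, dtype=np.uint8)]
    for _ in range(steps):
        x = rows[-1]
        # Wolfram index of each neighborhood: 4*left + 2*center + right
        idx = 4 * np.roll(x, 1) + 2 * x + np.roll(x, -1)
        rows.append(table[idx])
    return np.array(rows)

def xor_template_score(config, use_center):
    """Fraction of cells in rows t >= 1 equal to left XOR right of row t - 1
    (rule-90 template) or, with use_center=True, left XOR center XOR right
    (rule-150 template)."""
    prev, nxt = config[:-1], config[1:]
    pred = np.roll(prev, 1, axis=1) ^ np.roll(prev, -1, axis=1)
    if use_center:
        pred = pred ^ prev
    return float(np.mean(pred == nxt))

rng = np.random.default_rng(4)
init = rng.integers(0, 2, size=400)     # assumed lattice size L = 400
scores = {}
for rule in (90, 150):
    config = evolve(rule, init, 150)    # T = 150 < L/2, so no wrap-around
    scores[rule] = (xor_template_score(config, False), xor_template_score(config, True))
print(scores)
```

Each configuration matches its own template exactly (score 1.0). It matches the other rule's template at only about half of the cells, namely where the center cell in the previous row is empty.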

6. Summary

In this paper, we applied numerical simulations, computational methods, and supervised and unsupervised learning methods to study the asymptotic density and the dynamical evolution mechanisms of (1+1)-dimensional Wolfram automata. In the numerical simulations, we went beyond single-site initial states and used specific initial sequences, including “…0, 1, 1, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 0, 1, 1…” for rule 146 and “…0, 1, 0, 1, 0, 1, 1, 0, 1, 0, 1…” for rule 154. Rules 26, 82, 146, 154, and 210 also evolve into Sierpiński triangle patterns over time.
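For the simplest case of a single occupied site, the Sierpiński behavior can be checked with the sketch below. Starting from one site, rule 90 never places occupied cells next to each other. On the only neighborhoods that can then occur (000, 001, 010, 100, 101), rules 26, 82, 146, 154, and 210 give the same outputs as rule 90, so all six rules produce the same Sierpiński triangle. The number of occupied sites at time t follows Gould's sequence, 2^popcount(t). The lattice size, the number of steps, and the periodic boundaries are assumptions. The sequences quoted above for rules 146 and 154 could be placed into `seed`, but the text does not state how they were embedded in the lattice, so this sketch does not reproduce those runs.

```python
import numpy as np

def evolve(rule, init, steps):
    """Evolve an elementary CA with Wolfram rule number `rule` on a ring.
    Returns a (steps + 1, L) array; row t is the configuration at time t."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    rows = [np.asarray(init, dtype=np.uint8)]
    for _ in range(steps):
        x = rows[-1]
        # Wolfram index of each neighborhood: 4*left + 2*center + right
        idx = 4 * np.roll(x, 1) + 2 * x + np.roll(x, -1)
        rows.append(table[idx])
    return np.array(rows)

L, T = 257, 100                         # assumed sizes; the pattern never wraps around
seed = np.zeros(L, dtype=np.uint8)
seed[L // 2] = 1                        # single occupied site (other seeds could be placed here)
rules = (90, 26, 82, 146, 154, 210)
patterns = {r: evolve(r, seed, T) for r in rules}
counts = patterns[90].sum(axis=1)       # occupied sites per time step

print(all(np.array_equal(patterns[r], patterns[90]) for r in rules))  # → True
print(counts[:9].tolist())              # → [1, 2, 2, 4, 2, 4, 4, 8, 2]
```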

We determined the asymptotic density of the Wolfram rules numerically. For complex rules such as rules 18 and 90, the density converges to a well-defined asymptotic value for most disordered initial configurations. However, for extremely low or high initial densities, the density of a system of size L = 2000 still deviates substantially from the asymptotic value, even after extended evolution. This discrepancy may stem from finite-size effects: as the system size increases, the density will approach the asymptotic value more closely. For simple Wolfram rules, the density stabilizes within a small number of time steps, and the asymptotic density depends on the initial density. Certain groups of rules, such as rules 2, 4, and 16, or rules 34 and 48, rely on different local update conditions. Yet, for the same initial density, their configurations converge to the same asymptotic density even though they evolve along different pathways. This suggests that the rules within each group share equivalent underlying dynamical mechanisms.
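A short simulation can check that rules 2, 4, and 16 (and, separately, rules 34 and 48) converge to common densities. The sketch assumes periodic boundaries, L = 100000, and an uncorrelated random initial state of density p0. For such an initial state, a short calculation gives an asymptotic density of p0(1 - p0)² for rules 2, 4, and 16, which switch on only the neighborhoods 001, 010, and 100 respectively. It gives p0(1 - p0) for rules 34 and 48. After the first step, these rules only shift or freeze their patterns, so the density stays fixed. These closed forms are our own check and are not results quoted from the text.

```python
import numpy as np

def final_state(rule, init, steps):
    """Configuration after `steps` updates of Wolfram rule `rule` on a ring."""
    table = np.array([(rule >> k) & 1 for k in range(8)], dtype=np.uint8)
    x = np.asarray(init, dtype=np.uint8)
    for _ in range(steps):
        # Wolfram index of each neighborhood: 4*left + 2*center + right
        x = table[4 * np.roll(x, 1) + 2 * x + np.roll(x, -1)]
    return x

rng = np.random.default_rng(2)
L, T = 100_000, 50                      # assumed sizes
results = {}
for p0 in (0.1, 0.3, 0.5, 0.7, 0.9):
    init = (rng.random(L) < p0).astype(np.uint8)   # uncorrelated, density ~p0
    dens = {r: float(final_state(r, init, T).mean()) for r in (2, 4, 16, 34, 48)}
    results[p0] = dens
    print(p0, {r: round(d, 4) for r, d in dens.items()},
          "predicted:", round(p0 * (1 - p0) ** 2, 4), round(p0 * (1 - p0), 4))
```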

With supervised learning, a trained CNN identifies the Wolfram rules with high accuracy, including rules such as 90 and 150 that share the same asymptotic density. Supervised learning requires prior knowledge of the rules in the form of labeled data. Nevertheless, the network classifies large numbers of configurations rapidly after a short training time, which is a key advantage of machine learning for complex spatiotemporal pattern recognition.

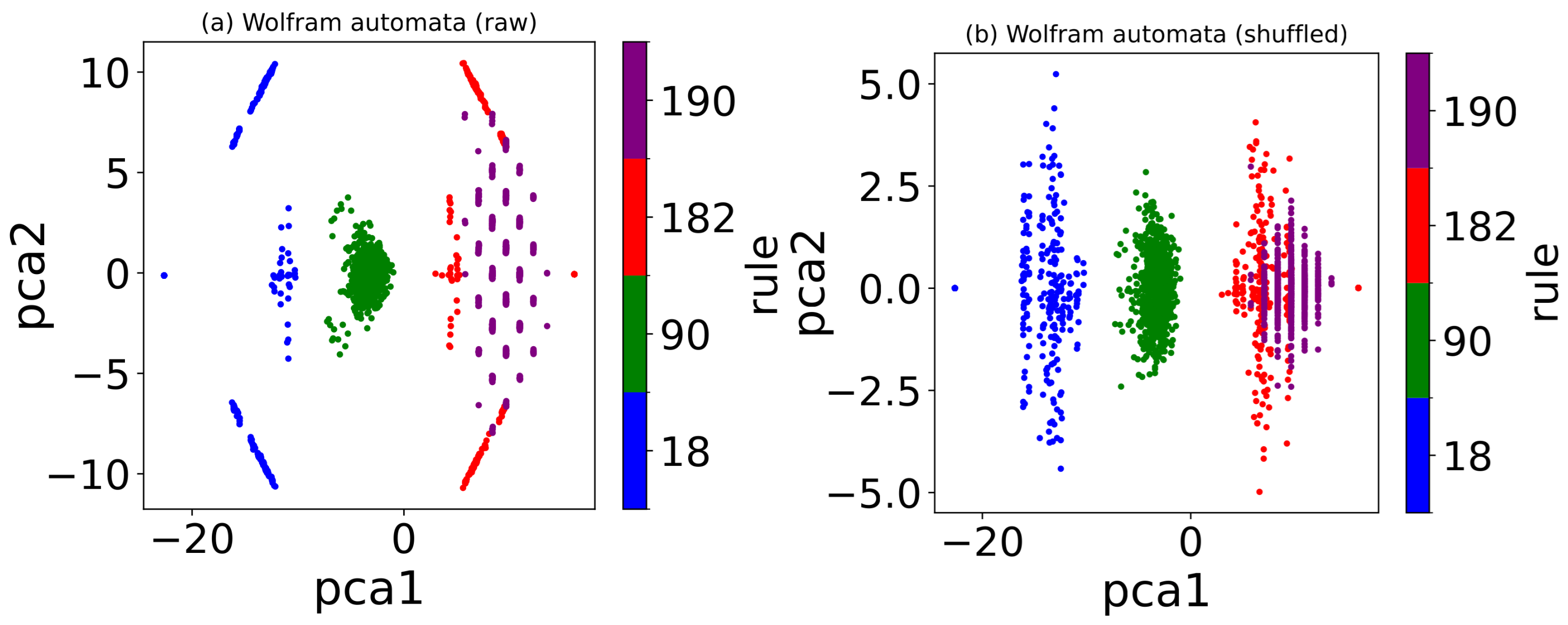

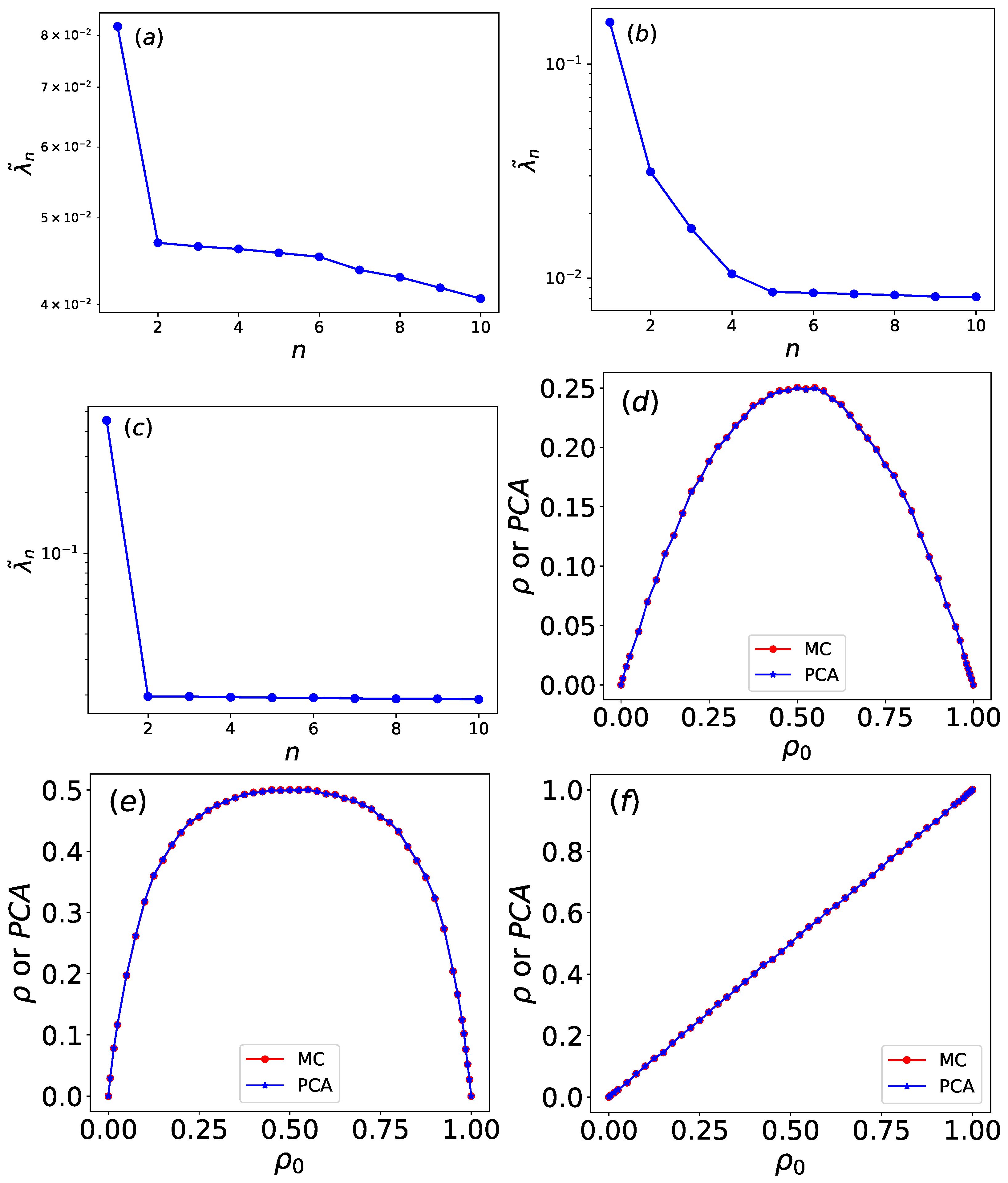

We also employed two unsupervised learning methods, PCA and autoencoders. For both the raw and the row-shuffled configurations, the two-dimensional projections from either method separated different Wolfram rules into distinct clusters. Row-shuffling preserves the density of each row, so this indicates that PCA and autoencoders rely primarily on density to distinguish these rules. When the output is restricted to one dimension, the output of both methods agrees closely with the density. Because its learning mechanism is simpler, PCA requires less computation time and is more efficient than the autoencoder.
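The finding that a one-dimensional PCA output tracks density can be reproduced on synthetic data. In this sketch, which uses assumed data rather than the paper's dataset, each sample is a 20 × 20 binary image. Its sites are occupied independently with a sample-specific density drawn from [0.1, 0.9]. PCA is computed from the SVD of the centered data. A change in density shifts all pixels together, so the leading principal direction is close to the uniform vector, and the projection onto it is essentially a rescaled density.

```python
import numpy as np

rng = np.random.default_rng(3)
n_samples, side = 500, 20               # assumed synthetic dataset size
# Each sample: side x side binary image, sites occupied independently
# with a sample-specific density (stand-in for CA configurations).
target = rng.uniform(0.1, 0.9, size=n_samples)
X = (rng.random((n_samples, side * side)) < target[:, None]).astype(float)
density = X.mean(axis=1)

Xc = X - X.mean(axis=0)                 # center each pixel (feature)
_, _, vt = np.linalg.svd(Xc, full_matrices=False)
pc1 = Xc @ vt[0]                        # one-dimensional PCA output
corr = np.corrcoef(pc1, density)[0, 1]
print(abs(corr))                        # sign of a principal component is arbitrary
```

The absolute correlation comes out close to 1. An autoencoder with a one-unit bottleneck would be expected to behave similarly on such data, at a higher training cost.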

For future research directions, our study can be extended in several meaningful ways. First, our focus on (1+1)-dimensional Wolfram automata leaves open questions about higher-dimensional or stochastic systems, which we plan to explore in future work. Second, although our machine learning models achieve high accuracy, interpretability remains a challenge; we will investigate explainable AI techniques to bridge model decisions with physical insights. Finally, computational costs for large-scale automata necessitate optimizations like distributed computing. Addressing these aspects will extend the framework’s applicability to broader complex systems.