Abstract

Entropy-based analyses have emerged as a powerful tool for quantifying the complexity, regularity, and information content of complex biological signals, such as electroencephalography (EEG). In this regard, EEG-based lie detection offers the advantage of directly providing more objective and less susceptible-to-manipulation results compared to traditional polygraph methods. To this end, this study proposes a novel multi-scale entropy approach by fusing fuzzy entropy (FE), time-shifted multi-scale fuzzy entropy (TSMFE), and hierarchical multi-band fuzzy entropy (HMFE), which enables the multidimensional characterization of EEG signals. Subsequently, using machine learning classifiers, the fused feature vector is applied to lie detection, with a focus on channel selection to investigate distinguished neural signatures across brain regions. Experiments utilize a publicly benchmarked LieWaves dataset, and two parts are performed. One is a subject-dependent experiment to identify representative channels for lie detection. Another is a cross-subject experiment to assess the generalizability of the proposed approach. In the subject-dependent experiment, linear discriminant analysis (LDA) achieves impressive accuracies of 82.74% under leave-one-out cross-validation (LOOCV) and 82.00% under 10-fold cross-validation. The cross-subject experiment yields an accuracy of 64.07% using a radial basis function (RBF) kernel support vector machine (SVM) under leave-one-subject-out cross-validation (LOSOCV). Furthermore, regarding the channel selection results, PZ (parietal midline) and T7 (left temporal) are considered the representative channels for lie detection, as they exhibit the most prominent occurrences among subjects. These findings demonstrate that the PZ and T7 play vital roles in the cognitive processes associated with lying, offering a solution for designing portable EEG-based lie detection devices with fewer channels, which also provides insights into neural dynamics by analyzing variations in multi-scale entropy.

1. Introduction

Lie detection technology holds significant applications across judicial, security, and psychological domains [1]. Conventional methods, which typically include the strategic usage of evidence, familiarity with the context, diagnostic questioning, instrument-aided physiological and behavioral monitoring, contextual examination, repeated interrogation, expert analysis, intuitive processing, and motivational inference, rely on personal experience [2]. Currently, while polygraphs can infer an individual’s deceptive state by measuring physiological changes such as respiration, heart rate, and skin galvanic response, their reliability should not be overestimated, as the accuracy of polygraph results closely depends on the abilities and knowledge of the individual being tested. Additionally, specific individuals under the influence of anesthetics, alcohol, and drugs remain unsuitable for examinations [3]. Thus, lie detection using machine learning methods has garnered interest due to its enhanced objectivity and efficiency.

Previously, Opancina et al. [4] mentioned that combining interview patterns with neuroimaging techniques, such as functional magnetic resonance imaging (fMRI), can be used for lie detection. Nonetheless, fMRI relies on detecting brain metabolic activities, rendering it time-consuming in practice and unfavorable for designing portable systems [5,6]. In contrast, electroencephalography (EEG), a non-invasive way for investigating brain activities, only requires electrodes placed on the scalp to collect biological signals, providing better operability and safety. More importantly, EEG signals exhibit high temporal resolution and relatively low cost, facilitating the real-time recording of neural variations during cognitive, emotional, and decision-making processes in the brain [7]. Meanwhile, related works [8,9] found that when an individual lies, the brain engages in inhibiting truthful information and constructing false information, which can be viewed as neurophysiological biomarkers in EEG signals for lie detection.

Generally, to obtain valuable characteristics pertinent to lie detection from EEG signals, feature extraction is an essential step. Traditional approaches primarily focus on time-domain and frequency-domain analysis. As for the time domain, extensively used features include amplitude, latency, and waveform morphology, such as components of event-related potentials (ERPs) [10]. Specifically, P300 amplitude and latency, which are associated with attention allocation and information updating, have been employed in this field [11]. However, time-domain features are often susceptible to noise and struggle to denote the dynamic changes of the EEG signals [12]. Frequency-domain techniques enable the extraction of power spectra and phase features from different frequency bands that reflect various cognitive states [13,14,15]. Furthermore, entropy-based analyses have emerged as a powerful solution for quantifying the complexity, regularity, and information content of EEG signals [16]. Nevertheless, traditional single-scale entropy measures, such as approximate entropy, permutation entropy, and sample entropy, are insufficient for identifying biomarkers of EEG signals across different time or frequency scales. This limitation indicates that crucial dynamic information related to lie detection is missed if the analysis is restricted to a single scale. Hence, the multi-scale entropy approach is more appropriate for investigating complex brain activities under diverse conditions. Regarding the frequency-domain multi-scale entropy, Yu et al. [17] used Butterworth filters to decompose EEG signals into four frequency bands for emotion recognition. Then, they extracted differential entropy, nonlinear entropy, and maximum entropy from each frequency band to achieve good performance through a multi-band approach. Niu et al. [18] investigated the characteristics of multi-band by combining Shannon-based multivariate permutation entropy and conditional-based multivariate sample entropy, offering a perspective for quantifying complex EEG dynamics. Chen et al. [19] extract a series of brain maps based on spatial features to form frequency-domain entropy for analyzing EEG signals.

Concerning multi-scale entropy, they helpfully address the issue of information loss in traditional coarse-graining methods. For example, Shang et al. [20] claimed the drawback of coarse-graining methods, where practical information diminishes with increasing scale, leading to reduced entropy stability. Therefore, the concept of time shift was applied by proposing the time-shifted multi-scale increment entropy, which utilizes the property of Higuchi fractal dimension to generate a new set of time series with fractal curve characteristics on the original time scale. It not only thoroughly investigates the irregularity of time series data but also preserves vital structural information. Fan et al. [21] found that utilizing the numerical features of dispersion patterns as a new metric makes it proper for quantifying fuzzy dispersion entropy singularity. Their experiments revealed that combining amplitude-based time-shifted coarse-graining methods with fuzzy dispersion entropy provides valuable monitoring indicators for multi-scale calculations. Zheng et al. [22] reported that traditional slope entropy only analyzes time series data at a single scale, neglecting vital information at other scales. Therefore, they developed an improved time-shifted multi-scale slope entropy to enhance classification performance. As discussed, the aforementioned multi-scale entropy approaches facilitate the analysis of signal complexity and dynamics, laying the groundwork for EEG-based lie detection.

Recently, deep learning has brought advantages to EEG-based lie detection, with its automatic high-level feature learning capability [23,24]. Convolutional neural networks (CNNs) and long short-term memory (LSTM) networks are two widely used models in this field. For instance, Saryazdi et al. [25] converted 1D EEG signals into 2D images and then utilized a deep CNN (DCNN) model to achieve lie detection with good results. Aslan et al. [26] employed the discrete wavelet transform (DWT) and min-max normalization preprocessing on EEG signals, subsequently designing an LSTMNCP model that combines LSTM and neural circuit policy (NCP), where the NCP replaces the traditional fully connected layers in LSTM to achieve better computational efficiency and reduced neuron consumption. Rahmani et al. [27] combined a deep graph convolutional network (GCN) with type-2 fuzzy sets (TF-2), acquiring over 90% accuracy in lie detection. Additionally, the V-TAM model proposed by AlArfaj and Mahmoud [28] realized lie detection by fusing spatiotemporal features of EEG signals.

Despite progress in deep learning for EEG-based lie detection, most approaches tend to utilize multi-channel input, which increases data complexity and poses challenges for device portability, as multi-channel data sources often result in redundant data, computational burden, and increased hardware requirements. For example, a 64-channel EEG system makes it challenging to deploy outside of a laboratory setting. That means it is necessary to perform channel selection to investigate representative channels, where the fewer the channels, the fewer electrodes need to be placed on the scalp.

A review of previous works reveals several persistent challenges that hinder the practical application of EEG-based lie detection. First, many high-performing methods rely on a large number of EEG channels (often 16 or more), which limits their potential for portable, real-world applications. Second, conventional feature extraction techniques, particularly single-scale entropy and traditional coarse-graining methods, usually suffer from information loss, potentially overlooking subtle neural markers of deception. Ultimately, the current trend towards computationally intensive deep learning models often overlooks the need for lightweight and efficient systems. Hence, this study is specifically designed to address these gaps by proposing a multi-scale entropy feature that is effective even with a single channel, paving the way for a more practical EEG-based lie detection solution.

To this end, since the multi-scale entropy approach shows potential for analyzing complex EEG signals, this study proposes a novel method that fuses fuzzy entropy (FE), time-shifted multi-scale fuzzy entropy (TSMFE), and hierarchical multi-band fuzzy entropy (HMFE), enabling the multidimensional characterization of EEG signals. Then, with the help of machine learning classifiers, the multi-scale entropy feature vector from the channels selected can achieve a satisfactory accuracy in lie detection. Particularly, the main contributions of this study are presented as follows:

- A novel multi-scale entropy approach is proposed for EEG-based lie detection, which innovatively fuses fuzzy entropy (FE) with time-shifted multi-scale fuzzy entropy (TSMFE) and hierarchical multi-band fuzzy entropy (HMFE) to achieve a multidimensional characterization of EEG signals, overcoming limitations of the existing single-scale methods.

- A systematic channel selection analysis is conducted to identify the most representative EEG channels for lie detection, which provides an empirical basis for designing portable, few-channel lie detection devices, helpfully reducing hardware complexity.

- A comprehensive performance evaluation is performed using various machine learning classifiers (SVM, kNN, NB, and LDA), including both subject-dependent and cross-subject validation schemes to assess the proposed method’s effectiveness and generalization capability rigorously.

The remainder is organized as follows: Section 2 describes the proposed multi-scale entropy approach and the classifiers applied, along with the dataset used for evaluation. Section 3 shows the results from the subject-dependent experiment and the cross-subject experiment, as well as the channel selection. Section 4 conducts a comparative study with existing works and discusses the findings. Finally, Section 5 concludes this study and outlines future work.

2. Proposed Method

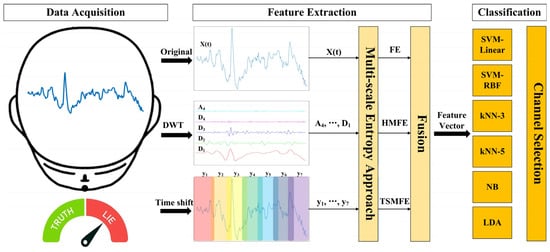

For better understanding, Figure 1 illustrates the overall flowchart of the proposed method, which primarily involves data acquisition, feature extraction, and classification, along with channel selection. More details are described as follows:

Figure 1.

Overall flowchart of the proposed EEG-based lie detection method, where different colors in the time shift represent various time-shifted sequences.

2.1. Feature Extraction

Single-scale entropy is insufficient to describe the intricate characteristics of EEG signals across diverse temporal or frequency scales. Therefore, this study focuses on the multi-scale entropy approach, where feature extraction of FE is performed first and then develops into two variants: TSMFE and HMFE, which can quantify multi-dimensional characterization to provide more discriminative entropy-based analyses for EEG-based lie detection.

2.1.1. Fuzzy Entropy (FE)

FE represents similarity within a time series as a fuzzy membership degree [29], which circumvents information loss that might arise from rigid thresholding and provides a stable and continuous measurement of complexity. For extracting FE from a given input time series like EEG signals, three parameters are needed: the embedding dimension m, which determines the length of the pattern vectors, the tolerance r, which controls the fuzziness of similarity, and the fuzzy factor n, which defines the shape of the membership function.

Initially, a series of overlapping m-dimensional embedded vectors is constructed from the original EEG signals to capture pattern information within local regions. This vector is represented as (1):

where denotes the i-th m-dimensional pattern vector starting from time point i, and a total of N – m + 1 vectors are constructed.

Next, the distance between any two m-dimensional vectors, and , needs to be calculated. The Chebyshev distance is used in this context, as it sensitively captures the maximum difference at any corresponding position within the pattern vectors. In EEG signals analysis, even if most time points match well, a few significantly deviating points indicate diverse patterns. Therefore, the Chebyshev distance directly reflects this worst-case dissimilarity, aiding in the identification of abrupt changes in the signals, expressed as (2):

where means the maximum difference between two m-dimensional pattern vectors.

Subsequently, to convert the distances between pattern vectors into a measure of similarity, a fuzzy membership function (μ) is applied, which maps distance values within the range [0, 1], representing the degree of similarity between them. This choice not only provides a smooth and continuous decay of similarity, avoiding abrupt changes and information loss caused by threshold selection in traditional binarization methods, but also results in a rapid exponential decrease in membership values as the distance increases, which implies that only very close patterns are considered highly similar. Its mathematical expression is (3):

The pattern matching degree is then calculated, which reveals the average similarity of all possible pairs of m-dimensional pattern vectors, denoted as (4):

Specifically, this matching degree can be interpreted as the average probability that two m-dimensional patterns randomly drawn from the original signals match each other, representing the probability of their similarity.

The physical significance of FE lies in the observation that if a time series exhibits higher complexity, the similarity of its patterns will decrease more significantly when transitioning from m to m + 1 dimensions, resulting in a higher value. Conversely, if the series is more regular and predictable, the FE will be lower. Therefore, the final FE value also requires the participation of , expressed as (5):

2.1.2. Time-Shifted Multi-Scale Fuzzy Entropy (TSMFE)

TSMFE draws upon the concept of time-shifted sequence analysis. By constructing multiple time-shifted subsequences across different time scales, it circumvents the potential information loss that occurs during traditional coarse-graining processes. For an EEG signal , extracting TSMFE requires presetting the embedding dimension m, tolerance r, fuzzy factor n, and maximum time interval scale factor . Notably, the parameters for all entropy calculations are set based on common practices in biomedical signal processing and the preliminary evaluations. The embedding dimension m is set to 2, and the fuzzy factor n is set to 2. The tolerance r is set to 0.15 times the standard deviation of the corresponding time series, which allows for adaptive thresholding based on signal amplitude. For the multi-scale analysis, the maximum scale factor kmax is set to 10. These values are chosen since they have been widely established in the literature for providing a robust measure of complexity in EEG signals, while also balancing feature effectiveness with computational efficiency.

First, k time-shifted subsequences are constructed, which have different starting points but the same sampling intervals to prevent potential smoothing of signals. Each time-shifted subsequence begins from the j-th data point of the original signals and is sampled with a step size of k, denoted as (6) and (7):

where indicates the total number of data points that can be extracted starting from the j-th point of the original sequence with a step size of k. Usually, the length of each subsequence is at least m + 1 for calculation. Then, the FE value for each of the k time-shifted subsequences is calculated according to (5), as shown in (8):

where represents the FE value of the j-th time-shifted sequence at scale k. If a subsequence’s length is insufficient or if the FE calculation fails, it is 0 to prevent unreasonable influence on subsequent average values.

Next, at each scale k, the TSMFE is defined as the average of the FE values of all k time-shifted subsequences, which facilitates that the complexity measure at each scale fully considers all possible time-shifted starting points at that scale as (9):

By iterating all scales, a TSMFE feature vector is obtained, which comprehensively describes the variation trend of the EEG signals across multiple time scales, providing multi-scale time-domain characterization (10):

2.1.3. Hierarchical Multi-Band Fuzzy Entropy (HMFE)

Different frequency bands of EEG signals are associated with distinct cognitive states and physiological processes that occur in the brain. For example, delta bands (0–4 Hz) are typically associated with deep sleep states, theta bands (4–8 Hz) with memory and emotional processing, alpha bands (8–13 Hz) with relaxed states, and beta bands (13–30 Hz) with active thinking and attention. To obtain a more comprehensive understanding of different frequency bands, HMFE is proposed by decomposing the original signals into different frequency bands through DWT [30] and then calculating the FE feature separately for each one.

Technically, extracting the HMFE feature requires specifying embedding dimension m, tolerance r, fuzzy factor n, the selected wavelet basis ψ, and the decomposition level L. First, the x(t) is decomposed into L layers of frequency band coefficients using the DWT, which conducts decomposition at different frequency resolutions through a series of orthogonal filters, yielding coefficients across various frequency bands, represented as (11):

where AL means the L-th level approximation coefficients, corresponding to the lowest frequency components of the EEG signals, Di denotes the i-th level detail coefficients, corresponding to the components in different high-frequency bands, where i = 1, 2, …, L. Each level of detail coefficients corresponds to a specific frequency band, ordered from high to low.

After obtaining the DWT coefficients, it is necessary to reconstruct the time-domain detail corresponding to each frequency band through inverse DWT (IDWT). During this process, only the coefficients of the current band are retained, while coefficients from others are set to zero, ensuring that each reconstructed signal ( and ) contains information only from its specific range (12) and (13):

where ‘0’ indicates that the coefficients for the corresponding frequency bands are set to zero. In this study, the ‘wrcoef’ function provided by MATLAB R2023b is used to reconstruct specific levels of approximation or detail coefficients from the DWT. Furthermore, to quantify the complexity of each frequency band, and considering that the energies of different frequency bands may vary significantly, an adaptive adjustment is applied to and for each reconstructed band, based on the standard deviation of each band and , and the standard deviation of the original signal . It can avoid issues where the same tolerance leads to insufficient sensitivity for low-energy bands or excessive sensitivity for high-energy bands (14) and (15):

As a result, with the help of the adjusted tolerance, the FE for each reconstructed frequency band is calculated according to (5) and can be expressed as (16) and (17):

Lastly, the FE values from all frequency bands are combined to form the HMFE, aiding in the differentiation of lie state from frequency characteristics (18):

2.2. Classification Method

To establish a lightweight entropy-based method, different classifiers (SVM, kNN, NB, and LDA) are employed to train and test the extracted multi-scale entropy features, which are then fused into a vector form to serve as the input data for the classifiers. After thoroughly assessing the classifiers for discriminating between lie and truth states, the most suitable one for lie detection can be determined in the proposed method.

First, SVM involves identifying a hyperplane that maximizes the classification margin, which separates data points belonging to different classes [31]. By maximizing this margin, SVM can achieve improved generalization ability, performing well even with limited training samples. In this study, two kernel functions are evaluated: linear (SVM-Linear) and RBF (SVM-RBF), which aim to investigate the influence of different decision boundary complexities on lie detection. The linear kernel is proper for linearly separable data, allowing for the construction of simple decision boundaries. On the other hand, the RBF kernel maps the original data to a higher-dimensional space, demonstrating good adaptability in complex data classification.

Second, kNN is an instance-based method, categorized as a non-parametric classification in machine learning [32]. In k-NN, for a sample with an unknown class, it identifies the k-nearest neighbors from the training set. The class of the unknown sample is then determined by weighted averaging based on the labels of these neighbors. In this study, two k values (k = 3 and k = 5) are assessed. The choice of these small, odd integers is a standard practice in binary classification to avoid tied votes. This selection also allows for a direct exploration of the bias-variance trade-off in a computationally efficient manner, as k = 3 results in a model with lower bias and higher variance, making it highly adaptive to local data structures, while k = 5 provides a smoother decision boundary with lower variance, which can enhance generalization. Given the goal of evaluating a lightweight system and the nature of the dataset, these values serve as effective and representative benchmarks for the kNN’s performance.

Third, LDA is a classical linear classification that finds one or more linear combinations of features such that the projections of data from different classes onto these linear combinations achieve maximum between-class variance and minimum within-class variance [33]. It assumes identical covariance matrices for all classes, offers high computational efficiency, and provides easily interpretable results, which makes it particularly well-suited for modeling and predicting classes given feature values.

Next, NB classifier assumes that all features are conditionally independent given the class [34]. Although this naive assumption rarely holds perfectly in real-world scenarios, it is favored for its simplicity, efficiency, adaptability to small sample sizes, and exemplary performance in EEG-based applications.

Moreover, accuracy is used to evaluate these classifiers. It denotes the proportion of correctly classified samples relative to the total number of samples (19):

where true positive (TP) means the number of samples whose true label is lie and are correctly predicted as lie, true negative (TN) represents the number of samples whose true label is truth and are correctly predicted as truth, false positive (FP) indicates the number of samples whose true label is truth but are incorrectly predicted as lie, and false negative (FN) means the number of samples whose true label is lie but were incorrectly predicted as truth.

Please note that while other metrics such as sensitivity, specificity, and the complete confusion matrix are often crucial for the method evaluation, accuracy is selected as the primary metric in this study due to the balanced nature of the experimental dataset, where each subject provides an equal number of trials for the ‘lie’ and ‘truth’ states (25 trials per class). Thus, in a perfectly balanced binary classification task, accuracy is a direct and unbiased metric to show overall performance, as a majority class does not skew it and can provide a straightforward measure of how well the classifiers can distinguish between the two different states accordingly. More details about the experimental dataset are described subsequently.

2.3. Experimental Dataset

In this study, method validation was conducted using the publicly available LieWaves dataset [35], which is specifically designed to offer high-quality EEG signals for neurophysiology-based lie detection by analyzing individual brain activities during lie and truth states. The data acquisition for the LieWaves dataset involves recruiting 27 healthy subjects, all are students or faculty members from the Faculty of Technology at Karadeniz Technical University, Türkiye. Their average age is 23.1 years, and none has any known health issues. Before the experiments, all subjects were fully informed about the content and procedures and voluntarily signed an ethics-informed consent form.

During the experiment, each subject underwent two independent paradigms designed to elicit truth and lie states using a concealed information test-style protocol. To prevent fatigue effects, ten different rosaries were used as visual stimuli, with five allocated to each paradigm, as shown in Figure 2.

Figure 2.

Ten rosaries act as the visual stimuli in the LieWaves dataset.

Regarding the truth paradigm, subjects were first familiarized with a set of five rosaries. During the EEG recording session, their tasks were to acknowledge recognition when these items were presented truthfully. Concerning the lie paradigm, subjects were shown a different set of five rosaries and were instructed to select one as a critical item. So, their tasks were to deceptively deny having seen this specific item when it was presented, constituting the lie condition accordingly.

For both paradigms, each subject completed 25 trials (five rosaries, each repeated five times). Each trial lasted three seconds, consisting of a one-second black screen prompt followed by a two-second visual stimulus presentation. Moreover, all subjects provided their responses, either a truthful expression or a deceptive one, by pressing a designated key on a keyboard. Consequently, 75 s (25 trials × 3 s) of EEG signals were collected from each subject for each paradigm.

The data acquisition was performed using an Emotiv Insight headset, which includes the five channels (AF3, T7, PZ, T8, and AF4). Particularly, its low number of channels and wireless, portable design provided convenience during EEG data collection. Additionally, it exhibited an internal sampling rate of 2048 Hz. However, the data has been downsampled internally before output, so the dataset provided data at a final sampling rate of 128 Hz. Meanwhile, to ensure data quality, raw EEG signals were pre-processed, which involved removing the direct current (DC) offset and then applying a band-pass filter with a frequency range of 0.5–45 Hz. This filtering step serves to eliminate low-frequency drifts (below 0.5 Hz) and high-frequency noise, including 50/60 Hz power-line interference, while preserving the primary EEG frequency bands of interest (delta, theta, alpha, and beta) for analysis. While filtering helps attenuate several high-frequency muscle activities, dedicated artifact removal techniques are necessary to address muscle artifacts. In short, the dataset has dimensions of 27 × 2 × 5 × 75, where ‘27’ represents the number of subjects, ‘2’ denotes the number of experimental paradigms (lie and truth), ‘5’ means the number of channels, and ‘75’ indicates the duration of each experiment in seconds. Based on this, two types of experiments (the subject-dependent experiment and the cross-subject experiment) were conducted, with each employing the feature vector extracted from the same EEG channel as input data. The details are as follows:

2.3.1. Subject-Dependent Experiment

The subject-dependent experiment primarily evaluates the proposed method for distinguishing between lie and truth states within the same individual and for identifying the representative channel of each subject. To this end, the 50 trials per subject are assessed by leave-one-out cross-validation (LOOCV). In this procedure, one trial serves as the test sample, and the remaining 49 trials are used for training. This process is iterated 50 times, such that each trial is used as the test sample exactly once. The final accuracy is the average performance across all 50 iterations. Additionally, 10-fold cross-validation is also performed. Through the two cross-validation methods, the accuracy of each channel using different classifiers can be fully evaluated for each subject.

2.3.2. Cross-Subject Experiment

The cross-subject experiment aims to assess the generalization capability of the proposed method on unseen subject data, which is vital when dealing with new users in practical cases. To this end, the leave-one-subject-out cross-validation (LOSOCV) is adopted. Specifically, in each iteration, samples from one subject (including all their trials) are selected as the test set, while all trials from the remaining 26 subjects constitute the training set. This process is iterated until all 27 subjects have served as the test set once. Finally, the average accuracy across all 27 iterations is calculated to reflect the average performance. Simultaneously, each channel and its corresponding accuracy are analyzed for each subject when serving as the test set, which also facilitates the channel selection.

3. Experimental Results

The experimental results are analyzed for two scenarios: subject-dependent and cross-subject. A thorough analysis of different channels is also conducted. Please note that for convenient presentation of the results, ‘C1’ to ‘C6’ sequentially refer to the six types of classifiers evaluated, where C1 is for SVM-Linear, C2 is for SVM-RBF, C3 is for kNN-3, C4 is for kNN-5, C5 is for NB, and C6 is for LDA. Meanwhile, ‘S1’ to ‘S27’ denote the 27 subjects in the dataset.

3.1. Subject-Dependent Results

Table 1 shows the accuracies for different classifiers across subjects under LOOCV and 10-fold cross-validation, where the best results are underlined in bold, and ‘SD’ refers to standard deviation.

Table 1.

Lie detection accuracy (%) using different classifiers in the subject-dependent experiment under LOOCV and 10-fold cross-validation.

From Table 1, it can be observed that in the subject-dependent experiment, C6 (LDA) achieves the highest average accuracies under two cross-validation methods, at 82.74% and 82.00%, respectively, which indicates that the proposed multi-scale entropy approach combined with LDA exhibits satisfactory performance in distinguishing lie and truth states within individuals. It is noteworthy that considerable variations in detection accuracy exist in several subjects. For instance, S2, S9, S13, and S25 consistently attained accuracies of up to 98% across both cross-validation methods, demonstrating high discriminability of their individual EEG signals in lie detection. However, S17, S19, and S23 show lower accuracies. Such disparity reflects inter-individual heterogeneity in physiological responses. To further investigate the specific performance of each subject under the LDA, particularly to select the representative channels, Table 2 shows the channels that yield the highest accuracy by utilizing the LDA classifier for all 27 subjects.

Table 2.

The channels that yield the highest accuracy when utilizing the LDA classifier for all 27 subjects in the subject-dependent experiment.

In Table 2, the statistical results indicate that PZ and T7 are representative, as their occurrences are the most frequent among all channels. From a neuroscience perspective, the PZ is commonly associated with cognitive control, while the T7 is related to language processing, memory retrieval, and auditory processing [36,37]. The involvement of PZ reflects the increased demand for executive functions, such as response inhibition (suppressing the truth) and cognitive monitoring (managing the narrative of the lie). Concurrently, the involvement of T7 highlights its crucial role in accessing relevant semantic knowledge and potentially rehearsing the false statement internally. Together, their prominences reveal that lying is not a localized process but instead involves a coordinated network that needs both executive control over behavior and complex semantic operations, offering neurophysiological support for lie detection.

3.2. Cross-Subject Results

Table 3 lists the accuracies of the cross-subject experiment using different classifiers under LOSOCV, where the best results are underlined in bold. ‘SD’ refers to standard deviation.

Table 3.

Lie detection accuracy (%) using different classifiers in the cross-subject experiment under LOSOCV.

Table 3 reveals that in the cross-subject experiment, C2 (SVM-RBF) achieves the highest average accuracy of 64.07%. Compared to the results in the subject-dependent experiment (approximately 82%), the cross-subject accuracies are generally lower, which aligns with expectations, as generalizing across individual data is more challenging, requiring the classifier to learn features unaffected by inter-individual variability. Hence, there is a trade-off between the generalization and accuracy. Now, to further analyze the channel selection in the cross-subject experiment, Table 4 lists the channels that yield the highest accuracy when using the SVM-RBF classifier.

Table 4.

The channels that yield the highest accuracy when using the SVM-RBF classifier in the cross-subject experiment.

From Table 4, the statistical results indicate that PZ and T7 channels also exhibit a prominent occurrence as representatives, which support the potential importance of PZ and T7 channels in lie detection, as these two channels consistently provide relatively stable discriminative information through entropy-based analyses, even under the cross-subject generalization assessment, which corroborates the roles these two specific channel locations play in processing cognitive state related to lying.

3.3. Channel Selection Results

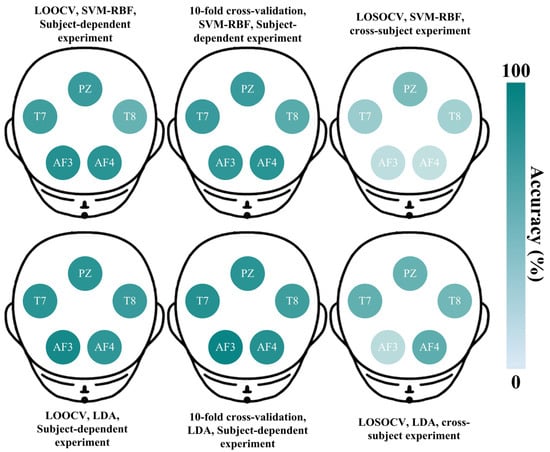

To thoroughly analyze channel selection in EEG-based lie detection, the accuracies across channels for the two best classifiers (SVM-RBF and LDA) are investigated in detail under three cross-validation methods (LOOCV, 10-fold cross-validation, and LOSOCV). As an example, Figure 3 illustrates the scalp maps from subject-dependent and cross-subject results of S4.

Figure 3.

Lie detection accuracies across five channels for the two classifiers (SVM-RBF and LDA) under three cross-validation methods (LOOCV, 10-fold cross-validation, and LOSOCV). The data source is from S4 in the LieWaves dataset.

In Figure 3, the deeper colors indicate higher accuracy. In the subject-dependent experiment of S4, both SVM-RBF and LDA exhibit satisfactory accuracies across five channels. The PZ and T7 channels consistently demonstrate better discriminative abilities in lie detection, which aligns with the results in Table 2. In the cross-subject experiment, the overall results indicate a general decrease in accuracy. While the PZ and T7 channels still show relatively deeper colors, further demonstrating their importance for lie detection. Based on this, the representative channels from both subject-dependent and cross-subject experiments are statistically illustrated in Figure 4.

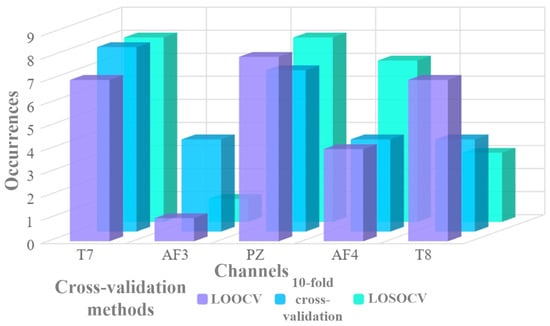

Figure 4.

Statistical results of the representative channels under subject-dependent and cross-subject experiments for EEG-based lie detection, where PZ and T7 channels show higher cumulative occurrences in this context.

Figure 4 presents the cumulative occurrences of each EEG channel serving as the representative channel across all cross-validation methods. It is evident that the T7 and PZ channels appear most frequently as the best-performing channels. It is also noteworthy that the T8 channel demonstrates a strong performance, although its cumulative occurrence is lower than that of T7 and PZ. The prominence of such channels, particularly T7 and PZ, not only highlights key brain regions of focus for lie detection but also provides empirical evidence for channel selection. In developing portable EEG-based lie detection devices with the fewest possible channels, the higher incidence rate of T7 and PZ makes them the primary candidates.

To further investigate the potential of a multi-channel configuration and to provide a direct comparison against the single-channel findings, an additional analysis is conducted using two-channel combinations. Here, the three channels that performed most prominently in the single-channel analysis (PZ, T7, and T8) are chosen to create feature sets for all their pairwise combinations (PZ-T7, PZ-T8, and T7-T8). The classification is then repeated using the same validation schemes and best-performing classifiers. The detailed results are presented in Table 5. ‘SD’ refers to standard deviation.

Table 5.

Lie detection accuracies (%) of the multi-channel configuration using SVM-RBF and LDA with different cross-validation methods.

4. Discussion

To contextualize the findings and evaluate the practical advantages of this study, several recent works in EEG-based lie detection are considered, as summarized in Table 6. It is crucial to emphasize that a direct comparison of accuracy between these studies is inappropriate, as they employ different datasets, subjects, and specific experimental protocols. Therefore, the purpose of Table 6 is not to claim numerical superiority but to highlight the key methodological advantage, which serves as a methodological summary to frame this study’s contribution in terms of efficiency and hardware requirements, contrasting the single-channel approach with the multi-channel systems used in other conceptually similar works.

Table 6.

A methodological summary of recent EEG-based lie detection works.

In this study, the discrimination between lie and truth can be achieved using a single-channel data based on the multi-scale entropy approach combined with conventional classifiers. Within the subject-dependent experiment, satisfactory performance is demonstrated, with the LDA classifier yielding average accuracies of 82.74% (LOOCV) and 82.00% (10-fold cross-validation). These results reveal that the multi-scale entropy features effectively capture the subtle neurophysiological behavior associated with the lie state. The prominence of the PZ (midline parietal) and T7 (left temporal) channels across experiments strongly suggests their critical involvement in the neurocognitive substrates of lie detection. However, it is essential to acknowledge the strong performance of the T8 channel as well, which suggests that the right temporal lobe plays a complementary role, indicating that a bilateral temporal network is involved in deception. The decision to prioritize PZ and T7 is driven by the practical goal of designing a system with the minimum number of channels necessary for robust performance. For a hypothetical two-channel device, PZ and T7 are the empirically supported optimal choices from our findings. Nonetheless, the notable results from T8 open an engaging way for future research into whether a three-channel configuration (PZ, T7, and T8) would yield a significant improvement in accuracy. As mentioned, the PZ channel is implicated in attention, working memory, and context integration, while the T7 channel is associated with semantic processing, both of which are key processes in deception. Such findings imply the proposed entropy-based approach contributes to representative channel selection, providing insightful understandings in the field of electrophysiological signals.

Moreover, when benchmarked against established EEG-based lie detection techniques, the significance of the single-channel results found in this study becomes evident. Although better accuracies have been reported using multi-channel approaches, they require greater computational resources, complex spatial filtering, and a larger number of electrodes placed on the scalp for recordings. In contrast, the proposed method achieves around 82% accuracy using only single-channel data, demonstrating a trade-off. An additional investigation reinforces this consideration, where combining features from the best-performing channels (e.g., PZ and T7) surprisingly led to a decrease in accuracy, dropping from 82.74% to 77.56% in the subject-dependent LOOCV test. This counterintuitive result is likely due to the curse of dimensionality, since the increased feature space hinders the classifier’s performance given the limited number of trials, which also provides compelling evidence that a single, well-selected channel is not merely a compromise for efficiency but is, in fact, the optimal approach for maximizing accuracy with the proposed multi-scale entropy features. Consequently, the representative channel selection performed in this study represents an advancement in computational cost and hardware deployment, reducing redundant data acquisition while maintaining satisfactory performance for individual-centric daily applications.

Next, an interesting observation from the subject-dependent results (Table 1) is that the kNN-3 classifier occasionally outperforms the kNN-5 classifier for several subjects. While one might typically expect performance to improve with a slightly larger k, this phenomenon can be explained by the bias-variance trade-off. A smaller k value, such as 3, creates a highly flexible decision boundary that is sensitive to the local structure of the data (i.e., low bias, high variance). For subjects whose EEG feature distributions for lie and truth are complex and intricately separated, this adaptive boundary may be superior at capturing the fine distinctions. In contrast, k = 5 enforces a smoother decision boundary, which, while potentially more robust against noise, might oversmooth these critical separating patterns for certain individuals, leading to a marginal decrease in accuracy. Thus, such variations reveal the inter-subject variability in neurophysiological responses and the challenge of finding a one-size-fits-all model.

Finally, the generalization needs to be enhanced within the cross-subject experiment, where an average accuracy of 64.07% is attained using the SVM-RBF classifier. While this result highlights the inherent difficulty of subject-independent lie detection and the influence of individual neurophysiological variability, it concurrently validates the transferable discriminative ability of the extracted entropy-based features. The selection of PZ and T7 as representative channels also provides valuable insights into the field of neuroscience. It can be said that the convergence of single-channel EEG processing with electrophysiological discoveries establishes a suitable pathway toward developing portable devices, further lowering deployment barriers while advancing the fundamental understanding of the neural dynamics underlying lie states.

5. Conclusions

This study establishes an EEG-based lie detection method by extracting multi-scale entropy with representative channel selection. Designed to comprehensively quantify EEG signal complexity across both temporal and spectral domains, the proposed entropy-based approach overcomes limitations inherent in traditional single-scale and coarse-graining methods. The experimental evaluation on the LieWaves dataset demonstrates satisfactory performance in the subject-dependent experiment, with the LDA classifier achieving an accuracy of approximately 82%. Then, the cross-subject experiment proves more challenging, yielding an accuracy of 64.07% using the SVM-RBF classifier. Moreover, the representative channels are found as PZ and T7, which are involved in insightful neurocognitive processes of lie detection, providing empirical evidence for optimizing channel selection on the scalp for practical applications. Future work will focus on enhancing the robustness of the multi-scale entropy approach in terms of cross-subject generalization capabilities, particularly in real-world scenarios with environmental noise and increased cognitive load, advancing the evolution of EEG-based applications.

Author Contributions

Conceptualization, J.L. (Jiawen Li), G.F., C.L., S.Z. and R.C.; Data curation, G.F., C.L. and X.R.; Formal analysis, S.Z., X.L., L.W. and M.I.V.; Funding acquisition, J.L. (Jiawen Li), S.Z., M.I.V. and R.C.; Investigation, G.F., C.L., J.L. (Jujian Lv) and R.C.; Methodology, J.L. (Jiawen Li), G.F., X.R., L.W. and J.L. (Jujian Lv); Project administration, S.Z., M.I.V. and R.C.; Resources, J.L. (Jiawen Li), X.L., L.W., J.L. (Jujian Lv) and R.C.; Software, J.L. (Jiawen Li), G.F., C.L., X.R. and J.L. (Jujian Lv); Supervision, S.Z., M.I.V., J.L. (Jujian Lv) and R.C.; Validation, J.L. (Jiawen Li), G.F., S.Z., X.L. and R.C.; Visualization, G.F., C.L. and X.R.; Writing—original draft, J.L. (Jiawen Li), G.F., C.L., X.R. and S.Z.; Writing—review and editing, X.L., L.W., M.I.V., J.L. (Jujian Lv) and R.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Open Research Fund of Key Laboratory of Cognitive Neuroscience and Applied Psychology (Education Department of Guangxi Zhuang Autonomous Region) under Grant 2025KLCNAP005, in part by the Open Research Fund of State Key Laboratory of Digital Medical Engineering under Grant 2025-M10, in part by the Open Project Program of State Key Laboratory of Computer-Aided Design and Computer Graphics (CAD&CG) under Grant A2533, in part by the Sichuan Science and Technology Program under Grant 2025ZNSFSC0780, in part by the Foundation of the 2023 Higher Education Science Research Plan of the China Association of Higher Education under Grant 23XXK0402, in part by the Foundation of the Sichuan Research Center of Applied Psychology (Chengdu Medical College) under Grant CSXL-25102, in part by the Neijiang Philosophy and Social Science Planning Project under Grant NJ2025ZD007, in part by the Key Discipline Improvement Project of Guangdong Province under Grant 2022ZDJS015, in part by the Guangdong Province Ordinary Colleges and Universities Young Innovative Talents Project under Grant 2023KQNCX036, in part by the Scientific Research Capacity Improvement Project of the Doctoral Program Construction Unit of Guangdong Polytechnic Normal University under Grant 22GPNUZDJS17, in part by the Graduate Education Demonstration Base Project of Guangdong Polytechnic Normal University under Grant 2023YJSY04002, and in part by the Research Fund of Guangdong Polytechnic Normal University under Grant 2022SDKYA015.

Data Availability Statement

The datasets generated and/or analyzed during the current study are available at https://github.com/zyzc75/ME-Lie-Detection (accessed on 1 September 2025).

Acknowledgments

The authors would like to appreciate the special contributions from Key Laboratory of Cognitive Neuroscience and Applied Psychology (Education Department of Guangxi Zhuang Autonomous Region), State Key Laboratory of Digital Medical Engineering, State Key Laboratory of Computer-Aided Design and Computer Graphics (CAD&CG), and ZUMRI-LYG Joint Laboratory.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Taha, B.N.; Baykara, M.; Alakuş, T.B. Neurophysiological approaches to lie detection: A systematic review. Brain Sci. 2025, 15, 519. [Google Scholar] [CrossRef]

- Elbatanouny, H.; Al Roken, N.; Hussain, A.; Aslam, S.; Jaber, F.; Abbas, S. A comprehensive analysis of deception detection techniques leveraging machine learning. Expert Syst. Appl. 2025, 283, 127601. [Google Scholar] [CrossRef]

- Li, J.; Feng, G.; Ling, C.; Ren, X.; Liu, X.; Zhang, S.; Wang, L.; Chen, Y.; Zeng, X.; Chen, R. A resource-efficient multi-entropy fusion method and its application for EEG-based emotion recognition. Entropy 2025, 27, 96. [Google Scholar] [CrossRef] [PubMed]

- Opancina, V.; Sebek, V.; Janjic, V. Advanced neuroimaging and criminal interrogation in lie detection. Open Med. 2024, 19, 20241032. [Google Scholar] [CrossRef] [PubMed]

- Yadav, A.; Agrawal, M.; Joshi, S.D. EEG-based source localization with enhanced virtual aperture using second order statistics. J. Neurosci. Methods 2023, 389, 109835. [Google Scholar] [CrossRef] [PubMed]

- Saad, J.; Evans, A.; Jaoui, I.; Frosolone, M.; Arpaia, P.; Gargiulo, L.; Abdalla, M.; Ali, H.; Mohajelin, F.; Rahmani, M. Comparison metrics and power trade-offs for BCI motor decoding circuit design. Front. Hum. Neurosci. 2025, 19, 1547074. [Google Scholar] [CrossRef]

- Li, J.W.; Barma, S.; Mak, P.U.; Chen, F.; Li, C.; Li, M.T.; Vai, M.I.; Pun, S.H. Single-channel selection for EEG-based emotion recognition using brain rhythm sequencing. IEEE J. Biomed. Health Inform. 2022, 26, 2493–2503. [Google Scholar] [CrossRef]

- Gao, J.; Gu, L.; Min, X.; Wang, Z.; Song, Z. Brain fingerprinting and lie detection: A study of dynamic functional connectivity patterns of deception using EEG phase synchrony analysis. IEEE J. Biomed. Health Inform. 2021, 26, 600–613. [Google Scholar] [CrossRef]

- Gao, J.; Min, X.; Kang, Q.; Wang, Z.; Gu, L.; Song, Z. Effective connectivity in cortical networks during deception: A lie detection study based on EEG. IEEE J. Biomed. Health Inform. 2022, 26, 3755–3766. [Google Scholar] [CrossRef]

- Sasatake, Y.; Matsushita, K. EEG baseline analysis for effective extraction of P300 event-related potentials for welfare interfaces. Sensors 2025, 25, 3102. [Google Scholar] [CrossRef]

- Eng, C.M.; Patton, L.A.; Bell, M.A. Infant attention and frontal EEG neuromarkers of childhood ADHD. Dev. Cogn. Neurosci. 2025, 72, 101524. [Google Scholar] [CrossRef]

- Simfukwe, C.; An, S.S.A.; Youn, Y.C. Time-frequency domain analysis of quantitative electroencephalography as a biomarker for dementia. Diagnostics 2025, 15, 1509. [Google Scholar] [CrossRef]

- Zhang, S.; Ling, C.; Wu, J.; Li, J.; Wang, J.; Yu, Y.; Liu, X.; Lv, J.; Vai, M.I.; Chen, R. EEG-ERnet: Emotion recognition based on rhythmic EEG convolutional neural network model. J. Integr. Neurosci. 2025, 24, 41547. [Google Scholar] [CrossRef]

- Liu, S.; Zheng, Y.; Zeng, X.; Yuan, J.; Li, J.; Chen, R. Hierarchical cascaded networks with multi-task balanced loss for fine-grained hashing. Eng. Appl. Artif. Intell. 2025, 152, 110659. [Google Scholar] [CrossRef]

- Arpaia, P.; Frosolone, M.; Gargiulo, L.; Greco, A.; Nardone, M.; Peluso, F.; Rossi, F.; Scala, A. Specific feature selection in wearable EEG-based transducers for monitoring high cognitive load in neurosurgeons. Comput. Stand. Interfaces 2025, 92, 103896. [Google Scholar] [CrossRef]

- Cacciotti, A.; Pappalettera, C.; Miraglia, F.; Ruggieri, A.; Iellamo, F. Complexity analysis from EEG data in congestive heart failure: A study via approximate entropy. Acta Physiol. 2023, 238, e13979. [Google Scholar] [CrossRef] [PubMed]

- Yu, H.; Xiong, X.; Zhou, J.; Chen, J.; Liu, Z.; Huang, D.; Li, S. CATM: A multi-feature-based cross-scale attentional convolutional EEG emotion recognition model. Sensors 2024, 24, 4837. [Google Scholar] [CrossRef] [PubMed]

- Niu, Y.; Xiang, J.; Gao, K.; Cao, Y.; Wu, J. Multi-frequency entropy for quantifying complex dynamics and its application on EEG data. Entropy 2024, 26, 728. [Google Scholar] [CrossRef]

- Chen, Q.; Mao, X.; Song, Y.; Gao, Y.; Liu, C. An EEG-based emotion recognition method by fusing multi-frequency-spatial features under multi-frequency bands. J. Neurosci. Methods 2025, 415, 110360. [Google Scholar] [CrossRef]

- Shang, H.; Huang, T.; Wang, Z.; Sun, X.; Lv, Y. Research on a transformer vibration fault diagnosis method based on time-shift multiscale increment entropy and CatBoost. Entropy 2024, 26, 721. [Google Scholar] [CrossRef]

- Fan, Y.; Lv, Y.; Yuan, R.; Niu, C.; Dong, X.; Pan, H.; Yang, Y. Amplitude-based time-shift multiscale feature fuzzy dispersion entropy: A novel health indicator for aero-engine fault diagnosis. IEEE Sens. J. 2025, 25, 15693–15710. [Google Scholar] [CrossRef]

- Zheng, J.; Wang, J.; Pan, H.; Sun, B. Refined time-shift multiscale slope entropy: A new nonlinear dynamic analysis tool for rotating machinery fault feature extraction. Nonlinear Dyn. 2024, 112, 19887–19915. [Google Scholar] [CrossRef]

- Zeng, X.; Zhou, J.; Liu, D.; Yuan, J.; Yin, M.; Xu, W.; Liu, S. Large-scale fine-grained image retrieval via Proxy Mask Pooling and multilateral semantic relations. Knowl.-Based Syst. 2025, 326, 114018. [Google Scholar] [CrossRef]

- Zeng, X.; Zhou, J.; Ye, C.; Yuan, J.; Li, J.; Jiang, J.; Chen, R.; Liu, S. Stacked and decorrelated hashing with AdapTanh for large-scale fine-grained image retrieval. Signal Process. Image Commun. 2025, 138, 117374. [Google Scholar] [CrossRef]

- Rahimi Saryazdi, A.; Bayani, A.; Ghassemi, F.; Tabanfar, Z.; Alishahi, S. Brain functional connectivity network during deception: A visibility graph approach. Eur. Phys. J. Spec. Top. 2025, 234, 937–950. [Google Scholar] [CrossRef]

- Aslan, M.; Baykara, M.; Alakuş, T.B. LSTMNCP: Lie detection from EEG signals with novel hybrid deep learning method. Multimed. Tools Appl. 2024, 83, 31655–31671. [Google Scholar] [CrossRef]

- Rahmani, M.; Mohajelin, F.; Khaleghi, N.; Saiedian, N. An automatic lie detection model using EEG signals based on the combination of type 2 fuzzy sets and deep graph convolutional networks. Sensors 2024, 24, 3598. [Google Scholar] [CrossRef]

- AlArfaj, A.A.; Mahmoud, H.A.H. A deep learning model for EEG-based lie detection test using spatial and temporal aspects. Comput. Mater. Continua 2022, 73, 5655–5669. [Google Scholar] [CrossRef]

- Xu, X.; Tang, J.; Xu, T.; Yang, X.; Xu, G.; Yao, S.; Chen, Z. Mental fatigue degree recognition based on relative band power and fuzzy entropy of EEG. Int. J. Environ. Res. Public Health 2023, 20, 1447. [Google Scholar] [CrossRef]

- Dhongade, D.; Captain, K.; Dahiya, S. EEG-based schizophrenia detection: Integrating discrete wavelet transform and deep learning. Cogn. Neurodyn. 2025, 19, 62. [Google Scholar] [CrossRef]

- Shanmugam, S.; Dharmar, S. Implementation of a non-linear SVM classification for seizure EEG signal analysis on FPGA. Eng. Appl. Artif. Intell. 2024, 131, 107826. [Google Scholar] [CrossRef]

- Chen, X.; Zhang, Y.; Ai, G.; Yang, X.; Sun, Y.; Li, Y. Distance optimization KNN and EMD based lightweight hardware IP core design for EEG epilepsy detection. Microelectron. J. 2024, 151, 106335. [Google Scholar] [CrossRef]

- Wu, S.; Bhadra, K.; Giraud, A.L.; Noury, J.; De Lucia, M. Adaptive LDA classifier enhances real-time control of an EEG brain–computer interface for decoding imagined syllables. Brain Sci. 2024, 14, 196. [Google Scholar] [CrossRef]

- Abdullayeva, E.; Örnek, H.K. Diagnosing epilepsy from EEG using machine learning and Welch spectral analysis. Trait. Signal 2024, 41, 971–977. [Google Scholar] [CrossRef]

- Aslan, M.; Baykara, M.; Alakus, T.B. LieWaves: Dataset for lie detection based on EEG signals and wavelets. Med. Biol. Eng. Comput. 2024, 62, 1571–1588. [Google Scholar] [CrossRef]

- Tackett, W.S.; Mechanic-Hamilton, D.; Das, S.; Mojena, M.; Stein, J.M.; Davis, K.A.; Detre, J.A. Lateralization of memory function in temporal lobe epilepsy using scene memory fMRI. Epilepsia Open 2024, 9, 2487–2494. [Google Scholar] [CrossRef]

- López-Caballero, F.; Coffman, B.A.; Curtis, M.; Sklar, A.L.; Yi, S.; Salisbury, D.F. Auditory sensory processing measures using EEG and MEG predict symptom recovery in first-episode psychosis with a single-tone paradigm. NeuroImage Clin. 2025, 45, 103730. [Google Scholar] [CrossRef] [PubMed]

- Edla, D.R.; Bablani, A.; Bhattacharyya, S.; Tripathi, D. Spatial spiking neural network for classification of EEG signals for concealed information test. Multimed. Tools Appl. 2024, 83, 79259–79280. [Google Scholar] [CrossRef]

- Wang, H.; Qi, Y.; Yu, H.; Liu, Y.; Gu, F. RCIT: An RSVP-based concealed information test framework using EEG signals. IEEE Trans. Cogn. Dev. Syst. 2021, 14, 541–551. [Google Scholar] [CrossRef]

- Rahimi Saryazdi, A.; Ghassemi, F.; Tabanfar, Z.; Jafari, M.; Kouchaki, M.T. EEG-based deception detection using weighted dual perspective visibility graph analysis. Cogn. Neurodyn. 2024, 18, 3929–3949. [Google Scholar] [CrossRef] [PubMed]

- Bablani, A.; Edla, D.R.; Tripathi, D.; Choubey, N.K. An efficient concealed information test: EEG feature extraction and ensemble classification for lie identification. Mach. Vis. Appl. 2019, 30, 813–832. [Google Scholar] [CrossRef]

- Kang, Q.; Li, F.; Gao, J. Exploring the functional Brain Network of Deception in source-level EEG via partial mutual information. Electronics 2023, 12, 1633. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).