Abstract

The paradigm-shifting developments of cryptography and information theory have focused on the privacy of data-sharing systems, such as epidemiological studies, where agencies are collecting far more personal data than they need, causing intrusions on patients’ privacy. To study the capability of the data collection while protecting privacy from an information theory perspective, we formulate a new distributed multiparty computation problem called privacy-preserving epidemiological data collection. In our setting, a data collector requires a linear combination of K users’ data through a storage system consisting of N servers. Privacy needs to be protected when the users, servers, and data collector do not trust each other. For the users, any data are required to be protected from up to E colluding servers; for the servers, any more information than the desired linear combination cannot be leaked to the data collector; and for the data collector, any single server can not know anything about the coefficients of the linear combination. Our goal is to find the optimal collection rate, which is defined as the ratio of the size of the user’s message to the total size of downloads from N servers to the data collector. For achievability, we propose an asymptotic capacity-achieving scheme when , by applying the cross-subspace alignment method to our construction; for the converse, we proved an upper bound of the asymptotic rate for all achievable schemes when . Additionally, we show that a positive asymptotic capacity is not possible when . The results of the achievability and converse meet when the number of users goes to infinity, yielding the asymptotic capacity. Our work broadens current researches on data privacy in information theory and gives the best achievable asymptotic performance that any epidemiological data collector can obtain.

1. Introduction

During any prevention and control period in an epidemic, strengthening the protection of personal information is conducive not only to safeguarding personal interests, but also better controlling the development of the epidemic. Differently from the collection of other homogeneous data, such as the large-scale labeled sample obtained in machine learning, the characteristics of medical or epidemiological data collection are reflected in the following aspects: (1) In order to establish a surveillance system for a dynamical group of people to collect syndromic data, the sample size is always changing [1,2]; (2) Data sharing and early response are critical in containing the spread of highly infectious diseases, such as COVID-19. This requires the data stored in the database to be updated to track real-time changes in symptoms, severity, or other disease-related patterns [3,4]; (3) Some of the epidemiological data, such as the locations of infected individuals, the blood oxygen saturation levels of patients with respiratory diseases after medication, or the physical condition monitoring data after viral infection, are related to the user’s privacy, so a “privacy-first” approach which uses dynamic identifiers and stores their data in a cryptographically secure manner is needed [5]. Hence, for these factors of epidemiological data collection, the key to ensuring the efficiency of information sharing and privacy protection is to maximize the balance between data privacy and collection rate in epidemic analysis.

While the data collection from public health authorities and the open-source access to researchers can provide convenience to epidemiologists, these strategies may also significantly intrude upon citizens’ privacy. Some of these individuals were affected by unwanted privacy invasion, and the ubiquitous data surveillance devices certainly exacerbate those concerns. Therefore, the responsible use of shared data and algorithms, the compliance with data protection regulations, and the appropriate respect for privacy and confidentiality have become important topics in epidemiological data collection [6,7]. In 2014, the Global System for Mobile Communications (GSM) Association outlined some privacy standards for data-processing agencies regarding mobile phone data collection in response to the Ebola virus [8]. Some other methods that effectively guarantee user privacy include: encryption mechanisms [9], the privacy-aware energy-efficient framework (P-AEEF) protocol [10], the differential privacy-based classification algorithm [11], and the spatio-temporal trajectory method [12]. Moreover, unauthorized agencies, malicious hackers, and unidentified attacks, such as traffic analysis attacks, fake-node injection, and selective forwarding, may also eavesdrop on users’ data under the current ambiguous and non-uniform collecting algorithms [13]. To avoid the leakage of information from malicious collecting servers or untrusted access, the privacy of data needs to be ensured during data storage and processing.

In line with ensuring the security and privacy of user data, epidemiological data collection also requires the protection of data collectors. In accessing public databases, various epidemiological investigation agencies have different requirements for data privacy. For example, many epidemiological surveys are conducted by governments, universities, research institutes, pharmaceutical companies, and private institutions. Access to public data may reveal these agencies’ data preferences, resulting in information leakage. Therefore, the private-preserving epidemiological data collection includes the user’s privacy regarding the storage and data collector, along with the data collector’s privacy in data storage.

In epidemiological modeling, many recent studies have shown that various models have a good fitting effect on the nature of epidemics, such as the Bayesian model [14] and deep learning models, including multi-head attention, long short-term memory (LSTM), and the convolutional neural network (CNN) [15]. Additionally, when it comes to privacy and security concerns, some work conducted in computer science, cryptography, and information theory provides handy tools to model and solve such problems. The privacy leakage was modeled in differential privacy [16], k-anonymity [17], t-closeness [18], interval privacy [19], etc. With those concerns and analysis, several studies in cryptography and information theory have focused on the issue of sharing messages to untrusted agencies, such as distributed linear separable computation [20,21,22], secured matrix multiplication [23,24,25], secure aggregation [26], participatory sensing [27], and private information retrieval [28,29,30,31]. Specifically, a cryptographical epidemiological model with data security and privacy called RIPPLE was analyzed [32]. This model enables the execution of epidemiological models based on the most-recent real contact graph while keeping all the users’ information private. As an extension to the model, the data collector uses the sum private information retrieval (PIR) schemes to obtain the statistical data, which is described as the sum of a set of elements from a database.

Inspired by the cryptographical epidemiological model in [32], we propose an information-theoretic privacy-preserving epidemiological-data-collection problem, described as follows. We have a large and changing number of users sharing their real-time epidemiological data to N servers. The server will update its database once new data are uploaded. The storage of those users’ data is also open to all authorized data collectors. For any data collector who desires only some statistical feature, rather than all the data, it directly retrieves the statistic from N servers. We designed the protocol of the interaction between the N servers and the data collector so that when all N servers honestly answer the queries from the data collector, then the desired statistical data can be correctly decoded at the data collector. To simplify the problem, we assume the desired statistics to be the linear combinations of users’ personal data. The privacy of this model is reflected in the following three aspects: (1) The privacy of users’ data against the data collector: after downloading all answers from the servers, the data collector can decode the desired data without learning anything about the irrelevant details of the users’ personal information. (2) The privacy of users’ data against servers: for any user successfully sharing the data to all N servers, the personal information of the user is still confidential, even if of any up to servers collude. (3) The privacy of the data collector’s preference against servers: the protocol between data collector and servers should promise that any single server cannot know any preference of the desired statistical data from the query generated by the data collector. In our paper, we take the above privacy concerns into consideration and analyze the data collector’s ability of privately receiving the shared data, with respect to the number of symbols it needs to download.

The remainder of the paper is organized as follows: In Section 2, we introduce an information-theoretic description of the privacy-preserving epidemiological-data-collection problem, and we define the communication rate and capacity of our problem. In Section 3, we give a closed-form expression for the asymptotic capacity, which is the main result of the paper. In Section 4, we derive a converse proof for , which provides an upper bound on the asymptotic capacity when the number of users tends to infinity. An asymptotically capacity-achieving scheme using the technique of cross-subspace alignment when is given in Section 5, and in Section 6, we prove that the problem is not asymptotically achievable when . Finally, in Section 7, we summarize our results and suggest some open problems in this field.

2. System Model

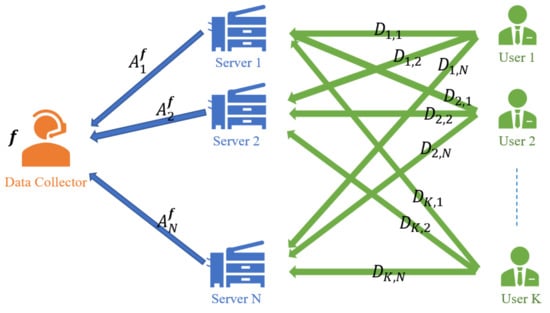

We formulate the privacy-preserving epidemiological-data-collection problem over a typical distributed, secure computation system, in which there are K users, N servers, and a data collector; and the number of users () is a large-enough integer. Here and throughout the paper, we assume that all of the random symbols in our system are generated by a large-enough finite field , and we standardize the entropy of any uniformly distributed symbol to be 1 by taking the term log in the entropy, conditional entropy, and mutual information to be base q. The model is depicted in Figure 1.

Figure 1.

The secure, privacy-preserving epidemiological-data-collection problem.

Let be K independent messages, where denotes the epidemiological data of user k and is an vector with L i.i.d. uniform symbols from the finite field —i.e.,

The data-collection problem contains two phases: the upload phase and the computation phase. The upload phase starts when the user is required to update its data to each of the N servers. Before uploading, user k knows nothing about the contents of other users’ epidemiological data. While uploading their personal data, the users would like to keep his/her message private against E colluding servers; i.e., any of up to E servers will learn nothing about the messages uploaded by K users. For any , let denote the uploaded message from the k-th user to the n-th server. We have the following equality called the privacy constraint of the users against E servers:

For any , let denote a random variable privately generated by user k, and its realization is not known to any of the N servers. User k utilizes the user-side randomness to encipher the message ; i.e., for any user k, there exist N functions such that , where is the encoding function from the user k to the server n. We have

When the servers receive the updated data from users, the old contents of the server will be replaced with the new contents, and all servers will be ready for the computation phase and allow the access of data updated by data collectors.

The computation phase starts when a data collector would like to compute statistical data of the current epidemiological database. To simplify our problem, we only consider statistical data to be a linear combination of all messages . Let and be the statistical data and the corresponding coefficient vector. Then, we have

where has the same number of symbols with the message length, and contains K elements in the finite field . In our setting, the coefficient vector only contains the preference of the data collector among K user’s epidemiological data records. Therefore, the value of does not depend on the users’ messages or the storage of N servers: .

Let denote a random variable privately generated by the data collector, and its realization is not available to any of the N servers and K users. In the computation phase, the data collector with its preference vector utilizes the randomness to generate its queries to N servers. Assume that the query from the data collector to the n-th server is denoted as . Then, the data collector uses the strategy g to map the randomness and the coefficient to the queries, such that

where is the encoding function from the data collector to the servers. Hence, we have

Since the randomness and queries are generated privately, and the user-side data and randomness are already known before the computation phase starts, the data collector has no knowledge of when the queries are generated. Thus, we have

Upon receiving the query , the n-th server will send an answer back to the data collector according to the storage of the n-th server. We assume that there is no drop-out in the model—i.e., each server n will successfully return its answer to the data collector. Let be the answer from the n-th server to the data collector, and then for any , there exists a deterministic function such that . Hence,

We would like to design a scheme in the upload and computation phases so that the following three constraints can be achieved. Firstly, the data collector is able to reconstruct the desired message from all the information it has received, which we call the decodability constraint. Let be the reconstruction function of the data collector, where . We have

Let be the probability of decoding error achieved by a given scheme and decoding function . We obtain

According to Fano’s inequality, the decodability constraint is equivalent to

when , where denotes any possible function of L satisfying .

The second constraint guarantees the data privacy of K users against the data collector. The privacy leakage to the data collector is unavoidable, as the data collector must learn some statistical data of the users. This constraint requires that the data collector can learn nothing about the information of the K users other than the desired data . We have

To protect the privacy of data collector, the third constraint requires the coefficient vector of the data collector to be indistinguishable from the perspective of each server; i.e., for any different (linearly independent) coefficient vectors and from the same scheme , the queries to every single server are identically distributed, so that any server cannot deduce merely from the query and storage without communicating to other servers. Hence, we have

where means that the random variables A and B have the same distribution. This constraint is called the privacy constraint of the data collector against non-colluding servers.

The reason why in the upload phase, we consider up to E servers may collude, and in the computation phase, we consider non-colluding servers to be defined by the following: the upload phase and the computation phase do not always occur at the same time. For example, the users are required to upload their epidemiological data on a regular basis, and the data collector may start his/her queries of certain statistics at relatively random times. Due to the dynamic topology of the servers, the numbers of colluding servers may be different during the uploading phase and the computation phase. Our work assumes that the servers are non-colluding in the computation phase, since the servers may be more interested in the epidemiological data than the data collector’s interest. If , then we have a model where the servers are non-colluding in both the upload phase and the computation phase.

For any scheme that satisfies the above decodability constraint, i.e., (9), and the privacy constraints, i.e., the privacy constraint of the users against E servers (2), the privacy constraint of the users against the data collector (10), and the privacy constraint of the data collector against the non-colluding servers (11), its communication rate is characterized by the number of symbols the data collector decodes per download symbol—i.e.,

It is worth noticing that R is not a function of due to (11).

A rate R is said to be (-error) achievable if there exists a sequence of schemes indexed by L with their communication rate less than or equal to R where the probability of error goes to zero as . The -error capacity of this random secure aggregation problem is defined as the supremum of all -error achievable rates, i.e., , where the supremum is over all possible -error achievable schemes.

3. Main Result

For the information-theoretical framework of our privacy-preserving epidemiological-data-collection problem presented in Section 2, our main result is the characterization of the asymptotic capacity when for any and .

To begin with, we show that the problem is infeasible when or , since in these cases, some constraints of our setting contradict each other, and no scheme can satisfy all the constraints. Firstly, when there is only one server, the privacy of users against the data collector and the privacy of data collector against servers will conflict with each other. The reason is as follows. First, according to (11), the answer A will be given that for two different , the distribution of A is the same. Second, the decodability (9) guarantees that can be derived from A. Then, can be derived from A, which contradicts the privacy of users against the data collector.

Moreover, when , the decodability constraint and the privacy of users against the servers will conflict with each other. The reason is as follows. First, the answers from N servers are given by the queries and the storage according to (7), so there is a Markov chain . Second, when , and are independent from according to (6) and (2), which contradicts the decodability constraint as is independent from the database . Therefore, no scheme can simultaneously satisfy all the constraints in a single-server or scenario, and there does not exist a positive capacity.

The following theorem gives the asymptotic capacity of private-preserving epidemiological data collection for an infinitely large K, where we have servers.

Theorem 1.

Consider E as a non-negative number, and there are servers. When , and the number of users , the asymptotic capacity of the secure privacy-preserving epidemiological-data-collection problem is

When , the converse proof of Theorem 1 will be given in Section 4, and the achievability proof will be given in Section 5 for any finite . When , our scheme in Section 5 remains achievable at the same rate, and the performance of the achievability and converse will meet. When , the proof of the zero asymptotic capacity is given in Section 6.

Remark 1.

From Theorem 1, we can see a threshold in the asymptotic capacity on the number of the maximized possible colluding servers E. When , there is no scheme with a positive asymptotic capacity; when , the asymptotic capacity is a decreasing function of E. When N approaches infinity while E is a constant, the asymptotic capacity approaches one.

Remark 2.

When the number of users K is a finite integer, the achievability and converse results do not always meet. From our converse proof in Section 4, the upper bound we give for finite K depends on the value of K. However, the performance (i.e., achievable rate) of our scheme in Section 5 is irrelevant to K, even though the scheme we propose is different for the finite . How to close the gap between the upper and lower bounds when K is finite is still an open problem.

4. Proof of Theorem 1: Converse When

We give the converse proof of Theorem 1 when in this section. The converse allows any feasible scheme , and we give an upper bound over the rates of all possible schemes. We start with the following lemma, which states an iterative relationship among the number of linear combinations of the users’ messages.

Lemma 1.

Consider K linear independent vectors . Let be a set and , . We have

Proof.

According to our problem setting, we have

where (15) holds because of the non-negativity of the following conditional entropy; i.e.,

and (16) holds because of (11). The equality in (17) follows due to the fact that

where the first equality follows from (7), and the second equality follows from (9). Finally, (18) holds because is independent from the queries and randomness, the security constraint and that are linear independent vectors. □

The following lemma shows that any answers in a set are independent from any queries conditioned on the same set of queries to the same coefficient and any size of messages and randomnesses. This is the direct inference from the independence of message, queries, and randomnesses generated by the data collector (6).

Lemma 2.

Assume that , , and . Then, we have the following equality:

Proof.

The proof is the same as Section VI, Lemma 1, in [29], and the key to this proof is that is determined by , conditioned on and . We omit the detailed proof here. □

The lemma below has a similar form to Lemma 2, and it shows that any set of answers with size of E do not depend on the desired statistic, conditioned on the same set of queries and the randomnesses generated by the data collector.

Lemma 3.

For any ,

Proof.

The following lemma shows that we can split the answers into two parts—one from E servers that cannot decode the database and the other from servers:

Lemma 4.

For any and , , we obtain

Proof.

Now, we can get the lower bound on the asymptotic download size when L and K goes infinity by applying (14) to (23). We have

where (30) follows from (23), (31) is because goes zero when , and (32) follows from the non-negativity of the conditional entropy. The iteration (14) can be continued because for any , when . Thus, we can calculate the upper bound of the asymptotic capacity when as follows:

Thus, for any possible scheme satisfying the constraints of the problem, the rate cannot be more than when and .

5. Proof of Theorem 1: Achievability When

In this section, we give a cross-subspace alignment (CSA) scheme based on the coding of interference in the computation phase to reach the asymptotic capacity [33] for any integers and . Throughout the scheme, we choose the length of each personal message , and we use the notation for .

First, we specify the encoding functions in the upload phase. Let be the l-th symbol of each , , and be the row vector of the l-th symbol of all K messages, i.e., . Assume that are N distinct coefficients all belonging to the set —i.e., for any . Note that the s are globally shared variables known to the users, servers, and the data collector. In order to protect the privacy of the users against the servers, each user k will generate random noises uniformly from . The uploaded information to the n-th server by the k-th user is given by

For convenience of notation, we write the content stored at server n in a vector form as

where , and is defined as .

In the computation phase, the query to Server n is determined by the coefficient and the randomness from the data collector . We design the query to Server n based on as

where are L random column vectors of length K, whose elements are uniformly distributed on , generated by the data collector.

For any server , the answer to the data collector is calculated by

According to the expansion of the representation (38) in the descending power of , we can see that is the sum of and a polynomial of degree E in . We can rewrite (38) as

where is the coefficient of for any . We can clearly see from (38) and (39) that is not a function of n. If we write the answers to the data collector from all the N servers together, we can get the following formula in a matrix form:

Now, we prove that this scheme satisfies the decodability constraint, i.e., (9), and the privacy constraints—i.e., the privacy constraint of the users against E servers (2), the privacy constraint of the users against the data collector (10), and the privacy constraint of the data collector against the non-colluding servers (11).

Recall that in our scheme, we let . The decodability constraint is satisfied because the matrix in (40) is an full-rank matrix when the s are distinct Lemma 5 in [33]. Hence, can be fully recovered from , and the desired data in (4) is obtained by

The privacy constraint of the users against E colluding servers is satisfied due to the sharing strategy of the users. In (33), we know that the k-th user shares its l-th symbol to the n-th server in a form

where denotes the storage in server n that shares. The security needs to guarantee that any E out of N servers do not know for any . For any set such that and , we choose the E servers to be in . We can write the storage of these servers with respect to what shares in a matrix form:

Notice that the Vandermonde matrix in (43), denoted by , is invertible for distinct , so the second term of (43) contains E symbols that are linearly independent. The privacy constraint of the users against E colluding servers can be guaranteed as the E additional random symbols protect the shared message. We have

where (44) comes from (43), and (45) holds because when , we have

and when , we have

so the remaining items are those such that .

To prove that the privacy constraint of the data collector against the non-colluding servers is satisfied, we notice that the query to each server is composed of the desired coefficient and independent additional randomness . Consider two linear independent vectors, . For any , the l-th entries of and are and , respectively. Notice that (thus ) is chosen uniformly from , and that and are two deterministic vectors in . The l-th symbol of or has the same distribution. Therefore, any single server can not distinguish queries from the data collector with one coefficient . Furthermore, if we assume the desired coefficient is a random variable with some distribution known only to the data collector, and is an implementation of , we can prove that the mutual information between and is zero. We have

where (46) is from (38), (47) is from (10), and (48) is from (6).

Finally, to prove that the privacy constraint of the users against the data collector is satisfied, we construct a basis of containing the desired . Assume that the vectors in the basis is denoted by where . We then have

where (49) holds because is independent with ; (50) holds because except for the term containing , all terms in (38) are given, so deducting them would not change the mutual information; (51) is because is a constant; (52) is because for any and , is a constant, and for , can be derived by and ; and (53) holds because for any , and are generated independently of .

Thus, we prove that the scheme satisfies all the constraints. As the answer from any server contains one symbol (the inner product) from , the rate of our proposed scheme is

6. Converse Result When

In this section, we prove that the asymptotic capacity is zero when . First, the inequality (23) also holds when because the inequality in (15) becomes an equality. Hence, we have

Thus, for any linear independent vectors , we have

where (56), (57), and (58) are from (55); and (59) holds because any K linear independent vectors constitute a basis in , so can be decoded. We can see that the download will go to infinity when , and thus the asymptotic capacity will be

The upper bound of C indicates that it is unfeasible to construct a scheme that has a positive communication rate when . However, differently from the scenario, our proof of the zero asymptotic capacity does not mean that there does not exist any scheme that satisfies all the constraints of our problem. In other words, there may be schemes that can satisfy all the constraints of the problem, albeit with an asymptotic capacity of zero. The detailed construction of a feasible scheme for and finite K is still an open problem.

7. Conclusions

Thanks to the research on data privacy and modeling of infectious diseases, the privacy-preserving epidemiological-data-collection problem was proposed, which aims to maximize the collection rate while protecting the privacy of all users’ data and the data collector’s preferences. We have found the asymptotic capacity of this problem, and the result shows that when there is more than one remaining server that not colluding with the other servers to decode the users’ data, the asymptotic capacity exists. The objective of this work was to find the best performance for the privacy-preserving epidemiological-data-collection problem, and we partly achieved this goal by giving the construction and proof of the optimal scheme when K is infinitely large. The achievability for was given by the cross-subspace alignment method, and the infeasibility of or was also proved. Our findings not only extend the research on secure multi-party computing systems in information theory, but also provide information-theoretic frameworks, implementations, and capacity bounds for the study of privacy epidemiological modeling. Although we characterized the asymptotic capacity for this problem, the exact capacity is still unknown. Some future directions include finding the exact capacity for finite K, the construction of a scheme when , and the performance of asymptotic capacity under irregular colluding patterns. In general, our result of asymptotic capacity for this problem will provide useful insights for further studies in data both privacy and epidemiological modeling.

Author Contributions

Conceptualization, J.C., N.L. and W.K.; methodology, J.C., N.L. and W.K.; formal analysis, J.C., N.L. and W.K.; writing—original draft preparation, J.C.; writing—review and editing, J.C., N.L. and W.K.; supervision, N.L. and W.K.; Funding acquisition, N.L. and W.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work is partially supported by the National Natural Science Foundation of China under Grants 61971135 and 62071115, and the Research Fund of National Mobile Communications Research Laboratory, Southeast University (No. 2023A03).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article as no new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| COVID-19 | Corona Virus Disease 2019 |

| GSM | Global System for Mobile Communications |

| P-AEEF | Privacy-Aware Energy-Efficient Framework |

| LSTM | Long Short-Term Memory |

| CNN | Convolutional Neural Network |

| CSA | Cross Subspace Alignment |

References

- Kim, J.; Kwon, O. A Model for Rapid Selection and COVID-19 Prediction with Dynamic and Imbalanced Data. Sustainability 2021, 13, 3099. [Google Scholar] [CrossRef]

- Olson, D.; Lamb, M.; Lopez, M.R.; Colborn, K.; Paniagua-Avila, A.; Zacarias, A.; Zambrano-Perilla, R.; Rodríguez-Castro, S.R.; Cordon-Rosales, C.; Asturias, E.J. Performance of a Mobile Phone App-Based Participatory Syndromic Surveillance System for Acute Febrile Illness and Acute Gastroenteritis in Rural Guatemala. J. Med. Internet Res. 2017, 19, e368. [Google Scholar] [CrossRef] [PubMed]

- Demirci, J.R.; Bogen, D.L. An Ecological Momentary Assessment of Primiparous Women’s Breastfeeding Behavior and Problems From Birth to 8 Weeks. J. Hum. Lact. 2017, 33, 285–295. [Google Scholar] [CrossRef] [PubMed]

- Silva de Lima, A.L.; Hahn, T.; Evers, L.J.W.; de Vries, N.M.; Cohen, E.; Afek, M.; Bataille, L.; Daeschler, M.; Claes, K.; Boroojerdi, B.; et al. Feasibility of large-scale deployment of multiple wearable sensors in Parkinson’s disease. PLoS ONE 2017, 12, e0189161. [Google Scholar] [CrossRef] [PubMed]

- Fahey, R.A.; Hino, A. COVID-19, digital privacy, and the social limits on data-focused public health responses. Int. J. Inf. Manag. 2020, 55, 102181. [Google Scholar] [CrossRef]

- Ienca, M.; Vayena, E. On the responsible use of digital data to tackle the COVID-19 pandemic. Nat. Med. 2020, 26, 463–464. [Google Scholar] [CrossRef]

- Marabelli, M.; Vaast, E.; Li, J.L. Preventing the digital scars of COVID-19. Eur. J. Inf. Syst. 2021, 30, 176–192. [Google Scholar] [CrossRef]

- GSM Association. GSMA Guidelines on the Protection of Privacy in the Use of Mobile Phone Data for Responding to the Ebola Outbreak. 2014. Available online: https://www.gsma.com/mobilefordevelopment/resources/gsma-guidelines-on-the-protection-of-privacy-in-the-use-of-mobile-phone-data-for-responding-to-the-ebola-outbreak/ (accessed on 15 January 2023).

- Pahlevanzadeh, B.; Koleini, S.; Fadilah, S.I. Security in IoT: Threats and Vulnerabilities, Layered Architecture, Encryption Mechanisms, Challenges and Solutions. In Proceedings of the Advances in Cyber Security, Penang, Malaysia, 24–25 August 2021; Anbar, M., Abdullah, N., Manickam, S., Eds.; Springer: Singapore, 2021; pp. 267–283. [Google Scholar]

- Al-Turjman, F.; Deebak, B. Privacy-Aware Energy-Efficient Framework Using the Internet of Medical Things for COVID-19. IEEE Internet Things Mag. 2020, 3, 64–68. [Google Scholar] [CrossRef]

- Fan, W.; He, J.; Guo, M.; Li, P.; Han, Z.; Wang, R. Privacy preserving classification on local differential privacy in data centers. J. Parallel Distrib. Comput. 2020, 135, 70–82. [Google Scholar] [CrossRef]

- Su, J.; He, X.; Qing, L.; Niu, T.; Cheng, Y.; Peng, Y. A novel social distancing analysis in urban public space: A new online spatio-temporal trajectory approach. Sustain. Cities Soc. 2021, 68, 102765. [Google Scholar] [CrossRef]

- Muhammad, M.H.G.; Alyas, T.; Ahmad, F.; Butt, F.H.; Qazi, W.M.; Saqib, S. An analysis of security challenges and their perspective solutions for cloud computing and IoT. EAI Endorsed Trans. Scalable Inf. Syst. 2020, 8. [Google Scholar] [CrossRef]

- Anderson, S.C.; Edwards, A.M.; Yerlanov, M.; Mulberry, N.; Stockdale, J.E.; Iyaniwura, S.A.; Falcão, R.C.; Otterstatter, M.C.; Irvine, M.A.; Janjua, N.Z.; et al. Quantifying the impact of COVID-19 control measures using a Bayesian model of physical distancing. PLoS Comput. Biol. 2020, 16, e1008274. [Google Scholar] [CrossRef]

- Abbasimehr, H.; Paki, R. Prediction of COVID-19 confirmed cases combining deep learning methods and Bayesian optimization. Chaos Solitons Fractals 2021, 142, 110511. [Google Scholar] [CrossRef]

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating Noise to Sensitivity in Private Data Analysis. In Proceedings of the Theory of Cryptography, New York, NY, USA, 4–7 March 2006; Halevi, S., Rabin, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 265–284. [Google Scholar]

- Sweeney, L. k-anonymity: A model for protecting privacy. Int. J. Uncertainty Fuzziness-Knowl.-Based Syst. 2002, 10, 557–570. [Google Scholar] [CrossRef]

- Li, N.; Li, T.; Venkatasubramanian, S. t-Closeness: Privacy Beyond k-Anonymity and l-Diversity. In Proceedings of the 2007 IEEE 23rd International Conference on Data Engineering, Istanbul, Turkey, 15–20 April 2007; pp. 106–115. [Google Scholar] [CrossRef]

- Ding, J.; Ding, B. Interval Privacy: A Framework for Privacy-Preserving Data Collection. IEEE Trans. Signal Process. 2022, 70, 2443–2459. [Google Scholar] [CrossRef]

- Wan, K.; Sun, H.; Ji, M.; Caire, G. Distributed Linearly Separable Computation. IEEE Trans. Inf. Theory 2021, 68, 1259–1278. [Google Scholar] [CrossRef]

- Wan, K.; Sun, H.; Ji, M.; Caire, G. On the Tradeoff Between Computation and Communication Costs for Distributed Linearly Separable Computation. IEEE Trans. Commun. 2021, 69, 7390–7405. [Google Scholar] [CrossRef]

- Wan, K.; Sun, H.; Ji, M.; Caire, G. On Secure Distributed Linearly Separable Computation. IEEE J. Sel. Areas Commun. 2022, 40, 912–926. [Google Scholar] [CrossRef]

- Chen, Z.; Jia, Z.; Wang, Z.; Jafar, S.A. GCSA Codes with Noise Alignment for Secure Coded Multi-Party Batch Matrix Multiplication. IEEE J. Sel. Areas Inf. Theory 2021, 2, 306–316. [Google Scholar] [CrossRef]

- Chang, W.T.; Tandon, R. On the Capacity of Secure Distributed Matrix Multiplication. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Hasırcıoǧlu, B.; Gómez-Vilardebó, J.; Gündüz, D. Bivariate Polynomial Codes for Secure Distributed Matrix Multiplication. IEEE J. Sel. Areas Commun. 2022, 40, 955–967. [Google Scholar] [CrossRef]

- Zhao, Y.; Sun, H. Information Theoretic Secure Aggregation with User Dropouts. In Proceedings of the 2021 IEEE International Symposium on Information Theory (ISIT), Melbourne, Australia, 12–20 July 2021; pp. 1124–1129. [Google Scholar] [CrossRef]

- Chen, Q.; Zheng, S.; Weng, Z. Data Collection with Privacy Preserving in Participatory Sensing. In Proceedings of the 2017 IEEE 23rd International Conference on Parallel and Distributed Systems (ICPADS), Shenzhen, China, 15–17 December 2017; pp. 49–56. [Google Scholar] [CrossRef]

- Sun, H.; Jafar, S.A. The Capacity of Private Information Retrieval. IEEE Trans. Inf. Theory 2017, 63, 4075–4088. [Google Scholar] [CrossRef]

- Wang, Q.; Sun, H.; Skoglund, M. The capacity of private information retrieval with eavesdroppers. IEEE Trans. Inf. Theory 2018, 65, 3198–3214. [Google Scholar] [CrossRef]

- Yao, X.; Liu, N.; Kang, W. The Capacity of Private Information Retrieval Under Arbitrary Collusion Patterns for Replicated Databases. IEEE Trans. Inf. Theory 2021, 67, 6841–6855. [Google Scholar] [CrossRef]

- Cheng, J.; Liu, N.; Kang, W.; Li, Y. The Capacity of Symmetric Private Information Retrieval Under Arbitrary Collusion and Eavesdropping Patterns. IEEE Trans. Inf. Forensics Secur. 2022, 17, 3037–3050. [Google Scholar] [CrossRef]

- Günther, D.; Holz, M.; Judkewitz, B.; Möllering, H.; Pinkas, B.; Schneider, T. PEM: Privacy-Preserving Epidemiological Modeling; Report 2020/1546; The International Association for Cryptologic Research Location: Hyderabad, India, 2020; p. 1546. [Google Scholar]

- Jia, Z.; Sun, H.; Jafar, S.A. Cross Subspace Alignment and the Asymptotic Capacity of X-Secure T-Private Information Retrieval. IEEE Trans. Inf. Theory 2019, 65, 5783–5798. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).