Abstract

When a client has only unlabeled data, unsupervised learning has difficulty achieving accurate fault diagnosis. Semi-supervised federated learning, which allows interaction between a labeled client and an unlabeled client, has been developed to overcome this difficulty. However, existing semi-supervised federated learning methods may suffer from negative transfer because they fail to filter out unreliable model information from the unlabeled client. Therefore, in this study, a dynamic semi-supervised federated learning fault diagnosis method with an attention mechanism (SSFL-ATT) is proposed to prevent the federation model from experiencing negative transfer. A federation strategy driven by an attention mechanism is designed to filter out the unreliable information hidden in the local model. SSFL-ATT can ensure the federation model’s performance as well as render the unlabeled client capable of fault classification. When there is an unlabeled client, SSFL-ATT improves fault diagnosis accuracy over existing semi-supervised federated learning methods by 9.06% and 12.53% on the datasets provided by Case Western Reserve University and Shanghai Maritime University, respectively.

1. Introduction

Rolling bearings are an important part of modern industrial systems, so their fault diagnosis is crucial [1,2,3]. Data-driven fault diagnosis methods can extract fault features directly from massive collections of data and allow for the construction of a fault diagnosis model for rapid equipment monitoring [4,5]. Among data-driven methods, deep learning is especially powerful at representing complex nonlinear mapping relationships, so fault diagnosis methods based on deep learning are becoming more and more widely applied [6]. In practical industrial applications, labeling a large quantity of data often demands significant human and material resources. Therefore, the construction of fault diagnosis models from extensive unlabeled data has received considerable attention from academics and industry experts [7,8]. Although unsupervised methods can work with unlabeled data, establishing a link between input data and output results is challenging due to the lack of known labels [9,10]. Semi-supervised deep learning methods, on the other hand, can use a large quantity of unlabeled data to optimize fault diagnosis models developed with minimal labeled data, which is of significant engineering value [11,12]. However, the pressing issue is how semi-supervised learning can be implemented on a single client that has no labeled data at all. Semi-supervised federated learning offers a solution, enabling clients without labeled data to strengthen their classification capabilities using information from other clients that possess labeled data. It therefore addresses a critical concern in semi-supervised deep learning modeling: certain clients have access only to unlabeled data [13].

The existing semi-supervised federated learning methods are constrained by unreliable information hidden in the corresponding local model and struggle to optimize the performance of the federation model. Therefore, it is important to design a reliable screening mechanism for the local model and guide the federated learning process.

This paper starts from the perspective of a reliable information-screening mechanism for the local model. A dynamic semi-supervised federated learning method based on an attention mechanism is then proposed, aiming to solve the problem of negative transfer in federated learning caused by unreliable information from a low-quality local model and to improve the classification ability of clients without labeled data.

The main contributions of this work are as follows:

- A dynamic semi-supervised federated learning fault diagnosis method based on an attention mechanism is proposed to solve the problem of negative transfer due to unreliable information hidden in a local model. This guarantees the performance of the federation model and enhances the classification ability of clients without labeled data.

- A federation strategy driven by an attention mechanism is designed to filter out unreliable information so that the federation model can incorporate useful information from an unreliable local model. A new loss function related to supervised classification, unsupervised feature reconstruction, and the reliability of the local model is designed to train the federation model. According to the reliability of the federation model, the local model can be optimized by dynamically adjusting how the unlabeled data are utilized and the extent to which they can contribute.

- In cases where there are certain clients without labeled data, the method proposed in this study can still ensure the performance of the federation model and render it capable of fault classification for local clients without labeled data.

2. Related Work

2.1. Semi-Supervised Deep-Learning-Based Fault Diagnosis Method

Semi-supervised deep-learning methods can achieve the full utilization of massive collections of unlabeled data to optimize fault diagnosis models built with a small quantity of labeled data [14]. The existing semi-supervised deep-learning methods can mainly be classified into generative semi-supervised methods, semi-supervised methods based on consistency regularization, graph-based semi-supervised methods, and semi-supervised methods based on pseudo-label self-training [15].

In generative semi-supervised methods, it is assumed that all samples are from the same latent model, and the labels of unlabeled data are treated as missing parameters of the latent model. An expectation maximization (EM) algorithm is usually used to estimate the parameters [16,17]. Semi-supervised methods based on consistency regularization are designed to improve model robustness by making predictions as consistent as possible for unlabeled data under different perturbations [18,19]. In graph-based semi-supervised learning methods, the connections among data are used to map a dataset into a graph, and then the similarity among samples is used for label propagation to achieve label prediction for unlabeled data [20,21].

Compared to the above semi-supervised deep-learning methods, semi-supervised learning based on pseudo-label self-training is simpler and more effective [22]. Once pseudo-labels are assigned, unlabeled data can be used to improve model performance via supervised learning. Yu et al. [23] proposed a semi-supervised learning method that enhances the consistency of feature distribution between labeled and unlabeled data and improves the accuracy of fault diagnosis. Liu et al. [24] proposed a semi-supervised deep-learning method that alternately optimizes the pseudo-labels and model parameters. The above methods use unlabeled data to improve the performance of a model through supervised learning, but the quality of the pseudo-labels greatly affects the fault diagnosis performance of a model. A model’s performance can be increased by improving the quality of the pseudo-labels. Ribeiro et al. [25] used a model’s prediction results to estimate reliability and then added the unlabeled data with the highest reliability to the model’s retraining process. Pedronette et al. [26] proposed a method consisting of determining a model’s reliability using marginal scores to find the most reliable pseudo-label from the unlabeled data and adding it to the labeled dataset. The above methods improve fault diagnosis performance by improving the quality of the pseudo-labels, but the impact of feature accuracy on the quality of the pseudo-labels is not emphasized. Zhang et al. [27] extracted data features using a variational autoencoder, which improved the fault diagnosis accuracy of the corresponding model. Tang et al. [28] used an unsupervised network to extract unlabeled data features and then fine-tuned the model jointly with the supervised network to improve semi-supervised fault diagnosis accuracy.

When a client cannot label enough of its own data, using labeled data from other clients to assist it may solve the difficulty of training a fault diagnosis model caused by the lack of labeled data.

2.2. Semi-Supervised Federated Learning Fault Diagnosis Method

Semi-supervised federated learning combines semi-supervised learning and federated learning [29] to address the difficulty of achieving satisfactory fault diagnosis for clients with little labeled data and a large amount of unlabeled data. Albaseer et al. [30] proposed a semi-supervised federated learning method called FedSem, which used a federation model to assign pseudo-labels to unlabeled data and added them to the model retraining process. Diao et al. [31] proposed a semi-supervised federated learning method that is executed via alternating training by fine-tuning a federation model with labeled data and assigning pseudo-labels to unlabeled data using the federation model. However, poor-quality pseudo-labels degrade model performance, and the problem of unreliable pseudo-labels can be addressed using active learning. Presotto et al. [32] combined active learning and label propagation algorithms to improve model performance by periodically using unlabeled data assigned a pseudo-label for local model training and then aggregating the models. However, the above methods only consider how to assign high-quality pseudo-labels, ignoring the fact that the potential fault feature information hidden in unlabeled data can also be used to assist in model building. Hou et al. [33] proposed a semi-supervised federated learning model called ANN-SSFL. In this approach, clients without labeled data acquired fault features through an autoencoder, and clients with labeled data trained the classifier through supervised learning. Both clients without labeled data and those with labeled data could contribute to the federation model. However, the above methods only consider how to make full use of fault feature information from unlabeled data, ignoring the model optimization effect from the information interaction occurring in federated learning.

Shi et al. [34] proposed a personalized semi-supervised federated learning method called UM-pFSSL. This method allows each client to select models from other clients that contribute to the prediction of unlabeled data. The model performance of each client is improved by aggregating only the parameters of selected models. Itahara et al. [35] improved the fault diagnosis ability of a model by exchanging the model output of clients based on the idea of knowledge distillation, using it to label data in the public dataset, and then further training the local model using the newly labeled data. The above methods improve the federation model’s performance from a feature extraction perspective. However, unreliable information hidden in clients’ data will inevitably degrade the performance of the federation model, so determining how to filter out unreliable information is an urgent problem that needs to be solved.

3. Dynamic Semi-Supervised Federated Learning Fault Diagnosis Method Based on an Attention Mechanism

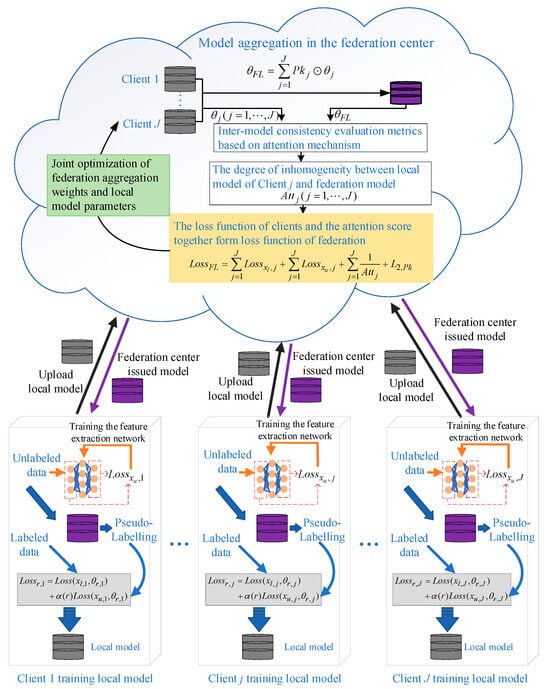

When certain clients have no labeled data, existing semi-supervised federated learning methods can suffer from performance degradation because they cannot screen out unreliable information. To ensure the performance of a federation model, a federation aggregation strategy based on an information reliability screening mechanism is necessary. This paper proposes a dynamic semi-supervised federated learning method for fault diagnosis based on an attention mechanism, which consists of two main processes. The first is a dynamic local training mechanism based on model performance, which dynamically adjusts the way and degree to which unlabeled data are used according to the performance of the federation model; local model optimization is achieved through supervised and unsupervised losses. The second is a federation aggregation strategy driven by reliable information screening, which measures the difference between the local model and the federation model through the attention mechanism and reflects the contribution of each client using the attention score. The aim of this process is to filter out unreliable information through the attention mechanism and thus ensure the federation model’s performance. The block diagram of the dynamic semi-supervised federated learning fault diagnosis method based on an attention mechanism is shown in Figure 1.

Figure 1.

The algorithm of the dynamic semi-supervised federated learning fault diagnosis method based on an attention mechanism.

3.1. Dynamic Local Optimization Mechanism Based on Federation Performance

In this section, a dynamic unlabeled data utilization strategy is designed to dynamically adjust the way and the extent to which unlabeled data are used based on the performance of the federation model. The specific steps are as follows.

- Step 1: When the federation model is unreliable, the recursive optimization of the local model is achieved using unlabeled data.

After a client receives the model parameters from the federation center, it uses them as the initialization parameters of the local model, as shown in Equation (1).

The client then uses its local data for model training. The feature extraction network is trained via multi-scale recursive feature reconstruction using unlabeled data. The forward propagation and parameter update processes are shown in Equations (2) and (3), respectively.

These equations involve the encoding and decoding networks, the learning rate, the multi-scale recursive feature reconstruction loss obtained using unlabeled data, and the parameters of the encoding and decoding networks before and after updating.
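A generic form consistent with this description can be written as follows. All symbols are assumed notation rather than the authors' own: $x_u$ is an unlabeled sample, $f_{\mathrm{enc}}$ and $f_{\mathrm{dec}}$ are the encoding and decoding networks with parameters $\theta_e$ and $\theta_d$, $\eta$ is the learning rate, and $L_{\mathrm{rec}}$ is the reconstruction loss. The single-scale squared error below is only a stand-in for the multi-scale recursive feature reconstruction loss, whose exact form is not specified here.

$$
\begin{aligned}
h &= f_{\mathrm{enc}}(x_u;\theta_e), \qquad \hat{x}_u = f_{\mathrm{dec}}(h;\theta_d),\\
L_{\mathrm{rec}} &= \lVert x_u - \hat{x}_u \rVert_2^2,\\
\theta_e' &= \theta_e - \eta\,\frac{\partial L_{\mathrm{rec}}}{\partial \theta_e}, \qquad
\theta_d' = \theta_d - \eta\,\frac{\partial L_{\mathrm{rec}}}{\partial \theta_d}.
\end{aligned}
$$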

- Step 2: Dynamic local semi-supervised training based on the degree of pseudo-label utilization.

In each round of federated learning, the federation center distributes model parameters to the clients. Each client uses the received federation model to predict the category information of its unlabeled data, as shown in Equation (4).

Equation (4) uses the encoding parameters and the classifier parameters of the federation model for the current round. The category with the maximum probability is taken as the federation model’s pseudo-label, as shown in Equations (5) and (6), where C is the total number of categories.
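The pseudo-labeling rule (take the category with the maximum predicted probability) can be sketched in a few lines. This is a minimal illustration, assuming the federation model outputs one raw score per category and that a softmax converts scores to probabilities; the function names and the example scores are hypothetical.

```python
import math

def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def pseudo_label(logits):
    """Return (class index, probability) of the most likely class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs[best]

# Hypothetical federation-model outputs for three unlabeled samples, C = 4.
outputs = [
    [2.0, 0.1, -1.0, 0.3],
    [0.0, 0.2, 3.1, 0.1],
    [0.5, 0.4, 0.6, 0.5],
]
labels = [pseudo_label(o) for o in outputs]
```

The third sample has nearly uniform scores, so its pseudo-label carries a low probability; such low-confidence labels are the kind of unreliable information the rest of the method is designed to limit.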

The local loss function is constructed using the classification loss of the labeled data and that of the unlabeled data with a pseudo-label, as shown in Equation (7):

Equation (7) contains a balance parameter between the classification loss of labeled data and the classification loss of unlabeled data with a pseudo-label. This parameter can be dynamically adjusted according to the performance of the federation model, allowing the utilization degree of the unlabeled data to be changed during the training of the local semi-supervised model.

In this study, the number of communication rounds was used as a measure of federation model performance, and the balance parameter was determined from the maximum utilization of unlabeled data, the current number of federation communications, and the maximum number of federation communications, as shown in Equation (8).
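To make the role of the balance parameter concrete, the sketch below combines a labeled loss and a pseudo-labeled loss with a round-dependent weight. The linear ramp is an assumption chosen for illustration; the text only states that the parameter depends on the maximum utilization, the current round, and the maximum number of rounds. The names `balance_parameter`, `alpha_max`, and `local_loss` are hypothetical.

```python
import math

def balance_parameter(t, t_max, alpha_max):
    """Assumed linear ramp: 0 at round 0, alpha_max at the final round."""
    if t_max <= 0:
        raise ValueError("t_max must be positive")
    return alpha_max * min(max(t, 0), t_max) / t_max

def cross_entropy(probs, label):
    """Negative log-probability assigned to the given class."""
    return -math.log(max(probs[label], 1e-12))

def local_loss(labeled, pseudo_labeled, alpha):
    """Mean labeled loss plus alpha times mean pseudo-labeled loss.

    Both arguments are lists of (class probabilities, label) pairs.
    """
    sup = sum(cross_entropy(p, y) for p, y in labeled) / len(labeled)
    if not pseudo_labeled:
        return sup
    unsup = sum(cross_entropy(p, y) for p, y in pseudo_labeled) / len(pseudo_labeled)
    return sup + alpha * unsup

# Early rounds give pseudo-labels little weight; later rounds give more.
schedule = [balance_parameter(t, 100, 0.5) for t in (0, 50, 100)]
```

Under this assumed schedule, pseudo-labeled data contribute nothing in the first round, when the federation model is least reliable, and reach their maximum weight in the last round.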

In this step, the local model parameters are updated, as shown in Equation (9).

The above steps enable the utilization of labeled and unlabeled data in a supervised learning manner, and the differential utilization of unlabeled data can achieve the goal of the full use of large collections of unlabeled data from each client for model optimization.

3.2. Federation Strategy Driven by Screening of Reliable Information

Unreliable information is hidden in the local model because it is difficult to achieve high-quality model building for clients without labeled data. Therefore, at this stage, a semi-supervised federation aggregation strategy based on an attention mechanism is designed. The specific steps are as follows.

- Step 1: Semi-supervised federation model aggregation.

After receiving the local model uploaded by all clients, the federation center aggregates all local models using the initialized federation aggregation parameters, as shown in Equation (10).

In Equation (10), the federation model is obtained as a weighted combination of the local models, where each client has its own aggregation weight.
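The aggregation step can be illustrated as a weighted sum of client parameter vectors. This is a simplified sketch: it assumes each local model is flattened into a list of floats and each client has a single scalar weight, whereas the aggregation weights in this paper are described later as a matrix. The function name `aggregate` and the example values are hypothetical.

```python
def aggregate(local_models, weights):
    """Weighted sum of flat parameter vectors, one vector per client."""
    if len(local_models) != len(weights):
        raise ValueError("one weight per client is required")
    dim = len(local_models[0])
    if any(len(m) != dim for m in local_models):
        raise ValueError("all parameter vectors must have the same length")
    return [
        sum(w * m[i] for w, m in zip(weights, local_models))
        for i in range(dim)
    ]

# Three hypothetical clients with two parameters each.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
initial_weights = [1 / 3] * 3  # equal weights as an assumed initialization
federation = aggregate(clients, initial_weights)
```

With equal weights this reduces to plain parameter averaging; unequal weights let the federation center down-weight a client whose local model is judged less reliable.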

- Step 2: Establish model reliability metrics based on the degree of consistency.

The local model parameters are used as query values to query the attention distribution between each local model and federation model in turn, and the attention score is scaled to between 0 and 1. The contribution degree of the local model is calculated as shown in Equation (11):

The attention evaluation function in Equation (11) is the dot product, as shown in Equation (12).

In Equation (12), the query vector and the key vector of the attention mechanism are combined to give the attention score between them.
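The scoring step can be sketched as dot-product attention between each local model (query) and the federation model (key). The softmax used here to scale scores into the range 0 to 1 is an assumption; the text states only that the scores are scaled to that range. Models are again treated as flat lists of floats, and all names and values are hypothetical.

```python
import math

def dot(q, k):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(q, k))

def attention_scores(local_models, federation_model):
    """Dot-product score per client, scaled to (0, 1) with a softmax."""
    raw = [dot(q, federation_model) for q in local_models]
    m = max(raw)  # subtract the max for numerical stability
    exps = [math.exp(r - m) for r in raw]
    total = sum(exps)
    return [e / total for e in exps]

federation_model = [1.0, 0.0]
local_models = [
    [1.0, 0.0],   # agrees with the federation model
    [0.9, 0.1],   # close to it
    [-1.0, 0.5],  # points away from it
]
scores = attention_scores(local_models, federation_model)
```

A local model that is consistent with the federation model receives a larger score, which matches the idea of using consistency as a reliability metric. A raw dot product also grows with vector norm, so a practical implementation might normalize the vectors first.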

- Step 3: The federation aggregation process is driven by the performance of the federation model and the reliability of the local model.

The loss function of the dynamic semi-supervised federation aggregation process is designed by integrating federation model performance and the local model reliability of the clients, as shown in Equation (13):

Equation (13) involves the supervised loss of each client, as shown in Equation (14); the unsupervised loss of each client, as shown in Equation (15); the total number of clients; and a regularization term that restricts the sum of the federation aggregation weights to 1, as shown in Equation (16).

Equations (14)–(16) are defined in terms of the volume of labeled data and the volume of unlabeled data for each client; the labeled samples of each client and their corresponding labels; the unlabeled samples of each client; the DNN model obtained via aggregation; and the encoding and decoding networks of the federation model together with their network parameters.
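The three ingredients of the federation loss can be sketched as below. This is an illustration under stated assumptions, not the authors' formula: cross-entropy is assumed for the supervised term, mean squared reconstruction error for the unsupervised term, and a squared penalty for the weight-sum constraint. How attention scores enter the loss is not shown, and the coefficient `lam` is hypothetical.

```python
import math

def supervised_loss(probs_batch, labels):
    """Mean cross-entropy over a client's labeled samples (0 if it has none)."""
    if not labels:
        return 0.0
    total = sum(-math.log(max(p[y], 1e-12)) for p, y in zip(probs_batch, labels))
    return total / len(labels)

def unsupervised_loss(inputs, reconstructions):
    """Mean squared reconstruction error over a client's unlabeled samples."""
    if not inputs:
        return 0.0
    total = 0.0
    for x, x_hat in zip(inputs, reconstructions):
        total += sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return total / len(inputs)

def weight_sum_penalty(weights):
    """Penalty that is zero only when the aggregation weights sum to 1."""
    return (sum(weights) - 1.0) ** 2

def federation_loss(per_client, weights, lam=1.0):
    """per_client holds one (supervised, unsupervised) loss pair per client."""
    data_term = sum(s + u for s, u in per_client)
    return data_term + lam * weight_sum_penalty(weights)
```

A client without labeled data contributes a supervised loss of zero and influences the federation loss only through its reconstruction term, which is how such a client can still take part in training.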

- Step 4: Joint optimization of local model parameters and federation aggregation weights.

The joint optimization of local model parameters and federation aggregation weights based on the loss function of the federation center can further improve the fault diagnosis performance of the federation model by improving the reliability of the local model. The gradients of the local model parameters and the federation aggregation weights are calculated as shown in Equations (17) and (18), respectively.

Both can be optimized according to the obtained gradients. Thus, the federation model performance and local model reliability can be used to jointly drive the federation aggregation process. The parameter-updating process is shown in Equations (19) and (20).

Equations (19) and (20) map the aggregation weights and local model parameters of each client in the current round to their updated values.
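The update rule can be sketched as plain gradient descent. The example isolates the effect of a squared weight-sum penalty on the aggregation weights. The data-dependent gradients are set to zero here purely for illustration, and the names, learning rate, and penalty coefficient are assumptions.

```python
def weight_gradient(weights, data_grads, lam):
    """Data gradient plus gradient of lam * (sum(weights) - 1)**2."""
    shared = 2.0 * lam * (sum(weights) - 1.0)
    return [d + shared for d in data_grads]

def update(values, grads, lr):
    """One gradient-descent step: value minus learning rate times gradient."""
    return [v - lr * g for v, g in zip(values, grads)]

weights = [0.6, 0.6, 0.6]       # deliberately sums to 1.8
no_data_grads = [0.0, 0.0, 0.0]  # stand-in for the data-dependent gradients
for _ in range(200):
    grads = weight_gradient(weights, no_data_grads, lam=1.0)
    weights = update(weights, grads, lr=0.05)
```

With only the penalty active, the weights shrink by equal amounts until their sum reaches 1. In the full method, the data-dependent gradients would additionally shift weight toward clients whose local models reduce the federation loss, and the same `update` step would apply to the local model parameters.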

The joint optimization process can dynamically update the federation aggregation weights and local model parameters, which improves the reliability of local models and thus reduces the impact of unreliable local models on the performance of the federation model.

3.3. Fault Diagnosis Based on SSFL-ATT

This section highlights the detailed steps of the SSFL-ATT fault diagnosis method proposed to solve the problem of reliable information screening for local models.

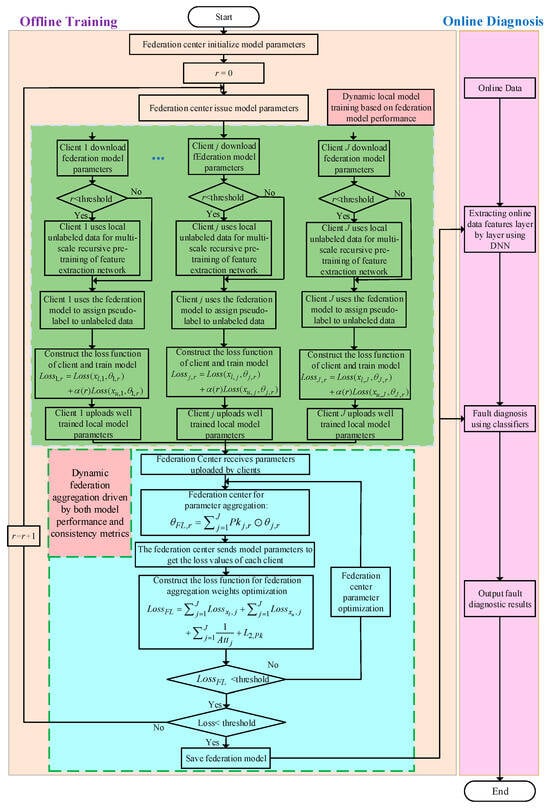

A flowchart of the dynamic semi-supervised federated learning algorithm based on the attention mechanism is shown in Figure 2. Fault diagnosis is divided into offline training and online diagnosis. In the offline training part, the green box is the dynamic local training mechanism based on model performance, and the blue box is the federation aggregation strategy driven by both model performance and consistency metrics. In the online diagnosis part, clients feed the preprocessed data into the federation model to obtain fault features, and the fault features are fed into the classifier to obtain the diagnosis results. The detailed steps are formally represented in Algorithm 1.

| Algorithm 1: Fault diagnosis based on SSFL-ATT |
| --- |
| Require: local data |
| Server executes: initialize the federation model |
| Step 1: Model training for semi-supervised federated learning |
| Dynamic local training mechanism based on federation model performance: clients dynamically adjust how they use local unlabeled data based on the performance of the federation model. |
| Federation aggregation strategy driven by reliable information screening: reliable information is screened from the local models based on the attention mechanism, and the loss function is designed by combining the performance of the federation model and the reliability of the local model. |
| Joint optimization of local model parameters and federation aggregation weights: both are optimized based on the loss function of the federation center. |
| Step 2: Fault diagnosis for each client |
| Each client uses the well-trained federation model to achieve fault diagnosis. |

Figure 2.

Flowchart of the dynamic semi-supervised federated learning fault diagnosis method based on an attention mechanism.

4. Experiment and Analysis

4.1. Experimental Analysis of the Bearing Fault Simulation Platform at Case Western Reserve University

Using the benchmark dataset of Case Western Reserve University (CWRU) for experimental verification, this section validates the effectiveness of the proposed method through specific experimental analysis.

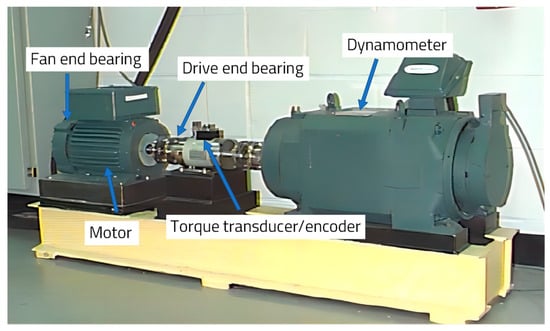

4.1.1. Bearing Data Description

The Case Western Reserve University bearing dataset is widely used in fault diagnosis. The experimental platform is shown in Figure 3 [36]. It mainly consists of a three-phase asynchronous motor, a torque transducer/encoder, and a dynamometer. A single-point fault was introduced in the motor bearing using electro-discharge machining (EDM) with fault diameters of 0.007 in, 0.014 in, and 0.021 in. Accelerometers were installed at the drive and fan ends to collect vibration data for motor loads of 0 HP to 3 HP.

Figure 3.

The Case Western Reserve University bearing experiment bench [36].

In this section of the experiment, the monitoring signal of the accelerometer at the drive end was selected. The motor load was 0 HP, the speed was 1797 rpm, and the sampling frequency was 48 kHz. The four operation states of the rolling bearing comprise the normal operation state and the inner-ring, ball, and outer-ring fault states, each with a fault diameter of 0.021 in. The detailed composition of the dataset is shown in Table 1.

Table 1.

Bearing data types.

To verify the superiority of the proposed method, it was compared with various existing models of semi-supervised federated learning. Table 2 describes the different models established in this section during the experimental validation and the experimental parameter settings.

Table 2.

Semi-supervised federated learning model and parameter settings.

An experimental scenario of semi-supervised federated learning was designed by changing the data of the clients. The dataset was obtained by segmenting the vibration data with a sliding window with a window size of 400 and a step size of 30, and the number of samples in the test set was 4 × 300. Table 3 shows the design of the experiment.
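The sliding-window segmentation can be sketched as follows, using the window size of 400 and step size of 30 stated above. The function name and the synthetic signal are hypothetical, and dropping a trailing partial window is an assumption.

```python
def sliding_windows(signal, window=400, step=30):
    """Split a 1-D signal into overlapping windows, dropping any partial tail."""
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    last_start = len(signal) - window
    return [signal[i:i + window] for i in range(0, last_start + 1, step)]

# Stand-in for a vibration record of 1000 points.
signal = list(range(1000))
samples = sliding_windows(signal)
```

A record of n points yields (n - 400) // 30 + 1 samples when n is at least 400. Consecutive samples share 370 of their 400 points, which is how a limited vibration record can produce many training samples.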

Table 3.

Experimental design.

4.1.2. Bearing Experiment Results and Analysis

Experiments 1–3 are designed to verify the effectiveness and superiority of SSFL-ATT when clients have different quantities of labeled data. The fault diagnosis results are shown in Table 4.

Table 4.

Bearing fault diagnosis results for different quantities of labeled data.

As can be seen in Columns 3 and 4 of Table 4, using only unsupervised or only supervised learning left some clients unable to achieve fault diagnosis. As shown in Columns 4 and 5, supervised learning was used to train local models. Then, the federated averaging algorithm was used to aggregate the models. Finally, the federation model was distributed to all the clients. Client 3 realized fault diagnosis without labeled data, but the diagnostic accuracy was poor. This was because the data for Client 3 were not involved in the model training to extract the corresponding fault information. Comparing Columns 5 and 6 shows that FedSem used the federation model to assign pseudo-labels to the unlabeled data of all the clients, so the fault information hidden in the unlabeled data of Client 3 was fully utilized. However, negative transfer occurred in the federation model due to the unreliability of the pseudo-labels. This led to the aggregation of unreliable information during the federation process. Comparing Columns 6 and 7 shows that Sem-Fed utilized the local model to assign pseudo-labels to unlabeled data and therefore ensured that the pseudo-labels were not affected by unreliable information from other clients, enhancing the reliability of the pseudo-labels and thus improving the performance of the federation model. Comparing Columns 7 and 8 shows that, unlike FedSem, ANN-SSFL constructed the loss function of the federation center according to the accuracy of the feature representation for the unlabeled data, causing the unlabeled data to contribute sufficiently to the federation center and thus guaranteeing the comprehensiveness of the information utilization. However, unreliable information in unlabeled data was also aggregated in the federation model. Comparing Columns 8 and 9 shows that SSFL-ATT obtained better fault diagnosis accuracy than ANN-SSFL. SSFL-ATT filters out unreliable model information from the local model through the attention mechanism, and the loss function of the federation center guides the joint optimization of federation aggregation weights and local model parameters, thus guaranteeing the reliability of the federation model through the precise utilization of reliable information.

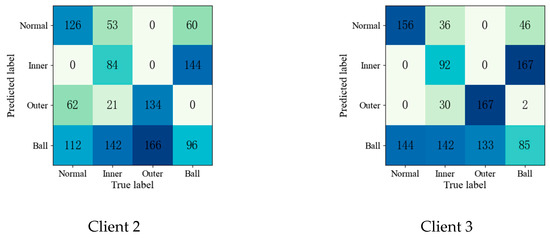

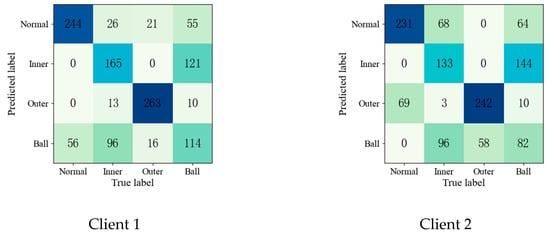

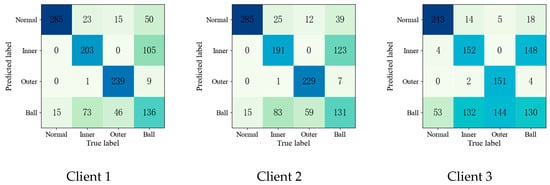

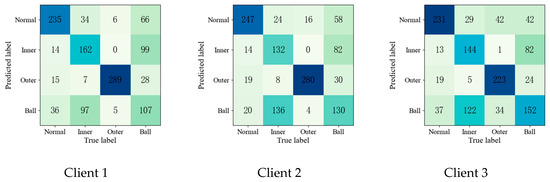

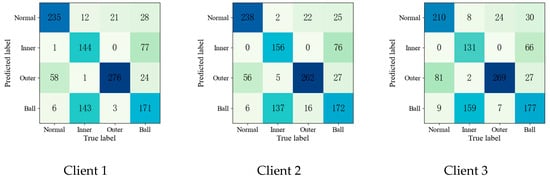

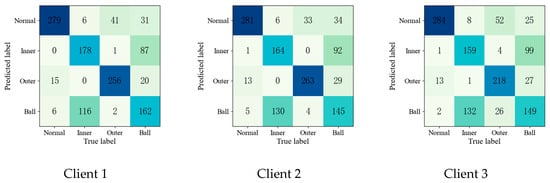

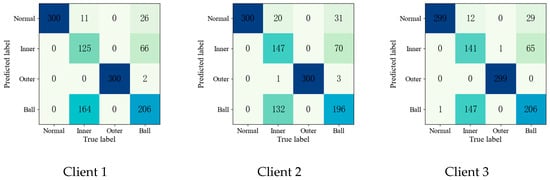

To further validate the effectiveness of the proposed method, the confusion matrices of the fault diagnosis methods in Experiment 2 are given in Figures 4–8.

Figure 4.

Confusion matrix of feature clustering for fault diagnosis result.

Figure 5.

Confusion matrix of DNN for fault diagnosis result.

Figure 6.

Confusion matrix of FedAvg for fault diagnosis result.

Figure 7.

Confusion matrix of FedSem for fault diagnosis result.

Figure 8.

Confusion matrix of Sem-Fed for fault diagnosis result.

Comparing the confusion matrices in Figures 6–8 shows that the diagnostic accuracy for both the healthy-state data and the outer-race fault data was significantly degraded. This shows that unreliable information caused by local pseudo-labels led to negative transfer in the federation model. Comparing Figures 4–9 with Figure 10 shows that, with SSFL-ATT, the diagnostic accuracy was close to 100% for both the healthy-state data and the outer-race fault data, and the similar features of the inner-race fault data and ball fault data were easier to distinguish. This finding shows that the proposed method can prevent unreliable model information from interfering with the federation model’s performance and improve the performance of the federation model through an effective federation aggregation strategy.

Figure 9.

Confusion matrix of ANN-SSFL for the fault diagnosis result.

Figure 10.

Confusion matrix of SSFL-ATT for the fault diagnosis result.

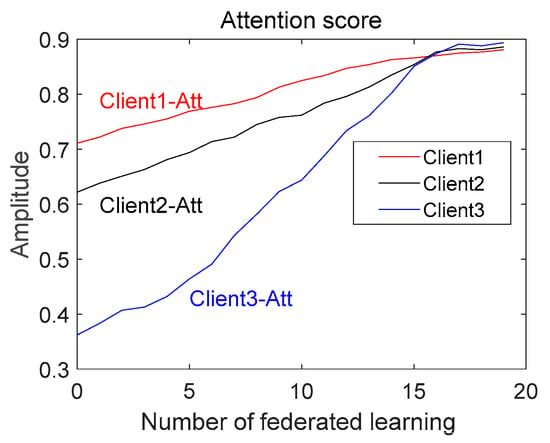

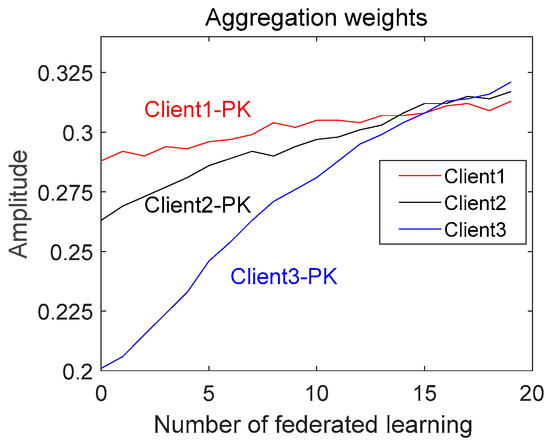

This section analyzes the evolution of the aggregation weights, Pk, and attention scores, Att, in Experiment 2. Figure 11 plots the attention scores of the clients at different stages of training. In the beginning, Client 1 and Client 2 had high attention scores, while Client 3 had a low attention score. This is because the performance of the federation model in the early stages was not high enough to assign high-quality pseudo-labels to unlabeled data. Client 3, which had a poorer-quality local model, therefore received more erroneous pseudo-labels and, hence, a low attention score. As the federation proceeded, the attention scores of all the clients rose, and the attention score of Client 3 rose rapidly. This is because SSFL-ATT screened out unreliable information through an attention mechanism to ensure the performance of the federation model, which, in turn, ensured the quality of the pseudo-labels for unlabeled data. Therefore, the quality of the local model was ensured, and, eventually, higher attention scores were obtained. In the late stage, the attention scores of the clients were similar because they all had high-quality pseudo-labels assigned by the federation model, leading to an improvement in the performance of the local models. It is worth noting that Client 3 ended with a higher attention score than the other clients. This is because Client 3 had a massive number of samples to which the federation model gave high-quality pseudo-labels, so Client 3 attained a high-quality local model and, in turn, a higher attention score. Since Pk is a matrix, we computed the mean value of Pk and plotted its evolution; as shown in Figure 12, the evolutionary trend of Pk is similar to that of Att, which further shows that the method proposed in this paper can screen for reliable information and improve the performance of the federation model.

Figure 11.

Evolution of attention scores (Att).

Figure 12.

Evolution of aggregation weights (Pk).

Experiments 4–6 were designed to verify the improvement induced by SSFL-ATT on the performance of the fault diagnosis model when clients have different quantities of unlabeled data. The experimental results are shown in Table 5.

Table 5.

Bearing fault diagnosis results for different quantities of unlabeled client data.

Comparing Columns 3–8 with Column 9 across Experiment 3 and Experiments 4–6 reveals that, when the quantity of labeled data is fixed, the fault diagnosis accuracy of all the methods decreases as the quantity of unlabeled data decreases. However, SSFL-ATT still achieved high classification accuracy, which indicates that SSFL-ATT was better able to extract reliable information from unreliable local models for clients without labeled data, thus reducing the perturbation that unreliable pseudo-label information causes in the local model.

4.2. Experimental Analysis of Motor Fault Simulation Platform at Shanghai Maritime University

Using the Shanghai Maritime University motor dataset for experimental validation, this section verifies the effectiveness of the proposed method through specific experimental analysis.

4.2.1. Motor Dataset Description

The Shanghai Maritime University motor fault simulation experiment bench is shown in Figure 13. This bench consists of a drive motor, a magnetic powder brake set, a tachometer, a torque sensor, several single-axis acceleration sensors, several current clamps, and an eight-channel portable data acquisition system. Data from ten different motor operation states were used in this section. The data were collected using a drive-end vibration sensor, a fan-end vibration sensor, three current clamps, a torque sensor, and a speed sensor at a sampling frequency of 12,800 Hz. The detailed composition of the dataset is provided in Table 6.

Figure 13.

Shanghai Maritime University motor fault simulation experiment bench.

Table 6.

Motor dataset composition.

During the experimental validation, a DNN was used as the base network model, with the number of neurons in each layer set to 7/200/90/30/10 and a learning rate of 0.005; the model was optimized using the Adam optimizer. The test set contained 10 × 1000 samples. The detailed design of the experiment is given in Table 7.

Table 7.

Design of the experiment.
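To make the 7/200/90/30/10 layer configuration concrete, the following minimal sketch builds a fully connected network with those widths, counts its trainable parameters, and runs one forward pass. Only the layer widths come from the text. The ReLU hidden activations, the softmax output, the uniform initialization, and all function names are assumptions for illustration, and training with Adam at a learning rate of 0.005 is not reproduced here.

```python
import math
import random

# Layer widths stated in the text: 7 inputs, three hidden layers, 10 outputs.
LAYER_SIZES = [7, 200, 90, 30, 10]


def count_parameters(sizes):
    """Weights plus biases of a fully connected network with these widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


def init_network(sizes, seed=0):
    """Create (weights, biases) per layer; the initialization is an assumption."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def forward(layers, x):
    """Forward pass: ReLU on hidden layers (assumed), softmax on the output."""
    for index, (weights, biases) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + b
             for row, b in zip(weights, biases)]
        if index < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    peak = max(x)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


network = init_network(LAYER_SIZES)
probabilities = forward(network, [0.1] * 7)  # one made-up 7-feature sample
print(count_parameters(LAYER_SIZES), len(probabilities))
```

Under these assumptions the network has 22,730 trainable parameters and produces one probability for each of the ten classes.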

4.2.2. Motor Experimental Results and Analysis

Experiments 1–3 were used to verify the effectiveness of SSFL-ATT with multi-channel signals; the results are shown in Table 8.

Table 8.

Motor fault diagnosis results with different quantities of labeled data for clients.

As can be seen in Table 8, the SSFL-ATT method was still superior to the other methods in the multi-channel signal experiments. Although the multi-channel signals contained richer information than the single-channel signals, the amount of unreliable information they contained also increased. Therefore, a reliable-information-screening mechanism was needed to filter out the unreliable information contained in the multi-channel data, thus ensuring the adequate performance of the federation model. SSFL-ATT uses an attention mechanism to filter out unreliable information hidden in local models and to enhance the classification ability of clients without labeled data. In the case of multi-channel data, Experiment 4 and Experiment 5 were designed to verify the fault diagnosis effect of the proposed method when the clients have different quantities of unlabeled data. The experimental results are shown in Table 9.

Table 9.

Motor fault diagnosis results with different quantities of unlabeled data for clients.

From the experimental results in Table 9, it can be concluded that SSFL-ATT was still superior to the other methods in these two experimental scenarios, which further indicates that SSFL-ATT can still achieve reliable information screening and be used to build a well-performing federation model with multi-channel data. The experimental results from the Case Western Reserve University benchmark dataset and the motor fault dataset of Shanghai Maritime University show that SSFL-ATT is applicable and superior for fault diagnosis with both single-channel and multi-channel signals.

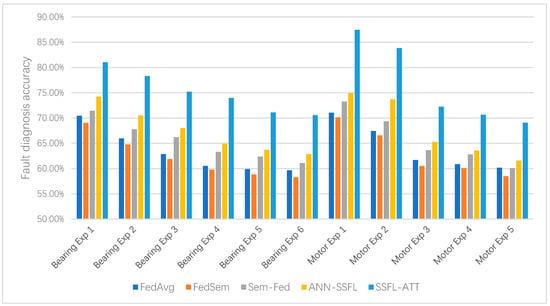

To illustrate the superiority of SSFL-ATT more intuitively in different experimental scenarios with the two datasets, Figure 14 shows histograms of all the experimental results. From Figure 14, it can be seen that SSFL-ATT achieved substantial improvements in different experimental scenarios with both single-channel and multi-channel data. This result indicates that SSFL-ATT is more applicable than, and superior to, the other methods for semi-supervised federated learning fault diagnosis.

Figure 14.

Histogram of the experimental results of different semi-supervised federated learning fault diagnosis methods.

5. Conclusions

In semi-supervised federated learning for fault diagnosis, when there are certain clients without labeled data, existing semi-supervised federated learning methods can lead to a negative transfer problem due to unreliable information hidden in these clients’ local models. This paper proposes a dynamic semi-supervised federated learning fault diagnosis method based on an attention mechanism for designing an optimal federation aggregation strategy. The federation aggregation strategy was dynamically optimized based on reliable information screened in the local model.

First, to ensure that local unlabeled data are used effectively, clients can dynamically adjust the way and extent to which unlabeled data are used according to the performance of the federation model. The aim is to fully utilize unlabeled data for model optimization while reducing the perturbation of the local training process by low-quality pseudo-labels. Then, in the process of federation aggregation, negative transfer due to unreliable model information can be avoided by establishing reliability evaluation metrics based on the attention mechanism. At the same time, the feedback information from clients on the performance of the federation model can be combined to drive the federation aggregation process and achieve the joint optimization of aggregation weights and local model parameters. The experimental results show that SSFL-ATT can use the attention mechanism to filter out unreliable information and thus avoid the negative transfer it causes, and that it can also effectively improve the classification ability of unlabeled clients. Compared to existing semi-supervised federated learning methods, SSFL-ATT proved superior and more applicable in the experiments with both single-channel and multi-channel signals.
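The general idea of weighting local models by a reliability score during aggregation can be sketched as follows. This is a generic illustration and not the SSFL-ATT formulation: the use of one scalar score per client, the softmax normalization, and the names `softmax` and `aggregate` are assumptions made for the example, whereas the aggregation weights Pk in this paper are matrices.

```python
import math


def softmax(scores):
    """Turn arbitrary real-valued scores into weights that sum to one."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate(client_params, scores):
    """Score-weighted average of flat parameter vectors (assumed scheme)."""
    weights = softmax(scores)
    n_params = len(client_params[0])
    merged = [
        sum(w * params[i] for w, params in zip(weights, client_params))
        for i in range(n_params)
    ]
    return merged, weights


# Made-up example: two clients agree, a third one deviates strongly.
client_params = [[1.0, 1.0], [1.0, 1.0], [5.0, 5.0]]

# With equal scores, the aggregation reduces to a plain average.
plain, _ = aggregate(client_params, [0.0, 0.0, 0.0])

# With a low (hypothetical) score for the third client, it contributes little.
filtered, weights = aggregate(client_params, [2.0, 2.0, -2.0])
print(plain, filtered, weights)
```

In this toy case, the low-scoring client receives a weight below 1%, so the aggregated parameters stay close to those of the two consistent clients instead of being pulled toward the deviating one.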

Author Contributions

Conceptualization, F.Z. and S.L.; methodology, F.Z.; software, S.T.; validation, S.L., F.Z. and S.T.; formal analysis, S.L.; investigation, T.W.; resources, X.H.; data curation, X.H.; writing—original draft preparation, S.L.; writing—review and editing, F.Z.; visualization, T.W.; supervision, C.W.; project administration, F.Z.; funding acquisition, X.H. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Natural Science Foundation of China (62073213) and the National Natural Science Foundation Youth Science Foundation Project (52205111).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data involved in this article are presented in the article.

Acknowledgments

The authors would like to thank Case Western Reserve University for providing bearing vibration data and Shanghai Maritime University for providing motor data.

Conflicts of Interest

The authors declare that they have no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).