Abstract

Multi-task learning is a statistical methodology that aims to improve the generalization performance of estimation and prediction tasks by sharing common information among multiple tasks. Compositional data, by contrast, consist of proportions as components that sum to one. Because the components of compositional data depend on each other, existing multi-task learning methods cannot be applied to them directly. In the framework of multi-task learning, network lasso regularization enables us to treat each sample as a single task and to construct a different model for each one. In this paper, we propose a multi-task learning method for compositional data using a sparse network lasso. We focus on the symmetric form of the log-contrast model, which is a regression model with compositional covariates. Our proposed method extracts latent clusters and relevant variables from compositional data by considering relationships among samples. The effectiveness of the proposed method is evaluated through simulation studies and an application to gut microbiome data. Both results show that the prediction accuracy of the proposed method is better than that of existing methods when information about relationships among samples is appropriately obtained.

1. Introduction

Multi-task learning is a statistical methodology that assumes a different model for each task and jointly estimates these models. By sharing common information among them, the generalization performance of estimation and prediction tasks is improved [1]. Multi-task learning has been used in various fields of research, such as computer vision [2], natural language processing [3], and life sciences [4]. In life sciences, risk factors may vary from patient to patient [5], so a single model common to all patients may fail to extract them sufficiently. In multi-task learning, each patient can be treated as a single task, and a different model is built for each patient to extract both patient-specific and common factors for the disease [6]. The localized lasso [7] performs multi-task learning using network lasso regularization [8]. By treating each sample as a single task, the localized lasso simultaneously performs multi-task learning and clustering in the framework of a regression model.

Compositional data, which consist of the individual proportions of a composition, are used in fields such as geology and, in the life sciences, microbiome analysis. Compositional data are constrained to take positive values that sum to one. Because of these constraints, existing multi-task learning methods are difficult to apply to compositional data. In microbiome analysis, studies on gut microbiomes [9,10] have suggested that there are multiple types of gut microbiome clusters that vary among individuals [11]. When multiple clusters may exist in such data, existing regression models for compositional data have difficulty extracting sufficient information.

In this paper, we propose a multi-task learning method for compositional data, focusing on network lasso regularization and the symmetric form of the log-contrast model [12], which is a linear regression model with compositional covariates. We extend the symmetric form to a locally symmetric form in which each sample has its own regression coefficient vector. These regression coefficient vectors are clustered by the network lasso regularization. Furthermore, because the dimensionality of features in compositional data has been increasing, particularly in microbiome analysis [13], we use $\ell_1$-regularization [14] to perform variable selection. The advantage of $\ell_1$-regularization is that it can perform variable selection even when the number of parameters exceeds the sample size. In addition, $\ell_1$-regularization leads to a convex optimization problem, which is computationally feasible, whereas classical subset selection is not. The model parameters are estimated by an algorithm based on the alternating direction method of multipliers [15], because the model includes non-differentiable points in the $\ell_1$-regularization term and zero-sum constraints on the parameters. The regularization parameters of the model are determined by cross-validation (CV).

The remainder of this paper is organized as follows. Section 2 introduces multi-task learning based on a network lasso. In Section 3, we describe the regression models for compositional data. We propose a multi-task learning method for compositional data and its estimation algorithm in Section 4. In Section 5, we discuss the effectiveness of the proposed method through Monte Carlo simulations. An application to gut microbiome data is presented in Section 6. Finally, Section 7 summarizes this paper and discusses future work.

2. Multi-Task Learning Based on a Network Lasso

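Suppose that we have n observations of p-dimensional explanatory variables $\{\boldsymbol{x}_i \in \mathbb{R}^p;\ i = 1, \dots, n\}$ and n observations of the response variable $\{y_i \in \mathbb{R};\ i = 1, \dots, n\}$, and that these pairs are given independently. A graph $R = (r_{ml}) \in \mathbb{R}^{n \times n}$ is also given, where $r_{ml} \geq 0$ represents the relationship between the sample pair $\boldsymbol{x}_m$ and $\boldsymbol{x}_l$; the diagonal components $r_{mm}$ are zero.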

We consider the following linear regression model:

$$y_i = \boldsymbol{x}_i^\top \boldsymbol{w}_i + \varepsilon_i, \quad i = 1, \dots, n, \tag{1}$$

where $\boldsymbol{w}_i \in \mathbb{R}^p$ is the regression coefficient vector for sample $\boldsymbol{x}_i$, and $\varepsilon_i$ is an error term independently distributed as $N(0, \sigma^2)$. We exclude the intercept from the model because we assume that the response is centered and the explanatory variables are standardized. Model (1) assigns a different model to each sample. In classical regression models, the regression coefficient vectors are assumed to be identical (i.e., $\boldsymbol{w}_1 = \cdots = \boldsymbol{w}_n$).

For Model (1), we consider the following minimization problem:

$$\min_{W} \sum_{i=1}^{n} \left(y_i - \boldsymbol{x}_i^\top \boldsymbol{w}_i\right)^2 + \lambda \sum_{m<l} r_{ml} \left\| \boldsymbol{w}_m - \boldsymbol{w}_l \right\|_2, \tag{2}$$

where $W = (\boldsymbol{w}_1, \dots, \boldsymbol{w}_n)$ and $\lambda > 0$ is a regularization parameter. The second term in (2) is the network lasso regularization term [8]. For coefficient vectors $\boldsymbol{w}_m$ and $\boldsymbol{w}_l$, the network lasso regularization term induces $\boldsymbol{w}_m = \boldsymbol{w}_l$. If these vectors are estimated to be the same, then the m-th and l-th samples are interpreted as belonging to the same cluster. In the framework of multi-task learning, minimization problem (2) treats each sample as one task by assigning a coefficient vector to each sample. This allows us to extract the information in the regression coefficient vectors separately for each task. In addition, by clustering the regression coefficient vectors through the network lasso regularization term, we can extract the information common among tasks.
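To make objective (2) concrete, the following Python sketch evaluates it for given coefficient vectors. The function name and the list-of-lists data layout are illustrative assumptions. Each pair is counted once ($m < l$); a version that sums over all ordered pairs differs only by a factor that can be absorbed into $\lambda$. When all coefficient vectors coincide, the network lasso term vanishes and only the squared loss remains.

```python
import math


def network_lasso_objective(X, y, W, R, lam):
    """Evaluate objective (2): squared loss + lam * sum_{m<l} r_ml * ||w_m - w_l||_2.

    X: n feature vectors of length p; y: n responses;
    W: n coefficient vectors (one per sample); R: n x n relationship weights.
    """
    n = len(y)
    loss = 0.0
    for i in range(n):
        pred = sum(x_ij * w_ij for x_ij, w_ij in zip(X[i], W[i]))
        loss += (y[i] - pred) ** 2
    penalty = 0.0
    for m in range(n):
        for l in range(m + 1, n):  # each pair counted once (m < l)
            penalty += R[m][l] * math.dist(W[m], W[l])
    return loss + lam * penalty


X = [[1.0, 0.0], [0.0, 1.0]]
y = [1.0, 2.0]
R = [[0.0, 0.5], [0.5, 0.0]]
# Shared coefficient vector: perfect fit and no fusion cost.
print(network_lasso_objective(X, y, [[1.0, 2.0], [1.0, 2.0]], R, lam=2.0))
# Separate vectors: still a perfect fit, but the penalty 2 * 0.5 * ||w_1 - w_2|| is paid.
print(network_lasso_objective(X, y, [[1.0, 0.0], [0.0, 2.0]], R, lam=2.0))
```

Both coefficient configurations fit the two samples exactly, so (2) prefers the shared vector; this is the mechanism by which the network lasso term merges related samples into clusters.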

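Yamada et al. [7] proposed the localized lasso by adding an $\ell_{1,2}$-norm regularization term [16] to minimization problem (2) as follows:

$$\min_{W} \sum_{i=1}^{n} \left(y_i - \boldsymbol{x}_i^\top \boldsymbol{w}_i\right)^2 + \lambda_1 \sum_{m<l} r_{ml} \left\| \boldsymbol{w}_m - \boldsymbol{w}_l \right\|_2 + \lambda_2 \sum_{i=1}^{n} \left\| \boldsymbol{w}_i \right\|_1^2, \tag{3}$$

where $\lambda_1, \lambda_2 > 0$ are regularization parameters. The $\ell_{1,2}$-norm regularization term induces group structure and intra-group sparsity: several regression coefficients in a group are estimated to be zero, but because the $\ell_1$-norm is squared, at least one coefficient in each group is estimated to be nonzero. In the localized lasso, each regression coefficient vector $\boldsymbol{w}_i$ is treated as a group so that $\boldsymbol{w}_i \neq \boldsymbol{0}$. The localized lasso thus performs multi-task learning and variable selection simultaneously.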

3. Regression Modeling for Compositional Data

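The p-dimensional compositional data are defined as proportional data in the simplex space:

$$\mathcal{S}^{p-1} = \left\{ \boldsymbol{x} = (x_1, \dots, x_p)^\top \in \mathbb{R}^p \;:\; x_j > 0\ (j = 1, \dots, p),\ \sum_{j=1}^{p} x_j = 1 \right\}. \tag{4}$$

This structure imposes dependence among the features of compositional data: because the components sum to one, increasing one proportion necessarily decreases the others. Thus, statistical methods defined for unconstrained real spaces cannot be applied directly [17]. To overcome this problem, Aitchison and Bacon-Shone [12] proposed the log-contrast model, which is a linear regression model with compositional covariates.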
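The dependence among components can be seen directly with the closure operation, which maps positive amounts onto the simplex (4). The sketch below uses illustrative function names (not from the paper). Raising only one raw amount changes every proportion, including those of the untouched components.

```python
def closure(values):
    """Divide strictly positive values by their sum so that they lie in S^{p-1}."""
    if any(v <= 0 for v in values):
        raise ValueError("all components must be strictly positive")
    total = sum(values)
    return [v / total for v in values]


def in_simplex(x, tol=1e-12):
    """Check the two defining conditions of S^{p-1}: positivity and unit sum."""
    return all(v > 0 for v in x) and abs(sum(x) - 1.0) <= tol


# Tripling only the first raw amount also lowers the proportions of the
# second and third components, whose raw amounts did not change.
print(closure([2.0, 1.0, 1.0]))
print(closure([6.0, 1.0, 1.0]))
```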

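Suppose that we have n observations of p-dimensional compositional data $\{\boldsymbol{x}_i \in \mathcal{S}^{p-1};\ i = 1, \dots, n\}$ and n observations of the response variable $\{y_i;\ i = 1, \dots, n\}$, and that these pairs are given independently. The log-contrast model is represented as follows:

$$y_i = \sum_{j=1}^{p-1} \beta_j \log \frac{x_{ij}}{x_{ip}} + \varepsilon_i, \tag{5}$$

where $\boldsymbol{\beta} = (\beta_1, \dots, \beta_{p-1})^\top$ is a regression coefficient vector. Because the model uses an arbitrary variable (here, the p-th component) as the reference for all other variables, the solution changes depending on the choice of reference. By introducing $\beta_p = -\sum_{j=1}^{p-1} \beta_j$, the log-contrast model is equivalently expressed in symmetric form as:

$$y_i = \boldsymbol{z}_i^\top \boldsymbol{\beta} + \varepsilon_i \quad \text{subject to} \quad \sum_{j=1}^{p} \beta_j = 0, \tag{6}$$

where $\boldsymbol{z}_i = (\log x_{i1}, \dots, \log x_{ip})^\top$, and $\boldsymbol{\beta} = (\beta_1, \dots, \beta_p)^\top$ is a regression coefficient vector. Lin et al. [13] proposed the following minimization problem to select relevant variables in symmetric form by adding an $\ell_1$-regularization term [14]:

$$\min_{\boldsymbol{\beta}} \sum_{i=1}^{n} \left(y_i - \boldsymbol{z}_i^\top \boldsymbol{\beta}\right)^2 + \lambda \left\| \boldsymbol{\beta} \right\|_1 \quad \text{subject to} \quad \sum_{j=1}^{p} \beta_j = 0. \tag{7}$$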

Other models that extend this symmetric form of the problem have also been proposed [18,19,20,21].
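Why the zero-sum constraint matters can be checked numerically: with $\sum_j \beta_j = 0$, the linear predictor $\boldsymbol{z}^\top\boldsymbol{\beta}$ is unchanged when all components are multiplied by a common constant, so it gives the same value for raw amounts and for their proportions, and it matches the reference form (5). The function names and numbers below are illustrative assumptions.

```python
import math


def symmetric_predictor(x, beta):
    """z^T beta with z = (log x_1, ..., log x_p); beta is assumed to sum to zero."""
    return sum(b * math.log(v) for b, v in zip(beta, x))


def reference_predictor(x, beta_head):
    """Reference form (5): sum_{j<p} beta_j * log(x_j / x_p)."""
    return sum(b * math.log(v / x[-1]) for b, v in zip(beta_head, x[:-1]))


beta_head = [1.0, -0.5]
beta = beta_head + [-sum(beta_head)]  # beta_p = -(beta_1 + ... + beta_{p-1})
counts = [4.0, 2.0, 1.0]              # unnormalized amounts
props = [c / sum(counts) for c in counts]

# All three values agree: the zero-sum constraint cancels the normalizing constant.
print(reference_predictor(props, beta_head))
print(symmetric_predictor(props, beta))
print(symmetric_predictor(counts, beta))
```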

4. Proposed Method

In this section, we propose a multi-task learning method for compositional data based on the network lasso and the symmetric form of the log-contrast model.

4.1. Model

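We consider the locally symmetric form of the log-contrast model:

$$y_i = \boldsymbol{z}_i^\top \boldsymbol{w}_i + \varepsilon_i \quad \text{subject to} \quad \sum_{j=1}^{p} w_{ij} = 0, \quad i = 1, \dots, n, \tag{8}$$

where $\boldsymbol{z}_i = (\log x_{i1}, \dots, \log x_{ip})^\top$, and $\boldsymbol{w}_i = (w_{i1}, \dots, w_{ip})^\top$ is the regression coefficient vector for the i-th sample of compositional data $\boldsymbol{x}_i$. For Model (8), we consider the following minimization problem:

$$\min_{W} \sum_{i=1}^{n} \left(y_i - \boldsymbol{z}_i^\top \boldsymbol{w}_i\right)^2 + \lambda_1 \sum_{m<l} r_{ml} \left\| \boldsymbol{w}_m - \boldsymbol{w}_l \right\|_2 + \lambda_2 \sum_{i=1}^{n} \left\| \boldsymbol{w}_i \right\|_1 \quad \text{subject to} \quad \sum_{j=1}^{p} w_{ij} = 0, \quad i = 1, \dots, n, \tag{9}$$

where $\lambda_1, \lambda_2 > 0$ are regularization parameters. The second term is the network lasso regularization term, which clusters the regression coefficient vectors. The third term is the $\ell_1$-regularization term [14]. This term can be interpreted as a special case of the $\ell_{1,2}$-regularization term used in minimization problem (3). Unlike the $\ell_1$-regularization term, the $\ell_{1,2}$-regularization term is difficult to optimize directly because its updates have no closed form. To construct an estimation algorithm that performs variable selection while preserving the constraints on the regression coefficient vectors, we employ the $\ell_1$-regularization term. Because variable selection is performed by the $\ell_1$-regularization term, we refer to the combination of the second and third terms as the sparse network lasso, after the sparse group lasso [22].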
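The following sketch evaluates objective (9) for given coefficient vectors, computing $\boldsymbol{z}_i$ from the compositions and returning infinity when a coefficient vector violates the zero-sum constraint. The function name, the tolerance, and the "infinity outside the feasible set" convention are assumptions made for illustration; it only scores candidate solutions and does not estimate them.

```python
import math


def sparse_network_lasso_objective(X, y, W, R, lam1, lam2, tol=1e-10):
    """Evaluate objective (9); math.inf if some w_i violates sum_j w_ij = 0.

    X: n compositions (strictly positive, summing to one); W: n coefficient vectors.
    """
    n = len(y)
    if any(abs(sum(w)) > tol for w in W):
        return math.inf  # outside the feasible set of (9)
    loss = 0.0
    for i in range(n):
        z = [math.log(v) for v in X[i]]  # z_i = (log x_i1, ..., log x_ip)
        loss += (y[i] - sum(zj * wj for zj, wj in zip(z, W[i]))) ** 2
    fusion = sum(R[m][l] * math.dist(W[m], W[l])
                 for m in range(n) for l in range(m + 1, n))
    sparsity = sum(abs(wj) for w in W for wj in w)  # sum_i ||w_i||_1
    return loss + lam1 * fusion + lam2 * sparsity


X = [[0.5, 0.25, 0.25], [0.25, 0.5, 0.25]]
y = [math.log(2), -math.log(2)]
R = [[0.0, 1.0], [1.0, 0.0]]
W = [[1.0, -1.0, 0.0], [1.0, -1.0, 0.0]]  # feasible, shared, fits y exactly
print(sparse_network_lasso_objective(X, y, W, R, lam1=1.0, lam2=0.5))
```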

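For Model (8), when a new data point $\boldsymbol{x}^*$ is obtained after estimation, there is no corresponding regression coefficient vector $\boldsymbol{w}^*$ for $\boldsymbol{x}^*$. Thus, this coefficient vector must be estimated before the response can be predicted. Hallac et al. [8] proposed solving the following minimization problem:

$$\min_{\boldsymbol{w}^*} \sum_{i=1}^{n} r_i^* \left\| \boldsymbol{w}^* - \hat{\boldsymbol{w}}_i \right\|_2, \tag{10}$$

where $r_i^*$ represents the relationship between $\boldsymbol{x}^*$ and $\boldsymbol{x}_i$, and $\hat{\boldsymbol{w}}_i$ is the estimated regression coefficient vector for the i-th sample. This problem is also known as the Weber problem, and its solution is interpreted as the weighted geometric median of $\hat{\boldsymbol{w}}_1, \dots, \hat{\boldsymbol{w}}_n$. For our proposed method, we consider solving the constrained Weber problem with the zero-sum constraint:

$$\min_{\boldsymbol{w}^*} \sum_{i=1}^{n} r_i^* \left\| \boldsymbol{w}^* - \hat{\boldsymbol{w}}_i \right\|_2 \quad \text{subject to} \quad \sum_{j=1}^{p} w_j^* = 0. \tag{11}$$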
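For intuition about the weighted geometric median in (10), the sketch below uses the classical Weiszfeld iteration. This is a swapped-in technique for illustration only; the proposed method solves the constrained problem (11) by ADMM (Appendix B). The function name, iteration count, and the simplified handling of iterates that land on a data point are assumptions. Each Weiszfeld iterate is a weighted average of the $\hat{\boldsymbol{w}}_i$, so if every $\hat{\boldsymbol{w}}_i$ satisfies the zero-sum constraint exactly, so does every iterate.

```python
import math


def weighted_geometric_median(points, weights, iters=200, eps=1e-12):
    """Weiszfeld iterations for min_w sum_i r_i * ||w - w_hat_i||_2 (Weber problem (10))."""
    p = len(points[0])
    total = sum(weights)
    # Start from the weighted mean of the estimated coefficient vectors.
    w = [sum(r * pt[j] for r, pt in zip(weights, points)) / total for j in range(p)]
    for _ in range(iters):
        num = [0.0] * p
        den = 0.0
        for r, pt in zip(weights, points):
            if r == 0:
                continue  # zero-weight vectors do not enter the objective
            d = math.dist(w, pt)
            if d < eps:
                # Simplified safeguard: stop at a data point without checking optimality.
                return list(pt)
            num = [nj + r * pj / d for nj, pj in zip(num, pt)]
            den += r / d
        w = [nj / den for nj in num]
    return w


# Hypothetical estimated coefficient vectors, each summing to zero.
pts = [[1.0, -1.0, 0.0], [0.0, 1.0, -1.0], [-1.0, 0.0, 1.0]]
print(weighted_geometric_median(pts, [1.0, 1.0, 1.0]))  # symmetric case: the centroid
print(weighted_geometric_median(pts, [3.0, 1.0, 1.0]))  # a dominant weight pulls it to that point
```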

4.2. Estimation Algorithm

To construct the estimation algorithm for the proposed method, we rewrite minimization problem (9) as follows:

where $\mathbf{1}_p$ is the p-dimensional vector of ones. The augmented Lagrangian for (12) is formulated as:

where are the Lagrange multipliers and are the tuning parameters. For simplicity of notation, the parameters in the model are collectively denoted as , the Lagrange multipliers are collectively denoted as Q, and the tuning parameters are collectively denoted as .

The update algorithm for with the alternating direction method of multipliers (ADMM) is obtained from the following minimization problem:

where k denotes the iteration number. Repeating the updates (14) together with the update of Q yields the estimation algorithm for (12), given as Algorithm 1. Similarly, the estimation algorithm for (11) obtained from ADMM is given as Algorithm 2. Details of the derivations are presented in Appendix A and Appendix B.

Algorithm 1.

Estimation algorithm for (12) via ADMM.

Algorithm 2.

Estimation algorithm for constrained Weber problem (11) via ADMM.
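As a simplified illustration of how ADMM handles an $\ell_1$ term together with a zero-sum constraint, the following sketch solves the single-task problem (7) (compositional lasso), not the multi-task problem (12). The splitting is our own assumption and not the authors' Algorithm 1: a copy $\boldsymbol{b} = \boldsymbol{\beta}$ carries the $\ell_1$ term, and the zero-sum constraint is enforced exactly in the $\boldsymbol{\beta}$-step by solving its KKT system. The helper names, the scaled dual variable `u`, and the default `rho` and `iters` are hypothetical.

```python
import math


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def soft(x, t):
    """Scalar soft-thresholding: sign(x) * max(|x| - t, 0)."""
    return math.copysign(max(abs(x) - t, 0.0), x)


def compositional_lasso_admm(Z, y, lam, rho=1.0, iters=500):
    """ADMM sketch for min ||y - Z beta||^2 + lam*||b||_1 s.t. beta = b, sum(beta) = 0."""
    n, p = len(Z), len(Z[0])
    # KKT matrix of the beta-step: [[2 Z^T Z + rho I, 1], [1^T, 0]].
    K = [[2 * sum(Z[i][j] * Z[i][k] for i in range(n)) + (rho if j == k else 0.0)
          for k in range(p)] + [1.0] for j in range(p)]
    K.append([1.0] * p + [0.0])
    Zty = [2 * sum(Z[i][j] * y[i] for i in range(n)) for j in range(p)]
    beta, b, u = [0.0] * p, [0.0] * p, [0.0] * p  # u: scaled dual for beta = b
    for _ in range(iters):
        rhs = [Zty[j] + rho * (b[j] - u[j]) for j in range(p)] + [0.0]
        beta = solve(K, rhs)[:p]  # last row of K enforces sum(beta) = 0
        b = [soft(beta[j] + u[j], lam / rho) for j in range(p)]
        u = [u[j] + beta[j] - b[j] for j in range(p)]
    return beta, b


Z = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
y = [1.0, -1.0, 0.0, 0.0]  # generated by the zero-sum vector (1, -1, 0)
beta, b = compositional_lasso_admm(Z, y, lam=0.5, iters=2000)
print(beta, sum(beta))
```

Keeping the constraint inside the $\boldsymbol{\beta}$-step leaves the $\boldsymbol{b}$-step as plain soft-thresholding, which mirrors the motivation given above for preferring the $\ell_1$ term over the $\ell_{1,2}$ term.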

5. Simulation Studies

In this section, we report simulation studies conducted with our proposed method using artificial data.

In our simulations, we generated artificial data from the true model:

where , is p-dimensional compositional data, ,, are the true regression coefficient vectors, and is an error term distributed as independently. We generated compositional data as follows. First, we generated the data from the p-dimensional multivariate normal distribution independently, where , , and

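Then, the generated data $\boldsymbol{v}_i = (v_{i1}, \dots, v_{ip})^\top$ were converted to compositional data by the following transformation:

$$x_{ij} = \frac{\exp(v_{ij})}{\sum_{k=1}^{p} \exp(v_{ik})}, \quad j = 1, \dots, p.$$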

The true regression coefficient vectors were set as:

We also assumed that the graph R was observed. In the graph, the true value of each element was obtained with probability . We calculated the MSE as $\frac{1}{n_t}\sum_{i=1}^{n_t}\left(y_i^* - \hat{y}_i^*\right)^2$ by dividing the 120 samples into 100 training samples and 20 validation samples. Here, $n_t$ is the number of validation samples (i.e., 20), $y_i^*$ is the i-th validation response, and $\hat{y}_i^*$ is its prediction. The regression coefficient vectors for the validation data were estimated by solving the constrained Weber problem (11). To evaluate the effectiveness of our proposed method, we compared it with both Model (7) and the model obtained by removing the zero-sum constraint from minimization problem (9). We refer to these two comparison methods as compositional lasso (CL) and sparse network lasso (SNL), respectively. To the best of our knowledge, no existing study simultaneously performs regression and clustering on compositional data. Therefore, we compared our method with CL and SNL: CL does not consider the existence of multiple clusters, whereas SNL considers them but ignores the nature of compositional data.
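The two computational pieces of this simulation design, mapping Gaussian draws onto the simplex with the exponential normalization above and scoring predictions by MSE, can be sketched as follows. The function names are illustrative, and the standard normal draws are a hypothetical stand-in for the multivariate normal distribution whose parameters are specified in the simulation settings.

```python
import math
import random


def to_composition(v):
    """x_j = exp(v_j) / sum_k exp(v_k): maps a real vector onto the simplex."""
    m = max(v)  # subtracting the maximum avoids overflow and leaves the ratios unchanged
    e = [math.exp(vj - m) for vj in v]
    s = sum(e)
    return [ej / s for ej in e]


def mse(y_true, y_pred):
    """Mean squared error over the n_t validation samples."""
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


# Hypothetical draw: independent standard normals stand in for the simulation's
# multivariate normal distribution.
rng = random.Random(0)
x = to_composition([rng.gauss(0.0, 1.0) for _ in range(5)])
print(x, sum(x))
print(mse([1.0, 2.0], [1.0, 4.0]))
```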

The regularization parameters were determined by five-fold CV for both the proposed method and the comparison methods. The values of tuning parameters for ADMM were all set to one. We considered several settings: , , . We generated 100 datasets and computed the mean and standard deviation of MSE from the 100 repetitions.

Table 1, Table 2 and Table 3 show the mean and standard deviation of MSE for each . The proposed method and SNL show better prediction accuracy than CL in almost all settings. This may be because CL assumes only a single regression coefficient vector and thus fails to capture the true structure, which consists of three clusters. A comparison between the proposed method and SNL shows that the proposed method has higher prediction accuracy than SNL when and , whereas SNL shows better results in most cases when . This suggests that the proposed method is superior to SNL when the structure of the graph R is close to the true structure. Overall, the prediction accuracy deteriorates as decreases for both the proposed method and SNL, but the deterioration is more pronounced for the proposed method. For both the proposed method and SNL, which assume multiple regression coefficient vectors, the standard deviation is somewhat large.

Table 1.

Mean and standard deviation of MSE in simulations.

Table 2.

Mean and standard deviation of MSE in simulations.

Table 3.

Mean and standard deviation of MSE in simulations.

6. Application to Gut Microbiome Data

The gut microbiome affects human health and disease, for example, obesity. Gut microbiomes may show inter-individual heterogeneity arising from environmental factors such as diet, as well as from hormonal factors and age [23,24]. In this section, we applied our proposed method to the real dataset reported in [9]. This dataset was obtained from a cross-sectional study of 98 healthy volunteers conducted at the University of Pennsylvania to investigate the connections between long-term dietary patterns and gut microbiome composition. Stool samples were collected from the subjects, and DNA samples were analyzed by 454/Roche pyrosequencing of 16S rRNA gene segments of the V1–V2 region. As a result, counts for more than 17,000 species-level operational taxonomic units (OTUs) were obtained. Demographic data, such as body mass index (BMI), sex, and age, were also obtained.

We used centered BMI as the response and the compositional data of the gut microbiome as the explanatory variables. To reduce the number of OTUs, we aggregated them by single-linkage clustering based on an available phylogenetic tree, as provided by the function tip_glom in the R package “phyloseq” [25]. In this process, closely related OTUs defined on the leaf nodes of the phylogenetic tree are aggregated into one OTU when the cophenetic distances between them are smaller than a certain threshold. We set the threshold at . As a result, 166 OTUs were obtained after aggregation. Because some OTUs had zero counts, for which the logarithm is undefined, these counts were replaced by one before conversion to compositional data.
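The per-sample preprocessing described above (replace zero counts by one, convert to proportions, then take logarithms to obtain $\boldsymbol{z}_i$) can be sketched as follows. The function name and the example counts are hypothetical; the OTU aggregation step with tip_glom happens earlier and is not reproduced here.

```python
import math


def counts_to_log_composition(counts):
    """Replace zero counts by one, convert to proportions, and take logs (z = log x)."""
    adjusted = [c if c > 0 else 1 for c in counts]
    total = sum(adjusted)
    x = [c / total for c in adjusted]
    z = [math.log(v) for v in x]
    return x, z


# One sample's aggregated OTU counts (hypothetical numbers).
x, z = counts_to_log_composition([0, 3, 0, 4])
print(x)
print(z)
```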

We computed the graph as follows:

where $d_{ij}$ is the distance between the i-th and j-th samples. The distance was calculated in the following two ways: (i) the Gower distance [26], computed from the sex and age of the subjects; and (ii) the log-ratio distance (e.g., see [27]), computed from the explanatory variables as follows:

where . Using these two ways of calculating distance, we obtained two different R and estimation results. We refer to these two methods as Proposed (i) and Proposed (ii), respectively. Equation (19) is the same as the one used in Yamada et al. [7] in the application to real datasets.
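The two distances can be sketched as follows. For the Gower distance, we assume the common form for mixed data: a range-normalized absolute difference for age, a 0/1 mismatch for sex, and their average. For the log-ratio distance, the exact normalization used in this study is not recoverable here, so the sketch uses the Aitchison distance (the Euclidean distance between centered log-ratio vectors) as one common form; other normalizations in the literature rescale it by a constant factor. All function names are hypothetical.

```python
import math


def gower_distance(a, b, age_range):
    """Gower dissimilarity for (age, sex) records: mean of per-variable dissimilarities."""
    d_age = abs(a[0] - b[0]) / age_range  # numeric variable: range-normalized difference
    d_sex = 0.0 if a[1] == b[1] else 1.0  # categorical variable: simple mismatch
    return (d_age + d_sex) / 2


def clr(x):
    """Centered log-ratio transform: log x_j minus the mean of the logs."""
    logs = [math.log(v) for v in x]
    mean = sum(logs) / len(logs)
    return [l - mean for l in logs]


def aitchison_distance(x, y):
    """||clr(x) - clr(y)||_2, equal to sqrt((1/p) * sum_{j<k} (log(x_j/x_k) - log(y_j/y_k))^2)."""
    return math.dist(clr(x), clr(y))


print(gower_distance((30, "F"), (50, "M"), age_range=40))
x, y = [0.2, 0.3, 0.5], [0.1, 0.6, 0.3]
print(aitchison_distance(x, y))
print(aitchison_distance([2 * v for v in x], y))  # unchanged: scale invariance
```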

To evaluate the prediction accuracy of our proposed method, we calculated the MSE by randomly splitting the dataset into 90 training samples and eight validation samples. As a comparison method, we again used the method of Lin et al. [13], referred to as compositional lasso (CL). The regularization parameters were determined by five-fold CV for both our proposed method and CL. The mean and standard deviation of MSE were calculated from 100 repetitions.

Table 4 shows the mean and standard deviation of MSE in the real data analysis. Proposed (i) has the smallest mean and standard deviation of MSE. This suggests that our proposed method captures the inherent structure of the compositional data when an appropriate graph R is provided. However, the standard deviation is large even for Proposed (i), which indicates that the prediction accuracy may depend strongly on how samples are assigned to the training and validation data.

Table 4.

Mean and standard deviation of MSE for real data analysis (100 repetitions).

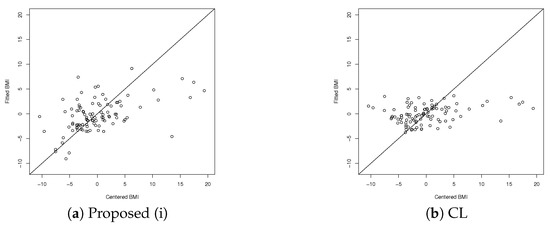

Table 5 shows the coefficients of determination obtained with leave-one-out cross-validation (LOOCV) for Proposed (i) and CL. The observed and predicted BMI values are plotted in Figure 1a,b for Proposed (i) and CL, respectively. The horizontal axis represents the centered observed BMI values, and the vertical axis represents the corresponding predicted BMI. For samples whose centered observed values lie between and , CL does not produce predictions within that interval, whereas Proposed (i) predicts these samples correctly to some extent.
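A coefficient of determination based on LOOCV predictions can be sketched as follows, assuming the usual definition $1 - \mathrm{SSE}/\mathrm{SST}$ computed on out-of-sample predictions. The `fit_predict` callback is a hypothetical placeholder for any fitting procedure (for example, estimating the proposed model and solving (11) for the held-out sample).

```python
def loocv_predictions(X, y, fit_predict):
    """Predict each sample from a model fit on the remaining n - 1 samples."""
    preds = []
    for i in range(len(y)):
        X_train = X[:i] + X[i + 1:]
        y_train = y[:i] + y[i + 1:]
        preds.append(fit_predict(X_train, y_train, X[i]))
    return preds


def r_squared(y_obs, y_pred):
    """1 - SSE / SST, evaluated on out-of-sample (LOOCV) predictions."""
    mean = sum(y_obs) / len(y_obs)
    sse = sum((o - p) ** 2 for o, p in zip(y_obs, y_pred))
    sst = sum((o - mean) ** 2 for o in y_obs)
    return 1.0 - sse / sst


# Hypothetical mean-only model: predicts the average of the training responses.
y = [1.0, 2.0, 3.0]
X = [[0.0], [0.0], [0.0]]
preds = loocv_predictions(X, y, lambda Xt, yt, x: sum(yt) / len(yt))
print(preds, r_squared(y, preds))
```

Unlike the in-sample value, an LOOCV coefficient of determination can be negative; the mean-only model above is an example.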

Table 5.

Coefficients of determination using LOOCV.

Figure 1.

Observed and predicted BMI using LOOCV.

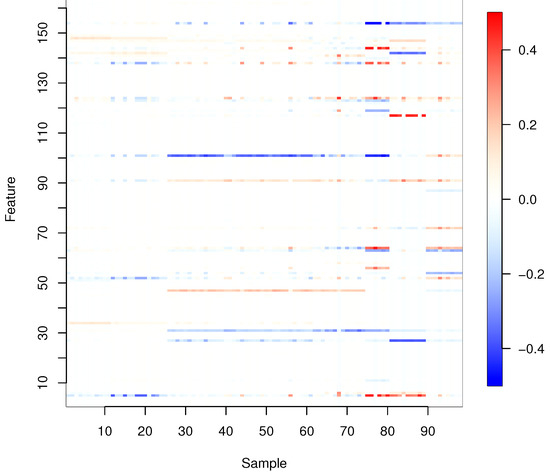

Figure 2 shows the regression coefficients estimated by Proposed (i) for all samples, with the regularization parameters determined by LOOCV. To obtain Figure 2, we grouped similar regression coefficient vectors by hierarchical clustering, with the number of clusters set to five.

Figure 2.

Estimated regression coefficients for all samples.

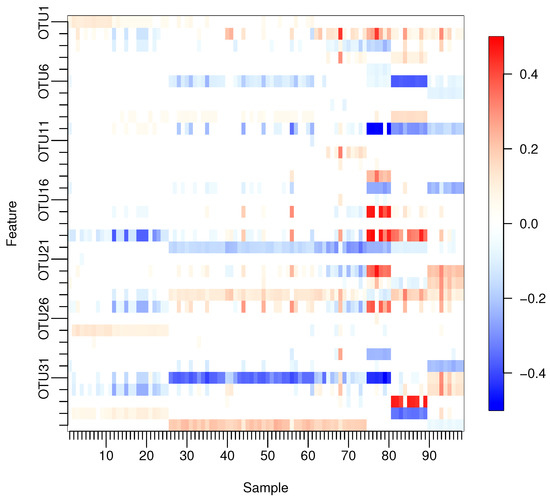

With our proposed method, many estimated regression coefficients were not exactly zero but close to zero. To simplify interpretation, we therefore treat estimated regression coefficients whose absolute values are below a small threshold as exactly zero. Figure 3 shows only those coefficients that exceed this threshold in absolute value in at least one sample; the corresponding variables are listed in Table 6.
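The thresholding step can be sketched as follows. The threshold value in the example and the use of a non-strict inequality are hypothetical choices, since the exact condition is not reproduced here.

```python
def hard_threshold(W, threshold):
    """Set coefficients with |w_ij| < threshold to exactly zero."""
    return [[wj if abs(wj) >= threshold else 0.0 for wj in w] for w in W]


def selected_variables(W, threshold):
    """Indices j with |w_ij| >= threshold in at least one sample i."""
    p = len(W[0])
    return [j for j in range(p) if any(abs(w[j]) >= threshold for w in W)]


# Hypothetical estimated coefficient vectors for two samples.
W = [[0.5, 1e-4, 0.0], [0.0, -2e-4, -0.3]]
print(hard_threshold(W, threshold=1e-3))
print(selected_variables(W, threshold=1e-3))
```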

Figure 3.

Estimated regression coefficients exceeding the threshold in absolute value for at least one sample.

Table 6.

Variables whose estimated regression coefficients exceed the threshold in absolute value for at least one sample.

The human gut microbiome has been reported to fall into three clusters, called enterotypes, which are characterized by three predominant genera: Bacteroides, Prevotella, and Ruminococcus [11]. In this dataset, the genus-level OTUs of Prevotella and Ruminococcus were aggregated by single-linkage clustering into the family-level OTUs Prevotellaceae and Ruminococcaceae. In Figure 3, Bacteroides corresponds to OTU5, 6, 7, 8, 9, and 10; Prevotellaceae corresponds to OTU12; and Ruminococcaceae corresponds to OTU30 and 31. Among these OTUs, samples 81–90 show a clear difference between OTU6, 9, and 10 and OTU30 and 31: only Bacteroides is associated with the response. Samples 65–74 also show a difference, in that Bacteroides alone does not affect BMI. These results suggest that Bacteroides, Prevotellaceae, and Ruminococcaceae may have different effects on BMI that are associated with enterotypes. In addition, women with a higher abundance of Prevotellaceae have been reported to be more obese [28]. The nonzero regression coefficients of Prevotellaceae are all positive, and the eight corresponding samples are all female. For OTU29, which corresponds to Roseburia, 9 out of 10 samples show a negative association with BMI. Roseburia has also been reported to be negatively correlated with indicators of body weight [29].

7. Conclusions

We proposed a multi-task learning method for compositional data based on a sparse network lasso and the log-contrast model. By imposing a zero-sum constraint on the model for each sample, we extracted information on latent clusters in the regression coefficient vectors for compositional data. In the simulations, the proposed method worked well when clusters existed in the compositional data and an appropriate graph R was obtained. In the human gut microbiome analysis, our proposed method outperformed the existing method in prediction accuracy by accounting for heterogeneity due to age and sex. In addition, cluster-specific OTUs, such as those related to enterotypes, were detected in terms of their effects on BMI.

Although our proposed method shrinks to zero some regression coefficients that do not affect the response, many coefficients that are close to, but not exactly, zero remain. Furthermore, in both the simulations and the human gut microbiome analysis, the prediction accuracy of the proposed method deteriorated substantially when the obtained graph R did not capture the true structure. Moreover, the standard deviations of MSE were high in almost all cases. We leave these issues for future research.

Author Contributions

Conceptualization, A.O.; methodology, A.O.; formal analysis, A.O.; data curation, A.O.; writing—original draft preparation, A.O. and S.K.; supervision, S.K.; funding acquisition, S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by JSPS KAKENHI Grant Numbers JP19K11854 and JP20H02227.

Institutional Review Board Statement

Ethical review and approval were waived for this study due to the gut microbiome data not being personally identifiable.

Informed Consent Statement

Patient consent was waived due to the gut microbiome data not being personally identifiable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, A.O., upon reasonable request. The source codes of the proposed method are available at https://github.com/aokazaki255/CSNL.

Acknowledgments

The authors would like to thank the Associate Editor and the reviewers for their helpful comments and constructive suggestions. The authors would like to also thank Tao Wang of Shanghai Jiao Tong University for providing the gut microbiome data used in Section 6. Supercomputing resources were provided by the Human Genome Center (the Univ. of Tokyo).

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviation is used in this manuscript:

| ADMM | alternating direction method of multipliers |

Appendix A. Derivations of Update Formulas in ADMM

Appendix A.1. Update of w

In the update of $w$, we minimize the terms of the augmented Lagrangian (13) that depend on $w$:

Setting the derivative with respect to $w$ to zero, we obtain the update:

Appendix A.2. Update of a

The update of $a$ is obtained by jointly minimizing over the pair of variables associated with each edge $(m, l)$:

Hallac et al. [8] gave the analytical solution as:

where
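For reference, the edge subproblem has a simple closed form, which can be derived by rewriting it in terms of the sum and the difference of the two variables. The sketch below minimizes $\mu\|\boldsymbol{a}_{ml} - \boldsymbol{a}_{lm}\|_2 + \frac{\rho}{2}\left(\|\boldsymbol{a}_{ml} - \boldsymbol{v}_m\|_2^2 + \|\boldsymbol{a}_{lm} - \boldsymbol{v}_l\|_2^2\right)$. The targets $\boldsymbol{v}_m, \boldsymbol{v}_l$ (current coefficients shifted by scaled multipliers) and the identification $\mu = \lambda_1 r_{ml}$ are assumptions, because the exact scaling in (13) is not reproduced here. When the targets are close relative to $\mu/\rho$, both copies are fused to their average.

```python
import math


def edge_update(v_m, v_l, weight, rho):
    """Minimize weight*||a_ml - a_lm|| + (rho/2)(||a_ml - v_m||^2 + ||a_lm - v_l||^2)."""
    gap = math.dist(v_m, v_l)
    if gap == 0.0:
        return list(v_m), list(v_l)  # targets already coincide
    # theta = 0.5 means the two copies are fused to their average.
    theta = max(1.0 - weight / (rho * gap), 0.5)
    a_ml = [theta * x + (1 - theta) * y for x, y in zip(v_m, v_l)]
    a_lm = [(1 - theta) * x + theta * y for x, y in zip(v_m, v_l)]
    return a_ml, a_lm


print(edge_update([0.0], [1.0], weight=0.25, rho=1.0))  # partially shrunk toward each other
print(edge_update([0.0], [1.0], weight=1.0, rho=1.0))   # fused
```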

Appendix A.3. Update of b

The update of is obtained by the following minimization problem:

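Because the objective with respect to $b$ contains non-differentiable points due to its $\ell_1$-norm, we use the subdifferential of the $\ell_1$-norm. We then obtain the update:

where $S(\cdot, \cdot)$ is the soft-thresholding operator, applied elementwise and given by $S(x, \lambda) = \operatorname{sign}(x) \max(|x| - \lambda, 0)$.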
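The soft-thresholding operator is the proximal map of $\lambda\|\cdot\|_1$ and can be written in a few lines. The function name is illustrative, and in the $b$-update the threshold would be the ratio of the regularization parameter to the ADMM tuning parameter, whose exact form is assumed here.

```python
import math


def soft_threshold(x, lam):
    """Elementwise S(x, lam) = sign(x) * max(|x| - lam, 0)."""
    return [math.copysign(max(abs(v) - lam, 0.0), v) for v in x]


# Entries with |x_j| <= lam become zero; the others shrink toward zero by lam.
print(soft_threshold([3.0, -0.5, -2.0, 0.0], 1.0))
```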

Appendix A.4. Update of Q

The Lagrange multipliers, collectively denoted by Q, are updated by dual ascent (a gradient step on the dual problem) as follows:

Appendix B. Update Algorithm for Constrained Weber Problem via ADMM

Following [30], we derive ADMM updates for the following constrained Weber problem:

The minimization problem (A9) is equivalently represented as:

The augmented Lagrangian for (A10) is formulated as:

where are Lagrange multipliers and are tuning parameters.

Appendix B.1. Update of w i *

In the update of , we minimize the terms of the augmented Lagrangian (A11) depending on as follows:

From , we obtain the update:

Appendix B.2. Update of e

In the update of $e$, we minimize the terms of the augmented Lagrangian (A11) that depend on $e$:

The minimization problem (A14) is equivalently expressed as:

Following [30], because the right-hand side of (A15) is the proximal map of a (weighted) Euclidean norm, Moreau’s decomposition (e.g., [31]) gives the updates of $e$:
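Moreau's decomposition states that $\boldsymbol{v} = \operatorname{prox}_f(\boldsymbol{v}) + \operatorname{prox}_{f^*}(\boldsymbol{v})$. For $f = t\|\cdot\|_2$, the conjugate $f^*$ is the indicator of the Euclidean ball of radius $t$, whose proximal map is the projection onto that ball. The sketch below shows this general identity; the weights and scalings that enter (A15) are assumptions left to the caller through `t`, and the function names are hypothetical.

```python
import math


def project_ball(v, radius):
    """Euclidean projection onto {u : ||u||_2 <= radius} (the prox of f* for f = radius*||.||_2)."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm <= radius:
        return list(v)
    return [radius * x / norm for x in v]


def prox_l2_norm(v, t):
    """prox of t*||.||_2 via Moreau's decomposition: v - Proj_{||u|| <= t}(v)."""
    return [x - y for x, y in zip(v, project_ball(v, t))]


print(prox_l2_norm([3.0, 4.0], 1.0))  # shrinks the norm from 5 to 4
print(prox_l2_norm([0.3, 0.4], 1.0))  # inside the ball: mapped to zero
```

The result equals the familiar block soft-thresholding $\max(1 - t/\|\boldsymbol{v}\|_2, 0)\,\boldsymbol{v}$, but computing it through the projection avoids a separate case analysis.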

Appendix B.3. Update of u and v

The Lagrange multipliers $u$ and $v$ are updated by dual ascent as follows:

References

- Argyriou, A.; Evgeniou, T.; Pontil, M. Convex multi-task feature learning. Mach. Learn. 2008, 73, 243–272.

- Abdulnabi, A.H.; Wang, G.; Lu, J.; Jia, K. Multi-Task CNN Model for Attribute Prediction. IEEE Trans. Multimed. 2015, 17, 1949–1959.

- Luong, M.T.; Le, Q.V.; Sutskever, I.; Vinyals, O.; Kaiser, L. Multi-task Sequence to Sequence Learning. In Proceedings of the 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, 2–4 May 2016.

- Lengerich, B.J.; Aragam, B.; Xing, E.P. Personalized regression enables sample-specific pan-cancer analysis. Bioinformatics 2018, 34, i178–i186.

- Cowie, M.R.; Mosterd, A.; Wood, D.A.; Deckers, J.W.; Poole-Wilson, P.A.; Sutton, G.C.; Grobbee, D.E. The epidemiology of heart failure. Eur. Heart J. 1997, 18, 208–225.

- Xu, J.; Zhou, J.; Tan, P.N. FORMULA: FactORized MUlti-task LeArning for task discovery in personalized medical models. In Proceedings of the 2015 SIAM International Conference on Data Mining (SDM), Vancouver, BC, Canada, 30 April–2 May 2015; pp. 496–504.

- Yamada, M.; Koh, T.; Iwata, T.; Shawe-Taylor, J.; Kaski, S. Localized Lasso for High-Dimensional Regression. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, Ft. Lauderdale, FL, USA, 20–22 April 2017; pp. 325–333.

- Hallac, D.; Leskovec, J.; Boyd, S. Network Lasso: Clustering and Optimization in Large Graphs. In Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Sydney, Australia, 10–13 August 2015; pp. 387–396.

- Wu, G.D.; Chen, J.; Hoffmann, C.; Bittinger, K.; Chen, Y.Y.; Keilbaugh, S.A.; Bewtra, M.; Knights, D.; Walters, W.A.; Knight, R.; et al. Linking Long-Term Dietary Patterns with Gut Microbial Enterotypes. Science 2011, 334, 105–108.

- Dillon, S.M.; Frank, D.N.; Wilson, C.C. The gut microbiome and HIV-1 pathogenesis: A two-way street. AIDS 2016, 30, 2737–2751.

- Arumugam, M.; Raes, J.; Pelletier, E.; Le Paslier, D.; Yamada, T.; Mende, D.R.; Fernandes, G.R.; Tap, J.; Bruls, T.; Batto, J.M.; et al. Enterotypes of the human gut microbiome. Nature 2011, 473, 174–180.

- Aitchison, J.; Bacon-Shone, J. Log contrast models for experiments with mixtures. Biometrika 1984, 71, 323–330.

- Lin, W.; Shi, P.; Feng, R.; Li, H. Variable selection in regression with compositional covariates. Biometrika 2014, 101, 785–797.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

- Boyd, S.; Parikh, N.; Chu, E. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers; Now Publishers Inc.: Delft, The Netherlands, 2011.

- Kong, D.; Fujimaki, R.; Liu, J.; Nie, F.; Ding, C. Exclusive Feature Learning on Arbitrary Structures via ℓ1,2-norm. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 1655–1663.

- Aitchison, J. The statistical analysis of compositional data. J. R. Stat. Soc. Ser. B 1982, 44, 139–160.

- Shi, P.; Zhang, A.; Li, H. Regression analysis for microbiome compositional data. Ann. Appl. Stat. 2016, 10, 1019–1040.

- Wang, T.; Zhao, H. Structured subcomposition selection in regression and its application to microbiome data analysis. Ann. Appl. Stat. 2017, 11, 771–791.

- Bien, J.; Yan, X.; Simpson, L.; Müller, C.L. Tree-aggregated predictive modeling of microbiome data. Sci. Rep. 2021, 11, 14505.

- Combettes, P.L.; Müller, C.L. Regression Models for Compositional Data: General Log-Contrast Formulations, Proximal Optimization, and Microbiome Data Applications. Stat. Biosci. 2021, 13, 217–242.

- Friedman, J.; Hastie, T.; Tibshirani, R. A note on the group lasso and a sparse group lasso. arXiv 2010, arXiv:1001.0736.

- Haro, C.; Rangel-Zúñiga, O.A.; Alcala-Diaz, J.F.; Gómez-Delgado, F.; Pérez-Martínez, P.; Delgado-Lista, J.; Quintana-Navarro, G.M.; Landa, B.B.; Navas-Cortés, J.A.; Tena-Sempere, M.; et al. Intestinal microbiota is influenced by gender and body mass index. PloS ONE 2016, 11, e0154090.

- Saraswati, S.; Sitaraman, R. Aging and the human gut microbiota–from correlation to causality. Front. Microbiol. 2015, 5, 764.

- McMurdie, P.J.; Holmes, S. phyloseq: An R package for reproducible interactive analysis and graphics of microbiome census data. PloS ONE 2013, 8, e61217.

- Gower, J.C. A General Coefficient of Similarity and Some of Its Properties. Biometrics 1971, 27, 857–871.

- Greenacre, M. Compositional Data Analysis in Practice; CRC Press: Boca Raton, FL, USA, 2018.

- Cuevas-Sierra, A.; Riezu-Boj, J.I.; Guruceaga, E.; Milagro, F.I.; Martínez, J.A. Sex-Specific Associations between Gut Prevotellaceae and Host Genetics on Adiposity. Microorganisms 2020, 8, 938.

- Zeng, Q.; Li, D.; He, Y.; Li, Y.; Yang, Z.; Zhao, X.; Liu, Y.; Wang, Y.; Sun, J.; Feng, X.; et al. Discrepant gut microbiota markers for the classification of obesity-related metabolic abnormalities. Sci. Rep. 2019, 9, 13424.

- Chaudhury, K.N.; Ramakrishnan, K.R. A new ADMM algorithm for the Euclidean Median and its application to robust patch regression. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015.

- Parikh, N.; Boyd, S. Proximal Algorithms. Found. Trends Optim. 2014, 1, 127–239.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).